Abstract

This chapter studies the effect of quadrature on the isogeometric analysis of wave propagation and structural vibration problems. The dispersion error of the isogeometric elements is minimized by optimally blending two standard Gauss-type quadrature rules. These blended rules approximate the inner products and increase the convergence rate by two extra orders when compared to those with fully-integrated inner products. To quantify the approximation errors, we generalize the Pythagorean eigenvalue error theorem of Strang and Fix. To reduce the computational cost, we further propose a two-point rule for \(C^1\) quadratic isogeometric elements which produces equivalent inner products on uniform meshes and yet requires fewer quadrature points than the optimally-blended rules.


6.1 Introduction

Partial differential eigenvalue problems arise in a wide variety of applications, for example the vibration of elastic bodies (structural vibration) or multi-group diffusion in nuclear reactors [58]. Finite element analysis of these differential eigenvalue problems leads to a matrix eigenvalue problem whose matrix entries are usually approximated by numerical integration. The effect of numerical integration on the eigenvalue and eigenfunction errors has been investigated in the literature; see for example Fix [29], Strang and Fix [58], and others [8, 9, 10]. Sharp and optimal estimates of the numerical eigenfunctions and eigenvalues of finite element analysis are established in [8, 9].

Hughes et al. [41] unified the analysis of the spectral properties of the eigenvalue problem with the dispersion analysis for wave propagation problems. They established a duality principle between them: any numerical scheme that reduces the dispersion error of the wave propagation problem reduces the eigenvalue errors of the differential eigenvalue problem, and vice versa. Moreover, the two share the same convergence rates [15, 43, 54]. In this work, we focus on developing quadrature rules to optimize the dispersion errors and then apply these rules to the approximation of differential eigenvalue problems.

The dispersion analysis of the finite element method and spectral element method has been studied extensively; see for example Thomson and Pinsky [59, 60], Ihlenburg and Babuska [44], Ainsworth [1, 2, 3], and many others [23, 28, 35, 36, 37, 38, 45, 46, 63]. Thomson and Pinsky studied the dispersive effects of using the Legendre, spectral, and Fourier local approximation basis functions for finite element methods when applied to the Helmholtz equation [59]. The choice of the basis functions has a negligible effect on the dispersion errors. Nevertheless, the continuity of the basis functions has a significant impact. Hughes et al. [41] showed that high continuity of the basis functions (up to \(C^{p-1}\) for p-th order isogeometric elements) results in dramatically smaller dispersion errors than those of finite elements.

Ainsworth established in [1] and [2] that the optimal convergence rate of the dispersion error is of order 2p for the p-th order standard finite elements and spectral elements, respectively. The analysis is complete in the sense that it covers arbitrary polynomial order. The dispersive properties of these methods have been studied in detail and the most effective scheme was conjectured to be a blend of the two [3, 49, 56]. Ainsworth and Wajid [3] established the optimal blending of these two methods for arbitrary polynomial order in 2010. The blending was shown to provide two extra orders of accuracy (superconvergence) in the dispersion error, which includes the fourth-order superconvergence result obtained by a modified integration rule for linear finite elements in [35]. Also, this blending scheme is equivalent to the use of nonstandard quadrature rules and therefore it can be efficiently implemented by replacing the standard Gaussian quadrature by a nonstandard rule [3].

This blending idea can be extended to isogeometric analysis (IGA), a numerical method that bridges the gap between computer aided design (CAD) and finite element analysis (FEA). We refer to [13, 19, 21, 40] for its initial development and to [20, 26, 33, 34, 41,42,43, 47, 48, 50] for its applications. The feature that distinguishes isogeometric elements from finite and spectral elements is the fact that the basis functions have up to p − 1 continuous derivatives across element boundaries, where p is the order of the underlying polynomial. The publications [4, 19, 20, 41,42,43, 55] show that highly continuous isogeometric analysis delivers more robustness and better accuracy per degree of freedom than standard finite elements. Nevertheless, a detailed analysis of the solution cost reveals that IGA is more expensive to solve on a per degree of freedom basis than the lower continuous counterparts, such as finite element analysis [16,17,18, 52]. To exploit the reduction in cost, a set of solution strategies which control the continuity of the basis functions to deliver optimal solution costs were proposed [31, 32].

The dispersion analysis of isogeometric elements is studied in [41, 43, 54], presenting significant advantages over finite elements. Hughes et al. [41] showed that the dispersion error of the isogeometric analysis with high continuity of the basis functions (up to \(C^{p-1}\) for p-th order basis functions) is smaller than that of the lower continuity finite element counterparts. Dedè et al. [24] study the dispersion analysis of the isogeometric elements for the two-dimensional harmonic plane waves in an isotropic and homogeneous elastic medium. The anisotropic curves are represented using NURBS-based IGA and the errors associated with the compressional and shear wave velocities for different directions of the wave vector are modeled. Recently, the dispersion error minimization for isogeometric analysis has been performed numerically in Puzyrev et al. [54] and analytically in Calo et al. [15].

In this work, we seek blended quadrature rules for isogeometric elements that minimize the dispersion error of the scheme and hence increase its accuracy and robustness. We focus on the dispersion analysis of isogeometric elements and apply the blending ideas introduced in [3] for finite and spectral elements to isogeometric elements by using a modified inner product. The new blending schemes reduce the errors in the approximation of the eigenvalues (and, in some cases, the eigenfunctions). With the optimal blending, the convergence rate of the dispersion error increases by two additional orders. To analyze the errors, we characterize the errors in the eigenvalues and the eigenfunctions for all the modes. The total “error budget” of the numerical method consists of the errors arising from the approximation of eigenvalues and eigenfunctions. When the stiffness and mass terms are fully integrated, for each eigenvalue, the sum of the eigenvalue error and the square of the eigenfunction error in the \(L^2\)-norm scaled by the exact eigenvalue equals the square of the error in the energy norm. Once one of these terms is not fully integrated, this is no longer true. To account for the error of the approximated/modified inner product, we generalize Strang’s Pythagorean eigenvalue theorem to include the effect of inexact integration.

The outline of the remainder of this chapter is as follows. We first describe the model problem in Sect. 6.2. In Sect. 6.3, we present a generalization of the Pythagorean eigenvalue error theorem that accounts for the error of the modified inner products. In Sect. 6.4, we describe the optimal blending of finite and spectral elements and present an optimal blending scheme for isogeometric analysis. In Sect. 6.5, we develop a two-point quadrature rule for periodic boundaries. Numerical examples for one-dimensional and two-dimensional problems are given in Sect. 6.6. Finally, Sect. 6.7 summarizes our findings and describes future research directions.

6.2 Problem Setting

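We begin with the differential eigenvalue problem

\[ -\Delta u = \lambda u \quad \text{in } \varOmega, \qquad u = 0 \quad \text{on } \partial\varOmega, \tag{6.1} \]

where \(\Delta = \nabla^2\) is the Laplacian and \(\varOmega \subset \mathbb {R}^d, d=1,2,3\) is a bounded open domain with Lipschitz boundary. This eigenvalue problem has a countably infinite set of eigenvalues \({\lambda _j} \in {\mathbb {R}}\)

\[ 0 < \lambda_1 \le \lambda_2 \le \cdots \le \lambda_j \le \cdots \tag{6.2} \]

and an associated set of orthonormal eigenfunctions \(u_j\)

\[ (u_j, u_k) = \int_{\varOmega} u_j(x)\, u_k(x)\, dx = \delta_{jk}, \tag{6.3} \]

where \(\delta_{jk}\) is the Kronecker delta, which is equal to 1 when j = k and 0 otherwise (see for example [58]). The normalized eigenfunctions form an \(L^2\)-orthonormal basis. Moreover, using integration by parts and (6.1), they are also orthogonal in the energy inner product

\[ (\nabla u_j, \nabla u_k) = (-\Delta u_j, u_k) = \lambda_j (u_j, u_k) = \lambda_j \delta_{jk}. \tag{6.4} \]

Let V be the solution space, a subspace of the Hilbert space \({{H^1_0}(\varOmega )}\). The standard weak form of the eigenvalue problem reads: find all eigenvalues \({\lambda _j} \in {\mathbb {R}}\) and eigenfunctions \(u_j \in V\) such that

\[ a(w, u_j) = \lambda_j (w, u_j) \quad \forall\, w \in V, \tag{6.5} \]

where

\[ a(w, v) = \int_{\varOmega} \nabla w \cdot \nabla v \, dx \tag{6.6} \]

and (⋅, ⋅) is the \(L^2\) inner product. These two inner products are associated with the following energy and \(L^2\) norms

\[ \| w \|_E = \sqrt{a(w, w)}, \qquad \| w \| = \sqrt{(w, w)}. \tag{6.7} \]

The Galerkin-type formulation of the eigenvalue problem (6.1) is the discrete form of (6.5): Seek \(\lambda _j^h \in {\mathbb {R}}\) and \(u_j^h \in V^h \subset V\) such that

\[ a(w^h, u_j^h) = \lambda_j^h (w^h, u_j^h) \quad \forall\, w^h \in V^h, \tag{6.8} \]

which results in the generalized matrix eigenvalue problem

\[ \mathbf{K} \mathbf{u}^h = \lambda^h \mathbf{M} \mathbf{u}^h, \tag{6.9} \]

where K is referred to as the stiffness matrix, M is referred to as the mass matrix, and \((\lambda^h, \mathbf{u}^h)\) are the unknown eigenpairs.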

We described the differential eigenvalue problem and its Galerkin discretization above. For dispersion analysis, we study the classical wave propagation equation

\[ \frac{\partial^2 u}{\partial t^2} - c^2 \Delta u = 0, \tag{6.10} \]

where c is the wave propagation speed. We abuse notation by reusing the symbol u for the unknown here. Assuming time-harmonic solutions of the form \(u(x, t) = e^{-i\omega t} u(x)\) for a given temporal frequency ω, the wave equation reduces to the well-known Helmholtz equation

\[ \Delta u + k^2 u = 0, \tag{6.11} \]

where the wavenumber \(k = \omega / c\) represents the ratio of the angular frequency ω to the wave propagation speed c. The wavelength is equal to \(2\pi / k\). The discretization of (6.11) leads to the following linear equation system

\[ \left( \mathbf{K} - k^2 \mathbf{M} \right) \mathbf{u}^h = \mathbf{0}. \tag{6.12} \]

The equivalence between (6.1) and (6.11) or (6.9) and (6.12) is established by setting λ or \(\lambda^h = k^2\). Based on this equivalence, a duality principle between the spectrum analysis of the differential eigenvalue problem and the dispersion analysis of the wave propagation is established in [41]. In practice, the wavenumber is approximated and we denote the approximation by \(k^h\). In general, \(k^h \ne k\). Then the solution of (6.12) is a linear combination of plane waves with numerical wavenumbers \(k^h\). Hence the discrete and exact waves have different wavelengths. The goal of the dispersion analysis is to quantify this difference, which defines the dispersion error of a specific numerical method. That is, dispersion analysis seeks to quantify how well the discrete wavenumber \(k^h\) approximates the continuous/exact k. Finally, in view of the unified analysis in [41], this dispersion error describes the errors of the approximated eigenvalues with respect to the exact ones for (6.8) or (6.9).

6.3 Pythagorean Eigenvalue Error Theorem and Its Generalization

The theorem was first described in Strang and Fix [58] and was referred to as the Pythagorean eigenvalue error theorem in Hughes [43]. In this section, we revisit this theorem in detail and generalize it.

6.3.1 The Theorem

Following Strang and Fix [58], the Rayleigh-Ritz idea for the steady-state equation \(\mathcal{L} u = f\) (where \(\mathcal{L}\) is a differential operator) was extended to the differential eigenvalue problem. The idea leads to the finite element approximation of the eigenvalue problem. Equation (6.5) resembles the variational formulation for the steady-state equation. Hence, one expects the approximate eigenfunctions to converge at the same rates as the solutions of steady-state problems. Naturally, the a priori error estimate for the eigenfunction depends on the index j (as in the j-th eigenvalue) and the accuracy deteriorates as j increases. In fact, the errors of the approximate eigenvalues also grow as j increases [7, 41, 58].

The a priori error analyses for the approximation of eigenfunctions and eigenvalues are closely connected. The motivation to derive the Pythagorean eigenvalue error theorem as stated below (see also Lemma 6.3 in [58]) is to elucidate the relation between the eigenvalue and eigenfunction errors and the total approximation error.

Theorem 6.1

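For each discrete mode, with the normalization \(\| u_j \| = 1\) and \( \| u_j^h \| = 1\), we have

\[ \| u_j - u_j^h \|_E^2 = \lambda_j \| u_j - u_j^h \|^2 + \lambda_j^h - \lambda_j. \tag{6.13} \]

By the Minmax Principle (discovered by Poincaré, Courant, and Fischer; see Strang and Fix [58]), all finite element approximated eigenvalues bound the exact ones from above, that is

\[ \lambda_j^h \ge \lambda_j \quad \text{for all } j. \tag{6.14} \]

This allows us to write (6.13) in the conventional Pythagorean theorem formulation

\[ \| u_j - u_j^h \|_E^2 = \left( \sqrt{\lambda_j}\, \| u_j - u_j^h \| \right)^2 + \left( \sqrt{\lambda_j^h - \lambda_j} \right)^2. \tag{6.15} \]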

This theorem was established with a simple proof in [58]. Alternatively, we present here

This theorem states that for each discrete mode, the square of the error in the energy norm consists of the eigenvalue error and the product of the eigenvalue and the square of the eigenfunction error in the \(L^2\)-norm. We can rewrite (6.13) as

which implies

This relates the eigenvalue error, the eigenfunction error in the \(L^2\) norm, and the eigenfunction error in the energy norm. Once an error estimate for the eigenfunction in the energy norm is established, the other two follow directly. Also, the inequality (6.19) does not hold for methods that do not approximate all eigenvalues from above (that is, methods that violate (6.14)), for example, the spectral element method [2]. In general, the spectral element method is realized by using the Gauss-Lobatto-Legendre nodes both as the interpolation nodes for the Lagrange basis functions in each element and as the quadrature nodes. This quadrature rule induces an error in the approximation of the inner products, but preserves the optimal order of convergence of the scheme. In fact, these errors in the inner product allow the numerical scheme to approximate eigenvalues from below. If the discrete method does not fully reproduce the inner products associated with the stiffness and mass matrices, or these inner products are approximated using numerical integration, this theorem needs to be extended to account for the errors introduced by the approximations of the inner products.
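As a numerical sanity check of the identity in Theorem 6.1, the following Python sketch evaluates both sides for linear finite elements on a uniform mesh of the unit interval with homogeneous Dirichlet conditions. This setting is an assumption made for illustration and is not taken from the chapter: here the exact eigenpairs are \(\lambda_j = j^2\pi^2\), \(u_j = \sqrt{2}\sin(j\pi x)\), the discrete eigenvectors have nodal values \(\sin(j\pi x_i)\), and \(\lambda_j^h = 6(1-\cos j\pi h)/(h^2(2+\cos j\pi h))\). The function name `pythagorean_budget` and the four-point Gauss rule used to measure the errors are hypothetical choices.

```python
import math

# Four-point Gauss-Legendre rule on [-1, 1], used only to measure the errors.
_A = math.sqrt(3.0 / 7.0 - 2.0 / 7.0 * math.sqrt(6.0 / 5.0))
_B = math.sqrt(3.0 / 7.0 + 2.0 / 7.0 * math.sqrt(6.0 / 5.0))
_WA = (18.0 + math.sqrt(30.0)) / 36.0
_WB = (18.0 - math.sqrt(30.0)) / 36.0
GAUSS4 = [(-_B, _WB), (-_A, _WA), (_A, _WA), (_B, _WB)]


def pythagorean_budget(j, n_elem):
    """Return (energy error squared, lambda * L2 error squared + eigenvalue error)."""
    h = 1.0 / n_elem
    lam = (j * math.pi) ** 2
    c = math.cos(j * math.pi * h)
    lam_h = 6.0 * (1.0 - c) / (h * h * (2.0 + c))  # discrete eigenvalue

    # Nodal values of the discrete eigenfunction, normalised so that ||u_h|| = 1.
    nodal = [math.sin(j * math.pi * i * h) for i in range(n_elem + 1)]
    norm_sq = sum(
        h / 3.0 * (nodal[e] ** 2 + nodal[e] * nodal[e + 1] + nodal[e + 1] ** 2)
        for e in range(n_elem)
    )
    nodal = [v / math.sqrt(norm_sq) for v in nodal]

    err_l2 = 0.0
    err_energy = 0.0
    for e in range(n_elem):
        slope = (nodal[e + 1] - nodal[e]) / h  # derivative of u_h on element e
        for xi, w in GAUSS4:
            t = 0.5 * (xi + 1.0)
            x = (e + t) * h
            u = math.sqrt(2.0) * math.sin(j * math.pi * x)
            du = math.sqrt(2.0) * j * math.pi * math.cos(j * math.pi * x)
            u_h = (1.0 - t) * nodal[e] + t * nodal[e + 1]
            err_l2 += 0.5 * h * w * (u - u_h) ** 2
            err_energy += 0.5 * h * w * (du - slope) ** 2
    return err_energy, lam * err_l2 + lam_h - lam


lhs, rhs = pythagorean_budget(j=2, n_elem=40)
print(lhs, rhs)
```

The two printed numbers should agree up to the accuracy of the quadrature used to measure the errors, since the mass and stiffness matrices behind the closed-form \(\lambda_j^h\) are integrated exactly in this setting.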

6.3.2 The Quadrature

Now to derive the generalized Pythagorean eigenvalue error theorem, we first introduce numerical integration by quadrature. The entries of the stiffness and mass matrices K and M in (6.9) are given by the inner products

\[ M_{ij} = (\phi_i, \phi_j) = \int_{\varOmega} \phi_i(x)\, \phi_j(x)\, dx, \tag{6.20} \]

\[ K_{ij} = a(\phi_i, \phi_j) = \int_{\varOmega} \nabla \phi_i(x) \cdot \nabla \phi_j(x)\, dx, \tag{6.21} \]

where \(\phi_i(x)\) are the piecewise polynomial basis functions. Here, we consider basis functions for finite elements, spectral elements, and isogeometric analysis. M and K are symmetric positive definite matrices. Moreover, in 1D these matrices are banded with 2p + 1 nonzero diagonals.

In practice, the integrals in (6.20) and (6.21) are evaluated numerically, that is, approximated by quadrature rules. Now we give a brief description of the quadrature rules for approximating the inner products (6.20) and (6.21). On a reference element \(\hat K\), an (n + 1)-point quadrature rule for a function \(\hat f(\hat x)\) is of the form

\[ \int_{\hat K} \hat f(\hat x)\, d\hat x = \sum_{l=0}^{n} \hat{\varpi}_l\, \hat f(\hat{n}_l) + \hat{E}_{n+1}, \tag{6.22} \]

where \(\hat {\varpi }_l\) are the weights, \(\hat {n_l}\) are the nodes, and \(\hat {E}_{n+1}\) is the error of the quadrature rule. For each element K, there is an invertible affine map σ such that \(K = \sigma (\hat K)\), which leads to the correspondence between the functions on K and \(\hat K\). Let \(J_K\) be the corresponding Jacobian of the mapping. Then (6.22) induces a quadrature rule over the element K given by

\[ \int_{K} f(x)\, dx = \sum_{l=0}^{n} \varpi_{l,K}\, f(n_{l,K}) + E_{n+1}, \tag{6.23} \]

where \(\varpi _{l,K} = \text{det}(J_K) \hat \varpi _l\) and \(n_{l,K} = \sigma (\hat n_l)\).

The quadrature rule is exact for a given function f(x) when the remainder \(E_{n+1}\) is exactly zero. For example, the standard (n + 1)-point Gauss-Legendre (GL or Gauss) quadrature is exact for the linear space of polynomials of degree at most 2n + 1 (see, for example, [12, 57]).
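The mapping of a reference rule to an element and the exactness property can be illustrated with a minimal Python sketch. It uses the two-point Gauss rule (n = 1) on a one-dimensional element [a, b]; the function and variable names are hypothetical and only serve this example.

```python
import math

# Two-point Gauss-Legendre rule (n = 1) on the reference element [-1, 1].
GAUSS2 = [(-1.0 / math.sqrt(3.0), 1.0), (1.0 / math.sqrt(3.0), 1.0)]


def integrate_on_element(f, a, b, rule=GAUSS2):
    """Approximate the integral of f over [a, b] by mapping a reference rule."""
    jac = 0.5 * (b - a)   # det(J_K) of the affine map from [-1, 1] to [a, b]
    mid = 0.5 * (a + b)
    return sum(jac * w * f(mid + jac * xi) for xi, w in rule)


def cubic(x):
    return 4.0 * x**3 - x + 2.0   # degree 3 = 2n + 1, integrated exactly


def quartic(x):
    return x**4                   # degree 4, beyond the exactness of the rule


# Exact integrals over [1, 3] are 80 and 242/5, respectively.
print(integrate_on_element(cubic, 1.0, 3.0) - 80.0)
print(integrate_on_element(quartic, 1.0, 3.0) - 242.0 / 5.0)
```

The first difference vanishes up to round-off, while the second is the nonzero remainder \(E_{n+1}\) for a polynomial of degree 2n + 2.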

The classical Galerkin finite element analysis typically employs the Gauss quadrature with p + 1 (where p is the polynomial order) quadrature points per parametric direction that fully integrates every term in the bilinear forms defined by the weak form. A quadrature rule is optimal if the function is evaluated with the minimal number of nodes (for example, Gauss quadrature with n + 1 evaluations is optimal for polynomials of order 2n + 1 in one dimension).

Element-level integrals may be approximated using other quadrature rules, for example the Gauss-Lobatto-Legendre (GLL or Lobatto) quadrature rule that is used in the spectral element method (SEM). The Lobatto quadrature evaluated at n + 1 nodes is exact for polynomials up to degree 2n − 1, two degrees fewer than the Gauss rule with the same number of nodes. However, selecting a rule with p + 1 evaluations for a polynomial of order p and collocating the Lagrange nodes with the quadrature positions renders the mass matrix diagonal in 1D, 2D and 3D for arbitrary geometrical mappings. The resulting diagonal mass matrix is a more relevant result than the reduction in the accuracy of the integration, particularly because the underintegration preserves the optimal convergence order for these higher-order schemes. Lastly, the spectral elements possess a superior phase accuracy when compared with the standard finite elements of the same polynomial order [2].
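The diagonalization of the mass matrix can be seen in the simplest case p = 1. The following Python sketch, with hypothetical names, assembles the element mass matrix of a linear element of length h once with the two-point Gauss rule and once with the two-point Lobatto rule, whose nodes coincide with the Lagrange nodes.

```python
import math

GAUSS2 = [(-1.0 / math.sqrt(3.0), 1.0), (1.0 / math.sqrt(3.0), 1.0)]
LOBATTO2 = [(-1.0, 1.0), (1.0, 1.0)]   # nodes at the element end points


def linear_shape(xi):
    """Linear Lagrange basis on the reference element [-1, 1]."""
    return [0.5 * (1.0 - xi), 0.5 * (1.0 + xi)]


def element_mass(rule, h=1.0):
    """Element mass matrix of a linear element of length h for a given rule."""
    mass = [[0.0, 0.0], [0.0, 0.0]]
    for xi, w in rule:
        shape = linear_shape(xi)
        for i in range(2):
            for j in range(2):
                mass[i][j] += 0.5 * h * w * shape[i] * shape[j]
    return mass


print(element_mass(GAUSS2))    # consistent mass: h * [[1/3, 1/6], [1/6, 1/3]]
print(element_mass(LOBATTO2))  # lumped mass:     h * [[1/2, 0], [0, 1/2]]
```

Because each Lagrange basis function vanishes at every Lobatto node except its own, all off-diagonal products are zero at the quadrature points.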

Isogeometric analysis based on NURBS (Non-Uniform Rational B-Splines) has been described in a number of papers (e.g. [13, 19, 20, 41]). Isogeometric analysis employs piecewise polynomial curves composed of linear combinations of B-spline basis functions. B-spline curves of polynomial order p may have up to p − 1 continuous derivatives across element boundaries. Three different refinement mechanisms are commonly used in isogeometric analysis, namely the h-, p- and k-refinement, as detailed in [20]. We refer the reader to [53] for the definition of common concepts of isogeometric analysis such as knot vectors, B-spline functions, and NURBS.

The derivation of optimal quadrature rules for NURBS-based isogeometric analysis with spaces of high polynomial degree and high continuity has attracted significant attention in recent years [5, 6, 11, 12, 14, 39, 42]. The efficiency of Galerkin-type numerical methods for partial differential equations depends on the formation and assembly procedures, which, in turn, largely depend on the efficiency of the quadrature rule employed. Integral evaluations based on full Gauss quadrature are known to be efficient for standard C 0 finite element methods, but inefficient for isogeometric analysis that uses higher-order continuous spline basis functions [51].

Hughes et al. [42] studied the effect of reduced Gauss integration on the finite element and isogeometric analysis eigenvalue problems. By using p Gauss points (i.e., underintegrating using one point fewer), one modifies the mass matrix only (in 1D). By using fewer than p Gauss points (i.e., underintegrating using several points fewer), both mass and stiffness matrices are underintegrated. Large underintegration errors may lead to the loss of stability since the stiffness matrix becomes singular. As shown in [42], this kind of underintegration led to results that were worse than the fully integrated ones, and the highest frequency errors diverged as the mesh was refined. However, as we show in the next sections, using properly designed alternative quadratures may lead to more accurate results.
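The statement that p Gauss points modify only the mass matrix in 1D can be checked by hand for p = 1. The Python sketch below, an illustration with hypothetical names, compares one-point and two-point Gauss integration of the element matrices of a linear element of length h.

```python
import math

GAUSS = {
    1: [(0.0, 2.0)],
    2: [(-1.0 / math.sqrt(3.0), 1.0), (1.0 / math.sqrt(3.0), 1.0)],
}


def element_matrices(n_points, h=1.0):
    """Return (stiffness, mass) of a linear element using n_points Gauss points."""
    stiff = [[0.0, 0.0], [0.0, 0.0]]
    mass = [[0.0, 0.0], [0.0, 0.0]]
    d_shape = [-1.0 / h, 1.0 / h]   # physical derivatives of the basis functions
    for xi, w in GAUSS[n_points]:
        shape = [0.5 * (1.0 - xi), 0.5 * (1.0 + xi)]
        for i in range(2):
            for j in range(2):
                stiff[i][j] += 0.5 * h * w * d_shape[i] * d_shape[j]
                mass[i][j] += 0.5 * h * w * shape[i] * shape[j]
    return stiff, mass


k_full, m_full = element_matrices(2)
k_reduced, m_reduced = element_matrices(1)
print(k_full, k_reduced)   # identical: the integrand has degree 2p - 2 = 0
print(m_full, m_reduced)   # different: the integrand has degree 2p = 2
```

The stiffness integrand has degree 2p − 2, which p Gauss points integrate exactly, while the mass integrand has degree 2p and exceeds the exactness degree 2p − 1 of the reduced rule.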

The assembly of the elemental matrices into the global stiffness and mass matrices is done in a similar way for all Galerkin methods we analyze in this chapter. Similarly, the convergence rate for all Galerkin schemes we analyze is the same. However, the heterogeneity of the high-order finite element (\(C^0\) elements, i.e., SEM and FEA) basis functions leads to a branching of the discrete spectrum and a fast degradation of the accuracy for higher frequencies. In fact, the degraded frequencies in 1D are about half of all frequencies, while in 3D this proportion grows to about seven eighths. On uniform meshes, B-spline basis functions of the highest continuity, \(C^{p-1}\), are on the contrary homogeneous and do not exhibit such branching patterns other than the outliers that correspond to the basis functions with support on the boundaries of the domain.

6.3.3 The Generalization

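Now we consider the generalization. Applying quadrature rules to (6.8), we have the approximated form

\[ a_h(w^h, \widetilde u_j^h) = \widetilde \lambda_j^h\, (w^h, \widetilde u_j^h)_h \quad \forall\, w^h \in V^h, \tag{6.24} \]

where

\[ a_h(w, v) = \sum_{K} \sum_{l} \varpi_{l,K}^{(1)}\, \nabla w(n_{l,K}^{(1)}) \cdot \nabla v(n_{l,K}^{(1)}) \tag{6.25} \]

and

\[ (w, v)_h = \sum_{K} \sum_{l} \varpi_{l,K}^{(2)}\, w(n_{l,K}^{(2)})\, v(n_{l,K}^{(2)}), \tag{6.26} \]

where the outer sums run over the elements K of the mesh, and \(\{\varpi _{l,K}^{(1)}, n_{l,K}^{(1)} \}\) and \(\{\varpi _{l,K}^{(2)}, n_{l,K}^{(2)} \}\) specify two (possibly different) quadrature rules. This leads to the matrix eigenvalue problem

\[ \mathbf{K}^{(1)} \widetilde{\mathbf{u}}^h = \widetilde \lambda^h\, \mathbf{M}^{(2)} \widetilde{\mathbf{u}}^h, \tag{6.27} \]

where the superscripts on K and M and the tildes specify the effect of the quadratures.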

Remark 6.1

For multidimensional problems on tensor product grids, the stiffness and mass matrices can be expressed as Kronecker products of 1D matrices [30]. For example, in the 2D case, the components of K and M can be represented as fourth-order tensors using the definitions of the matrices and the basis functions for the 1D case [22, 30]

where \(\mathbf {M} ^{1D} _{i j}\) and \(\mathbf {K} ^{1D} _{i j}\) are the mass and stiffness matrices of the 1D problem as given by (6.20) and (6.21). We refer the reader to [22] for the description of the summation rules.

To understand the errors of the approximations of eigenvalues and eigenfunctions when quadratures are applied, we measure the errors they induce in the inner products. The following theorem generalizes the Pythagorean eigenvalue error theorem to account for these modified inner products [54].

Theorem 6.2

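For each discrete mode, with the normalization \(\| u_j \| = 1\) and \(( \widetilde u_j^h, \widetilde u_j^h)_h = 1\), we have

\[ \| u_j - \widetilde u_j^h \|_E^2 = \widetilde \lambda_j^h - \lambda_j + \lambda_j \| u_j - \widetilde u_j^h \|^2 + \| \widetilde u_j^h \|_E^2 - \| \widetilde u_j^h \|_{E,h}^2 + \lambda_j \left( 1 - \| \widetilde u_j^h \|^2 \right), \tag{6.30} \]

where \(\| \cdot \|_{E,h}\) is the energy norm evaluated by a quadrature rule.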

Proof

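By definition and linearity of the bilinear forms, we have

\[ \| u_j - \widetilde u_j^h \|_E^2 = a(u_j - \widetilde u_j^h, u_j - \widetilde u_j^h) = a(u_j, u_j) - 2 a(u_j, \widetilde u_j^h) + a(\widetilde u_j^h, \widetilde u_j^h). \tag{6.31} \]

From (6.5), we have

\[ a(u_j, u_j) = \lambda_j (u_j, u_j) = \lambda_j, \qquad a(u_j, \widetilde u_j^h) = \lambda_j (u_j, \widetilde u_j^h). \]

Thus, adding and subtracting a term \(\lambda _j (\tilde u_j^h, \tilde u_j^h)\), (6.31) is rewritten as

\[ \| u_j - \widetilde u_j^h \|_E^2 = \lambda_j \left[ (u_j, u_j) - 2 (u_j, \widetilde u_j^h) + (\widetilde u_j^h, \widetilde u_j^h) \right] - \lambda_j (\widetilde u_j^h, \widetilde u_j^h) + a(\widetilde u_j^h, \widetilde u_j^h) = \lambda_j \| u_j - \widetilde u_j^h \|^2 - \lambda_j \| \widetilde u_j^h \|^2 + \| \widetilde u_j^h \|_E^2. \]

From (6.24) and the definition of the modified energy norm \(\| \cdot \|_{E,h}\), we have

\[ \| \widetilde u_j^h \|_{E,h}^2 = \widetilde \lambda_j^h\, ( \widetilde u_j^h, \widetilde u_j^h)_h. \]

Noting that \(( \widetilde u_j^h, \widetilde u_j^h)_h = 1\), we have

\[ \| \widetilde u_j^h \|_{E,h}^2 = \widetilde \lambda_j^h. \]

Thus, adding and subtracting a term \(\widetilde \lambda _j^h - \lambda _j \) gives

\[ \| u_j - \widetilde u_j^h \|_E^2 = \widetilde \lambda_j^h - \lambda_j + \lambda_j \| u_j - \widetilde u_j^h \|^2 + \| \widetilde u_j^h \|_E^2 - \| \widetilde u_j^h \|_{E,h}^2 + \lambda_j \left( 1 - \| \widetilde u_j^h \|^2 \right), \]

which completes the proof.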

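The equation in (6.30) can be rewritten as

\[ \frac{\| u_j - \widetilde u_j^h \|_E^2}{\lambda_j} = \frac{\widetilde \lambda_j^h - \lambda_j}{\lambda_j} + \| u_j - \widetilde u_j^h \|^2 + \frac{\| \widetilde u_j^h \|_E^2 - \| \widetilde u_j^h \|_{E,h}^2}{\lambda_j} + \left( 1 - \| \widetilde u_j^h \|^2 \right), \]

in which the first term on the right-hand side is the relative error of the approximated eigenvalue, the second term represents the error of the eigenfunction in the \(L^2\) norm, the third term shows the eigenvalue-scaled error due to the modification of the inner product associated with the stiffness, and the last term shows the error due to the modification of the inner product associated with the mass.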

The left-hand side and the first two terms on the right-hand side resemble the Pythagorean eigenvalue error theorem, while the two extra terms reveal the effect of numerical integration of the inner products associated with the stiffness and the mass. When these inner products are integrated exactly, the two extra terms vanish. Consequently, Theorem 6.2 reduces to the standard Pythagorean eigenvalue error theorem.

6.4 Optimal Blending for Finite Elements and Isogeometric Analysis

Several authors (e.g. [3, 27, 56]) studied the blended spectral-finite element method that uses nonstandard quadrature rules to achieve an improvement of two orders of accuracy compared with the fully integrated schemes. This method is based on blending the full Gauss quadrature, which exactly integrates the bilinear forms to produce the mass and stiffness matrices, with the Lobatto quadrature, which underintegrates them. This methodology exploits the fact that the fully integrated finite elements exhibit phase lead when compared with the exact solutions, while methods underintegrated with the Lobatto quadrature, such as spectral elements, exhibit phase lag.

Ainsworth and Wajid [3] chose the blending parameter to maximize the order of accuracy in the phase error. They showed that the optimal choice for the blending parameter is given by weighting the spectral element and the finite element methods in the ratio \(\frac {p}{p+1}\). As mentioned above, this optimally blended scheme improves by two orders the convergence rate of the blended method when compared against the finite or spectral element methods that were the ingredients used in the blending. The blended scheme can be realized in practice without assembling the mass matrices for either of the schemes, but instead by replacing the standard Gaussian quadrature rule by an alternative rule, as Ainsworth and Wajid clearly explained in [3]. Thus, no additional computational cost is required by the blended scheme although the ability to generate a diagonal mass matrix by the underintegrated spectral method is lost.

To show how an improvement in the convergence rate is achieved, consider, for example, the approximate eigenfrequencies written as a series in Λ = ωh for the linear finite and spectral elements, respectively [3]

When these two schemes are blended using a blending parameter τ, the approximate eigenfrequencies become

For τ = 0 and τ = 1, the above expression reduces to the ones obtained by the finite element and spectral element schemes, respectively. The choice of \(\tau = 1/2\) allows the middle term of (6.34) to vanish and adds two additional orders of accuracy to the phase approximation when compared with the standard schemes. Similarly, by making the optimal choice of blending parameter \(\tau = \frac {p}{p+1}\) in high-order schemes, they removed the leading order term from the error expansion.
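The gain of two orders for linear elements can be reproduced with a short Python sketch. It rests on assumptions that are not part of the text above: a uniform periodic 1D mesh, wave speed c = 1, and the eigenvalue viewpoint in which the discrete frequency is computed for a given exact wavenumber. Under these assumptions the stiffness stencil is \((-1, 2, -1)/h\), the blended mass stencil is \(h(\alpha, 1-2\alpha, \alpha)\) with \(\alpha = (1-\tau)/6\), and a plane wave gives \((\omega^h h)^2 = 2(1-\cos\varLambda)/(1-2\alpha(1-\cos\varLambda))\). The function names are hypothetical.

```python
import math


def discrete_frequency(lam, tau):
    """Return omega^h * h for blended linear elements at Lambda = lam."""
    alpha = (1.0 - tau) / 6.0          # tau = 0: consistent mass, tau = 1: lumped
    s = 1.0 - math.cos(lam)
    return math.sqrt(2.0 * s / (1.0 - 2.0 * alpha * s))


def observed_order(tau, lam=0.2):
    """Estimate the order of the relative frequency error by halving Lambda."""
    coarse = abs(discrete_frequency(lam, tau) / lam - 1.0)
    fine = abs(discrete_frequency(0.5 * lam, tau) / (0.5 * lam) - 1.0)
    return math.log(coarse / fine, 2)


for tau in (0.0, 1.0, 0.5):
    print(tau, observed_order(tau))
```

In this sketch the observed orders are close to 2 for τ = 0 and τ = 1 and close to 4 for τ = 1/2, consistent with relative errors of order \(\varLambda^{2p}\) and \(\varLambda^{2p+2}\) for p = 1.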

The numerical examples in Sect. 6.6 show that a similar blending can be applied to the isogeometric mass and stiffness matrices to reduce the eigenvalue error. For \(C^1\) quadratic elements, the approximate eigenfrequencies are

Similarly, blending these two rules utilizing a parameter τ gives

Thus the optimal ratio of the Lobatto and Gauss quadratures is 2:1 (\(\tau = 2/3\)), similar to the optimally blended spectral-finite element scheme. For \(C^2\) cubic elements, we determine that a non-convex blending with \(\tau = 5/2\) allows us to remove the leading error term and thus achieve two additional orders of accuracy.

Remark 6.2

In general, for \(C^0\) elements such as finite elements and spectral elements, the optimal blending is \(\tau = \frac {p}{p+1}\) for arbitrary p [3]. This is, however, not true for isogeometric \(C^k\) elements, where 1 ≤ k ≤ p − 1 and p ≥ 3. Finding the optimal blending parameter for p ≥ 3 with k > 0 remains an open question. For p ≤ 7 with k = p − 1 and the discussion on its generalization, we refer the reader to [15].

Equations (6.32)–(6.36) show that the absolute errors in the eigenfrequencies converge with the rates of \(O\left ( \varLambda ^{2p+1} \right )\) and \(O\left ( \varLambda ^{2p+3} \right ) \) for the standard and optimal schemes, respectively. If we consider the relative eigenfrequency errors, from Eqs. (6.35) and (6.36), these take the form

that is, the convergence rate for frequencies computed using IGA approximations is \(O\left ( \varLambda ^{2p} \right ) \) as shown in [19, 55]. The optimal blending in IGA leads to an \(O\left ( \varLambda ^{2p+2} \right ) \) convergence rate for the relative eigenfrequencies. This superconvergence result is similar to the one achieved by the optimal blending of the spectral and finite element methods of [3].

Remark 6.3

Wang et al. [61, 62] constructed super-convergent isogeometric finite elements for dispersion by blending two alternative quadrature methods. They used full Gauss quadrature and a method which reduces the bandwidth of the mass and stiffness matrices. Although the construction is different, the resulting algebraic system is identical for uniform meshes.

6.5 Two-Point Rules for \(C^1\) Quadratic Isogeometric Analysis

The optimally-blended rules presented above first introduce an auxiliary parameter for combining two different standard quadrature rules. Then the parameter is determined by eliminating the highest order term in the error expansion. We can achieve a similar result by designing a nonstandard quadrature rule here.

For \(C^1\) quadratic isogeometric analysis, the blending requires evaluations of the function at two sets of quadrature nodes on each element, which is not computationally efficient. In this section, we present a two-point rule which eliminates the leading order term in the error expansion and hence results in an equivalent but computationally cheaper scheme for the \(C^1\) quadratic isogeometric elements.

We consider uniform meshes with periodic boundary conditions for the eigenvalue problem in 1D. In the reference interval [−1, 1], the two point rules are listed in Table 6.1.

These two-point rules share some sense of symmetry and lead to the same matrix eigenvalue problem. On uniform meshes with periodic boundary conditions, all these rules give the same dispersion errors.

In a periodic domain discretized with a uniform mesh, we show numerically that these two-point rules lead to the same set of eigenvalues and eigenfunctions as those obtained by the optimally-blended schemes. In fact, they result in the same stiffness and mass matrices. The two-point rules fail when we use a boundary condition other than periodic, for example, Dirichlet or Neumann conditions. This happens because the two-point rule does not integrate the stiffness terms exactly on the elements near the boundary, where the derivatives of the B-spline basis functions do not vanish; see Fig. 6.1. We will address this shortcoming in future work.

For multidimensional cases, we assume that a tensor product grid is placed on the domain Ω. We then generalize these two-point rules to \(2^d\)-point rules for d-dimensional problems by a simple tensor-product construction. The two-point rules developed above thus remain valid for higher-dimensional problems. We refer the reader to [15, 25] for more details.
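The tensor-product construction can be sketched in a few lines of Python. Since the nodes of the two-point rules in Table 6.1 are not reproduced here, the sketch uses the standard two-point Gauss rule as a stand-in; this substitution and all names are assumptions made for illustration.

```python
import itertools
import math

# Stand-in 1D two-point rule on [-1, 1] (standard Gauss, NOT the rule of Table 6.1).
RULE_1D = [(-1.0 / math.sqrt(3.0), 1.0), (1.0 / math.sqrt(3.0), 1.0)]


def tensor_rule(rule_1d, dim):
    """Build the dim-dimensional tensor-product rule from a 1D rule."""
    rule = []
    for combo in itertools.product(rule_1d, repeat=dim):
        point = tuple(x for x, _ in combo)
        weight = math.prod(w for _, w in combo)
        rule.append((point, weight))
    return rule


rule_2d = tensor_rule(RULE_1D, 2)
print(len(rule_2d))   # 2**2 points
# Integrate x^2 * y^2 over [-1, 1]^2; the exact value is (2/3)^2.
print(sum(w * (x * x) * (y * y) for (x, y), w in rule_2d))
```

A rule with two points per direction yields \(2^d\) points in d dimensions, and each weight is the product of the corresponding 1D weights.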

6.6 Numerical Examples

In this section, we present numerical examples of the one- and two-dimensional problems described in Sect. 6.2 to show how the use of optimal quadratures reduces the approximation errors in isogeometric analysis.

The 1D elliptic eigenvalue problem has the following exact eigenvalues and their corresponding eigenfunctions

for j = 1, 2, …. The approximate eigenvalues \(\lambda _j^h\) are sorted in ascending order and are compared to the corresponding exact eigenvalues λ j. The total number of degrees of freedom (discrete modes) is N = 1000.

Figure 6.2 compares the approximation errors of \(C^1\) quadratic isogeometric elements using the standard Gaussian quadrature and the optimal rule. We show the relative eigenvalue errors \(\frac {\mu _l^h - {\lambda _l}}{\lambda _l}\), the \(L^2\)-norm eigenfunction errors \(\left \| u_l - v_l^h \right \|_0^2\) and the relative energy-norm errors \(\frac {\left \| u_l - v_l^h \right \|_E^2}{\lambda _l}\). This format of error representation clearly illustrates the budget of the generalized Pythagorean eigenvalue theorem. The error in the \(L^2\) norm \(1 - \left \| v_l^h \right \|_0^2\) is shown only in the case when it is not zero.

In Fig. 6.2, the use of the optimal quadrature leads to more accurate results. Surprisingly, not only the eigenvalues, but also the eigenfunctions of the problem are better approximated in this particular case. The optimal ratio of blending of the Lobatto and Gauss quadrature rules in this case is 2:1, which is the same as the ratio proposed by Ainsworth and Wajid [3] for the finite element case.

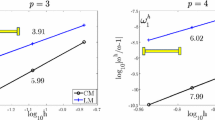

Figure 6.3 shows the dispersion errors in the eigenvalue approximation with \(C^1\) quadratic isogeometric elements. The meshes used in these simulations range from 10 to 2560 elements. These results confirm two extra orders of convergence in the eigenvalue errors.
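The gain in convergence order can be reproduced in a simplified setting. The following sketch is not the setup behind Fig. 6.3: it assumes the periodic model problem -u'' = λu on [0, 1] with a uniform mesh, the three-point Gauss and Lobatto rules, and a blending weight τ = 2/3 on the Lobatto rule. On such a mesh the assembled matrices are circulant, so the discrete eigenvalue of a mode follows from the Fourier symbols of the mass and stiffness stencils. All function names are hypothetical, and the mesh range is cut at 80 elements because for the lowest mode the blended error approaches round-off on finer meshes.

```python
import numpy as np

def element_matrices(nodes, weights):
    """Mass and stiffness matrices of C^1 quadratic B-splines on one
    uniform element of unit length, using a rule given on [0, 1]."""
    M = np.zeros((3, 3))
    K = np.zeros((3, 3))
    for t, w in zip(nodes, weights):
        # The three quadratic B-spline pieces on the element and their derivatives.
        N = np.array([(1 - t)**2 / 2, (1 + 2*t - 2*t**2) / 2, t**2 / 2])
        dN = np.array([-(1 - t), 1 - 2*t, t])
        M += w * np.outer(N, N)
        K += w * np.outer(dN, dN)
    return M, K

def symbol(E, theta):
    """Fourier symbol of the periodic matrix assembled from element matrix E."""
    s = [np.trace(E, offset=k) for k in range(3)]   # sums of the k-th diagonals
    return s[0] + 2*s[1]*np.cos(theta) + 2*s[2]*np.cos(2*theta)

def relative_error(M, K, n, mode=1):
    """Relative eigenvalue error of one mode on a mesh of n elements."""
    theta = 2*np.pi*mode / n                         # theta = omega * h, h = 1/n
    lam_h = symbol(K, theta) / symbol(M, theta) * n**2
    lam = (2*np.pi*mode)**2                          # exact periodic eigenvalue
    return lam_h / lam - 1

xg, wg = np.polynomial.legendre.leggauss(3)
gauss = ((xg + 1) / 2, wg / 2)                       # mapped to [0, 1]
lobatto = (np.array([0.0, 0.5, 1.0]), np.array([1.0, 4.0, 1.0]) / 6)

Mg, Kg = element_matrices(*gauss)
Ml, Kl = element_matrices(*lobatto)
tau = 2.0 / 3.0                                      # assumed weight on Lobatto
Mb, Kb = tau*Ml + (1 - tau)*Mg, tau*Kl + (1 - tau)*Kg

sizes = [10, 20, 40, 80]
err_gauss = [relative_error(Mg, Kg, n) for n in sizes]
err_blend = [relative_error(Mb, Kb, n) for n in sizes]

# Observed orders between successive meshes (the mesh size halves each time).
rates_gauss = np.log2(np.array(err_gauss[:-1]) / np.array(err_gauss[1:]))
rates_blend = np.log2(np.array(err_blend[:-1]) / np.array(err_blend[1:]))
```

Under these assumptions the observed rates approach 4 for the fully-integrated scheme and 6 for the blended one, which is consistent with the two extra orders reported above.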

To study the behavior of discrete eigenfunctions from different parts of the spectrum, in Fig. 6.4 we compare the discrete and analytical eigenfunctions for \(C^1\) quadratic elements. We show the 200th and the 400th eigenfunctions, where the error is low, and the 600th and the 800th eigenfunctions, for which the approximation is worse. As expected, both the fully- and under-integrated methods provide similar eigenfunctions. There is no loss of accuracy in eigenfunction approximation due to the use of the non-standard optimal quadrature rules.

Fig. 6.4 Discrete 200th (top left), 400th (top right), 600th (bottom left) and 800th (bottom right) eigenfunctions for \(C^1\) quadratic elements. The discrete eigenfunctions resulting from the optimal (red squares) and the standard scheme (blue line) are compared with the analytical eigenfunctions (black line). The total number of discrete modes is 1000.

We also note that, for practical applications, one may look for a scheme that reduces the errors in a desired interval of wavenumbers (frequencies) for a given mesh size. Such blending schemes are also possible. Although they are not optimal, in the sense that they do not deliver superconvergence, they approximate the eigenvalues better than the optimal blending in certain wavenumber ranges that are of practical interest in wave propagation problems. We refer the reader to [54] for further details.

Next, we continue our study with the dispersion properties of the two-dimensional eigenvalue problem on tensor product meshes. Optimal quadratures for multidimensional problems are formed by the tensor product of the one-dimensional rules. The exact eigenvalues and eigenfunctions of the 2D eigenvalue problem are given by

for k, l = 1, 2, …. Again, the approximate eigenvalues \(\lambda _{kl}^h\) are sorted in ascending order.
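The sorting step can be illustrated with a short sketch. It assumes a separable problem on a tensor-product mesh, for which the two-dimensional eigenvalues are sums of pairs of one-dimensional eigenvalues. The one-dimensional spectrum \(k^2\pi^2\) used below is a stand-in chosen for illustration, and `sorted_2d_eigenvalues` is a hypothetical helper.

```python
import numpy as np

def sorted_2d_eigenvalues(lam_1d, count):
    """Return the `count` smallest sums lam_k + lam_l in ascending order,
    together with their 1-based index pairs (k, l)."""
    lam_1d = np.asarray(lam_1d, dtype=float)
    sums = lam_1d[:, None] + lam_1d[None, :]          # all pairwise sums
    order = np.argsort(sums, axis=None, kind="stable")[:count]
    k, l = np.unravel_index(order, sums.shape)
    return sums.ravel()[order], list(zip(k + 1, l + 1))

# Stand-in 1D spectrum (assumption): lambda_k = k^2 pi^2 for k = 1, ..., 50.
lam_1d = (np.arange(1, 51) * np.pi) ** 2

values, indices = sorted_2d_eigenvalues(lam_1d, 4)
```

Repeated eigenvalues such as those of the pairs (1, 2) and (2, 1) appear next to each other after sorting, so the comparison between approximate and exact eigenvalues has to match sorted positions rather than index pairs.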

Figure 6.5 compares the eigenvalue errors of the standard Gauss quadrature with those of the optimal scheme (τ = 2∕3) for \(C^1\) quadratic elements. The latter has significantly better approximation properties.

These results demonstrate that the use of optimal quadratures in isogeometric analysis significantly improves the accuracy of the discrete approximations when compared to the fully-integrated Gauss-based method.

Figure 6.6 compares the eigenvalue errors for \(C^2\) cubic isogeometric elements. Again, the optimal scheme has significantly better approximation properties than the standard method. The scale and representation format are different from those of Fig. 6.5.

Figure 6.7 compares the dispersion errors of the standard Gauss fully-integrated method with the optimally-blended scheme and the two-point rule described in the previous section. In this example, we use periodic knots at the boundaries of the domain. As can be seen from Fig. 6.7, the two-point rule leads to the same results as those obtained by the optimally-blended scheme. At the same time, this rule is computationally cheaper than the three-point Gauss rule or any blended scheme.

6.7 Conclusions and Future Outlook

To understand the dispersion properties of isogeometric analysis and to improve them, we generalize the Pythagorean eigenvalue error theorem to account for the effects of the modified inner products on the resulting weak forms. We show that the blended quadrature rules reduce the phase error of the numerical method for the eigenvalue problems.

The proposed optimally-blended scheme further improves the superior spectral accuracy of isogeometric analysis. We achieve two extra orders of convergence in the eigenvalues by applying these blended rules. We present and test two-point rules which reduce the number of quadrature nodes and the computational cost and, at the same time, produce the same eigenvalues and eigenfunctions. We believe that one can extend the method to arbitrarily high-order \(C^{p-1}\) isogeometric elements by identifying suitable quadrature rules. Nevertheless, for higher-order polynomial approximations the only known optimal quadratures are the result of blending a Gauss rule and a Lobatto quadrature rule. The search for quadratures of this class that result in super-convergent dispersion properties and use fewer quadrature points will be the subject of our future work.

Another future direction is the study of non-uniform meshes and of wave propagation problems with non-constant coefficients. The study of variable continuity is also of interest. We will study the impact of the variable continuity of the basis functions on the dispersion properties of the numerical methods and how the dispersion can be minimized by designing goal-oriented quadrature rules.

References

Ainsworth, M.: Discrete dispersion relation for hp-version finite element approximation at high wave number. SIAM J. Numer. Anal. 42(2), 553–575 (2004)

Ainsworth, M., Wajid, H.A.: Dispersive and dissipative behavior of the spectral element method. SIAM J. Numer. Anal. 47(5), 3910–3937 (2009)

Ainsworth, M., Wajid, H.A.: Optimally blended spectral-finite element scheme for wave propagation and nonstandard reduced integration. SIAM J. Numer. Anal. 48(1), 346–371 (2010)

Akkerman, I., Bazilevs, Y., Calo, V.M., Hughes, T.J.R., Hulshoff, S.: The role of continuity in residual-based variational multiscale modeling of turbulence. Comput. Mech. 41(3), 371–378 (2008)

Antolin, P., Buffa, A., Calabro, F., Martinelli, M., Sangalli, G.: Efficient matrix computation for tensor-product isogeometric analysis: the use of sum factorization. Comput. Methods Appl. Mech. Eng. 285, 817–828 (2015)

Auricchio, F., Calabro, F., Hughes, T.J.R., Reali, A., Sangalli, G.: A simple algorithm for obtaining nearly optimal quadrature rules for NURBS-based isogeometric analysis. Comput. Methods Appl. Mech. Eng. 249, 15–27 (2012)

Babuska, I.M., Sauter, S.A.: Is the pollution effect of the fem avoidable for the Helmholtz equation considering high wave numbers? SIAM J. Numer. Anal. 34(6), 2392–2423 (1997)

Banerjee, U.: A note on the effect of numerical quadrature in finite element eigenvalue approximation. Numer. Math. 61(1), 145–152 (1992)

Banerjee, U., Osborn, J.E.: Estimation of the effect of numerical integration in finite element eigenvalue approximation. Numer. Math. 56(8), 735–762 (1989)

Banerjee, U., Suri, M.: Analysis of numerical integration in p-version finite element eigenvalue approximation. Numer. Methods Partial Differ. Equ. 8(4), 381–394 (1992)

Bartoň, M., Calo, V.M.: Gaussian quadrature for splines via homotopy continuation: rules for C2 cubic splines. J. Comput. Appl. Math. 296, 709–723 (2016)

Bartoň, M., Calo, V.M.: Optimal quadrature rules for odd-degree spline spaces and their application to tensor-product-based isogeometric analysis. Comput. Methods Appl. Mech. Eng. 305, 217–240 (2016)

Bazilevs, Y., Calo, V.M., Cottrell, J., Hughes, T.J.R., Reali, A., Scovazzi, G.: Variational multiscale residual-based turbulence modeling for large eddy simulation of incompressible flows. Comput. Methods Appl. Mech. Eng. 197(1), 173–201 (2007)

Calabrò, F., Sangalli, G., Tani, M.: Fast formation of isogeometric Galerkin matrices by weighted quadrature. Comput. Methods Appl. Mech. Eng. 316, 606–622 (2017)

Calo, V.M., Deng, Q., Puzyrev, V.: Dispersion optimized quadratures for isogeometric analysis. arXiv:1702.04540 (2017, preprint)

Collier, N., Pardo, D., Dalcin, L., Paszynski, M., Calo, V.M.: The cost of continuity: a study of the performance of isogeometric finite elements using direct solvers. Comput. Methods Appl. Mech. Eng. 213, 353–361 (2012)

Collier, N., Dalcin, L., Pardo, D., Calo, V.M.: The cost of continuity: performance of iterative solvers on isogeometric finite elements. SIAM J. Sci. Comput. 35(2), A767–A784 (2013)

Collier, N., Dalcin, L., Calo, V.M.: On the computational efficiency of isogeometric methods for smooth elliptic problems using direct solvers. Int. J. Numer. Methods Eng. 100(8), 620–632 (2014)

Cottrell, J.A., Reali, A., Bazilevs, Y., Hughes, T.J.R.: Isogeometric analysis of structural vibrations. Comput. Methods Appl. Mech. Eng. 195(41), 5257–5296 (2006)

Cottrell, J., Hughes, T.J.R., Reali, A.: Studies of refinement and continuity in isogeometric structural analysis. Comput. Methods Appl. Mech. Eng. 196(41), 4160–4183 (2007)

Cottrell, J.A., Hughes, T.J.R., Bazilevs, Y.: Isogeometric Analysis: Toward Integration of CAD and FEA. Wiley, Hoboken (2009)

De Basabe, J.D., Sen, M.K.: Grid dispersion and stability criteria of some common finite-element methods for acoustic and elastic wave equations. Geophysics 72(6), T81–T95 (2007)

De Basabe, J.D., Sen, M.K.: Stability of the high-order finite elements for acoustic or elastic wave propagation with high-order time stepping. Geophys. J. Int. 181(1), 577–590 (2010)

Dedè, L., Jäggli, C., Quarteroni, A.: Isogeometric numerical dispersion analysis for two-dimensional elastic wave propagation. Comput. Methods Appl. Mech. Eng. 284, 320–348 (2015)

Deng, Q., Bartoň, M., Puzyrev, V., Calo, V.M.: Dispersion-minimizing optimal quadrature rules for C1 quadratic isogeometric analysis. Comput. Methods Appl. Mech. Eng. 328, 554–564 (2018)

Elguedj, T., Bazilevs, Y., Calo, V.M., Hughes, T.J.R.: B-bar and F-bar projection methods for nearly incompressible linear and non-linear elasticity and plasticity using higher-order NURBS elements. Comput. Methods Appl. Mech. Eng. 197(33), 2732–2762 (2008)

Esterhazy, S., Melenk, J.: An analysis of discretizations of the Helmholtz equation in L2 and in negative norms. Comput. Math. Appl. 67(4), 830–853 (2014). https://doi.org/10.1016/j.camwa.2013.10.005

Ewing, R., Heinemann, R., et al.: Incorporation of mixed finite element methods in compositional simulation for reduction of numerical dispersion. In: SPE Reservoir Simulation Symposium. Society of Petroleum Engineers (1983)

Fix, G.J.: Effect of quadrature errors in finite element approximation of steady state, eigenvalue and parabolic problems. In: Aziz, A.K. (ed.) The Mathematical Foundation of the Finite Element Method with Applications to Partial Differential Equations, pp. 525–556 (1972)

Gao, L., Calo, V.M.: Fast isogeometric solvers for explicit dynamics. Comput. Methods Appl. Mech. Eng. 274, 19–41 (2014)

Garcia, D., Pardo, D., Dalcin, L., Paszynski, M., Collier, N., Calo, V.M.: The value of continuity: refined isogeometric analysis and fast direct solvers. Comput. Methods Appl. Mech. Eng. 316, 586–605 (2016)

Garcia, D., Bartoň, M., Pardo, D.: Optimally refined isogeometric analysis. Proc. Comput. Sci. 108, 808–817 (2017)

Gómez, H., Calo, V.M., Bazilevs, Y., Hughes, T.J.R.: Isogeometric analysis of the Cahn–Hilliard phase-field model. Comput. Methods Appl. Mech. Eng. 197(49), 4333–4352 (2008)

Gomez, H., Hughes, T.J.R., Nogueira, X., Calo, V.M.: Isogeometric analysis of the isothermal Navier–Stokes–Korteweg equations. Comput. Methods Appl. Mech. Eng. 199(25), 1828–1840 (2010)

Guddati, M.N., Yue, B.: Modified integration rules for reducing dispersion error in finite element methods. Comput. Methods Appl. Mech. Eng. 193(3), 275–287 (2004)

Harari, I.: Reducing spurious dispersion, anisotropy and reflection in finite element analysis of time-harmonic acoustics. Comput. Methods Appl. Mech. Eng. 140(1–2), 39–58 (1997)

Harari, I., Slavutin, M., Turkel, E.: Analytical and numerical studies of a finite element PML for the Helmholtz equation. J. Comput Acoust. 8(1), 121–137 (2000)

He, Z., Cheng, A., Zhang, G., Zhong, Z., Liu, G.: Dispersion error reduction for acoustic problems using the edge-based smoothed finite element method (ES-FEM). Int. J. Numer. Methods Eng. 86(11), 1322–1338 (2011)

Hiemstra, R.R., Calabrò, F., Schillinger, D., Hughes, T.J.R.: Optimal and reduced quadrature rules for tensor product and hierarchically refined splines in isogeometric analysis. Comput. Methods Appl. Mech. Eng. 316, 966–1004 (2016)

Hughes, T.J.R., Cottrell, J.A., Bazilevs, Y.: Isogeometric analysis: CAD, finite elements, NURBS, exact geometry and mesh refinement. Comput. Methods Appl. Mech. Eng. 194(39), 4135–4195 (2005)

Hughes, T.J.R., Reali, A., Sangalli, G.: Duality and unified analysis of discrete approximations in structural dynamics and wave propagation: comparison of p-method finite elements with k-method NURBS. Comput. Methods Appl. Mech. Eng. 197(49), 4104–4124 (2008)

Hughes, T.J.R., Reali, A., Sangalli, G.: Efficient quadrature for NURBS-based isogeometric analysis. Comput. Methods Appl. Mech. Eng. 199(5), 301–313 (2010)

Hughes, T.J.R., Evans, J.A., Reali, A.: Finite element and NURBS approximations of eigenvalue, boundary-value, and initial-value problems. Comput. Methods Appl. Mech. Eng. 272, 290–320 (2014)

Ihlenburg, F., Babuška, I.: Dispersion analysis and error estimation of Galerkin finite element methods for the Helmholtz equation. Int. J. Numer. Methods Eng. 38(22), 3745–3774 (1995)

Komatitsch, D., Tromp, J.: Introduction to the spectral element method for three-dimensional seismic wave propagation. Geophys. J. Int. 139(3), 806–822 (1999)

Komatitsch, D., Vilotte, J.P.: The spectral element method: an efficient tool to simulate the seismic response of 2d and 3d geological structures. Bull. Seismol. Soc. Am. 88(2), 368–392 (1998)

Lipton, S., Evans, J.A., Bazilevs, Y., Elguedj, T., Hughes, T.J.R.: Robustness of isogeometric structural discretizations under severe mesh distortion. Comput. Methods Appl. Mech. Eng. 199(5), 357–373 (2010)

Liu, J., Dedè, L., Evans, J.A., Borden, M.J., Hughes, T.J.R.: Isogeometric analysis of the advective Cahn–Hilliard equation: spinodal decomposition under shear flow. J. Comput. Phys. 242, 321–350 (2013)

Marfurt, K.J.: Accuracy of finite-difference and finite-element modeling of the scalar and elastic wave equations. Geophysics 49(5), 533–549 (1984)

Motlagh, Y.G., Ahn, H.T., Hughes, T.J.R., Calo, V.M.: Simulation of laminar and turbulent concentric pipe flows with the isogeometric variational multiscale method. Comput. Fluids 71, 146–155 (2013)

Nguyen, L.H., Schillinger, D.: A collocated isogeometric finite element method based on Gauss–Lobatto Lagrange extraction of splines. Comput. Methods Appl. Mech. Eng. 316, 720–740 (2016)

Pardo, D., Paszynski, M., Collier, N., Alvarez, J., Dalcin, L., Calo, V.M.: A survey on direct solvers for Galerkin methods. SeMA J. 57(1), 107–134 (2012)

Piegl, L., Tiller, W.: The NURBS Book. Springer, New York (1997)

Puzyrev, V., Deng, Q., Calo, V.M.: Dispersion-optimized quadrature rules for isogeometric analysis: modified inner products, their dispersion properties, and optimally blended schemes. Comput. Methods Appl. Mech. Eng. 320, 421–443 (2017). https://doi.org/10.1016/j.cma.2017.03.029

Reali, A.: An isogeometric analysis approach for the study of structural vibrations. Master’s Thesis, University of Pavia (2004)

Seriani, G., Oliveira, S.P.: Optimal blended spectral-element operators for acoustic wave modeling. Geophysics 72(5), SM95–SM106 (2007)

Stoer, J., Bulirsch, R.: Introduction to Numerical Analysis, vol. 12. Springer, New York (2013)

Strang, G., Fix, G.J.: An Analysis of the Finite Element Method, vol. 212. Prentice-Hall, Englewood Cliffs (1973)

Thompson, L.L., Pinsky, P.M.: Complex wavenumber Fourier analysis of the p-version finite element method. Comput. Mech. 13(4), 255–275 (1994)

Thompson, L.L., Pinsky, P.M.: A Galerkin least-squares finite element method for the two-dimensional Helmholtz equation. Int. J. Numer. Methods Eng. 38(3), 371–397 (1995)

Wang, D., Liu, W., Zhang, H.: Novel higher order mass matrices for isogeometric structural vibration analysis. Comput. Methods Appl. Mech. Eng. 260, 92–108 (2013)

Wang, D., Liu, W., Zhang, H.: Superconvergent isogeometric free vibration analysis of Euler–Bernoulli beams and Kirchhoff plates with new higher order mass matrices. Comput. Methods Appl. Mech. Eng. 286, 230–267 (2015)

Yue, B., Guddati, M.N.: Dispersion-reducing finite elements for transient acoustics. J. Acoust. Soc. Am. 118(4), 2132–2141 (2005)

Acknowledgements

This publication was made possible in part by the CSIRO Professorial Chair in Computational Geoscience at Curtin University and the Deep Earth Imaging Enterprise Future Science Platforms of the Commonwealth Scientific Industrial Research Organisation, CSIRO, of Australia. Additional support was provided by the National Priorities Research Program grant 7-1482-1-278 from the Qatar National Research Fund (a member of The Qatar Foundation), by the European Union’s Horizon 2020 Research and Innovation Program of the Marie Skłodowska-Curie grant agreement No. 644202, and by the Projects of the Spanish Ministry of Economy and Competitiveness MTM2016-76329-R (AEI/FEDER, EU). The Spring 2016 Trimester on “Numerical methods for PDEs”, organized with the collaboration of the Centre Emile Borel at the Institut Henri Poincare in Paris supported VMC’s visit to IHP in October, 2016.

Copyright information

© 2018 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Bartoň, M., Calo, V., Deng, Q., Puzyrev, V. (2018). Generalization of the Pythagorean Eigenvalue Error Theorem and Its Application to Isogeometric Analysis. In: Di Pietro, D., Ern, A., Formaggia, L. (eds) Numerical Methods for PDEs. SEMA SIMAI Springer Series, vol 15. Springer, Cham. https://doi.org/10.1007/978-3-319-94676-4_6

DOI: https://doi.org/10.1007/978-3-319-94676-4_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-94675-7

Online ISBN: 978-3-319-94676-4

eBook Packages: Mathematics and Statistics (R0)