Abstract

Modern-day, self-propelled, heavier-than-air flight traces its roots back to 1903, when the Wright brothers remained airborne for 12 s at Kitty Hawk, NC. Just 30 years later, the Douglas Company introduced the 12-passenger twin-engine DC-1, and Boeing introduced the 247, a 10-passenger, all-metal twin-engine aircraft with retractable landing gear; soon thereafter, the era of jet engines was ushered in. About 60 years later, in the 1990s, Boeing introduced the 777, the biggest twin-engine jet ever to fly and the first aircraft to be produced using computer-aided design and engineering. Today, aircraft like the superjumbo Airbus A380 and the Boeing 787 carry more than a billion passengers each year around the world. The history of this dramatic advance in passenger flight is filled with inventions, innovative designs, novel materials, technological advances, accidents, and lessons learned. Driven by societal needs, economics, government regulations, and more, materials and component design, process development, property assessment, and life prediction have all been central to these advances. In this chapter, we follow this fascinating thread by focusing on the development of materials and technology for aircraft fuselages and engines over time.


Keywords

- Airframe

- Jet engines

- Aluminum alloys

- Titanium alloys

- Nickel-based superalloys

- Precipitation hardening

- Polymer matrix composites (PMC)

- Intermetallics

- Ceramic matrix composites (CMC)

- Thermal barrier coatings (TBC) and environmental barrier coatings (EBC)

- Castings

- High-temperature properties

- Weight savings

- Reduced noise

- Reduced emissions

History of Aviation

The Early Years

The dream to “soar like a bird” is as old as mankind, but the concept of an airplane has been around for only two centuries or so. Over this period, flight can be divided into an early period of lighter-than-air flight (balloons) and the subsequent development of heavier-than-air flying machines. Before this period, men and women tried to strap on wings or built machines with flapping wings to imitate birds, but with little success. Around 1490, Leonardo da Vinci sketched plans for a man-carrying machine with flapping wings (an ornithopter); a full-size model of it can be found in the Smithsonian National Air and Space Museum in Washington, DC, and a picture of it is shown in Fig. 5.1.

In 1783, the first clearly recorded manned balloon flight took place after the Montgolfier brothers developed the hot-air balloon and flew it across Paris. Two weeks later, hot air gave way to hydrogen when Professor Jacques Charles and the Robert brothers flew the first hydrogen-filled balloon, also in Paris (Wiki: Montgolfier_brothers, January 19, 2018a). However, early balloons could not be steered, and their direction of flight was largely at the mercy of the wind. The next 100 years saw the design and construction of fixed-wing aircraft. In 1799, Sir George Cayley described and defined the forces of lift and drag and produced the design for the first fixed-wing aircraft. He subsequently built and flew several fixed-wing craft between 1799 and 1853. These aircraft embodied a fixed wing to provide lift, flappers to provide thrust, and a movable tail (rudder) to provide control, and thus the science of the modern-day aircraft was born (Wiki: George Cayley; April 09, 2018c).

In roughly the following three decades, from about 1850 to 1880, designers began testing various types of engines to propel their air vehicles. In 1857, Félix du Temple flew a model monoplane whose propellers were driven first by a clockwork spring and later by a small steam engine. It took off under its own power, flew a short distance, and landed safely, recording the first successful flight of a powered aircraft (Wiki: Félix_du_Temple_de_la_Croix; March 10, 2018d). In 1864, Siegfried Marcus built an internal combustion engine with a carburetor (which he called a vaporisater) and an electrical ignition system that used a primitive magneto to generate a spark, although this engine was intended for automobiles (www.asme.org; siegfried-marcus; MacRae 2012). In 1866, the Aeronautical Society of Great Britain (later the Royal Aeronautical Society) was formed in England, and in 1868 it sponsored the “First Great Aeronautical Exhibition.” In 1876, Nikolaus Otto in Germany developed the four-stroke engine, which is the basis for the modern internal combustion engine (Encyclopedia Britannica; Nikolaus Otto; October 03, 2016).

Between 1880 and 1900, several attempts to fly powered vehicles were made, with varying degrees of success, in Europe, the United States, and Australia by pioneers including Otto Lilienthal, Lawrence Hargrave, Samuel Langley, Hiram Maxim, Wilbur Wright, and Orville Wright. In December 1903, at Kitty Hawk, North Carolina, Orville and Wilbur Wright made the first powered flight (Fig. 5.2) in a fully controllable aircraft capable of sustaining itself in the air: the Flyer I, a heavier-than-air, gasoline-powered, propeller-driven biplane. The flight lasted only 12 s and covered 120 ft (Wiki; Wright brothers; April 03, 2018e). They made a few more flights that day before a strong gust of wind rolled the aircraft over and wrecked it.

The first powered, controlled flight by a heavier-than-air machine; Wright brothers, Kitty Hawk, NC, December 17, 1903 (https://www.airspacemag.com/history-of-flight/wright-brothers-first-flight-photo-annotated-180949489/). (Figure used with permission of the Smithsonian National Air and Space Museum, NASM 2003-3463)

The Flyer had a wooden frame made of spruce and ash. The frame was covered with finely woven cotton cloth and sealed with canvas paint. The metal fittings were made of mild steel, and the aircraft was rigged with 15-gauge bicycle spoke wire. The engine block was cast from an aluminum alloy of 92% aluminum and 8% copper. Ninety years later, Gayle and Goodway (1994) demonstrated that this engine crankcase contained a bimodal distribution of Guinier–Preston (GP) zones (discussed below), indicative of precipitation hardening, even though the concept of precipitation hardening was unknown in 1903. The other parts of the engine were made of steel or cast iron, except for the spark points, which contained tiny bits of platinum (Crouch 2018).

From 1903 to 1940

The 30 years that followed the historic Wright brothers’ flight at Kitty Hawk were filled with inventions, the development of new technologies, and their implementation, spurred in part by the need for higher speeds, higher altitudes, improved reliability, and increased maneuverability during World War I. Notable events included the following:

i. The development in 1914 of the automatic gyrostabilizer, which became the basis for the “automatic pilot”

ii. Several improvements in structure, control, and propulsion system design between 1914 and 1918

iii. The introduction by Hugo Junkers in Germany in 1917 of the first all-metal airplane (Junkers J4, Fig. 5.3), built largely from a lightweight, precipitation-hardenable aluminum alloy trade-named Duralumin

iv. The inauguration of airmail service in the United States in Washington, DC, and in 1920, of the first transcontinental airmail service, from San Francisco to New York

Junkers J4. (From: http://hugojunkers.bplaced.net/junkers-j4-j-i.html)

In 1919, Britain and France introduced airborne passenger service across the English Channel. In 1927, Charles Lindbergh completed the first nonstop solo flight from New York to Paris in the Spirit of St. Louis, a single-seat Ryan monoplane. The year 1933 was notable: the Douglas Company introduced the 12-passenger twin-engine DC-1 (Fig. 5.4a; wiki: Douglas DC-1; October 2017), and Boeing introduced the 247 (Wiki: Boeing 247; April 2018f), a propeller-driven, twin-engine, 10-passenger monoplane with retractable landing gear that reduced drag during flight (Fig. 5.4b). In 1935, the first practical radar system for meteorological application was patented in England; radar was later used extensively during World War II to detect incoming aircraft. By 1936, the Douglas Company was producing the DC-3 (Wiki: Douglas DC-3; March 2018g), which incorporated many aviation engineering advances, including almost completely enclosed engines to reduce drag, new types of wing flaps for better control, and variable-pitch propellers, whose angle could be altered in flight to improve efficiency and thrust. The DC-3 could accommodate 20 passengers and could be configured with sleeping berths for long-distance flights. By 1938, 80% of US passengers were flying in DC-3s, and a dozen foreign airlines had adopted the plane.

(a) Douglas DC-1 . (https://en.wikipedia.org/wiki/Douglas_DC-1; image from https://en.wikipedia.org/wiki/File:Douglas_DC-1.jpg) and (b) Boeing 247. (https://en.wikipedia.org/wiki/Boeing_247; image from https://commons.wikimedia.org/wiki/File:Boeing,_247.jpg)

From 1940 to 2000

The period spanning World War II (1939–1945) witnessed significant scientific and technical developments in radar technology, radiowave navigation, and instrument landing systems in the United Kingdom, Germany, and the United States. In 1942, Adolf Galland, the director general of fighters for the Luftwaffe and one of Germany’s top pilots, flew a prototype of one of the world’s first jets, the Messerschmitt Me 262, at 540 miles per hour. The first jet-powered commercial aircraft was the de Havilland Comet, which first flew in 1949 and made its first commercial flight, from London to South Africa, in May 1952 (Wiki: De Havilland Comet; March 2018h).

The jet engine had a profound impact on commercial aviation. As late as the 1950s, transatlantic flights in propeller-driven planes lasted more than 15 h, but in the 1960s, aircraft such as Boeing’s classic 707 cut that time in half. The 707, Boeing’s first four-engine jetliner (Fig. 5.5a), was in commercial service between 1958 and 1979 and is credited with ushering in the jet age (Wiki: Boeing 707; April 2018i). Increases in speed certainly pushed commercial aviation along, but the business of flying was also demanding ever bigger airplanes. In response came the Boeing 747 (Wiki: Boeing 747; April 2018j), a wide-bodied jet fitted with turbofan engines that first flew in 1969 and entered service in 1970; it is perhaps the biggest success story in modern commercial aviation (Fig. 5.5b). Other aircraft companies introduced their own wide-bodied jets, most notably the DC-10 from McDonnell Douglas in the early 1970s (Wiki: McDonnell Douglas DC-10; April 2018k) and the L-1011 from Lockheed Corporation (Wiki: Lockheed L-1011 TriStar; April 2018l), both fitted with three engines: two below the wings and one at the base of the vertical stabilizer/tail. Whereas the L-1011 used Rolls-Royce engines, the original DC-10 variants used GE CF6 engines, and subsequent longer-range variants used Pratt & Whitney turbofan engines.

(a) The Boeing 707 (https://en.wikipedia.org/wiki/Boeing_707; figure from https://commons.wikimedia.org/wiki/File:Boeing_707-138B_Qantas_Jett_Clipper_Ella_N707JT.jpg) and (b) the Boeing 747 (jumbo jet) (https://en.wikipedia.org/wiki/Boeing_747; figure from https://commons.wikimedia.org/wiki/File:Pan_Am_Boeing_747-121_N732PA_Bidini.jpg)

Perhaps the two most popular commercial jet aircraft models today are the Boeing 737 and the Airbus A320. The Boeing 737 (Wiki: Boeing 737; April 2018m) was initially envisioned as a short- to medium-range twinjet and eventually developed into several variants. It entered airline service in 1968 as the original -100 version, followed soon thereafter by the -200. In the 1980s, the longer -300, -400, and -500 models were launched; collectively called the 737 Classic series, they featured wing improvements and CFM56 turbofan engines. The 737 Next Generation (-600, -700, -800, and -900 models), introduced in the 1990s, included an increased wingspan, an upgraded cockpit, and a redesigned interior; its models range in length from 102 to 138 ft.

On the other side of the Atlantic, a European consortium arose in the early 1970s under the name G.I.E. Airbus Industrie, headquartered in France. Since then, Airbus has made significant progress in the aircraft market. Perhaps the most popular of the Airbus fleet is the A320 family (Wiki: Airbus A320 Family; April 2018n), the biggest competitor to the Boeing 737 series. The first member of the A320 family was launched in 1984 and entered service with Air France in 1988. Like the 737, it was initially offered only with CFM56 engines, until the V2500 became available toward the end of the 1980s. More recently, a new A320 variant, the A320neo (new engine option), powered by either the CFM International LEAP-1A or the Pratt & Whitney PW1100G engine, entered service with Lufthansa in January 2016.

In 1995, Boeing introduced the twin-engine 777 (Fig. 5.6a), the biggest two-engine jet ever to fly and the first aircraft to be produced through computer-aided design and engineering (Wiki: Boeing 777; April 2018o). The 777 can be fitted with the GE90 engine (Fig. 5.6b), one of the world’s most powerful turbofan engines (Wiki: General Electric GE90; March 2018p); its higher-thrust variant, the GE90-115B, set a world record of 127,900 lbf of thrust in 2002. The GE90 was the world’s first commercial jet engine to be fitted with carbon fiber composite fan blades.

(a) The twin-engine Boeing 777; image from (https://commons.wikimedia.org/wiki/File:United_Airlines_777_N797UA_LAX.jpg) and (b) the GE9X engine that powers it (https://en.wikipedia.org/wiki/General_Electric_GE9X). (Image used with permission from GE Aviation)

From 2000 to Present

Two of the state-of-the-art aircraft introduced in the 2000s were the Airbus A380 and Boeing’s 787 Dreamliner. The A380 (Fig. 5.7a) is currently the world’s largest passenger aircraft (Wiki: Airbus A380; April 2018q); it is a four-engine, twin-aisle, full-length double-deck aircraft with a seating capacity of 525 passengers in a typical three-class configuration. The airports at which it operates had to be upgraded to handle it. It entered commercial service in 2007 with Singapore Airlines and is intended to fly long-range, nonstop flight segments (designed for 9780 miles) at a cruising speed of Mach 0.85 (560 mph). Its four engines are either the Rolls-Royce Trent 900 or the Engine Alliance (General Electric/Pratt & Whitney) GP7200, each delivering roughly 70,000 lbf of thrust. Different sections of the aircraft are made in Spain, Germany, France, and England and assembled in France. Carbon fiber-reinforced plastic composites are used for the central wing box, the horizontal stabilizers, the fin, the rear fuselage section, and the ceiling beams. The panels of the upper fuselage use a metal-plastic laminate composite called GLARE (see below), all in an effort to keep the vehicle weight to a minimum. Currently, however, there are some questions and concerns about the sustainability of the A380 because it is too big, too expensive (to purchase and to operate), and does not fit the operating model (point-to-point versus hub-and-spoke) of many airlines. There has been no buy-in from US airlines thus far, and only ten airports in North America currently handle A380s. This has also led to an uneven distribution of these aircraft, concentrated primarily in the Gulf countries of the Middle East, whose total population is less than a tenth of that of North America. Furthermore, major freight carriers have not bought into the A380, and the resale market for these behemoths when they come off lease appears gloomy (Goldstein 2018).

(a) The Airbus A380 (superjumbo); (http://www.aviationfigure.com/15-interesting-facts-about-airbus-a380/); picture taken from https://commons.wikimedia.org/wiki/File:1er_vol_be d_l’_A380.jpg and (b) the Boeing 787 Dreamliner (http://compositesmanufacturingmagazine.com/2018/01/boeing-787-10-dreamliner-cleared-commercial-service-federal-aviation-administration/). (Image taken from https://commons.wikimedia.org/wiki/File:All_Nippon_Airways_Boeing_787_Dreamliner_two.jpg)

Airbus has also recently introduced the A350 XWB (extra wide body), a long-haul, twin-engine family of aircraft (Wiki: Airbus A350 XWB; April 2018s) whose wing and fuselage are constructed primarily of carbon fiber-reinforced plastics and which seats 280 to 360 passengers in a three-class layout; it was designed to compete with the Boeing 787 and 777 fleets. Two versions in the family, the A350-900 and the A350-1000, entered service in January 2015 and February 2018, respectively.

The Boeing 787 Dreamliner (Fig. 5.7b) is a long-haul, mid-size, wide-bodied, twin-engine jet airliner intended to replace the 767 but designed to be 20% more fuel efficient (Wiki: Boeing 787 Dreamliner; April 2018r and http://compositesmanufacturingmagazine.com, 2018). Its three variants (787-8, 787-9, and 787-10) seat 240–330 passengers in typical three-class configurations. The 787 is the first major commercial airplane to have a composite fuselage and composite wings, and it uses composites in most other airframe components. Boeing lists its materials by weight as 50% composite, 20% aluminum, 15% titanium, 10% steel, and 5% other (Hale 2006). Aluminum is used throughout the wing and tail leading edges, titanium is used predominantly in engine elements and fasteners, and steel is used in various individual components. The 787 Dreamliner’s distinguishing features include mostly electrical flight systems, raked wingtips, and noise-reducing chevrons on its engine nacelles. It is powered by either the Rolls-Royce Trent 1000 or the General Electric GEnx engine. The 787’s cabin windows are larger than those of any other civil air transport in service and are placed at a higher eye level so that passengers can maintain a view of the horizon; the composite fuselage permits these larger windows without the need for structural reinforcement. Instead of plastic window shades, the windows use smart glass, which allows flight attendants and passengers to select among five levels of sunlight and visibility, reducing cabin glare while maintaining a view of the outside world. The programmable cabin pressure and humidity controls are superior to those of previous aircraft. The 787-8 entered commercial service in October 2011, while the 787-9, a stretched variant that is 20 ft longer and can fly about 500 miles (830 km) farther than the 787-8, entered commercial service in August 2014.

In the early 2010s, the Boeing 737 Max series (Wiki: Boeing 737 Max; April 2018t) emerged, with improved winglets, enhanced efficiency, and the new CFM International LEAP engines (CFM International is a joint venture between GE Aviation in the United States and Safran Aircraft Engines, formerly Snecma, in France). The 737 Max entered service in 2017, and Boeing had more than 3800 firm orders for it as of July 2017.

Lastly, Boeing is now developing and testing its new wide-bodied, twin-engine, long-haul series, the 777X (Wiki: Boeing 777X; March 2018u), which is expected to feature the new GE9X engines, new composite wings with folding wingtips, and other technologies incorporated in the 787. At least two variants are scheduled: the 777-8 (seating 365) and the stretched 777-9 (seating 414). Current plans call for the 777-9 to be available for commercial flight in the 2019–2020 timeframe. Interestingly, the 777X is planned to retain the 777’s aluminum fuselage, in contrast to the composite fuselages of the 787 Dreamliner and its competitor, the A350 XWB. The rationale is that the 787, as a replacement for the 767, needed a fuselage redesign; since all-new tooling was required anyway, a composite fuselage was the result. In contrast, the 777’s fuselage cross section remained unchanged, so the decision was made to harvest much of the novel 787 technology but retain a metal fuselage.

The Jet Engine (From 1937 to Present)

A major milestone in aircraft development was the design of jet engines in 1937. The jet engine concept and design evolved independently in Britain (credited to Frank Whittle) and in Germany (credited to Hans von Ohain). In 1939, the first jet aircraft, the Heinkel He 178, took off, powered by von Ohain’s HeS 3 engine (Wiki: Heinkel He 178; April 2018v). Almost 2 years later, in 1941, the Gloster E.28/39 became the first British jet to fly, powered by a Whittle engine (Wiki: Gloster E.28/39; April 2018w). Undoubtedly, it is the technology developed around this engine that has advanced air travel to where it is today. This section details the general specifications of the major jet engine types in roughly chronological order, synthesized mostly from publicly available information, largely drawn from Wikipedia.

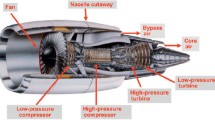

In the early days, the turbojet engine dominated the scene. In a turbojet, all of the intake air is drawn through the compressor and into the combustion chamber, where it is burned with the fuel; the hot gases then pass through the turbine (which drives the compressor) and exit the engine through the nozzle. The design was not fuel efficient, because all of the air had to pass through the combustor; furthermore, the engine was extremely noisy. These drawbacks led to the design of bypass jet engines (Fig. 5.8) and their adoption in civilian air transportation. In this design, some of the air bypasses the core, improving fuel efficiency because only part of the air taken in by the fan needs to be mixed with the fuel and combusted; furthermore, the noise is significantly reduced because a layer of cool bypass air surrounds the hot gases coming out of the turbines. The ratio of the mass flow of air bypassing the engine core to the mass flow of air passing through the core is referred to as the bypass ratio. Engines can be low-bypass or high-bypass. Most commercial airliners today employ high-bypass engines, which have a huge fan at the front that generates the air intake, with most of the air bypassing the core. A bypass ratio of 3:1 is commonly used to distinguish high-bypass from low-bypass engines; a 3:1 ratio means that three times as much air (by mass) bypasses the core as travels through it.

A schematic illustration of a bypass engine (File:Turbofan operation.png. (2014, December 23). Wikimedia Commons, the free media repository. (Retrieved, April 14, 2018 from https://commons.wikimedia.org/w/index.php?title=File:Turbofan_operation.png&oldid=143753231)
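The mass-flow definition of the bypass ratio given above can be made concrete with a short calculation. The sketch below is illustrative only: the flow values are hypothetical round numbers, not data for any particular engine, and treating a ratio of exactly 3:1 as "high-bypass" is an assumption about how the 3:1 dividing line is applied. The helper names (`bypass_ratio`, `core_fraction`, `classify`) are introduced here for illustration.

```python
"""Bypass ratio of a turbofan, using the mass-flow definition (illustrative sketch)."""

HIGH_BYPASS_THRESHOLD = 3.0  # the 3:1 dividing line; treated as inclusive (an assumption)


def bypass_ratio(bypass_mass_flow: float, core_mass_flow: float) -> float:
    """Mass flow bypassing the core divided by mass flow passing through the core."""
    if core_mass_flow <= 0:
        raise ValueError("core mass flow must be positive")
    return bypass_mass_flow / core_mass_flow


def core_fraction(ratio: float) -> float:
    """Fraction of the total intake mass flow that passes through the core (and combustor)."""
    return 1.0 / (1.0 + ratio)


def classify(ratio: float) -> str:
    """Label an engine as low- or high-bypass using the 3:1 threshold."""
    return "high-bypass" if ratio >= HIGH_BYPASS_THRESHOLD else "low-bypass"


if __name__ == "__main__":
    # Hypothetical flows in kg/s: 1000 kg/s around the core, 100 kg/s through it.
    bpr = bypass_ratio(1000.0, 100.0)
    print(f"bypass ratio = {bpr:.1f}:1 ({classify(bpr)})")
    print(f"share of intake air through the core: {core_fraction(bpr):.1%}")
```

With a 10:1 ratio, only 1/11 (about 9%) of the intake air passes through the core, which is why so little of the air has to be mixed with fuel and burned in a high-bypass engine.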

The CF6 family of high-bypass turbofan engines (produced by General Electric Aviation) was based on the TF39 (the very first high-bypass aircraft engine) and has been used extensively in many civilian aircraft, including the Airbus A310, Boeing 747-400, and Boeing 767 (Wiki: General Electric CF6; April 2018x). Since its introduction on the DC-10 in 1971, several variants of the CF6 have evolved with different thrusts, numbers of compressor and turbine stages, and fan sizes (the CF6-50, CF6-80, CF6-80A, CF6-80C2, and CF6-80E1).

In parallel, Pratt & Whitney’s JT9D engine (Wiki: Pratt & Whitney JT9D; January 2018y) was the first high-bypass engine to power a wide-bodied (twin-aisle) airliner, the Boeing 747-100 (the original “jumbo jet”). Several models of the JT9D were developed, with thrusts ranging from 45,800 to 56,000 lbf. Production ceased around 1990, and the PW4000 engine family, with thrusts ranging from 52,000 to ~99,000 lbf, succeeded the JT9D (Wiki: Pratt & Whitney PW4000; March 2018z). Three distinct families of the PW4000 are produced, categorized by fan diameter. The first is the 94″-diameter fan engine, with thrust ranging from 52,000 to 62,000 lbf, powering aircraft such as the Airbus A310-300, the Boeing 747-400, and the Boeing 767-200/300. The second is the 100″-diameter fan engine developed specifically for the Airbus A330 twinjet, with thrust ranging from 64,500 to 68,600 lbf. The third is the 112″-diameter fan engine developed specifically for Boeing’s 777; it is currently available on the 777-200ER, has thrust ranging from 86,700 to ~99,000 lbf, and entered service in June 1995.

Pratt & Whitney’s PW2000, which went into service in the 1980s (Wiki: Pratt & Whitney PW2000; January 2018aa), covered the mid-thrust range (~37,000 to ~43,000 lbf) and powered all models of the Boeing 757. An improved version, called the reduced temperature configuration, was introduced in the mid-1990s; it increased reliability and durability, improved environmental performance, and reduced total maintenance costs.

The CFM56 series is by a significant margin the most successful commercial aircraft engine of all time, with over 22,000 engines delivered as of 2015 (Morrison 2015). It is a family of high-bypass turbofan aircraft engines made by CFM International (CFMI), with a thrust range of 18,500–34,000 lbf (82–150 kN). The first engines entered service in 1982. In the early 1980s, Boeing selected the CFM56 engine for the Boeing 737-300 variant. Because the 737 wings were closer to the ground than in previous applications of the CFM56, the engine required several modifications. The fan diameter was reduced, which reduced the bypass ratio, and the overall thrust was reduced from 24,000 to 20,000 lbf (107 to 89 kN), mostly because of the reduction in bypass ratio (Wiki: CFM International CFM56; March 2018ab). Subsequently, derivatives within this engine family (CFM56-5A) were fitted specifically to the Airbus A320 to power short- to medium-range flights.
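Thrust figures in this section are quoted in pounds-force with metric equivalents in kilonewtons. The short sketch below shows the conversion used to check such pairs; it relies only on the exact definitions of the avoirdupois pound (0.45359237 kg) and standard gravity (9.80665 m/s²). The function name `lbf_to_kn` is introduced here for illustration. The metric values quoted in the text are rounded (for example, 34,000 lbf works out to about 151 kN, quoted as 150 kN).

```python
"""Convert jet engine thrust from pounds-force (lbf) to kilonewtons (kN)."""

# 1 lbf = 0.45359237 kg x 9.80665 m/s^2 (both factors are exact by definition)
LBF_TO_N = 0.45359237 * 9.80665


def lbf_to_kn(thrust_lbf: float) -> float:
    """Return the thrust in kilonewtons for a value given in pounds-force."""
    return thrust_lbf * LBF_TO_N / 1000.0


if __name__ == "__main__":
    # CFM56 figures quoted in the text: thrust range and the 737-300 derating.
    for lbf in (18_500, 20_000, 24_000, 34_000):
        print(f"{lbf:>6,} lbf = {lbf_to_kn(lbf):6.1f} kN")
```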

The LEAP is a new engine design based on, and designed to replace, the CFM56 series; it offers 16% savings in fuel consumption by using more composite materials (polymer matrix and ceramic matrix composites) and by achieving bypass ratios of over 10:1. The engine also has some of the first FAA-approved 3-D-printed components. LEAP entered service in 2016, with major applications in the Airbus A320neo and Boeing 737 Max families (Wiki: CFM International LEAP; April 2018ac).

The GE90 engines are a family of high-bypass turbofan engines built for the Boeing 777; they entered service in 1995 and are physically some of the largest engines in aviation history. The fan diameter of the original series is 123″ (310 cm). The fan blades are made of carbon fiber composite, the first such blades in commercial aviation, and are uniquely curved to make them larger, lighter, and more aerodynamic than the more traditional titanium blades (discussed below). General Electric Aviation has continued to improve on the original GE90 design: the GE90-115B, a high-thrust variant generating up to 115,300 lbf of thrust at sea level, is the world’s most powerful commercial jet engine and was built exclusively for Boeing’s largest 777 models, the 777-200LR and 777-300ER. Designed using three-dimensional aerospace computer modeling technology, the carbon fiber composite blades draw a massive amount of air into the engine while operating at a low noise level. Each blade is about 4 ft long and weighs less than 50 lb.

Next came the GEnx engine, which powers the four-engine Boeing 747-8 and the Boeing 787 Dreamliner (Wiki: General Electric GEnx; January 2018ad). The GEnx uses advanced materials and design processes to reduce weight, improve performance, and provide a fuel-efficient commercial aircraft engine. It is the world’s first commercial jet engine with both a front fan case and fan blades made of carbon fiber composites. In addition, it has a reduced blade count in the fan (for weight savings) and incorporates an innovative combustor technology called TAPS (twin-annular pre-swirl) that reduces NOx emissions. Further, its low-pressure turbine (LPT) is lighter and more efficient than its predecessor’s and incorporates titanium aluminide blades in the sixth and seventh stages, reducing engine weight by approximately 400 lb and contributing to increased fuel efficiency.

The GE9X is the latest high-bypass turbofan engine under development (Wiki: General Electric GE9X; March 2018ae) and is the engine targeted to power Boeing’s new 777X long-haul airliner, which is anticipated to enter service around 2020. The engine incorporates several advanced materials and design innovations that are expected to make it 10% more fuel efficient than the GE90-115B, with reduced noise and NOx emissions. The bypass ratio is planned to be 10:1, and the fan diameter is 134″ (340 cm). It has fewer fan blades (16) than the GEnx (18), and the bulk of each fan blade is made of carbon fiber composite, making the engine lighter and allowing the fan to spin faster. The fan blades have steel leading edges and glass fiber trailing edges to better absorb bird impacts. The combustor uses TAPS technology, as in the GEnx, while ceramic matrix composites (CMCs) are used in the two combustor liners, two nozzles, and the shroud, enabling these parts to withstand higher temperatures. The first five stages of the compressor use “blisk” (integrally bladed disk) technology, and the low-pressure turbine airfoils are made of titanium aluminides, which are lighter than the more conventional nickel-based parts. Novel manufacturing technologies such as 3-D printing are used to produce various parts of the engine.

Meanwhile, Pratt & Whitney has been developing the PW1000G family of engines, including the PW1100G, which has an 81″-diameter fan with 20 blades, a bypass ratio of 12.5:1, and a thrust range of 24,000–35,000 lbf; it entered service in January 2016 and currently powers the Airbus A320neo family of aircraft (Wiki: Pratt & Whitney PW1000G; April 2018af). Other members of the family are smaller engines, including the PW1200G, PW1400G, and PW1700G, with anticipated service entry dates in the 2019–2021 timeframe. These engines are distinguished by a technology called the geared turbofan (GTF), in which a gearbox between the fan and the low-pressure compressor allows each to spin at the speed that is optimal for it (e.g., 4000–5000 rpm for the fan and 12,000–15,000 rpm for the low-pressure spool). Lower fan speeds in turn enable higher bypass ratios, which result in reduced fuel consumption and reduced noise.
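The speed figures quoted for the geared turbofan imply a gearbox reduction ratio of roughly 3:1. The sketch below computes that ratio and, as an added illustration not drawn from the source, the fan tip speed from the standard kinematic relation v = πDN/60. It assumes the low ends of the two quoted speed ranges correspond to each other, and the direct-drive case (an 81″ fan turning at low-pressure spool speed) is hypothetical; it only shows why a large fan and a fast low-pressure spool are hard to reconcile on a single shaft. The function names are introduced here for illustration.

```python
"""Gear reduction and fan tip speed for a geared turbofan (illustrative sketch)."""
import math

INCH_TO_M = 0.0254  # exact by definition


def gear_ratio(lp_spool_rpm: float, fan_rpm: float) -> float:
    """Speed reduction the gearbox must provide between the LP spool and the fan."""
    return lp_spool_rpm / fan_rpm


def fan_tip_speed(diameter_in: float, rpm: float) -> float:
    """Peripheral speed (m/s) at the fan blade tips: v = pi * D * N / 60."""
    return math.pi * diameter_in * INCH_TO_M * rpm / 60.0


if __name__ == "__main__":
    # Low ends of the speed ranges quoted in the text (pairing them is an assumption).
    fan_rpm, spool_rpm = 4_000, 12_000
    print(f"required reduction ratio: {gear_ratio(spool_rpm, fan_rpm):.1f}:1")
    # 81-inch fan at the geared fan speed vs. a hypothetical direct drive at spool speed.
    for rpm in (fan_rpm, spool_rpm):
        print(f"81-in fan at {rpm:>6,} rpm -> tip speed {fan_tip_speed(81, rpm):7.1f} m/s")
```

At the geared fan speed, the tip speed of an 81″ fan is about 430 m/s; driven directly at 12,000 rpm, it would be roughly three times higher, nearly four times the sea-level speed of sound.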

Engine Alliance, a joint venture between General Electric and Pratt & Whitney, manufactures the GP7000 turbofan engine, one of the engine options for the Airbus A380 (Wiki: Engine Alliance GP7000; March 2018ag). The engine has a 116″-diameter fan with hollow titanium fan blades, a maximum thrust of 74,000–80,000 lbf, and a bypass ratio of 8.8:1. Two versions were created for the A380: the GP7270 (for the passenger version) and the GP7277 (for the freighter version), although Airbus subsequently canceled the freighter version of the A380.

On the other side of the Atlantic, another major aircraft engine producer, Rolls-Royce, has developed several engines that share the market for powering modern passenger flights. Examples include the Trent 500, 700, 800, and 900, the Trent 1000, the Trent 7000, and the Trent XWB (Wiki: Rolls-Royce Trent; March 2018ah). All are developments of the RB211 high-bypass engine (37,400–60,600 lbf thrust), which first entered service in 1972 and has powered aircraft such as the Lockheed L-1011 and the Boeing 747, 757, and 767. The Trent series evolved in the 1990s, with thrust ratings ranging from 53,000 to 97,000 pounds-force (240–430 kN). Versions of the Trent are in service on the Airbus A330, A340, A350, and A380 and the Boeing 777 and 787. Table 5.1 summarizes details on some of these engines, their thrust capabilities, the aircraft they power, and when they first went into service.

As aircraft structure and engine design have evolved, so has the range of materials used to make them. Wood and cloth from the early days have progressively given way to metals and alloys and, more recently, to composites (both polymer matrix and ceramic matrix) and coatings. These materials innovations have been enabled by significant advances in materials processing and manufacturing technology, and they have progressively enhanced performance through weight savings, higher speeds, improved fuel efficiency, reduced emissions, and quieter aircraft. The rest of this chapter focuses on the materials science and engineering developments that have shaped the evolution of modern commercial aircraft, with emphasis on the fuselage, wings, and empennage on the one hand and the propulsion system (aircraft engines) on the other. To keep the scope manageable, the coverage is centered on subsonic passenger aircraft powered by air-breathing turbofan (bypass) engines.

The Fuselage, Wing, and Empennage

In the early days of powered flight, roughly from 1903 to 1930, minimizing weight was a practical necessity, in large part because of the limited capability of the propulsion systems. Consequently, the strength-to-weight ratio was the primary criterion for selecting materials for aircraft structure and propulsion. Nowadays, while low weight is still very important, it is not sufficient, and current design criteria are much more complex. The transition from internal combustion piston engines to turbine engines was a quantum leap in aircraft technology, although early turbine engines were constrained by the limitations of the available materials, especially at high operating temperatures. The desire to fly faster and farther introduced new constraints, such as higher temperatures due to frictional heating, and skin materials have accordingly progressed from wood and fabric to high-strength aluminum alloys, titanium alloys, and carbon fiber-reinforced polymer matrix composites. Several accidents in the 1950s involving aircraft built from high-strength aluminum alloys led to the recognition of the importance of damage tolerance under varying loading conditions, and today properties such as fracture toughness and fatigue crack growth resistance are primary design criteria for many aircraft structural products (Williams and Starke 2003). New alloys with improvements in these properties have in fact enabled design revisions and further weight savings. There is thus a complex interplay among material chemistry, material processing, material properties, and component design that has continually evolved in response to societal needs, desires, and constraints, as well as to field experience, and that has together enabled substantial enhancements in aircraft performance, particularly in recent decades.

The basic airframe structure of an airplane can be broadly divided into four major components. The fuselage, or main body, is where passengers and baggage are located and to which the wings and the empennage are attached. The wings provide lift; the front of the wing is called the leading edge and the rear the trailing edge. Ailerons and flaps are located on the trailing edge of the wings and can influence the wing surface area and the airflow over the wing, thereby affecting lift in various phases of flight (Fig. 5.9). Many modern aircraft also include winglets at the wingtips that help reduce drag. The empennage, or “tail” of the aircraft, includes the horizontal and vertical stabilizers (which control pitch and yaw, respectively), the elevator, and the rudder. The rudder is the part of the vertical stabilizer that, when deflected, turns the airplane left or right, while the elevator is located on the rear part of the horizontal stabilizer and moves up or down to raise or lower the nose of the plane. The undercarriage refers to the landing gear assembly, wheels, and tires.

The basic nomenclature associated with the aircraft wing structure. (Source: http://aerospaceengineeringblog.com/wp-content/uploads/2012/08/Airplane-Wing-Part-Diagram-Terminology.png; http://www.cfinotebook.net/notebook/aerodynamics-and-performance/aircraft-components-and-structure)

Because these components serve different, complementary functions, they also experience very different loading profiles (tension, compression, shear, constant loads, and fluctuating loads) during takeoff, in flight, and during landing, and they have therefore received very different design and materials selection considerations. The interested reader is directed to the paper by Starke and Staley (1996) for a deeper discussion of the loading modes experienced by the various components of the airframe. Although polymer matrix composites (PMCs) are used in modern commercial aircraft (e.g., in the horizontal stabilizer of the Airbus A340 and the Boeing 777), aluminum alloys remain the primary choice for airframes at present (Starke and Staley 1996). Titanium alloys have seen an increased role in commercial airliners, for example in the landing gear beam of the Boeing 747 and the landing gear assembly of the Boeing 777 (Williams and Starke 2003), but more so in military aircraft such as the SR-71, which had an all-titanium skin.

Aluminum Alloys

Aluminum alloys are used either in wrought form or as cast products. Wrought means that the starting material is an ingot or billet that is rolled into sheet or plate, extruded into bars or rods, or forged to near-net shape and then machined to final dimensions. Primary structures (those whose failure endangers the aircraft; Starke and Staley 1996) are wrought products, whereas secondary structures can be wrought or cast products. Sheet and plate in the 1–10 mm thickness range are used for fuselage skin, thicker plates in the 25–50 mm range are used for wing covers, and the thickest plates, up to 150 mm, are used for bulkheads and wing spars. Extrusions are used for longerons and stringers in the fuselage and the wings; these are the bars and rods that reinforce the fuselage axially or run along the length of the wing structure (see Figs. 5.9 and 5.10). Forgings (open-die and closed-die) compete with thick plates for bulkheads and other internal structures.

Structural components of the airframe fuselage . (Source: http://www.flight-mechanic.com/fixed-wing-aircraft/)

Castings for airframe structures are produced by techniques such as sand casting, investment casting, and permanent mold casting (Kalpakjian and Schmid 2008); the selection depends on size, weight, required dimensional accuracy, part criticality, cost, and property requirements. The mechanical properties of castings, particularly fatigue crack growth resistance and fatigue life, are often less consistent and lower than those of the more costly wrought counterparts, but cost reduction can be an important factor in the selection. Net-shape castings can also eliminate complex machining operations and reduce the number of joints in a complex-shaped component, thereby making it more resistant to corrosion. Although castings have been used for both primary and secondary structural components in military aircraft, their use in commercial aircraft is less common and has been restricted largely to secondary structures such as pulley brackets, ducts, and complex valve bodies of hydraulic control systems. Nevertheless, the number of components made from cast aluminum is gradually increasing, for example flap tracks in the wing structure and passenger and baggage compartment doors, particularly at Airbus.

Superplastic forming of certain aluminum alloys is a third route used to make an array of secondary components for the commercial airframe; examples include baggage compartment doors, the inner frame for the blowout door assembly of the Boeing 737, the Boeing 737 auxiliary power unit (APU) air inlet duct, and the Boeing 777 wingtip light housing (Hefti 2007). Superplastic forming is a secondary metal forming operation in which the metal undergoes very large deformations (200–1000% strain) without failing by necking or local thinning. The process is typically carried out at temperatures in excess of half the absolute melting temperature, at strain rates of the order of 10⁻⁴ s⁻¹, and at very low stress levels (4–6 MPa for aluminum alloys). A fine-grained, equiaxed microstructure (<10 μm grain size) and resistance to cavitation (void formation) are prerequisites; conventionally processed aluminum alloys do not exhibit this microstructure, so additional special processing, which adds cost, is necessary to obtain it. Among the dominant deformation mechanisms during superplastic forming, grain boundary sliding and diffusion-controlled deformation are high on the list. The process is slow, material costs are high, and specialized aluminum alloys that exhibit superplastic characteristics have been developed (SUPRAL 100 and 220 and FORMALL 545 and 700). Nevertheless, superplastic forming offers economic advantages when a limited number of complex parts must be made from expensive materials with low formability, as in the case of some titanium alloys.
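To see why superplastic forming is slow, one can estimate the time needed to reach the quoted strains at the quoted strain rate. The sketch below assumes a constant true strain rate and uniform deformation, so that the true strain is ln(1 + e) for an engineering strain e; real forming cycles vary the rate over the part, so the result is only an order-of-magnitude estimate. The function name is hypothetical.

```python
import math


def forming_time_s(engineering_strain: float, true_strain_rate: float) -> float:
    """Time (s) to reach an engineering strain at a constant true strain rate.

    Assumes uniform deformation (no necking), so true strain = ln(1 + e).
    """
    if engineering_strain <= 0 or true_strain_rate <= 0:
        raise ValueError("strain and strain rate must be positive")
    return math.log1p(engineering_strain) / true_strain_rate


RATE = 1e-4  # s^-1, the order of magnitude quoted in the text

for percent in (200, 1000):
    t = forming_time_s(percent / 100, RATE)
    print(f"{percent:>5}% strain: {t:,.0f} s (about {t / 3600:.1f} h)")
```

Under these assumptions, 200% strain takes about 3 h and 1000% strain about 6.7 h, consistent with the description of the process as slow.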

Heat-treatable aluminum alloys, that is, those capable of being age-hardened, are used primarily as wrought products in airframes because of their ability to develop high specific strengths. Age-hardening, or precipitation hardening, is a two-step heat treatment (Porter and Easterling 1992), consisting of a “solution-treatment” step followed by an “aging” step, that produces in suitable aluminum alloys a microstructure of fine-scale precipitates homogeneously distributed in the matrix, which substantially increases the alloy strength. By controlling the times and temperatures of the two steps, a desired balance of properties can be obtained. Precipitation hardening has even been suggested as perhaps the most significant metallurgical development of the twentieth century, and there are now many detailed reviews and overviews of the precipitation hardening of aluminum alloys (Ardell 1985; Fine 1975; Kelly and Nicholson 1963; Polmear 2004; Ringer and Hono 2000). Alloys of the 2XXX, 6XXX, 7XXX, and 8XXX series are candidates for precipitation hardening and are the ones primarily used in airframe structures; historically, 2XXX alloys have been used where damage tolerance is the primary requirement and 7XXX alloys where strength is the primary requirement.

A four-digit numerical system, originally developed by the Aluminum Association and since adopted by most countries as the International Alloy Designation System (IADS), is used to describe wrought aluminum alloys (Table 5.2). The first digit indicates the alloy group, that is, the major alloying element (the major alloying elements typically added to aluminum include Cu, Mg, Zn, Si, Mn, and Li); the second digit indicates impurity limits or a modification of the original registered alloy; and the last two digits identify the specific alloy. Experimental alloys are indicated by the prefix X. Table 5.3 shows specific compositions for a few aerospace aluminum alloys.
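The logic of the four-digit designation can be captured in a small parser. The Python sketch below assumes that Table 5.2 follows the standard Aluminum Association series assignments (1XXX for commercially pure aluminum of at least 99.00% Al, 2XXX Cu, 3XXX Mn, 4XXX Si, 5XXX Mg, 6XXX Mg and Si, 7XXX Zn, and 8XXX other elements, including Li); the function and field names are illustrative.

```python
import re

# Major alloying element(s) by first digit (standard Aluminum Association series,
# assumed to match Table 5.2). The 9XXX series is unused.
SERIES = {
    "1": "none (commercially pure, >= 99.00% Al)",
    "2": "Cu",
    "3": "Mn",
    "4": "Si",
    "5": "Mg",
    "6": "Mg and Si",
    "7": "Zn",
    "8": "other elements (e.g., Li)",
}

# Optional experimental prefix X, then four digits
WROUGHT = re.compile(r"^(X?)(\d)(\d)(\d{2})$")


def parse_wrought(designation: str) -> dict:
    """Split a wrought designation such as '7075' into its fields."""
    m = WROUGHT.match(designation.strip().upper())
    if m is None or m.group(2) not in SERIES:
        raise ValueError(f"not a recognized wrought designation: {designation!r}")
    prefix, series, modification, alloy_id = m.groups()
    return {
        "experimental": prefix == "X",
        "series": f"{series}XXX",
        "major_alloying": SERIES[series],
        "modification": int(modification),  # 0 = original registered alloy
        "alloy_id": alloy_id,
    }


print(parse_wrought("7075"))
print(parse_wrought("2195")["major_alloying"])
```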

Casting alloys use a different notation; two common casting alloys are A201.0 and A357.0. The first digit refers to the major alloying element, and the next two digits identify a particular alloy composition. The digit after the decimal point identifies the product form: a zero denotes a casting, while other numerals denote ingots. A letter prefix, starting with A, identifies a modified impurity level; for example, A357.0 denotes a higher purity than the original 357.0.
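A companion sketch for the casting notation follows only the rules stated in the paragraph above: an optional letter prefix, a group digit, two alloy digits, and a product-form digit after the decimal point. It deliberately does not map the group digit to an element, and the names are illustrative.

```python
import re

# Optional letter prefix, three digits, a decimal point, and one product-form digit
CASTING = re.compile(r"^([A-Z]?)(\d)(\d{2})\.(\d)$")


def parse_casting(designation: str) -> dict:
    """Split a casting-alloy designation such as 'A357.0' into its fields."""
    m = CASTING.match(designation.strip().upper())
    if m is None:
        raise ValueError(f"not a recognized casting designation: {designation!r}")
    prefix, group, alloy_id, form = m.groups()
    return {
        "modification": prefix or "original",  # A, B, ... = modified impurity level
        "alloy_group": group,                  # major alloying element group
        "alloy_id": alloy_id,
        "product": "casting" if form == "0" else "ingot",
    }


for name in ("A201.0", "A357.0", "357.0"):
    print(name, parse_casting(name))
```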

Many of the wrought alloys used in airframes are age-hardenable, and, as mentioned above, their properties can be tuned by controlling the precipitation hardening heat treatment. This has led to a series of heat treatment schedules, coded by letters and digits and called “temper designations,” as summarized in Table 5.4. These codes are added as suffixes to the alloy number (e.g., 7075-T6 or 2024-T3). Variants of a temper that further modify the properties are denoted by one or more digits following the letter, as in the examples above.

Alloys in the as-fabricated state or in the annealed state are identified with the suffixes F and O, respectively; those supplied in the solution-treated condition are designated with a W, while those supplied in the solution-treated + aged condition are assigned the suffix T. Digits following T identify the type of aging treatment.

Four heat treatments frequently encountered in 2XXX series alloys such as 2024 and 2090 and in 8XXX series Li-containing alloys such as 8090 are the T3, T4, T6, and T8 tempers. The T3 and T8 treatments include a deformation step after solution treatment, such as a 2–6% stretch, which encourages precipitation and reduces the subsequent aging time. Accordingly, T3 and T4 correspond to natural aging (aging at room temperature) with and without an intermediate stretch, respectively, while T8 and T6 correspond to artificial aging to peak or near-peak hardness with and without an intermediate stretch, respectively (see also Table 5.4). If aging is carried beyond the T6 condition, either to stabilize the microstructure or, as is often done, to improve corrosion resistance, the temper is designated T7.
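The temper codes described above can be decoded mechanically. The Python sketch below encodes only the letters F, O, W, and T and the T3, T4, T6, T7, and T8 meanings given in the text; any further digits are reported but not interpreted, and other tempers (for example, the H tempers used for strain-hardened alloys) are rejected. The function and table names are hypothetical.

```python
# Basic temper letters described in the text
BASIC = {
    "F": "as-fabricated",
    "O": "annealed",
    "W": "solution-treated",
    "T": "solution-treated and aged",
}

# First digit after T: (deformation step after solution treatment, aging practice)
T_DIGIT = {
    "3": ("stretched (e.g., 2-6%)", "naturally aged"),
    "4": ("no intermediate stretch", "naturally aged"),
    "6": ("no intermediate stretch", "artificially aged to peak or near-peak"),
    "7": ("not specified by this digit", "aged beyond T6 (stabilized/overaged)"),
    "8": ("stretched (e.g., 2-6%)", "artificially aged to peak or near-peak"),
}


def decode_temper(spec: str) -> str:
    """Describe a designation such as '2024-T3' or '7075-T73'."""
    alloy, _, temper = spec.strip().upper().partition("-")
    if not temper or temper[0] not in BASIC:
        raise ValueError(f"temper not covered by this sketch: {spec!r}")
    letter, digits = temper[0], temper[1:]
    parts = [f"{alloy}: {BASIC[letter]}"]
    if letter == "T" and digits:
        if digits[0] not in T_DIGIT:
            raise ValueError(f"T{digits[0]} is not covered by this sketch")
        parts.extend(T_DIGIT[digits[0]])
        if len(digits) > 1:
            parts.append(f"further variant '{digits[1:]}'")
    return "; ".join(parts)


for spec in ("2024-T3", "7075-T6", "7075-T73", "2090-T8", "2024-O"):
    print(decode_temper(spec))
```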

Before discussing the microstructures desired for various properties of interest in aluminum alloys, it is worth briefly reviewing precipitation hardening in the 2XXX, 7XXX, and Li-containing alloys of relevance to the airframe industry.

In relatively simple terms, an alloy amenable to precipitation hardening must exhibit a large maximum solubility for the solute and a solubility that decreases rapidly with decreasing temperature. Under equilibrium conditions, such alloys normally exhibit a multiphase microstructure (in the simplest case, two phases: a matrix phase and a solute-rich second phase). When such an alloy is heated into the single-phase region (temperature T₀ in Fig. 5.11a) and quenched to a low temperature (temperature T₁ in Fig. 5.11a), the second phase does not have time to precipitate, and the single phase is retained in a supersaturated state. Importantly, a supersaturation of vacancies is also retained in the microstructure. This is called the solution-treated and solution-quenched microstructure. When this supersaturated matrix is reheated and held at an intermediate temperature (T₂ in Fig. 5.11a) for some time, the supersaturation is gradually relieved by the formation of a uniform distribution of fine precipitates; this heat treatment step is called aging. A schematic illustration of the two-step heat treatment, together with a schematic binary phase diagram at the A-rich end of an A-B system, is shown in Fig. 5.11a, b (Callister and Rethwisch 2013).

(a) Schematic illustration of a binary phase diagram and a candidate alloy of composition C₀ that is precipitation-hardenable, and (b) the two-step precipitation hardening treatment. (Figure taken from Materials Science and Engineering, Callister and Rethwisch (2013); image used with permission from John Wiley & Sons, Inc.)
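The driving force for aging can be made concrete with the lever rule. At the aging temperature T₂ the matrix solubility is low, so at equilibrium a fraction of second phase must form, whereas at T₀ the alloy lies in the single-phase field and nothing should precipitate. The sketch below applies the lever rule to a hypothetical alloy; all compositions are illustrative values chosen by us, not data read from Fig. 5.11, and the function name is our own.

```python
def precipitate_fraction(c0: float, c_alpha: float, c_beta: float) -> float:
    """Equilibrium mass fraction of the second phase from the lever rule.

    c0      overall alloy composition (wt% B)
    c_alpha solubility of B in the matrix at the temperature of interest (solvus)
    c_beta  composition of the second phase (wt% B)
    """
    if not 0 <= c_alpha < c_beta:
        raise ValueError("expected 0 <= c_alpha < c_beta")
    if c0 <= c_alpha:
        return 0.0  # single-phase field: nothing to precipitate at equilibrium
    if c0 >= c_beta:
        raise ValueError("alloy lies beyond the second-phase composition")
    return (c0 - c_alpha) / (c_beta - c_alpha)


# Hypothetical A-rich alloy; the numbers are illustrative, not read from Fig. 5.11
C0, C_BETA = 4.0, 54.0
for label, solvus in (("T0 (solution treatment)", 5.0), ("T2 (aging)", 0.5)):
    fraction = precipitate_fraction(C0, solvus, C_BETA)
    print(f"{label}: equilibrium second-phase fraction = {fraction:.3f}")
```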

The kinetics of precipitation are aided by the quenched-in excess vacancies; the equilibrium concentration of vacancies at the aging temperature alone would not be adequate to provide reasonable precipitation kinetics. During the solid-state precipitation that relieves the supersaturation described above, the first phase to precipitate is, for energetic reasons (a transition phase that is coherent or semicoherent with the matrix has a lower nucleation barrier), often not the equilibrium phase but a metastable phase or a series of metastable phases called transition phases. Eventually, the transition phases give way to the equilibrium phase. These transition phases are important in conferring a good combination of mechanical properties on solution-treated and aged alloys. Frequently, by the time the equilibrium phases precipitate at the expense of the transition phases, the material is excessively overaged and its properties are degraded. Thus, from a mechanical properties perspective, aging proceeds from the solution-treated and solution-quenched condition, where strength and hardness are not very high, through an underaged condition, where strength and hardness increase and reach a maximum at the so-called “peak-aged” condition; beyond peak aging, strength and hardness begin to decrease, and the alloy is in an overaged state.
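The role of quenched-in vacancies can be estimated from the Arrhenius form of the equilibrium vacancy concentration, c_v = A exp(−E_f/kT), where E_f is the vacancy formation energy. If every vacancy present at the solution-treatment temperature survived the quench (an idealization, since many are lost to sinks), the supersaturation at the aging temperature is the ratio of the two equilibrium values, in which the pre-factor A cancels. The sketch assumes E_f ≈ 0.67 eV, an approximate literature value for aluminum, a 500 °C solution treatment, and a 190 °C artificial aging temperature; all three are assumptions for illustration, and the function name is hypothetical.

```python
import math

K_B_EV = 8.617333262e-5  # Boltzmann constant, eV/K


def vacancy_supersaturation(e_form_ev: float, t_solution_c: float, t_aging_c: float) -> float:
    """Ratio c_v(T_solution) / c_v(T_aging) for c_v = A * exp(-E_f / (k_B * T)).

    Idealization: every vacancy present at the solution temperature is retained
    on quenching; the pre-exponential factor A cancels in the ratio.
    """
    t_s = t_solution_c + 273.15
    t_a = t_aging_c + 273.15
    return math.exp(e_form_ev / K_B_EV * (1.0 / t_a - 1.0 / t_s))


E_F_AL = 0.67        # eV, approximate vacancy formation energy of Al (assumed)
T_SOLUTION_C = 500   # deg C, assumed solution-treatment temperature

for t_age in (20, 190):  # natural aging vs. an assumed artificial aging temperature
    ratio = vacancy_supersaturation(E_F_AL, T_SOLUTION_C, t_age)
    print(f"aging at {t_age} C: ~{ratio:.1e} times the equilibrium vacancy concentration")
```

Under these assumptions, the retained vacancy concentration exceeds the equilibrium value by roughly three orders of magnitude at 190 °C and about seven at room temperature, which helps explain why aging, and natural aging in particular, proceeds at a useful rate.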

In some instances, for example in some 2XXX series alloys, aging progresses with time even at room temperature; the process is then referred to as “natural aging,” as opposed to “artificial aging,” in which a part is aged at an elevated temperature. Natural aging does not normally produce an overaged microstructure, so strength and hardness increase gradually and continuously over long periods of time. This behavior is exploited in the aluminum alloy rivets (e.g., alloys 2017 and 2024) used in airframes: the solution-treated and solution-quenched rivets are stored in a refrigerator to suppress natural aging and keep them soft until they are installed, after which they harden over time at room temperature and acquire the strength needed to function effectively. The Mg present in these Al-Cu alloys (Table 5.3) is believed to encourage natural aging. Of historical relevance in this context is the discovery of age-hardening in 1906 by Alfred Wilm and his patenting of an age-hardenable Al-(3.5–5.5 wt%)Cu-(<1 wt%)Mn alloy containing <1.0 wt% Mg; the alloy was commercialized by Dürener Metallwerke in Düren, in northwestern Germany, and registered in 1909 under the name Duralumin (Polmear 2004). The Junkers F13, which flew in 1919, was the first all-metal passenger aircraft and was built of Duralumin. Alcoa in the United States released its own version of Duralumin in 1911, and this alloy is still available under the designation 2017 (see Table 5.3).

During artificial aging of a binary Al-Cu alloy, the supersaturated α solid solution first decomposes to form disk-shaped, Cu-rich zones called Guinier-Preston (GP) zones that are homogeneously dispersed in the matrix; these disks are typically one to two atoms thick, about 10 nm in diameter, and spaced about 10 nm apart. GP zones do not have their own crystal structure. Further aging leads to the precipitation of a transition phase called θ″, which has a tetragonal unit cell and a plate-like morphology and is ~10 nm thick and about 100 nm in diameter. Longer aging times lead to the next transition phase, θ′, also with a tetragonal unit cell and a plate morphology, and with a size approaching 1 μm. By the time the microstructure consists of a mixture of θ″ and θ′, the alloy is likely in the peak-aged condition. Overaging results in the formation of the equilibrium phase θ, with CuAl₂ stoichiometry; this precipitate is relatively coarse and not particularly beneficial for mechanical properties. Overall, the aging sequence can be represented as α_ssss → GP zones → θ″ → θ′ → θ, where α_ssss represents the solution-treated and solution-quenched supersaturated aluminum-rich solid solution. Alloy composition, aging temperature, and prior deformation of the solution-treated and solution-quenched alloy all determine whether all or only some of these stages occur during aging. For example, moderately increasing the aging temperature of a particular alloy may suppress GP zone formation, so that θ″ precipitates directly from the supersaturated matrix; a further increase in aging temperature may preclude θ″ as well, with θ′ precipitating directly from the supersaturated matrix (Fig. 5.12).

In alloys such as 2024 or 2224, which contain about 1.5 wt% Mg in addition to Cu, the major strengthening phase is not θ′ but S′, a precursor to the equilibrium ternary S phase (Al₂CuMg).

Transition phases in the binary Al-Cu system and their temperature/composition range of existence. (From Ringer and Hono 2000) (Figure used with permission from Elsevier)

Precipitation during artificial aging of 6XXX alloys is complex and strongly dependent on alloy composition. The properties of 6XXX Al-Mg-Si alloys are influenced by the precursor phases (monoclinic β″ and hexagonal β′) to the equilibrium cubic Mg₂Si phase (β). Even in the ternary system, the situation becomes more complicated when the overall alloy composition shifts to excess Si (i.e., when the Si content exceeds that required by the Mg₂Si stoichiometry). Substantial additional complications arise because many commercial Al-Mg-Si alloys contain varying amounts of Cu, which leads to the formation of several other equilibrium phases that coexist with β. The interested reader is directed to an excellent review of these aspects in Al-Mg-Si-Cu alloys by Chakrabarti and Laughlin (2004).
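Whether a 6XXX alloy is balanced or Si-excess can be checked from the Mg₂Si stoichiometry: with atomic masses of about 24.305 (Mg) and 28.085 (Si), Mg₂Si contains about 1.73 g of Mg per gram of Si. The sketch below computes the Si left over once all Mg is assigned to Mg₂Si. It is a simplification that ignores Si tied up in other phases, and the example compositions are hypothetical.

```python
M_MG = 24.305  # g/mol
M_SI = 28.085  # g/mol

# Mass of Mg per unit mass of Si in stoichiometric Mg2Si (about 1.73)
MG_PER_SI = 2 * M_MG / M_SI


def excess_si(mg_wt_pct: float, si_wt_pct: float) -> float:
    """Si (wt%) remaining after all Mg is assigned to Mg2Si.

    Positive: Si in excess of the Mg2Si stoichiometry; negative: Mg in excess.
    Ignores Si consumed by other phases (a simplifying assumption).
    """
    return si_wt_pct - mg_wt_pct / MG_PER_SI


# Hypothetical compositions (wt% Mg, wt% Si)
for mg, si in ((1.0, 0.6), (0.6, 1.0), (1.0, 0.4)):
    delta = excess_si(mg, si)
    kind = "Si-excess" if delta > 0 else "Mg-excess or balanced"
    print(f"Mg {mg}, Si {si}: {delta:+.2f} wt% Si -> {kind}")
```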

By comparison, for the 7XXX alloys, which are used extensively in airframes, there is significantly more consensus on microstructure evolution during artificial aging. These alloys usually show a strong age-hardening response, but one drawback is their susceptibility to stress corrosion cracking (SCC), which restricts their use in the peak-hardness condition. In SCC, an alloy that would not fail under a given loading condition in an inert environment fails under the same loading in an aggressive environment, which can be as mild as humid air. The phenomenon is most common in specific alloy-temper combinations such as 7075-T6, 2024-T3, and 7079-T6, which together account for more than 90% of SCC service failures of aluminum alloy products (Starke and Staley 1996). The T73 temper (essentially an overaged condition) was developed for 7075 products to solve this problem, but the strength in this condition is lower than that of 7075-T6. There is general agreement that the aging sequence can be represented as α_ssss → GP zones → η′ → η, where η is the equilibrium MgZn₂ Laves phase with the hexagonal C14 structure. The GP zones in Al-Zn-Mg alloys are rich in both Mg and Zn and are spherical (unlike the disk-shaped zones in the Al-Cu alloys). The structure of the η′ transition phase has been extensively studied and debated; a more detailed discussion is available in Ringer and Hono (2000). In the peak-aged T6 condition, strength derives mainly from a fine distribution of η′ and some fine η, whereas in the overaged T73 condition, coarser η particles dominate the microstructure and are distributed both in the grain interiors and at grain boundaries.

In the past thirty years or so, considerable research and development aimed at incorporating lithium into aluminum alloys (Li decreases the density and increases the elastic modulus of aluminum, both desirable attributes) has led to several new alloys in the Al-Cu-Li family (e.g., alloy 2090 in Table 5.3) and the Al-Cu-Mg-Li system (e.g., alloy 8090 in Table 5.3), along with newer alloys such as 2195 (which contains Li, Cu, and minor levels of Mg, Ag, and Zr), used for the external tank of the Space Shuttle, and alloys such as 2050, 2055, 2060, 2096, 2097, 2098, and 2099. The compositions of some of these alloys are provided in Table 5.5 (Dursun and Soutis 2014). More metallurgical details on several of these so-called third-generation aluminum-lithium alloys for aircraft airframes can be found in the detailed review of the history of Al-Li alloys by Rioja and Liu (2012) and the overview of advanced aircraft aluminum alloys by Dursun and Soutis (2014). Some of these alloys are used in the lower wing structures of the Airbus A380-800 and A380-800F and are intended for the cargo floor of the Boeing 777X. Complex aging treatments, combined with newer welding techniques (such as friction stir welding) and advances in machining methods, have enabled the use of these newer alloys with significant weight savings and an improved balance of properties.
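The density benefit of lithium can be estimated, roughly, with an ideal-mixing (inverse rule of mixtures) approximation, 1/ρ = Σ wᵢ/ρᵢ, using the densities of the pure elements. This ignores any change in atomic volume on alloying, so it should be read as indicative only; the compositions below are hypothetical and are not taken from Table 5.5, and the function name is our own.

```python
# Densities of the pure elements at room temperature, g/cm^3
DENSITY = {"Al": 2.70, "Li": 0.534, "Cu": 8.96, "Mg": 1.74}


def ideal_density(additions_wt_pct: dict) -> float:
    """Estimate alloy density (g/cm^3) by the inverse rule of mixtures.

    `additions_wt_pct` maps alloying elements to wt%; aluminum is the balance.
    Ideal mixing is assumed (no atomic-volume change on alloying).
    """
    if "Al" in additions_wt_pct:
        raise ValueError("give only the additions; Al is taken as the balance")
    fractions = {el: wt / 100 for el, wt in additions_wt_pct.items()}
    fractions["Al"] = 1.0 - sum(fractions.values())
    if fractions["Al"] <= 0:
        raise ValueError("additions must total less than 100 wt%")
    return 1.0 / sum(f / DENSITY[el] for el, f in fractions.items())


for additions in ({}, {"Li": 2.0}, {"Li": 2.0, "Cu": 3.0}):
    print(additions or "pure Al", f"{ideal_density(additions):.3f} g/cm^3")
```

The last case illustrates how a Cu addition partly offsets the density reduction obtained from Li.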

The “third-generation Al-Li alloys” evolved out of lessons learned over many years from the first- and second-generation Al-Li alloys and are an outstanding example of alloy design through careful application of fundamental metallurgical principles. Quoting Rioja and Liu (2012), “understanding the influence of chemical composition and microstructure on mechanical and corrosion performance led to the simultaneous optimization of alloying additions and thermal-mechanical processing.” Thus, Li and Mg provide density reduction, solid solution hardening, and precipitation hardening; Cu and Ag enhance solid solution hardening and precipitation hardening; Zn is added for solid solution hardening and improved corrosion resistance; Zr and Mn control the degree of recrystallization and the texture of the product; and Ti is a grain refiner during ingot solidification. Fe, Si, Na, and K, on the other hand, are impurities that adversely affect fatigue response and fracture toughness and should be minimized.

The age-hardening precipitates found in Al-Cu-Li alloys such as 2099 and 2199 are the ternary T1 phase (Al₂CuLi), δ′ (Al₃Li), and θ′ (~Al₂Cu). In addition to these strengthening precipitates, fine particulate phases called dispersoids, such as Al₃Zr and Al₂₀Cu₂Mn₃, also form; they influence toughness and control recrystallization, grain size, and texture. A schematic illustration of the microstructure that might be observed in an aged Al-Cu-Li 2099 alloy (Fig. 5.13), taken from Rioja and Liu (2012), emphasizes the complexity (but also the versatility for manipulation) present in these alloys. Dispersoids are not unique to Al-Cu-Li alloys; they occur in many airframe aluminum alloys, and Table 5.6 (Starke and Staley 1996) summarizes findings for a few of these alloys.

Schematic illustration of the microstructural complexity that is present in Al-Cu-Li alloys like alloy 2099 (Rioja and Liu 2012). (Figure used with permission from Springer)

In a well-annealed alloy, grains are typically equiaxed and randomly oriented: each grain has a different crystallographic orientation relative to a global coordinate system, so that, taken over all the grains, the crystallographic orientations along any chosen global axis are randomly distributed. However, when an alloy undergoes considerable deformation, as in extrusion or rolling, the grains can develop a preferred orientation with respect to the deformation axis; the material is then no longer randomly oriented but has developed a crystallographic texture, which can be moderate or severe. Texture invariably leads to anisotropic properties. Aluminum-lithium alloys, for example, can develop strong textures and texture gradients during fabrication, which raises concerns for design and manufacturing as well as in end use. Innovative heat treatment cycles have been successfully developed to address these issues. In these Al-Cu-Li alloys, extrusion and plate products are typically controlled to remain unrecrystallized (Rioja and Liu 2012).

Mechanical properties of interest in airframe aluminum alloys, like any other application, are intimately connected to the underlying microstructure. Table 5.7 qualitatively describes desirable microstructural features for specific properties.

Improvements in key properties of aluminum alloys over the decades for the upper wing, lower wing, and fuselage of various aircraft are shown in Figs. 5.14, 5.15, and 5.16, respectively, taken from the review by Rioja and Liu (2012). For the upper wing structure, the important properties include specific compressive strength (compressive strength/density), specific modulus, and fracture toughness; in Fig. 5.14, specific tensile yield strength is used as a proxy for specific compressive strength. In the early years, the approach was to increase the specific strength of the upper wing to reduce weight, until corrosion problems in the Boeing 707 forced a compromise in which strength was sacrificed for better corrosion performance (T6 tempers were replaced by T7 tempers). Over time, newer 7XXX alloys with improved strength and corrosion resistance have been developed; however, the modulus (which governs wing stiffness) has remained essentially constant for the 7XXX alloys. Because buckling resistance depends on stiffness, this limits weight savings, whereas the third-generation Al-Li alloys show a significant improvement in modulus, comparable or better strength, and good corrosion and SCC resistance. For the lower wing, the key considerations are ultimate tensile strength and fracture toughness, and the continuous improvement in these properties with newer aluminum alloys is evident in Fig. 5.15; of note is the response of the third-generation Al-Cu-Li alloys 2060 and 2199, which are among the best to date. For fuselage applications, the important properties are strength and fracture toughness in the LT (long transverse, i.e., perpendicular to the rolling direction) orientation, as this direction carries the largest hoop stress. Again, the improvements in these properties over the decades for several aircraft programs are evident in Fig. 5.16; worth noting is the superior response of the three Al-Cu-Li alloys 2199, 2198, and 2060, all in the T8 temper.

Evolution of mechanical properties relevant to aircraft upper wing structure (Rioja and Liu 2012). (Figure used with permission from Springer)

Evolution of mechanical properties relevant to aircraft lower wing structure (Rioja and Liu 2012). (Figure used with permission from Springer)

Evolution of mechanical properties relevant to aircraft fuselage (Rioja and Liu 2012). (Figure used with permission from Springer)
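Specific properties such as those plotted in Figs. 5.14–5.16 are simple ratios of a property to density. The sketch below computes specific strength and specific modulus for two alloys; the property values are hypothetical placeholders chosen only to show how a lower density and a higher modulus (the direction in which Li additions move an alloy) raise the specific modulus, and they are not data taken from the figures. The class name is our own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alloy:
    name: str
    density_g_cm3: float
    yield_strength_mpa: float
    modulus_gpa: float

    @property
    def specific_strength(self) -> float:
        """Yield strength / density; MPa per g/cm^3 is numerically kN*m/kg."""
        return self.yield_strength_mpa / self.density_g_cm3

    @property
    def specific_modulus(self) -> float:
        """Young's modulus / density; GPa per g/cm^3 is numerically MN*m/kg."""
        return self.modulus_gpa / self.density_g_cm3


# Hypothetical values for illustration only
baseline = Alloy("hypothetical 7XXX-type", 2.80, 500.0, 71.0)
al_li = Alloy("hypothetical Al-Li-type", 2.63, 500.0, 78.0)

for alloy in (baseline, al_li):
    print(f"{alloy.name}: {alloy.specific_strength:.0f} kN*m/kg, "
          f"{alloy.specific_modulus:.1f} MN*m/kg")
```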

Thus, as competition develops from other lightweight materials for airframe structures (such as the organic fiber-reinforced composites discussed later), the aluminum industry has continually risen to the challenge: newer alloys with improved combinations of properties are being developed, and improved manufacturing technologies that build on existing infrastructure are being coupled with new joining and machining technologies. Together, these advances offer airframe manufacturers the possibility to design and develop new aircraft with improved fuel efficiency, increased comfort, reduced emissions, and longer range.

Titanium and Titanium Alloys

Titanium is an attractive candidate for aerospace applications, with a density of 4.5 g/cm³ and a melting temperature above 1600 °C. It also undergoes an allotropic transformation from α-Ti (hcp) at low temperature to β-Ti (bcc) at high temperature. Suitable alloying shifts this transformation temperature up or down and can create a two-phase α + β region in composition-temperature space. Accordingly, several commercial alloys have been developed that are all α (e.g., the four grades of commercially pure titanium), predominantly α (e.g., Ti-5Al-2Sn or Ti-6Al-2Sn-4Zr-2Mo), predominantly β (e.g., Ti-10V-2Fe-3Al), or two-phase α + β (e.g., Ti-6Al-4V). The density of Ti, though higher than that of Al, is significantly lower than that of steels, and its melting temperature is almost 1000 °C higher than that of Al; its alloys are therefore capable of much better elevated-temperature strength than Al alloys. Titanium is also far more corrosion resistant than Al and is not hydrogen embrittled in the way high-strength steels are. Ti metallurgy is younger than that of aluminum and steels (the first alloys were developed in the United States in the late 1940s), and until recently titanium saw more application in military aircraft than in commercial aircraft. In commercial aircraft, titanium alloys are used in both the airframe and the propulsion system; this section maintains the focus on the airframe, and the role of titanium and its alloys in aircraft engines is discussed later in the propulsion section. In the 1950s and 1960s, Ti alloys accounted for less than 2% of the structural weight of commercial aircraft, whereas they account for nearly 9% of the structural weight of the Boeing 777 (Peters et al. 2003). Examples of titanium alloys and the airframe components in which they are used are provided in Table 5.8 (Inagaki et al. 2014).

Commercially pure (CP) titanium is available in four grades, which are categorized by strength, ductility, and formability (workability). CP1 has the lowest oxygen level, the highest corrosion resistance and formability, and the lowest strength, while CP4 has the highest oxygen level, the highest strength within this family, and moderate formability. CP grades are used for aircraft floors and for the ducts and piping of the water supply systems in the onboard galleys and lavatories, where low weight and good corrosion resistance are important. Likewise, commercially pure titanium is the material of choice for the piping of deicing equipment, where corrosion resistance and thermal stability are important. Ti-3Al-2.5V is a near-α alloy that is stronger than CP titanium and more cold-workable than Ti-6Al-4V; it is used for high-pressure hydraulic tubing in commercial aircraft, including the Airbus A380. Each A380 contains approximately 1 km of hydraulic tubing, and using titanium tubing saves 42% in weight compared with an equivalent quantity of stainless-steel tubing (Fine Tubes 2018). Because Ti-3Al-2.5V can be produced in strip and foil form, it is also used in the cores of aircraft honeycomb panels (Boyer 1996, 2010).
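As a rough check on the 42% figure, the sketch below assumes the titanium tubing has exactly the same dimensions as the stainless-steel tubing it replaces, so that the mass ratio reduces to the density ratio. The stainless-steel density of 7.9 g/cm³ is an assumed typical value, not a number from the cited source, and real replacement tubing may also differ in wall thickness.

```python
# First-order estimate of the mass saved by substituting titanium for
# stainless-steel tubing of IDENTICAL geometry (same diameter, wall, length).
# With geometry fixed, mass scales directly with density.

RHO_TI = 4.5  # g/cm^3, titanium density quoted in the text
RHO_SS = 7.9  # g/cm^3, ASSUMED typical stainless-steel density


def mass_saving_same_geometry(rho_new: float, rho_old: float) -> float:
    """Return the fractional mass saving when only the density changes."""
    return 1.0 - rho_new / rho_old


saving = mass_saving_same_geometry(RHO_TI, RHO_SS)
print(f"Estimated mass saving: {saving:.0%}")
```

Under these assumptions the estimate comes out at about 43%, close to the quoted 42%, which suggests that most of the saving comes from the density difference alone.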

The workhorse of the titanium industry is the α + β alloy Ti-6Al-4V. One of its main attributes is that it is a forgiving alloy to work with. It is normally used at a minimum tensile strength of around 896 MPa, has good fatigue and fracture properties, and is used in all product forms, including forgings, bars, castings, foil, sheet, plate, extrusions, tubing, and fasteners. Four heat treatments are commonly used for Ti-6Al-4V (Boyer 1996, 2010):

i. Mill anneal (MA or A): the most common heat treatment, giving a strength of 896 MPa, a fracture toughness of ~66 MPa√m, and reasonable fatigue crack growth rates.

ii. Recrystallization anneal (RA): usually combined with the extra-low-interstitial (ELI) grade of Ti-6Al-4V, this is a more damage-tolerant heat treatment. The alloy has slightly lower strength than in the MA condition but improved fracture toughness (minimum of 77 MPa√m) and fatigue crack growth resistance, and it is used for fracture-critical applications in the B-1 and B-2 bombers.

iii. Beta anneal (BA): used with both the standard and ELI grades. Strength is somewhat reduced and fatigue strength is degraded, whereas fracture toughness (minimum of 88 MPa√m) and fatigue crack growth resistance are maximized. This heat treatment is used for damage-tolerant components in the F-22 fighter and in the critical fittings that attach the composite empennage to the fuselage of the Boeing 777.

iv. Solution treated and aged (STA): provides the maximum strength, but full hardening is limited to sections about 25 mm thick. Titanium fasteners (thousands of them on each commercial aircraft) are used in the STA condition at a minimum strength of 1100 MPa, with fasteners larger than 19 mm in diameter used at a slightly lower strength. This heat treatment is not used for shaped components, because the thermal stresses induced during water quenching are not relieved during aging and lead to part warpage during machining.

The Boeing 757 uses titanium (Ti-6Al-4V) for its landing gear beam, a forging about 4.5 m long and 375 mm wide at its widest point that weighs over 815 kg (Boyer 1996, 2010). Titanium was chosen because of volume constraints: from a cost perspective, a high-strength aluminum alloy such as 7075 would have been the preferred material, but an aluminum component able to carry the required loads would not fit within the envelope of the wing. Steel could have been used, but it would have been heavier because of its higher density.
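The volume constraint can be illustrated with a simple sizing rule: a member carrying an axial load P at an allowable stress σ needs a cross-sectional area A = P/σ. The sketch below compares Ti-6Al-4V, at the 896 MPa minimum strength quoted for the mill-annealed condition, with an aluminum alloy at an assumed allowable stress of 500 MPa. The aluminum value and the load are hypothetical round numbers chosen for illustration, and a real landing gear beam is also sized for bending, fatigue, and stiffness, not just axial stress.

```python
# Illustrative axial sizing: required area A = P / sigma_allow.
# Units: load in newtons, stress in MPa (N/mm^2), area in mm^2.

def required_area_mm2(load_n: float, allowable_mpa: float) -> float:
    """Cross-sectional area needed to carry load_n at allowable_mpa."""
    if allowable_mpa <= 0:
        raise ValueError("allowable stress must be positive")
    return load_n / allowable_mpa


LOAD_N = 1.0e6    # HYPOTHETICAL design load, 1 MN
SIGMA_TI = 896.0  # MPa, Ti-6Al-4V minimum strength from the text
SIGMA_AL = 500.0  # MPa, ASSUMED allowable for a high-strength Al alloy

area_ti = required_area_mm2(LOAD_N, SIGMA_TI)
area_al = required_area_mm2(LOAD_N, SIGMA_AL)

print(f"Ti-6Al-4V: {area_ti:.0f} mm^2")
print(f"Aluminum:  {area_al:.0f} mm^2")
print(f"Aluminum section is {area_al / area_ti:.2f}x larger")
```

With these assumed numbers the aluminum section must be roughly 1.8 times larger than the titanium one, the kind of growth that cannot be accommodated when the surrounding wing envelope is fixed.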

The cockpit window frames of the Boeing 757, 767, and 777 are machined from Ti-6Al-4V forgings in the BA condition, and the crown panel above them is fabricated from Ti-6Al-4V sheet (Boyer 1996). These parts must be made of high-strength Ti alloys because they have to withstand impact damage from bird strikes; aluminum frames are adequate for the other window frames. In the 777, superplastically formed Ti-6Al-4V sheet is used in the tail cone, and a casting is used for the exhaust duct of the auxiliary power unit (APU). Temperatures in these areas are too high for aluminum alloys, while nickel-based superalloys or steels would carry a high weight penalty. Furthermore, Ti-6Al-4V plate about 5 mm thick, 762 mm wide, and 3.3 m long is used in the fin deck of the 777, where the composite vertical fin attaches to the fuselage, and the other critical fittings that attach the horizontal and vertical composite fins to the fuselage are all made from BA Ti-6Al-4V forgings. Titanium is used here because its thermal expansion mismatch with the carbon fibers of the composite is small and because titanium is compatible with the graphite fibers of the empennage, which prevents galvanic corrosion problems. Hence, there is no need to rely on a corrosion protection system (Boyer 1996; Peters et al. 2003). Other α/β alloys such as Ti-6Al-6V-2Sn offer higher strength than Ti-6Al-4V and therefore greater weight savings, although at reduced fracture toughness; this alloy has replaced Ti-6Al-4V in some applications.
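The benefit of a small thermal expansion mismatch can be quantified, to first order, by the free mismatch strain Δα·ΔT that develops between two joined materials when the temperature changes by ΔT. The coefficients of thermal expansion (CTEs) below are assumed representative values (they are not given in the text and vary with alloy and laminate lay-up), and the 80 K temperature swing is hypothetical.

```python
# Free mismatch strain between two joined materials: |alpha_a - alpha_b| * dT.
# All CTE values below are ASSUMED representative numbers (1/K).

CTE_TI = 8.6e-6    # ASSUMED, titanium alloy
CTE_AL = 23.0e-6   # ASSUMED, aluminum alloy
CTE_CFRP = 2.0e-6  # ASSUMED, carbon-fiber laminate (lay-up dependent)

DELTA_T = 80.0     # K, HYPOTHETICAL temperature swing


def mismatch_strain(alpha_a: float, alpha_b: float, delta_t: float) -> float:
    """Strain that would develop if the two parts expanded freely."""
    return abs(alpha_a - alpha_b) * delta_t


ti_cfrp = mismatch_strain(CTE_TI, CTE_CFRP, DELTA_T)
al_cfrp = mismatch_strain(CTE_AL, CTE_CFRP, DELTA_T)

print(f"Ti/CFRP mismatch strain: {ti_cfrp:.2e}")
print(f"Al/CFRP mismatch strain: {al_cfrp:.2e}")
print(f"Al/CFRP is {al_cfrp / ti_cfrp:.1f}x larger")
```

With these assumed values, an aluminum fitting attached to the composite would see roughly three times the mismatch strain of a titanium fitting, which is the first-order reason titanium is favored at these joints.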

Beta (β) titanium alloys can be heat treated to high strength levels (>1380 MPa) and therefore offer substantial latitude in tailoring strength-fracture toughness combinations, while also possessing good stress corrosion resistance (the ratio of the stress corrosion threshold to the fracture toughness is in the range of 0.8–1.0). They also offer fabrication advantages for sheet production because they can be cold-rolled. The interested reader is referred to a recent overview of commercial β alloy developments, the underlying metallurgical principles and common microstructures, and the successful and potential future applications of β titanium alloys in the aircraft industry (Cotton et al. 2015).

Ti-13V-11Cr-3Al, a β alloy, was used extensively in the SR-71 “Blackbird” for the wing and body skins, longerons, ribs, bulkheads, and almost all of the main and nose landing gear; it was selected primarily for its thermal stability. However, designers no longer consider its balance of properties adequate for aircraft structure, and newer, better alloys are available.

Another β alloy that has seen use in modern aircraft, because it can be produced in strip form, is Ti-15V-3Cr-3Al-3Sn. It has been used to make various springs for aircraft applications, since titanium in general is an excellent spring material: with about 60% of the density and 50% of the modulus of steel, it provides significant weight and volume savings, and titanium springs have no corrosion problems. This alloy is also used in the environmental control system (ECS) ducting of the Boeing 777; the ECS provides air supply, thermal control, cabin pressurization, and avionics cooling, enables smoke detection, and provides fire suppression. About 49 m of 178-mm-diameter ducting, with wall thicknesses ranging from 0.5 mm to 1.0 mm, is used per aircraft (Boyer 1996). Replacing the lower-strength CP titanium used previously with thinner-walled ducts of this alloy saved weight. Castings of this alloy (at a strength level of 1140 MPa) were also used in the cargo handling area of the Boeing 777 and in the APU vibration isolator mounts, where they replaced high-strength stainless steel, resulting in significant weight savings.
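The weight and volume savings of titanium springs follow from the elastic energy a spring stores per unit volume, σ²/(2E). For two springs storing the same energy at the same working stress, the required volume scales with E and the mass with E·ρ. The sketch below uses only the ratios quoted above (about 60% of the density and 50% of the modulus of steel) and assumes equal working stress in both materials; the `stress_ratio` parameter is a hypothetical addition showing how the result changes if the two materials are worked at different stresses.

```python
# Relative size of a titanium spring vs. a steel spring storing the same
# elastic energy. Energy per unit volume is sigma**2 / (2 * E), so the
# volume needed for a given energy scales as E / sigma**2.

def spring_ratios(density_ratio: float, modulus_ratio: float,
                  stress_ratio: float = 1.0) -> tuple[float, float]:
    """Return (volume_ratio, mass_ratio), both titanium / steel.

    density_ratio: rho_Ti / rho_steel
    modulus_ratio: E_Ti / E_steel
    stress_ratio:  sigma_Ti / sigma_steel (ASSUMED 1.0, equal working stress)
    """
    volume_ratio = modulus_ratio / stress_ratio ** 2
    mass_ratio = volume_ratio * density_ratio
    return volume_ratio, mass_ratio


vol, mass = spring_ratios(density_ratio=0.6, modulus_ratio=0.5)
print(f"Ti spring volume: {vol:.0%} of steel; mass: {mass:.0%} of steel")
```

At equal working stress, the titanium spring needs about half the volume and about 30% of the mass of the steel spring. If the titanium were instead worked at, say, 80% of the steel's stress (`stress_ratio=0.8`), the volume ratio rises to about 0.78 and the mass ratio to about 0.47, so the advantage shrinks but remains.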

Ti-10V-2Fe-3Al is a β alloy used as forgings at three different strength levels (965 MPa, 1105 MPa, and 1190 MPa) (Boyer 1996). It also has excellent fatigue properties, and almost the entire main landing gear of the Boeing 777 is built of this alloy. Replacing a high-strength steel with it saved 270 kg per airplane and simultaneously eliminated the possibility of stress corrosion cracking, which would have been an issue with the steel.

Titanium is in general very resistant to corrosion. One of the few corrosive media in the aerospace environment is hot hydraulic fluid: the hydraulic fluid used in commercial aircraft breaks down and forms an organophosphoric acid at temperatures above ~130 °C (Boyer 1996, 2010). This acid can etch titanium, reduce its gauge section, and generate hydrogen that can cause embrittlement. Beta 21S, a Ti-15Mo-2.7Nb-3Al-0.2Si alloy developed by TIMET (Titanium Metals Corporation), is the only titanium alloy immune to this attack. Steel- and nickel-based alloys were previously used in these areas, but the development of this alloy enabled titanium to be used in the APU and nacelle areas of the 777, with significant weight savings.

These examples illustrate how metals and alloys have continuously evolved to meet the needs of the airframe industry and keep it competitive as regulatory and market demands change. Considering that most of the materials and design evolution of aluminum and titanium alloys has taken place over only the past 60–70 years, the advance of commercial airframe technology is impressive. This progress also sets the stage for an alternative to lightweight, high-performance metals and alloys, namely, composite materials.

Composite Materials

Polymer Matrix Composites (PMCs)

The primary motivation for replacing metallic materials with PMCs in aircraft structures has been weight reduction, together with increased structural robustness (mechanical stiffness, strength, damage tolerance, etc.) and performance. These composites, which consist of a polymer matrix reinforced with embedded fibers of other materials, have thus played a transformative role in increasing fuel efficiency and reducing greenhouse gas emissions, and their use in aircraft structures has accelerated in recent years. Figure 5.17 shows the trend in the use of polymer matrix composites (PMCs) in commercial aircraft over the years. Initially, the amount of PMCs used in aircraft was small, primarily in light structural and cabin components. Airbus was the first to introduce an all-PMC tail section, in 1988 in its A320 fleet. About 11% of the Boeing 777, which entered service in 1995, was made of PMCs by weight (excluding engines) (Irving and Soutis 2015; Rana and Fangueiro 2016). By 2011, when the Boeing 787 Dreamliner was first introduced commercially, an impressive 50% of its weight was PMCs (Fig. 5.17; Irving and Soutis 2015; Rana and Fangueiro 2016). This largely composite aircraft has a range of 7650–8500 miles and seats 210–290 passengers. The latest Airbus A350 XWB boasts 53% PMCs by weight, with a range of up to 8500 miles, and seats 250–400 passengers. This progress is largely due to the penetration of PMCs into true structural applications, including wings, fuselage skins, landing gear, and even engines, together with the cost-effectiveness of composites (Irving and Soutis 2015; Rana and Fangueiro 2016). Figure 5.18 compares the material makeup of Boeing aircraft over the years, showing that PMC parts have replaced many parts previously made of aluminum alloys, resulting in a total weight saving of about 30% (Drew and Mouawad 2013). Also shown in Fig. 5.18 is the makeup of the skin structure of the Boeing 787 Dreamliner.

Percentage of total structural weight attributed to composites in commercial airplane models over time (Rana and Fangueiro 2016). (Image used with permission from Elsevier Books)

In addition to weight reduction, PMCs offer several other important advantages (Irving and Soutis 2015; Rana and Fangueiro 2016). Modern PMCs have higher static strength, fatigue resistance, toughness, damage tolerance, and corrosion resistance than metallic alloys. PMCs are also better at shielding electromagnetic waves, and they can be designed to have better thermal stability over a wide temperature range. Furthermore, PMCs are more amenable to embedding or integrating structural health monitoring (SHM) systems and actuators to create “smart” materials. In terms of manufacturing, assembly, and maintenance, PMC components can be formed into complex shapes that require less assembly, and they can be repaired. Net-shape PMC components therefore require significantly fewer joints and heavy fasteners (rivets, screws, bolts, nuts, etc.), which can be sources of failure in metallic components. This is driving an industry-wide trend toward fewer components in overall assemblies, using one-piece designs wherever possible (Rana and Fangueiro 2016). There are, however, still some manufacturing issues and safety concerns, as composites technology is not as mature as that of metals and alloys. Progress is being made to address these concerns, and composites are here to stay, since the performance, efficiency, and cost benefits they offer have proven too great to pass up.

The bulk of the PMCs used in aircraft have continuous fibers (such materials are also called continuous fiber-reinforced polymers, or CFRPs) with a high Young’s modulus (E) (see Table 5.9) (Chawla 1998). As the name implies, the matrix in PMCs is a polymer, which has a low E (typically only a few GPa for the epoxies widely used in aircraft). Introducing unidirectional continuous fibers into the polymer matrix, with excellent bonding between the two, gives the PMC synergistic, significant improvements in a combination of mechanical properties (stiffness, strength, and toughness) not seen in the individual materials. Typically, PMCs contain 10%–60% fibers by volume. This uniqueness of composites is captured in Ashby’s materials selection maps (density-stiffness and strength-toughness) displayed in Fig. 5.19 (Ashby et al. 2007).

Density-stiffness (left) and strength-toughness (right) “Ashby” design maps (Lovatt et al. 2000). (Data courtesy of Granta Design, Ltd., Cambridge, UK)
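How strongly the fiber content controls stiffness can be illustrated with the classical rule of mixtures, which is not described in the text but is the standard first estimate for unidirectional composites. Along the fibers (iso-strain), E_L = V_f E_f + (1 - V_f) E_m; across the fibers (iso-stress), 1/E_T = V_f/E_f + (1 - V_f)/E_m. The sketch assumes a standard-modulus carbon fiber with E_f = 230 GPa and an epoxy matrix with E_m = 3.5 GPa (typical values, not taken from Table 5.9) and evaluates fiber volume fractions spanning the 10%–60% range mentioned above.

```python
# Rule-of-mixtures estimates for a unidirectional fiber/polymer composite.
# Moduli in GPa; vf is the fiber volume fraction (0..1).

E_FIBER = 230.0  # GPa, ASSUMED standard-modulus carbon fiber
E_MATRIX = 3.5   # GPa, ASSUMED epoxy matrix


def longitudinal_modulus(vf: float, e_f: float, e_m: float) -> float:
    """Iso-strain (Voigt) estimate: load applied along the fibers."""
    return vf * e_f + (1.0 - vf) * e_m


def transverse_modulus(vf: float, e_f: float, e_m: float) -> float:
    """Iso-stress (Reuss) estimate: load applied across the fibers."""
    return 1.0 / (vf / e_f + (1.0 - vf) / e_m)


for vf in (0.10, 0.30, 0.60):
    e_l = longitudinal_modulus(vf, E_FIBER, E_MATRIX)
    e_t = transverse_modulus(vf, E_FIBER, E_MATRIX)
    print(f"Vf = {vf:.0%}: E_L = {e_l:6.1f} GPa, E_T = {e_t:4.1f} GPa")
```

Under these assumptions, E_L rises from roughly 26 GPa to roughly 139 GPa as the fiber fraction goes from 10% to 60%, while E_T stays below 10 GPa. The stiffness gain of a unidirectional PMC is therefore strongly directional and is carried almost entirely by the fibers.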