Abstract

Learning by doing, such as when learners give explanations to peers in collaborative learning, is known to be an effective strategy for gaining knowledge. This study used two types of facilitation technology in a simple explanation task to experimentally investigate their influence on learners’ understanding of their own assigned concept during a collaborative explanation activity. Dyads were given a topic in cognitive psychology and were required to explain it to each other using two different theoretical concepts, each of which was provided separately to one member of the dyad. Two types of facilitation were examined: (1) use of a pedagogical conversational agent (PCA) and (2) visual gaze feedback using eye-tracking sensors. The PCA was expected to support task-based activity (task-work), and visual gaze feedback was expected to support learner coordination within the dyads (team-work). Results show that, for explanations of the learner’s own concept, gaze feedback was effective when there was no PCA, and the PCA was effective when there was no gaze feedback. This work provides preliminary implications for designing collaborative learning technologies using tutoring agents and sensing technology.

1 Introduction

Inspired by Vygotsky’s socio-cognitive perspective [27], many socio-constructivism researchers have analyzed group interactions and investigated the characteristics of successful and unsuccessful learners in such practices. Moreover, with the emergence of technological innovations such as sensing technology and the development of automated systems such as conversational agents, there have been attempts to design new tutoring systems that enable greater support of social learning [12]. In such systems, pedagogical agents can play a role as a social actor, e.g., a teacher. Sensing technology has been used to detect users’ mental states and as an awareness tool, to support productive interactions among students in social learning [4]. The present study focused on collaborative learning dyads who engage in a concept explanation task and investigated the relative effectiveness of two technologies for supporting learning performance in such activities. We conducted a factorial analysis to determine the effects of using (1) pedagogical agents and (2) gaze-sensing technology for facilitating awareness of collaborative partners.

1.1 Collaborative Learning and Knowledge Integration

Studies in cognitive science have shown that constructive activities such as self-explanation are a metacognitive strategy effective over a wide range of task domains [1, 6]. Furthermore, studies in learning science have shown that collaborative interaction enables learners to develop conceptual understandings [10], conceptual changes [22], and higher-level representations [25]. It is also known that the visualization of a problem from different perspectives can be achieved via explanation activities [26]. Classroom practices based on these notions, called “jigsaw learning” [2], are a known technique for facilitating such cognitive processes by explanation activities conducted in groups. Scenarios in which one is required to consider different perspectives may create opportunities to integrate other knowledge and thus develop a higher, more abstract representation of the content [22].

Although constructive interactions such as explanation activities in collaborative learning between partners having different knowledge are an ideal strategy for gaining new knowledge, certain aspects of learners’ cognition and communication should be considered when designing collaborative tutoring systems. As self-explanation studies have shown, unsuccessful learners fail to develop self-monitoring states [6]. It is important to design tutoring systems that facilitate such metacognitive activity in learners and enable them to generate explanations that refine and expand content and problems. Intelligent tutoring systems (ITSs) have proved effective in facilitating such metacognition in learner–system interactions [9, 19]. Recent studies on ITSs have shown the effects of teaching via tutoring systems [5] and have developed conversational agents that have rich detectors for capturing the learner’s state and that generate facilitation prompts [8]. Other studies have investigated the relative effectiveness of various types of facilitation prompts given by agents in self-regulated learning [3]. The present study terms the activity supported by such tutoring systems “task-work”.

However, most studies have investigated knowledge development and learners’ cognitive states through one-to-one interaction with the tutoring system; the number of studies on learning in multiple parties is relatively small. Additionally, social science studies focusing on psychological outputs in group-based activities have pointed out the disadvantages of multi-party learning [15], such as the difficulty of developing common ground between learners [7]. Because communication plays an important role in collaborative problem solving, we consider communication support to be an important factor in ITS design. We define this as “team-work”.

1.2 Supporting Task-Work and Team-Work in Collaborative Learning

Pedagogical Conversational Agent. The emerging technology for developing pedagogical conversational agents (PCAs) as virtual teachers has become recognized as an effective way to support learners. The use of conversational agents in collaborative problem solving has been shown to be effective in prompting achievement of goals [16], providing periodic initiation opportunities [20], collaboratively setting subgoals together [11] and showing scripted dialogues to learners [23]. Several studies have investigated the influence of a PCA’s functional design on knowledge explanation tasks such as providing emotional feedback [12], using multiple PCAs upon feedback [13], and using gaze gestures during learner–learner interactions [14]. However, these studies found evidence that learners sometimes ignore or misuse the PCA. Other problems include PCAs’ inability to fully support learner coordination during the activity. It is still not clearly understood what kinds of technology may facilitate the learning process when using a PCA.

Visual Gaze and Real-Time Feedback. As mentioned, one of the problems in collaborative learning on a concept explanation task is the hurdle of establishing common ground between the learners. Cooperative tasks such as the speaker’s language expression and the listener’s understanding process require mutual awareness, prompting the development of awareness tools to support interaction among students in computer-supported collaborative learning (CSCL). Recent studies have shown that providing feedback on the visual gaze of collaborative partners using eye-trackers [17], affording an indication of where the other learner may be looking in the same computer screen, can facilitate the achievement of joint attention.

Previous studies in communication [21] suggest that the degree of gaze recurrence in speaker–listener dyads is correlated with collaborative performance such as understanding and establishing common ground, showing that common knowledge grounding positively influences the coordination of visual attention. Several studies have investigated the use of visibly showing a partner’s gaze during a distance computer learning task [17]. Dyads collaborated remotely on a learning task. In one condition, participants were given information about the partner’s eye gaze on the screen; in a control group, they were not. Results showed that real-time mutual gaze perception intervention helped students achieve a higher quality of collaboration. However, these studies only investigated effects on the success of group coordination. It is not fully understood how such technologies can facilitate learning during collaborations in which PCAs are guiding the learners. With this in mind, in this study we took a broader view, focusing on both task-work and team-work simultaneously to see how the two factors may influence collaborative learning performance.

1.3 Aim of This Study

The aim of this study was to investigate the effects of two technologies for tutoring systems in peer collaborative learning. It focused on the effects of (1) prompting metacognitive suggestions using PCAs and (2) enhancing peer learners’ awareness by providing their gaze information. This paper documents the effects on learning performance, focusing especially on the learner’s ability to construct a deeper understanding of his/her own knowledge.

2 Method

Eighty Japanese students, all freshman-year psychology majors, participated in the experiment in exchange for course credit. Hereafter they are called “learners”; they participated in dyads. When participants arrived at the experiment room, the experimenter thanked them for their participation. The experimenter gave instructions for the task, explaining that they would participate in a scientific explanation task in which they would use technical concepts to explain human mental processing. Before the main task, they were given a free-recall test about the concepts in order to ensure that they did not already know the concepts that would be used in the task. Next, they performed the main explanation task for 10 min. Then, they took the post-test, which was another free-recall test. Finally, they were debriefed.

The dyad’s goal was to explain a topic in cognitive science (e.g., human information processing in language perception) by using two technical concepts (e.g., “top-down processing,” “bottom-up processing”). As in the “jigsaw” method studied in learning science and popularly used in classrooms for knowledge building, we set up a scenario in which the learners did not know each other’s concepts. The experimenter separately provided each of the learners with one of the two concepts. Thus, to be able to explain the topic using the two concepts, they needed to exchange their knowledge via explanations.

The first step was for each learner to explain his/her assigned concept to his/her partner. The concept was provided to the learner before the task began, and a brief description of the concept was also shown to him/her throughout the task. On starting the task, the learners were requested to first read the description and then explain its meaning to their partner. Learners were free to ask questions and discuss the assigned concept with their partners. After one learner finished his/her explanation of his/her assigned concept, they switched roles, and the other learner explained his/her concept. Each learner was also instructed that he/she would need to explain his/her partner’s concept so they would both be able to explain the topic using the two technical concepts.

2.1 Experimental System

The present study used a redeveloped version of a system designed for previous studies [12,13,14], which was developed in Java for a server–client network platform. For this study’s purposes, the system featured (1) a PCA that provides metacognitive suggestions to facilitate the explanation activities and (2) real-time feedback on the partner’s visual gaze (gaze feedback). Each learner sat before a computer display. They were not able to see each other but were able to communicate with each other orally, and they were instructed to look at the display while conversing with each other.

Participants’ Screens and Gaze Feedback. To start, the screens simultaneously changed to the displays shown in Fig. 1. The brief explanation of the assigned concept was presented on the monitor of the corresponding learner, and the explanation of the other learner’s concept was covered so they could not simply read and proceed as individuals.

The study used two eye-trackers (Tobii X2-30) for gaze feedback; a program was developed to show the visual gaze of the partner during the task as a red square in real time. Since the participants were instructed to begin by reading the text on their screens, it was expected that while one partner (learner B) explained his/her concept by looking at the area with the explanation of the concept, the listener (learner A) would also look at the same area as they proceeded.

PCA. In the center of the screen was an embodied PCA, which included physical movement upon speech, and a text box underneath for displaying messages. The experimenter sat to one side in the experiment room and manually signaled the PCA when to provide the metacognitive suggestions. A signal was issued whenever there was a momentary gap during the dyad’s conversation, but no more than one signal was issued within one minute. A rule-based generator determined the type of metacognitive suggestion to offer from among five types based on [12,13,14].
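In the study, the signalling rule described above was applied manually by the experimenter. As a minimal sketch only, assuming a hypothetical gap detector that reports conversational pauses as timestamped events, the constraint of at most one signal per minute could be expressed as follows. The class name `PromptThrottle`, its fields, and the event format are illustrative assumptions and are not part of the system used in the experiment.

```python
from dataclasses import dataclass
from typing import Optional

# From the text: no more than one signal was issued within one minute.
MIN_INTERVAL_S = 60.0


@dataclass
class PromptThrottle:
    """Hypothetical helper: decides whether a PCA prompt may be signalled."""

    min_interval_s: float = MIN_INTERVAL_S
    last_signal_s: Optional[float] = None  # time of the previous signal, if any

    def should_signal(self, now_s: float, gap_detected: bool) -> bool:
        # A signal is only considered when the dyad's conversation pauses.
        if not gap_detected:
            return False
        # Suppress the signal if the previous one was less than a minute ago.
        if (
            self.last_signal_s is not None
            and now_s - self.last_signal_s < self.min_interval_s
        ):
            return False
        self.last_signal_s = now_s
        return True


# Illustrative (made-up) timeline: (seconds since task start, gap detected?)
events = [(5.0, True), (20.0, True), (50.0, False),
          (70.0, True), (100.0, True), (131.0, True)]
throttle = PromptThrottle()
signalled_at = [t for t, gap in events if throttle.should_signal(t, gap)]
print(signalled_at)
```

With this made-up timeline, the pauses at 20 s and 100 s are skipped because they fall within one minute of the previous signal.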

2.2 Experimental Design

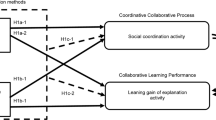

To investigate the effects of the two facilitation methods employed in this study, we implemented a \(2 \times 2\) experimental design (Table 1), each factor representing the absence or presence of the corresponding method (PCA or gaze feedback).

It was expected that the PCA would be effective for task-work and gaze feedback for awareness of others, thus relating to team-work.

2.3 Data and Analysis

The dependent variable was the gain score derived from the pre- and post-test scores, and the results report the effects of the two factors on it. We coded the responses to the free-recall pre- and post-tests, restricting the coding to each learner’s explanation of the concept assigned to himself/herself. Understanding of one’s own concept was scored as follows: (1) 0 points: incorrect; (2) 1 point: naive but correct explanation; (3) 2 points: concrete explanation based on the materials presented; (4) 3 points: concrete explanation based on the materials presented that also uses examples and metacognitive interpretations. The gain scores used for the factorial analysis were calculated by subtracting the pre-test scores from the post-test scores.
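The scoring and gain-score calculation described above can be illustrated with a short sketch. The rubric labels paraphrase the four levels in the text; the function name `gain_score` and the example scores are hypothetical and do not come from the study's data.

```python
# Rubric levels paraphrased from the coding scheme (0 to 3 points).
RUBRIC = {
    0: "incorrect",
    1: "naive but correct explanation",
    2: "concrete explanation based on the materials presented",
    3: "concrete explanation with examples and metacognitive interpretations",
}


def gain_score(pre: int, post: int) -> int:
    """Return post-test score minus pre-test score for one learner."""
    for label, score in (("pre", pre), ("post", post)):
        if score not in RUBRIC:
            raise ValueError(f"{label}-test score must be one of {sorted(RUBRIC)}")
    return post - pre


# Hypothetical learners: (pre-test score, post-test score)
example_scores = [(0, 2), (1, 1), (0, 3)]
gains = [gain_score(pre, post) for pre, post in example_scores]
print(gains)
```

Because both tests use the same 0 to 3 scale, a gain score can range from -3 to 3.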

3 Results

A \(2 \times 2\) between-subjects ANOVA was conducted on the gain score. Figure 2 shows the average gain score for each condition according to the concept explained. There was a significant interaction between the two factors \((F(1,76) = 4.3563, p < .05, \eta ^2_p = .0542)\). Further analysis of the simple main effects shows that the score for the with-gaze-feedback condition was higher than that for the without-gaze-feedback condition when no PCA was used \((F(1,76) = 7.5622, p < .01, \eta ^2_p = .0905)\). Additionally, the score for with-PCA was higher than for without-PCA when learners did not receive visible feedback about their partners’ gaze \((F(1,76) = 9.4563, p < .01, \eta ^2_p = .1107)\).
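As a consistency check on the statistics above, partial eta squared can be recovered from an F value and its degrees of freedom using the standard identity \(\eta ^2_p = F \cdot df_{effect} / (F \cdot df_{effect} + df_{error})\). The sketch below applies it to the three reported tests; the function name and dictionary keys are illustrative, and the error degrees of freedom are taken as the 80 participants minus the four cells of the design.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared derived from an F statistic."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


N_PARTICIPANTS = 80      # reported sample size
N_CELLS = 2 * 2          # PCA (absent/present) x gaze feedback (absent/present)
DF_ERROR = N_PARTICIPANTS - N_CELLS  # matches the reported F(1, 76)

# Reported F values paired with the reported partial eta squared.
reported = {
    "interaction": (4.3563, 0.0542),
    "gaze feedback, no PCA": (7.5622, 0.0905),
    "PCA, no gaze feedback": (9.4563, 0.1107),
}

for name, (f_value, eta_reported) in reported.items():
    eta = partial_eta_squared(f_value, 1, DF_ERROR)
    print(f"{name}: computed {eta:.4f}, reported {eta_reported:.4f}")
```

The computed values agree with the reported effect sizes to four decimal places.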

4 Discussion

The results for the explanation of the learner’s own concept show an interaction between the two factors. The results reveal that the visible gaze feedback was effective when no PCA was presented to the learners. This finding is consistent with those of previous studies [17, 24] even though they used other types of tasks and other dependent variables. Although gaze feedback is effective for gaining knowledge through explanation activities, no effect was found for gaze feedback when the PCA was present. Likewise, on this dependent variable the advantage of using the PCA appeared only when there was no gaze feedback. These results are interesting in that there was no synergistic effect between the two technologies, but also no negative influence. Some participants in the with-PCA/with-gaze-feedback condition may have paid attention only to the PCA or only to the gaze feedback cues because of their limited attention capacity and thus paid less attention to the other system function. Further investigation of how they attended to the PCA and the partner’s gaze can provide more details about this point and remains a future task. Moreover, the learners’ explanation performance can be reanalyzed using coding methods that focus on coordination and knowledge integration. These are challenges for the future.

One interesting observation is that the post-test scores were relatively low. The average post-test scores by condition were: without-PCA/without-gaze-feedback, 1.12; without-PCA/with-gaze-feedback, 1.68; with-PCA/without-gaze-feedback, 1.92; with-PCA/with-gaze-feedback, 1.79. These averages, all below 2, indicate that many learners used naive explanation strategies. This is particularly interesting given that the tendency appeared when they were giving explanations of their own assigned concept. Such egocentric bias is also seen in collaborative problem-solving tasks studied in cognitive science and is considered to arise during communication [18].

5 Conclusion

In collaborative learning, explaining is known to be an effective strategy for reflecting and gaining knowledge. Building on this idea, this study was an experimental investigation using a simple explanation task in which two learners having different knowledge were asked to explain a particular topic in cognitive science. The purpose was to investigate the kinds of technology that might help learners gain knowledge about their own concept. The study focused on two types of technology, each designed to facilitate a different aspect of collaborative learning: task-work and team-work. The first was the use of a PCA that provided metacognitive suggestion prompts; this was expected to facilitate the task-work of explanation activities. The second was the use of sensing technology showing the partner’s gaze location; it was expected that such a visual aid would provide a better opportunity to coordinate and establish common ground. Used together, these two technologies were expected to facilitate learning performance, which was measured by the dependent variable, the level of understanding of one’s own concept. The results on explaining one’s own concept show that gaze feedback was effective when there was no PCA, and the PCA was effective when there was no gaze feedback. Analysis of the interaction process related to coordination, and evaluation of performance in terms of knowledge integration, remain challenges for future work. This work provides preliminary results and contributes to the development and design of collaborative learning technologies using tutoring agents and sensing technology.

References

1. Aleven, V.A., Koedinger, K.R.: An effective metacognitive strategy: learning by doing and explaining with a computer-based cognitive tutor. Cogn. Sci. 26(2), 147–179 (2002)

2. Aronson, E., Patnoe, S.: The Jigsaw Classroom: Building Cooperation in the Classroom, 2nd edn. Addison Wesley Longman, New York (1997)

3. Azevedo, R., Cromley, J.: Does training on self-regulated learning facilitate students’ learning with hypermedia? J. Educ. Psychol. 96(3), 523–535 (2004)

4. Ben Khedher, A., Jraidi, I., Frasson, C.: Assessing learners’ reasoning using eye tracking and a sequence alignment method. In: Huang, D.-S., Jo, K.-H., Figueroa-García, J.C. (eds.) ICIC 2017. LNCS, vol. 10362, pp. 47–57. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-63312-1_5

5. Biswas, G., Leelawong, K., Schwartz, D., Vye, N.: Learning by teaching: a new paradigm for educational software. Appl. Artif. Intell. 19(3), 363–392 (2005)

6. Chi, M., Leeuw, N., Chiu, M., Lavancher, C.: Eliciting self-explanations improves understanding. Cogn. Sci. 18(3), 439–477 (1994)

7. Clark, H.H., Brennan, S.E.: Grounding in communication. In: Resnick, B.L., Levine, M.R., Teasley, D.S. (eds.) Perspectives on Socially Shared Cognition, pp. 127–149. APA Press (1991)

8. D’Mello, S., Olney, A., Williams, C., Hays, P.: Gaze tutor: a gaze-reactive intelligent tutoring system. Int. J. Hum Comput Stud. 70(5), 377–398 (2012)

9. Graesser, A., McNamara, D.: Self-regulated learning in learning environments with pedagogical agents that interact in natural language. Educ. Psychol. 45(4), 234–244 (2010)

10. Greeno, G.J., de Sande, C.: Perspectival understanding of conceptions and conceptual growth in interaction. Educ. Psychol. 42(1), 9–23 (2007)

11. Harley, J.M., Taub, M., Azevedo, R., Bouchet, F.: “Let’s set up some subgoals”: understanding human-pedagogical agent collaborations and their implications for learning and prompt and feedback compliance. IEEE Trans. Learn. Technol. 11(1), 54–66 (2017)

12. Hayashi, Y.: On pedagogical effects of learner-support agents in collaborative interaction. In: Cerri, S.A., Clancey, W.J., Papadourakis, G., Panourgia, K. (eds.) ITS 2012. LNCS, vol. 7315, pp. 22–32. Springer, Heidelberg (2012). https://doi.org/10.1007/978-3-642-30950-2_3

13. Hayashi, Y.: Togetherness: multiple pedagogical conversational agents as companions in collaborative learning. In: Trausan-Matu, S., Boyer, K.E., Crosby, M., Panourgia, K. (eds.) ITS 2014. LNCS, vol. 8474, pp. 114–123. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07221-0_14

14. Hayashi, Y.: Coordinating knowledge integration with pedagogical agents. In: Micarelli, A., Stamper, J., Panourgia, K. (eds.) ITS 2016. LNCS, vol. 9684, pp. 254–259. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-39583-8_26

15. Hill, G.W.: Groups versus individual performance: are N+1 heads better than one? Psychol. Bull. 91(3), 517–539 (1982)

16. Holmes, J.: Designing agents to support learning by explaining. Comput. Educ. 48(4), 523–547 (2007)

17. Jermann, P., Mullins, D., Nuessli, M.A., Dillenbourg, P.: Collaborative gaze footprints: correlates of interaction quality. In: Proceedings of CSCL 2011, pp. 184–191 (2011)

18. Keysar, B., Barr, J.D., Balin, A.J., Brauner, S.J.: Taking perspective in conversation: the role of mutual knowledge in comprehension. Psychol. Sci. 11(1), 32–38 (2000)

19. Koedinger, K.R., Anderson, J.R., Hadley, W.H., Mark, M.A.: Intelligent tutoring goes to school in the big city. Int. J. Artif. Intell. Educ. (IJAIED) 8, 30–43 (1997)

20. Kumar, R., Rose, C.: Architecture for building conversational agents that support collaborative learning. IEEE Trans. Learn. Technol. 4(1), 21–34 (2011)

21. Richardson, D.C., Dale, R.: Looking to understand: the coupling between speakers’ and listeners’ eye movements and its relationship to discourse comprehension. Cogn. Sci. 29(6), 1045–1060 (2005)

22. Roschelle, J.: Learning by collaborating: convergent conceptual change. J. Learn. Sci. 2(3), 235–276 (1992)

23. Rummel, N., Spada, H., Hauser, S.: Learning to collaborate while being scripted or by observing a model. Int. J. Comput.-Supported Collab. Learn. 4(1), 69–92 (2009)

24. Schneider, B., Pea, R.: Toward collaboration sensing. Int. J. Comput.-Supported Collab. Learn. 4(9), 5–17 (2014)

25. Schwartz, L.D.: The emergence of abstract representation in dyad problem solving. J. Learn. Sci. 4, 321–354 (1995)

26. Shirouzu, H., Miyake, N., Masukawa, H.: Cognitively active externalization for situated reflection. Cogn. Sci. 26(4), 469–501 (2002)

27. Vygotsky, L.S.: The Development of Higher Psychological Processes. Harvard University Press, Cambridge (1980)

Acknowledgments

This work was supported by Grant-in-Aid for Scientific Research (C), Japan Society for the Promotion of Science (JSPS) KAKENHI Grant Number 16K00219.

© 2018 Springer International Publishing AG, part of Springer Nature

Cite this paper

Hayashi, Y. (2018). Gaze Feedback and Pedagogical Suggestions in Collaborative Learning. In: Nkambou, R., Azevedo, R., Vassileva, J. (eds) Intelligent Tutoring Systems. ITS 2018. Lecture Notes in Computer Science(), vol 10858. Springer, Cham. https://doi.org/10.1007/978-3-319-91464-0_8

DOI: https://doi.org/10.1007/978-3-319-91464-0_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91463-3

Online ISBN: 978-3-319-91464-0

eBook Packages: Computer Science (R0)