Abstract

Portable cameras record dynamic first-person video footage and these videos contain information on the motion of the individual to whom the camera is mounted, defined as ego. We address the task of discovering ego-motion from the video itself, without other external calibration information. We investigate the use of similarity transformations between successive video frames to extract signals reflecting ego-motions and their frequencies. We use novel graph-based unsupervised and semi-supervised learning algorithms to segment the video frames into different ego-motion categories. Our results show very accurate results on both choreographed test videos and ego-motion videos provided by the Los Angeles Police Department.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

- Inverse Compositional Algorithm

- Fidelity Points

- Real-world Video Data

- classificationClassification Algorithms

- Video Stabilization

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

Introduction

Affordable high-quality cameras for recording the first-person point-of-view experience, such as GoPro, are an increasingly common item in many aspects of people’s lives. In this paper, we present a novel approach for segmenting or indexing body-worn videos to different ego-motion categories.

Prior work on vision-based first-person human action analysis has focused a lot on indoor activities, such as object recognition [24], hand gesture recognition [18] [32], sign language recognition [29], context aware gesture recognition [28], hand tracking [31] and detecting daily life activities [23]. Work with body-worn sensors has also been shown to be effective for categorizing human actions and activities [11] [27]. An unsupervised ego-action learning method was proposed in [14] for sports videos. The basis of video indexing is to model the transformation between successive frames in the video. For the purpose of video indexing, several studies have examined parametric models of frame transformation [6, 13]. Parametric models can be also used for video stabilization [26], and panorama construction [12].

In this paper, we propose an approach to classify different ego-motion categories. We know that human motion observed from a first-person point-of-view can be captured by the global displacement between successive frames. This means that we should be able to aggregate global motion and marginalize out local outlier motion. We also know that motion involving the human gait has an inherent frequency component. Therefore we can expect that frequency analysis can be used as an important feature for ego-action categorization. We propose the use of a parametric model for calculating the simple global representation of motion. This approach produces a low dimensional representation of the motion of the ego. We then classify the ego-motion using novel graph-based semi-supervised and unsupervised learning algorithms. The algorithms are motivated by PDE-based image segmentation methods and achieve high performance in both accuracy and efficiency for different discrete data sets.

We consider the ego-motion classification problem with both benchmark and real-world data. Working with both types of data is critical because of the stark differences in the degree of difficulty in the analysis of video data collected under controlled and uncontrolled or “wild” conditions. Benchmark datasets with known ground truth are developed under experimental conditions controlled by the researcher. Such datasets attempt to simulate the types of behaviors that are of most interest. Simulations may favor positive outcomes because they seek not only to limit sources of error linked to video image quality, but also enhance target behaviors of interest. For example, experimental protocols that seek to enhance camera stability, ensure adequate lighting conditions, avoid obstructions may all assist in the algorithmic task. Ensuring that experimental participants enact well-defined or discrete transitions between different types of behavior, or exaggerate the differences between behavioral modes may favor accurate segmentation . We draw on choreographed video collected under controlled circumstances to develop our approach.

Videos not collected under controlled conditions may nevertheless be hand-labeled by the researcher to produce a ground truth. Such videos may be subject to many more quality challenges than simulated scenes. Actual behavior and conditions as they exist on the ground are unforgiving. People in real-world settings may not act in discrete, linear sequences, nor are they necessarily inclined to exaggerate their different actions for easy detection . Ego-motions may also proceed so quickly that they defy discrete recognition . We may also lack sufficient semantic categories to capture the diversity of real-world behavior. Real-world video systems may also not be state-of-the-art and therefore suffer from poor camera stability, low frame rate, low resolution, poor color saturation and data collection errors (both human and mechanical). All of these effects can drastically impact the ability of the researcher to label video for ground truth, which introduces errors into algorithmic methods. We draw on police body-worn video (BWV) to evaluate how our methods perform under challenging real-world conditions. Police BWV is typically shaky, contains noise from low light conditions, poor color saturation and occlusions, and represents diverse and often mixed motion routines.

The paper is organized as follows. In section “Motion Features”, we describe the method for motion feature extraction for two successive frames. In section “Classification Method”, we investigate the semi-supervised and unsupervised graph-based MBO algorithms for classification . In section “Experimental Results”, we elaborate on our experimental results using choreographed test video and real-world video data. Section “Conclusion” concludes the paper.

Motion Features

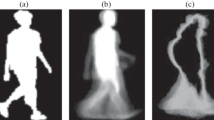

We characterize motion in a video sequence using a set of features. The features represent the relative movements of ego, the individual on whom the video camera is mounted. The features depend on the estimation of parametric models between successive frames and on the analysis of periodic signals of the motion through characteristic frequencies . We illustrate our method of constructing the motion features in Fig. 10.1. In section “Transformations Between Two Successive Frames”, we discuss how to use the inverse compositional algorithm to estimate the similarity transformation between successive frames. This transformation is represented by four parameters t x, t y, a and b. In section “Movement Signal”, we construct four of the features to be used for the video segmentation —horizontal displacement (x), vertical displacement (y ), angle of rotation (r) and zoom (z) using the similarity transformation . In addition, the characteristic frequencies of these four signals are computed using the method discussed in section “Frequency Signal”. In section “Equalization of Variance”, we combine the four movement features and four frequency features to obtain the eight-dimensional feature vector for each transformation between two successive frames. It is this feature vector that will be used for the graph-based machine learning method.

Transformations Between Two Successive Frames

To compute the motion of the video sequence, we estimate the similarity transformations between consecutive frames using the inverse compositional algorithm [1, 2]. It is possible to use more general parametric motions, such as affinities or homographies. However, their calculation is more prone to errors in the presence of camera shake. In any case, we find that the four parameters of the similarity are sufficient to characterize motion.

The inverse compositional algorithm is an improvement of the Lucas-Kanade method [2, 15] for image registration . Its implementation in [25] includes the use of robust error functions and estimates the correct transformation even in the presence of occlusions or multiple motions. Let I 1(x) and I 2(x) be two images, with \(\mathbf {x} = \left ( x,y \right )\). Let p be the global displacement vector between the two images and Δ p be the incremental displacement vector at each iteration. Let x ′(x;p, Δ p) be the correspondence map from the left to the right image, or equivalently two frames in a video sequence, parameterized by p and the incremental refinement Δ p. The energy model is given by

where \(\rho \left (\cdot \right )\) is a function that gives less weight to large values of the argument, where the difference in image intensities is big (e.g., ρ(s 2, λ) = 0.5s 2∕(s 2 + λ 2)).

Minimizing the energy with respect to Δ p yields:

with

and \(\rho ':=\rho '\left (\left |{\mathbf {I}}_2(\mathbf {x}'(\mathbf {x};\mathbf {p}))-{\mathbf {I}}_1(\mathbf {x})\right |{ }^2_2; \lambda \right )\). \(\mathbf {J}(\mathbf {x};\mathbf {p})=\frac {\partial \mathbf {x}'(\mathbf {x};\mathbf {p})}{\partial \mathbf {p}}\) is the Jacobian of the transformation. Table 10.1 lists the similarity transformation and its Jacobian using the parametrization proposed in [33].

The minimum of this energy provides the parameters of the transformation. To reach a highly accurate solution, the algorithm uses an iterative process. It also includes a coarse-to-fine strategy for estimating large displacements . See [25] for further details.

Movement Signal

Simple motions, such as horizontal (x) and vertical (y) movements, zoom (z) and rotation (r) information can be computed given the similarity. The procedure for calculating the displacement of the central pixel is shown in Algorithm 10.1.

Algorithm 10.1 Calculate the displacement of the central pixel

Since the similarity includes the composition of a zoom and rotation matrices, it is easy to obtain these coefficients from the parametrization of Table 10.1. In this case, the rotation r and zoom factor z are calculated as

The signals from raw video footage may have abnormally large values. We filter out these values in preprocessing. We replace the signal value by its mean μ, if the signal value is outside the (μ − 3σ, μ + 3σ) region where σ is the standard derivation. The filtered signals can still be very noisy. We use convolutions with a Gaussian function to smooth these signals, which is the basic idea in video stabilization [26].

We use the QUAD video data setFootnote 1 to examine ego-motion signals. We discuss the details of this data set in section “Experimental Results”.

The motion signals we calculate using Algorithm 10.1 and Eq. (10.4) are shown in Fig. 10.2. The left column gives the raw data x, y, z and r and the right column the corresponding filtered and smoothed data.

The periodic pattern correlates with the periodic actions in the QUAD video. The large oscillation of x corresponds to ego turning left and right repeatedly. The large oscillation of y corresponds to ego repeatedly looking up and looking down. The four peaks in z correspond to ego walking and running, since the frames zoom fast when the person is walking or running. The large oscillations of rotation r also correlate with the movements of turning left, turning right, looking up and looking down.

Frequency Signal

Some ego-motions are periodic, such as jumping, walking and running. Periodic motions have different characteristic frequencies . This observation leads us to investigate the frequencies of x, y, z and r using Fourier analysis. We use the short-time Fourier transform (STFT) to determine the sinusoidal frequency and phase content of local sections of a signal as it changes over time. In practice, the procedure for computing STFTs is to use a sliding window of fixed length and compute the Fourier transform as the window slides over the whole signal. We use the Hann window here:

As shown in Fig. 10.3, the Hann window is zero at the boundaries which reduces the artifacts at the boundary. The STFT is defined by:

where the length of the window is N and m indicates the window sampling rate.

The magnitude squared of the STFT yields the spectrogram of the function:

We use a five-second window in our experiments. We show the spectrogram of six different motions of the y signal in Fig. 10.4. The frequency is very small when the ego repeatedly turns left and right. The 2 s period is almost the same as when ego repeatedly looks up and down. Looking up and down causes a frequency at 0.6 Hz. The spectrogram of small steps and walking are very similar. The largest frequency is at 7.8 Hz. When ego walks at 0.5 s per step, the frequency is 2 Hz. However, because the GoPro camera is head-mounted, the camera also has an oscillation when ego is walking. This camera oscillation causes these observed high frequencies. For jumping and running, the spectrogram gives accurate frequencies at 2 Hz and 3.4 Hz, respectively.

We select the characteristic frequency of the window, which is defined as:

where f max is the frequency corresponding to the largest value in the spectrogram and δ is the standard deviation of the spectrogram. The condition of being larger than 3δ guarantees that the frequency picked is unlikely to be caused by noise.

In practice, we choose N to be 300 frames (5 s) and let the window move 60 frames (1 s) each time. In this case, at each frame, there are 5 f ws. We choose the median of these f ws to be the final frequency at the frame.

We apply this procedure with the four movement signals x, y, r, and z and get four frequency signals f x, f y, f r and f z. In other words, in addition to four movement signals, each frame transition is also associated with four characteristic frequencies . We compute these frequencies for the QUAD video and show their values in Fig. 10.5. We can observe four periods in the frequencies which correlate with the action periods in the video.

Equalization of Variance

We always force the variance of each signal to be 1 by forcing x to be \(\bar {x}+\frac {x-\bar {x}}{\sigma (x)}\), where \(\bar {x}\) is the mean and σ(x) is the standard variation. In this way, each signal gives equal contribution to the combined feature vector . Different weights can be considered to be applied on different signals based on the importance of the signals.

After equalizing the variance of the 8 signals, we combine them into a final motion feature f motion. It is an N × 8 matrix, where N is the number of frames in the video. Each row represents the eight-dimensional feature vector of one frame and we denote the feature vector of the ith frame to be F i. In this way, we code the video frames by their feature matrix f motion:

Classification Method

Once we have built the features f motion of the video, we would like to infer a number of ego-motion categories from the data. In this section, we explore graph-based semi-supervised and unsupervised algorithms for video segmentation . We consider each transformation between two successive frames as a node in a weighted graph and classify them in different motion classes.

Recently, novel classification algorithms have been proposed [20] that are motivated by PDE-based image segmentation methods and are modified to apply to discrete data sets. These algorithms improve both accuracy of the solution and efficiency of the computation and can be potentially faster in parallel than various classification algorithms such as spectral clustering with K-means [17, 36]. The OpenMP parallelization and optimization of the algorithms are discussed in [19] with online demo and codes.

The classification algorithms consider each data point as a node in a weighted graph. The similarity (weight) between two nodes i and j is given by formula:

where F i and F j are feature vectors of nodes i and j according to (10.9), and τ is a parameter to be determined [7, 36]. We use the Euclidean distance here. To determine the value of τ, we try different values and run the experiments on the validation data to choose the τ with the best accuracy. We use τ = 40 in this paper. More about how to choose τ can be found in [4].

The classification problem is approached using ideas from graph-cuts [30]. Given a weighted undirected graph, the goal is to find the minimum cut (measured by a summation of the weights along the graph cut ) for this problem. This is equivalent to assigning a scalar or vector value u i to each ith data point and minimizing the graph total variation (TV) ∑ij|u i − u j|w ij [34]. Instead of directly solving a graph-TV minimization problem, the graph TV can be transformed to a graph-based Ginzburg-Laudau (GL) functional [4]:

where W(u) is a double well potential, for example \(W(u)=\frac {1}{4}(u^2-1)^2\) in a binary partitioning and multi-well potential in k dimensions (same as the number of classes). L s is the normalized symmetric graph Laplacian which is defined as \(L = I - D^{-\frac {1}{2}}WD^{-\frac {1}{2}}\), where D is a diagonal matrix with diagonal elements d i =∑j ∈ V w(i, j).

In the vanishing 𝜖 limit, a variant of the GL energy Gamma-converges to the graph TV functional [35]. Different fidelity terms are added to the GL functional for semi-supervised and unsupervised learning respectively. The GL energy for semi-supervised learning is:

The last term of Eq. (10.12) is the regular L 2 fit to known data with some constant μ, while λ(x) takes the value of 1 on fidelity nodes, and 0 otherwise. The variable \(\widehat {u}\) is the initial value for u with randomly chosen labels for non-fidelity data points and the “ground truth” for the fidelity points.

The GL energy for unsupervised learning is:

In (10.13), the term ||f − c r||2 denotes an N × 1 vector (||f(x 1) − c r||2, …, ||f(x N) − c r||2)T and the x i (i = 1, …N) are the N pixels of the data set. In addition, the term u ⋆,r indicates the rth column of u; the vector u ⋆,r is a N × 1 vector which contains the probabilities of every node belonging to class r. The term \(\widehat {n}\) is the number of classes and is to be provided to the algorithm in advance. This problem is essentially equivalent to the K-means method when μ approaches +∞.

The GL functional is minimized using gradient descent [16]. An alternative is to directly minimize the GL functional using the MBO scheme [9, 21], or a direct compressed sensing method [22]. We use the multiclass MBO scheme [9] in this paper in which one alternates between solving the heat (diffusion) equation for u and thresholding to maintain distinct class structure. Computation of the entire graph Laplacian is prohibitive for large data sets so we use the Nyström extension to randomly sample the graph and compute a modest number of leading eigenvalues and eigenfunctions of the graph Laplacian [8]. By projecting all vectors onto this sub-eigenspace, the iteration step reduces to a simple coefficient update.

Semi-supervised and Unsupervised Algorithms

We outline here the semi-supervised and the unsupervised algorithms. For the semi-supervised algorithm, the fidelity data (a small amount of “ground truth”) is known and the remaining data needs to be classified according to the categories of the fidelity. For the unsupervised algorithm, there is no prior knowledge of the data labels. We use the Nyström extension algorithm beforehand for both algorithms to calculate the eigenvalues and eigenvectors as the inputs. In practice, these two algorithms converge very fast and give accurate classification results.

Algorithm 10.2 Semi-supervised Graph MBO algorithm [21]

Algorithm 10.3 Unsupervised graph MBO algorithm [10]

The K-means algorithm [17] for finding K clusters proceeds iteratively by first choosing K centroids and then assigning each point to the cluster of the nearest centroid. The centroid of each cluster is then recalculated and the iterations continue until there is little change from one iteration to the next.

In both semi-supervised and unsupervised algorithms, we calculate the leading eigenvalues and eigenvectors of the graph Laplacian using the Nyström method [8] to accelerate the computation. This is achieved by calculating an eigendecomposition on a smaller system of size M << N and then expanding the results back up to N dimensions. The computational complexity is almost O(N). We can set M << N without any significant decrease in the accuracy of the solution.

Suppose \(Z=\{Z_k\}_{k=1}^N\) is the whole set of nodes on the graph. By randomly selecting a small subset X, we can partition Z as \(Z=X\bigcup Y\), where X and Y are two disjoint sets, \(X=\{Z_i\}_{i=1}^M\) and \(Y = \{Z_j\}_{j=1}^{N-M}\) and M << N. The weight matrix W can be written as

where W XX denotes the weights of nodes in set X, W XY denotes the weights between set X and set Y , \(W_{YX} = W_{XY}^T\) and W Y Y denotes the weights of nodes in set Y . It can be shown that the large matrix W Y Y can be approximated by \(W_{YY} \approx W_{YX}W_{XX}^{-1}W_{XY}\), and the error is determined by how many of the rows of W XY span the rows of W Y Y. We only need to compute W XX, \(W_{XY} = W_{YX}^T\), and it requires only (|X|⋅ (|X| + |Y |)) computations versus (|X| + |Y |)2 when the whole matrix is used. Recently this algorithm has been further developed to run on parallel architectures [19, 20].

Experimental Results

To evaluate the performance of our method we need both choreographed video sequences to run controlled experiments and real-world videos to observe performance of our method in naturalistic settings. It is easy to define the ground truth for the choreographed videos since the motions of the person who takes the video are both discrete, and well-defined. For example, looking left and right never coincides with running. However, real-world body-worn video usually contains a combination of different motions with noise and it is therefore harder to define a ground truth.

Choreographed Video

The first video we use is QUAD [14]. We show one frame of the QUAD video in Fig. 10.6. This video is 4 min and 10 s in length and has 60 frames per second for a total of 15,000 frames. It contains nine ego-motions (stand still, turn left, turn right, look up, look down, jump, step in place, walk and run). The Ego wore a head-mounted GoPro camera. The nine actions were performed in order and repeated four times. The ground truth is shown in the first row of Fig. 10.7. The horizontal axis represents time and colors represent different ego-motion categories. The order of the movements are standing still, turning left and turning right repeatedly, looking up and looking down repeatedly, jumping, stepping, walking, running, turning left and then start the same series of motions again for another three times. We compute the feature vector for each two successive frames as described in section “Motion Features”. Then we use K-means, the unsupervised graph MBO algorithm and the semi-supervised graph MBO algorithm for the ego-motion classification . We use 10% known labels (evenly sampled) in the semi-supervised graph MBO algorithm. The classification results of these three algorithms are shown in the 2nd, 3rd and 4th rows of Fig. 10.7. For the K-means and the unsupervised MBO algorithm, we ran the experiments several times and pick the best results here. Depending on the initialization, these two algorithms can converge to different local minima, which is common for most non-convex variational methods . The K-means algorithm gives relatively good results, except that it does not recognize the category of looking down and misclassifies some parts of running, jumping, small steps and walking. The unsupervised graph MBO algorithm gives results similar to K-means. The semi-supervised graph MBO algorithm with 10% known labels gives very accurate results. The accuracy summary of these three algorithms is shown in Table 10.2.

Ego-motion classification results of the QUAD video. The 9 colors represent 9 different ego-motion classes: standing still (dark blue), turning left (moderate blue), turning right (light blue), looking up (dark green) and looking down (light green), jumping (bud green), stepping (aztec gold), walking (orange), runing (yellow)

Real-World Body-Worn Video

We also investigated real-world body-worn videos. We use a data set from the Los Angeles Police Department. The videos are from police wearing chest-mounted cameras while patrolling areas of Los Angeles on foot. The videos record a wide array of police activities from basic patrol through foot chases and arrest. Our ego-motion classification results may be used in modeling the routine activities of police and their interactions with the public.

Police BWV is not collected under controlled circumstances. Ego-motions may evolve rapidly without clear or discrete transitions. Much body worn video is collected at night impacting light and color saturation. The videos also have distortion due to the use of a fish-eye lens. Since there has been very little formal analysis of police BWV, there is a lack of appreciation for the diversity of police behavior likely to be encountered (i.e., very limited semantic dictionaries). The ground-truth is labeled by us without input from the police.

We show here the video segmentation result of one clip of police video. The video is 8 min and 16 s in length, with 14991 frames in total. This particular video was chosen for its diversity of ego-activities thereby presenting a challenging problem for ego motion classification . In the video, police arrive at an apartment building, talk with some people in front of the building, go upstairs, wait outside a room, enter and search the room, leave the room, walk downstairs, and talk to several people outside the building. We define four ego-motion categories in this video—standing (or very slow motions not easy to define), walking, going upstairs, and going downstairs. The ground truth classification of this video is shown in the first row of Fig. 10.8. The dark blue segments represent the category of standing or slow movements when the officer talks with others in front of the building. It also contains actions when the officer enters the room. The video of this period is very shaky and not easily defined as one motion category. The light blue segment corresponds to the walking category. The green segment corresponds to the police going upstairs and the yellow part is going downstairs.

We consider the semi-supervised algorithm for the police body-worn video. We are not using the unsupervised graph MBO algorithm because the result is not consistent. The results are shown in Fig. 10.8. K-means is included as a baseline method since it captures the difference between going upstairs and downstairs. However, K-means frequently misclassifies walking and going downstairs. Some standing frames are classified as other motion categories. This later result is reasonable since standing in this video combines some other movements. The semi-supervised graph MBO algorithm includes 10% known labels on this piece of video. The segmentation results are shown in the third row of Fig. 10.8. The semi-supervised graph MBO method is much better than K-means, and the four categories are all captured almost correctly. The accuracy summary is shown in Table 10.3. The overall accuracy of the semi-supervised graph MBO algorithm with 10% known labels is 90.17%.

Conclusion

In this paper, we investigate the task of discovering ego-motion categories from first-person videos. We deal with this problem in two steps. The first step is comparing two successive frames using the inverse compositional algorithm to extract signals containing motion and motion frequency information. Then we use unsupervised and semi-supervised clustering algorithms for classification . The semi-supervised graph based methods are particularly accurate using only 10% training data. We show promising results on both choreographed and real-world video data.

The potential for future advances in this area are significant particularly in relation to police body-worn video. At full deployment of body-worn video in 2018, the Los Angeles Police Department is projected to collect 3.2 million individual videos totaling more than 200K h of total video feed per year. This represents both a vast resource and a significant analytical challenge. The amount of data suggests that the full array of ego-motions practiced by police might eventually be discovered and subject to classification , moving us towards a realistic picture of the diversity of police activities. There will clearly be no lack of training data with which to tackle this problem. The same surfeit of video data is proving to be true in other domains outside of policing. Recognition of the diversity of ego-motion in policing activity may also lead to novel extensions of the methods into dyadic- and n-person motion models. In the dyadic-motion case there is much to be learned. It is well known that relative motion of individuals with respect to one another encodes fundamental social information [3]. For example, an individual running away from ego may encode avoidance or fear, while an individual running directly towards ego may encode attraction and threat. More complex social interactions may be captured in n-person motion models.

The challenges to achieving such outcomes with real-world video are also significant. In the police body-worn video case, semi-supervised classification clearly outperforms the unsupervised approach. Yet even a small fraction of fidelity points (10% in the current method) is probably infeasible given the volumes of video arriving each day. Semi-supervised methods will therefore need to rely on as few fidelity points as possible. However another approach is video labeling where activities segmented in one video might be used as labels for semi-supervised segmentation in another video. This was demonstrated in [4, 5] for image labelling. It will also be necessary to consider how generalizable methods are across real-world video examples. Ideally, a handful of videos might be exhaustively labeled for ground-truth and these would then work across the growing set of videos. This is an empirical questions that we can start addressing now with the recognition that new methods may be needed to account for the variability of real-world video.

Finally, we also point out that body-worn video is but one sensor platform in what is increasingly a multi-sensor world. It is worth investigating whether there is an advantage to doing more with single sensors, or whether it is better to integrate the signals from many independent sensors. For example, we can imagine doing both ego-motion and scene topic classification from the same video sequence, or as an alternative use accelerometers to capture ego-motion and matching these data to scene classification from video. Importantly, the issues are not strictly technological. Police body-worn video is treated as evidence and therefore is subject to all of the evidence handling rules required by law. Each sensor implies a different packet of physical of evidence that must be maintained and handled appropriately. Future work will need to examine these sorts of tradeoffs in detail.

Notes

- 1.

The data set can be found at: http://www.cs.cmu.edu/$sim$kkitani/datasets/.

References

S. Baker, I. Matthews, Equivalence and efficiency of image alignment algorithms, in IEEE Computer Society Conference on Computer Vision and Pattern Recognition (2001)

S. Baker, I. Matthews, Lucas-Kanade 20 years on: a unifying framework. Int. J. Comput. Vis. 56, 221–255 (2004)

H.C. Barrett, P.M. Todd, G.F. Miller, P.W. Blythe, Accurate judgments of intention from motion cues alone: a cross-cultural study. Evol. Hum. Behav. 26(4), 313–331 (2005)

A.L. Bertozzi, A. Flenner, Diffuse interface models on graphs for classification of high dimensional data. Multiscale Model. Simul. 10(3), 1090–1118 (2012)

A.L. Bertozzi, A. Flenner, Diffuse interface models on graphs for classification of high dimensional data. SIAM Rev. 58(2), 293–328 (2016)

P. Bouthemy, M. Gelgon, F. Ganansia, A unified approach to shot change detection and camera motion characterization. IEEE Trans. Circuits Syst. Video Technol. 9(7), 1030–1044 (1999)

F. Chung, Spectral Graph Theory, vol. 92 (American Mathematical Society, Providence, 1997)

C. Fowlkes, S. Belongie, F. Chung, J. Malik, Spectral grouping using the Nyström method. IEEE Trans. Pattern Anal. Mach. Intell. 26(2), 214–225 (2004)

C. Garcia-Cardona, E. Merkurjev, A.L. Bertozzi, A. Flenner, A.G. Percus, Multiclass data segmentation using diffuse interface methods on graphs. IEEE Trans. Pattern Anal. Mach. Int. 36(8), 1600–1613 (2014)

H. Hu, J. Sunu, A.L. Bertozzi, Multi-class graph Mumford-Shah model for plume detection using the MBO scheme, in Energy Minimization Methods in Computer Vision and Pattern Recognition (Springer International Publishing, Berlin, 2015)

T. Huynh, M. Fritz, B. Schiele, Discovery of activity patterns using topic models, in Proceedings of the 10th International Conference on Ubiquitous Computing (2008), pp. 10–19

V. Kiani, H.R. Pourreza, Robust GME in encoded mpeg video, in Proceedings of the 9th International Conference on Advances in Mobile Computing and Multimedia (2011), pp. 147–154

J. Kim, H.S. Chang, J. Kim, H. Kim, Efficient camera motion characterization for MPEG video indexing. IEEE Int. Conf. Multimed. Expo 2, 1171–1174 (2000)

K.M. Kitani, T. Okabe, Y. Sato, A. Sugimoto, Fast unsupervised ego-action learning for first-person sports videos, in 2011 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2011), pp. 3241–3248

B.D. Lucas, T. Kanade, An iterative image registration technique with an application to stereo vision, in Proceedings of the 7th International Joint Conference on Artificial intelligence (IJCAI) (1981)

X. Luo, A.L. Bertozzi, Convergence analysis of the graph Allen-Cahn scheme. J. Stat. Phys. 167(3), 934–958 (2017)

J. MacQueen, Some methods for classification and analysis of multivariate observations, in Proceedings of the Fifth Berkeley Symposium on Mathematical Statistics and Probability (1967)

W.W. Mayol, D.W. Murray, Wearable hand activity recognition for event summarization, in International Symposium on Searable Computers (2005), pp. 122–129

Z. Meng, A. Koniges, H. Yun, S. Williams, T. Kurth, B. Cook, J. Deslippe, A.L. Bertozzi: OpenMP parallelization and optimization of graph-based machine learning algorithms, in OpenMP: Memory, Devices, and Tasks, ed. by N. Maruyama, B. de Supinski, M. Wahib. Lecture Notes in Computer Science, vol. 9903 (Springer, Berlin, 2016). IWOMP

Z. Meng, E. Merkurjev, A. Koniges, A.L. Bertozzi, Hyperspectral image classification using graph clustering methods. Image Process. Line 7, 218–245 (2017)

E. Merkurjev, T. Kostic, A.L. Bertozzi, An MBO scheme on graphs for classification and image processing. SIAM J. Imag. Sci. 6(4), 1903–1930 (2013)

E. Merkurjev, E. Bae, A.L. Bertozzi, X.C. Tai, Global binary optimization on graphs for classification of high-dimensional data. J. Math. Imag. Vision 52(3), 414–435 (2015)

H. Pirsiavash, D. Ramanan, Detecting activities of daily living in first-person camera views, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2012), pp. 2847–2854

X. Ren, C. Gu, Figure-ground segmentation improves handled object recognition in egocentric video. CVPR 2(3), 6 (2010)

J. Sánchez, The inverse compositional algorithm for parametric registration. Image Process. Line 6, 212–232 (2016)

J. Sánchez, J.-M. Morel, Motion smoothing strategies for 2D video stabilization. SIAM J. Imag. Sci. 11(1), 219–251 (2018)

E.H. Spriggs, F. de la Torre, M. Hebert, Temporal segmentation and activity classification from first-person sensing, in 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (2009), pp. 17–24

T. Starner, B. Schiele, A. Pentland, International Symposium on Wearable Computers (1998), pp. 50–57

T. Starner, J. Weaver, A. Pentland, Real-time american sign language recognition using desk and wearable computer based video. IEEE Trans. Pattern Anal. Mach. Intell. 20(12), 1371–1375 (1998)

M. Stoer, F. Wagner, A simple min-cut algorithm. J. ACM 44(4), 585–591 (1997)

L. Sun, U. Klank, M. Beetz, EYEWATCHME—3D hand and object tracking for inside out activity analysis, in 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (2009), pp. 9–16

S. Sundaram, W. Cuevas, High level activity recognition using low resolution wearable vision, in 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (2009), pp. 25–32

R. Szeliski, Computer Vision: Algorithms and Applications (Springer Science & Business Media, Berlin, 2010)

A. Szlam, X. Bresson, A total variation-based graph clustering algorithm for cheeger ratio cuts. UCLA CAM Report: 09-68 (2009)

Y. Van Gennip, A.L. Bertozzi, Gamma-convergence of graph Ginzburg-Landau functionals. Adv. Differ. Equ. 17(11/12), 1115–1180 (2012)

U. Von Luxburg, A tutorial on spectral clustering. Stat. Comput. 17(4), 395–416 (2007)

Acknowledgements

The work was supported by the ONR grant N00014-16-1-2119, NSF grant DMS-1737770, NSF grant DMS-1417674, FUI project Plein Phare by BPI-France and NIJ Grant 2014-R2-CX-0101.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Meng, Z., Sánchez, J., Morel, JM., Bertozzi, A.L., Brantingham, P.J. (2018). Ego-Motion Classification for Body-Worn Videos. In: Tai, XC., Bae, E., Lysaker, M. (eds) Imaging, Vision and Learning Based on Optimization and PDEs. IVLOPDE 2016. Mathematics and Visualization. Springer, Cham. https://doi.org/10.1007/978-3-319-91274-5_10

Download citation

DOI: https://doi.org/10.1007/978-3-319-91274-5_10

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-91273-8

Online ISBN: 978-3-319-91274-5

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)