Abstract

In Sect. 2.3.1, the measurement problem was formulated in the form of a trilemma. In this view, either (i) the wavefunction is not a complete description; or (ii) the time evolution is not a continuous unitary process; or (iii) measurements do not lead to well-defined results. The GRW theory described in Sect. 2.3.1 chooses alternative (ii); it adds a nonlinear term to the Schrödinger equation, which models a physical mechanism for the “actual” collapse of the wavefunction. The Copenhagen interpretation also denies a continuous time evolution which follows the Schrödinger equation; in contrast to the GRW theory, this process is however not given a realistic interpretation.

In this chapter, we treat the most prominent advocates of those strategies which either deny the completeness of the wavefunction (de Broglie–Bohm theory), or question the uniqueness of the measurement results (Everett’s or the many-worlds interpretation). In these theories, the state vector is thus subject to a continuous unitary time evolution. Their common feature is dispensing with the “collapse” of the wavefunction; only the appearance of this non-unitary change of state needs to be justified in these interpretations. Thus, the name no-collapse interpretations has become common as a generic label for these theories.

5.1 The de Broglie–Bohm Theory

Within the debates over the interpretation of the quantum theory—especially in view of the measurement problem—the question of whether or not quantum mechanics in its present form is simply incomplete is immediately raised. The statistical interpretation of quantum mechanics suggests that it must be based on an additional structure, whose elucidation would give the interpretation of the theory a completely new direction. Since this additional structure is unknown in the present version of quantum mechanics, this research programme was originally called “the search for ‘hidden’ variables”.

In 1952, David Bohm published his article “A Suggested Interpretation of the Quantum Theory in Terms of ‘Hidden’ Variables” (Bohm 1952). At the time, he was unaware that Louis de Broglie had introduced a mathematically equivalent formulation of this theory already in 1927 at the 5th Solvay Conference (de Broglie 1927). For this reason, we refer to this interpretation as the “de Broglie–Bohm theory” (DBB theory).Footnote 1 de Broglie himself referred to the interpretation as the “theory of pilot waves” (l’onde pilote). The conference proceedings of the 5th Solvay Conference have been accessible in English only since 2009 (Bacciagaluppi and Valentini 2009). Antony Valentini and Guido Bacciagaluppi not only undertook the translation, but also, in their knowledgeable commentary, they discuss the role of this conference for the interpretation of the quantum theory in general. According to their analysis, it is misleading to reduce the significance of the 5th Solvay Conference to the (unquestionably important) debates between Bohr and Einstein. Bacciagaluppi and Valentini argue in favour of a re-evaluation of the role of de Broglie within the early interpretation debates, and in that connection, they state:

Today, pilot-wave theory is often characterized as simply adding particle trajectories to the Schrödinger equation. An understanding of de Broglie’s thought from 1923 to 1927, and of the role it played in Schrödinger’s work, shows the gross inaccuracy of this characterization: after all, it was actually Schrödinger who removed the trajectories from de Broglie’s theory (Bacciagaluppi and Valentini 2009, p. 78).

A discussion of the priorities in the early development of wave mechanics cannot and should not be carried out here. We have cited this thought-provoking passage mainly because it expresses the basic idea of the de Broglie–Bohm theory in such a simple and clear-cut manner. This is a theory which alleges the incompleteness of the usual quantum mechanics and adds “particles” in the literal sense to the wavefunction. As we have already indicated above, the term “hidden variables” has been adopted for these additional determining quantities. This term is, to be sure, somewhat misleading, since even the harshest critics cannot deny that particles, and their locations in particular, are directly observable (and thus in this sense not at all hidden). Instead, it is simply the wavefunction which is not susceptible to direct observation.Footnote 2

For reasons which of course must be explained in more detail in the following, the de Broglie–Bohm theory succeeds in this way in describing the measurement process as a normal interaction which leads to a uniquely defined final state. At the same time, it is (in the technical sense) a deterministic theory—while in addition, it can also reproduce all the predictions of the quantum theory. However, this theory makes no new predictions which deviate from those of the quantum theory, so that experimentally, there is no way to decide between the two.Footnote 3

In Bohm’s formulation of 1952, we are dealing with an extension of non-relativistic quantum theory. We will take up the question of a relativistic generalization in Sect. 5.1.7. The following description of the theory makes use at various points of a comparison with the “standard interpretation” or the “usual textbook version” of quantum mechanics. These concepts are naturally not strictly defined, and the reader can think here of the Copenhagen interpretation or of a textbook description of quantum mechanics that does not deal with the problems treated in this book.

5.1.1 Mathematical Description

The de Broglie–Bohm theory is an extension of the standard quantum theory. Among the relations which define the theory mathematically, we thus find the usual Schrödinger equation:

\[ i\hbar \frac{\partial \psi }{\partial t} =-\left( \frac{\hbar ^2}{2m}\right) \nabla ^2 \psi + V(\mathbf{r})\psi \qquad (5.1) \]

Here, V refers to the potential which characterizes the corresponding system (see also Eq. (1.39) in Sect. 1.2.4; there, the Schrödinger equation was introduced for only one spatial dimension). We have chosen the position representation not by chance, since it is, as we shall see, in fact distinguished within the de Broglie–Bohm theory. In the standard interpretation, \(\psi \) is presumed to contain the complete description of the system, and from its absolute square \(|\psi |^2\), the probability of observing a particle by a measurement within a particular spatial region can be obtained. In the standard interpretation, however, one cannot speak of a particle’s trajectory or orbit, i.e. of the path that brought it to the position where it was observed.

In the de Broglie–Bohm theory, the concept of “particle” is taken so seriously that at each moment in time (i.e. even without measurements), it is associated with a well-defined position. A quantum-mechanical N-particle system is thus no longer described by the wavefunction alone, but rather by the pair consisting of the wavefunction and the position coordinates of the particles: \((\psi , Q(t))\). Here, \(Q(t)=(Q_1(t),\cdots ,Q_N(t) )\), where \(Q_i:t\rightarrow \mathbb {R}^3\) denotes the trajectory of the ith particle. \(Q(t)\in \mathbb {R}^{3N}\) is called the configuration of the system, and \(\mathbb {R}^{3N}\) is its so-called configuration space.Footnote 4

For the particle positions Q(t), one must specify an equation of motion, i.e. a (differential) equation which describes the temporal and spatial evolution of the particle positions under the influence of the given external conditions. This prescription must reproduce—on average—the statistical predictions of quantum theory. There have been various suggestions for the motivation of this equation of motion (cf. Passon 2010, pp. 32–36). In the following, we will make use of the analogy between quantum theory and hydrodynamics, which was pointed out as early as 1926 by Erwin Madelung (cf. Madelung 1926). Let us therefore briefly consider a liquid (or a gas) with a mass density of \(\rho _{m}\). Under the assumption that the mass is a conserved quantity, the mass density within a certain region in space can then change its magnitude only if fluid flows out of or into that region. In order to describe the flow of the fluid, we define the “current-density vector” or, for short, the “current density”, as the product of the mass density and the flow velocity of the fluid: \(j_m=\rho _m v\). The x component of \(j_m\) denotes the amount of fluid which flows per unit time through a unit surface element (perpendicular to the x-axis) and correspondingly for the y and z components. Then the conservation of mass is represented by the following mathematical expression:

\[ \frac{\partial \rho _m}{\partial t}+\nabla \cdot j_m=0 \qquad (5.2) \]

Here, the symbol “\(\nabla \cdot \)” denotes the divergence, i.e. the sum of the spatial rates of change of the components of \(j_m\) along the three coordinate directions. This equation of continuity from hydrodynamics expresses, as explained, the conservation of the fluid mass.

We now turn back to quantum theory, in which likewise an equation of continuity holds—but now for the “probability density” \(\rho =|\psi |^2\). This equation is formally identicalFootnote 5 to the hydrodynamic equation:

\[ \frac{\partial \rho }{\partial t}+\nabla \cdot j=0 \qquad (5.3) \]

At this point, naturally, the mathematical details should not be so much the subject of our considerations as the structural relations. The decisive point is that this equation can be derived from the Schrödinger equation, and for the probability current density, we find the following (somewhat complicated) expression:

\[ j=\frac{\hbar }{2mi}\left( \psi ^*\nabla \psi -\psi \nabla \psi ^*\right) \qquad (5.4) \]

In the usual textbook descriptions of quantum theory, the equation of continuity (5.3) is interpreted as an expression of the “conservation of probability”. Probability (like mass within hydrodynamics) can be neither “created” nor “destroyed”.

In the de Broglie–Bohm theory, one takes a step further, since the goal is finally to arrive at an equation of motion for the “Bohmian particles”. The expression \(\rho \) in quantum theory is interpreted as the probability density of the real particle configuration, and we recall that in hydrodynamics, the relation \(j=\rho v\) holds. If we put in the corresponding quantum-mechanical expressions for \(\rho \) and j (and use the “polar representation” \(\psi =Re^{\frac{i}{\hbar }S}\) for the wavefunction), we find, after a simple computation, the equation of motion for the particle positions Q(t) that we were seeking (for its velocity, we have of course \(v=\frac{dQ}{dt}\)):

\[ \frac{dQ}{dt}=\frac{\nabla S}{m} \qquad (5.5) \]

Equation (5.5) is called the guidance equation of the de Broglie–Bohm theory. Pictorially speaking, the particle trajectories are thus guided by the wavefunction (or rather by its phase S). Treating a physical problem with the help of the DBB theory thus means first of all solving the Schrödinger equation (as in the usual quantum mechanics). In Sect. 5.1.4, we will discuss concrete applications.Footnote 6
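
The “simple computation” that leads to the guidance equation can be spelled out as follows. This is only a sketch for the single-particle case, using the polar representation \(\psi =Re^{\frac{i}{\hbar }S}\) with real R and S and the standard form of the probability current written as an imaginary part; the intermediate steps are not taken from the text.

\[
\begin{aligned}
\nabla \psi &= \left( \nabla R+\frac{i}{\hbar }R\,\nabla S\right) e^{\frac{i}{\hbar }S},\\
\psi ^*\nabla \psi &= R\,\nabla R+\frac{i}{\hbar }R^2\,\nabla S,\\
j &= \frac{\hbar }{2mi}\left( \psi ^*\nabla \psi -\psi \nabla \psi ^*\right) =\frac{\hbar }{m}\,\mathfrak {I}\left( \psi ^*\nabla \psi \right) =\frac{R^2\,\nabla S}{m},\\
v &= \frac{j}{\rho }=\frac{R^2\,\nabla S}{m\,R^2}=\frac{\nabla S}{m}.
\end{aligned}
\]

The amplitude R drops out of the velocity, which is why only the phase S appears in the guidance equation.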

The validity of the equation of continuity (5.3) has still another important consequence for the de Broglie–Bohm theory. It follows from this equation namely that a configuration once distributed according to \(|\psi |^2\) retains this property under Bohmian dynamics. This observation is the key to the fact that the de Broglie–Bohm theory reproduces all the predictions of the usual quantum theory, since naturally a differential equation fixes the motion only through its boundary and initial conditions. If one now chooses the initial configuration \(Q(t_0)\) at random according to the probability distribution \(|\psi _{t_0}|^2\) for a system that is described by the wavefunction \(\psi \), then the configuration Q(t) will remain distributed according to \(|\psi _t|^2\) at each later moment in time, t. In other words, according to Born’s rule, all the predictions of the usual quantum theory will be reproduced.Footnote 7 This condition is called the “quantum equilibrium hypothesis”, and we will take a closer look at it in Sect. 5.1.2.
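
The preservation of the \(|\psi |^2\) distribution can be checked numerically in a simple case. The following minimal sketch assumes a free one-dimensional Gaussian wave packet at rest with initial width \(\sigma _0\), in units with \(\hbar =m=1\). The closed-form velocity field, the function names and all parameter values are illustrative assumptions and do not come from the text.

```python
import numpy as np

SIGMA0 = 1.0  # assumed initial width of the packet (units with hbar = m = 1)


def velocity(x, t):
    """Bohmian velocity Im(psi'/psi) for a free Gaussian packet at rest."""
    tau = t / (2.0 * SIGMA0**2)
    return x * tau / (2.0 * SIGMA0**2 * (1.0 + tau**2))


def evolve(x0, t_end, steps=2000):
    """Integrate dQ/dt = v(Q, t) with the classical fourth-order Runge-Kutta scheme."""
    x = np.array(x0, dtype=float)
    dt = t_end / steps
    for n in range(steps):
        t = n * dt
        k1 = velocity(x, t)
        k2 = velocity(x + 0.5 * dt * k1, t + 0.5 * dt)
        k3 = velocity(x + 0.5 * dt * k2, t + 0.5 * dt)
        k4 = velocity(x + dt * k3, t + dt)
        x = x + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return x


def width(t):
    """Standard deviation of |psi_t|^2 for the spreading packet."""
    return SIGMA0 * np.sqrt(1.0 + (t / (2.0 * SIGMA0**2)) ** 2)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    q0 = rng.normal(0.0, SIGMA0, size=20000)  # initial positions drawn from |psi_0|^2
    q = evolve(q0, t_end=4.0)
    print("sample std of Q(t):", q.std(), "width of |psi_t|^2:", width(4.0))
```

In this special case every trajectory is simply stretched by the same factor as the packet itself, so an ensemble that starts out Gaussian with width \(\sigma _0\) stays Gaussian with the width of \(|\psi _t|^2\).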

The three relations which define the de Broglie–Bohm theory mathematically are thus

1. The Schrödinger equation: \(i\hbar \frac{\partial \psi }{\partial t} =-\left( \frac{\hbar ^2}{2m}\right) \nabla ^2 \psi + V(\mathbf{r})\psi \)

2. The guidance equation: \(\frac{dQ}{dt}=\frac{\nabla S}{m}\)

3. The quantum equilibrium hypothesis: The position distribution \(\rho \) of states with the wavefunction \(\psi \) is given by the probability density \(\rho =|\psi |^2\).

The second and the third relations deserve a more careful consideration, since they signal the differences relative to conventional quantum theory.

5.1.2 The Quantum Equilibrium Hypothesis

According to the quantum equilibrium hypothesis, the positions of the particles of a state which is described by the wavefunction \(\psi \) are distributed in accord with the probability density \(|\psi |^2\). The occupation probability within a spatial region V is calculated by integration, \(\int _V|\psi |^2 dV'\).

If this initial condition is fulfilled at one time, it follows from the equation of continuity (5.3) that Born’s rule will remain valid at all later times. Furthermore, the quantum equilibrium hypothesis guarantees that the particle positions cannot be more precisely controlled. Bell writes on this topic:

Note that the only use of probability here is, as in classical statistical mechanics, to take account of uncertainty in initial conditions (Bell 1980, p. 156).

It thus follows that the Heisenberg uncertainty relations cannot be violated within the de Broglie–Bohm theory either! At the same time, one might be tempted to call the “determinism” of the de Broglie–Bohm theory “fictitious”. In its descriptive content, the de Broglie–Bohm theory does not differ from the standard interpretation of quantum mechanics, and it likewise can make only statistical predictions. The quote from Bell however indicates a conceptual difference. Within the de Broglie–Bohm theory, the statistical character of the predictions is attributable to our lack of knowledge and is thus epistemic in nature. Within the standard interpretation of quantum mechanics, the ignorance interpretation of the probability is not possible; it is thus an ontic probability.

Let us now turn to the question of how this equilibrium distribution can be justified. The first attempt dates back to Bohm (cf. Bohm 1953), who gave a dynamical explanation of the \(|\psi |^2\) distribution. His approach was developed further by Valentini (1991). In Valentini and Westman (2005), one finds for example numerical simulations of systems which, under the dynamics of the guidance equation, lead from a non-equilibrium distribution to the quantum equilibrium distribution. In the framework of this approach, it would seem reasonable to consider systems in “quantum non-equilibrium”—together with all possible deviations of the predictions between conventional quantum mechanics and the de Broglie–Bohm theory (cf. Valentini 2004). Another strategy—for which Bell seems to express support at various times—consists in simply postulating the quantum equilibrium hypothesis. This would give it the status of a fundamental law.

In contrast, Dürr et al. (1992) argue that neither postulating the quantum equilibrium condition nor its dynamic justification is reasonable or convincing. At its core, the question is how, within a deterministic theory, probability statements can occur at all. This problem is naturally much older than the de Broglie–Bohm theory, and it has dominated the discussion on the relation between (Newtonian) statistical mechanics and classical thermodynamics since the nineteenth century. In their justification of the quantum equilibrium distribution, Dürr et al. therefore hark back to a concept introduced by Ludwig Boltzmann (1844–1906), namely that of “being typical” for a physical event. “Being typical” has a terminological meaning here, namely the appropriateness for the “overwhelming majority” (as defined by measure theory) of initial configurations (Dürr 2001, pp. 49ff).

The application of this concept to de Broglie–Bohm theory is now carried out in two steps. First, the authors clarify the question of under which conditions subsystems can be associated with a wavefunction at all. This can naturally not be expected of arbitrary subsystems, owing to interactions with their environment. In principle, the de Broglie–Bohm theory thus holds for the wavefunction of the universe, \(\Psi \). The concept of the “wavefunction of the universe” sounds presumptuous. In fact, it does not mean that the de Broglie–Bohm theory claims universal validity. Rather, it is the wavefunction of a system in which probability statements can no longer be explained in terms of an “external influence”, i.e. by the existence of a still larger system in which the system considered is embedded. For the fundamental justification of probability statements, this standpoint thus must be adopted.

For the wavefunction of the universe, however, the assertion that its position coordinates are distributed according to \(\rho =|\Psi |^2\) appears problematic. After all, there is only one universe,Footnote 8 and a test of this probability statement by measurements of relative frequencies of occurrence is impossible. For the wavefunction of the universe, one cannot ascribe the meaning of a probability density to the expression \(|\Psi |^2\), at least not in an operational sense. Instead, Dürr et al. suggest that we see in it a measure of what a “typical” initial condition (in Boltzmann’s sense) for the universe would be like. They justify their choice with the “equivariance” of the distribution, i.e. with the fact already mentioned that a configuration which at one moment is distributed according to \(|\psi |^2\) will retain this property. The choice of any other (non-equivariant) distribution as the measure of “typical” initial configurations would have to distinguish, in an unnatural way, a particular moment in time, namely the moment at which precisely that distribution was present.

In addition, there is a class of subsystems which can be described by using “effective wavefunctions”. This means that the particle dynamics of these subsystems are almost completely determined by that effective wavefunction.Footnote 9

Finally, Dürr et al. can prove that subsystems with an effective wavefunction \(\psi \) within a “typical” universe fulfil the quantum equilibrium hypothesis. In this sense, the deterministic de Broglie–Bohm theory obtains the appearance of randomness, and the empirical distributions correspond to the quantum-mechanical predictions. If one accepts this “Boltzmann argument”, then the quantum equilibrium condition even becomes a theorem of the de Broglie–Bohm theory.Footnote 10

5.1.3 The Guidance Equation

Thus far, we have considered only the single-particle case. The general form of the guidance equation for an N-particle system is given byFootnote 11:

\[ \frac{dQ_i}{dt}=\frac{\hbar }{m_i}\,\mathfrak {I}\left( \frac{\nabla _i\psi }{\psi }\right) \qquad (5.6) \]

Here, \(m_i\) denotes the mass of the ith particle, \(\mathfrak {I}\) the imaginary part of the following expression and \(\nabla _i\) is the gradient with respect to the spatial coordinates of the ith particle. In case the wavefunction is a spinor, i.e. \(\psi : \mathbb {R}^{3N} \rightarrow \mathbb {C}^{2^N}\), the probability current is changed, so that one obtains the following guidance equation:

\[ \frac{dQ_i}{dt}=\frac{\hbar }{m_i}\,\mathfrak {I}\left( \frac{\psi ^*\nabla _i\psi }{\psi ^*\psi }\right) \qquad (5.7) \]

where \( \psi ^*\psi \) is the scalar product on \(\mathbb {C}^{2^N}\). The latter equation is mentioned here not only for completeness, but also because it will be used in the treatment of the measurement problem in Sect. 5.1.5.

The existence and uniqueness of the solutions of the guidance equation for all the relevant types of potentials have been demonstrated (see Teufel and Tumulka 2005). Two points should be emphasized: First, the order of the guidance equation (as well as the resulting general properties of its solutions); second, its so-called non-locality. The next two subsections are devoted to these two issues.

General Properties of the Trajectories

Since the guidance equation is a differential equation of first order, one initial condition \(Q(t_0)\) already determines the trajectories uniquely. In configuration space, the paths are thus not overlapping. It follows for the single-particle case, in which position space and configuration space are identical, that the trajectories within the DBB theory do not intersect each other. If they are in fact identical at one point, then they must be identical at all points. In many cases, this information alone allows us to visualize a qualitative picture of the trajectories.

Non-locality

The guidance equation determines the trajectory of the ith particle essentially by taking the derivative of the wavefunction (more precisely: by taking its gradient). The wavefunction is however defined on configuration space and is evaluated at the position Q(t). In other words, the change of position of each particle at the time t depends on the positions of all the other particles at the same moment in time. Since these influences do not propagate through space in the sense of a short-range interaction, one speaks of a non-local influence, or of the non-locality of the de Broglie–Bohm theory. However, it is precisely this non-locality which permits the de Broglie–Bohm theory to violate the Bell inequalities (in agreement with experiments; see Chap. 4). At the same time, the quantum equilibrium hypothesis guarantees that this non-locality cannot be used for the transmission of signals, since it is in the end a question of stochastic events. The evident problem of the relativistic generalization of this theory will be addressed in Sect. 5.1.7.
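
The dependence of one particle’s velocity on the position of another can be made concrete with a toy model. The following sketch assumes two particles in one dimension each, units with \(\hbar =m=1\), and compares an entangled wavefunction \(e^{iKx_1}A(x_2)+e^{-iKx_1}B(x_2)\) with a product wavefunction. The packets A and B, the constants K and D and the function names are hypothetical choices made for illustration only.

```python
import numpy as np

K = 2.0  # assumed wave number of particle 1 (units with hbar = m = 1)
D = 5.0  # assumed half-separation of the two packets of particle 2


def packet(x2, centre):
    """Real, unnormalized Gaussian packet for particle 2."""
    return np.exp(-0.5 * (x2 - centre) ** 2)


def v1_entangled(x1, x2):
    """Velocity of particle 1 for psi = e^{iKx1} A(x2) + e^{-iKx1} B(x2)."""
    a, b = packet(x2, +D), packet(x2, -D)
    psi = np.exp(1j * K * x1) * a + np.exp(-1j * K * x1) * b
    dpsi = 1j * K * (np.exp(1j * K * x1) * a - np.exp(-1j * K * x1) * b)
    return np.imag(dpsi / psi)  # guidance equation for particle 1


def v1_product(x1, x2):
    """Velocity of particle 1 for the product state e^{iKx1} (A(x2) + B(x2))."""
    amplitude = packet(x2, +D) + packet(x2, -D)
    psi = np.exp(1j * K * x1) * amplitude
    dpsi = 1j * K * psi
    return np.imag(dpsi / psi)


if __name__ == "__main__":
    for x2 in (+D, 0.0, -D):
        print(x2, v1_entangled(0.3, x2), v1_product(0.3, x2))
```

For the entangled state, the velocity of particle 1 at a fixed position \(x_1\) changes from about +K to about -K as particle 2 is moved from one packet to the other, whereas for the product state it is independent of \(x_2\).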

5.1.4 Applications of the de Broglie–Bohm Theory

We now turn our attention to the obvious question of which form the particle trajectories take, whose existence distinguishes the de Broglie–Bohm theory from the usual quantum theory. The guidance equation has in fact been solved numerically for various problems. For those who favour this theory, the existence of these trajectories is notably more important than their concrete characteristics or their numerical simulation. Dürr writes on the question of whether Bohmian trajectories should be calculated at all:

Roughly speaking, no! Sometimes, however, the asymptotic behaviour of the trajectory – essentially that of free particles – can be quite useful. [...] All that we learn from the trajectories is indeed only that at every time t, particles are present whose positions are distributed according to the quantum equilibrium hypothesis as \(|\psi (q,t)|^2\) (Dürr 2001, p. 142).

In the following, we nevertheless consider explicit Bohmian trajectories for the tunnel effect, for interference from gratings (and from the double slit), as well as for the hydrogen atom; thus for several examples of quantum phenomena which, in the usual understanding, cannot possibly be explained in terms of continuous trajectories.

The Tunnel Effect

A spectacular prediction of quantum theory is the “tunnel effect”. It consists in the fact that quantum-mechanically described particles can overcome a potential barrier, although the energy height of the barrier is greater than the energy of the particles. Radioactive \(\alpha \) decay, as well as nuclear fusion in the interior of the Sun, is understandable only in terms of the tunnel effect.Footnote 12 Pictorially speaking, the particles pass below the barrier—they thus “tunnel” beneath it.Footnote 13 In an orthodox manner of speaking, there is a finite probability that the particles will be detected on the other side of the barrier. Within the de Broglie–Bohm theory, a continuous particle trajectory must naturally lead from inside the potential barrier to a position outside the barrier.

Figure 5.1 shows the paths taken by some of these trajectories. The y-axis corresponds to the position coordinate (in arbitrary position units) and the x-axis to the time coordinate. A Gaussian wave packet \(\psi \) was assumed as initial condition, and it approaches the barrier from below in the figure. This potential barrier is located at \(0.72\le y\le 0.78\), and it is twice as high as the average energy of the wave packet.Footnote 14 Then the Schrödinger equation is solved numerically and input into the guidance equation. In this way, the course of the trajectories can be computed. One can first recognize how all the particles are braked within the barrier (the slopes of the trajectories in Fig. 5.1 correspond to the particles’ velocities). The tunnel effect occurs for those particles which reach the barrier first, while those arriving later are reflected earlier and earlier. If this were not the case, the particles’ trajectories could intersect. Thus, the property of being intersection-free determines the shape of the trajectories already qualitatively.

Fig. 5.1 A numerical simulation of some trajectories in the 1-dimensional tunnel effect (from Dewdney and Hiley 1982, reprinted with kind permission of the Springer Verlag). The x-axis corresponds to the time coordinate and the y-axis to the position coordinate

This description of the tunnel effect notably opens up the possibility of calculating the “tunnelling time”. The obvious question of the time required by a particle in order to overcome the tunnel barrier cannot even be reasonably asked of conventional quantum theory, since time is not an observable. Cushing (1995) discusses the possibility of an experimental test of the de Broglie–Bohm theory on this basis.
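
The procedure described for the tunnel effect (solve the Schrödinger equation numerically, then feed the result into the guidance equation) can be sketched in a few lines. The following is not the method of Dewdney and Hiley. It is a minimal illustration that assumes a split-step Fourier solver, simple Euler steps for the particle positions, units with \(\hbar =m=1\), and freely chosen values for the grid, the packet and the barrier.

```python
import numpy as np


def simulate_tunnelling(n_particles=21, t_end=4.0, dt=0.001):
    """Split-step solution of the Schroedinger equation plus Euler steps of the guidance equation.

    Units with hbar = m = 1; every numerical value below is an illustrative assumption.
    """
    n_grid, box = 2048, 80.0
    x = np.linspace(-box / 2, box / 2, n_grid, endpoint=False)
    dx = x[1] - x[0]
    k = 2.0 * np.pi * np.fft.fftfreq(n_grid, d=dx)  # angular wave numbers of the grid

    k0, sigma0, x_start = 5.0, 1.0, -10.0  # mean momentum, width and centre of the packet
    barrier = (x >= 0.0) & (x <= 0.2)
    potential = np.where(barrier, k0**2, 0.0)  # roughly twice the mean energy k0^2 / 2

    psi = np.exp(-((x - x_start) ** 2) / (4.0 * sigma0**2) + 1j * k0 * x)
    psi /= np.sqrt(np.sum(np.abs(psi) ** 2) * dx)

    half_potential = np.exp(-0.5j * potential * dt)  # potential half step
    kinetic = np.exp(-0.5j * k**2 * dt)  # full kinetic step in Fourier space

    # Initial positions spread evenly over the packet (not sampled at random).
    q = x_start + sigma0 * np.linspace(-2.0, 2.0, n_particles)

    for _ in range(int(round(t_end / dt))):
        # Velocity field v = Im(conj(psi) psi') / |psi|^2 on the grid.
        dpsi = np.fft.ifft(1j * k * np.fft.fft(psi))
        density = np.maximum(np.abs(psi) ** 2, 1e-30)
        velocity = np.imag(np.conj(psi) * dpsi) / density
        # Euler step of the guidance equation at the particle positions.
        q = q + dt * np.interp(q, x, velocity)
        # Split-step update of the wavefunction.
        psi = half_potential * np.fft.ifft(kinetic * np.fft.fft(half_potential * psi))
    return x, psi, q


if __name__ == "__main__":
    x, psi, q = simulate_tunnelling()
    print("final positions:", np.round(q, 2))
    print("particles beyond the barrier:", int(np.sum(q > 0.2)))
```

With these assumed parameters, the particles that start at the front of the packet should end up beyond the barrier while those at the rear are turned back, which is the qualitative behaviour described for Fig. 5.1. A quantitative reproduction of the figure would require the parameters of the original simulation.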

Two-Slit Interference

Diffraction and interference of an electron beam by a double slit and the pattern of the typical interference fringes (see Fig. 5.2, left) were successfully observed by Claus Jönsson in 1959 (see Möllenstedt and Jönsson 1959). Particularly impressive are the experiments in which the particle beam has such a low intensity that the formation of the interference pattern can be observed over a longer period of time. Then, point-like detected particles on the detection screen are seen to gradually build up the interference pattern.

This experiment would appear to illustrate with unusual clarity that the concept of particle trajectories is not applicable in quantum theory. If—thus runs the usual argument—the particle trajectories pass through the upper or the lower slit, it should be irrelevant whether at that moment the other slit were opened or closed. The result should be that the distribution, after passing through a double slit, should correspond to the sum of those from each of two single slits.

However, the observed pattern is evidently quite different. Popularizations occasionally claim that the particle has passed “through both slits”. This paradoxical formulation is apparently intended to suggest that particle trajectories in the classical sense can no longer be considered to exist.

Within the de Broglie–Bohm theory, this problem is resolved in a simple manner. The particle trajectories naturally each pass through only one of the openings of the double slit (or of the grating). The trajectories are however led by the wavefunction, according to the guidance equation. The wavefunction encodes the information on the slit geometry and steers the trajectories correspondingly towards the interference maxima. Here, it becomes clear how within the de Broglie–Bohm theory, the wavefunction no longer represents a “probability wave”, but instead a real physical effect.Footnote 15 Every reference to wave–particle dualism thus becomes superfluous.

If the initial values of the particle are distributed according to the quantum equilibrium hypothesis, the DBB theory exactly reproduces the occurrence frequency distribution of quantum theory. A numerical simulation of some of the corresponding trajectories can be seen on the right in Fig. 5.2. Again, it can be clearly discerned that the trajectories do not intersect. At the same time, they exhibit a completely “non-classical” behaviour, in that they show abrupt changes of direction (in—classically—“field-free” regions). Here, one can already see that momentum or energy conservation do not hold on the level of individual particles, since they obey Bohmian mechanics and not Newtonian mechanics. In Sect. 5.1.5 (see also Footnote 19), this aspect is explained in more detail.
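
The non-crossing of the trajectories behind a double slit can be illustrated with a strongly simplified model. The sketch below is not the simulation shown in Fig. 5.2. It assumes that only the transverse coordinate matters, that the wave behind each slit is a freely spreading Gaussian packet, and that \(\hbar =m=1\); the widths, distances and function names are hypothetical.

```python
import numpy as np

SIGMA0 = 1.0  # assumed initial width of each packet (units with hbar = m = 1)
D = 5.0       # assumed half-distance between the two slits


def velocity(x, t):
    """Im(psi'/psi) for the sum of two freely spreading Gaussian packets."""
    s = 4.0 * SIGMA0**2 * (1.0 + 1j * t / (2.0 * SIGMA0**2))
    g_up = np.exp(-((x - D) ** 2) / s)    # packet from the slit at +D
    g_down = np.exp(-((x + D) ** 2) / s)  # packet from the slit at -D
    dpsi = -2.0 * (x - D) / s * g_up - 2.0 * (x + D) / s * g_down
    return np.imag(dpsi / (g_up + g_down))


def trajectories(x0, t_end=20.0, steps=4000):
    """Integrate the guidance equation with a fourth-order Runge-Kutta scheme."""
    x = np.array(x0, dtype=float)
    dt = t_end / steps
    for n in range(steps):
        t = n * dt
        k1 = velocity(x, t)
        k2 = velocity(x + 0.5 * dt * k1, t + 0.5 * dt)
        k3 = velocity(x + 0.5 * dt * k2, t + 0.5 * dt)
        k4 = velocity(x + dt * k3, t + dt)
        x = x + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return x


if __name__ == "__main__":
    offsets = np.linspace(-2.0, 2.0, 9)
    start = np.concatenate([-D + offsets, D + offsets])  # sorted start positions
    print(np.round(trajectories(start), 2))
```

In this model the trajectories keep their initial order, and those that start at the upper slit never cross the symmetry axis, in line with the general property that trajectories in configuration space cannot intersect.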

The Hydrogen Atom

The discrete and characteristic spectra of excited atoms provided important impulses to the early development of the quantum theory. The successful description of the discrete energy levels of the hydrogen atom belongs among its early triumphs.

The solution of the Schrödinger equation for this problem (i.e. for the potential \(V=-\frac{e^2}{r}\)) is mathematically rather involved and will not be repeated here. The decisive point is that one is led to eigenstates of the energy for which the wavefunction is a product of a real function and the expression \(e^{i(m\phi -Et/\hbar )}\). In the ground state, m (the “magnetic” or “directional” quantum number) is zero, so that the phase is given by \(S=-Et\). Inserting this expression into the guidance equation (5.5), we obtain for the velocity field of course everywhere zero; we have computed the spatial derivative of an expression which has no spatial dependence. In other words, the particle in the ground state is at rest, at positions which are distributed according to the quantum equilibrium condition for the associated wavefunction. One might call this result counter-intuitive—but it must be admitted that no one has an “intuition” of the processes within an atom.Footnote 16
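
The vanishing velocity in the ground state can be verified directly from the guidance equation. The following sketch assumes atomic units (\(\hbar =m=e=1\)), unnormalized wavefunctions and a central finite-difference gradient. The second state, a real function multiplied by \(e^{i\phi }\), is added only as a contrast and is an illustrative choice.

```python
import numpy as np


def bohm_velocity(psi, r, h=1e-5):
    """Velocity Im(grad psi / psi) at the point r, using central differences."""
    r = np.asarray(r, dtype=float)
    grad = np.array([(psi(r + h * e) - psi(r - h * e)) / (2.0 * h) for e in np.eye(3)])
    return np.imag(grad / psi(r))


def ground_state(r, t=1.3, energy=-0.5):
    """Real function of |r| times the purely time-dependent phase exp(-i E t)."""
    return np.exp(-np.linalg.norm(r)) * np.exp(-1j * energy * t)


def state_with_m1(r, t=1.3, energy=-0.125):
    """Real function times exp(i phi), written as (x + i y) exp(-|r| / 2)."""
    x, y, _ = r
    return (x + 1j * y) * np.exp(-np.linalg.norm(r) / 2.0) * np.exp(-1j * energy * t)


if __name__ == "__main__":
    print(bohm_velocity(ground_state, [0.3, -0.2, 0.5]))
    print(bohm_velocity(state_with_m1, [1.0, 0.0, 0.5]))
```

For the ground state the computed velocity vanishes up to rounding errors at every point, since the phase has no spatial dependence. For the state with the factor \(e^{i\phi }\), the particle circulates around the z-axis.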

5.1.5 The Solution of the Measurement Problem

At its core, the measurement problem consists in the fact that following a measurement, the measurement apparatus indeed shows a result. After the measurement, the apparatus should (considered quantum-theoretically) thus be in an eigenstate of the corresponding operator.

In general, the microscopic state (on which the measurement is carried out) is described as a superposition of various components, which each correspond to a different “pointer position” of the measurement apparatus. Under the dynamics of the linear Schrödinger equation, the measurement apparatus should also take on a superposition state and not an eigenstate. In reality, however, a superposition of macroscopic states is neither readily imaginable, nor has one ever been observed.Footnote 17

The solution of the measurement problem by the de Broglie–Bohm theory can be illustrated in a completely non-technical and nevertheless appropriate way. It is based on the idea that it is only the pair consisting of the wavefunction and the configuration which makes up the complete description of a system and not just the wavefunction alone. Due to the definite particle positions, every system is in a well-defined state at every time. This therefore holds also for the measurement apparatus after a measurement has taken place. The different pointer positions of the measurement apparatus are in the end none other than different configurations, Q(t). In other words, in the de Broglie–Bohm theory, the “wavefunction of the measurement apparatus” will in general be in a superposition state. The configuration however indicates the result of the measurement which is actually realized. That part of the wavefunction which “guides” the particle(s) can be reasonably termed the effective wavefunction. All the remaining parts can be ignored, since they are irrelevant for the particle dynamics. As a result of decoherence effects (see Sect. 5.2.4), the probability that they will produce interference effects with the effective wavefunction is vanishingly small. In this sense, the de Broglie–Bohm theory describes an “effective collapse” (see also Footnote 9). In the words of Dürr:

This ‘collapse’ is not a physical process, but rather an act of convenience. It takes place only through the choice of description [...] because the price of forgetting about the other, non-effective parts of the wavefunction is extremely low, since future interferences are practically excluded (Dürr 2001, p. 160).
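The role of the effective wavefunction can be illustrated numerically. The following is a minimal sketch and is not taken from the text: it assumes a single particle in one dimension, units with \(\hbar = m = 1\), and two hypothetical Gaussian branches `branch_1` and `branch_2` whose centres and wave numbers are chosen arbitrarily. It evaluates the velocity field \(v = \frac{\hbar}{m}\,\mathrm{Im}(\psi'/\psi)\), which is equivalent to \(\nabla S/m\), once for the full superposition and once for the occupied branch alone.

```python
import cmath

HBAR = 1.0  # assumed units
MASS = 1.0  # assumed units


def gaussian_branch(centre, wave_number):
    """Return a (non-normalised) Gaussian packet centred at `centre`."""
    def psi(x):
        return cmath.exp(-((x - centre) ** 2) / 2.0 + 1j * wave_number * x)
    return psi


# Two hypothetical, well-separated branches of the wavefunction.
branch_1 = gaussian_branch(centre=-5.0, wave_number=1.0)
branch_2 = gaussian_branch(centre=+5.0, wave_number=-2.0)


def superposition(x):
    """Full wavefunction: the sum of both branches."""
    return branch_1(x) + branch_2(x)


def guidance_velocity(psi, x, h=1e-4):
    """v = (hbar/m) * Im(psi'/psi), with psi' from a central difference."""
    derivative = (psi(x + h) - psi(x - h)) / (2.0 * h)
    return (HBAR / MASS) * (derivative / psi(x)).imag


q = -5.2  # hypothetical particle position inside the support of branch_1
v_full = guidance_velocity(superposition, q)
v_effective = guidance_velocity(branch_1, q)
print(v_full, v_effective)
```

Because the two branches barely overlap, both velocities agree to within numerical precision and lie close to the wave number of `branch_1`. Dropping `branch_2` therefore changes nothing for the particle located at `q`, which is the sense in which the empty branch can be ignored.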

This solution of the measurement problem makes an additional tacit assumption: All the results of measurements must be uniquely characterizable in terms of position coordinates. Think for example of the “pointer positions”, or of the positions of inked pixels on a sheet of paper.Footnote 18 This however does not mean that only the measurement of particle positions can be described by the de Broglie–Bohm theory. Naturally, this solution of the measurement problem applies also to spin, momentum or the measurement of any other “observable”. Their status is however drastically re-interpreted in this theory, as is described by the keyword “contextuality”.

Contextuality

Already in Sect. 1.1.1, we dealt with the Stern–Gerlach experiment for the measurement of the spin component of an electron. A beam of silver atoms is passed through an inhomogeneous magnetic field, so that the spins of their outer electrons lead to a splitting of the beam.

Here, also, we are dealing with a measurement whose definite result is described by the de Broglie–Bohm theory. The discussion is made more complicated by the fact that the Schrödinger equation cannot describe particles with spin. Instead, one has to resort to the so-called Pauli equation. This modification of the Schrödinger equation describes spin-\(\frac{1}{2}\) particles using a 2-component wavefunction. A guidance equation for the particles is found analogously to the case of the Schrödinger equation (this procedure was already described in Sect. 5.1.3, Eq. 5.7). This however yields no conceptual differences relative to the above discussion. Figure 5.3 gives a naive representation of how the results of the measurements are determined in the de Broglie–Bohm theory. If the particle coordinate is above the plane of symmetry (like the small black dot under the magnifying glass in the figure), a deflection into the upper branch of the wavefunction occurs (“spin up”), and vice versa. It is thus the particle’s location which determines the result of a spin measurement! The property “spin” is not attributed to the particle itself, but instead, it is a property of the wavefunction.Footnote 19

We could argue in a similar way about the measurement of energy, momentum or other observables. For all of these quantities, the de Broglie–Bohm theory thus introduces no additional “hidden variables” which would describe their actual values. Instead, their values are determined by the wavefunction, the particle position and the particular implementation of the measurement. Taking the example of the Stern–Gerlach experiment, we can illustrate the influence of the particular measurement setup in an intuitively clear manner: If the orientation of the magnetic field in Fig. 5.3 were reversed, we would measure the opposite spin for the same particles! The de Broglie–Bohm theory thereby composes a picture in which only position measurements yield a value that was already present within the system before the measurement and is thus a property of the particle in the narrow sense. All other measurements owe their outcomes to the “context” of the implementation of the measurement. The terms “measurement” and “observable” are rather misleading here. This property of the de Broglie–Bohm theory is called “contextuality”. Indeed, this concept has a somewhat more extended meaning in the discussions and includes those mutual influences which occur in combined measurements of different quantities.
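The dependence of the spin outcome on the experimental context can be captured in a deliberately simple toy model. The sketch below is an illustration only and does not solve the Pauli equation: it assumes a wave packet that is symmetric about the plane \(z = 0\) with equal weights for both spin components, so that a particle starting above the plane ends up in the upper branch. The function name `stern_gerlach_outcome` and the parameter `field_direction` are hypothetical.

```python
def stern_gerlach_outcome(z_position, field_direction=+1):
    """Toy rule for a Stern-Gerlach run in the de Broglie-Bohm picture.

    z_position      : initial particle coordinate relative to the symmetry plane z = 0
    field_direction : +1 for the original field orientation, -1 for the reversed one
    """
    if z_position == 0:
        raise ValueError("the toy model is undefined exactly on the symmetry plane")
    if field_direction not in (+1, -1):
        raise ValueError("field_direction must be +1 or -1")

    # The particle position alone decides which branch is entered.
    in_upper_branch = z_position > 0

    # The field orientation decides which spin label that branch carries.
    if field_direction == +1:
        return "up" if in_upper_branch else "down"
    return "down" if in_upper_branch else "up"


same_particle = 0.3  # hypothetical initial position above the symmetry plane
print(stern_gerlach_outcome(same_particle, field_direction=+1))
print(stern_gerlach_outcome(same_particle, field_direction=-1))
```

The same initial position yields opposite spin labels for the two field orientations, so the label cannot be a value that the particle carried before the measurement.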

The relations treated here can be formulated rather concisely by making use of the terminology which has been developed in philosophy for the description of various types of properties. The spin, or all other properties aside from the position, are not categorial properties within the de Broglie–Bohm theory, but rather they are dispositions.Footnote 20 The contextuality of dispositions is however not remarkable; it is simply a part of their definition (cf. Pagonis and Clifton 1995).

Proofs of the Impossibility of Hidden Variables

This contextuality of the de Broglie–Bohm theory explains why the numerous “no-go” theorems or “proofs of impossibility” relating to theories with hidden variables do not apply to it. The best known of these theorems is due to von Neumann. A generalization was formulated by Kochen and Specker; see Mermin (1990) and the references there. These theorems are based on the intuition that hidden variables fully encode the (only apparently) statistical outcome of the measurements. The proofs demonstrate the impossibility of a mapping which ascribes to every state a unique value in regard to every possible measurement—indeed, without taking the context into account. The de Broglie–Bohm theory does not claim even the existence of actual values in regard to every physical quantity which can be measured; for only the position is a categorial property of this theory. Think again of the example given above of the measurement of the spin component: The particle is not associated with any fixed orientation of its spin, independently of a concrete “measurement”; when the direction of the magnetic field is changed, the spin can even take on the reversed value. According to Daumer et al. (1996), the understanding of measurements which is based for example on such no-go theorems reveals a “naive realism” in relation to the role of operators. These authors understand this as the usual identification between operators and observables, bound up with the widespread manner of speaking that “operators can be measured”. This expression is however highly misleading, since the influence of the experimental context on a measurement as described above is not taken into account.

5.1.6 The Schools of the de Broglie–Bohm Theory

The Compendium of Quantum Physics (Greenberger et al. 2009) contains two entries on the subject of this chapter. One of them is entitled the “Bohm Interpretation of Quantum Mechanics”, while the other is called simply “Bohmian Mechanics”. One is left with the suspicion that “Bohmian mechanics” is not identical to “Bohm’s interpretation of quantum theory”. This impression is correct and deserves a closer look, if only to help the reader find their way through the relevant literature.

The article on “Bohmian Mechanics” was written by Detlef Dürr, Sheldon Goldstein, Roderich Tumulka and Nino Zanghì. This group supports a version of the theory which was formulated by John Bell, beginning in the 1960s. Our own treatment is closely related to this version. At its centre stand the guidance equation and the re-interpretation of the concept of observables (keyword: “contextuality”).

The author of the article “Bohm Interpretation of Quantum Mechanics” is Basil Hiley. He was a colleague and close coworker of David Bohm at Birkbeck College; and together with Chris Dewdney, Chris Philippidis and others, this “English group” strongly supports Bohm’s formulation of the theory from 1952. Bohm and his coworkers referred (or refer) to this theory notably as an “ontological” or a “causal” interpretation of the quantum theory. In this variant of the theory, the concept of the “quantum potential” plays a central role. Let us consider the derivation of the guidance equation in this respect, as it was given by David Bohm in 1952. He chose a path for the derivation which invokes an analogy to the Hamilton–Jacobi equation of classical mechanics. In the classical case, the Hamilton–Jacobi theory contains the relation \(p=\nabla S\) (with the momentum \(p=m v\) and the action S). Bohm could show in his work that a similar relation holds in quantum theory, if the action is replaced by the phase S of the wavefunction. This then led him to the well-known guidance equation, \(v=\frac{dQ}{dt}=\frac{\nabla S}{m}\). Indeed, this theory can then be represented in such a way that it appears to be a modification of Newtonian mechanics:

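\[ m\,\frac{d^2 Q}{dt^2} = -\nabla \left( V + U_{\mathrm {quant}} \right) , \]

with the classical potential V and the additional quantum potential

\[ U_{\mathrm {quant}} = -\frac{\hbar ^2}{2m}\,\frac{\nabla ^2 |\psi |}{|\psi |}. \]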

Note, however, that in contrast to Newtonian physics, the velocity is already fixed via the guidance equation. The representation in terms of a second-order differential equation is thus misleading, since it suggests that position and momentum may be chosen independently.
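The step from the first-order guidance equation to the second-order form can be sketched as follows. This is the standard textbook argument for a single particle, given here as an illustration rather than as a reproduction of Bohm's original presentation. Writing the wavefunction in polar form, \(\psi = R\,e^{iS/\hbar }\) with real functions R and S, the real part of the Schrödinger equation becomes a Hamilton–Jacobi equation with one additional term:

\[ \frac{\partial S}{\partial t} + \frac{(\nabla S)^2}{2m} + V + U_{\mathrm {quant}} = 0, \qquad U_{\mathrm {quant}} = -\frac{\hbar ^2}{2m}\,\frac{\nabla ^2 R}{R}. \]

Differentiating the guidance equation \(v = \nabla S/m\) along the trajectory, with \(\frac{d}{dt} = \frac{\partial }{\partial t} + v\cdot \nabla \), and using \((\nabla S\cdot \nabla )\nabla S = \frac{1}{2}\nabla (\nabla S)^2\), which holds because \(\nabla S\) is a gradient field, one finds

\[ m\,\frac{dv}{dt} = \nabla \left( \frac{\partial S}{\partial t} + \frac{(\nabla S)^2}{2m} \right) = -\nabla \left( V + U_{\mathrm {quant}} \right) . \]

The acceleration law is thus a consequence of the guidance equation together with the Schrödinger equation and not an independent postulate, which is why the initial velocity cannot be chosen freely.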

In fact, the quantum potential has completely non-classical properties, which allow the adherents of this “causal viewpoint” to justify the uniqueness of quantum phenomena. They find for example that wavefunctions which differ only through a constant complex factor lead to the same quantum potential, since in \(U_{\mathrm {quant}}\), the wavefunction enters both into the denominator and the numerator. The strength of the quantum potential therefore does not depend on the overall amplitude of the wavefunction, but only on its form. Here, Bohm and Hiley (1993, p. 31) introduce the concept of “active information”, and they find in it the justification for a new kind of “holism” (see also Hiley 1999).
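The insensitivity of the quantum potential to a constant factor can be checked numerically. The following is a minimal sketch under stated assumptions: one spatial dimension, units with \(\hbar = m = 1\), the standard form \(U_{\mathrm {quant}} = -\frac{\hbar ^2}{2m} R''/R\) with \(R = |\psi |\), and a hypothetical Gaussian test function `psi`. The helper names are chosen for illustration only.

```python
import cmath

HBAR = 1.0  # assumed units
MASS = 1.0  # assumed units


def psi(x):
    """Hypothetical test wavefunction: Gaussian envelope with a plane-wave phase."""
    return cmath.exp(-x * x / 2.0) * cmath.exp(1j * 0.7 * x)


def scaled(wavefunction, factor):
    """Return the wavefunction multiplied by a constant complex factor."""
    return lambda x: factor * wavefunction(x)


def quantum_potential(wavefunction, x, h=1e-3):
    """U_quant = -(hbar^2 / 2m) * R'' / R with R = |psi|, via central differences."""
    r_minus = abs(wavefunction(x - h))
    r_zero = abs(wavefunction(x))
    r_plus = abs(wavefunction(x + h))
    second_derivative = (r_plus - 2.0 * r_zero + r_minus) / (h * h)
    return -(HBAR ** 2) / (2.0 * MASS) * second_derivative / r_zero


x0 = 0.5
u_original = quantum_potential(psi, x0)
u_rescaled = quantum_potential(scaled(psi, 3.0 - 4.0j), x0)
print(u_original, u_rescaled)
```

For this Gaussian the exact value is \((1 - x^2)/2\), and both calls reproduce it up to discretization error, although the second wavefunction is five times larger in magnitude and carries a different global phase.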

Although these two readings of the de Broglie–Bohm theory are mathematically equivalent, and the real contradiction between the usual quantum theory and these variants of the DBB theory holds for both of them, the rivalry of these schools is considerable. Hiley writes:

It should be noted that the views expressed in our book (Bohm and Hiley 1993) differ very substantially from those of Dürr et al. (1992), who have developed an alternative theory. It was very unfortunate that they chose the term ‘Bohmian mechanics’ to describe their work. When Bohm first saw the term he remarked, ‘Why do they call it ‘Bohmian mechanics’? Have they not understood a thing that I have written’? He was referring [...] to a footnote in his book Quantum Theory, in which he writes, ‘This means that the term ‘quantum mechanics’ is a misnomer. It should, perhaps, be better called quantum nonmechanics.’ It would have been far better if Dürr et al. had chosen the term ‘Bell mechanics’. That would have reflected the actual situation far more accurately. (Hiley 1999, p. 119)

The acrimony in this dispute is essentially due to the fact that the “ontological interpretation” associates far-reaching natural-philosophical speculations with its concept of the quantum potential, while supporters of “Bohmian mechanics” see the strength of the theory in its being able to eliminate philosophical speculations from the formulation of the theory. Characteristically, the title of an article by Dürr and Lazarovici (2012) is “Quantum physics without quantum philosophy”.

5.1.7 Criticism of the de Broglie–Bohm Theory

John Bell, who, beginning in the 1960s, contributed to the popularization of the de Broglie–Bohm theory with a number of articles, writes concerning the topic of this section:

It is easy to find good reasons for disliking the de Broglie–Bohm picture. Neither de Broglie nor Bohm liked it very much; for both of them, it was only a point of departure. Einstein also did not like it very much. He found it ‘too cheap’, although, as Born remarked, ‘it was quite in line with his own ideas’. But like it or lump it, it is perfectly conclusive as a counter example to the idea that vagueness, subjectivity, or indeterminism are forced on us by the experimental facts covered by nonrelativistic quantum mechanics (Bell 2001, p. 152).

According to Bell, all the counter arguments cannot reduce the importance of the theory in principle. Nevertheless, in the following we will take a closer look at some of those arguments. Heisenberg expresses the opinion that the identical descriptive content of the theory (relative to standard quantum mechanics) disqualifies it as an independent theory. He writes (Heisenberg 1959, p. 106):

From a strictly positivistic point of view, one could even say that we are dealing here not with an alternative suggestion to the Copenhagen interpretation, but instead with an exact repetition of it, only with different terminology.

In the face of the conceptual differences between the de Broglie–Bohm theory and the usual quantum theory, this statement would seem to be overly strong. Furthermore, Heisenberg naturally presumes here that the Copenhagen interpretation offers a convincing solution to the measurement problem. Closely related are references to “Ockham’s razor”. According to the prevailing opinion, when two theories are equivalent, the one which requires fewer premises should be preferred. Does Ockham’s razor thus “cut off” the guidance equation as superfluous ballast from a theory which offers no additional predictions? This demand fails to notice that the additional equation in the de Broglie–Bohm theory renders unnecessary all of the postulates concerning the outcome of a measurement and the interpretation of the wavefunction.

While the previous arguments take the considerable similarity of the DBB theory to the quantum theory as an object of criticism, others see the reason to repudiate the DBB theory in its radical dissimilarity. They find fault with the explicit distinction of the position, and the lack of symmetry between, e.g., the position and the momentum spaces (see the objection of Pauli in Myrvold 2003). In the DBB theory, with its first-order equation of motion, momentum and energy at the level of individual particles are however no longer conserved quantities. The justification of the demand for symmetry between position and momentum can thus be reasonably questioned.

Still other critics are bothered by the (double) role of the wavefunction: It fixes the particle dynamics and is at the same time (i.e. its absolute square) a measure for the equilibrium distribution. In addition, it acts upon the particle’s motion without any back-reaction effects. Another point of criticism refers to the fact that according to the de Broglie–Bohm theory, the world is populated by innumerable “empty” wavefunctions. This is indeed somewhat inelegant.

The role or the status of the wavefunction is also the object of a discussion among those scientists who work with the DBB theory. Originally, the wavefunction was taken to represent a real, physical field. Dürr et al. (1996) suggest, in contrast, that it should play a “nomological” role (i.e. with a law-like character). The wavefunction would then more closely correspond to, e.g., the Hamilton function in classical mechanics than to the usual type of physical field. This could reduce the weight of the criticisms of the lack of reaction effects and of the “empty” wavefunctions. While the interested reader can find a more detailed discussion of the criticisms of the DBB theory in Passon (2010, pp. 117ff), we will now turn to the principal objection against it: The question of the possibility of a relativistic generalization of the theory.

The particle dynamics of the de Broglie–Bohm theory connects positions at arbitrary distances. This non-locality would appear to violate Einstein’s postulate of the speed of light as an upper limiting velocity. However, the DBB theory discussed so far is an extension of non-relativistic quantum mechanics. The allusion to the fact that it is not compatible with the requirements of the special theory of relativity is thus not really a criticism, but rather simply a statement of fact. This rejoinder is however too superficial, since it is indeed just the non-local dynamics which allows the de Broglie–Bohm theory to explain the violation of the Bell inequalities (cf. Chap. 4, and there in particular Sect. 4.4).

As a rule, the criticism of the non-locality of the DBB theory is primarily associated with doubts as to whether or not the theory can be relativistically generalized. At the same time, there is an orthodox relativistic quantum theory (the Dirac theory) and a relativistic quantum field theory (see Chap. 6), so that the final (and negative) judgment about the DBB theory seems to be passed. However, this argument would be significantly more convincing if those (orthodox) relativistic theories had no measurement problem. But there, also, e.g. the question of definite measurement outcomes is controversially debated.

Thus, the development of a “Bohm-like” relativistic quantum theory (and quantum field theory) without the foundational problems of the conventional formulation is part of the current research program of scientists who work in this field. Notably, some of the approaches discussed there employ field ontologies rather than particle ontologies. Furthermore, some of the relativistic generalizations dispense even with a deterministic description.Footnote 21

It turns out that not only the dynamics of a generalized guidance equation pose a problem for a relativistic generalization, but also the (generalized) quantum equilibrium distribution. This requirement distinguishes a frame of reference, namely that in which the distribution is determined. The equivalence of all inertial systems is, however, at the very core of special relativity, according to the usual understanding. There are some approaches in which the “distinguished” frame of reference has no experimentally accessible influence and which can reproduce all the predictions of relativistic quantum theory. A new evaluation of the relationship between quantum theory and relativity is however certainly bound up in such approaches. The supporters of the DBB theory recall in this connection quite rightly that (as mentioned above) it is precisely this non-locality, as expressed in the violation of the Bell inequalities, which is also a part of conventional quantum mechanics and quantum field theory. Therefore, from the viewpoint of many adherents of the DBB theory, the conventional interpretations of quantum mechanics and quantum field theory likewise have this same problem, but they mask it by their vague formulations (cf. also Bricmont 2016, pp. 169–173, for more on this topic).

We shall now leave the de Broglie–Bohm theory for a while and turn to Everett’s work, another controversially discussed interpretation of quantum theory. In Sect. 5.3, we will then come back to the DBB theory within the framework of a comparison between various interpretation approaches.

5.2 Everett’s Interpretation

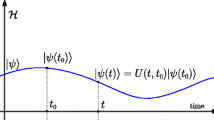

In 1957, the American physicist Hugh Everett III (1930–1982) published his “relative state” formulation of quantum mechanics (see Everett 1957). It contains the results of his doctoral thesis, mentored by John A. Wheeler at Princeton University. Its goal was a re-formulation of the theory, in which the discontinuous change of state (“collapse”) would be superfluous, and instead, a unitary time evolution of the wavefunction would hold throughout. In contrast to the de Broglie–Bohm theory, however, the completeness of the quantum-mechanical description is asserted, and thus the third statement of Maudlin’s trilemma (Sect. 2.3.1) is denied: Measurements appear to have only one definite outcome in Everett’s approach, although in fact the wavefunction (with its superposition states) contains a complete description.

Everett’s guiding concept was to derive the interpretation from the mathematical formalism.Footnote 22 He was motivated explicitly by the measurement problem, or by the distinction of an external observer in the usual formulation:

No way is evident to apply the conventional formulation of quantum mechanics to a system that is not subject to external observation. The whole interpretive scheme of that formalism rests upon the notion of external observation (Everett 1957, p. 455).

But at the latest when considering cosmological problems, the standpoint of an external observer can no longer be reasonably assumed, and the applicability of the quantum theory would appear to be frustrated by that fact.

Along with the justification for how—in view of the superposition states—the appearance of definite measurement outcomes is produced, Everett must furthermore explain how and why the statistics of those measurement results follows Born’s rule (i.e. \(|c_i|^2\) corresponds to the probability of occurrence of the given outcome). Now, Everett’s work has itself become the object of an interpretation debate. Jeffrey Barrett writes on this subject:

The fact that most no-collapse theories have at one time or another been attributed to Everett shows how much the no-collapse tradition owes to him, but it also shows how hard it is to say what he actually had in mind (Barrett 1999, pp. 90f).

In the following, we will trace roughly how the open technical and conceptual questions relating to the 1957 article have led to the development of variants and modifications. We begin however with a description of Everett’s basic idea.

5.2.1 The Basic Idea

Everett’s re-interpretation of the measurement problem is just as surprising as it is brilliant. This problem results, as is well known, from the application of the quantum theory to the measurement process. In general, a superposition state results from this process, consisting of, e.g., different “pointer states”, while our experience tells us that measurements lead to unique results. Superposition states appear under these circumstances not to give an appropriate description of the physical situation. The drastic consequences which result (“either the Schrödinger equation is false or it is not complete” (Bell 2001, p. 173)) are avoided by Everett with the aid of the following consideration: Under the premise that the quantum theory is applicable also to the observation process, the observer must therefore also enter into a “superposition state”—and this superposition undermines the reliability of the judgement that caused us to doubt the appropriateness of superposition states as a description in the first place! Instead, Everett suggests that we identify every term of the superposition with an (equally weighted) state of the observer.Footnote 23 The evolution of measurements (or observations) can then be described as follows:

We thus arrive at the following picture: Throughout all of a sequence of observation processes there is only one physical system representing the observer, yet there is no single unique state of the observer (...). Nevertheless, there is a representation in terms of a superposition, each element of which contains a definite observer state and a corresponding system state. Thus with each succeeding observation (or interaction), the observer state ‘branches’ into a number of different states. (...) All branches exist simultaneously in the superposition after any given sequence of observations (Everett 1957, p. 459).

In which sense Everett can still consider just one observer (“one physical system representing the observer”), who is simultaneously in the multiplicity of states as described, is initially unclear. The various different answers to this question lead essentially to the different variants of the Everett interpretation which were mentioned in the above quote from Barrett.

5.2.2 The Many-Worlds Interpretation

Bryce DeWitt and Neil Graham (1973) popularized the Everett theory through their anthology “The Many-Worlds Interpretation of Quantum Mechanics” and coined the catchy name with their choice for its title. They interpret the branching of the wavefunction mentioned in the Everett quote above in a completely realistic manner, as a real splitting into different “worlds”, and writeFootnote 24:

The universe is constantly splitting into a stupendous number of branches, all resulting from the measurement-like interactions between its myriads of components. Moreover, any quantum transition taking place on every star, in every galaxy, in every remote corner of the universe is splitting our local world on earth into myriads of copies of itself (DeWitt and Graham 1973, p. 161).

“World” means here the totality of all the (macroscopic) objects, and the human observer likewise is subject to this splitting into a manifold of “copies”.

David Wallace (2010, p. 4) illustrates this astounding idea by means of an analogy with classical electrodynamics. Imagine an electromagnetic configuration \(F_1(r,t)\) which describes a pulse of light that is propagating from the Earth to the Moon. A second configuration \(F_2(r,t)\) could describe a light pulse underway from Venus to Mars. How, asks Wallace, should one now interpret the configuration

\[ F(r,t) = 0.5\,F_1(r,t) + 0.5\,F_2(r,t)\,? \tag{5.10} \]

Does it describe a light pulse which is moving simultaneously between the Earth and the Moon as well as between Venus and Mars, since it occurs as a superposition? This is of course nonsense; instead, Eq. (5.10) does not describe a “strange” light pulse in a superposition state, but rather two “ordinary” light pulses at different locations. Wallace continues:

And this, in a nutshell, is what the Everett interpretation claims about macroscopic quantum superpositions: they are just states of the world in which more than one macroscopically definite thing is happening at once. Macroscopic superpositions do not describe indefiniteness, they describe multiplicity (Wallace 2010, p. 5).

Here, however, we are not dealing with a spatial separation (as in the example from electrodynamics), but instead—as Wallace expresses it—with a dynamic separation. This means that the parallel worlds have no mutual interactions, i.e. described pictorially, they are “transparent” to one another. The innumerable “worlds” are located unperturbed in the same, single spacetime region.Footnote 25

The interpretation of Everett’s construction given by DeWitt and Graham has entered the popular-science literature and has since fired the imagination of science fiction authors and of laypersons interested in physics, among others. In an obvious sense, the measurement problem is resolved by this construction, since in each “world”, an eigenstate of the measurement apparatus in fact exists. Whether this condition suffices for a complete resolution of the measurement problem is however questioned by Maudlin (2010). In Sect. 5.2.6, we will discuss this criticism of Everett’s interpretation. The situation regarding the question of non-locality is similar: While Bacciagaluppi (2002) supports the view that the violation of Bell’s inequality (see Chap. 4) can be explained here without action at a distance, Allori et al. (2011) argue that the many-worlds interpretation produces this appearance of locality only because of its imprecise formulation. In Allori et al. (2011), a modification of the many-worlds interpretation is suggested, which likewise contains action at a distance (cf. Sect. 5.2.6).

In the version that we have thus far sketched, the theory however does not appear to be complete. Leslie Ballentine has pointed out that the meaning of probability statements within the Everett interpretation is unclear. After all, every possible event does actually occur (see Ballentine 1971, pp. 233–235). Furthermore, the branching is subject to an ambiguity with respect to the choice of basis. This problem of the “preferred” basis will be discussed first, in the following section.

5.2.3 The Problem of the Preferred Basis

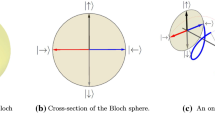

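Let us consider a typical example of the superposition of various spin states (e.g. those of a silver atom): \(|\Psi \rangle = \frac{1}{\sqrt{2}} \left( |\uparrow _x \rangle + |\downarrow _x \rangle \right) \). If one wishes to determine the orientation of the spin along the x direction, one would investigate this state using a correspondingly oriented Stern–Gerlach magnet. At the end of the measurement, the state

\[ \frac{1}{\sqrt{2}} \left( |\uparrow _x \rangle \otimes |M_{\uparrow }\rangle + |\downarrow _x \rangle \otimes |M_{\downarrow }\rangle \right) \tag{5.11} \]

is present, where \(|M_{\uparrow }\rangle \) and \(|M_{\downarrow }\rangle \) denote the corresponding pointer states of the measurement apparatus. This state thus describes, according to the many-worlds interpretation, two “worlds”, in which the x component of the spin is either \(\uparrow _x\) or \(\downarrow _x\). The decomposition into basis vectors is however in general not unique and could be just as well carried out with eigenvectors with respect to some other measurable quantity. For example, the following linear combination could be consideredFootnote 26:

\[ |\uparrow _z \rangle = \frac{1}{\sqrt{2}} \left( |\uparrow _x \rangle + |\downarrow _x \rangle \right) , \qquad |\downarrow _z \rangle = \frac{1}{\sqrt{2}} \left( |\uparrow _x \rangle - |\downarrow _x \rangle \right) . \]

With respect to this basis, the state (5.11) now has the following representation:

\[ \frac{1}{\sqrt{2}} \left( |\uparrow _z \rangle \otimes \frac{1}{\sqrt{2}} \left( |M_{\uparrow }\rangle + |M_{\downarrow }\rangle \right) + |\downarrow _z \rangle \otimes \frac{1}{\sqrt{2}} \left( |M_{\uparrow }\rangle - |M_{\downarrow }\rangle \right) \right) \tag{5.12} \]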

If the two “worlds” branch in terms of these basis vectors, the spin along the x direction would not have a well-defined value, and instead, its z componentFootnote 27 would be well defined. The choice of a basis within the quantum theory is to be sure purely conventional and should have no physical relevance. A factual difference between the representations in (5.11) and (5.12) must therefore be separately justified. In other words, the choice of a “preferred basis” is necessary. One might object at this point that the choice of a specific measurement setup leads to precisely such a distinction of the pointer basis (5.11). In the other basis (5.12), in contrast, in every term there is a superposition of the various states of the x measurement apparatus. The non-occurrence (or rather the non-observability) of superpositions of macroscopically different states was however just what we were trying to explain with the Everett interpretation—it should thus not be a precondition of the investigation. Furthermore, such a distinction of a particular basis for the measurement process contradicts the spirit of an interpretation which merely wishes to let the formalism remain valid and in which the observation plays no fundamental role.Footnote 28
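That the two decompositions describe one and the same state vector can be verified with a few lines of code. The following is a minimal sketch under stated assumptions: the spin states are written as coordinate vectors in the x eigenbasis, the apparatus is modelled by two hypothetical orthonormal pointer states `m_up` and `m_down`, and the z states are taken to be the symmetric and antisymmetric combinations of the x states.

```python
import math

S = 1.0 / math.sqrt(2.0)


def kron(a, b):
    """Tensor product of two vectors given as lists."""
    return [x * y for x in a for y in b]


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def scale(c, a):
    return [c * x for x in a]


# Spin states, written in the x eigenbasis (assumed coordinate basis).
up_x, down_x = [1.0, 0.0], [0.0, 1.0]
up_z = scale(S, add(up_x, down_x))
down_z = scale(S, add(up_x, scale(-1.0, down_x)))

# Hypothetical pointer states of the x-oriented apparatus and their superpositions.
m_up, m_down = [1.0, 0.0], [0.0, 1.0]
m_plus = scale(S, add(m_up, m_down))
m_minus = scale(S, add(m_up, scale(-1.0, m_down)))


def state_in_pointer_basis():
    """Each term pairs a definite x spin with a definite pointer state."""
    return scale(S, add(kron(up_x, m_up), kron(down_x, m_down)))


def state_in_rotated_basis():
    """Each term pairs a definite z spin with a superposition of pointer states."""
    return scale(S, add(kron(up_z, m_plus), kron(down_z, m_minus)))


def same_vector(a, b, tol=1e-12):
    return all(abs(x - y) < tol for x, y in zip(a, b))


print(same_vector(state_in_pointer_basis(), state_in_rotated_basis()))
```

Both functions return the same four-component vector, so the formalism alone does not single out one of the two ways of splitting the state into “worlds”.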

In today’s view, the suggestions for solving this problem fall into two classes: The older ones, which do not refer to decoherence, and those which make use of the mechanism of decoherence. A brief treatment of those Everett variants which are currently considered to be obsolete since the advent of the approaches based on decoherence is desirable with a view to the discussion of the concept of probability (Sect. 5.2.5). We will therefore first cast a brief glance at these older approaches before considering the role of decoherence theory in Sect. 5.2.4.

David Deutsch’s Variant of Everett’s Interpretation

David Deutsch, in his early works, suggested a mechanism for distinguishing a basis (cf. Deutsch 1985).Footnote 29 He extends the quantum-theoretical formalism in terms of an algorithm which produces the corresponding basis. This depends (without going into the details here) only on the corresponding physical state and its dynamics. The choice is limited by the requirement that in the case of “measurements”, the relevant basis in fact corresponds to the “pointer basis”. This guarantees that after a measurement, a unique result is in fact obtained (Deutsch 1985, pp. 22f).

Wallace (2010, p. 7) calls this variant of the Everett interpretation the “many-exact-worlds” interpretation. In Sect. 5.2.4, we will see that in the meantime, there are more promising candidates for the solution of the problem of the preferred basis, and David Deutsch himself has also rejected this interpretation since the end of the 1990s. First, however, we will consider yet another variant.

The Many-Minds Interpretation

The many-worlds interpretation includes the act of observation within the physical description. This apparently presumes that mental states are also a part of the physical world and are subject to the laws of quantum theory.Footnote 30

In this sense, a many-worlds interpretation appears to always imply a theory of branching consciousness states (the exception will be discussed below). This evident significance is however not meant when one speaks of the many-minds interpretation.

A prominent suggestion of this variant is due to Albert and Loewer (1988). They were motivated by the problem of the preferred basis, as well as the difficulty of understanding the significance of probability statements within the many-worlds interpretation (this problem will be treated in more detail in Sect. 5.2.5).

The point of departure of the many-minds interpretation is the statement that mental states can never be in superpositions, according to our introspective experience. Albert and Loewer reason from this that mental states (i.e. beliefs, intentions, memories, etc.) are not physical.Footnote 31

They then postulate that every observer is equipped with an infinite number of “minds”. While in the case of a measurement or an interaction the physical brain states enter a superposition, a probabilistic time evolution leads to a state in which a certain fraction of these minds corresponds in each case to the perception of one single outcome of the experiment. This process takes place within one world.

Now, how does this interpretation deal with the problem of the “preferred basis”? In an evident sense, the choice of basis vectors for the evolution of a state has no physical significance, since in the many-minds interpretation, there is only one world. An ambiguity with respect to the splitting into “many worlds” thus cannot arise here. However, Barrett (1999, p. 195) has pointed out that the “basis” of the consciousness states plays a comparable role.Footnote 32

Both in the many-minds interpretation and also in the interpretation of Deutsch (1985), a preferred basis must thus be postulated. This common strategy is accompanied by a common difficulty: All attempts to introduce a preferred basis ad hoc must postulate properties which should in fact be explained in a fundamental theory (cf. Wallace 2010, p. 8). In the next section, we will treat the theory of decoherence. With it, one associates the hope that a convincing solution of the problem of the preferred basis can be found, since it does without such ad hoc assumptions.

5.2.4 The Role of Decoherence Theory

As a rule, physics investigates “isolated systems”, i.e. it considers the influence of the “environment” to be a negligible perturbation and, above all, an unnecessary complication. Within quantum theory, however, it turns out that precisely the inclusion of the interactions with the environment can lead to conceptual progress in describing measurements, as well as the classical limits of the theory. The research carried out in this field since the early 1970s is not tied to any particular interpretation of quantum theory and simply makes use of the mathematical properties of the standard formalism. Pioneers in this field of “decoherence”Footnote 33 were Zeh (1970) and Zurek (1981). We have already discussed the decoherence programme in Sect. 2.3. Here we make use of the concepts introduced there, extend them, and place the results within the context of the Everett interpretation.

In Sect. 5.2.3, we have already explained how the decomposition of a state into its basis vectors can be ambiguous. The decompositions (5.11) and (5.12) are mathematically equivalent—their physical differences must therefore be justified.
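The ambiguity can be checked numerically with a small sketch. The state used here is a standard two-level example with equal coefficients and is an assumption chosen for illustration; it need not coincide with the states written down in (5.11) and (5.12). The first factor is taken to stand for the object and the second for the apparatus, and all function and variable names are hypothetical.

```python
import math

def kron(a, b):
    """Tensor product of two state vectors given as flat lists."""
    return [x * y for x in a for y in b]

def add(u, v):
    """Component-wise sum of two vectors."""
    return [x + y for x, y in zip(u, v)]

def scale(s, v):
    """Multiply a vector by a scalar."""
    return [s * x for x in v]

s = 1.0 / math.sqrt(2.0)

# Basis 1 ("up"/"down") and basis 2 ("plus"/"minus") for each two-level system.
up, down = [1.0, 0.0], [0.0, 1.0]
plus, minus = [s, s], [s, -s]

# The same entangled state, written once in each basis.
state_z = scale(s, add(kron(up, up), kron(down, down)))
state_x = scale(s, add(kron(plus, plus), kron(minus, minus)))

# Largest component-wise difference between the two expansions.
max_diff = max(abs(a - b) for a, b in zip(state_z, state_x))
print("largest difference between the two expansions:", max_diff)
```

Both expansions describe one and the same vector, so the formalism alone does not say which pair of correlated states should count as the “outcomes”.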

The first step towards the resolution of this problem can now be accomplished through a purely mathematical consideration: If we look at the entanglement with a third system E (the environment, in our example likewise represented by a two-dimensional Hilbert space with states \(|e_i\rangle \)), we will be led to a state of the form

Andrew Elby and Jeffrey Bub (1994) were able to show that this decomposition into orthogonal states on a triple product space is unique.Footnote 34 It thus eliminates the ambiguity in the choice of a basis, in a formal sense (and also that of the associated physical measurand). Naturally, this purely mathematical argument as yet yields no indication of which basis is to be distinguished—especially since the extremely detailed states of the environment are unobservable. In this situation, physical criteria for the identification of this unique (“preferred”) basis must still be developed, as Schlosshauer mentions:
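For orientation, a state of the kind referred to here can be sketched as follows. The labels \(|s_i\rangle \) for the object states and \(|a_i\rangle \) for the apparatus states, as well as the coefficients \(c_i\), are assumptions chosen for illustration; only the environment states \(|e_i\rangle \) are taken from the text.

```latex
% Sketch under assumed notation: s = object, a = apparatus, e = environment.
\[
  |\Psi\rangle \;=\; \sum_i c_i \, |s_i\rangle \otimes |a_i\rangle \otimes |e_i\rangle
\]
```

In contrast to the two-factor case, a state that can be written in this three-factor form admits no second decomposition of the same form in terms of other bases, which is the content of the uniqueness result cited above.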

The decoherence programme has attempted to define such a criterion based on the interaction with the environment and the idea of robustness and preservation of correlations. The environment thus plays a double role in suggesting a solution to the preferred-basis problem: it selects a preferred pointer basis, and it guarantees its uniqueness via the tridecompositional uniqueness theorem (Schlosshauer 2005, p. 1279).

These criteria were thus not postulated, but rather they follow from the quantum-theoretical investigation of the dynamic influence of the environment. For this purpose, one treats complicated models of the environment. The interactions between it and the measurement apparatus as a rule take place via force laws which contain powers of the spatial distance (e.g. the Coulomb force \(\propto r^{-2}\)). It follows that the unique decomposition as a rule distinguishes the position basis, and in the case of a measurement, the “pointer basis” is the relevant basis. Schlosshauer summarizes this approach, called environment-induced superselection, as follows:

The clear merit of the approach of environment-induced superselection lies in the fact that the preferred basis is not chosen in an ad hoc manner simply to make our measurement records determinate or to match our experience of which physical quantities are usually perceived as determinate (for example, position). Instead the selection is motivated on physical, observer-free grounds, that is, through the system-environment interaction Hamiltonian. The vast space of possible quantum-mechanical superpositions is reduced so much because the laws governing physical interactions depend only on a few physical quantities (position, momentum, charge, and the like), and the fact that precisely these are the properties that appear determinate to us is explained by the dependence of the preferred basis on the form of the interaction. The appearance of classicality is therefore grounded in the structure of the physical laws – certainly a highly satisfying and reasonable approach (Schlosshauer 2005, pp. 14f).

This quote once again emphasizes that the results of decoherence are not tied to any particular interpretation of the quantum theory, i.e. that they can be applied within every interpretation.Footnote 35

Since the interaction with the environment is described quantum-mechanically (i.e. via a unitary time evolution), the combined system [object \(+\) measurement apparatus \(+\) environment] remains in a so-called pure state. This overall state will in general contain both a superposition of various “pointer positions” and also interference terms. As a rule, the exact state of the environment can be neither controlled nor observed. If one computes the predictions for the real observables in the subsystem [object \(+\) measurement apparatus], one obtains a result in which there are practically no more interference terms.Footnote 36 This part of the programme is termed environment-induced decoherence. In summary, it consists in the fact that a coherent superposition (a “pure state”) gives rise to an incoherent (or “decoherent”, hence the name) superposition with respect to a uniquely defined basis. For an obvious reason, this process alone does not constitute a solution to the measurement problem: it still cannot explain which branch of this now decoherent superposition corresponds to the outcome of the measurement. In Footnote 28, the measurement problem was divided into two sub-problems: (i) “preferred basis” and (ii) “definite outcome”. Decoherence theory thus solves only the first sub-problem.
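The suppression of the interference terms can be illustrated with a deliberately simple toy model, which is not one of the realistic environment models referred to above. All of the following are assumptions made for the sketch: the subsystem [object \(+\) measurement apparatus] is reduced to a single two-level system with amplitudes `c0` and `c1`, the environment consists of `n` two-level systems, and each of these ends up in one of two states whose overlap is \(\cos \theta \), depending on the “pointer position”. The function names are hypothetical.

```python
import math

def kron(a, b):
    """Tensor product of two state vectors given as flat lists."""
    return [x * y for x in a for y in b]

def environment_state(single, n):
    """n-fold tensor product of one environment two-level state."""
    state = [1.0]
    for _ in range(n):
        state = kron(state, single)
    return state

def reduced_density_matrix(c0, c1, theta, n):
    """Trace out n environment systems from c0|0>|E0> + c1|1>|E1>."""
    e0 = [1.0, 0.0]                          # environment state for pointer 0
    e1 = [math.cos(theta), math.sin(theta)]  # environment state for pointer 1
    big_e0 = environment_state(e0, n)
    big_e1 = environment_state(e1, n)
    dim = len(big_e0)
    # Full pure state; the subsystem index comes first, the environment second.
    psi = [c0 * x for x in big_e0] + [c1 * x for x in big_e1]
    # Partial trace: sum over the environment index k.
    return [[sum(psi[s * dim + k] * psi[t * dim + k].conjugate()
                 for k in range(dim))
             for t in range(2)]
            for s in range(2)]

if __name__ == "__main__":
    amp = 1.0 / math.sqrt(2.0)
    for n in (0, 2, 5, 10):
        rho = reduced_density_matrix(amp, amp, math.pi / 4, n)
        print(n, "diagonal:", rho[0][0], rho[1][1],
              "interference term:", abs(rho[0][1]))
```

In this model, the diagonal entries stay at \(|c_0|^2\) and \(|c_1|^2\), while the off-diagonal entry is multiplied by \(\cos \theta \) for each environment system. It therefore becomes very small for large `n` but never exactly zero, which matches the “practically no more interference terms” of the text. Both diagonal entries remain, so the sketch also shows that no single outcome is selected.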

For the Everett interpretation, this question is naturally not relevant: In its context, the basis which is preferred in this manner defines the splitting into independent “worlds”. These are however not “exact” (as, e.g., in the suggestion of Deutsch 1985), but are rather merely approximations. In the end, the preferred basis is approximately distinguished by a dynamic process.

According to David Wallace (2010, p. 11), since the mid-1990s there has been a broad consensus among physicists that the problem of the preferred basis has been solved by environment-induced decoherence. Only in some areas of the philosophy of science is there still criticism of the fact that the approximate dynamic process of decoherence is used to define objects which one then “takes seriously in the ontological sense”. In Wallace’s opinion, the quasi-classical branches of the wavefunction are “emergent structures”, whose ontological status corresponds, for example, to that of the temperature in statistical mechanics (see the essay by Wallace in Saunders et al. 2010, p. 53).

The Everett interpretation has experienced a considerable revival through these results, since the decoherence-based modification is ontologically certainly less extravagant than the versions of DeWitt and Graham (1973), Deutsch (1985) or Albert and Loewer (1988).Footnote 37 The definition of the “worlds” is based here on a dynamic process which can be analysed using the methods of the standard formalism. Furthermore, this approach can be relativistically generalized in a manifest way. The significant open question which remains is that of the status of probability statements, to which we will now turn our attention.

5.2.5 Probability in Everett’s Interpretation

Within the Copenhagen interpretation, if we consider a state \(|\Psi \rangle =\sum _i c_i|\psi _i\rangle \), the square of the amplitude \(|c_i|^2\) denotes the probability of obtaining the state \(|\psi _i\rangle \) as the result of a measurement of the corresponding observable on the system \(|\Psi \rangle \). In the de Broglie–Bohm theory, the same is true – but there, on the grounds that the configuration of the particle selects out this part of the wavefunction. In the GRW theory, finally, this is the probability that the dynamic collapse of the wavefunction of the measurement apparatus will lead to this state. In all of these cases, there are two preconditions for the practicable application of the probability concept: Various possible outcomes and the lack of knowledge of the actual result. Within the many-worlds interpretation, however, all of the results will occur with certainty. It thus initially appears unclear just what the probability statements could refer to in this connection (the “incoherence problem”)—not to mention why these probabilities should correspond to \(|c_i|^2\) (the “quantitative problem”). Precisely these two aspects (which are however closely related) are singled out in the discussion of the probability problem.

The status of probability statements within the Everett interpretation has led to a technically and conceptually highly complex debate. Some of the important contributions to this discussion will be treated in the following. Here, again, it is seen that the advent of decoherence theory marked a turning point within the overall debate.

The Incoherence Problem

Naturally, within the Everett interpretation the squared amplitude \(|c_i|^2\) still retains the mathematical properties that qualified it to be a measure of probability (over the set of all branchings). However, the \(c_i\) are just “branching amplitudes”, and every branch claims the same reality in this interpretation. Both Everett and, later, DeWitt and Graham appear to have insufficiently appreciated this difference, since they claimed that they could even derive Born’s rule:

The conventional probability interpretation of quantum mechanics thus emerges from the formalism itself (DeWitt and Graham 1973, p. 163).

This claim is supported by DeWitt and Graham on the basis of the following mathematical result:Footnote 38 If one considers a series of N measurements of a superposition state with coefficients \(c_i\), then in the limit \(N\rightarrow \infty \), the state of the overall system (\(=\) N measurement apparatuses \(+\) N systems) converges towards an eigenstate of the so-called relative frequency operator for the measured value i. As its name implies, this operator measures just the relative frequency with which the experiment yields the outcome i. The associated eigenvalue is then indeed given by \(|c_i|^2\). However, to see a proof of Born’s rule in this fact is to fail to recognize that in real experiments, the value of N must always remain finite, and therefore branches with statistical deviations occur. Now, one can justifiably expect that their squared amplitudes remain “small”. The assertion that these events thus also occur with small probabilities is, however, correct only if the squares of the branching amplitudes can indeed be identified with probabilities. This renders the argument circular, for just this identification is what was supposed to be justified (cf. Barrett 1999, p. 163; Deutsch 1985, p. 20; or Ballentine 1971, p. 234).
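The finite-N situation described above can be made concrete with a small numerical sketch. It assumes a measurement with only two outcomes and a squared amplitude `p` for the outcome of interest; the tolerance `eps` and all function names are hypothetical choices for illustration. For the branches whose relative frequency deviates from `p` by more than `eps`, the sketch computes two numbers: their total squared amplitude, and their share when every branch is simply counted once.

```python
import math

def branch_weight(p, n, k):
    """Total squared amplitude of all branches with k hits in n runs."""
    return math.comb(n, k) * p**k * (1 - p)**(n - k)

def deviant_weight(p, n, eps):
    """Summed squared amplitude of branches with |k/n - p| > eps."""
    return sum(branch_weight(p, n, k)
               for k in range(n + 1)
               if abs(k / n - p) > eps)

def deviant_branch_fraction(p, n, eps):
    """Share of deviant branches when each of the 2**n branches counts once."""
    deviant = sum(math.comb(n, k)
                  for k in range(n + 1)
                  if abs(k / n - p) > eps)
    return deviant / 2**n

if __name__ == "__main__":
    p, eps = 0.2, 0.1  # assumed squared amplitude and tolerance
    for n in (10, 100, 400):
        print(n, deviant_weight(p, n, eps), deviant_branch_fraction(p, n, eps))
```

The summed squared amplitude of the deviant branches shrinks as N grows but stays positive for every finite N. Counted one by one, on the other hand, the deviant branches make up almost all branches when `p` differs from 1/2. Which of the two measures says anything about what an observer should expect is exactly what the squared amplitudes alone cannot settle.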

A genuine solution to the incoherence problem was suggested by David Deutsch in the same article in which he also treated the question of the preferred basis. It is based on the intuition that the most probable outcome should also be the most frequent. Whereas for DeWitt individual worlds branch off, Deutsch postulates an (uncountable) infinity of identical copies of the same world (see Axiom 8 in Deutsch 1985, p. 20). In the case of a measurement (with possible measured values i), a relative fraction \(p_i\) of these copies branches off into worlds with the corresponding experimental outcome. This fraction then corresponds to the probability of occurrence of the event i (in “my” world). Deutsch thus solves the incoherence problem by means of an extension of the “ontology” of the theory.

The many-minds interpretation of Albert and Loewer (1988) proceeds in a structurally identical way. As we have seen, each observer state is there also associated with infinitely many minds. In the case of a measurement (with possible measured values i), these are supposed to assume the “consciousness content” that “the event has occurred”, likewise with the fractional weight \(p_i\).

If we now set this fraction \(p_i\) of the minds or the worlds (Deutsch), respectively, equal to the squared amplitude \(|c_i|^2\), we also obtain an (ad hoc) solution to the quantitative problem.Footnote 39

These two suggestions are of course based on special solutions of the problem of the preferred basis (cf. Sect. 5.2.3), which have been regarded as obsolete at the latest since the advent of decoherence-based approaches. We thus find here the curious situation that precisely the solution to the problem of the preferred basis which many physicists find most convincing has the result that the concept of probability once again appears to be a “foreign body” within the Everett interpretation.

Now, there exist various approaches which attempt, as escape routes out of this dilemma, to justify a concept of “uncertainty” or “indeterminacy” within the Everett interpretation. To many authors, such a concept appears to be a necessary condition for probability statements to be meaningful at all.