Abstract

k-means clustering is one of the popular procedures for multivariate analysis in which observations are classified into a reduced number of clusters. The resulting centroid matrix is refereed to capture variables which characterize clusters, but between-clusters contrasts in the centroid matrix are not always clear and thus difficult to interpret. In this research, we address the problem in interpretation and propose a new procedure of k-means clustering which produces a sparse and thus interpretable centroid matrix. The proposed procedure is called SPARK. In SPARK, the sparseness of the centroid matrix is constrained and therefore it contains a number of exact zero elements. Because of this, the contrasts between-clusters are highlighted and it allows us to interpret clusters easier in comparison with the standard k-means clustering. A sparsity selection procedure for determining the optimal sparsity of the centroid with reduced computational load is also proposed. Behaviors of the proposed procedure are evaluated by two real data examples, and the results indicate that SPARK performs well for dealing with real world problems.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

k-means clustering, known as a non-hierarchical clustering procedure, is widely used for extracting the homogeneity of observations, by assigning them into a small number of clusters. Let \(\mathbf{X}\) be an n-obserbations \(\times \) p-variables matrix, and the k-means clustering is formulated as a minization of the least squares loss function defined as

where \(\mathbf{M} = \{m_{il}\}\) is an n-observations \(\times \) p-variables membership matrix and \(\mathbf{Y}=\{y_{jl}\}\) is a p-variables \(\times \) k-clusters centroid matrix. \(\mathbf{x}_{(i)}\) and \(\mathbf{y}_{l}\) denote the ith row vector and the lth column vector of \(\mathbf{X}\) and \(\mathbf{Y}\), respectively.

The centroid matrix is refereed for interpreting what variables characterize the clusters, and the within- and between-clusters contrasts in the centroid matrix are of help for the interpretation. These contrasts, however, are not always clearly observed and therefore the interpretation is difficult, as exemplified in Sect. 4. A typical strategy to discriminate the clusters is to replace the elements close to zero in the centroid matrix with zeros, by a certain threshold. It is not recommended, however, in that the threshold totally depends on users’ decision, and it can spoil the reliability of the interpretation and the following decisions.

In this article, considering the above problem in interpretability of the resulting centroid matrix, we propose a new algorithm for clustering which produces an easily interpreted centroid matrix. We call this algorithm SPARK (abbreviation of Sparse k-means). In SPARK, the resulting centroid matrix is sparse in that it contains a number of entries exactly equal to zero. The contrasts of the clusters are therefore emphasized, without any subjective threshold, which facilitates the easier and more coherent interpretation than the existing procedures. Such a centroid matrix is obtained by minimizing (1) subject to the constraint that \(\mathbf{Y}\) has a specific number of zero elements, namely,

where \(Sp(\mathbf{Y})\) is the number of zero in \(\mathbf{Y}\). The positive integer r is specified beforehand.

1.1 Related Procedure

Sun et al. (2012) proposed regularized k-means clustering for obtaining such sparse centroid matrix, which is similar to the proposed method. It is formulated as a minimization of (1) subject to the row-wise constraint on \(\mathbf{Y}\)

where \(||\mathbf{y}_{(j)}||\) is an \(L_{1}\)-norm of the \(\mathbf{Y}\)’s jth row vector \(\mathbf{y}_{(j)}\) and a tuning parameters \(\lambda _j\ (j=1,\ldots , p)\) control the resulting sparsity of \(\mathbf{Y}\). It therefore contains a number of zero elements, since the \(L_{1}\)-norm of rows of \(\mathbf{Y}\) is constrained to be less than \(\lambda _1,\ldots ,\lambda _p\). This minimization is equivalent to the minimization of the following function;

We call this approach as a penalty approach, in that it adds the penalty function \(\sum _j^p \lambda _{j}||\mathbf{y}_{(j)}||\) to the original loss function (1). The tuning parameters take any positive integer, which are commonly determined by cross-validation. Similar approaches can be found in Witten and Tibshirani (2010) and Hastie et al. (2015). Penalty approach is originally proposed for avoiding over-fitting in clustering. Generalizability, however, does not always results in the easier interpretability of the clusters, which we focus on in this article. The proposed procedure directly controls the number of zero elements r in the centroid matrix within a restricted range, without introducing tuning parameters as in the penalty approaches. Within- and between-contrasts in the centroid matrix are therefore highlighted, and it allows users to find what variables manifest the clusters easily. It should be noted that controlling r cannot consider all possible values of \(\lambda _1,\ldots ,\lambda _p\). For interpretation of clusters, however, inspecting all possible \(\mathbf{\lambda }\)s is not necessary, and sparseness of \(\mathbf{Y}\) can be determined by how many elements in \(\mathbf{Y}\) are zero and ignorable.

2 Algorithm

The proposed procedure SPARK is formulated as the following constrained minimization problem;

subject to the sparsity constraint (2) and the membership constraint is imposed on \(\mathbf{M}\) such that

The parameter matrices are alternately and iteratively updated in the M-step and Y-step, respectively, starting from multiple sets of initial values in order to avoid accepting a local minimum as the final solution. In these steps, the current parameter matrix is replaced by the one minimizing (1) keeping the other parameter matrix fixed. The update formulae used in the M-step and Y-step are presented as follows.

M-step The minimization of \(f(\mathbf{M}, \mathbf{Y})\) with fixed \(\mathbf{Y}\) subject to (6) is achieved by the k-means algorithm with the fixed centroid (MacQueen 1967). Therefore, the optimal \(\mathbf{M}=\{m_{il}\}\) is obtained by

for \(i=1,\ldots ,n\).

Y-step Using the matrix \(\mathbf{C} = \mathbf{X}^{\prime }{} \mathbf{M}(\mathbf{M}^{\prime }{} \mathbf{M})^{-1}\), (1) is rewritten as

where \(\mathbf{D}=\mathrm {diag}\{d_{11},\ldots ,d_{ll},\ldots ,d_{kk}\}\) denotes the \(k\times k\) diagonal matrix whose lth diagonal element is equal to the number of the observations classified into the lth cluster \((l=1,\ldots ,k)\). The third term is proved to be zero as follows;

Therefore, minimizing the second term in (8), \(g(\mathbf{Y})=||\mathbf{D}^{1/2}(\mathbf{C}-\mathbf{Y})||^{2}\), is equivalent to the minimization of \(f(\mathbf{M},\mathbf{Y})\) with respect to \(\mathbf{Y}\). Further, \(g(\mathbf{Y})\) is rewritten as

where the Z denotes r pairs of indices (j, l)s indicating the locations of \(y_{jl}\)s to be zero. The last equality holds when the second term in (10) is equal to zero, that is, when \(y_{jl}\) with \((j,l)\in Z^{\bot }\) is taken equal to the corresponding \(c_{jl}\). In addition, the limit \(\sum _{(j,l)\in Z}d_{ll}^{1/2}c_{jl}^{2}\) is minimal when Z is composed of the indices of the r smallest \(c_{jl}^{2}\)s among all squared elements in \(\mathbf{C}\). Therefore, \(\mathbf{Y}\) that minimizes \(g(\mathbf{Y})\) is obtained as

for \(l = 1,\ldots ,k\) and \(j = 1,\ldots ,p\), where \(c_{[r]}^{2}\) denotes the rth smallest value among all \(c_{jl}^{2}s\). The update formulae (7) and (11) are used in the M-step and Y-step, respectively, and it is guaranteed that function value of \(f(\mathbf{M},\mathbf{Y})\) monotonically decreases in each of these steps. As presented in this section, \(\mathbf{M}\) and \(\mathbf{Y}\) are alternately updated until the convergence is reached. In the following real data examples, we used 100 different initial values for \(\mathbf{M}\) and \(\mathbf{Y}\).

3 Sparsity Selection Based on Information Criteria

In the proposed procedure, the number of zeros in \(\mathbf{Y}\) has to be specified as a positive integer r in (2). In this article, the minimum and maximum of r, \(r_{min},\ r_{max}\), are defined as

considering that \(\mathbf{Y}\) has p non-zero elements when \(\mathbf{Y}\) has a perfect cluster structure; each variable is associated with only one cluster. Selecting the number of zero elements in \(\mathbf{Y}\) can be considered as a model selection problem, since this selection partially specifies the model part of \(\mathbf{MY}^{\prime }\) fitted to \(\mathbf{X}\). In this respect, the information criterion such as AIC and BIC is suitable for specifying r, which controls how sparse the model is to be fitted to the data. In this section, we propose two criteria in order to select the “best” r among the interval \([r_{min}, r_{max}]\).

Here, let \(\mathbf{E}=\{e_{ij}\}\) be the matrix of errors defined as \(\mathbf{E} = \mathbf{X}-\mathbf{MY}^{\prime }\). Under the assumption that \(\mathbf{X}\) is generated by \(\mathbf{X} = \mathbf{MY}^{\prime }+\mathbf{E}\) with \(e_{{i}{j}}\) distributed independently and identically according to \(N(0,\sigma ^{2})\) for all is and js with a specific error variance \(\sigma ^2\), it can be shown that the least squares estimation and maximum likelihood estimation in SPARK are equivalent. The log-likelihood function to be maximized in the ML estimation is

including \(f(\mathbf{M},\mathbf{Y})\) to be minimized in the least square estimation. With an arbitrary r, the maximum of \(l(\mathbf{M},\mathbf{Y})\) is attained as

where \(f_{min}(r)\) denotes the attained function value of (1). By (14), the information criteria AIC(r) and BIC(r) with the specific r are obtained by

where \(\nu (r)\) denotes the number of parameter to be estimated with a certain r;

Therefore, r can be determined by \(r = \mathop {\mathrm{arg~min}}\limits _{r_{min} \le r \le r_{max}}AIC(r)\ \text {or}\ BIC(r)\) in terms of minimizing the model selection criteria. This approach is considered to be computationally inefficient, however, as of 100 run of SPARK are required, in order to avoid a local minimum, for each of all possible rs. When \(\mathbf{X}\) is of a large size, (\(\mathbf{X}\) contains many observations and variables) the resulting centroid matrix is also of a large size, and thus higher computational cost is required for each run.

In order to find such r with lower computational cost, we propose the following algorithm.

- Step 1.:

-

Set \(S_{initial}\) and \(S_{decrease}\) to an integer within the range [0, 1]. Set \(r_{t} = S_{initial} \times r_{max}\)

- Step 2.:

-

Repeat Step 3 to Step 4 while \(S > 1\).

- Step 3.:

-

(Forward search) Repeat (a) to (c).

- (a):

-

Set \(r=r_t\) and compute

$$\begin{aligned} \varDelta AIC(r) = AIC(r+1) - AIC(r) \end{aligned}$$(18)or

$$\begin{aligned} \varDelta BIC(r) = BIC(r+1) - BIC(r) \end{aligned}$$(19) - (b):

-

If \(\varDelta AIC(r)\) or \(\varDelta BIC(r)\) is smaller than 0, set \(r_t = r_t + S\) and go back to 2. Otherwise proceed to (c).

- (c):

-

Set \(S = S \times S_{decrease}\) and proceed to the backward search.

- Step 4.:

-

(Backward search) Repeat (a) to (c).

- (a):

-

Set \(r=r_t\) and compute \(\varDelta AIC(r)\) or \(\varDelta BIC(r)\).

- (b):

-

If \(\varDelta AIC(r)\) or \(\varDelta BIC(r)\) is greater than 0, set \(r_t = r_t - S\) and go back to 4. Otherwise proceed to (c).

- (c):

-

Set \(S = S \times S_{decrease}\) and proceed to the forward search.

- Step 5.:

-

If the previous step is Forward search, repeat barkward search with \(S=1\) until \(\varDelta AIC(r)\) or \(\varDelta BIC(r)\) is positive; otherwise repeat Forward search \(\varDelta AIC(r)\) or \(\varDelta BIC(r)\) is negative.

The above algorithm seeks r which minimizes AIC(r) or BIC(r) within the range \([r_{min}, r_{max}]\) by repeating the forward and backward search and reducing the step size S at each step of the iteration, starting from the initial step size \(r_{max} \times S_{initial}\). The rate of decrement of the step size is controlled by \(S_{decrease}\). The total computational cost is therefore dramatically reduced compared with applying SPARK for computing AIC(r) or BIC(r) for all rs. In the following simulation and the real data examples, we set \(S_{initial} = 0.9\) and \(S_{decrease} = 0.7\) which is empirically confirmed to be well-performed.

4 Real Data Examples

In this section, we demonstrate that SPARK extracts the sparse centroids underlying the dataset and facilitates interpretation of the centroid, with keeping the correctness of classification.

4.1 Example 1: Fisher’s Iris Data

In the first example, SPARK was applied to Fisher’s Iris data, where 150 samples, which are originally sampled from three species, were measured with respect to four variables. In order to find the optimal sparsity, the sparsity selection procedure based on BIC was used. It suggested that \(r=2\) was the best, and we also applied the standard k-means clustering to Iris data for comparison.

The estimated centroids are shown in Table 1 as a heatmap. As found in Table 1, the contrast between the first (C1) and the second (C2) clusters can be seen in Sepal.Length and Sepal.Width. In addition, C2 is different from the rest of clusters with respect to Sepal.Width The contingency table of two partitions, the species of samples and the estimated membership, for SPARK and the one for k-means, are shown in Table 2. It can be seen that the estimated memberships correspond to the species, in that \((49+37+42)/150=85.3\%\) of the observations are correctly classified, while \((50+39+36)/150=89.2\%\) in the k-means. These results indicate that SPARK appropriately produces sparser and thus easy-to-interpret centroid matrix in comparison with the exiting method, keeping the accuracy of classification.

4.2 Example 2: Vicon Physical Action Dataset

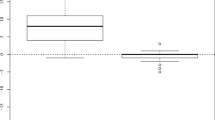

The second example is Vicon Physical Action Dataset (Lichman 2013). A subject’s walking was recorded by the 3-axis motion sensors attached to the subject’s right and left wrists, elbows, knees, and ankles. The activity was recorded for approximately 8000 ms with the frequency of 20 Hz. Therefore we have 24 (x-/y-/z-axis sensors of right and left wrists, elbows, knees and ankles) \(\times \) 173 (time elapsed) data matrix. k-means clustering is applied to the data matrix and the resulting centroid matrix is shown in Fig. 1 as a heatmap. The number of clusters is set to 5 which explains 75% of the total variance of the dataset.

We can interpret the estimated five clusters by referring the 173 \(\times \) 5 centroid matrix as follows. For example, the first (C1) and the second (C2) clusters are well discriminated against the others; the first cluster is characterized by the lower output value in the middle phase of records (around 2000–6000 ms) and the higher value in the latter phase (around 6000–8500 ms), while this variation in the sensor outputs is shifted for 2000 ms earlier in the second cluster. The third (C3), fourth (C4) and fifth (C5) clusters are, however, hard to be discriminated mutually, in that the time evolutions of values are similar to each other especially in the early phase.

Before applying SPARK to the dataset, the sparsity selection procedures were applied. The AIC- and BIC-based procedures suggested that \(r=332\) and \(r=461\) were the best, respectively. We therefore determined to set \(r=461\) in order to obtain the sparser centroid matrix. This means that approximately 53.3% of the all elements of the centroid matrix were estimated as zero. The number of clusters was set at 5, as in the example of the k-means clustering in Sect. 1.

The resulting centroid matrix is represented as a heatmap in Fig. 1. The elements estimated as zero are colored in white. It can be seen that, compared with the standard k-means clustering, the estimated centroid is sparse enough and the contrasts between clusters are clearer than in the k-means clustering solution. Based on the sparse centroid, each cluster can be interpreted as follows; the sensors classified into the first cluster show the lower values from approximately 2000–5000 ms and the higher values from 6500 ms to the end of recording, and this variation of sensor outputs is earlier by 1500 ms in the second cluster. The third cluster is characterized by the lower values around 6000 ms, which makes the cluster different from the other clusters. In the fourth cluster, the lower values and the higher values alternately appear except in the early phase of recording, while the sensor outputs are almost stable in the fifth cluster.

The centroids obtained by k-means are less sparse than the centroids for SPARK and the characteristics of clusters are unclear. As a measure of interpretability, Lorenzo-Seva (2003) proposed the index of simplicity called LS index in the context of factor analysis. The LS index ranges from 0 (least simple) to 1 (most simple) and the values LS index for the centroid matrices were 0.313 in the k-means and 0.590 in the SPARK, which indicates the sparsely estimated centroids are more simple and thus more interpretable compared with the existing method.

The sensor classified into each cluster are shown in Table 3. The first cluster is composed of the z-axis sensors on the right arm, while those on the left arm are classified into the third cluster. It indicates that the subject’s horizontal movement in the left and right arms are expressed in the first and the second clusters. The third cluster is composed of 10 sensors, the y/z-axis sensors on the left arm and the y-axis sensors on the leg. The x-axis sensor on all parts are classified into the fourth cluster, and refereeing the sparse centroids in Fig. 1 therefore indicate that the clear difference between the x-axis and the y-axis movement is observed around 6000 ms.

5 Concluding Remarks

In this article, we proposed a new procedure of clustering called SPARK, which produces a sparse centroid matrix The interpretation of the centroid matrix is easier compared with the ordinal k-means clustering by the sparsity constraint imposed on the centroid matrix. It is also possible to obtain such sparse centroid by adding a penalty term to the loss function of k-means clustering, as proposed by some authors. These procedures mainly aims to improve the robustness of clustering through the sparse estimation of centroid matrix. In SPARK, on the other hand, we rather focus on the interpretability of the resulting centroid matrix than robustness. The sparseness of the centroid matrix is therefore controlled by the number of zero elements in the centroid matrix, which is closely related to its interpretation. The results of the two real data examples indicate that the estimated sparse centroids surely facilitates to capture the characteristic of the clusters.

References

Hastie, T., Tibshirani, R., & Wainwright, M. (2015). Statistical learning with sparsity. CRC press.

Lichman, M. (2013). UCI machine learning repository. http://archive.ics.uci.edu/ml

Lorenzo-Seva, U. (2003). A factor simplicity index. Psychometrika, 68(1), 49–60.

MacQueen, J. (1967). Some methods for classification and analysis of multivariate observations. In Proceedings of the 5th Berkeley Symposium on Mathematical Statistics and Probability, 1, 281–297.

Sun, W., Wang, J., & Fang, Y. (2012). Regularized k-means clustering of high-dimensional data and its asymptotic consistency. Electronic Journal of Statistics, 6, 148–167.

Witten, D., & Tibshirani, R. (2010). A framework for feature selection in clustering. Journal of the American Statistical Association, 105(490), 713–726.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Yamashita, N., Adachi, K. (2018). SPARK: A New Clustering Algorithm for Obtaining Sparse and Interpretable Centroids. In: Wiberg, M., Culpepper, S., Janssen, R., González, J., Molenaar, D. (eds) Quantitative Psychology. IMPS 2017. Springer Proceedings in Mathematics & Statistics, vol 233. Springer, Cham. https://doi.org/10.1007/978-3-319-77249-3_34

Download citation

DOI: https://doi.org/10.1007/978-3-319-77249-3_34

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-77248-6

Online ISBN: 978-3-319-77249-3

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)