Abstract

In this work, we demonstrate the extension of quadrature approximations, built from conformal mapping of interpolatory rules, to sparse grid quadrature in the multidimensional setting. In one dimension, computation of an integral involving an analytic function using these transformed quadrature rules can improve the convergence rate by a factor approaching π∕2 versus classical interpolatory quadrature (Hale and Trefethen, SIAM J Numer Anal 46:930–948, 2008). For the computation of high-dimensional integrals with analytic integrands, we implement the transformed quadrature rules in the sparse grid setting, and we show that in certain settings, the convergence improvement can be exponential with growing dimension. Numerical examples demonstrate the benefits and drawbacks of the approach, as predicted by the theory.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

- Sparse Grid

- Sparse Grid Quadrature Rule

- Conformal Mapping

- Interpolatory Quadrature

- High-dimensional Integrals

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction and Background

Standard interpolatory quadrature methods, such as Gauss–Legendre and Clenshaw–Curtis, tend to have points which cluster near the endpoints of the domain. As seen in the well-known interpolation example of Runge, this can mitigate the spurious effects of the growth of the polynomial basis functions at the boundary. However, this clustering can be problematic and inefficient in some situations. Gauss–Legendre and Clenshaw–Curtis grids, with n quadrature points on [−1, 1], distribute asymptotically as \(\frac {1}{\pi \sqrt {1-x^2}}\) [33]. Hence these clustered grids may have a factor of π∕2 fewer points near the middle of the domain, compared with a uniform grid. This may have unintended negative effects, and the issue is compounded when considering integrals over high-dimensional domains.

For numerical integration of an analytic function in one dimension, the convergence of quadrature approximations based on orthogonal polynomial interpolants depends crucially on the size of the region of analyticity, which we denote by Σ. More specifically, they depend on ρ ≥ 1, the parameter yielding the largest Bernstein ellipse E ρ contained within the region of analyticity Σ. The Bernstein ellipse is defined as the open region in the complex plane bounded by the curve

This gives some intuition as to why the most stable quadrature rules place more nodes toward the boundary of the domain [−1, 1]; since the boundary of E ρ is close to {±1}, the analyticity requirement is weaker near the endpoints of the domain. More specifically, to be analytic in E ρ , the radius of the Taylor series of f at {±1} is only required to be ρ − 1∕ρ, while the radius of the Taylor series centered at 0 is required to be at least ρ + 1∕ρ.

On the other hand, the appearance of the Bernstein ellipse in the analysis is not tied fundamentally to the integrand, but only to the choice of polynomials as basis functions. Thus, we may consider other types of quadrature rules which still take advantage of the analyticity of the integrand. Using non-polynomial functions as a basis for the rule may improve the convergence rate of the approximation. Much research has gone into investigating ways to find the optimal quadrature rule for a function analytic in Σ, and to overcome the aforementioned “π∕2-effect”, including end-point correction methods [2, 15, 18], non-polynomial based approximation [4,5,6, 25, 34], and the transformation methods [7, 10, 13, 16, 17, 19, 24, 26, 30, 30] which map a given set of quadrature points to a less clustered set. In this paper, we consider the transformation approach, based on the concept of conformal mappings in the complex plane. Many such transformations have been considered in the literature, especially for improper integrals where the integrand has endpoint singularities, e.g., [11, 30]. Our interest here is in analytic functions which have singularities in the complex plane away from the endpoints of the interval. We consider the transformations from [13], which offer the following benefits: (1) practical and implementable maps; and (2) simple concepts leading to theorems which may precisely quantify their benefits in mitigating the effect of the endpoint clustering.

Our contribution to this line of research is to implement and analyze the application of the transformed rules to sparse grid quadratures in the high-dimensional setting. For high-dimensional integration over the cube [−1, 1]d, the endpoint clustering means that a simple tensor product quadrature rule may use (π∕2)d too many points. On the other hand, we show that for sparse Smolyak quadrature rules [27] based on tensorization of transformed one-dimensional quadrature, this effect may be mitigated to some degree. Even in the sparse grid setting, the use of mapped quadratures is not new. The paper [11] uses a similar method to generate new quadrature rules. In their setting, the goal is to compute integrals where the integrand has boundary singularities. In contrast, our objective is to analyze the rules for integrands which are analytic but may have singularities in the complex plane away from [−1, 1]d.

The remainder of the paper is outlined next. First, we introduce the one-dimensional transformed quadrature rules in Sect. 2, and in Sect. 2.2 describe how to use them in the construction of sparse grid quadrature rules for integration of multidimensional functions. In Sect. 3, we provide a brief analysis of the corresponding mapped method to show that the improvement in the convergence rate to a d-dimensional integral is (π∕2)1∕ξ(d), where ξ(d)−1 ≥ d, and provide numerical tests for the sparse grid transformed quadrature rules in Sect. 4. We conclude this effort with some remarks on the benefits and limitations of the method in Sect. 5.

2 Transformed Quadrature Rules

In this section, we introduce one-dimensional transformed quadrature rules, based on the conformal mappings described in [13], applied to classical polynomial interpolation based rules. These rules will be used as a foundation for sparse tensor product quadrature rules for computing high-dimensional integrals, introduced in later sections.

To begin, suppose we want to integrate a given function f over the domain [−1, 1], and assume this function admits an analytic extension in a region \([-1,1] \subset \varSigma \subset \mathbb {C}\). Given a set of points \(\{ x_j \}_{j=1}^n\), an interpolatory quadrature rule is defined from the Lagrange interpolant of f, which is the unique degree n − 1 polynomial matching f at each of the abcissas x j , i.e.,

The quadrature approximation of the integral of f, denoted Q n [f], is then defined by

with weights given explicitly as

Now, according to the Cauchy integral theorem, since f has an analytic extension, we can evaluate the integral along any (complex) path contained in Σ with endpoints {±1}. Next, let g be a conformal mapping satisfying the conditions:

According to the argument above, the integral can be rewritten as the path integral from − 1 to 1, with the path parameterized by the map g, i.e.,

Applying our original quadrature rule to the latter integral,

we obtain a new quadrature rule with transformed weights \(\{\tilde {c}_j\}_{j=1}^n\) and points \(\{\tilde {x}_j\}_{j=1}^n\).

Equation (5) provides the motivation for the choice of the conformal mapping g. Specifically, the Taylor series for f, centered at points x ∈ [−1, 1] which are close to the boundary, may have a radius which extends beyond the largest Bernstein ellipse in which f is analytic. We may then hope to find a g such that a Bernstein ellipse is conformally mapped onto the whole region where f is analytic, where classical convergence theory yields the convergence rate for (f ∘ g) ⋅ g′. In addition to (4), it is especially advantageous to have g map [−1, 1] onto itself, i.e.,

In this case, the transformed weights and points remain real-valued, and we avoid evaluations of f with complex inputs.

We now turn our attention to several specific conformal mappings which satisfy the conditions (4), along with the extra condition (6). For more details on the derivation and numerical implementation of the maps, see [13]. The first mapping we consider applies to functions which admit an analytic extension at every point on real line; in other words, functions which have only complex singularities. In this case, the natural transformations to consider are ones that conformally map the interior of a Bernstein ellipse (1) to a strip about the real line. Specifically, we define a map which takes the Bernstein ellipse with shape parameter ρ to the complex strip with half-width \(\frac 2{\pi } ( \rho - 1 )\), as shown in Fig. 1. We can do this through the use of Jacobi elliptic functions [1, 8]. First, fixing a value ρ > 1, we define the parameter 0 < m < 1 through

and the associated parameter K = K(m),

which is an incomplete Jacobi elliptic integral of the first kind [1]. Finally, we define the mapping in terms of the elliptic sine function sn(⋅;m):

We’ll refer to this map as the “strip map” in the following.

The mapping (7) takes the Bernstein ellipse E 1.4 (left) to a strip of half-width 2(1.4 − 1)∕π ≈ 0.255

According to (5), we also need to know the derivative of g 1, given by

with \(\omega (z) = 2K \sin ^{-1}(z) / \pi \). Here we have also made use of the elliptic cosine function, cn, and elliptic amplitude function, dn [1]. For our applications, we also require the values of \(g_1^{\prime }\) at the endpoints of the interval, which are given by

Again we refer the reader to [13] for additional details.

Another way to change the endpoint clustering, and transform the quadrature rule under a conformal map, is to use an appropriately normalized truncation of the power series for \(\sin ^{-1}(z)\). The map \(\frac {2}{\pi }\sin ^{-1}(z)\) perfectly eliminates the clustering of the Gauss–Legendre and Clenshaw–Curtis points, but since it has singularities at ±1, it is useless for our purposes. On the other hand, by considering a truncation of the power series

we define a more desirable mapping. To this end, for M ≥ 1, we define

with a constant c(M) ∈ (0, 1) appropriately chosen so that g 2(±1) = ±1. This mapping is much simpler to implement than the previous mapping. We will call this map the “pill map”, since it maps the Bernstein ellipse to a pill-shaped region about [−1, 1] with flatter sides. In Fig. 2, we plot the image of the ellipse E ρ with ρ = 1.4, under the mapping (9) with M = 4. The region on the right has almost flat sides, with width a little bigger than \(\frac 2{\pi } ( 1.4 - 1 ) \approx 0.255\).

The mapping (9), with M = 4, takes the Bernstein ellipse E 1.4 (left) to a pill-shaped region with sides of length ≈ 0.255

2.1 Standard One-Dimensional Quadrature Rules

Here we give a brief summary of some standard interpolatory-type quadrature rules, to which we will apply the mappings of the previous section. Only the nodes are discussed here, as the weights for each method will be defined according to (3). For an overview of the theory of interpolatory quadrature, see [33, Ch. 19].

The first quadrature rule is based on the extrema of the Gauss–Chebychev polynomials. For a given number of points n, these are given by:

If we choose the number of nodes n = n(l) to grow according to n(1) = 1, n(l) = 2l−1 + 1, l > 1, this generates a nested sequence known as the Clenshaw–Curtis nodes.

Another set of points of interest are the well-known Gaussian abscissa, which are the roots of orthogonal polynomials with respect to a given measure. Here we consider the sequence of Gauss–Legendre nodes, which consists of the roots of the sequence of polynomials orthogonal to the uniform measure on [−1, 1], i.e., the n roots of the polynomials

With the introduction of a weight into the integral from (2), other families of orthogonal polynomials can be used. The main advantage of Gauss points is their high degree of accuracy, i.e., the one-dimensional quadrature rules built from n Gauss points integrate exactly polynomials of degree 2n − 1.

Remark 1

Gauss–Legendre points do not form a nested sequence, which may lead to inefficiency in the high-dimensional quadrature setting. In fact, without nestedness of the one-dimensional sequence, the sparse grid rule described in the following section may not even be interpolatory. Even so, we only require the one-dimensional rule to be interpolatory to apply the conformal mapping theory in the multidimensional setting. We also remark that nested quadrature sequences based on the roots of orthogonal polynomials, the so-called Gauss–Patterson points, are also available, but we do not consider these types of rules herein.

The final set of nodes we consider are known as the Leja points. Leja points satisfy a recursive definition, that is, given a point x 1 ∈ [−1, 1], for n ≥ 2 define

where we typically take x 1 = 0. Of course, there may be several minimizers to (12), so for computational purposes, we simply choose the minimizer closest to the left endpoint. In the interpolation setting, Leja sequences are known to have good properties for approximation in high-dimensions [20], and there has been much research related to the stability properties of such nodes when used for Lagrange interpolation [14, 31]. The lack of symmetry of the sequence may not be ideal for all applications, and certain symmetric “odd Leja” constructions may be used in these cases [29]. On the other hand, the points here have the added benefit of being a nested sequence and grow one point at a time, and furthermore have asymptotic distribution which is that same as that of Gauss and Clenshaw–Curtis nodes [20].

2.2 Sparse Quadrature for High Dimensional Integrals

For the numerical approximation of high-dimensional integrals over product domains, it is natural to consider simple tensor products of one-dimensional quadrature rules. Unfortunately, these rules suffer from the curse of dimensionality, as the number of points required to accurately compute the integral grows exponentially with the underlying dimension of the integral; i.e., a rule using n points in each dimension requires n d points. For certain smooth integrands, we can mitigate this effect by considering sparse combinations of tensor products of these one-dimensional rules, i.e., sparse grid quadrature. It is known that sparse grid rules can asymptotically achieve approximately the same order of accuracy as full tensor product quadrature, but use only a fraction of the number of quadrature nodes [9, 22, 23, 27].

Rather than the one-dimensional integral from before, we let d > 1 be the dimension and define Γ := [−1, 1]d. In addition, by letting x = (x 1, …, x d ) be an arbitrary element of Γ, we consider the problem of approximating the integral

using transformed quadrature rules. To define the sparse grid rules, we first denote by {I p(l)}l≥1 a sequence of given one-dimensional quadrature operators using p(l) points. Here I p(l) may be a standard interpolatory quadrature Q p(l) from (2) or its conformally transformed version \(\widetilde {Q}_{p(l)}\) from (5). With I 0 := 0, define the difference operator

Then given a set of multiindices \(\varLambda _w \subset \mathbb {N}^d_{0}\), we define the sparse grid quadrature operator to be

where we refer to the natural number w as the level of the sparse grid rule, and N w is the total number of points in Γ used by the sparse grid. The choice of multiindex set Λ w may vary based on the problem at hand. We only require that it be downward closed, i.e., if l ∈ Λ w , then ν i ≤ l i for all i = 1, …, d implies ν ∈ Λ w . The index set may be anisotropic, i.e., dimension dependent, or if appropriate error indicators are defined, it may even be chosen adaptively. Some typical choices are given in Table 1, but for simplicity, we consider only standard isotropic Sparse Smolyak grids. For more information on anisotropic rules, see [21].

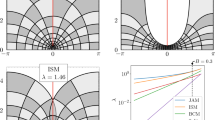

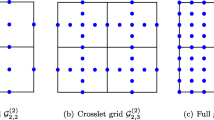

The effect of the conformal mapping on the placement of the nodes used by the sparse quadrature rule (14) is similar to the one-dimensional case. In Fig. 3, we have plotted the nodes of a two-dimensional Clenshaw–Curtis sparse grid with the transformation map (9), using ρ = 1.4, versus a traditional Clenshaw–Curtis sparse grid. Note how the clustering of the nodes toward the outer boundary of the cube is diminished.

3 Comparison of the Transformed Sparse Grid Quadrature Method

In this section we investigate the potential improvement in convergence for computation of high-dimensional integrals using the sparse grid quadrature method based on the transformed rules. The different mappings (7) and (9), since they have different properties, will be considered separately. Furthermore, the focus of this section will be on the transformation of Gauss–Legendre rules, though we remark that starting from a one-dimensional convergence result such as the following theorem, the rest of the analysis is similar for the Clenshaw–Curtis case. We begin by quoting the following one-dimensional result stated from [13], establishing the convergence of the transformed Gauss–Legendre rule for an analytic integrand.

Theorem 1

For some ρ > 1, let f be analytic and uniformly bounded by γ > 0 in the ellipse E ρ . Then for n ≥ 1, the Gauss–Legendre quadrature rule has the error bound

Now taking a specific region of analyticity and a given conformal map, we apply Theorem 1 to quantify the benefit of the transformation method. We start by considering functions analytic in the strip S ε of half width ε about the real line, and the Gauss–Legendre rule transformed under the map (7).

Theorem 2

([ 13 , Theorem 3.1]) Let f be analytic in a strip S ε of half width ε about the real line, and g 1 the conformal map (7) mapping \(E_{1 + \frac {\pi }2 \varepsilon } \rightarrow S_{\varepsilon }\) . Then for n ≥ 1, and any \(\tilde {\varepsilon } < \varepsilon \) , the transformed Gauss–Legendre quadrature rule has the error bound

where \(\gamma _1 = \sup _{s\in E_{1 + \frac {\pi }2 \tilde {\varepsilon }}} |f(g_1(s)) g_1^{\prime }(s)|\).

This theorem follows by an application of Theorem 1 to the integral of (f ∘ g)g′ using (5). We must take \(\tilde {\varepsilon } < \varepsilon \), since otherwise the value of γ 1 will be infinite due to the behavior of g′. Furthermore, since the closure of \(g(E_{1 + \frac {\pi }2 \tilde {\varepsilon }})\) is contained in the open set S ε , we have γ 1 < ∞ without assuming the boundedness of f. However, we do not lose much from this assumption, and this theorem shows that we can achieve savings of almost a factor of π∕2 for functions analytic in a strip S ε .

For the mapping (9), the results are somewhat more complicated, due to the fact that the properties of the map depend crucially on the chosen degree M of the truncation, and for a given M we may not be able to realize the full factor of π∕2. From a practical standpoint, this is not much worse than the case of the strip mapping (7), since full information about the analyticity of the integrand may not be available, and hence it may be difficult to tune the parameter of the mapping to the integral at hand. Thus, what we have in the case of the map (9) is a more precise result with all the parameters specified. The following result from [13] will apply to functions which are analytic in the ε-neighborhood of [−1, 1], denoted U ε . Then we have the following theorem.

Theorem 3

([ 13 , Theorem 6.1]) Let ε ≤ 0.8, and let f be analytic in a ε-neighborhood U ε of [−1, 1]. Let g 2 be the conformal map (9), truncated at degree M = 4. Then for n ≥ 1, the transformed Gauss–Legendre quadrature rule has the error bound

where \(\gamma _2 = \sup _{s\in E_{1 + 1.3\varepsilon }} |f(g_2(s)) g_2^{\prime }(s)| \).

From the one dimensional results of Theorems 2 and 3, for the maps (7) and (9), resp., we are able to fully quantify the benefits of the TQ rules applied to sparse grid quadrature in high dimensions. The following theorems give the expected sub-exponential convergence rate for a sparse grid quadrature approximation of an analytic integrand based on the Gauss–Legendre points. These results are in accord with other subexponential rates for sparse multidimensional polynomial approximation obtained in [3, 12, 22, 32]. Recall that we are considering only isotropic sparse Smolyak constructions, according to the last row in Table 1.

Theorem 4

Let f be analytic in \(\prod _{i=1}^d S_{\varepsilon }\) for some ε > 0, and let g 1 be the conformal mapping (7). Then for any \(\tilde {\varepsilon } < \varepsilon \) , the sparse quadrature (14) built from transformed Gauss–Legendre quadrature rules satisfies the following error bound in terms of the number of quadrature nodes:

where γ 1 is defined as in Theorem 2 , and

with the constant \(\zeta = 1 + \log (2)(1 + \log _2(1.5)) \approx 2.1\).

Theorem 5

For some 0 < ε ≤ 0.8, let f be analytic in \(\prod _{i=1}^d U_{\varepsilon }\) , and let g 2 be the conformal mapping (9) truncated at degree M = 4. Then the sparse quadrature (14) built from transformed Gauss–Legendre quadrature rules satisfies the following error bound in terms of the number of quadrature nodes:

with γ 2 as in Theorem 3 , and ξ(d) as in (19).

Sketch of Proof

From the one dimensional results of Theorems 2 and 3, resp., the proof of the results above follows from well-known sparse grid analysis techniques and estimates on the number of quadrature nodes [22]. Specifically, we may follow along the lines of the proof of [22, Theorem 3.19], with the one-dimensional convergence estimates [22, p. 2230] replaced by (16) and (17), resp, and noting that, e.g.,

The rest of the proof is an application of Lemmas 3.4 and 3.5 from the aforementioned [22], along with the estimate on the number of quadrature points given by Lemma 3.17. □

We remark again that it is not necessary to use the same ε in each dimension, but we make that choice for clarity of presentation. As mentioned in Sect. 2.2, in the case that the integrand f has dimension-dependent smoothness, anisotropic sparse grid methods are available.

We now make a few remarks on the improvements of Corollaries 4 and 5 over sparse grids based on traditional interpolatory quadrature methods. First, note that for functions f ∈ C(Γ) which admit an analytic extension in either \(\prod _{i=1}^d S_{\varepsilon }\) or \(\prod _{i=1}^d U_{\varepsilon }\), the largest (isotropic) polyellipse in which f is analytic has the shape parameter ρ = 1 + ε. Hence, the convergence rate of typical sparse grid Gauss–Legendre quadrature, using N abscissa, is

Thus, the improvement in convergence rate is multiplied exponentially in the sparse grid case, i.e., in the case of Corollary 4, the number of points required to reach a certain tolerance is reduced by a factor approaching \((\pi /2)^{\xi (d)^{-1}}\), with ξ as in (19). To see this, let N SGTQ and N SG be the necessary number of points for the right-hand sides of (18) and (21), respectively, to be less than a given tolerance. Then, we may calculate that

The constants are ignored in the calculation, though the transformed quadrature may have slightly improved constant versus the standard case. We also note that ξ(d)−1 ≥ d, so the improvement is exponential in the dimension, and that as ε →∞, i.e., for functions which are analytic in a large region containing [−1, 1], the improvement factor degrades to 1. In the case of the sparse grid quadrature approximation transformed by (9), we use (20), so the improvement is \(1.3^{\xi (d)^{-1}}\), as long as ε ≤ 0.8. As mentioned in the work [13], the factor of 1.3 is still less than π∕2 ≈ 1.57, but for smaller ε and large truncation parameter M this can improved to 3∕2; see [13, Theorem 6.2].

4 Numerical Tests of the Sparse Grid Transformed Quadrature Rules

In this section we test the sparse grid transformed quadrature rules on a number of multidimensional integrals, and compare the performance versus standard rules. The transformed rules we consider are based on the conformal mapping of Gauss–Legendre, Clenshaw–Curtis, and Leja quadrature nodes, which are describe in Sect. 2.1. We transform these rules using both of the conformal mappings (7) and (9), using the Matlab code provided in [13] to generate the one-dimensional quadrature sequences. The Tasmanian sparse grid toolkit [28, 29] is used for the implementation of the full sparse grid quadrature rule. For the Clenshaw–Curtis rule, we use a standard Smolyak sparse grid with doubling rule; see Table 1. For the Gauss–Legendre and Leja sequences, we use isotropic total degree index sets with linear point growth p(l) = l, l ≥ 1. For each of the rules, we will consider error versus the total number of sparse grid points, N w .

4.1 Comparison of Maps

For the first test, we compare the sparse grid methods with the transformed quadratures to traditional quadrature approximations for computing the integral of three test functions over the cube [−1, 1]3 in three dimensions. In each case, we compare the different maps (7) and (9) for the generation of the transformed one-dimensional quadrature from the Clenshaw–Curtis, Gauss–Legendre, and Leja rules. The chosen mapping parameters are ρ = 1.4 with (7) and truncation parameter M = 4 for (9).

In Fig. 4, we plot the results for approximating the integral over [−1, 1]3 of the function

This function has complex singularities at points \(\boldsymbol z\in \mathbb {C}^3\) where at least one coordinate \(z_j = \frac 1{\sqrt {5}}\text{i}\), and is hence analytic in the complex hyper-strip \(\prod _{i=1}^3 S_{1/\sqrt {5}}\). As expected, the quadrature generated according to the mapping (7) performs the best here, though the chosen parameter ρ = 1.4 is somewhat less than the optimal, since the value \(\frac 2{\pi } ( 1.4 - 1 ) \approx 0.255 < 1/\sqrt {5} \). Regardless, the transformed sparse grid approximations again perform better than their classical counterparts, gaining up to two orders of magnitude in the error for Clenshaw–Curtis and Gauss rules. Note that on the right-hand plot, the transformation (9) does not work well with the Leja rule. The results for the standard quadrature are repeated in each plot for ease of comparison.

Figure 5 again shows the results for approximating the integral of the function

over the cube [−1, 1]3. This function is entire, but grows rapidly in the complex hyperplane away from [−1, 1]3. The left-hand plot shows the performance of the sparse grid transformed quadratures using the transformation (7), while the right-hand plot uses (9). In each case, the sparse quadrature approximations using mapped rules outperform traditional sparse grid quadrature, and there is only a slight difference in the performance of the transformed rules corresponding to the different mappings.

Finally, in Fig. 6, we plot results for approximating the integral of the function

over the cube, [−1, 1]3. This function is entire and does not grow too quickly away from the unit cube in the complex hyperplane \(\mathbb {C}^3\). On the other hand, by fixing the parameters in the conformal mapping, the convergence rate of the transformed sparse grid rules is restricted by the analyticity of the composition (f ∘ g)g′. In other words, the conformal mapping technique cannot take full advantage of the analyticity of the function f. Thus, we see that the rules based on holomorphic mappings are inferior for computing the integral of this function, though the transformed Leja rule using (7) is somewhat competitive, at least up to the computed level.

4.2 Effect of Dimension

Next we investigate the effect of increasing the dimension d of the integral problem, and see whether the holomorphic transformation idea indeed decreases the computational cost with growing dimension. The test integral for this experiment is

In Table 2 we compare the number of points used to estimate the integral (26) in d = 2, 4, 6 dimensions, up to the given error tolerance. We use both the Clenshaw–Curtis and the Leja rules, with their corresponding transformed versions. We do not include the results for the Gauss–Legendre method, since as in Fig. 4, the GL method performs much worse than the others for this test function. Here we implement only the map (7) with ρ = 1.7, which maps the interior of the ellipse (1) to a strip of half-width \(\frac 1{\pi }(1.7 - 1) \approx 1/\sqrt {5}\). This integral has simple product structure, so we compare the computed sparse grid approximation to the “true” integral value computed to high precision. As expected, the sparse grid rules using transformed quadrature need far fewer points to compute the value of the integral up to a given tolerance, as compared with standard sparse grid rules. As the dimension increases, because of the doubling rule p from Table 1, the number of points grows rapidly from one level to the next. Thus, a certain grid may vastly undershoot or overshoot the optimal number of points needed to achieve a certain error. Furthermore, it may be the case that the convergence has not yet reached the asymptotic regime for such a large tolerance 10−2, and so we claim from Table 2 that the transformed sparse grid rules may work well even before the convergence is governed by the asymptotic theory.

5 Conclusions

In this work, we have demonstrated the application of the transformed quadrature rules of [13] to isotropic sparse grid quadrature in high dimensions, and showed that in certain situations we are able to speed up convergence of a transformed sparse approximation by a factor approaching \((\pi /2)^{\xi (d)^{-1}}\), where \(\xi (d)^{-1} \approx d \log d\). We applied the rules to several test integrals, and experimented with different conformal mappings g, and found that the sparse grid quadratures with conformally mapped rules outperformed the standard sparse grid rules based on one-dimensional interpolatory quadrature by a significant amount for several example integrands. For entire functions, or functions which are analytic and grow slowly in a large region around [−1, 1], the transformation method fails to beat standard quadrature rules, since the convergence of the transformed quadrature is dictated by the chosen mapping parameter. However, the transformed rules perform especially well for functions which are analytic only in a small neighborhood around [−1, 1], even if the mapping parameter is not tuned exactly to the region of analyticity.

References

M. Abramowitz, I.A. Stegun, Handbook of Mathematical Functions: with Formulas, Graphs, and Mathematical Tables, vol. 55 (Courier Corporation, North Chelmsford, 1964)

B.K. Alpert, Hybrid Gauss-trapezoidal quadrature rules. SIAM J. Sci. Comput. 20(5), 1551–1584 (1999)

J. Beck, F. Nobile, L. Tamellini, R. Tempone, Convergence of quasi-optimal stochastic galerkin methods for a class of pdes with random coefficients. Comput. Math. Appl. 67(4), 732–751 (2014)

G. Beylkin, K. Sandberg, Wave propagation using bases for bandlimited functions. Wave Motion 41(3), 263–291 (2005)

J.P. Boyd, Prolate spheroidal wavefunctions as an alternative to Chebyshev and Legendre polynomials for spectral element and pseudospectral algorithms. J. Comput. Phys. 199(2), 688–716 (2004)

Q.-Y. Chen, D. Gottlieb, J.S. Hesthaven, Spectral methods based on prolate spheroidal wave functions for hyperbolic PDEs. SIAM J. Numer. Anal. 43(5), 1912–1933 (2005)

P. Favati, G. Lotti, F. Romani, Bounds on the error of Fejér and Clenshaw–Curtis type quadrature for analytic functions. Appl. Math. Lett. 6(6), 3–8 (1993)

H.E. Fettis, Note on the computation of Jacobi’s nome and its inverse. Computing 4(3), 202–206 (1969)

T. Gerstner, M. Griebel, Numerical integration using sparse grids. Numer. Algoritm. 18(3–4), 209–232 (1998)

M. Götz, Optimal quadrature for analytic functions. J. Comput. Appl. Math. 137(1), 123–133 (2001)

M. Griebel, J. Oettershagen, Dimension-adaptive sparse grid quadrature for integrals with boundary singularities, in Sparse Grids and Applications-Munich 2012 (Springer, Cham, 2014), pp. 109–136

M. Griebel, J. Oettershagen, On tensor product approximation of analytic functions. J. Approx. Theory 207, 348–379 (2016)

N. Hale, L.N. Trefethen, New quadrature formulas from conformal maps. SIAM J. Numer. Anal. 46(2), 930–948 (2008)

P. Jantsch, C.G. Webster, G. Zhang, On the Lebesgue constant of weighted Leja points for Lagrange interpolation on unbounded domains. IMA J. Numer. Anal. (2018). https://doi.org/10.1093/imanum/dry002

S. Kapur, V. Rokhlin, High-order corrected trapezoidal quadrature rules for singular functions. SIAM J. Numer. Anal. 34(4), 1331–1356 (1997)

D. Kosloff, H. Tal-Ezer, Modified Chebyshev pseudospectral method with O(N −1) time step restriction. J. Comput. Phys. 104, 457–469 (1993)

M. Kowalski, A.G. Werschulz, H. Woźniakowski, Is Gauss quadrature optimal for analytic functions? Numer. Math. 47(1), 89–98 (1985)

J. Ma, V. Rokhlin, S. Wandzura, Generalized Gaussian quadrature rules for systems of arbitrary functions. SIAM J. Numer. Anal. 33(3), 971–996 (1996)

M. Mori, An IMT-type double exponential formula for numerical integration. Publ. Res. Inst. Math. Sci. 14(3), 713–729 (1978)

A. Narayan, J.D. Jakeman, Adaptive Leja sparse grid constructions for stochastic collocation and high-dimensional approximation. SIAM J. Sci. Comput. 36(6), A2952–A2983 (2014)

F. Nobile, R. Tempone, C.G. Webster, An anisotropic sparse grid stochastic collocation method for partial differential equations with random input data. SIAM J. Numer. Anal. 46(5), 2411–2442 (2008)

F. Nobile, R. Tempone, C.G. Webster, A sparse grid stochastic collocation method for partial differential equations with random input data. SIAM J. Numer. Anal. 46(5), 2309–2345 (2008)

E. Novak, K. Ritter, High dimensional integration of smooth functions over cubes. Numer. Math. 75(1), 79–97 (1996)

K. Petras, Gaussian versus optimal integration of analytic functions. Constr. Approx. 14(2), 231–245 (1998)

D. Slepian, H.O. Pollak, Prolate spheroidal wave functions, Fourier analysis and uncertainty–I. Bell Labs Tech. J. 40(1), 43–63 (1961)

R.M. Slevinsky, S. Olver, On the use of conformal maps for the acceleration of convergence of the trapezoidal rule and sinc numerical methods. SIAM J. Sci. Comput. 37(2), A676–A700 (2015)

S. Smolyak, Quadrature and interpolation formulas for tensor products of certain classes of functions. Soviet Math. Dokl. 4, 240–243 (1963)

M. Stoyanov, User manual: Tasmanian sparse grids v4.0. Technical Report ORNL/TM-2015/596. Oak Ridge National Laboratory (2017). https://tasmanian.ornl.gov/manuals.html

M.K. Stoyanov, C.G. Webster, A dynamically adaptive sparse grids method for quasi-optimal interpolation of multidimensional functions. Comput. Math. Appl. 71(11), 2449–2465 (2016)

H. Takahasi, M. Mori, Quadrature formulas obtained by variable transformation. Numer. Math. 21(3), 206–219 (1973)

R. Taylor, V. Totik, Lebesgue constants for Leja points. IMA J. Numer. Anal. 30(2), 462–486 (2010)

H. Tran, C.G. Webster, G. Zhang, Analysis of quasi-optimal polynomial approximations for parameterized PDEs with deterministic and stochastic coefficients. Numer. Math. 137, 451–493 (2017)

L.N. Trefethen, Approximation Theory and Approximation Practice (SIAM, Philadelphia, 2013)

H. Xiao, V. Rokhlin, N. Yarvin, Prolate spheroidal wavefunctions, quadrature and interpolation. Inverse Prob. 17(4), 805–838 (2001)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Jantsch, P., Webster, C.G. (2018). Sparse Grid Quadrature Rules Based on Conformal Mappings. In: Garcke, J., Pflüger, D., Webster, C., Zhang, G. (eds) Sparse Grids and Applications - Miami 2016. Lecture Notes in Computational Science and Engineering, vol 123. Springer, Cham. https://doi.org/10.1007/978-3-319-75426-0_6

Download citation

DOI: https://doi.org/10.1007/978-3-319-75426-0_6

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-75425-3

Online ISBN: 978-3-319-75426-0

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)