Abstract

Recent progress in electronics has allowed the construction of affordable mobile robots. This opens many new opportunities, in particular in the context of collective robotics. However, while several algorithms in this field require global localization, this capability is not yet available in low-cost robots without external electronics. In this paper, we propose a solution to this problem, using only approximate dead-reckoning and infrared sensors measuring the grayscale intensity of a known visual pattern on the ground. Our approach builds on a recursive Bayesian filter, of which we demonstrate two implementations: a dense Markov Localization and a particle-based Monte Carlo Localization. We show that both implementations allow accurate localization on a large variety of patterns, from pseudo-random black and white matrices to grayscale images. We provide a theoretical estimate and an empirical validation of the necessary traveled distance for convergence. We demonstrate the real-time localization of a Thymio II robot. These results show that our system solves the problem of absolute localization of inexpensive robots. This provides a solid base on which to build navigation or behavioral algorithms.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

1 Introduction

Driven by consumer products, the technologies of electronics, motor and battery have made tremendous progress in the last decades. They are now widely available at prices which make affordable mobile robots a reality. This opens many new opportunities, in particular in the contexts of collective robotics.

Collective and swarm robotics focus on the scalability of systems when the number of robots increases. In that context, global localization is a challenge, whose solution depends on the environment the robots evolve in. A common approach is to measure the distance and orientation between robots [12], but this approach relies on beacons to provide absolute measurements. Yet, several state of the art algorithms require global positioning [1]. In experimental work, it is often provided by an external aid such as a visual tracker, bringing a non-scalable single point of failure into the system, and breaking the distributed aspect. Therefore, there is the need for a distributed and affordable global localization system for collective robotics experiments.

This paper answers this need by providing a distributed system using only approximate dead-reckoning and inexpensive infrared sensors measuring the grayscale intensity of the ground, without knowing the initial pose of the robots. As sensors are mounted on the robot, the localization is a local operation, which makes the approach scalable. Our solution is based on the classical Markov [6] and Monte Carlo [2] Localization frameworks that can be seen as respectively a dense and a sampling-based implementation of a recursive Bayesian filter. While these approaches are commonly used in robots with extensive sensing capabilities such as laser scanners, their implementation on extremely low bandwidth sensors is novel, and raises specific questions, such as which distance the robot must travel for the localization to converge.

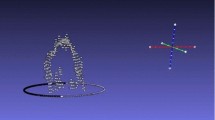

In this paper, we deploy these algorithms on the Thymio II differential-wheeled mobile robot. The robot reads the intensity of the ground using two infrared sensors (Fig. 1, middle, circled red) and estimates the speed of its wheels by measuring the back electromotive force, which is less precise than encoder-based methods. For evaluation purposes, a Vicon tracking system (http://www.vicon.com/) provides the ground-truth pose (Fig. 1, right). Our first contribution is a predictive model of the necessary distance to travel to localize the robot. Our second contribution is a detailed analysis of the performances of the two implementations in comparison with ground-truth data. Our last contribution is an experimental validation of online, real-time localization (Fig. 1, left). The source code and all experimental data are available onlineFootnote 1 and the system can be seen running in a video.Footnote 2

2 Related Work

The main challenges of solving the localization problem on affordable mobile robots are the constraints on the environment and the limited information content that inexpensive sensors can typically provide.

The work of Kurazume and Nagata [8] first raised the idea of performing inexpensive localization through the cooperative positioning of multiple robots. Prorok et al. [12] is a modern work representative of this approach. These authors have used an infrared-based range and bearing hardware along with a distributed Monte Carlo Localization approach, allowing a group of robots to localize down to a precision of 20 cm. However, this methods would require fixed robots acting as beacons to provide absolute positioning. Moreover, both radio and infrared-based range and bearing systems require complex electronics. Finally, when the cost of all robots is added, the system is far from cheap.

A cheaper approach is to use walls around an experimental arena to localize. For example, Zug et al. [13] have developed an algorithm using an array of triangulation-based infrared distance sensors. A Kalman filter algorithm is applied to localize the robot within a 2 by 1 m box. No experimental result is provided, but a simulation shows the estimated error to be within 2 cm. Dias and Ventura [3] used two horizontal lines from a vga camera to read barcodes on the walls of an arena and localize an e-puck robot. Their system employs an Extended Kalman Filter (EKF) algorithm and reaches a precision of 1 cm and 5\(^\circ \). However, these systems require no obstacles between the robot and the walls, and thus are not scalable to a large number of robots.

This problem can be alleviated by detecting a known pattern visible on the ceiling and fusing this information with odometry. This approach was proven effective for localization using high-quality cameras [2]. Focusing on a low cost, Gutmann et al. [7] have developed a system using only 3 or 4 light detectors, able to localize a mobile robot in a room with an accuracy of 10 cm. However, this method requires a controlled ceiling arrangement and empty space over the robots.

Another approach is to exploit the ground for localization. Park and Hashimoto [11] proposed to localize a mobile robot over a ground equipped with randomly distributed passive rfid tags. The average localization error of this method is lower than 10 cm. However, this approach requires the ground to be equipped with tags which can become expensive and tedious to deploy when the area grows.

The system proposed in this paper builds on the idea of using information from the ground for absolute localization, with performance comparable with related work. To the best of our knowledge, existing solutions for localization of multi-robot systems either rely on an expensive adaptation of the environment using markers or beacons, or necessitate the embedding of expensive sensing capabilities on every robot. In contrast, our system is affordable since it only requires a low number of inexpensive infrared sensors onboard the robot (which are typically used as cliff detectors), and a simple grayscale pattern on the ground that can be achieved using a printed poster.

3 Model

The generic Bayesian filter used to estimate the pose of a robot in its environment uses the following variables:

-

\(X_{1:t}\) 2-D pose at times 1..t, consisting of x, y coordinates and an angle \(\theta \).

-

\(Z_{1:t}\) observations at times 1..t, consisting of the output of the sensors measuring the grayscale intensity of the ground.

-

\(U_{1:t}\) odometry at times 1..t, consisting of the left and right wheel speeds.

It is classically formulated as a recursive Bayesian filter with the following joint probability distribution:

This filter is based on a few assumptions. First it assumes that the current observation is independent on the past observations, the past states and odometry commands conditionally to the current state. It also features the Markov assumption: the next state is independent on former states, commands and observations conditionally to the previous state and the current command. Finally, it assumes that the actions are independent from the past. All these assumptions are standard and can be found in Kalman filtering and other classical models.

This joint probability distribution allows to formulate the problem as the estimation of the pose \(X_t\) at time t given the observations \(Z_{1:t}\) and the commands \(U_{1:t}\):

This inference involves two distributions to be specified: the observation model \(p(Z_t | X_t)\) and the motion model \(p(X_t|X_{t-1}, U_t)\), whose parametrizations depend on the robot. In addition, we define a self-confidence metrics and outline the implementation.

Observation model. Our observation model \(p(Z_t | X_t) = \prod _{i=0,1}^{N_\mathrm {sensors}} p(Z_t^{i} | X_t)\) assumes all sensor noises to be independent, and the ground color to be in the range of [0, 1] (0 being black and 1 being white). We take \(p(Z_t^{i} | X_t) \sim \mathscr {N}(v,\sigma _\mathrm {obs})\), for robot pose \(X_t\), sensor i, and a corresponding ground intensity v according to the map. The parameter \(\sigma _\mathrm {obs}\) is selected based on the knowledge of the sensor. Thymio II has two sensors (Fig. 1, center). For binary (black and white) patterns, we chose \(\sigma _\mathrm {obs} = 0.5\). For grayscale images, based on measurement on a Thymio II, we set \(\sigma _\mathrm {obs}\) to 0.15.

Motion model. Based on the model of Eliazar et al. [4], we assume that the motion has a Gaussian error model, hence \(p(X_t~|~X_{t-1}, U_{t})\sim \mathscr {N}(\mu _t,\varSigma _t)\). The mean \(\mu _t\) is built by accumulating the estimated displacements by dead-reckoning between times \(t-1\) and t. Therefore, if \(\varDelta x_t\), \(\varDelta y_t\), \(\varDelta \theta _t\) are the displacement between \(t-1\) and t, expressed in the robot local frame at \(t-1\), \(\mu _t\) is:

where \(R(\theta )\) is the 2-D rotation matrix of angle \(\theta \). The \(3\times 3\) diagonal covariance matrix \(\varSigma _t\) is a function of the distance traveled, the amount of rotation, and two parameters \(\alpha _\mathrm {xy}\), \(\alpha _\theta \):

with \( \sigma _{\mathrm {xy},t} = \alpha _\mathrm {xy} \sqrt{\varDelta x_{t}^2 + \varDelta y_{t}^2}\).

To cope with the possibility of the robot being kidnapped and therefore its pose becoming unknown, a uniform distribution with a weight \(p_\mathrm {uniform}\) is added to \(X_t\). The parameters \(\alpha _\mathrm {xy}\), \(\alpha _\theta \) and \(p_\mathrm {uniform}\) are estimated using maximum likelihood (Sect. 5).

Implementations. We compare two variants of this filter. In Markov Localization, the distributions are discretized using regular grids [6]. For our experiments, the x, y cell resolution is 1 cm and the angular resolution varies from 20\(^\circ \) (18 discretization steps for 360\(^\circ \)) to 5\(^\circ \) (72 discretization steps for 360\(^\circ \)). The estimated pose is the coordinates of the cell of maximum probability. In Monte Carlo Localization [2], the distributions are represented using samples in a particle filter. In order to extract a single pose estimate out of the many particles, we find a maximum density area in which we average the particles. It is similar to a 1-point ransac scheme [5]. We implemented both algorithms in Python with some Cython (http://www.cython.org) procedures used for time-critical inner loops. The algorithms run on an embedded computer or laptop (Fig. 1, left). Thymio II is programmed through the aseba framework [10], which connects to Python using D-Bus.

Self confidence. We define a self-confidence term that corresponds to the ratio of the probability mass of \(p(X_t)\) that is within a distance \(d_\mathrm {xy}\) and an angle difference \(d_\theta \) to the estimated pose. In our experiments, we use \(d_\mathrm {xy} = 3\) cm and \(d_\theta = 10^\circ \).

4 Theoretical Analysis of Convergence

One can estimate the time required for the robot to localize itself in a given space, by comparing the information needed and the information gained while traveling.

Information need. For the Markov Localization approach, there is a given number of discrete cells. The amount of information needed to unambiguously specify one among them all is \(\mathrm {H}_\mathrm {loc} = \log _2(N_\mathrm {cells})\), with \(N_\mathrm {cells}\) the number of cells.

Information gain. We can estimate the information gain at each time step. Let us consider the case of a binary map and a binary sensor. This sensor ideally yields 1 bit of information per measurement. In practice, there is a loss in information due to the sensor noise, characterized by the \(p_\mathrm {correct}\) probability of the sensor to be correct:

where \(\mathrm {H}_\mathrm {b}\) is the binary entropy function: \(\mathrm {H}_\mathrm {b}(p) = -p\log _2(p) - (1-p)\log _2(1-p)\).

We also need to take into account that the sensor measurements are not completely independent. For example, when the robot is not moving, it always observes the same place and thus cannot really gain additional information besides being sure of the intensity of the current pixel. In a discretized world, we thus need to estimate the probability of having changed cell in order to observe something new, which depends on the distance traveled and the size of the cells. This problem is equivalent to the Buffon-Laplace needle problem of finding the probability for a needle thrown randomly on a grid to actually intersect the gridFootnote 3 [9]. In our case, the probability of changing cell is given by:

with d the distance traveled and h the size of the cells.

We can then compute the conditional entropy for two successive ideal binary measurements \(O_{t-1}\) and \(O_t\) separated by d based on the conditional probability. There are two cases: either the robot has not moved enough to change cell (with probability \(1 - p_\mathrm {diff}\)) and the new observation is the same as the old, or the robot has changed cell (with probability \(p_\mathrm {diff}\)) and the new observation has the same probability to be the same or the opposite of the old. This can be summarized by the following conditional probability distribution (b/w \(=\) black/white):

After rearranging the terms of the conditional entropy, the loss of information due to redundancy in the traveled distance is:

There is also redundancy between several sensors placed on the same robot. The probability that they see the same cell based on the distance between them is exactly the same as the probability of a sensor to see the same cell after a displacement of the same distance. The information loss due to the redundancy from the sensor placement is noted \(\mathrm {H}_\mathrm {sensors}\) and follows the same formula as \(\mathrm {H}_\mathrm {loss,d}\), but with d being the distance between the two sensors in Eq. 6.

Finally, we can approximate the information that our robot gathers at each time step by assuming that the trajectory of the robot does not loop (no redundancy between distant time steps) and that the trajectories of the sensors are independent. The information gathered in a time step is then:

This formula also ignores the uncertainty in the robot motion. With these assumptions, it is an upper bound on the average information gain.

Outlook. Faster localization can be achieved by moving at a greater speed to reduce redundancy in the successive measurements, with proportional increase in sampling frequency. If designing a new robot, better sensors would reduce cross-over noise. Setting the sensors apart would also reduce the redundancy between their information but, for our specific grid size, they are sufficiently separated in Thymio II.

5 Empirical Analysis of Performance

To evaluate the performance of our localization algorithms, we remotely controlled the robot and recorded datasets covering all possible robot motions:

-

trajectory 1 and 2: The robot alternates forward and backward movements with rotations on spot, at a speed of 3–5 cm/s.

-

linear trajectory: The robot simply goes straight ahead along the x-axis of the map, at a speed of 3–5 cm/s.

-

trajectory with kidnapping: The robot alternates phases of forward movement, at a speed of 15 cm/s, and turning on spot. To test the algorithm’s ability of recovering from catastrophic localization failures, we perform “robot kidnapping” by relocating the robot to another part of the map every minute.

The robot moves on a 150 \(\times \) 150 cm ground pattern containing 50 \(\times \) 50 cells of 3 \(\times \) 3 cm, each randomly black or white (Fig. 1, right). The robot is connected to ros to synchronize its sensor values and odometry information with ground-truth data from Vicon, sampled at a period of 0.3 s. We chose this period so that with basic trajectories, at maximum speed the robot travels approximately half the length of one cell between every sample.

Parameter estimation. We estimated the noise parameters of the motion model using maximum likelihood, considering the error between the ground truth and the odometry data in the local frame between two time steps. Using trajectory 1 and trajectory 2, we found the values for \(\alpha _\mathrm {xy}\) and \(\alpha _\theta \) to be in the order of 0.1. Similarly, using trajectory with kidnapping, we also found the value for \(p_\mathrm {uniform}\) to be in the order of 0.1.

Basic localization. Figure 2 shows the error in position and orientation for the first two trajectories. In these plots, distance traveled represents the cumulative distance traveled by the center point between the two ground sensors of the robot.

For the Markov Localization approach, all discretization resolutions allow the robot to localize down to a precision of 3 cm and 5\(^\circ \). However, in trajectory 1, the resolution of 18 discretization steps is not enough to keep tracking the orientation at a distance traveled of 50 and 80 cm. These both correspond to the robot rotating on spot. Finer discretizations do not exhibit this problem, they are more robust and have similar precision. Therefore, we see that an angular discretization of 36 (10\(^\circ \) resolution) is sufficient to provide accurate tracking. In trajectory 2, we see that an angular discretization of 54 allows for a better angular precision than 36, but 72 does not improve over 54. All discretizations provide equal position precision.

For the Monte Carlo Localization approach, we see that on trajectory 1, the robot localizes already with 50 k particles, but in twice the distance it takes with 100 k particles. Increasing the number of particles beyond this value only marginally decreases localization time. While 50 k particles are sufficient to localize on this run on average, in some trials, the robot loses orientation when it turns on spot. On trajectory 2, using 50 k particles is not enough to localize the robot. Increasing this number to 100 k leads to a good localization, except after the robot has traveled 130 cm. This corresponds to a long moment during which the robot rotates on spot, leading to less information acquisition, and therefore degraded performances.

Overall, both approaches have similar accuracy. When angular precision is critical, the Monte Carlo Localization approach might achieve better performance, as the Markov Localization approach is limited in precision by its angular discretization.

Distance to converge. Figure 3 shows the error in position for 5 different starting points in each of the first two trajectories, in which the robot moves at a speed of 3–5 cm/s. We see that, with the Markov Localization approach, the correct pose is found after about 20 cm. There are also outliers: this happens when the robot is turning on spot, in which case there is not enough information to localize the robot. With 400 k particles, the Monte Carlo Localization approach also converges after about 20 cm. Decreasing the number of particles quickly increases the distance needed for convergence, reaching 60 cm for 100 k particles. Using only 50 k particles, some trajectory segments fail to converge within 80 cm length.

It is interesting to compare these distances with theoretical estimates (see Sect. 4). One difficulty is that the theoretical model specifies the probability \(p_\mathrm {correct}\) of the sensor to be correct. In our observation model, instead, we specify \(\sigma _\mathrm {obs}\) the noise of the sensor. Therefore, we propose to compute \(p_\mathrm {correct}\) from \(\sigma _\mathrm {obs}\) by considering a sensor positioned over a black ground, and by assuming that all values below a threshold of 0.5 (0 being black and 1 being white) are correctly read:

In these runs, we have assumed \(\sigma _\mathrm {obs} = 0.5\), leading to \(p_\mathrm {correct}=0.84\) (Eq. 10) and \(\mathrm {H}_\mathrm {noise}=0.63\) (Eq. 5). Our robot moves at 3 cm/s with a time step of 0.3 s on a grid of 3 cm \(\times \)3 cm cells in which the color is known to be similar; this yields \(\mathrm {H}_\mathrm {loss,d}=0.33\) (Eq. 8). Moreover, the sensors are 2.2 cm apart, which yields \(\mathrm {H}_\mathrm {sensors}=0.041\). As such the robot gathers on average at most 0.036 bit per time step, or 0.040 bit/cm (Eq. 9). With a 150 cm \(\times \) 150 cm environment discretized with cells of 1 cm and 5\(^\circ \) angle, the amount of information needed for the localization is \(\mathrm {H}_\mathrm {loc}=20.6\). This means that, on average, the robot should not be localized before traveling around 520 cm.Footnote 4 This distance is far larger than the observed one of about 20 cm. The reason is that using \(\sigma _\mathrm {obs} = 0.5\) for the binary case was an arbitrary choice and therefore the value of \(p_\mathrm {correct}\) does not correspond to the reality.

Computing the value of \(p_\mathrm {correct}\) to match the observed convergence distance by inverting the computation, we find 0.97, corresponding to \(\sigma _\mathrm {obs} = 0.26\). If we consider \(\sigma _\mathrm {obs} = 0.15\) as measured on grayscale images, then \(p_\mathrm {correct} = 0.99957\). This leads to a minimum theoretical localization distance of about 14.3 cm. This value is slightly lower than our experimental results, which makes sense as it is a lower bound. Moreover, this lower bound would be attained with a perfect filter observing a perfect pattern. Our filter is not perfect, because we have run it with an overestimated \(\sigma _\mathrm {obs}\) of 0.5. Nevertheless, the filter works well, showing that it degrades gracefully with respect to imprecision in its parameters. This is important in practice, as users might not be able to provide extremely precise parameters.

Effect of map size. Figure 4 shows the effect of reducing the map size. The robot runs linearly on one quarter of the map, while the Markov Localization is performed on the whole map, half of it, and a quarter of it. We see that reducing the map size does reduce the distance traveled necessary to converge, in accordance to the theory, because less information have to be acquired to reduce the uncertainty of the pose.

The error between the estimation by the localization algorithm and the ground truth, and the self confidence of the algorithm, on the run with kidnapping. For Monte Carlo Localization, the solid lines show the median over 10 trials, while the light dots show the individual trials. The gray areas show the time during the robot is being held in the air at the occasion of kidnapping

Robot kidnapping. Figure 5 shows the error in position, orientation and the self confidence for the run with kidnapping. In this run, the robot is kidnapped twice, after having traveled 550 and 1000 cm. It takes the robot approximately 100 cm to re-localize, and does so successfully with both Markov and Monte Carlo Localization approaches. This difference of distance with previous runs is mostly due to the speed of the robot, which is about 5 times faster. With the Markov Localization approach, all discretization resolutions are approximately equivalent in position performance, but 18 leads to a lower orientation precision, as well as to a lower self confidence, due to the large discretization step. Other resolutions have a confidence of about 0.5 when the robot is localized, and this value drops below 0.1 after kidnapping, clearly showing that the algorithm is able to assess its status of being lost.

With the Monte Carlo Localization approach, the robot localizes most of the time with 100 k particles, and always with 200 k particles or more. With 50 k particles, the robot eventually localizes, but this might take more than 2 m of traveled distance. We see that the self confidence increases with more particles, and, similarly to the Markov Localization approach, drops after kidnapping. This confirms the effectiveness of the self confidence measure.

Computational cost. Table 1 shows the execution duration of one step for the two algorithms with different parameters. These data were measured on a Lenovo laptop T450s with an Intel Core i7 5600U processor running at 2.6 GHz, and are averages over the two first trajectories. We see that with the Markov Localization approach, the duration scales linearly with the number of discretization angles. With the Monte Carlo Localization approach, the scaling is linear but amortized (50 k particles is not twice faster as 100 k). This is due to the selection of pose estimate, which uses a ransac approach and is therefore independent of the number of particles. For similar localization accuracy, the Monte Carlo Localization approach is slower than the Markov Localization approach, and therefore we suggest to use the former with an angular discretization of 36 in practical applications. However, the Monte Carlo Localization approach might be preferred if a high angular precision is required, but at least 100 k particles are necessary for proper localization.

6 Real-Time Localization

Figure 6 shows the performance of the online, real-time localization of a Wireless Thymio II (Fig. 1, left). We tested different grayscale images of 59 \(\times \) 42 cm, printed on A2 paper sheets with a resolution of 1 pixel/cm. We used Markov Localization with an angular discretization of 36 and a spatial resolution of 1 cm; the localization is performed every 0.4 s. The localization algorithm runs on a Lenovo laptop X1 3rd generation with an Intel Core i7-5500U processor running at 2.4 GHz, at a cpu load of approximately 80%. The algorithm localizes the robot globally on all images. The images with more contrast lead to a more robust and faster localization, while the ones with lower contrast lead to more imprecise trajectories. In Fig. 6, we see that the parts of trajectories in red are less precise than the ones in green. This shows that the self confidence measure is effective in assessing the quality of the localization.

The image, estimated trajectory and self confidence for different 59 \(\times \) 42 cm grayscale patterns printed on A2 papers with a resolution of 1 pixel/cm. The robot is remotely controlled to draw a 8-shape figure, with similar motor commands for each image. Markov Localization with 36 discretization angles used. The color of the lines vary from red (confidence 0) to green (confidence 1). Trajectories are plotted starting from a confidence level of 0.2

Embedded use. The runtime cost is dominated by the motion model, as it involves convoluting a small window for every cell of the probability space. With the current parameters, this leads to approximately 250 floating-point multiplications per cell. For comparison, VideoCore® IV, the built-in gpu in the Raspberry Pi 3 (costing €33), has a peak processing power of 28 gflops. Assuming that, due to limitations in bandwidth and dependencies between data, it can only be used at 10% of its peak floating point throughput, it could run a gpu version of our algorithm at 10 Hz on a map of 1.8 by 1.8 m with a resolution of 1 cm. Therefore, our approach can truely be used for distributed collective experiments with affordable robots.

Scalability. The computational speed of the system and the necessary distance to converge scale linearly with the size of the localized area. Also, a prolonged use of the robot might wear off the ground, slightly shifting the grayscale intensities and jeopardizing the localization. Nevertheless, modern printing options allow to use strong supports that only degrade slowly with time. Therefore, our system can be helpful for conducting collective experiments in a laboratory environment.

Optimality. An interesting question is what is an optimal pattern. However, this question has two sides. On the one hand, a pattern could be optimal in term of quantity of information, allowing the robot to localize as fast as possible. In that case, a white-noise pattern would be ideal. On the other hand, if the robot has to interact with humans, or only be monitored by humans, such a pattern would be highly disturbing. Our experimental results with paintings and drawings show that, even with a pattern having a lot of regularities and symmetries, our system is still able to localize. Therefore, we believe it is usable with natural patterns such as photographies, drawings and grayscale schematics.

7 Conclusion

In this paper, we have implemented and empirically evaluated Markov- and Monte Carlo-based approaches for localizing mobile robots on a known ground visual pattern. Each robot requires only inexpensive infrared sensors and approximate odometry information. We have shown that both approaches allow successful localization without knowing the initial pose of the robot, and that their performances and computational requirements are of a similar order of magnitude. Real-time localization was successful with a large variety of A2 grayscale images, using the Markov Localization approach with 36 discretization steps for angle. Should larger patterns be desired, the code could be further optimized by implementing it in the gpu, allowing it to run on inexpensive boards such as the Raspberry Pi.

In addition, we have outlined, and empirically validated, a method to estimate the localization performance in function of the sensor configuration. This method provides a guide for taking decisions about the placement of sensors in a future robot design: localization performance can be improved by placing the sensors far apart on a line perpendicular to the direction of movement of the robot; moreover, more sensors allow for collecting more information, if they are separated by the size of the smallest visual structure in the map.

These contributions to the state of the art enable absolute positioning of inexpensive mobile robots costing in the €100 range. In the context of distributed collective robotics, they provide a solid base to build navigation or behavioral algorithms.

Notes

- 1.

- 2.

- 3.

The needle is the segment joining the start and end points of the robot movement and the grid is the borders of the cells.

- 4.

References

Breitenmoser, A., Martinoli, A.: On combining multi-robot coverage and reciprocal collision avoidance. In: Distributed Autonomous Robotic Systems, pp. 49–64. Springer (2016)

Dellaert, F., Fox, D., Burgard, W., Thrun, S.: Monte Carlo localization for mobile robots. In: International Conference on Robotics and Automation (ICRA), pp. 1322–1328. IEEE Press (1999)

Dias, D., Ventura, R.: Absolute localization for low capability robots in structured environments using barcode landmarks. J. Autom. Mob. Robot. Intell. Syst. 7(1), 28–34 (2013)

Eliazar, A.I., Parr, R.: Learning probabilistic motion models for mobile robots. In: Twenty-first International Conference on Machine Learning, pp. 32–39. ACM (2004). https://doi.org/10.1145/1015330.1015413

Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981). https://doi.org/10.1145/358669.358692

Fox, D., Burgard, W., Thrun, S.: Markov localization for mobile robots in dynamic environments. J. Artif. Intell. Res. 11, 391–427 (1999). https://doi.org/10.1613/jair.616

Gutmann, J.S., Fong, P., Chiu, L., Munich, M.: Challenges of designing a low-cost indoor localization system using active beacons. In: International Conference on Technologies for Practical Robot Applications (TePRA), pp. 1–6. IEEE Press (2013). https://doi.org/10.1109/TePRA.2013.6556348

Kurazume, R.Y., Nagata, S., Hirose, S.: Cooperative positioning with multiple robots. In: International Conference on Robotics and Automation (ICRA), pp. 1250–1257. IEEE Press (1994)

Laplace, P.S.: Théorie analytique des probabilités, 3rd rev. Veuve Courcier, Paris (1820)

Magnenat, S., Rétornaz, P., Bonani, M., Longchamp, V., Mondada, F.: ASEBA: a modular architecture for event-based control of complex robots. IEEE/ASME Trans. Mechatron. 16(2), 321–329 (2010). https://doi.org/10.1109/TMECH.2010.2042722

Park, S., Hashimoto, S.: An approach for mobile robot navigation under randomly distributed passive rfid environment. In: International Conference on Mechatronics (ICM), pp. 1–6. IEEE Press (2009)

Prorok, A., Bahr, A., Martinoli, A.: Low-cost collaborative localization for large-scale multi-robot systems. In: International Conference on Robotics and Automation (ICRA), pp. 4236–4241. IEEE Press (2012)

Zug, S., Steup, C., Dietrich, A., Brezhnyev, K.: Design and implementation of a small size robot localization system. In: International Symposium on Robotic and Sensors Environments (ROSE), pp. 25–30. IEEE Press (2011)

Acknowledgements

The authors thank Emmanuel Eckard and Mordechai Ben-Ari for insightful comments on the manuscript and Ramiz Morina for his drawings. This research was supported by the Swiss National Center of Competence in Research “Robotics”.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG

About this chapter

Cite this chapter

Wang, S., Colas, F., Liu, M., Mondada, F., Magnenat, S. (2018). Localization of Inexpensive Robots with Low-Bandwidth Sensors. In: Groß, R., et al. Distributed Autonomous Robotic Systems. Springer Proceedings in Advanced Robotics, vol 6. Springer, Cham. https://doi.org/10.1007/978-3-319-73008-0_38

Download citation

DOI: https://doi.org/10.1007/978-3-319-73008-0_38

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-73006-6

Online ISBN: 978-3-319-73008-0

eBook Packages: EngineeringEngineering (R0)