Abstract

Statistical distances, divergences, and similar quantities have a long history and play a fundamental role in statistics, machine learning and associated scientific disciplines. However, within the statistical literature, this extensive role has too often been played out behind the scenes, with other aspects of the statistical problems being viewed as more central, more interesting, or more important. The behind-the-scenes role of statistical distances shows up in estimation, where we often use estimators based on minimizing a distance, explicitly or implicitly, but rarely study how the properties of a distance determine the properties of the estimators. Distances are also prominent in goodness-of-fit, but the usual question we ask is “how powerful is this method against a set of interesting alternatives?” not “what aspect of the distance between the hypothetical model and the alternative are we measuring?”

Our focus is on describing the statistical properties of some of the distance measures we have found to be most important and most visible. We illustrate the robust nature of Neyman’s chi-squared and the non-robust nature of Pearson’s chi-squared statistics and discuss the concept of discretization robustness.

1 Introduction

Distance measures play a ubiquitous role in statistical theory and thinking. However, within the statistical literature this extensive role has too often been played out behind the scenes, with other aspects of the statistical problems being viewed as more central, more interesting, or more important.

The behind-the-scenes role of statistical distances shows up in estimation, where we often use estimators based on minimizing a distance, explicitly or implicitly, but rarely study how the properties of the distance determine the properties of the estimators. Distances are also prominent in goodness-of-fit (GOF), but the usual question we ask is “how powerful is our method against a set of interesting alternatives?” not “what aspects of the difference between the hypothetical model and the alternative are we measuring?”

How can we interpret a numerical value of a distance? In goodness-of-fit we learn about Kolmogorov-Smirnov and Cramér-von Mises distances, but how do these compare with each other? How can we improve their properties by looking at what statistical properties they are measuring?

Past interest in distance functions between statistical populations had a two-fold purpose. The first purpose was to prove existence theorems regarding some optimum solutions in the problem of statistical inference. Wald (1950) in his book on statistical decision functions gave numerous definitions of distance between two distributions which he primarily introduced for the purpose of creating decision functions. In this context, the choice of the distance function is not entirely arbitrary, but it is guided by the nature of the mathematical problem at hand.

Statistical distances are defined in a variety of ways, by comparing distribution functions, density functions or characteristic functions and moment generating functions. Further, there are discrete and continuous analogues of distances based on comparing density functions, where the word “density” is used to also indicate probability mass functions. Distances can also be constructed based on the divergence between a nonparametric probability density estimate and a parametric family of densities. Typical examples of distribution-based distances are the Kolmogorov-Smirnov and Cramér-von Mises distances. A separate class of distances is based upon comparing the empirical characteristic function with the theoretical characteristic function that corresponds, for example, to a family of models under study, or by comparing empirical and theoretical versions of moment generating functions.

In this paper we proceed to study in detail the properties of some statistical distances, and especially the properties of the class of chi-squared distances. We place emphasis on determining the sense in which we can offer meaningful interpretations of these distances as measures of statistical loss. Section 2 of the paper discusses the definition of a statistical distance in the discrete probability models context. Section 3 presents the class of chi-squared distances and their statistical interpretation, again in the context of discrete probability models. Section 3.3 discusses metric and other properties of the symmetric chi-squared distance. One of the key issues in the construction of model misspecification measures is that allowance should be made for the scale difference between observed data and a hypothesized continuous model distribution. To account for this difference in scale we need the distance measure to exhibit discretization robustness, a concept that is discussed in Sect. 4.1. To achieve discretization robustness we need sensitive distances, and this requirement dictates a balance of sensitivity and statistical noise. Various strategies that deal with this issue are discussed in the literature and we briefly discuss them in Sect. 4.1. A flexible class of distances that allows the user to adjust the noise/sensitivity trade-off is the class of kernel smoothed distances, on which we briefly remark in Sect. 4. Finally, Sect. 5 presents further discussion.

2 The Discrete Setting

Procedures based on minimizing the distance between two density functions express the idea that a fitted statistical model should summarize reasonably well the data and that assessment of the adequacy of the fitted model can be achieved by using the value of the distance between the data and the fitted model.

The essential idea of density-based minimum distance methods has been presented in the literature for quite some time, as evidenced by the method of minimum chi-squared (Neyman 1949). An extensive list of minimum chi-squared methods can be found in Berkson (1980). Matusita (1955) and Rao (1963) studied minimum Hellinger distance estimation in discrete models while Beran (1977) was the first to use the idea of minimum Hellinger distance in continuous models.

We begin within the discrete distribution framework so as to provide the clearest possible focus for our interpretations. Thus, let \(\mathcal {T}=\{0, 1, 2, \cdots , T\}\), where T is possibly infinite, be a discrete sample space. On this sample space we define a true probability density τ(t), as well as a family of densities \(\mathcal {M}=\{m_{\theta }(t): \theta \in \varTheta \}\), where Θ is the parameter space. Assume we have independent and identically distributed random variables \(X_{1}, X_{2}, \cdots , X_{n}\) producing the realizations \(x_{1}, x_{2}, \cdots , x_{n}\) from τ(⋅). We record the data as d(t) = n(t)/n, where n(t) is the number of observations in the sample with value equal to t.

Definition 1

We will say that ρ(τ, m) is a statistical distance between two probability distributions with densities τ, m if ρ(τ, m) ≥ 0, with equality if and only if τ and m are the same for all statistical purposes.

Note that we do not require symmetry or the triangle inequality, so that ρ(τ, m) is not formally a metric. This is not a drawback as well known distances, such as Kullback-Leibler, are not symmetric and do not satisfy the triangle inequality.

We can extend the definition of a distance between two densities to that of a distance between a density and a class of densities as follows.

Definition 2

Let \(\mathcal {M}\) be a given model class and τ be a probability density that does not belong in the model class \(\mathcal {M}\). Then, the distance between τ and \(\mathcal {M}\) is defined as

\[ \rho (\tau , \mathcal {M}) = \inf _{m \in \mathcal {M}} \rho (\tau , m), \]

whenever the infimum exists. Let \(m_{\mathrm {best}} \in \mathcal {M}\) be the best fitting model, then

\[ \rho (\tau , m_{\mathrm {best}}) = \rho (\tau , \mathcal {M}). \]

We interpret ρ(τ, m) or \(\rho (\tau , \mathcal {M})\) as measuring the “lack-of-fit” in the sense that larger values of ρ(τ, m) mean that the model element m is a worse fit to τ for our statistical purposes. Therefore, we will require ρ(τ, m) to indicate the worst mistake that we can make if we use m instead of τ. The precise meaning of this statement will be obvious in the case of the total variation distance, as we will see that the total variation distance measures the error, in probability, that is made when m is used instead of τ.

Lindsay (1994) studied the relationship between the concepts of efficiency and robustness for the class of f- or ϕ-divergences in the case of discrete probability models and defined the concept of Pearson residuals as follows.

Definition 3

For a pair of densities τ, m define the Pearson residual by

\[ \delta (t) = \frac {\tau (t)}{m(t)} - 1, \]

with range the interval [−1, ∞).

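This residual has been used by Lindsay (1994), Basu and Lindsay (1994), Markatou (2000, 2001), and Markatou et al. (1997, 1998) in investigating the robustness of the minimum disparity and weighted likelihood estimators respectively. It also appears in the definition of the class of power divergence measures defined by

\[ \rho (\tau , m) = \sum _{t} \tau (t)\, \frac {[1 + \delta (t)]^{\lambda } - 1}{\lambda (\lambda + 1)}, \]

where δ(t) is the Pearson residual and the cases λ = −1 and λ = 0 are understood as limits.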

For λ = −2, −1, −1/2, 0 and 1 one obtains the well-known Neyman’s chi-squared (divided by 2) distance, Kullback-Leibler divergence, twice-squared Hellinger distance, likelihood disparity and Pearson’s chi-squared (divided by 2) distance respectively. For additional details see Lindsay (1994) and Basu and Lindsay (1994).
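The special cases listed above can be checked numerically. The following is a minimal sketch, assuming the power divergence has the form \(\sum _{t} \tau (t) \{[1+\delta (t)]^{\lambda } - 1\}/\{\lambda (\lambda +1)\}\) with Pearson residual \(\delta (t) = \tau (t)/m(t) - 1\), and that both densities are strictly positive on a common finite support. The function names and the example densities are hypothetical.

```python
import math


def pearson_residuals(tau, m):
    """Pearson residuals delta(t) = tau(t) / m(t) - 1 on a common finite support."""
    return [t / q - 1.0 for t, q in zip(tau, m)]


def power_divergence(tau, m, lam):
    """Power divergence sum_t tau(t) * ((1 + delta(t))**lam - 1) / (lam * (lam + 1)).

    Assumes tau(t) > 0 and m(t) > 0 for every t. The cases lam = 0 and
    lam = -1 are evaluated as limits of the general expression.
    """
    if lam == 0:   # limit: sum_t tau(t) * log(tau(t) / m(t))
        return sum(t * math.log(t / q) for t, q in zip(tau, m))
    if lam == -1:  # limit: sum_t m(t) * log(m(t) / tau(t))
        return sum(q * math.log(q / t) for t, q in zip(tau, m))
    delta = pearson_residuals(tau, m)
    total = sum(t * ((1.0 + d) ** lam - 1.0) for t, d in zip(tau, delta))
    return total / (lam * (lam + 1.0))


# Illustrative densities (assumed, not taken from the text).
tau = [0.2, 0.5, 0.3]
m = [0.3, 0.4, 0.3]
for lam in (-2, -1, -0.5, 0, 1):
    print(lam, power_divergence(tau, m, lam))
```

With these conventions, λ = 1 returns half of \(\sum [\tau - m]^{2}/m\), λ = −2 returns half of \(\sum [\tau - m]^{2}/\tau \), and λ = −1/2 returns \(2\sum (\sqrt {\tau } - \sqrt {m})^{2}\).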

A special class of distance measures we are particularly interested in is the class of chi-squared measures. In what follows we discuss this class in detail.

3 Chi-Squared Distance Measures

We present the class of chi-squared disparities and discuss their properties. We offer loss function interpretations of the chi-squared measures and show that Pearson’s chi-squared is the supremum of squared Z-statistics while Neyman’s chi-squared is the supremum of squared t-statistics. We also show that the symmetric chi-squared is a metric and offer a testing interpretation for it.

We start with the definition of a generalized chi-squared distance between two densities τ, m.

Definition 4

Let τ(t), m(t) be two discrete probability distributions. Then, define the class of generalized chi-squared distances as

\[ \chi _{a}^{2}(\tau , m) = \sum _{t} \frac {[\tau (t) - m(t)]^{2}}{a(t)}, \]

where a(t) is a probability density function.

Notice that if we restrict ourselves to the multinomial setting and choose τ(t) = d(t) and a(t) = m(t), the resulting chi-squared distance is Pearson’s chi-squared statistic. Lindsay (1994) studied the robustness properties of a version of \(\chi _{a}^{2}(\tau , m)\) by taking a(t) = [τ(t) + m(t)]/2. The resulting distance is called symmetric chi-squared, and it is given as

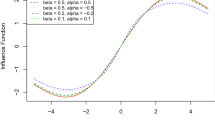

This chi-squared distance is symmetric because \(S^{2}(\tau , m) = S^{2}(m, \tau )\) and satisfies the triangle inequality. Thus, by definition it is a proper metric, and there is a strong dependence of the properties of the distance on the denominator a(t). In general we can use as a denominator \(a(t) = \alpha \tau (t) + \overline {\alpha }m(t)\), \(\overline {\alpha } = 1- \alpha \), α ∈ [0, 1]. The distance so defined is called the blended chi-squared distance (Lindsay 1994).
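The role of the denominator a(t) can be made concrete with a small sketch. It assumes the generalized chi-squared form \(\sum _{t} [\tau (t) - m(t)]^{2}/a(t)\) with a(t) > 0 on the support; the function names and the example densities are hypothetical.

```python
def chi_squared(tau, m, a):
    """Generalized chi-squared distance sum_t (tau(t) - m(t))**2 / a(t).

    Assumes a(t) > 0 wherever tau(t) or m(t) is positive.
    """
    return sum((t - q) ** 2 / w for t, q, w in zip(tau, m, a))


def blended_chi_squared(tau, m, alpha):
    """Blended chi-squared with a(t) = alpha * tau(t) + (1 - alpha) * m(t)."""
    a = [alpha * t + (1.0 - alpha) * q for t, q in zip(tau, m)]
    return chi_squared(tau, m, a)


# Illustrative densities (assumed, not taken from the text).
tau = [0.2, 0.5, 0.3]
m = [0.3, 0.4, 0.3]
pearson_type = blended_chi_squared(tau, m, 0.0)  # denominator a = m
neyman_type = blended_chi_squared(tau, m, 1.0)   # denominator a = tau
symmetric = blended_chi_squared(tau, m, 0.5)     # denominator a = (tau + m) / 2
print(pearson_type, neyman_type, symmetric)
```

Only the choice α = 1/2 is unchanged when τ and m are swapped; for any other α, swapping the arguments corresponds to replacing α by 1 − α.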

3.1 Loss Function Interpretation

We now discuss the loss function interpretation of the aforementioned class of distances.

Proposition 1

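Let τ, m be two discrete probabilities. Then

\[ \chi _{a}^{2}(\tau , m) = \sup _{h} \frac {\{ {\mathbb {E}}_{\tau }[h(X)] - {\mathbb {E}}_{m}[h(X)] \}^{2}}{\mathrm {Var}_{a}[h(X)]}, \]

where a(t) is a density function, and h(X) has finite second moment.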

Proof

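Let h be a function defined on the sample space. We can prove the above statement by looking at the equivalent problem

\[ \sup _{h} \{ {\mathbb {E}}_{\tau }[h(X)] - {\mathbb {E}}_{m}[h(X)] \}, \quad \text {subject to } \mathrm {Var}_{a}[h(X)] = 1. \]

Note that the transformation from the original problem to the simpler problem stated above is without loss of generality because the first problem is scale invariant, that is, the functions \(\widehat {h}\) and \(c\widehat {h}\) where c is a constant give exactly the same values. In addition, we have location invariance in that h(X) and h(X) + c give again the same values, and symmetry requires us to solve

\[ \sup _{h} \sum _{t} h(t) [\tau (t) - m(t)], \quad \text {subject to } \sum _{t} h^{2}(t) a(t) = 1. \]

To solve this linear problem with its quadratic constraint we use Lagrange multipliers. The Lagrangian is given as

\[ L(h, \lambda ) = \sum _{t} h(t) [\tau (t) - m(t)] - \lambda \Big \{ \sum _{t} h^{2}(t) a(t) - 1 \Big \}. \]

Then

\[ \frac {\partial L}{\partial h(t)} = 0 \quad \text {for each } t \]

is equivalent to

\[ \tau (t) - m(t) - 2 \lambda h(t) a(t) = 0, \]

or

\[ \widehat {h}(t) = \frac {\tau (t) - m(t)}{2 \lambda a(t)}. \]

Using the constraint we obtain

\[ \sum _{t} \frac {[\tau (t) - m(t)]^{2}}{4 \lambda ^{2} a(t)} = 1, \quad \text {that is,} \quad \lambda = \frac {1}{2} \Big \{ \sum _{t} \frac {[\tau (t) - m(t)]^{2}}{a(t)} \Big \}^{1/2}. \]

Therefore,

\[ \widehat {h}(t) = \frac {\tau (t) - m(t)}{a(t) \sqrt {\sum _{s} [\tau (s) - m(s)]^{2} / a(s)}}. \]

If we substitute the above value of h in the original problem we obtain

\[ \sup _{h} \frac {\{ {\mathbb {E}}_{\tau }[h(X)] - {\mathbb {E}}_{m}[h(X)] \}^{2}}{\mathrm {Var}_{a}[h(X)]} = \Big \{ \sum _{t} \widehat {h}(t) [\tau (t) - m(t)] \Big \}^{2} = \sum _{t} \frac {[\tau (t) - m(t)]^{2}}{a(t)} = \chi _{a}^{2}(\tau , m), \]

as was claimed. □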
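The conclusion of the proof can be checked numerically. The sketch below assumes the maximizer has the form \(\widehat {h}(t) = [\tau (t) - m(t)]/\{a(t)\sqrt {\chi _{a}^{2}}\}\) and takes a(t) = [τ(t) + m(t)]/2 as one admissible density; the helper names, the example densities and the random competitors h are all hypothetical.

```python
import math
import random


def mean(h, p):
    """Expectation of h under the discrete density p."""
    return sum(hi * pi for hi, pi in zip(h, p))


def variance(h, p):
    """Variance of h under the discrete density p."""
    mu = mean(h, p)
    return sum(pi * (hi - mu) ** 2 for hi, pi in zip(h, p))


def standardized_gap(h, tau, m, a):
    """{E_tau[h] - E_m[h]}**2 / Var_a[h], the quantity maximized over h."""
    return (mean(h, tau) - mean(h, m)) ** 2 / variance(h, a)


# Illustrative densities (assumed, not taken from the text).
tau = [0.2, 0.5, 0.3]
m = [0.3, 0.4, 0.3]
a = [(t + q) / 2.0 for t, q in zip(tau, m)]

chi2 = sum((t - q) ** 2 / w for t, q, w in zip(tau, m, a))
h_hat = [(t - q) / (w * math.sqrt(chi2)) for t, q, w in zip(tau, m, a)]

# Randomly drawn functions h should never beat h_hat.
rng = random.Random(0)
random_gaps = [
    standardized_gap([rng.gauss(0.0, 1.0) for _ in tau], tau, m, a)
    for _ in range(1000)
]
print(chi2, standardized_gap(h_hat, tau, m, a), max(random_gaps))
```

The candidate \(\widehat {h}\) has unit variance under a and attains the value \(\chi _{a}^{2}\), while the randomly drawn functions stay at or below it, as the Cauchy-Schwarz inequality requires.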

Remark 1

Note that \(\widehat {h}(t)\) is the least favorable function for detecting differences between means of two distributions.

Corollary 1

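The standardized function which creates the largest difference in means is

\[ \widehat {h}(t) = \frac {\tau (t) - m(t)}{a(t) \sqrt {\chi _{a}^{2}}}, \]

where \(\chi _{a}^{2} = \sum \frac {[\tau (t) - m(t)]^{2}}{a(t)}\), and the corresponding difference in means is

\[ {\mathbb {E}}_{\tau }[\widehat {h}(X)] - {\mathbb {E}}_{m}[\widehat {h}(X)] = \sqrt {\chi _{a}^{2}}. \]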

Remark 2

Are there any additional distances that can be obtained as solutions to an optimization problem? And what is the statistical interpretation of these optimization problems? To answer the aforementioned questions we first present the optimization problems associated with the Kullback-Leibler and Hellinger distances. In fact, the entire class of the blended weighted Hellinger distances can be obtained as a solution to an appropriately defined optimization problem. Secondly, we discuss the statistical interpretability of these problems by connecting them, by analogy, to the construction of confidence intervals via Scheffé’s method.

Definition 5

The Kullback-Leibler divergence or distance between two discrete probability density functions is defined as

Proposition 2

The Kullback-Leibler distance is obtained as a solution of the optimization problem

where h(⋅) is a function defined on the same space as τ.

Proof

It is straightforward if one writes the Lagrangian and differentiates with respect to h. □

Definition 6

The class of squared blended weighted Hellinger distances (\(\mathrm {BWHD}_{\alpha }\)) is defined as

where 0 < α < 1, \(\overline {\alpha } = 1- \alpha \) and τ(x), \(m_{\beta }(x)\) are two probability densities.

Proposition 3

The class of \(\mathrm {BWHD}_{\alpha }\) arises as a solution to the optimization problem

When \(\alpha = \overline {\alpha } = {1}/{2}\), the \((\mathrm {BWHD}_{1/2})^{2}\) gives twice the squared Hellinger distance.

Proof

Straightforward. □

Although both Kullback-Leibler and blended weighted Hellinger distances are solutions of appropriate optimization problems, they do not arise from optimization problems in which the constraints can be interpreted as variances. To exemplify and illustrate further this point we first need to discuss the connection with Scheffé’s confidence intervals.

One of the methods of constructing confidence intervals is Scheffé’s method. The method adjusts the significance levels of the confidence intervals for general contrasts to account for multiple comparisons. The procedure, therefore, controls the overall significance for any possible contrast or set of contrasts and can be stated as follows,

where \(\widehat {\sigma }\) is an estimated contrast variance, ∥⋅∥ denotes the Euclidean distance and K is an appropriately defined constant.

The chi-squared distances extend this framework as follows. Assume that \(\mathcal {H}\) is a class of functions which are taken, without loss of generality, to have zero expectation. Then, we construct the optimization problem \(\sup _{h} \int h(x) [ \tau (x) - m_{\beta }(x) ] dx\), subject to a constraint that can possibly be interpreted as a constraint on the variance of h(x) either under the hypothesized model distribution or under the distribution of the data.

The chi-squared distances arise as solutions of optimization problems subject to variance constraints. As such, they are interpretable as tools that allow the construction of “Scheffé-type” confidence intervals for models. On the other hand, distances such as the Kullback-Leibler or blended weighted Hellinger distance do not arise as solutions of optimization problems subject to interpretable variance constraints. As such they cannot be used to construct confidence intervals for models.

3.2 Loss Analysis of Pearson and Neyman Chi-Squared Distances

We next offer interpretations of the Pearson chi-squared and Neyman chi-squared statistics. These interpretations are not well known; furthermore, they are useful in illustrating the robustness character of the Neyman statistic and the non-robustness character of the Pearson statistic.

Recall that the Pearson statistic is

that is, the Pearson statistic is the supremum of squared Z-statistics.

A similar argument shows that Neyman’s chi-squared equals \( \sup _{h} t_{h}^{2}\), the supremum of squared t-statistics.

This property shows that the chi-squared measures have a statistical interpretation in that a small chi-squared distance indicates that the means are close on the scale of standard deviation. Furthermore, an additional advantage of the above interpretations is that the robustness character of these statistics is exemplified. Neyman’s chi-squared, being the supremum of squared t-statistics, is robust, whereas Pearson’s chi-squared is non-robust, since it is the supremum of squared Z-statistics.
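The contrast in robustness can be seen on a small multinomial example in which the data place noticeable mass on a cell that the model regards as nearly impossible. This is a sketch only: the cell probabilities are invented for illustration, and the statistics are computed as \(\sum [d - m]^{2}/a\) with a = m (Pearson), a = d (Neyman) and a = (d + m)/2 (symmetric), without any sample-size factor.

```python
def chi_squared(d, m, a):
    """Chi-squared distance sum_t (d(t) - m(t))**2 / a(t), assuming a(t) > 0."""
    return sum((x - q) ** 2 / w for x, q, w in zip(d, m, a))


# Hypothetical model and data proportions; the last cell plays the role
# of an outlier: rare under the model, clearly present in the data.
model = [0.5, 0.3, 0.199, 0.001]
data = [0.48, 0.29, 0.18, 0.05]

pearson = chi_squared(data, model, model)
neyman = chi_squared(data, model, data)
symmetric = chi_squared(data, model, [(x + q) / 2.0 for x, q in zip(data, model)])

print(f"Pearson   {pearson:.4f}")
print(f"Neyman    {neyman:.4f}")
print(f"symmetric {symmetric:.4f}")
```

The outlying cell dominates the Pearson value because its squared difference is divided by a tiny model probability, whereas the Neyman denominator is the data proportion itself, so the same cell contributes far less.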

Signal-to-Noise There is an additional interpretation of the chi-squared statistic that rests on the definition of signal-to-noise ratio that comes from the engineering literature.

Consider the pair of hypotheses \(H_{0}: X_{i} \sim \tau \) versus the alternative \(H_{1}: X_{i} \sim m\), where \(X_{i}\) are independent and identically distributed random variables. If we consider the set of randomized test functions that depend on the “output” function h, the distance between \(H_{0}\) and \(H_{1}\) is

This quantity is a generalization of one of the more common definitions of signal-to-noise ratio. If, instead of working with a given output function h, we take supremum over the output functions h, we obtain Neyman’s chi-squared distance, which has been used in the engineering literature for robust detection. Further, the quantity \(S^{2}(\tau , m)\) has been used in the design of decision systems (Poor 1980).

3.3 Metric Properties of the Symmetric Chi-Squared Distance

The symmetric chi-squared distance, defined as

\[ S^{2}(\tau , m) = \sum _{t} \frac {2 [\tau (t) - m(t)]^{2}}{\tau (t) + m(t)}, \]

can be viewed as a good compromise between the non-robust Pearson distance and the robust Neyman distance. In what follows, we prove that \(S^{2}(\tau , m)\) is indeed a metric. The following series of lemmas will help us establish the triangle inequality for \(S^{2}(\tau , m)\).

Lemma 1

If a, b, c are numbers such that 0 ≤ a ≤ b ≤ c then

Proof

First we work with the right-hand side of the above inequality. Write

where α = (b − a)/(c − a). Set \(g(t) = 1 / \sqrt {t}\), t > 0. Then \(g^{\prime \prime }(t) = \frac {d^{2}}{d t^{2}} g(t) > 0\), hence the function g(t) is convex. Therefore, the aforementioned relationship becomes

But

where

Thus

and hence

as was stated. □

Note that because the function is strictly convex we do not obtain equality except when a = b = c.

Lemma 2

If a, b, c are numbers such that a ≥ 0, b ≥ 0, c ≥ 0 then

Proof

We will distinguish three different cases.

Case 1: 0 ≤ a ≤ b ≤ c is already discussed in Lemma 1.

Case 2: 0 ≤ c ≤ b ≤ a can be proved as in Lemma 1 by interchanging the role of a and c.

Case 3: In this case b is not between a and c, thus either a ≤ c ≤ b or b ≤ a ≤ c.

Assume first that a ≤ c ≤ b. Then we need to show that

We will prove this by showing that the above expressions are the values of an increasing function at two different points. Thus, consider

It follows that

The function \(f_{1}(t)\) is increasing because \(f_{1}^{\prime } > 0\) (recall a ≥ 0) and since c ≤ b this implies \(f_{1}(c) \le f_{1}(b)\). Similarly we prove the inequality for b ≤ a ≤ c. □

Lemma 3

The triangle inequality holds for the symmetric chi-squared distance \(S^{2}(\tau , m)\), that is,

Proof

Set

By Lemma 2

But

Therefore

and hence

as was claimed. □

Remark 3

The inequalities proved in Lemmas 1 and 2 imply that if τ ≠ m there is no “straight line” connecting τ and m, in that there does not exist g between τ and m for which the triangle inequality is an equality.

Therefore, the following proposition holds.

Proposition 4

The symmetric chi-squared distance \(S^{2}(\tau , m)\) is indeed a metric.

A testing interpretation of the symmetric chi-squared distance:

let ϕ be a test function and consider the problem of testing the null hypothesis that the data come from a density f versus the alternative that the data come from g. Let θ be a random variable with value 1 if the alternative is true and 0 if the null hypothesis is true. Then

Proposition 5

The solution \(\phi _{\mathrm {opt}}\) to the optimization problem

where π(θ) is the prior probability on θ, given as

is not a 0 − 1 decision, but equals the posterior expectation of θ given X. That is

the posterior probability that the alternative is correct.

Proof

We have

But

and

hence

as was claimed. □

Corollary 2

The minimum risk is given as

where

Proof

Substitute \(\phi _{\mathrm {opt}}\) in \({\mathbb {E}}_{\pi }[ ( \theta - \phi )^{2} ]\) to obtain

Now set

Then

or, equivalently,

Therefore

as was claimed. □

Remark 4

Note that \(S^{2}(f, g)\) is bounded above by 4 and equals 4 when f, g are mutually singular.

The Kullback-Leibler and Hellinger distances are extensively used in the literature. Yet, we argue that, because they are obtained as solutions to optimization problems with constraints that are not statistically interpretable, they are not appropriate for our purposes. However, we note here that the Hellinger distance is closely related to the symmetric chi-squared distance, although this is not immediately obvious. We elaborate on this statement below.
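The link between the testing problem and the symmetric chi-squared distance can be explored numerically. The sketch below rests on assumptions that are not recoverable from the text: a prior that puts probability 1/2 on each hypothesis, the risk \({\mathbb {E}}_{\pi }[(\theta - \phi )^{2}]\), and \(S^{2}(f, g) = 2\sum [f - g]^{2}/(f + g)\). Under these assumptions the posterior probability of the alternative is g/(f + g), and direct algebra gives a minimum risk of \((4 - S^{2})/16\); this constant is derived here and may differ from the normalization used by the authors. All function names are hypothetical.

```python
def symmetric_chi_squared(f, g):
    """S^2(f, g) = 2 * sum_t (f(t) - g(t))**2 / (f(t) + g(t)); empty cells are skipped."""
    return sum(2.0 * (a - b) ** 2 / (a + b) for a, b in zip(f, g) if a + b > 0)


def posterior_alternative(f, g):
    """Posterior probability that theta = 1, assuming prior weights 1/2 and 1/2."""
    return [b / (a + b) if a + b > 0 else 0.5 for a, b in zip(f, g)]


def risk(phi, f, g):
    """E[(theta - phi(X))**2] when theta = 0 (X ~ f) or theta = 1 (X ~ g), each with prior 1/2."""
    under_null = sum(a * p ** 2 for a, p in zip(f, phi))
    under_alt = sum(b * (1.0 - p) ** 2 for b, p in zip(g, phi))
    return 0.5 * under_null + 0.5 * under_alt


# Illustrative densities (assumed, not taken from the text).
f = [0.2, 0.5, 0.3]
g = [0.3, 0.4, 0.3]
phi_opt = posterior_alternative(f, g)
s2 = symmetric_chi_squared(f, g)
print(risk(phi_opt, f, g), (4.0 - s2) / 16.0)

# Mutually singular densities give the upper bound S^2 = 4 and zero risk.
print(symmetric_chi_squared([1.0, 0.0], [0.0, 1.0]))
```

A larger \(S^{2}\) therefore corresponds to a smaller achievable risk, and the bound of 4 is reached exactly when the two hypotheses can be told apart without error.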

Definition 7

Let τ, m be two probability densities. The squared Hellinger distance is defined as

We can more readily see the relationship between the Hellinger and chi-squared distances if we rewrite \(H^{2}(\tau , m)\) as

Lemma 4

The Hellinger distance is bounded by the symmetric chi-squared distance, that is,

where \(S^{2}\) denotes the symmetric chi-squared distance.

Proof

Note that

Also

and putting these relationships together we obtain

Therefore

and

and so

as was claimed. □
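A numerical check of the relationship between the two distances is sketched below. It assumes the unnormalized form \(H^{2}(\tau , m) = \sum (\sqrt {\tau } - \sqrt {m})^{2}\) and \(S^{2}(\tau , m) = 2\sum [\tau - m]^{2}/(\tau + m)\); with a different normalization of the Hellinger distance the constants change accordingly. Under these assumptions the factorization \([\tau - m]^{2} = (\sqrt {\tau } - \sqrt {m})^{2} (\sqrt {\tau } + \sqrt {m})^{2}\), together with \(1 \le (\sqrt {\tau } + \sqrt {m})^{2}/(\tau + m) \le 2\), gives \(S^{2}/4 \le H^{2} \le S^{2}/2\). The function names are hypothetical.

```python
import math
import random


def squared_hellinger(tau, m):
    """Unnormalized squared Hellinger distance sum_t (sqrt(tau) - sqrt(m))**2."""
    return sum((math.sqrt(t) - math.sqrt(q)) ** 2 for t, q in zip(tau, m))


def symmetric_chi_squared(tau, m):
    """S^2 = 2 * sum_t (tau - m)**2 / (tau + m); empty cells are skipped."""
    return sum(2.0 * (t - q) ** 2 / (t + q) for t, q in zip(tau, m) if t + q > 0)


def random_density(rng, k):
    """A random probability vector of length k (illustration only)."""
    weights = [rng.random() for _ in range(k)]
    total = sum(weights)
    return [w / total for w in weights]


def bounds_hold(trials=1000, k=6, seed=0, tol=1e-12):
    """Check S^2 / 4 <= H^2 <= S^2 / 2 on randomly generated pairs of densities."""
    rng = random.Random(seed)
    for _ in range(trials):
        tau, m = random_density(rng, k), random_density(rng, k)
        h2, s2 = squared_hellinger(tau, m), symmetric_chi_squared(tau, m)
        if not (s2 / 4.0 - tol <= h2 <= s2 / 2.0 + tol):
            return False
    return True


print(bounds_hold())
```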

3.4 Locally Quadratic Distances

A generalization of the chi-squared distances is offered by the locally quadratic distances. We have the following definition.

Definition 8

A locally quadratic distance between two densities τ, m has the form

where \(K_{m}(x, y)\) is a nonnegative definite kernel, possibly dependent on m, and such that

for all functions a(x).

Example 1

The Pearson distance can be written as

where \(\mathbb {I}(\cdot )\) is the indicator function. It is a quadratic distance with kernel

Sensitivity and Robustness In the classical robustness literature one of the attributes that a method should exhibit so as to be characterized as robust is the attribute of being resistant, that is insensitive, to the presence of a moderate number of outliers and to inadequacies in the assumed model.

Similarly here, to characterize a statistical distance as robust it should be insensitive to small changes in the true density, that is, the value of the distance should not be greatly affected by small changes that occur in τ. Lindsay (1994), Markatou (2000, 2001), and Markatou et al. (1997, 1998) based the discussion of robustness of the distances under study on a mechanism that allows the identification of distributional errors, that is, on the Pearson residual. A different system of residuals is the set of symmetrized residuals defined as follows.

Definition 9

If τ, m are two densities the symmetrized residual is defined as

\[ r_{\mathrm {sym}}(t) = \frac {\tau (t) - m(t)}{\tau (t) + m(t)}. \]

The symmetrized residuals have range [−1, 1], with value − 1 when τ(t) = 0 and value 1 when m(t) = 0. Symmetrized residuals are important because they allow us to understand the way different distances treat different distributions.

The symmetric chi-squared distance can be written as a function of the symmetrized residuals as follows

\[ S^{2}(\tau , m) = 4 \sum _{t} b(t)\, r_{\mathrm {sym}}^{2}(t), \]

where b(t) = [τ(t) + m(t)]/2.

The aforementioned expression of the symmetric chi-squared distance allows us to obtain inequalities between \(S^{2}(\tau , m)\) and other distances.

A third residual system is the set of logarithmic residuals, defined as follows.

Definition 10

Let τ, m be two probability densities. Define the logarithmic residuals as

\[ \delta (t) = \log \frac {\tau (t)}{m(t)}, \]

with δ ∈ (−∞, ∞).

A value of this residual close to 0 indicates agreement between τ and m. Large positive or negative values indicate disagreement between the two models τ and m.

In an analysis of a given data set, there are two types of observations that cause concern: outliers and influential observations. In the literature, the concept of an outlier is defined as follows.

Definition 11

We define an outlier to be an observation (or a set of observations) which appears to be inconsistent with the remaining observations of the data set.

Therefore, the concept of an outlier may be viewed in relative terms. Suppose we think a sample arises from a standard normal distribution. An observation from this sample is an outlier if it is somehow different in relation to the remaining observations that were generated from the postulated standard normal model. This means that an observation with value 4 may be surprising in a sample of size 10, but is less so if the sample size is 10,000. In our framework, therefore, the extent to which an observation is an outlier depends on both the sample size and the probability of occurrence of the observation under the specified model.
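The dependence on sample size can be quantified with a short calculation. The sketch below computes, under an exact standard normal model with independent observations, the probability that the largest of n observations is at least 4; the function names are hypothetical.

```python
import math


def normal_upper_tail(x):
    """P(Z >= x) for a standard normal variable Z."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def prob_max_at_least(x, n):
    """P(max of n independent standard normal draws >= x).

    Uses 1 - (1 - p)**n in a numerically stable form, with p the upper tail.
    """
    return -math.expm1(n * math.log1p(-normal_upper_tail(x)))


for n in (10, 10_000):
    print(n, prob_max_at_least(4.0, n))
```

Seeing a value of 4 or more is a roughly three-in-ten-thousand event when n = 10, but happens in more than a quarter of samples when n = 10,000.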

Remark 5

Davies and Gather (1993) state that although detection of outliers is a topic that has been extensively addressed in the literature, the word “outlier” was not given a precise definition. Davies and Gather (1993) formalized this concept by defining outliers in terms of their position relative to a central model, and in relationship to the sample size. Further details can be found in their paper.

On the other hand, the literature provides the following definition of an influential observation.

Definition 12 (Belsley 1980)

An influential observation is one which, either individually or together with several other observations, has a demonstrably larger impact on the calculated values of various estimates than is the case for most of the other observations.

Chatterjee and Hadi (1986) use this definition to address questions about measuring influence and discuss the different measures of influence and their inter-relationships.

The aforementioned definition is subjective, but it implies that one can order observations in a sensible way according to some measure of influence. Outliers need not be influential observations and influential observations need not be outliers. Large Pearson residuals correspond to observations that are surprising, in the sense that they occur in locations with small model probability. This is different from influential observations, that is, from observations whose presence or absence greatly affects the value of the maximum likelihood estimator.

Outliers can be surprising observations as well as influential observations. In a normal location-scale model, an outlying observation is both surprising and influential on the maximum likelihood estimator of location. But in the double exponential location model, an outlying observation may be surprising but is never influential on the maximum likelihood estimator of location, as the latter equals the median.

Lindsay (1994) shows that the robustness of these distances is expressed via a key function called the residual adjustment function (RAF). Further, he studied the characteristics of this function and showed that an important class of RAFs is given by \(A_{\lambda }(\delta )=\frac { (1+\delta )^{\lambda + 1} -1 }{ \lambda + 1 }\), where δ is the Pearson residual (see Definition 3). From this class we obtain many RAFs; in particular, when λ = −2 we obtain the RAF corresponding to Neyman’s chi-squared distance. For details, see Lindsay (1994).
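The behavior of the RAF for large residuals can be tabulated directly. The sketch below assumes the power divergence RAF in the form \(A_{\lambda }(\delta ) = \{(1+\delta )^{\lambda + 1} - 1\}/(\lambda + 1)\), so that λ = 0 gives A(δ) = δ, with the case λ = −1 taken as the limit log(1 + δ). The function name is hypothetical.

```python
import math


def raf(delta, lam):
    """Residual adjustment function of the power divergence family.

    Assumed form: ((1 + delta)**(lam + 1) - 1) / (lam + 1), for delta > -1.
    The case lam = -1 is the limit log(1 + delta).
    """
    if lam == -1:
        return math.log1p(delta)
    return ((1.0 + delta) ** (lam + 1.0) - 1.0) / (lam + 1.0)


# Large positive Pearson residuals correspond to surprising observations.
for delta in (0.5, 3.0, 100.0):
    row = {lam: round(raf(delta, lam), 4) for lam in (-2, -0.5, 0, 1)}
    print(delta, row)
```

For λ = −2 the function reduces to δ/(1 + δ), which stays below 1 however large the residual, while for λ = 1 it equals δ + δ²/2 and grows quadratically. This is one way to see why large residuals are downweighted by Neyman’s chi-squared and amplified by Pearson’s.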

4 The Continuous Setting

Our goal is to use statistical distances to construct model misspecification measures. One of the key issues in the construction of misspecification measures in the case of data being realizations of a random variable that follows a continuous distribution is that allowances should be made for the scale difference between observed data and hypothesized model. That is, data distributions are discrete while the hypothesized model is continuous. Hence, we require the distance to exhibit discretization robustness, so it can account for the difference in scale.

To achieve discretization robustness, we need a sensitive distance, which implies a need to balance sensitivity and statistical noise. We will briefly review available strategies to deal with the problem of balancing sensitivity of the distance and statistical noise.

In what follows, we discuss desirable characteristics we require our distance measures to satisfy.

4.1 Desired Features

Discretization Robustness Every real data distribution is discrete, and therefore is different from every continuous distribution. Thus, a reasonable distance measure must allow for discretization, by saying that the discretized version of a continuous distribution must get closer to the continuous distribution as the discretization gets finer.

A second reason for requiring discretization robustness is that we will want to use the empirical distribution to estimate the true distribution, but without this robustness, there is no hope that the discrete empirical distribution will be close to any model point.

The Problem of Too Many Questions Thus, to achieve discretization robustness, we need to construct a sensitive distance. This requirement forces us to carry out a delicate balancing act between sensitivity and statistical noise.

Lindsay (2004) discusses in detail the problem of too many questions. Here we only note that to illustrate the issue Lindsay (2004) uses the chi-squared distance and notes that the statistical implications of a refinement in partition are the widening of the sensitivity to model departures in new “directions” but, at the same time, this act increases the statistical noise and therefore decreases the power of the chi-squared test in every existing direction.

There are a number of ways to address this problem, but they all seem to involve a loss of statistical information. This means we cannot ask all model fit questions with optimal accuracy. Two immediate solutions are as follows. First, limit the investigation only to a finite list of questions, essentially boiling down to prioritizing the questions asked of the sample. A number of classical goodness-of-fit tests create exactly such a balance. A second approach to the problem of answering infinitely many questions with only a finite number of data points is through the construction of kernel smoothed density measures. Those measures provide a flexible class of distances that allows for adjusting the sensitivity/noise trade-off. Before we briefly comment on this strategy, we discuss statistical distances between continuous probability distributions.

4.2 The \(L_{2}\)-Distance

The \(L_{2}\) distance is very popular in density estimation. We show below that this distance is not invariant to one-to-one transformations.

Definition 13

The \(L_{2}\) distance between two probability density functions τ, m is defined as

Proposition 6

The \(L_{2}\) distance between two probability density functions is not invariant to one-to-one transformations.

Proof

Let Y = a(X) be a transformation of X, which is one-to-one. Then x = b(y), b(⋅) is the inverse transformation of a(⋅), and

Thus, the \(L_{2}\) distance is not invariant under monotone transformations. □

Remark 6

It is easy to see that the \(L_{2}\) distance is location invariant. Moreover, scale changes appear as a constant factor multiplying the \(L_{2}\) distance.
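Both statements in the remark can be illustrated numerically. The sketch below assumes the squared form \(\int [\tau (x) - m(x)]^{2} dx\), uses two normal densities chosen purely for illustration, and approximates the integral with a midpoint rule. Under Y = cX with c > 0 each density becomes \(c^{-1} f(y/c)\), so this squared distance is multiplied by 1/c. The function names are hypothetical.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma**2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def l2_squared(f, g, lo, hi, steps=20000):
    """Midpoint-rule approximation of the integral of (f - g)**2 over [lo, hi]."""
    width = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * width
        total += (f(x) - g(x)) ** 2
    return total * width


# Base pair: N(0, 1) versus N(1, 1).
base = l2_squared(lambda x: normal_pdf(x, 0, 1), lambda x: normal_pdf(x, 1, 1), -12, 13)
# Location shift by 3: N(3, 1) versus N(4, 1).
shifted = l2_squared(lambda x: normal_pdf(x, 3, 1), lambda x: normal_pdf(x, 4, 1), -9, 16)
# Scale change Y = 2X: N(0, 2**2) versus N(2, 2**2).
scaled = l2_squared(lambda x: normal_pdf(x, 0, 2), lambda x: normal_pdf(x, 2, 2), -24, 26)

print(base, shifted, scaled)
```

The shifted pair reproduces the base value, while doubling the scale halves it.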

4.3 The Kolmogorov-Smirnov Distance

We now discuss the Kolmogorov-Smirnov distance used extensively in goodness-of-fit problems, and present its properties.

Definition 14

The Kolmogorov-Smirnov distance between two cumulative distribution functions F, G is defined as

\[ \sup _{x} | F(x) - G(x) |. \]

Proposition 7 (Testing Interpretation)

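Let \(H_{0}: \tau = f\) versus \(H_{1}: \tau = g\), and suppose that only test functions φ of the form \(\mathbb {I}(x \le x_{0})\) or \(\mathbb {I}(x > x_{0})\) for arbitrary \(x_{0}\) are allowed. Then

\[ \sup _{x} | F(x) - G(x) | = \sup _{\varphi } \big | {\mathbb {E}}_{g}[\varphi (X)] - {\mathbb {E}}_{f}[\varphi (X)] \big |. \]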

Proof

The difference between power and size of the test is \(G(x_{0}) - F(x_{0})\). Therefore,

as was claimed. □
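As a small illustration of the testing interpretation, the sketch below scans cutoffs \(x_{0}\) for the one-sided test that rejects when the observation exceeds \(x_{0}\), and compares the largest gap between power and size with the Kolmogorov-Smirnov distance. The two normal distributions, the grid, and the helper names are assumptions made only for this example.

```python
import math

def normal_cdf(x, mu=0.0, sigma=1.0):
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

# Illustrative hypotheses (assumption): H0 is N(0, 1), H1 is N(1, 1).
F = lambda x: normal_cdf(x, 0.0, 1.0)
G = lambda x: normal_cdf(x, 1.0, 1.0)

def power_minus_size(x0):
    """Test function phi(x) = 1 if x > x0, else 0."""
    size = 1.0 - F(x0)    # probability of rejecting under H0
    power = 1.0 - G(x0)   # probability of rejecting under H1
    return power - size   # algebraically equal to F(x0) - G(x0)

# Scan cutoffs on a fine grid; the supremum is approximated by the grid maximum.
grid = [-6.0 + 0.001 * i for i in range(13001)]
ks_distance = max(abs(F(x) - G(x)) for x in grid)
best_x0 = max(grid, key=lambda x: abs(power_minus_size(x)))
best_gap = abs(power_minus_size(best_x0))

print("KS distance on grid      :", ks_distance)
print("best cutoff x0           :", best_x0)
print("|power - size| at best x0:", best_gap)
```

In this setting the best cutoff sits midway between the two means, and the gap between power and size at that cutoff coincides with the Kolmogorov-Smirnov distance, as Proposition 7 states.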

Proposition 8

The Kolmogorov-Smirnov distance is invariant under monotone transformations.

Proof

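Write

$$\displaystyle \begin{aligned} \rho_{KS}(F_{X}, G_{X}) = \sup_{x_{0}} \left| \int \mathbf{1}(x \le x_{0}) [f_{X}(x) - g_{X}(x)] dx \right|. \end{aligned} $$

Let Y = a(X) be a one-to-one transformation and b(⋅) be the corresponding inverse transformation. Then x = b(y) and dy = a′(x)dx, so, for increasing a(⋅) and \(y_{0} = a(x_{0})\),

$$\displaystyle \begin{aligned} \int \mathbf{1}(y \le y_{0}) [f_{Y}(y) - g_{Y}(y)] dy &= \int \mathbf{1}(b(y) \le b(y_{0})) [f_{X}(b(y)) - g_{X}(b(y))] b'(y) dy \\ &= \int \mathbf{1}(x \le x_{0}) [f_{X}(x) - g_{X}(x)] dx. \end{aligned} $$

For decreasing a(⋅) the indicator becomes \(\mathbf{1}(x \ge x_{0})\) and only the sign of the integral changes, because \(f_{X} - g_{X}\) integrates to zero.

Therefore,

$$\displaystyle \begin{aligned} \rho_{KS}(F_{Y}, G_{Y}) = \sup_{y_{0}} \left| \int \mathbf{1}(y \le y_{0}) [f_{Y}(y) - g_{Y}(y)] dy \right| = \sup_{x_{0}} \left| \int \mathbf{1}(x \le x_{0}) [f_{X}(x) - g_{X}(x)] dx \right| = \rho_{KS}(F_{X}, G_{X}), \end{aligned} $$

and the Kolmogorov-Smirnov distance is invariant under one-to-one transformations. □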
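The invariance can also be seen empirically. The sketch below, under the assumption of two simulated samples and a hand-written two-sample statistic (`ks_two_sample` is a hypothetical helper, not a library function), computes the Kolmogorov-Smirnov distance between the two empirical distribution functions before and after applying an increasing map (exp) and a decreasing map (negation) to every observation.

```python
import bisect
import math
import random

def ks_two_sample(xs, ys):
    """sup over t of |F_n(t) - G_m(t)|, with F_n and G_m the empirical CDFs."""
    xs_sorted, ys_sorted = sorted(xs), sorted(ys)
    n, m = len(xs_sorted), len(ys_sorted)
    worst = 0.0
    # Both empirical CDFs are step functions, so the supremum is attained
    # at one of the pooled sample points.
    for t in xs_sorted + ys_sorted:
        f_n = bisect.bisect_right(xs_sorted, t) / n
        g_m = bisect.bisect_right(ys_sorted, t) / m
        worst = max(worst, abs(f_n - g_m))
    return worst

random.seed(0)  # arbitrary seed, used only for reproducibility
xs = [random.gauss(0.0, 1.0) for _ in range(200)]
ys = [random.gauss(0.5, 1.5) for _ in range(300)]

ks_original = ks_two_sample(xs, ys)
ks_increasing = ks_two_sample([math.exp(x) for x in xs], [math.exp(y) for y in ys])
ks_decreasing = ks_two_sample([-x for x in xs], [-y for y in ys])

print(ks_original, ks_increasing, ks_decreasing)
```

The three values agree (up to floating-point rounding in the decreasing case) because the statistic depends on the data only through the relative ordering of the pooled observations, which a one-to-one monotone map either preserves or reverses.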

Proposition 9

The Kolmogorov-Smirnov distance is discretization robust.

Proof

Notice that we can write

$$\displaystyle \begin{aligned} \rho_{KS}(F, G) = \sup_{x_{0}} \left| \int \mathbf{1}(x \le x_{0}) \, d(F - G)(x) \right| \end{aligned} $$

with \(\mathbf{1}(x \le x_{0})\) being thought of as a “smoothing kernel”. Hence, comparisons between discrete and continuous distributions are allowed and the distance is discretization robust. □
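To make discretization robustness concrete, the sketch below computes the Kolmogorov-Smirnov distance between a standard normal distribution and the discrete distribution obtained by rounding the same variable to the nearest multiple of a bin width `delta`. The normal model, the rounding scheme, and the function names are assumptions chosen for illustration.

```python
import math

def normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def ks_rounded_vs_continuous(delta, reach=10.0):
    """KS distance between N(0, 1) and the law of X rounded to multiples of delta.

    The rounded variable puts mass on the points r_k = k * delta, and its CDF
    equals Phi(r_k + delta / 2) on [r_k, r_{k+1}). The largest gap with Phi
    therefore occurs at a jump point or just to the left of one.
    """
    k_max = int(reach / delta)
    worst = 0.0
    for k in range(-k_max, k_max + 1):
        r = k * delta
        gap_at_jump = normal_cdf(r + delta / 2.0) - normal_cdf(r)
        gap_before_jump = normal_cdf(r) - normal_cdf(r - delta / 2.0)
        worst = max(worst, gap_at_jump, gap_before_jump)
    return worst

distances = {delta: ks_rounded_vs_continuous(delta) for delta in (1.0, 0.1, 0.01)}
for delta, d in distances.items():
    print(f"delta = {delta:<5} KS distance = {d:.6f}")
```

The distance is finite for every bin width and shrinks as the discretization becomes finer, so the discrete and continuous distributions can be made arbitrarily close in this distance.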

The Kolmogorov-Smirnov distance is a distance based on the probability integral transform. As such, it is invariant under monotone transformations (see Proposition 8). A drawback of distances based on probability integral transforms is that they have no obvious extension to the multivariate case. Furthermore, these distances have no direct loss function interpretation when the model used is incorrect. In what follows, we discuss chi-squared and quadratic distances that avoid the issues listed above.

4.4 Exactly Quadratic Distances

In this section we briefly discuss exactly quadratic distances. Rao (1982) introduced the concept of an exact quadratic distance for discrete population distributions and he called it quadratic entropy. Lindsay et al. (2008) gave the following definition of an exactly quadratic distance.

Definition 15 (Lindsay et al. 2008)

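Let F, G be two probability distributions, and let K be a nonnegative definite kernel, possibly depending on G. A quadratic distance between F, G has the form

$$\displaystyle \begin{aligned} \rho_{K}(F, G) = \iint K(s, t) \, d(F-G)(s) \, d(F-G)(t). \end{aligned} $$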

Quadratic distances are of interest for a variety of reasons. These include the fact that the empirical distance \(\rho _{K}(\widehat {F}, G)\) has a fairly simple asymptotic distribution theory when G identifies with the true model τ, and that several important distances are exactly quadratic (see, for example, Cramér-von Mises and Pearson’s chi-squared distances). Furthermore, other distances are asymptotically locally quadratic around G = τ. Quadratic distances can be thought of as extensions of the chi-squared distance class.

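We can construct an exactly quadratic distance as follows. Let F, G be two probability measures that a random variable X may follow. Let ε be an independent error variable with known density \(k_{h}(\varepsilon)\), where h is a parameter. Then, the random variable Y = X + ε has an absolutely continuous distribution with density

$$\displaystyle \begin{aligned} f^{*}(y) = \int k_{h}(y - x) \, dF(x), \quad \text{if } X \sim F, \end{aligned} $$

or

$$\displaystyle \begin{aligned} g^{*}(y) = \int k_{h}(y - x) \, dG(x), \quad \text{if } X \sim G. \end{aligned} $$

Let

$$\displaystyle \begin{aligned} P^{*2}(F, G) = \int \frac{ ( f^{*}(y) - g^{*}(y) )^{2} }{ g^{*}(y) } dy \end{aligned} $$

be the kernel-smoothed Pearson’s chi-squared statistic. In what follows, we prove that \(P^{*2}(F, G)\) is an exactly quadratic distance.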

Proposition 10

The distance \(P^{*2}(F, G)\) is an exactly quadratic distance provided that \(\iint K(s, t)d(F-G)(s)d(F-G)(t) < \infty \), where \(K(s, t) = \int \frac {k_{h}(y-s) k_{h}(y-t)}{g^{*}(y)} dy \).

Proof

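Write

$$\displaystyle \begin{aligned} P^{*2}(F, G) &= \int \frac{ ( f^{*}(y) - g^{*}(y) )^{2} }{ g^{*}(y) } dy = \int \frac{ \left[ \int k_{h}(y - x) \, d(F-G)(x) \right]^{2} }{ g^{*}(y) } dy \\ &= \int \frac{ \left[ \int k_{h}(y - s) \, d(F-G)(s) \right] \left[ \int k_{h}(y - t) \, d(F-G)(t) \right] }{ g^{*}(y) } dy. \end{aligned} $$

Now using Fubini’s theorem, the above relationship can be written as

$$\displaystyle \begin{aligned} P^{*2}(F, G) = \iint \left\{ \int \frac{ k_{h}(y-s) k_{h}(y-t) }{ g^{*}(y) } dy \right\} d(F-G)(s) \, d(F-G)(t) = \iint K(s, t) \, d(F-G)(s) \, d(F-G)(t), \end{aligned} $$

with K(s, t) given above. □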
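The identity in Proposition 10 can be verified numerically for discrete F and G. The sketch below is a minimal illustration, assuming a Gaussian smoothing kernel, a four-point support, a fixed bandwidth, and a midpoint integration grid; all of these, together with the helper names, are choices made for the example only. It evaluates the kernel-smoothed Pearson statistic directly and as the quadratic form in F − G with kernel K(s, t).

```python
import math

def gauss_kernel(u, h):
    """Gaussian density with standard deviation h, playing the role of k_h."""
    return math.exp(-0.5 * (u / h) ** 2) / (h * math.sqrt(2.0 * math.pi))

# Two discrete distributions on a common support (illustrative values).
support = [0.0, 1.0, 2.0, 3.0]
f = [0.1, 0.4, 0.3, 0.2]      # masses of F
g = [0.25, 0.25, 0.25, 0.25]  # masses of G
h = 0.5                       # smoothing parameter

# Midpoint grid used for every integral over y.
lo, hi, n = -4.0, 7.0, 4000
step = (hi - lo) / n
grid = [lo + (i + 0.5) * step for i in range(n)]

def smooth(masses, y):
    """Kernel-smoothed density: sum over support points of mass * k_h(y - point)."""
    return sum(w * gauss_kernel(y - s, h) for w, s in zip(masses, support))

f_star = [smooth(f, y) for y in grid]
g_star = [smooth(g, y) for y in grid]

# Direct evaluation: integral of (f* - g*)^2 / g*.
direct = step * sum((fs - gs) ** 2 / gs for fs, gs in zip(f_star, g_star))

def K(s, t):
    """K(s, t) = integral of k_h(y - s) * k_h(y - t) / g*(y) over y."""
    return step * sum(
        gauss_kernel(y - s, h) * gauss_kernel(y - t, h) / gs
        for y, gs in zip(grid, g_star)
    )

# Quadratic form: double sum of K(s, t) against the signed measure F - G.
diff = [fi - gi for fi, gi in zip(f, g)]
quadratic = sum(
    diff[i] * diff[j] * K(support[i], support[j])
    for i in range(len(support))
    for j in range(len(support))
)

print("direct integral:", direct)
print("quadratic form :", quadratic)
```

Because both quantities are computed on the same grid, they agree up to floating-point rounding, mirroring the exchange of integration order in the proof.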

Remark 7

(a) The issue with many classical measures of goodness-of-fit is that the balance between sensitivity and statistical noise is fixed. On the other hand, one might wish to have a flexible class of distances that allows for adjusting the sensitivity/noise trade-off. Lindsay (1994) and Basu and Lindsay (1994) introduced the idea of smoothing and investigated numerically the blended weighted Hellinger distance, defined as

$$\displaystyle \begin{aligned} BWHD_{\alpha} (\tau^{*}, m_{\theta}^{*}) = \int \frac{ ( \tau^{*}(x) - m_{\theta}^{*}(x) )^{2} }{ \left(\alpha \sqrt{\tau^{*}(x)} + \overline{\alpha} \sqrt{ m_{\theta}^{*}(x) } \right)^{2} } dx,\end{aligned} $$

where \(\overline {\alpha }= 1- \alpha \), \(\alpha \in [1/3, 1]\). When α = 1/2, \(BWHD_{1/2}\) coincides, up to a constant factor, with the Hellinger distance.

(b) Distances based on kernel smoothing are natural extensions of the discrete distances. These distances are not invariant under one-to-one transformations, but they can be easily generalized to higher dimensions. Furthermore, numerical integration is required for the practical implementation and use of these distances.
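Since these distances require numerical integration in practice, a short sketch may help. The code below evaluates the blended weighted Hellinger distance with a midpoint rule, using two normal densities as stand-ins for the smoothed densities \(\tau^{*}\) and \(m_{\theta}^{*}\); the densities, integration limits, and function names are assumptions for illustration. It also checks the α = 1/2 case against \(4 \int (\sqrt{\tau^{*}} - \sqrt{m_{\theta}^{*}})^{2} dx\), which follows from factoring the numerator as \((\sqrt{\tau^{*}} - \sqrt{m_{\theta}^{*}})^{2} (\sqrt{\tau^{*}} + \sqrt{m_{\theta}^{*}})^{2}\).

```python
import math

def normal_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def integrate(func, lo, hi, n=20000):
    """Midpoint rule on [lo, hi]."""
    step = (hi - lo) / n
    return step * sum(func(lo + (i + 0.5) * step) for i in range(n))

# Stand-ins for the smoothed densities (assumption): N(0, 1) and N(1, 1).
tau_star = lambda x: normal_pdf(x, 0.0, 1.0)
m_star = lambda x: normal_pdf(x, 1.0, 1.0)
lo, hi = -8.0, 9.0  # both densities stay strictly positive in floating point here

def bwhd(alpha):
    """Blended weighted Hellinger distance with blending weight alpha."""
    def integrand(x):
        t, m = tau_star(x), m_star(x)
        blend = alpha * math.sqrt(t) + (1.0 - alpha) * math.sqrt(m)
        return (t - m) ** 2 / blend ** 2
    return integrate(integrand, lo, hi)

# Integral of (sqrt(tau*) - sqrt(m*))^2, the Hellinger-type quantity.
root_gap = integrate(lambda x: (math.sqrt(tau_star(x)) - math.sqrt(m_star(x))) ** 2, lo, hi)

bwhd_half = bwhd(0.5)
values = {alpha: bwhd(alpha) for alpha in (1.0 / 3.0, 0.5, 1.0)}

print("BWHD at alpha = 1/2        :", bwhd_half)
print("4 * integral of root gap^2 :", 4.0 * root_gap)
print("BWHD for several alphas    :", values)
```

Varying α changes how strongly regions where one density is small relative to the other are weighted, which is the sensitivity/noise adjustment referred to in part (a).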

5 Discussion

In this paper we study statistical distances, with special emphasis on the chi-squared distance measures. We also present an extension of the chi-squared distance, the quadratic distance introduced by Lindsay et al. (2008). We offer statistical interpretations of these distances and show how they can be obtained as solutions of certain optimization problems. Of particular interest are distances with statistically interpretable constraints, such as the class of chi-squared distances. These allow the construction of confidence intervals for models. We further discuss robustness properties of these distances, including discretization robustness, a property that allows discrete and continuous distributions to be arbitrarily close. Lindsay et al. (2014) study the use of quadratic distances in goodness-of-fit problems, with particular focus on creating tools for studying the power of distance-based tests. They discuss one-sample testing and connect their methodology with the problem of kernel selection and the requirements that are appropriate for selecting optimal kernels. Here, we outline the foundations that led to the aforementioned work and show how these elucidate the performance of statistical distances as inferential functions.

References

Basu, A., & Lindsay, B. G. (1994). Minimum disparity estimation for continuous models: Efficiency, distributions and robustness. Annals of the Institute of Statistical Mathematics, 46, 683–705.

Belsley, D. A., Kuh, E., & Welsch, R. E. (1980). Regression diagnostics: Identifying influential data and sources of collinearity. New York: Wiley.

Beran, R. (1977). Minimum Hellinger distance estimates for parametric models. Annals of Statistics, 5, 445–463.

Berkson, J. (1980). Minimum chi-square, not maximum likelihood! Annals of Statistics, 8, 457–487.

Chatterjee, S., & Hadi, A. S. (1986). Influential observations, high leverage points, and outliers in linear regression. Statistical Science, 1, 379–393.

Davies, L., & Gather, U. (1993). The identification of multiple outliers. Journal of the American Statistical Association, 88, 782–792.

Lindsay, B. G. (1994). Efficiency versus robustness: The case for minimum Hellinger distance and related methods. Annals of Statistics, 22, 1081–1114.

Lindsay, B. G. (2004). Statistical distances as loss functions in assessing model adequacy. In The nature of scientific evidence: Statistical, philosophical and empirical considerations (pp. 439–488). Chicago: The University of Chicago Press.

Lindsay, B. G., Markatou, M., & Ray, S. (2014). Kernels, degrees of freedom, and power properties of quadratic distance goodness-of-fit tests. Journal of the American Statistical Association, 109, 395–410.

Lindsay, B. G., Markatou, M., Ray, S., Yang, K., & Chen, S. C. (2008). Quadratic distances on probabilities: A unified foundation. Annals of Statistics, 36, 983–1006.

Markatou, M. (2000). Mixture models, robustness, and the weighted likelihood methodology. Biometrics, 56, 483–486.

Markatou, M. (2001). A closer look at weighted likelihood in the context of mixture. In C. A. Charalambides, M. V. Koutras, & N. Balakrishnan (Eds.), Probability and statistical models with applications (pp. 447–468). Boca Raton: Chapman & Hall/CRC.

Markatou, M., Basu, A., & Lindsay, B. G. (1997). Weighted likelihood estimating equations: The discrete case with applications to logistic regression. Journal of Statistical Planning and Inference, 57, 215–232.

Markatou, M., Basu, A., & Lindsay, B. G. (1998). Weighted likelihood equations with bootstrap root search. Journal of the American Statistical Association, 93, 740–750.

Matusita, K. (1955). Decision rules, based on the distance, for problems of fit, two samples, and estimation. Annals of Mathematical Statistics, 26, 613–640.

Neyman, J. (1949). Contribution to the theory of the \(\chi^{2}\) test. In Proceedings of the Berkeley Symposium on Mathematical Statistics and Probability (pp. 239–273). Berkeley: The University of California Press.

Poor, H. (1980). Robust decision design using a distance criterion. IEEE Transactions on Information Theory, 26, 575–587.

Rao, C. R. (1963). Criteria of estimation in large samples. Sankhya Series A, 25, 189–206.

Rao, C. R. (1982). Diversity: Its measurement, decomposition, apportionment and analysis. Sankhya Series A, 44, 1–21.

Wald, A. (1950). Statistical decision functions. New York: Wiley.

Acknowledgements

The first author dedicates this paper to the memory of Professor Bruce G. Lindsay, a long time collaborator and friend, with much respect and appreciation for his mentoring and friendship. She also acknowledges the Department of Biostatistics, School of Public Health and Health Professions and the Jacobs School of Medicine and Biomedical Sciences, University at Buffalo, for supporting this work. The second author acknowledges the Troup Fund, Kaleida Foundation for supporting this work.

Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Markatou, M., Chen, Y., Afendras, G., Lindsay, B.G. (2017). Statistical Distances and Their Role in Robustness. In: Chen, DG., Jin, Z., Li, G., Li, Y., Liu, A., Zhao, Y. (eds) New Advances in Statistics and Data Science. ICSA Book Series in Statistics. Springer, Cham. https://doi.org/10.1007/978-3-319-69416-0_1

DOI: https://doi.org/10.1007/978-3-319-69416-0_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-69415-3

Online ISBN: 978-3-319-69416-0

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)