Abstract

This chapter covers extensively the methods used to determine the flow velocity from recordings of particle images. After an introduction to the concept of spatial correlation and Fourier methods, an overview of the different PIV evaluation methods is given. Ample discussion is devoted to explaining the details of the discrete spatial correlation operator used for PIV interrogation. The main features associated with the FFT implementation (aliasing, displacement range limit and bias error) are discussed. Methods that enhance the correlation signal in terms of either robustness or accuracy are surveyed. The discussion of ensemble correlation techniques and the use of single-pixel correlation in micro-PIV and macroscopic experiments is a novel addition to the present edition. A detailed description is given of the standard image interrogation based on multigrid image deformation, where the advantages in the treatment of complex flows are discussed as well as the issues in terms of resolution and numerical stability. Another new feature introduced in this chapter is the discussion of recent developments in algorithms for PIV time series as obtained by high-speed PIV systems. Namely, the algorithms to perform Multi-frame PIV, Pyramid Correlation, Fluid Trajectory Correlation and Ensemble Evaluation are treated. Furthermore, a new section that discusses the methods used for individual particle tracking is introduced. The discussion describes the working principles of PTV for planar PIV. The potential of the latter techniques in terms of spatial resolution as well as their limits of applicability in terms of image density are presented.

An overview of the Digital Content to this chapter can be found at [DC5.1].


This chapter treats the fundamental techniques for the evaluation of PIV recordings. In order to extract the displacement information from a PIV recording some sort of interrogation scheme is required. Initially, this interrogation was performed manually on selected images with relatively sparse seeding which allowed the tracking of individual particles [2, 20]. With computers and image processing becoming more commonplace in the laboratory environment it became possible to automate the interrogation process of the particle track images [25, 27, 28, 98]. The application of tracking methods, that is, following the images of an individual tracer particle from exposure to exposure, is most practicable in the low image density case, see Fig. 1.10a. The low image density case often appears in strongly three-dimensional high-speed flows (e.g. turbomachinery) where it is not possible to provide enough tracer particles, or in two-phase flows, where the transport of the particles themselves is investigated. Additionally, the Lagrangian motion of a fluid element can be determined by applying tracking methods [14, 19].

In principle, however, a high data density is desired on the PIV vector fields, especially if strong spatial flow variations need to be resolved or for the comparison of experimental data with the results of numerical calculations. This demand requires a medium concentration of the images of the tracer particles in the PIV recording. Medium image concentration is characterized by the fact that matching pairs of particle images – due to subsequent illuminations – cannot be detected by visual inspection of the PIV recordings, see Fig. 1.10b. Hence, statistical approaches, which will be described in the next sections, were developed. After a statistical evaluation has been performed first, tracking algorithms can be applied additionally in order to achieve sub-window spatial resolution of the measurement, which is known as super resolution PIV [45].

Tracking algorithms have continuously been improved during the past decade. Today, particle tracking is an interesting alternative to statistical PIV evaluation methods as we will see in more detail in Sect. 5.4.

Comparisons between cross-correlation methods and particle tracking techniques together with an assessment of their performance have been performed in the framework of the International PIV Challenge [40, 88, 89, 90].

5.1 Correlation and Fourier Transform

5.1.1 Correlation

The main objective of the statistical evaluation of PIV recordings at medium image density is to determine the displacement between two patterns of randomly distributed particle images, which are stored as a 2D distribution of gray levels. In other areas of metrology (e.g. radar) it is common practice in signal analysis to determine, for example, the shift in time between two (nearly) identical time signals by means of correlation techniques. Details about the mathematical principles of the correlation technique, the basic relations for correlated and uncorrelated signals and the application of correlation techniques in the investigation of time signals can be found in many textbooks [8, 63, 64]. The theory of correlation is easily extended from the one-dimensional (1D time signal) to the two- and three-dimensional (gray level spatial distribution) case. In Chap. 4 the use of auto- and cross-correlation techniques for statistical PIV evaluation has already been explained. Analogously to spectral time signal representations, for a 2D spatial signal I(x, y) the power spectrum \(| \hat{I}(r_x,r_y)|^2\) can be determined where \(r_x,r_y\) are spatial frequencies in orthogonal directions. The basic theorems for correlation and Fourier transform known from the theory of time signals are also valid for the 2D case (with appropriate modifications) [10].

For the calculation of the auto-correlation function two possibilities exist: either direct numerical calculation or indirect calculation (numerically or optically) using the Wiener-Khinchin theorem [8, 10]. This theorem states that the auto-correlation function \(R_{\text {I}}\) and the power spectrum \(| \hat{I}(r_x,r_y)|^2\) of an intensity field I(x, y) are Fourier transforms of each other.
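The equivalence of the two routes can be checked numerically. The following is a minimal sketch, assuming a periodic (circular) auto-correlation on a small random gray-level sample; the function names `autocorr_direct` and `autocorr_fft` are illustrative and not taken from the text.

```python
import numpy as np

def autocorr_direct(img):
    """Circular auto-correlation by explicit summation over all shifts."""
    ny, nx = img.shape
    out = np.empty((ny, nx))
    for dy in range(ny):
        for dx in range(nx):
            # np.roll with negative shifts yields img(i + dy, j + dx)
            shifted = np.roll(img, shift=(-dy, -dx), axis=(0, 1))
            out[dy, dx] = np.sum(img * shifted)
    return out

def autocorr_fft(img):
    """Auto-correlation as the inverse Fourier transform of the power spectrum."""
    power_spectrum = np.abs(np.fft.fft2(img)) ** 2
    return np.fft.ifft2(power_spectrum).real

rng = np.random.default_rng(0)
sample = rng.random((16, 16))        # stand-in for a gray-level sample I(x, y)
r_direct = autocorr_direct(sample)
r_fft = autocorr_fft(sample)
print(np.allclose(r_direct, r_fft))
```

Both routes agree to rounding error. The direct route needs one pass over the sample per shift, which is why it is rarely used for densely seeded 2D recordings.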

The direct numerical calculation is computationally more intensive and has rarely been used for 2D PIV. It becomes a viable approach for recordings with a sparse distribution of particles, such as those encountered in 3D PIV (see Sect. 9.3).

Figure 5.1 illustrates that the auto-correlation function can either be determined directly in the spatial domain (upper half of the figure) or indirectly by Fourier transform FT (left hand side), multiplication, that is the calculation of the squared modulus, in the frequency plane (lower half of the figure), and by inverse Fourier transform FT\(^{-1}\) (right hand side).

5.1.2 Optical Fourier Transform

As already mentioned in Sect. 2.5 the far field diffraction pattern of an aperture transmissivity distribution is represented by its Fourier transform [26, 47, 68]. A lens can be used to transfer the image from the far field close to the aperture. For a mathematical derivation of this result some assumptions have to be made, which are described by the Fraunhofer approximation. These assumptions (large distance between object and image plane, phase factors) can be fulfilled in practical optical setups for Fourier transforms.

Figure 5.2 shows two different configurations for such optical Fourier processors. In the arrangement on the left hand side the object, which would consist of a transparency to be Fourier transformed (e.g. the photographic PIV recording), is placed in front of the so-called Fourier lens (at -f usually). In the second setup (right hand side) the object is placed behind the lens. As derived in the book of Goodman [26] both arrangements differ only by the phase factors of the complex spectrum and a scale factor. Light sensors (photographic film as well as CCD sensors) are only sensitive to the light intensity. The intensity corresponds to the squared modulus of the complex distribution of the electromagnetic field; hence phase differences in the light wave cannot be detected. Therefore, both arrangements shown in Fig. 5.2 can be used for PIV evaluation. The result of the optical Fourier transform (OFT, dashed line in Fig. 5.1) is the power spectrum of the gray value distribution of the transparency.

In the following this will be illustrated for the case of a single pair of particle images. White (transparent) images of a tracer particle on a black (opaque) background will form a double aperture on the photographic PIV recording. With good lens systems the diameter of an image of a tracer particle on the recording is of the order of 20 to \(30\,\upmu \mathrm{m}\). The spacing between the two images of a tracer particle should be approximately 150–250 \(\upmu \mathrm{m}\), in order to obtain optimum conditions for optical evaluation (compare Sect. 3.2). Figure 5.3 shows a cross-sectional cut through the diffraction pattern of a double aperture (parameters are similar to those of the PIV experiment). The figure at the left side shows several peaks of the light intensity distribution under an envelope. The envelope represents the diffraction pattern of a single aperture with the same diameter (i.e. the Airy pattern, see Sect. 2.5). In the 2D presentation the intensity distribution extends in the vertical direction, thus forming a fringe pattern, the so-called Young’s fringes. The fringes are oriented normal to the direction of the displacement of the apertures (tracer images). The spacing between the fringes is inversely proportional to the displacement of the apertures (tracer images): if the distance between the apertures is decreased, the distance between the fringes increases. This is illustrated in the center of Fig. 5.3, where the distance between the two apertures is only half that of the example on the left side. It can be seen that the distance between the fringes is increased by a factor of two. The same inverse relation, which is due to the scaling theorem of the Fourier transform, is valid for the envelope of the diffraction pattern: if the diameter of the aperture (particle images) decreases, the extension of the Airy pattern will increase inversely (see Fig. 5.3, right side). As a consequence, more fringes can be detected in those fringe patterns which are generated by smaller apertures (particle images). This is one reason why small and well focused particle images increase the quality and detection probability in the evaluation of PIV recordings. Due to another property of the Fourier transform, the shift theorem, the characteristic shape of the intensity pattern does not change if the position of the particle image pairs is changed inside the interrogation spot. Increasing the number of particle image pairs also does not change the Young’s fringe pattern significantly. Of course this is not true for the case of just two image pairs: two fringe systems of equal intensity will overlap, allowing no unambiguous evaluation.
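The inverse relation between aperture spacing and fringe spacing, as well as the shift theorem, can be illustrated with a one-dimensional digital analogue. This is only a sketch under simplifying assumptions: the two particle images are idealized as single-sample peaks, so the Airy envelope is absent, and the helper `pair_power_spectrum` is a hypothetical name.

```python
import numpy as np

def pair_power_spectrum(n, position, separation):
    """Power spectrum of two unit peaks that are `separation` samples apart."""
    signal = np.zeros(n)
    signal[position] = 1.0
    signal[position + separation] = 1.0
    return np.abs(np.fft.fft(signal)) ** 2

n = 256
k = np.arange(n)
p16 = pair_power_spectrum(n, 40, 16)         # separation of 16 samples
p8 = pair_power_spectrum(n, 40, 8)           # half the separation
p16_moved = pair_power_spectrum(n, 100, 16)  # same pair at another position

# Analytic fringe pattern of a double peak: 2 + 2 cos(2 pi k d / n),
# i.e. a fringe period of n / d frequency samples.
fringes16 = 2.0 + 2.0 * np.cos(2.0 * np.pi * k * 16 / n)
print(np.allclose(p16, fringes16), np.allclose(p16, p16_moved))
```

With a separation of 16 samples the first dark fringe lies at frequency index 8, with a separation of 8 samples at index 16, so halving the separation doubles the fringe spacing. Moving the pair leaves the power spectrum unchanged.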

5.1.3 Digital Fourier Transform

The digital Fourier transform is the basic tool of modern signal and image processing. A number of textbooks describe the details [8, 10, 36, 109]. The breakthrough of the digital Fourier transform is due to the development of fast digital computers and to the development of efficient algorithms for its calculation (Fast Fourier Transformation, FFT) [8, 10, 12, 109]. Those aspects of the digital Fourier transform relevant for the understanding of digital PIV evaluation will be described in Sect. 5.3.

5.2 Overview of PIV Evaluation Methods

In the following the different methods for the evaluation of PIV recordings by means of correlation and Fourier techniques will be summarized.

Figure 5.4 presents a flow chart of the fully digital auto-correlation method, which can be implemented in a straight-forward manner following the equations given in Chap. 4. The PIV recording is sampled with comparatively small interrogation windows (typically 30–250 samples in each direction). For each window the auto-correlation function is calculated and the position of the displacement peak in the correlation plane is determined. The calculation of the auto-correlation function is carried out either in the spatial domain (upper part of Fig. 5.1) or – in most cases – through the use of FFT algorithms.

If the PIV recording system allows the employment of the double frame/single exposure recording technique (see Fig. 3.2) the evaluation of the PIV recordings is performed by cross-correlation (Fig. 5.5). In this case, the cross-correlation between two interrogation windows sampled from the two recordings is calculated. As will be explained later in Sect. 5.3, it is advantageous to offset both these samples according to the mean displacement of the tracer particles between the two illuminations. This reduces the in-plane loss of correlation and therefore increases the correlation peak strength. The cross-correlation function is generally computed numerically by means of efficient FFT algorithms.

Single frame/double exposure recordings may also be evaluated by a cross-correlation approach instead of auto-correlation by choosing interrogation windows slightly displaced with respect to each other in order to compensate for the in-plane displacement of particle images. Depending on the different parameters, auto-correlation peaks may also appear in the correlation plane in addition to the cross-correlation peak.

Since computer memory and computation speed were limited at the beginning of the 1980s, PIV work was strongly promoted by the existence of optical evaluation methods. The most widely used method was the Young’s fringes method [DC5.2], which in fact is an optical-digital method, employing optical as well as digital Fourier transforms for the calculation of the correlation function.

In the next section the most commonly used and very flexible digital evaluation methods will be discussed in more detail.

5.3 PIV Evaluation

With the wide-spread introduction of digital imaging and improved computing capabilities the optical evaluation approaches used for the analysis of photographic PIV recordings quickly became obsolete. Initially a desktop slide scanner for the digitization of the photographic recording replaced the complex opto-mechanical interrogation assemblies with computer-based interrogation algorithms. Then rapid advances in electronic imaging further allowed for a replacement of the rather cumbersome photographic recording process. In the following we describe the necessary steps in the fully digital analysis of PIV recordings using statistical methods. Initially, the focus is on the analysis of single exposed image pairs, that is single exposure/double frame PIV, by means of cross-correlation. The analysis of multiple exposure/single frame PIV recordings can be viewed as a special case of the former.

5.3.1 Discrete Spatial Correlation in PIV Evaluation

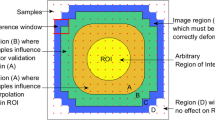

Before introducing the cross-correlation method in the evaluation of a PIV image, the task at hand should be defined from the point of view of linear signal or image processing. First of all let us assume we are given a pair of images containing particle images as recorded from a light sheet in a traditional PIV recording geometry. The particles are illuminated stroboscopically so that they do not produce streaks in the images. The second image is recorded a short time later during which the particles will have moved according to the underlying flow (for the time being ignoring effects such as particle lag, three-dimensional motion, etc.). Given this pair of images, the most we can hope for is to measure the straight-line displacement of the particle images since the curvature or acceleration information cannot be obtained from a single image pair. Further, the seeding density is so high and homogeneous that it is difficult to match up discrete particles. In some cases the spatial translation of groups of particles can be observed. The image pair can yield a field of linear displacement vectors where each vector is formed by analyzing the movement of localized groups of particles. In practice, this is accomplished by extracting small samples or interrogation windows and analyzing them statistically (Fig. 5.6).

From a signal (image) processing point of view, the first image may be considered the input to a system whose output produces the second image of the pair (Fig. 5.7). The system’s transfer function, \(\varvec{H}\), converts the input image I to the output image \(I'\) and comprises the displacement function \(\varvec{d}\) and an additive noise process, N. The function of interest is a shift by the vector \(\varvec{d}\) as it is responsible for displacing the particle images from one image to the next. This function can be described, for instance, by a convolution with \(\delta ({\varvec{x}}-{\varvec{d}})\). The additive noise process, N, in Fig. 5.7 models effects due to recording noise and three-dimensional flow among other things. If both \(\varvec{d}\) and N are known, it should then be possible to use them as transfer functions for the input image I to produce the output image \(I'\). With both images I and \(I'\) known the aim is to estimate the displacement field \(\varvec{d}\) while excluding the effects of the noise process N. The fact that the signals (i.e. images) are not continuous – the dark background cannot provide any displacement information – makes it necessary to estimate the displacement function \(\varvec{d}\) using a statistical approach based on localized interrogation windows (or samples).

One possible scheme to recover the local displacement function would be to deconvolve the image pair. In principle this can be accomplished by dividing the respective Fourier transforms by each other. This method works when the noise in the signals is insignificant. However, the noise associated with realistic recording conditions quickly degrades the data yield. Also the signal peak is generally too sharp to allow for a reliable sub-pixel estimation of the displacement.

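Rather than estimating the displacement function \(\varvec{d}\) analytically, the method of choice is to locally find the best match between the images in a statistical sense. This is accomplished through the use of the discrete cross-correlation function, whose integral formulation was already described in Chap. 4:

\[ R_{\text {II}}(x,y) = \sum _{i=-K}^{K} \sum _{j=-L}^{L} I(i,j)\, I'(i+x,j+y) \tag{5.1} \]

where the sums extend over the \((2K+1)\times (2L+1)\) pixel of the template.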

The variables I and \(I'\) are the samples (e.g. intensity values) as extracted from the images where \(I'\) can be taken larger than the template I. Essentially the template I is linearly ‘shifted’ around in the sample \(I'\) without extending over edges of \(I'\). For each choice of sample shift (x, y), the sum of the products of all overlapping pixel intensities produces one cross-correlation value \(R_{{\text {II}}}(x,y)\). By applying this operation for a range of shifts \((-M\le x\le +M, -N\le y \le +N)\), a correlation plane the size of \((2 M + 1) \times (2 N + 1)\) is formed. This is shown graphically in Fig. 5.8. For shift values at which the samples’ particle images align with each other, the sum of the products of pixel intensities will be larger than elsewhere, resulting in a high cross-correlation value \(R_{\text {II}}\) at this position (see also Fig. 5.9). Essentially the cross-correlation function statistically measures the degree of match between the two samples for a given shift. The highest value in the correlation plane can then be used as a direct estimate of the particle image displacement which will be discussed in detail in Sect. 5.3.5.

Fig. 5.9 The cross-correlation function \(R_{{\text {II}}}\) (right) as computed from real data by correlating a smaller template I (\(32\times 32\,\text {pixel}\)) with a larger sample \(I'\) (\(64\times 64\,\text {pixel}\)). The mean shift of the particle images is approximately 12 pixel to the right. The approximate location of best match of I within \(I'\) is indicated as a white rectangle.
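The shifting of a template within a larger sample can be sketched directly in code. This is an illustrative implementation rather than an optimized one, assuming a random gray-level pattern as a stand-in for particle images; the sizes, the imposed shift and the name `cross_correlate_direct` are choices made for this example.

```python
import numpy as np

def cross_correlate_direct(template, sample):
    """Linear cross-correlation of a template with a larger sample.

    Returns the correlation plane and the shift (in pixel) that belongs to
    plane index (0, 0); shifts are counted from the centred template position.
    """
    th, tw = template.shape
    sh, sw = sample.shape
    ny, nx = sh - th + 1, sw - tw + 1           # admissible shifts per axis
    plane = np.empty((ny, nx))
    for iy in range(ny):
        for ix in range(nx):
            # sum of the products of all overlapping pixel intensities
            plane[iy, ix] = np.sum(template * sample[iy:iy + th, ix:ix + tw])
    origin = (-(ny // 2), -(nx // 2))
    return plane, origin

rng = np.random.default_rng(1)
sample = rng.random((32, 32))                   # stand-in for I'
true_shift = (3, 5)                             # (dy, dx), chosen for the demo
y0, x0 = 8 + true_shift[0], 8 + true_shift[1]
template = sample[y0:y0 + 16, x0:x0 + 16]       # stand-in for I

plane, origin = cross_correlate_direct(template, sample)
iy, ix = np.unravel_index(np.argmax(plane), plane.shape)
measured_shift = (int(iy) + origin[0], int(ix) + origin[1])
multiplications = plane.size * template.size    # cost of one correlation plane
print(measured_shift, multiplications)
```

Even for this small example (a \(16\times 16\) template and shifts of \(\pm 8\) pixel) one correlation plane already costs \(17 \cdot 17 \cdot 256\) multiplications, which illustrates why the direct approach scales poorly.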

Upon examination of this direct implementation of the cross-correlation function two things are obvious: first, the number of multiplications per correlation value increases in proportion to the interrogation window (or sample) area, and second, the cross-correlation method inherently recovers linear shifts only. No rotations or deformations can be recovered by this first order method. Therefore, the cross-correlation between two particle image samples will only yield the displacement vector to first order, that is, the average linear shift of the particles within the interrogation window. This means that the interrogation window size should be chosen sufficiently small such that the higher-order effects can be neglected.

The first observation concerning the quadratic increase in multiplications with sample size imposes a quite substantial computational effort. In a typical PIV interrogation the sampling windows cover of the order of several thousand pixel while the dynamic range in the displacement may be as large as \(\pm 10\) to \(\pm 20\,\text {pixel}\) which would require up to one million multiplications and summations to form only one correlation plane. Clearly, taking into account that several thousand displacement vectors can be obtained from a single PIV recording, a more efficient means of computing the correlation function is required.

5.3.1.1 Frequency Domain Based Calculation of Correlation

The alternative to calculating the cross-correlation directly using Eq. (5.1) is to take advantage of the correlation theorem which states that the cross-correlation of two functions is equivalent to a complex conjugate multiplication of their Fourier transforms:

\[ R_{\text {II}} \Longleftrightarrow \hat{I} \cdot \hat{I'}^{*} \tag{5.2} \]

where \(\hat{I}\) and \(\hat{I'}\) are the Fourier transforms of the functions I and \(I'\), respectively. In practice the Fourier transform is efficiently implemented for discrete data using the fast Fourier transform or FFT which reduces the computation from \(\text {O}[N^2]\) operations to \(\text {O}[N \log _2 N]\) operations [12, 36, 66]. The tedious two-dimensional correlation process of Eq. (5.1) can be reduced to computing two two-dimensional FFTs on equal sized samples of the image followed by a complex-conjugate multiplication of the resulting Fourier coefficients. These are then inversely Fourier transformed to produce the actual cross-correlation plane which has the same spatial dimensions, \(N\times N\), as the two input samples. Compared to \(\text {O}[N^4]\) for the direct computation of the two-dimensional correlation the process is reduced to \(\text {O}[N^2 \log _2 N]\) operations. The computational efficiency of this implementation can be increased even further by observing the symmetry properties between real valued functions and their Fourier transform, namely the real part of the transform is symmetric: \(\text {Re}(\hat{I}_i) = \text {Re}(\hat{I}_{-i})\), while the imaginary part is antisymmetric: \(\text {Im}(\hat{I}_i) = -\text {Im}(\hat{I}_{-i})\). In practice two real-to-complex, two-dimensional FFTs and one complex-to-real inverse, two-dimensional FFT are needed, each of which requires approximately half the computation time of standard FFTs (Fig. 5.10). A further increase in computation speed can of course be achieved by optimizing the FFT routines, for example by using lookup tables for the required data, reordering and weighting coefficients and/or fine tuning the machine level code [22, 23].
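The sequence of two real-to-complex FFTs, a complex-conjugate multiplication and one complex-to-real inverse FFT can be sketched with NumPy's real-input transforms. This is a minimal sketch, assuming equal sized samples and a purely circular shift between them; the function name `cross_correlate_fft` and the test pattern are illustrative.

```python
import numpy as np

def cross_correlate_fft(a, b):
    """Circular cross-correlation R(x, y) = sum_ij a(i, j) b(i + x, j + y)."""
    fa = np.fft.rfft2(a)                       # real-to-complex FFT of I
    fb = np.fft.rfft2(b)                       # real-to-complex FFT of I'
    r = np.fft.irfft2(np.conj(fa) * fb, s=a.shape)
    return np.fft.fftshift(r)                  # zero shift moves to (N//2, N//2)

rng = np.random.default_rng(3)
a = rng.random((32, 32))
b = np.roll(a, shift=(3, 5), axis=(0, 1))      # pattern displaced by (dy, dx) = (3, 5)

r = cross_correlate_fft(a, b)
iy, ix = np.unravel_index(np.argmax(r), r.shape)
displacement = (int(iy) - 16, int(ix) - 16)
print(displacement)
```

The value at the centre of the shifted plane equals the plain sum of products `np.sum(a * b)`, which gives a quick consistency check against the direct definition.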

The use of two-dimensional FFTs for the computation of the cross-correlation plane has a number of properties whose effects have to be dealt with.

Fixed sample sizes: The FFT’s computational efficiency is mainly derived by recursively implementing a symmetry property between the even and odd coefficients of the discrete Fourier transform (the Danielson–Lanczos lemma [12, 66]). The most common FFT implementation requires the input data to have a base-2 dimension (i.e. \(16\times 16\,\text {pixel}\) or \(32\times 32\,\text {pixel}\) samples). For reasons explained below it generally is not possible to simply pad a sample with zeroes to make it a base-2 sized sample.

Periodicity of data: By definition, the Fourier transform is an integral (or sum) over a domain extending from negative infinity to positive infinity. In practice however, the integrals (or sums) are computed over finite domains which is justified by assuming the data to be periodic, that is, the signal (i.e. image sample) continually repeats itself in all directions. While for spectral estimation there exist a variety of methods to deal with the associated artifacts, such as windowing, their use in the computation of the cross-correlation will introduce systematic errors or will even hide the correlation signal in noise.

One of these methods, zero padding, which entails extending the sample size to four times the original size by filling in zeroes, will perform poorly because the data (i.e. image sample) generally consists of a nonzero (noisy) background on which the signal (i.e. particle images) is overlaid. The edge discontinuity brought about in the zero padding process contaminates the spectra of the data with high frequency noise which in turn deteriorates the cross-correlation signal. The slightly more advanced technique of FFT data windowing removes the effects of the edge discontinuity, but leads to a nonuniform weighting of the data in the correlation plane and to a bias of the recovered displacement vector. The treatment of this systematic error is described in more detail below.

Aliasing: Since the input data sets to the FFT-based correlation algorithm are assumed to be periodic, the correlation data itself is also periodic. If the data of length N contains a signal (i.e. displacements) exceeding half the sample size N / 2, then the correlation peak will be folded back into the correlation plane to appear on the opposite side. For a displacement \(d_{\text {x,true}} > N/2\), the measured value will be \(d_{\text {x,meas.}} = d_{\text {x,true}} - N\). In this case the sampling criterion (Nyquist-Shannon sampling theorem) has been violated causing the measurement to be aliased. The proper solution to this problem is to either increase the interrogation window size, or, if possible, reduce the laser pulse delay, \(\varDelta t\).
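The fold-back can be reproduced with a short numerical experiment. The sketch below assumes an idealized periodic sample, so that the displacement is imposed with `np.roll`; in a real recording particle images leave the window instead of re-entering on the other side. The helper names `measured_shift_fft` and `wrapped` are hypothetical.

```python
import numpy as np

def measured_shift_fft(a, b):
    """Integer peak location of the circular cross-correlation of a and b."""
    r = np.fft.irfft2(np.conj(np.fft.rfft2(a)) * np.fft.rfft2(b), s=a.shape)
    r = np.fft.fftshift(r)
    iy, ix = np.unravel_index(np.argmax(r), r.shape)
    return int(iy) - a.shape[0] // 2, int(ix) - a.shape[1] // 2

def wrapped(d, n):
    """Displacement reported by an n-point periodic correlation for a true shift d."""
    return (d + n // 2) % n - n // 2

n = 32
rng = np.random.default_rng(2)
a = rng.random((n, n))
small = measured_shift_fft(a, np.roll(a, 10, axis=1))   # true shift below N/2
large = measured_shift_fft(a, np.roll(a, 20, axis=1))   # true shift above N/2
print(small, large, wrapped(20, n))
```

For \(N = 32\) a true shift of 20 pixel is reported as \(20 - 32 = -12\) pixel, whereas a shift of 10 pixel is recovered correctly.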

Displacement range limitation: As mentioned before the sample size N limits the maximum recoverable displacement range to \(\pm N/2\). In practice however, the signal strength of the correlation peak will decrease with increasing displacements, due to the proportional decrease in possible particle matches. Earlier literature reports a value of N / 3 to be an adequate limit for the recoverability of the displacement vector [107]. A more conservative, but widely adopted limit is N / 4, sometimes referred to as the one-quarter rule [43]. However, when iterative evaluation with window shifting is used, these requirements are essential only for the first pass and become obsolete for all subsequent passes, as we will see later.

Bias error: Another side effect of the periodicity of the correlation data is that the correlation estimates are biased. With increasing shifts less data are actually correlated with each other since the periodically continued data of the correlation template makes no contribution to the actual correlation value. Values on the edge of the correlation plane are computed from only the overlapping half of the data. Unless the correlation values are weighted accordingly, the displacement estimate will be biased to a lower value (Fig. 5.11). This error decreases with increasing sample sizes. Larger particle images, and with them wider correlation peaks, are associated with larger bias errors. The proper weighting function to account for this biasing effect will be described in Sect. 5.3.5.
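The effect can be made concrete with a one-dimensional toy model. The sketch below rests on assumptions that are not taken from the text: uniformly weighted windows, for which the overlapping fraction at shift s is \(1 - |s|/N\), an ideal Gaussian correlation peak, and a three-point Gaussian peak estimator. The weighting actually recommended is the one described in Sect. 5.3.5.

```python
import numpy as np

def overlap_weights_1d(n):
    """Fraction of an n-pixel window that still overlaps at each integer shift."""
    shifts = np.arange(n) - n // 2             # -n/2 ... n/2 - 1 (fftshift layout)
    return 1.0 - np.abs(shifts) / n

def gauss3(r, k):
    """Three-point Gaussian sub-pixel peak estimate around index k."""
    lm, l0, lp = np.log(r[k - 1]), np.log(r[k]), np.log(r[k + 1])
    return k + (lm - lp) / (2.0 * lm - 4.0 * l0 + 2.0 * lp)

n = 32
true_shift = 8.3                               # assumed displacement in pixel
width = 2.0                                    # assumed peak width (std. dev.)
shifts = np.arange(n) - n // 2
ideal_peak = np.exp(-(shifts - true_shift) ** 2 / (2.0 * width ** 2))

weights = overlap_weights_1d(n)
biased_peak = ideal_peak * weights             # what an unweighted estimate sees
k = int(np.argmax(biased_peak))
est_biased = gauss3(biased_peak, k) - n // 2
est_corrected = gauss3(biased_peak / weights, k) - n // 2
print(est_biased, est_corrected)
```

In this model the uncorrected estimate falls below the true shift, and dividing by the overlap weights restores it. Repeating the experiment with a larger `width` increases the bias, in line with the remark on wider correlation peaks.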

If all of the above points are properly handled, an FFT-based interrogation algorithm as shown in Fig. 5.10 will reliably provide the necessary correlation data from which the displacement data can be retrieved. For the reasons given above, this implementation of the cross-correlation function is sometimes referred to as circular cross-correlation compared to the linear cross-correlation of Eq. (5.1).

5.3.1.2 Calculation of the Correlation Coefficient

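For a number of cases it may be useful to quantify the degree of correlation between the two image samples. The standard cross-correlation function Eq. (5.1) will yield different maximum correlation values for the same degree of matching because the function is not normalized. For instance, samples with many (or brighter) particle images will produce much higher correlation values than interrogation windows with fewer (or weaker) particle images. This makes a comparison of the degree of correlation between the individual interrogation windows impossible. The cross-correlation coefficient function normalizes the cross-correlation function Eq. (5.1) properly:

\[ c_{\text {II}}(x,y) = \frac{C_{\text {II}}(x,y)}{\sqrt{\sigma _{\text {I}}(x,y)}\, \sqrt{\sigma _{{\text {I}}'}(x,y)}} \tag{5.3} \]

where

\[ C_{\text {II}}(x,y) = \sum _{i=0}^{M} \sum _{j=0}^{N} \left[ I(i,j) - \mu _I \right] \left[ I'(i+x,j+y) - \mu _{{\text {I}}'}(x,y) \right] \]

\[ \sigma _{\text {I}}(x,y) = \sum _{i=0}^{M} \sum _{j=0}^{N} \left[ I(i,j) - \mu _I \right] ^2 \]

\[ \sigma _{{\text {I}}'}(x,y) = \sum _{i=0}^{M} \sum _{j=0}^{N} \left[ I'(i+x,j+y) - \mu _{{\text {I}}'}(x,y) \right] ^2 \]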

The value \(\mu _I\) is the average of the template and is computed only once while \(\mu _{{\text {I}}'}(x,y)\) is the average of \(I'\) coincident with the template I at position (x, y). It has to be computed for every position (x, y). Equation (5.3) is considerably more difficult to implement using an FFT-based approach and is usually computed directly in the spatial domain. In spite of its computational complexity, the equation does permit the samples to be of unequal size which can be very useful in matching up small groups of particles. Nevertheless a first order approximation to the proper normalization is possible if the interrogation windows are of equal size and are not zero-padded:

- Step 1: Sample the images at the desired locations and compute the mean and standard deviations of each.
- Step 2: Subtract the mean from each of the samples.
- Step 3: Compute the cross-correlation function using 2D-FFTs as displayed in Fig. 5.10.
- Step 4: Divide the cross-correlation values by the standard deviations of the original samples. Due to this normalization the resulting values will fall in the range \(-1 \le c_{\text {II}}\le 1\).
- Step 5: Proceed with the correlation peak detection taking into account all artifacts present in FFT-based cross-correlation.
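The steps above can be sketched as follows. This is a minimal sketch, assuming equal sized, non-padded windows with non-zero contrast; the division in Step 4 is taken here to include the number of pixels, because the FFT-based correlation returns sums rather than averages. The function name `correlation_coefficient_fft` is illustrative.

```python
import numpy as np

def correlation_coefficient_fft(a, b):
    """First-order normalized circular cross-correlation of two equal windows."""
    # Steps 1 and 2: means and standard deviations, then mean subtraction
    a0 = a - a.mean()
    b0 = b - b.mean()
    # Step 3: cross-correlation by two forward FFTs and one inverse FFT
    r = np.fft.irfft2(np.conj(np.fft.rfft2(a0)) * np.fft.rfft2(b0), s=a.shape)
    # Step 4: normalize by pixel count and both standard deviations
    c = r / (a.size * a.std() * b.std())
    return np.fft.fftshift(c)

rng = np.random.default_rng(4)
a = rng.random((32, 32))
b = np.roll(a, shift=(2, -3), axis=(0, 1))

c = correlation_coefficient_fft(a, b)
c_scaled = correlation_coefficient_fft(a, 5.0 * b + 7.0)   # brighter second image
# Step 5 (integer part only): locate the peak
iy, ix = np.unravel_index(np.argmax(c), c.shape)
print(int(iy) - 16, int(ix) - 16, c.max())
```

A perfectly matching pair yields a peak value of 1, and changing the brightness or offset of one image leaves the coefficient plane unchanged, which is what makes the values comparable between interrogation windows.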

5.3.2 Correlation Signal Enhancement

5.3.2.1 Image Pre-processing

The correlation signal is strongly affected by variations of the image intensity. The correlation peak is dominated by brighter particle images with weaker particle images having a reduced influence. Non-uniform particle image intensities, due to light-sheet non-uniformities or pulse-to-pulse variations, as well as irregular particle shape, out-of-plane motion, etc. also introduce noise in the correlation plane. For this reason image enhancement prior to processing the image is often advantageous. The main goal of the applied filters is to enhance particle image contrast and to bring particle image intensities to a similar signal level such that all particle images have a similar contribution in the correlation function [85, 102, 108].

Among the image enhancement methods, background subtraction from the PIV recordings reduces the effects of laser flare and other stationary image features. This background image can either be recorded in the absence of seeding, or, if this is not possible, through computation of an average or minimum intensity image from a sufficiently large number of raw PIV recordings (at least 20–50). These images can also be used to extract areas to be masked.
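A background estimate from a set of raw recordings can be sketched in a few lines. The example assumes a synthetic stack in which a stationary flare is overlaid by one bright pixel per frame, standing in for moving particle images; the function names and all numerical values are illustrative.

```python
import numpy as np

def estimate_background(stack, mode="min"):
    """Pixel-wise minimum or mean over a stack of raw recordings."""
    stack = np.asarray(stack, dtype=float)
    if mode == "min":
        return stack.min(axis=0)
    if mode == "mean":
        return stack.mean(axis=0)
    raise ValueError("mode must be 'min' or 'mean'")

def subtract_background(stack, background):
    """Remove the background and clip negative intensities to zero."""
    return np.clip(np.asarray(stack, dtype=float) - background, 0.0, None)

n_frames, size = 24, 32
yy, xx = np.mgrid[0:size, 0:size]
flare = 40.0 * np.exp(-((xx - 5) ** 2 + (yy - 20) ** 2) / 50.0)  # stationary feature
stack = np.tile(flare, (n_frames, 1, 1))
for k in range(n_frames):
    stack[k, k, k + 3] += 100.0        # one "particle" per frame at a new pixel

background = estimate_background(stack, "min")
cleaned = subtract_background(stack, background)
print(np.allclose(background, flare), cleaned[0, 0, 3])
```

The minimum image recovers the stationary flare exactly in this idealized case, whereas the mean image would retain a faint trace of every particle.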

A filter-based approach to image enhancement is to high-pass filter the images such that the background variations with low spatial frequency are removed leaving the particle images unaffected. In practice this is realized by calculating a low-passed version of the original image and subtracting it from the original. Here the filter kernel width should be larger than the diameter of the particle images: \(k_{\text {smooth}} > d_\tau \).
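A possible realization of this subtraction is sketched below. It assumes a simple box (moving average) filter as the low-pass step and a kernel width of 7 pixel, chosen to exceed a particle image diameter of a few pixel; neither choice is prescribed by the text, and the function names are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_smooth(img, k):
    """Moving average over k x k pixel (k odd), with reflected borders."""
    if k % 2 == 0:
        raise ValueError("k must be odd")
    pad = k // 2
    padded = np.pad(np.asarray(img, dtype=float), pad, mode="reflect")
    return sliding_window_view(padded, (k, k)).mean(axis=(2, 3))

def high_pass(img, k=7):
    """Original image minus its low-passed version."""
    return np.asarray(img, dtype=float) - box_smooth(img, k)

size = 32
yy, xx = np.mgrid[0:size, 0:size]
img = 50.0 + 2.0 * xx              # slowly varying background (linear ramp)
img[16, 16] += 100.0               # one bright pixel standing in for a particle image
filtered = high_pass(img, k=7)
print(filtered[7, 7], filtered[16, 16])
```

Away from the borders the ramp is removed completely, while the bright pixel keeps almost its full amplitude (it loses only the 1/49 share that the box filter assigns to it).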

Thresholding or image binarization, possibly in combination with prior high-pass filtering, results in images where all particles have the same intensity and thus have equal contribution to the correlation function. This binarization, however, is associated with an increase in the measurement uncertainty.

The application of a narrow-width, low-pass filter may be suitable to remove high-frequency noise (e.g. camera shot noise, pixel anomalies, digitization artifacts, etc.) from the images. It also results in a widening of the correlation peaks, thus allowing a better performance of the sub-pixel peak fitting algorithm (see Sect. 6.2.2). In cases where images are under-sampled (\(d_\tau < 2\,\text {pixel}\)) it reduces the so-called peak-locking effect, but also increases the measurement uncertainty.

Range clipping is another method of improving the data yield. The intensity capping technique [85], which was found to be both very effective and easy to implement, relies on setting intensities exceeding a certain threshold to the threshold value. Although the optimal threshold value may vary with the image content, a suitable value can be calculated for the entire image from the median grayscale intensity, \(I_{\mathrm {median}}\), and the standard deviation of the image intensity, \(\sigma _I\): \(I_{\text {clip}} = I_{\text {median}} + n \sigma _I\). The scaling factor n is user defined and generally lies in the range \(0.5< n < 2\).
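A minimal sketch of intensity capping with a single global threshold is given below. The function name `intensity_cap` is hypothetical; the threshold follows the relation \(I_{\text {clip}} = I_{\text {median}} + n \sigma _I\) stated above.

```python
import numpy as np

def intensity_cap(image, n=1.0):
    """Cap intensities above I_clip = median + n * standard deviation.

    n is the user-defined scaling factor (typically 0.5 < n < 2).
    Returns the capped image and the threshold that was applied.
    """
    img = np.asarray(image, dtype=float)
    i_clip = np.median(img) + n * img.std()
    # Pixels brighter than the threshold are set to the threshold value.
    return np.minimum(img, i_clip), i_clip
```

Only the brightest pixels are modified, so dim particle images and the background remain untouched.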

A similar approach to intensity capping is to perform dynamic histogram stretching, in which the intensity range of the output image is limited by upper and lower thresholds. These thresholds are calculated from the image histogram by excluding a certain percentage of pixels from the upper and the lower end of the histogram, respectively.
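Histogram stretching can be sketched with percentiles as shown below. The function name `histogram_stretch` and the default cut-offs of 1% at either end are assumptions made for illustration only.

```python
import numpy as np

def histogram_stretch(image, lower_pct=1.0, upper_pct=99.0, eps=1e-12):
    """Stretch intensities between two histogram percentiles to [0, 1].

    lower_pct / upper_pct: percentiles defining the lower and upper
    thresholds (assumed defaults); values outside are clipped.
    """
    img = np.asarray(image, dtype=float)
    lo, hi = np.percentile(img, [lower_pct, upper_pct])
    # eps guards against division by zero for images without contrast.
    return np.clip((img - lo) / max(hi - lo, eps), 0.0, 1.0)
```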

While the previous two methods provide contrast normalization in a global sense, the min/max filter approach suggested by Westerweel [102] also adjusts to variations of image contrast throughout the image. The method relies on computing envelopes of the local minimum and maximum intensities using a given tile size. Each pixel intensity is then stretched (normalized) using the local values of the envelopes. In order not to affect the statistics of the image the tile size should be larger than the particle image diameter, yet small enough to eliminate spatial variations in the background [102]. Sizes of \(7 \times 7\) to \(15 \times 15\) are generally suitable.
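The local contrast normalization can be sketched as follows. This is a simplified variant that uses the raw sliding-window minimum and maximum as envelopes, without any smoothing of the envelopes; the name `minmax_normalize` and the default tile size are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def minmax_normalize(image, tile=7, eps=1e-6):
    """Stretch each pixel between the local minimum and maximum envelopes.

    tile: odd tile size in pixel (7 to 15 is suggested in the text).
    eps:  lower bound on the local contrast to avoid division by zero.
    """
    img = np.asarray(image, dtype=float)
    pad = tile // 2
    padded = np.pad(img, pad, mode="edge")
    windows = sliding_window_view(padded, (tile, tile))
    lower = windows.min(axis=(-2, -1))  # local minimum envelope
    upper = windows.max(axis=(-2, -1))  # local maximum envelope
    return (img - lower) / np.maximum(upper - lower, eps)
```

In this sketch two particle images of different brightness on different background levels are both mapped to a peak value of one, which is the intended equalization of their contribution to the correlation.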

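A very robust method of image contrast enhancement capable of dealing with non-uniform image background intensity is to normalize the image using the local mean and the local standard deviation as proposed in [74]. First a Gaussian filtered version of the image, \(M_I(x,y)\), is subtracted from the original image I(x, y); the result is then divided by an image of the local standard deviation \(\sigma _I(x,y)\):

\[ I_N(x,y) = \frac{I(x,y) - M_I(x,y)}{\sigma _I(x,y)} = \frac{I - G_{\sigma _1} \otimes I}{\sqrt{G_{\sigma _2} \otimes \left( I - G_{\sigma _1} \otimes I\right) ^2}} \]

where \(I_N\) is the normalized image, \(G_{\sigma }\) denotes a Gaussian filter kernel, \(\otimes \) the convolution, and \(\sigma _1\) and \(\sigma _2\) are user defined kernel sizes for the Gaussian filter operations with typically \(\sigma _1 < \sigma _2\).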
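A possible NumPy-only sketch of this local normalization is shown below. It assumes that the local standard deviation is estimated as the square root of the Gaussian-filtered squared residual; the names `gaussian_blur` and `local_normalize` and the default kernel sizes are hypothetical.

```python
import numpy as np

def gaussian_blur(image, sigma):
    """Separable Gaussian filter with reflecting borders."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma) ** 2)
    kernel /= kernel.sum()
    padded = np.pad(np.asarray(image, dtype=float), radius, mode="reflect")
    # Filter along rows first, then along columns.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def local_normalize(image, sigma1=2.0, sigma2=4.0, eps=1e-12):
    """Subtract the local mean and divide by the local standard deviation."""
    img = np.asarray(image, dtype=float)
    residual = img - gaussian_blur(img, sigma1)
    local_std = np.sqrt(gaussian_blur(residual ** 2, sigma2))
    return residual / np.maximum(local_std, eps)
```

By construction the result is insensitive to a linear change of gain and offset of the input image, which is what makes the method robust against non-uniform background and illumination.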

Normalization by the time-average image intensity is also an effective technique to remove stationary background with time-varying intensity. By decomposing a given raw image sequence into its POD modes, Mendez et al. [56] have shown that the PIV particle pattern can be recovered by filtering out a few of the first POD modes, thus rejecting unwanted features such as wall reflections, noise and non-uniform illumination.

When PIV time series are available, unwanted light reflections can be removed via a high-pass filter in the frequency domain [83]. A robust approach to suppress background reflections is based on variants of the local normalization method [55]. The specific problem of near-wall measurements contaminated by surface reflections requires dedicated approaches, including for instance the treatment of the solid object region as discussed in [111].

When applying any of the previously described contrast enhancement methods, it should be remembered that modifications of the image intensities may also affect the image statistics, which in turn can result in increased measurement uncertainties. This has to be balanced against the increase in data yield. Selective application of contrast enhancing filters in areas of low data yield would be the logical consequence. It should also be made clear that strong reflections near walls can only be alleviated through processing as long as the image is not overexposed (saturated) in these areas. Care should be taken to minimize these reflections while acquiring the PIV image data.

5.3.2.2 Phase-Only Correlation

Improvement of the correlation signal may be achieved through adequate filters applied in the spectral domain (Fig. 5.12). Since most PIV correlation implementations rely on FFT based processing, spectral filtering is easily accomplished with very little computational overhead. A processing technique proposed by Wernet [101] called symmetric phase only filtering (SPOF) is based on phase-only filtering techniques which are commonly found in optical correlator systems. SPOF has been shown to improve the signal-to-noise ratio in PIV cross-correlation. In practice these filters also normalize the contribution of all sampled particle images, thus providing contrast normalization. In addition the influence of wall reflections (streaks or lines) and other undesired artifacts can be reduced. According to [85] SPOF yields more accurate results in the presence of DC-background noise, but is not as well suited as the intensity capping technique in reducing the displacement bias influence of bright spots with high spatial frequencies.
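The following sketch shows one common formulation of symmetric phase-only filtering, in which the cross-spectrum is weighted by the inverse square root of the product of the two spectral magnitudes. The function name `spof_correlate` and the regularization constant `eps` are assumptions; the exact filter used in [101] should be checked against that reference.

```python
import numpy as np

def spof_correlate(a, b, eps=1e-12):
    """FFT-based cross-correlation with a symmetric phase-only weight.

    The cross-spectrum conj(F_a) * F_b is divided by sqrt(|F_a| * |F_b|),
    which equalizes the contribution of the spectral components.
    The zero displacement is located at the center of the returned plane.
    """
    fa = np.fft.fft2(np.asarray(a, dtype=float))
    fb = np.fft.fft2(np.asarray(b, dtype=float))
    cross = np.conj(fa) * fb
    weight = 1.0 / (np.sqrt(np.abs(fa) * np.abs(fb)) + eps)
    return np.fft.fftshift(np.real(np.fft.ifft2(cross * weight)))
```

Since the weighting is applied to the spectra that are computed anyway for the FFT-based correlation, the additional cost is limited to a few element-wise operations.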

5.3.2.3 Correlation-Based Correction

Another form of improving the signal-to-noise ratio in the correlation plane (e.g. the displacement peak detection rate) was proposed by Hart [32]. The technique involves the multiplication of at least two correlation planes calculated from samples located close by, typically offset by one quarter to one half of the correlation sample width. Provided that the displacement gradient between the samples is not significant, the multiplication of the correlation planes will enhance the main signal correlation peak while removing noise peaks that generally have a more random occurrence. Correlation plane averaging, that is, summation of the correlation planes instead of multiplication, is more robust when the number of combined correlation planes increases. The method, however, is less effective in removing spurious correlation peaks [53].
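The difference between multiplying and averaging neighboring correlation planes can be illustrated with a short sketch. The helper `combine_planes` is hypothetical, and negative correlation values are clipped before multiplication as a simplifying assumption.

```python
import numpy as np

def combine_planes(planes, mode="product"):
    """Combine correlation planes from neighboring interrogation samples.

    mode="product": element-wise multiplication (correlation-based correction).
    mode="mean":    element-wise averaging (correlation plane averaging).
    """
    stack = np.asarray(planes, dtype=float)
    if mode == "product":
        # Negative correlation values carry no signal and would flip signs.
        return np.prod(np.clip(stack, 0.0, None), axis=0)
    return stack.mean(axis=0)
```

For two planes that share a displacement peak but contain spurious peaks at different locations, the product retains the common peak and suppresses the spurious ones much more strongly than the average does.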

5.3.2.4 Ensemble Correlation Techniques

While the previous method is applied within a given PIV image pair, it can also be applied to a sequence of images. This PIV processing approach, also known as ensemble correlation or correlation averaging, was developed in the framework of micro-PIV applications in an effort to reduce the influence of Brownian motion that introduces significant noise in data obtained from a single PIV recording. Rather than obtaining displacement data for each individual image pair, the technique relies on averaging the correlation planes obtained from a sequence of images. With increasing frame counts a single correlation peak will accumulate for each correlation plane reflecting the mean displacement of the flow [42, 54, 104]. Although computationally very efficient, the main drawback of this approach is that all information pertaining to the unsteadiness of the flow is lost (e.g. no RMS values). Its use with conventional (macroscopic) PIV recording has been verified by the authors [38, 39]. Among the main benefits of this method are the fast calculation of the mean flow and the potentially high spatial resolution. Since this processing is very fast, it has potential as a quasi-online diagnostic tool. To demonstrate the effectiveness of the ensemble correlation technique, Meinhart et al. compared three different averaging algorithms applied to a series of images acquired from a steady flow through a microchannel [54]. The signal-to-noise ratio for measurements generated from a single pair of images was low due to an average of only 2.5 particle images in each \(16 \times 64\,\text {pixel}\) interrogation window. As a result velocity measurements are noisy and approximately 20% appear to be erroneous. Three types of average are compared.

Example of image overlapping: (a) one of the LID-PIV recordings; (b) result of overlapping 9 LID-PIV recordings. Image size: \(256\times 256\,\text {pixel}\) [100]. Copyright 2002, AIAA. Reprinted with permission

Effect of image overlapping: (a) results for a single LID-PIV recording pair with a particle-tracking algorithm; (b) results for the overlapped PIV recording pair with a correlation-based algorithm [100]. Copyright 2002, AIAA. Reprinted with permission

- Image averaging: a time average of the first exposure image series and of the second exposure image series is produced, and the two average images are then correlated (Fig. 5.13). This approach is very efficient and effective when working with low image density (LID) recordings. A variant of this technique consists in producing a maximum image from a given series of recordings (image overlapping). An example is given in Fig. 5.14. Especially in \(\mu \)PIV the low image density recordings are evaluated with particle-tracking algorithms, whereby the velocity vector is determined with only one particle, and hence the accuracy and reliability of the technique are limited. In addition, interpolation procedures are necessary to obtain velocity vectors on the desired regular grid from the randomly distributed particle-tracking data (Fig. 5.15), which brings additional uncertainties to the final results. The ensemble image permits the evaluation of the flow at a higher resolution than that allowed by cross-correlation analysis of the individual image pair. The underlying hypothesis for this approach is that the flow is stationary and laminar, a condition often met in microfluidics but rarely applicable to macroscopic flows.

- Correlation field averaging: the correlation function from each image pair is calculated and averaged along the sequence of recordings. This method is computationally more demanding than the image averaging technique. The benefit is that it is effective at both low and high seeding density (Fig. 5.16).

- Velocity vector averaging: the velocity measurement is calculated for each image pair and then averaged along the sequence.

The performance comparison based on synthetic images is illustrated in Fig. 5.17. The fraction of valid mean velocity vectors is displayed as a function of the number of image pairs considered for the analysis. Clearly, combining even a few recordings is very beneficial to the yield of valid vectors. The ensemble average correlation technique shows the fastest rise towards a totality of valid vectors. Averaging the velocity vectors gives a similar result, however with a slower convergence. Finally, averaging the images and then calculating the correlation shows in this case a similar trend. It should be kept in mind that image averaging reaches an optimum, after which the number of valid vectors declines as a result of the drop of image contrast over long averages. The rms uncertainty of these three techniques indicates again that cross-correlation averaging is more accurate than vector averaging, followed by image averaging. An important advantage of correlation averaging and image averaging is that the interrogation window can be reduced when the number of recordings is increased. Therefore correlation averaging and image averaging techniques offer a higher spatial resolution compared to vector averaging, where the interrogation window needs to contain a minimum number of particle image pairs in each recording.
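The three averaging strategies can be contrasted in a compact sketch for a single interrogation window. All function names are hypothetical, the correlation is circular (FFT-based) and only integer peak positions are returned, so this is an illustration of the order of operations rather than a complete evaluation.

```python
import numpy as np

def cross_correlate(a, b):
    """Circular cross-correlation; zero displacement at the plane center."""
    fa = np.fft.fft2(a - a.mean())
    fb = np.fft.fft2(b - b.mean())
    return np.fft.fftshift(np.real(np.fft.ifft2(np.conj(fa) * fb)))

def peak_displacement(plane):
    """Integer displacement (dy, dx) of the highest correlation peak."""
    iy, ix = np.unravel_index(np.argmax(plane), plane.shape)
    return int(iy) - plane.shape[0] // 2, int(ix) - plane.shape[1] // 2

def image_averaging(frames_a, frames_b):
    # Average the images first, then correlate once.
    return peak_displacement(cross_correlate(frames_a.mean(axis=0), frames_b.mean(axis=0)))

def correlation_averaging(frames_a, frames_b):
    # Correlate every pair, average the planes, then detect one peak.
    planes = [cross_correlate(a, b) for a, b in zip(frames_a, frames_b)]
    return peak_displacement(np.mean(planes, axis=0))

def vector_averaging(frames_a, frames_b):
    # Detect a peak for every pair, then average the displacement vectors.
    vectors = [peak_displacement(cross_correlate(a, b)) for a, b in zip(frames_a, frames_b)]
    return tuple(np.mean(vectors, axis=0))
```

Only vector averaging requires a valid peak in every individual correlation plane, which is why it needs a minimum number of particle image pairs per window in each recording.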

5.3.2.5 Single-Pixel Ensemble-Correlation

Comparison of three evaluation techniques. Top: Spatial cross-correlation analysis yields instantaneous vector fields at high seeding concentrations by averaging the displacement of small particle image groups within interrogation windows. Middle: Particle tracking analysis provides instantaneous velocity information at particle image locations. This approach is best suited for low and moderate particle image concentrations. Bottom: Ensemble correlation analysis provides only mean flow fields and the mean intensity of the velocity fluctuations by averaging the correlation planes obtained in many independent measurements. This technique works at any seeding concentration but the higher the concentration the faster it converges

Following the discussion in Sect. 5.3.1 the discrete correlation can be performed between a small template (interrogation template or window) and a larger template in the second exposure (search window) in which it is searched for. The limiting case is that the interrogation template is represented by a single pixel. In this case the interrogation of a single pair of images cannot yield any reliable information, as the multiplication of the search template by a single value merely yields a scaled replica of the search template. When the operation is repeated for a large number of recordings (typically \(10^4\)–\(10^5\)), the ensemble correlation signal will emerge with a distinct peak and an acceptable signal-to-noise ratio.

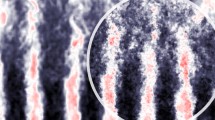
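The single-pixel ensemble correlation can be sketched as the covariance, taken over the ensemble, between the intensity of one pixel in the first frame and the intensities in a small search region of the second frame. The function name `single_pixel_correlation`, the array layout and the mean subtraction are assumptions of this sketch.

```python
import numpy as np

def single_pixel_correlation(frames_a, frames_b, y0, x0, search=4):
    """Ensemble correlation of pixel (y0, x0) with a search region.

    frames_a, frames_b: arrays of shape (n_pairs, height, width).
    search: half-width of the search region in pixel; the pixel must lie
            at least `search` pixel away from the image border.
    Returns a (2*search+1, 2*search+1) correlation plane whose center
    corresponds to zero displacement.
    """
    a = frames_a[:, y0, x0].astype(float)
    b = frames_b[:, y0 - search:y0 + search + 1, x0 - search:x0 + search + 1].astype(float)
    a = a - a.mean()            # remove the ensemble mean of the pixel
    b = b - b.mean(axis=0)      # remove the ensemble mean of the search region
    # Each image pair contributes the product of one pixel value and the region.
    return np.einsum("n,nij->ij", a, b) / len(a)
```

A single image pair contributes only a scaled copy of the search region; the peak emerges from the sum over many pairs.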

This evaluation method was first applied by Westerweel et al. [104] for stationary laminar flows in micro-fluidics and by Billy et al. [9] for the analysis of periodic laminar flows. Later, the approach was extended by Kähler and co-workers for the analysis of macroscopic laminar, transitional, and turbulent flows [42], and for the analysis of compressible flows at large Mach numbers [38, 80]. Furthermore, the technique was extended for the analysis of stereoscopic PIV images by Scholz & Kähler [81]. The product of the pixel intensity and the intensity distribution in the second frame over a search region is the instantaneous contribution to forming the ensemble correlation signal. Applications of single pixel correlations both in micro-PIV and aerodynamic flows (combined with long-range microscopy) have demonstrated the ability to measure the time-averaged flow velocity at unprecedented spatial resolution (in the order of a micron or less). Further discussion is given in Chap. 10.

Figure 5.18 summarizes the input and output expected by applying the techniques described above. Figure 5.19 illustrates schematically the properties of the correlation signal resulting from single pair analysis, ensemble cross-correlation and single-pixel ensemble correlation. The latter typically requires two to three orders of magnitude more samples to achieve the same signal-to-noise ratio as ensemble correlation with, for instance, a window of \(32\times 32\) pixel. The position of the maximum in the ensemble correlation map captures only the mean velocity vector. It is possible to extract the velocity probability density function from the analysis of the shape of the correlation peak, if a sufficiently large number of PIV image pairs is available [1, 3, 38, 80, 87, 106].

The correlation function can be regarded as the convolution of the velocity probability density function (pdf) and the image auto-correlation function. Deconvolving the correlation signal with the auto-correlation function gives an estimate of the velocity pdf and allows for the estimation of the Reynolds stresses at high spatial resolution when the single-pixel ensemble correlation is used.

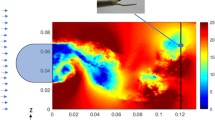

Figure 5.20 shows an example from experiments in a transonic backward-facing step flow where single-pixel correlation is used. The very steep velocity profile is better captured with the single-pixel analysis.

Comparison of mean displacement field and Reynolds shear stress distribution of a transonic backward facing step flow computed from 10 000 PIV double images: a) \(16\times 16 \,\text {pixel}\) window correlation: every 4th vector in X-direction and each vector in Y-direction is shown. b) single-pixel ensemble correlation: every 32nd vector in X-direction and every 2nd vector in Y-direction is shown. The correlation peaks were averaged over \(6 \times 3\) pixel. From Scharnowski et al. [80]

5.3.3 Evaluation of Doubly Exposed PIV Images

Although the current technology allows PIV recording in single exposure/multiple frame mode, in some experiments multiple exposed particle images may still be utilized. This is especially the case when photographic recording with high spatial resolution or digital SLR cameras are used. In the early days of PIV, optical techniques for extracting the displacement information from the photographs were utilized. However, desktop slide scanners also make it possible to digitize the photographic negatives and thus enable a purely digital evaluation. Analysis of double-exposed recordings also arises when frame separation no longer is possible for digital cameras, for instance, for the combination of very high-speed flows with high image magnification.

The effect of interrogation window offset on the position of the correlation peaks using FFT based cross-correlation on double exposed images. \(R_{{\text {D}}^{+}}\) marks the displacement correlation peak of interest, \(R_{\text {P}}\) is the self-correlation peak. In this case a horizontal shift is assumed

Essentially the same algorithms utilized in the digital evaluation of PIV image pairs described before can be used with minor modifications to extract the displacement field from multiple exposed recordings. The major difference between the evaluation modes arises from the fact that all information is contained on a single frame – in the trivial case a single sample is extracted from the image for the displacement estimation (Fig. 5.21, case I). From this sample, the auto-correlation function is computed by the same FFT method described earlier. In fact, the auto-correlation can be considered as a special case of the cross-correlation where both samples are identical. Unlike the cross-correlation function computed from different samples the auto-correlation function will always have a self-correlation peak located at the origin (see also the mathematical description in Sect. 4.5). Located symmetrically around the origin, two peaks with less than one fourth the intensity describe the mean displacement of the particle images in the interrogation area. The two peaks arise as a direct consequence of the directional ambiguity in the double (or multiple) exposed/single frame recording method.

To extract the displacement information in the auto-correlation function the peak detection algorithm has to ignore the self-correlation peak, \(R_{\text {P}}\), located at the origin, and concentrate on the two displacement peaks, \(R_{{\text {D}}^{+}}\) and \(R_{{\text {D}}^{-}}\). If a preferential displacement direction exists, either from the nature of the flow or through the application of displacement biasing methods (e.g. image shifting), then the general search area for the peak detection can be predefined. Alternatively a given number of peak locations can be saved from which the correct displacement information can be extracted using a global histogram operator (Sect. 7.15).

The digital evaluation of multiple exposed PIV recordings can be significantly improved by sampling the image at two positions which are offset with respect to each other according to the mean displacement vector. This offers the advantage of increasing the number of paired particle images while decreasing the number of unpaired particle images. This minimization of the in-plane loss-of-pairs increases the signal-to-noise ratio, and hence the detection of the principal displacement peak \(R_{{\text {D}}^{+}}\). However, the interrogation window offset also shifts the location of the self-correlation peak, \(R_{\text {P}}\), away from the origin as illustrated in Fig. 5.21 (Case II – Case V).

The use of FFTs for the calculation of the correlation plane introduces a few additional aliasing artifacts that have to be dealt with. As the offset of the interrogation window is increased, first the negative correlation peak, \(R_{{\text {D}}^{-}}\), and then the self-correlation peak, \(R_{\text {P}}\), will be aliased, that is, folded back into the correlation plane (Fig. 5.21 Case III – Case V). In practice, detection of the two strongest correlation peaks by the procedure described in Sect. 5.3.5.1 is generally sufficient to recover both the positive displacement peak, \(R_{{\text {D}}^{+}}\), and the self-correlation, \(R_{\text {P}}\). The algorithm can be designed to automatically detect the self-correlation peak because it generally falls within a one pixel radius of the interrogation window offset vector.
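A minimal sketch of the auto-correlation evaluation of a double-exposed sample without window offset (case I) is given below. The self-correlation peak at the origin is masked and the search is restricted to a half-plane defined by an assumed preferential displacement direction; the names `autocorrelation` and `displacement_peak` and the exclusion radius are hypothetical.

```python
import numpy as np

def autocorrelation(sample):
    """FFT-based auto-correlation; the self-correlation peak is at the center."""
    f = np.fft.fft2(sample - sample.mean())
    return np.fft.fftshift(np.real(np.fft.ifft2(np.abs(f) ** 2)))

def displacement_peak(plane, exclude_radius=2, direction=(0, 1)):
    """Locate R_D+ while ignoring R_P and the mirrored peak R_D-.

    direction: assumed preferential displacement direction (dy, dx);
               only the half-plane with a positive projection is searched.
    """
    ny, nx = plane.shape
    yy, xx = np.mgrid[:ny, :nx]
    dy, dx = yy - ny // 2, xx - nx // 2
    masked = plane.copy()
    masked[dy ** 2 + dx ** 2 <= exclude_radius ** 2] = -np.inf   # self-correlation
    masked[dy * direction[0] + dx * direction[1] <= 0] = -np.inf  # ambiguous half
    iy, ix = np.unravel_index(np.argmax(masked), masked.shape)
    return int(dy[iy, ix]), int(dx[iy, ix])
```

Without the half-plane restriction the two displacement peaks are indistinguishable, which reflects the directional ambiguity of the double exposed/single frame recording.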

5.3.4 Advanced Digital Interrogation Techniques

The transition of PIV from the analog (photographic) recording to digital imaging along with improved computing resources prompted significant improvements of interrogation algorithms. The various schemes can roughly be categorized into five groups:

- single pass interrogation schemes such as presented in Willert & Gharib [107],

- multiple pass interrogation schemes with integer sampling window offset [99, 103],

- coarse-to-fine interrogation schemes (resolution pyramid [33, 86, 105]) or (flow-)adaptive resolution schemes,

- schemes relying on the deformation of the interrogation samples according to the local velocity gradient [76],

- super-resolution schemes and single particle tracking [7, 45, 91].

In particular, the combination of grid refining schemes with image deformation has recently found widespread use, as it combines significantly improved data yield with higher accuracy compared to first-order schemes (rigid offset of the interrogation sample). The following sections give a brief overview of each of these schemes.

5.3.4.1 Multiple Pass Interrogation

The data yield in the interrogation process can be significantly increased by using a window offset equal to the local integer displacement in a second interrogation pass [103]. By offsetting the interrogation windows according to the mean displacement, the fraction of matched particle images to unmatched particle images is increased, thereby increasing the signal-to-noise ratio of the correlation peak (see Sect. 6.2.4). Also, the measurement noise or uncertainty in the displacement, \(\varepsilon \), reduces significantly when the particle image displacement is less than half a pixel (i.e. \(|\varvec{d}| < 0.5\,\text {pixel}\)), where it scales in proportion to the displacement [103]. The interrogation window offset can be implemented relatively easily in existing digital interrogation software for both single exposure/double frame PIV recordings and the multiple exposure/single frame PIV recordings described in the previous section. The interrogation procedure could take the following form:

- Step 1: Perform a standard digital interrogation with an interrogation window offset close to the mean displacement in the data.

- Step 2: Scan the data for outliers using a predefined validation criterion as described in Sect. 7.1. Replace outlier data by interpolating from the valid neighbors.

- Step 3: Use the displacement estimates to adjust the interrogation window offset locally to the nearest integer.

- Step 4: Repeat the interrogation until the integer offset vectors converge to \(\pm 1\,\text {pixel}\). Typically three passes are required.

The speed of this multiple pass interrogation can be increased significantly by comparing the new integer window offset to the previous value allowing unnecessary correlation calculations to be skipped. The data yield can be further increased by limiting the correlation peak search area in the last interrogation pass.
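For a single interrogation position the multiple pass procedure with integer window offset can be sketched as follows. The outlier validation of Step 2 is omitted because only one window is treated, no sub-pixel peak fit is performed, and the windows are assumed to stay inside the image; all function names are hypothetical.

```python
import numpy as np

def cross_correlate(a, b):
    """Circular cross-correlation; zero displacement at the plane center."""
    fa = np.fft.fft2(a - a.mean())
    fb = np.fft.fft2(b - b.mean())
    return np.fft.fftshift(np.real(np.fft.ifft2(np.conj(fa) * fb)))

def interrogate(img_a, img_b, y, x, size, offset=(0, 0)):
    """Residual integer displacement for a second window shifted by `offset`."""
    oy, ox = offset
    win_a = img_a[y:y + size, x:x + size]
    win_b = img_b[y + oy:y + oy + size, x + ox:x + ox + size]
    plane = cross_correlate(win_a, win_b)
    iy, ix = np.unravel_index(np.argmax(plane), plane.shape)
    return int(iy) - size // 2, int(ix) - size // 2

def multipass(img_a, img_b, y, x, size, max_passes=3):
    """Update the integer window offset until the residual vanishes."""
    offset = (0, 0)
    for _ in range(max_passes):
        ry, rx = interrogate(img_a, img_b, y, x, size, offset)
        if (ry, rx) == (0, 0):
            break  # offset unchanged: further correlations can be skipped
        offset = (offset[0] + ry, offset[1] + rx)
    return offset
```

Once the offset matches the integer part of the displacement, the two windows contain nearly the same particle images and the residual displacement is confined to the sub-pixel range.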

As pointed out by Wereley & Meinhart [99] a symmetric offset of the interrogation samples with respect to the point of interrogation corresponds to a central difference interrogation which is second-order accurate in time in contrast to a simple forward differencing scheme that simply adds the offset to the interrogation point (see Fig. 5.22).

Sampling window shift using a forward difference scheme (left) and a central difference scheme (right) (from [76])

5.3.4.2 Grid Refining Schemes

The multiple pass interrogation algorithm can be further improved by using a hierarchical approach in which the sampling grid is continually refined while the interrogation window size is reduced simultaneously. This procedure, originally introduced by Soria [86] and Willert [105], has the added capability of successfully utilizing interrogation window sizes smaller than the particle image displacement, which increases the dynamic spatial range (DSR). This is especially useful in PIV recordings with both a high image density and a high dynamic range in the displacements. In such cases standard evaluation schemes cannot use small interrogation windows without losing the correlation signal due to the larger displacements (one-quarter rule, [44]). Instead, offsetting the interrogation window at subsequent iterations makes it possible to circumvent this rule and to obtain a good correlation signal even for interrogation windows smaller than the particle image displacement. However, a hierarchical grid refinement algorithm is more difficult to implement than a standard interrogation algorithm. Such an algorithm may look as follows:

- Step 1: Start with a large interrogation sample that is known to capture the full dynamic range of the displacements within the field of view by observing the one-quarter rule (p. 156).

- Step 2: Perform a standard interrogation using windows that are large enough to obey the one-quarter rule.

- Step 3: Scan for outliers and replace them by interpolation. As the recovered displacements serve as estimates for the next higher resolution level, the outlier detection criterion should be more stringent than that for single-step analysis to prevent possible divergence of the new estimate from the true value [82].

- Step 4: Project the estimated displacement data onto the next higher resolution level. Use this displacement data to offset the interrogation windows with respect to each other.

- Step 5: Increment the resolution level (e.g. by halving the size of the interrogation window) and repeat steps 2 through 4 until the actual image resolution is reached.

- Step 6: Finally perform an interrogation at the desired interrogation window size and sampling distance (without outlier removal and smoothing). By limiting the search area for the correlation peak the final data yield may be further increased because spurious, potentially stronger correlation peaks can be excluded if they fall outside of the search domain.

In the final interrogation pass the window offset vectors have generally converged to \(\pm 0.5\,\text {pixel}\) of the measured displacement thereby guaranteeing that the PIV image was optimally evaluated. The choice for the final interrogation window size depends on the particle image density. Below a certain number of matched pairs in the interrogation area (typically \(\mathcal{N_{\text {I}}}< 4\)) the detection rate will decrease rapidly (see Chap. 9). Figure 5.23 shows the displacement data of each step of the grid and interrogation refinement.

The processing speed may be significantly increased by down-sampling the images during the coarser interrogation passes. This can be achieved by consolidating neighboring pixels, that is, by placing the sum of a block of \(N\times N\) pixel into a single pixel (pixel binning) [105]. This allows the use of smaller interrogation samples that are evaluated much faster. In fact, a constant size of the correlation kernel can be used regardless of the image resolution (e.g. a \(4\times \) down-sampled image interrogated by a \(32 \times 32\,\text {pixel}\) sampling window corresponds to a \(128\times 128\,\text {pixel}\) sample at the initial image resolution).
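Pixel binning as described above can be sketched in a few lines of NumPy. The function name `bin_pixels` is hypothetical, and rows or columns that do not fill a complete block are simply discarded in this sketch.

```python
import numpy as np

def bin_pixels(image, n):
    """Down-sample by summing each n x n block of pixel into one pixel."""
    img = np.asarray(image, dtype=float)
    h = img.shape[0] - img.shape[0] % n  # crop to a multiple of n
    w = img.shape[1] - img.shape[1] % n
    blocks = img[:h, :w].reshape(h // n, n, w // n, n)
    return blocks.sum(axis=(1, 3))
```

A displacement measured on an image binned by a factor n has to be multiplied by n before it is used as a window offset at the original resolution.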

5.3.4.3 Image Deformation Schemes

The particle image pattern displacement is measured by cross-correlation under the assumption that the motion of the particle images within the interrogation window is approximately uniform. In practice this condition is often violated, as most flows of interest produce a velocity distribution with significant velocity gradients due to shear layers and vortices. In these cases the cross-correlation peak produced by particle image pairs with different velocities becomes broader and can split into multiple peaks when the velocity differences across the window are large (Fig. 5.24).

As a result, the measurement of velocity in the presence of large velocity gradients (e.g. in the core of a vortex, Fig. 5.23) is affected by larger uncertainty and suffers from a higher vector rejection rate. The window deformation technique is meant to compensate for the in-plane velocity gradient and the peak broadening effect. Both can be largely reduced when the two PIV recordings are deformed according to an estimation of the velocity field. The technique can be implemented within the multi-grid method [86, 105] described before (see Sect. 5.3.4.2). The main advantage with respect to the multi-grid window shifting method is an increased robustness and accuracy over shear flows such as boundary layers, vortices and turbulent flows in general. The basic principle is illustrated in Fig. 5.25, where a continuous image deformation progressively transforms the images towards two hypothetical recordings taken simultaneously at time \(t+\varDelta t/2\).

Principle of the window deformation technique. Left: tracer pattern in the first exposure. Right: tracer pattern at the second exposure (solid circles represent the tracers correlated with the first exposure in the interrogation window). In grey deformed window according to the displacement distribution estimated from a previous interrogation

In analogy with the discrete window shift technique, this method is referred to as window deformation; however, the efficient implementation of the procedure is based on the deformation of the entire PIV recordings, which is sometimes referred to as image deformation (Fig. 5.26). The two approaches are therefore synonyms of the same concept. The image deformation technique can be summarized as follows:

- Step 1: Standard digital interrogation with an interrogation window complying with the one-quarter rule (p. 156).

- Step 2: Low-pass filtering of the velocity vector field. A filter kernel equivalent to the window size is sufficient to smooth spurious fluctuations and suppress fluctuations at sub-window wavelength. Moving average filters or spatial regression with a 2nd order least squares regression are suitable choices [82].

- Step 3: Deformation of the PIV recordings according to the filtered velocity vector field with a central difference scheme. The image resampling scheme influences the accuracy of the procedure [5]. For typical PIV images with particle image diameter of 2 to \(3\,\text {pixel}\), high order schemes (cardinal interpolation, B-splines) yield better results than low order interpolators (bilinear interpolation) [95, 97].

- Step 4: Further interrogation passes on the deformed images with an interrogation window reduced in size, yet containing at least 4 image pairs.

- Step 5: Add the result of the correlation to the filtered velocity field.

- Step 6: Scan the velocity vector field for outliers and replace these by interpolation.

- Step 7: Repeat steps 2 to 6 two or three times. With each iteration the incremental change in the displacement field will decrease.

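In analogy to the standard cross-correlation function given in Eq. (5.1) the equation used for the spatial cross-correlation for deformed images reads as follows:

\[ R_{\text {II}}(x,y) = \sum _{i=-K}^{K} \sum _{j=-L}^{L} \tilde{I}(i,j)\, \tilde{I}'(i+x,j+y) \]

where \(\tilde{I}(i,j)\) and \(\tilde{I}'(i,j)\) are the image intensities reconstructed after deformation using the predicted deformation field \(\varDelta \varvec{s}(\varvec{x})\) in a central difference scheme:

\[ \tilde{I}(\varvec{x}) = I\left( \varvec{x} - \frac{\varDelta \varvec{s}(\varvec{x})}{2}\right) , \qquad \tilde{I}'(\varvec{x}) = I'\left( \varvec{x} + \frac{\varDelta \varvec{s}(\varvec{x})}{2}\right) \]

The deformation field \(\varDelta \varvec{s}(\varvec{x})\) is a spatial distribution which generally is not uniform and therefore requires interpolation at each pixel in the image. Here a truncation of the Taylor series at the first order term is commonly sufficient for the reconstruction of the local displacement:

\[ \varDelta \varvec{s}(\varvec{x}) \approx \varDelta \varvec{s}(\varvec{x_0}) + \nabla \varDelta \varvec{s}\big |_{\varvec{x_0}} \cdot (\varvec{x} - \varvec{x_0}) \]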

Here \(\varvec{x_0}\) denotes the position of the center of the interrogation window. Since the size of the interrogation window normally exceeds the spacing of the displacement vectors (overlap factor 50–75%), the displacement distribution within the window is a piecewise linear function, resulting in a higher order approximation of the velocity distribution within the window. Dedicated literature on the performance of velocity field interpolators indicates that B-splines are an optimal choice in terms of accuracy and computational cost [4].

When steps 2–6 are repeated a number of times, the distance between particle image pairs is minimized and \(\tilde{I}(i,j)\) and \(\tilde{I}'(i,j)\) tend to coincide except for out-of-plane particle motion and shot-to-shot image intensity variation. As a consequence, the correlation function returns a peak located at the center of the correlation plane.
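
The convergence described above can be illustrated with a deliberately reduced sketch. It assumes a one-dimensional periodic "image" with a uniform displacement, uses Fourier-based resampling for the deformation and a three-point Gaussian fit for the peak, and omits the filtering and outlier steps. The names `fft_shift`, `correlation_peak` and `render` are hypothetical helpers, not part of any PIV package.

```python
import numpy as np

def fft_shift(signal, delta):
    """Return signal(x - delta) on a periodic domain (Fourier shift theorem)."""
    freqs = np.fft.rfftfreq(signal.size)
    spectrum = np.fft.rfft(signal) * np.exp(-2j * np.pi * freqs * delta)
    return np.fft.irfft(spectrum, n=signal.size)

def correlation_peak(a, b):
    """Displacement of b relative to a from the circular cross-correlation."""
    a0, b0 = a - a.mean(), b - b.mean()
    corr = np.fft.irfft(np.conj(np.fft.rfft(a0)) * np.fft.rfft(b0), n=a.size)
    k = int(np.argmax(corr))
    rm, r0, rp = corr[k - 1], corr[k], corr[(k + 1) % corr.size]
    if min(rm, r0, rp) > 0:
        # three-point Gaussian fit through the peak and its two neighbours
        lm, l0, lp = np.log(rm), np.log(r0), np.log(rp)
        sub = (lm - lp) / (2 * lm - 4 * l0 + 2 * lp)
    else:
        sub = 0.0  # fall back to the integer peak position
    shift = k if k <= corr.size // 2 else k - corr.size
    return shift + sub

# Synthetic recordings (all parameters are illustrative assumptions).
rng = np.random.default_rng(0)
n, sigma, true_shift = 512, 0.75, 3.3
x = np.arange(n)
positions = rng.uniform(0, n, size=20)

def render(centers):
    """Sum of Gaussian particle images on a periodic 1D domain."""
    d = (x[:, None] - centers[None, :] + n / 2) % n - n / 2
    return np.exp(-d ** 2 / (2 * sigma ** 2)).sum(axis=1)

frame_a = render(positions)
frame_b = render(positions + true_shift)

estimate = 0.0
corrections = []
for _ in range(3):
    # central difference deformation: each frame moves by half the predictor
    a_def = fft_shift(frame_a, +estimate / 2)
    b_def = fft_shift(frame_b, -estimate / 2)
    correction = correlation_peak(a_def, b_def)  # residual displacement
    corrections.append(correction)
    estimate += correction
```

After the first pass the residual measured on the deformed signals is small, so the peak sits near zero shift, which is the one-dimensional counterpart of the peak moving to the center of the correlation plane.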

The procedure has the additional benefits of yielding a more symmetrical correlation peak, approximately at the origin of the correlation plane, reducing uncertainties due to distorted peak shape or inaccurate peak reconstruction (see Figs. 5.27 and 5.28). Another advantage is that the spatial resolution is approximately doubled with respect to a rigid window interrogation [82], with the caveat of a selective amplification of wavelengths smaller than the interrogation window (see Fig. 5.29). The latter needs to be compensated for by low-pass filtering the intermediate result [82] (see Fig. 5.30).

Correlation map of a shear flow as in Fig. 5.24 with multi-step correlation and window deformation. A single peak can be clearly distinguished from the correlation noise with a correlation coefficient of 99.5% compared to 27.3% for the undeformed image

5.3.4.4 Image Interpolation for PIV

Due to the continuously varying displacement field, the intensity of the deformed images needs to be resampled (e.g. by interpolation) at non-integer pixel locations, which increases the computational load of the technique. Depending on the choice of interpolator, significant bias errors may be introduced, as shown in Fig. 5.31. The data were obtained from synthetic images with constant particle image displacement using the Monte Carlo methods described in Sect. 6.2.1. Polynomial interpolators produce a significant bias error of up to one fifth of a pixel and are not particularly suited for this purpose.

A review of the topic against the background of medical imaging, along with a performance comparison, is given by Thévenaz et al. [95]. They suggest the use of generalized interpolation with non-interpolating basis functions such as B-splines or shifted bi-linear interpolation rather than the more commonly used polynomial or bandlimited sinc-based interpolation.

In contrast to many other imaging applications, a properly recorded PIV image generally contains almost discontinuous data with significant signal strength in the shortest wavelengths close to the sampling limit (i.e. strong intensity gradients). Because of this, the image interpolator should primarily be capable of properly reconstructing these steep intensity gradients. A concise comparison of various advanced image interpolators for use in PIV image deformation is provided by Astarita & Cardone [5]. In accordance with the findings reported by Thévenaz et al. [95], they suggest the use of B-splines for an optimum balance between computational cost and performance. The bias error for B-splines of third and fifth order shown in Fig. 5.31 clearly supports this. If higher accuracy is required, then sinc-based interpolation, such as Whittaker reconstruction [79], or FFT-based interpolation schemes [110] with a large number of points should be used. However, processing time may increase by an order of magnitude.
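
The sensitivity to the interpolator can be made tangible with a small sketch. It compares linear interpolation with an FFT-based shift when a single Gaussian particle image is resampled at a half-pixel offset. The particle width (`sigma = 0.75`, i.e. roughly 3 pixel diameter), the one-dimensional setting and the error measure are assumptions chosen for illustration; the quantity compared is the intensity reconstruction error, not the displacement bias plotted in Fig. 5.31, and B-splines are not included because NumPy does not provide them.

```python
import numpy as np

def gaussian_particle(x, center, sigma):
    """Sampled Gaussian particle image intensity profile."""
    return np.exp(-(x - center) ** 2 / (2 * sigma ** 2))

n, sigma, delta = 64, 0.75, 0.5          # illustrative values
x = np.arange(n, dtype=float)
image = gaussian_particle(x, 32.0, sigma)
exact = gaussian_particle(x, 32.0 + delta, sigma)   # image(x - delta)

# Low order: linear interpolation of the samples at x - delta.
linear = np.interp(x - delta, x, image)

# High order: FFT-based resampling via the Fourier shift theorem.
freqs = np.fft.rfftfreq(n)
fourier = np.fft.irfft(
    np.fft.rfft(image) * np.exp(-2j * np.pi * freqs * delta), n=n
)

err_linear = np.max(np.abs(linear - exact))
err_fourier = np.max(np.abs(fourier - exact))
print(f"max error, linear : {err_linear:.3f}")
print(f"max error, Fourier: {err_fourier:.3f}")
```

The linear scheme averages neighbouring samples and therefore flattens the steep intensity gradients of the particle image, whereas the Fourier scheme is limited mainly by aliasing of the undersampled particle image.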

5.3.4.5 Iterative PIV Interrogation and Its Stability

Multi-step analysis of PIV recordings can be seen as comprising two procedures:

(1) Multi-grid analysis where the interrogation window size is progressively decreased. This process eliminates the one-quarter rule constraint and usually terminates when the required window size (the smallest) is applied.

(2) Iterative analysis at a fixed sampling rate (grid spacing) and spatial resolution (window size). This process further improves the accuracy of the image deformation and enhances the spatial resolution.

In essence the iterative analysis can be described by a predictor-corrector process governed by the following equation:
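
Using the symbols defined in the sentence that follows, the update simply adds the measured correction to the current predictor:

\[
\varDelta \varvec{s}_{k+1} = \varDelta \varvec{s}_{k} + \varDelta \varvec{s}_{\text {corr}}
\]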

where \(\varDelta \varvec{s}_{k+1}\) indicates the result of the evaluation at the \(k{\text {th}}\) iteration. The correction term \(\varDelta \varvec{s}_{\text {corr}}\) can be viewed as a residual and is obtained by interrogating the deformed images as calculated by the central difference expression Eq. (5.8). The procedure can be repeated several times; however, two to three iterations are already sufficient to achieve a converged result with most of the in-plane particle image motion compensated through the image deformation.

The iterative scheme introduced above appears very logical and its simplicity makes it straightforward to implement, which probably explains why it has been so broadly adopted in the PIV community [21, 35, 78, 79, 99]. However, when such iterative interrogation is performed without any spatial filtering of the velocity field, the process produces spurious oscillations of small amplitude that grow with the number of iterations. The instability arises from the sign reversal in the sinc shape of the response function associated with the top-hat function of the interrogation window. For instance, taking two almost identical images except for artificial pixel noise, the displacement field measurement after some iterations begins to oscillate at a spatial wavelength \(\lambda _{\text {unst}} \approx 2/3 D_{\text {I}}\) and yields a wavy pattern [82].

The above result is consistent with the response function of a top-hat weighted interrogation window being \(r_s = \sin (x/{D_{\text {I}}})/(x/{D_{\text {I}}})\). Therefore wavelengths in the range with negative values of the sinc function are systematically amplified. The iterative process requires stabilization by means of a low-pass filter applied to the updated result (Fig. 5.30), which damps the growth of fluctuations at wavelengths smaller than the window size. A moving average filter with a kernel size corresponding to that of the interrogation window is more than sufficient to stabilize the process.
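
The mechanism can be reproduced with an idealized single-mode model. The sketch below assumes that each interrogation pass measures the residual displacement multiplied by the window response, that the response has the normalized form \(\sin (\pi D_{\text {I}}/\lambda )/(\pi D_{\text {I}}/\lambda )\), and that the stabilizing filter is a moving average with the same kernel size and hence the same response. These are modelling assumptions, and `window_response` and `amplitude_after` are hypothetical names.

```python
import math

def window_response(ratio):
    """Top-hat response sin(pi*r)/(pi*r) with r = D_I / wavelength."""
    if ratio == 0:
        return 1.0
    return math.sin(math.pi * ratio) / (math.pi * ratio)

def amplitude_after(ratio, iterations, filtered):
    """Amplitude of a spurious mode after repeated predictor-corrector passes.

    The two images are taken as identical (true displacement zero), so any
    remaining amplitude is pure error seeded by noise.
    """
    r = window_response(ratio)
    f = window_response(ratio) if filtered else 1.0
    s_true, s = 0.0, 1e-3             # noise-seeded initial amplitude
    for _ in range(iterations):
        s = f * (s + r * (s_true - s))  # corrector, then optional filter
    return abs(s)

# Mode at wavelength 2/3 D_I, i.e. D_I / wavelength = 1.5 (negative response).
unfiltered = amplitude_after(1.5, 10, filtered=False)
filtered = amplitude_after(1.5, 10, filtered=True)
print(f"unfiltered: {unfiltered:.2e}, filtered: {filtered:.2e}")
```

Because the response is negative at this wavelength, each unfiltered pass multiplies the error by a factor larger than one, while the filtered update contracts it.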

However, filtering with a second order least-squares spatial regression makes it possible to both maximize spatial resolution and minimize noise. Other means of stabilization are based on interrogation window weighting techniques (e.g. Gaussian or LFC [58]). A numerical simulation using a sine-modulated shear flow shows that the single-pass cross-correlation amplitude modulation (empty squares in Fig. 5.29) follows closely that of a sinc function with a 10% cut-off occurring when the window size is about one-quarter the spatial wavelength (\(D_{\text {I}}/ \lambda = 0.25\)). The iterative interrogation (full circles in Fig. 5.29) delays the cut-off to \(D_{\text {I}}/ \lambda = 0.65\). This implies that with a window size of \(32\,\text {pixel}\) the single-step cross-correlation is only capable of accurately recovering fluctuations with a wavelength larger than \(120\,\text {pixel}\). The minimum wavelength reduces to \(50\,\text {pixel}\) with the iterative deformation interrogation. The higher response of the iterative interrogation without filter (empty circles in Fig. 5.29) is only hypothetical because the process is unstable and the error is dominated by the amplified wavy fluctuations.
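
The quoted wavelengths follow from the cut-off ratios by simple arithmetic. Assuming the normalized form \(\sin (\pi D_{\text {I}}/\lambda )/(\pi D_{\text {I}}/\lambda )\) for the window response (the normalization is an assumption here), the values below are consistent with the rounded figures of 120 and \(50\,\text {pixel}\) given in the text:

\[
\frac{\sin (0.25\,\pi )}{0.25\,\pi } \approx 0.90, \qquad
\lambda _{\min }^{\text {single}} = \frac{32\,\text {pixel}}{0.25} = 128\,\text {pixel}, \qquad
\lambda _{\min }^{\text {iter}} = \frac{32\,\text {pixel}}{0.65} \approx 49\,\text {pixel}
\]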

In conclusion, the spatial resolution achieved with iterative deformation is about twice as high as that of the single-step or window-shift procedure. It should be kept in mind that the increase in resolution only becomes effective when the velocity field is sampled spatially at a higher rate, that is, by increasing the overlap factor between adjacent windows to 75% instead of 50%. Otherwise, the error committed when evaluating, for instance, the spatial derivatives of the velocity is dominated by numerical truncation due to the large distance between neighboring vectors.

5.3.4.6 Adaptive Interrogation Techniques

The iterative multi-grid interrogation may help in increasing the spatial resolution by decreasing the final window size. However, in several cases the flow and the flow seeding distribution are not homogeneous over the observed domain. In this case, the optimization rules for interrogation can only be satisfied in an average sense and local non-optimal conditions may occur, such as poor correlation signal or too low a flow sampling rate. Moreover, the window filtering effect can be minimized when the flow exhibits variations along a preferential direction. For instance, in the case of stationary interfaces, an adaptive choice of the interrogation volume shape and orientation may contribute to further improvements, especially when dealing with shear layers [93] or shock waves [94]. The main rationale behind an adaptive choice of the interrogation window is that, while maintaining its overall size, one can reduce its length in one direction and compensate by enlarging it in the orthogonal direction. The parameter governing this choice can be the velocity gradient or a higher spatial derivative [77], but also, more simply, the direction of a solid surface nearby. Spatial adaptivity is particularly attractive for 3D PIV, where the interrogation volume can be shaped as flat as a coin or elongated as a cigar depending on the velocity gradient topology [59] (Fig. 5.32).

5.3.4.7 Non-correlation-Based Interrogation Techniques

Other interrogation algorithms exist that do not rely on cross-correlation. Most work has been devoted to image motion estimation, also referred to as optical flow, which is a fundamental issue in low-level vision and has been the subject of substantial research for application in robot navigation, object tracking, image coding or structure reconstruction [6, 34]. These applications are commonly confronted with the problem of fragmented occlusion (e.g. looking through branches of a tree) or depth discontinuities in space, which are analogous to shocks within fluid flows.

Optical flow for the analysis of PIV images was first reported by Quenot et al., who investigated a thermally driven flow of water [67]. Further implementations of optical flow adapted to the evaluation of PIV images have been reported by Ruhnau et al. [72, 73]. The potential of optical flow for achieving high spatial resolution and accurate results in high-gradient regions was demonstrated in the scope of the “International PIV-Challenge” [88, 89, 90]. One known deficiency of established optical flow techniques available in computer vision is their instability in the presence of out-of-plane motion of particles, which is associated with a loss of image correspondence. Therefore additional constraints have to be implemented in the algorithms to successfully apply them to PIV recordings [72, 73]. This deficiency can potentially be resolved by applying optical flow techniques to 3D experiments, which remains to date a topic of research.

The degree of image matching can be evaluated not only by intensity multiplication, as in the case of cross-correlation, but also by calculating the difference between two patterns. The method of minimum quadratic differences (MQD) is based on the difference between the reference and search template. The application to PIV has been investigated by Gui & Merzkirch [29, 30] among others. The method performs similarly to cross-correlation analysis in terms of accuracy of displacement estimation. A potential advantage of the method is that a difference operation can be accelerated more easily than a multiplication on dedicated microprocessors. One of the shortcomings of MQD is its higher sensitivity to variations in the illumination between the two exposures, which needs to be accounted for with intensity equalization.
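
The difference between the two matching criteria can be shown on a toy one-dimensional template. The sketch below is a minimal illustration under stated assumptions: integer shifts only, equal illumination in both exposures, and costs normalized by the number of overlapping samples. The function names and the intensity values are made up for the example and do not reproduce the implementation of [29, 30].

```python
def mqd_shift(reference, search, max_shift):
    """Integer shift that minimises the mean squared intensity difference."""
    best_shift, best_cost = 0, float("inf")
    n = len(reference)
    for shift in range(-max_shift, max_shift + 1):
        pairs = [(reference[i], search[i + shift])
                 for i in range(n) if 0 <= i + shift < n]
        cost = sum((a - b) ** 2 for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best_shift, best_cost = shift, cost
    return best_shift

def correlation_shift(reference, search, max_shift):
    """Integer shift that maximises the mean intensity product."""
    best_shift, best_value = 0, float("-inf")
    n = len(reference)
    for shift in range(-max_shift, max_shift + 1):
        pairs = [(reference[i], search[i + shift])
                 for i in range(n) if 0 <= i + shift < n]
        value = sum(a * b for a, b in pairs) / len(pairs)
        if value > best_value:
            best_shift, best_value = shift, value
    return best_shift

# Two particle images, displaced by 3 samples between the exposures.
reference = [0, 0, 1, 4, 1, 0, 0, 0, 2, 5, 2, 0, 0, 0, 0, 0]
search = [0, 0, 0] + reference[:-3]

shift_mqd = mqd_shift(reference, search, max_shift=4)
shift_corr = correlation_shift(reference, search, max_shift=4)
print(shift_mqd, shift_corr)
```

Both criteria locate the same displacement here; they differ in that MQD uses only subtractions and squares, and that a change in overall intensity between the exposures alters the MQD cost directly.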

The least-squares matching algorithm is based on MQD operations, but the function to be minimized is not defined in the physical space of spatial shift, but in that of the coefficients of affine transformations (shift, rotation, dilation, shear) [46]. The method is therefore also very well suited to the analysis of flows with a variety of length scales where the deformation in between the two recordings cannot be neglected.

Earlier work by Tokumaru & Dimotakis [96] presented the image correlation velocimetry (CIV), which was followed by a number of optimizations by Fincham & Delerce [21], leading to an algorithm that performs iterative window refinement, including the deformation, comparable to that based on cross-correlation.

5.3.5 Cross-Correlation Peak Detection

One of the most crucial, but not necessarily easily understood, features of digital PIV evaluation is that the position of the correlation peak can be estimated to subpixel accuracy. Estimation accuracies of the order of \(1/10\) to \(1/20\) of a pixel are realistic for \(32 \times 32\,\text {pixel}\) samples from 8-bit digital images. Simulation data such as those presented in Sect. 6.1 can be used to quantify the achievable accuracy for a given imaging setup.

Since the input data itself is discretized, the correlation values exist only for integral shifts. The highest correlation value would then permit the displacement to be determined only with an uncertainty of \(\pm 1/2\,\text {pixel}\). However, with the cross-correlation function being a statistical measure of best match, the correlation values themselves also contain useful information. For example, if an interrogation sample contains ten particle image pairs with slightly varying shift of \(2.5\,\text {pixel}\) on average, then, from a statistical point of view, five particle image pairs will, for instance, contribute to the correlation value associated with a shift of \(2\,\text {pixel}\), while the other five will indicate a shift of \(3\,\text {pixel}\). As a result, the correlation values for the \(2\,\text {pixel}\) and \(3\,\text {pixel}\) shifts will have the same value. An average of the two shifts will yield an estimated shift of \(2.5\,\text {pixel}\). Although rather crude, the example illustrates that the information hidden in the correlation values can be effectively used to estimate the mean particle image shift within the interrogation window.
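
The averaging argument can be written down as a weighted mean over the integer shifts. The sketch below assumes that each particle image pair contributes one unit of correlation to the nearest integer shift, which is a simplification made only to mirror the example; `centroid_shift` is a hypothetical helper name.

```python
def centroid_shift(correlation):
    """Correlation-weighted mean of integer shifts.

    `correlation` maps an integer shift (pixel) to its correlation value.
    """
    total = sum(correlation.values())
    return sum(shift * value for shift, value in correlation.items()) / total

# Ten particle image pairs: five register at 2 pixel, five at 3 pixel.
pair_shifts = [2] * 5 + [3] * 5
correlation = {}
for shift in pair_shifts:
    correlation[shift] = correlation.get(shift, 0) + 1

estimate = centroid_shift(correlation)
print(estimate)  # 2.5
```

If the split were seven pairs at 2 pixel and three at 3 pixel, the same weighted mean would give 2.3 pixel, which shows how the relative heights of neighbouring correlation values encode the fractional part of the displacement.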