Abstract

Time series classification has been attracting significant interests with many challenging applications in the research community. In this work, we present a novel time series classification method based on the statistical information of each time series class, called Principal Shape Model (PSM), which can quickly and effectively classify the time series even if they are very long and the dataset is very large. In PSM, the time series with the same class label in the training set are gathered to extract the principal shapes which will be used to generate the classification model. For each test sample, by comparing the minimum distance between this sample and each generated model, we can predict its label. Meanwhile, through the principal shapes, we can get the intrinsic shape variation of time series of the same class. Extensive experimental results show that PSM is orders of magnitudes faster than the state-of-art time series classification methods while achieving comparable or even better classification accuracy over common used and large datasets.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Time series research has attracted significant interests in the data mining community, due to the fact that series data are presented in a wide range of our daily life. As a fundamental research, time series classification has been studied extensively and many algorithms have been proposed during the last decade. Recent empirical evidence has strongly suggested that the simple nearest neighbor classifier is very difficult to beat [5]. Thus, the vast majority of time series classification research has focused on alternative distance measures for 1-NN classifiers based on raw data, compression data or smoothing data [20]. In these kinds of methods, the similarity of time series is a key point for the classification task. Ding et al. [5] present a good survey of similarity calculation methods for time series. While the nearest neighbor classifier has the advantages of simplicity and not requiring extensive parameter tuning, it does have several disadvantages. One is its time and space consumption for large datasets, the other is it does not give us a reasonable explanation about the relationship between a time series and a class. Unlike the NN-based methods taking all series points into consideration, another kinds of methods treat the subsequences of time series as a basis for classification. Recent years, the shapelets-based methods [6, 14, 17, 21] have been studied most, which attempt to find the subsequences with high discriminative power as features of one class. The idea is that the time series in different classes can be distinguished by their local subsequences instead of the whole ones. These kinds of methods provide interpretable results, which can help researchers understand the data.

Due to the influences of many factors, the time series of a class are slightly different at some time point. It is common for data acquisition. The above two kinds of methods, i.e., 1-NN classifier and shapelets-based methods, describe these differences by distance between time series or between subsequences. It is a reasonable assumption that two time series in the same class have a small distance. In 1-NN classifier, the distances between the test sample and all training time series should be calculated first and the one with the minimum value should have the same label with the test sample. For shapelets-based methods, all subsequences with any length are shapelet candidates and the most important step to find out shapelets is to calculating the distances between a candidate and all training time series [21]. Even though there are some pruning strategy to accelerate this process [17], the process of finding the most discriminative subsequences is computationally expensive.

Unlike existing methods, we utilize an entirely different method and build a model to describe these differences of the time series of a class. The label of a test sample can be obtained by comparing the similarity of the instance and the models. By this way, the large amount of distance calculation is unnecessary. Moreover, the variation characteristics of time series of a class can also be represented by the model. In this paper, the variation characteristics of time series is described by a linear model. Firstly, We extract the intrinsic variation characteristics of each class to generate the principal shapes based on its statistical information. Then each class corresponds to a model that constructed by its principal shapes. All time series of a class are considered as a linear combination of principal shapes. The similarity of a test time series and a class models is represented by an objective function. By optimizing these objective functions and comparing the similarity values, we can predict the class label of this test time series. The experimental results on a large number of time series datasets demonstrate that our method is orders of magnitudes faster than other methods, while achieving comparable or even better classification accuracies.

In summary, our contributions include the following:

-

We utilize the principal shapes to describe the variation characteristics of time series in the same class, which gives a good understand for time series.

-

The distance between the model constructed by principle shapes and a test sample is employed to predict the test sample’s label. Compared with 1-NN based method, this technique can reduce the amount of distance calculation.

-

We empirically validate the performance of our proposed method on commonly used time series datasets and some large datasets. The results show promising results compared to other methods.

The remainder of this paper is organized as follows: We provide some background into time series classification and review the common used algorithms in Sect. 2. Section 3 describes the principal shape model in detail and illustrates the principal shapes by a toy example. The effectiveness and efficiency of the proposed method are also discussed. Section 4 demonstrates the PSM is effective by adequate experiments. Conclusions and future work are drawn in Sect. 5.

2 Background and Related Work

2.1 Time Series Classification

A time series is an ordered set of real-valued variables. Usually, the time series are recorded in temporal order at fixed intervals of time. Time series classification is defined as the problem of building a classifier from a labelled training time series set to predict the label of new time series. A time series \(\varvec{x}_i\) with m values is represented as \( \varvec{x}_i = (x_{i,1}, x_{i,2}, \ldots , x_{i,m})^T \) and an associated class label \(l_i\). The training set is a set of n labelled pairs \((\varvec{x}_i,l_i)\) with C classes: \(X = \{(\varvec{x}_1,l_1),(\varvec{x}_2,l_2),\ldots , (\varvec{x}_n,l_n)\}\), where \(l_i \in C\). For classification algorithm, the normalization of time series is necessary and the commonly used approach is z-normalization: \(norm({\varvec{x}}) = \frac{{\varvec{x}}-mean(X)}{std(X)}\). For ease of explanation, we still use \({\varvec{x}}\) to denote \(norm({\varvec{x}})\). Given an unlabelled time series dataset, the objective is to classify each sample of this dataset to one of the predefined classes.

The main difference between time series classification problems and the general classification task is the order of observations is very important. Therefore, the algorithm for time series classification requires specific techniques to meet this characteristic. Two kinds of methods are studied in recent years. One is global-based method by taking the whole time series into account, while the other considers the usage of time series subsequences, called local-based method.

2.2 Global-Based Methods

In global-based methods, the classifier takes the whole time series as features and predicts the label of a new time series based on its similarity metrics where distance is measured directly between time series points. Two kinds of distances, Euclidean distance (ED) and Dynamic Time Warping (DTW) distance, have been widely and successfully used as the similarity metrics [10, 11, 15, 18]. ED is usually time and space efficient, but it often gets a poor classification accuracy [18]. DTW is considered as a better solution in time series research community because it allows time series to match even if they are out of phase in the time axis [12, 19]. It has been proved that the 1-NN classifier with DTW is the best approach for small datasets. However, as the number of time series increases, the classification accuracy of DTW will converge to ED [5]. As we mentioned in Sect. 1, these methods have two drawbacks: high time and space complexity for large datasets and long time series and results uninterpretable.

2.3 Local-Based Methods

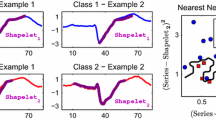

To address the limitations of 1-NN classifier, a new shapelets-based classification algorithm is proposed by Ye in 2009 [21]. Informally, shapelets are time series subsequences which can maximally represent a class in some sense. The algorithm completes the classification task by constructing a decision tree classifier. The important point is that shapelet offers interpretable features to domain experts. The utility of shapelets has been confirmed and extended by many researchers [7]. Nevertheless, since all subsequences of time series could be a shapelet, finding a good shapelet is a time-consuming task. Although there are several speedup strategy, e.g., early abandon pruning, entropy pruning [21], intelligent caching and reuse of intermediate results (called Logical Shapelets) [14], etc., it still takes a long running time, especially in case of large datasets and long time series. To speed up the process of shapelets discovery, chang et al. [3] propose to parallelize the distance computations using GPUs. Rakthanmanon et al. [17] propose a heuristic strategy, called Fast-Shapelets (FSH), to speed up the searching process by exploiting a random projection method on the SAX representation of time series to find the potential shapelet candidates [13]. It is up to orders of magnitudes faster than Logical Shapelets, which reduces the time complexity from O(\(n^2m^3\)) to O(\(nm^2\)) (n is the number of time series in the dataset, and m is the length of the longest time series). Except for decision tree classifier, some off-the-shelf classifiers like SVM are also used on shapelets data [8, 9], which are transformed by measuring distances between the original time series and discovered shapelets. It can improve prediction accuracy while still maintaining the explanatory power of shapelets.

Another way to find shapelets called Learning Time Series (LTS) is to learn (not search for) shapelets by optimizing an objective function [6]. The LTS algorithm enables learning near-to-optimal shapelets directly without the need to try out lots of candidates and can learn true top-k shapelets. It is an entirely new perspective on time series shapelets. The algorithm can also gain the higher accuracy in some time series dataset than other methods, but it is space and time-consuming.

3 Principal Shape Model

3.1 Principal Shapes Extraction from Time Series

If we take a time series as a vector, the vectors of a training set can form a distribution in m dimensional space. If we model this distribution, we can generate new series which are similar to those in the original training set, and also can examine the test time series to decide whether they are plausible examples. To simplify the problem, we wish to reduce the dimensionality of the training data from m to a reasonable dimension k. An effective approach is to apply Principal Component Analysis (PCA) method. Given a training set of \(n_i\) time series with the same class label, the mean time series \(\overline{\varvec{x}_i}\) is calculated as: \(\overline{\varvec{x}_i} = \frac{1}{n_i}\sum \limits _{j=1}^{n_i}(\varvec{x}_j)\).

The principal shapes of variation in each class, i.e. the ways in which some points of time series tend to move together, can be found by using PCA. In order to remove the meaningless distortions, we first calculate the deviations \(d\varvec{x}_j = \varvec{x}_j-\overline{\varvec{x}_i}\) of each class. From these deviations, we can get the matrix M of the i-th class in training set. And then the eigenvectors (also called principal components), \(\varvec{p}_1, \ldots , \varvec{p}_{n_i}\), and the corresponding eigenvalues, \(\lambda _1, \ldots , \lambda _{n_i}\), can be obtained by singular value decomposition method. The variations of time series points can be described by the principal components and eigenvalues. Each principal component gives a pattern of variation of time series points. The first principal component, which is associated with the largest eigenvalue, \(\lambda _1\), describes the largest part of the time series variation. The proportion of total variance described by the j-th principal component is equal to the \(\lambda _j\).

Generally, most of the time series variation can be represented by a small number k of principal components. The parameter k can be assigned a certain number, but a more common practice is to choose the first k principal components from a sufficiently large proportion of the total variance of the training set. The proportion can be decided by the cross validation method for each dataset. The total variance S is defined as: \(S=\sum \limits _{j=1}^{n_i}\lambda _j\).

By this way, all time series of a class can be approximated by taking the mean time series and a weighted sum of the first k principal components:

where \(P = (\varvec{p}_1, \varvec{p}_2, \ldots , \varvec{p}_k)\) is the matrix that made up by the first k eigenvectors; \(\varvec{b}=(b_1, b_2, \ldots , b_k)^T\) is a weight vector.

By only choosing the first k principal components instead of the whole eigenvectors, we can get the principal shapes contained in each class. Besides, the distortions occasionally occur in one or several time series can be eliminated, because they are usually related to small principal components.

These above equations allow us to generate new instance of time series by varying the parameter \(\varvec{b}\) within a suitable limit, which should be related with the training set. Note that the variance of \(b_j\) on the training set can be given by \(\lambda _j\), so a reasonable limit [16] is

The coefficient 3 is selected because most of the variations lie in 3 standard deviation of the mean. By applying limits of \(\pm 3\sqrt{\lambda _j}\) to the parameter \(b_j\), we can ensure that the generated time series is similar to those in the training set.

3.2 An Example of Principal Shapes on Toy Dataset

We use Car dataset as our toy dataset, which is one of time series datasets from UCR archiveFootnote 1, to exhibit the extracted principal shapes and their variations. It is composed of four classes, which contains 60 training time series and 60 test time series. Each time series contains 577 real observations. We extract the principle shapes of each class from training set and the first four principal shapes of each class are shown in Fig. 1.

From this figure we can see, the principal shapes of each class are different, so we can build a model based on these principal shapes for time series classification. As mentioned above, the vector \(\varvec{b}\) defines a set of parameters of a deformable model. By varying the elements of \(\varvec{b}\), we can vary the principal shapes. All extracted principal shapes of each class are added up after varying by an admissible parameter, and then we get the overall shape variation of each class. Figure 2 shows the range of variation of each class. The shape is a superposition of all admissible principal shapes. The dotted arrow lines indicate that all time series of this class can only vary in these scope.

It is clearly that time series of different classes have different shape variations. The class information of a new time series that we want to predict can be obtained by fitting these shape variations. The one with the minimum distance is the best match with the test time series.

3.3 Classifying New Time Series

The principal shapes of class i and the corresponding parameter \(\varvec{b}^i\) form a linear model which we call it principal shape modelFootnote 2. After getting the models of all classes, the training time series can be expressed by these models. When doing the classification task, for a new time series, our purpose is to fit these models to this time series. More specifically, if a time series belongs to a specific class i, the corresponding model (\(P^i, \varvec{b}^i\)) must fit it well, otherwise, the model has a large distance to this time series. Assume \(\varvec{y}\) is the time series to be classified, an objective function to measure the fitting degree of class i is defined by

where \(i \in C\) and \(\overline{\varvec{x}_i}\) is the mean of i-th class time series, \(P^i\) is a matrix of the first k eigenvectors generated by the i-th class, \(\varvec{b}^i\) is the weight vector.

Formula (4) is a least squares problem with an inequality constraint. It has been proved that f (without constraint) is a strictly downward convex function and has the only extremum [2]. The best \(\varvec{b}^{i*}\) is:

As we can find that if we want to get the minimum value of objective function, an inverse operation is necessary. Due to the complexity of matrix inverse operation and the inequality constraint, we cannot easily get the best parameter vector \(\varvec{b}^i\). An alternative method to solve this problem is the Gradient Descent (GD). With the help of GD, we can gain the minimum value between a new time series \(\varvec{y}\) and the current model (\(\varvec{\overline{x}_i}\), \(P^i\), \(\varvec{b}^i\)). Algorithm 1 describes this process.

For gradient descent algorithm, the initial value of \(\varvec{b}^i\) is usually set to a random number and the algorithm should run many times to obtain the best value. But in this algorithm, due to the characteristic of f: \(\frac{{{\partial ^2}f(\varvec{b}_i)}}{{\partial {{\varvec{b}^i}^2}}} = {P^{iT}}P^i\), there’s no need to repeat the experiment many times to find the minimum value by setting different initial values of \(\varvec{b}^i\). In Algorithm 1, the lines 1 gives a default value of \(\varvec{b}^i\) and the distance between \(\varvec{y}\) and the model by using the default \(\varvec{b}^i\) is obtained in line 3. The constants A and B in line 7 are used to calculate the gradient which is completed in line 9 for current \(\varvec{b}^i\). The iterative process for finding the best \(\varvec{b}^{i*}\) is from line 8 to line 18. Lines 11–13 limit the range of \(\varvec{b}^i\), i.e. \(\Vert b^i_j\Vert \le 3\sqrt{\lambda ^i_j}\). Parameter \(\alpha \) in line 10 is the step size. If its value is very small, the algorithm will converge slowly. Our objective function is a strictly downward convex function, so we do not need a small step size. In our experiments, \(\alpha \) is set to 0.5 and only after a few iterations, we can get the minimum distance.

For each class, we can get a distance from the objective function. If \(\varvec{y}\) is an instance of this class, the function value must be smaller than other class. After we find the best parameter vector \(\varvec{b}^{i*}\) to f for each class and the label of \(\varvec{y}\) is obtained by:

3.4 Effectiveness and Efficiency

In PSM, the model is generated by the principal shapes extracted from the training set. So the ability of these principal shapes is very important for our model. If the training data is skewed, the principal shapes gained by this training set can not express the variation of the test time series. In this case, the accuracy of PSM decreases like other methods.

For the first step of our method, we get the eigenvalues and eigenvectors of each class by using SVD. It takes O(min{\(m{n_i}^2\), \(m^2{n_i}\)}) time, where m is the length of the time series, \(n_i\) is the number of time series in i-th class of training set. In Algorithm 1, the parameter matrix A requires O(km) computation time where k is the number of the selected principal shapes and \(k\le min(m,n_i)\). And B needs a matrix multiplication, which requires O(\(k^2m\)) computation time. In each iterative process of fitting the model, the most time-consuming step is the distance calculation for a new \(\varvec{b}\). It take O(km) time. So the Algorithm 1 needs O(max{\(k^2m\), kmt}) time in total where t is the the number of iterations. Usually, we only use a small number of principal shapes to build our model, so k is very small. As we mentioned above, t is also very small because the objective function f is strictly convex. Thus, the total time complexity is O(min{\(m{n_i}^2\), \(m^2{n_i}\)}+ max{\(k^2m\), kmt}).

4 Experiments and Results

4.1 Datasets and Baselines

In order to test the classification performance of our PSM method, we first perform the experiments on the commonly used UCR (See footnote 1) and UEAFootnote 3 datasets (called common datasets in this paper) as other literatures [6, 8, 9, 17]. They provide diverse characteristics with different lengths and number of the classes and different numbers of time series instances and Table 1 gives the details. We use the default train and test data splits, which is the same as the baselines.

To verify the performance of our method on large and long time series datasets, we use extra 8 datasets from UCR archives (see footnote 1) (called large datasets) to do our experiments. The details are shown in Table 2.

We compare our method with other 8 baselines. One is 1-NN classifier with DTW which has been proved it is very difficult to beat [1]. The other methods are: standard shapelet-based classifier with information gain (IG) [21], Kruskal-Wallis statistic (KW) and F-statistic (FS) [8]; The Fast-shapelets algorithm with the help of SAX representation (FSH) [17]; Learning Time Series which is the state-of-the-art algorithm for accuracy (LTS) [6]; Shapelet-transform algorithm which transforms the shapelets into feature vectors for the first time (IGSVM) [8] and FLAG which is known as the fastest shapelet-based algorithm [9]. All experiments are performed on a computer with intel i7 CPU and 16GB memory.

4.2 Accuracy and Running Time on Common Datasets

We first compare our method against the selected baselines in terms of classification accuracy and running time on common datasets of Table 1. The results are shown in Tables 3 and 4 respectively and the best method of each dataset is highlighted in bold. The symbol “-” denotes that we cannot get the method’s result in a reasonable time (over 24 h).

To tell the significant difference in accuracy of different methods of Table 3, a non-parametric Friedman test based on ranks [4] described as a critical difference diagram is employed. Figure 3 shows the results on common datasets. The horizontal line, called cliques, denotes that the related methods has no significant difference [4]. As can be seen, PSM has a better classification accuracy than most baselines. Although PSM has a slight low accuracy than LTS and FLAG, there is no significant difference in rank with these two methods based on the cliques of critical difference diagram recommend.

Time-consuming is another evaluation criterion for different methods. Based on the running time shown in Table 4, PSM is fastest on most datasets and it is 3–4 orders of magnitude faster than most baselines. Recall that LTS has the best accuracy, but it is much slower than PSM in all datasets.

In general, our method PSM is fastest and outperforms almost all of baselines according to the running time while it has comparable accuracy. More precisely, PSM has a clear higher accuracy and less time consuming than NNDTW, IG, KW, FS and FSH methods in terms of the Friedman test and running time. When compared with IGSVM, PSM also has a slight accuracy advantage while it is 3–4 orders of magnitude faster on most datasets. LTS and FLAG is better than PSM on classification accuracy, but they are slow, especially that LTS takes much more running time for slightly larger datasets.

4.3 Accuracy and Running Time on Large Datasets

We now test the performance of our method PSM on large datasets. In this experiments, we discard the comparison with IG, KW, FS and IGSVM methods because these methods are too slow to get the results in a reasonable time on these datasets. The results of PSM and other baselines are shown in Table 5 and Figure 4 gives the critical difference diagram.

Unlike the results on common datasets, PSM not only has the least time-consuming but also gets the best classification accuracy than other baselines on these large datasets. The reason is that the model built by PSM greatly depends on the training set. If the training set gives a good variation representation of the whole dataset, PSM can get a higher classification accuracy. For these large datasets, the abundant training samples basically contain the main variation of time series of datasets, so we get good results.

4.4 Scalability

In this section, we use the time series dataset StraLightCurves in the UCR archives (see footnote 1) to test the scalability of PSM. This dataset contains 9,236 starlight time series of length 1,024 and 3 types of star objects. The training set has 1,000 time series in default partition. Two key factors for testing the scalability are the number of training sample and the length of each sample.

We first fix the length to 1,024 and vary the number of training set from 100 to 1,000. In this case, IG, KW, FS and IGSVM methods are too slow to be run and LTS also cannot be run because of the large memory requirement. So we drop them from the comparison. We have only 4 results for NNDTW because it is also to slow when the number over 400. The running time and classification accuracy of different methods are shown in Fig. 5. As the number of training samples increasing, our method has a distinct advantage while other methods take much more running time, which has the same trends as Tables 4 and 5. One thing we should note that the time consuming of PSM is almost the same when the number of training samples increasing. The reason is that the principal shapes are extracted from the same size of covariance matrix when the training time series have the same length. This suggests that the time consumption of PSM is mainly related to the number of test samples.

Now, we test the effect of the length of time series for different methods. In this experiment, the number is fixed to 1,000 and the length is varied from 100 to 1,024. When length over 300, LTS cannot be run in our computer for the memory constraint and we only get 3 results. For NNDTW, it is too slow when length over 800. Figure 6 gives the results. PSM is still fastest and it is almost linear increase with the length of time series. It’s worth noting that PSM can still get a good accuracy when the length is very short while LTS, FSH and FLAG have low accuracy.

5 Conclusion and Future Work

In this work, we propose a statistical method, PSM, to find the principal shapes of a time series dataset. With the principal shapes of each class, we can predict the class information of unlabeled time series quickly. Experimental results show that PSM is faster than other time series classification methods while it gains comparable classification accuracy compared with the state-of-the-art method on the commonly used time series datasets. Moreover, our method gets highest accuracy on large time series datasets, which fully demonstrates that the extracted principal shapes represent the intrinsic shape variation effectively when we have sufficient training data. Note that we still employ ED as the metric and do not consider the phase shift in time dimension. We propose to consider this situation in the future.

Notes

- 1.

- 2.

The superscript i denotes that we are dealing with the i-th class.

- 3.

References

Batista, G.E., Wang, X., Keogh, E.J.: A complexity-invariant distance measure for time series. In: SDM, vol. 11, pp. 699–710. SIAM (2011)

Björck, Å.: Numerical Methods for Least Squares Problems. SIAM, Philadelphia (1996)

Chang, K.W., Deka, B., Hwu, W.M.W., Roth, D.: Efficient pattern-based time series classification on GPU. In: 2012 IEEE 12th International Conference on Data Mining (ICDM), pp. 131–140. IEEE (2012)

Demšar, J.: Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 7(Jan), 1–30 (2006)

Ding, H., Trajcevski, G., Scheuermann, P., Wang, X., Keogh, E.: Querying and mining of time series data: experimental comparison of representations and distance measures. Proc. VLDB Endowment 1(2), 1542–1552 (2008)

Grabocka, J., Schilling, N., Wistuba, M., Schmidt-Thieme, L.: Learning time-series shapelets. In: Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 392–401. ACM (2014)

He, Q., Dong, Z., Zhuang, F., Shang, T., Shi, Z.: Fast time series classification based on infrequent shapelets. In: 2012 11th International Conference on Machine Learning and Applications (ICMLA), vol. 1, pp. 215–219. IEEE (2012)

Hills, J., Lines, J., Baranauskas, E., Mapp, J., Bagnall, A.: Classification of time series by shapelet transformation. Data Min. Knowl. Disc. 28(4), 851–881 (2014)

Hou, L., Kwok, J.T., Zurada, J.M.: Efficient learning of timeseries shapelets. In: Thirtieth AAAI Conference on Artificial Intelligence, AAAI, pp. 1209–1215 (2016)

Jeong, Y.S., Jeong, M.K., Omitaomu, O.A.: Weighted dynamic time warping for time series classification. Pattern Recogn. 44(9), 2231–2240 (2011)

Keogh, E., Kasetty, S.: On the need for time series data mining benchmarks: a survey and empirical demonstration. Data Min. Knowl. Disc. 7(4), 349–371 (2003)

Keogh, E., Ratanamahatana, C.A.: Exact indexing of dynamic time warping. Knowl. Inf. Syst. 7(3), 358–386 (2005)

Lin, J., Keogh, E., Wei, L., Lonardi, S.: Experiencing Sax: a novel symbolic representation of time series. Data Min. Knowl. Disc. 15(2), 107–144 (2007)

Mueen, A., Keogh, E., Young, N.: Logical-shapelets: an expressive primitive for time series classification. In: Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1154–1162. ACM (2011)

Petitjean, F., Forestier, G., Webb, G.I., Nicholson, A.E., Chen, Y., Keogh, E.: Dynamic time warping averaging of time series allows faster and more accurate classification. In: 2014 IEEE International Conference on Data Mining (ICDM), pp. 470–479. IEEE (2014)

Baldock, R., Tim, C.: Model-Based Methods in Analysis of Biomedical Images. Oxford University Press, Oxford (1999)

Rakthanmanon, T., Keogh, E.: Fast shapelets: a scalable algorithm for discovering time series shapelets. In: Proceedings of the Thirteenth SIAM Conference on Data Mining (SDM), pp. 668–676. SIAM (2013)

Ratanamahatana, C.A., Keogh, E.: Making time-series classification more accurate using learned constraints. In: Proceedings of the 2004 SIAM International Conference on Data Mining, pp. 11–22. SIAM (2004)

Ratanamahatana, C.A., Keogh, E.: Three myths about dynamic time warping data mining. In: Proceedings of SIAM International Conference on Data Mining (SDM05), pp. 506–510. SIAM (2005)

Ueno, K., Xi, X., Keogh, E., Lee, D.J.: Anytime classification using the nearest neighbor algorithm with applications to stream mining. In: Sixth International Conference on Data Mining, ICDM 2006, pp. 623–632. IEEE (2006)

Ye, L., Keogh, E.: Time series shapelets: a new primitive for data mining. In: Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 947–956. ACM (2009)

Acknowledgements

This work was supported by the National 863 Program of China [grant numbers 2015AA015401]; Research Foundation of Ministry of Education and China Mobile [grant number MCM20150507].

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Zhang, Z., Wen, Y., Zhang, Y., Yuan, X. (2017). Time Series Classification by Modeling the Principal Shapes. In: Bouguettaya, A., et al. Web Information Systems Engineering – WISE 2017. WISE 2017. Lecture Notes in Computer Science(), vol 10569. Springer, Cham. https://doi.org/10.1007/978-3-319-68783-4_28

Download citation

DOI: https://doi.org/10.1007/978-3-319-68783-4_28

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68782-7

Online ISBN: 978-3-319-68783-4

eBook Packages: Computer ScienceComputer Science (R0)