Abstract

The purpose of this article is to present scientific research results of Karali et al (Proceedings of 8th International Conference on Bioinformatics and Bioengineering (BIBE), 2008, [14]), Karali et al (Inf Technol Biomed, 15(13):381–6, 2011, [15]), Karali et al (J Biosci Med (JBM), 1:6–9, 2013, [16]), Karali et al (Int J Comput Vis, 321–331, 1988 [24]) concerning medical imaging, especially in the fields of image reconstruction in Emission Tomography and image segmentation. Image reconstruction in Positron Emission Tomography (PET) uses the collected projection data of the object/patient under examination. Iterative image reconstruction algorithms have been proposed as an alternative to conventional analytical methods. Despite their computational complexity, they become more and more popular, mostly because they can produce images with better contrast-to-noise (CNR) and signal-to-noise (SNR) ratios at a given spatial resolution, compared to analytical techniques. In Sect. 23.1 of this study we present a new iterative algorithm for medical image reconstruction, under the name Image Space Weighted Least Squares (ISWLS) (Karali et al, Proceedings of 8th International Conference on Bioinformatics and Bioengineering (BIBE), 2008, [14]). In (Karali et al, Proceedings of 8th International Conference on Bioinformatics and Bioengineering (BIBE), 2008, [14]) we used phantom data from a prototype small-animal PET system and the methods presented are applied to 2D sinograms. Further, we assessed the performance of the new algorithm by comparing it to the simultaneous versions of known algorithms (EM-ML, ISRA and WLS). All algorithms were compared in terms of cross-correlation coefficient, reconstruction time and CNRs. ISWLS have ISRA’s properties in noise manipulation and WLS’s acceleration of reconstruction process. As it turned out, ISWLS presents higher CNRs than EM-ML and ISRA for objects of different sizes. Indeed ISWLS shows similar performance to WLS during the first iterations but it has better noise manipulation. Section 23.5 of this study deals with another important field of medical imaging, the image segmentation and in particular the subject of deformable models. Deformable models are widely used segmentation methods with scientifically accepted results. In Karali et al (Int J Comput Vis, 321–331, 1988 [24]) various methods of deformable models are compared, namely the classical snake (Kass et al, Int J Comput Vis, 321–331, 1988, [25]), the gradient vector field snake (GVF snake) (Xu, IEEE Proceedings on Computer Society Conference on Computer Vision and Pattern Recognition, 1997, [36]) and the topology-adaptive snake (t-snake) (Mcinerney, Topologically Adaptable Deformable Models For medical Image Analysis, 1997, [29]), as well as the method of self-affine mapping system (Ida and Sambonsugi, IEEE Trans Imag Process, 9(11), 2000 [22]) as an alternative to snake models. In Karali et al (Int J Comput Vis, 321–331, 1988 [24]) we modified the self-affine mapping system algorithm as far as the optimization criterion is concerned. The new version of self-affine mapping system is more suitable for weak edges detection. All methods were applied to glaucomatic retinal images with the purpose of segmenting the optical disk. The methods were compared in terms of segmentation accuracy and speed. Segmentation accuracy is derived from normalized mean square error between real and algorithm extracted contours. Speed is measured by algorithm segmentation time. The classical snake, T-snake and the self-affine mapping system converge quickly on the optic disk boundary comparing to GVF-snake. Moreover the self-affine mapping system presents the smallest normalized mean square error (nmse). As a result, the method of self-affine mapping system presents adequate segmentation time and segmentation accuracy, and significant independence from initialization.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

1 Part I

1.1 Introduction

Medical Imaging is a vital component of a large number of clinical applications. Such applications occur at all stages of medical treatment, from diagnosis to the areas of design, implementation and assessment of the effectiveness of treatment. The development of tomography, the breakthrough of modern computer systems, the evolution of specialized computer signal processing packages and the advancements on medical data analysis, has brought a real revolution in radiology and diagnostic radiology. The result of the medical technology development is the noninvasive obtain of precise functional and/or anatomical information of the interior of the human body.

The modern techniques of computed tomography (x-ray, CT), positron emission tomography (PET), single photon emission tomography (SPECT) use detector arrays mounted or rotatable around the test object in order to collect multiple different angular views (projections) of the object. The collected projection data are used by mathematical algorithms to reconstruct images of the areas of interest in the subject matter. These images are either anatomical or images of biochemical activity of structures of interest.

Positron Emission Tomography (PET) is a medical imaging technique, which utilizes the unique features of \(\beta \) \(+\) nuclear decay of specific radionuclides for imaging metabolic activity of the anatomy of the tested structures. Radionuclides are produced in a cyclotron and are used for labeling molecules with particular biological interest. The labeled molecules are introduced intravenously in the body under examination and distributed by the bloodstream to tissues in a manner that is determined by their biochemical properties. Specifically when the radioactive atom of a labeled molecule decays a positron e \(+\) is emitted, which is annihilated, near the point of generation, by an individual electron e- resulting in the emission of two gamma-ray energy 511 keV each. The two emitted photons travel diametrically and can escape from the human body [17].

A PET system consists of a set of detectors which surround the patient and the aim is to detect and convert the high energy photons emitted to an electrical signal. The electrical signal is then fed to signal processing electronic devices. In a typical PET examination the annihilation events detected, are corrected for various factors and reconstructed into tomographic images using special mathematical algorithms. The result of the reconstruction is a whole tomographic image. The luminance value of each pixel of the image is proportional to the amount of the radioactive molecule in the region showing the pixel. Therefore PET images allow the in vivo quantitative recording of the spatial distribution of the radioactive tracer in the body [5, 18, 19].

Methods of medical data reconstruction that have been developed so far are divided into two major categories [5, 17, 18]:

-

Analytical methods, which use the mathematics of the computed tomography, which connect projection data with the spatial distribution of radioactivity within the subject matter.

-

Iterative methods, which model the data collection process by the PET scan and attempt, relying on predefined criteria and a number of successful iterations, to approach the real image of the spatial distribution of the radioactive tracer.

The analytical reconstruction methods are based on linear calculation of the inverse Radon transform and offer direct mathematical solution for the image formation. The core of the analytical reconstruction methods is the Filtered Backprojection algorithm (FBP). Variations or extensions of FBP methods are the Fast Volume Reconstruction Algorithm (FAVOR [7]) and the 3D Reprojection algorithm (3DRP) [17].

The analytical methods are standard reconstruction techniques, which are applied in clinical systems. The reason for their prevalence of the preceding decades lies in their low computational cost. The big disadvantage of analytical techniques is their inability to include correction models of all factors involved in the making of PET data (such as the scope of the positron, the production of two gamma rays at angles between them of less than \(180^{\circ }\), scattering phenomena, the attenuation, random coincidences, the different performance and sensitivity of the detectors, etc.) during the reconstruction process. Further analytical methods do not maintain the non-negativity of the image values and can export images reconstructed with star-like artifacts [17].

The iterative methods are based on stochastic models for the entire data-making process taking into account all of the physical and technical factors involved during a PET examination. They fall into two major categories, the algebraic techniques (algebraic techniques-ART [12]) and the statistical methods which in turn are divided into maximum likelihood techniques (maximum-likelihood algorithms-ML) and least squares methods (least squares-LS, weighted- least squares-WLS [2], iterated space reconstruction algorithm (ISRA) [3]). The algebraic techniques are the first iterative techniques used. Then the algorithm of maximum likelihood (ML) 1982 by Shepp and Vardi [18] has been applied. Since then, multitude of variants have appeared (SAGE [8], RAMLA [4], OSEM [11], MAP-EM [10]), in order to improve efficiency and reconstruction time and the further improvement of the quality of medical image.

The progress in research and clinical application of iterative techniques is closely associated with the development and optimization of electronic circuits and computer systems. They require large computational reconstruction times, high computing and memory storage. However they have great research interest due to the high image quality they produce, compared to analytical methods. These are techniques that allow modeling of the whole data acquisition process in the reconstruction, and most, especially statistical methods, guarantee positive solutions.

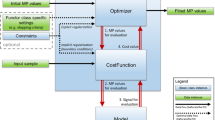

The purpose of this part of the study is to present a new iterative image reconstruction algorithm, under the short name ISWLS [14] (Image Space Weighted Least Squares), produced by the maximization of an objective function. This algorithm was introduced in [14] and studied in [14]. To maximize the objective function, the Kuhn–Tucker condition must be satisfied. ISWLS is expected to have ISRA properties in noise manipulation and WLS acceleration of the reconstruction process. To assess the performance of the new iterative reconstruction method, we have used phantom data produced from simulating a prototype small-animal PET system. We compare ISWLS reconstruction data with those from EM-ML, ISRA, and WLS. We also present the OS (ordered subsets) version of ISWLS (OS-ISWLS) and compare it with the OSEM, OS-ISRA, and OS-WLS [15]. Moreover the MRP (median-root-prior) [1] version of ISWLS is presented and compared with MRP-EMML, MRP-ISRA and MRP-WLS [16]. The methods presented here are applied to 2D sinograms. We have implemented simultaneous versions of the aforementioned algorithms. The simultaneous version of an algorithm is an algorithm where all image pixels are simultaneously updated in every iteration. These methods are of great interest, because of their ability to be implemented in parallel computing architectures, which decreases drastically the total reconstruction time.

2 Theory

In general, every iterative method relies on the hypothesis that the projection data y are linearly connected to the image x of radiopharmaceutical spatial distribution, according to the equation:

where A is a matrix that characterizes the PET system being used for data acquisition. In bibliography this matrix is called system or probability matrix and it projects image data to sinogram domain (the term sinogram refers to the projection data matrix). Every element \(\alpha \) \(_{ij}\) of the system matrix A represents the probability an annihilation event emitted in image pixel i to be detected in LOR\(_{j}\). The significance of the probability matrix lies on the valuable information, related to the data acquisition process, that it can contain (e.g. number of detector rings, number of detector elements in every ring, ring diameter, diameter of transaxial field of view, detector size, image size, spatial and angular sampling).

The most commonly used least squares algorithms, that are based on simultaneous iterative schemes, are ISRA (Image Space Reconstruction Algorithm) and WLS (Weighted Least Squares) with updating step in the kth iteration:

Expectation Maximization Maximum Likelihood (EM-ML) algorithm has an updating step in the kth iteration:

2.1 ISWLS Algorithm

In the current work, we propose a new algorithm under the short name ISWLS. Consider an image discretized into N pixels and the measured data \(\mathbf {y}\) collected by M detector tubes. We can propose the following ISWLS estimator of \(\mathbf {x}\) in Eq. 23.1:

where

Under the conditions in problem (23.5), \({\hat{\mathbf{x }}}\) is a solution if and only if the Kuhn-Tucker condition is satisfied, namely:

where:

According to (23.7) Eq. 23.8 is written as:

So, we obtain the fixed point iterative formula for the ith pixel’s update as follows:

Moreover, we can derive the ordered subsets version of ISWLS, (OS-ISWLS) with updating scheme in kth iteration for subset \(S_n \):

The OS version of an iterative algorithm accelerates the reconstruction process without image deterioration. An OS algorithm divides sinogram data into subsets and applies the simple version of the reconstruction algorithm to every subset. The approximation of the image x\(_{s}\) that the simple version provides after processing the s subset consists the initial solution for the next subset s \(+\) 1. Every OS iteration is completed after the application of the simple algorithm to all subsets of sinogram data.

In addition we can present the MRP (median-root-prior) [1] version of ISWLS. MRP versions belong to regularization’s reconstruction algorithms. These methods take into account a priori information for the radioactivity spatial distribution inside the object under examination. For the reduction of the noise many regularization methods have been proposed, which reduce drastically the noise with a small image resolution reduction. The success of a regularization method depends on the mathematical formula of the prior. Median root prior (MRP) belongs to the most popular priors. It is derived from a Gaussian distribution with mean value the median value of reconstructed image pixels in the vicinity of pixel i. The use of MRP results in noise component reduction while at the same time it preserves the edges.

Suppose that:

where \(\hbox {M}=\hbox {med}(\text {x}_{\hbox {i}}, \hbox {i})\), the median value of reconstructed image pixels in the vicinity of pixel i. Then:

The term \(b\) \(\in \) [0,2] determines the degree of smoothing in reconstruction images. If \(b=0\) no prior is applied. Big values of b cause over-smoothing, while small values of b result in images with high resolution but with increased noise.

According to the one-step-late philosophy [9], where the prior is applied to the previous radiopharmaceutical distribution estimation, we can extract an empirical iterative formula for the ISWLS algorithm in combination with MRP prior. The new iterative algorithm has updating scheme:

The MRP-EM-ML updating scheme in kth iteration is:

The MRP-ISRA updating scheme in kth iteration is:

The MRP-WLS updating scheme in kth iteration is

3 Results

For the evaluation of the iterative reconstruction methods presented in THEORY, projection data of a Derenzo-type phantom have been used. The Derenzo-type phantom consists of sets of rods filled with F\(^{18}\), with diameters 4.8, 4, 3.2, 2.4, 1.6 and 1.2 mm, and the same separation between surfaces in the corresponding sets. The rods were surrounded by plastic (polyethylene). Data were produced using Monte Carlo simulation of a small-animal PET scanner.

Further, 18\(\,\times \,\)10\(^{6}\) coincidence events were collected. Projection data were binned to a 2D sinogram, 55 \(\times \)170 pixels in size, which means that data from 55 TORs (Tube of Response) per rotation angle were collected and 170 totally angular samples were used. Since the two detector heads rotate from 0\(^{\circ }\) to 180\(^{\circ }\) the angular step size was 1.0647\(^{\circ }\).

The system matrix was derived from an analytical method and calculated once before reconstruction. Each element \(a_{ij} \) was computed as the area of intersection \(E_{ij} \), of TOR\(_{j}\) (Tube-of-Response) with image pixel i. The calculated system matrix is a sparse matrix. It consists of zero-valued elements in majority that have no contribution during iterative reconstruction process. So, only the non-zero elements were stored, resulting in significant reduction in system matrix size and consequently in required storage. The reconstructed 2D images were 128 \(\times \)128 pixels in size, thus the system matrix consisted of 55 \(\times \) 170 \(\times \) 128 \(\times \) 128 elements with 4.33% sparsity.

The initial image estimate for all algorithms was:

where \(y\) \(_{j}\) is the value of the jth sinogram element and N represents the total number of image pixels (\(N=128\times 128\) in this implementation).

3.1 Comparative Evaluation of Normal Versions

Figure 23.1 shows the reconstructed transaxial images with EMML, ISRA, WLS, and ISWLS after 1, 10 and 50 iterations.

In Fig. 23.2 cross-correlation coefficient c [13] of every iterative method is plotted, versus the number of iterations. The cross-correlation coefficient c was calculated according to the equation:

where \(\bar{{I}}recon\) and \(\bar{{I}}real\) are the reconstructed image and the true phantom activity image mean values, respectively. Cross-correlation coefficient is a similarity measure between reconstructed and real radiodistribution image. Its values are in the range [−1, 1]. Value \(c=1\) corresponds to fully correlated images.

Except for the cross-correlation coefficient that shows the average performance of the reconstruction methods, local contrast-to-noise ratios (CNR) [6] for rods with different diameters were calculated. CNRs for 4.8, 3.2, and 1.6 mm rods diameter were computed, using squared regions-of-interest (ROIs), 4.55, 3.85 and 1.15 mm in size, respectively. The ROIs were placed inside the corresponding objects. The number of selected ROIs was equal to the number of same sized objects. ROIs of the same sizes were positioned in three different background areas, each.

where \(R_{obj_{ROI} } \) is the mean value of reconstructed objects in the corresponding ROIs, and \(R_{Backg_{ROI} } \) is the mean value of the three background ROIs in each case.

Further, \(\sigma _{Backg_{ROI} } \)is the standard deviation of background values in the corresponding ROIs. The graphs in Fig. 23.3 illustrate the variation of CNR\(_{ROI}\) with respect to the number of iterations, for the three different objects diameters.

In Fig. 23.4 the reconstruction time for every iterative algorithm is presented as a function of the number of iterations. Reconstruction time calculations were performed on a Pentium M processor 1400 MHz (Intel Corp.) personal computer (RAM 1280 MB) under Windows XP Professional.

3.2 Comparative Evaluation of OS Versions

For the evaluation of the ordered subsets, iterative reconstruction methods projection data of the same Derenzo-type phantom has been used as in the comparative study of the simple algorithms.

In Fig. 23.5 cross-correlation coefficient c of every ordered subsets iterative method is plotted versus the number of iterations.

Figure 23.6 presents reconstructed images with OSEM, OS-ISRA, OS-WLS and OS-ISWLS after 1, 3 and 5 iterations for 15 subsets.

In Fig. 23.7 CNRs for two objects of different diameter are plotted versus the number of iterations. CNRs are derived with the same method as explained in Eq. 23.20. Reconstruction time is the same for all ordered subsets algorithms under study. One iteration lasts 29 s for all ordered subsets methods.

3.3 Comparative Evaluation of MRP Versions

Figure 23.8 shows the reconstructed transaxial images with MRP-EMML, MRP-ISRA, MRP-WLS and MRP-ISWLS after 1, 10 and 50 iterations.

In Fig. 23.9 cross-correlation coefficient c of every iterative method is plotted versus the number of iterations.

The graphs in Fig. 23.10 illustrate the variation of CNR\(_{ROI}\) with respect to the number of iterations for the three different object diameter.

4 Discussion

According to results (Figs. 23.2, 23.3) EM-ML and ISRA presents similar behavior at the first 50 iterations. EM-ML converges slowly during the first 50 iterations to the best approximation of the true image. After the 50th iteration the algorithm enhances more small-sized objects but in general image contrast decreases and noise component starts to increase. ISRA on the contrary presents better noise manipulation. ISRA reaches constant CNRs values for big and small-sized objects. WLS shows almost identical noise manipulation as EM-ML algorithm. It reaches faster than EM-ML and ISRA the same CNRs values. ISWLS combines WLS’s acceleration and ISRA’s noise manipulation.

Reconstruction time of EM-ML and WLS is almost the same as a function of the number of iterations (\(\approx \)3.8 s/iteration). Although it is not obvious from Fig. 23.4, ISRA and ISWLS are slower than EM-ML and WLS during the first 9 iterations. Their reconstruction speed is gradually improved by increasing the number of iterations. ISWLS and ISRA reconstruction time converges to the others’ reconstruction time after 10 iterations. The reason for slow reconstruction process during the first iterations lies in the time needed for backprojection computations (\(\sum \limits _{i=1}^M {a_{ij} y_j } \) for ISRA and \(\sum \limits _{i=1}^M {a_{ij} y_j^2}\) for ISWLS) in the first iteration.

The OS versions reach faster than the simple versions the same cross-correlation coefficient and CNRs values, as expected. For example they reach the value of 0.75 of cross-correlation coefficient earlier (during the first 10 iterations) than the simple versions, which they present the same results after 50 iterations. In general OS-ISWLS is comparable to OSEM and shows better performance than OS-WLS, according to CNRs graph, which indicates a better noise manipulation than OS-WLS. ISWLS reaches to the best approximation of the true image during the first 3 iterations for big and small-sized objects. OS-ISRA and OSEM needs few more iterations especially for small-sized objects.

The choice of 15 subsets was made after a comparative study of ISWLS and OS-ISWLS. We compared OS-ISWLS to ISWLS using 3, 9, 15, and 24 subsets. The comparative criterion was CNR, calculated as described in Eq. 23.20. Small number of subsets resulted in CNRs similar to ISWLS. Increasing the number of subsets resulted in images with high CNRs during the first ten iterations. Using 15 or 24 subsets the reconstructed images reach the highest CNR values before ten iterations. ISWLS achieves the same highest CNRs after 50 iterations. We chose 15 subsets to 24 because this number of subsets presented smaller image degradation as the number of iteration increased.

MRP-ISWLS presents higher cross-correlation values than MRP-EM-ML and MRP-ISRA. It shows the same high values of cross-correlation coefficient as MRP-WLS. As illustrated in Fig. 23.10 MRP-ISWLS presents high CNR ratios from the first iterations, higher than MRP-EM-ML and MRP-ISRA. Although it shows similar performance to MRP-WLS, its CNR ratios do not degrade after 50 iterations but tend to be stabilized. So, MRP-ISWLS presents a better noise manipulation than MRP-WLS.

Reconstruction time of MRP-EM-ML and MRP-WLS is almost the same (77.5 s/iteration). MRP-ISRA and MRP-ISWLS are slower than MRP-EM-ML and MRP-WLS during the first 9 iterations (79 s/iteration). Their reconstruction speed is gradually improved by the increasing of iterations number. MRP-ISWLS and MRP-ISRA reconstruction time converges to the others’ reconstruction time after 10 iterations. The reason for slow reconstruction process during the first iterations lies in the time needed for backprojection computations in the first iteration.

In order to determine a satisfactory value of b, various values were applied to MRP-ISWLS. These values were 0.001, 0.01, 0.1, 1, 1.5. The value b \(=\) 0.001 presented higher CNR values in comparison to ISWLS’s results.

In this study, data were corrected for scanner sensitivity prior to the reconstruction process. Such a correction could be incorporated during the reconstruction process, but that exceeds our research purpose.

5 Part II

5.1 Introduction

Extracting useful medical information, for the inside of the human body, from medical images belongs to the field of medical image segmentation. In recent years the computational medical image segmentation plays an increasingly important role in medical imaging. The image segmentation is an important pretreatment step in medical imaging, especially in automatic detection of anatomical structures and pathological conditions. It is the separation process of structures of interest, the implementation of which resulted in the development of numerous algorithms. Because of the wide variety of objects, shapes and variations in image quality, segmentation remains a difficult process. A global segmentation method, which produces satisfactory results for all imaging applications, does not exist. The medical images more often contain high levels of noise, and defects due to incomplete data collection. This could cause appreciable difficulties when applying classical segmentation methods, such as edge detection and thresholding. As a result, these techniques either fail completely or they need an image processing stage after segmentation to remove false limits of the object of interest. Furthermore, subsequent analysis and interpretation of the segmented structures is prevented by the dimensions of the pixel or the voxel.

Each segmentation algorithm aims to identify the pixels belonging to the object of interest or to determine the pixels of the object contour. Detection methods of the object region are based on the intensity values or the texture of the pixels. Contour determination techniques use the derivative of the image, which has high values at the boundaries of objects.

One of the most popular methods of segmentation is the use of deformable models [20, 40]. According to these techniques, near the region of interest an elastic contour model is placed which, through repetitive processes, is adapted to the actual contour. The widely recognized ability of the deformable models comes from their ability to segment, match and trace images of anatomical structures, utilizing restrictions arising from imaging data combined with a priori information relative to the location, size and shape of these structures. The deformable models are capable of resolving the often considerable variation in biological structures over time and from person to person. For this reason they are the focus of research in the segmentation and have been used extensively in medical applications with promising results. Also, they support intuitive interaction mechanisms that allow experts to interpret the results. The deformable models are especially suitable for segmentation of images characterized by intense artifacts, noise, limited spatial resolution and hardly detectable borders between the structures. However, deformable models may result in false contour if the original outline is not placed close to real. They can also present problems in areas of intense curvature of the contour.

The deformable models in the literature are referred to as snakes, active contours or active surfaces, balloons, deformable contours or deformable surfaces. These models are curves or surfaces defined on image domain that deform under the influence of internal and external forces. Internal forces are related to the curve or the surface itself and are designed to keep the model smooth during the deformation process. External forces adjust the model to the real object boundary and their computation is based on image information. In theory, because of the constrain for smooth contours and their ability to incorporate a priori information of object shape, deformable models can handle noise problems in images and contour discontinuities. So, they permit the description of the contour as a continuous mathematical model and they are able to achieve inter-pixel segmentation accuracy.

In this work various methods of deformable models are presented, namely, the classical snake [21, 25, 28], the gradient vector field snake (GVF snake) [36,37,38,39] and the topology-adaptive snake (t-snake) [29,30,31,32, 34]. Also, the method of self-affine mapping system [22, 23] is presented as an alternative to the snakes. The self-affine mapping system was implemented using an adapting scheme for determining the size of areas with similarity [22]. The minimum distance was used as optimization criterion which, according to Sect. 23.7, is more suitable for weak edges detection. All methods were applied to glaucomatic retinal images with the purpose of segmenting the optical disk. Moreover, the aforementioned methods were compared in terms of segmentation accuracy.

The optical disk is the optical nerve and the vessels’ entrance point in the retina. In fundus gray images it appears as a luminous white area. It has an almost round shape that is interrupted by the outgoing vessels. Sometimes the optical disk has an elliptical shape because of the small but not negligible angle between the planes of the image and the object. Optical disk size varies from patient to patient.

Optical disk segmentation is a very important preprocessing stage of many algorithms, which have been designed for automatic detection of retinal anatomical structures and pathological conditions. For example, the detection methods of some vessels and their junctions start from the optical disk area, where the big vessels lie. These can serve as starting points for the detection of the other vessels [35]. Another important feature in retinal images is macula. Macula’s position usually is estimated according to the optical disk’s position under the condition that the distance between the macula and the optical disk is constant [26, 27]. Moreover, optical disk camouflage contributes to better and easier lesions detection related to different retinopathies [20]. Furthermore, the optical disk center can be used as a reference point for distance measurements in retinal images. In addition, the segmented optical disk can be a reference area for the registration of retinal images acquired in different time or with a different method. Retinal images registration can reveal changes in vessels’ size and disposition inside the optical disk, as well as changes in optical disk size related to serious eye diseases, such as glaucoma and vessels neoplasia [33].

6 Theory

6.1 Classical Snake

The classical snakes are used widely in image processing, in computer vision and in medical imaging applications for allocating object boundaries. The classical parametric elastic models (classical snakes), due to Kass, Witkin and Terzopoulos, change shape and finally are adapted to the real object boundary according to the minimization process of an energy function. The energy function reaches its global minimum when the active contour is smooth and coincides with the real object boundary.

6.2 Gradient Vector Flow Snake (GVF Snake)

The use of classical snake is unfortunately limited because it must be initialized close to the true contour and because of its inefficient convergence in boundary concavities. Xu and Prince proposed an improved snake model in order to overcome classical snakes’ limitations. In particular, they introduced a new external force, the gradient vector flow (GVF), that is computed as the diffusion of gradient vectors of a gray or binary edge map of the image. According to Xu and Prince, the usual external forces are conservative forces that make the active contour unable to successfully approximate boundary concavities. GVF is a non- conservative force. Mathematically, it is based on the Helmholtz theorem, according to which a general static vector field can be separated in a conservative and tubular field. GVF snake was designed to have conservative and tubular characteristics in order to present the desirable properties of adequate initialization range and convergence in boundary concavities.

6.3 Topologically Adaptable Snake (T-Snake)

T-snake comprises another variant of classical snake. It is based on a space partitioning technique to create a topologically adaptable snake. The difference between the classical snake and T-snake is the use of an affine cell image decomposition, so as to iteratively reparametrize the snake and to make topological transformations. Image is partitioned into a net of discrete triangular cells. As the snake evolves under the influence of internal and external forces, it is reparametrized with a new set of nodes and elements. The reparametrization process consists of an efficient calculation of the intersections of the snake with the image net. These intersection points might be nodes of the updated T-snake. In 2D the T-snake is a 2D curve consisting of N nodes connected in series. The T-snake is a discrete version of the classical snake and retains many of its properties.

6.4 Self-Affine Mapping System

The self-affine mapping system is a technique similar to the snake model that adjusts an initial curve to the real object contour, using a self-affine mapping system which is used widely in fractal encoding. This particular method has an advantage over conventional snakes, mostly because of its ability to detect distinct and blurred edges with significant accuracy. It has replaced the process of energy minimization of the classical snake with a contractive self-affine mapping system that is used in the creation of fractal shapes. The parameters of the system are determined after a blockwise self-similarity analysis of the gray image through a simplified algorithm of fractal coding. The use of the self-affine mapping system is due to the fact that the points of the initial map, when they are positioned near image edges, after iterative contractions of the map, they are finally attached to the edges. This attraction can be exploited for contour extraction that has the shape of a curve of similar points rather than a curve of smooth points which are detected by the snake model.

Suppose an image g(x) is defined in \(G\subset R^{n}\). If there exist affine transformations \(a_i :A_i \rightarrow R^{n}\) and \(\beta _i :R^{1}\rightarrow R^{1}\) so that

for some image regions \(A_i \subset G\) then the texture in \(A_i \) is similar to the texture in \(a_i (A_i )\) and the image presents self-similarity in these two regions \(A_i \) and \(a_i (A_i )\). The set \(\left\{ {A_i ,a_i ,\beta _i |i=1,2, \ldots ,I} \right\} \), where I is the total number of regions \(A_i \), is called self-affine model of the image. If Eq. 23.21 holds then it can be written that:

so there arises another self-affine model of the image the set \(\{ a_i (A_i ),a_i^{-1} ,\beta _i^{-1} |i=1,2, \ldots ,I\}\). The transformations \(a_i \) dilate maps \(A_i \) and \(\beta _i \) are unit operators. If \(\Omega \) is the set of subsets of G then the self-affine map \(S:\Omega \rightarrow \Omega \) is defined as:

where X is a subset of G and C a fixed set. When X is known its intersection with \(A_i \) is mapped through the affine transformation \(a_i \) and the union of all these mapped regions with C results in the final map S(X).

For a 2D image \(n=2\), \(A_i \) are squared image regions, subsets of G, and transformations \(a_i \) are defined as:

and

where \(\bar{{x}}_i \) the central point of \(A_i \). Moreover, the self-affine model assumes that:

In order for map S to be determined, the regions \(A_i \) are first defined. Then an adequate algorithm searches for the best values of the parameters \(r_i ,p_i ,q_i \) and \(\tau _i =(s_i ,t_i )\) of the map, so that Eq. 23.25 is satisfied for every \(A_i \), so the self-affine models \(\left\{ {A_i ,a_i ,\beta _i } \right\} \) and \(\left\{ {a_i (A_i ),a_i^{-1} ,\beta _i^{-1} } \right\} \) are determined. The searching is performed through a block-matching algorithm. The block-matching algorithm consists of the following steps:

- STEP 1::

-

Initialization of r, s and t. Also, the difference \(E=g(\mathbf {x})-\beta _i (g(a_i (\mathbf {x})))\) is assigned a very large value.

- STEP 2::

-

For every \(\mathbf {x}\in A_i \) the value of \(g(a_i (\mathbf {x}))\) is computed. Because the sampling points \(\mathbf {x}\) may be between image pixels, the values \(g(a_i (\mathbf {x}))\) are computed using bilinear interpolation.

- STEP 3::

-

Initialization of p and q.

- STEP 4::

-

Computation of \(\beta _i (g(a_i (\mathbf {x})))\) for every \(\mathbf {x}\in A_i \).

- STEP 5::

-

Computation of the difference E. For this computation the Mean Square Error (MSN) or the Absolute Mean Distance (AMD) are usually used. If the new E is smaller than the initial value then the initial value is replaced by the new E and the values of r, s, t, p and q are registered as \(r_i \), \(s_i \), \(t_i \), \(p_i \) and \(q_i \) respectively.

- STEP 6::

-

If all p and q are checked then the algorithm moves to STEP 7, otherwise it goes back to STEP 4.

- STEP 7::

-

If all r, s, and t are checked the algorithm is terminated, otherwise it goes back to STEP 2.

As in the conventional snake model this method must be initialized by a rough contour. The pixels inside the initial curve take value 1 and the rest belongs to the background having value 0. This way a binary image is created, called alpha mask. The purpose of the self-affine mapping system is the fitting of the alpha mask contour to the real object boundary. In order for the initial curve b to be attached to the real contour c three conditions must be satisfied:

-

1.

the set c must equal the invariable set S

-

2.

the transformations \(a_i^{-1} \) must be systolic

-

3.

the set b should be adequately close to c

Moreover, parameters s, t which are defined during the block-matching process should be determined, so that every set \(a_i (A_i )\) contains the corresponding \(A_i \), namely: \(-\frac{r-1}{2}e\le s\) and \(t\le \frac{r-1}{2}e\), where eis the size of regions \(A_i \). Finally, the total number of iterations \(\nu \) should be: \(\nu >\frac{\log \frac{e}{2}}{\log r}\).

7 Results

7.1 New Self-Affine Mapping System Optimization Criterion

In the self-affine mapping system the size of the areas \(a_i (A_i )\) was chosen to be twice the size of the areas \(A_i \), namely \(r=2\) so as condition 2 to holds. The searching area for the block-matching process was \([-\frac{n}{4},\frac{n}{4}]\), where n the size of the area A. When the value of n is small (e.g. \(n=8)\) condition 3 is not satisfied, while when it is large (e.g. \(n=32)\) condition 3 is satisfied, but the final contour is a rough approximation of the optic disk true boundary and condition 1 is now not satisfied and fine details of the object’s boundary are not detected. So, we chose an adapting scheme where n was assigned an initial big value (\(n=32)\), which is gradually decreased to \(n=4\), namely \(e_{\max } =32\) and \(e_{\min } =4\). The number of iterations was set to \(\nu =\frac{\log \frac{e}{2}}{\log r}+1\). In Eq. 23.26 p was set to 1 and q to 0. As optimization criteria of measuring the difference between g(x) and \(\beta _i (g(a_i (x)))\), two criteria were tested and optically evaluated. The first was the classical AMD and the second was the Minimum Distance (MD).

Figure 23.11 presents the final contours using \(n=8\) and \(n=32\) fused in the same optical image (Fig. 23.11a) and the final contour using the adapting scheme (Fig. 23.11b).

From Fig. 23.11b one can observe the strong attraction of mask boundary points from the vessels in the area of the optic disk. Vessels are strong edges. The AMD function is minimized towards these strong edges and the mask boundary points are caged from the edges of the vessels. Figure 23.12 shows two examples of a self-affine mapping system application, where AMD (left column) and MD (right column) were used as optimization functions. MD application results in the detection of optic disk boundary and not the detection of points that belong to strong edges like the vessels. However, the detection of weak edges reduces the degrees of freedom in mapping out the initial contour.

7.2 Comparison of Elastic Models

The different segmentation methods were applied to 26 retinal images 512\(\,\times \,\)512 pixels in size, in order for the optic disk boundary to be extracted. For the implementation of the classical snake the external force, derived from a Gaussian potential field with \(\sigma =3\), was used accompanied with a pressure force with constant weights. In every position of the snake nodes, external force values were calculated using the bilinear interpolation method. Constant weights were also used for the internal forces. Initial contours were placed close to the real object boundary.

The GVF field \(\vec {v}(x,y)=\left[ {u(x,y),\upsilon (x,y)} \right] \), was calculated according to the equations:

were \(f_x \) and \(f_y \)the first derivatives of the edge map f of the image I. The edge map was derived as \(f(x,y)=\left| {\nabla \left[ {G_\sigma (x,y){*}I(x,y)} \right] } \right| \), with \(\sigma =3\). Initial values for u and \(\upsilon \) were \(u_{init} =f_x \) and \(\upsilon _{init} =f_y \). Moreover, \(\mu =0.29\) and 80 total iterations were used for the calculation of the GVF field for all the 26 applications. Initial contours were placed close to the real ones.

For the implementation of the T-snake the external force derived from a Gaussian potential field with \(\sigma =3\) was used, accompanied with a pressure force with constant weights. The initial seed point was chosen to be inside the object of interest. According to this seed point the algorithm initializes a square snake.

The self-affine mapping system was implemented according to Sect. 23.7.1.

Figure 23.13 presents comparatively optic disk extraction using the classical snake model, the GVF model, the T-snake and the self- affine mapping system.

Optic disk extraction with (a) manual by expert, (b) the classical snake (\(\alpha =2\), \(\beta =2\), \(\gamma =1\), w \(=\) 7, w\(_{p}=0.05\), 125 total iterations), (c) the GVF snake (\(\alpha =2\), \(\beta =2\), \(\gamma =1\), w \(=\) 7, w\(_{p}=0.05\), \(\mu =0.29\), 80 iterations for GVF calculation and 125 total iterations), (d) the T-snake (\(\alpha =20\), \(\beta =20\), w \(=\) 71, w\(_{p}=70\), 45 total iterations), (d) the self-affine mapping system (e\(_{min}=4\), e\(_{max}=32\), r \(=\) 2)

For the comparative evaluation of the different deformable models normalized mean square error (nmse), between the real optic disk contour \((I_{real})\) and the final contour \((I_{deformabls})\) extracted by every deformable model method applied to 26 retinal images, was computed. The real contours were drawn by an expert. The nmse was calculated according to the equation:

Fig. 23.14 presents nmse for the four deformable model techniques and for the 26 retinal images.

Figure 23.15 shows the total segmentation time for the four deformable models for the 26 retinal images.

8 Discussion

From Fig. 23.13 the final contour of the classical model is smooth and approximates the real one. GVF snake is an alternative of the classical snake designed to detect complicated boundaries with high curvature and sharp edges. The optic disk boundary does not have such characteristics, it is almost round. The range of the two methods is almost the same. The classical snake and the GVF snake must be initialized close to the real contour. According to Fig. 23.15 the GVF snake algorithm is also slower than the classical snake method, mostly because of the extra time it needs to calculate the external GVF force.

T-snake results in a satisfying optic disk contour (Fig. 23.13d). The biggest advantage of the T-snake algorithm is its range. It is initialized in a point inside the optic disk. Moreover, the total segmentation time of T-snake is small, smaller than any other deformable method. So, T-snake presents a robust and efficient segmentation technique.

The self-affine mapping system is superior in optic disk boundary extraction than the other techniques, according to Figs. 23.13 and 23.14. Furthermore the algorithm is independent from optic disk size and image intensity. Also, with the choice of minimum distance as a matching criterion the caging of the model from the vessels is avoided. Self-affine mapping system seems to present bigger independence from initialization than the classical snake and the GVF snake (Fig. 23.16). The initial contour is placed away from the real one (Fig. 23.16b). The total segmentation time of the algorithm is also adequate, according to Fig. 23.15, since it is faster than the classical snake and the GVF snake. The self-affine mapping system succeeds also in small nmse values (Fig. 23.14). Another advantage of the self-affine mapping system is that this method is self-terminated, a characteristic that the other deformable methods do not present.

In general self-affine mapping system succeeds in approximating very well the true optic disk boundary. The distance between the real and the initial contour depends on the value of \(e_{\max } \). If \(e_{\max } \) is increased the degrees of freedom in lining the initial contour are increased.

9 Conclusions

Iterative image reconstruction algorithms have been proposed as an alternative to conventional analytical methods. Despite their computational complexity, they become more and more popular, mostly because they can produce images with better contrast-to-noise (CNR) and signal-to-noise ratios at a given spatial resolution, compared to FBP techniques. Iterative methods are able to incorporate a model of all the physical phenomena during the acquisition process, including scanner characteristics. Based on predetermined criteria and after a series of successful iterations, they attempt to find the best approach to the true image of radioactivity spatial distribution.

In this paper, different simultaneous iterative reconstruction schemes were applied to data acquired from a simulation of a small-animal PET scanner. A new iterative scheme was discussed named ISWLS. EM-ML, ISRA, WLS, and ISWLS and their OS and MRP versions were implemented and evaluated in terms of task-dependent measures for quantization and detection. ISWLS combines WLS’s reconstruction acceleration with ISRA’s good noise manipulation. ISWLS could be preferable for use in 3-D reconstruction applications, where the precalculation of the factor \({\sum ^{M}_{j=1}}{a_{ij}}{y_{j}}\) which is constant, will lessen the computational cost and demands for high computational memory.

Another important field of medical imaging is image segmentation. It is used especially in automatic anatomical structure and pathological areas detection. Because of the variety of object shapes and the variance in image quality, image segmentation remains a difficult task. Every segmentation algorithm aims at the detection of the image pixels that belong to the object of interest. Deformable models are the most popular image segmentation techniques. They are designed to approximate the significant variance of biological structures with time and from person to person.

In this work various methods of deformable models were compared, which are the classical snake, the gradient vector field snake (GVF snake) and the topology-adaptive snake (t-snake). Also, the method of self-affine mapping system was presented as an alternative of the snake models. The self-affine mapping system was implemented using an adapting scheme. Moreover, Minimum Distance was introduced as an optimization criterion more suitable for optic disk boundary detection. All methods were applied to glaucomatic retinal images with the purpose of segmenting the optical disk. The methods were compared in terms of segmentation accuracy. The self-affine mapping system presents efficient segmentation time, segmentation accuracy and significant independence from initialization.

References

Part I

Alenius, S., Ruotsalainen, U., Astola, J.: Using local median as the location of the prior distribution in iterative emission tomography image reconstruction. In: Proceedings of the IEEE Medical Image Conference (1997)

Anderson, M.M., Mair, B.A., Rao, M., Wu, C.H.: Weighted least-squares reconstruction methods for positron emission tomography. IEEE Trans. Med. Imag. 16(2), 159–165 (1997)

Archer, G.E.B., Titterington, D.M.: The iterative image space reconstruction algorithm (Isra) as an alternative to the EM algorithm for solving positive linear inverse problems. Stat. Sin. 5, 77–96 (1995)

Browne, J., De Pierro, A.R.: A row-action alternative to the EM algorithm for maximizing likelihoods in emission tomography. IEEE Trans. Med. Imag. 15(5), 687–699 (1996)

Cherry, S.R., Gambhir, S.S.: Use of positron emission tomography in animal research. ILAR J. 42(3), 219–231 (2001)

Cherry, S.R., Sorenson, J.A., Phelps, M.E.: Physics in Nuclear Medicine. Saunders-Elsevier (2003)

Comtat, C.M., Defrise, M., Townsend, D.: The FAVOR algorithm for 3D PET data and its implementation using a network of transputers. Phys. Med. Biol. 38, 929–944 (1993)

Fessler, J.A., Hero, A.O.: Space-alternating generalized expectation-maximization algorithm. IEEE Trans. Signal Process. 42(10), 2664–2676 (1994). October

Green, P.J.: On use of the EM algorithm for penalized likelihood estimation. J. R. Stat. Soc. Ser. B 52, 443–452 (1990)

Hanson, K.M.: Chapter 3: Bayesian and related methods in image reconstruction from incomplete data. In: Stark, H. (ed.) Image Recovery: Theory and Applications. Academic Press, Orlando (1987)

Hudson, H.M., Larkin, R.S.: Accelerated image reconstruction using ordered subsets of projection data. IEEE Trans. Med. Imag. 13(4), 601–609 (1994)

Jiang, M., Wang, G.: Convergence studies on iterative algorithms for image reconstruction. IEEE Trans. Med. Imag. 22(5), 569–579 (2003)

Karali, E., Asvestas, P., Nikita, K.S., Matsopoulos, G.K.: Comparison of different global and local automatic registration schemes: an application to retinal images. In: Proceedings of MICCAI 2004. LNCS, vol. 3216, Part 1, pp. 813–820. Springer, Berlin (2004)

Karali, E., Pavlopoulos, S., Koutsouris, D.: Assessment of iterative image reconstruction techniques for small-animal PET imaging applications. In: Proceedings of 8th International Conference on Bioinformatics and Bioengineering (BIBE), Athens, Greece, October 2008 (2008)

Karali, E., Pavlopoulos, S., Lambropoulou, S., Koutsouris, D.: ISWLS: novel algorithm for image reconstruction in PET. IEEE Trans. Inf. Technol. Biomed. 15(13), 381–6 (2011)

Karali, E., Koutsouris, D.: Towards novel regularization approaches to PET image reconstruction. J. Biosci. Med. (JBM) 1, 6–9 (2013)

Phelps, M.E.: PET Molecular Imaging and its Applications. Springer, New York (2004)

Shepp, L.A., Vardi, Y.: Maximum likelihood reconstruction for emission tomography. IEEE Trans. Med. Imag. MI–1(2) (1982)

Tarantola, G., Zito, F., Gerundini, P.: PET instrumentation and reconstruction algorithms in whole-bode applications. J. Nucl. Med. 44(5), 756–768 (2003)

Part II

Alireza, O., Majid, M.: Barry Thomas and Richard Markham, Colour Morphology and Snakes for Optic Disc Localisation (2002)

Cohen, L.D.: On active contour models and balloons. Comput. Vis. Gr. Imag. Process. Imag. Underst. 53(2), 211–218

Ida, T., Sambonsugi, Y.: Self-affine mapping system and its application to object contour extraction. IEEE Trans. Imag. Process. 9(11) (2000)

Ida, T., Sambonsugi, Y., Watanabe, T.: Boundary fitting of extracted objects using LIFS. Syst. Comput. Jpn. 31(8) (2000)

Karali, E., Lambropoulou, S., Koutsouris, D.: Elastic models: a comparative study applied to retinal images. Technology and Health Care, vol. 19, pp. 1–13. IOS Press, Amsterdam (2011)

Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. Int. J. Comput. Vis. 321–331 (1988)

Li, H., Opas, C.: Fundus image features extraction. In: Proceedings of the 22nd Annual EMBS International Conference (2000)

Li, H., Opas, C.: Automatic location of optic disk in retinal images. IEEE (2001)

McInerney, T., Terzopoulos, D.: Deformable models in medical image analysis: a survey. Med. Imag. Anal. 1(2), 91–108 (1996)

Mcinerney, T.: Topologically adaptable deformable models for medical image analysis. Ph.D. Dissertation, University of Toronto (1997)

McInerney, T., Terzopoulos, D.: Medical image segmentation using topologically adaptable surfaces. In: Proceedings of CVRMed’97, France (1997)

McInerney, T., Terzopoulos, D.: Topology adaptive deformable surfaces for medical image volume segmentation. IEEE Trans. Med. Imag. 18(10), 840–850 (1999)

McInerney, T., Terzopoulos, D.: T-snakes: topology adaptive snakes. Med. Imag. Anal. 4, 73–91 (2000)

Rosin, P.L., Marshall, D., Morgan, J.E.: Multimodal retinal imaging: new strategies for the detection of glaucoma. In: IEEE ICIP (2002)

Terzopoulos, D.: Deformable models: classic, topology-adaptive and generalized formulations

Thomas, W., Jean-Claude, K.: Segmentation of color fundus images of the human retina: detection of the optic disk and the vascular tree using morphological techniques (2001)

Xu, C., Prince, J.: Gradient vector flow: a new external force for snakes. In: IEEE Proceedings on Computer Society Conference on Computer Vision and Pattern Recognition, pp. 66–71 (1997)

Xu, C., Prince, J.: Snakes, shapes and gradient vector flow. IEEE Trans. Imag. Process. 7(3) (1998)

Xu, C., Prince, J.L.: Generalized gradient vector flow external forces for active contours. Signal Process. 7(1), 131–139 (1998)

Xu, C., Prince, J.: Gradient vector flow deformable models. Handbook of Medical Imaging. Academic Press, London (2000)

Xu, Yezzi, A., Prince, J.: On the relationship between parametric and geometric active contours. In: Proceedings of the 34th Asilomar Conference on Signals, Systems and Computers, pp. 483-489 (2000)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Karali, E. (2017). Novel Approaches to Medical Information Processing and Analysis. In: Lambropoulou, S., Theodorou, D., Stefaneas, P., Kauffman, L. (eds) Algebraic Modeling of Topological and Computational Structures and Applications. AlModTopCom 2015. Springer Proceedings in Mathematics & Statistics, vol 219. Springer, Cham. https://doi.org/10.1007/978-3-319-68103-0_23

Download citation

DOI: https://doi.org/10.1007/978-3-319-68103-0_23

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68102-3

Online ISBN: 978-3-319-68103-0

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)