Abstract

A novel infill sampling criterion is proposed for a surrogate based global optimization algorithm. Due to the extensive amount of calculations required for the meta-heuristic global optimization methods, a surrogate model was employed. In the surrogate based global optimization, SBGO, an iterative process of constructing a model and sampling new points are repeated until a stopping criterion is met. An infill sampling criterion, ISC, controls which data point should be sampled, however, because the characteristics of a design problem are prone to influence the performance of the algorithm, an adaptive ISC should be developed. Thus, in this study, an algorithm that adaptively searches globally and locally considering the current existing samples is proposed. The novel ISC is integrated with a global search measure weighted minimum distance, WD, which considers not only the most ambiguous regions but also accounts for the response values for higher efficiency. The algorithm was tested on unconstrained mathematical functions including the Dixon-Szego test functions and the results were compared with other SBGO algorithms. Additionally, the algorithm was further expanded and implemented to constrained optimization problems using penalizing coefficients.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The surrogate based global optimization (SBGO) is an optimization method which employs surrogate model(s) to find the global optimum. Compared to previous global optimization methods such as meta-heuristic methods, it is less computationally expensive due to the use of the surrogates. The basic procedure of SBGO follows the given steps; (1) initial points are sampled to build an initial surrogate, (2) afterwards an infill sampling criterion (ISC) decides next point to be evaluated and added to the sample set, (3) steps 1 and 2 are repeated until some defined stopping criteria are met. The performance of an SBGO algorithm is mainly determined by the defined ISC.

SBGO algorithms can be categorized into two groups according to the use of a single or multiple phase; an algorithm that uses a single phase is dominated by a single criterion throughout the entire process of searching whereas in a multiple phase, a search is performed either in a global region or a bounded local region. The search executed in each region is called global search and local search, respectively. A typical example of a single phase SBGO is the EGO algorithm using EI as the ISC [1]. Other examples may include variations of the EI such as generalized EI [2], or RBF based SBGO such as Bjorkman [3], Gutmann [4], CORS-RBF [5] and weighted EI [6] which pre-defined the search pattern. SuperEGO developed by Sasena et al. [7] is an example for multiple phase SBGO.

Despite the fact that the ISC mostly dictates the algorithm, the performance may become heavily influenced by the characteristics of a design problem. Therefore, it is required to develop an ISC that adaptively accounts for the current state of sample points and decides which region to sample next. In this study, a novel two phase ISC is proposed that adaptively switch search regions between global and local according to its conditions. The distinguishable property of the algorithm is that it does not have pre-defined pattern or repetition but rather accounts for the existing points to determine the next iteration.

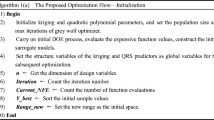

2 Adaptive SBGO

The development objective of the adaptive algorithm is to compensate for the drawbacks caused by the characteristics of a design problem with a fixed criterion. This section will provide the details of the criterion; the overview of SBGO algorithm will be presented first, specific conditions for switching will be then proposed, the weighted minimum distance metric which accounts for the response value in a global search will be presented next and finally, the implementation method to constrained problems will be stated. All optimization procedures from now on will assume to have a goal of finding the minimum.

2.1 Overview of Two Phase SBGO

The schematic for a two phase SBGO is given in Fig. 1. The initial sampling is done in order to create the initial surrogate model. In this study, OLHD [8] is used for initial sample points with a number of 5ndv, ndv being the number of design variables. The model was generated using Kriging [9] with optimized internal parameters. The ISC may decide which domain to search where in our case the global search is always performed at the beginning of the algorithm. The method in choosing either global or local search lies within the ISC. The ISC is generally consisted with the following elements; a term for local search metric and a term for a global search metric. This is expressed in Eq. (1).

The control weight, w, determines if the iteration should be local search or a global search by switching between 0 and 1. Generally, the local search term contains a minimization of the surrogate model to predict a candidate location where the global minimum may exist. The global search term usually consists of a metric for finding the location with the least known information, for example, a point that has the maximum predicted variance. The next two sub-sections will describe the terms in detail and the conditions for deciding the control weight, w.

2.2 Local and Global Search Terms

As previously stated, the local search term should consist of a metric predicting a candidate point where the optimum might be located. This procedure is done using Eq. (2) where the negative complies the search for the minimum of the surrogate when the equation is maximized.

Maximized minimum distance is an often used method to search for a point which lacks known information, i.e. a point that has the potential of giving the most information when sampled. The metric was integrated with weights which adjust according to the response value. The metric will be named as weighted minimum distance and WD in short, defined as Eq. (3).

The \( \bar{y} \) and \( \sigma_{y} \) are the mean and the standard deviation of the response values of the sample points. The WD works similar to the standard minimum distance metric but tends to lean towards areas with smaller response values. Summing up the equations from (1) to (3), the ISC and the optimization problem is defined as Eq. (4).

2.3 Switching Conditions for the ISC

In this section, the details for the conditions controlling the weight in the ISC are presented. The weight changes from 0 to 1 when local to global transition conditions (LtoG) are met and 1 to 0 when global to local transition conditions (GtoL) are met. The rule for this switch is stated below:

-

(1)

LtoG1 condition; when consecutive iterations has no impact of significant improvement

-

(2)

LtoG2 condition; when a last sample point was sampled too close to another existing point

-

(3)

GtoL1 condition; when a new best point has been found

-

(4)

GtoL2 condition; when adequate number of global search is considered to have performed

The following will be the discussion of the four conditions in detail. For the LtoG1 condition, the current best point and the best point from the last iteration are subtracted to calculate the relative improvement. It is then divided with the magnitude of the previous best point for normalization purpose. If the value exceeds a defined threshold, a count is increased and after a number of counts are cumulated, the condition is met and the local search is terminated proceeding to global search after wards i.e. the control weight of the ISC changes from 0 to 1.

For LtoG2 condition, a threshold is defined considering the maximin distance between the existing sample points. The purpose of this changing threshold is to prevent the threshold from becoming too small or too large. If the threshold is too large, local search will always be terminated after a single iteration, if it is too small, it will seldom transit to global search, unable to increase the overall accuracy of the model.

The GtoL1 condition has its purpose of developing a new region with the potential global optimum. This condition is considered by comparing the current best response value and the previous best response value. If the current best is better than the previous, it indicates that a new region with higher potential has been located from the global search and therefore, a more thorough investigation is required leading to local search around that point.

Finally, the GtoL2 condition is added in order to regularly return to local searching and place some restriction in the iteration of global search. To contain the number of consecutive global searches, a threshold and a count is employed as it was with LtoG1 condition. If the consecutive global search points do not find a new best point it will return to local searching for further development of the current best sample. Performing global search with shallow restriction is prone to cause a lot of wasting expensive computation. Therefore, the metric and the thresholds must be decided with caution. In this study for GtoL2 condition, a response deviation of nearest points is considered and defined as Eq. (5).

If the difference between the current and the previous deviations exceeds the threshold i.e. the improvement around the best point is insignificant, the count increases. When the count cumulates enough to the defined value, the control weight of ISC is changed from 1 to 0, in other words, the global search terminates. This metric was observed to insure the prevention of redundant global sampling.

2.4 Expansion for Constrained Problems

There are typically two methods of handling constraints in SBGO algorithms; the penalty method and the probability of feasibility method. A penalized method has an advantage over the probability of feasibility in which it can be sampled at the constraint due to its discrete tendency whereas for the probability of feasibility method it is not easily achieved. Therefore, we have chosen to use the penalty method and applied it over the ISC.

First, a penalty coefficient is added in the WD in order to repel away from infeasible regions during the global search phase. Also, a penalty term is added along with the surrogate model to prevent sampling a violated point.

3 Numerical Examples

To validate the performance and robustness of the algorithm, it has been tested on various mathematical functions including the Dixon-Szego test functions. Details of the test functions can be found in the reference [10]. The result may be heavily influenced by the initial DoE, therefore, 30 different OLHDs were used for each test function and the performance and robustness were measured using the average number of function evaluations (NoE) and the standard deviation of the NoE. Also, the maximum allowed NoE was defined as 200 to sort out the failure. Table 1 is the results for the performance and Table 2 states the success rate and robustness. Other algorithms did not present the success rate nor the deviation in the literature, hence, only the CORS-RBF-Restart [11] is compared for these measures.

The Shekel function results were not given for the EGO algorithm in its paper. It can be observed that the Adaptive SBGO has a comparable performance with the other algorithms. The value in the parentheses is the ranking among the algorithms. It is clear that it always remains within the top three which is a clear advantage when selecting an algorithm without knowing the characteristics of a problem; most of the time in a real world application.

It is shown that the suggested adaptive SBGO withholds a comparable result against the CORS-RBF-Restart algorithm. The Shekel functions seem to press some difficulties for the proposed algorithm, however, it is not a considerably crucial difference.

Table 3 presents the results for the numerical examples of constrained test functions. The test functions used for the constrained problems can be found in the reference [12] which also describes the details of the COBRA algorithm used for comparison.

As it can be seen in the result, the suggested algorithm presents no difficulty for solving constrained optimization problems.

4 Conclusion

In this study a novel infill sampling criterion for surrogate based optimization with conditions governing the search phase is proposed. The purpose of the criterion is to enhance the efficiency of the global optimization process. Note that the criterion is not obliged to a specific surrogate model construction method and can be used for any models.

The suggested algorithm was tested on mathematical functions and the results have shown that the algorithm has a comparable performance along with other previously developed SBGO algorithms. A further study might involve tuning the user parameters more adaptive to the algorithm itself. Since the criterion is not bound to any other aspects of the algorithm, additional conditions and features are expected to be easily augmented.

References

Jones, D.R., Schonlau, M., Welch, W.J.: Efficient global optimization of expensive black-box functions. J. Global Optim. 13, 455–492 (1998)

Schonlau, M.: Computer experiments and global optimization, Ph.D., Statistics, University of Waterloo (1997)

Bjorkman, M., Holmstrom, K.: Global optimization of costly nonconvex functions using radial basis functions. Optim. Eng. 1, 373–397 (2000)

Gutmann, H.M.: A radial basis function method for global optimization. J. Global Optim. 19, 201–227 (2001)

Regis, R.G., Shoemaker, C.A.: Constrained global optimization of expensive black box functions using radial basis functions. J. Global Optim. 31, 153–171 (2005)

Sobester, A., Leary, S.J., Keane, A.J.: On the design of optimization strategies based on global response surface approximation models. J. Global Optim. 33, 31–59 (2005)

Sasena, M.J.: Flexibility and efficiency enhancements for constrained global design optimization with kriging approximations, Ph.D., Mechanical engineering, University of Michigan (2002)

Park, J.S.: Optimal Latin-hypercube designs for computer experiments. J. Stat. Plan. Inference 39, 95–111 (1994)

Sacks, J., Welch, W.J., Mitchell, T.J., Wynn, H.P.: Design and analysis of computer experiments. Stat. Sci. 4, 409–423 (1989)

Dixon, L.C.W., Szego, G.P.: The global optimization problem: an introduction. In: Towards Global Optimization, vol. 2, North-Holland, Amsterdam, pp. 1–15 (1978)

Regis, R.G., Shoemaker, C.A.: Improved strategies for radial basis function methods for global optimization. J. Global Optim. 37, 113–135 (2007)

Regis, R.G.: Constrained optimization by radial basis function interpolation for high-dimensional expensive black-box problems with infeasible initial points. Eng. Optim. 46, 218–243 (2014)

Acknowledgements

This work was supported by “Human Resources Program in Energy Technology” of the Korea Institute of Energy Technology Evaluation and Planning (KETEP), granted financial resource from the Ministry of Trade, Industry & Energy, Republic of Korea. (No. 20164010200860)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Park, D., Chung, IB., Choi, DH. (2018). Surrogate Based Global Optimization Using Adaptive Switching Infill Sampling Criterion. In: Schumacher, A., Vietor, T., Fiebig, S., Bletzinger, KU., Maute, K. (eds) Advances in Structural and Multidisciplinary Optimization. WCSMO 2017. Springer, Cham. https://doi.org/10.1007/978-3-319-67988-4_52

Download citation

DOI: https://doi.org/10.1007/978-3-319-67988-4_52

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-67987-7

Online ISBN: 978-3-319-67988-4

eBook Packages: EngineeringEngineering (R0)