Abstract

Large scale multidimensional data are often available as multiway arrays or higher-order tensors which can be approximately represented in distributed forms via low-rank tensor decompositions and tensor networks. Our particular emphasis is on elucidating that, by virtue of the underlying low-rank approximations, tensor networks have the ability to reduce the dimensionality and alleviate the curse of dimensionality in a number of applied areas, especially in large scale optimization problems and deep learning. We briefly review and provide tensor links between low-rank tensor network decompositions and deep neural networks. We elucidating, through graphical illustrations, that low-rank tensor approximations and sophisticated contractions of core tensors, tensor networks have the ability to perform distributed computations on otherwise prohibitively large volume of data/parameters. Our focus is on the Hierarchical Tucker, tensor train (TT) decompositions and MERA tensor networks in specific applications.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

1 Introduction and Objectives

This paper aims to present some new ideas and methodologies related to tensor decompositions (TDs) and tensor networks models (TNs), especially in applications to deep neural networks (DNNs) and dimensionality reduction. The resurgence of artificial neural systems, especially deep learning neural networks has formed an active frontier of machine learning, signal processing and data mining [1,2,3,4,5,6, 13, 14]. Tensor decompositions (TDs) decompose complex data tensors of exceedingly high volume into their factor (component) matrices, while tensor networks (TNs) decompose higher-order tensors into sparsely interconnected small-scale factor matrices and/or low-order core tensors [7,8,9,10,11,12,13,14]. These low-order core tensors are called “components”, “blocks”, “factors” or simply “cores”. In this way, large-scale data can be approximately represented in highly compressed and distributed formats.

In this paper, the TDs and TNs are treated in a unified way, by considering TDs as simple tensor networks or sub-networks; the terms “tensor decompositions” and “tensor networks” will therefore be used interchangeably. Tensor networks can be thought of as special graph structures which break down high-order tensors into a set of sparsely interconnected low-order core tensors, thus allowing for both enhanced interpretation and computational advantages [12,13,14].

Tensor networks offer a theoretical and computational framework for the analysis of computationally prohibitive large volumes of data, by “dissecting” such data into the “relevant” and “irrelevant” information. In this way, tensor network representations often allow for super-compression of data sets as large as \(10^{8}\) entries, down to the affordable levels of \(10^{5}\) or even less entries [15,16,17,18,19,20,21,22,23,24,25].

Challenges in Big Data Processing. Extreme-scale volumes and variety of modern data are becoming ubiquitous across the science and engineering disciplines. In the case of multimedia (speech, video), remote sensing and medical/biological data, the analysis also requires a paradigm shift in order to efficiently process massive data sets within tolerable time (velocity). Such massive data sets may have billions of entries and are typically represented in the form of huge block matrices and/or tensors. This has spurred a renewed interest in the development of tensor algorithms that are suitable for extremely large-scale data sets.

Apart from the huge Volume, the other features which characterize big data include Veracity, Variety and Velocity (see Fig. 1a and b). Each of the “V features” represents a research challenge in its own right. For example, high volume implies the need for algorithms that are scalable; high Velocity requires the processing of big data streams in near real-time; high Veracity calls for robust and predictive algorithms for noisy, incomplete and/or inconsistent data; high Variety demands the fusion of different data types, e.g., continuous, discrete, binary, time series, images, video, text, probabilistic or multi-view. Some applications give rise to additional “V challenges”, such as Visualization, Variability and Value. The Value feature is particularly interesting and refers to the extraction of high quality and consistent information, from which meaningful and interpretable results can be obtained.

Our objective is to show that tensor networks provide a natural sparse and distributed representation for big data, and address both established and emerging methodologies for tensor-based representations and optimization. Our particular focus is on low-rank tensor network representations, which allow for huge data tensors to be approximated (compressed) by interconnected low-order core tensors [10, 11, 14].

2 Tensor Operations and Graphical Representations of Tensor Networks

Tensors are multi-dimensional generalizations of matrices. A matrix (2nd-order tensor) has two modes, rows and columns, while an Nth-order tensor has N modes for example, a 3rd-order tensor (with three-modes) looks like a cube. Sub-tensors are formed when a subset of tensor indices is fixed. Of particular interest are fibers which are vectors obtained by fixing every tensor index but one, and matrix slices which are two-dimensional sections (matrices) of a tensor, obtained by fixing all the tensor indices but two. It should be noted that block matrices can also be represented by tensors.

We adopt the notation whereby tensors (for \(N\ge 3\)) are denoted by bold underlined capital letters, e.g., \(\underline{\mathbf{X}}\in \mathbb R^{I_{1} \times I_{2} \times \cdots \times I_{N}}\). For simplicity, we assume that all tensors are real-valued, but it is possible to define tensors as complex-valued or over arbitrary fields. Matrices are denoted by boldface capital letters, e.g., \(\mathbf{X}\in \mathbb R^{I \times J}\), and vectors (1st-order tensors) by boldface lower case letters, e.g., \(\mathbf{x}\in \mathbb R^J\). For example, the columns of the matrix \(\mathbf{A}=[\mathbf{a}_1,\mathbf{a}_2, \ldots ,\mathbf{a}_R] \in \mathbb R^{I \times R}\) are the vectors denoted by \(\mathbf{a}_r \in \mathbb R^I\), while the elements of a matrix (scalars) are denoted by lowercase letters, e.g., \(a_{ir}=\mathbf{A}(i,r)\) (for more details regarding notations and basic tensor operations see [10,11,12,13,14, 26].

A specific entry of an Nth-order tensor \(\underline{\mathbf{X}}\in \mathbb R^{I_{1} \times I_{2} \times \cdots \times I_{N}}\) is denoted by \(x_{i_1,i_2,\ldots ,i_N} = \underline{\mathbf{X}}(i_1,i_2, \ldots , i_N) \in \mathbb R\). The order of a tensor is the number of its “modes”, “ways” or “dimensions”, which can include space, time, frequency, trials, classes, and dictionaries. The term “size” stands for the number of values that an index can take in a particular mode. For example, the tensor \(\underline{\mathbf{X}}\in \mathbb R^{I_{1} \times I_{2} \times \cdots \times I_{N}}\) is of order N and size \(I_n\) in all modes-n \((n=1,2,\ldots ,N)\). Lower-case letters e.g., i, j are used for the subscripts in running indices and capital letters I, J denote the upper bound of an index, i.e., \(i=1,2,\ldots ,I\) and \(j=1,2,\ldots , J\). For a positive integer n, the shorthand notation \({<}{n}{>}\) denotes the set of indices \(\{1,2,\ldots ,n\}\).

Notations and terminology used for tensors and tensor networks differ across the scientific communities to this end we employ a unifying notation particularly suitable for machine learning and signal processing research [13, 14].

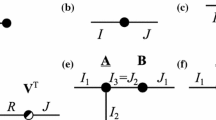

A precise description of tensors and tensor operations is often tedious and cumbersome, given the multitude of indices involved. We grossly simplify the description of tensors and their mathematical operations through diagrammatic representations borrowed from physics and quantum chemistry (see [13, 14, 27] and references therein). In this way, tensors are represented graphically by nodes of any geometrical shapes (e.g., circles, squares, dots), while each outgoing line (“edge”, “leg”, “arm”) from a node represents the indices of a specific mode (see Fig. 2a). In our adopted notation, each scalar (zero-order tensor), vector (first-order tensor), matrix (2nd-order tensor), 3rd-order tensor or higher-order tensor is represented by a circle (or rectangular), while the order of a tensor is determined by the number of lines (edges) connected to it. According to this notation, an Nth-order tensor \(\underline{\mathbf{X}}\in \mathbb R^{I_1 \times \cdots \times I_N}\) is represented by a circle (or any shape) with N branches each of size \(I_n, \; n=1,2, \ldots , N\) (see Sect. 2.1). An interconnection between two circles designates a contraction of tensors, which is a summation of products over a common index (see Fig. 2b).

Hierarchical (multilevel block) matrices are also naturally represented by tensors. All mathematical operations on tensors can be therefore equally performed on block matrices [12, 13].

In this paper, we make extensive use of tensor network diagrams as an intuitive and visual way to efficiently represent tensor decompositions. Such graphical notations are of great help in studying and implementing sophisticated tensor operations. We highlight the significant advantages of such diagrammatic notations in the description of tensor manipulations, and show that most tensor operations can be visualized through changes in the architecture of a tensor network diagram.

2.1 Tensor Operations and Tensor Network Diagrams

Tensor operations benefit from the power of multilinear algebra which is structurally much richer than linear algebra, and even some basic properties, such as the rank, have a more complex meaning.

For convenience, general operations, such as vec\((\cdot )\) or \(\mathrm{diag}(\cdot )\), are defined similarly to the MATLAB syntax.

Multi-indices: By a multi-index \(i = \overline{i_1 i_2 \cdots i_N}\) we refer to an index which takes all possible combinations of values of indices, \(i_1, i_2, \ldots , i_N\), for \(i_n = 1,2,\ldots , I_n\), \(\;n=1,2,\ldots ,N\) and in a specific order. Multi–indices can be defined using the following convention [28]:

Matricization. The matricization operator, also known as the unfolding or flattening, reorders the elements of a tensor into a matrix. Such a matrix is re-indexed according to the choice of multi-index described above, and the following two fundamental matricizations are used extensively.

The mode- n matricization. For a fixed index \(n \in \{1,2,\ldots ,N\}\), the mode-n matricization of an Nth-order tensor, \(\underline{\mathbf{X}}\in \mathbb R^{I_1\times \cdots \times I_N}\), is defined as the (“short” and “wide”) matrix

with \(I_n\) rows and \(I_1 I_2 \cdots I_{n-1} I_{n+1} \cdots I_N\) columns, the entries of which are

Note that the columns of a mode-n matricization, \(\mathbf{X}_{(n)}\), of a tensor \(\underline{\mathbf{X}}\) are the mode-n fibers of \(\underline{\mathbf{X}}\).

The mode- \(\{n\}\) canonical matricization. For a fixed index \(n \in \{1,2,\ldots ,N\}\), the mode-\((1,2,\ldots , n)\) matricization, or simply mode-n canonical matricization, of a tensor \(\underline{\mathbf{X}}\in \mathbb R^{I_1\times \cdots \times I_N}\) is defined as the matrix

with \(I_{1} I_{2} \cdots I_{n}\) rows and \(I_{n+1} \cdots I_{N}\) columns, and the entries

The matricization operator in the MATLAB notation (reverse lexicographic) is given by

As special cases we immediately have

The tensorization of a vector or a matrix can be considered as a reverse process to the vectorization or matricization (see Fig. 3) [14].

Tensor reshaping operations: matricization, vectorization and tensorization. Matricization refers to converting a tensor into a matrix, vectorization to converting a tensor or a matrix into a vector, while tensorization refers to converting a vector, a matrix or a low-order tensor into a higher-order tensor

Matricization (flattening, unfolding) used in tensor reshaping. a Tensor network diagram for the mode-n matricization of an Nth-order tensor, \(\underline{\mathbf{A}}\in \mathbb R^{I_1 \times I_2 \times \cdots \times I_N}\), into a short and wide matrix, \(\mathbf{A}_{(n)} \in \mathbb R^{I_n \;\times \; I_1 \cdots I_{n-1} I_{n+1} \cdots I_N}\). b Mode-\(\{1,2,\ldots ,n\}\)th (canonical) matricization of an Nth-order tensor, \(\underline{\mathbf{A}}\), into a matrix \(\mathbf{A}_{{<}{n}{>}} = \mathbf{A}_{(\overline{i_1 \ldots i_n}\; ; \; \overline{i_{n+1} \ldots i_N})} \in \mathbb R^{I_1 I_2 \cdots I_n \; \times \;I_{n+1} \cdots I_N}\)

The following symbols are used for most common tensor multiplications: \(\circ \) for the outer product \(\otimes \) for the Kronecker product, \(\odot \) for the Khatri–Rao product, \(\circledast \) for the Hadamard (componentwise) product, and \( \times _n\) for the mode-n product. We refer to [13, 14, 26, 29] for more detail regarding the basic notations and tensor operations (Figs. 4 and 5).

Outer product. The central operator in tensor analysis is the outer or tensor product, which for the tensors \(\underline{\mathbf{A}}\in \mathbb R^{I_1 \times \cdots \times I_N}\) and \(\underline{\mathbf{B}}\in \mathbb R^{J_1 \times \cdots \times J_M}\) gives the tensor \(\underline{\mathbf{C}}=\underline{\mathbf{A}}\,\circ \, \underline{\mathbf{B}}\in \mathbb R^{I_1 \times \cdots \times I_N \times J_1 \times \cdots \times J_M }\) with entries \(c_{i_1,\ldots ,i_N,j_1,\ldots ,j_M} = a_{i_1,\ldots ,i_N} \; b_{j_1,\ldots ,j_M}\).

Note that for 1st-order tensors (vectors), the tensor product reduces to the standard outer product of two nonzero vectors, \(\mathbf{a}\in \mathbb R^I\) and \(\mathbf{b}\in \mathbb R^J\), which yields a rank-1 matrix, \(\mathbf{X}=\mathbf{a}\,\circ \, \mathbf{b}= \mathbf{a}\mathbf{b}^{\text {T}} \in \mathbb R^{I \times J}\). The outer product of three nonzero vectors, \(\mathbf{a}\in \mathbb R^I, \; \mathbf{b}\in \mathbb R^J\) and \(\mathbf{c}\in \mathbb R^K\), gives a 3rd-order rank-1 tensor (called pure or elementary tensor), \(\underline{\mathbf{X}}= \mathbf{a}\,\circ \, \mathbf{b}\,\circ \, \mathbf{c}\in \mathbb R^{I \times J \times K}\), with entries \(x_{ijk} =a_i \; b_j \; c_k\).

The outer (tensor) product has been generalized to the nonlinear outer (tensor) products, as follows

where \(\rho \) is, in general, nonlinear suitably chosen function (see [30] and Sect. 7 for more detail).

In a similar way, we can define the generalized Kronecker and the Khatri-Rao products. Generalized Kronecker product of two tensors \(\underline{\mathbf{A}}\in \mathbb R^{I_1 \times I_2 \times \cdots \times I_N}\) and \(\underline{\mathbf{B}}\in \mathbb R^{J_1 \times J_2 \times \cdots \times J_N}\) yields a tensor \(\underline{\mathbf{C}}= \underline{\mathbf{A}}\otimes _{\rho } \underline{\mathbf{B}}\in \mathbb R^{I_1 J_1 \times \cdots \times I_N J_N}\), with entries \(c_{\;\overline{i_1 j_1}, \ldots , \overline{i_N j_N}} = \rho (a_{i_1, \ldots ,i_N}, b_{j_1, \ldots ,j_N})\).

Analogously, we can define a generalized Khatri–Rao product of two matrices \(\mathbf{A}=[\mathbf{a}_1, \ldots , \mathbf{a}_J] \in \mathbb R^{I\times J}\) and \(\mathbf{B}= [\mathbf{b}_1, \ldots , \mathbf{b}_J] \in \mathbb R^{K\times J}\) is a matrix \(\mathbf{C}= \mathbf{A}\odot _{\rho } \mathbf{B}\in \mathbb R^{I K \times J}\), with columns \(\mathbf{c}_j = \mathbf{a}_j \otimes _{\rho } \mathbf{b}_j \in \mathbb R^{IK}\).

CP decomposition, Kruskal tensor. Any tensor can be expressed as a finite sum of rank-1 tensors, in the form

which is exactly the form of the Kruskal tensor, also known under the names of CANDECOMP/PARAFAC, Canonical Polyadic Decomposition (CPD), or simply the CP decomposition in (23). We will use the acronyms CP and CPD.

Tensor rank. The tensor rank, also called the CP rank, is a natural extension of the matrix rank and is defined as a minimum number, R, of rank-1 terms in an exact CP decomposition of the form in (6).

Multilinear products. The mode-n (multilinear) product, also called the tensor-times-matrix product (TTM), of a tensor, \(\underline{\mathbf{A}}\in \mathbb R^{I_{1} \times \cdots \times I_{N}}\), and a matrix, \(\mathbf{B}\in \mathbb R^{J \times I_n}\), gives the tensor

with entries \(c_{i_1,i_2,\ldots ,i_{n-1},j,i_{n+1},\ldots , i_{N}} =\sum _{i_n=1}^{I_n} a_{i_1,i_2,\ldots ,i_N} \; b_{j,i_n}\). An equivalent matrix representation is \(\mathbf{C}_{(n)} =\mathbf{B}\mathbf{A}_{(n)}\), which allows us to employ established fast matrix-by-vector and matrix-by-matrix multiplications when dealing with very large-scale tensors. Efficient and optimized algorithms for TTM are, however, still emerging [31,32,33].

Full Multilinear Product. A full multilinear product, also called the Tucker product,Footnote 1 of an Nth-order tensor, \(\underline{\mathbf{G}}\in \mathbb R^{R_1 \times R_2 \times \cdots \times R_N}\), and a set of N factor matrices, \(\underline{\mathbf{B}}^{(n)} \in \mathbb R^{I_n \times R_n}\) for \(n=1,2,\ldots , N\), performs the multiplications in all the modes and can be compactly written as

Observe that this format corresponds to the Tucker decomposition [26, 34, 35] (see also Sect. 3.1).

Multilinear product of a tensor and a vector (TTV). In a similar way, the mode-n multiplication of a tensor, \(\underline{\mathbf{G}}\in \mathbb R^{R_{1} \times \cdots \times R_{N}}\), and a vector, \(\mathbf{b}\in \mathbb R^{R_n}\) (tensor-times-vector, TTV) yields a tensor

with entries \( c_{r_1,\ldots ,r_{n-1},r_{n+1},\ldots , r_{N}} =\sum _{r_n=1}^{R_n} g_{r_1, \ldots ,r_{n-1}, r_n, r_{n+1},\ldots ,r_N}\; b_{r_n}\).

Note that the mode-n multiplication of a tensor by a matrix does not change the tensor order, while the multiplication of a tensor by vectors reduces its order, with the mode n removed.

Generalized (nonlinear) multilinear tensor products used in deep learning in a compact tensor network notation. a Generalized multilinear product of a tensor, \(\underline{\mathbf{G}}\in \mathbb R^{R_1 \times R_2 \times \cdots \times R_5}\), and five factor (component) matrices, \(\mathbf{B}^{(n)} \in \mathbb R^{I_n \times R_n}\) (\(n=1,2,\ldots ,5\)), yields the tensor \(\underline{\mathbf{C}}= (((((\underline{\mathbf{G}}\,\times ^{\sigma }_1 \,\mathbf{B}^{(1)}) \,\times ^{\sigma }_2\, \mathbf{B}^{(2)}) \,\times ^{\sigma }_3 \,\mathbf{B}^{(3)}) \,\times ^{\sigma }_4\, \mathbf{B}^{(4)}) \,\times ^{\sigma }_5 \,\mathbf{B}^{(5)}) \in \mathbb R^{I_1 \times I_2 \times \cdots \times I_5}\). This corresponds to the generalized Tucker format. c Generalized multi-linear product of a 4th-order tensor, \(\underline{\mathbf{G}}\in \mathbb R^{R_1 \times R_2 \times R_3 \times R_4}\), and three vectors, \(\mathbf{b}_n \in \mathbb R^{R_n}\) \((n=1,2,3)\), yields the vector \(\mathbf{c}= (((\underline{\mathbf{G}}\, \bar{\times }^{\sigma }_1\, \mathbf{b}_1) \,\bar{\times }^{\sigma }_2 \,\mathbf{b}_2) \,\bar{\times }^{\sigma }_3 \,\mathbf{b}_3) \in \mathbb R^{R_4} \), where, in general, \(\sigma \) is a nonlinear activation function

Multilinear products of tensors by matrices or vectors play a key role in deterministic methods for the reshaping of tensors and dimensionality reduction, as well as in probabilistic methods for randomization/sketching procedures and in random projections of tensors into matrices or vectors. In other words, we can also perform reshaping of a tensor through random projections that change its entries, dimensionality or size of modes, and/or the tensor order. This is achieved by multiplying a tensor by random matrices or vectors, transformations which preserve its basic properties [36,37,38,39,40,41,42,43].

Tensor contractions. Tensor contraction is a fundamental and the most important operation in tensor networks, and can be considered a higher-dimensional analogue of matrix multiplication, inner product, and outer product.

In a way similar to the mode-n multilinear product,Footnote 2 the mode-\( (_n^m)\) product (tensor contraction) of two tensors, \(\underline{\mathbf{A}}\in \mathbb R^{I_1 \times I_2 \times \cdots \times I_N}\) and \(\underline{\mathbf{B}}\in \mathbb R^{J_1 \times J_2 \times \cdots \times J_M}\), with common modes, \(I_n=J_m\), yields an \((N+M-2)\)-order tensor, \( \underline{\mathbf{C}}\in \mathbb R^{I_1 \times \cdots \times I_{n-1} \times I_{n+1} \times \cdots \times I_N \times J_{1} \times \cdots \times J_{m-1} \times J_{m+1} \times \cdots \times J_M}\), in the form (see Fig. 6a)

for which the entries are computed as \( c_{i_1,\, \ldots , \, i_{n-1}, \, i_{n+1}, \, \ldots , i_N, \, j_1, \, \ldots , \, j_{m-1}, \, j_{m+1}, \,\ldots , \, j_M} = \sum _{i_n=1}^{I_n} a_{i_1, \ldots , i_{n-1}, \, i_n, \, i_{n+1}, \, \ldots ,\, i_N} \; b_{j_1, \, \ldots , \,j_{m-1}, \, i_n, \, j_{m+1}, \, \ldots , \, j_M}\). This operation is referred to as a contraction of two tensors in single common mode.

Tensors can be contracted in several modes (or even in all modes), as illustrated in Fig. 6. Often, the super- or sub-index, e.g., m, n, will be omitted in a few special cases. For example, the multilinear product of the tensors, \(\underline{\mathbf{A}}\in \mathbb R^{I_{1} \times I_{2} \times \cdots \times I_{N}}\) and \(\underline{\mathbf{B}}\in \mathbb R^{J_{1} \times J_{2} \times \cdots \times J_{M}}\), with common modes, \(I_N=J_1\), can be written as

for which the entries \(c_{\mathbf{i}_{2:N},\mathbf{j}_{2:M}}= \sum _{i=1}^{I_1} a_{i, \mathbf{i}_{2:N}} b_{i,\mathbf{j}_{2:M}}\) by using the MATLAB notation \(\mathbf{i}_{p:q}=\{i_p,i_{p+1},\ldots ,i_{q-1},i_q\}\).

In this notation, the multiplications of matrices and vectors can be written as, \(\mathbf{A}\times ^1_2 \mathbf{B}= \mathbf{A}\times ^1 \mathbf{B}=\mathbf{A}\mathbf{B}\), \(\;\mathbf{A}\times ^2_2 \mathbf{B}=\mathbf{A}\mathbf{B}^{\text {T}}\), \(\;\mathbf{A}\times ^{1,2}_{1,2} \; \mathbf{B}= \mathbf{A}\bar{\times }\mathbf{B}= \langle \mathbf{A}, \mathbf{B}\rangle \), and \(\mathbf{A}\times ^1_2 \;\mathbf{x}=\mathbf{A}\times ^1 \mathbf{x}=\mathbf{A}\mathbf{x}\).

Examples of contractions of two tensors. a Tensor contraction of two 4th-order tensors, along mode-3 in \(\underline{\mathbf{A}}\) and mode-2 in \(\underline{\mathbf{B}}\), yields a 6th-order tensor, \(\underline{\mathbf{C}}= \underline{\mathbf{A}}\; \times _{3}^{2} \; \underline{\mathbf{B}}\in \mathbb R^{I_1 \times I_2 \times I_4 \times J_1 \times J_3 \times J_4}\), with entries \(c_{i_1,i_2,i_4,j_1,j_3,j_4}= \sum _{i_3} \; a_{i_1,i_2,i_3,i_4} \; b_{j_1,i_3,j_3,j_4}\). b Tensor contraction of two 5th-order tensors along the modes 3, 4, 5 in \(\underline{\mathbf{A}}\) and 1, 2, 3 in \(\underline{\mathbf{B}}\) yields a 4th-order tensor, \(\underline{\mathbf{C}}= \underline{\mathbf{A}}\; \times _{5, 4, 3}^{1, 2, 3} \; \underline{\mathbf{B}}\in \mathbb R^{I_1 \times I_2 \times J_4 \times J_5}\). Nonlinear contraction can be also performed similar to formula (5)

In practice, due to the high computational complexity of tensor contractions, especially for tensor networks with loops, this operation is often performed approximately [44,45,46,47].

3 Mathematical and Graphical Representation of Basic Tensor Networks

Tensor networks (TNs) represent a higher-order tensor as a set of sparsely interconnected lower-order tensors (see Fig. 7), and in this way provide computational and storage benefits. The lines (branches, edges) connecting core tensors correspond to the contracted modes while their weights (or numbers of branches) represent the rank of a tensor network,Footnote 3 whereas the lines which do not connect core tensors correspond to the “external” physical variables (modes, indices) within the data tensor. In other words, the number of free (dangling) edges (with weights larger than one) determines the order of a data tensor under consideration, while set of weights of internal branches represents the TN rank.

Illustration of the decomposition of a 9th-order tensor, \(\underline{\mathbf{X}}\in \mathbb R^{I_1 \times I_2 \times \cdots \times I_9}\), into different forms of tensor networks (TNs). In general, the objective is to decompose a very high-order tensor into sparsely (weakly) connected low-order and small size core tensors, typically 3rd-order and 4th-order cores. Top: The Tensor Train (TT) model, which is equivalent to the Matrix Product State (MPS) with closed boundary conditions (CBC). Middle: The Projected Entangled-Pair States (PEPS). Bottom: The Hierarchical Tucker (HT)

3.1 The CP and Tucker Tensor Formats

The CP and Tucker decompositions have long history. For recent surveys and more detailed information we refer to [12,13,14, 26, 48,49,50]. Compared to the CP decomposition, the Tucker decomposition provides a more general factorization of an Nth-order tensor into a relatively small size core tensor and factor matrices, and can be expressed as follows:

where \(\underline{\mathbf{X}}\in \mathbb R^{I_1\times I_2 \times \cdots \times I_N}\) is the given data tensor, \(\underline{\mathbf{G}}\in \mathbb R^{R_1 \times R_2 \times \cdots \times R_N}\) is the core tensor, and \(\mathbf{B}^{(n)} =[\mathbf{b}_1^{(n)},\mathbf{b}_2^{(n)},\ldots ,\mathbf{b}_{R_n}^{(n)}] \in \mathbb R^{I_n\times R_n}\) are the mode-n factor (component) matrices, \(n=1,2,\ldots ,N\) (see Fig. 8). The core tensor (typically, \(R_n \ll I_n\)) models a potentially complex pattern of mutual interaction between the vectors in different modes. The model in (11) is often referred to as the Tucker-N model.

Using the properties of the Kronecker tensor product, the Tucker-N decomposition in (11) can be expressed in an equivalent vector form as

where the multi-indices are ordered in a reverse lexicographic order (little-endian).

Illustration of the standard Tucker and Tucker-CP decompositions, where the objective is to compute the factor matrices, \(\mathbf{B}^{(n)}\), and the core tensor, \(\underline{\mathbf{G}}\). Tucker decomposition of a 3rd-order tensor, \(\underline{\mathbf{X}}\cong \underline{\mathbf{G}}\times _1 \mathbf{B}^{(1)} \times _2 \mathbf{B}^{(2)} \times _3 \mathbf{B}^{(3)}\). In some applications, the core tensor can be further approximately factorized using the CP decomposition as \(\underline{\mathbf{G}}\cong \sum _{r=1}^R \mathbf{a}_r \,\circ \, \mathbf{b}_r \,\circ \, \mathbf{c}_r\) or alternatively using TT/HT decompositions. Graphical representation of the Tucker-CP decomposition for a 3rd-order tensor, \(\underline{\mathbf{X}}\cong \underline{\mathbf{G}}\times _1 \mathbf{B}^{(1)} \times _2 \mathbf{B}^{(2)} \times _3 \mathbf{B}^{(3)} =\llbracket \underline{\mathbf{G}}; \mathbf{B}^{(1)}, \mathbf{B}^{(2)}, \mathbf{B}^{(3)} \rrbracket \cong (\underline{\varvec{\varLambda }} \times _1 \mathbf{A}^{(1)} \times _2 \mathbf{A}^{(2)} \times _3 \mathbf{A}^{(3)}) \times _1 \mathbf{B}^{(1)} \times _2 \mathbf{B}^{(2)} \times _3 \mathbf{B}^{(3)} = \llbracket \underline{\varvec{\varLambda }}; \; \mathbf{B}^{(1)} \mathbf{A}^{(1)}, \; \mathbf{B}^{(2)} \mathbf{A}^{(2)}, \; \mathbf{B}^{(3)} \mathbf{A}^{(3)} \rrbracket \)

Note that the CP decomposition can be considered as a special case of the Tucker decomposition, whereby the cube core tensor has nonzero elements only on the main diagonal. In contrast to the CP decomposition, the unconstrained Tucker decomposition is not unique. However, constraints imposed on all factor matrices and/or core tensor can reduce the indeterminacies to only column-wise permutation and scaling, thus yielding a unique core tensor and factor matrices [51].

3.2 Operations in the Tucker Format

If very large-scale data tensors admit an exact or approximate representation in their TN formats, then most mathematical operations can be performed more efficiently using the so obtained much smaller core tensors and factor matrices.

As illustrative example, consider the Nth-order tensors \( \underline{\mathbf{X}}\) and \( \underline{\mathbf{Y}}\) in the Tucker format, given by

for which the respective multilinear ranks are \(\{R_1,R_2,\ldots ,R_N\}\) and \(\{Q_1,Q_2,\ldots ,Q_N\}\), then the following mathematical operations can be performed directly in the Tucker format, which admits a significant reduction in computational costs [13, 52,53,54]:

-

The addition of two Tucker tensors of the same order and sizes

$$\begin{aligned} \underline{\mathbf{X}}+ \underline{\mathbf{Y}}= \llbracket \underline{\mathbf{G}}_X \oplus \underline{\mathbf{G}}_Y; \; [\mathbf{X}^{(1)}, \mathbf{Y}^{(1)}], \ldots , [\mathbf{X}^{(N)}, \mathbf{Y}^{(N)}] \rrbracket , {XXXX} \end{aligned}$$(14)where \(\oplus \) denotes a direct sum of two tensors, and \([\mathbf{X}^{(n)},\mathbf{Y}^{(n)}] \in \mathbb R^{I_n \times (R_n + Q_n)}\), \(\;\;\mathbf{X}^{(n)} \in \mathbb R^{I_n \times R_n}\) and \(\mathbf{Y}^{(n)} \in \mathbb R^{I_n \times Q_n}, \; \forall n\).

-

The Kronecker product of two Tucker tensors of arbitrary orders and sizes

$$\begin{aligned} \underline{\mathbf{X}}\otimes \underline{\mathbf{Y}}= \llbracket \underline{\mathbf{G}}_X \otimes \underline{\mathbf{G}}_Y; \; \mathbf{X}^{(1)} \otimes \mathbf{Y}^{(1)}, \ldots , \mathbf{X}^{(N)} \otimes \mathbf{Y}^{(N)}\rrbracket . \quad \end{aligned}$$(15) -

The Hadamard or element-wise product of two Tucker tensors of the same order and the same sizes

$$\begin{aligned} \underline{\mathbf{X}}\circledast \underline{\mathbf{Y}}= \llbracket \underline{\mathbf{G}}_X \otimes \underline{\mathbf{G}}_Y; \; \mathbf{X}^{(1)} \odot _1 \mathbf{Y}^{(1)}, \ldots , \mathbf{X}^{(N)} \odot _1 \mathbf{Y}^{(N)}\rrbracket ,\qquad \end{aligned}$$(16)where \(\odot _1\) denotes the mode-1 Khatri–Rao product, also called the transposed Khatri–Rao product or row-wise Kronecker product.

-

The inner product of two Tucker tensors of the same order and sizes can be reduced to the inner product of two smaller tensors by exploiting the Kronecker product structure in the vectorized form, as follows

$$\begin{aligned} \langle \underline{\mathbf{X}}, \underline{\mathbf{Y}}\rangle&= \text{ vec }(\underline{\mathbf{X}})^{\text {T}} \; \text{ vec }(\underline{\mathbf{Y}}) \\&= \text{ vec }(\underline{\mathbf{G}}_X)^{\text {T}} \left( \bigotimes _{n=1}^N \mathbf{X}^{(n)\;\text {T}} \right) \left( \bigotimes _{n=1}^N \mathbf{Y}^{(n)} \right) \text{ vec }(\underline{\mathbf{G}}_Y) \nonumber \\&= \text{ vec }(\underline{\mathbf{G}}_X)^{\text {T}} \left( \bigotimes _{n=1}^N \mathbf{X}^{(n)\,\text {T}} \; \mathbf{Y}^{(n)} \right) \; \text{ vec }(\underline{\mathbf{G}}_Y) \nonumber \\&= \langle \llbracket \underline{\mathbf{G}}_X; (\mathbf{X}^{(1)\,\text {T}} \; \mathbf{Y}^{(1)}), \ldots , (\mathbf{X}^{(N)\,\text {T}} \; \mathbf{Y}^{(N)}) \rrbracket , \underline{\mathbf{G}}_Y \rangle . \nonumber \end{aligned}$$(17) -

The Frobenius norm can be computed in a particularly simple way if the factor matrices are orthogonal, since then all products \(\mathbf{X}^{(n)\,\text {T}} \, \mathbf{X}^{(n)}, \; \forall n\), become the identity matrices, so that

$$\begin{aligned} \Vert \mathbf{X}\Vert _F&= \langle \underline{\mathbf{X}}, \underline{\mathbf{X}}\rangle \nonumber \\&= \text{ vec }\left( \llbracket \underline{\mathbf{G}}_X; (\mathbf{X}^{(1)\,\text {T}} \; \mathbf{X}^{(1)}), \ldots , (\mathbf{X}^{(N)\,\text {T}} \; \mathbf{X}^{(N)}) \rrbracket \right) ^{\text {T}} \text{ vec }(\underline{\mathbf{G}}_X) \nonumber \\&= \text{ vec }(\underline{\mathbf{G}}_X)^{\text {T}} \; \text{ vec }(\underline{\mathbf{G}}_X) = \Vert \underline{\mathbf{G}}_X\Vert _F. \end{aligned}$$(18) -

The N-D discrete convolution of tensors \(\underline{\mathbf{X}}\in \mathbb R^{I_1 \times \cdots \times I_N}\) and \(\underline{\mathbf{Y}}\in \mathbb R^{J_1 \times \cdots \times J_N}\) in their Tucker formats can be expressed as

$$\begin{aligned} \underline{\mathbf{Z}}= & {} \underline{\mathbf{X}}*\underline{\mathbf{Y}}= \llbracket \underline{\mathbf{G}}_Z; \mathbf{Z}^{(1)}, \ldots , \mathbf{Z}^{(N)} \rrbracket \\\in & {} \mathbb R^{(I_1+J_1-1) \times \cdots \times (I_N+J_N-1)}. \nonumber \end{aligned}$$(19)If \(\{R_1,R_2,\ldots ,R_N\}\) is the multilinear rank of \(\underline{\mathbf{X}}\) and \(\{Q_1,Q_2,\ldots ,Q_N\}\) the multilinear rank \(\underline{\mathbf{Y}}\), then the core tensor \(\underline{\mathbf{G}}_Z = \underline{\mathbf{G}}_X \otimes \underline{\mathbf{G}}_Y \in \mathbb R^{R_1 Q_1 \times \cdots \times R_N Q_N} \) and the factor matrices

$$\begin{aligned} \mathbf{Z}^{(n)} = \mathbf{X}^{(n)} \boxdot _1 \mathbf{Y}^{(n)} \in \mathbb R^{(I_n+J_n-1) \times R_n Q_n}, \end{aligned}$$(20)where \(\mathbf{Z}^{(n)}(:,s_n) = \mathbf{X}^{(n)}(:,r_n) *\mathbf{Y}^{(n)}(:,q_n) \in \mathbb R^{(I_n+J_n-1)}\) for \(s_n= \overline{r_n q_n} = 1,2, \ldots , R_n Q_n\).

-

Super Fast discrete Fourier transform (MATLAB functions fftn\((\underline{\mathbf{X}})\) and fft\((\mathbf{X}^{(n)}, [], 1)\)) of a tensor in the Tucker format

$$\begin{aligned} \mathcal{{F}} (\underline{\mathbf{X}}) = \llbracket \underline{\mathbf{G}}_X; \mathcal{{F}} (\mathbf{X}^{(1)}), \ldots , \mathcal{{F}} (\mathbf{X}^{(N)})\rrbracket . \end{aligned}$$(21)Note that if the data tensor admits low multilinear rank approximation, then performing the FFT on factor matrices of relatively small size \(\mathbf{X}^{(n)} \in \mathbb R^{I_n \times R_n}\), instead of a large-scale data tensor, decreases considerably computational complexity. This approach is referred to as the super fast Fourier transform in Tucker format.

Similar operations can be performed in other TN formats [13].

4 Curse of Dimensionality and Separation of Variables for Multivariate Functions

The term curse of dimensionality was coined by Bellman [55] to indicate that the number of samples needed to estimate an arbitrary function with a given level of accuracy grows exponentially with the number of variables, that is, with the dimensionality of the function. In a general context of machine learning and the underlying optimization problems, the “curse of dimensionality” may also refer to an exponentially increasing number of parameters required to describe the data/system or an extremely large number of degrees of freedom. The term “curse of dimensionality”, in the context of tensors, refers to the phenomenon whereby the number of elements, \(I^N\), of an Nth-order tensor of size \((I \times I \times \cdots \times I)\) grows exponentially with the tensor order, N. Tensor volume can therefore easily become prohibitively big for multiway arrays for which the number of dimensions (“ways” or “modes”) is very high, thus requiring huge computational and memory resources to process such data. The understanding and handling of the inherent dependencies among the excessive degrees of freedom create both difficult to solve problems and fascinating new opportunities, but comes at a price of reduced accuracy, owing to the necessity to involve various approximations.

The curse of dimensionality can be alleviated or even fully dealt with through tensor network representations; these naturally cater for the excessive volume, veracity and variety of data (see Fig. 1) and are supported by efficient tensor decomposition algorithms which involve relatively simple mathematical operations. Another desirable aspect of tensor networks is their relatively small-scale and low-order core tensors, which act as “building blocks” of tensor networks. These core tensors are relatively easy to handle and visualize, and enable super-compression of the raw, incomplete, and noisy huge-scale data sets. This suggests a solution to a more general quest for new technologies for processing of exceedingly large data sets within affordable computation times [13, 18, 56,57,58].

To address the curse of dimensionality, this work mostly focuses on approximative low-rank representations of tensors, the so-called low-rank tensor approximations (LRTA) or low-rank tensor network decompositions.

A tensor is said to be in a full or raw format when it is represented as an original (raw) multidimensional array [59], however, distributed storage and processing of high-order tensors in their full format is infeasible due to the curse of dimensionality. The sparse format is a variant of the full tensor format which stores only the nonzero entries of a tensor, and is used extensively in software tools such as the Tensor Toolbox [60] and in the sparse grid approach [61,62,63].

As already mentioned, the problem of huge dimensionality can be alleviated through various distributed and compressed tensor network formats, achieved by low-rank tensor network approximations.

The underpinning idea is that by employing tensor networks formats, both computational costs and storage requirements may be considerably reduced through distributed storage and computing resources. It is important to note that, except for very special data structures, a tensor cannot be compressed without incurring some compression error, since a low-rank tensor representation is only an approximation of the original tensor.

The concept of compression of multidimensional large-scale data by tensor network decompositions can be intuitively explained as follows [13]. Consider the approximation of an N-variate function \(h(\mathbf{x})= h(x_1,x_2,\ldots ,x_N)\) by a finite sum of products of individual functions, each depending on only one or a very few variables [64,65,66,67]. In the simplest scenario, the function \(h(\mathbf{x})\) can be (approximately) represented in the following separable form

In practice, when an N-variate function \(h(\mathbf{x}) \) is discretized into an Nth-order array, or a tensor, the approximation in (22) then corresponds to the representation by rank-1 tensors, also called elementary tensors (see Sect. 2.1). Observe that with \(I_n, \;n=1,2,\ldots ,N\) denoting the size of each mode and \(I=\max _n \{I_n\}\), the memory requirement to store such a full tensor is \(\prod _{n=1}^N I_n \le I^N\), which grows exponentially with N. On the other hand, the separable representation in (22) is completely defined by its factors, \(h^{(n)}(x_n), \;(n=1,2, \ldots ,N\)), and requires only \(\sum _{n=1}^N I_n \ll I^N\) storage units.

If \(x_1,x_2, \ldots , x_N\) are statistically independent random variables, their joint probability density function is equal to the product of marginal probabilities, \(p(\mathbf{x}) = p^{(1)}(x_1) p^{(2)} (x_2) \ldots p^{(N)}(x_N)\), in an exact analogy to outer products of elementary tensors. Unfortunately, the form of separability in (22) is rather rare in practice.

It should be noted that a function \(h (x_1, x_2)\) is a continuous analogue of a matrix, say \(\mathbf{H}\in \mathbb R^{I_1 \times I_2}\), while a function \(h(x_1, \ldots , x_N)\) in N dimensions is a continuous analogue of an N-order grid tensor \(\underline{\mathbf{H}}\in \mathbb R^{I_1 \times \cdots \times I_N}\). In other words, the discretization of a continuous score function \(h(x_1, x_2, \ldots , x_N)\) on a hyper-cube leads to a grid tensor of order N. Specifically, we make use of a grid tensor that approximates and/or interpolates \(h(x_1, \ldots , x_N)\) on a grid of points.

The concept of tensor networks rests upon generalized (full or partial) separability of the variables of a high dimensional function. This can be achieved in different tensor formats, including:

-

1.

The Canonical Polyadic (CP) format, where

$$\begin{aligned} h(x_1,x_2,\ldots ,x_N)\cong \sum _{r=1}^R h_{r}^{(1)}(x_1) \, h_{r}^{(2)} (x_2) \cdots h_{r}^{(N)}(x_N), \end{aligned}$$(23)in an exact analogy to (22). In a discretized form, the above CP format can be written as an Nth-order tensor

$$\begin{aligned} \underline{\mathbf{H}}\cong \sum _{r=1}^R {\mathbf{h}}_{\;r}^{(1)} \,\circ \, {\mathbf{h}}_{\;r}^{(2)} \,\circ \, \cdots \,\circ \, {\mathbf{h}}_{\;r}^{(N)} \in \mathbb R^{I_1 \times I_2 \times \cdots \times I_N}, \end{aligned}$$(24)where \({\mathbf{h}}_{\;r}^{(n)} \in \mathbb R^{I_n}\) denotes a discretized version of the univariate function \(h_r^{(n)}(x_n)\), symbol \(\circ \) denotes the outer product, and R is the tensor rank.

-

2.

The Tucker format, given by (see Sect. 3.1)

$$\begin{aligned} h(x_1,\ldots ,x_N) \cong \sum _{r_1=1}^{R_1} \cdots \sum _{r_N=1}^{R_N} g_{r_1,\ldots ,r_N} \; h_{r_1}^{(1)}(x_1) \cdots h_{r_N}^{(N)}(x_N), \end{aligned}$$(25)and its distributed tensor network variants,

-

3.

The Tensor Train (TT) format (see Sect. 6.2), in the form

$$\begin{aligned} h(x_1,x_2,\ldots ,x_N) \cong&\sum _{r_1=1}^{R_1} \sum _{r_2=1}^{R_2} \ldots \sum _{r_{N-1}=1}^{R_{N-1}} h_{r_1}^{(1)}(x_1) \, h_{r_1 \, r_2}^{(2)} (x_2) \cdots \nonumber \\ \cdots&h_{r_{N-2} \, r_{N-1}}^{(N-2)} (x_{N-1})\; h_{r_{N-1}}^{(N)}(x_N), \end{aligned}$$(26)with the equivalent compact matrix representation

$$\begin{aligned} h(x_1,x_2,\ldots ,x_N) \cong \mathbf{H}^{(1)} (x_1) \, \mathbf{H}^{(2)} (x_2) \cdots \mathbf{H}^{(N)} (x_N), \end{aligned}$$(27)where \(\mathbf{H}^{(n)}(x_n) \in \mathbb R^{R_{n-1} \times R_n}\), with \(R_0=R_N=1\).

All the above approximations adopt the form of “sum-of-products” of single-dimensional functions, a procedure which plays a key role in all tensor factorizations and decompositions.

Indeed, in many applications based on multivariate functions, a relatively good approximations are obtained with a surprisingly small number of factors; this number corresponds to the tensor rank, R, or tensor network ranks, \(\{R_1,R_2,\ldots , R_N\}\) (if the representations are exact and minimal). However, for some specific cases this approach may fail to obtain sufficiently good low-rank TN approximations [67]. The concept of generalized separability has already been explored in numerical methods for high-dimensional density function equations [22, 66, 67] and within a variety of huge-scale optimization problems [13, 14].

To illustrate how tensor decompositions address excessive volumes of data, if all computations are performed on a CP tensor format in (24) and not on the raw Nth-order data tensor itself, then instead of the original, exponentially growing, data dimensionality of \(I^N\), the number of parameters in a CP representation reduces to NIR, which scales linearly in the tensor order N and size I. For example, the discretization of a 5-variate function over 100 sample points on each axis would yield the difficulty to manage \(100^5 =\) 10,000,000,000 sample points, while a rank-2 CP representation would require only \(5 \times 2 \times 100 = 1000\) sample points.

In contrast to CP decomposition algorithms, TT tensor network formats in (26) exhibit both very good numerical properties and the ability to control the error of approximation, so that a desired accuracy of approximation is obtained relatively easily [13, 68,69,70]. The main advantage of the TT format over the CP decomposition is the ability to provide stable quasi-optimal rank reduction, achieved through, for example, truncated singular value decompositions (tSVD) or adaptive cross-approximation [64, 71, 72]. This makes the TT format one of the most stable and simple approaches to separate latent variables in a sophisticated way, while the associated TT decomposition algorithms provide full control over low-rank TN approximations.Footnote 4 We therefore, make extensive use of the TT format for low-rank TN approximations and employ the TT toolbox software for efficient implementations [68]. The TT format will also serve as a basic prototype for high-order tensor representations, while we also consider the Hierarchical Tucker (HT) and the Tree Tensor Network States (TTNS) formats (having more general tree-like structures) whenever advantageous in applications [13].

Furthermore, the concept of generalized separability of variables and the tensorization of structured vectors and matrices allows us to to convert a wide class of huge-scale optimization problems into much smaller-scale interconnected optimization sub-problems which can be solved by existing optimization methods [11, 14].

The tensor network optimization framework is therefore performed through the two main steps:

-

Tensorization of data vectors and matrices into a high-order tensor, followed by a distributed approximate representation of a cost function in a specific low-rank tensor network format.

-

Execution of all computations and analysis in tensor network formats (i.e., using only core tensors) that scale linearly, or even sub-linearly (quantized tensor networks), in the tensor order N. This yields both the reduced computational complexity and distributed memory requirements.

The challenge is to extend beyond the standard Tucker and CP tensor decompositions, and to demonstrate the perspective of TNs in extremely large-scale data analytic, together with their role as a mathematical backbone in the discovery of hidden structures in prohibitively large-scale data. Indeed, TN models provide a framework for the analysis of linked (coupled) blocks of tensors with millions and even billions of non-zero entries [13, 14].

5 Tensor Networks Approaches for Deep Learning

Revolution (breakthroughs) in the fields of Artificial Intelligence (AI) and Machine Learning triggered by class of deep convolutional neural networks (DCNNs), often simply called CNNs, has been a vehicle for a large number of practical applications and commercial ventures in computer vision, speech recognition, language processing, drug discovery, biomedical informatics, recommender systems, robotics, games, and artificial creativity, to mention just a few.

The renaissance of deep learning neural networks [5, 6, 73, 74] has both created an active frontier of machine learning and has provided many advantages in applications, to the extent that the performance of DNNs in multi-class classification problems can be similar or even better than what is achievable by humans.

Deep learning is highly interesting in very large-scale data analysis for many reasons, including the following [14]:

-

1.

High-level representations learnt by deep NN models, that are easily interpretable by humans, can also help us to understand the complex information processing mechanisms and multi-dimensional interaction of neuronal populations in the human brain;

-

2.

Regarding the degree of nonlinearity and multi-level representation of features, deep neural networks often significantly outperform their shallow counterparts;

-

3.

In big data analytic, deep learning is very promising for mining structured data, e.g., for hierarchical multi-class classification of a huge number of images.

It is well known that both shallow and deep NNs are universal function approximators in the sense that they are able to approximate arbitrarily well any continuous function of N variables on a compact domain, under the condition that a shallow network has an unbounded width (i.e., the size of a hidden layer), that is, an unlimited number of parameters. In other words, a shallow NN may require a huge (intractable) number of parameters (curse of dimensionality), while DNNs can perform such approximations using a much smaller number of parameters.

Universality refers to the ability of a deep learning network to approximate any function when no restrictions are imposed on its size. On the other hand, depth efficiency refers to the case when a function realized by polynomially-sized deep neural network requires shallow networks to have super-polynomial (exponential) size for the same accuracy of approximation (course of dimensionality). This is often referred to as the expressive power of depth.

Despite recent advances in the theory of DNNs, there are several open fundamental challenges (or open problems) related to understanding high performance DNNs, especially the most successful and perspective DCNNs [13, 14]:

-

Theoretical and practical bounds on the expressive power of a specific architecture, i.e., quantification of the ability to approximate or learn wide classes of unknown nonlinear functions;

-

Ways to reduce the number of parameters without a dramatic reduction in performance;

-

Ability to generalize while avoiding overfitting in the learning process;

-

Fast learning and the avoidance “bad” local and spurious minima, especially for highly nonlinear score (objective) functions;

-

Rigorous investigation of the conditions under which deep neural networks are “much better” the shallow networks (i.e., NNs with one hidden layer).

The aim of this section is to discuss the many advantages of tensor networks in addressing the first two of the above challenges and to build up both intuitive and mathematical links between DNNs and TNs. Revealing such links and inherent connections will both cross-fertilize deep learning and tensor networks and provide new insights.

In addition to establishing the existing and developing new links, this will also help to optimize existing DNNs and/or generate new architectures with improved performances.

We shall first present an intuitive approach using a simplified hierarchical Tucker (HT) model, followed by alternative simple but efficient, tensor train/tensor chain (TT/TC) architectures. We also propose to use more sophisticated TNs, such as MERA tensor network models in order to enable more flexibility, improved performance, and/or higher expressive power of the next generation of DCCNs.

5.1 Why Tensor Networks Are Important in Deep Learning?

Several research groups have recently investigated the application of tensor decompositions to simplify DNNs and to establish links between the deep learning and low-rank tensor networks [14, 75,76,77,78,79,80]. For example, [80] presented a general and constructive connection between Restricted Boltzmann Machines (RBM), which is a fundamental basic building block in class of Deep Boltzmann Machines, and (TNs) together with the correspondence between general Boltzmann machines and TT/MPS. In a series of research papers [30, 79, 81, 82] the authors analyze the expressive power of a class of DCNNs using simplified Hierarchal Tucker (HT) models (see the next sections). Particularly, Convolutional Arithmetic Circuits (ConvAC), also known as Sum-Product Networks, and Convolutional Rectifier Networks (CRN) have been considered as HT model. They claim that a shallow (single hidden layer) network realizes the classic CP decomposition, whereas a deep network with \(\log _2 N\) hidden layers realizes Hierarchical Tucker (HT) decomposition (see the next section). Some researchers also argued that the “unreasonable success” of deep learning can be explained by inherent law of physics, such as the theory of TNs that often employ physical constraints locality, symmetry, compositional hierarchical functions, entropy, and polynomial log-probability, imposed on measurements or input training data [77, 80, 83]. In fact, a very wide spectrum of tensor networks can be potentially used to model and analyze some specific classes of DNNs, in order to obtain simpler and/or more efficient neural networks in the sense that they could provide more expressive power or reduced complexity. Such an approach not only promises to open the door to various mathematical and algorithmic tools for enhanced analysis of DNNs, but also allows us to design novel multi-layer architectures and practical implementations of various deep learning systems. In other words, the consideration of tensor networks in this context may give rise to new NN architectures which could be even potentially superior to the existing ones, but have so far been overlooked by practitioners. Furthermore, methods used for reducing or approximating TNs could be a vehicle to achieve more efficient DNNs, with a reduced number of parameters. This follows from the facts that redundancy is inherent both in TNs and DNNs. Moreover, both TNs and DNNs are usually not unique. For example, two NNs with different connection weights and biases may result into the modeling the same nonlinear function. Therefore, the knowledge about redundancy in TNs can help simplify DNNs [14].

The general concept of optimization of DNNs via TNs is illustrated in Fig. 9. Given a specific DNN, we first construct an equivalent TN representation of the given DNN, then the TN is transformed into its reduced or canonical form by performing, e.g., the truncated SVD at each rank (bond). This will reduce the rank dimensions to the minimal requirement determined by a desired accuracy of approximation. Finally, we map back the reduced and optimized TN to another DNN. Since a rounded (approximated) TN has smaller ranks dimensions, a final DNN can be simpler than the original one,and with the same or slightly reduced performance.

Optimization of Deep Neural Networks (DNNs) using The Tensor Networks (TNs) approach. In order to simplify a specific DNN and reduce the number of its parameters, we first transform the DNN into a specific TN, e.g., TT/MPS, then transform the approximated (with reduced rank) TN back to a new optimized DNN. Such transformation can performed in a layer by layer fashion, or globally for the whole DNN. For detailed discussions of such mappings for the Restricted Boltzmann Machine (RBM) see [80]. Optionally, we may choose to first construct and learn (e.g., via tensor completion) a tensor network and then transform it to an equivalent DNN

It should be noted that, in practice, low-rank TN approximations have many potential advantages over a direct reduction of redundant DNNs, due to availability of many efficient optimization methods to reduce the number of parameters and achieve a pre-specified approximation error. Moreover, low-rank tensor networks are capable of avoiding the curse of dimensionality through low-order sparsely interconnected core tensors.

In the past two decades, quantum physicists and computer scientists have developed solid theoretical understanding and efficient numerical techniques for low-rank TN decompositions.

The entanglement entropy, Renyi’s entropy, entanglement spectrum and long range correlations are four of the most widely used quantities (calculated from a spatial reduced density matrix) investigated in the theory of tensor networks. The spatial reduced density matrix is determined by splitting a TN into two parts, say, regions A and B, where a density matrix in region A is given by integrating out all the degrees of freedom in region B. The entanglement spectra are determined by the eigenvalues of the reduced density matrix [84, 85].

Entanglement is a physical phenomenon that occurs when pairs or groups of particles, such as photons, electrons, or qubits, are generated or interact in such way that the quantum state of each particle cannot be described independently of the others, so that a quantum state must be described for the system as a whole. Entanglement entropy is therefore a measure for the amount of entanglement. Strictly speaking, entanglement entropy is a measure of how quantum information is stored in a quantum state and it is mathematically expressed as the von Neumann entropy of the reduced density matrix. Entanglement entropy characterizes the information content of a bipartition of a specific TN. Furthermore, the entanglement area law explains that the entanglement entropy increases only proportionally to the boundary between the two tensor sub-networks. Also entanglement entropy characterizes the information content of the distribution of singular values of a matricized tensor, and can be viewed as a proxy for the correlations between the two partitions; uncorrelated data has zero entanglement entropy at any bipartition.

Note that TNs are usually designed to efficiently represent large systems which exhibit a relatively low entanglement entropy. In practice, we often need to only care about a small fraction of the input training data among a huge number of possible inputs similar to deep neural networks. This all suggest that certain guiding principles in DNNs correspond to the entanglement area law used in the theory of tensor networks. These may then used to quantify the expressive power of a wide class of DCNNs. Note that long range correlations also typically increase with the entanglement. We therefore conjecture that realistic data sets in most successful machine learning applications have relatively low entanglement entropies [86]. On the other hand, by exploiting the entanglement entropy bound of TNs, we can rigorously quantify the expressive power of a wide class of DNNs applied to complex and highly correlated data sets.

5.2 Basic Features of Deep Convolutional Neural Networks

Basic DCNNs are usually characterized by at least three features: locality, weight sharing (optional) and pooling explained below [14, 79]

-

Locality refers to the connection of a (artificial) neuron only to neighboring neurons in the preceding layer, as opposed to being fed by the entire layer (this is consistent with biological NNs).

-

Weight sharing reflects the property that different neurons in the same layer, connected to different neighborhoods in the preceding layer, often share the same weights. Note that weight sharing, when combined with locality, gives rise to standard convolution. However, it should noted that although weight sharing may reduce the complexity of a deep neural network, it is optional. However, the locality at each layer is a key factor which gives DCNNs an exponential advantage over shallow NNs [77, 87, 88].

-

Pooling, is essentially an operator that gradually decimates (reduces) layer sizes by replacing the local population of neural activations in a spatial window by a single value (e.g., by taking their maxima, average values or their scaled products). In the context of images, pooling induces invariance to translation, which often does not affect semantic content, and is interpreted as a way to create a hierarchy of abstractions in the patterns that neurons respond to [14, 79, 87].

Usually, DCNNs perform much better when dealing with compositional function approximationsFootnote 5 and multi-class classification problems than shallow network architectures with one hidden layer. In fact, DCNNs can efficiently and conveniently select a subset of features for multiple classes, while for efficient learning a DCNN model can be pre-trained by first learning each DCNN layer, followed by fine tuning of the parameter of the entire model e.g., stochastic gradient descent. To summarize, the deep learning neural networks have the ability to exploit and approximate the complexity of compositional hierarchical functions arbitrarily well, whereas shallow networks are blind to them.

5.3 Score Functions for Deep Convolutional Neural Networks

Consider a multi-class classification task where the input training data, also called local structures or instances (e.g., input patches in images), are denoted by \(X= (\mathbf{x}_1,\ldots , \mathbf{x}_N)\), where \(\mathbf{x}_n \in \mathbb R^S\) \(\;n=1,\ldots ,N\)) belonging to one of C categories (classes) denoted by \(y_c \in \{y_1,y_2, \ldots , y_C\}\). Such a representation is quite natural for many high-dimensional data—in images, the local structures represent patches consisting of S pixels, while in audio data voice can be represented through spectrograms.

For this kind of problems, DCNNs aim is to model the following set of multivariate score functions:

where \(\underline{\mathbf{W}}_{\,y_c} \in \mathbb R^{I_1 \times \cdots \times I_N}\) is an Nth-order coefficient tensor (typically, with all dimensions \(I_n=I, \; \forall n\)), N is the number of (typically overlapped) input patches \(\mathbf{x}_n\), \(I_n\) is the size (dimension) of each mode \(\underline{\mathbf{W}}_{\,y_c}\), and \(f_{\theta _{1}}, \ldots , f_{\theta _{I_n}}\) are referred to as the representation functions (in the representation layer) selected from a parametric family of nonlinear functions.Footnote 6

In general, the one-dimensional basis functions could be polynomials, splines or other sets of basis functions. Natural choices for this family of nonlinear functions are also radial basis functions (Gaussian RBFs), wavelets, and affine functions followed by point-wise activations. Particularly interesting are Gabor wavelets, owing to their ability to induce features that resemble representations in the visual cortex of human brain.

Note that the representation functions in standard (artificial) neurons have the form

for the set of parameters \(\theta _{i}=\{\tilde{\mathbf{w}}_{i}, b_{i}\}\), where \(\sigma (\cdot )\) is a suitably chosen activation function.

The representation layer play a key role to transform the inputs, by means of I nonlinear functions, \(f_{\theta _i}(\mathbf{x}_n)\) \(\;(i=1,2,\ldots ,I)\), to template input patches, thereby creating I feature maps [81]. Note that the representation layer can be expressed by a feature vector defined as

where \( {\mathbf{f}}_{\varvec{\theta }}(\mathbf{x}_n) =[f_{\theta _1}(\mathbf{x}_n), f_{\theta _2}(\mathbf{x}_n), \ldots , f_{\theta _{I_n}}(\mathbf{x}_n)]^T \in \mathbb R^{I_n}\) for \(n=1,2,\ldots , N\) and \(i_n=1,2,\ldots , I_n\).

Alternatively, the representation layer can be expressed as rank one tensor (see Fig. 10a)

This allows us to represent the score function as an inner product of two tensors, as illustrated in Fig. 10a

Various representations of the score function of a DCNN. a Direct Representation of the score function \(h_{y_c}(\mathbf{x}_1,\mathbf{x}_2,\ldots ,\mathbf{x}_N)= \underline{\mathbf{W}}_{y_c} \bar{\times }_1 {\mathbf{f}}_{\varvec{\theta }}(\mathbf{x}_1) \bar{\times }_2 {\mathbf{f}}_{\varvec{\theta }}(\mathbf{x}_2) \cdots \bar{\times }_N {\mathbf{f}}_{\varvec{\theta }}(\mathbf{x}_N)\). Note that the coefficient tensor \(\underline{\mathbf{W}}_c\) can be represented in a distributed form by any suitable tensor network. b Graphical illustration of the Nth-order grid tensor of the score function \(h_{c}\). This model can be considered as a special case of Tucker-N model where the representation matrix \(\mathbf{F}_n \in \mathbb R^{ I_n \times I_n}\) built up factor matrices; note that typically all the factor matrices are the same and \(I_n =I,\; \forall n\). c CP decomposition of the coefficient tensor \(\underline{\mathbf{W}}_{\,y_c} = \underline{\varvec{\varLambda }}^{(y_c)} \times _1 \mathbf{W}^{(1)} \times _2 \mathbf{W}^{(2)} \ldots \times _N \mathbf{W}^{(N)} = \displaystyle \sum _{r=1}^{R} \lambda ^{(y_c)}_r \; (\mathbf{w}^{(1)}_r \,\circ \, \mathbf{w}^{(2)}_r \,\circ \, \cdots \,\circ \,\mathbf{w}^{(N)}_r)\), where \(\mathbf{W}^{(n)}=[\mathbf{w}^{(n)}_1,\ldots , \mathbf{w}^{(n)}_R] \in \mathbb R^{I_n \times R}\). This CP model corresponds to a simple shallow neural network with one hidden layer, comprising weights \(w^{(n)}_{ir}\), and the output layer comprising weights \(\lambda ^{(y_c)}_r \), \(r=1,\ldots ,R\)

To simplify the notations of a grid tensor, we can construct square matrices \(\mathbf{F}_n\) \(\;(n=1,2,\ldots ,N)\), as follows

which holding the values of taken by the nonlinear basis functions \(\{ f_{\theta _1}, \ldots , f_{\theta _{I_n}}\}\) on the selected fixed vectors, referred to as templates, \(\{\mathbf{x}_n^{(1)}, \mathbf{x}_n^{(2)},\ldots , \mathbf{x}_n^{(I_n)}\}\). Usually, we can assume that \(I_n=I, \; \forall n\), and \(\mathbf{x}_n^{(i_n)}=\mathbf{x}^{(i)}\) [30].

For discrete data values, the score function can be represented by a grid tensor, as graphically illustrated in Fig. 10b. The grid tensor of the nonlinear score function \(h_{y_c}(\mathbf{x}_1, ,\ldots , \mathbf{x}_N)\) determined over all the templates \(\mathbf{x}_n^{(1)}, \mathbf{x}_n^{(2)}, \ldots , \mathbf{x}_n^{(I)}\) can be expressed as a grid tensor

Of course, since the order N of the coefficient (core) tensor is large, it cannot be implemented, or even saved on computer due to the curse of dimensionality.

The simplest model to represent coefficient tensors would be to apply the CP decomposition to reduce the number of parameters, as illustrated in Fig. 10c. This leads to a simple shallow network, however, this approach is associated with two problems: (i) the rank R of the coefficient tensor \(\underline{\mathbf{W}}_{y_c}\) can be very large (so compression of parameters cannot be very high), (ii) the existing CP decomposition algorithms are not very stable for very high-order tensors, and so an alternative promising approach would be to apply tensor networks such as HT that enable us to avoid the curse of dimensionality.

Following the representation layer, a DCNN may consists of a cascade of L convolutional hidden layers with pooling in-between, where the number of layers L should be at least two. In other words, each hidden layer performs 3D or 4D convolution followed by spatial window pooling, in order to reduce (decimate) feature maps by e.g., taking a product of the entries in sub-windows. The output layer is a linear dense layer.

Classification can then be carried out in a standard way, through the maximization of a set of labeled score functions, \(h_{y_c}\) for C classes, that is, the predicted label for the input instants \(X=(\mathbf{x}_1,\ldots , \mathbf{x}_N)\) will be the index \(\hat{y}_c\) for which the score value attains a maximum, that is

Such score functions can be represented through their coefficient tensors which, in turn, can be approximated by low-rank tensor network decompositions [13].

The one restriction of the so formulated score functions (29) is that they allow for straightforward implementation of only a particular class of DCNNs, called convolutional Arithmetic Circuit (ConvAC). However, the score functions can be approximated indirectly and almost equivalently using more popular CNNs (see the next section). For example, it was shown recently how NNs with a univariate ReLU nonlinearity may perform multivariate function approximation [77].

The main idea is to employ a low-rank tensor network representation to approximate and interpolate a multivariate function \(h_{y_c}(\mathbf{x}_1, \ldots , \mathbf{x}_N)\) of N variables by a finite sum of separated products of simpler functions (i.e., via sparsely interconnected core tensors) [13, 14].

6 Convolutional Arithmetic Circuits (ConvAC) Using Tensor Networks

Once the set of score functions has been formulated (29), we need to construct (design) a suitable multilayered or distributed representation for DCNN implementation. The objective is to estimate the parameters \(\theta _1,\ldots , \theta _I\) and coefficient tensorsFootnote 7 \(\underline{\mathbf{W}}_{\,y_1},\ldots , \underline{\mathbf{W}}_{\,y_C}\). Since the tensors are of Nth-order and each with \(I^N\) entries, in order to avoid the curse of dimensionality, we need to perform dimensionality reduction through low-rank tensor network decompositions. Note that a direct implementation of (29) is intractable due to a huge number of parameters.

Conceptually, the ConvAC can be divided into three parts: (i) the first (input) layer is the representation layer which transforms input vectors \((\mathbf{x}_1,\ldots ,\mathbf{x}_N)\) into \(N \cdot I\) real valued scalars \(\{f_{\theta _i}(\mathbf{x}_n)\}\) for \(n=1,\ldots ,N\) and \(i=1,\ldots , I\). In other words, the representation functions, \(f_{\theta _i}: \mathbb R^S \rightarrow \mathbb R\), \(i=1,\ldots ,I\), map each local patch \(\mathbf{x}_n\) into a feature space of dimension I. We can denote the feature vector by \(\mathbf{f}_{n} = [f_{\theta _1}(\mathbf{x}_n), \ldots , f_{\theta _I}(\mathbf{x}_n) ]^{\text {T}} \in \mathbb R^{I}\), \(n=1,\ldots ,N\); (ii) the second, a key or kernel part, is a convolutional arithmetic circuits with many hidden layers that takes the \(N \cdot I\) measurements (training samples) generated by the representation layer; (iii) the output layer represented by a full matrix \(\mathbf{W}^{(L)}\), which computes C different score functions \(h_{y_c}\) [79].

6.1 Hierarchical Tucker (HT) and Tree Tensor Network State (TTNS) Models

The simplified HT tensor network [79] shown in Fig. 11 contains sparse 3rd-order core tensors \(\underline{\mathbf{W}}^{(l,j)} \in \mathbb R^{R^{(l-1,2j-1)} \times R^{(l-1,2j-1)} \times R^{(l,j)}}\) for \(l=1,\ldots ,L-1\) and matrices \(\mathbf{W}^{(0,j)} = [\mathbf{w}_1^{(0,j)}, \ldots , \mathbf{w}^{(0,j)}_{R^{(0,j)}}] \in \mathbb R^{I_j \times R^{(0,j)}}\) for \(l=0\) and \(j=1, \ldots , N/2^l\), and a full matrix \(\mathbf{W}^{(L)} = [\mathbf{w}_1^{(L)}, \ldots , \mathbf{w}_{y_C}^{(L)}] \in \mathbb R^{R^{(L-1)} \times y_C}\) with column vectors \(\mathbf{w}_{y_c}^{(L)} =\mathrm{diag}(\mathbf{W}^{(L)}_{y_c})=[\lambda ^{(y_c)}_1, \ldots , \lambda ^{(y_c)}_{R^{(L-1)}}]^T \) in the output L-layer (or equivalently the output sparse tensor \(\underline{\mathbf{W}}^{(L)} \in \mathbb R^{R^{(L-1)} \times R^{(L-1)} \times y_C}\). The number of channels in the input layer is denoted by I, while for the jth node in the lth layer (for \(l=0,1,\ldots ,L-1\)) is denoted by \(R^{(l,j)}\). The \(y_C\) different values of score functions are calculated in the output layer.

For simplicity, and in order to mimic basic features of the standard ConvAC, we assume that \(R^{(l,j)}= R^{(l)}\) for all j, and that frontal slices of the core tensors \(\underline{\mathbf{W}}^{(l,j)}\) are diagonal matrices with entries \(w^{l,j}_{r^{(l-1)}, r^{(l)}}\). Note that such sparse core tensors can be represented by non-zero matrices defined as \(\mathbf{W}^{(l,j)} \in \mathbb R^{R^{(l-1)} \times R^{(l)}}\).

Architecture of a simplified Hierarchical Tucker (HT) network with sparse core tensors, which simulates the coefficient tensor for a ConvAC deep learning network with a pooling-2 window [79]. The HT tensor network consists of \(L=\log _2(N)\) hidden layers and pooling-2 window. For simplicity, we assumed that we, \(N=2M=2^L\) input patches, \(R^{(l,j)}=R^{(l)}\)), for \(l=0,1,\ldots , L-1\). The representation layer is not shown explicitly in this figure. Note that since all core tensors can be represented by matrices, we do not need to use tensors notation in this case

The simplified HT tensor network can be mathematically described in the following recursive form

where \(\varvec{\lambda }^{(y_c)} =\mathrm{diag}(\lambda _1^{(y_c)}, \ldots , \lambda _{R^{(L-1)}}^{(y_c)} )= \underline{\mathbf{W}}^{(L)}(:,:,y_c)\).

In a special case when the weights in each layer are shared, i.e., \(\mathbf{W}^{(l,1)}=\mathbf{W}^{(l,2)}= \cdots = \mathbf{W}^{(l)}\), the above equation can be considerably simplified to

for the layers \(l= 1,\ldots , L-1\), while for the output layer

where \(\underline{\mathbf{W}}^{\le l}_{r^{(l)}} = \underline{\mathbf{W}}^{\le l} (:,\ldots ,:,r^{(l)}) \in \mathbb R^{I\times \cdots \times I}\) are sub-tensors of \(\underline{\mathbf{W}}^{\le l}\), for each \(r^{(l)} = 1,\ldots ,R^{(l)}\), and \(w^{(l)}_{r^{(l-1)}, r^{(l)}}\) is the \((r^{(l-1)},r^{(l)})\)th entry of the weight matrix \(\mathbf{W}^{(l)} \in \mathbb R^{R^{(l-1)}\times R^{(l)}}\).

However, it should be noted that the simplified HT model shown in Fig. 11 has a limited ability to approximate an arbitrary coefficient tensor, \(\underline{\mathbf{W}}_{y_c}\), due to strong constraints imposed of core tensors. A more flexible and powerful model is shown in Fig. 12, in which constraints imposed on 3rd-order cores have been completely removed. Such a HT tensor network (with a slight abuse of notation) can be mathematically expressed as

The HT network can be further extended to the Tree Tensor Networks States (TTNS), as illustrated in Fig. 13. The use of TTNS, instead HT tensor networks,allows for more flexibility in the choice of size of pooling-window, the pooling size in each hidden layer cab be adjusted by applying core tensors with a suitable variable order in each layer. For example, if we use 5th-order (4rd-order) core tensors instead 3rd-order cores, then the pooling will employ a size-4 pooling window (size-3 pooling) instead of only size-2 pooling window when using 3rd-order core tensors in HT tensor networks. For more detail regrading HT networks and their generalizations to TTNS [13].

6.2 Alternative Tensor Network Model: Tensor Train (TT) Networks

We should emphasize that the HT/TTNS architectures are not the only one suitable TN decompositions which can be used to model DCNNs, and the whole family of powerful tensor networks can be employed to model individual hidden layers. In this section we discuss modified TT/MPS and TC models for this purpose for which efficient learning algorithms exist.

The Tensor Train (TT) format can be interpreted as a special case of the HT format, where all nodes (TT-cores) of the underlying tensor network are connected in cascade (or train), i.e., they are aligned while factor matrices corresponding to the leaf modes are assumed to be identities and thus need not be stored. The TT format was first proposed in numerical analysis and scientific computing by Oseledets [15, 69].

Figure 14 presents the concept of TT decomposition for an Nth-order tensor, the entries of which can be computed as a cascaded (multilayer) multiplication of appropriate matrices (slices of TT-cores). The weights of internal edges (denoted by \(\{R_1,R_2,\ldots , R_{N-1}\}\)) represent the TT-rank. In this way, the so aligned sequence of core tensors represents a “tensor train” where the role of “buffers” is played by TT-core connections. It is important to highlight that TT networks can be applied not only for the approximation of tensorized vectors but also for scalar multivariate functions, matrices, and even large-scale low-order tensors [13].

In the quantum physics community, the TT format is known as the Matrix Product State (MPS) representation with the Open Boundary Conditions (OBC). In fact, the TT/MPS was rediscovered several times under different names: MPS, valence bond states, and density matrix renormalization group (DMRG) (see [13, 27, 89,90,91,92,93,94] and references therein).

An important advantage of the TT/MPS format over the HT format is its simpler practical implementation, as no binary tree needs to be determined. Another attractive property of the TT-decomposition is its simplicity when performing basic mathematical operations on tensors directly in the TT-format (that is, employing only core tensors). These include matrix-by-matrix and matrix-by-vector multiplications, tensor addition, and the entry-wise (Hadamard) product of tensors. These operations produce tensors, also in the TT-format, which generally exhibit increased TT-ranks. A detailed description of basic operations supported by the TT format is given in [13]. Note that only TT-cores need to be stored and processed, which makes the number of TN parameters to scale linearly in the tensor order, N, of a data tensor and all mathematical operations are then performed only on the low-order and relatively small size core tensors.

The TT rank is defined as an \((N-1)\)-tuple of the form

where \(\mathbf{X}_{{<}{n}{>}} \in \mathbb R^{I_1 \ldots I_n \times I_{n-1} \ldots I_N}\) is an nth canonical matricization of the tensor \(\underline{\mathbf{X}}\). Since the TT rank determines memory requirements of a tensor train, it has a strong impact on the complexity, i.e., the suitability of tensor train representation for a given raw data tensor.

The number of data samples to be stored scales linearly in the tensor order, N, and the size, I, and quadratically in the maximum TT rank bound, R, that is

This is why it is crucially important to have low-rank TT approximations.Footnote 8

As illustrated in Fig. 14 the simplest possible implementation of the ConvAC network is via the standard tensor train (TT/MPS) (unbalanced binary tree), which can be represented by recursive formulas as

where \( \mathbf{W}^{(n)}_{\;r_{n-1}}=\underline{\mathbf{W}}^{(n)}(r_{n-1},:,:) \in \mathbb R^{I_n \times R_n}\) are lateral slices of the core tensor \(\underline{\mathbf{W}}^{(n)} \in \mathbb R^{R_{n-1} \times I_n \times R_n}\) and \(\underline{\mathbf{W}}^{\le n}_{\,r_{n}}=\underline{\mathbf{W}}^{\le n}(:,\ldots ,:,r_{n}) \in \mathbb R^{I_1 \times \cdots \times I_n}\) are sub-tensors of \(\underline{\mathbf{W}}^{\le n} \in \mathbb R^{I_1 \times \cdots \times I_n \times R_n }\) for \(n=1, \ldots ,N\) (Fig. 14).

Extended (modified) Tensor Train (TT/MPS) architectures for the representation of coefficient (weight) tensors \(\underline{\mathbf{W}}_{\,y_c}\) of the score function \(h_{y_c}\). a TT-tucker network, also called fork tensor product states (FTPS) with reduced TT ranks. b Hierarchical TT network consisting of core tensors with different orders, where each high-order core tensor can be represented by a TT or HT sub-network. Rectangular boxes represent core tensors (sub-tensors) with variable orders. The ticker horizontal lines or double/tripple lines indicate relatively higher internal TT-ranks

The above recursive formulas for the TT network can be written in a compact form as

where \(\mathbf{w}^{(n)}_{r_{n-1},r_n} = \underline{\mathbf{W}}^{(n)} (:,i_n,:) \in \mathbb R^{I_n}\) are tubes of the core tensor \(\underline{\mathbf{W}}^{(n)} \in \mathbb R^{R_{n-1} \times I_n \times R_n}\).

The TT-rank of the standard tensor train network with 3rd-order cores, shown in Fig. 15, can be very large for core tensors located in the middle of the chain. In the worst case scenario, the TT ranks can grow even, up to \(I^{(N/2)}\) for an exact representation of Nth-order tensor. In order to reduce the TT-rank and consequently reduce the number of parameters of a DCNN, we can apply two approaches, as explained in Fig. 14a and b. The main idea is to employ only a few core tensors with order larger than three, whereby each of these core is further approximated via a TT or HT sub-network.

6.3 Tensor Chain and TT/MPO Networks

Alternatively, the Tensor Chain (TC) network, called also TT/MPS with periodic bounded conditions, shown in Fig. 16 can be employed to represent individual hidden layers or output fully connected layers. This TC network is mathematically described through the following a recursive formulas as