Abstract

In this paper, we proposed a method for plant classification, which aims to recognize the type of leaves from a set of image instances captured from same viewpoints. Firstly, for feature extraction, this paper adopted the 2-level wavelet transform and obtained in total 7 features. Secondly, the leaves were automatically recognized and classified by Back-Propagation neural network (BPNN). Meanwhile, we employed K-fold cross-validation to test the correctness of the algorithm. The accuracy of our method achieves 90.0%. Further, by comparing with other methods, our method arrives at the highest accuracy.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

With acceleration of the extinction rate of plant species, it is essential urgent to protect plants [1, 2]. A preliminary task is the classification of plant type, which is challenging, complex and time-consuming. As we all know, plants includes the flowering plants, conifers and other gymnosperms and so on. Most of them do not blooming and have fruit, but almost all of them contain leaves [3]. Therefore, in this paper we focus on feature extraction and classification of leaves.

Recent studies are analyzed below: Heymans, Onema and Kuti [4] proposed a neural network to distinguish different leaf-forms of the opuntia species. Wu, Bao, Xu, Wang, Chang and Xiang [5] employed probabilistic neural network (PNN) for leaf recognition system. Wang, Huang, Du, Xu and Heutte [6] classified plant leaf images with complicated background. Jeatrakul and Wong [7] introduced Back Propagation Neural Network (BPNN), Radial Basis Function Neural Network (RBFNN), Probabilistic Neural Network (PNN) and compared the performances of them. Dyrmann, Karstoft and Midtiby [8] used convolutional neural network (CNN) to classify plant species. Zhang, Lei, Zhang and Hu [9] employed semi-supervised orthogonal discriminant projection for plant leaf classification.

Although these methods have achieved good results, ANN has the higher accuracy and less time-consuming in classification than other approaches. Therefore, in this paper, we employ the BPNN algorithm on the leaves of the classification of automatic identification.

Our contribution in this paper includes: (i) We proposed a five-step preprocessing method, which can remove unrelated information of the leaf image. (ii) We developed a leaf recognition system.

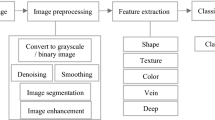

2 Methodology

2.1 Pretreatment

We put a sheet of glass over the leaves, so as to unbend the curved leaves. We pictured all the leaves indoor (put the leaves on the white paper) using a digital camera with (Canon EOS 70D) by two cameraman with experience over five years. The pose of the camera is fixed on the tripod during the imaging. Two light-emitting diode (LED) lights are hanged 6 inches over the leaves. Those images out of focus are removed.

2.2 Image Preprocessing

During the imaging, the background information is captured, which will inference the detection of the leaves. Therefore, the necessary preprocessing is to remove the irrelevant background and color channels.

First, we suppose texture is related to leave category, and color information is of little help. Hence, we removed the background of the image. Second, we convert the RGB image into a grayscale image. Table 1 shows the steps of image preprocessing.

Figure 1(a) shows the original image. Figure 1(b) shows the image of background with black color. Figure 1(c) shows the grayscale image

2.3 Feature Extraction

Fourier transform (FT) [10] is a method of signal analysis, which decomposes the continuous signal into harmonic waves with different frequency [11]. It can more easily deal with the original signal than traditional methods. However, it has limitations for non-stationary processes, it can only get a signal which generally contains the frequency, but the moment of each frequency is unknown. A simple and feasible handling method is to add a window. The entire time domain process is decomposed into a number of small process with equal length, and each process is approximately smooth, then employing the FT can help us to get when each frequency appears. We call it as Short-time Fourier Transform (STFT) [12, 13].

The drawback of STFT is that we do not know how to determine the size of windowing function. If the window size is small, the time resolution will be good and the frequency resolution will be poor. On the contrary, if the window size is large, the time resolution will be poor and the frequency resolution will be good.

Wavelet transform (WT) [14,15,16] has been hailed as a microscope of signal processing and analysis. It can analyze non-stationary signal and extract the local characteristics of signal. In addition, wavelet transform has the adaptability for signal in signal processing and analysis [17], therefore, it is a new method of information processing which is superior to the FT and STFT.

In this paper, the feature extraction [18, 19] of the original leaf images is carried out by 2-level wavelet transform, which it decompose the leaves images with the low-frequency and high-frequency coefficients. However, a massive of features not only increase the cost of computing but also consume much storage memory does little to classification [20]. We need to take some measures to select important feature. Entropy [21, 22] is used to measure the amount of information of whole system in the information theory and also could represent the texture of the image.

where X is a discrete and random variable, p(x) is the probability mass function, the amount of information contained in a message signal \( x_{i} \) can be expressed as

where \( I(x_{i} ) \) is a random variable, it can’t be used as information measure for the entire source [23], Shannon, the originator of modern information theory, defines the average information content of \( X \) as information entropy [24, 25]:

where \( \text{E} \) is the excepted value operator, \( H \) represents entropy. Figure 2 shows the entropy of source.

There are seven comprehensive indexes (as shown in Fig. 3) are obtained after adopting 2-level WT, with four in size of \( 50 \times 50 \) and three 100 × 100. The entropy of these matrices is calculated as the input to the following BP neural network (BP).

2.4 Back-Propagation Neural Network

Back Propagation algorithm is mainly used for regression and classification. It is one of the most widely used training neural networks model. Figure 4 represents the diagram of BP, the number of nodes in the input layer and output layer is determined but the number of nodes in the hidden layer is uncertain. There is an empirical formula can help to determine the number of hidden layer nodes, as follow

where \( t,r,s \) represents the number of nodes in the hidden layer, input layer, and output layer, respectively. \( c \) is an adjustment constant between zero and ten.

In our experiment, the features of leaves were reduced to seven after feature selection, i.e. \( r = 7 \). Meanwhile, there is one output unit, which stands for the predictable result, so \( s\, = \,1 \). Because \( c \) is constant, according to formula (4), we set \( t = 15 \).

BPNN algorithm is part of a supervised learning method. The following is the main ideas of the BP algorithm learning rule:

Known vectors: input learning samples \( \{ P^{1} ,P^{2} , \ldots ..,P^{q} \} \), the corresponding output samples \( \{ T^{1} ,T^{2} , \ldots .,T^{q} \} \).

Learning objectives: The weights are modified with the error between the target vector \( \{ T^{1} ,T^{2} , \ldots .,T^{q} \} \) and the actual output of the network \( \{ A^{1} ,A^{2} , \ldots ..,A^{q} \} \) in order to \( A^{i} \,(i = 1,2, \ldots ,q) \) is as close as possible to the excepted \( T^{i} \), i.e., error sum of squares of the network output layer is minimized.

BPNN algorithm has two parts: the forward transfer of working signal and error of the reverse transfer. In the forward propagation process, the state of each layer of neurons only affects the state of the next layer of neurons.

where \( x_{j} \) represents the input of hidden layer. \( w_{ij} \) is the weight between input layer and the hidden layer.

Equation (6) indicates the function of hidden layer.

\( {\text{A}}^{i} \) is the actual output of output layer.

where \( T^{i} \) is the desired output.

If the desired output \( T^{i} \) is not obtained at the output layer, we will use the Eq. (8) and the error value will be calculated, then the error of the reverse transfer is carried out.

2.5 Implementation of the Proposed Method

The aim of this study was to distinguish the type of leaves with high classification accuracy. The steps of proposed system are sample collection, pretreatment, preprocessing, feature extraction, classifier classification (Fig. 5). Table 2 shows the pseudocode of the proposed system.

2.6 Five-Fold Cross Validation

The training criterion of the standard BP neural network is to require that the error sum of squares (or the fitting error) of the expected and output values of all samples is less than a given allowable error \( \upvarepsilon \). In general, the smaller the value of \( \upvarepsilon \) is, the better the fitting accuracy. Nevertheless, for the actual application, the prediction error decreases with the decrease of the fitting error at the first. However, when the fitting error on training set decreases to a certain value, the prediction error on test set increases, which indicates that the generalization ability decreases. This is the “over-fitting” phenomenon which the BPNN modeling encountered. In this paper, cross-validation is used to prevent over-fitting.

The basic of the cross validation method is that the original dataset of the neural network were divided into two parts: the training set and the validation set. First of all, the classifier was trained by training set, and then test the training model using the validation set, through the above results to evaluate the performance of the classifier.

The steps of K-fold cross validation:

-

Step 1.

All training set \( {\text{S}} \) is divided into \( k \) disjoint subsets, suppose the number of training examples in S is m, then the training examples of each subset is m/k, the corresponding subset is called \( \left\{ {{\text{s}}_{1} ,s_{2} , \ldots .,s_{k} } \right\} \).

-

Step 2.

A subset is selected as test set from subset \( \left\{ {{\text{s}}_{1} ,s_{2} , \ldots .,s_{k} } \right\} \) each time, and the other (k − 1) as the training set.

-

Step 3.

Training model or hypothesis function is obtained through training.

-

Step 4.

Put the model into the test set and gain the classification rate.

-

Step 5.

Calculate the average classification rate of the k-times and regard average as the true classification rate of the model or hypothesis function.

3 Experiment and Discussions

The method of BP is implemented in MATLAB R2016a (The Mathworks, Natick, MA, USA). This experiments were accomplished on a computer with 3.30 GHz core and 4 GB RAM, running under the Window 8 and based on 64-bit processors.

3.1 Database

We used leaves as the object of study, which are all size of \( 200 \times 200 \) and in jpg format. The input dataset contains 90 images, which 30 of ginkgo biloba, 30 of Phoenix tree leaf and 30 of Osmanthus leaves. As mentioned above, we use the 5-fold cross validation to prevent the case of over-fitting, Thence, we ran one trials with each 72 (25 ginkgo biloba and 26 Osmanthus and 21 Phoenix tree) are used for training and the left 18 (5 ginkgo biloba and 4 Osmanthus and 9 Phoenix tree) are used for test. Figure 6 show one trail of 5-fold cross-validation.

3.2 Algorithm Comparison

The wavelet-entropy features were fed into different classifiers. We compared our method (BPNN) with Medium KNN [26], Coarse Gaussian SVM [27], Complex Tree [28], Cosine KNN [29]. The results of comparing were shown in Table 3.

Results in Table 3 are the central contribution of our experiment, we can see that the accuracy of our method achieves 90.0%, and it is better than other methods. Why BPNN performs the best among the fiver algorithms? The reason lies in the universal approximation theorem proven in reference [30].

4 Conclusion and Future Research

This paper introduced a novel automatic classify method for the leaves images. With the combination of BP neural network and wavelet-entropy, the accuracy of our method achieves 90.0%.

It costs 1.2064 s to finish feature extraction for 90 leaves images and the average time for each image is 0.0134 s.

Nevertheless, there are still several problems remaining unsolved: (1) we try to improve the accuracy of the algorithm; (2) we can employ other methods of feature extraction in order to decrease the time of extraction; (3) we may extend this method to other type of images, such as car image, tree image, etc.

References

Carro, F., Soriguer, R.C.: Long-term patterns in Iberian hare population dynamics in a protected area (Donana National Park) in the southwestern Iberian Peninsula: effects of weather conditions and plant cover. Integr. Zool. 12, 49–60 (2017)

Lim, S.H., et al.: Plant-based foods containing cell wall polysaccharides rich in specific active monosaccharides protect against myocardial injury in rat myocardial infarction models. Sci. Rep. 6, 15 (2016). Article ID: 38728

Du, J.X., et al.: Computer-aided plant species identification (CAPSI) based on leaf shape matching technique. Trans. Inst. Meas. Control 28, 275–284 (2006)

Heymans, B.C., et al.: A neural network for Opuntia leaf-form recognition. In: IEEE International Joint Conference on Neural Networks, pp. 2116–2121. IEEE (1991)

Wu, S.G., et al.: A leaf recognition algorithm for plant classification using Probabilistic Neural Network. In: International Symposium on Signal Processing and Information Technology, p. 120. IEEE (2007)

Wang, X.F., et al.: Classification of plant leaf images with complicated background. Appl. Math. Comput. 205, 916–926 (2008)

Jeatrakul, P., Wong, K.W.: Comparing the performance of different neural networks for binary classification problems. In: Eighth International Symposium on Natural Language Processing, Proceedings, pp. 111–115. IEEE (2009)

Dyrmann, M., et al.: Plant species classification using deep convolutional neural network. Biosyst. Eng. 151, 72–80 (2016)

Zhang, S.W., et al.: Semi-supervised orthogonal discriminant projection for plant leaf classification. Pattern Anal. Appl. 19, 953–961 (2016)

Meier, D.C., et al.: Fourier transform infrared absorption spectroscopy for quantitative analysis of gas mixtures at low temperatures for homeland security applications. J. Testing Eval. 45, 922–932 (2017)

Tiwari, S., et al.: Cloud point extraction and diffuse reflectance-Fourier transform infrared spectroscopic determination of chromium(VI): A probe to adulteration in food stuffs. Food Chem. 221, 47–53 (2017)

Garrido, M.: The feedforward short-time fourier transform. IEEE Trans. Circ. Syst. II-Express Briefs 63, 868–872 (2016)

Saneva, K.H.V., Atanasova, S.: Directional short-time Fourier transform of distributions. J. Inequal. Appl. 10, Article ID: 124 (2016)

Huo, Y., Wu, L.: Feature extraction of brain MRI by stationary wavelet transform and its applications. J. Biol. Syst. 18, 115–132 (2010)

Ji, G.L., Wang, S.H.: An improved reconstruction method for CS-MRI based on exponential wavelet transform and iterative shrinkage/thresholding algorithm. J. Electromag. Waves Appl. 28, 2327–2338 (2014)

Yang, M.: Dual-tree complex wavelet transform and twin support vector machine for pathological brain detection. Appl. Sci. 6, Article ID: 169 (2016)

Liu, A.: Magnetic resonance brain image classification via stationary wavelet transform and generalized eigenvalue proximal support vector machine. J. Med. Imaging Health Inform. 5, 1395–1403 (2015)

Bezawada, S., et al.: Automatic facial feature extraction for predicting designers’ comfort with engineering equipment during prototype creation. J. Mech. Des. 139, 10 (2017). Article ID: 021102

Gerdes, M., et al.: Decision trees and the effects of feature extraction parameters for robust sensor network design. Eksploat. Niezawodn. 19, 31–42 (2017)

Zhang, Y.: Binary PSO with mutation operator for feature selection using decision tree applied to spam detection. Knowl. Based Syst. 64, 22–31 (2014)

Yang, J.: Preclinical diagnosis of magnetic resonance (MR) brain images via discrete wavelet packet transform with Tsallis entropy and generalized eigenvalue proximal support vector machine (GEPSVM). Entropy 17, 1795–1813 (2015)

Phillips, P., et al.: Pathological brain detection in magnetic resonance imaging scanning by wavelet entropy and hybridization of biogeography-based optimization and particle swarm optimization. Prog. Electromag. Res. 152, 41–58 (2015)

Sun, P.: Pathological brain detection based on wavelet entropy and Hu moment invariants. Bio-Med. Mater. Eng. 26, 1283–1290 (2015)

Wei, L.: Fruit classification by wavelet-entropy and feedforward neural network trained by fitness-scaled chaotic ABC and biogeography-based optimization. Entropy 17, 5711–5728 (2015)

Yang, J.: Identification of green, Oolong and black teas in China via wavelet packet entropy and fuzzy support vector machine. Entropy 17, 6663–6682 (2015)

Zhou, X.-X.: Comparison of machine learning methods for stationary wavelet entropy-based multiple sclerosis detection: decision tree, k-nearest neighbors, and support vector machine. Simulation 92, 861–871 (2016)

Sharma, B., et al.: Traffic accident prediction model using support vector machines with Gaussian kernel. In: Fifth International Conference on Soft Computing for Problem Solving, pp. 1–10. Springer, Berlin (2016)

Maleszka, M., Nguyen, N.T.: Using subtree agreement for complex tree integration tasks. In: Selamat, A., Nguyen, N.T., Haron, H. (eds.) ACIIDS 2013. LNCS, vol. 7803, pp. 148–157. Springer, Heidelberg (2013). doi:10.1007/978-3-642-36543-0_16

Anastasiu, D.C., Karypis, G.: Fast parallel cosine k-nearest neighbor graph construction. In: 6th Workshop on Irregular Applications: Architecture and Algorithms (IA3), pp. 50–53. IEEE (2016)

Nguyen, H.D., et al.: A universal approximation theorem for mixture-of-experts models. Neural Comput. 28, 2585–2593 (2016)

Acknowledgment

This paper is financially supported by Natural Science Foundation of China (61602250), Natural Science Foundation of Jiangsu Province (BK20150983).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Yang, MM., Phillips, P., Wang, S., Zhang, Y. (2017). Leaf Recognition for Plant Classification Based on Wavelet Entropy and Back Propagation Neural Network. In: Huang, Y., Wu, H., Liu, H., Yin, Z. (eds) Intelligent Robotics and Applications. ICIRA 2017. Lecture Notes in Computer Science(), vol 10464. Springer, Cham. https://doi.org/10.1007/978-3-319-65298-6_34

Download citation

DOI: https://doi.org/10.1007/978-3-319-65298-6_34

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-65297-9

Online ISBN: 978-3-319-65298-6

eBook Packages: Computer ScienceComputer Science (R0)