Abstract

In order to effectively analyze and build cyberphysical systems (CPS), designers today have to combat the data deluge problem, i.e., the burden of processing intractably large amounts of data produced by complex models and experiments. In this work, we utilize monotonic parametric signal temporal logic (PSTL) to design features for unsupervised classification of time series data. This enables using off-the-shelf machine learning tools to automatically cluster similar traces with respect to a given PSTL formula. We demonstrate how this technique produces interpretable formulas that are amenable to analysis and understanding using a few representative examples. We illustrate this with case studies related to automotive engine testing, highway traffic analysis, and auto-grading massively open online courses.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

In order to effectively construct and analyze cyber-physical systems (CPS), designers today have to combat the data deluge problem, i.e., the burden of processing intractably large amounts of data produced by complex models and experiments. For example, consider the typical design process for an advanced CPS such as a self-driving car. Checking whether the car meets all its requirements is typically done by either physically driving the car around for millions of miles [2], or by performing virtual simulations of the self-driving algorithms. Either approach can generate several gigabytes worth of time-series traces of data, such as sensor readings, variables within the software controllers, actuator actions, driver inputs, and environmental conditions. Typically, designers are interested not in the details of these traces, but in discovering higher-level insight from them; however, given the volume of data, a high level of automation is needed.

The key challenge then is: “How do we automatically identify logical structure or relations within such data?” One possibility offered by unsupervised learning algorithms from the machine learning community is to cluster similar behaviors to identify higher-level commonalities in the data. Typical clustering algorithms define similarity measures on signal spaces, e.g., the dynamic time warping distance, or by projecting data to complex feature spaces. We argue later in this section that these methods can be inadequate to learn logical structure in time-series data.

In this paper, we present logical clustering, an unsupervised learning procedure that utilizes Parametric Signal Temporal Logic (PSTL) templates to discover logical structure within the given data. Informally, Signal Temporal Logic (STL) enables specifying temporal relations between constraints on signal values [3, 10]. PSTL generalizes STL formulas by replacing, with parameters, time constants in temporal operators and signal-value constants in atomic predicates in the formula. With PSTL templates, one can use the template parameters as features. This is done by projecting a trace to parameter valuations that correspond to a formula that is marginally satisfied by the trace. As each trace is projected to the finite-dimensional space of formula-parameters, we can then use traditional clustering algorithms on this space; thereby grouping traces that satisfy the same (or similar) formulas together. Such logical clustering can reveal heretofore undiscovered structure in the traces, albeit through the lens of an user-provided template. We illustrate the basic steps in our technique with an example.

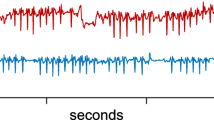

An example of a pitfall when using the DTW measure compared to projection using a PSTL template. We perform spectral clustering [24] on a similarity graph representation of 7 traces. Nodes of the graph represent traces and edges are labeled with the normalized pairwise distance using (1) the DTW measure and (2) the Euclidean distance between features extracted using the PSTL template \(\varphi _{\text {overshoot}}\). Note how under the DTW measure, the black and cyan traces are grouped together due to their behavior before the lane change, despite the cyan trace having a much larger overshoot. Contrast with the STL labeling in the second row, where both overshooting traces are grouped together. The bottom right figure provides the projection of the traces w.r.t. \(\varphi _{\text {overshoot}}\) with the associated cluster-labels shown in the second row.

Consider the design of a lane-tracking controller for a car and a scenario where a car has effected a lane-change. A typical control designer tests design performance by observing the “overshoot” behavior of the controller, i.e., by inspecting the maximum deviation (say a) over a certain duration of time (say, \(\tau \)) of the vehicle position trajectory x(t) from a given desired trajectory \(x_{ref}(t)\). We can use the following PSTL template that captures such an overshoot:

When we project traces appearing in Fig. 1 through \(\varphi _{\text {overshoot}}\), we find three behavior-clusters as shown in the second row of the figure: (1) Cluster 0 with traces that track the desired trajectory with small overshoot, (2) Cluster 1 with traces that fail to track the desired trajectory altogether, and (3) Cluster 2 with traces that do track the desired trajectory, but have a large overshoot value. The three clusters indicate a well-behaved controller, an incorrect controller, and a controller that needs tuning respectively. The key observation here is that though we use a single overshoot template to analyze the data, qualitatively different behaviors form separate clusters in the induced parameter space; furthermore, each cluster has higher-level meaning that the designer can find valuable.

In contrast to our proposed method, consider the clustering induced by using the dynamic time warping (DTW) distance measure as shown in Fig. 1. Note that DTW is one of the most popular measures to cluster time-series data [17]. We can see that traces with both high and low overshoots are clustered together due to similarities in their shape. Such shape-similarity based grouping could be quite valuable in certain contexts; however, it is inadequate when the designer is interested in temporal properties that may group traces of dissimilar shapes.

In Sect. 3, we show how we can use feature extraction with PSTL templates to group traces with similar logical properties together. An advantage of using PSTL is that the enhanced feature extraction is computationally efficient for the fragment of monotonic PSTL formulas [5, 14]; such a formula has the property that its satisfaction by a given trace is monotonic in its parameter values. The efficiency in feature extraction relies on a multi-dimensional binary search procedure [20] that exploits the monotonicity property.

A different view of the technique presented here is as a method to perform temporal logic inference from data, such as the work on learning STL formulas in a supervised learning context [5,6,7, 18], in the unsupervised anomaly detection context [15], and in the context of active learning [16]. Some of these approaches adapt classical machine learning algorithms such as decision trees [7] and one-class support vector machines [15] to learn (possibly, arbitrarily long) formulas in a restricted fragment of STL. Formulas exceeding a certain length are often considered inscrutable by designers. A key technical contribution of this paper is to show that using simple shapes such as specific Boolean combinations of axis-aligned hyperboxes in the parameter space of monotonic PSTL to represent clusters yields a formula that may be easier to interpret. We support this in Sect. 4, by showing that such hyperbox-clusters correspond to STL formulas that have length linear in the number of parameters in the given PSTL template, and thus of bounded descriptive complexity.

Mining parametric temporal logic properties in a model-based design has also been explored [12, 14]. We note that our proposed methods does not require such a model, which may not be available either due to the complexity of the underlying system or lack of certainty in the dynamics. We also note that there is much work on mining discrete temporal logic specifications from data (e.g. [21]): our work instead focuses on unsupervised learning of STL properties relevant to CPS.

The reader might wonder how much insight is needed by a user to select the PSTL template to use for classification. We argue the templates do not pose a burden on the user and that our technique can have high value in several ways. First, we observe that we can combine our technique with a human-guided (or automated) enumerative learning procedure that can exploit high-level template pools. We demonstrate such a procedure in the diesel engine case study. Second, consider a scenario where a designer has insight into the data that allows them to choose the correct PSTL template. Even in this case, our method automates the task of labeleing trace-clusters with STL labels which can then be used to automatically classify new data. Finally, we argue that many unsupervised learning techniques on time-series data must “featurize” the data to start with, and such features represent relevant domain knowledge. Our features happen to be PSTL templates. As the lane controller motivating example illustrates, a common procedure that doesn’t have some domain specific knowledge increases the risk of wrong classifications. This sentiment is highlighted even in the data mining literature [22]. To illustrate the value of our technique, in Sect. 5, we demonstrate the use of logic-based templates to analyze time-series data in case studies from three different application domains.

2 Preliminaries

Definition 1

(Timed Traces). A timed trace is a finite (or infinite) sequence of pairs \((t_0,\mathbf {x}_0)\), \(\ldots \), \((t_n,\mathbf {x}_n)\), where, \(t_0 = 0\), and for all \(i \in [1,n]\), \(t_i \in \mathbb {R}_{\ge 0}\), \(t_{i-1} < t_i\), and for \(i \in [0,n]\), \(\mathbf {x}_i \in \mathcal {D}\), where \(\mathcal {D}\) is some compact set. We refer to the interval \([t_0,t_n]\) as the time domain \(T\).

Real-time temporal logics are a formalism for reasoning about finite or infinite timed traces. Logics such as the Timed Propositional Temporal Logic [4], and Metric Temporal Logic (MTL) [19] were introduced to reason about signals representing Boolean-predicates varying over dense (or discrete) time. More recently, Signal Temporal Logic [23] was proposed in the context of analog and mixed-signal circuits as a specification language for real-valued signals.

Signal Temporal Logic. Without loss of generality, atoms in STL formulas can be be reduced to the form \(f(\mathbf {x}) \sim c\), where f is a function from \(\mathcal {D}\) to \(\mathbb {R}\), \(\sim \in \left\{ \ge ,\le ,=\right\} \), and \(c\in \mathbb {R}\). Temporal formulas are formed using temporal operators, “always” (denoted as \(\mathbf {G}\)), “eventually” (denoted as \(\mathbf {F}\)) and “until” (denoted as \(\mathbf {U}\)) that can each be indexed by an interval \(I\). An STL formula is written using the following grammar:

In the above grammar, \(a,b \in T\), and \(c\in \mathbb {R}\). The always (\(\mathbf {G}\)) and eventually (\(\mathbf {F}\)) operators are defined for notational convenience, and are just special cases of the until operator: \(\mathbf {F}_{I}\varphi \triangleq true \, \mathbf {U}_I\, \varphi \), and \(\mathbf {G}_{I}\varphi \triangleq \lnot \mathbf {F}_{I} \lnot \varphi \). We use the notation \((\mathbf {x},t) \models \varphi \) to mean that the suffix of the timed trace \(\mathbf {x}\) beginning at time t satisfies the formula \(\varphi \). The formal semantics of an STL formula are defined recursively:

We write \(\mathbf {x}\models \varphi \) as a shorthand of \((\mathbf {x},0) \models \varphi \).

Parametric Signal Temporal Logic (PSTL). PSTL [5] is an extension of STL introduced to define template formulas containing unknown parameters. Formally, the set of parameters \(\mathcal {P}\) is a set consisting of two disjoint sets of variables \(\mathcal {P}^V\) and \(\mathcal {P}^T\) of which at least one is nonempty. The parameter variables in \(\mathcal {P}^V\) can take values from their domain, denoted as the set \(V\). The parameter variables in \(\mathcal {P}^T\) are time-parameters that take values from the time domain \(T\). We define a valuation function \(\nu \) that maps a parameter to a value in its domain. We denote a vector of parameter variables by \(\mathbf {p}\), and extend the definition of the valuation function to map parameter vectors \(\mathbf {p}\) into tuples of respective values over \(V\) or \(T\). We define the parameter space \({\mathcal {D}_\mathcal {P}}\) as a subset of \(V^{|\mathcal {P}^V|} \times T^{|\mathcal {P}^T|}\).

A PSTL formula is then defined by modifying the grammar specified in (2) by allowing a, b to be elements of \(\mathcal {P}^T\), and \(c\) to be an element of \(\mathcal {P}^V\). An STL formula is obtained by pairing a PSTL formula with a valuation function that assigns a value to each parameter variable. For example, consider the PSTL formula \(\varphi (c,\tau )\) = \(\mathbf {G}_{[0, \tau ]} x > c\), with parameters variables \(c\) and \(\tau \). The STL formula \(\mathbf {G}_{[0, 10]} x > 1.2\) is an instance of \(\varphi \) obtained with the valuation \(\nu =\{\tau \mapsto 10,\ c\mapsto 1.2\}\).

Monotonic PSTL. Monotonic PSTL is a fragment of PSTL introduced as the polarity fragment in [5]. A PSTL formula \(\varphi \) is said to be monotonically increasing in parameter \(p_i\) if condition (3) holds for all \(\mathbf {x}\), and is said to be monotonically decreasing in parameter \(p_i\) if condition (4) holds for all \(\mathbf {x}\).

To indicate the direction of monotonicity, we now introduce the polarity of a parameter [5], \(\mathrm {sgn}(p_i)\), and say that \(\mathrm {sgn}(p_i) = +\) if the \(\varphi (\mathbf {p})\) is monotonically increasing in \(p_i\) and \(\mathrm {sgn}(p_i) = -\) if it is monotonically decreasing, and \(\mathrm {sgn}(p_i) = \bot \) if it is neither. A formula \(\varphi (\mathbf {p})\) is said to be monotonic in \(p_i\) if \(\mathrm {sgn}(p_i) \in \left\{ +,-\right\} \), and say that \(\varphi (\mathbf {p})\) is monotonic if for all i, \(\varphi \) is monotonic in \(p_i\).

While restrictive, the monotonic fragment of PSTL contains many formulas of interest, such as those expressing steps and spikes in trace values, timed-causal relations between traces, and so on. Moreover, in some instances, for a given non-monotonic PSTL formula, it may be possible to obtain a related monotonic PSTL formula by using distinct parameters in place of a repeated parameter, or by assigning a constant valuation for some parameters (Example in Appendix A).

Example 1

For formula (1), we can see that \(\mathrm {sgn}(a) = -\), because if a trace has a certain overshoot exceeding the threshold \(a^*\), then for a fixed \(\tau \), the trace satisfies any formula where \(a < a^{*}\). Similarly, \(\mathrm {sgn}(\tau )\) = \(+\), as an overshoot over some interval \((0,\tau ^*]\) will be still considered an overshoot for \(\tau > \tau ^*\).

Orders on Parameter Space. A monotonic parameter induces a total order \(\trianglelefteq _i\) in its domain, and as different parameters for a given formula are usually independent, valuations for different parameters induce a partial order:

Definition 2

(Parameter Space Partial Order). We define \(\trianglelefteq _i\) as a total order on the domain of the parameter \(p_i\) as follows:

Under the order \(\trianglelefteq _i\), the parameter space can be viewed as a partially ordered set \(({\mathcal {D}_\mathcal {P}}, \trianglelefteq )\), where the ordering operation \(\trianglelefteq \) is defined as follows:

When combined with Eqs. (3), (4) this gives us the relation that \(\nu (\mathbf {p}) \trianglelefteq \nu '(\mathbf {p})\) implies that \([\varphi (\nu (\mathbf {p})) \implies \varphi (\nu '(\mathbf {p}))]\). In order to simplify notation, we define the subset of X that satisfies \(\varphi (\nu (\mathbf {p}))\) as \(\llbracket \varphi ({\nu (\mathbf {p})})\rrbracket _{X}\). If \(X\) and \(\mathbf {p}\) are obvious from context, we simply write: \(\llbracket \varphi ({\nu })\rrbracket \). It follows that \((\nu \trianglelefteq \nu ')\) \(\implies \) \((\llbracket \varphi ({\nu })\rrbracket \subseteq \llbracket \varphi ({\nu '})\rrbracket )\). In summary: \(\trianglelefteq \) operates in the same direction as implication and subset. Informally, we say that the ordering is from a stronger to a weaker formula.

Example 2

For formula (1), the order operation \(\trianglelefteq \) is defined as \(\nu \trianglelefteq \nu '\) iff \(\nu (\tau ) < \nu '(\tau )\) and \(\nu (a) > \nu '(a)\). Consider  and

and  . As \(\mathrm {sgn}(a) = -\), \(\mathrm {sgn}(\tau ) = +\), \(\nu _1 \trianglelefteq \nu _2\), and \(\varphi _{\mathrm {overshoot}}(\nu _1(\mathbf {p})) \implies \varphi _{\mathrm {overshoot}}(\nu _2(\mathbf {p}))\). Intuitively this means that if x(t) satisfies a formula specifying a overshoot \(> -1.1\) (undershoot \(< 1.1\)) over a duration of 0.1 time units, then x(t) trivially satisfies the formula specifying an undershoot of \(< 1.3\) over a duration of 3.3 time units.

. As \(\mathrm {sgn}(a) = -\), \(\mathrm {sgn}(\tau ) = +\), \(\nu _1 \trianglelefteq \nu _2\), and \(\varphi _{\mathrm {overshoot}}(\nu _1(\mathbf {p})) \implies \varphi _{\mathrm {overshoot}}(\nu _2(\mathbf {p}))\). Intuitively this means that if x(t) satisfies a formula specifying a overshoot \(> -1.1\) (undershoot \(< 1.1\)) over a duration of 0.1 time units, then x(t) trivially satisfies the formula specifying an undershoot of \(< 1.3\) over a duration of 3.3 time units.

Next, we define the downward closure of \(\nu (\mathbf {p})\) and relate it to \(\llbracket \varphi ({\nu (\mathbf {p})})\rrbracket \).

Definition 3

(Downward closure of a valuation). For a valuation \(\nu \), its downward closure (denoted \(D(\nu )\)) is the set \(\left\{ \nu ' \mid \nu ' \trianglelefteq \nu \right\} \).

In the following lemma we state that the union of the sets of traces satisfying formulas corresponding to parameter valuations in the downward closure of a valuation \(\nu \) is the same as the set of traces satisfying the formula corresponding to \(\nu \). The proof follows from the definition of downward closure.

Lemma 1

\(\bigcup _{\nu ' \in D(\nu )} \llbracket \varphi ({\nu '})\rrbracket \equiv \llbracket \varphi ({\nu })\rrbracket \)

Lastly, we define the validity domain of a set of traces and \(\varphi \).

Definition 4

(Validity domain). Let \(X\) be a (potentially infinite) collection of timed traces, and let \(\varphi (\mathbf {p})\) be a PSTL formula with parameters \(\mathbf {p}\in \mathcal {P}\). The validity domainFootnote 1 \(\mathcal {V} (\varphi (\mathbf {p}),X)\) of \(\varphi (\mathbf {p})\) is a closed subset of \({\mathcal {D}_\mathcal {P}}\), such that:

Remark 1

The validity domain for a given parameter set \(\mathcal {P}\) essentially contains all the parameter valuations s.t. for the given set of traces \(X\), each trace satisfies the STL formula obtained by instantiating the given PSTL formula with the parameter valuation.

Validity domain and projection of traces in STL Cluster 0 from Fig. 1.

Example 3

In Fig. 2, we show the validity domain of the PSTL formula (1) for the three traces given in the subplot labeled STL cluster 0 in Fig. 1. The hatched red region contains parameter valuations corresponding to STL formulas that are not satisfied by any trace, while the shaded-green region is the validity domain of the formula. The validity domain reflects that till the peak value \(a^*\) of the black trace is reached (which is the smallest among the peak values for the three signals), the curve in \(\tau \)-a space follows the green trace (which has the lowest slope among the three traces). For any value of \(\tau \), for which \(a > a^*\), the formula is trivially satisfied by all traces.

3 Trace-Projection and Clustering

In this section, we introduce the projection of a trace to the parameter space of a given PSTL formula, and discuss mechanisms to cluster the trace-projections using off-the-shelf clustering techniques.

Trace Projection. The key idea of this paper is defining a projection operation \(\pi \) that maps a given timed trace \(\mathbf {x}\) to a suitable parameter valuationFootnote 2 \(\nu ^\star (\mathbf {p})\) in the validity domain of the given PSTL formula \(\varphi (\mathbf {p})\). We would also like to project the given timed trace to a valuation that is as close a representative of the given trace as possible (under the lens of the chosen PSTL formula).

One way of mapping a given timed trace to a single valuation is by defining a total order \(\preceq \) on the parameter space by an appropriate linearization of the partial order on parameter space. The total order then provides a minimum valuation to which the given timed trace is mapped.

Remark 2

For technical reasons, we often adjoin two special elements \(\top \) and \(\bot \) to \(\mathcal {V} (\varphi (\mathbf {p}), X)\) such that \(\forall \nu (\mathbf {p}) \in \mathcal {V} (\varphi (\nu (\mathbf {p})), X)\), \(\bot \trianglelefteq \nu (\mathbf {p}) \trianglelefteq \top \) and \(\forall x \in X\), \(x \models \varphi (\top (\mathbf {p}))\) and \(\lnot (x \models \varphi (\bot (\mathbf {p})))\). These special elements mark whether \(\mathcal {V} (\varphi (\nu (\mathbf {p})))\) is the whole parameter space or empty.

We present the lexicographic order on parameters as one possible linearization; other linearizations, such as those based on a weighted sum in the parameter space could also be used (presented in Appendix A for brevity).

Lexicographic Order. A lexicographic order (denoted \(\preceq _\mathrm {lex}\)) uses the specification of a total order on parameter indices to linearize the partial order. We formalize lexicographic ordering as follows.

Definition 5

(Lexicographic Order). Suppose we are given a total order on the parameters \(j_1> \cdots > j_n\). The total order \(\preceq _\mathrm {lex}\) on the parameter space \({\mathcal {D}_\mathcal {P}}\) is defined as:

Note that for a given total or partial order, we can define \(\inf \) and \(\sup \) under that order in standard fashion. Formally, the projection function using lexicographic order is defined as follows:

Computing \(\pi _{\mathrm {lex}}\) . To approximate \(\pi _{\mathrm {lex}}(\mathbf {x})\), we recall Algorithm 1 from [14] that uses a simple lexicographic binary searchFootnote 3.

We begin by setting the interval to search for a valuation in \(\mathcal {V} (\varphi (\mathbf {p}))\). We set the initial valuation to \(\top \) since it induces the most permissive STL formula. Next, for each parameter, (in the order imposed by \(\preceq _\mathrm {lex}\)), we perform bisection search on the interval to find a valuation in \(\mathcal {V} (\varphi (\mathbf {p}))\). Once completed, we return the lower bound of the search-interval as it is guaranteed to be satisfiable (if a satisfiable assignment exists).

Crucially, this algorithm exploits the monotonicity of the PSTL formula to guarantee that there is at most one point during the bisection search where the satisfaction of \(\varphi \) can change. The number of iterations for each parameter index i is bounded above by \(\log \left\lceil \frac{\sup ({\mathcal {D}_\mathcal {P}}_{i})-\inf ({\mathcal {D}_\mathcal {P}}_{i})}{\epsilon _i} \right\rceil \), and the number of parameters. This gives us an algorithm with complexity that grows linearly in the number of parameters and logarithmically in the desired precision.

Remark 3

Pragmatically, we remark that the projection algorithm is inherently very parallel at the trace level and as such scales well across machines.

Example 4

For the running example (PSTL formula (1)), we use the order \(a \preceq _\mathrm {lex}\tau \). As \(\mathrm {sgn}(a) = -\), and \(\mathrm {sgn}(\tau ) = +\), lexicographic projection has the effect of first searching for the largest a, and then searching for the smallest \(\tau \) such that the resulting valuation is in \(\mathcal {V} (\varphi _{\text {overshoot}})\). The projections of the three traces from STL cluster 0 from Fig. 1 are shown in Fig. 2. We use the same color to denote a trace and its projection in parameter space.

Three clusters represented using the level sets of Gaussian functions learned from Gaussian Mixture Models (GMMs) (see Example 5). The user specifies the number of clusters to discover (3 in this case), and specifies that the GMM algorithm use a diagonal covariance matrix (which restricts cluster shape to axis-aligned ellipsoids).

Clustering and Labeling. What does one gain by defining a projection, \(\pi \)? We posit that applying unsupervised learning algorithms for clustering in \({\mathcal {D}_\mathcal {P}}\) lets us glean insights about the logical structure of the trace-space by grouping traces that satisfy similar formulas together. Let L be a finite, nonempty set of labels. Let \(Y\subset X\) represent a user-provided set of traces. In essence, a clustering algorithm identifies a labeling function \(\ell : Y\rightarrow \mathbf {2}^{L}\) assigning to each trace in \(Y\) zero or more labels. We elaborate with the help of an example.

Example 5

In Fig. 3, we show a possible clustering induced by using Gaussian Mixture ModelsFootnote 4 (GMMs) for the trace-projections for the traces in Fig. 1. The figure shows that the traces colored green, red and black are grouped in the same cluster; this matches the observation that all three traces have behaviors indicating overshoots, but of reasonable magnitudes. On the other hand, traces colored magenta and yellow have no overshoot and are grouped into a second cluster. The final cluster contains the blue and cyan traces, both with a large overshoot.

Supposing the clustering algorithm reasonably groups traces satisfying similar parameter valuations/logical formulas, one may ask: “Can we describe this group of traces in terms of an easily interpretable STL formula?” Using an ellipsoid to represent a cluster, unfortunately, the answer is negative.

Example 6

For the cluster labeled 0 in Fig. 1, in (10), we show the formula describing the ellipsoidal cluster. Here the \(c_i\)s are some constants.

It is clear that formula (10) is inscrutable, and actually represents an infinite number of STL formulas. In case of GMMs, we can at least have an abstract description of clusters using ellipsoid shapes in the parameter-space. If we use spectral clustering (as described in Sect. 1), the representation of a cluster in the parameter space is even less obvious. To mitigate this problem, we observe that the distance between points in \({\mathcal {D}_\mathcal {P}}\) is a “good” proxy for whether they receive the same label. Thus, another way to define the labeling function \(\ell \), is via parameter ranges. We argue that the use of axis-aligned hyperboxes enclosing points with the same labels is a useful approximation of the clusters, particularly because as we see in the next section, it has a compact STL encoding.

Remark 4

For a given set of points, the tightest-enclosing hyperbox may include points that would not have received the same label by an off-the-shelf clustering algorithm. This can lead to a scenario where hyperbox-clusters intersect (see Fig. 5c in for an example). This means that we can now have points in the parameter space that can have possibly two or more labels. We argue that this can be addressed in two ways: (1) introduce a new hyperbox cluster for points in the intersection, (2) indicate that points in the intersection represent traces for which there is additional guidance required from the designer.

Hyperbox Clusters. In the previous section, we showed that we can construct a labeling function \(\ell \) to assign labels to the user-provided set of traces \(Y\). We now see how we can extend this labeling function to all possible traces \(X\).

Let  . A valid hyperbox B in the parameter space is defined in terms of its extreme points \((\nu _s(\mathbf {p}), \nu _w(\mathbf {p}))\), (where \(\nu _s \trianglelefteq \nu _w\)), where \(\nu _s\) and \(\nu _w\) are the infimum and supremum resp. over the box w.r.t. \(\trianglelefteq \). Formally,

. A valid hyperbox B in the parameter space is defined in terms of its extreme points \((\nu _s(\mathbf {p}), \nu _w(\mathbf {p}))\), (where \(\nu _s \trianglelefteq \nu _w\)), where \(\nu _s\) and \(\nu _w\) are the infimum and supremum resp. over the box w.r.t. \(\trianglelefteq \). Formally,

Definition 6

(Hyperbox)

In other words, we assume that a hyperbox is open on all faces not connected to the infimum of the box, unless the face is connected to the supremum of \({\mathcal {D}_\mathcal {P}}\). Let \(\mathcal {B}\) denote the set of all such hyperboxes.

Definition 7

(Hyperbox Labeling Function). Given a trace x and a hyperbox B, s.t. \(\pi (x) \in B\), we define \(\ell _\mathrm {box}\) as the hyperbox labeling function from \(X\) to \(\mathbf {2}^{L}\) as follows:

In other words, we only consider hyperboxes that contain the projections of all traces with a specific label (say l), and then any trace that projects to some point in the hyperbox gets all such labels l. We extend the definition \(\ell _\mathrm {box}(x)\) to boxes, such that \(\ell _\mathcal {B}(B) = \left\{ l \mid \pi (x) \in B \wedge l \in \ell _\mathrm {box}(x)\right\} \). We note that  represents the smallest set containing all parameter valuations that are labeled l. However, \(B^{*}\) does not satisfy the definition of a hyperbox as per Definition 6 as it is a closed set. Hence, we define an \(\epsilon \) relaxation of this set as the smallest bounding hyperbox satisfying Definition 6 at Hausdorff distance \(\epsilon \) from \(B^{*}\), and call it the \(\epsilon \) -bounding hyperbox. In the next section, we show how we can translate a cluster represented as an \(\epsilon \)-bounding hyperbox to an STL formula. We will further examine how, in some cases, we can represent a cluster by a superset \(B'\) of the \(\epsilon \)-bounding hyperbox that satisfies \(l \in \ell _\mathcal {B}(B')\), but allows a simpler STL representation.

represents the smallest set containing all parameter valuations that are labeled l. However, \(B^{*}\) does not satisfy the definition of a hyperbox as per Definition 6 as it is a closed set. Hence, we define an \(\epsilon \) relaxation of this set as the smallest bounding hyperbox satisfying Definition 6 at Hausdorff distance \(\epsilon \) from \(B^{*}\), and call it the \(\epsilon \) -bounding hyperbox. In the next section, we show how we can translate a cluster represented as an \(\epsilon \)-bounding hyperbox to an STL formula. We will further examine how, in some cases, we can represent a cluster by a superset \(B'\) of the \(\epsilon \)-bounding hyperbox that satisfies \(l \in \ell _\mathcal {B}(B')\), but allows a simpler STL representation.

Example 7

For the example shown in Fig. 1, for each of the red, green and black traces x, \(\ell _\mathrm {box}(x) = \left\{ 0\right\} \), while for the blue and cyan traces, \(\ell _\mathrm {box}(x) = \left\{ 2\right\} \). Any hyperbox B satisfying Definition 6 that is a superset of the hyperbox enclosing the red, green and black points shown in the bottom right figure has \(\ell _\mathcal {B}(B) = \left\{ 0\right\} \), while the hyperbox shown in the figure is an \(\epsilon \)-bounding hyperbox.

4 Learning STL Formulas from Clusters

A given \(\epsilon \)-bounding hyperbox B simply specifies a range of valuations for the parameters in a PSTL template \(\varphi \). We now demonstrate that because \(\varphi \) is monotonic, there exists a simple STL formula that is satisfied by the set of traces that project to some valuation in B. Recall that we use \(\llbracket \varphi ({\nu })\rrbracket \) to denote the set of traces that satisfies \(\varphi (\nu (\mathbf {p}))\). We define \(X_B\) as the set of traces that have a satisfying valuation in B:  .

.

Theorem 1

There is an STL formula \(\psi _B\) such that \(\{x \in X\mid x \models \psi _B\} \equiv X_B\).

Before proving this theorem, we introduce some notation:

Definition 8

(Essential Corners, \(E_B\) ). Let \(\nu _w(\mathbf {p}) = (w_1,\ldots ,w_n)\), and let \(\nu _s(\mathbf {p}) = (s_1,\ldots ,s_n)\). A valuation corresponding to an essential corner has exactly one i such that \(\nu (p_i) = s_i\), and for all \(j \ne i\), \(\nu (p_j) = w_j\).

Proof

(Theorem 1 ). We first introduce the notion of essential corners of a box B.

Note that B can be written in terms of downward closures of valuations: \(B = D(\nu _w) \cap \bigcap _{\nu \in E_B} \overline{D(\nu )}\). From Lemma 1, the set of traces satisfying a formula in \(\varphi (D(\nu ))\) is equivalent to \(\llbracket \varphi ({\nu })\rrbracket \). Further, using the equivalence between intersections (\(\cap \)) of sets of traces and conjunctions (\(\wedge \)) in STL, and equivalence of set-complements with negations, we define \(\psi _B\) below and note that the set of traces satisfying the formula \(\psi _B\) below is \(X_B\).\(\blacksquare \)

Example 8

Consider the \(B \in \mathcal {B}\) enclosing the projections for the yellow and magenta traces (Cluster 1). The corner-points of the cluster in clockwise order from bottom right corner are: \((-1.3,0.1)\), \((-1.3,3.3)\), \((-1.1,3.3)\), \((-1.1,0.1)\). Observe that as \(\mathrm {sgn}(a) = -\) and \(\mathrm {sgn}(\tau ) = +\), \(\nu _s\) = \((a \mapsto -1.1, \tau \mapsto 0.1)\), \(\nu _w\) = \((a \mapsto -1.3, \tau \mapsto 3.3)\). Thus, \(E_B\) = \(\{(-1.3, 0.1), (-1.1, 3.3)\}\). Thus:

Lemma 2

\(|\psi _B| \le (|\mathcal {P}| +1|)(|\varphi | + 2)\)

Proof

Recall from Definition 8 that corners in \(E_B\) have exactly 1 param set to \(s_i\). There are \(|\mathcal {P}|\) params, thus by pigeon hole principle, \(|E_B| \le |\mathcal {P}|\). In \(\psi _B\) for each corner in \(E_B\), the corresponding formula is negated, adding 1 symbol. Between each \(|\mathcal {P}| + 1\) instantiations of \(\varphi \) is a \(\wedge \). Thus \(|\psi _B| \le (|\mathcal {P}| + 1)(|\varphi | + 2)\) \(\blacksquare \)

Simplifying STL Representation. To motivate this section, let us re-examine Example 8. From Fig. 1, we can observe that there is no hyperbox cluster to the left of or above the chosen hyperbox cluster B, i.e., the one containing the magenta and yellow trace-projection. What if we consider supersets of B that are hyperboxes and have the same infimum point? For Example 8, we can see that any hyperbox that extends to the supremum of the parameter space in \(\tau \) or \(-a\) direction would be acceptable as an enclosure for the yellow and magenta traces (as there are no other traces in those directions). We formalize this intuition in terms of relaxing the set of corners that can appear in \(E_B\).

For instance, suppose that we replace \(E_B\) in Eq. (13) with \(E'_B\), where \(E'_B\) is any subset of the corners of B (excluding \(\nu _w\)). We call the collection of shapes induced by this relaxation as \(\mathcal {B}_2\). For \(|\mathcal {P}| = 2\), the possible shapes of elements in \(\mathcal {B}_2\) are shown in Fig. 4. For convenience, we use a bit-vector encoding for hyperbox corners, where \(\nu _s\) corresponds to the bit-vector with all 0s, \(\nu _w\) has all 1s, and essential corners are bit-vectors with exactly one 0. Consider the L shaped region, \(C_L\), created by \(E'_B = \{00\}\). The formula corresponding to \(C_L\) has obviously less descriptive complexity than \(E_B = \{01, 10\}\). Further notice, \(\mathcal {B}2 \setminus \mathcal {B}\) would have less descriptive complexity than elements of \(\mathcal {B}\).

One critical feature that \(\mathcal {B}_2\) (and thus \(\mathcal {B}\)) has is comparable convexity:

Definition 9

(Comparable Convexity). If \(\forall \nu , \nu ' \in B \subset {\mathcal {D}_\mathcal {P}}\) if \(\nu \trianglelefteq \nu '\) or \(\nu ' \trianglelefteq \nu \) then all convex combinations of \(\nu \) and \(\nu '\) are in B.

Comparable convexity allows us to argue that one can gain some insight into the set of traces by just examining the extremal cases and just “interpolating” the cases because of the associated parameters. We call these extremal cases the “representatives” of a cluster.

Theorem 2

Each element in \(\mathcal {B}_2\) is comparably convex. See Fig. 4 for examples.

Proof

Note that all elements of \(\mathcal {B}\subset \mathcal {B}_2\) are trivially comparably convex since hyperboxes are convex. Thus we focus on elements of \(\mathcal {B}_2 \setminus \mathcal {B}\). Now observe that any element \(C \in \mathcal {B}_2\) is the union of a finite set, \(\mathcal {H}\), of boxes in \(\mathcal {B}\). C s.t. \(C = \bigcup _{B_i \in \mathcal {H} \subset \mathcal {B}} B_i\) where \(\nu _1 \in B_1\) and \(\nu _2 \in B_2\). If \(B_1 \subset B_2\) or the other way around or \(\nu _1\in B_1 \cap B_2\) or \(\nu _2 \in B_1 \cap B_2\), then again there trivially the convex combination of \(\nu _1\) and \(\nu _2\) is in C because hyperboxes are convex (and the intersection of two hyperboxes is a hyperbox).

This leaves the case where \(\nu _1 \in B_1 \setminus B_2\) and \(\nu _2 \in B_2 \setminus B_1\) and neither \(B_1 \subset B_2\) nor \(B_2 \subset B_1\). This implies that \(\inf (B_1)\) is not comparable to \(\inf (B_2)\). W.L.O.G assume \(\nu _1 \trianglelefteq \nu _2\) and that the convex combination of \(\nu _1\) and \(\nu _2\) is not a subset C. Note that the definition of downward closure and the fact that  . But, \(B'\) is convex and \(B' \subset B_2 \subset C\), thus the convex combination of \(\nu _1\) and \(\nu _2\) is in C which is a contradiction. \(\blacksquare \)

. But, \(B'\) is convex and \(B' \subset B_2 \subset C\), thus the convex combination of \(\nu _1\) and \(\nu _2\) is in C which is a contradiction. \(\blacksquare \)

5 Case Studies

Implementation Details. We leveraged Breach [9] for performing projections \(\pi _{\mathrm {lex}}\), \(\mathtt {scikit}\)-\(\mathtt {learn}\) toolkit [11] for clustering and custom Python code for learning STL formulas for clusters. An IPython notebook with compressed versions of the datasets studied in the case studies (and a replementation of \(\pi _{\mathrm {lex}}\) in Python) is available for download at [1].

Diesel Engine. In this case study, we are provided with timed traces for a signal representing the Exhaust Gas Recirculation (EGR) rate for an early prototype of a Diesel Engine airpath controller. As the example comes from an automotive setting, we suppress actual signal values for proprietary reasons. The controller computes an EGR reference rate and attempts to track it. Typically, engineers visually inspect the step-response of the control system and look for patterns such as unusual overshoots, slow responsiveness, etc. The ST-Lib library [13] defines a pool of PSTL formulas designed to detect violations of such properties. Using a property from ST-Lib requires correctly setting the parameters in the PSTL templates therein. In this case study, we show how we can use our technique to determine parameters that characterize undesirable behavior. We focus on two templates: Rising Step and Overshoot. Many ST-Lib formulas are “step-triggered”, \(i.e., \) they are of the form: \(\mathbf {F}(\text {step}\wedge \phi )\) We first identify parameters for the step template, as it is used as a primitive in further analysis. For example, in the overshoot analysis we seek to characterize by what margin traces overshoot the reference. We use the following templates for rising-step and overshoot:

Equation (15) first reduces step detection (via a discrete derivative) to spike detection and then applies the ST-Lib spike detection template (that introduces a second derivative). As the view of PSTL is signal-centric, such operations can be introduced as new timed traces of a new signal, and do not require any modification of the logic. The \(\text {step}^*\) that appears in Eq. (16) is result step primitive we learn during our analysis. Finally, the lexicographic ordering used in the projections of Eqs. (15) and (16) are: \(m \preceq _\mathrm {lex}w\) and \(c \preceq _\mathrm {lex}w\) resp. Finally, each parameter is in \(\mathbb {R}_{>0}\).

Experiments. We have 33 traces of variable time-length. As a preprocessing step, we used a sliding window with a size of 1 second and a sliding offset of 0.5 s to generate equal length traces. The sliding window size and the offset was chosen by observation and experience to capture the significant local behaviors. In general, such a selection could be automated based on statistical criteria. Further, as we did not exploit the relationship between traces generated by the sliding window, we effectively analyzed over \(2\times 10^6\) traces (1 GB). Each trace generated is then prepossessed by numerically computing the second derivativeFootnote 5. After projecting to the parameter space for each template, we normalize the parameters to lie between 0, 1 and fit a Gaussian Mixture Model to generate labels, and learn the STL formulas for each cluster.

Results. The Step template revealed 3 clusters (Fig. 5a), of which the cluster labeled Step (Fig. 5b), was identified as an admissible “step” primitive. In picking the appropriate bounding box in \(\mathcal {B}\) we noted spikes have no inherit upper limit to their peaks. Thus, we derived the characterizing STL:  , where \(m^*, w^*\) are suppressed for proprietary reasons. The overshoot analysis revealed 5 clusters. We note that there are actually 2 distinct clusters which can reasonably be called overshoots, given by label 1 and 4 in Fig. 5c. The interpretation, is that while the majority of the overshoots occur soon after the step, there is a cluster that occurred later, either due to slow rise time or non-linear effects causing the oscillation about the reference to increasing before dying away. In either case, as with spike, we declare that any overshoot is still an overshoot as the amplitude c rises. Thus for cluster 1 we again chose to use a box from \(\mathcal {B}\) that does not bound c. This lead to:

, where \(m^*, w^*\) are suppressed for proprietary reasons. The overshoot analysis revealed 5 clusters. We note that there are actually 2 distinct clusters which can reasonably be called overshoots, given by label 1 and 4 in Fig. 5c. The interpretation, is that while the majority of the overshoots occur soon after the step, there is a cluster that occurred later, either due to slow rise time or non-linear effects causing the oscillation about the reference to increasing before dying away. In either case, as with spike, we declare that any overshoot is still an overshoot as the amplitude c rises. Thus for cluster 1 we again chose to use a box from \(\mathcal {B}\) that does not bound c. This lead to:  again suppressing values.

again suppressing values.

Traffic Behavior on the US-101 Highway. In order to model and predict driver behavior on highways, the Federal Highway Administration collected detailed traffic data on southbound US-101 freeway, in Los Angeles [8]. The pre-selected segment of the freeway is about 640 m in length and consists of five main lanes and some auxiliary lanes. Traffic through the segment was monitored and recorded through eight synchronized cameras, mounted on top of the buildings next to the freeway. A total of 45 min of traffic data was recorded including vehicle trajectory data providing lane positions of each vehicle within the study area.

Here, we apply our method to analyze lane switching “aggressiveness” characterized by how often a driver switches lanes and the dwell time in each lane before switching. We focus on lanes 2, 3, and 4, ignoring the outer lanes 1 and 5 since they are used entering and exiting the freeway, and thus have qualitatively different behavior. Each vehicle trajectory x(t), stores the lane position for the vehicle, and we use the following STL formula to capture the dwell time in \(L_i\):

Results. For this experiment, from 4824 total vehicle trajectories, we discard trajectories with no lane switching behavior and group them with the conservative driving behaviors. We analyze the remaining 896 targeted trajectories that have at least one lane-switch behavior, and each trajectory is at most 100 s long. As all parameters are independent, lexicographic ordering has no impact on \(\pi _{\mathrm {lex}}\). After normalizing the parameters by centering and scaling, we apply GMMs to label and generate bounding hyperboxes/STL formulas.

The resulting clusters are shown in Fig. 6a. Upon examining the representatives,we classified the behaviors of each cluster into 4 groups:

-

T1: No Weaving: only switching to adjacent lanes and never changing back.

-

T2: Normal driving behavior, from switching to adjacent lanes and coming back to overtake a slow vehicle in front.

-

T3: Slightly aggressive behavior, weaving between 2 lanes.

-

T4: Aggressive behavior, weaving between all three lanes.

The largest cluster, 0, contains behaviors without any weaving behavior. Cluster 3 and 5 represent the weaving behavior involving 2 lanes. Cluster 6 represents aggressive behavior and one of the representative is shown in Fig. 6c. We consider Cluster 7 as an anomaly for Cluster 2 as it has only 1 trajectory.

(a) Lane Switching Behavior results. Columns: Cluster, parameters, cluster type, num(traces). (b) shows the representatives of cluster 1, which upon the inspection are qualitatively very different. The blue car moves from lane 5 to lane 4, remains for \(\approx \)60 s and then moves to lane 3. The red car appears to use lane 5 to pass another car, move’s back into lane 4 and then to lane 3 shortly after. Inspecting the data, most of cluster 1 large \(\tau _4\) value. We subdivided the behavior further using a one class svm and interpreted the small \(\tau _4\) values as “aggressive”. New “aggressive” representatives given in (c). (Color figure online)

For clusters 1, 2, and 4, we cannot distinguish if drivers were rapidly weaving or weaving within a short period of time, due to the scarcity of the data. As seen in Fig. 6b, the representatives for cluster 1, demonstrated two different behaviors; one involving rapid lane-switching (red trace), one where the driver switched lanes more slowly (blue trace). Applying an additional 1-class SVM to the points in cluster 1 was used to distinguish these two cases.

CPS Grader. Massively Open Online Courses (MOOCs) present instructors the opportunity to learn from a large amount of collected data. For example, the data could be clustered to identify common correct solutions and mistakes. Juniwal et al. [16] demonstrated a semi-supervised procedure for a CPS MOOC; this involved first using DTW and K-Nearest Neighbors (KNN) to cluster traces of student solutions, and then picking representatives from clusters to ask the instructor to label. From the labeled data, they extract a characterizing STL formula given a PSTL template. The techniques demonstrated in this paper offer an alternative approach that can overcome some limitations of [16]. Firstly, as demonstrated in the opening example (see Fig. 1), DTW does not necessarily group traces in a way consistent with their logical classification. Second, the burden of labeling traces can still be quite large for instructors if the number of clusters is very large. Instead, unsupervised our approach offers a fully unsupervised approach (e.g., based on GMMs or K-Means) which still offers some degree of confidence that elements in the same cluster are similar w.r.t. a given PSTL template.

The tests in [16] involved the simulation of an IRobot Create and student generated controllers. The controller needed to navigate the robot up an incline and around static obstacles. To test this, the authors created a series of parameterized environments and a set of PSTL formula that characterized failure. In this work, we attempt to reproduce a somewhat arbitrary subset of the results shown in [16] that required no additional preprocessing on our part.

Obstacle Avoidance. We focus on 2 tests centered around obstacle avoidance. The authors used an environment where an obstacle is placed in front of a moving robot and the robot is expected to bypass the obstacle and reorient to it’s pre-collision orientation before continuing. The relevant PSTL formulas were “Failing simple obstacle avoidance” and “Failing re-orienting after obstacle avoidance” given below as \(\varphi _{avoid}\) and \(\varphi _{reorient}\) resp.:

CPS Grader Study Results, w. \(\mathcal {V} (\varphi _{avoid})\), (c), from [16] included for comparison (valuations in the green region of (c) correspond to mistakes). We note that in (b) we are able to identify 3 modes of failure (obstacle not avoided, 2x obstacle avoided but did not reorient), an insight not present in [16]. (Color figure online)

Results. A surprising observation for both templates is that the vast majority of data is captured in a relatively small parameter range. Upon investigation, it was revealed that the students were able to submit multiple solutions for grading—each corresponding to a trace. This biased the dataset towards incorrect solutions since one expects the student to produce many incorrect solutions and then a few final correct solutions. As seen in Fig. 7a, the results imply that a classifier for label 0, which corresponded to the robot not passing the obstacle, would have a low misclassification rate when compared against the STL artifact from [16]. Moreover, for obstacle avoidance, there are two other families of correct solutions uncovered. One is the set of traces that just barely pass the obstacle in time (label 2 in Fig. 7a), and the other is the spectrum of traces that pass the minimum threshold with a healthy margin (label 1 in Fig. 7a). For the reorient template, we discovered 3 general types of behaviors (again with GMMs), see Fig. 7b. The first (label 1) is a failure to move past the obstacle (echoing the large group under the obstacle avoidance template). The other 3 groups seem to move passed the obstacle, but two (labels 0 and 3) of them display failure to reorient to the original orientation of \(45^\circ \). One could leverage this behavior to craft diagnostic feedback for these common cases.

Conclusion. In this work we explored a technique to leverage PSTL to extract features from a time series that can be used to group together qualitatively similar traces under the lens of a PSTL formula. Our approach produced a simple STL formula for each cluster, which along with the extremal cases, enable one to develop insights into a set of traces. We then illustrated with a number of case studies how this technique could be used and the kinds of insights it can develop. For future work, we will study extensions of this approach to supervised, semi-supervised, and active learning. A key missing component in this work is a principled way to select a projection function (perhaps via learning or posterior methods). Other possible extensions involve integration with systematic PSTL enumeration, and learning non-monotonic PSTL formulas.

Notes

- 1.

If \(X\) is obvious from context (or does not matter), we write \(\mathcal {V} (\varphi (\mathbf {p}), X)\) as \(\mathcal {V} (\varphi )\).

- 2.

For canonicity, \(\pi \) need not be a function from timed traces to \({\mathcal {D}_\mathcal {P}}\). For example, it may be expedient to project a trace to a subset of \({\mathcal {D}_\mathcal {P}}\). For simplicity, we defer more involved projections to future exposition.

- 3.

For simplicity, we have omitted a number of optimizations in Algorithm 1. For example, one can replace the iterative loop through parameters with a binary search over parameters.

- 4.

A GMM assumes that the given parameter space can be modeled as a random variable distributed according to a set of Gaussian distributions with different mean and variance values. A given parameter valuation is labeled l if the probability of the valuation belonging to the \(l^{th}\) Gaussian distribution exceeds the probability of the valuation belonging to other distributions. Another way to visualize clusters in the parameter space is by level-sets of the probability density functions associated with the clusters. For example, for the \(l^{th}\) cluster, we can represent it using the smallest level-set that includes all given points labeled l.

- 5.

As the discrete-time derivative can introduce considerable noise, we remark that the discrete-time derivative can often be approximated by a noise-robust operation (such as the difference from a rolling mean/median.).

References

Logical Clustering CAV2017 Artifact. https://archive.org/details/Logical_Clustering_CAV2017_Artifact. Accessed 29 Apr 2017

Ackerman, E.: Google’s autonomous cars are smarter than ever at 700,000 miles. IEEE Spectr. (2014). http://spectrum.ieee.org/cars-that-think/transportation/self-driving/google-autonomous-cars-are-smarter-than-ever

Akazaki, T., Hasuo, I.: Time robustness in MTL and expressivity in hybrid system falsification. In: Kroening, D., Păsăreanu, C.S. (eds.) CAV 2015. LNCS, vol. 9207, pp. 356–374. Springer, Cham (2015). doi:10.1007/978-3-319-21668-3_21

Alur, R., Henzinger, T.A.: A really temporal logic. JACM 41(1), 181–203 (1994)

Asarin, E., Donzé, A., Maler, O., Nickovic, D.: Parametric identification of temporal properties. In: Khurshid, S., Sen, K. (eds.) RV 2011. LNCS, vol. 7186, pp. 147–160. Springer, Heidelberg (2012). doi:10.1007/978-3-642-29860-8_12

Bartocci, E., Bortolussi, L., Sanguinetti, G.: Learning temporal logical properties discriminating ECG models of cardiac arrhytmias. arXiv preprint arXiv:1312.7523 (2013)

Bombara, G., Vasile, C.I., Penedo, F., Yasuoka, H., Belta, C.: A decision tree approach to data classification using signal temporal logic. In: Proceedings of HSCC, pp. 1–10 (2016)

Colyar, J., Halkias, J.: US highway 101 dataset. Federal Highway Administration (FHWA), Technical report FHWA-HRT-07-030 (2007)

Donzé, A.: Breach, a toolbox for verification and parameter synthesis of hybrid systems. In: Touili, T., Cook, B., Jackson, P. (eds.) CAV 2010. LNCS, vol. 6174, pp. 167–170. Springer, Heidelberg (2010). doi:10.1007/978-3-642-14295-6_17

Donzé, A., Maler, O., Bartocci, E., Nickovic, D., Grosu, R., Smolka, S.: On temporal logic and signal processing. In: Chakraborty, S., Mukund, M. (eds.) ATVA 2012. LNCS, pp. 92–106. Springer, Heidelberg (2012). doi:10.1007/978-3-642-33386-6_9

Pedregosa, F., et al.: Scikit-learn: machine learning in python. J. Mach. Learn. Res. 12, 2825–2830 (2011)

Hoxha, B., Dokhanchi, A., Fainekos, G.: Mining parametric temporal logic properties in model-based design for cyber-physical systems. Int. J. Softw. Tools Technol. Transf. 1–15 (2017)

Kapinski, J., et al.: ST-lib: a library for specifying and classifying model behaviors. In: SAE Technical Paper. SAE (2016)

Jin, X., Donzé, A., Deshmukh, J.V., Seshia, S.A.: Mining requirements from closed-loop control models. IEEE TCAD ICS 34(11), 1704–1717 (2015)

Jones, A., Kong, Z., Belta, C.: Anomaly detection in cyber-physical systems: a formal methods approach. In: Proceedings of CDC, pp. 848–853 (2014)

Juniwal, G., Donzé, A., Jensen, J.C., Seshia, S.A.: CPSGrader: synthesizing temporal logic testers for auto-grading an embedded systems laboratory. In: Proceedings of EMSOFT, p. 24 (2014)

Keogh, E.J., Pazzani, M.J.: Scaling up dynamic time warping for data mining applications. In: Proceedings of KDD, pp. 285–289 (2000)

Kong, Z., Jones, A., Medina Ayala, A., Aydin Gol, E., Belta, C.: Temporal logic inference for classification and prediction from data. In: Proceedings of HSCC, pp. 273–282 (2014)

Koymans, R.: Specifying real-time properties with metric temporal logic. Real-Time Syst. 2(4), 255–299 (1990)

Legriel, J., Guernic, C., Cotton, S., Maler, O.: Approximating the pareto front of multi-criteria optimization problems. In: Esparza, J., Majumdar, R. (eds.) TACAS 2010. LNCS, vol. 6015, pp. 69–83. Springer, Heidelberg (2010). doi:10.1007/978-3-642-12002-2_6

Li, W., Forin, A., Seshia, S.A.: Scalable specification mining for verification and diagnosis. In: Proceedings of the Design Automation Conference (DAC), pp. 755–760, June 2010

Lin, J., Keogh, E., Truppel, W.: Clustering of streaming time series is meaningless. In: Proceedings of the 8th ACM SIGMOD Workshop on Research Issues in Data Mining and Knowledge Discovery, pp. 56–65. ACM (2003)

Maler, O., Nickovic, D.: Monitoring temporal properties of continuous signals. In: Lakhnech, Y., Yovine, S. (eds.) FORMATS/FTRTFT 2004. LNCS, vol. 3253, pp. 152–166. Springer, Heidelberg (2004). doi:10.1007/978-3-540-30206-3_12

von Luxburg, U.: A tutorial on spectral clustering. Stat. Comput. 17(4), 395–416 (2007)

Acknowledgments

We thank Dorsa Sadigh, Yasser Shoukry, Gil Lederman, Shromona Ghosh, Oded Maler, Eamon Keogh, and our anonymous reviewers for their invaluable feedback, and Ken Butts for the Diesel data. This work is funded in part by the DARPA BRASS program under agreement number FA8750–16–C–0043, the VeHICaL Project (NSF grant #1545126), and the Toyota Motor Corporation under the CHESS center.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

A Appendix

A Appendix

Related Monotonic Formula for a Non-monotonic PSTL Formula

Example 9

Consider the PSTL template:  . We first show that the given formula is not monotonic.

. We first show that the given formula is not monotonic.

Proof

Consider the trace \(x(t) = 0\). Keep fixed \(w = 1\). Observe that \(h=0\), x(t) satisfies the formula. If \(h=-1\), then \(x(t) \not \models \varphi (h, w)\), since \(x(t) \le h\) is not eventually satisifed. If \(h=1\), then \(x(0) \le 1\) implying that for satisfaction, within the next 1 time units, the signal must becomes greater than 1. The signal is always 0, so at \(h=1\), the formula is unsatisfied. Thus, while increasing h from \(-1\) to 0 to 1, the satifaction has changed signs twice. Thus, \(\varphi (h, w)\) is not monotonic.

Now consider the following related PSTL formula in which repeated instances of the parameter h are replaced by distinct parameters \(h_1\) and \(h_2\). We observe that this formula is trivially monotonic:  .

.

Linearization Based on Scalarization

Borrowing a common trick from multi-objective optimization, we define a cost function on the space of valuations as follows: \(J(\nu (\mathbf {p})) = \sum _{i=1}^{|\mathcal {P}|} \lambda _i \nu (p_i)\). Here, \(\lambda _i \in \mathbb {R}\), are weights on each parameter. The above cost function implicitly defines an order \(\preceq _\mathrm {scalar}\), where, \(\nu (\mathbf {p}) \preceq _\mathrm {scalar}\nu '(\mathbf {p})\) iff \(J(\nu (\mathbf {p})) \le J(\nu '(\mathbf {p}))\). Then, the projection operation \(\pi _{\mathrm {scalar}}\) is defined as: \(\pi _{\mathrm {scalar}}(\mathbf {x}) = \mathrm {argmin}_{\nu (\mathbf {p}) \in \partial \mathcal {V} (\varphi (\mathbf {p}))} J(\nu (\mathbf {p}))\). We postpone any discussion of how to choose such a scalarization to future work.

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Vazquez-Chanlatte, M., Deshmukh, J.V., Jin, X., Seshia, S.A. (2017). Logical Clustering and Learning for Time-Series Data. In: Majumdar, R., Kunčak, V. (eds) Computer Aided Verification. CAV 2017. Lecture Notes in Computer Science(), vol 10426. Springer, Cham. https://doi.org/10.1007/978-3-319-63387-9_15

Download citation

DOI: https://doi.org/10.1007/978-3-319-63387-9_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-63386-2

Online ISBN: 978-3-319-63387-9

eBook Packages: Computer ScienceComputer Science (R0)