Abstract

Emotions and their temporal and spatial localization in the brain are the subject of intense current research. In this framework, it is important to consider both the temporal dynamics of affective responses and the underlying brain activity. In this work we used electroencephalographic (EEG) recordings to investigate the neural activity of 13 human volunteers while they looked at standardized images (positive/negative) and, at the same time, listened to pleasant or unpleasant music. We then analyzed topographic changes in EEG activity in the time domain. When we compared positive images with positive music versus negative images with negative music, we found a significant time window 448–632 ms after stimulus onset (p < 0.05), with a clear right lateralization for negative stimuli and left lateralization for positive stimuli. By contrast, when we compared positive images with negative music versus negative images with positive music, we found a delayed window (592–618 ms at p < 0.01) and the marked lateralization disappeared. These results demonstrate the feasibility and usefulness of this approach to explore the temporal dynamics of human emotions and could help to set the basis for future studies of music perception and emotions.

1 Introduction

Emotional interaction between humans and machines is one of the most important challenges in advanced human-machine interaction. A key requirement in this field is the development of reliable emotion recognition systems, which in turn requires emotions to be identified correctly.

Some researchers support the notion of biphasic emotion, which states that emotion fundamentally stems from varying activation in centrally organized appetitive and defensive motivational systems that have evolved to mediate the wide range of adaptive behaviors necessary for an organism struggling to survive in the physical world [1, 2]. In this framework, neuroscientists have made great efforts to determine how the relationship between the stimulus input and the behavioral output is mediated through specific, highly organized neural circuits [3].

The majority of studies in this area are based on techniques such as Positron Emission Tomography (PET) [4] or functional Magnetic Resonance Imaging (fMRI) [5], which offer exceptional spatial resolution but poor temporal resolution (on the order of seconds). An alternative that offers excellent temporal resolution (in the range of milliseconds) is electroencephalography (EEG).

In this study we investigated the temporal dynamics of neural activity associated with emotions (like/dislike) generated by looking at complex pictures taken from the International Affective Picture System (IAPS) [6] while the subjects listened to pleasant or unpleasant music. We used EEG to overcome the problem of temporal resolution. We evaluated the correspondence between the subjective emotional experiences induced by the pictures and the resulting brain activity, as well as the role of the music in that activity. We then estimated the neural sources in which event-related potentials (ERPs) were generated, and used the three-dimensional location of these sources to assess changes in the activation of cortical networks involved in emotion processing.

Our results offer valuable information to better understand the temporal dynamics of emotions elicited by visual and auditory stimuli and could be useful for the development of effective and reliable neural interfaces.

2 Methods

Participants

Thirteen volunteers participated in this study (mean age: 19.8; range: 19–38; seven men, six women). All of them were right-handed, with a laterality quotient of at least +0.4 (mean 0.7, SD: 0.2) on the Edinburgh Inventory [7].
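The inclusion criterion above can be illustrated with a minimal sketch of a laterality quotient computed as (R − L)/(R + L). This assumes the quotient is expressed on a −1 to +1 scale, as the reported values suggest (the Edinburgh Inventory is often reported on a −100 to +100 scale instead). The function names and the example counts are hypothetical and are not taken from the study.

```python
def laterality_quotient(right_count: int, left_count: int) -> float:
    """Return (R - L) / (R + L): -1 is fully left-handed, +1 fully right-handed."""
    total = right_count + left_count
    if total == 0:
        raise ValueError("at least one hand preference must be recorded")
    return (right_count - left_count) / total


def is_right_handed(lq: float, threshold: float = 0.4) -> bool:
    """Apply the inclusion criterion stated in the text (quotient of at least +0.4)."""
    return lq >= threshold


# Hypothetical participant: 17 right-hand marks and 3 left-hand marks.
lq = laterality_quotient(17, 3)
print(f"LQ = {lq:.2f}, included: {is_right_handed(lq)}")
```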

None of the participants had a personal history of psychiatric or neurological disorder, alcohol or drug abuse, or current medication, and all had normal or corrected-to-normal vision and hearing. All were comprehensively informed about the details and the purpose of the study and gave their written consent for participation.

Visual and Auditory Stimuli

A set of standardized visual stimuli (80 pictures in total) was selected from the IAPS dataset [6]. These stimuli were validated in a previous study [8].

The images were divided into four groups, each consisting of 20 images. Stimuli were presented in color, with equal contrast and luminance.

The pleasant music consisted of excerpts of joyful instrumental dance tunes, namely A. Dvorák, Slavonic Dance No. 8 in G Minor (Op. 46) and J.S. Bach, Rejouissance (BWV 1069), together with other fragments of music used previously in similar studies [9].

The unpleasant music was obtained by electronically manipulating the pleasant excerpts (stimuli were processed using Cool Edit Pro software). For each pleasant stimulus, a new sound file was created in which the original (pleasant) excerpt was recorded simultaneously with two pitch-shifted versions of the same excerpt, the pitch-shifted versions being one tone above and a tritone below the original pitch. The pleasant and unpleasant versions of an excerpt (original and electronically manipulated) therefore had the same dynamic outline, identical rhythmic structure, and identical melodic contour, so differences in brain activation between pleasant and unpleasant stimuli cannot be attributed simply to bottom-up processing of these stimulus dimensions.

Subjects were instructed to give each stimulus a score from 1 to 9, avoiding 5, according to their subjective taste (1: dislike; 9: like). Their verbal responses were written down.

Procedure

Figure 1 summarizes the serial configuration of the study. Each image was presented for 500 ms and was followed by a black screen lasting 3500 ms. The music started five seconds before the images started and finished five seconds after they ended. The images appeared in random order and each was shown only once. The participants' task was to observe the images and rate the arousal and valence of their emotional experience. Picture scores ranged from 9 (very pleasant) to 1 (very unpleasant).
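The timing described above can be made concrete with a small scheduling sketch. It uses the durations stated in the text, but it assumes that one block corresponds to one group of 20 images and that the five-second music tail is counted from the end of the last black screen; neither detail is specified in the text. The function name `block_schedule` is hypothetical.

```python
IMAGE_MS = 500         # image duration stated in the text
BLANK_MS = 3500        # black-screen duration stated in the text
MUSIC_LEAD_MS = 5000   # music starts 5 s before the first image
MUSIC_TAIL_MS = 5000   # assumption: counted from the end of the last black screen


def block_schedule(n_images: int = 20):
    """Return (image_onsets_ms, music_duration_ms), with t = 0 at music onset.

    Assumption: one block contains one group of 20 images.
    """
    trial_ms = IMAGE_MS + BLANK_MS
    onsets = [MUSIC_LEAD_MS + i * trial_ms for i in range(n_images)]
    music_ms = MUSIC_LEAD_MS + n_images * trial_ms + MUSIC_TAIL_MS
    return onsets, music_ms


onsets, music_ms = block_schedule()
print(f"first onset: {onsets[0]} ms, last onset: {onsets[-1]} ms")
print(f"music duration: {music_ms / 1000:.0f} s")
```

Under these assumptions each trial lasts 4 s, so a block of 20 images is accompanied by 90 s of music.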

Data Acquisition

The participants were seated in a comfortable position and asked to move as little as possible. Following the preparation phase, participants were instructed about the task. The pictures were presented to the subject on a 21.5-inch computer screen in the dark.

We instructed subjects to avoid blinking during image exposure and to keep their gaze on the center of the monitor. EEG data were continuously recorded by means of cap-mounted Ag-AgCl electrodes and a NeuroScan SynAmps EEG amplifier (Compumedics, Charlotte, NC, USA) from 64 locations according to the international 10/20 system (FP1, FPZ, FP2, AF3, GND, AF4, F7, F5, F3, F1, FZ, F2, F4, F6, F8, FT7, FC5, FC3, FC1, FCZ, FC2, FC4, FC6, FT8, T7, C5, C3, C1, CZ, C2, C4, C6, T8, REF, TP7, CP5, CP3, CP1, CPZ, CP2, CP4, CP6, TP8, P7, P5, P3, P1, PZ, P2, P4, P6, P8, PO7, PO5, PO3, POZ, PO4, PO6, PO8, CB1, O1, OZ, O2, CB2) [10]. The impedance of the recording electrodes was checked for each subject prior to data collection and kept below 25 k\(\Omega \), as recommended [11]. All recordings were performed at a sampling rate of 1000 Hz. Data were re-referenced to a Common Average Reference (CAR) and EEG signals were filtered using a 0.5 Hz high-pass filter and a 45 Hz low-pass filter. Electrical artifacts due to gesticulation and eye blinking were corrected using Principal Component Analysis (PCA) [12]. Artifacts were identified as signal levels above 75 \(\upmu \)V in the five frontal electrodes (FP1, FPZ, FP2, AF3 and AF4), which were chosen because they are the most affected by involuntary movements. Artifact detection was restricted to the interval from −200 ms to +500 ms relative to stimulus onset.
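Two of the preprocessing steps described above, common average re-referencing and threshold-based artifact identification, can be sketched in plain Python. This is an illustration under stated assumptions, not the pipeline used in the study: the data layout (a dictionary of channel name to samples in microvolts) and the function names are hypothetical, the threshold is applied to the absolute amplitude, and the PCA-based correction and the band-pass filtering are not shown.

```python
FS_HZ = 1000                                   # sampling rate stated in the text
FRONTAL = ("FP1", "FPZ", "FP2", "AF3", "AF4")  # electrodes used for detection
THRESHOLD_UV = 75.0                            # detection threshold in microvolts
WINDOW_MS = (-200, 500)                        # interval relative to stimulus onset


def common_average_reference(data):
    """Subtract the across-channel mean from every channel at each sample.

    data: dict mapping channel name -> list of samples (hypothetical layout).
    """
    names = list(data)
    n_samples = len(data[names[0]])
    means = [sum(data[ch][t] for ch in names) / len(names) for t in range(n_samples)]
    return {ch: [data[ch][t] - means[t] for t in range(n_samples)] for ch in names}


def has_artifact(data, onset_sample):
    """True if any frontal channel exceeds the threshold inside the window.

    Assumption: the threshold applies to the absolute amplitude.
    """
    start = max(onset_sample + WINDOW_MS[0] * FS_HZ // 1000, 0)
    stop = onset_sample + WINDOW_MS[1] * FS_HZ // 1000
    for ch in FRONTAL:
        if ch in data and any(abs(v) > THRESHOLD_UV for v in data[ch][start:stop + 1]):
            return True
    return False
```

A trial flagged by `has_artifact` would then be passed to whatever correction step is in use (PCA in this study) rather than being analyzed as is.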

The images were separated according to their valence (positive or negative) and the accompanying music (positive or negative).

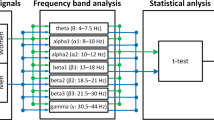

Statistical Analyses

We studied topographic changes in EEG activity [13–16] with the help of Curry 7 (Compumedics, Charlotte, NC, USA). We considered the entire time course and the whole pattern of activation across the scalp by testing the total field power from all electrodes (see [17] for additional description), since this method is able to detect not only differences in amplitude but also differences in the underlying sources of activity.
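As an illustration of the field power measure mentioned above, the sketch below computes the global field power described in [17], that is, the spatial standard deviation of the scalp potentials at each time sample. It assumes that the "total field power" used here corresponds to that measure; the function names and the data layout (one list of electrode values per time sample) are hypothetical.

```python
import math


def global_field_power(scalp_map):
    """Spatial standard deviation of one scalp map (one value per electrode)."""
    n = len(scalp_map)
    mean = sum(scalp_map) / n
    return math.sqrt(sum((v - mean) ** 2 for v in scalp_map) / n)


def gfp_time_course(erp):
    """erp: list of scalp maps, one per time sample -> list of field power values."""
    return [global_field_power(scalp_map) for scalp_map in erp]
```

Because the mean across electrodes is removed before squaring, the measure does not depend on the choice of reference electrode.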

Topographical differences in EEG activity between different images were tested using a non-parametric randomization test (Topographic ANOVA or TANOVA) and a significance level of 0.01 as described elsewhere [8, 18].
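The idea behind a topographic randomization test can be sketched as follows for a single time point. This is a generic illustration, not the implementation in Curry 7: it assumes a paired design in which condition labels are randomly swapped within each subject, and it uses the global map dissimilarity between normalized grand-average maps as the test statistic. All function names and the data layout are hypothetical.

```python
import math
import random


def normalize(scalp_map):
    """Average-reference a map and scale it to unit global field power."""
    n = len(scalp_map)
    mean = sum(scalp_map) / n
    centered = [v - mean for v in scalp_map]
    gfp = math.sqrt(sum(v * v for v in centered) / n)
    return [v / gfp for v in centered] if gfp > 0 else centered


def grand_average(maps):
    """Average per-subject maps electrode by electrode."""
    return [sum(column) / len(maps) for column in zip(*maps)]


def dissimilarity(map_a, map_b):
    """Global map dissimilarity: 0 for identical topographies, 2 for inverted ones."""
    u, v = normalize(map_a), normalize(map_b)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)) / len(u))


def tanova_p_value(cond_a, cond_b, n_permutations=2000, seed=0):
    """Paired randomization test on map dissimilarity at one time point.

    cond_a, cond_b: lists of per-subject scalp maps, in the same subject order.
    """
    rng = random.Random(seed)
    observed = dissimilarity(grand_average(cond_a), grand_average(cond_b))
    count = 0
    for _ in range(n_permutations):
        perm_a, perm_b = [], []
        for a, b in zip(cond_a, cond_b):
            if rng.random() < 0.5:   # randomly swap the labels within a subject
                a, b = b, a
            perm_a.append(a)
            perm_b.append(b)
        if dissimilarity(grand_average(perm_a), grand_average(perm_b)) >= observed:
            count += 1
    return (count + 1) / (n_permutations + 1)
```

Repeating the test at every time sample yields a p-value time course, and a time point is declared significant when its p-value falls below the chosen level (0.01 in this study).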

In the significant windows we performed standardized low-resolution brain electromagnetic tomography (sLORETA) calculations [19]. sLORETA is a distributed imaging technique for brain source localization that provides smooth solutions and better localization of deep sources, with fewer localization errors, at the cost of low spatial resolution.

3 Results

Subjective Scores

The participants correctly identified the positive songs heard in each of the blocks; however, the unpleasant music did not obtain very low scores (see Fig. 2). In fact, none was scored below five.

EEG

The upper panel of Fig. 3 shows the main significant differences between positive images (score 9 or 8) presented while the participants were listening to pleasant music and images with negative valence (score 1 or 2) presented simultaneously with negative music. We found a large significant time window between 448 ms and 632 ms (p < 0.05). When the significance threshold was lowered to 0.01, the time window was reduced to 501–553 ms.

When the subjects were looking at positive images (score 9 or 8) while listening to negative music, or looking at negative images (score 1 or 2) while listening to positive music, there was also a large significant time window, between 553 ms and 692 ms (p < 0.05; see Fig. 3). When the significance threshold was lowered to 0.01, the time window was reduced to 592–618 ms.
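The way a significant window shrinks under a stricter threshold can be illustrated with a small helper that extracts runs of consecutive samples whose p-value lies below a given level. This is a sketch only: the function name, its parameters, and the toy p-values are hypothetical and are not the study's data.

```python
def significant_windows(p_values, alpha, t0_ms=0, step_ms=1):
    """Return [(start_ms, end_ms), ...] for runs of consecutive samples with p < alpha."""
    runs, start = [], None
    for i, p in enumerate(p_values):
        if p < alpha and start is None:
            start = i                      # a new run begins
        elif p >= alpha and start is not None:
            runs.append((start, i - 1))    # the run ended at the previous sample
            start = None
    if start is not None:                  # run extends to the last sample
        runs.append((start, len(p_values) - 1))
    return [(t0_ms + a * step_ms, t0_ms + b * step_ms) for a, b in runs]


# Toy p-value time course (hypothetical values, one sample per millisecond).
toy = [0.50, 0.04, 0.03, 0.008, 0.006, 0.02, 0.30]
print(significant_windows(toy, alpha=0.05))
print(significant_windows(toy, alpha=0.01))
```

With the toy values, the window obtained at the 0.01 level is contained in the one obtained at the 0.05 level, mirroring the narrowing reported above.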

sLORETA

Figure 4 shows the main results when we considered all possible source locations simultaneously by applying standardized LORETA (sLORETA). We found a left lateralization when both the visual and the auditory stimuli had positive valence, whereas there was a clear right lateralization when both visual and auditory stimuli were negative (Fig. 4). However, when we mixed positive images with negative sounds, or vice versa, there was no clear lateralization.

4 Discussion and Conclusion

Our results showed increased activity in the left hemisphere for emotions with a positive valence, whereas activity increased in the right hemisphere for emotions with a negative valence. These results support our previous studies [8] and suggest that the visual emotional valence is reinforced when it coincides with the valence of the music. Furthermore, these results agree with the valence hypothesis, which postulates a preferential engagement of the left hemisphere for positive emotions and of the right hemisphere for negative emotions [20, 21].

In addition, the significant window appeared roughly 90–105 ms later when images and music had different valences (onset at 553 ms versus 448 ms at the 0.05 level, and at 592 ms versus 501 ms at the 0.01 level). Thus, when the two stimuli are not concordant, emotional processing takes more time.

Although more studies are still needed, our results demonstrate the feasibility and usefulness of presenting simultaneously visual and auditory information to explore the temporal dynamics of human emotions.

These results could also help to set the basis for future studies of music perception and emotions. Furthermore, this approach could be useful to better understand the role of specific brain regions and their relation to specific emotional or cognitive responses.

References

Cacioppo, J.T., Berntson, G.G.: Relationship between attitudes and evaluative space: a critical review, with emphasis on the separability of positive and negative substrates. Psychol. Bull. 115(3), 401–423 (1994). doi:10.1037/0033-2909.115.3.401

Davidson, R.J., Ekman, P., Saron, C.D., Senulis, J.A., Friesen, W.V.: Approach-withdrawal and cerebral asymmetry: emotional expression and brain physiology. I. J. Pers. Soc. Psychol. 58(2), 330–341 (1990). http://www.ncbi.nlm.nih.gov/pubmed/2319445

Fanselow, M.S.: Neural organization of the defensive behavior system responsible for fear. Psychon. Bull. Rev. 1(4), 429–438 (1994). doi:10.3758/BF03210947. http://www.ncbi.nlm.nih.gov/pubmed/24203551

Royet, J.-P., Zald, D., Versace, R., Costes, N., Lavenne, F., Koenig, O., Gervais, R.: Emotional responses to pleasant and unpleasant olfactory, visual, and auditory stimuli: a positron emission tomography study. J. Neurosci. 20(20), 7752–7759 (2000)

Vink, M., Derks, J.M., Hoogendam, J.M., Hillegers, M., Kahn, R.S.: Functional differences in emotion processing during adolescence and early adulthood. NeuroImage 91, 70–76 (2014). doi:10.1016/j.neuroimage.2014.01.035. http://www.ncbi.nlm.nih.gov/pubmed/24468408, http://linkinghub.elsevier.com/retrieve/pii/S1053811914000561

Lang, P., Bradley, M., Cuthbert, B.: International affective picture system (IAPS): technical manual and affective ratings. In: NIMH Center for the Study of Emotion and Attention, pp. 39–58 (1997). doi:10.1027/0269-8803/a000147, http://www.unifesp.br/dpsicobio/adap/instructions.pdf, http://econtent.hogrefe.com/doi/abs/10.1027/0269-8803/a000147

Oldfield, R.: The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia 9(1), 97–113 (1971). doi:10.1016/0028-3932(71)90067-4. http://linkinghub.elsevier.com/retrieve/pii/0028393271900674

Murcia, M.D.G., Lopez-Gordo, M.A., Ortíz, M.J., Ferrández, J.M., Fernández, E.: Spatio-temporal dynamics of images with emotional bivalence. In: Ferrández Vicente, J.M., Álvarez-Sánchez, J.R., de la Paz López, F., Toledo-Moreo, F.J., Adeli, H. (eds.) IWINAC 2015. LNCS, vol. 9107, pp. 203–212. Springer, Cham (2015). doi:10.1007/978-3-319-18914-7_21

Sammler, D., Grigutsch, M., Fritz, T., Koelsch, S.: Music and emotion: electrophysiological correlates of the processing of pleasant and unpleasant music. Psychophysiology 44(2), 293–304 (2007). doi:10.1111/j.1469-8986.2007.00497.x. http://doi.wiley.com/10.1111/j.1469-8986.2007.00497.x

Klem, G.H., Lüders, H.O., Jasper, H.H., Elger, C.: The ten-twenty electrode system of the international federation. International federation of clinical neurophysiology. Electroencephalogr. Clin. Neurophysiol. 52(Suppl.), 3–6 (1999). http://www.ncbi.nlm.nih.gov/pubmed/10590970

Ferree, T.C., Luu, P., Russell, G.S., Tucker, D.M.: Scalp electrode impedance, infection risk, and EEG data quality. Clin. Neurophysiol. 112(3), 536–544 (2001). doi:10.1016/S1388-2457(00)00533-2

Meghdadi, A.H., Fazel-Rezai, R., Aghakhani, Y.: Detecting determinism in EEG signals using principal component analysis and surrogate data testing. In: 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, pp. 6209–6212. IEEE (2006). doi:10.1109/IEMBS.2006.260679, http://www.ncbi.nlm.nih.gov/pubmed/17946363, http://ieeexplore.ieee.org/document/4463227/

Murray, M.M., Brunet, D., Michel, C.M.: Topographic ERP analyses: a step-by-step tutorial review. Brain Topogr. 20(4), 249–264 (2008). doi:10.1007/s10548-008-0054-5

Brunet, D., Murray, M.M., Michel, C.M.: Spatiotemporal analysis of multichannel EEG: CARTOOL. Comput. Intell. Neurosci. 2011, 1–15 (2011). doi:10.1155/2011/813870

Martinovic, J., Jones, A., Christiansen, P., Rose, A.K., Hogarth, L., Field, M.: Electrophysiological responses to alcohol cues are not associated with Pavlovian-to-instrumental transfer in social drinkers. PLoS ONE 9(4), e94605 (2014). doi:10.1371/journal.pone.0094605. http://dx.plos.org/10.1371/journal.pone.0094605

Laganaro, M., Valente, A., Perret, C.: Time course of word production in fast and slow speakers: a high density ERP topographic study. NeuroImage 59(4), 3881–3888 (2012). doi:10.1016/j.neuroimage.2011.10.082. http://linkinghub.elsevier.com/retrieve/pii/S1053811911012523

Skrandies, W.: Global field power and topographic similarity. Brain Topogr. 3(1), 137–141 (1990). http://www.ncbi.nlm.nih.gov/pubmed/2094301

Rosenblad, A., Manly, B.F.J.: Randomization, Bootstrap and Monte Carlo Methods in Biology, 3rd edn. Chapman & Hall/CRC, Boca Raton (2007) 455 p. ISBN: 1-58488-541-6, Comput. Stat. 24(2), 371-372 (2009). doi:10.1007/s00180-009-0150-3

Pascual-Marqui, R.D.: Standardized low-resolution brain electromagnetic tomography (sLORETA): technical details. Methods Find. Exp. Clin. Pharmacol. 24(Suppl D), 5–12 (2002). http://www.ncbi.nlm.nih.gov/pubmed/12575463

Costa, T., Cauda, F., Crini, M., Tatu, M.-K., Celeghin, A., de Gelder, B., Tamietto, M.: Temporal and spatial neural dynamics in the perception of basic emotions from complex scenes. Soc. Cogn. Affect. Neurosci. 9(11), 1690-1703 (2014). doi:10.1093/scan/nst164. http://www.ncbi.nlm.nih.gov/pubmed/24214921, http://www.pubmedcentral.nih.gov/articlerender.fcgi?artid=PMC4221209, https://academic.oup.com/scan/article-lookup/doi/10.1093/scan/nst164

Fusar-Poli, P., Placentino, A., Carletti, F., Allen, P., Landi, P., Abbamonte, M., Barale, F., Perez, J., McGuire, P., Politi, P.L.: Laterality effect on emotional faces processing: ALE meta-analysis of evidence. Neurosci. Lett. 452(3), 262–267 (2009). doi:10.1016/j.neulet.2009.01.065

Acknowledgement

This work has been supported in part by the Spanish national research program (MAT2015-69967-C3-1), by a research grant of the Spanish Blind Organization (ONCE), by the Ministry of Education of Spain (FPU grant AP-2013/01842) and by the Séneca Foundation - Agency of Science and Technology of the Region of Murcia.

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Grima Murcia, M.D., Sorinas, J., Lopez-Gordo, M.A., Ferrández, J.M., Fernández, E. (2017). Temporal Dynamics of Human Emotions: An Study Combining Images and Music. In: Ferrández Vicente, J., Álvarez-Sánchez, J., de la Paz López, F., Toledo Moreo, J., Adeli, H. (eds) Natural and Artificial Computation for Biomedicine and Neuroscience. IWINAC 2017. Lecture Notes in Computer Science, vol 10337. Springer, Cham. https://doi.org/10.1007/978-3-319-59740-9_24

DOI: https://doi.org/10.1007/978-3-319-59740-9_24

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-59739-3

Online ISBN: 978-3-319-59740-9

eBook Packages: Computer Science, Computer Science (R0)