Abstract

Radial basis functions (RBFs) are a popular meshfree discretisation method for constructing high-order approximation spaces. They are used in various areas comprising, for example, scattered data approximation, computer graphics, machine learning, aeroelasticity and the geosciences.The approximation space is usually formed using the shifts of a fixed basis function. This simple approach makes it easy to construct approximation spaces of arbitrary smoothness and in arbitrary dimensions.Multiscale RBFs employ radial basis functions with compact support. In contrast to classical RBFs they do not only use the shifts of a fixed basis function but also vary the support radius in an orderly fashion. If done correctly, this leads to an extremely versatile and efficient approximation method.This paper will discuss recent developments concerning compactly supported radial basis functions, the basic ideas of multiscale RBFs and the principle ideas of analysing the convergence and stability of an explicit algorithm for the reconstruction of multivariate functions from scattered data. Recent results on data compression and on adaptivity are addressed.

Access provided by CONRICYT-eBooks. Download chapter PDF

Similar content being viewed by others

1 Introduction

For the past few decades, radial basis functions have been established as one of the main tools in multivariate approximation theory. They allow the user to build high-order, meshfree approximation spaces and provide an extremely flexible tool for the reconstruction of functions from scattered data, see [6, 15, 46].

The main ingredient are, of course, radial functions, though many of the results are also true for non-radial functions.

Definition 1.

A radial function is a function \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) of the form Φ(x) = ϕ( ∥ x ∥ 2), \(\mathbf{x} \in \mathbb{R}^{d}\), where \(\phi: [0,\infty ) \rightarrow \mathbb{R}\) is a univariate function and ∥ x ∥ 2 = (x 1 2 + … + x d 2)1∕2 denotes the Euclidean norm of \(\mathbf{x} \in \mathbb{R}^{d}\).

Sometimes, the univariate function ϕ in the above definition is referred to as the radial function but we will not do this here. We will however make the following general assumption.

Remark 1.

We will always assume that the univariate function \(\phi: [0,\infty ) \rightarrow \mathbb{R}\) is defined on all of \(\mathbb{R}\) by even extension, i.e. by ϕ(−r): = ϕ(r) for r > 0.

We will say that such a ϕ: [0, ∞) generates or induces a multivariate function on \(\mathbb{R}^{d}\). This induced function is simply defined to be Φ(x): = ϕ( ∥ x ∥ 2), \(\mathbf{x} \in \mathbb{R}^{d}\).

In this article, we will predominantly be dealing with radial functions \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) having a compact support, i.e. with functions for which

is bounded. In general, we will simply assume that the support of Φ is given by the unit ball. In that case, we can introduce scaled versions, i.e.

with δ > 0, which obviously have support in the ball about the origin with radius δ. Besides scaling we can also shift a scaled basis function. This allows us to define our basic approximation spaces. To this end let \(\varOmega \subseteq \mathbb{R}^{d}\) be a bounded domain and let X = {x 1, …, x N } ⊆ Ω be a set of distinct points, the data sites. Associated to such a point set are two geometric quantities, the fill distance or mesh norm h X, Ω and the separation distance q X , which are defined to be

respectively. The fill distance describes how well the scattered points X “cover” the domain Ω in the following sense. The fill distance h X, Ω gives the radius of the largest ball completely contained in Ω without a data site within its interior. The fill distance is important for understanding approximation orders. The separation distance defines the smallest distance between the two closest data sites. It becomes important when looking at stability issues.

A scaled, compactly supported kernel and a set of data sites are enough to define a first approximation space via

We will refer to this as a local approximation space since we will not deal with only one data set and one associated approximation space, but with a sequence of data sets X 1, X 2, … with mesh norms \(h_{j} = h_{X_{j},\varOmega }\), which are supposed to be monotonically decreasing. To ensure a certain uniformity in decrease, we will assume that h j+1 ≈ μ h j for some fixed μ ∈ (0, 1). For some of our results we will require that the sequence is quasi-uniform, which means that there is a constant c q such that, with \(q_{j} = q_{X_{j}}\),

Having this sequence of data sets X j , it is clear that we can build a local approximation space \(W_{j} = W_{X_{j}}\) for each X j . To this end, we will define a kernel \(\varPhi _{j}:\varOmega \times \varOmega \rightarrow \mathbb{R}\) for each level. In our application this kernel will be given by the scaled version of a fixed translation invariant radial basis function. To be more precise, we assume that there is a compactly supported function \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) with support in the unit ball B(0, 1) and that, for each level, there is a scaling parameter δ j > 0 such that we can define

If we consider y to be fixed, it follows that the function Φ j (⋅ , y) has support in B(y, δ j ), the ball with radius δ j and centre y.

As we assume that the data sets become denser and denser, we will also assume that the support radii become smaller and smaller, usually in the same way, i.e., we will assume that δ j = vh j with a constant ν > 0, which we will link to μ later on. Note that this also leads to δ j+1 ≈ μ δ j .

With the data sets and the associated kernels at hand, we can build approximation spaces of the form

representing details on level j. Thus, the approximation of our function will come from the sum of these spaces, i.e., we have to investigate the approximation power of

for n → ∞.

The rest of the paper is organised as follows. In the next section, we will discuss the relevant material on optimal recovery, reproducing kernel Hilbert spaces and Sobolev spaces. After that, we will discuss compactly supported radial basis functions. In the fourth section, we will then address multiscale interpolation and approximation.

2 Optimal Recovery and Reproducing Kernel Hilbert Spaces

2.1 The Reconstruction Problem

Our main tool in using discrete approximation spaces W X of the form (1) will be optimal recovery and interpolation, which turns out to be the same in this context. Throughout this section, we will consider only local approximation spaces \(W_{X,\varPhi _{\delta }}\). In particular, we want to keep the scaling factor δ fixed but might vary the data set X.

To understand why radial basis functions, or, more generally, positive definite functions are natural tools in multivariate approximation theory, we start with an abstract result from linear algebra.

To this end, let H be a Hilbert space and denote its dual by H ∗. Suppose Λ = {λ 1, …, λ N } ⊆ H ∗ is a set of linearly independent functionals on H and \(f_{1},\ldots,f_{N} \in \mathbb{R}\) are certain given values. Then a generalised recovery problem would ask for finding a function s ∈ H so that λ j (s) = f j , 1 ≤ j ≤ N. We will call s a generalised interpolant. Usually, there are an infinite number of possible generalised interpolants and hence there is need to distinguish one of them. Moreover, if the data contain noise, interpolation is not an appropriate way of reconstructing the unknown function f. Hence, we will also consider a method, which was originally introduced in the context of splines (see [42]).

Definition 2.

The norm-minimal generalised interpolant is the element s 0 ∈ H satisfying

Given a smoothing parameter ɛ > 0, the optimal recovery or smoothing spline is the element s ɛ ∈ H satisfying

The solutions to both problems (3) and (4) are unique. A proof of the following result can be found in [42, 46].

Theorem 1.

Let H be a Hilbert space, λ 1 ,…,λ N ∈ H ∗ linearly independent functionals with Riesz representers v j , 1,≤ j ≤ N, and let \(f_{1},\ldots,f_{N} \in \mathbb{R}\) be given. The unique solutions s 0 of (3) and s ɛ of (4) can be written as

ɛ ≥ 0, where the coefficients {α j } are the solution of the linear system

where A = (λ i (v j )) and f = (f 1 ,…,f N ) T.

Hence, the optimal recovery problem can constructively be solved once the Riesz representer of the functionals are known. For general Hilbert spaces of functions this is not an easy task. However, for reproducing kernel Hilbert spaces this becomes simple once the reproducing kernel is known.

Definition 3.

A Hilbert space H of functions \(f:\varOmega \rightarrow \mathbb{R}\) is a reproducing kernel Hilbert space if there is a kernel \(\varPhi:\varOmega \times \varOmega \rightarrow \mathbb{R}\) with

-

1.

Φ(⋅ , x) ∈ H for all x ∈ Ω,

-

2.

f(x) = 〈f, Φ(⋅ , x)〉 H for all x ∈ Ω and all f ∈ H.

In such a reproducing kernel Hilbert space, the Riesz representer of any functional λ ∈ H ∗ is simply given by applying λ to one of the arguments of the kernel, i.e. v λ = λ y Φ(⋅ , y). In this paper, we will exclusively be concerned with point evaluation functionals, i.e. λ j (f) = f(x j ). Hence, the optimal recovery takes the simple form

and the matrix A has thus entries Φ(x i , x j ).

2.2 Sobolev Spaces

The reproducing kernel Hilbert spaces we will be concerned with are Sobolev spaces. For an integer \(\sigma = k \in \mathbb{N}_{0}\) the Sobolev space H k(Ω) consists of all those functions u ∈ L 2(Ω) which have weak derivatives \(D^{\boldsymbol{\alpha }}u\) in L 2(Ω) for all \(\vert \boldsymbol{\alpha }\vert \leq k\). For non-integer σ > 0, the Sobolev space H σ(Ω) can be defined using interpolation theory, see, for example, [1].

The Sobolev embedding theorem guarantees that H σ(Ω) is a reproducing kernel Hilbert space for any σ > d∕2. However, since the reproducing kernel is uniquely determined by the inner product and also depends upon Ω in our setting, it is in general not possible to give an explicit formula for the reproducing kernel. We will circumvent this problem by two means. First of all, we will assume that we can extend our functions defined on Ω to all of \(\mathbb{R}^{d}\), see [4].

Proposition 1.

Let \(\varOmega \subseteq \mathbb{R}^{d}\) be a bounded domain with a Lipschitz boundary. Then, there is a universal extension operator \(E: H^{\sigma }(\varOmega ) \rightarrow H^{\sigma }(\mathbb{R}^{d})\) satisfying

-

1.

Ef|Ω = f

-

2.

\(\|Ef\|_{H^{\sigma }(\mathbb{R}^{d})} \leq C_{\sigma }\|f\|_{H^{\sigma }(\varOmega )}\)

for all f ∈ H σ (Ω) and all σ ≥ 0.

It is important that though the constant C σ > 0 might depend on σ, the operator E itself does not. The existence of such an operator means that we can equip H σ(Ω) with an equivalent norm \(f\mapsto \|Ef\|_{H^{\sigma }(\mathbb{R}^{d})}\) and thus we can concentrate on determining the reproducing kernel of \(H^{\sigma }(\mathbb{R}^{d})\) for σ > d∕2. This becomes particularly easy if we write the norm on \(H^{\sigma }(\mathbb{R}^{d})\) using the Fourier transform \(\widehat{f}\) of \(f \in H^{\sigma }(\mathbb{R}^{d})\), σ > d∕2 defined by

With this, we can define the norm on \(H^{\sigma }(\mathbb{R}^{d})\) to be

The reproducing kernel can then be written in translation-invariant form, i.e. it satisfies Φ(x, y) = Φ 0(x −y) with \(\varPhi _{0}: \mathbb{R}^{d} \rightarrow \mathbb{R}\) for which we will simply write Φ(x, y) = Φ(x −y) in an abuse of notation. The function \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) is then simply the inverse Fourier transform of one over the weight function, i.e.

which is a consequence of the following general result, see [46].

Proposition 2.

Suppose \(\varPhi \in L_{1}(\mathbb{R}^{d})\) has a Fourier transform satisfying

Then, Φ also defines a reproducing kernel on \(H^{\sigma }(\mathbb{R}^{d})\) with respect to the inner product defined by

The norm associated to this inner product is equivalent to the Sobolev norm (7).

This also opens the door to the construction of a variety of kernels, which are also reproducing kernels in \(H^{\sigma }(\mathbb{R}^{d})\). We will only consider compactly supported Φ’s having a Fourier transform with such a decay. However, since for t ≥ 0 and σ ≥ 0, we have

we see that the decay property (8) is equivalent to the decay property

which is slightly easier to handle in what follows. An immediate consequence of these observations is the following one.

Corollary 1.

Let \(\varPhi \in L_{1}(\mathbb{R}^{d})\) be a reproducing kernel of \(H^{\sigma }(\mathbb{R}^{d})\) , σ > d∕2, i.e. its Fourier transform satisfies (9). For δ ∈ (0,1] let Φ δ := δ −d Φ(⋅∕δ). Then, Φ δ is also a reproducing kernel of \(H^{\sigma }(\mathbb{R}^{d})\) and for every \(g \in H^{\sigma }(\mathbb{R}^{d})\) , we have the norm equivalence

Proof.

This follows immediately from \(\widehat{\varPhi _{\delta }} =\widehat{\varPhi } (\delta \cdot )\). □

It is important to note that the Hilbert space in which the scaled kernel Φ δ is the reproducing kernel is always \(H^{\sigma }(\mathbb{R}^{d})\), i.e. the space itself does not depend on the scaling parameter. However, the norm associated to the inner product in which Φ δ is the reproducing kernel does depend on δ > 0. Also the equivalence constants depend on δ. While we always have \(\|f\|_{\varPhi _{\delta }} \leq C\|f\|_{H^{\sigma }(\mathbb{R}^{d})}\) with C > 0 being independent of δ > 0, the constant in the other estimate \(\|f\|_{H^{\sigma }(\mathbb{R}^{d})} \leq C\delta ^{-\sigma }\|f\|_{\varPhi _{\delta }}\) tends to infinity with δ → 0. This is not surprising at all, since \(\|\cdot \|_{\varPhi _{\delta }}\rightarrow \|\cdot \|_{L_{2}(\mathbb{R}^{d})}\) for δ → 0 and the constant has to balance this loss of derivative in the norm.

3 Compactly Supported Radial Basis Functions

In this section we will review recent results on compactly supported radial basis functions. We are particularly interested in the construction of such basis functions and in those compactly supported RBFs which are reproducing kernels of Sobolev spaces.

Much of the general theory in this section can be found in [48]. Newer results are based on [8, 9, 22, 35, 37, 43, 50, 51].

3.1 Construction of Compactly Supported RBFs

A famous result of Bochner states that a continuous, integrable function \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) is positive definite and hence the reproducing kernel of its associated reproducing kernel Hilbert space, if and only if it possesses a non-negative, non-vanishing Fourier transform

see [46] for a proof. For a radial, positive definite function, it is well-known that the Fourier transform is also radial and can be reduced to a one-dimensional Hankel transform. This will be essential in what we now want to do. To formulate this, we need to introduce Bessel functions of the first kind.

Definition 4.

The Bessel function of the first kind of order \(\nu \in \mathbb{C}\) is defined by

This gives the desired representation in the case of a positive definite, radial function.

Theorem 2.

Suppose \(\varPhi \in L_{1}(\mathbb{R}^{d}) \cap C(\mathbb{R}^{d})\) is radial, i.e. Φ( x ) = ϕ(∥ x ∥ 2 ), \(\mathbf{x} \in \mathbb{R}^{d}\) . Then its Fourier transform \(\widehat{\varPhi }\) is also radial, i.e. \(\widehat{\varPhi }(\boldsymbol{\omega }) =\mathscr{ F}_{d}\phi (\|\boldsymbol{\omega }\|_{2})\) with

Hence, Φ is positive definite if and only if \(\mathscr{F}_{d}\phi \geq 0\) and \(\mathscr{F}_{d}\phi \not\equiv 0\).

An interesting consequence of (10) is that the operator \(\mathscr{F}_{d}\) cannot only be defined for \(d \in \mathbb{N}\) but actually for all d ∈ [0, ∞), which we will from now on do. However, the connection to a multivariate Fourier transform is, of course, only given if \(d \in \mathbb{N}\). Also, the existence of the integral has to be ensured.

Next, we introduce the key operators to construct compactly supported radial basis functions.

Definition 5.

-

1.

Let ϕ be given so that t ↦ ϕ(t)t is in L 1[0, ∞), then we define for r ≥ 0,

$$\displaystyle{(\mathscr{I}\phi )(r) =\int _{ r}^{\infty }t\phi (t)dt.}$$ -

2.

For even \(\phi \in C^{2}(\mathbb{R})\) we define for r ≥ 0,

$$\displaystyle{(\mathscr{D}\phi )(r) = -\frac{1} {r}\phi ^{{\prime}}(r).}$$

In both cases the resulting functions should be seen as even functions by even extension.

Obviously, these operators are inverse to each other in the sense that \(\mathscr{I}\mathscr{D} = I\) and \(\mathscr{D}\mathscr{I} = I\) whenever these operations are well-defined, where I denotes the identity operator.

The importance of these operators comes from the fact that they nicely work together with the Fourier transform operator \(\mathscr{F}_{d}\). We will formulate this in a moment but before that, we want to generalise them.

Definition 6.

Let α > 0 and assume that t ↦ t ϕ(t) is integrable over [0, ∞). Then, we define for r ≥ 0,

and for r < 0 the function \(\mathscr{I}_{\alpha }\phi\) is defined by even extension.

Note that we can rewrite the integral in the definition as

Hence, for α ∈ (0, 1), the singularity at t = r is integrable. Since we will be merely be concerned with continuous compactly supported functions in this paper, the operator \(\mathscr{I}_{\alpha }\) can always be applied to such a function for any α > 0.

We also see that for α = 1 we have \(\mathscr{I}_{1} =\mathscr{ I}\), i.e. both definitions coincide. Finally, we will particularly be interested in the case α = 1∕2. In this case the operator reduces to

Since we are merely interested in compactly supported functions, it is important for us to note that \(\mathscr{I}_{\alpha }\) respects a compact support in the following sense.

Lemma 1.

Assume that the even function \(\phi: \mathbb{R} \rightarrow \mathbb{R}\) has a compact support contained in the interval [−R,R]. Then, for each α > 0 the function \(\mathscr{I}_{\alpha }\phi\) has also compact support contained in [−R,R].

We are now going to extend the range of possible operators \(\mathscr{I}_{\alpha }\) by defining them also for α ≤ 0. We will do this first formally in the following definition.

Definition 7.

-

1.

We define \(\mathscr{I}_{0}\) to be the identity operator, i.e. \(\mathscr{I}_{0}\phi =\phi\) for all even functions \(\phi: \mathbb{R} \rightarrow \mathbb{R}\).

-

2.

For \(n \in \mathbb{N}\) we define \(\mathscr{I}_{-n}:=\mathscr{ D}^{n}\).

-

3.

For α > 0 we let k: = ⌈α⌉ and define \(\mathscr{I}_{-\alpha }:=\mathscr{ I}_{-k}\mathscr{I}_{k-\alpha }\).

To see to what functions ϕ we can apply these operators, we restrict ourselves to compactly supported and even functions. If such a function possesses ℓ + 1 continuous derivatives then we first note that the derivatives must satisfy ϕ (j)(r) = (−1)j ϕ (j)(−r). In particular, we see that for odd j the derivative at zero has to vanish. The Taylor expansion about the origin is thus

Applying \(\mathscr{I}_{-1} =\mathscr{ D}\) to this expansion yields

The critical term on the right-hand side behaves like

as long as ℓ ≥ 2 and like ϕ (2)(0) for ℓ = 1. Hence, for even \(\phi \in C^{2}(\mathbb{R})\) with compact support \(\mathscr{I}_{-1}\phi\) is well-defined and continuous. We can iterate this process to derive the following result.

Corollary 2.

Let α > 0 and k = ⌈α⌉. If \(\phi \in C^{2k}(\mathbb{R})\) is even and compactly supported, then \(\mathscr{I}_{-\alpha }\phi\) is well-defined, continuous and compactly supported.

However, more important to us is the following observation, which can be derived from the above corollary but also straight-forward by induction.

Corollary 3.

If \(k \in \mathbb{N}\) , then for every even \(\phi \in C(\mathbb{R})\) with compact support, \(\psi:=\mathscr{ I}_{k}\phi\) and \(\mathscr{I}_{-k}\psi\) are well-defined and satisfy

Proof.

We already know that ψ is well-defined. To see that \(\mathscr{I}_{-k}\psi\) is also well-defined, we first recall that the fundamental theorem of calculus immediately yields \(\mathscr{I}_{-1}\mathscr{I}_{1}\phi =\phi\). For k ≥ 2, we first compute

and the rest follows by induction. □

To understand the interaction of the operators \(\mathscr{I}_{\alpha }\) and \(\mathscr{F}_{d}\), we need the following relation on Bessel functions.

Lemma 2.

For μ > −1∕2 and ν > −1 we have

The proof of this lemma starts with the integral on the right-hand side. It uses the definition of the Bessel function as an infinite series, exchanges integration and summation, integrates the resulting terms and interprets the result as a Bessel function again. Details can be found in [38, Lemma 4.13].

Theorem 3.

For each d,α > 0 we have

Hence, ϕ defines a positive definite function on \(\mathbb{R}^{d+2\alpha }\) if and only if \(\mathscr{I}_{\alpha }\) defines a positive definite function on \(\mathbb{R}^{d}\).

Proof.

We compute \(\mathscr{F}_{d}\mathscr{I}_{\alpha }\) using Theorem 2 and Fubini’s theorem

After a change of variables u: = t∕s, the inner integral can be computed using Lemma 2:

Inserting this into the above formula for \(\mathscr{F}_{d}\mathscr{I}_{\alpha }\) immediately yields

□

This means particularly for the operators \(\mathscr{I} =\mathscr{ I}_{1}\) and \(\mathscr{I}_{1/2}\) the following.

Corollary 4.

-

A function ϕ induces a positive definite function on \(\mathbb{R}^{d+2}\) if and only if \(\mathscr{I}\phi\) induces a positive definite function on \(\mathbb{R}^{d}\).

-

A function ϕ induces a positive definite function on \(\mathbb{R}^{d+1}\) if and only if \(\mathscr{I}_{1/2}\phi\) induces a positive definite function on \(\mathbb{R}^{d}\).

However, (11) has another important consequence. If \(\phi \in C(\mathbb{R})\) has compact support, then we can interpret the classical Fourier transform of ϕ also in the L 2 sense. In the L 2-sense, ϕ is uniquely determined by its Fourier transform and hence we have the following result.

Corollary 5.

Let α,β > 0. Assume that \(\phi \in C(\mathbb{R})\) is even and has compact support then we have

and

Proof.

Using (11) yields

The other expressions in the first identity have the same Fourier transform and hence all of the stated functions must be the same.

To prove the second equality, let k = ⌈α⌉. By definition, we have \(\mathscr{I}_{-\alpha } =\mathscr{ I}_{-k}\mathscr{I}_{k-\alpha }\). Hence,

where we first have used (12) and then Corollary 3. □

3.2 Wendland Functions and Generalised Wendland Functions

We will now describe a popular class of compactly supported radial basis functions, which is widely used in applications. The starting point is the cut-off power function

which is known to induce a positive definite function on \(\mathbb{R}^{d}\) if ν ≥ (d + 1)∕2, see [3, 46].

Definition 8.

The Wendland function of smoothness 2k for space dimension d is defined by

The definition shows that we will have the same function for even d = 2n and odd d = 2n + 1. We will address this issue later on. However, it is also clear from this definition that ϕ d, k restricted to [0, 1] is a polynomial of degree ⌊d∕2⌋ + 3k + 1, which can easily be computed. Some of them are, up to a constant factor, listed in Table 1.

The reason why these functions have become quite popular is summarised in the following theorem.

Theorem 4.

The functions ϕ d,k are positive definite on \(\mathbb{R}^{d}\) and are of the form

with a univariate polynomial p d,k of degree ⌊d∕2⌋ + 3k + 1. They possess continuous derivatives up to order 2k. The degree of p d,k is minimal for a given space dimension d and smoothness 2k and are up to a constant factor uniquely determined by this setting.

The above defined functions have been generalised in the following way, see [8, 9, 22, 35].

Definition 9.

Let α > 0 and ν > −1 such that α +ν > 0. The generalised Wendland functions are defined to be

Obviously, we have

so that the new functions are indeed a generalisation of the old once. However, for arbitrary α and ν, we can neither expect ψ ν, α to be positive definite on \(\mathbb{R}^{d}\) nor will ψ ν, α in general be representable by a univariate polynomial within its support.

Nonetheless, using the machinery so far, we can compute the Fourier transform of these functions as

The latter integral has intensively been studied and can be expressed using hyper-geometric series. Recall that such a series is formally defined to be

where we assume that neither of the b 1, … b q is a non-positive integer and use the Pochhammer symbol defined as (a) n : = 1 for n = 0 and (a) n : = a(a + 1)⋯(a + n − 1) = Γ(a + n)∕Γ(a) and (a) n : = (a 1) n ⋯(a p ) n . Such a series is known to converge point-wise if p ≤ q, which is the case we are interested in.

A few simple properties and the connection to the integrals we are interested in are collected in the next lemma.

Lemma 3.

-

1.

If a p = b q , then p F q = p−1 F q−1.

-

2.

For β + μ > −1 and λ > −1 we have

$$\displaystyle\begin{array}{rcl} & & \int _{0}^{r}(r - t)^{\lambda }t^{\mu }J_{\beta }(t)dt = \frac{\varGamma (\lambda +1)\varGamma (\beta +\mu + 1)} {\varGamma (\beta +1)\varGamma (\beta +\lambda +\mu +2)}2^{-\beta }r^{\beta +\lambda +\mu +1} {}\\ & & \quad \mbox{ } \cdot _{2}F_{3}\left [\frac{\beta +\mu + 1} {2}, \frac{\beta +\mu + 2} {2};\beta +1, \frac{\beta +\lambda +\mu +2} {2}, \frac{\beta +\lambda +\mu +3} {2};-r^{2}/4\right ]. {}\\ \end{array}$$ -

3.

The derivatives of the hyper-geometric functions satisfy for \(n \in \mathbb{N}\) :

$$\displaystyle\begin{array}{rcl} & & \frac{d^{n}} {dz^{n}}_{p}F_{q}[a_{1},\ldots,a_{p};b_{1},\ldots,b_{q};z] {}\\ & =& \frac{(a_{1})_{n}\cdots (a_{p})_{n}} {(b_{1})_{n}\cdots (b_{q})_{n}} _{p}F_{q}[a_{1} + n,\ldots a_{p} + n;b_{1} + n,\ldots,b_{q} + n;z]. {}\\ \end{array}$$ -

4.

For r > 0 we have

$$\displaystyle{_{1}F_{2}\left [a;a + \frac{b} {2},a + \frac{b + 1} {2};-r^{2}/4\right ] > 0}$$if a and b satisfy b ≥ 2a ≥ 0 or b ≥ a ≥ 1 or 0 ≤ a ≤ 1,b ≥ 1. The hyper-geometric series cannot be strictly positive if 0 ≤ b < a or if a = b ∈ (0,1).

Proof.

The first statement is obvious, the third statement can simply be checked by differentiation under the sum. The second statement can be found, for example, in [11, 13.1(56)]. The final statement is a consequence of the findings in [30]. □

Hence, we can continue to compute our Fourier transform. Setting μ: = d∕2 +α, λ: = ν and β: = (d − 2)∕2 +α, we see that the second statement of the lemma yields

with

This allows us to make the following general statement on the generalised Wendland functions, which comes from [8].

Theorem 5.

Let α > 0 and ν > −1 with α + ν > 0. Then, the d-variate Fourier transform of the generalised Wendland function is given by

Hence, ψ ν,α induces a positive definite function on \(\mathbb{R}^{d}\) if

Furthermore, the Fourier transform satisfies

Proof.

Setting \(a = \frac{d+1} {2} +\alpha\) and b = ν shows that (16) is equivalent to b ≥ a ≥ 1. Hence, we can conclude from Lemma 3 that ψ ν, α is positive definite. To see (17), we also use Lemma 3, which yields

where the constant expression in the last but one line can be simplified to 1 using the recurrence formula of the Γ-function. □

Note that the last property can also be expressed as \(\mathscr{I}_{-1}\mathscr{F}_{d}\psi _{\nu,\alpha } =\mathscr{ F}_{d}\psi _{\nu,\alpha +1}\). It also has the following interesting consequence.

Corollary 6.

The Fourier transform of the generalised Wendland function ψ ν,α is monotonically decreasing if

Since the classical Wendland functions ϕ d, k are a special case of the generalised Wendland functions ψ ν, α , using the parameters ν = ⌊d∕2⌋ + k + 1 and α = k, we see that (16) is satisfied, i.e. we have an alternative proof for them being positive definite. However, for d ≥ n, the function ϕ d, k induces not only a positive definite function on \(\mathbb{R}^{d}\) but also on all \(\mathbb{R}^{n}\) with n ≤ d and the monotony condition (18) becomes

or

Corollary 7.

The Wendland function ϕ d,k induces a positive definite function on \(\mathbb{R}^{n}\) with a monotonically decreasing Fourier transform for all n ≤ 2⌊d∕2⌋− 1.

In [9], there is also a discussion of the decay of the Fourier transform of the generalised Wendland functions. This generalises earlier results from [44].

Theorem 6.

The d-dimensional Fourier transform \(\mathscr{F}_{d}\psi _{\mu,\alpha }\) of the generalised Wendland function ψ ν,α with \(\nu \geq \alpha +\frac{d+1} {2}\) and α > 0 satisfies

with certain constants c 1 ,c 2 > 0 independent of r. Hence, ψ ν,α defines a reproducing kernel of

As mentioned above, this recovers and generalises the decay rate established in [44] for \(\mathscr{F}_{d}\phi _{d,k}\), showing that they are reproducing kernels for \(H^{\sigma }(\mathbb{R}^{d})\) with \(\sigma = \frac{d+1} {2} + k\). While these are integer order Sobolev spaces in odd space dimensions, they are of order integer plus a half in even dimensions. But we are now also in the situation to explicitly state compactly supported RBFs which are reproducing kernels in Sobolev spaces of integer order in even dimensions. We only have to work with α = k − 1∕2, \(k \in \mathbb{N}\), and ν ≥ k + d∕2 to have a kernel for \(H^{k+d/2}(\mathbb{R}^{d})\). These kernels have a more complicated structure than the original Wendland kernels but are still easily computable. They can best be described by introducing the elementary functions

Then, some of the functions together with the space dimension d and the order σ of the Sobolev space in which they are reproducing kernels are, up to a constant factor, given in Table 2. We have always chosen ν = k + d∕2 = σ, since the decay of the Fourier transform and hence the Sobolev space is independent of ν as long as ν ≥ α + (d + 1)∕2 = k + d∕2.

Yet another consequence is the existence of compactly supported reproducing kernels in Sobolev spaces.

Corollary 8.

Each \(H^{\sigma }(\mathbb{R}^{d})\) with σ > d∕2 possesses a compactly supported, radial reproducing kernel.

Proof.

The results of Theorem 6 show that \(H^{\sigma }(\mathbb{R}^{d})\) has such a reproducing kernel, namely ψ ν, α as long as \(\sigma =\alpha -\frac{d+1} {2} > \frac{d+1} {2}\). The case of σ ∈ (d∕2, (d + 1)∕2) cannot be handled with this technique but follows from another construction technique in [23]. □

As a matter of fact, in [23] Johnson constructed compactly supported radial functions ϕ having a d-variate Fourier transform satisfying (8) for a \(\sigma = k \in \mathbb{N}\) with k ≥ d∕4 if d is even and k ≥ max((d + 1)∕4, 2) if d is odd.

For k ∈ [d∕4, d∕2] for even d and k ∈ [(d + 1)∕4, d∕2] for odd d, this seems at first to be problematic, since it is well-known that an integrable, continuous function with a non-negative Fourier transform has automatically an integrable Fourier transform (see [46, Corollary 6.12]). The resolution of this seeming contradiction is quite simple, for such values of k the constructed ϕ in [23] is not continuous on all of \(\mathbb{R}\) any more, it might even have a pole at the origin.

When it comes to the actual computation of the Fourier transform of a generalised Wendland function, it is better to reduce the d-variate Fourier transform to a univariate one:

Specifying this to the case of ϕ d, k shows that we will naturally have a different Fourier transform for d even or d odd. The Fourier transform for odd d is easily calculated for the classical Wendland functions. As an example, we give the three-dimensional Fourier transform of

Lemma 4.

The Fourier transform of ϕ d,1 for d = 3 is given as

Clearly, we see that the Fourier transform decays like \(\mathscr{O}(r^{-6})\), so that the corresponding Sobolev space is \(H^{3}(\mathbb{R}^{3})\) as predicted. However, note that the numerical evaluation near the origin is highly ill-conditioned because of severe cancellation.

3.3 Other Compactly Supported Radial Basis Functions

Besides the functions introduced and discussed in the previous sections, there are plenty of others which can be found in the literature. There is the construction by Buhmann in [5], which produces also “one piece” radial functions, but the decay of their Fourier transform is unknown. There are the earlier constructions by Wu in [50], which are also one piece polynomials but with a higher degree than those mentioned above, having also a Fourier transform with isolated zeros. Then, there is the construction by Johnson in [2, 23], which produces functions with multiple radial pieces. Each of these pieces is poly-harmonic. The functions are again reproducing kernels in integer order Sobolev spaces.

4 Multiscale Interpolation and Approximation

We are now coming to the second main part of this paper, the discussion of multiscale approximation using compactly supported radial basis functions.

Let us first point out why we need a multiscale approach when working with compactly supported radial basis functions. We start with the following negative result (see, for example, [47, 49]).

Recall that the norm-minimal interpolant \(s = s_{0} = I_{X,\varPhi _{\delta }}\) to a function f ∈ C(Ω), which is based upon the data sites X = {x 1, …, x N } ⊆ Ω and uses the basis function \(\varPhi _{\delta }: \mathbb{R}^{d} \rightarrow \mathbb{R}\) can be written as

where the coefficients \(\boldsymbol{\alpha } \in \mathbb{R}^{N}\) are determined by the linear system \(A\boldsymbol{\alpha } = \mathbf{f}\) with the interpolation matrix A = (Φ δ (x i −x j )). From now on, we will refer to this norm-minimal interpolant just as the interpolant.

Theorem 7.

Let \(\varOmega \subseteq \mathbb{R}^{d}\) be a bounded domain with a Lipschitz boundary. Let Φ be a reproducing kernel of \(H^{\sigma }(\mathbb{R}^{d})\) with σ > d∕2, i.e. \(\widehat{\varPhi }\) satisfies (9). Let Φ δ = δ −d Φ(⋅∕δ). Finally, let X ={ x 1 ,…, x N } ⊆Ω be given. Then, there is a constant C > 0 such that the error between any f ∈ H σ (Ω) and its interpolant \(s = I_{X,\varPhi _{\delta }}f\) can be bounded by

Consequently, if we denote the interpolant to a function f from our local approximation spaces W j from (2) as s j and choose the support radii δ j proportional to the fill distances \(h_{j} = h_{X_{j},\varOmega }\), we cannot expect s j to converge to f with j → ∞. We can only expect convergence if h j ∕δ j → 0 for j → ∞, which means that we essentially lose the advantage of the compact support, since the interpolation matrices become more and more dense.

4.1 Quasi-Interpolation, Principle Shift-Invariant Spaces and the Strang-Fix Conditions

Even in the more ideal situation of infinite, regular data sites and even if the interpolation process is replaced by a best approximation process the above negative result remains true. This follows from the following short discussion on quasi-interpolation in principle shift-invariant spaces.

For a compactly supported, continuous function \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) and h > 0, we define the space

Such spaces are called principal shift-invariant spaces and they mimic our local approximation spaces in the case of the data sites being a regular grid of grid size h. These spaces have extensively been studied and their approximation properties are intrinsically connected to polynomial reproduction, i.e. to the question whether \(\pi _{m}(\mathbb{R}^{d}) \subseteq S_{h}\), or alternatively, to the so-called Strang-Fix conditions.

Definition 10.

An integrable function \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) satisfies the Strang-Fix conditions of order m if \(\widehat{\varPhi }(0)\neq 0\) and \(D^{\boldsymbol{\beta }}\widehat{\varPhi }(2\pi \boldsymbol{\alpha }) = 0\) for all \(\boldsymbol{\alpha } \in \mathbb{Z}^{d}\setminus \{\mathbf{0}\}\) and \(\boldsymbol{\beta } \in \mathbb{N}_{0}^{d}\) with \(\vert \boldsymbol{\beta }\vert \leq m\).

The following result summarises these ideas. Its proof can be found in [39].

Theorem 8.

Let \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) be a compactly supported, continuous function. Then, the following statements are equivalent:

-

1.

Φ satisfies the Strang-Fix conditions of order m.

-

2.

For \(\boldsymbol{\beta } \in \mathbb{N}_{0}^{d}\) with \(\vert \boldsymbol{\beta }\vert \leq m\) the function

$$\displaystyle{\mathbf{x}\mapsto \sum _{\boldsymbol{\alpha }\in \mathbb{Z}^{d}}\boldsymbol{\alpha }^{\boldsymbol{\beta } }\varPhi (\mathbf{x} -\boldsymbol{\alpha })}$$is a polynomial from \(\pi _{\vert \boldsymbol{\alpha }\vert }(\mathbb{R}^{d})\).

-

3.

For each \(f \in H^{m+1}(\mathbb{R}^{d})\) and each h > 0 there are weights \(w_{\boldsymbol{\alpha }}^{h}\) such that as h → 0,

$$\displaystyle\begin{array}{rcl} \left \|f -\sum _{\boldsymbol{\alpha }\in \mathbb{Z}^{d}}w_{\boldsymbol{\alpha }}^{h}\varPhi (h^{-1} \cdot -\boldsymbol{\alpha })\right \|_{ H^{s}(\mathbb{R}^{d})}& \leq & C_{s}h^{m+1-s}\|f\|_{ H^{s+1}(\mathbb{R}^{d})},\qquad 0 \leq s \leq p {}\\ \sum _{\boldsymbol{\alpha }\in \mathbb{Z}^{d}}\vert w_{\boldsymbol{\alpha }}^{h}\vert ^{2}& \leq & K\|f\|_{ L_{2}(\mathbb{R}^{d})}^{2}. {}\\ \end{array}$$The constants C s and K are independent of f.

Hence, in our terminology, at least if working on an infinite uniform grid, we could get away with just one of the approximation spaces \(S_{h} = W_{h\mathbb{Z}^{d}}\) if our compactly supported function was satisfying the Strang-Fix conditions.

However, as we will see now, if the kernel is in addition radial, it will not satisfy the Strang-Fix conditions. To prove this negative result, which goes back to Wu [51], we need two auxiliary results.

The first one is concerned with a question about the density of functions of the form exp(imx) in the continuous functions. It was first stated by Pólya as a problem in 1931 ([34]) and then solved by Szász in 1933 ([40]).

Lemma 5 (Pólya).

Let the real numbers m 1 ,m 2 ,⋯ have the properties 0 < m 1 < m 2 < ⋯ and

Furthermore, let f be continuous in the closed interval [a,b]. Then it will follow from

that f vanishes identically on [a,b].

The second auxiliary result comes from number theory. It deals with the question which natural numbers can be represented as the sum of two squares, see, for example, [24].

Lemma 6.

Let a n be the nth natural number which can be expressed as a sum of two integer squares. There are constants c 1 ,c 2 > 0 and \(n_{0} \in \mathbb{N}\) such that

Using the operators introduced in Section 3.1, we are now able to show that there are no compactly supported radial functions, which satisfy the Strang-Fix conditions in dimensions larger than one.

Theorem 9.

For d ≥ 2, there is no non-vanishing, continuous, radial, compactly supported function \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) , which satisfies the Strang-Fix conditions.

Proof.

Assume that \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) is such a function, i.e. Φ = ϕ( ∥ ⋅ ∥ 2) with an even, compactly supported and continuous function \(\phi: \mathbb{R} \rightarrow \mathbb{R}\). Then, its Fourier transform is given by

with a new compactly supported, continuous function \(\psi:=\mathscr{ I}_{\frac{d-1} {2} }\phi\). If Φ would satisfy the Strang-Fix condition, then we must therefore have

Next, let a n be the nth natural number, which can be represented by two squared integers. Since d ≥ 2, for each such a n we can therefore pick an \(\boldsymbol{\alpha }_{n} \in \mathbb{Z}^{d}\setminus \{\mathbf{0}\}\) such that \(a_{n} =\| \boldsymbol{\alpha }_{n}\|_{2}^{2}\). Thus, if we define \(m_{n}:= 2\pi \|\boldsymbol{\alpha }_{n}\|_{2} = 2\pi \sqrt{a_{n}}\), then Lemma 6 tells us that

This particularly means that \(\liminf _{n\rightarrow \infty }(n/m_{n}) = \infty \) and hence that according to Lemma 5, we must have that \(\psi =\mathscr{ I}_{(d-1)/2}\phi \equiv 0\). But from (13) we can finally conclude that \(\phi =\mathscr{ I}_{-(d-1)/2}\psi = 0\). □

4.2 Multilevel Interpolation

In this section, we want to discuss and analyse the simplest case of a multilevel algorithm, which produces a global approximant from the space V n . Let us recall the general setting. We assume that we have a sequence of increasingly finer and finer finite point sets

and a decreasing sequence of support radii

Then, using a compactly supported RBF \(\varPhi: \mathbb{R}^{d} \rightarrow \mathbb{R}\) and its scaled versions

we build, as mentioned in the introduction, local approximation spaces

and global approximation spaces

In this situation, the simplest possible algorithm to compute an approximation f n ∈ V n to a function f ∈ H is a residual correction scheme as described in Algorithm 1.

Algorithm 1: Multilevel Approximation

We will now analyse the approximation properties of this algorithm. To this end, we need a general sampling inequality. The following result comes from [32].

Lemma 7.

Let \(\varOmega \subseteq \mathbb{R}^{d}\) be a bounded domain with Lipschitz boundary. Let σ > d∕2. Let X ⊆Ω be a finite point set with sufficiently small mesh norm h X,Ω . Then, there is a constant C > 0, independent of X, such that for all f ∈ H σ (Ω) vanishing on X, we have

for 0 ≤μ ≤σ.

Using \(s_{j} = I_{X_{j},\varPhi _{j}}e_{j-1}\) as the interpolant to e j−1 on X j with kernel Φ j , we have the following theorem.

Theorem 10.

Let \(\varOmega \subseteq \mathbb{R}^{d}\) be a bounded domain with Lipschitz boundary. Let X 1 ,X 2 ,… be a sequence of point sets in Ω with mesh norms h 1 ,h 2 ,… satisfying h j+1 = μh j for j = 1,2,… with fixed μ ∈ (0,1) and h 1 = μ sufficiently small. Let Φ be a kernel generating \(H^{\sigma }(\mathbb{R}^{d})\) , i.e. satisfying (9) and let Φ j be defined by (20) with scale factor δ j = vh j . Let the target function f belong to H σ (Ω). Then, there exists a constant C 1 > 0 such that

and hence there exists a constant C > 0 such that

provided that μv ≥γ > 0 with a constant γ > 0. Thus there are constants μ 0 ∈ (0,1) and ν 0 > 1 such that the multiscale approximation f n converges linearly to f in the L 2 norm for all μ ≤μ 0 and ν ≥ν 0 with μν ≥γ.

Proof.

The proof of this theorem can be found in [49]. We will thus only review its critical steps. The first, important auxiliary observation is that the interpolant at X j to e j−1 is the same as the interpolant to Ee j−1 since both functions coincide on X j ⊆ Ω. Here, E denotes the extension operator from Proposition 1. From this, it follows that

where we have used Lemma 1 and the fact that the interpolant is norm-minimal with respect to the Φ j -norm.

Then, we have

with

Obviously, using Plancharel’s theorem, the properties of the extension operator and (19), we see that the first integral can be bounded by

Similarly, for the second integral, we can use (24) to derive the bound

Piecing these bounds together gives estimate (23). The rest then more or less follows by applying (23) iteratively and the following observation. Since e n = f − f n vanishes on X n , we have by Lemma 7 and Lemma 1 that

since \(h_{n}/\delta _{n+1} = h_{n}/(\mathit{v}h_{n+1}) \leq = \frac{1} {\nu \mu } \leq \frac{1} {\gamma }\). □

Though we cannot directly determine μ 0 and ν 0, equation (23) gives some insight into the influence of the two critical parameters μ and ν. On the one hand, the parameter μ determines how much we have to refine our data set from level to level. Hence, the smaller μ the more points we have to use in the next level.

On the other hand, the parameter ν determines the relation between the support radius and the fill distance. Here, a larger ν means that we have more non-zero entries per row in the interpolation matrix, which increases the computational cost. Nonetheless, increasing ν is less critical than decreasing μ.

But there is yet another consequence of this theorem. For simplicity, let us eliminate one of the parameters by setting ν = μ −1 so that (23) becomes

and we have convergence for all μ > 0 with C 1 μ σ < 1. However, we even have convergence if for an arbitrary σ > ɛ > 0 we have C 1 μ ɛ ≤ 1. In this case, (26) becomes

We can also revisit (25) by choosing an 0 ≤ β ≤ σ −ɛ. Then, Lemma 7 applied in the derivation of (25) yields

Using (27) n times yields the estimate

Finally, the fact that h 1 = μ and h j+1 = μ h j shows that h n = μ n so that we can rephrase the last estimate as

i.e., we have not only derived an estimate also for derivatives but have expressed the error in terms of the fill-distance of the finest data set. The exponent is almost optimal.

Corollary 9.

Under the assumptions of Theorem 10 with ν = 1∕μ and 0 ≤β ≤σ −ɛ we have the error bound

provided that μ is sufficiently small.

There are several possible extensions to this theorem. First of all, the condition h j+1 = μ h j can be relaxed to something like c μ h j ≤ h j+1 ≤ μ h j with fixed μ, c ∈ (0, 1) without altering the result. Secondly, the algorithm also converges if the target function f is rougher, say f ∈ H τ(Ω) with d∕2 < τ < σ. Details can be found in [49].

From a numerical point of view, the multilevel scheme is extremely efficient. Once, the neighbourhood information are known, i.e. once we know for each level ℓ and each data site x j (ℓ) those data sites x k (ℓ) which are relevant for the computation, i.e. those with ∥ x k (ℓ) −x j (ℓ) ∥ 2 ≤ δ ℓ , we have the following computational cost.

Corollary 10.

If the data sets X j are quasi-uniform, i.e q j ≈ h j , then we have for the involved linear systems:

-

The number of non-zero entries per row is independent of the level.

-

The condition number is independent of the level.

-

The number of steps required by a non-preconditioned CG method is independent of the level.

-

The computational cost is linear in each level.

The neighbourhood information can be assembled in \(\mathscr{O}(N_{j}\log N_{j})\) time using tree-based search structures. Finally, we also have to compute the residuals. If the data sets are nested, we can restrict ourselves to compute residuals only on the finest level. If they are not nested, then we have to do this in step j for the remaining point sets X j+1, …, X n . Again, the neighbourhood information can be collected in \(\mathscr{O}(N_{j}\log N_{j})\) time for level j. Moreover, the number of levels is, because of the uniform refinement, at most \(\mathscr{O}(\log N_{n})\).

Before we come to numerical results, we want to discuss briefly two versions of this algorithm, one which discards unnecessary coefficients and one which only considers necessary data sites.

The first one was introduced in [25] as one of two discarding strategies for multilevel algorithms on the sphere. The general idea is that after computing the local interpolant at level j, which has a representation of the form

to discard all coefficients \(\boldsymbol{\alpha }_{k}^{(j)}\) which have an absolute value smaller than a given threshold. This threshold can and should be level dependent. Since the discarding is done during each level, it was named discarding dynamically in contrast to the strategy of discarding after all steps have been computed. The Algorithm is formally given in Algorithm 2.

Algorithm 2: Multilevel Approximation with Dynamical Discarding

It is possible to show convergence of this algorithm in a very similar way as it has been done in the proof of Theorem 10. Details can be found in [25].

Theorem 11.

Let ɛ > 0 be given. Assume that the assumption of Theorem 10 are satisfied. Let ɛ j ≤ c 1 ɛδ j d∕2 with a constant c 1 > 0. Finally, let α:= C 1 (μ σ + ν −σ ). Then there is a constant C > 0 such that

Hence, after n steps the error can be bounded by

The second variation of our standard multilevel interpolation algorithm is an adaptive version. After computing the local interpolant s j on X j to e j−1, we can check the error e j = e j−1 − s j on the upcoming data set X j+1. Then, instead of interpolating this error on all of X j+1, we will actually only use those points of X j+1 on which e j has an absolute value larger than a given threshold ɛ j > 0. To describe this algorithm in more detail let us denote the initial point sets by \(\widetilde{X}_{j}\) and let us denote the adaptive point sets which are actually used by X j . Then, the algorithm can be stated as in Algorithm 3.

Algorithm 3: Adaptive Multilevel Approximation

An error analysis of this algorithm is more problematic since it would require to know e j on all of \(\widetilde{X}_{j}\) but we only know e j on \(X_{j} \subseteq \widetilde{ X}_{j}\). We could avoid this problem by creating an inner loop in which we check e j on \(\widetilde{X}_{j}\) and add those points for which e j is still too large. However, in practice this does not seem to be necessary.

4.3 Numerical Examples

We will now look at two examples. In the first example, we are interested in understanding the importance of the parameters ν, which is responsible for the relation between the support radius and the fill distance on each level, and the parameter μ, which is responsible for the refinement of the data sets from level to level.

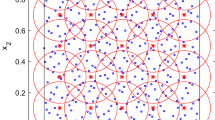

In this example, we will only be interested in varying the parameter ν. We will work on Ω = [0, 1]2 and use the Franke function, see, for example, [20] and the left half of Figure 1, as our target function. The RBF is given by ϕ(r) = (1 − r)+ 4(4r + 1), i.e. the C 2-Wendland function in \(\mathbb{R}^{2}\). We will work only on equidistant grids X j with grid size q j = 2−j−2, 1 ≤ j ≤ 8, which is also the separation distance of the data set X j and hence equivalent to its fill distance. This means that we fix the refinement factor to be μ = 1∕2. We then vary the overlap factor ν, by defining \(\delta _{j} =\widetilde{\nu } q_{j}\) and changing \(\widetilde{\nu }\). Finally, we measure the error on a fine grid of grid size q = 2−11.

A typical result can be found in Table 3, where we have chosen the overlap factor to be \(\widetilde{\nu }= 3\), which means that we have at most 25 non-zero entries per row in our matrix, independent of the level. The table contains the number of points per level, the discrete ℓ 2- and ℓ ∞ -error and the approximation order computed from comparing two successive level. Since ϕ 2, 1 is a reproducing kernel in \(H^{\sigma }(\mathbb{R}^{d})\) with σ = k + (d + 1)∕2 = 2. 5, which means we would expect an L 2-approximation order σ = 2. 5 and an L ∞ -approximation order of σ − d∕2 = 1. 5. Finally, the table also contains the number of steps an unpreconditioned CG method requires to compute an approximate solution of the linear system with a relative accuracy of 10−6 a higher accuracy does not lead to a significant change in the solutions but only to a larger number CG steps.

In Table 4 we have collected the error and convergence order estimates for increasing overlaps. It seems that the expected order of 2. 5 cannot only be matched but is actually exceeded. The number of non-zero entries per row increases to at most 373 in the case of \(\widetilde{\nu }= 11\). The example also indicates that halving the fill distance, i.e. choosing μ = 1∕2 seems to be an appropriate choice and that the convergence order can be achieved solemnly by increasing the initial support radius.

In our second example, we also want to test the other two algorithms. To this end, we keep the general set-up of our first example but change the test function to a C ∞ step function given by

which is shown in Figure 1 (right). We keep the overlap fixed at \(\widetilde{\nu }= 3\). The results of the standard multilevel algorithm are given in Table 5 and Figure 2. Clearly, convergence is much slower. In particular we have typical overshoots near the edge of the step function, at least in the first few levels.

The result of the dynamically discarding algorithm, Algorithm 2, is given in Table 6. For these results we have set the overall threshold to ɛ = 10−5 and have on level j then discarded all the coefficients with absolute value less than ɛ δ j . Clearly, the results are very similar to those of the standard algorithm, Algorithm 1. But the total number of point information used reduces from 1,402,168 in Algorithm 1 to just 31,506 in Algorithm 2, i.e. we use only 2.25% of the original points. As we can see from Figure 3, the reason for this is that only those basis functions centred at data sites near the edge of the step function or near the boundary of the domain have large coefficients.

The results of the adaptive algorithm are given in Table 7. Here we have used the thresholding strategy that we only considered points on the next level where the error of the current level is larger than 10−3 times the maximum error of the current interpolant on the next level. Again, the errors are comparable, though slightly worse. The total number of points used is now 74, 029 out of 1, 402, 168, or 5. 3%. Figure 4 shows the data sites which are actually used. The pattern is similar to the one created by the dynamically discarding algorithm, though more points are kept towards the boundary of the computational domain.

5 Other Multilevel Schemes

So far, we have discussed multilevel and multiscale methods for interpolation problems. It is straight-forward to extend the result to the optimal recovery or smoothing spline approach (4). The results of Theorem 10 and Corollary 9 remain valid if the smoothing parameter ɛ is chosen level dependent satisfying ɛ j ≤ c(h j ∕δ j )2σ, see [49].

In [41], the multilevel scheme is analysed for target functions being zero on the boundary. The centres and the support radii on each level are chosen such that the multilevel interpolant is satisfying zero boundary conditions, as well, i.e. the supports of the basis functions are always contained in the domain Ω.

In [13], a multilevel scheme for vector-valued, divergence-free functions is analysed. Here, matrix-valued kernels are employed. In this situation, it is not possible anymore to keep the ratio between fill distance and support radius constant.

The paper [29] follows closely the original papers [26, 27] and discusses convergence orders for interpolation on the sphere, similar to those derived in Corollary 9. It also deals with inverse estimates, i.e. statements that show that a certain convergence rate must lead to a certain smoothness of the target function. These are mainly based upon the results from [36].

Shortly after the introduction of compactly supported RBFs and the initial, purely numerical papers on multilevel interpolation schemes such as [18, 19] and the first theoretical results such as [21, 31], which all differ substantially from the theoretical results given in this paper, also first attempts based upon collocation for solving PDEs numerically with such a multilevel scheme have been derived, see, for example, [14, 16] and [7]. However, only recently, the numerical observations could be underpinned with theoretical proofs in [12, 28]. There have also been first papers on the solution of PDEs based upon a weak formulation and a Galerkin method, see [10, 45]. The first of these two papers is purely numerically, while the second one contains theoretical results, which seem, however, to be too optimistic.

Finally, multiscale schemes like those discussed here have been used in computer graphics (see [33]), in the context of neural networks (see [17]) and in the context of machine learning (see [52]).

References

R.A. Adams, J.J.F. Fournier, Sobolev Spaces, 2nd edn. (Academic, New York, 2003)

A. Al-Rashdan, M.J. Johnson, Minimal degree univariate piecewise polynomials with prescribed Sobolev regularity. J. Approx. Theory 164, 1–5 (2012)

R. Askey, Radial characteristic functions. Technical Report 1262, University of Wisconsin, Mathematics Research Center (1973)

S. Brenner, L. Scott, The Mathematical Theory of Finite Element Methods, 3rd edn. (Springer, New York, 1994)

M.D. Buhmann, Radial functions on compact support. Proc. Edinb. Math. Soc. 41, 33–46 (1998)

M.D. Buhmann, Radial Basis Functions. Cambridge Monographs on Applied and Computational Mathematics (Cambridge University Press, Cambridge, 2003)

C.S. Chen, M. Ganesh, M.A. Golberg, A.H.-D. Cheng, Multilevel compact radial functions based computational schemes for some elliptic problems. Comput. Math. Appl. 43, 359–378 (2002)

A. Chernih, Multiscale Wendland radial basis functions and applications to solving partial differential equations. PhD thesis, University of New South Wales, Sydney (2013)

A. Chernih, S. Hubbert, Closed form representations and properties of the generalised Wendland functions. J. Approx. Theory 177, 17–33 (2014)

A. Chernih, Q.T. Le Gia, Multiscale methods with compactly supported radial basis functions for Galerkin approximation of elliptic PDEs. IMA J. Numer. Anal. 34, 569–591 (2014)

A. Erdélyi, W. Magnus, F. Oberhettinger, F.G. Tricomi, Tables of Integral Transforms, volume I and II. (McGraw-Hill, New York, 1954)

P. Farrell, H. Wendland, RBF multiscale collocation for second order elliptic boundary value problems. SIAM J. Numer. Anal. 51, 2403–2425 (2013)

P. Farrell, K. Gillow, H. Wendland, Multilevel interpolation of divergence-free vector fields. IMA J. Numer. Anal. 37, 332–353 (2017)

G.E. Fasshauer, Solving differential equations with radial basis functions: multilevel methods and smoothing. Adv. Comput. Math. 11, 139–159 (1999)

G. Fasshauer, Meshfree Approximation Methods with MATLAB (World Scientific Publishers, Singapore, 2007)

G.E. Fasshauer, J.W. Jerome, Multistep approximation algorithms: improved convergence rates through postconditioning with smoothing kernels. Adv. Comput. Math. 10, 1–27 (1999)

S. Ferrari, M. Maggioni, N.A. Borhese, Multiscale approximation with hierarchical radial basis functions networks. IEEE Trans. Neural Netw. 15, 178–188 (2004)

M.S. Floater, A. Iske, Multistep scattered data interpolation using compactly supported radial basis functions. J. Comput. Appl. Math. 73, 65–78 (1996)

M.S. Floater, A. Iske, Thinning and approximation of large sets of scattered data, in Advanced Topics in Multivariate Approximation, ed. by F. Fontanella, K. Jetter, P.-J. Laurent (World Scientific, Singapore, 1996), pp. 87–96

R. Franke, Scattered data interpolation: tests of some methods. Math. Comput. 38, 181–200 (1982)

S.J. Hales, J. Levesley, Error estimates for multilevel approximation using polyharmonic splines. Numer. Algorithms 30, 1–10 (2002)

S. Hubbert, Closed form representations for a class of compactly supported radial basis functions. Adv. Comput. Math. 36, 115–136 (2012)

M.J. Johnson, Compactly supported, piecewise polyharmonic radial functions with prescribed regularity. Constr. Approx. 35, 201–223 (2012)

H. Koch, H. Pieper, Zahlentheorie: ausgewählte Methoden und Ergebnisse. VEB Deutscher Verlag der Wissenschaften, Berlin (1976)

Q.T. Le Gia, H. Wendland, Data compression on the sphere using multiscale radial basis functions. Adv. Comput. Math. 40, 923–943 (2014)

Q.T. Le Gia, I. Sloan, H. Wendland, Multiscale analysis in Sobolev spaces on the sphere. SIAM J. Numer. Anal. 48, 2065–2090 (2010)

Q.T. Le Gia, I. Sloan, H. Wendland, Multiscale approximation for functions in arbitrary Sobolev spaces by scaled radial basis functions on the unit sphere. Appl. Comput. Harmon. Anal. 32, 401–412 (2012)

Q.T. Le Gia, I. Sloan, H. Wendland, Multiscale RBF collocation for solving PDEs on spheres. Numer. Math. 121, 99–125 (2012)

M. Li, F. Cao, Multiscale interpolation on the sphere: Convergence rate and inverse theorem. Appl. Math Comput. 263, 134–150 (2015)

D.S. Moak, Completely monotonic functions of the form s −b(s 2 + 1)−a. Rocky Mt. J. Math. 17, 719–725 (1987)

F.J. Narcowich, N. Sivakumar, J.D. Ward, On condition numbers associated with radial-function interpolation. J. Math. Anal. Appl. 186, 457–485 (1994)

F.J. Narcowich, J.D. Ward, H. Wendland, Sobolev error estimates and a Bernstein inequality for scattered data interpolation via radial basis functions. Constr. Approx. 24, 175–186 (2006)

Y. Ohtake, A. Belyaev, M. Alexa, G. Turk, H.-P. Seidel, Multi-level partition of unity implicits. ACM Trans. Graphics 22, 463–470 (2003)

G. Pólya, Aufgabe 108: Ein Bruchteil des Fourierschen Funktionensystems. Jahresbericht der deutschen Mathematiker Vereinigung, 40, 81 (1931)

R. Schaback, The missing Wendland functions. Adv. Comput. Math. 34, 67–81 (2011)

R. Schaback, H. Wendland, Inverse and saturation theorems for radial basis function interpolation. Math. Comput. 71, 669–681 (2002)

R. Schaback, Z. Wu, Operators on radial functions. J. Comput. Appl. Math. 73, 257–270 (1996)

E.M. Stein, G. Weiss, Fourier Analysis in Euclidean Spaces (Princeton University Press, Princeton, NJ, 1971)

G. Strang, G. Fix, A Fourier analysis of the finite element variational method, in Constructive Aspects of Functional Analysis, ed. by G. Geymonat. C.I.M.E. II, Ciclo 1971 (Springer, Berlin, 1973), pp. 793–840

O. Szász, Lösung zu Aufgabe 108: Ein Bruchteil des Fourierschen Funktionensystems. Jahresbericht der deutschen Mathematiker Vereinigung 43 Part 2, 20–23 (1933)

A. Townsend, H. Wendland, Multiscale analysis in sobolev spaces on bounded domains with zero boundary values. IMA J. Numer. Anal. 33, 1095–1114 (2013)

G. Wahba, Spline Models for Observational Data. CBMS-NSF, Regional Conference Series in Applied Mathematics (SIAM, Philadelphia, 1990)

H. Wendland, Piecewise polynomial, positive definite and compactly supported radial functions of minimal degree. Adv. Comput. Math. 4, 389–396 (1995)

H. Wendland, Error estimates for interpolation by compactly supported radial basis functions of minimal degree. J. Approx. Theory 93, 258–272 (1998)

H. Wendland, Numerical solutions of variational problems by radial basis functions. in Approximation Theory IX, Volume 2: Computational Aspects, ed. by C.K. Chui, L.L. Schumaker (Vanderbilt University Press, Nashville, 1998), pp. 361–368

H. Wendland, Scattered Data Approximation. Cambridge Monographs on Applied and Computational Mathematics (Cambridge University Press, Cambridge, 2005)

H. Wendland, Computational aspects of radial basis function approximation, in Topics in Multivariate Approximation and Interpolation, ed. by K. Jetter, M.D. Buhmann, W. Haussmann, R. Schaback, J. Stöckler. Studies in Computational Mathematics, vol. 12 (Elsevier, Amsterdam, 2006), pp. 231–256

H. Wendland, Divergence-free kernel methods for approximating the Stokes problem. SIAM J. Numer. Anal. 47, 3158–3179 (2009)

H. Wendland, Multiscale analysis in Sobolev spaces on bounded domains. Numer. Math. 116, 493–517 (2010)

Z. Wu, Compactly supported positive definite radial functions. Adv. Comput. Math. 4, 283–292 (1995)

Z. Wu, Compactly supported radial functions and the Strang-Fix condition. Appl. Math. Comput. 84, 115–124 (1997)

B. Xu, S. Lu, M. Zhong, Multiscale support vector regression method in Sobolev spaces on bounded domains. Appl. Anal. 94, 548–569 (2015)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Wendland, H. (2017). Multiscale Radial Basis Functions. In: Pesenson, I., Le Gia, Q., Mayeli, A., Mhaskar, H., Zhou, DX. (eds) Frames and Other Bases in Abstract and Function Spaces. Applied and Numerical Harmonic Analysis. Birkhäuser, Cham. https://doi.org/10.1007/978-3-319-55550-8_12

Download citation

DOI: https://doi.org/10.1007/978-3-319-55550-8_12

Published:

Publisher Name: Birkhäuser, Cham

Print ISBN: 978-3-319-55549-2

Online ISBN: 978-3-319-55550-8

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)