Abstract

In this chapter, two studies are presented that investigate aspects of the cognitive potential exploitation hypothesis. This hypothesis states that students in Germany have cognitive potentials they do not use when solving subject-specific problems but that they do use when solving cross-curricular problems. The hypothesis has been used to explain why students in Germany performed relatively well on cross-curricular problem solving but relatively weakly on mathematical problem solving in the Programme for International Student Assessment (PISA) 2003. Our main research question in this chapter is: Can specific aspects of cross-curricular problem-solving competence (that is, conditional knowledge, procedural knowledge, and planning skills) be taught, and if so, does training in this area also transfer to mathematical problem solving? We investigated this question in a computer-based training experiment and a field-experimental training study. The results showed only limited effects in the laboratory experiment, whereas an interaction effect of treatment and prior problem-solving competence in the field experiment indicated positive effects of training, as well as transfer to mathematical problem solving, for low-achieving problem solvers. The results are discussed from a theoretical and a pedagogical perspective.

1 Introduction

Today, lifelong learning seems essential for keeping up with the rapidly changing demands of modern society. Therefore, general competencies with a broad scope, such as problem solving (Klieme 2004), are becoming more and more important. The development of problem solving as a subject-specific competence is a crucial goal addressed in the educational standards of various subject areas (e.g., Blum et al. 2006; AAAS 1993; NCTM 2000). However, problem solving is seen not only as a subject-specific competence, but also as a cross-curricular competence: that is, an important prerequisite for successful future learning in school and beyond (OECD 2004b, 2013; cf. also Levy and Murnane 2005). Given its crucial importance, both as a subject-specific and as a cross-curricular competence, problem solving has become a focus in large-scale assessments like the Programme for International Student Assessment (PISA; e.g., OECD 2004b, 2013).

The starting point for this chapter was the set of results from PISA 2003, showing that students in Germany achieved only average results in mathematics, science, and reading, while their results in problem solving were above the OECD average. According to the OECD report on problem solving in PISA 2003, this discrepancy has been interpreted in terms of a cognitive potential exploitation hypothesis, which suggests that students in Germany possess generic skills or cognitive potentials that might not be fully exploited in subject-specific instruction at school (OECD 2004b; cf. also Leutner et al. 2004). While the present chapter focuses on cross-curricular problem solving and mathematical problem-solving competence, the cognitive potential exploitation hypothesis also assumes unused cognitive potentials in science education (Rumann et al. 2010).

On the basis of the results of PISA 2003, and further theoretical and empirical arguments outlined below, two studies that investigate aspects of the cognitive potential exploitation hypothesis are presented. In a laboratory experiment and in a field experiment, components of problem solving were taught, and the subsequent effects on mathematical problem solving and its components were investigated (see Note 1).

2 Theoretical Framework

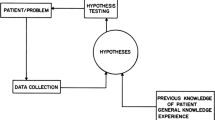

Research on problem solving has a long tradition in the comparatively young history of psychology. Its roots lie in research conducted in Gestalt psychology and the psychology of thinking in the first half of the twentieth century (e.g., Duncker 1935; Wertheimer 1945; for an overview, cf. Mayer 1992).

A problem consists of a problem situation (initial state), a more or less well-defined goal state, and a solution method that is not immediately apparent to the problem solver (e.g., Mayer 1992) because of a barrier between the initial state and the desired goal state (Dörner 1976). Solving a problem requires logically deriving and processing information. Compared to a simple exercise or task, a problem is a non-routine situation for which no standard solution methods are readily at hand for the problem solver (Mayer and Wittrock 2006). Problem solving can thus be defined as “goal-oriented thought and action in situations for which no routinized procedures are available” (Klieme et al. 2001, p. 185, our translation).

The international part of the PISA 2003 problem solving test consisted of analytical problems (e.g., finding the best route on a subway map in terms of time traveled and costs; OECD 2004b). Analytical problems can be distinguished from dynamic problems (e.g., a computer simulation of a virtual chemical laboratory where products have to be produced by combining specific chemical substances; Greiff et al. 2012). In analytical problem solving, all information needed to solve the problem is explicitly stated in the problem description or can be inferred from it or from prior knowledge; analytical problem solving can thus be seen as the reasoned application of existing knowledge (OECD 2004b). In dynamic problem solving, in contrast, most of the information required to solve the problem has to be generated in an explorative interaction with the problem situation (“learning by doing”; Wirth and Klieme 2003). In this chapter we focus on analytical problem-solving competence, given that the cognitive potential exploitation hypothesis directly addresses this type of problem solving (for research on dynamic problem solving see Funke and Greiff 2017, in this volume).

2.1 Problem Solving in PISA 2003: The Cognitive Potential Exploitation Hypothesis

Since PISA 2003 (OECD 2003, 2004b), research on problem-solving competence in the context of school and educational systems has received growing attention. In PISA 2003, cross-curricular problem-solving competence is defined as

an individual’s capacity to use cognitive processes to confront and resolve real, cross-disciplinary situations where the solution path is not immediately obvious and where the literacy domains or curricular areas that might be applicable are not within a single domain of mathematics, science or reading. (OECD 2003, p. 156).

The definition of the domain of mathematics is based on the concept of literacy (OECD 2003, p. 26):

Mathematical literacy is an individual's capacity to identify and understand the role that mathematics plays in the world, to make well-founded judgements and to use and engage with mathematics in ways that meet the needs of that individual's life as a constructive, concerned and reflective citizen.

The PISA 2003 problem solving test showed unexpected results for Germany (Leutner et al. 2004; OECD 2004b): While students in Germany only reached average results in mathematics (M = 503, SD = 103), science (M = 502, SD = 111) and reading (M = 491, SD = 109), their results in problem solving (M = 513, SD = 95) were above average compared to the OECD metric, which sets the mean to 500 and the standard deviation to 100. This difference between students’ problem-solving competence and their subject-specific competencies, for example in mathematics, is especially pronounced in Germany. Among all 29 participating countries, only Hungary and Japan showed greater differences in favor of problem solving (OECD 2004b). This large difference is especially surprising because of the high latent correlation of r = .89 of problem solving and mathematical competence in the international 2003 PISA sample (OECD 2005).

According to the OECD (2004b, p. 56; cf. also Leutner et al. 2004), this discrepancy can be interpreted in terms of a cognitive potential exploitation hypothesis: The test of cross-curricular problem-solving competence reveals students’ “generic skills that may not be fully exploited by the mathematics curriculum”. Within Germany, this unused potential seems to be especially pronounced for lower achievers (Leutner et al. 2004, 2005).

There are some arguments for this hypothesis: First, there are the conceptual similarities in terms of the theoretical process steps involved in successfully solving cross-curricular as well as mathematical problems (understanding the problem and the constraints, building a mental representation, devising and carrying out the plan to solve the problem, looking back; cf. Pólya 1945; cf. the mathematical modeling cycle). Second, the cognitive resources demanded in both domains are very similar (low demands for reading and science, high demands for reasoning; OECD 2003, cf. also Fleischer et al. in preparation).

Third, results from the German PISA 2003 repeated-measures study support the cognitive potential exploitation hypothesis with two arguments (cf. Leutner et al. 2006, for details): First, a path analysis of the longitudinal data, controlling for mathematical competence in Grade 9, showed that future mathematical competence in Grade 10 can be better predicted by analytical problem-solving competence (R² = .49) than by intelligence (R² = .41). Second, a communality analysis was conducted, decomposing the variance of mathematical competence in Grade 10 into portions that are uniquely and commonly accounted for by initial mathematical competence and problem-solving competence (and intelligence). It showed that the variance portion commonly accounted for by analytical problem solving and initial mathematics (R² = .127) is larger than the variance portion commonly accounted for by intelligence and initial mathematics (R² = .042). Additionally, the variance portions uniquely accounted for by both intelligence (R² = .006) and problem solving (R² = .005) are near zero. These findings indicate that problem-solving competence and mathematical competence consist of several partly overlapping components that contribute differently to the acquisition of future mathematical competence.
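To make the logic of such a variance decomposition concrete, the following minimal sketch treats the simplified case of only two predictors, using the standard formula for R² from pairwise correlations. The function name and the example correlations are illustrative assumptions and are not taken from the PISA data; the analysis reported above involved three predictors, so this sketch does not reproduce it.

```python
def commonality_two_predictors(r_y1, r_y2, r_12):
    """Split the R^2 of y ~ x1 + x2 into unique and common portions.

    r_y1, r_y2: correlations of the criterion with predictors 1 and 2.
    r_12: correlation between the two predictors.
    """
    if abs(r_12) >= 1.0:
        raise ValueError("predictors must not be perfectly correlated")

    # R^2 of the model containing both predictors.
    r2_full = (r_y1**2 + r_y2**2 - 2.0 * r_y1 * r_y2 * r_12) / (1.0 - r_12**2)

    # Unique portion: what a predictor adds beyond the other one.
    unique_1 = r2_full - r_y2**2
    unique_2 = r2_full - r_y1**2

    # Common portion: variance that both predictors account for jointly.
    common = r_y1**2 + r_y2**2 - r2_full

    return {
        "r2_full": r2_full,
        "unique_1": unique_1,
        "unique_2": unique_2,
        "common": common,
    }


# Hypothetical correlations, chosen only for illustration.
parts = commonality_two_predictors(r_y1=0.6, r_y2=0.5, r_12=0.4)
print(parts)
```

By construction, the two unique portions and the common portion add up to the R² of the full model, which is the property that makes statements such as "commonly accounted for" interpretable.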

2.2 Components of Problem-Solving Competence

Theoretically, several components of problem-solving competence can be distinguished: For example, knowledge of concepts, procedural knowledge, conditional knowledge, general problem solving strategies, and self-regulatory skills such as planning, monitoring and evaluation. We briefly describe the planning, procedural knowledge and conditional knowledge components, which are the focus of our study.

Planning, as an aspect of general problem solving competence (Davidson et al. 1994), is considered to be of crucial importance in school, work and everyday settings (Dreher and Oerter 1987; Lezak 1995). Planning can be defined as “any hierarchical process in the organism that can control the order in which a sequence of operations is to be performed” (Miller et al. 1960, p. 16). Planning is one of the first steps in models of mathematical problem solving (e.g., Pólya 1945). Procedural knowledge, “knowing how”, can be defined as the knowledge of operators to change the problem state and the ability to realize a cognitive operation (Süß 1996). In the context of the PISA problem solving test, procedural knowledge is important for dealing with unfamiliar tables or figures (such as flow-charts). Conditional knowledge (“knowing when and why”; Paris et al. 1983) incorporates the circumstances of the usage of operators and is related to strategy knowledge. Strategy knowledge is important in situations where more than one option is available, as in the case of problem solving. Therefore, general problem solving strategies such as schema-driven or search-based strategies (Gick 1986) and metacognitive heuristics (Bruder 2002; Pólya 1945), which can be part of a broader strategic approach (de Jong and Ferguson-Hessler 1996), are regarded as important components of problem solving as well. For more detailed taxonomies of knowledge and aspects of problem solving see, for example, Alexander et al. (1991) and Stacey (2005).

The relevance of these components for the PISA 2003 problem solving scale is empirically supported by an item demand analysis (Fleischer et al. 2010) that identified planning, procedural knowledge, and conditional knowledge as important components of both cross-curricular and mathematical problem-solving competence. Furthermore, problem solving items have turned out to be more demanding than mathematics items in respect of systematic and strategic approaches, and also in relation to dealing with constraints and procedural knowledge. Mathematics items, on the other hand, have turned out to be more formalized and to require, of course, more mathematical content knowledge.

To sum up, there is evidence for a strong overlap between cross-curricular and mathematical problem-solving competence in both theoretical and empirical frames. In this chapter we focus mainly on the common components of planning, procedural knowledge and conditional knowledge.

3 Research Questions

Analytical problem-solving competence, as it was assessed in PISA 2003, consists of different components that, to some extent, require different cognitive abilities. Training in a selection of these components should have an effect on analytical problem-solving competence in general. In accordance with the cognitive potential exploitation hypothesis, and on the basis of the assumption that both cross-curricular and subject-specific problem-solving competencies share the same principal components, training in components of analytical problem-solving competence should also have transfer effects on components of mathematical problem-solving competence and therefore, on mathematical problem-solving competence in general. Against this background, we investigate the following main research questions: Can specific components of problem-solving competence be trained, and does such training transfer to mathematical problem solving? These two main questions are split up into three specific research questions:

Is it possible to train students in how to apply several important components of analytical problem-solving competence (conditional knowledge, procedural knowledge, and planning) in experimental settings (treatment check)?

Does such training improve analytical problem-solving competence in general (near transfer)?

Does transfer from analytical to mathematical problem solving occur (far transfer)?

4 Study I

In a first experimental training study, three components of analytical problem-solving competence—procedural knowledge, conditional knowledge, and planning—were taught.

We expected the experimental group to outperform a control group in all three components taught (conditional knowledge, procedural knowledge, and planning; treatment check) and on the global problem solving scale (near transfer). We further expected a positive transfer of the training to mathematical components (conditional knowledge, procedural knowledge, and planning) as well as on the global mathematics test (far transfer).

4.1 Methods

In a between-subjects design, a sample of 142 ninth grade students (44 % female; mean age = 15.04, SD = 0.84) from high and low tracks of secondary schools was randomly assigned to one of two experimental conditions.

In the experimental group, computer-based multimedia training in cross-curricular problem solving was used, with a focus on the components of procedural knowledge, conditional knowledge, and planning (cf. Fleischer et al. 2010). The training was mainly task-based and took 45 min. Students received feedback (knowledge of result) on each task and were given a second chance to solve the items; in the case of two wrong responses to an item, they were given the solution.

Students in the control group worked on a software tutorial without any mathematical tasks, in an online geometry package.

Randomization was done computer-based within each class. Due to time limitations, only a posttest was administered; there was no pretest.

The posttest (90 min) was composed of three parts:

1. Four scales of analytical problem solving: procedural knowledge (Cronbach’s α = .87), conditional knowledge (Cronbach’s α = .86), planning (Cronbach’s α = .96), and problem-solving competence (items from the PISA 2003 problem solving test; OECD 2004b; Cronbach’s α = .65).

2. Four scales of mathematical problem solving: procedural knowledge (Cronbach’s α = .80), conditional knowledge (Cronbach’s α = .82), planning (Cronbach’s α = .97), and mathematical problem-solving competence (items from the PISA 2003 mathematics test; OECD 2004a; Cronbach’s α = .73).

3. A scale of figural reasoning (Heller and Perleth 2000) as an indicator of intelligence, used as a covariate.
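The scale reliabilities listed above are Cronbach's α coefficients. As a reminder of what this coefficient expresses, a minimal sketch of the standard formula is given below. The function name and the toy score matrices are hypothetical and unrelated to the study data.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a persons-by-items score matrix.

    scores: list of rows, one row per person, one column per item.
    """
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("alpha needs at least two items")

    # Sample variance of each item across persons.
    item_variances = [
        variance([person[i] for person in scores]) for i in range(n_items)
    ]
    # Sample variance of the total (sum) score across persons.
    total_variance = variance([sum(person) for person in scores])

    return n_items / (n_items - 1) * (1.0 - sum(item_variances) / total_variance)


# Hypothetical data: three items that rank four persons almost identically.
toy_scores = [
    [1, 1, 2],
    [2, 2, 2],
    [3, 3, 4],
    [4, 5, 4],
]
print(round(cronbach_alpha(toy_scores), 3))
```

The coefficient approaches 1 when the items covary strongly relative to their individual variances, and it drops toward 0 when the items are unrelated.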

4.2 Results

Because participants were self-paced in the training phase and in the first part of the posttest, the control group spent less time on training and more time on the first part of the posttest than did the experimental group, although pre-studies regarding time on task had indicated equal durations for the experimental and control group treatments. Consequently, the first part of the posttest (the scales on analytical problem solving) was analyzed by means of an efficiency measure (performance [score] per time [min]). MANCOVAs, controlling for school track and intelligence, with follow-up ANCOVAs, showed that the experimental group outperformed the control group on planning (η² = .073), conditional knowledge (η² = .200), and procedural knowledge (η² = .020), indicating a positive treatment check. The experimental group outperformed the control group on the global problem solving scale (η² = .026) as well, which indicates near transfer. Far transfer on the mathematical scales, however, was not found (multivariate p = .953, η² = .005). There was no interaction effect between group membership and school track (F < 1).
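The efficiency measure can be illustrated with a small sketch. It assumes that efficiency is simply the scale score divided by the minutes spent on that part of the test, as the description "performance [score] per time [min]" suggests; the function name and the numbers are hypothetical.

```python
def efficiency(score, minutes):
    """Score obtained per minute of working time."""
    if minutes <= 0:
        raise ValueError("working time must be positive")
    return score / minutes


# Two hypothetical students with the same raw score but different
# working times: the faster student has the higher efficiency.
fast_student = efficiency(score=12, minutes=20)
slow_student = efficiency(score=12, minutes=30)
print(fast_student > slow_student)
```

Dividing by time in this way keeps a group from appearing stronger merely because it spent longer on the self-paced test.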

4.3 Discussion

In terms of efficiency, positive effects of the problem solving training on the trained components (treatment check) and on problem-solving competence in general (near transfer) were found in this first experimental training study. Thus, there is evidence that the trained components (i.e., procedural knowledge, conditional knowledge, and planning) are indeed relevant to analytical problem solving. However, no transfer of the training to the mathematical scales (i.e., far transfer) was found. Considering these results, the question arises as to whether the PISA 2003 test scales are sensitive enough to detect short-term training effects. Thus, in Study II we implemented an extended problem-solving training with a longitudinal design, additional transfer cues and prompts to enhance transfer to mathematics.

5 Study II

Study II focuses on an extended field-experimental training program in a school setting, including—as compared to Study I—more time for training, a broader variety of analytic problem solving tasks, and metacognitive support. The training aimed at fostering the joint components of problem solving and mathematical competence (i.e., planning, conditional knowledge, and procedural knowledge). In this study, we selected planning to be tested as a means of treatment check. We expected the experimental group to outperform the control group on a planning test (treatment check) and on a global problem solving test (near transfer). We further expected a positive transfer of the training on a global mathematics test (far transfer).

5.1 Methods

One hundred and seventy-three students from six classes (Grade 9) of a German comprehensive school participated in the study (60 % female; mean age = 14.79, SD = 0.68) as part of their regular school lessons. The students in each class were randomly assigned to either the experimental or control group and were trained in separate classrooms in a weekly training session of 90 min. Including pretest, holidays, and posttest, the training period lasted 15 weeks (cf. Table 19.1).

The experimental group (EG) received broad training in problem solving with a focus on planning, procedural knowledge, conditional knowledge, and metacognitive heuristics (Table 19.1; cf. Buchwald 2015, for details). Due to the limited test time, only planning competence was tested.

Planning skills were taught through Planning Competence Training (PCT; Arling and Spijkers 2013; cf. also Arling 2006). In order to conduct the PCT in the school setting, the original training was modified in two ways (see Note 2): First, students completed the training in teamwork (groups of two), not individually. Second, the training phase, consisting of two planning sessions with scheduling problems (i.e., planning a tour with many constraints in terms of dates and money), was complemented with additional reflection exercises (e.g., thinking about transfer of the in-tray working process to other activities).

After the PCT was finished, a variety of cross-curricular problem solving tasks were used for further problem solving training (e.g., the water jug problem, the missionaries and cannibals problem, Sudoku, dropping an egg without breaking it). The focus was again on planning, complemented by the use of heuristics (e.g., working forward or using tables and drawings; cf. Blum et al. 2006) and metacognitive questions (Bruder 2002; King 1991) that are also important for mathematical problem solving. Conditional knowledge was trained by judging, arguing for, and discussing options and solution methods.

The control group (CG; a wait control group) received rhetoric exercises (body language exercises, exercises against stage fright, learning how to use presentation software) in areas that are important in and outside school settings.

The pretest and posttest of cross-curricular problem-solving competence consisted of items from PISA 2003 (OECD 2004b). The pretest and posttest of mathematical problem-solving competence used items from PISA 2003 (OECD 2004a) and from a German test of mathematics in Grade 9 (a state-wide administered large-scale assessment of mathematics in North Rhine-Westphalia; Leutner et al. 2007). All items were administered in a balanced incomplete test design, with rotation of domains and item clusters. Consequently, each student worked on a test booklet consisting of 16–19 items per time point. Because no student worked on the same items on pre- and posttest occasions, memory effects are excluded.

5.1.1 Data Analysis

The pre- and posttest data for cross-curricular and mathematical problem solving were scaled per domain by concurrent calibration (Kolen and Brennan 2014) with the R package TAM (Kiefer et al. 2014), in order to establish a common metric for each domain. The following results are based on weighted likelihood estimates (WLE; Warm 1989). Please note that the results are preliminary; further analyses with treatment of missing data (e.g., multiple imputation; Graham 2012) are not yet available.

The descriptive pretest results for problem solving (EAP reliability = .48, variance = 0.71) show that the EG scored lower (M = 0.03, SD = 1.26) than the CG (M = 0.55, SD = 0.93). The descriptive posttest results for problem solving (EAP reliability = .49, variance = 0.92) show similar results for EG (M = 0.08, SD = 1.17) and CG (M = 0.06, SD = 1.35). The correlation between pre- and posttest is .62.

The descriptive pretest results for mathematics (EAP reliability = .58, variance = 1.30) show similar results for EG (M = 0.20, SD = 1.10) and CG (M = 0.14, SD = 1.32). The descriptive posttest results for mathematics (EAP reliability = .54, variance = 1.12) show similar results for EG (M = 0.03, SD = 1.05) and CG (M = 0.15, SD = 1.39) as well. The correlation between pre- and posttest is .80.

5.2 Results

5.2.1 Planning

As a treatment-check for the planning component of the training, the posttest of the PCT (Arling and Spijkers 2013) was conducted in Week 7 (Table 19.1). The results show, as expected, that the experimental group outperformed the control group (Cohen’s d = 0.45; Buchwald 2015).
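For readers less familiar with the effect size reported here, the following sketch computes Cohen's d from two groups of raw scores. It assumes the pooled-standard-deviation variant; the chapter does not state which variant was used, and the function name and toy data are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


# Hypothetical scores: means differ by 1 point, pooled SD is 2.
experimental = [2, 4, 6]
control = [1, 3, 5]
print(cohens_d(experimental, control))  # 0.5
```

A value of d = 0.45, as reported above, thus means that the group means differ by a little less than half a pooled standard deviation.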

5.2.2 Problem Solving

To investigate global training effects in terms of near transfer, a linear model with group membership and problem solving at the pretest (T1) as predictors, and problem solving at the posttest (T2) as criterion, was calculated. The model (see Note 3) explained 16.3 % of the variance, with problem solving at T1 (f² = .37) and the interaction of problem solving at T1 and group membership (f² = .22) being significant effects. Thus, the results indicate an aptitude-treatment interaction (ATI). The corresponding effect plot (Fig. 19.1) reveals that the training shows near transfer for students with low problem-solving competence at T1, but not for students with high problem-solving competence at T1.

Fig. 19.1 Interaction (group × problem solving at the pretest [T1]) in the prediction of problem solving in the posttest (T2). CG = Control Group, EG = Experimental Group. The figure was generated with the R package effects (Fox 2003).

5.2.3 Mathematics

To investigate global training effects in terms of far transfer, a linear model was calculated with group membership, problem solving at T1, and mathematics at T1 as predictors, and mathematics at T2 as criterion. The model explained 29.5 % of the variance, with mathematics at T1 (f² = .55), problem solving at T1 (f² = .21), and the interaction of problem solving at T1 and group membership (f² = .23) as significant effects. Thus, the results indicate an aptitude-treatment interaction (ATI) as well. The corresponding effect plot (Fig. 19.2) reveals that the training shows far transfer for students with low problem-solving competence at T1 but not for students with high problem-solving competence at T1.

Fig. 19.2 Interaction (group × problem solving at the pretest [T1]) in the prediction of mathematics in the posttest (T2). CG = Control Group, EG = Experimental Group. The figure was generated with the R package effects (Fox 2003).
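The sequential partitioning of variance described in Note 3 can be sketched as a series of nested least-squares models. The sketch below covers the simpler problem-solving model (pretest, group membership, and their interaction) and computes Cohen's f² as the increment in R² divided by the unexplained variance of the full model. This is one common definition; the chapter does not state which formula was used, so the sketch is not expected to reproduce the reported values. All function names and data are hypothetical, and the original analyses were run in R, not in Python.

```python
def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def r_squared(predictors, y):
    """R^2 of an OLS fit of y on the predictor columns plus an intercept."""
    n = len(y)
    cols = [[1.0] * n] + [[float(v) for v in p] for p in predictors]
    k = len(cols)
    xtx = [[sum(cols[i][t] * cols[j][t] for t in range(n)) for j in range(k)]
           for i in range(k)]
    xty = [sum(cols[i][t] * y[t] for t in range(n)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    fitted = [sum(beta[i] * cols[i][t] for i in range(k)) for t in range(n)]
    mean_y = sum(y) / n
    ss_res = sum((y[t] - fitted[t]) ** 2 for t in range(n))
    ss_tot = sum((v - mean_y) ** 2 for v in y)
    return 1.0 - ss_res / ss_tot


def sequential_effects(pre, group, post):
    """Enter (1) pretest, (2) group, (3) pretest x group, in that order."""
    interaction = [p * g for p, g in zip(pre, group)]
    steps = [
        ("pretest", [pre]),
        ("group", [pre, group]),
        ("pretest x group", [pre, group, interaction]),
    ]
    r2_values = [r_squared(cols, post) for _, cols in steps]
    r2_full = r2_values[-1]
    effects, previous = {}, 0.0
    for (name, _), r2 in zip(steps, r2_values):
        delta = r2 - previous  # variance added at this step
        effects[name] = {"delta_r2": delta, "f2": delta / (1.0 - r2_full)}
        previous = r2
    return r2_full, effects


# Hypothetical data: 0 = control group, 1 = experimental group.
pre = [-1.0, -0.5, 0.0, 0.5, 1.0, -1.0, -0.5, 0.0, 0.5, 1.0]
group = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
post = [-1.1, -0.4, 0.1, 0.4, 1.0, 0.4, 0.3, 0.6, 0.5, 0.8]
r2_full, effects = sequential_effects(pre, group, post)
print(r2_full, effects)
```

Because the predictors are entered in a fixed order, each increment is credited only with variance not already explained by earlier steps, so the increments sum to the R² of the full model. An interaction increment that remains after both main effects have been entered is what indicates an aptitude-treatment interaction.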

5.3 Discussion

The results of Study II show a successful treatment-check for planning: that is, the planning component of the training program was effective. Other components of problem-solving competence (e.g., conditional knowledge) were part of the training, but for time reasons were not tested; these should be included in future studies.

For problem solving (near transfer) as well as mathematics (far transfer), an interaction of treatment and prior achievement in problem solving was found. These ATI (Aptitude Treatment Interaction) patterns of results indicate that, in terms of near and far transfer, the problem solving training is effective for students with low but not for students with high initial problem-solving competence, and also indicate a compensatory training effect with medium effect sizes. That the training is not helpful for high-achieving problem solvers might be due to motivational factors (“Why should I practice something I already know?”) or to interference effects (new procedures and strategies might interfere with pre-existing highly automated procedures and strategies).

6 General Discussion

Problem solving is one of the most demanding human activities. Therefore, learning to solve problems is a long-lasting endeavor that has to take into account a “triple alliance” of cognition, metacognition, and motivation (Short and Weissberg-Benchell 1989). Following the results of PISA 2003, the present chapter aimed at testing some aspects of the cognitive potential exploitation hypothesis. This hypothesis states that students in Germany have unused cognitive potentials available that might not be fully exploited in subject-specific instruction at school, for example, in mathematics instruction.

In two experimental studies we aimed at fostering mathematical problem-solving competence by training in cross-curricular problem-solving competence. The expectation was that training in the core components of problem-solving competence (i.e., planning, procedural and conditional knowledge, and metacognitive components) that are needed both in cross-curricular and in subject-specific problem solving should transfer to mathematical competence. In a laboratory experiment (Study I) no effects, in terms of far transfer to mathematics, were found. However, the results of a field experiment (Study II), based on an extensive long-term training program, show some evidence for the cognitive potential exploitation hypothesis: For low-achieving problem solvers the training fostered both problem-solving competence (near transfer) and mathematical competence (far transfer).

Analyzing potential effects from cross-curricular problem-solving competence to mathematical competence in experimental settings was an important step in the investigation of the cognitive potential exploitation hypothesis. Further training studies will need to focus on samples with unused cognitive potentials in order to test whether a higher exploitation of cognitive potentials could be achieved.

6.1 Limitations and Future Research

The Role of Content Knowledge

This chapter focused on common components of cross-curricular and mathematical problem solving. Therefore, the role of domain-specific content knowledge in mathematics was somewhat neglected in our studies. An alternative approach to investigating the cognitive potential exploitation hypothesis is to focus on the difference between the domains: i.e., on the mathematical content knowledge.

Participants

Concerning the results and their interpretation, one has to keep in mind that Study II was conducted at only one German comprehensive school in an urban area, as part of the regular school lessons in Grade 9. Thus, participation in the training was obligatory, which is ecologically valid for school settings, but could have had motivational effects. To test the generalizability of the results, further research is needed in other school settings (e.g., studies with other kinds of schools and in other grades, participation on a voluntary basis).

Adaptive Assessment and Training

Following the ATI effect found in Study II, future research could use a more adaptive training, or identify students who require this kind of intervention. Finding evidence of effects from cross-curricular problem solving to the mathematical domain is an important step in investigating the cognitive potential exploitation hypothesis. Further studies on this hypothesis should concentrate on possible effects for students with high unused cognitive potentials. In order to select students with unused cognitive potential in the sense of the cognitive potential exploitation hypothesis, an individual assessment of this potential is needed. This would require a more economical test with a higher reliability than the one used in our study. Adaptive testing (van der Linden and Glas 2000) could be a solution to this issue.

Problem-Solving Competence and Science

Although this chapter has dealt only with cross-curricular problem solving and mathematics competence, the cognitive potential exploitation hypothesis also assumes unused cognitive potentials for science learning. This is targeted in ongoing research with a specific focus on chemistry education (Rumann et al. 2010, RU 1437/4–3).

Problem Solving in PISA 2012

Nine years after PISA 2003, problem solving was assessed again, in PISA 2012. The results of PISA 2012 indicated that students in Germany perform above the OECD average in both problem solving and mathematics. Furthermore, mathematical competence is a little higher than expected on the basis of the problem-solving competence test (OECD 2014). Since the test focus changed from analytical or static problem solving in PISA 2003 to complex or dynamic problem solving in PISA 2012, it is not yet clear, however, whether the improvement from 2003 to 2012 can be interpreted as the result of a better exploitation of the cognitive potentials of students in Germany. Further theoretical analysis and empirical research on this question are needed.

Notes

1. Parts of this chapter are based on Fleischer et al. (in preparation) and Buchwald (2015).

2. We thank Dr. Viktoria Arling for her cooperation.

3. This and the following model were calculated using a sequential partitioning of variance approach: that is, the predictors were introduced in the following order: (1) achievement at T1, (2) group membership, and (3) achievement at T1 × group membership.
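As an illustration of what sequential partitioning of variance means, the following minimal sketch computes the share of outcome variance that each predictor explains when the predictors are entered in the order given in Note 3. It is not our analysis code. The function name, the use of variance shares (increments in R²) as the reported quantity, and the toy data are assumptions made for this example. Each predictor is orthogonalized against the intercept and all earlier predictors, so its share reflects only what it adds beyond them.

```python
def _dot(u, v):
    """Inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def sequential_r2(t1, group, y):
    """Sequential (order-dependent) variance shares for T1, group, and T1 x group.

    Assumes the predictors are not perfectly collinear; otherwise an
    orthogonalized predictor would be all zeros and the division would fail.
    """
    n = len(y)
    predictors = [
        ("T1 achievement", [float(v) for v in t1]),
        ("group", [float(g) for g in group]),
        ("T1 x group", [float(v) * float(g) for v, g in zip(t1, group)]),
    ]
    y_mean = sum(y) / n
    ss_total = sum((v - y_mean) ** 2 for v in y)

    basis = [[1.0] * n]  # start with the intercept
    shares = {}
    for name, x in predictors:
        q = list(x)
        # Remove everything already explained by earlier terms.
        for b in basis:
            coef = _dot(q, b) / _dot(b, b)
            q = [qi - coef * bi for qi, bi in zip(q, b)]
        basis.append(q)
        # Sum of squares uniquely added by this predictor, as a share of the total.
        shares[name] = _dot(y, q) ** 2 / _dot(q, q) / ss_total
    return shares


# Hypothetical toy data: eight students, two groups (0 = control, 1 = training).
t1_scores = [1, 2, 3, 4, 5, 6, 7, 8]
groups = [0, 1, 0, 1, 0, 1, 0, 1]
outcome = [2 * a + 3 * g + a * g for a, g in zip(t1_scores, groups)]
shares = sequential_r2(t1_scores, groups, outcome)
```

Because the shares depend on the order of entry, the interaction term is credited only with variance that neither prior achievement nor group membership has already explained, which is the conservative reading intended for the interaction effect.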

References

AAAS (American Association for the Advancement of Science). (1993). Benchmarks for science literacy. New York: Oxford University Press.

Alexander, P. A., Schallert, D. L., & Hare, V. C. (1991). Coming to terms: How researchers in learning and literacy talk about knowledge. Review of Educational Research, 61, 315–343.

Arling, V. (2006). Entwicklung und Validierung eines Verfahrens zur Erfassung von Planungskompetenz in der beruflichen Rehabilitation: Der “Tour-Planer” [Development and validation of an assessment instrument of planning competence in vocational rehabilitation]. Berlin: Logos.

Arling, V., & Spijkers, W. (2013). Konzeption und Erprobung eines Konzepts zum Training von Planungskompetenz im Kontext der beruflichen Rehabilitation [Training of planning competence in vocational rehabilitation]. bwp@ Spezial 6, HT 2013. Fachtagung, 05, 1–12.

Blum, W., Drüke-Noe, C., Hartung, R., & Köller, O. (2006). Bildungsstandards Mathematik: Konkret. Sekundarstufe I: Aufgabenbeispiele, Unterrichtsanregungen, Fortbildungsideen [German educational standards in mathematics for lower secondary degree: Tasks, teaching suggestions, thoughts with respect to further education]. Berlin: Cornelsen Scriptor.

Bruder, R. (2002). Lernen, geeignete Fragen zu stellen: Heuristik im Unterricht [Learning to ask suitable questions: Heuristics in the classroom]. Mathematik Lehren, 115, 4–9.

Buchwald, F. (2015). Analytisches Problemlösen: Labor- und feldexperimentelle Untersuchungen von Aspekten der kognitiven Potenzialausschöpfungshypothese [Analytical problem solving: Laboratory and field experimental studies on aspects of the cognitive potential exploitation hypothesis] (Doctoral dissertation, Universität Duisburg-Essen, Essen, Germany).

Davidson, J. E., Deuser, R., & Sternberg, R. J. (1994). The role of metacognition in problem solving. In J. Metcalfe & A. P. Shimamura (Eds.), Metacognition: Knowing about knowing (pp. 207–226). Cambridge, MA: MIT Press.

De Jong, T., & Ferguson-Hessler, M. G. M. (1996). Types and qualities of knowledge. Educational Psychologist, 31, 105–113. doi:10.1207/s15326985ep3102_2.

Dörner, D. (1976). Problemlösen als Informationsverarbeitung [Problem solving as information processing]. Stuttgart: Kohlhammer.

Dreher, M., & Oerter, R. (1987). Action planning competencies during adolescence and early adulthood. In S. L. Friedman, E. K. Scholnick, & R. R. Cocking (Eds.), Blueprints for thinking: The role of planning in cognitive development (pp. 321–355). Cambridge: Cambridge University Press.

Duncker, K. (1935). The psychology of productive thinking. Berlin: Springer.

Fleischer, J., Wirth, J., Rumann, S., & Leutner, D. (2010). Strukturen fächerübergreifender und fachlicher Problemlösekompetenz. Analyse von Aufgabenprofilen: Projekt Problemlösen [Structures of cross-curricular and subject-related problem-solving competence. Analysis of task profiles: Project problem solving]. Zeitschrift für Pädagogik, Beiheft, 56, 239–248.

Fleischer, J., Buchwald, F., Wirth, J., Rumann, S., & Leutner, D. (in preparation). Analytical problem solving: Potentials and manifestations. In B. Csapó, J. Funke, & A. Schleicher (Eds.), The nature of problem solving. Paris: OECD.

Fox, J. (2003). Effect displays in R for generalised linear models. Journal of Statistical Software, 8(15), 1–27.

Funke, J., & Greiff, S. (2017). Dynamic problem solving: Multiple-item testing based on minimally complex systems. In D. Leutner, J. Fleischer, J. Grünkorn, & E. Klieme (Eds.), Competence assessment in education: Research, models and instruments (pp. 427–443). Berlin: Springer.

Gick, M. L. (1986). Problem-solving strategies. Educational Psychologist, 21, 99–120. doi:10.1080/00461520.1986.9653026.

Graham, J. W. (2012). Missing data: Analysis and design. Statistics for social and behavioral sciences. New York: Springer.

Greiff, S., Wüstenberg, S., & Funke, J. (2012). Dynamic problem solving: A new assessment perspective. Applied Psychological Measurement, 36, 189–213. doi:10.1177/0146621612439620.

Heller, K. A., & Perleth, C. (2000). Kognitiver Fähigkeitstest (KFT 4-12+R) [Cognitive ability test] (3rd ed.). Göttingen: Beltz.

Kiefer, T., Robitzsch, A., & Wu, M. L. (2014). TAM: Test analysis modules. R package version 1.0-2.1. Retrieved from http://CRAN.R-project.org/package=TAM

King, A. (1991). Effects of training in strategic questioning on children’s problem-solving performance. Journal of Educational Psychology, 83, 307–317. doi:10.1037/0022-0663.83.3.307.

Klieme, E. (2004). Assessment of cross-curricular problem-solving competencies. In J. H. Moskowitz & M. Stephens (Eds.), Comparing learning outcomes. International assessments and education policy (pp. 81–107). London: Routledge.

Klieme, E., Funke, J., Leutner, D., Reimann, P., & Wirth, J. (2001). Problemlösen als fächerübergreifende Kompetenz: Konzeption und erste Resultate aus einer Schulleistungsstudie [Problem solving as cross-curricular competence? Concepts and first results from an educational assessment]. Zeitschrift für Pädagogik, 47, 179–200.

Kolen, M. J., & Brennan, R. L. (2014). Test equating: Methods and practices (3rd ed.). New York: Springer.

Leutner, D., Klieme, E., Meyer, K., & Wirth, J. (2004). Problemlösen [Problem solving]. In PISA-Konsortium Deutschland (Eds.), PISA 2003. Der Bildungsstand der Jugendlichen in Deutschland: Ergebnisse des zweiten internationalen Vergleichs (pp. 147–175). Münster: Waxmann.

Leutner, D., Klieme, E., Meyer, K., & Wirth, J. (2005). Die Problemlösekompetenz in den Ländern der Bundesrepublik Deutschland [Problem-solving competence in the federal states of the federal republic of Germany]. In M. Prenzel et al. (Eds.), PISA 2003. Der zweite Vergleich der Länder in Deutschland: Was wissen und können Jugendliche? (pp. 125–146). Münster: Waxmann.

Leutner, D., Fleischer, J., & Wirth, J. (2006). Problemlösekompetenz als Prädiktor für zukünftige Kompetenz in Mathematik und in den Naturwissenschaften [Problem-solving competence as a predictor of future competencies in mathematics and science]. In PISA-Konsortium Deutschland (Ed.), PISA 2003. Untersuchungen zur Kompetenzentwicklung im Verlauf eines Schuljahres (pp. 119–137). Münster: Waxmann.

Leutner, D., Fleischer, J., Spoden, C., & Wirth, J. (2007). Landesweite Lernstandserhebungen zwischen Bildungsmonitoring und Individualdiagnostik [State-wide standardized assessments of learning between educational monitoring and individual diagnostics]. Zeitschrift für Erziehungswissenschaft, Sonderheft, 8, 149–167. doi:10.1007/978-3-531-90865-6_9.

Levy, F., & Murnane, R. J. (2005). The new division of labor: How computers are creating the next job market. Princeton: Princeton University Press.

Lezak, M. D. (1995). Neuropsychological assessment (3rd ed.). New York: Oxford University Press.

Mayer, R. E. (1992). Thinking, problem solving, cognition (2nd ed.). New York: Freeman.

Mayer, R. E., & Wittrock, M. C. (2006). Problem solving. In P. A. Alexander & P. H. Winne (Eds.), Handbook of educational psychology (pp. 287–303). Mahwah: Erlbaum.

Miller, G. A., Galanter, E., & Pribram, K. H. (1960). Plans and the structure of behavior. London: Holt, Rinehart and Winston.

NCTM (National Council of Teachers of Mathematics). (2000). Principles and standards for school mathematics. Reston: NCTM.

OECD (Organisation for Economic Co-operation and Development). (2003). The PISA 2003 assessment framework: Mathematics, reading, science and problem solving knowledge and skills. Paris: Author.

OECD (Organisation for Economic Co-operation and Development). (2004a). Learning for tomorrow’s world: First results from PISA 2003. Paris: Author.

OECD (Organisation for Economic Co-operation and Development). (2004b). Problem solving for tomorrow’s world: First measures of cross-curricular competencies from PISA 2003. Paris: Author.

OECD (Organisation for Economic Co-operation and Development). (2005). PISA 2003. Technical report. Paris: Author.

OECD (Organisation for Economic Co-operation and Development). (2013). PISA 2012 assessment and analytical framework: Mathematics, reading, science, problem solving and financial literacy. Paris: Author. doi:10.1787/9789264190511-en.

OECD (Organisation for Economic Co-operation and Development). (2014). PISA 2012 Results: Creative problem solving: Students' skills in tackling real-life problems (Volume V). Paris: Author. doi:10.1787/9789264208070-en.

Paris, S. G., Lipson, M. Y., & Wixson, K. K. (1983). Becoming a strategic reader. Contemporary Educational Psychology, 8, 293–316. doi:10.1016/0361-476X(83)90018-8.

Pólya, G. (1945). How to solve it: A new aspect of mathematical method. Princeton: Princeton University Press.

Rumann, S., Fleischer, J., Stawitz, H., Wirth, J., & Leutner, D. (2010). Vergleich von Profilen der Naturwissenschafts- und Problemlöse-Aufgaben der PISA 2003-Studie [Comparison of profiles of science and problem solving tasks in PISA 2003]. Zeitschrift für Didaktik der Naturwissenschaften, 16, 315–327.

Short, E. J., & Weissberg-Benchell, J. A. (1989). The triple alliance for learning: Cognition, metacognition, and motivation. In C. B. McCormick, G. Miller, & M. Pressley (Eds.), Cognitive strategy research: From basic research to educational application (pp. 33–63). New York: Springer.

Stacey, K. (2005). The place of problem solving in contemporary mathematics curriculum documents. Journal of Mathematical Behavior, 24, 341–350. doi:10.1016/j.jmathb.2005.09.004.

Süß, H. M. (1996). Intelligenz, Wissen und Problemlösen [Intelligence, knowledge and problem solving]. Göttingen: Hogrefe.

van der Linden, W. J., & Glas, C. A. (Eds.). (2000). Computerized adaptive testing: Theory and practice. New York: Springer.

Warm, T. A. (1989). Weighted likelihood estimation of ability in item response theory. Psychometrika, 54, 427–450. doi:10.1007/BF02294627.

Wertheimer, M. (1945). Productive thinking. New York: Harper & Row.

Wirth, J., & Klieme, E. (2003). Computer-based assessment of problem solving competence. Assessment in Education, 10, 329–345. doi:10.1080/0969594032000148172.

Acknowledgments

The preparation of this chapter was supported by grants DL 645/12–2, DL 645/12–3 and RU 1437/4–3 from the German Research Foundation (DFG) in the Priority Program “Competence Models for Assessing Individual Learning Outcomes and Evaluating Educational Processes” (SPP 1293). We thank Dr. Viktoria Arling for providing the Planning Competence Training. We would also like to thank our student research assistants and trainers (Derya Bayar, Helene Becker, Kirsten Breuer, Jennifer Chmielnik, Jan Dworatzek, Christian Fühner, Jana Goertzen, Anne Hommen, Michael Kalkowski, Julia Kobbe, Kinga Oblonczyk, Ralf Ricken, Sönke Schröder, Romina Skupin, Jana Wächter, Sabrina Windhövel, Kristina Wolferts) and all participating schools and students. We thank Julia Kobbe, Derya Bayar and Kirsten Breuer for proofreading.

Copyright information

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Buchwald, F., Fleischer, J., Rumann, S., Wirth, J., Leutner, D. (2017). Training in Components of Problem-Solving Competence: An Experimental Study of Aspects of the Cognitive Potential Exploitation Hypothesis. In: Leutner, D., Fleischer, J., Grünkorn, J., Klieme, E. (eds) Competence Assessment in Education. Methodology of Educational Measurement and Assessment. Springer, Cham. https://doi.org/10.1007/978-3-319-50030-0_19

DOI: https://doi.org/10.1007/978-3-319-50030-0_19

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-50028-7

Online ISBN: 978-3-319-50030-0

eBook Packages: Education, Education (R0)