Abstract

Computational models and simulations have evolved dramatically in the past decades, providing useful insights into neurological systems, their functions and dynamics. They have deepened our knowledge of systems ranging from the biomolecular to the neuronal network scale. In spite of these successes, Modeling and Simulation still make only a marginal contribution to the field of neuropharmacology. What may be the reasons behind this? These pages succinctly describe the current state of neuropharmacology, and provide arguments in favor of amplifying the role of Modeling and Simulation in the various phases of the drug discovery and development process for the nervous system. We provide examples illustrating how Modeling and Simulation can guide neuropharmacology. We then present a methodology for building multiscale models that reduces computational complexity while maintaining predictability levels, using computationally efficient input-output modeling. Finally, we deliver arguments in support of generalizing Modeling and Simulation in neuropharmacology to make it a cornerstone of the drug discovery and development process.

Introduction

Experimental techniques have improved remarkably in the past decades, allowing a deeper understanding of the processes that take place in the central nervous system at different spatial (spanning from biomolecular mechanisms and synapses, to neurons and networks), and temporal scales.

These notable improvements have led to an exponential increase in the amount of data acquired. They have yielded a more quantitative view of the mechanisms underlying the central nervous system’s functions and dysfunctions, and the effects of drugs. Availability of such data enables the development of computational models that simulate the brain and its changes in response to the application of exogenous compounds. This chapter gathers examples of biosimulation efforts aimed at facilitating the generation of new working hypotheses in a structured and efficient manner, and at translating the quantitative understanding gained of the brain and of its normal and pathological hallmarks into the discovery of more efficient therapies.

Scientific Problem

The Nervous System and Its Complexity

The nervous system is arguably one of the most complex organs of the body. Despite decades of relentless efforts, much remains to be learned about how it performs its wide range of tasks. Our fascination with this complex organ feeds the headlines of health and science journals, drawing the attention not only of the neuroscientific community but also of society at large.

The nervous system is afflicted by a variety of dysfunctions, with pathologies that may appear at any point from a young age (e.g. Tourette syndrome, autism) to aged adulthood (Alzheimer’s disease, Parkinson’s disease). Understanding these dysfunctions has proven to be challenging for many reasons, especially the nervous system’s highly multi-temporal and multi-hierarchical nature. An additional complexity stems from the multifactorial nature of the disease process. Indeed, even in Huntington’s disease, where a well-characterized mutation affects a single gene (a trinucleotide repeat disorder in which a repeated section of the gene exceeds its normal length), this single mutation causes a variety of changes that result in the disease’s pathological hallmarks (Fig. 1).

Therapeutics Development

Drug discovery and development (DD&D) for disorders of the central nervous system (CNS) has been plagued by a high and continuously rising attrition rate. DD&D is a risky business, especially for the nervous system, where the probability that an agent entering clinical development reaches the marketplace is below 7 %. This number is much lower than the industry average of 15 % across other therapeutic areas [1, 2]. Similarly, development and regulatory approval took an average of 6.3 and 7.5 years for cardiovascular and gastrointestinal indications respectively, versus 12 years for CNS indications [3], and was reported to reach 18 years from laboratory bench to market in 2011 [4].

These difficulties result in higher costs for CNS DD&D and undoubtedly explain why, since 2011, GSK, AstraZeneca, Novartis, Pfizer, Sanofi, Janssen and Merck have begun to downsize their CNS operations significantly.

The poor success rates highlight a dichotomy often observed in the DD&D process: drugs that have a potent effect in experimental protocols (e.g. strong affinity to the desired target, resulting in significant changes in synaptic and neuronal function or metabolism, etc.) end up having a modest or nonexistent effect, or prohibitive side effects, at the macroscopic level. This is due to several factors, including the propensity of CNS drugs to cause CNS-mediated side effects (e.g. nausea, dizziness and seizures) and the additional pharmacokinetic hurdle of the blood-brain barrier that therapeutic agents must cross. Unlike the development of a new antibiotic, where the outcome is relatively simple (the bacterium is either killed or not, within a given and often relatively short treatment window), CNS compounds lead to a wide range of effects at different time scales. Recent examples of failure include suspicions of suicidal thoughts induced by anti-obesity or smoking cessation drugs. This led the Food and Drug Administration (FDA) to announce a change in policy in 2008, mandating that drug manufacturers study the potential for suicidal tendencies during clinical trials. Compounds may even generate adverse effects. For example, some patients taking selective serotonin reuptake inhibitor (SSRI) antidepressants such as Prozac (fluoxetine), Paxil (paroxetine) or Zoloft (sertraline) reported experiencing suicidal thoughts during the initial phase of the treatment.

Necessity for a Quantitative Understanding of Mechanisms Underlying Pathology

These problems highlight our lack of quantitative understanding of CNS dynamics. Unlike antibiotics, a CNS molecule may have a very small therapeutic window in which to induce a positive outcome without generating an army of harmful or undesired side effects. These problems also underscore our limited understanding of the mechanisms underlying pathologies. Finally, the DD&D pipeline is fragmented into multiple phases. From compound identification to preclinical and clinical studies, preparations are inherently different and yield readouts that are arguably disconnected from the biological system whose behavior we ultimately try to alter. Additionally, the use of animal models may contribute to poor translatability of the observed effects of drug candidates to human patient populations [5].

These compounded factors inherently result in a limited success rate, plaguing both the pharmaceutical industry and academic laboratories. They lead to increasingly higher costs, slow down the discovery process and delay the availability of potent drugs for patients.

An FDA report published in 2004 [6] outlined the need for innovative solutions to healthcare challenges. It stressed how critical it has become to (i) integrate the data obtained in a uniform and standardized manner, while (ii) taking into account the dynamical properties inherent to biological systems. Together, these two measures will facilitate the generation of new working hypotheses in a structured and efficient manner, and translate our deepened understanding into more efficacious therapies. To this end, computational models constitute an innovative approach that may integrate up-to-date knowledge on the biological system; they may span multiple biological and temporal levels, encompassing mechanisms at the microscopic level as well as the resulting observations at the macroscopic level in a dynamic and integrated manner. They can replicate observables of function and dysfunction, the effects of drugs on their respective target(s), and their subsequent effects on neuronal function, network function and ultimately on macroscopic observables, such as those obtained with functional neuroimaging.

Computational Methods

Unifying Computational Neuroscience

Computational neuroscience has witnessed tremendous growth in the past decade. However, given the complexity of the system studied, no unified methodology or tool exists that is able to span all hierarchical biological scales. Indeed, modeling methodologies are numerous, and differ quite significantly depending on the scale of the system under investigation. We refer the reader to other readings that provide an overview of the different modeling techniques as a function of the system investigated (see [7], Chap. 9). One notable point lies in the conceptual difference that separates computational neuroscience from computational neurology. Bridging this gap will deepen our understanding of CNS function and pave the path to individualized medicine.

Computational Neurology: Linking Observed Dysfunctions, Underlying Mechanisms and Individualized Treatments

In the context of pathologies, the computational methods described above often focus on modeling the mechanisms that underlie functions and dysfunctions. Whether at the biomolecular level (e.g. downstream effects of amyloid beta accumulation observed in Alzheimer’s disease patients), neuron or network level (changes in spiking frequency and/or network-level activity), or systems level (models of brain function and its biophysics [8]), each of these computational models focuses on the pathology, and more precisely the mechanisms underlying the pathology and the consequences on the system (and its scale) of interest.

On the other hand, computational neurology and psychiatry focus primarily on the patient and their diagnosis in order to suggest efficacious therapies. Consequently, computational neurology has historically involved primarily a top-down approach that is oftentimes disconnected from the actual mechanisms underlying the pathology. Instead, it often relies on inference modeling (through database analysis, mathematical modeling, clinical algorithms) and statistical intelligence (Fig. 2a bottom).

From a methodological standpoint, the constraints of computational neurology imply unifying top-down and bottom-up approaches to link macroscopic observables with nanoscopic mechanisms. The necessary steps are summarized in Fig. 2b: (i) starting from the patient, generate measurements; (ii) these measurements provide insights into the amplitude of dysfunctions observed at the macroscopic level, and allow calibration of the parameters of a simulation platform (i.e. a virtual in silico patient), enabling quantification of changes at the biomolecular and neuron/network levels and characterization of the pathogenic features; (iii) the parameters of the computational platform are calibrated to replicate the measurements from (ii) (generation in silico of the same ‘virtual’ observables), leading to (iv) a precise diagnosis; (v) different therapies may be tested on the virtual model, leading to the identification of the optimal treatment.

Computational neuropharmacology may greatly benefit from such a centralized and integrated approach, which removes gaps between the different phases of drug discovery and development. This will reduce error-prone interpolations and increase success rates.

The results presented here were obtained with open tools and software: NEURON [9], EONS [10–12] and libRoadRunner [13]. Systems Biology Markup Language [14] is the preferred format in which all models are implemented and stored.

Results

NMDA Receptor Antagonism: Friend or Foe

We present here results obtained through Modeling and Simulation that yielded a better comprehension of the effects of compounds on the NMDA receptor and its associated channel, with direct significance for the neuropharmacology of excitatory synaptic transmission. The N-methyl-D-aspartate receptor (also known as NMDA receptor or NMDAR) is a glutamate receptor and ion channel found on excitatory postsynaptic spines. Its activation requires the binding of glutamate and glycine (or D-serine), and leads to the opening of its ion channel, which is nonselective to cations and has a reversal potential close to 0 mV. While the opening and closing of the ion channel is primarily gated by ligand binding, the flow of ions through the channel is voltage-dependent, due to the blockade of the channel by extracellular magnesium and zinc ions. Blockade removal allows the flow of sodium (Na+) and small amounts of calcium (Ca2+) ions into the cell, and of potassium (K+) out of the cell.
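The voltage dependence of the magnesium block described above can be illustrated with a short sketch. It does not reproduce the chapter's model (whose parameters are given in [16]); it assumes the widely used empirical expression of Jahr and Stevens (1990) for the unblocked fraction, and the function names, the unit conductance `g_open` and the 1 mM default magnesium concentration are illustrative choices.

```python
import math

def mg_unblocked_fraction(v_mv, mg_mm=1.0):
    """Fraction of open channels not blocked by Mg2+ at membrane potential v_mv (mV).

    Assumption: Jahr-Stevens empirical form, not the scheme used in the chapter.
    """
    return 1.0 / (1.0 + (mg_mm / 3.57) * math.exp(-0.062 * v_mv))

def nmda_current(v_mv, g_open=1.0, mg_mm=1.0, e_rev_mv=0.0):
    """Ohmic current through unblocked channels (arbitrary units).

    The reversal potential defaults to 0 mV, as stated in the text.
    """
    return g_open * mg_unblocked_fraction(v_mv, mg_mm) * (v_mv - e_rev_mv)

if __name__ == "__main__":
    # Same voltage range as the voltage-clamp protocol in the text.
    for v in (-120, -60, -20, 20):
        print(v, round(mg_unblocked_fraction(v), 3), round(nmda_current(v), 2))
```

Under this assumed expression, most channels are blocked near resting potentials and the block is progressively relieved as the membrane depolarizes, which is the non-linearity that voltage clamp holds fixed in the simulations.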

NMDA receptors are thought to play a critical role in the central nervous system. Interestingly, while competitive antagonists such as D-2-amino-5-phosphopentanoic acid (AP5) impair learning and memory, memantine, a non-competitive receptor antagonist, has been reported to be paradoxically beneficial to patients with mild to moderate Alzheimer’s disease (AD). In this study, we use a Markov kinetic model and look at the differences in the dynamics of the receptor and its associated channel current in the presence of either molecule.

The kinetic scheme used was proposed by Schorge [15] and is presented in Fig. 3. The receptor model takes into account the two binding sites for glutamate, the two binding sites for the co-agonist glycine, and two open states O1 and O2; the non-linear voltage dependency is taken into account and depends on the surrounding concentration of magnesium. Additional details and parameter values of the model may be found in [16]. Modifications of the kinetic model allow analysis of the effects of AP5 on receptor-channel current as it competes with glutamate for the glutamate binding site of the NMDAR. Association and dissociation rate constants (kon and koff) for AP5 were set at 0.38 mM−1 ms−1 and 0.02 ms−1 respectively, based on published experimental results [17]. Memantine, on the other hand, has been shown to bind to the NMDA receptor in a voltage-dependent manner [18] (Fig. 4).
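To give a feel for the AP5 rate constants quoted above, the following sketch computes the equilibrium dissociation constant they imply and integrates a single-site binding equation. This is a deliberate simplification, assumed for illustration only: it ignores glutamate competition and the multi-state scheme of Fig. 3, and the function names are hypothetical.

```python
KON = 0.38   # mM^-1 ms^-1, AP5 association rate constant from the text
KOFF = 0.02  # ms^-1, AP5 dissociation rate constant from the text

def dissociation_constant_mm(kon=KON, koff=KOFF):
    """Equilibrium dissociation constant Kd = koff / kon, in mM."""
    return koff / kon

def equilibrium_occupancy(conc_mm, kon=KON, koff=KOFF):
    """Steady-state fraction of sites bound at antagonist concentration conc_mm."""
    return conc_mm / (conc_mm + koff / kon)

def simulate_occupancy(conc_mm, t_end_ms, dt_ms=0.01, kon=KON, koff=KOFF):
    """Forward-Euler integration of dB/dt = kon*C*(1 - B) - koff*B, starting from B = 0."""
    bound = 0.0
    for _ in range(int(round(t_end_ms / dt_ms))):
        bound += dt_ms * (kon * conc_mm * (1.0 - bound) - koff * bound)
    return bound

if __name__ == "__main__":
    print("Kd (mM):", dissociation_constant_mm())
    print("occupancy at 0.1 mM:", equilibrium_occupancy(0.1))
```

In the full kinetic model the apparent potency also depends on how much glutamate is competing for the same site, so this single-site Kd should not be read as the IC50 reported in the simulations.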

Learning and memory are associated at the cellular level with changes in synaptic strength due to repetitive stimulation of the synaptic connection. We therefore studied the inhibitory effects of AP5 on the response of the NMDA receptor to trains of neurotransmitter pulses at different frequencies; we quantified the cumulative inhibition over a 5 s window, and compared the response in the presence of AP5 with the one elicited in the presence of memantine. Simulations take place in voltage-clamp mode, meaning that the postsynaptic voltage is held constant. This removes the non-linear voltage dependency of the NMDA receptor-associated channel.

Results outlined in Fig. 5 show that for both AP5 and memantine, the dose-responses are shifted to the right, indicating that more antagonist is needed to obtain the same level of inhibition when stimulation frequency increases.

Cumulative inhibition of the NMDA receptor current in the presence of AP5 (a) and memantine (b), in response to glutamate pulses applied at different frequencies (postsynaptic voltage is held constant at −60 mV). The inhibitory effect of both the competitive and non-competitive antagonists decreases as the stimulation frequency increases, as indicated by a shift to the right of the IC50 as pulse frequency increases [16]

In voltage-clamp mode, we then varied the postsynaptic voltage from −120 to +20 mV at low and high stimulation frequencies (10 and 100 Hz for AP5, and 10 and 200 Hz for memantine). The results indicate that AP5-induced inhibition decreases with frequency, but increases with voltage (when magnesium is present). In contrast, memantine-induced inhibition decreases with both frequency and voltage. This result is more clearly outlined in Fig. 6, which plots variations of the IC50 (the antagonist concentration at which the amplitude of the output is reduced to 50 % of its maximum value) as a function of voltage at different frequencies (Fig. 7).
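As an illustration of how an IC50 can be read off a simulated dose-response curve, the sketch below interpolates the 50 % crossing on a logarithmic concentration axis. The Hill-type curve used to generate test data, the concentration grid and the function names are assumptions made for the example; the chapter does not state how its IC50 values were extracted.

```python
import math

def hill_inhibition(conc, ic50, hill=1.0):
    """Fractional inhibition (0..1) for a Hill-type dose-response curve (illustrative)."""
    return conc ** hill / (conc ** hill + ic50 ** hill)

def estimate_ic50(concs, inhibitions):
    """Estimate the IC50 by log-linear interpolation of the 50 % crossing.

    concs must be positive and increasing; inhibitions are fractions in [0, 1].
    """
    points = list(zip(concs, inhibitions))
    for (c_lo, y_lo), (c_hi, y_hi) in zip(points, points[1:]):
        if y_lo <= 0.5 <= y_hi:
            if y_hi == y_lo:
                return c_lo
            frac = (0.5 - y_lo) / (y_hi - y_lo)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10.0 ** log_ic50
    raise ValueError("dose-response data never cross 50 % inhibition")

if __name__ == "__main__":
    concs = [3.0, 30.0, 300.0, 3000.0]  # hypothetical concentrations, in uM
    low_freq = [hill_inhibition(c, 100.0) for c in concs]
    high_freq = [hill_inhibition(c, 400.0) for c in concs]  # curve shifted to the right
    print(estimate_ic50(concs, low_freq), estimate_ic50(concs, high_freq))
```

A rightward shift of the curve, as reported for higher stimulation frequencies, shows up directly as a larger estimated IC50.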

Variations of cumulative inhibition of AP5 and memantine as a function of voltage at different glutamate application frequencies [16]

Our results indicate clear differences in the effects produced by AP5 and memantine on their target receptor and the resulting dynamics of its associated channel. AP5-induced inhibition is characterized by a weak voltage dependence, and an increase in IC50 values with heightened glutamate stimulation frequencies, indicating reduced inhibition. This suggests that in the presence of a large quantity of glutamate, AP5 loses its competitive advantage on the receptor’s binding site, rendering it less potent. This may account for the failure of competitive antagonists reported in clinical trials, especially with respect to stroke. Indeed, stroke may be characterized by an increase in neuronal firing frequency in the affected area due to excess glutamate. Maintaining AP5-induced inhibition would require several-fold increases in AP5 concentration, which would then lead to serious and potentially toxic side effects.

On the contrary, our results indicate that memantine has a strong dependence on both voltage and stimulation frequency, which supports the notion that memantine provides a tonic blockade of the receptor in basal conditions. This could account for its neuroprotective attribute. However, this inhibition is lifted when stimulation frequency increases and postsynaptic membrane depolarizes (conditions presumably associated with learning new information), which would explain why memantine does not negatively impact learning.

Neuropharmacology of Combinations: Modeling from Biomolecular Mechanisms to Neuronal Spiking

One of the fundamental characteristics of the brain is its hierarchical and temporal organization; both space and time must be considered to fully grasp the impact of the system’s underlying mechanisms on brain function. Complex interactions taking place at the molecular level regulate neuronal activity, which further modifies the function and structure of millions of neurons connected by trillions of synapses. This ultimately gives rise to phenotypic function and behavior at the system level. This spatial complexity is also accompanied by a complex temporal integration of events taking place at the microsecond scale, leading to slower changes occurring at the second, minute and hour scales. Simulation of mechanisms spanning multiple scales makes modeling a challenging task both from an implementation and numerical standpoint. Yet, these integrations are necessary for studying the effects of combinations of therapeutic agents that target very distinct systems and determining how these drugs interact to shape function at more integrated levels of complexity.

To illustrate proposed solutions to this challenge, we combine the NMDA-R competitive antagonist AP5 described earlier with a molecule known to act on the GABA A receptor. The GABA A receptor is an ionotropic receptor and ligand-gated ion channel that is found in inhibitory synapses; its endogenous ligand is GABA (γ-aminobutyric acid), the major inhibitory neurotransmitter in the brain. Activation of this receptor leads to the opening of its associated channel pore, which selectively conducts chloride ions into the cell, resulting in hyperpolarization of the postsynaptic neuron. We propose to study the effect of bicuculline, a competitive antagonist of GABA A receptors, not only on the target’s function, but also on the resulting spiking pattern when these receptors are placed on a CA1 pyramidal neuron [19].

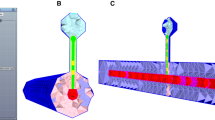

The modeling framework consists of a co-simulation comprising multiple instances of the EONS simulator linked to NEURON through the message-passing interface MPJ Express [20], distributed on a high-performance computer cluster. The stimulation protocol consists of presenting a train of action potentials with random inter-pulse intervals at a mean frequency of 10 Hz (within the range of physiological frequencies reported in the hippocampus) as presynaptic inputs to both excitatory (i.e. glutamatergic) and inhibitory (i.e. GABAergic) synapses of a CA1 pyramidal neuron. The neuron model used is the pyramidal cell described in [21], which uses the digitally reconstructed dendritic morphology described in [22] and in which synaptic currents are integrated along dendritic branches (112 excitatory glutamatergic synapses located in the stratum radiatum area and 14 inhibitory synapses located close to the soma). The kinetic model for the GABA A receptor is the one presented in [23] (Fig. 8).
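A random inter-pulse interval train of the kind used as presynaptic input can be generated by drawing exponentially distributed intervals, which yields a Poisson process with the desired mean rate. The sketch below uses only the Python standard library; the function name, the seed handling and the absence of a refractory period are assumptions for illustration, not details of the EONS/NEURON co-simulation.

```python
import random

def poisson_spike_train(rate_hz, duration_s, seed=None):
    """Return sorted spike times (s) of a homogeneous Poisson process.

    Inter-pulse intervals are drawn from an exponential distribution
    with mean 1 / rate_hz; no refractory period is modeled.
    """
    rng = random.Random(seed)
    times = []
    t = 0.0
    while True:
        t += rng.expovariate(rate_hz)
        if t >= duration_s:
            break
        times.append(t)
    return times

if __name__ == "__main__":
    train = poisson_spike_train(10.0, 5.0, seed=42)  # 10 Hz mean rate, 5 s window
    print(len(train), "presynaptic events")
```

The same list of event times would be delivered to every excitatory and inhibitory synapse if, as in the protocol above, all synapses share the presynaptic input.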

The model allows for readouts of a molecular, synaptic (postsynaptic current and voltage) and neuronal nature (somatic potential and spiking activity). Four conditions were simulated: a control condition (i.e. no modulator), with the IC50 concentration of AP5 (established at 100 μM in the section “NMDA Receptor Antagonism: Friend or Foe”), with the IC50 concentration of bicuculline, and with both antagonists combined at IC50 concentrations. The somatic potentials resulting from the 10 Hz random interval train are presented in Fig. 9 for all four conditions.

From [19]. a Presynaptic input stimulation applied (random inter-pulse interval train with a Poisson distribution and a 10 Hz mean firing frequency). b−e CA1 hippocampal neuron somatic potential in response to stimulation a. b Response with no modulation. c Response with 100 μM AP5 (IC50 concentration). d Response with a 50 % decrease in GABA A current. e Response with both glutamatergic and GABAergic modulations combined

The somatic potentials obtained in the four conditions highlight the high levels of non-linearity that arise at different levels of neuronal integration. Decreases in NMDA receptor current (50 % of the peak amplitude) at the molecular level result in a dramatic reduction in somatic spiking (77 % reduction) once placed in synapses and integrated along the dendritic tree of the pyramidal cell. Conversely, antagonizing the GABA A receptor results in an increase of over 50 % in the number of action potentials generated. When both modulators are applied, the number of spikes returns to a value close to the control condition (8 versus 9 action potentials), but with a very different (regularized) spiking pattern, which is likely to result in a very different outcome at the network level.

From Mechanistic to Non-mechanistic Modeling

The previous section outlined the notion that phenomena taking place at a specific scale in the nervous system may often interact in a non-linear manner and thereby yield emergent properties at other (often overlooked) scales. Indeed, exogenous compounds that modulate the function of excitatory and inhibitory synaptic receptors were shown to interact and modulate the spike-timing patterns of a CA1 pyramidal neuron. The previous example, computed on several nodes of a high-performance computer cluster, also shows that integrating multiple levels of complexity (whether temporal or hierarchical) may result in a prohibitively large computational burden. Each synapse may de facto represent thousands of differential equations, neurons can comprise thousands of synapses, and brain structures are composed of different neuron populations, each potentially containing millions of cells. Simulating such a computational load requires (i) increased computational muscle (e.g. IBM BlueGene) and/or (ii) better management of the complexity of the models simulated. This section focuses on the latter, suggesting the use of non-mechanistic modeling methodologies capable of capturing the non-linear dynamics of the system of interest while significantly reducing the computational load.

The biochemical mechanisms underlying synaptic function have been shown to display a high level of non-linearity, both on the presynaptic and postsynaptic sides [24–27]. These non-linearities are critical and most likely play a significant role in shaping the functions of synapses and neurons, giving them the ability to learn and generate long-term changes used to encode memories. Yet these mechanisms, if they are to be modeled in their full mechanistic and dynamical complexity, yield a large number of differential equations, thereby resulting in substantial (and potentially prohibitively large) computational complexity.

An alternative approach is to consider the system of interest as a black box, focusing the computational effort on replicating the functional dynamics, i.e. the outputs the system generates in response to a series of inputs, rather than the internal mechanisms comprised in the system. This approach has been successfully applied, using Volterra kernels [28], to the dynamics of neuronal populations [29, 30], yielding highly predictive models with minimal computational complexity. We adapted this methodology to model the electrical properties of glutamatergic synapses [31] and evaluated it in terms of both predictability and computational complexity (Figs. 10 and 11).
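The input-output idea can be made concrete with a discrete second-order Volterra series, in which the output at each time step is a constant plus a weighted sum of past inputs (first-order kernel) plus a weighted sum of products of pairs of past inputs (second-order kernel). The sketch below is a generic textbook form with made-up kernel values; it is not the kernel structure or parameterization of the synapse model in [31].

```python
def volterra_response(x, k0, k1, k2):
    """Evaluate a discrete second-order Volterra series.

    y[n] = k0 + sum_i k1[i]*x[n-i] + sum_i sum_j k2[i][j]*x[n-i]*x[n-j]
    with memory length len(k1); inputs before n = 0 are taken as zero.
    """
    memory = len(k1)
    y = []
    for n in range(len(x)):
        past = [x[n - i] if n - i >= 0 else 0.0 for i in range(memory)]
        first = sum(k1[i] * past[i] for i in range(memory))
        second = sum(k2[i][j] * past[i] * past[j]
                     for i in range(memory) for j in range(memory))
        y.append(k0 + first + second)
    return y

if __name__ == "__main__":
    pulses = [1.0, 1.0, 0.0]             # two consecutive presynaptic events
    k1 = [1.0, 0.5]                      # hypothetical first-order kernel
    k2 = [[0.0, -0.2], [-0.2, 0.0]]      # hypothetical pairwise (depressing) interaction
    print(volterra_response(pulses, 0.0, k1, k2))
```

With the second-order kernel set to zero the model is purely linear; the off-diagonal terms are what let the response to a pulse depend on the pulse that preceded it, which is the kind of non-linearity a simple exponential synapse cannot express.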

Conceptual representation of different models of synapses in a realism versus computational complexity plane. Exponential synapses are a good example of a relatively simple model of synapse that is often used in large-scale simulations to limit computational complexity. However, they fail to represent the non-linear dynamics of synaptic function (e.g. facilitation/depression, short-term plasticity, etc.). Mechanistic models of synapses, on the contrary, may comprise a large number of mechanisms, thereby providing a high level of realism, but consequently impose heavier calculations which may become prohibitive in large-scale neuronal network models. Examples of synaptic models spanning different levels of complexity may be found in [32]; examples of very complex models are also described in [11, 33–35]

The properties modeled are the changes in conductance of AMPA and NMDA receptors elicited by presynaptic release of neurotransmitter. Estimation of parameter values in non-mechanistic models (interchangeably labeled input-output models in this context) is a crucial step that requires training the model on reference input-output sequences obtained with the mechanistic model. Using long sequences captures a large number of nonlinear behaviors, thereby improving parameter estimation and minimizing prediction errors. The model structure comprises two sets of kernels with a slow and a fast time constant for each receptor model; we estimated the parameters of the model using a train of 1000 pulses with random intervals at a mean frequency of 2 Hz (i.e. using a 500 s long simulation) (Fig. 12).

5 s sample of the dynamic response of the mechanistic model (used to calibrate the parameter values of the non-mechanistic model) and of the non-mechanistic (IO) model. Visual inspection shows virtually identical responses; the total root mean square (RMS) error calculated on a 500 s simulation with novel presynaptic events is 3.3 %
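A relative RMS error of this kind can be computed by comparing the two output traces sample by sample. The chapter does not specify how its error was normalized; the sketch below assumes normalization by the RMS of the mechanistic (reference) trace, and the function name is hypothetical.

```python
import math

def relative_rms_error(reference, predicted):
    """RMS of (reference - predicted) divided by the RMS of reference.

    Assumption: normalization by the reference trace; other conventions exist.
    """
    if len(reference) != len(predicted):
        raise ValueError("traces must have the same number of samples")
    signal_energy = sum(r * r for r in reference)
    if signal_energy == 0.0:
        raise ValueError("reference trace is identically zero")
    error_energy = sum((r - p) ** 2 for r, p in zip(reference, predicted))
    return math.sqrt(error_energy / signal_energy)

if __name__ == "__main__":
    mechanistic = [0.0, 1.0, 0.6, 0.3, 0.1]     # made-up conductance samples
    io_model = [0.0, 0.97, 0.62, 0.29, 0.1]     # made-up IO model output
    print(round(100.0 * relative_rms_error(mechanistic, io_model), 2), "%")
```

Evaluating the error on presynaptic events that were not used for training, as done for the figure, is what makes the number a measure of predictive rather than fitting accuracy.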

Having established that the response of the input-output model is very close to the response obtained with the mechanistic synapse model in the dynamical range of behaviors, we can now focus our attention on determining the computational speed gain obtained by replacing the mechanistic synapse model with an IO model. To benchmark the models, multiple instances of the IO synapse model were integrated in a neuron model and simulation duration was compared to the one obtained with the original mechanistic models.

We chose the hippocampal pyramidal neuron model proposed by Jarsky [36]. To minimize the computational load of the neuron-related calculations, we set the weight of each synapse to zero, thereby ensuring that (i) the time needed to compute the neuron model remains constant regardless of the number of synapses, and (ii) most of the computational time is spent calculating synapse outputs. We varied the number of synapses and recorded the simulation times. Results are presented in Fig. 13.

Adapted from [31]. Simulation time (represented in logarithmic scale) varies as a function of the number of synapse instances. Computation time required for the kinetic synapse model is within the range of 10–20 min, while the computation time required for the IO synapse model is consistently at least an order of magnitude lower, ranging between 3 and 30 s. The dashed green line represents the speedup obtained with the IO synapse model compared to the mechanistic model as a function of the number of synapses modeled. The inset in the top right corner illustrates the process of adding glutamatergic synapses onto a pyramidal neuron model. At low numbers of synapses, the speedup of the IO synapse model is highest (around 150× faster than the computation time required for the kinetic synapse model). The speedup decreases, but stabilizes at around 50× for larger numbers of synapses

The results in Fig. 13 indicate that the IO model consistently yields faster computation times even as the number of synapses modeled increases. The speedup decreases and seems to reach a plateau at around 50× (this value was verified with higher number of synapses—up to 10,000—not represented in the figure to focus on the non-asymptotic range).

Appropriately trained non-mechanistic models constitute a viable replacement for detailed mechanistic models in large scale models, yielding a significant gain in computational speed, while maintaining high predictability levels—thereby allowing larger scale models to be simulated while preserving biologically relevant subtleties in dynamics and non-linearities.

The non-mechanistic modeling methodology presented is generalizable and fully applicable, not only to other types of synapses (i.e. inhibitory or modulatory), but also other processes with identified inputs-outputs—yielding a natural solution for hierarchical large scale multiscale modeling challenges.

Messages for Neurologists and Computer Scientists

Experimental techniques have grown tremendously in the past decades, leading to a deeper understanding of the mechanisms that take place in the nervous system at multiple levels—ranging from genes and biomolecular mechanisms to brain level activity obtained through imaging methodologies. These notable improvements lead to an exponential increase in the amount of data acquired. They also lead to a more quantitative understanding of the roles of the mechanisms underlying the central nervous system’s functions and dysfunctions. Finally, they yield a better understanding of the effects of perturbations that can occur within these processes, as well as those of exogenous compounds. This deepened understanding enables the construction of predictive computational models capable of simulating the nervous system (in its control and pathological states), and the changes that can take place in response to exogenous compounds.

One of the advantages of multiscale computational models is their inherent ability to integrate, within the same model, experimental observations obtained using different (often incompatible) experimental modalities (or experimental paradigms as defined in Fig. 1), leading to the creation of a single modeled entity whose characteristics reproduce all (or at least most) observations. This inherent ability can lead to the creation of virtual patients, whether with normal neuronal function or with pathological dysfunctions. These virtual patients comprise, within the same simulation framework, the biomolecular mechanisms that have been demonstrated to take place in the normal and pathological cases, along with the consequences at higher levels of hierarchical (i.e. neuronal, structural and behavioral) and temporal (e.g. neurodegeneration) aggregated scales. The creation of this integrated model will constitute a major accomplishment of the emerging field of computational neurology, the patient-centered successor of computational neuroscience.

This also constitutes a tremendous opportunity for the pharmaceutical industry: an integrated model on which multiple steps of the drug pipeline may be performed in silico in a unified manner, encompassing a large number of processes from target identification to efficacy, side effects and toxicity evaluations. This can lead to a much-needed defragmentation of the drug discovery process, consequently reducing the attrition rate that plagues drug discovery and development.

The ‘dream’ described above, and more broadly discussed in this book, faces several roadblocks. The first is computational power: despite the seemingly ever-increasing processing power of our computers, creating large integrated models spanning several hierarchical and temporal levels remains a real challenge. This challenge may be addressed using hybrid models that combine mechanistic and non-mechanistic models interacting in a seamless manner, leading to efficient simulation of complex non-linear dynamics with a high level of functional realism. Another roadblock is our limited knowledge of many of the intricate mechanisms that underlie the complex biology of the nervous system. This challenge will ultimately be tackled as the field matures: using open standards and good computational modeling practices will ensure reproducible, iterative and collaborative modeling, consequently allowing incremental incorporation of additional findings while tuning and optimizing the rest of the model. This maturation will also see the development of frameworks compatible with the large number of methodologies and standards currently in use for modeling the multiple spatial and temporal scales of the nervous system.
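As an illustration of the hybrid idea, the following minimal sketch replaces a mechanistic synapse with a non-mechanistic input-output surrogate and feeds it into a mechanistic neuron. It is a toy under stated assumptions, not the chapter's implementation: the synapse is a single-exponential conductance, the surrogate is only a first-order (linear) kernel obtained by sampling the synapse's impulse response, the neuron is a leaky integrate-and-fire unit, and all names and parameter values (`DT`, `TAU_SYN`, `WEIGHT`, `lif_neuron`, and so on) are hypothetical.

```python
import math

DT = 0.1        # time step in ms (assumed value)
TAU_SYN = 5.0   # synaptic decay time constant in ms (assumed value)
WEIGHT = 0.5    # conductance jump per presynaptic spike, relative to leak (assumed)


def mechanistic_synapse(spikes):
    """Mechanistic reference: dg/dt = -g/tau, with a jump of WEIGHT per spike."""
    decay = math.exp(-DT / TAU_SYN)
    g, trace = 0.0, []
    for s in spikes:
        g = g * decay + WEIGHT * s
        trace.append(g)
    return trace


def make_kernel(length):
    """Sample the synapse's impulse response to obtain a finite-memory kernel."""
    return [WEIGHT * math.exp(-j * DT / TAU_SYN) for j in range(length)]


def input_output_synapse(spikes, kernel):
    """Non-mechanistic surrogate: discrete convolution of the input with the kernel."""
    out = []
    for n in range(len(spikes)):
        acc = 0.0
        for j in range(min(n + 1, len(kernel))):
            acc += kernel[j] * spikes[n - j]
        out.append(acc)
    return out


def lif_neuron(conductance, tau_m=20.0, e_syn=60.0, threshold=15.0):
    """Mechanistic leaky integrate-and-fire neuron (voltage in mV relative to rest)."""
    v, spike_times = 0.0, []
    for n, g in enumerate(conductance):
        v += DT * (-v + g * (e_syn - v)) / tau_m  # forward Euler step
        if v >= threshold:
            spike_times.append(n * DT)
            v = 0.0  # reset after a spike
    return spike_times


# Presynaptic spike every 2 ms for 100 ms.
spikes = [1.0 if n % 20 == 0 else 0.0 for n in range(1000)]

g_mech = mechanistic_synapse(spikes)
g_io = input_output_synapse(spikes, make_kernel(500))  # 50 ms of kernel memory
max_err = max(abs(a - b) for a, b in zip(g_mech, g_io))

# Hybrid model: input-output synapse driving the mechanistic neuron.
hybrid_spike_times = lif_neuron(g_io)

print(f"max conductance mismatch: {max_err:.2e}")
print(f"postsynaptic spikes in hybrid model: {len(hybrid_spike_times)}")
```

In this toy case the mechanistic synapse is already a one-line update, so the surrogate offers no speed advantage. The intended benefit would arise when the mechanistic component is a large kinetic scheme whose input-output behavior can be captured by a small set of kernels, and nonlinear synaptic dynamics would require higher-order kernels than the first-order one assumed here.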

Finally, a major shift will place the patient at the center. This shift has already started with the emergence of personalized medicine. It will expand to computational neurology, generating in silico models with personalized parameter values in support of highly personalized medical prognosis and diagnosis.

Acknowledgments

The authors would like to acknowledge all collaborators from Rhenovia Pharma (Serge Bischoff, Michel Baudry, Saliha Moussaoui, Florence Keller, Arnaud Legendre, Nicolas Ambert, Renaud Greget, Merdan Sarmis, Fabien Pernot, Mathieu Bedez and Florent Laloue) and from the Center for Neural Engineering at the University of Southern California (Dong Song, Eric Hu, Adam Mergenthal, Mike Huang, and Sushmita Allam) for fruitful work and collaborations, and Sophia Chen for proofreading this chapter. Finally, we thank the reviewers for their constructive comments and suggestions towards improving the manuscript.

© 2017 Springer International Publishing AG

About this chapter

Cite this chapter

Bouteiller, JM.C., Berger, T.W. (2017). The Role of Simulations in Neuropharmacology. In: Érdi, P., Sen Bhattacharya, B., Cochran, A. (eds) Computational Neurology and Psychiatry. Springer Series in Bio-/Neuroinformatics, vol 6. Springer, Cham. https://doi.org/10.1007/978-3-319-49959-8_15

DOI: https://doi.org/10.1007/978-3-319-49959-8_15

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-49958-1

Online ISBN: 978-3-319-49959-8

eBook Packages: Engineering (R0)