Abstract

Previous research on songbirds has typically focused on variation in production of vocal communication signals. These studies have addressed the mechanisms and functional significance of variation in vocal production across species and, within species, across seasons and among individuals (e.g., males of varying resource-holding capacity). However, far less is known about parallel variation in sensory processing, particularly in non-model species. A relationship between vocal signals and auditory processing is expected based on the sender–receiver matching hypothesis. Here, we review our recent comparative studies of auditory processing in songbirds conducted using auditory evoked potentials (AEPs) in a variety of field-caught songbird species. AEPs are voltage waveforms recorded from the scalp surface that originate from synchronous neural activity and provide insight into the sensitivity, frequency resolution, and temporal resolution of the subcortical auditory system. These studies uncovered variation in auditory processing at a number of different scales that was generally consistent with the sender–receiver matching hypothesis. For example, differences in auditory processing were uncovered among species and across seasons that may enhance perception of communication signals in species-specific habitats and during periods of mate selection, respectively. Sex differences were also revealed, often in season-specific patterns, and surprising individual differences were observed in auditory processing of mate attraction signals but not of calls used in interspecific contexts. While much remains to be learned, these studies highlight a previously unrecognized degree of parallel variation in songbirds, existing at diverse hierarchical scales, between production of vocal communication signals and subcortical auditory processing.


2.1 Introduction

The oscine passerines, or songbirds, are an important system for studying animal communication because, as a group, they rely heavily on vocalizations for survival and reproduction. A considerable body of research has focused on the mechanisms and adaptive significance of variation in vocal production within this group. At one level, substantial variation exists in the acoustic structure and complexity of species-specific vocalizations across approximately 4000 extant species. Vocal variation across species reflects differences in underlying brain circuitry and vocal tract anatomy (Zeng et al. 2007) and may ultimately enhance the fidelity of information transfer in species-specific habitats (Morton 1975). At a second level, signal production differs among individuals of the same species. For example, in species inhabiting temperate latitudes, songs (mate attraction signals) are typically produced by males but not females, and song production may vary considerably among males in relation to their resource-holding potential (e.g., Christie et al. 2004). A third level of variation in vocal production occurs within individuals over time, that is, during development and across seasons. Males of many songbird species show dramatic seasonal changes in song production that appear functionally adaptive and are linked to hormonally mediated regulation of the underlying song control nuclei (Brenowitz 2004).

In contrast to our relatively extensive knowledge of vocal production in songbirds, far less is known about variation in auditory processing, particularly in non-model species. Indeed, the auditory capabilities of receivers in animal communication systems are often implicitly assumed to match our own perceptual abilities, or perhaps the spectral properties of vocal signals as depicted in a spectrogram. In this chapter, we summarize our recent studies of the mechanisms and adaptive significance of auditory variation in songbirds. These studies have extended our knowledge of animal communication systems by examining auditory processing in songbirds primarily at the three levels of variation described above: (1) variation among species, (2) variation among individuals of the same species (e.g., between sexes), and (3) seasonal variation operating at the level of the individual.

The broad scale of these investigations was made possible by an efficient physiological method for evaluating subcortical auditory processing known as auditory evoked potentials (AEPs). AEPs are average voltage waveforms recorded from the scalp in response to acoustic stimulation that arise from synchronized activity within neural populations located along the auditory pathway (Hall 1992). Only synchronous discharges from sufficiently large populations of neurons produce a gross potential large enough to be measurable at the scalp surface. While the gross nature of AEPs provides minimal information about the response properties of single neurons and small populations of neurons, their ability to provide basic insight into the absolute sensitivity, frequency resolution, and temporal resolution of the subcortical auditory system has led to recent increases in the application of this technique.

Processing of acoustic information by the auditory system has traditionally been measured by recording electrical activity from single neurons or small groups of neurons located in nuclei of the ascending auditory pathway in anesthetized, nonhuman animals (e.g., Konishi 1970; Chap. 3). One of the most fundamental aspects of auditory processing revealed by these studies is the tonotopic representation of sound; that is, different frequency components of the acoustic spectrum are represented by activity in different frequency-tuned neural subpopulations or channels. Spectral decomposition of sounds into their frequency components originates through auditory filtering in the inner ear and is maintained to varying degrees in different auditory nuclei along the entire length of the pathway, from the brainstem nuclei to the midbrain, thalamus, and forebrain (e.g., Calford et al. 1983). A second fundamental aspect of processing is neural synchrony to the temporal structure of sound. The instantaneous firing rate of auditory neurons varies with the amplitude envelope of acoustic signals (envelope following; Joris et al. 2004) and, at more peripheral levels of processing, with the fine structure of the pressure waveform as well (typically for frequencies below 3–6 kHz; frequency following; Johnson 1980). Envelope following occurs along the entire pathway up to and including the auditory forebrain, with the maximum frequency of encoded envelope fluctuations decreasing at more central levels (Joris et al. 2004). Synchrony of neural responses to envelope fluctuations, fine structure, and particularly sudden sound onsets is the key aspect of auditory processing that allows assessment of auditory function through AEPs.

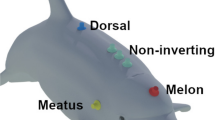

2.2 Assessment of Hearing Function with AEPs

AEPs are obtained by averaging scalp-recorded voltage waveforms across a large number of stimulus repetitions (typically several hundred to several thousand). Recordings are conducted in a sound-attenuating booth on anesthetized birds (to minimize muscle artifacts), with acoustic stimuli presented either from an electromagnetically shielded free-field loudspeaker or from an insert earphone. Subcutaneous electrodes are positioned at the vertex of the skull (non-inverting), posterior to the ipsilateral ear canal (inverting), and at the nape of the neck (common ground). Voltage signals are differentially amplified with a gain of 100–200 K (i.e., 100,000–200,000) and band-pass filtered between 0.1 and 5–10 kHz (depending on the measurement of interest). Sufficient electromagnetic shielding is critically important to prevent contamination of AEPs by stimulus artifact; shielding can be verified with recordings made with the electrodes in saline or a potato. Detailed AEP recording procedures are described in a number of publications from our laboratory (e.g., Henry and Lucas 2008; Gall et al. 2013; Lucas et al. 2015; Vélez et al. 2015a).
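
The reason for averaging hundreds to thousands of sweeps can be sketched with simulated data. In the minimal Python example below, the evoked "template", the noise level, and the sampling rate are hypothetical values chosen for illustration, not recording parameters from our studies. Because background activity is not time-locked to the stimulus, its contribution to the average shrinks roughly as 1/√N with the number of sweeps N, while the time-locked response is preserved.

```python
import math
import random

FS = 20000  # hypothetical sampling rate (Hz)
# Hypothetical time-locked "evoked response": a damped 1-kHz oscillation lasting 10 ms.
TEMPLATE = [math.exp(-k / 40.0) * math.sin(2 * math.pi * 1000 * k / FS) for k in range(200)]


def simulate_sweep(template, noise_sd, rng):
    """One simulated sweep: the evoked response plus uncorrelated Gaussian background noise."""
    return [s + rng.gauss(0.0, noise_sd) for s in template]


def average_sweeps(sweeps):
    """Point-by-point mean across sweeps, i.e., the averaged AEP estimate."""
    n = len(sweeps)
    return [sum(values) / n for values in zip(*sweeps)]


def rms(xs):
    return math.sqrt(sum(x * x for x in xs) / len(xs))


def residual_noise(n_sweeps, noise_sd=10.0, seed=1):
    """RMS difference between the averaged waveform and the true (simulated) template."""
    rng = random.Random(seed)
    sweeps = [simulate_sweep(TEMPLATE, noise_sd, rng) for _ in range(n_sweeps)]
    aep = average_sweeps(sweeps)
    return rms([a - s for a, s in zip(aep, TEMPLATE)])


for n in (10, 100, 1000):
    # Observed residual noise versus the 1/sqrt(N) expectation for noise_sd = 10.
    print(n, round(residual_noise(n), 3), round(10.0 / math.sqrt(n), 3))
```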

AEP waveforms recorded in response to sound show a fast, bipolar deflection in response to the onset of acoustic stimulation (Fig. 2.1a) known as the auditory brainstem response (ABR). ABRs in response to click stimuli and tone bursts with fast onset ramps contain multiple peaks attributable to different neural generators along the auditory pathway (reviewed in Hall 1992). The shortest-latency peak is generated by the auditory nerve, while peaks with longer latencies arise from nuclei located further along the auditory pathway. The amplitude of ABR peaks varies with the frequency spectrum and sound level of the stimulus, reflecting the number of responsive neurons and their synchrony. The latency of ABR peaks varies with sound level, with louder stimuli evoking shorter-latency responses, and to a lesser extent with frequency (higher-frequency stimuli tend to evoke ABRs of shorter latency). For sounds with durations longer than a click, the ABR peaks are followed by a sustained response that reflects neural synchrony to the temporal fine structure and envelope of the stimulus waveform. The component of the sustained response that follows the stimulus envelope (Fig. 2.1c) is known as the envelope following response (EFR), while the component locked to the low-frequency fine structure (Fig. 2.1a, c) is called the frequency following response (FFR).

Fig. 2.1 Examples of auditory evoked potentials (AEPs). (a) An AEP recorded in response to a 3-kHz pure tone with 3-ms onset and offset ramps. The response consists of an auditory brainstem response (ABR) at stimulus onset followed by a sustained frequency following response (FFR) associated with auditory temporal coding of the stimulus fine structure. (b, c) Amplitude-modulated tone stimulus and responses. (b) Stimulus waveform with a carrier frequency of 2.75 kHz and an amplitude modulation frequency of 710 Hz; (c) AEP responses. The stimulus waveform plots pressure (arbitrary scale) as a function of time. The portion of the AEP responses shown in (c) begins 15 ms after stimulus onset and hence excludes the ABR. The top trace in (c) contains both the FFR (auditory temporal coding of the carrier frequency and amplitude modulation sidebands) and the envelope following response (EFR; auditory temporal coding of the stimulus modulation frequency). The lower trace in (c) has been low-pass filtered at 1 kHz to remove the FFR and hence contains only the EFR component. AEPs in (a) and (c) were recorded from a dark-eyed junco (Junco hyemalis) and a house sparrow (Passer domesticus), respectively. The total durations of the waveforms are given in the lower right corners of panels (a) and (c).

The audiogram, which plots absolute threshold (i.e., the minimum detectable sound pressure level in quiet) as a function of frequency, delineates the frequency range of sensitive hearing and therefore serves as a starting point for understanding the auditory capabilities of any species. Audiograms can be estimated from ABRs recorded in response to tone-burst stimuli of varying frequency and sound pressure level. For each tone frequency, the threshold is estimated as the minimum sound pressure level that evokes a reliable ABR. Thresholds are traditionally assessed by visual inspection of ABR waveforms but can also be estimated using statistical techniques such as linear regression or signal detection theory (see Gall et al. 2011). ABR-based thresholds typically correlate well across frequencies with the absolute thresholds of single neurons and of behaving animals, but are elevated by 20–30 dB relative to them (Brittan-Powell et al. 2002).
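
As a simplified illustration of a regression-based threshold estimate, the sketch below fits a least-squares line to ABR amplitude as a function of stimulus level and solves for the level at which the fitted line reaches a detection criterion. The amplitude values and the criterion are hypothetical, and this is not necessarily the exact procedure of Gall et al. (2011), which should be consulted for the statistical details.

```python
def regression_threshold(levels_db, amplitudes, criterion):
    """Level (dB SPL) at which a least-squares line through (level, amplitude) reaches `criterion`."""
    n = len(levels_db)
    if n < 2 or n != len(amplitudes):
        raise ValueError("need at least two paired level/amplitude values")
    mean_x = sum(levels_db) / n
    mean_y = sum(amplitudes) / n
    sxx = sum((x - mean_x) ** 2 for x in levels_db)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(levels_db, amplitudes))
    slope = sxy / sxx
    if slope <= 0:
        raise ValueError("amplitude does not grow with level; threshold undefined")
    intercept = mean_y - slope * mean_x
    # Invert the fitted line: amplitude = intercept + slope * level.
    return (criterion - intercept) / slope


# Hypothetical ABR amplitudes (arbitrary units) at suprathreshold tone levels.
levels = [30, 40, 50, 60, 70]
amps = [0.12, 0.31, 0.49, 0.72, 0.90]
threshold = regression_threshold(levels, amps, criterion=0.2)
print(f"estimated threshold: {threshold:.1f} dB SPL")
```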

As previously discussed, neurons of the auditory system are arranged into frequency-tuned, tonotopic channels that span the auditory pathway. The frequency bandwidths of these auditory filters are an important parameter of the system because they determine the extent to which two sound components of similar frequency stimulate different neural populations, that is, are resolved by the auditory system. While the gross nature of AEPs would appear to preclude measuring frequency resolution, estimates of auditory filter bandwidths can be obtained by combining the tone-evoked ABR methodology with the notched noise paradigm of human psychophysics (Patterson 1976). Notched noise is white noise from which a narrow frequency band has been filtered out around a center frequency. In traditional notched noise experiments (reviewed in Moore 1993), behavioral thresholds for detecting a tone signal are measured in notched noise across a range of notch bandwidths. The center frequency of the notch is typically held constant at the frequency of the tone, but can be varied to investigate filter asymmetry. Auditory filter shapes are derived from these data based on three main assumptions: (1) the tone is processed in a single auditory filter channel, (2) only noise energy that falls into that channel contributes to masking the tone, and (3) detection of the tone requires a fixed signal-to-noise ratio at the output of the filter. When the notch bandwidth is sufficiently broad, little noise energy enters the auditory filter and the detection threshold is low. When the notch is narrower than the auditory filter, however, substantial noise energy falls within the filter and masks the signal, resulting in a higher threshold.
In our adaptation of this method, auditory filter shapes are derived by fitting ABR thresholds collected across a range of notch bandwidths to Patterson’s rounded exponential (roex) auditory filter model (Fig. 2.2). While the precise relationship between ABR-based tuning bandwidths and the frequency tuning of single neurons has not been quantified in any one species, the ABR method produces estimates of auditory filter bandwidth that are reasonable in comparison with single-neuron data from other species.
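
The three assumptions listed above amount to a power-spectrum model of masking, which can be sketched in a few lines. For clarity, the sketch replaces the auditory filter with an idealized rectangular passband centered on the tone (a deliberate simplification; the studies described here fit the rounded exponential model instead), and all parameter values (filter bandwidth, noise spectrum level, signal-to-noise ratio K, threshold in quiet) are hypothetical. It also assumes that the masking noise extends beyond both edges of the filter.

```python
import math


def masked_threshold_db(notch_half_width_hz, filter_bw_hz, n0_db_per_hz, k_db, quiet_threshold_db):
    """Tone threshold in symmetric notched noise under a power-spectrum model of masking.

    Assumption (1): the tone is detected through one rectangular filter centered on it.
    Assumption (2): only noise inside that filter's passband contributes to masking.
    Assumption (3): detection requires a fixed signal-to-noise ratio k_db at the filter output.
    """
    # Noise bandwidth falling inside the filter: the passband minus the notch, on both sides.
    overlap_hz = 2.0 * max(0.0, filter_bw_hz / 2.0 - notch_half_width_hz)
    if overlap_hz == 0.0:
        return quiet_threshold_db  # no noise enters the filter
    noise_power_db = n0_db_per_hz + 10.0 * math.log10(overlap_hz)
    # The masked threshold cannot fall below the threshold in quiet.
    return max(quiet_threshold_db, noise_power_db + k_db)


# Hypothetical 500-Hz-wide filter, noise spectrum level 20 dB/Hz, K = 0 dB, quiet threshold 30 dB SPL.
for half_width in (0, 100, 200, 250, 300):
    print(half_width, round(masked_threshold_db(half_width, 500.0, 20.0, 0.0, 30.0), 1))
```

The predicted threshold falls as the notch widens and levels off once the notch is wider than the filter. With a rounded rather than rectangular filter, the decline is gradual, and the shape of that decline is what constrains the fitted filter shape.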

Fig. 2.2 Estimation of auditory filter bandwidth from tone-evoked ABRs in notched masking noise (from Henry and Lucas 2010a [Fig. 1]). The frequency of the tone stimuli was 3 kHz. (a) ABR amplitude (normalized) plotted as a function of stimulus level at five different notch bandwidths [nw (right), expressed as the bandwidth in Hz divided by twice the center frequency of the notch (2 × 3 kHz)]. ABRs were recorded from a tufted titmouse (Baeolophus bicolor). Solid lines show the fit of a generalized linear model used to estimate ABR thresholds. The ABR threshold for each notch bandwidth is the sound pressure level (dB SPL) at which the ABR amplitude score exceeds a statistical criterion (dashed line). (b) ABR thresholds (+ symbols) from panel (a) plotted as a function of notch bandwidth. The solid line represents the fit of the roex (p, r) auditory filter model (p = 23.4, r = 0.000030, K′ = 41.7). (c) The shape of the auditory filter derived from the threshold function in (b) (solid line; left y-axis). Also drawn are the long-term average power spectra (bin width = 25 Hz) of the notched noise maskers (dashed lines; right y-axis).
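
Patterson's roex(p, r) weighting function has the form W(g) = (1 − r)(1 + pg)exp(−pg) + r, where g = |f − fc|/fc is the deviation from the filter's center frequency fc expressed as a proportion of fc. The Python sketch below evaluates the bandwidth implied by the parameters given in the caption above (p = 23.4, r = 0.000030, fc = 3 kHz). Summarizing the filter by its equivalent rectangular bandwidth (ERB) and its 3-dB bandwidth, and integrating only out to g = 1, are our choices for illustration; the bandwidth measure reported in the original study may differ.

```python
import math


def roex_pr(g, p, r):
    """roex(p, r) filter weight at normalized deviation g = |f - fc| / fc (symmetric filter)."""
    g = abs(g)
    return (1.0 - r) * (1.0 + p * g) * math.exp(-p * g) + r


def simpson(f, a, b, n=2000):
    """Composite Simpson's rule with n (even) subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3.0


def erb_hz(fc, p, r, g_max=1.0):
    """Equivalent rectangular bandwidth: total area under the weighting function, in Hz."""
    one_side = simpson(lambda g: roex_pr(g, p, r), 0.0, g_max)
    return 2.0 * one_side * fc


def bw_3db_hz(fc, p, r):
    """Full bandwidth between the points where the weight falls to 0.5 (half power)."""
    lo, hi = 0.0, 1.0  # weight is 1 at g = 0 and far below 0.5 at g = 1 for these parameters
    for _ in range(60):  # bisection on the monotonically decreasing weighting function
        mid = 0.5 * (lo + hi)
        if roex_pr(mid, p, r) > 0.5:
            lo = mid
        else:
            hi = mid
    return 2.0 * lo * fc


FC, P, R = 3000.0, 23.4, 0.000030  # values from the Henry and Lucas (2010a) caption
print(f"ERB ~ {erb_hz(FC, P, R):.0f} Hz (4*fc/p = {4 * FC / P:.0f} Hz)")
print(f"3-dB bandwidth ~ {bw_3db_hz(FC, P, R):.0f} Hz")
```

Because r is very small here, the ERB is close to the familiar roex(p) approximation of 4fc/p.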

Within each tonotopic channel of the auditory system, the amplitude envelope of sound after auditory filtering is encoded through oscillations in the instantaneous firing rate of auditory neurons (Joris et al. 2004). The ability of auditory neurons to follow fast envelope fluctuations is a second, important parameter of the system because it determines the extent to which sounds occurring in rapid succession are temporally resolved versus fused together during auditory processing. In one method for assessing temporal resolution with AEPs, EFRs are recorded in response to amplitude-modulated sounds to generate a modulation transfer function (MTF) plotting EFR amplitude (i.e., the amplitude of the spectral component of the response at the modulation frequency of the stimulus) as a function of modulation frequency (Kuwada et al. 1986; Dolphin and Mountain 1992; Schrode and Bee 2015). EFR-based MTFs in birds typically show a peak at modulation frequencies from 300 to 700 Hz followed by steady declines in EFR amplitude at higher modulation frequencies attributable to reduced fidelity of neural envelope coding (e.g., Henry and Lucas 2008). Differences in EFR amplitude at high modulation frequencies can reflect differences in the temporal resolution of the auditory system.
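
Measuring EFR amplitude as the spectral component at the modulation frequency can be sketched with a single-bin discrete Fourier transform. The "responses" below are synthetic sinusoids plus noise whose amplitudes (and hence the resulting MTF shape) are hypothetical. The sampling rate and the 100-ms analysis window are also assumptions, chosen so that every modulation frequency completes a whole number of cycles within the window, which avoids spectral leakage between components.

```python
import cmath
import math
import random


def component_amplitude(x, fs, freq):
    """Amplitude of the spectral component of x at freq (single-bin DFT).

    Exact for a sinusoid when the window contains an integer number of its cycles.
    """
    n = len(x)
    acc = sum(v * cmath.exp(-2j * math.pi * freq * k / fs) for k, v in enumerate(x))
    return 2.0 * abs(acc) / n


def efr_mtf(responses, fs):
    """Map each modulation frequency to the EFR amplitude measured at that frequency."""
    return {fm: component_amplitude(x, fs, fm) for fm, x in responses.items()}


FS = 20000  # hypothetical sampling rate (Hz)
N = 2000    # 100-ms window: each modulation frequency below completes a whole number of cycles
rng = random.Random(0)
# Hypothetical "true" EFR amplitudes, peaking at 500 Hz and declining at higher rates.
true_amp = {100: 0.30, 300: 0.55, 500: 0.60, 700: 0.50, 1000: 0.25, 1400: 0.10}
responses = {
    fm: [a * math.sin(2 * math.pi * fm * k / FS) + rng.gauss(0.0, 0.05) for k in range(N)]
    for fm, a in true_amp.items()
}
mtf = efr_mtf(responses, FS)
for fm in sorted(mtf):
    print(fm, round(mtf[fm], 3))
```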

In a second method for quantifying temporal resolution, ABRs are recorded in response to paired click stimuli presented with a brief time interval inserted between clicks (Supin and Popov 1995; Parham et al. 1996; Ohashi et al. 2005; Schrode and Bee 2015). ABR recovery functions generated from paired-click responses plot the amplitude of the response to the second click, usually expressed as a percentage of the amplitude of the response to a single click, as a function of the time interval between clicks. Because the amplitude of the ABR to the second click can be difficult to measure at short inter-click intervals, the ABR to the second click is usually derived by subtracting a single-click response from the response to both clicks (Fig. 2.3). ABR recovery functions in a variety of taxa, including birds, show a steady reduction in the amplitude of the ABR to the second click with decreasing inter-click interval, associated with a reduction in the neural representation of the second click. Differences in ABR recovery observed at relatively short inter-click intervals can reflect differences in the temporal resolution of the subcortical auditory system.
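
The derivation of the second-click response and the recovery percentage can be sketched as follows. The ABR-like waveforms are synthetic (a damped oscillation), and the sampling rate, inter-click intervals, and the "recovery" built into the simulated second response are hypothetical. Note that the subtraction step assumes the response to the first click is the same whether or not a second click follows, that is, that the two responses sum linearly.

```python
import math

FS = 40000  # hypothetical sampling rate (Hz)
N = 800     # 20-ms analysis window
ONSET = 40  # first click at 1 ms


def abr_like(onset):
    """Synthetic ABR-like waveform (damped 1-kHz oscillation) starting at sample `onset`."""
    return [0.0 if k < onset else
            math.exp(-(k - onset) / 40.0) * math.sin(2 * math.pi * (k - onset) / 40.0)
            for k in range(N)]


def derived_second_click(paired, single):
    """Point-to-point subtraction of the single-click response from the paired-click response."""
    if len(paired) != len(single):
        raise ValueError("waveforms must share the same time base")
    return [p - s for p, s in zip(paired, single)]


def peak_to_peak(x):
    return max(x) - min(x)


def recovery_percent(paired, single):
    """Amplitude of the derived second-click ABR as a percentage of the single-click ABR."""
    return 100.0 * peak_to_peak(derived_second_click(paired, single)) / peak_to_peak(single)


single = abr_like(ONSET)


def simulated_paired(ici_ms, second_gain):
    """Paired-click response: first-click ABR plus a scaled, delayed copy (linear summation assumed)."""
    delay = round(ici_ms * FS / 1000)
    second = abr_like(ONSET + delay)
    return [a + second_gain * b for a, b in zip(single, second)]


# Even at a 2-ms interval, where the two responses overlap, subtraction isolates the second response.
print(round(recovery_percent(simulated_paired(2.0, 0.4), single), 1))
```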

Fig. 2.3 Estimation of auditory temporal resolution using ABRs evoked by paired-click stimuli (from Henry et al. 2011 [Fig. 2]). Shown here are ABRs in response (a) to paired-click stimuli and (b) to single-click stimuli. (c) Derived ABRs to the second click of paired stimuli, generated by point-to-point subtraction of the response to the single-click stimulus from the response to the paired-click stimulus. Thick arrows indicate the timing of clicks, which were separated by time intervals of 7 ms (left) and 2 ms (right).

While the ABR is relatively straightforward to interpret due to its position in time near sound onset, EFRs and FFRs can present greater challenges. For example, sustained responses to the same stimulus component from different neural generators may combine to varying degrees either constructively or destructively depending on their relative amplitudes, the difference in response latency between generators, and the frequency of the stimulus component being followed. The problem is mitigated to some extent by the tendency for one neural generator to dominate a particular class of AEPs (e.g., EFRs to amplitude-modulated sounds may be dominated by an auditory nerve component at modulation frequencies greater than a few hundred Hz; Henry and Lucas 2008), but exists nonetheless. The depth of anesthesia can also affect auditory processing, either directly, as is the case for relatively central auditory nuclei, or through an intermediate effect on body temperature. Care should be taken to maintain stable body temperature and consistent depth of anesthesia both within and across AEP recording sessions. In our studies, we routinely used click-evoked ABRs to assess the stability of auditory function during recording sessions.
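
The constructive or destructive combination described above can be made concrete with phasor arithmetic. Assuming two generators produce sinusoidal following responses at the same frequency f with amplitudes a1 and a2 and a latency difference Δt, the recorded amplitude is |a1 + a2·exp(−i2πfΔt)| = √(a1² + a2² + 2a1a2·cos(2πfΔt)). The amplitudes and the 1-ms latency difference below are hypothetical.

```python
import cmath
import math


def combined_amplitude(a1, a2, freq_hz, latency_diff_s):
    """Amplitude of the sum of two same-frequency sinusoidal responses offset in latency."""
    phase = 2.0 * math.pi * freq_hz * latency_diff_s  # phase lag of the later generator
    return abs(a1 + a2 * cmath.exp(-1j * phase))


# Hypothetical generators: amplitudes 1.0 and 0.6, the second one 1 ms later.
for f in (250, 500, 1000):
    print(f, round(combined_amplitude(1.0, 0.6, f, 0.001), 3))
```

At 500 Hz the 1-ms lag is half a cycle, so the two responses largely cancel (amplitude 0.4); at 1000 Hz it is a full cycle, so they reinforce (amplitude 1.6). The same pair of generators can therefore produce a weak or strong recorded response depending only on stimulus frequency.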

2.3 Coevolution Between Signalers and Receivers

Vocal communication signals are very diverse and often mediate evolutionarily important behaviors such as species recognition, mate attraction, territory defense, and group cohesion (Kroodsma and Miller 1996; Marler and Slabbekoorn 2004). Therefore, natural and sexual selection are expected to shape vocal signals in ways that optimize the transfer of information from signalers to receivers (Bradbury and Vehrencamp 2011). Because communication errors can have costly fitness consequences, signal-processing mechanisms in receivers are expected to match the physical properties of communication signals (Endler 1992). This expectation has been referred to as the matched filter hypothesis (Capranica and Moffat 1983) or the sender–receiver matching hypothesis (Dooling et al. 2000; Woolley et al. 2009; Gall et al. 2012a). In this section, we review behavioral and physiological studies looking at the match between signal properties and auditory processing in birds.

The correlation between vocal spectral content and the frequency range of auditory sensitivity (the audiogram) is arguably the most studied dimension of the sender–receiver matching hypothesis in birds. Konishi (1970) obtained auditory thresholds from single units in the cochlear nucleus of ten species of songbirds spanning six families: Emberizidae (five species), Icteridae (one species), Turdidae (one species), Sturnidae (one species), Passeridae (one species), and Fringillidae (one species). Species differences in high-frequency hearing sensitivity appeared to be correlated with differences in the range of frequencies present in the conspecific vocal repertoire, offering support for the sender–receiver matching hypothesis. However, Konishi noted that covariation between vocal frequency content and the range of high-frequency hearing could also be due to differences in body size. The size of sound-producing structures can limit the frequencies that songbirds can produce (Greenewalt 1968). Similarly, the size of the tympanic membrane and middle ear bone (the columella in birds) can limit the frequency range of hearing. Interestingly, however, canaries (Fringillidae) and house sparrows (Passeridae) have poorer high-frequency sensitivity than white-crowned sparrows and white-throated sparrows (Emberizidae), even though the four species are very similar in size. Given the similar spectral content of the vocalizations of these four species, these differences in auditory sensitivity do not reflect a match between signalers and receivers. Although neither signal properties nor body size explains the differences in auditory sensitivity among these species, the differences may instead reflect taxonomic affiliation. In fact, emberizids were overall more sensitive to high-frequency sounds than all other species, suggesting phylogenetic conservation in auditory processing.

Behavioral studies using psychophysical methods have also been used to examine the relationship between hearing and vocal performance. Dooling (1992) reviews these studies, but some patterns are worth noting here. In general, high-frequency hearing sensitivity correlates with the high-frequency components in species-specific vocalizations (Dooling et al. 1978; Dooling 1982). Interestingly, Dooling (1992) found that emberizids are more sensitive to high-frequency sounds than all other families covered in his review. These results are in line with those of Konishi (1970) and suggest that phylogenetic conservation can constrain the evolution of auditory processing mechanisms.

More recently, studies using AEPs to measure peripheral auditory processing have also shown correlations between frequency sensitivity and vocal spectral content. Henry and Lucas (2008) showed that tufted titmice (Baeolophus bicolor), house sparrows (Passer domesticus), and white-breasted nuthatches (Sitta carolinensis) have similar hearing thresholds for sounds with frequencies below 4 kHz (Fig. 2.4). However, ABR thresholds to 6.4-kHz tones were 12–14 dB lower (more sensitive) in tufted titmice than in the other two species. This difference was interpreted as an adaptation for processing the high-frequency alarm calls present in the vocal repertoire of tufted titmice. Following up on these results, Lucas et al. (2015) measured the minimum, peak, and maximum frequencies in calls and songs of these species and of the white-crowned sparrow (Zonotrichia leucophrys). Overall, white-crowned sparrows have higher minimum, peak, and maximum frequencies than all other species. Accordingly, Lucas et al. (2015) also showed that white-crowned sparrows are less sensitive below 4 kHz and more sensitive above 4 kHz than the other species (Fig. 2.4). These results are in line with predictions of the sender–receiver matching hypothesis.

Fig. 2.4 ABR-based audiograms of four songbird species (from Lucas et al. 2015 [Fig. 5]). Audiograms plot tone-evoked ABR thresholds (LS means ± s.e.m.) as a function of stimulus frequency for titmice (Baeolophus bicolor; triangles), nuthatches (Sitta carolinensis; squares), house sparrows (Passer domesticus; circles), and white-crowned sparrows (Zonotrichia leucophrys; diamonds). Thresholds were estimated using a visual detection method. Woodland species: closed symbols and continuous lines; open-habitat species: open symbols and dashed lines.

The sender–receiver matching hypothesis is not restricted to the frequency range of vocal signals and frequency sensitivity. Vocalizations also vary in temporal structure, and the temporal properties of vocal signals can play important roles in avian communication (Beckers and ten Cate 2001). Gall et al. (2012a) investigated whether auditory processing of the rise time (or onset time) and of the sustained portion of sounds varies according to spectro-temporal features of vocalizations in five songbird species. Song elements of brown-headed cowbirds (Molothrus ater) have the most rapid rise times, followed by those of dark-eyed juncos (Junco hyemalis), while song elements of white-crowned sparrows, American tree sparrows (Spizella arborea), and house finches (Carpodacus mexicanus) have the slowest rise times. Based on these song properties, the authors predicted the strongest ABRs to sound onset in brown-headed cowbirds, followed by dark-eyed juncos, and weaker, similar ABRs in the remaining species. Accordingly, onset ABRs were stronger in dark-eyed juncos than in white-crowned sparrows, American tree sparrows, and house finches. Contrary to predictions, however, brown-headed cowbirds had the weakest onset ABRs. With respect to tonality, song elements of white-crowned sparrows, American tree sparrows, and house finches were also the most tonal, with the slowest rates of frequency modulation, followed by those of dark-eyed juncos, while brown-headed cowbird song elements were the least tonal, with the fastest rates of frequency modulation. The authors therefore predicted that FFRs to the sustained portion of sounds would be strongest in white-crowned sparrows, American tree sparrows, and house finches, intermediate in dark-eyed juncos, and weakest in brown-headed cowbirds. As predicted, FFRs to the sustained portion of the sound were weak in brown-headed cowbirds. However, FFRs were strongest in dark-eyed juncos.

With some exceptions, these results largely support the sender–receiver matching hypothesis. Despite having relatively high-frequency vocalizations with rapid onsets, brown-headed cowbirds have weak ABRs to sound onset, particularly at higher frequencies (Gall et al. 2011, 2012b). One possible explanation for this mismatch between signal properties and auditory processing in brown-headed cowbirds relates to their breeding strategy (Gall et al. 2011, 2012b). Brown-headed cowbirds are brood parasites, so processing of heterospecific vocalizations may be very important for locating appropriate hosts. Indeed, as discussed below, Gall and Lucas (2010) report drastic sex differences in auditory filter bandwidth in brown-headed cowbirds that align with processing of heterospecific vocalizations in females. Another possible explanation for the mismatch is phylogenetic conservation. Brown-headed cowbirds and red-winged blackbirds (Agelaius phoeniceus) belong to the family Icteridae and are highly similar in auditory processing (Gall et al. 2011). Phylogenetic conservation could also explain why dark-eyed juncos and American tree sparrows, both members of the Emberizidae, had similar ABRs and FFRs despite differences in their vocalizations. However, white-crowned sparrows, another emberizid species, had ABRs and FFRs more similar to those of brown-headed cowbirds and house finches.

Avian vocalizations often have more than one frequency component. For instance, the vocal repertoire of zebra finches (Taeniopygia guttata) includes harmonic complexes with over 15 frequency components. Differences in the frequency separation and relative amplitude of the components lead to differences in pitch and timbre. Interestingly, the interaction between frequency components generates a gross temporal structure (Bradbury and Vehrencamp 2011) that may be processed in the temporal domain (Hartmann 1998). Through psychophysical experiments, Lohr and Dooling (1998) showed that zebra finches and budgerigars (Melopsittacus undulatus) are better than humans at detecting changes in one frequency component of complex harmonic stimuli. Furthermore, zebra finches performed better than budgerigars, which have predominantly frequency-modulated tonal vocalizations with less complex temporal structure. These results offer support for the sender–receiver matching hypothesis in that birds that produce structurally complex vocalizations are better at processing the temporal structure of complex sounds than birds that produce simple, tonal vocalizations.
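
The point that interacting frequency components create temporal structure can be checked numerically. For a sum of cosine components, the envelope (the magnitude of the analytic signal) can be computed exactly by summing the components as complex exponentials. The hypothetical three-component harmonic complex below has components spaced 700 Hz apart; its envelope repeats every 1/700 s, and shifting all components by the same amount leaves the envelope unchanged, showing that the envelope rate is set by the frequency spacing rather than by the absolute frequencies.

```python
import cmath
import math


def envelope(components, t):
    """Envelope at time t (s) of sum(a * cos(2*pi*f*t)), via the analytic signal sum(a * exp(2j*pi*f*t))."""
    return abs(sum(a * cmath.exp(2j * math.pi * f * t) for f, a in components))


# Hypothetical equal-amplitude components spaced 700 Hz apart.
harmonic = [(2100.0, 1.0), (2800.0, 1.0), (3500.0, 1.0)]
shifted = [(f + 50.0, a) for f, a in harmonic]  # same spacing, no longer multiples of 700 Hz

period = 1.0 / 700.0
for t in (0.0, period / 4, period / 2):
    print(f"t = {t * 1000:.3f} ms  envelope = {envelope(harmonic, t):.3f}")
```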

AEPs have also been used to investigate peripheral auditory processing of the temporal properties of sounds in the context of the sender–receiver matching hypothesis. Henry and Lucas (2008) measured the rates of envelope fluctuation in vocalizations of tufted titmice, house sparrows, and white-breasted nuthatches. Envelope fluctuation rates were higher in the vocalizations of house sparrows and tufted titmice, reaching as high as 1450 Hz. Accordingly, EFRs to amplitude-modulated tones were stronger in house sparrows and tufted titmice than in nuthatches at modulation rates above 1 kHz. These results suggest that species with temporally structured vocalizations have auditory systems with higher temporal resolution. Notably, the song of white-breasted nuthatches is a harmonic complex with strong amplitude fluctuations at rates around 700 Hz. Vélez et al. (2015b) showed that EFRs to tones with envelope fluctuations between 200 and 900 Hz were stronger in white-breasted nuthatches during the spring than in two species with tonal songs: Carolina chickadees (Poecile carolinensis) and tufted titmice.

To conclude, there is ample evidence to support a general match between vocal properties and auditory processing in songbirds. This match, however, can be constrained by gross morphological and physiological differences between species, ultimately due to more distant phylogenetic relationships. For this reason, comparisons across a few distantly related species may produce biased results, and the observed correlations between properties of the vocalizations and the auditory system may not be solely due to coevolution between signalers and receivers. Therefore, we propose that comparative studies of closely related species that take into account different factors potentially shaping the evolution of vocal signals are an important next step to better understand whether and how auditory processing mechanisms and signal properties coevolve. Similarly, studies of variation in auditory processing across populations of the same species that differ in song properties (e.g., Slabbekoorn and Smith 2002; Derryberry 2009) might shed additional light on the correlation between signal design and receiver sensory physiology.

2.4 Habitat Effects on Song and Hearing

The acoustic adaptation hypothesis proposes that habitat structure constrains the evolution of acoustic signals (Morton 1975). In forests, reverberations and excess attenuation of high-frequency sounds favor tonal signals with frequencies below 3–5 kHz. In open habitats, the lack of reverberations and slow amplitude fluctuations imposed by wind select for high-frequency acoustic signals rapidly modulated in amplitude, frequency, or both (Morton 1975; Marten and Marler 1977; Wiley and Richards 1978; Richards and Wiley 1980; Wiley 1991). Birds have long been used as a model system to test the acoustic adaptation hypothesis. Results from these studies often agree with predictions from the acoustic adaptation hypothesis and show that species that live in forests have more tonal songs with lower frequencies than species that live in open habitats (reviewed in Boncoraglio and Saino 2007). Based on the sender–receiver matching hypothesis, habitat-dependent differences in song properties could lead to habitat-related differences in auditory processing of tonal versus temporally structured sounds and low-frequency versus high-frequency sounds.

We can use auditory filter properties as an index of the trade-off between processing tonal versus temporally structured sounds. Narrow filters have greater spectral resolution, whereas broad filters have greater temporal resolution (Henry et al. 2011). Because selection favors vocal signals that are tonal in forests and amplitude-modulated in open habitats, Henry and Lucas (2010b) predicted narrower auditory filters in forest species than in open-habitat species. As predicted, auditory filters were generally narrower in forest species than in open-habitat species (Fig. 2.5). Auditory filter bandwidth was significantly lower in white-breasted nuthatches, a forest species, than in house sparrows and white-crowned sparrows, both open-habitat species. Tufted titmice, another forest species, had significantly narrower auditory filters than house sparrows, but not than white-crowned sparrows.
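
The inverse relationship between filter bandwidth and temporal resolution can be made concrete with a simple model. The sketch below treats an auditory filter as a two-pole resonator, an assumption made purely for illustration (ABR-based avian auditory filters are not literally resonators). For such a filter with bandwidth B (in Hz), the envelope of the impulse response decays approximately as exp(−πBt), so the time the filter keeps "ringing" after a brief sound scales as 1/B. Halving the bandwidth doubles the ringing time, which smears rapid amplitude fluctuations together.

```python
import math


def ring_time_s(bandwidth_hz, drop_db=20.0):
    """Time for a two-pole resonator's envelope, exp(-pi*B*t), to fall by drop_db."""
    return math.log(10 ** (drop_db / 20.0)) / (math.pi * bandwidth_hz)


def simulated_ring_time_s(center_hz, bandwidth_hz, fs_hz=48000, drop_db=20.0):
    """Impulse response of a digital two-pole resonator; returns the time of the
    last sample whose magnitude is within drop_db of the peak magnitude."""
    r = math.exp(-math.pi * bandwidth_hz / fs_hz)   # pole radius sets the ~-3 dB bandwidth
    w0 = 2 * math.pi * center_hz / fs_hz             # center frequency in rad/sample
    a1, a2 = 2 * r * math.cos(w0), -r * r
    y1 = y2 = 0.0
    out = []
    for n in range(int(0.05 * fs_hz)):               # 50 ms is ample for these bandwidths
        x = 1.0 if n == 0 else 0.0                   # unit impulse input
        y = x + a1 * y1 + a2 * y2
        out.append(y)
        y1, y2 = y, y1
    floor = max(abs(v) for v in out) * 10 ** (-drop_db / 20.0)
    last = max(i for i, v in enumerate(out) if abs(v) >= floor)
    return last / fs_hz
```

With these hypothetical values, `ring_time_s(200)` is about 3.66 ms and `ring_time_s(400)` about 1.83 ms, and simulated resonators centered at 3 kHz agree with the formula to within roughly one period of the center frequency. The center frequency and bandwidths are arbitrary illustrative choices, not the filter bandwidths reported by Henry and Lucas (2010b).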

Acoustic structure of long-range vocalizations (songs) and ABR-based estimates of auditory filter bandwidth (frequency resolution) in four songbird species (from Henry and Lucas 2010b [Figs. 4 and 5]). Spectrograms of song notes from (a) three woodland species (dark-eyed junco, DEJU; tufted titmouse, ETTI; white-breasted nuthatch, WBNU) and (b) two open-habitat species (house sparrow, HOSP; white-crowned sparrow, WCSP). Song notes separated by a dashed line within the same panel are different examples. (c) Average auditory filter bandwidths among four of the study species while controlling for the effect of frequency. Data points represent least squares means ± s.e.m. of the species effect. Auditory filter bandwidths of the dark-eyed junco (not shown) are similar to those of the open-habitat species from 2 to 3 kHz and lower than those of the woodland species at 4 kHz.

Interestingly, auditory filter properties of dark-eyed juncos, another forest species, differed drastically from those of all other species. While auditory filter bandwidth increased with increasing frequency in all other species, it remained constant across test frequencies from 2 to 4 kHz in dark-eyed juncos. This result is striking for two main reasons. First, auditory filters with constant bandwidth across frequencies are uncommon and tend to occur in auditory specialists like barn owls (Tyto alba; Köppl et al. 1993) and echolocating species (Suga et al. 1976; Popov et al. 2006). Second, the pattern differs from those of the other forest species and from that of white-crowned sparrows, the species most closely related to dark-eyed juncos in the study. These results suggest that factors other than habitat and phylogenetic relatedness may have shaped auditory filter properties in dark-eyed juncos. Henry and Lucas (2010b) also found that the auditory filters responded more efficiently (measured as the signal-to-noise response threshold) in open-habitat species than in forest species. This result was interpreted as a possible adaptation to compensate for the inherently lower sensitivity in noise of broad auditory filters.

Lucas et al. (2015) followed up on this study by examining both the properties of the vocalizations and the gross and fine temporal processing of complex tones in two forest species (white-breasted nuthatches and tufted titmice) and two open-habitat species (house sparrows and white-crowned sparrows). Consistent with the acoustic adaptation hypothesis, they showed that, overall, songs of open-habitat species have higher entropy and higher peak and maximum frequencies than those of forest species. Entropy is a measure of the relative tonality of a signal, with low values representing tonal sounds and high values representing noisy sounds. One result was inconsistent with the acoustic adaptation hypothesis: white-breasted nuthatch songs are composed of a series of harmonics (Ritchison 1983) that generate a strong amplitude modulation (see Footnote 1) in this forest-adapted species. Moreover, the properties of call notes of both woodland species were inconsistent with the prediction of low-frequency, tonal vocalizations in forest habitats. However, calls are commonly produced in winter, when the assumption of propagation-induced sound degradation is less valid due to the lack of leaves.
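
Because entropy carries much of the tonal-versus-noisy comparison here, a brief sketch may help. One common formulation, assumed here (the specific entropy measure used by Lucas et al. 2015 may differ), is the Shannon entropy of the normalized power spectrum, scaled to run from 0 (all energy in a single frequency bin, as in a pure tone) to 1 (energy spread evenly across bins, as in white noise).

```python
import math
import random


def power_spectrum(x):
    """Naive DFT power for bins 1..N/2-1 (DC and Nyquist skipped for simplicity)."""
    n = len(x)
    spec = []
    for k in range(1, n // 2):
        re = sum(v * math.cos(2 * math.pi * k * i / n) for i, v in enumerate(x))
        im = sum(v * math.sin(2 * math.pi * k * i / n) for i, v in enumerate(x))
        spec.append(re * re + im * im)
    return spec


def spectral_entropy(x):
    """Shannon entropy of the normalized power spectrum, scaled to 0..1:
    near 0 for a pure tone, high (toward 1) for broadband noise."""
    p = power_spectrum(x)
    total = sum(p)
    probs = [v / total for v in p if v > 0]
    h = -sum(q * math.log(q) for q in probs)
    return h / math.log(len(p))
```

With `n = 256`, a pure tone placed on bin 16 (`[math.sin(2 * math.pi * 16 * i / n) for i in range(n)]`) scores close to 0, whereas Gaussian noise (`rng = random.Random(1)`, then `[rng.gauss(0, 1) for _ in range(n)]`) scores much higher, near 1. Song analyses typically compute such a measure over short successive spectrogram windows; a single naive DFT keeps this example dependency-free.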

Based on differences in vocalizations and in auditory filter bandwidth (Henry and Lucas 2010b), Lucas et al. (2015) predicted that the auditory system of open-habitat species should respond more strongly to the amplitude envelope of complex tones, while the auditory system of forest species should respond more strongly to the individual spectral components of complex tones. They measured EFRs and FFRs evoked by complex tones of two and three frequency components with a fundamental frequency of 600 or 1200 Hz. As predicted, EFRs to the 600 Hz amplitude modulation rate of a complex tone were stronger in open-habitat species than in forest species. Interestingly, within the forest species, EFRs were stronger in nuthatches than in tufted titmice when the complex tone had an AM rate of 600 Hz, a rate that more closely resembles the amplitude fluctuations of nuthatch vocalizations. In contrast, FFRs to the different spectral components of the complex tones varied little across habitats. In fact, FFRs were strongest in white-crowned sparrows, an open-habitat species. These results cannot be explained by differences in overall auditory sensitivity: audiograms of these four species show that white-crowned sparrows are actually less sensitive to sounds in the frequency range of the complex tones used in the experiment (Lucas et al. 2015). Strong EFRs and FFRs in white-crowned sparrows may be due to greater acoustic complexity in their songs, as discussed below.
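
Why a response can follow the envelope of a complex tone at a frequency where the stimulus itself has no energy can be shown with a toy model. The sketch assumes (i) a two-component complex at 1800 and 2400 Hz, i.e., hypothetical harmonics 3 and 4 of a 600 Hz fundamental (the exact components used by Lucas et al. 2015 are not given here), and (ii) half-wave rectification as a crude stand-in for hair-cell transduction. The spectral amplitude of the rectified output at F0 plays the role of the EFR, and the amplitude at each stimulus component plays the role of the FFR.

```python
import math


def complex_tone(components_hz, fs_hz, dur_s):
    """Equal-amplitude sum of cosines at the given component frequencies."""
    n = int(round(fs_hz * dur_s))
    return [sum(math.cos(2 * math.pi * f * i / fs_hz) for f in components_hz)
            for i in range(n)]


def half_wave_rectify(x):
    """Crude stand-in for hair-cell transduction: keep only positive half-cycles."""
    return [v if v > 0 else 0.0 for v in x]


def bin_amplitude(x, freq_hz, fs_hz):
    """Amplitude of a single DFT component; exact when freq_hz falls on a bin."""
    n = len(x)
    re = sum(v * math.cos(2 * math.pi * freq_hz * i / fs_hz) for i, v in enumerate(x))
    im = sum(v * math.sin(2 * math.pi * freq_hz * i / fs_hz) for i, v in enumerate(x))
    return 2.0 * math.hypot(re, im) / n


def efr_ffr(components_hz, f0_hz, fs_hz=48000, dur_s=0.1):
    """Return (stimulus amplitude at F0, model 'EFR' at F0,
    dict of model 'FFR' amplitudes at each stimulus component)."""
    stim = complex_tone(components_hz, fs_hz, dur_s)
    resp = half_wave_rectify(stim)
    efr = bin_amplitude(resp, f0_hz, fs_hz)
    ffr = {f: bin_amplitude(resp, f, fs_hz) for f in components_hz}
    return bin_amplitude(stim, f0_hz, fs_hz), efr, ffr
```

For `efr_ffr([1800, 2400], 600)`, the stimulus amplitude at 600 Hz is essentially zero, yet the rectified "response" has a clear 600 Hz component alongside components at 1800 and 2400 Hz. In this toy model the envelope term arises entirely from the nonlinearity; real EFR and FFR amplitudes also depend on filtering, phase locking, and recording conditions that the sketch ignores.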

One surprising result was the potential for forest species to process harmonic stacks in two ways. The first is processing of the envelope fluctuations of complex tones, as reflected in the envelope following response described above. The second is spectral enhancement, in which processing of the tonal properties of a given harmonic is enhanced when that harmonic is coupled with the next lower harmonic in the series. Nuthatches have a simple vocal repertoire that includes a call and a song with strong harmonic content. Thus, enhanced processing of both the individual spectral components and the envelope fluctuations of sounds may be necessary to decode all of the information in conspecific vocalizations, as suggested by Vélez et al. (2015a).

These studies, together with those on the sender–receiver matching hypothesis, show that comparative studies that take into account the different factors that can shape the evolutionary design of vocal signals are necessary to better understand the evolution of auditory processing mechanisms. In a comparative study of nine species of New World sparrows, Vélez et al. (2015a) investigated whether auditory sensitivity to the frequency of sounds depends on song frequency content, song structure, or habitat-dependent constraints on sound propagation. The species selected included three that predominantly live in forests, three in scrub-like habitats, and three in open habitats. Within each habitat, one species produces songs that are simple and tonal, one produces trilled songs composed of one element repeated throughout the entire song, and one produces complex songs that include tones, trills, and buzzes. Importantly, the more closely related species in the study neither occupy similar habitats nor have structurally similar songs. Consistent with the acoustic adaptation hypothesis, Vélez et al. (2015a) found that songs of forest species are more tonal and have lower frequencies than songs of species that live in open habitats. Interestingly, species from different habitats had very similar hearing, as evidenced by audiograms obtained with ABRs. High-frequency hearing sensitivity, however, differed between species with different song types: species that produce complex songs were more sensitive to high-frequency sounds than all other species. Why auditory sensitivity correlates with song structure is an open question. One possibility is that birds with complex songs, like the white-crowned sparrow, utilize a broader range of frequencies in order to decode all of the note types in their songs. Thus, the amount of information encoded in songs may correlate with auditory sensitivity.
These results highlight the importance of considering the multiple dimensions of signals, and how the different dimensions interact, when studying the evolution of signal-processing mechanisms.

Studies of within-species variation are fundamental for understanding evolutionary processes. Several studies have shown how habitat-dependent constraints on sound propagation lead to divergence in song properties across conspecific populations inhabiting different environments (reviewed in Slabbekoorn and Smith 2002; Derryberry 2009). More recently, growing conservation efforts have set the stage for studies on the effects of urbanization and anthropogenic noise on animal communication. Gall et al. (2012c) showed that urbanization, as well as habitat, affects the active space of brown-headed cowbird song. Furthermore, differences in the acoustic properties of bird vocalizations between urban and rural conspecific populations have been reported (Slabbekoorn and Peet 2003; Ríos-Chelén et al. 2015). Despite the breadth of studies on population-level variation in signal properties, differences in auditory processing between conspecific populations have been overlooked. To better understand the evolution of auditory processing mechanisms, future studies should examine how auditory processing correlates with habitat-dependent and noise-dependent differences in vocal properties within species.

2.5 Seasonal Auditory Plasticity

As discussed earlier, auditory processing, particularly at the periphery, is often assumed to be static. However, an emerging body of work suggests that there is actually considerable plasticity in the auditory periphery of birds (Lucas et al. 2002, 2007; Henry and Lucas 2009; Caras et al. 2010; Gall et al. 2013; Vélez et al. 2015b), as well as fish (Sisneros et al. 2004; Vasconcelos et al. 2011; Coffin et al. 2012) and anurans (Gall and Wilczynski 2015).

Peripheral auditory processing was first shown to be influenced by season, and consequently by the likely reproductive state of birds, in 2002. Lucas et al. (2002) used AEPs (specifically the ABR) to investigate responses to clicks in five species (downy woodpeckers, Picoides pubescens; white-breasted nuthatches; tufted titmice; Carolina chickadees; and house sparrows) tested in both winter and spring, and in a sixth species (European starlings, Sturnus vulgaris) tested only in winter. The clicks were presented at several rates and amplitudes. The authors were surprised to find relatively small differences in the overall average latency and amplitude of the ABR across the six species (i.e., no or only small main effects of species in their models). However, adding season and the season × species interaction revealed some unexpected patterns. In particular, Lucas et al. (2002) found that the amplitude of the ABR was significantly lower in spring than in winter for nuthatches and woodpeckers, whereas in chickadees and sparrows it was greater in spring than in winter.

Lucas et al. (2007) then investigated responses to tones in three species: Carolina chickadees, tufted titmice, and white-breasted nuthatches. They found seasonal plasticity that largely mirrored their previous findings. Chickadees tended to have stronger responses (greater amplitude) in the spring compared to the winter, while nuthatches had greater amplitude responses in the winter compared to the spring. The changes in the chickadees seemed to affect responses across a range of frequencies, while in nuthatches the plasticity was restricted to a narrow range of frequencies (1–2 kHz). Titmice did show seasonal plasticity in their onset response to tones (ABR), but did not show plasticity in their sustained responses (FFRs).

Henry and Lucas (2009) next investigated frequency sensitivity in the house sparrow. They found that while the amplitude of ABRs to tone burst stimuli showed seasonal plasticity, there was no variation in ABR-based absolute thresholds (the audiogram) or the latency of the ABR response. The amplitudes of the response to tones from 3.2 to 6.4 kHz were greater in the spring than in the fall (Fig. 2.6). Again, these results mirror the initial findings of Lucas et al. (2002), with amplitude of the responses increasing during the spring months, when house sparrows are in reproductive condition.

Seasonal variation in the amplitude of tone-evoked ABRs in the house sparrow (from Henry and Lucas 2009 [Fig. 3]). Average ABR amplitude in spring, summer, and autumn (see legend) at frequencies of (a) 0.8–4.2 kHz and (b) 6.4 kHz. Error bars represent 95 % confidence intervals. Note that spring data are offset −150 Hz, autumn data are offset +150 Hz, and amplitude scales differ between panels

Gall et al. (2013) and Vélez et al. (2015b) extended the work on seasonality by investigating temporal and frequency resolution during autumn (nonbreeding) and spring (breeding). In the house sparrow, Gall et al. (2013) found no (or very limited) main effects of season on temporal resolution and frequency resolution. However, temporal and frequency resolution did show significant seasonal variation when sex and season were considered in concert. Similarly, in other bird species, Vélez et al. (2015b) found no main effect of season on the response of Carolina chickadees, tufted titmice, or white-breasted nuthatches to the amplitude envelope or the fine structure of amplitude-modulated tones. However, the response of titmice and chickadees to the fine structure of sound (FFR) did differ seasonally when sex and season were considered simultaneously. These sex × season interactions are discussed in greater detail in Sect. 2.6. Finally, Vélez et al. (2015b) found seasonal changes in the EFR in each of the species, but only at a subset of amplitude modulation rates.

The work described above strongly suggests that there is seasonal plasticity in the adult auditory periphery in songbirds. There are two natural questions that follow from these observations: (1) What mechanisms underlie this plasticity? (2) What, if anything, is the function of this plasticity? While there has been some work addressing the first question, at this point we can only speculate about the potential functions of this plasticity.

There are several possible mechanisms that could be responsible for plasticity in the auditory periphery of songbirds. The most likely candidate seems to be that seasonally or reproductively related changes in hormone levels influence the electrical tuning of hair cells. Work in midshipman fish has shown that seasonal changes in auditory sensitivity are correlated with plasma steroid hormone levels (Rohmann and Bass 2011). The tuning of hair cells in these fish is largely determined by large-conductance ion channels, and seasonal changes in auditory tuning are linked to the expression of big potassium (BK) channels (Rohmann et al. 2009, 2013, 2014). Furthermore, manipulation of BK channels in larval zebrafish leads to changes in auditory sensitivity (Rohmann et al. 2014). In songbirds, estrogen receptors and aromatase have been found in hair cells (Noirot et al. 2009), and exogenous steroid hormones lead to plasticity in the amplitude of the ABR (Caras et al. 2010). Other possible mechanisms of plasticity, which remain unexplored in songbirds, include the addition of hair cells in the inner ear [hair cell addition has been linked to seasonal changes in sensitivity in fish (Coffin et al. 2012)] or the replacement or remodeling of hair cell structure. Songbirds are capable of regenerating hair cells following injury (Marean et al. 1998); however, it is not clear whether this mechanism could be involved in seasonal plasticity. Finally, auditory feedback from mate attraction signals may result in developmental changes that serve to enhance peripheral responses to those calls. This phenomenon has been seen in the auditory periphery of frogs (Gall and Wilczynski 2015) and in higher-order auditory processing areas in songbirds (Sockman et al. 2002, 2005) and frogs (Gall and Wilczynski 2014).

There are several hypotheses regarding the function of seasonal plasticity. Most commonly, this plasticity is assumed to enhance the ability of individuals to respond to vocal signals during the breeding season. However, this could be equally accomplished by having highly sensitive hearing year-round. There are two main mechanisms, then, by which plasticity may be favored over year-round sensitivity. The first is that plasticity may reduce energetic expenditure on sensory tissue during the nonbreeding season, as sensory tissues are expensive to maintain (Niven and Laughlin 2008). A similar mechanism is thought to drive plasticity in neural tissues controlling song production in male birds. An alternative explanation, particularly for plasticity in temporal and frequency resolution, is that these changes allow enhanced detection of seasonally specific sounds in both seasons. For instance, enhanced frequency resolution may be beneficial for females selecting mates on the basis of their vocalizations, while enhanced temporal resolution may be beneficial for both sexes in the nonbreeding season when signals about food or predation risk may be particularly important.

A second hypothesis about the function of plasticity is that plasticity may gate the salience of particular signals, rather than enhance their detection or discrimination. This idea has been largely unexplored in songbirds, although there is some support from other taxa. For instance, female frogs for which egg laying is imminent will respond with positive phonotaxis to male calls that are less attractive, while females earlier in their reproductive trajectory are more discriminating (Lynch et al. 2005, 2006). These changes in behavior are correlated with hormone profiles (Lynch and Wilczynski 2005), which are in turn correlated with changes in midbrain sensitivity to male calls (Lynch and Wilczynski 2008). When reproductive hormone levels are increased by administering gonadotropin, the overall activity of the midbrain is increased, suggesting that even relatively poor calls are likely to activate sensory processing areas when females are close to laying eggs. Therefore, plasticity in the responsiveness of the auditory midbrain to vocalizations may gate the salience of these signals for evoking behavioral responses. Similarly, birds’ enhanced sensitivity, or an altered balance of frequency and temporal resolution, may gate the salience of male reproductive signals. This sensory gating could then work in concert with hormonally induced changes in female motivation to modulate reproductive behavior.

2.6 Sex Differences in Auditory Processing

In birds, and in particular songbirds, there are often great differences between males and females in the production of vocalizations. Yet despite the well-studied variation between the sexes in signal production, relatively little work has focused on variation in sensory processing between the sexes. In the auditory system, this oversight seems to have two primary causes. The first is that variation (both among seasons and between the sexes) in behavioral responses to signals has generally been attributed to motivational differences rather than to differences in sensory processing. The second is that the methodologies most commonly used to investigate auditory processing in birds were not well suited to the investigation of sex differences.

Prior to the early 2000s, most of what we knew about general aspects of avian audition was the result of either psychophysics or single-unit electrophysiological recordings. In both circumstances, the number of subjects is necessarily limited and the effect of sex on audition was, therefore, generally not considered. The early work on AEPs in birds was conducted in chickens and ducks, and focused on validating the methodology, on the development of auditory sensitivity, or on broad taxonomic comparisons, but did not investigate sex differences (Saunders et al. 1973; Aleksandrov and Dmitrieva 1992; Dmitrieva and Gottlieb 1994).

Much of the work on AEPs in songbirds has either not considered the sex of the animals (Brittan-Powell and Dooling 2002, 2004; Lucas et al. 2002) or did not find strong effects of sex on auditory responses (Lucas et al. 2007, 2015; Henry and Lucas 2008, 2010b; Caras et al. 2010; Vélez et al. 2015a; Wong and Gall 2015). For instance, Lucas et al. (2007) found no main effects of sex and marginal effects of sex × season and sex × species on the processing of tones when investigating three species of birds, although the within-season sample size for each sex was quite low (one to three individuals). Similarly, Henry and Lucas (2008) found no effects of sex on frequency sensitivity or temporal resolution in three species (tufted titmice, white-breasted nuthatches, and house sparrows) that were tested primarily during the nonbreeding season. Nor did Lucas et al. (2015) find sex differences in the processing of complex tones in four species of songbird sampled during the nonbreeding season. Additionally, when samples are pooled across the breeding and nonbreeding seasons, sex effects have rarely been observed (Henry and Lucas 2010b).

Sex differences were first observed by Henry and Lucas (2009) in an investigation of seasonal patterns of frequency sensitivity in the house sparrow. They found that the ABR amplitude of male house sparrows increased at a greater rate than the ABR amplitude of females as the amplitude of the stimulus increased. They did not find any effects of sex on auditory thresholds or the latency of the ABR response. (They did not investigate the sex × season interaction due to inadequate sample sizes.) Henry and Lucas (2010a) then investigated frequency sensitivity in Carolina chickadees following the breeding season (September to November) but during a time of pair formation (Mostrom et al. 2002). Here they found marginal main effects of sex on ABR thresholds, but a clear effect of the sex × frequency interaction on ABR amplitude and latency. Generally, males tended to have lower thresholds and greater amplitudes, but longer latencies. Additionally, Henry and Lucas (2010a) found that males had greater frequency resolution than females during this time. In the same year, Gall and Lucas (2010) found that frequency resolution of brown-headed cowbirds sampled during the breeding season also showed large differences between the sexes, with females having greater frequency resolution than males. Similarly, in both brown-headed cowbirds and red-winged blackbirds tested during the breeding season, females had slightly lower ABR thresholds and much greater ABR amplitudes than males (Gall et al. 2011).

These early data suggested that sex differences in auditory processing occur but that the exact nature of these differences is both species specific and time specific. In particular, this early work seemed to suggest that sex differences are greatest during times of pair formation and breeding. Additionally, these differences seemed to be greatest in species with a high degree of sexual dimorphism and in species in which mate assessment occurs over a relatively short time period.

The Carolina chickadee and blackbird data led to the hypothesis that sex and season interact to influence auditory processing in songbirds in a way similar to how they interact to influence the production of mate attraction signals. In temperate songbirds, the utility of mate attraction signals decreases during the nonbreeding season; thus, at these times it is more energy efficient to downregulate neural tissue devoted to song production than to maintain these tissues through long periods of disuse. Similarly, auditory processing may be modulated over the course of the year to match the auditory stimuli of greatest importance. Based on this hypothesis, we would predict that the sexes differ in their auditory processing when females are evaluating mate attraction signals, but that auditory processing of the sexes converges during the nonbreeding season. These changes could either improve female discrimination of vocalizations or alter the downstream neural population responding to a particular stimulus, thus altering the salience of mate attraction signals during pair formation. In this way, peripheral changes in audition could gate the release of mating behavior, potentially independently of or in concert with changes in motivation. The hypothesis assumes either that, for males, the benefits of enhanced auditory processing during the breeding season do not offset its costs, or that males and females process different elements of male vocalizations. During the nonbreeding season, when males and females may need to respond to similar auditory stimuli, such as those related to predation and foraging, auditory processing is expected to converge.

This hypothesis was tested for the first time in house sparrows, with an investigation of frequency and temporal resolution (Gall et al. 2013). Henry et al. (2011) had previously shown that frequency resolution and temporal resolution are inversely related to one another, both at the species and individual level in songbirds. Gall et al. (2013) found that in the nonbreeding season, there was no difference between males and females in temporal resolution or frequency resolution. However, during the breeding season, females had greater frequency resolution than males, but poorer temporal resolution. This seasonal pattern seems to be due to plasticity in females, as males did not exhibit auditory variation between the seasons, while females did (Fig. 2.7).

Seasonal variation in ABR-based estimates of frequency resolution (top) and temporal resolution (bottom) in male and female house sparrows (from Gall et al. 2013 [Fig. 1]). Note that females (black diamonds) show an increase in frequency resolution (manifest as a reduction in auditory filter bandwidth) from the nonbreeding season (autumn) to the breeding season (spring) and, as a possible consequence, a concomitant decrease in temporal resolution (reduction in ABR recovery in response to a second click)

This pattern of sex-specific seasonal plasticity in frequency resolution and temporal resolution, as well as in the processing of pure tones, has now been observed in several other species. For instance, Vélez et al. (2015b) found that in the winter there were no sex differences in the EFR, a measure of temporal resolution, in three species of songbirds (tufted titmice, white-breasted nuthatches, and Carolina chickadees). However, in the spring there were significant differences between the sexes in their ability to follow certain rates of temporal modulation. In titmice and in chickadees, males tended to have a greater ability than females to follow temporal modulation in the spring. Similarly, the strength of FFRs to pure tones did not differ between the sexes during the winter, but did differ during the spring, when females typically had greater FFR amplitude in response to pure tones than males.

The data from titmice, white-breasted nuthatches, and Carolina chickadees tell a story similar to that of house sparrows and red-winged blackbirds: frequency resolution improves in females during the breeding season at the expense of temporal processing. Frequency sensitivity, when measured as the amplitude of the ABR to the onset of a single-frequency sound stimulus, also tends to be greater in females than in males during the breeding season (Gall et al. 2012b). However, other types of auditory processing tend to be less plastic. For instance, in these same species, there are only small differences in ABR-based auditory thresholds, even during the breeding season (Vélez et al. 2015a). Therefore, different facets of auditory processing in songbirds should be treated independently when investigating sex effects, and future research should explicitly account for both season and stimulus type when investigating sex-specific auditory processing.

2.7 Individual Variation in Auditory Physiology

There is enormous variation at several scales in the structure of signals: among species (Gerhardt and Huber 2002; Catchpole and Slater 2008), among individuals of the same species (e.g., between sexes; Catchpole and Slater 2008), and within individuals over time (Hill et al. 2015; Maddison et al. 2012). Variation in signal structure (the phonemes or other elements characteristic of a signal) is often related to the function of the signal (Bradbury and Vehrencamp 2011). Signal function also partly determines the level of variation in signal structure at the species and individual levels. For example, signals used in interspecies communication tend to converge at the species level: mobbing or alarm calls share similar acoustic features between species that flock together (Ficken and Popp 1996; Hurd 1996). Interestingly, these calls also tend to be similar at the individual level. In contrast, elements of signals that denote species identity (i.e., species badges) are known to diverge among species, but individuals of the same species tend to share these signal elements (Gerhardt 1991). Finally, honest signals that denote individual quality diverge in structure at the species level (i.e., different species use different signals), and individuals within a species also differ (Grafen 1990; Searcy and Nowicki 2005).

In this chapter we have discussed a parallel set of scales with respect to variation in the auditory system: among species, between sexes, and within individuals over time (also see Dangles et al. 2009). The functional attributes of this variation tend to be fairly straightforward: species differences in auditory processing reflect, in part, constraints imposed by habitat on signal propagation (Morton 1975; Wiley 1991); sex differences in auditory processing may reflect differences in the requirement for processing sex-specific aspects of vocal signals (Gerhardt and Huber 2002); variation within individuals over time may reflect several factors such as seasonal variation in the use of different signal types (Catchpole and Slater 2008).

One of the smallest ecological scales we can consider is variation within sex and within season. At this scale, the correspondence between signal evolution and the evolution of sensory systems appears to be relatively underexplored (Ronald et al. 2012). Indeed, Dangles et al. (2009) discussed variability in sensory ecology across a variety of scales, from populations to individual development, but they did not address variability among individuals of the same age and sex.

While the relationship within individuals between signal evolution and sensory evolution is poorly understood, the functional aspects of individual variability in signal design itself are well documented. Indeed, the signal side of individual variability is the heart of our theoretical framework for sexual selection. For example, the classic handicap principle offers a hypothesis for why males should differ in the intensity of a signal (Zahavi 1975; Grafen 1990): signals will evolve to be costly to the signaler if the magnitude of the cost is relatively higher for low-quality signalers than for high-quality signalers. However, this theory rests on the implicit assumption that a signal of a given intensity, and therefore the information contained within that signal, is a fixed entity with a characteristic cost (e.g., Grafen 1990; Johnstone 1995; Searcy and Nowicki 2005). Logically, if the value of a signal with a specific set of properties is to be treated as a fixed entity, then either all receivers must process that signal in an identical way, or the mapping of signal properties onto signal information content (see Bradbury and Vehrencamp 2011; Hailman 2008) must result in an identical signal valuation across receivers. We have known for some time that receivers do vary in their responses to signals, although the factors studied so far primarily affect functional aspects of mating decisions. For example, individual variation in mate choice is affected by a variety of factors, such as the physical and social signaling environment (Gordon and Uetz 2011; Clark et al. 2012), previous experience (Bailey 2011; Wong et al. 2011), genetic differences between choosing individuals (Chenoweth and Blows 2006; Horth 2007), and female condition (Cotton et al. 2006).

Treating the signal as a fixed entity ignores potential variability in the sensory processing capabilities of receivers. Moreover, if signal processing varies among individuals (i.e., individuals differ in their capacity to extract information from a signal), then the information content decoded from a signal may also vary among individuals, particularly in the case of complex signals (Kidd et al. 2007). Individual variation in sensory processing is theoretically important because it can alter the fitness consequences of signals in several ways. First, the relative fitness accrued from expressing a particular signal will become more variable if different receivers detect and process signals differently. Second, if sensory processing differs among groups of receivers (e.g., age groups, or groups of individuals that differ in their exposure to various sounds), then signal efficacy will depend on the specific group to which a signal is directed. This raises two questions: does signal processing in fact vary among individuals, and is this variation group specific? The answer to both questions is “yes.”

Individual variation in signal processing that is likely to alter mate-choice decisions has been demonstrated in several systems. Henry et al. (2011) found individual variation in ABR-based auditory filter bandwidth in a sample of Carolina chickadees. Moreover, they demonstrated that chickadees with broader filters had greater auditory temporal resolution (measured with paired-click stimuli) than chickadees with narrower filters. Thus, individual chickadees vary in the degree to which they can resolve temporal cues (such as amplitude modulation) and spectral cues (such as frequency properties) in any given vocal signal. Similarly, Ensminger and Fernandez-Juricic (2014) found individual variation in cone density in the eyes of house sparrows. Chromatic contrast models showed that these differences in cone density would result in differences in the ability of females to detect the quality of plumage signals known to be associated with mate choice. This individual variation in signal processing may alter the signaler's capacity to encode relevant information in a signal, and it may also limit the receiver's capacity to decode that information.

Group-specific variation is most easily shown in animals exposed to different environments. For example, Gall and Wilczynski (2015) demonstrated that green treefrogs (Hyla cinerea) exposed to species-specific vocal signals as adults had altered peripheral auditory sensitivity compared with frogs that were not exposed to vocal signals. Phillmore et al. (2003) found that isolate-reared black-capped chickadees (Poecile atricapillus) had more difficulty identifying individual-specific vocalizations than field-reared birds. These results are similar to those of Njegovan and Weisman (1997), who showed that isolate-reared chickadees also have impaired pitch discrimination.

There are three potential mechanisms that generate individual variation in auditory physiology: age-related differences, experience-related differences, and hormone-related differences. All of these mechanisms can cause striking phenotypic plasticity in the auditory system. Age-related effects on hearing are well documented in humans (Clinard et al. 2010; He et al. 2007; Mills et al. 2006; Pichora-Fuller and Souza 2003) and in a variety of model systems (e.g., mice, Mus musculus, Henry 2002; rats, Rattus norvegicus, Parthasarathy and Bartlett 2011). Differences in rearing conditions potentially shape many aspects of auditory processing, from frequency coding and tonotopic maps to spatial processing and vocalization coding (Sanes and Woolley 2011; Woolley 2012; Dmitrieva and Gottlieb 1994). The auditory system is relatively plastic, even in adults. AEPs can change in frogs as a result of exposure to simulated choruses (Gall and Wilczynski 2015). In people with hearing deficits who undergo auditory training, AEP patterns can change in ways that indicate improved auditory processing (Russo et al. 2005). Compared with nonmusicians, musicians show enhanced auditory processing of tones (Musacchia et al. 2007), as do people who speak tonal languages such as Mandarin (Krishnan and Gandour 2009). Musicians are also better than nonmusicians at solving the cocktail party problem, that is, detecting and recognizing speech in noise (Swaminathan et al. 2015; see Miller and Bee 2012). These examples show an explicit link between experience-dependent auditory plasticity and vocal communication.

Hormone levels, particularly estrogen levels, are known to affect auditory performance through both developmental and activational effects (Caras 2013). Hormone effects are particularly important in seasonal plasticity of the auditory system, where they promote retuning of the auditory system in a wide variety of taxa, including fish (Sisneros 2009), anurans (Goense and Feng 2005), birds (Caras et al. 2010), and mammals (Hultcrantz et al. 2006). However, as discussed earlier in this chapter, the details of this retuning can be quite complex. These hormone effects can, in turn, contribute to individual variability if individuals differ either in the timing of the reproductive cycle or in mean estrogen levels during that cycle.

Charles Darwin’s theory of natural selection (Darwin 1872) is the framework within which almost all of biological thought is built. One of its three tenets is variation between individuals within a population. It therefore seems odd that we spend so little time thinking about this variation in the context of sensory processing.

2.8 AEP Responses to Natural Vocalizations

In this section we describe some new analyses of AEPs in response to natural vocalizations that illustrate a number of factors we have discussed in this chapter. Virtually all of our own work has focused on the auditory processing of either simple sounds (clicks and tones) or sounds that mimic elements of vocal signals, such as harmonic tone complexes and amplitude-modulated sounds. While these studies provide critical information about auditory processing, it is particularly important to address how auditory systems process real vocal signals. This approach has led to important insights into hearing deficits in humans (Johnson et al. 2005, 2008) and into the processing of songs in the avian auditory forebrain (Amin et al. 2013; Elie and Theunissen 2015; Lehongre and Del Negro 2011).

The beauty of AEPs is that they can be generated with any input stimulus, including natural vocal signals. The processing of a vocal signal will generate a complex AEP waveform that can be quantified in a number of ways, although we take only a single approach here: cross-correlation of response waveforms. We restrict our analysis to three signals: a tufted titmouse (hereafter ETTI) song element, a white-breasted nuthatch (hereafter WBNU) song element, and a WBNU contact (“quank”) call element (Fig. 2.8). The WBNU song and quank elements are interesting because their spectrograms are similar but their functions differ: the song is used in mating-related contexts, whereas the quank serves both as a contact call and as a call used in mobbing. The ETTI song is structurally different from the WBNU song but serves the same function. Two exemplars of each signal were used in the experiments and are illustrated in Fig. 2.8; AEPs generated by these signals are shown in Fig. 2.9. Note that both figures depict two views of a signal (Fig. 2.8) or of the AEP response (Fig. 2.9). The top panels (waveform plots) depict the amplitude of the pressure waveform or voltage waveform, respectively, as a function of time. The bottom panels (spectrogram plots) are computed from a short-time Fourier transform of the corresponding waveform, and they depict the frequency content of the signal (Fig. 2.8) or the AEP response (Fig. 2.9) as a function of time. In the spectrogram, simultaneous bands represent signal elements (Fig. 2.8) or evoked potential elements (Fig. 2.9) that include multiple frequency components. The technique used to measure AEPs, summarized briefly earlier in this chapter, is described in detail in Vélez et al. (2015a), Lucas et al. (2015), Gall et al. (2013), and Henry et al. (2011); we do not cover it here.
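The chapter compares AEP waveforms by cross-correlation without spelling out the computation in this section. As a minimal sketch, assuming one common definition (mean-removed waveforms, a coefficient normalized by the two waveforms' energies, and the peak taken within a limited lag window), two equally sampled AEP waveforms might be compared as below. The function name `peak_normalized_xcorr`, the `max_lag` parameter, and the synthetic damped-sinusoid "responses" are hypothetical illustrations, not the authors' analysis pipeline or data.

```python
import numpy as np


def peak_normalized_xcorr(a, b, max_lag):
    """Peak normalized cross-correlation between two equal-length waveforms.

    Returns (coefficient, lag_in_samples), searching lags in [-max_lag, max_lag].
    A positive lag means waveform `a` is delayed relative to waveform `b`.
    (Hypothetical helper for illustration only.)
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.shape != b.shape or a.ndim != 1:
        raise ValueError("expected two 1-D waveforms of equal length")
    n = a.size
    if not 0 <= max_lag < n:
        raise ValueError("max_lag must be in [0, len(waveform) - 1]")
    # Remove the DC offset so the coefficient reflects waveform shape, not baseline
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt(np.sum(a * a) * np.sum(b * b))
    # mode="full" returns lags -(n-1) .. (n-1); index n-1 is zero lag
    full = np.correlate(a, b, mode="full") / denom
    zero = n - 1
    window = full[zero - max_lag: zero + max_lag + 1]
    best = int(np.argmax(window))
    return float(window[best]), best - max_lag


# Usage with a hypothetical damped-sinusoid "response" and a delayed copy of it
fs = 10_000  # hypothetical sampling rate (Hz)
t = np.arange(200) / fs
resp_1 = np.exp(-t / 0.004) * np.sin(2 * np.pi * 600 * t)
resp_2 = np.roll(resp_1, 3)  # same shape, shifted 3 samples later
coef, lag = peak_normalized_xcorr(resp_2, resp_1, max_lag=20)
print(f"peak r = {coef:.3f} at lag {lag} samples")  # lag should be 3
```

A coefficient near 1 indicates that two responses have nearly the same shape up to a time shift; restricting the lag window is an assumption meant to avoid matching unrelated parts of long responses.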

Fig. 2.8 Natural vocalizations used to elicit AEPs. Shown here are the six input stimuli used in our study of AEPs in response to natural vocalizations of the tufted titmouse (ETTI) and white-breasted nuthatch (WBNU): (a) ETTI song #1, (b) ETTI song #2, (c) WBNU song #1, (d) WBNU song #2, (e) WBNU quank #1, (f) WBNU quank #2. The waveform (top) and spectrogram (bottom) are shown for each vocalization. The y-axis labels are identical for all figure pairs.

Fig. 2.9 AEPs in response to natural vocalizations. Shown here are mean AEP responses to one exemplar of each of the three stimulus types played to each of the four species tested. Each panel has two parts: the top is the waveform view and the bottom is the spectrogram derived from the waveform. AEPs are shown for the tufted titmouse (ETTI), white-breasted nuthatch (WBNU), house sparrow (HOSP), and white-crowned sparrow (WCSP) in response to (a) ETTI song #1, (b) WBNU song #1, and (c) WBNU quank #1. Note: the voltage scale (y-axis) is the same for waveforms resulting from the same input stimulus.

The two WBNU songs are composed of several harmonics that generate fairly strong amplitude modulation (AM), or beating, at a rate equal to the frequency difference between adjacent harmonics (Table 2.1). A simple example illustrates this. Consider three simultaneous tones with frequencies of 1200, 1800, and 2400 Hz. These tones form a harmonic stack (the second, third, and fourth harmonics) with a fundamental frequency of 600 Hz, although the fundamental itself is missing in our example. Nevertheless, the three tones combine to generate amplitude modulation at 600 Hz. The WBNU songs and calls have this same structure. This is important because the auditory system will phase-lock to the AM rate in addition to phase-locking to each separate tone (Henry and Lucas 2008; Lucas et al. 2015; Vélez et al. 2015b). In addition to this AM component, both WBNU song elements also have a gross amplitude envelope that starts at low intensity at the beginning of the element and peaks toward the end of the element (Fig. 2.8c, d).
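The beating in the three-tone example can be checked numerically. The sketch below assumes equal-amplitude, zero-phase sine components and an arbitrary 48 kHz sampling rate (neither is taken from the WBNU recordings). It builds the 1200/1800/2400 Hz complex, extracts its amplitude envelope from an FFT-based analytic signal (the standard Hilbert-envelope construction), and reports the strongest periodic component of that envelope. The helper names `analytic_signal` and `dominant_am_rate` are hypothetical.

```python
import numpy as np


def analytic_signal(x):
    """FFT-based analytic signal; its magnitude is the Hilbert envelope."""
    n = len(x)
    spectrum = np.fft.fft(x)
    weights = np.zeros(n)
    weights[0] = 1.0  # keep DC
    if n % 2 == 0:
        weights[n // 2] = 1.0  # keep Nyquist bin
        weights[1:n // 2] = 2.0  # double positive frequencies
    else:
        weights[1:(n + 1) // 2] = 2.0
    # Negative frequencies are zeroed, leaving a complex signal
    return np.fft.ifft(spectrum * weights)


def dominant_am_rate(x, fs):
    """Frequency (Hz) of the strongest periodic component of the envelope."""
    envelope = np.abs(analytic_signal(x))
    envelope = envelope - envelope.mean()  # drop DC so the peak is a modulation rate
    mags = np.abs(np.fft.rfft(envelope))
    freqs = np.fft.rfftfreq(len(envelope), d=1.0 / fs)
    return float(freqs[np.argmax(mags)])


fs = 48_000
n = fs // 10  # 100 ms: an integer number of cycles of every component
t = np.arange(n) / fs
tones_hz = (1200.0, 1800.0, 2400.0)  # harmonics 2-4 of a missing 600 Hz fundamental
x = sum(np.sin(2 * np.pi * f * t) for f in tones_hz)
print(dominant_am_rate(x, fs))  # 600 Hz, up to floating-point rounding
```

Because the 100 ms window holds an integer number of cycles of every component, this FFT-based envelope has no edge artifacts; with real recordings, windowing and filtering choices would affect the estimate.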

Like the WBNU song, the quank call notes (Fig. 2.8e, f) consist of a series of strong harmonics that generate strong AM (Table 2.1). The WBNU quank and song elements differ in two ways: the song has a higher AM rate than the quank calls (Table 2.1), and the song's gross amplitude envelope rises more slowly (compare Fig. 2.8c, d with Fig. 2.8e, f). Both titmouse song elements are relatively tonal, with fundamental frequencies of about 2.6 kHz (Table 2.1; Fig. 2.8a, b). However, the two song elements differ in their gross amplitude envelopes: amplitude onset is slower in ETTI song element 2 (Fig. 2.8b) than in ETTI song element 1 (Fig. 2.8a). ETTI song element 1 also has a weak harmonic at 5.4 kHz that is not evident in the spectrogram of ETTI song element 2.
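The onset differences above are described qualitatively. One simple way to put a number on them, sketched here as an assumption rather than as the authors' method, is a 10% to 90% rise time of a smoothed RMS envelope. The 2 ms smoothing window, the thresholds, the helper names, and the synthetic linearly ramped 2.6 kHz tones are all hypothetical choices; the window is meant to average out carrier ripple while preserving the gross envelope.

```python
import numpy as np


def rms_envelope(x, fs, window_s=0.002):
    """Running RMS envelope; the moving window smooths out carrier ripple."""
    w = max(1, int(round(window_s * fs)))
    kernel = np.ones(w) / w
    return np.sqrt(np.convolve(np.asarray(x, dtype=float) ** 2, kernel, mode="same"))


def onset_rise_time(x, fs, lo=0.1, hi=0.9, window_s=0.002):
    """Seconds for the smoothed envelope to climb from lo to hi of its peak."""
    env = rms_envelope(x, fs, window_s)
    peak = env.max()
    i_lo = int(np.argmax(env >= lo * peak))  # first sample at/above low threshold
    i_hi = int(np.argmax(env >= hi * peak))  # first sample at/above high threshold
    return (i_hi - i_lo) / fs


def ramped_tone(ramp_s, fs, dur_s=0.1, freq_hz=2600.0):
    """Hypothetical test element: tone whose amplitude rises linearly, then holds."""
    t = np.arange(int(round(dur_s * fs))) / fs
    amp = np.clip(t / ramp_s, 0.0, 1.0)
    return amp * np.sin(2 * np.pi * freq_hz * t)


fs = 48_000
fast = onset_rise_time(ramped_tone(0.010, fs), fs)  # 10 ms linear ramp
slow = onset_rise_time(ramped_tone(0.040, fs), fs)  # 40 ms linear ramp
print(f"fast onset: {fast * 1e3:.1f} ms, slow onset: {slow * 1e3:.1f} ms")
```

For a linear ramp the 10% to 90% rise time is about 80% of the ramp duration, so the slower ramp yields the larger value. With real song elements, the smoothing window would need to span several cycles of any fast AM (such as the beating in the WBNU elements) so that modulation is not mistaken for onset.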

We measured AEPs from four species of birds: two tufted titmice (one male, one female), six white-breasted nuthatches (three males, three females), six house sparrows (five males, one female), and six white-crowned sparrows (three males, three females). Each bird was tested on both exemplars of each of the three signals. The average evoked potentials in response to the ETTI song element, WBNU song element, and WBNU quank call are given in Fig. 2.9.