Abstract

In Chap. 4, we saw that a proportion of difficult temporal relations were associated with a particular separate word or phrase that described the temporal relation type – a temporal signal.

Words are but the signs of ideas.

Preface to the Dictionary

Samuel Johnson


5.1 Introduction

In Chap. 4, we saw that a proportion of difficult temporal relations were associated with a particular separate word or phrase that described the temporal relation type – a temporal signal. The failure analysis in Sect. 4.3.1 finds signals to be of use in over a third of difficult TLINKs. Despite their demonstrable impact on temporal link labelling (see Sect. 3.5.4), no work has been undertaken toward the automatic annotation of temporal signals, and little toward their exploitation. This chapter begins to address these deficiencies.

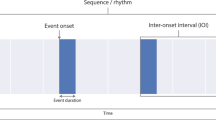

Temporal signals (also known as temporal conjunctions) are discourse markers that connect a pair of events or times and explicitly state the nature of their temporal relation. Human readers can temporally order events and times in discourse that machines cannot yet label automatically. It is assumed that there must be information in the document and in world knowledge that allows resolution of events, times and the relations between them. Temporal signals form part of this information. Intuitively, these words contain temporal ordering information that human readers can access. This chapter investigates the role that temporal signals play in discourse and finds methods for automatically annotating them.

To illustrate:

Example 9

“The exam papers were submitted before twelve o’clock.”

In Example 9 there is an event, the submitting of exam papers, and a time, twelve o’clock, that are temporally related. The word before serves as a signal that describes the nature of the temporal relation between them.

These temporal signals can occur with difficult temporal links and seem to provide explicit information about temporal relation type. It is worth investigating their potential utility in the relation typing task. If these signals are found to be useful, we may determine how to detect and use them automatically, instead of relying on existing manual annotations. To begin investigating automatic signal annotation, a thorough account of temporal signals is required, followed by an examination of current resources that include temporal signal annotations. Next, one may cast the signal annotation problem as a two-step process. First, one must determine which words and phrases in a given document are temporal signals. Second, one must work out which intervals a given temporal signal is associated with, given many candidates. Together, these two tasks comprise automatic temporal signal annotation.

This chapter is therefore structured as follows. In Sect. 5.2, we formally introduce background material regarding temporal signals. Section 5.3 reports on the effect that signal information has on an existing relation typing approach compared with the approach’s performance sans signal information, finding that adding features that describe temporal signals yields a large error reduction for automatic relation typing. Accordingly, after surveying signal annotations in existing corpora (Sect. 5.4), a method for automatically finding words and phrases that occur as temporal signals is introduced, which first requires the construction of a high-quality ground truth dataset (Sect. 5.5). After developing an approach to finding temporal signal expressions using this new dataset (Sect. 5.6), Sect. 5.7 describes a method for associating a temporal signal (once found) with the pair of temporally related intervals whose relation it describes. The overall performance of the presented temporal signal annotation system is then evaluated. The chapter concludes with an evaluation of the impact this automatic signal annotation has on the overall relation typing task (Sect. 5.8), finding that impact to be positive.

5.2 The Language of Temporal Signals

Signal expressions explicitly indicate the existence and nature of a temporal relation between two events or states or between an event or state and a time point or interval. Hence a temporal signal has two arguments, which are the temporal “entities” that are related. One of these arguments may be deictic instead of directly attached to an event or time; anaphoric temporal references are also permitted. For example, the temporal function and arguments of after in “Nanna slept after a long day at work” are clear and are available in the immediately surrounding text. With “After that, he swiftly finished his meal and left” we must look back to the antecedent of that to locate the second argument.

Sometimes a signal will appear to be missing an argument; for example, sentence-initial signals with only one event in the sentence (“Later, they subsided.”). These signals relate an event in their sentence with the discourse’s current temporal focus – for example, the document creation time, or the previous sentence’s main event.

Signal surface forms have a compound structure consisting of a head and an optional qualifier. The head describes the temporal operation of the signal phrase and the qualifier modifies or clarifies this operation. An example of an unqualified signal expression is after, which provides information about the nature of a temporal link but says nothing about the absolute or relative magnitude of the temporal separation between its arguments. We can elaborate on this magnitude with phrases that give qualitative information about the relative size of the temporal separation between events (such as very shortly after), or that give a specific separation using a duration as a modifying phrase (e.g. two weeks after). In both cases, the signal applies to the ordering of the events on either side of the separation, rather than to the separation itself.
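To make the head/qualifier distinction concrete, the following Python sketch splits a signal phrase into a qualifier and a head by looking for the longest known head at the end of the phrase. The head inventory SIGNAL_HEADS and the function split_signal are hypothetical illustrations, not part of any existing tool, and the assumption that the head always ends the phrase fits examples such as two weeks after but will not cover every signal.

```python
# Hypothetical inventory of signal heads, for illustration only; the chapter's
# closed class of candidate signals is given in Table 5.9.
SIGNAL_HEADS = {"after", "before", "until", "since", "during", "when", "as soon as"}


def split_signal(phrase, heads=SIGNAL_HEADS):
    """Split a signal phrase into (qualifier, head).

    Suffixes are tried from longest to shortest, so a multiword head such as
    "as soon as" is preferred over a shorter one. Returns None when no known
    head ends the phrase."""
    tokens = phrase.lower().split()
    for start in range(len(tokens)):
        candidate = " ".join(tokens[start:])
        if candidate in heads:
            return " ".join(tokens[:start]), candidate
    return None


print(split_signal("two weeks after"))     # ('two weeks', 'after')
print(split_signal("very shortly after"))  # ('very shortly', 'after')
print(split_signal("as soon as"))          # ('', 'as soon as')
```

An empty qualifier marks an unqualified signal; a duration qualifier such as two weeks would, under TimeBank conventions, be annotated separately as a TIMEX3.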

5.2.1 Related Work

Signals help create well-structured discourse. Temporal signals can provide context shifts and orderings [1]. These signal expressions therefore work as discourse segmentation markers [2]. It has been shown that correctly including such explicit markers makes texts easier for human readers to process [3].

Further, words and phrases that comprise signals are sometimes polysemous, occurring in temporal or non-temporal senses. For the purposes of automatic information extraction, this introduces the task of determining when a given candidate signal is used in a temporal sense.

Brée [4] performed a study of temporal conjunctions and prepositions and suggested rules for discriminating temporal from non-temporal uses of signal expressions that fall into these classes. Their approach relies heavily upon the presentation of contrasting examples of each signal word. This research went on to describe the ambiguity of nine temporal prepositions in terms of their roles as temporal signals [5].

Schlüter [6] identifies signal expressions used with the present perfect and compares their frequency in British and US English. This chapter later attempts a full identification of English signal expressions.

Vlach [7] presents a semantic framework that deals with duratives when used as signal qualifiers (see above). Our work differs from the literature in that it is the first to be based on gold-standard annotations of temporal semantics and that it encompasses all temporal signal expressions, not just those of a particular grammatical class.

Intuitively, signal expressions contain temporal ordering information that human readers can access easily. Once temporal conjunctions are identified, existing semantic formalisms may be readily applied to discourse semantics. It is however ambiguous which temporal relation any given signal attempts to convey, as investigated by [8] and studied in TimeBank later in this chapter (Sect. 5.4.2). Our work quantifies this ambiguity for a subset of signal expressions.

5.2.2 Signals in TimeML

This section includes work from [9].

TimeML’s description of a signal is:

SIGNAL is used to annotate sections of text, typically function words, that indicate how temporal objects are to be related to each other. The material marked by SIGNAL constitutes the following:

indicators of temporal relations such as temporal prepositions (e.g. “on”, “during”) and other temporal connectives (e.g. “when”) and subordinators (e.g. “if”). This functionality of the SIGNAL tag was introduced by [10].

indicators of temporal quantification such as “twice”, “three times”.

Signals in TimeML are used to mark words that indicate the type of relation between two intervals and also to indicate multiple occurrences of events (temporal quantification). For the task of temporal relation typing, we are only interested in this former use of signals. The annotation guidelines suggest that in TimeML one should annotate a minimal set of tokens – typically just the “head” of the signal.

For example, in the sentence John smiled after he ate, the word after specifies an event ordering. Example 10 shows this sentence represented in TimeML.

Example 10

TimeML allows us to associate text that suggests an event ordering (a SIGNAL) with a particular temporal relation (a TLINK). To avoid confusion, it is worthwhile clarifying our use of the term “signal”. We use SIGNAL in capitals for tags of this name in TimeML and signal/signal word/signal phrase for a word or words in discourse that describe the temporal ordering of an event pair. Examples of the signals found in TimeBank are provided in Table 5.1.
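As an illustration of how TimeML ties a SIGNAL to a TLINK through the signalID attribute, the following Python sketch parses a hand-written TimeML-style encoding of John smiled after he ate and lists each signalled event-event link. The markup is an assumption written for this sketch (identifiers and attribute values are illustrative and may not match Example 10), and signalled_tlinks is a hypothetical helper that uses only the standard-library xml.etree.ElementTree module.

```python
import xml.etree.ElementTree as ET

# Illustrative TimeML-style markup for "John smiled after he ate". Identifiers and
# attribute values are assumptions made for this sketch.
TIMEML = """<TimeML>
John <EVENT eid="e1" class="OCCURRENCE">smiled</EVENT>
<SIGNAL sid="s1">after</SIGNAL> he <EVENT eid="e2" class="OCCURRENCE">ate</EVENT>.
<MAKEINSTANCE eiid="ei1" eventID="e1" tense="PAST" aspect="NONE" polarity="POS" pos="VERB"/>
<MAKEINSTANCE eiid="ei2" eventID="e2" tense="PAST" aspect="NONE" polarity="POS" pos="VERB"/>
<TLINK lid="l1" relType="AFTER" eventInstanceID="ei1" relatedToEventInstance="ei2" signalID="s1"/>
</TimeML>"""


def signalled_tlinks(xml_text):
    """Return (event, relType, related event, signal text) for every event-event
    TLINK that carries a signalID. Event-time links are skipped in this sketch."""
    root = ET.fromstring(xml_text)
    events = {e.get("eid"): e.text for e in root.iter("EVENT")}
    instances = {m.get("eiid"): m.get("eventID") for m in root.iter("MAKEINSTANCE")}
    signals = {s.get("sid"): s.text for s in root.iter("SIGNAL")}
    links = []
    for tlink in root.iter("TLINK"):
        sid = tlink.get("signalID")
        source = tlink.get("eventInstanceID")
        target = tlink.get("relatedToEventInstance")
        if sid is None or source is None or target is None:
            continue
        links.append((events[instances[source]], tlink.get("relType"),
                      events[instances[target]], signals[sid]))
    return links


print(signalled_tlinks(TIMEML))  # [('smiled', 'AFTER', 'ate', 'after')]
```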

It is important to note that not every occurrence of text that could be a signal is used as a temporal signal. Some signal words and phrases are polysemous, having both temporal and non-temporal senses: e.g. “before” can indicate a temporal ordering (“before 7 o’clock”) or a spatial arrangement (“kneel before the king”). This book refers to expressions that could potentially be temporal signals as candidate signal phrases. Only candidate signal phrases occurring in a temporal sense are of interest.

The signal text alone does not determine a single temporal interpretation. For example, the signal word after is used in TimeBank in TLINKs labelled after, before and includes. Part of this variation arises because there is no set convention for the order in which a TLINK’s arguments should be defined; the after TLINK in Example 10 could just as well be encoded as a before TLINK with its two arguments swapped.

See Table 5.2 for the distribution of relation labels described by a subset of signal words and phrases.

As described above, signals sometimes reference abstract points as their arguments. These abstract points might be a reference time (Sect. 6.3) or an implicit anaphoric reference. As TimeML does not include specific annotation for reference time, one should instead assume that the signal co-ordinates its non-abstract argument with the interval at which reference time was last set. For example, in “There was an explosion Tuesday. Afterwards, the ship sank”, we will link the sank event with explosion (the previous head event) and then associate our signal with this link.
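The fallback described above can be sketched as follows, under simplifying assumptions: each sentence is represented by its events in textual order and a pre-identified head event, and a sentence-initial signal in a single-event sentence is paired with the head event of the preceding sentence. The Sentence class and anchor_one_argument_signals are hypothetical names; a full treatment would also need to handle the document creation time and other shifts of reference time.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Sentence:
    events: List[str]                     # event identifiers, in textual order
    head: str                             # main event of the sentence (assumed given)
    initial_signal: Optional[str] = None  # text of a sentence-initial signal, if any


def anchor_one_argument_signals(sentences):
    """Pair the lone event of a sentence that opens with a signal with the head
    event of the previous sentence, approximating the interval at which
    reference time was last set. Returns (event, anchor, signal) triples.
    The first sentence has no predecessor; a fuller version would fall back
    to the document creation time there."""
    links = []
    for prev, cur in zip(sentences, sentences[1:]):
        if cur.initial_signal is not None and len(cur.events) == 1:
            links.append((cur.events[0], prev.head, cur.initial_signal))
    return links


doc = [
    Sentence(events=["explosion"], head="explosion"),
    Sentence(events=["sank"], head="sank", initial_signal="Afterwards"),
]
print(anchor_one_argument_signals(doc))  # [('sank', 'explosion', 'Afterwards')]
```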

5.3 The Utility of Temporal Signals

Do signals help temporal relation typing? Given the role suggested for them in the relation typing task (Sect. 4.3.1), and now that we have a high-level definition of temporal signals, it is next important to establish their potential utility. Since TimeML provides a signal-annotated corpus, one can answer this question by comparing the performance of automatic relation typing systems with and without signal information. Positive results would motivate further work on automatic signal annotation. This section reports such a comparison, and includes work from [12]. An extended investigation into this section’s findings can be found in [13].

Although accurate event ordering has been the topic of research over the past decade, most work using the temporal signals present in text has been only preliminary. However, as noted in Chap. 3, specifically focusing on temporal signals when classifying temporal relations can yield a performance boost. This section attempts to measure that performance boost.

In TimeML, a signal is either text that indicates the cardinality of a recurring event, or text that explicitly states the nature of a temporal relation. Only the latter sense is interesting for the current work. This class of words and phrases includes temporal conjunctions (e.g. after) and temporal adverbials (e.g. currently, subsequently), as well as set phrases (e.g. as soon as). A minority of TLINKs in TimeML corpora are annotated with an associated signal (see Table 5.3).

While the processing of temporal signals for TLINK classification could potentially be included as part of feature extraction for the relation typing task, temporal signals are complex and useful enough to warrant independent investigation. When the final goal is TLINK labelling, once salient features for signal inclusion and representation have been found, one might skip signal annotation entirely and include these features in a temporal relation type classifier. As we are concerned with the characterisation and annotation of signals, we do not address this possibility here, instead attempting to understand signals as an intermediate step towards better overall temporal labelling.

The following experiment explores the question of whether signal information can be successfully exploited for TLINK classification by contrasting relation typing with and without signal information. The approach replicated as closely as possible is that of [14], briefly summarised as follows.

The replication had three steps. First, to simplify the problem, the set of possible relation types was reduced (folded) by applying a mapping (see Sect. 3.3.1). For example, as a before b and b after a describe the same ordering between events a and b, we can swap the argument order in any after relation to convert it to a before relation. This simplifies the training data and provides more examples per temporal relation class. Second, the following information from each TLINK is used as features: event class, aspect, modality, tense, negation and event string for each event, as well as two boolean features indicating whether both events have the same tense or the same aspect. Third, we trained and evaluated the predictive accuracy of the maximum entropy classifier from Carafe. To match the original approach, ten-fold cross-validation was used; a one-third/two-thirds split was also introduced to see the effect of a reduced ratio of training to evaluation examples. This split divided the set of event-event TLINKs into a training set of 4156 instances and an evaluation set of 2078 instances.
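The folding step and the one-third/two-thirds split can be sketched in Python as below. The folding table is an assumption based on the standard TimeML inverse relation pairs (e.g. after/before, is_included/includes); the mapping actually used is the one given in Sect. 3.3.1. The split takes a contiguous prefix without shuffling, purely to show the arithmetic: with 6234 event-event TLINKs it yields the 4156/2078 partition reported above.

```python
# Assumed folding table: each relation is replaced by its TimeML inverse with the
# arguments swapped. The mapping actually used is defined in Sect. 3.3.1.
FOLD = {
    "AFTER": "BEFORE",
    "IAFTER": "IBEFORE",
    "IS_INCLUDED": "INCLUDES",
    "BEGUN_BY": "BEGINS",
    "ENDED_BY": "ENDS",
}


def fold(e1, rel_type, e2):
    """Return an equivalent (e1, relType, e2) triple that avoids folded labels."""
    if rel_type in FOLD:
        return e2, FOLD[rel_type], e1
    return e1, rel_type, e2


def one_third_split(items):
    """Split into a two-thirds training portion and a one-third evaluation portion.
    No shuffling is done here; one would normally shuffle first."""
    cut = len(items) * 2 // 3
    return items[:cut], items[cut:]


print(fold("a", "AFTER", "b"))  # ('b', 'BEFORE', 'a')
train, held_out = one_third_split(list(range(6234)))
print(len(train), len(held_out))  # 4156 2078
```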

In [14], TLINK data came from the union of TimeBank v1.2a and the AQUAINT TimeML corpora. As the TimeBank v1.2a corpus used there is not publicly available, we used TimeBank v1.2. This substitution of a publicly available version of TimeBank for a private custom version was the only change from the previous work. In this work we only examine event-event links, which make up 52.9 % of all TLINKs in our corpus; differences from the original data are likely due to minor differences between the TLINK annotations of TimeBank v1.2 and TimeBank v1.2a.

Table 5.4 shows results from replicating the previous experiment on event-event TLINKs. The baseline listed is the most-common-class in the training data. This gives a similar score of 60.32 % accuracy compared to 61.79 % in the previous work. The differences may be attributed to the non-standard corpus that they use. The TLINK distribution over a merger of TimeBank v1.2 and the AQUAINT corpus differs from that listed in the paper.

5.3.1 Introducing Signals to the Relation Labelling Feature Set

Now that a reasonable replication of a prior approach has been established, the goal is to measure the difference in relation typing performance that temporal signals make. This requires feature representations of signals. To add information about signals to our training instances, we use the extra features described below; the two arguments of a TLINK are represented by e1 and e2. All features can be readily extracted from the existing TimeML annotations. Only gold-standard signal annotations from the corpora were used.

- Signal phrase. This shows the actual text that was marked up as a SIGNAL. From this, we can start to guess temporal orderings based on signal phrases. However, the phrase alone is insufficient. For example, the two sentences Run before sleeping and Before sleeping, run are temporally equivalent, in that they both specify two events in the order run-sleep, signalled by the same word before.

- Textual order of e1/e2. It is important to know the textual order of events and their signals even when we know a temporal ordering. Textual order can have a direct effect on the temporal order conveyed by a signal. To illustrate, “Bob washes before he eats” describes a different story from “Before Bob washes he eats”.

- Textual order of signal and e1, signal and e2. These features describe the textual ordering of both TLINK arguments relative to a related signal. They also show how the arguments of TLINKs that employ a particular signal tend to be textually distributed. The features are required to disambiguate cases where textual order alone is unreliable. To illustrate, “Bob washes before he eats” and “Before he eats, Bob washes” describe the same event ordering but have different text orderings.

- Textual distance between e1/e2. Sentence and token count between e1 and e2.

- Textual distance from e1/e2 to SIGNAL. If we allow a signal to influence the classification of a TLINK, we need to be certain of its association with the link’s events. Distances are measured in tokens.

- TLINK class given SIGNAL phrase. The most likely TLINK classification in the training data given this signal phrase (or empty if the phrase has not been seen). Referred to as signal hint.
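A minimal sketch of how these features might be computed for one TLINK is given below, assuming single-token signals and arguments identified by token offsets. The function names, feature names and the toy training pairs used to build the signal hint table are hypothetical; in the experiments above, all of this information was read from existing TimeML annotations.

```python
from collections import Counter, defaultdict


def build_hint_table(training_pairs):
    """Map each signal phrase to its most frequent relType in training data.
    training_pairs: iterable of (signal_phrase, relType)."""
    counts = defaultdict(Counter)
    for phrase, rel_type in training_pairs:
        counts[phrase.lower()][rel_type] += 1
    return {phrase: c.most_common(1)[0][0] for phrase, c in counts.items()}


def signal_features(tokens, sentence_ids, e1, e2, sig, hint_table):
    """Signal features for one TLINK. e1, e2 and sig are token offsets of the two
    arguments and a single-token signal; sentence_ids[i] is the sentence index
    of token i."""
    phrase = tokens[sig].lower()
    return {
        "signal_phrase": phrase,
        "e1_precedes_e2": e1 < e2,
        "signal_precedes_e1": sig < e1,
        "signal_precedes_e2": sig < e2,
        "token_distance_e1_e2": abs(e2 - e1),
        "sentence_distance_e1_e2": abs(sentence_ids[e2] - sentence_ids[e1]),
        "token_distance_signal_e1": abs(sig - e1),
        "token_distance_signal_e2": abs(sig - e2),
        "signal_hint": hint_table.get(phrase, ""),  # empty if phrase unseen
    }


hints = build_hint_table([("before", "BEFORE"), ("before", "BEFORE"), ("before", "AFTER")])
tokens = "Bob washes before he eats".split()
feats = signal_features(tokens, [0] * len(tokens), e1=1, e2=4, sig=2, hint_table=hints)
print(feats["signal_hint"], feats["token_distance_e1_e2"])  # BEFORE 3
```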

5.3.2 TLINK Typing Results Using Signals

Table 5.5 shows the results of adding features for temporal signals to the basic TLINK relation typing system. With a feature set that adds SIGNAL information, including signal-event word order and distance data, predictive accuracy reaches 61.46 %. This is only a small increase over the 60.32 % accuracy achieved without this information, but TLINKs that employ a SIGNAL are a minority in our corpus (possibly due to under-annotation).

The low magnitude of the performance increase seen in Table 5.5 could be due to the way in which training examples are selected. There are in total 11 783 TLINKs in the combined corpus, of which 7.6 % are annotated with a SIGNAL; for TimeBank v1.2 alone, the figure is higher at 11.2 % (see Table 5.3 and also Fig. 5.1). The proportion of signalled TLINKs in our data (event-event links in the combined AQUAINT/TimeBank 1.2 corpus) is lowest, at 5.1 %. It is possible that signalled TLINKs are classified significantly better using this extended feature set, but account for such a small part of this dataset that the overall difference is small. To test this, the experiment is repeated, this time splitting the dataset into signalled and non-signalled TLINKs.

If there is no performance difference between feature sets when classifying TLINKs that do use signals, then our hypothesis is incorrect, or the features used are insufficiently representative. If signals are helpful, and our features capture information useful for temporal ordering, we expect a performance increase when automatically classifying signalled TLINKs. Results in Table 5.6 support our hypothesis that signals are useful, but we are performing nowhere near the maximum level suggested above. Data sparsity is a problem here, as the combined corpus only contains 319 suitable TLINKs, and both source corpora show evidence of signal under-annotation. The results also suggest that the signal hint feature was not helpful; this is the same result found by [15].

Exploring the strongest feature set (basic+signals; no hint), and attempting to combat the data sparsity problem, we used 10-fold cross-validation instead of a split; results are also in Table 5.6. This again shows a distinct improvement in the predictive accuracy of signalled TLINKs using this feature set over the features in previous work. Cross-validation also gives better overall accuracy. This is likely because, with such low volumes of training data, the difference in the number of training examples between 10-fold cross-validation (nine tenths of the data per fold) and a one-third/two-thirds split (two thirds of the data) can make a large contribution to classifier performance.
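The arithmetic behind this explanation can be checked directly. The sketch below (with hypothetical helper names) computes per-fold training-set sizes for 10-fold cross-validation over the 319 signalled event-event TLINKs and compares them with the training portion of a one-third/two-thirds split; cross-validation trains on roughly a third more examples.

```python
def kfold_train_sizes(n, k=10):
    """Training-set size for each of k folds when n items are dealt into folds
    as evenly as possible (the first n % k folds hold one extra item)."""
    base, extra = divmod(n, k)
    fold_sizes = [base + 1 if i < extra else base for i in range(k)]
    return [n - size for size in fold_sizes]


def split_train_size(n):
    """Training-set size under a two-thirds/one-third split."""
    return n * 2 // 3


cv = kfold_train_sizes(319)
print(min(cv), max(cv), split_train_size(319))  # 287 288 212
```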

5.3.3 Utility Assessment Summary

When learning to classify signalled TLINKs, there is a significant increase in predictive accuracy when features describing signals are introduced. This suggests that signals are useful when it comes to providing information for classifying temporal links, and also that the features we have used to describe them are effective.

Now that it is confirmed that signals are helpful in temporal relation typing, the next task is to determine how to annotate them automatically. A good account of existing resources may give clues for this process. After this, one needs to explore how to discriminate whether or not a candidate signal expression is used as a temporal signal in text. Next, after finding a temporal signal, we need to determine which intervals it temporally connects. Finally, we can attempt to annotate a temporal link based on the signal.

5.4 Corpus Analysis

In order to understand temporal signals, this section investigates the role of hand-annotated temporal signals in the TimeBank dataset. Further, casual examination reveals that words acting in a temporal signal role in existing datasets are not always annotated as such. Under-annotation can depend on how well the annotator understands the task, and the clarity of annotation guidelines. This section discusses the TimeML definition of signals and describes an augmented corpus which has received extra annotation.

Using the TimeBank corpus, we set out to answer the following questions:

1. Of the expressions which can function as temporal signals, what proportion of their usage in the TimeBank corpus is as a temporal signal? That is, how ambiguous are these expressions in terms of their role as temporal signals?

2. Of the occurrences of these expressions as temporal signals, how ambiguous are they with respect to the temporal relation they convey?

The following section (which includes material from [9]) provides provisional answers to these questions – provisional as one of the difficulties we encountered was significant under-annotation of temporal signals in TimeBank. We have addressed this to some extent, but more work remains to be done. Nonetheless we believe the current study provides important insights into the behaviour of temporal signals and how they may be exploited by computational systems carrying out the temporal relation detection task.

5.4.1 Signals in TimeBank

The TimeML <SIGNAL> element bounds a lexicalised temporal signal. Summary information on the SIGNAL elements in TimeBank 1.2 is in Table 5.7 and the number of links per signal in Table 5.8. Although permitted under TimeML 1.2.1 for denoting cardinality, no signals have been assigned to event instances for this purpose, although there is one unassigned signal annotation that does indicate event cardinality.

In cases where a specific duration occurs as part of a complex qualifier-head temporal signal, e.g. two weeks after, TimeBank has followed the convention that the signal head alone is annotated as a SIGNAL and the qualifier is annotated as a TIMEX3 of type duration.

5.4.2 Relation Type Ambiguity

The nature of the temporal relation described by a signal is not constant for the same signal phrase, though each signal tends to describe a particular relation type more often than other types. Table 5.2 gives an excerpt of data showing which temporal relations are made explicit by each signal expression. The variation in relation type associated with a signal is not as great as it might appear as the assignment of temporal relation type has an element of arbitrariness – one may choose to annotate a before or after relation for the same event pair by simply reversing the temporal link’s argument order, for example. There is no TimeML convention regarding how TLINK annotation arguments should be ordered. Nevertheless, it is possible to draw useful information from the table; for example, one can see that meanwhile is much more likely to suggest some sort of temporal overlap between events than an ordering where arguments occur discretely.

5.4.2.1 Closed Class of Signals

To what extent are the words sometimes annotated as temporal signals in TimeBank actually used as time relaters?

As temporal signal words and phrases are likely to form a closed class, our approach is to first define a set of temporal signal candidate words. For each occurrence of one of these words in a discourse, we then decide whether or not it is a temporal signal.

Because they do not contribute to temporal ordering, annotated signals that indicate the cardinality of recurring events were removed before experimentation. We have derived a closed class of 102 signal words and phrases from [17] (see for example Sect. 10.5, “Time Relaters”), given in Table 5.9. This list is long but may not be comprehensive. Automatic signal annotation can be approached by finding words in a given document that are both within this closed class of candidate signal phrases and also occur having a temporal sense. TimeBank contains 62 unique signal words and phrases (ignoring case), annotated in 688 SIGNAL elements and used by 718 TLINKs. Of these 62, over half (39) are also found in our list above. The remaining 23 signals correspond to only 45 signal mentions, supporting 46 temporal links. Thus, if we can perfectly annotate every signal we find in text based on our closed class, we will have described 93.1 % of TLINK-supporting signals and be better able to label 93.6 % of TLINKs that have a supporting signal.
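The first step of this approach, finding occurrences of closed-class candidates in text, can be sketched as a greedy longest match over tokens, so that multiword entries such as as soon as are preferred over their component words. The candidate set below is a small hypothetical subset standing in for Table 5.9, and the matcher only proposes candidates; whether each one is used in a temporal sense must still be decided separately.

```python
# A small hypothetical subset of the closed class, for illustration only.
CANDIDATES = {"after", "before", "as soon as", "soon", "meanwhile", "until", "when", "in"}


def find_candidates(tokens, candidates=CANDIDATES):
    """Return non-overlapping (start, end, phrase) spans of candidate signal
    phrases, taking the longest match at each position."""
    max_len = max(len(c.split()) for c in candidates)
    lowered = [t.lower() for t in tokens]
    spans, i = [], 0
    while i < len(lowered):
        for n in range(min(max_len, len(lowered) - i), 0, -1):
            phrase = " ".join(lowered[i:i + n])
            if phrase in candidates:
                spans.append((i, i + n, phrase))
                i += n
                break
        else:  # no candidate starts at position i
            i += 1
    return spans


print(find_candidates("He left as soon as she arrived , before dinner".split()))
# [(2, 5, 'as soon as'), (8, 9, 'before')]
```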

To provide a surface characterisation of the role signals play, the distribution of their part of speech tag (from PTB) over signals in TimeBank is given in Table 5.10. Many uses are as prepositions, perhaps for attaching events to each other by means of prepositional phrases.

Of the closed class entries detailed in Table 5.9, 25 entries occur in the corpus but are never annotated as signal text: again, directly, early, finally, first, here, last, late, next, now, recently, eventually, forever, formerly, frequently, initially, instantly, meantime, originally, prior, shortly, sometime, subsequent, subsequently and suddenly.

We could also derive an alternative signal list by extracting all phrases that are found as the first child of SBAR-TMP constituent tags, as suggested in Dorr and Gaasterland [18]. For example, in Fig. 5.2 (an automatically parsed and function-tagged sentence from TimeBank’s wsj_0520.tml), the first child of the SBAR-TMP constituent is a one-leaf IN tag. The text is after, which we would treat as a temporal signal. This approach returns a restrictive set of temporal signals, shown in Table 5.11, though it yields few false positives.
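The SBAR-TMP heuristic can be sketched without external dependencies by parsing a bracketed constituency tree and collecting the leaves of each SBAR-TMP node's first child. The tree below is a hand-written, function-tagged parse of John smiled after he ate (not the parse of wsj_0520.tml shown in Fig. 5.2), and parse_tree and sbar_tmp_signals are hypothetical helpers; the parser assumes the root bracket carries a label and matches the tag SBAR-TMP exactly.

```python
import re


def parse_tree(s):
    """Parse a bracketed constituency tree such as "(S (NP (NNP John)) ...)" into
    nested (label, children) tuples; leaves are plain strings. Assumes the
    outermost bracket has a label."""
    tokens = re.findall(r"\(|\)|[^\s()]+", s)
    pos = 0

    def node():
        nonlocal pos
        if tokens[pos] != "(":
            raise ValueError(f"expected '(' at token {pos}")
        label = tokens[pos + 1]
        pos += 2
        children = []
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                children.append(node())
            else:
                children.append(tokens[pos])
                pos += 1
        pos += 1  # consume the closing ")"
        return (label, children)

    return node()


def leaves(tree):
    """Return the words under a (sub)tree in left-to-right order."""
    if isinstance(tree, str):
        return [tree]
    return [word for child in tree[1] for word in leaves(child)]


def sbar_tmp_signals(tree):
    """Collect the text of the first child of every SBAR-TMP constituent."""
    found = []

    def walk(t):
        if isinstance(t, str):
            return
        label, children = t
        if label == "SBAR-TMP" and children:
            found.append(" ".join(leaves(children[0])))
        for child in children:
            walk(child)

    walk(tree)
    return found


SENTENCE = "(S (NP (NNP John)) (VP (VBD smiled) (SBAR-TMP (IN after) (S (NP (PRP he)) (VP (VBD ate))))) (. .))"
print(sbar_tmp_signals(parse_tree(SENTENCE)))  # ['after']
```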

5.4.3 Temporal Versus Non-temporal Uses

The semantic function that a temporal signal expression performs is that of relating two temporal entities. However, the words that can function as temporal signals also play other roles.

For example, one may use before to indicate that one event happened temporally prior to another. This word does not always have this meaning.

Example 12

“I will drag you before the court!”

In Example 12, the reading is that one will be summoned to appear in front of the court – the spatial sense – and not that the reader will be dragged, and then later the court will be dragged. It is important to know the correct sense of these connective words and phrases.

Of all temporal relations (TLINKs) in the English TimeBank, 11.2 % use a temporal signal in the original annotation (Table 5.3). It is important to note that some instances of signal expressions are used by more than one temporal link; see Table 5.8 for details. The most frequent signal word was “in”, accounting for 24.8 % of all signal-using TLINKs. However, only 13.3 % of occurrences of the word “in” have a temporal sense. The word “after” is far more likely to occur in a temporal sense (91.7 % of all occurrences).
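Per-word temporal-sense rates like those quoted above can be computed from sense-annotated candidate occurrences with simple counting. The sketch below uses invented toy counts (not TimeBank's) chosen to give rates close to those reported for in and after; the function name temporal_sense_rates is hypothetical.

```python
from collections import Counter


def temporal_sense_rates(occurrences):
    """occurrences: iterable of (candidate_word, used_as_temporal_signal).
    Returns the fraction of each word's occurrences that have a temporal sense."""
    total, temporal = Counter(), Counter()
    for word, is_temporal in occurrences:
        w = word.lower()
        total[w] += 1
        if is_temporal:
            temporal[w] += 1
    return {w: temporal[w] / total[w] for w in total}


# Invented toy annotations, not TimeBank counts.
toy = [("in", True)] * 2 + [("in", False)] * 13 + [("after", True)] * 11 + [("after", False)]
rates = temporal_sense_rates(toy)
print(round(rates["in"], 3), round(rates["after"], 3))  # 0.133 0.917
```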

As an aside, the notion that temporal signals might be easily picked out based upon word class may be dispelled by examining the distribution of parts-of-speech possessed by temporal signals – see Table 5.10. Part of speech is not a reliable disambiguator of sense, in this case.

5.4.4 Parallels to Spatial Representations in Natural Language

Time and space are related and often an event will be positioned in both. Language used for describing time and language used for describing space are often similar, not least in that they both use signals and often even use the same words as signals. Temporal signals relate a pair of temporal intervals, and spatial signals relate a pair of regions. Although not the focus of this chapter, it is useful to note the common and contrasting behaviours of temporal and spatial signals that emerged during investigation.

SpatialML [19] is an annotation scheme for spatial entities and relations in discourse. Among other things it includes elements for annotating relations between spatial entities.

Links in SpatialML may be topological or relative. Topological links include containment, connection and other links from a fixed set based on the RCC8 calculus. SpatialML relative links, on the other hand, express spatial trajectories between locations.

In the revised ACE 2005 SpatialML annotations, 97.5 % of all RLINKs (the SpatialML representation for a relative spatial link) have at least one accompanying textual signal (see Table 5.12). Compared to TimeBank’s 11.2 % of TLINKs having a signal, SpatialML relative links are much more likely to use an explicit signal than TimeML temporal relations. This may be because the mechanisms available in language for expressing temporal relations are wider than those for relating spatial entities. For example, to relate events in English, one may choose to use tense and aspect (which involve inflection or added auxiliaries) instead of adding a signal word. Furthermore, there are three spatial dimensions in which to describe an entity; in contrast, the arrow of time supplies a single unidirectional dimension, which limits the range of movements and relations available.

Unlike relative links, topological links rarely use a signal: only 1.85 % of them do. TimeML makes no distinction between temporal equivalents of relative and topological links.

This difference in signal usage rate between topological and relative links may be because topological links are used to express relations that we infer from world knowledge and do not lexicalise. In “A Ugandan village”, one does not need to explain that the village is in Uganda. Relative links define one region relative to another. The nature of the relation is not easy to discern and so needs to be made explicit.

Because spatio-temporal senses dominate the usage of many of the words in this class, work on temporal signals may provide insights for future researchers working on determining spatial labels using spatial signals. Later in this chapter (Sect. 5.6.4.3), we show how indications of spatial signal usage help discern temporal from non-temporal candidate signal words.

5.5 Adding Missing Signal Annotations

Given an idea of what signals are, and evidence of their utility in temporal relation typing, the next step was to attempt automatic signal annotation. This was a two-stage process: first identifying signal expressions that occur in a temporal sense, and then determining which pair of events/timexes each temporal signal co-ordinates. A preliminary approach to finding temporal signal expressions revealed that the dataset suffered from low annotation quality, so after outlining the preliminary approach, this section focuses on how the resources could be (and were) improved.

Upon examination of the non-annotated instances of words that usually occur as a temporal signal (such as after) it became evident that TimeBank’s signals are under-annotated. In an effort to boost performance, and as there is evidence of annotation errors in the source data, we revisited the original annotations.

This section outlines the signal expression discrimination task only briefly, focusing instead on corpus re-annotation; the next section is dedicated entirely to the discrimination problem.

5.5.1 Preliminary Signal Discrimination

The overall problem is to find expressions in documents that occur as temporal signals (a fuller problem definition is given in Sect. 5.6). We approached this by considering all occurrences of expressions from the closed class of expressions described above (i.e. candidate signals) and judging, for each instance, whether or not it had a temporal sense. Judgement was performed by a supervised classifier (maximum entropy), trained and evaluated using cross-validation, based on the features listed in Sect. 5.6.3.2.

Failure analysis of this initial approach suggested that the corpus was too poorly annotated to serve either as representative, solid training data for signal discrimination or as an evaluation set for a signal discrimination approach. Some re-annotation was necessary to improve the quality of the ground truth data. This section describes the approach to, and results of, that re-annotation.

5.5.2 Clarifying Signal Annotation Guidelines

There are three potential causes for the insufficient quality of TimeBank's signal annotations: annotator fatigue, insufficient annotation guidelines, or a poor definition of signals. As annotator fatigue depends on how an individual annotation exercise is conducted, and TimeML's signal definition is sufficient, we seek to clarify the annotation guidelines.

To clarify the guidelines, a thorough definition of temporal signals is needed. Although TimeML's definition is sufficient, this chapter offers an extended definition of temporal signals in Sect. 5.2.

Signal surface forms have a compound structure: a head and an optional qualifier. The head describes the general action of the signal phrase and may have an attached modifying phrase, the qualifier. Only the head should be annotated.

Example 13

“I arrived long after the party had finished.”

In Example 13, the word after is annotated, and the qualifier long is not. In TimeML, this would be annotated roughly as follows:

I arrived long <SIGNAL>after</SIGNAL> the party had finished.
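As a minimal sketch, the inline markup of Example 13 can be read back with Python's standard xml.etree.ElementTree. The sentence is wrapped in a hypothetical <s> root element so that it parses as XML, and real TimeML SIGNAL elements also carry attributes (such as sid) that are omitted here. The output shows that only the head after sits inside the SIGNAL element, while the qualifier long stays in the surrounding text.

```python
import xml.etree.ElementTree as ET

# Example 13, wrapped in a hypothetical <s> root so it is well-formed XML.
fragment = "<s>I arrived long <SIGNAL>after</SIGNAL> the party had finished.</s>"

def signal_heads_with_context(xml_text):
    """Return (preceding_word, signal_text) pairs for each SIGNAL element.

    preceding_word is the last word of the text between the previous
    element (or the start of the sentence) and the SIGNAL; it is where
    an unannotated qualifier such as "long" would appear.
    """
    root = ET.fromstring(xml_text)
    results = []
    preceding_text = root.text or ""
    for child in root:
        if child.tag == "SIGNAL":
            words = preceding_text.split()
            preceding_word = words[-1] if words else None
            results.append((preceding_word, child.text))
        # ElementTree stores the text after an element in its .tail attribute.
        preceding_text = child.tail or ""
    return results

print(signal_heads_with_context(fragment))
# prints [('long', 'after')]
```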

Further, a temporal signal has two arguments, which are timexes or events that are temporally related. Often both are explicit in the text immediately surrounding the signal; however, one may occur elsewhere, as an implied argument.

5.5.3 Curation Procedure

The goal is to create a firm ground truth for further investigation. Given the extended definition of a signal and the guideline clarifications just mentioned, this section details the ensuing exercise of hand-curating TimeBank to repair signal annotations.

A subset of signal words was selected for re-annotation. All instances of these words (both temporal and non-temporal) were re-examined and re-annotated with TimeML, adding EVENTs, TIMEX3s and SIGNALs where necessary to create a signalled TLINK. We refer to this version of TimeBank, with curated signal annotations, as TB-sig.

Evaluating correct classifications against erroneous reference data leads to artificially decreased performance. To ensure that the training data (which also serves as evaluation data in cross-validation) is correctly annotated, negative examples of signal words were checked manually. False negatives were removed by annotating them as TimeML signals and associating them with the appropriate TLINK, adding TLINKs and EVENTs where necessary.

Checking the entire corpus would be an exhaustive exercise. To increase the chance of finding missing annotations while limiting the search space during annotation, potentially high-impact signal words were prioritised. These were drawn from a set of signal phrases that fit the following criteria: (a) more than 10 instances in the corpus, and at least one of: (b) accuracy on positive examples less than 50 % or (c) accuracy on negative examples less than 50 % or (d) below-baseline classification performance. The data from this second pass is in Table 5.13.
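The prioritisation rule above is a conjunction of criterion (a) with a disjunction of (b), (c) and (d). The sketch below expresses it as a filter over hypothetical per-word statistics from a preliminary classifier run; the WordStats fields, the word names and all numbers are invented purely to exercise the function and are not TimeBank figures.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class WordStats:
    """Hypothetical per-word statistics from a preliminary classifier run."""
    text: str
    instances: int                  # occurrences of the word in the corpus
    pos_accuracy: Optional[float]   # accuracy on positive examples (None if no positives)
    neg_accuracy: Optional[float]   # accuracy on negative examples (None if no negatives)
    accuracy: float                 # overall classifier accuracy for this word
    baseline_accuracy: float        # e.g. most-common-class accuracy for this word

def is_high_impact(w: WordStats) -> bool:
    """Criterion (a), plus at least one of (b), (c) or (d)."""
    if w.instances <= 10:                                           # (a) needs > 10 instances
        return False
    low_pos = w.pos_accuracy is not None and w.pos_accuracy < 0.5   # (b)
    low_neg = w.neg_accuracy is not None and w.neg_accuracy < 0.5   # (c)
    below_baseline = w.accuracy < w.baseline_accuracy               # (d)
    return low_pos or low_neg or below_baseline

# Invented statistics for illustration only.
words = [
    WordStats("word_a", 20, 0.30, 0.95, 0.80, 0.70),  # fails (b): selected
    WordStats("word_b", 8, 0.10, 0.20, 0.50, 0.90),   # too rare for (a): skipped
    WordStats("word_c", 40, 0.90, 0.90, 0.90, 0.85),  # no weakness: skipped
]
print([w.text for w in words if is_high_impact(w)])
```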

5.5.4 Signal Re-Annotation Observations

During curation, some observations were made regarding specific signal expressions. In some cases, these observations led to the suggestion of a feature that may help discriminate temporal and non-temporal uses of a certain expression. This section reports those observations.

Previously

TimeBank contains eight instances of the word previously that were not annotated as a signal. All of these were used as temporal signals. The word takes only one event or time as its direct argument, which it places temporally before an event or time that is in focus. For example:

“X reported a third-quarter loss, citing a previously announced capital restructuring program”

In this sentence, the second argument of previously is “announced”, which is temporally situated before its first argument (“reported”). When previously occurs at the top of a paragraph, the temporal element that has focus is either document creation time or, if one has been specified in previous discourse, the time currently in focus.

After

Of the nineteen instances of this word not annotated as temporal, only three were actually non-temporal; these used a different sense of the word, whereas the temporal signals are adverbial, with a temporal function. Two of the non-temporal cases used a positional sense. The last occurred in the phrasal verb to go after: “whether we would go after attorney’s fees”.

Throughout

All the cases of throughout not marked as signals were not temporal signals. Four were found in the newswire header, which carries meta-information in a controlled language heavily laden with acronyms and jargon and is not prose.

Early

Three of the negative instances of early are possibly not correctly annotated; the other 32 negatives are accurate. Of these three, one has a signal use, in part of a longer signal phrase “as early as”. The remaining two cases look like temporal signals. However, they are adjectival and only take one argument; there is no comparison, so we cannot say that the argument event is earlier than anything else. For this reason, they are deemed correctly annotated as non-signals.

When

There are 35 annotated and 27 non-annotated occurrences of this phrase. It indicates either an overlap between intervals, or a point relation that matches an interval’s start. Twenty-three of the twenty-seven non-annotated occurrences are used as temporal signals. Two of the remaining four are in negated phrases and are not used to link an interval pair; for example, “did not say when the reported attempt occurred”. The other two are used in context-setting phrases, e.g. “we think he is someone who is capable of rational judgements when it comes to power” (where when it comes to occurs in the sense of “with regard to”), which are not temporal in nature.

While

The cases of while that have not been annotated as a signal (the majority class, 33 to 6) are often used in a contrastive sense. This does suggest that the connected events have some overlap, often between statives. For example, “But while the two Slavic neighbours see themselves as natural partners, their relations since the breakup of the Soviet Union have been bedeviled”. As two states described in the same sentence are likely to overlap temporally, and any events or times outside or bounding these states will be related to the states, linking the two states with a “roughly simultaneous” relation is unlikely to contribute to TLINK annotation; the closest suitable label is TempEval’s overlap relation [20].

Example 14

“nor can the government easily back down on promised protection for a privatized company while it proceeds with ...”

The cases of while that were not of this sense were easier to annotate. Sometimes it was part of a temporal expression, as in “for a while”. At other times it was used not in a contrastive sense but in a modal one (see Example 14). The four cases of non-contrastive usage were annotated as temporal signals.

Before

Three of the ten negative examples are correctly annotated. They are before in the spatial sense of “in front of” (as in “The procedures are to go before the Security Council next week”) and also a logical before that does not link instantiated or specific events (“before taxes”). The remaining seven unannotated examples of the word are all temporal signals. These directly precede either an NP describing a nominalised event, or a subordinate clause (e.g. (IN before, S) – see Fig. 5.3).

Both cases of before that were not temporal signals were parsed and function tagged as if they were. They were given the structure (PP-TMP, (IN before) ...) as shown in Fig. 5.4.

Until

All fourteen non-annotated instances of until should have been annotated as temporal signals. This word suggests a TimeML ibefore relation, unless qualified otherwise by something like “not until” or “at least until”.

Already

There were thirteen positive examples of already. All of the non-annotated examples had a non-temporal sense as per our description of temporal signals. The word tends to be used for emphasis, but can also suggest a broad “before DCT” position, which goes without saying for any past and present tensed events. As already can be removed without changing the temporal links present in a sentence, no further examples of this were annotated beyond the thirteen present in TimeBank.

Meanwhile

This word tends to refer to a reference or event time introduced earlier in discourse, often from the same sentence. As well as a temporal sense, it can have a contrastive “despite”-like meaning. It is often used to link state-class events, which are difficult to link unless one of their bounds is specific (see Example 15). In this case, it is not possible to describe the nature of the relation between the start and endpoints of either event interval, and so meanwhile suggests some kind of temporal overlap but nothing more. Sometimes meanwhile is used with no previous temporal reference. In these cases, the implicit argument is DCT. Five of the ten non-annotated meanwhiles were temporal signals.

Example 15

Obama was president. Meanwhile, I was a musician.

Again

This word indicates recurrence, and every occurrence of again in TimeBank that is not annotated as a temporal signal is used for this purpose. No instances of “again” were annotated.

Former

This word indicates a state that persisted before DCT or current speech time and has now finished. The construction generally found is an NP containing an optional determiner, followed by former and then a subordinate NP, which may be annotated as an EVENT of class state. This configuration suggests a TLINK that places the state-class event before the time of utterance.

Example 16

“The San Francisco sewage plant was named in honour of former President Bush.”

In Example 16, there is a state-class event – President – that at one time has applied to the named entity Bush. The signal expression former indicates that this state terminated before the time of the sentence’s utterance.
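The construction just described lends itself to a simple pattern over part-of-speech tags. The sketch below is a hypothetical illustration, not the curation procedure itself: the tags for Example 16 are supplied by hand, skipping intervening adjectives is our own assumption, and the returned (event, relation, anchor, signal) tuple is an ad hoc stand-in for a TLINK relating the state to DCT.

```python
# Hand-tagged tokens from the end of Example 16 (PTB tags supplied manually).
tagged = [("in", "IN"), ("honour", "NN"), ("of", "IN"),
          ("former", "JJ"), ("President", "NNP"), ("Bush", "NNP"), (".", ".")]

def former_tlinks(tokens):
    """Hypothetical matcher for '(DT) former NP' that proposes state-before-DCT links."""
    links = []
    for i, (word, _) in enumerate(tokens):
        if word.lower() != "former":
            continue
        # Assumption: skip adjectives between 'former' and the noun,
        # e.g. "former long-serving chairman".
        j = i + 1
        while j < len(tokens) and tokens[j][1].startswith("JJ"):
            j += 1
        # The first noun is taken as the candidate state-class EVENT.
        if j < len(tokens) and tokens[j][1].startswith("NN"):
            links.append((tokens[j][0], "BEFORE", "DCT", word))
    return links

print(former_tlinks(tagged))
# prints [('President', 'BEFORE', 'DCT', 'former')]
```

A real implementation would take the EVENT from the TimeML annotation rather than from the first noun, since not every noun after former is annotated as a state.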

Three-quarters of the non-annotated instances of former in TimeBank are temporal signals. An example non-temporal occurrence is shown in Fig. 5.5.

Recently

Although recently is a temporal adverb, it cannot be applied to posterior-tensed verbs (using Reichenbach’s tense nomenclature [21]). In the corpus, it is seen only in reported speech or with verbal events that happened before DCT. Recently adds a qualitative distance between event and utterance time, but is of reduced use when tense information is already available.

The phrase “Until recently” appears awkward when cast as a temporal signal but can be interpreted as “before DCT”, with the interval’s endpoint being close to DCT. In this case, recently functions as a temporal expression, not a signal.

Only one of the non-annotated recentlys in TimeBank is a temporal signal. The exception, “More recently”, includes a comparative and is annotated as a TIMEX3; both this phrase and, e.g., “less recently” suggest a relation to a previously-mentioned (and in-focus) past event. As a result, we posit that recently on its own behaves as an abstract temporal point best annotated as a timex (as seen in the behaviour of “until recently”: until is the signal here, and recently a TIMEX3 of value PAST_REF). Structures such as [comparative] recently may be interpreted as a qualified temporal signal, as they convey information about the relative ordering of the event that they dominate compared with a previously mentioned interval.

5.5.5 TB-Sig Summary

Upon examination of the non-annotated instances of words that often occur as a temporal signal (such as after) it became evident that TimeBank’s signals are under-annotated. As we are certain of some annotation errors in the source data, we revisited the original annotations. A subset of signal words was selected for re-annotation. This set consisted of signals that were ambiguous (occurred temporally close to 50 % of the time) or that we expected, based on informal observations, would yield a number of missed temporal annotations. All temporal instances of these words were re-annotated with TimeML, adding EVENTs, TIMEX3s and TLINKs where necessary to create a signalled TLINK.

A single annotator checked the source documents and annotated 69 extra signals, as well as adding 34 events, 1 temporal expression and 48 extra temporal links. This left 712 SIGNALs that support TLINKs and 780 TLINKs that use a signal, with 54 signals being used by more than one TLINK. No events, timexes or signals were removed.

A summary of frequent candidate signal expressions is given in Table 5.14. The corpus is available via http://derczynski.com/sheffield/. Given this new, curated ground truth for temporal signal annotation, we are now ready to approach automatic signal annotation: first distinguishing temporal from non-temporal candidate expressions, and then linking signal expressions with the interval annotations that they co-ordinate.

5.6 Signal Discrimination

The words and phrases that can act as temporal signals do not always convey a temporal relation. Some may indicate possession, or a spatial relation (see Sect. 5.4.4). If we are to automatically annotate signals, we need to develop a method for choosing which words and phrases in a discourse are temporal signals. This task, of finding temporal signal phrases, is called temporal signal discrimination.

This section begins with a problem definition and description of the method we adopted to address the problem. An automatic signal discrimination technique is trained using TimeML annotations. Finally, we present results showing automatic accuracy near or above gold-standard corpus IAA.

5.6.1 Problem Definition

The temporal signal discrimination problem is as follows: Given a closed class of signal words or phrases and a discourse annotated with times and events, identify the temporal signals. This task resembles word sense disambiguation [22, 23], in that given a word or phrase that may have multiple senses and its context, we have to determine if the active sense in context is a temporal one.

5.6.2 Method

The approach taken to automatic temporal signal discrimination is a supervised learning one.

We first agreed on a corpus and a set of words that could occur as signals. Next, we determined a set of feature variables that describe a word in context, and described each occurrence of a potential signal phrase in the corpus as a feature vector. Each instance was assigned a binary label: positive if it is TimeML-annotated as a signal associated with a TLINK, and negative otherwise. Finally, we trained a classifier on these instances and evaluated its performance.
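The instance-building step can be sketched as follows, assuming tokenised text and a set of token positions that are annotated as TLINK-associated SIGNALs. The five-word CANDIDATES set is a hypothetical subset of the closed class, and only three lexical features are shown. Each instance is a (feature dictionary, label) pair, the format that NLTK's classifiers accept for training.

```python
# Hypothetical subset of the closed class of candidate signal expressions.
CANDIDATES = {"after", "before", "when", "while", "until"}

def make_instances(tokens, tlinked_signal_positions):
    """Return (feature_dict, label) pairs for every candidate occurrence.

    The label is True only if the token is annotated as a SIGNAL that is
    associated with a TLINK; every other candidate occurrence is False.
    """
    instances = []
    for i, tok in enumerate(tokens):
        if tok.lower() not in CANDIDATES:
            continue
        features = {
            "text": tok.lower(),
            "previous_token": tokens[i - 1].lower() if i > 0 else "<s>",
            "next_token": tokens[i + 1].lower() if i + 1 < len(tokens) else "</s>",
        }
        instances.append((features, i in tlinked_signal_positions))
    return instances

tokens = "The procedures go before the council , before noon".split()
# Suppose only the second "before" (token index 7) is a TLINK-associated SIGNAL.
for feats, label in make_instances(tokens, {7}):
    print(feats["text"], feats["next_token"], label)
```

The first before (spatial sense) yields a negative instance and the second a positive one, mirroring the kind of ambiguity the classifier must resolve.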

5.6.3 Discrimination Feature Extraction

As well as surface features from TimeML, syntactic features were used as part of feature extraction for signal discrimination.

5.6.3.1 Parsing and Other Syntactic Annotation

Syntactic information is likely to be of use in the signal discrimination task. Lapata [24] had some measure of success at learning a temporal relation classifier using sentences that contained signals, with syntactic information as a core part of their feature set. Their work used the BLLIP corpus, which contains around 30 million words from Wall Street Journal articles and constituent parses generated by the Charniak parser [25].

To partially replicate this source information, we parsed the text of the TimeBank corpus. Note that TB-sig and TimeBank differ only in the annotations that they make over text; the actual words in both corpora are the same, and in the same order. To do this, we removed markup from each document and separated the remaining discourse into sentences using the Punkt sentence tokeniser [26], as part of CAVaT preprocessing [11]. Each sentence was then word-tokenised using NLTK’s treebank tokeniser. To maintain word alignment consistency with the non-parsed text stored in CAVaT, we needed a parser that accepted external tokenisation. We chose the Stanford parser [27] for generation of constituent parses.

In addition to constituent parses, the BLLIP corpus includes function tags. These are optional labels [28] attached to nodes in a constituent tree. Function tags extend a constituent tag by providing additional information about the role it plays in a sentence. They fall into three main groups: syntactic, semantic and topical [29]. Of direct interest to us is the -TMP tag, which indicates temporal function. An example of this tag is given in Fig. 5.6, where the first children of an SBAR-TMP node comprise a temporal signal.

Early work on function tag assignment in conjunction with the Charniak parser was performed by Blaheta and Charniak [30]. Their approach found that choosing whether or not to assign any tag was a significant and difficult component of the task. Thus, evaluations are split into “with-null” and “no-null” figures, where with-null refers to tag assignment accuracy including the assignment of no tag to untagged constituents and no-null is the proportion of correctly-tagged constituents excluding non-tagged nodes. We refer to no-null performance figures when discussing taggers. The initial Blaheta tagger had an F-measure of 67.8 % on the semantic form/function category, which includes the TMP tag.
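To make the with-null/no-null distinction concrete, here is a minimal sketch under one common reading of the two measures: with-null accuracy counts every constituent (so a correct "no tag" decision scores), while no-null precision, recall and F-measure consider only constituents that carry a tag in the gold standard or the output. Blaheta and Charniak's exact scoring may differ in detail, and the tag sequences are invented.

```python
def function_tag_scores(gold, predicted):
    """gold, predicted: parallel lists holding one function tag (or None) per constituent."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must be the same length")
    # with-null: every constituent counts; predicting no tag for an untagged node is correct.
    with_null_acc = sum(g == p for g, p in zip(gold, predicted)) / len(gold)
    # no-null: ignore nodes that are untagged in both gold and output.
    correct = sum(g is not None and g == p for g, p in zip(gold, predicted))
    n_pred = sum(p is not None for p in predicted)
    n_gold = sum(g is not None for g in gold)
    precision = correct / n_pred if n_pred else 0.0
    recall = correct / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return with_null_acc, precision, recall, f1

# Invented tags: most nodes are untagged, which inflates the with-null figure.
gold      = [None, None, None, "TMP", None,  "LOC"]
predicted = [None, None, None, "TMP", "TMP", None]
print([round(x, 3) for x in function_tag_scores(gold, predicted)])
```

Because most constituents carry no function tag, the with-null figure (0.667 here) flatters the tagger compared with the no-null F-measure (0.5), which is why the no-null figures are the more informative ones for comparing taggers.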

We wanted a function tagger with good TMP tagging performance, which meant choosing among the available taggers. Musillo [31] simultaneously parsed and tagged text using a Simple Synchrony Parser and an extended tag set. This gave lower results than Blaheta’s original attempt, though using input sentences annotated by an SVM tagger yielded a marginal improvement. Blaheta’s final tagger [32] improved semantic tagging to 83.4 % F-measure, which was comparable to later work in which overall tagging performance increased [33, 34]. As the final Blaheta tagger is freely available and openly distributed, we used it to augment our constituency parser (the Stanford parser [27]).

We only treated as positive examples signals that were associated with a TLINK. Signals that only provided information regarding event cardinality, or to subordinate or aspectual links, were ignored. Signals with text not in our closed class of signal words and phrases were ignored.

5.6.3.2 Basic Feature Set

Our initial features were both syntactic and lexical; a list of them is given below. Lexical and TimeML-based features were extracted directly from a CAVaT database constructed from TimeBank [11]. We use NLTK’s built-in Maximum Entropy classifier.

a. Part-of-speech tag from the PTB tagset [35]. (sig_pos)
b. Function tag from the Blaheta tagger; if there is more than one and the set includes TMP, assign TMP, otherwise assign the first listed. (sig_ftag)
c. Constituent label and function tag of the parent node in the parse tree (two features). (parent_pos, parent_ftag)
d. Constituent label and function tag of the grandparent node in the parse tree (two features). (gparent_pos, gparent_ftag)
e. Is there any node with the TMP function tag between this token and the parse tree root? (tmplabel_in_path)
f. Signal text. (text)
g. Text of the next token in the sentence (if there is one). (next_token)
h. Text of the previous token in the sentence (if there is one). (previous_token)
i. Is there a TIMEX3 in the n following tokens? (timex_in_n_after)
j. Is there an EVENT in the n following tokens? (event_in_n_after)
k. Is there a TIMEX3 in the n preceding tokens? (timex_in_n_before)
l. Is there an EVENT in the n preceding tokens? (event_in_n_before)
m. The Stanford dependency relation of the candidate word to its parent.

In our work, \(n=2\) for the interval proximity features, based on an informed guess after looking at the data. The optimal value, which may depend on the direction of context and the type of interval (event vs. timex) searched for, is left to future work.
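The following sketch shows how features a to l might be computed for one candidate token with \(n=2\). It is an illustration under stated assumptions, not the chapter's extraction code: the nested-tuple tree format, the helper names (split_label, pick_ftag, leaves, extract_features) and the hand-built parse are hypothetical, function tags are assumed to be appended to labels as in SBAR-TMP, and feature m (the dependency relation) is omitted because it needs a dependency parse.

```python
from typing import List, Optional, Tuple

def split_label(label: str) -> Tuple[str, List[str]]:
    """'SBAR-TMP' -> ('SBAR', ['TMP']). Labels such as -NONE- are not handled."""
    constituent, *tags = label.split("-")
    return constituent, tags

def pick_ftag(tags: List[str]) -> Optional[str]:
    """Rule from feature b: TMP if present among the tags, else the first tag, else None."""
    if "TMP" in tags:
        return "TMP"
    return tags[0] if tags else None

def leaves(tree, ancestors=()):
    """Yield (PoS, word, ancestor labels from root to parent) for each leaf.

    Hypothetical format: internal nodes are (label, [children]); leaves are (PoS, word).
    """
    label, children = tree
    if isinstance(children, str):
        yield label, children, list(ancestors)
        return
    for child in children:
        yield from leaves(child, ancestors + (label,))

def extract_features(tree, index, event_positions, timex_positions, n=2):
    toks = list(leaves(tree))
    pos, word, ancestors = toks[index]
    sig_pos, sig_tags = split_label(pos)
    parent, parent_tags = split_label(ancestors[-1])
    gparent, gparent_tags = split_label(ancestors[-2]) if len(ancestors) > 1 else (None, [])
    following = range(index + 1, min(index + 1 + n, len(toks)))
    preceding = range(max(0, index - n), index)
    return {
        "sig_pos": sig_pos,                                        # a
        "sig_ftag": pick_ftag(sig_tags),                           # b
        "parent_pos": parent, "parent_ftag": pick_ftag(parent_tags),      # c
        "gparent_pos": gparent, "gparent_ftag": pick_ftag(gparent_tags),  # d
        "tmplabel_in_path": any("TMP" in split_label(a)[1] for a in ancestors),  # e
        "text": word.lower(),                                      # f
        "next_token": toks[index + 1][1] if index + 1 < len(toks) else None,  # g
        "previous_token": toks[index - 1][1] if index > 0 else None,          # h
        "timex_in_n_after": any(j in timex_positions for j in following),    # i
        "event_in_n_after": any(j in event_positions for j in following),    # j
        "timex_in_n_before": any(j in timex_positions for j in preceding),   # k
        "event_in_n_before": any(j in event_positions for j in preceding),   # l
    }

# "I arrived before she left": EVENTs at token positions 1 (arrived) and 4 (left).
tree = ("S", [("NP", [("PRP", "I")]),
              ("VP", [("VBD", "arrived"),
                      ("SBAR-TMP", [("IN", "before"),
                                    ("S", [("NP", [("PRP", "she")]),
                                           ("VP", [("VBD", "left")])])])])])
feats = extract_features(tree, 2, event_positions={1, 4}, timex_positions=set())
print(feats["parent_pos"], feats["parent_ftag"], feats["tmplabel_in_path"])
# prints: SBAR TMP True
```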

There are 102 entries in our closed class of signal words/phrases; this set is kept constant throughout all experiments. In TimeBank there are 7 014 mentions of the members of this set, including both temporal and non-temporal mentions.

5.6.3.3 Extended Feature Set

Curation of signals, as detailed in Sect. 5.5, led to some direct observations about specific signal words. In some cases, these observations suggested specific sources of signal discrimination information that could potentially be translated into features. From the observations above, the new features that could be added were:

n. Flag indicating whether the signal text is in a verb group (before, after) (in_verb_group)
o. Flag indicating whether the token is at the top of a paragraph (previously)
p. Flags indicating whether the preceding or following word(s) are part of a verb group (after) (following / preceding_in_verb_group)
q. What is the highest-level subtree that begins at the next token? (before) (following_subtree)
r. What is the highest-level subtree that ends at the preceding token? (preceding_subtree)
s. PoS of the next token and previous token (before, after) (following / preceding_pos)
t. PoS of the next event within n tokens (before, former) (next_event_pos)
u. Type (TimeML class) of the next event within n tokens (former, meanwhile) (next_event_class)
v. TimeML tense and aspect of the next event within n tokens (already) (next_event_tense / aspect)
w. Does an NP begin at the next token? (former) (np_next)
x. Is the preceding token a comparative, i.e., is it one of JJR or RBR? (recently) (preceding_comparative)

All of these were implemented and added as features, except the paragraph-top feature (owing to the lack of a reliable document segmentation tool). In addition, we removed some noisy features that seemed to be causing overfitting on our sparse data set: the offset of the word within its sentence, and the preceding and following token texts. We used the full constituent tag of subtrees, including any function tags, for the preceding_subtree and following_subtree features.

5.6.3.4 Multivalent Tags

In a minority of cases, constituents and terminals were assigned multiple function tags. For example, values such as PRD-TPC-NOM or TMP-SBJ would be appended. Noticing that these instances were assigned high weights by a Naïve Bayes classifier, we measured error reduction on multiple variations of subtree tag feature representations. Results are shown in Table 5.15. It was found that reducing data sparsity by providing two separate features per subtree (for constituent tag and function tag) provided best overall performance for MaxEnt discriminators, but ID3 benefited most from the feature extraction that gave the sparsest values – full subtree labels.
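The representations compared above can be sketched as two small feature builders: one keeps the whole, possibly multivalent, label as a single value (the sparsest option), and the other splits it into a constituent tag and a function-tag string, giving two features per subtree. The function names and the parent prefix are hypothetical, and Table 5.15 may have compared further variants not shown here.

```python
def full_label(label, prefix):
    """Sparsest representation: the whole label, e.g. 'S-TMP-SBJ', is one feature value."""
    return {f"{prefix}_label": label}

def split_label_features(label, prefix):
    """Two features per subtree: the constituent tag and the (possibly multivalent) function tag."""
    constituent, *tags = label.split("-")
    return {f"{prefix}_label": constituent,
            f"{prefix}_function": "-".join(tags) if tags else None}

for label in ["SBAR-TMP", "SBAR", "S-TMP-SBJ"]:
    print(full_label(label, "parent"), split_label_features(label, "parent"))
```

Under the split representation, SBAR-TMP and SBAR share the value SBAR for parent_label, so the classifier sees more examples per value; under the full representation each multivalent combination is a separate, rarer value.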

5.6.3.5 Choice of Learning Algorithm

Signal discrimination is a binary classification problem: is a given word or phrase a temporal signal or not? We have constrained the set of words we attempt to classify by defining a closed class of signal words and described a set of features with which we will represent candidate words and context. We now need to choose a binary classification algorithm. We use a Naïve Bayes classifier, decision trees, a maximum-entropy classifier and adaptive boosting.

For rapid learning and quick feedback, we worked with the Naïve Bayes classifier. Naïve Bayes models are computationally cheap to learn. Their inductive bias includes the independence assumption: that all features are independent of each other given the class. This is not true in our case, given the heavily interdependent nature of most of our features: well-formed syntactic structures are inherently constrained by grammar, and the values of many of our features depend on syntax at multiple places in the same sentence or paragraph. For example, the part of speech of any given token has some bearing on the part of speech of the following one, and neither is independent of the parse tree of the sentence in which they occur. We also use decision tree classifiers, which do not have this particular bias and are computationally quick to learn, but do not always cope well with noise. Both ID3 and C4.5 variants are used; C4.5 attempts to deal with noise in training data by pruning the tree after construction [36].
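For reference, the standard naïve Bayes decision rule makes the independence assumption explicit: each feature value \(x_i\) is conditioned only on the class \(y\), never on the other features, so correlated features such as neighbouring parts of speech are effectively counted more than once. The notation below is ours, not the chapter's, with \(m\) features per candidate word.

```latex
\hat{y} \;=\; \operatorname*{arg\,max}_{y \,\in\, \{\text{signal},\ \text{non-signal}\}}
  \; P(y) \prod_{i=1}^{m} P(x_i \mid y)
```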

We also evaluate performance of our feature set with a maximum entropy classifier. This regression-based model assumes low collinearity between features, which is a less constraining assumption than that of the Naïve Bayes classifier, though problems may arise if we use highly-correlated features. Finally, we use adaptive boosting with decision stumps [37, 38], which is constrained to binary classification and can yield high-performance results. Adaptive boosting reduces the impact of the typically computationally intensive SVM-learning process and typically displays little overfitting, which is helpful with smaller datasets such as ours.
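The maximum entropy model is a log-linear (multinomial logistic regression) model. In the standard formulation sketched below, using our own notation, binary feature functions \(f_j(\mathbf{x}, y)\) pair a context feature with a class and are weighted by \(\lambda_j\). Because all weights are fitted jointly, correlated features are not simply multiplied together as in naïve Bayes, although strongly collinear features can make the individual weights unstable.

```latex
P(y \mid \mathbf{x}) \;=\;
  \frac{\exp\!\Big(\sum_{j} \lambda_j \, f_j(\mathbf{x}, y)\Big)}
       {\sum_{y'} \exp\!\Big(\sum_{j} \lambda_j \, f_j(\mathbf{x}, y')\Big)}
```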

Performance was improved by removing features that have a high number of values (for example, the text of the token after a signal). We suspect this is due to them leading to overfitting.

5.6.4 Discrimination Evaluation

We have described how we trained a classifier using cross-validation. We evaluated performance using a held-out evaluation set, and determined scores by counting correct classifications and measuring both percentage of correctly classified instances and also the error-reduction compared to a baseline.

5.6.4.1 Baselines

To evaluate the performance of our approaches, it is useful to describe some simple annotation methods as baselines. A summary of our baselines is given in Table 5.16 and we explain each of them below.

One simple baseline is to find the most common classification and assign this to all instances. In our corpus, instances of phrases from our list of potential signals are used non-temporally nearly all the time (out of 6 091 instances of potential signal phrases, only 688 are annotated as being temporal signals in TimeBank – 11.3 %) and so our most common case is to classify everything as not being a temporal signal, regardless of the signal text.

We also use baselines that mark all words found in the signal phrase list as temporal signals if they have a part-of-speech tag of RB or IN, according to NLTK’s built-in maximum entropy tagger. Values are quoted for overall classification accuracy, as well as accuracy on positive examples (the minority of our training data).

5.6.4.1.1 Most Common Class

The training set is confined to just signal annotations in TimeBank/TB-sig, that are also in the closed class of signal expressions detailed above in Table 5.9. This introduces an inherent performance cap to the overall approach, but assumes no knowledge of whichever corpus is being used as the evaluation set. Of 4 576 training instances, 3 969 are negative (non-temporal) and 607 are positive (having a temporal meaning). The most-common-class is negative and if we assign this label to all mentions of members of the set, classifier accuracy is 86.7 % but no signals are identified (giving an effective F1 of zero if we imagine this as a signal recognition task); not a very informative baseline.
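The most-common-class figures follow directly from the class counts. The short sketch below reproduces the 86.7 % accuracy from 607 positive and 3 969 negative instances and shows why the effective F1 is zero: nothing is predicted positive, so there are no true positives and recall is 0. As a second, hedged usage, assuming the instance total stays at 4 576 after re-annotation, 677 positives give the 85.2 % TB-sig baseline reported in Sect. 5.6.4.2.

```python
def majority_baseline(n_positive, n_negative):
    """Accuracy and positive-class F1 when every instance is labelled negative."""
    accuracy = n_negative / (n_positive + n_negative)
    # No instance is predicted positive: there are no true positives, recall is 0,
    # and F1 for the positive (signal) class is conventionally taken as 0.
    f1_positive = 0.0
    return accuracy, f1_positive

acc, f1 = majority_baseline(607, 3969)
print(f"TimeBank: accuracy = {acc:.1%}, F1 = {f1}")
# prints TimeBank: accuracy = 86.7%, F1 = 0.0

acc_sig, _ = majority_baseline(677, 4576 - 677)  # assumes the total is unchanged
print(f"TB-sig: accuracy = {acc_sig:.1%}")
# prints TB-sig: accuracy = 85.2%
```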

5.6.4.1.2 Class Member and Signal Word Tag

Of all leaf labels, IN and WRB have the highest proportion of signals (Table 5.10). To this end, we have two simple baselines, where we count a word as a temporal signal if its constituent tag is IN or WRB and it is found in the closed class of signals. Performance for these is given in Table 5.16. For IN, we have 25.6 % overall accuracy, correctly identifying text that is a temporal signal 81.2 % of the time. For WRB, we achieve 86.9 % accuracy, but only 5.77 % on the positive examples.

5.6.4.1.3 Parent Is SBAR-TMP

As mentioned in Sect. 5.4.2.1, one might expect an SBAR-TMP subtree to begin with a temporal signal and also contain one of the signal’s arguments (see also Fig. 5.6). As we can use our closed class of signal words to differentiate the signal head, signal qualifier and event/timex argument, we can look for leaves whose parent is an SBAR with TMP in its function tags. This is our SBAR-TMP baseline, which performs at 87.0 % accuracy overall, with 9.88 % on positives: better than WRB, but still poor.

5.6.4.1.4 Parent Has Temporal Function

Limiting ourselves to just signals in subtrees labelled SBAR may be a short-sighted manoeuvre. We added a baseline that labels signal candidates as temporal if their parent has a temporal function label. This baseline achieves classification accuracy of 84.5 % and a 72.7 % accuracy on the positive examples; see Table 5.16.
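The rule-based baselines above reduce to simple predicates over a candidate's text, its tag and its parent's label. The sketch below is a hypothetical rendering: CLOSED_CLASS is a five-word stand-in for the 102-entry class, labels are assumed to carry function tags after hyphens (as in SBAR-TMP), and the four example candidates are invented to show where the baselines disagree.

```python
# Hypothetical five-word stand-in for the closed class of signal expressions.
CLOSED_CLASS = {"after", "before", "when", "while", "until"}

def tag_baseline(word, pos, target):
    """Class member and signal word tag: temporal iff in the class and tagged `target`."""
    return word.lower() in CLOSED_CLASS and pos == target

def sbar_tmp_baseline(word, parent_label):
    """Parent is SBAR-TMP: the parent is an SBAR with TMP among its function tags."""
    constituent, *ftags = parent_label.split("-")
    return word.lower() in CLOSED_CLASS and constituent == "SBAR" and "TMP" in ftags

def temporal_parent_baseline(word, parent_label):
    """Parent has temporal function: any parent constituent with a TMP function tag."""
    _, *ftags = parent_label.split("-")
    return word.lower() in CLOSED_CLASS and "TMP" in ftags

# (word, PoS tag, parent label) for a few invented candidates.
candidates = [("before", "IN", "SBAR-TMP"), ("before", "IN", "PP-LOC"),
              ("when", "WRB", "WHADVP"), ("after", "IN", "PP-TMP")]
for word, pos, parent in candidates:
    print(word, parent,
          tag_baseline(word, pos, "IN"), tag_baseline(word, pos, "WRB"),
          sbar_tmp_baseline(word, parent), temporal_parent_baseline(word, parent))
```

The IN baseline accepts the spatial before under PP-LOC, which illustrates why it finds most true signals but has low overall accuracy, while the SBAR-TMP baseline is precise but misses signals under other temporal constituents such as PP-TMP.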

5.6.4.2 Performance

With our original feature set and based on pre-curation data (i.e. TimeBank v1.2), we achieved a 40 % error reduction in signal discrimination relative to a competitive baseline, as seen in Table 5.17. For the general annotation task, naïve Bayes performed best, with good error reduction overall (26.5 %) and a similar improvement in recognition of positive examples (20.9 %), something that other classifiers did not perform so well with.

With the original feature set, models learned over TB-sig data performed as shown in Table 5.18. Performance using the extended feature set is detailed in Table 5.19, again based on TB-sig.

Our extra annotations introduce new signal instances for the extra terms that we have annotated, reducing the baseline to 85.2 % accuracy (677 positives, compared to 607 before re-annotation) from 86.7 % before – see Table 5.18. Performance using TB-sig is overall better (compared to Table 5.17), which we attribute to having a better-stated problem and less misleading data. Error reduction rate is now over 40 %, with overall accuracy just under 92 % and up to 75 % on the positive examples. This is better than performance on the original TimeBank data and comparable to the IAA figure of 0.77 for TimeBank’s initial SIGNAL annotation. C4.5 performs particularly well, reaching near-highest error reduction rate and good accuracy on positive examples.
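Error reduction, in its usual definition, is the share of the baseline's errors that a system removes: \((e_{\text{base}} - e_{\text{sys}}) / e_{\text{base}}\), where \(e = 1 - \text{accuracy}\). Assuming the tables use this definition, the sketch below checks that an accuracy just under 92 % against the 85.2 % TB-sig baseline does correspond to over 40 % error reduction; the 91.9 % figure is illustrative and not read from Table 5.18 or 5.19.

```python
def error_reduction(baseline_acc, system_acc):
    """Relative error reduction: the share of the baseline's errors removed by the system."""
    baseline_err = 1.0 - baseline_acc
    system_err = 1.0 - system_acc
    return (baseline_err - system_err) / baseline_err

# Illustrative accuracy "just under 92 %" against the 85.2 % TB-sig baseline.
print(f"{error_reduction(0.852, 0.919):.1%}")
# prints 45.3%
```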

The extended feature set, however, does not improve performance in the majority of cases, despite having been derived from a principled investigation. Further analysis and work are required to improve upon these signal discrimination results.

5.6.4.3 Useful Features

A sample post-classification analysis of feature weights, using TB-sig and the extended feature set, is presented in Table 5.20, taken from the last of five cross-validation passes. This is from the construction of a model using the whole signal-labelled corpus with a naïve Bayes classifier. The signal text itself is a particularly strong indicator for those candidate words that occur much more often as temporal signals than not. Wh-adverb signals, and wh-adverb phrases that contain the candidate signal expression, are also strong indicators of a temporal meaning (features signal_label, parent_label and ending_subtree_label); this may be because words such as *when* have only temporal senses. A timex or a past-tense event occurring after the signal also indicates that the candidate is temporal (timex_in_2_after). When the parent constituent, or the largest constituent beginning at the candidate, has a temporal function, the candidate word is more likely to be temporal (parent_function, starting_subtree_function). The -TMP function tag helps to indicate a temporal signal when it dominates the candidate signal word (tmpfunction_in_path). Being followed by a dollar amount suggests that a candidate is not temporal (following_label = $), as in the non-temporal use “Shares closed at $ 50”; the high weight of this attribute-value pair is likely influenced by the high proportion of financial reporting in TimeBank, which takes a significant part of its text from the Wall Street Journal.

Words and phrases within a syntactic structure that has a spatial function (e.g. -LOC) contra-indicate a temporal meaning. This is consistent with the observation that members of our class of signal words often have both temporal and spatial meanings. Further, an adjacent structure with a spatial function (-EXT or -LOC) suggests a temporal function in the candidate word. This suggests that collocation-based approaches may not correctly discriminate temporal from non-temporal signals; syntactic parsing is required to detect these functional nuances. Having NX (indicating the head of a complex NP) as a parent can indicate a signal; this could occur where a signal precedes a nominalised event, as in “before the explosion”. Finally, preceding a verb may indicate a temporal signal, reflecting the signal’s adverbial nature.
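One common way to read feature-value weights off a naïve Bayes model is the log ratio of a value’s class-conditional probabilities, \(\log P(v \mid \text{temporal}) - \log P(v \mid \neg\text{temporal})\). Whether Table 5.20 was produced exactly this way is not stated here, so treat the following as an illustrative sketch: the toy data are invented (not TimeBank counts), and the add-one smoothing and the function name `value_log_ratios` are assumptions.

```python
from collections import Counter
from math import log
from typing import Dict, List, Tuple

Example = Tuple[Dict[str, str], bool]  # (feature values, is_temporal)


def value_log_ratios(examples: List[Example], feature: str) -> Dict[str, float]:
    """log P(value | temporal) - log P(value | not temporal), add-one smoothed.

    Positive scores favour a temporal reading; negative scores count against it.
    """
    pos = Counter(f[feature] for f, y in examples if y)
    neg = Counter(f[feature] for f, y in examples if not y)
    values = set(pos) | set(neg)
    n_pos, n_neg, k = sum(pos.values()), sum(neg.values()), len(values)
    return {
        v: log((pos[v] + 1) / (n_pos + k)) - log((neg[v] + 1) / (n_neg + k))
        for v in values
    }


# Toy, invented data for the following_label feature.
toy = [
    ({"following_label": "NP"}, True),
    ({"following_label": "S"}, True),
    ({"following_label": "NP"}, True),
    ({"following_label": "$"}, False),
    ({"following_label": "$"}, False),
    ({"following_label": "NP"}, False),
]
ratios = value_log_ratios(toy, "following_label")
for value, score in sorted(ratios.items(), key=lambda kv: kv[1]):
    print(f"following_label = {value!r}: {score:+.2f}")
```

On this toy data, following_label = $ receives a negative score, mirroring the contra-indication discussed above, while NP and S receive positive ones.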

5.6.5 Discrimination on Unseen Data

Up to this point, evaluation has used cross-validation over TimeBank. Our error analysis led to the inclusion of features derived from data that is also part of the evaluation set. To check performance on previously unseen data, a further experiment was performed as follows. We trained a signal discriminator and associator on all of TimeBank plus the extra signal annotations, with the closed class extended to include all phrases marked as signals in TimeBank. In this way, TimeBank serves only as training data.

As the final model was developed partly from observations of TimeBank, it would be unsuitable to also evaluate it on this corpus. A previously unseen set, taken from the AQUAINT corpus (Sect. A.2.2), therefore forms the evaluation set. The N45 section of the AQUAINT corpus was curated to verify its signal annotations, and signal discrimination was then evaluated over this subcorpus using a model trained on the entirety of TB-sig. The relevant statistics for this evaluation corpus are presented in Table 5.21.

Signal discrimination is measured in two ways. Firstly, classification accuracy shows how many of the candidate signal words were correctly labelled as signals or non-signals. Secondly, the overall performance of the association approach at annotating signals in any given document is described in terms of precision and recall. This measures how well the entire approach described above (including the signal word list in Table 5.9, but not the additional phrases found in TimeBank) identifies temporal signals in arbitrary text. The augmented AQ/N45 annotations form the gold standard, and the “parent has temporal function” baseline (Sect. 5.6.4.1) is used for comparison. Results are presented in Table 5.22; they compare well with the performance on (seen) TB-sig data (Table 5.17).
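The two measures can be sketched as follows. Classification accuracy is computed over candidate words, while precision and recall compare predicted signal spans against the gold spans. Exact span matching, the `(document id, start, end)` span format and the function names are assumptions made for illustration; F1 is included only for convenience.

```python
from typing import Sequence, Set, Tuple

# Hypothetical span format: (document id, start token, end token).
Span = Tuple[str, int, int]


def accuracy(gold: Sequence[bool], predicted: Sequence[bool]) -> float:
    """Share of candidate signal words labelled correctly as signal / not-signal."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("need two non-empty label sequences of equal length")
    return sum(g == p for g, p in zip(gold, predicted)) / len(gold)


def precision_recall_f1(gold: Set[Span], predicted: Set[Span]) -> Tuple[float, float, float]:
    """Exact-match precision, recall and F1 of predicted signal spans."""
    tp = len(gold & predicted)
    p = tp / len(predicted) if predicted else 0.0
    r = tp / len(gold) if gold else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


gold = {("d1", 3, 4), ("d1", 10, 11), ("d2", 0, 1)}
pred = {("d1", 3, 4), ("d2", 0, 1), ("d2", 5, 6)}
print(precision_recall_f1(gold, pred))  # two of three spans match in each direction
print(accuracy([True, False, True, False], [True, False, False, False]))  # 0.75
```

Accuracy can stay high while recall is poor, since most candidate words are non-signals; reporting precision and recall over annotated spans exposes this.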

5.6.6 Summary

In this section, we have explored the task of signal discrimination. We discovered that TimeBank’s signal annotations are incomplete. To remedy this, we have proposed augmentations to the TimeML annotation standards and re-annotated a portion of the corpus. We have also defined a set of features that can describe a temporal signal in context and constrained our search space to just words and phrases in a closed class of signal words. As a result, we have been able to train a classifier to detect temporal signals at near-IAA accuracy.

5.7 Signal Association

Temporal signals connect one or more interval pairs and describe the nature of the temporal relation within each pair. This section describes an investigation into how to find the arguments of a temporal signal, thus associating the signal with its two arguments. We refer to this task as signal association.

To fully annotate temporal signals, we need to determine which arguments they co-ordinate, that is, which times or events each signal relates. This task is the subject of this section.

5.7.1 Problem Definition

When performing temporal annotation, one needs to identify events and times, and can then connect them with temporal links, optionally citing an associated signal. Indeed, whenever a temporal signal is annotated, a temporal link must also be present. The signal association problem is: given text with signal, event and timex annotations, determine which pair of events/times is associated by each signal phrase.

5.7.2 Method

A supervised learning approach is taken to finding which intervals a given signal co-ordinates, with TB-sig as the dataset for feature extraction. Two approaches are explored, as detailed below. Both use a largely common feature set, extracting a number of features for each interval considered and a further set of features describing the signal.

To generate training data for a given signal, we describe the events and timexes within the scope of that signal using our feature set. Although any two intervals in a document could be linked by a given signal, the number of intervals or interval pairings to search through could be large if the entire document were treated as the signal’s potential scope. Scope must therefore be constrained, at a possible cost in performance. Based on manual examination of the signals in the corpus, the scope of a signal is taken to be the signal’s sentence, enough previous sentences to include at least two intervals, and the DCT timex if present. Within this scope, we attempt to determine which intervals are associated with the signal.
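The scope constraint can be sketched as below. The representation (each sentence reduced to the ids of its events and timexes), the function name `signal_scope` and the parameter `min_prior` are hypothetical. We also read “enough previous sentences to include at least two intervals” as requiring the preceding sentences themselves to contribute at least two intervals; this is one possible interpretation, not a confirmed detail.

```python
from typing import List, Optional


def signal_scope(sentences: List[List[str]], signal_sentence: int,
                 dct: Optional[str] = None, min_prior: int = 2) -> List[str]:
    """Candidate intervals for a signal found in sentences[signal_sentence].

    `sentences` holds the interval ids (events/timexes) of each sentence, in order.
    """
    scope = list(sentences[signal_sentence])
    prior = 0
    i = signal_sentence - 1
    # Walk backwards until preceding sentences contribute min_prior intervals.
    while i >= 0 and prior < min_prior:
        scope = sentences[i] + scope
        prior += len(sentences[i])
        i -= 1
    # The document creation time is always a candidate when present.
    if dct is not None and dct not in scope:
        scope.append(dct)
    return scope


doc = [["e1"], ["t1", "e2"], ["e3", "e4"], ["e5", "t2"]]
print(signal_scope(doc, 3, dct="t0"))  # ['e3', 'e4', 'e5', 't2', 't0']
print(signal_scope(doc, 1, dct="t0"))  # ['e1', 't1', 'e2', 't0']
```

Under the alternative reading, where the signal’s own sentence counts towards the two intervals, the loop would instead stop as soon as `len(scope) >= 2`.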

The goal is to learn a binary function that indicates whether or not an association supporting a TLINK exists in a given situation. A TLINK associates two intervals (timexes or events) and may specify the type of temporal relation between them. We tried two approaches to this signal association task: one that examines \(\langle \)interval, signal\(\rangle \) tuples and another that examines \(\langle \)interval-pair, signal\(\rangle \) tuples. The gold standard corpus, TimeBank, provides the positive examples. For each signal, there may be up to five valid TLINKs, each shown as an interval pair (see Table 5.8).

For the single interval approach, we train a binary classifier to learn whether an interval and a signal are linked, and then choose the two best candidate intervals for the signal, using classifier confidence to rank intervals with the same predicted class. For the interval pair approach, we examine, for each signal, possible combinations of intervals and create a feature vector based on the relations between the intervals and the given signal.

5.7.2.1 Single Interval Approach

In this section, we describe a signal association approach where individual intervals are ranked by their relation to the signal and the top two intervals are deemed to be associated.

Positive training examples came from intervals associated with the signal in the gold standard annotation. Negative training examples were all temporal intervals in the same sentence as the signal that were not associated with it. We used cross-validation to learn classifiers and recorded the classifier’s prediction and confidence for each entry in the evaluation fold. Then, for each signal, a list of candidate intervals was determined. The two intervals related to the signal were those classified as related with the highest classifier confidence; if fewer than two positive classifications were made, the remainder were taken from the lowest-confidence unrelated classifications. That is, for each signal, intervals are ranked by how likely they are to be related; the goal is to find the two most likely intervals and associate them in a TLINK backed by the given signal. Priority is established in this order:

1. High-confidence and classified as related
2. Low-confidence and classified as related
3. Low-confidence and classified as unrelated
4. High-confidence and classified as unrelated

The top two are then associated with the signal. This approach can therefore detect only one pair of intervals per signal.
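The four-level priority amounts to two sorts: intervals classified as related in descending order of confidence, followed by intervals classified as unrelated in ascending order of confidence. The sketch assumes each prediction arrives as a hypothetical `(interval id, related?, confidence)` triple, where confidence is the classifier’s confidence in its own decision; the function name `associate` is illustrative.

```python
from typing import List, Tuple

# (interval id, classified as related?, classifier confidence in that decision)
Prediction = Tuple[str, bool, float]


def associate(predictions: List[Prediction]) -> List[str]:
    """Pick the two intervals to link with a signal, following priorities 1-4."""
    related = sorted((p for p in predictions if p[1]), key=lambda p: -p[2])
    unrelated = sorted((p for p in predictions if not p[1]), key=lambda p: p[2])
    # Related by descending confidence (priorities 1, 2), then unrelated by
    # ascending confidence (priorities 3, 4); keep the first two.
    return [interval for interval, _, _ in (related + unrelated)[:2]]


print(associate([("e1", False, 0.9), ("t1", True, 0.6),
                 ("e2", False, 0.55), ("e3", True, 0.8)]))  # ['e3', 't1']
print(associate([("e1", False, 0.9), ("t1", True, 0.6),
                 ("e2", False, 0.55)]))  # ['t1', 'e2']
```

When only one interval is classified as related, the second slot goes to the unrelated interval the classifier was least sure about.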

5.7.2.2 Interval Pair Approach

In contrast to the previous approach, we tried to classify whole \(\langle \)interval-pair, signal\(\rangle \) 3-tuples as either a signalled TLINK or not. Considering every pair produced mostly negative examples, so we instead considered only pairs whose intervals both fell inside a sliding window of sentences, reducing the heavy skew in the training data. A boolean feature describing whether the two intervals were in the same sentence was added to our set, as well as two sets of interval-signal relation features (one per interval) and the general signal features described earlier.
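Candidate generation for the interval pair approach might look like the following sketch. The window here covers the signal’s own sentence and the `window` sentences before it; both its shape and its size are assumptions, as the text does not give them, and pairs are treated as unordered. With \(n\) intervals in scope there are \(n(n-1)/2\) candidate pairs but at most five gold TLINKs per signal, which is why negative examples dominate without a window.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def pair_instances(intervals: List[Tuple[str, int]], signal_sentence: int,
                   window: int = 1) -> List[Dict[str, object]]:
    """Unordered interval pairs inside the sentence window of one signal.

    intervals: (interval id, sentence index) pairs; window: number of preceding
    sentences kept alongside the signal's own sentence (hypothetical value).
    """
    first = signal_sentence - window
    inside = [(iid, s) for iid, s in intervals if first <= s <= signal_sentence]
    return [
        # same_sentence is the extra boolean feature for the pair approach
        {"pair": (a, b), "same_sentence": sa == sb}
        for (a, sa), (b, sb) in combinations(inside, 2)
    ]


intervals = [("e1", 0), ("e2", 1), ("t1", 2), ("e3", 2)]
for inst in pair_instances(intervals, signal_sentence=2):
    print(inst)
```

Here the window drops `e1`, cutting the candidates from six pairs to three.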

5.7.2.3 Surface and Constituent-Parse Features

For the signal association tasks, we used the following surface and constituent-parse features as input to a binary classifier. Constituent parse information comes from running the Stanford Parser [27] over discourse sentences, the bounds of which are determined using the Punkt tokeniser [26] implementation in NLTK. The features describe a single interval/signal pair. We use the same definition of syntactic dominance as [24]; that is, an interval (e.g. event or timex) is syntactically dominated by a signal if the interval’s annotated lexicalisation is found within a parse subtree where the first (leftmost) word of the parse subtree is the signal. Dominance features are included based on their success in signal linking in [24], where dominance was described as the \(V_L\) feature.

- Is this interval the textually nearest after the signal?
- Is this interval the textually nearest before the signal?
- Does the signal syntactically dominate the interval?
- Signal text (lower case)
- Signal part of speech
- Token distance of interval from signal
- Interval/signal textual order
- Is there a comma between the interval and signal?
- Is the interval in the same sentence as the signal?
- Is the interval DCT or a DCT reference?
- Interval type (TimeML EVENT class or TIMEX3 type), total 11 values
- If an event, its TimeML-annotated tense
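Several of the surface features listed above can be computed directly from token offsets. The sketch assumes intervals are token spans `[start, end)` over a tokenised document with a parallel list of sentence ids; the function and feature names are hypothetical, and syntactic dominance, part of speech, DCT status, interval type and tense are omitted because they need parser output or TimeML attributes.

```python
from typing import Dict, List, Tuple

Interval = Tuple[int, int]  # token span [start, end)


def surface_features(tokens: List[str], sent_ids: List[int], signal: int,
                     interval: Interval, all_intervals: List[Interval]) -> Dict[str, object]:
    """Surface features for one (interval, signal) pair; signal is a token index."""
    start, end = interval
    after = [iv for iv in all_intervals if iv[0] > signal]
    before = [iv for iv in all_intervals if iv[1] <= signal]
    nearest_after = min(after, key=lambda iv: iv[0]) if after else None
    nearest_before = max(before, key=lambda iv: iv[1]) if before else None
    if start > signal:  # interval follows the signal
        distance, between = start - signal, tokens[signal + 1:start]
    else:  # interval precedes the signal
        distance, between = signal - (end - 1), tokens[end:signal]
    return {
        "signal_text": tokens[signal].lower(),
        "nearest_after": interval == nearest_after,
        "nearest_before": interval == nearest_before,
        "token_distance": distance,
        "interval_before_signal": start < signal,
        "comma_between": "," in between,
        "same_sentence": sent_ids[start] == sent_ids[signal],
    }


tokens = ["After", "the", "meeting", ",", "prices", "rose", "."]
sent_ids = [0] * len(tokens)
spans = [(2, 3), (5, 6)]  # "meeting" and "rose", both events
for span in spans:
    print(span, surface_features(tokens, sent_ids, 0, span, spans))
```

In this sentence, the comma feature separates the signal’s own argument (*meeting*) from the main-clause event (*rose*).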

5.7.2.4 Dependency Parse Features

We use the Stanford dependency parser [39] to obtain dependency graphs of our PoS-tagged, parsed and function-labelled sentences. By default, the dependency parser drops some words that we consider to be signal words, folding information about the removed words into the relation labels. We configured it to retain all words. The features extracted from sentence dependency parses were:

- Length of path from interval to root
- Is the signal a child of the interval?
- Is the signal a direct parent of the interval?
- Is the interval the tree root? (i.e., the head event/time)
- Is the interval directly related to the signal with an advmod or advcl relation?
- Does the interval modify the root directly? (i.e., is the interval a direct ancestor of the root, regardless of relation type)
- Does the signal modify the interval directly? (i.e., is the signal a direct ancestor of the interval)
- What relation does the interval have to its parent?
- If the signal is a child of the interval, what is the relationship type?
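Several of these dependency features can be read off a simple head map. The toy graph below stands in for Stanford parser output: its relation labels follow the Stanford basic dependency style, but the parse is hand-built for illustration, and the function and feature names are hypothetical. We interpret “directly related with an advmod or advcl relation” as a direct edge in either direction carrying one of those labels, which is an assumption.

```python
from typing import Dict, Optional, Tuple

# Toy graph: token -> (head token, or None for the root; relation label).
Graph = Dict[str, Tuple[Optional[str], str]]


def path_length_to_root(graph: Graph, token: str) -> int:
    """Number of head links between a token and the root."""
    steps = 0
    while graph[token][0] is not None:
        token = graph[token][0]
        steps += 1
    return steps


def dependency_features(graph: Graph, interval: str, signal: str) -> Dict[str, object]:
    i_head, i_rel = graph[interval]
    s_head, s_rel = graph[signal]
    root = next(tok for tok, (head, _) in graph.items() if head is None)
    signal_is_child = s_head == interval
    signal_is_parent = i_head == signal
    adverbial = {"advmod", "advcl"}
    return {
        "path_to_root": path_length_to_root(graph, interval),
        "signal_child_of_interval": signal_is_child,
        "signal_parent_of_interval": signal_is_parent,
        "interval_is_root": interval == root,
        "advmod_or_advcl_link": ((signal_is_child and s_rel in adverbial)
                                 or (signal_is_parent and i_rel in adverbial)),
        "interval_modifies_root": i_head == root,
        "interval_relation": i_rel,
        "signal_relation_if_child": s_rel if signal_is_child else None,
    }


# "Prices rose after the meeting ended ." (hand-built, basic relations)
graph: Graph = {
    "Prices": ("rose", "nsubj"),
    "rose": (None, "root"),
    "after": ("ended", "mark"),
    "the": ("meeting", "det"),
    "meeting": ("ended", "nsubj"),
    "ended": ("rose", "advcl"),
}
print(dependency_features(graph, "ended", "after"))
print(dependency_features(graph, "rose", "after"))
```

Because the parser was configured to retain all words, *after* stays a node in the graph, so features such as the signal’s own relation (`mark` here) remain available.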

5.7.3 Dataset

On examining instances of temporal relations in TimeBank that have an attached signal, we found that there were often clear syntactic relations between signals and their arguments (which are also the temporal relation’s arguments). Almost all signals co-ordinated two intervals in the same sentence as the signal (Table 5.23). Where they did not, one of three situations prevailed. Firstly, the signal was the first token in the sentence and the argument outside the sentence was referenced by a temporal pronoun (as in “After that, the situation improved.”). Secondly, one argument was an event or time that had remained the temporal focus of the discourse up to the point where the signal occurs, even after new sentences had been introduced. Thirdly, the signal related the DCT to an interval in its sentence.

5.7.3.1 Closure