Abstract

This work describes and applies the recently introduced, general-purpose perturbative guidance termed variable-time-domain neighboring optimal guidance, which is capable of driving an aerospace vehicle along a specified nominal, optimal path. This goal is achieved by minimizing the second differential of the objective function (related to the flight time) along the perturbed trajectory. This minimization principle leads to deriving all the corrective maneuvers, in the context of an iterative closed-loop guidance scheme. Original analytical developments, based on optimal control theory and adoption of a variable time domain, constitute the theoretical foundation for several original features. The real-time feedback guidance at hand is exempt from the main disadvantages of similar algorithms proposed in the past, such as the occurrence of singularities for the gain matrices. The variable-time-domain neighboring optimal guidance algorithm is applied to two typical aerospace maneuvers: (1) minimum-time climbing path of a Boeing 727 aircraft and (2) interception of fixed and moving targets. Perturbations arising from nonnominal propulsive thrust or atmospheric density and from errors in the initial conditions are included in the dynamical simulations. Extensive Monte Carlo tests are performed, and unequivocally prove the effectiveness and accuracy of the variable-time-domain neighboring optimal guidance algorithm.


1 Introduction

The problem of driving an aerospace vehicle along a specified path leading to fulfilling the boundary conditions associated with the mission specifications requires defining the corrective actions aimed at compensating nonnominal flight conditions. This means that a feedback control law, or, equivalently, a closed-loop guidance algorithm, is to be defined, on the basis of the current state of the vehicle, evaluated at prescribed sampling times.

Traditionally, two different approaches to guidance exist. Adaptive algorithms compute the flight trajectory at the beginning of each guidance interval, on the basis of feasibility or optimality criteria [3, 19]. Perturbative algorithms assume a specified nominal trajectory, and define the feedback control corrections aimed at maintaining the vehicle in the proximity of the nominal path [7, 9].

Neighboring Optimal Guidance (NOG) is a perturbative guidance concept that relies on the analytical second-order optimality conditions to find the corrective control actions in the neighborhood of the reference trajectory. The latter is an optimal trajectory that satisfies the first- and second-order optimality conditions. In general, the neighboring optimal path originates from a perturbed state and is associated with the minimization of the second differential of the objective function. Several time-varying gain matrices, referring to the nominal trajectory, are defined, computed offline, and stored in the onboard computer. Only a limited number of works have been devoted to studying neighboring optimal guidance [1, 4–6, 18, 21]. In particular, a thorough treatment of NOG is due to Chuang [5], who proposed a simple formula for updating the time of flight, and used a very basic strategy to evaluate the gain matrices when the time of flight exceeds its nominal value. Hull [6, 7] supplied further relevant contributions to the topic, using a vector that contains the unknown parameters to optimize and proposing an analytical formulation for the update of the time of flight, albeit only at the initial time. A common difficulty encountered in implementing NOG is that the gain matrices become singular while approaching the final time. As a result, the real-time correction of the time of flight can lead to numerical difficulties severe enough to cause the failure of the guidance algorithm.

This work describes and applies the recently introduced [15, 16], general-purpose variable-time-domain neighboring optimal guidance algorithm (VTD-NOG), on the basis of the general theory of NOG described in [7]. Some fundamental, original features of VTD-NOG are aimed at overcoming the main difficulties related to the use of former NOG schemes, in particular the occurrence of singularities and the lack of an efficient law for the iterative real-time update of the time of flight. This is achieved by adopting a normalized time domain, which leads to defining a novel updating law for the time of flight, a new termination criterion, and a new analytical formulation for the sweep method. Two applications are considered, for the purpose of illustrating the new guidance algorithm: (1) minimum-time-to-climb path of a Boeing 727 aircraft and (2) interception of fixed and moving targets. Specifically, perturbations arising from the imperfect knowledge of the propulsive thrust and from errors in the initial conditions are included in the dynamical modeling. In addition, atmospheric density fluctuations are modeled for application (1). Extensive Monte Carlo (MC) tests are performed, with the intent of demonstrating the effectiveness and accuracy of the variable-time-domain neighboring optimal guidance algorithm.

2 Nominal Trajectory

The nominal trajectory of aerospace vehicles is computed in the absence of any perturbation. For the purpose of applying a neighboring optimal guidance, the nominal path is required to be an optimal trajectory that minimizes a specified objective function.

In general, the spacecraft trajectory is described through the time-varying, n-dimensional state vector \(\mathbf{x}(t)\) and controlled through the time-varying, m-dimensional control vector \(\mathbf{u}(t)\); the dynamical evolution over the time interval \([t_{0},t_{f}]\) (with \(t_{0}\) set to 0 and \(t_{f}\) unspecified) depends also on the time-independent, \(\tilde{p}\)-dimensional parameter vector \(\tilde{\mathbf{a}}\). The governing state equations have the general form

and are subject to q boundary conditions

where the subscripts “0” and “f” refer to t 0 and t f . A feasible trajectory is a solution that obeys the state equations (1) and satisfies the boundary conditions (2).

The problem at hand can be reformulated by using the dimensionless normalized time τ defined as

Let the dot denote the derivative with respect to τ henceforth. If \(\mathbf{a}:= \left [\tilde{\mathbf{a}}\quad t_{f}\right ]^{T}\) (and \(p:=\tilde{ p} + 1\)), the state equations (1) are rewritten as

The objective functional to minimize has the following general form:

The spacecraft trajectory optimization problem consists in identifying a feasible solution that minimizes the objective functional J, through selection of the optimal control law u ∗(t) and the optimal parameter vector a ∗, i.e.

2.1 First-Order Necessary Conditions for a Local Extremal

In order to state the necessary conditions for optimality, a Hamiltonian H and a function of the boundary conditions Φ are defined as [7]

where the time-varying, n-dimensional costate vector \(\boldsymbol{\lambda }(\tau )\) and the time-independent, q-dimensional vector \(\boldsymbol{\upsilon }\) are the adjoint variables conjugate to the state equations (4) and to the conditions (2), respectively.

In the presence of an optimal (locally minimizing) solution, the following conditions hold:

For the very general Hamiltonian (7) the Pontryagin minimum principle (8) yields the control variables as functions of the adjoint variables and the state variables; the relations (9) are the adjoint (or costate) equations, together with the related boundary conditions (10); (11) is equivalent to p algebraic scalar equations. If the control u is unconstrained, then (8) implies that H is stationary with respect to u along the optimal path, i.e.

Equations (8) through (11) are well established in optimal control theory (and are proven, for instance, in [7]), and allow translating the optimal control problem into a two-point boundary-value problem. Unknowns are the state x, the parameter vector a, and the adjoint variables \(\boldsymbol{\lambda }\) and \(\boldsymbol{\upsilon }\) (while the optimal control u ∗ is given by (8), as previously remarked). It is straightforward to demonstrate that the condition (11) is equivalent to

where \(\boldsymbol{\mu }_{0}\) and \(\boldsymbol{\mu }_{f}\) are, respectively, the initial and final value (at τ = 1) of the time-varying (p × 1)-vector \(\boldsymbol{\mu }\).

2.2 Second-Order Sufficient Conditions for a Local Minimum

The derivation of the second-order optimality conditions involves the definition of an admissible comparison path, located in the neighborhood of the (local) nominal, optimal solution, associated with the state x ∗, costate \(\boldsymbol{\lambda }^{{\ast}}\), and control u ∗. By definition, an admissible comparison path is a feasible trajectory that satisfies the equations of motion and the boundary conditions. A neighboring optimal path is an admissible comparison trajectory that satisfies also the optimality conditions. The nonexistence of alternative neighboring optimal paths is to be proven in order to guarantee optimality of the nominal solution [7, 8].

The first second-order condition is the Clebsch-Legendre sufficient condition for a minimum [7, 8], i.e. \(H_{\mathbf{u}\mathbf{u}}^{\ast} > 0\) (positive definiteness of \(H_{\mathbf{u}\mathbf{u}}^{\ast}\)). In the necessary (weak) form the Hessian \(H_{\mathbf{u}\mathbf{u}}^{\ast}\) must be positive semidefinite.
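The Clebsch-Legendre test amounts to checking the sign of the eigenvalues of the Hessian of H with respect to the control. The following is a minimal sketch, assuming the Hessian has already been evaluated numerically at a point of the nominal path; the function name `legendre_clebsch` and the tolerance are illustrative choices, not part of the original formulation.

```python
import numpy as np

def legendre_clebsch(h_uu, tol=1e-12):
    """Classify a (numerically evaluated) Hessian H_uu.

    Returns 'strong' if H_uu is positive definite (sufficient form),
    'weak' if it is only positive semidefinite (necessary form),
    and 'violated' otherwise. `tol` is an illustrative round-off margin.
    """
    h = np.atleast_2d(np.asarray(h_uu, dtype=float))
    h = 0.5 * (h + h.T)                    # symmetrize against round-off
    smallest = np.linalg.eigvalsh(h)[0]    # eigvalsh returns ascending eigenvalues
    if smallest > tol:
        return "strong"
    if smallest >= -tol:
        return "weak"
    return "violated"
```

In a guidance setting this check would be repeated at every stored point of the nominal trajectory, since the condition must hold along the whole path.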

In general, a neighboring optimal path located in the proximity of the optimal solution fulfills the feasibility equations (4) and (2) and the optimality conditions (8)–(11) to first order. This means that the state and costate displacements \(\{\delta \mathbf{x},\delta \boldsymbol{\lambda }\}\) (from the optimal solution) satisfy the linear equations deriving from (4) and (9),

in conjunction with the respective linear boundary conditions, derived from (2) and (10),

The fact that the Hamiltonian is stationary with respect to u, i.e. \(H_{\mathbf{u}}^{\ast} = \mathbf{0}^{T}\), yields

Under the assumption that the Clebsch-Legendre condition is satisfied, (19) is solved for δ u

The parameter condition (11) is replaced by (13), leading to the following relations:

where (22) is written under the assumption that \(\varPhi _{\mathbf{a}\mathbf{x}_{ 0}} = \mathbf{0}\), condition that is met for the problems at hand. It is relatively straightforward to recognize that solving the equation system (14)–(19) and (21)–(22) is equivalent to solving the accessory optimization problem [7, 8], which consists in minimizing the second differential d 2 J. The solution process involves the definition of the sweep variables, through the following relations:

The matrices S, R, m, Q, n, and \(\boldsymbol{\alpha }\) must satisfy the sweep equations (not reported for the sake of conciseness), in conjunction with the respective boundary conditions (prescribed at the final time) [7, 8]. The variations \(d\boldsymbol{\upsilon }\) and \(d\mathbf{a}\) can be solved simultaneously at \(\tau _{0}\) (at which \(\delta \boldsymbol{\mu }_{0} = \mathbf{0}\), cf. (22)), to yield

If (26) is used at τ 0, then \(\delta \boldsymbol{\lambda }_{0} = \left (\mathbf{S}_{0} -\mathbf{U}_{0}\mathbf{V}_{0}^{-1}\mathbf{U}_{0}^{T}\right )\delta \mathbf{x}_{0}\). Letting \(\hat{\mathbf{S}} = \mathbf{S} -\mathbf{U}\mathbf{V}^{-1}\mathbf{U}^{T}\), the same sweep equation satisfied by S turns out to hold also for \(\hat{\mathbf{S}}\), with boundary condition S → 0 as τ → τ f ( = 1). From the previous relation on \(\delta \boldsymbol{\lambda }_{0}\) and δ x 0 one can conclude that \(\delta \boldsymbol{\lambda }_{0} \rightarrow \mathbf{0}\) as δ x 0 → 0, unless \(\hat{\mathbf{S}}\) tends to infinity at an internal time \(\bar{\tau }\,(\tau _{0} \leq \bar{\tau }<\tau _{f})\), which is referred to as conjugate point. If \(\delta \boldsymbol{\lambda }_{0} \rightarrow \mathbf{0}\) and δ x 0 → 0 then also δ u → 0. In the end, if \(\hat{\mathbf{S}} <\infty\), then no neighboring optimal path exists. This is the Jacobi condition. The use of S is not effective for the purpose of guaranteeing optimality. In fact, cases exist for which S becomes singular, while \(\hat{\mathbf{S}}\) remains finite [7, 8], and this fully justifies the use of \(\hat{\mathbf{S}}\).
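The matrix \(\hat{\mathbf{S}} = \mathbf{S} -\mathbf{U}\mathbf{V}^{-1}\mathbf{U}^{T}\) introduced above can be formed numerically without inverting V explicitly. The sketch below assumes that S, U, and V are already available as NumPy arrays at a given τ; the function name `s_hat` is hypothetical.

```python
import numpy as np

def s_hat(S, U, V):
    """Return S - U V^{-1} U^T.

    The product V^{-1} U^T is obtained by solving V X = U^T, which is
    generally better conditioned than forming the inverse of V.
    """
    S = np.asarray(S, dtype=float)
    U = np.asarray(U, dtype=float)
    V = np.asarray(V, dtype=float)
    return S - U @ np.linalg.solve(V, U.T)
```

`np.linalg.solve` raises `LinAlgError` when V is exactly singular, which gives a simple way to detect that the formula is being evaluated where it is not applicable.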

It is worth remarking that, with the exception of the displacements \(\{\delta \mathbf{x},\delta \mathbf{u},\delta \mathbf{a},\ \delta \boldsymbol{\upsilon },\delta \boldsymbol{\lambda },\delta \boldsymbol{\mu },\delta \mathbf{x}_{0},\delta \mathbf{x}_{f}\}\), all the vectors and matrices reported in this section are evaluated along the nominal, optimal trajectory.

3 Variable-Time-Domain Neighboring Optimal Guidance

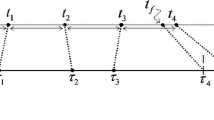

The iterative Variable-Time-Domain Neighboring Optimal Guidance (VTD-NOG) uses the optimal trajectory as the reference path, with the final intent of determining the control correction at each sampling time \(\left \{t_{k}\right \}_{k=0,\ldots,n_{S}}\). These are the times at which the displacement between the actual trajectory, associated with \(\mathbf{x}\), and the nominal trajectory, corresponding to \(\mathbf{x}^{\ast}\), is evaluated, to yield \(d\mathbf{x}_{k} \equiv \delta \mathbf{x}_{k} = \mathbf{x}(t_{k}) -\mathbf{x}_{k}^{\ast}(t_{k})\). The total number of sampling times, \(n_{S}\), is unspecified, whereas the actual time interval between two successive sampling times is given and denoted with \(\varDelta t_{S}\), \(\varDelta t_{S} = t_{k+1} - t_{k}\). It is apparent that a fundamental ingredient needed to implement VTD-NOG is the formula for determining the overall time of flight \(t_{f}^{(k)}\) at time \(t_{k}\). This is equivalent to finding the time-to-go \(\left (t_{f}^{(k)} - t_{k}\right )\) at \(t_{k}\). The following subsection is focused on this issue.

3.1 Time-to-Go Updating Law and Termination Criterion

The fundamental principle that underlies the VTD-NOG scheme consists in finding the control correction δ u(τ) in the generic interval \(\left [\tau _{k},\tau _{k+1}\right ]\) such that the second differential of J is minimized,

while holding the first-order expansions of the state equations, the related final conditions, and the parameter condition (i.e., the second of (22)). In contrast, the first of (22) cannot be used, because in general \(\delta \boldsymbol{\mu }_{k}\neq \mathbf{0}\) at τ k . Minimizing the objective (27) is equivalent to solving the accessory optimization problem, defined in the interval \(\left [\tau _{k},1\right ]\). This means that the relations reported in Sect. 2.2 need to be extended to the generic interval \(\left [\tau _{k},1\right ]\).

Other than the linear expansion of the state and costate equations, the related boundary conditions, and the second relation of (22), also Eqs. (23)–(25), (19), and (20) remain unchanged. However, now (26) is to be evaluated at τ k and becomes

because \(\delta \boldsymbol{\mu }_{k}\neq \mathbf{0}\) (unlike \(\delta \boldsymbol{\mu }_{0} = \mathbf{0}\)). The latter relation supplies the corrections \(d\boldsymbol{\upsilon }\) and \(d\mathbf{a}\) at \(\tau _{k}\) as functions of the gain matrices U and V (defined in (26)), evaluated at \(\tau _{k}\), and \(\delta \boldsymbol{\mu }_{k}\) (coming from the numerical integration of (21) in the preceding interval \(\left [\tau _{k-1},\tau _{k}\right ]\)). Equation (28) contains the updating law of the total flight time \(t_{f}\), which is included as a component of a. Hence, if \(dt_{f}^{(k)}\) denotes the correction on \(t_{f}^{\ast}\) evaluated at \(\tau _{k}\), then \(t_{f}^{(k)} = t_{f}^{\ast} + dt_{f}^{(k)}\). As the sampling interval \(\varDelta t_{S}\) is specified, the general formula for \(\tau _{k}\) is

The overall number of intervals n S is found at the first occurrence of the following condition, associated with the termination of VTD-NOG:

It is worth stressing that the updating formula (28) derives directly from the natural extension of the accessory optimization problem to the time interval \(\left [\tau _{k},1\right ]\). In addition, the introduction of the normalized time τ now reveals its great utility. In fact, all the gain matrices are defined in the normalized interval [0,1] and cannot become singular. Moreover, the limiting values \(\left \{\tau _{k}\right \}_{k=0,\ldots,n_{S}-1}\) are dynamically calculated at each sampling time using (29), while the sampling instants in the actual time domain are specified and equally spaced (cf. Fig. 1). Also the termination criterion (30) has a logical, consistent definition, and corresponds to the upper bound of the interval [0,1], to which τ is constrained.
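The interplay between the fixed sampling interval in actual time and the dynamically computed limits in normalized time can be illustrated with a short sketch. Since the explicit expressions (29) and (30) are not reproduced here, the code assumes that each interval advances τ by \(\varDelta t_{S}/t_{f}^{(k)}\) and that the scheme terminates when the next limit would reach or exceed 1; both are assumptions made for illustration. The callable `flight_time_at` is a hypothetical stand-in for the updated flight time returned by the guidance law.

```python
def normalized_sampling_times(dt_s, flight_time_at, max_steps=100_000):
    """Return [tau_0, ..., tau_{n_S}], with the last interval clipped at tau = 1.

    dt_s           -- sampling interval in actual time (specified)
    flight_time_at -- hypothetical callable k -> t_f^(k), the flight time
                      updated at the k-th sampling instant
    """
    taus = [0.0]
    for k in range(max_steps):
        t_f_k = flight_time_at(k)
        tau_next = taus[-1] + dt_s / t_f_k   # assumed form of the tau update
        if tau_next >= 1.0:                  # assumed termination test
            taus.append(1.0)                 # tau is constrained to [0, 1]
            return taus
        taus.append(tau_next)
    raise RuntimeError("termination condition was never met")

# Example with a constant (unperturbed) flight time of 55.5 s and dt_s = 2 s
taus_nominal = normalized_sampling_times(2.0, lambda k: 55.5)
n_s = len(taus_nominal) - 1
```

When the flight time estimate changes from one sampling instant to the next, the normalized intervals have different lengths even though the intervals in actual time are all equal to `dt_s`.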

3.2 Modified Sweep Method

The definition of a neighboring optimal path requires the numerical backward integration of the sweep equations [7]. However, as previously remarked, the matrix \(\hat{\mathbf{S}}\) has practical utility, because S may become singular while \(\hat{\mathbf{S}}\) remains finite in the interval [0,1[. Therefore, a suitable integration technique is based on using the classical sweep equations in the interval \(\left [\tau _{sw},1\right ]\) (where τ sw is sufficiently close to τ f = 1) and then switching to \(\hat{\mathbf{S}}\). However, due to (28), new relations are to be derived for \(\hat{\mathbf{S}}\) and the related matrices.

With this intent, the first step consists in combining (28) with (23)–(24), and leads to obtaining

This relation replaces (23).

Equation (31) is to be employed repeatedly in the derivation of new sweep equations. The related analytical developments are described in full detail in [15], and lead to attaining the following modified sweep equations:

The gain matrices involved in the sweep method, i.e. S, \(\hat{\mathbf{S}}\), R, Q, n, m, and \(\boldsymbol{\alpha }\), can be integrated backward in two steps:

1. in \(\left [\tau _{sw},1\right ]\) the equations of the classical sweep method [7, 15], with the respective boundary conditions, are used.

2. in \(\left [0,\tau _{sw}\right ]\) (32) through (37) are used; R, Q, n, m, and \(\boldsymbol{\alpha }\) are continuous across the time \(\tau _{sw}\), whereas \(\hat{\mathbf{S}}\) is given by \(\hat{\mathbf{S}}:= \mathbf{S} -\mathbf{U}\mathbf{V}^{-1}\mathbf{U}^{T}\); in this work, \(\tau _{sw}\) is set to 0.99.

3.3 Preliminary Offline Computations and Algorithm Structure

The implementation of VTD-NOG requires several preliminary computations that can be completed offline and stored in the onboard computer. First of all, the optimal trajectory is to be determined, together with the related state, costate, and control variables, which are assumed as the nominal ones. In the time domain τ these can be either available analytically or represented as sequences of equally spaced values, e.g. \(\mathbf{u}_{i}^{{\ast}} = \mathbf{u}^{{\ast}}(\tau _{i})\ (i = 0,\ldots,n_{D};\,\,\tau _{0} = 0\,\,\text{and}\,\,\tau _{n_{D}} = 1)\). However, in the presence of perturbations, VTD-NOG determines the control corrections \(\delta \mathbf{u}(\tau )\) in each interval \(\left [\tau _{k},\tau _{k+1}\right ]\), where the values \(\left \{\tau _{k}\right \}\) generally do not coincide with the equally spaced values \(\left \{\tau _{i}\right \}\) used for \(\mathbf{u}_{i}^{\ast}\). Hence, regardless of the number of points used to represent the control correction \(\delta \mathbf{u}(\tau )\) in \(\left [\tau _{k},\tau _{k+1}\right ]\), a suitable interpolation is to be adopted for the control variable \(\mathbf{u}^{\ast}\) (provided that no analytical expression is available). In this way, the value of \(\mathbf{u}^{\ast}\) can be evaluated at any arbitrary time in the interval 0 ≤ τ ≤ 1. For the same reason also the nominal state \(\mathbf{x}^{\ast}\) and costate \(\boldsymbol{\lambda }^{{\ast}}\) need to be interpolated. If a sufficiently large number of points is selected (e.g., \(n_{D} = 1001\)), then piecewise linear interpolation is a suitable option. The next step is the analytical derivation of the matrices

Then, they are evaluated along the nominal trajectory and linearly interpolated, as well as A, B, C, D, E, and F, whose expressions are reported in [17]. Subsequently, the two-step backward integration of the sweep equations described in Sect. 3.2 is performed, and yields the gain matrices \(\hat{\mathbf{S}}\), R, m, Q, n, and \(\boldsymbol{\alpha }\), using also the analytic expressions of W, U, and V. The linear interpolation of all the matrices not yet interpolated concludes the preliminary computations.
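The piecewise linear interpolation of stored nominal quantities on the equally spaced τ grid can be sketched with NumPy as follows. The grid size matches the value \(n_{D} = 1001\) quoted above, whereas the sample arrays are arbitrary placeholders rather than the nominal histories of this work, and the function name `interp_nominal` is hypothetical.

```python
import numpy as np

N_D = 1001
tau_grid = np.linspace(0.0, 1.0, N_D)       # equally spaced nodes, tau_0 = 0, tau_{n_D} = 1

# Placeholder samples (NOT the nominal solution of the chapter):
u_samples = 0.1 * np.cos(np.pi * tau_grid)                  # scalar control history
x_samples = np.column_stack([tau_grid, 2.0 * tau_grid])     # two-component state history

def interp_nominal(tau, grid, samples):
    """Piecewise linear interpolation of stored samples at an arbitrary tau.

    `samples` is either a 1-D array (scalar quantity) or a 2-D array with
    one row per grid node and one column per component.
    """
    samples = np.asarray(samples, dtype=float)
    if samples.ndim == 1:
        return np.interp(tau, grid, samples)
    return np.array([np.interp(tau, grid, samples[:, j])
                     for j in range(samples.shape[1])])
```

Matrix-valued quantities, such as the gain matrices, could be handled in the same way after reshaping each stored matrix into a row of its entries.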

On the basis of the optimal reference path, at each time τ k the VTD-NOG algorithm determines the time of flight and the control correction. More specifically, after setting the actual sampling time interval Δ t S , at each \(\tau _{k}\ \left (k = 0,\ldots,n_{S} - 1;\,\,\tau _{0} = 0\right )\) the following steps implement the feedback guidance scheme:

1. Evaluate \(\delta \mathbf{x}_{k}\).
2. Assume the value of \(\delta \boldsymbol{\mu }\) calculated at the end of the previous interval \(\left [\tau _{k-1},\tau _{k}\right ]\) as \(\delta \boldsymbol{\mu }_{k}\,\,(\delta \boldsymbol{\mu }_{0} = \mathbf{0})\).
3. Calculate the correction \(dt_{f}^{(k)}\) and the updated time of flight \(t_{f}^{(k)}\).
4. Calculate the limiting value \(\tau _{k+1}\).
5. Evaluate \(\delta \boldsymbol{\lambda }_{k}\).
6. Integrate numerically the linear differential system composed of (14), (15), and (21).
7. Determine the control correction \(\delta \mathbf{u}(\tau )\) in \(\left [\tau _{k},\tau _{k+1}\right ]\) through (20).
8. Points 1 through 7 are repeated after increasing k by 1, until (30) is satisfied.

Figure 2 portrays a block diagram that illustrates the feedback structure of VTD-NOG. The control and flight time corrections depend on the state displacement δ x (evaluated at specified times) through the time-varying gain matrices, computed offline and stored onboard.

4 Minimum-Time-to-Climb Path of a Boeing 727 Aircraft

As a first example, the variable-time-domain neighboring optimal guidance is applied to the minimum-time ascent path of a Boeing 727 aircraft, which is a mid-size commercial jet aircraft. Its propulsive and aerodynamic characteristics are interpolated on the basis of real data, and come from [2].

4.1 Problem Definition

The aircraft motion is assumed to occur in the vertical plane. In addition, due to the low flight altitude, the flat-Earth approximation is adopted, and the gravitational force is considered constant (g = 9.80665 m/s²). In light of these assumptions, the equations of motion are [20]

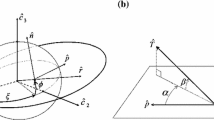

where z, x, γ, and v denote, respectively, the altitude, range, flight path angle, and velocity of the aircraft at hand, and ′ is the derivative with respect to the actual time t; D and L represent the magnitudes of the aerodynamic drag and lift (whose direction is illustrated in Fig. 3), whereas T denotes the thrust magnitude, and m is the mass, which is assumed constant and equal to 81,647 kg. The angle ε is portrayed in Fig. 3 as well, and identifies the thrust direction with respect to the zero lift axis; α is the angle of attack. The two aerodynamic forces are functions of (1) the (dimensionless) lift and drag coefficients \(c_{L}\) and \(c_{D}\), (2) the atmospheric density ρ, (3) the instantaneous velocity v, and (4) the reference surface area S, equal to 145 m² [2], according to the following relations:

Due to low altitude, the atmospheric density at sea level is used for the entire time of flight. The two coefficients c L and c D depend on the angle of attack and are interpolated in the following fashion [2]:

where the values of the constant quantities {c L0, c L1, c L2, α 1, c D0, c D1, c D2} are reported in [2]. Lastly, the thrust magnitude T depends on the instantaneous velocity, and is interpolated as well,

where the constant values of {c T0, c T1, c T2} are again reported in [2].
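The dependence of lift and drag on density, velocity, reference area, and the aerodynamic coefficients follows the standard dynamic-pressure form, \(L = \tfrac{1}{2}\rho S c_{L}v^{2}\) and \(D = \tfrac{1}{2}\rho S c_{D}v^{2}\). The sketch below evaluates these two relations; the sea-level density value of 1.225 kg/m³ is the standard-atmosphere figure and is an assumption here, and the coefficient values in the example call are arbitrary placeholders, since the interpolating constants from [2] are not reproduced in this text.

```python
RHO_SEA_LEVEL = 1.225   # kg/m^3, standard sea-level density (assumed value)
S_REF = 145.0           # m^2, reference surface area quoted in the text

def aerodynamic_forces(v, c_l, c_d, rho=RHO_SEA_LEVEL, s_ref=S_REF):
    """Return (lift, drag) in newtons for velocity v [m/s] and given coefficients."""
    q = 0.5 * rho * v ** 2          # dynamic pressure [Pa]
    return q * s_ref * c_l, q * s_ref * c_d

# Example with placeholder coefficients (not taken from [2])
lift, drag = aerodynamic_forces(v=100.0, c_l=0.5, c_d=0.05)
```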

The minimum-time-to-climb problem consists in finding the optimal time history of the control angle α that minimizes the time t f needed to reach a given altitude in horizontal flight, with a prescribed velocity. This means that the objective function is simply

(the initial time is set to 0). The final conditions are partially specified,

whereas the initial conditions are completely known,

As the range x does not appear in the right-hand sides of the equations of motion nor in the final conditions, x is ignorable; as a result, the state vector x is given by \(\mathbf{x} = \left [z\quad \gamma \quad v\right ]^{T}\), while the control vector u includes only α (u ≡ α). The state equations can be rewritten in terms of τ-derivatives,

The right-hand sides of (50)–(52) form the vector f.
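As an illustration of the vector f in normalized time, the sketch below codes the usual point-mass, flat-Earth equations of motion in the vertical plane and multiplies them by \(t_{f}\), which is what differentiation with respect to τ implies. The specific form of the right-hand sides is an assumption based on the standard model, because the display equations are not reproduced in this text; the function name `climb_rhs` and the default value of ε are hypothetical.

```python
import math

G = 9.80665       # m/s^2, constant gravitational acceleration (from the text)
MASS = 81647.0    # kg, constant aircraft mass (from the text)

def climb_rhs(state, alpha, t_f, thrust, lift, drag, eps=0.0):
    """tau-derivatives of [z, gamma, v], assuming the standard point-mass model.

    state  -- (altitude z [m], flight path angle gamma [rad], velocity v [m/s])
    alpha  -- angle of attack [rad] (the control)
    eps    -- thrust direction w.r.t. the zero-lift axis [rad]; placeholder default
    """
    z, gamma, v = state
    dz_dt = v * math.sin(gamma)
    dgamma_dt = ((thrust * math.sin(alpha + eps) + lift) / (MASS * v)
                 - G * math.cos(gamma) / v)
    dv_dt = (thrust * math.cos(alpha + eps) - drag) / MASS - G * math.sin(gamma)
    # d/dtau = t_f * d/dt, because tau is the time normalized by t_f
    return [t_f * dz_dt, t_f * dgamma_dt, t_f * dv_dt]
```

A quick sanity check is steady level flight: with lift equal to weight, thrust equal to drag, and zero flight path angle and angle of attack, all three derivatives vanish.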

4.2 Optimal Trajectory

The VTD-NOG algorithm requires the preliminary determination of the optimal trajectory, which is assumed as the nominal path, together with the related optimal control, state, and costate vectors (cf. Sect. 2).

For the dynamical system at hand the Hamiltonian H and the function Φ are

The adjoint equations assume the form

The respective boundary conditions (10) do not add any further information (since the state components are completely specified at the final time), and therefore they are not reported. It is worth remarking that the derivatives of L, D, and T with respect to α and v can be easily expressed using (43)–(46) and are continuous. Moreover, the fact that H is stationary with respect to α at the optimal solution yields

Finally, the parameter condition (11) leads to

However, the parameter condition can be proven to be ignorable. As a first step, the components of \(\boldsymbol{\lambda }\) are homogeneous in the adjoint equations (55)–(57). This circumstance implies that if an optimization algorithm is capable of finding some initial value of \(\boldsymbol{\lambda }\) such that \(\boldsymbol{\lambda }_{0} = k_{\lambda }\boldsymbol{\lambda }_{0}^{{\ast}}\ (k_{\lambda }> 0)\) (where \(^{\ast}\) denotes optimality), then the same proportionality holds between \(\boldsymbol{\lambda }\) and \(\boldsymbol{\lambda }^{{\ast}}\) at any τ. Moreover, the control u can be found through (58), which yields the same solution if \(\boldsymbol{\lambda }\) replaces \(\boldsymbol{\lambda }^{{\ast}}\). This circumstance implies that if \(\boldsymbol{\lambda }\) is proportional to \(\boldsymbol{\lambda }^{{\ast}}\) then the final conditions are fulfilled at the minimum final time \(t_{f}^{\ast}\). In contrast, the parameter condition is violated, because the integral of (59) turns out to be

Therefore, provided that the proportionality condition holds, the optimal control u ∗ can be determined without considering the parameter condition, which is ignorable as an equality constraint and can be replaced by the inequality constraint

because k λ is an arbitrary positive constant. Once the (nonoptimal) values of the costate variables (fulfilling the proportionality condition) have been determined, the correct (optimal) values can be recovered after calculating k λ by means of (60).

In the end, the problem of determining the minimum-time-to-climb path can be reformulated as a two-point boundary-value problem, in which the unknowns are the initial values of three adjoint variables, as well as the time of flight, i.e. \(\left \{\lambda _{1,0},\lambda _{2,0},\lambda _{3,0},t_{f}\right \}\). The boundary conditions are represented by (48), accompanied by the inequality constraint (61). Once the optimal parameter set has been determined, the state and costate equations can be integrated, using (58) to express the control angle α as a function of the adjoint variables.

The optimal parameter set is determined by means of a simple implementation of the particle swarm optimization (PSO) algorithm. This is a heuristic optimization technique, based on the use of a population of individuals (or particles). Selection of the globally optimal parameters is the result of a number of iterations, in which the individuals share their information. This optimization approach is extremely intuitive and easy to implement. Nevertheless, several papers in the scientific literature [10–14] show that this method is effective for solving trajectory optimization problems. A set of canonical units is employed for the problem at hand: the distance unit (DU) and time unit (TU) are

The search space is defined by the inequalities \(-1 \leq \lambda _{k,0} \leq 1\) and \(1\ \text{TU} \leq t_{f} \leq 6\ \text{TU}\). It is worth remarking that ignorability of the parameter condition allows defining arbitrarily the range in which the initial values of the adjoint variables are sought. Their correct values (fulfilling also the parameter condition (59)) can be recovered a posteriori, as discussed previously. PSO is used with the intent of minimizing the objective

where each term d k represents a final constraint violation. While minimization of (63) ensures feasibility, enforcement of the necessary conditions for optimality guarantees that the solution found by PSO is (at least locally) optimal.
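To make the swarming idea concrete, the following is a minimal, generic PSO sketch with box constraints. It is not the implementation used in this work: the inertia and acceleration coefficients, the population size, and the test cost function are all illustrative assumptions. Only the bounds in the example mirror the search space quoted above (three adjoint values in [−1, 1] and a flight time in [1, 6] TU).

```python
import numpy as np

def pso_minimize(cost, lower, upper, n_particles=30, n_iter=200, seed=0,
                 inertia=0.7, c_cog=1.5, c_soc=1.5):
    """Generic particle swarm minimization of `cost` inside a box."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    pos = rng.uniform(lower, upper, size=(n_particles, dim))
    vel = np.zeros((n_particles, dim))
    best_pos = pos.copy()                               # personal best positions
    best_val = np.array([cost(p) for p in pos])
    g = int(np.argmin(best_val))
    g_pos, g_val = best_pos[g].copy(), best_val[g]      # global best
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        vel = (inertia * vel
               + c_cog * r1 * (best_pos - pos)          # pull toward personal best
               + c_soc * r2 * (g_pos - pos))            # pull toward global best
        pos = np.clip(pos + vel, lower, upper)          # keep particles in the box
        val = np.array([cost(p) for p in pos])
        improved = val < best_val
        best_pos[improved] = pos[improved]
        best_val[improved] = val[improved]
        g = int(np.argmin(best_val))
        if best_val[g] < g_val:
            g_pos, g_val = best_pos[g].copy(), best_val[g]
    return g_pos, g_val

# Placeholder cost: squared distance from an arbitrary point inside the box
target = np.array([0.3, -0.2, 0.5, 3.0])
solution, value = pso_minimize(lambda p: float(np.sum((p - target) ** 2)),
                               lower=[-1.0, -1.0, -1.0, 1.0],
                               upper=[1.0, 1.0, 1.0, 6.0])
```

In the actual problem the cost would be the measure of final constraint violation, evaluated by integrating the state and costate equations for each particle.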

The PSO algorithm yields a solution associated with \(\tilde{J} = 8.458 \times 10^{-7}\). The corresponding optimal time histories of the state and control components are portrayed in Figs. 4, 5, 6, and 7. From their inspection it is apparent that the minimum climbing path is composed of two phases: an initial ascent phase, up to an altitude greater than the final one, followed by a diving phase. The minimum time to climb equals 55.5 s.

4.3 Application of VTD-NOG

The neighboring optimal guidance algorithm proposed in this work is applied to the minimum-time-to-climb path of the Boeing 727 aircraft. Perturbations from the nominal situation are considered, in order to simulate a realistic scenario. In particular, perturbations on the initial state, thrust magnitude, and atmospheric density are taken into account. Several Monte Carlo campaigns are run, with the intent of obtaining some useful statistical information on the accuracy of the algorithm at hand, in the presence of the previously mentioned deviations, which are simulated stochastically. Monte Carlo campaigns test the guidance algorithm by running a significant number of numerical simulations. Each perturbed quantity in the initial state is associated with a Gaussian distribution, with mean value equal to the respective nominal one and with a specific σ-value, which is related to the statistical dispersion about the mean value. The σ-values are

In the numerical simulations the deviations from the nominal values are constrained to the intervals \(\left [0,2\varDelta \chi ^{(\sigma )}\right ]\ (\chi = z\,\,\text{or}\,\,\gamma )\) and \(\left [-2\varDelta v^{(\sigma )},2\varDelta v^{(\sigma )}\right ]\). A different approach was chosen for the perturbation of the thrust magnitude. In fact, usually the thrust magnitude exhibits small fluctuations. This time-varying behavior is modeled through a trigonometric series with random coefficients,

where T pert denotes the perturbed thrust, whereas a k represents a random number with Gaussian distribution, zero mean, and standard deviation equal to 0.01. The atmospheric density fluctuations are modeled through a similar trigonometric series,

where \(\rho _{pert}\) denotes the perturbed density, whereas \(b_{k}\) represents a random number with the same statistical properties as \(a_{k}\).
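A time-varying fluctuation of this kind can be generated as sketched below. Because the explicit series is not reproduced in this text, the code assumes a multiplicative perturbation of the form \(1 +\sum _{k}a_{k}\sin (2\pi kt/t_{f}^{\ast})\); the sine basis, the number of harmonics, and the normalization by the nominal flight time are all assumptions. Only the Gaussian coefficients with zero mean and standard deviation 0.01 come from the description above.

```python
import numpy as np

def make_multiplier(rng, t_f_nominal, n_harmonics=5, sigma=0.01):
    """Return a function t -> 1 + sum_k a_k sin(2 pi k t / t_f_nominal).

    The coefficients a_k are drawn once per simulation run from a zero-mean
    Gaussian with standard deviation `sigma`. The functional form and the
    number of harmonics are illustrative assumptions.
    """
    coeffs = rng.normal(0.0, sigma, size=n_harmonics)
    k = np.arange(1, n_harmonics + 1)

    def multiplier(t):
        return 1.0 + float(np.sum(coeffs * np.sin(2.0 * np.pi * k * t / t_f_nominal)))

    return multiplier

# One Monte Carlo run: independent multipliers for thrust and density
rng = np.random.default_rng(1)
thrust_factor = make_multiplier(rng, t_f_nominal=55.5)
density_factor = make_multiplier(rng, t_f_nominal=55.5)
```

The perturbed thrust at time t would then be the nominal thrust multiplied by `thrust_factor(t)`, and similarly for the density.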

At the end of the algorithmic process described in Sect. 3.3, two statistical quantities are evaluated, i.e. the mean value and the standard deviation for all of the outputs of interest. The symbols \(\bar{\chi }\) and \(\chi ^{(\sigma )}\) will denote the mean value and standard deviation of χ (χ = z or v or γ or \(t_{f}\)) henceforth. Five campaigns are performed, each including 100 runs. The first four campaigns (MC1 through MC4) use a sampling time \(\varDelta t_{S} = 2\) s, whereas MC5 adopts a sampling time \(\varDelta t_{S} = 1\) s. MC1 assumes only perturbations of the initial state. The second campaign (MC2) considers the thrust fluctuations, whereas MC3 takes into account only the atmospheric density perturbations. MC4 and MC5 include all the deviations from the nominal flight conditions (with different sampling times). Table 1 summarizes the results for the five Monte Carlo campaigns and reports the related statistics. Application of VTD-NOG to the problem of interest leads to excellent results, with modest errors on the desired final conditions. More specifically, inspection of Table 1 points out that errors on the initial conditions are corrected very effectively; the remaining results also exhibit modest deviations at the final time. The latter is extremely close to the optimal value, and this is an intrinsic characteristic of VTD-NOG, which employs first-order expansions of the state and costate equations in the proximity of the optimal solution. As a final remark, in the presence of all of the perturbations (MC4 and MC5), decreasing the sampling time implies a reduction of the final errors. Figures 8, 9, 10, and 11 depict the perturbed state components and control angle, obtained in MC5.

5 Interception

As a second application of VTD-NOG, this section considers the interception of a target by a maneuvering vehicle in exoatmospheric flight or in the presence of negligible aerodynamic forces, e.g. an intercepting rocket operating at high altitudes. Both the pursuing vehicle and the target are modeled as point masses, in the context of a three-degree-of-freedom problem.

5.1 Problem Definition

Under the assumption that interception occurs in a sufficiently short time interval, the flat Earth approximation can be adopted again. This means that the Cartesian reference frame can be defined as follows: the \(x_1\)-axis is aligned with the local upward direction, the \(x_2\)-axis is directed eastward, and the \(x_3\)-axis is aligned with the local North direction. As a result, the Cartesian equations of motion for the intercepting rocket are

where the derivatives are written with respect to \(\tau\), \(\{x_1, x_2, x_3\}\) are the three position coordinates, \(\{x_4, x_5, x_6\}\) are the corresponding velocity components, and \(t_f\) represents the time of flight up to interception. The symbol \(g\) denotes the magnitude of the (constant) gravitational acceleration at the reference altitude, whereas the thrust acceleration has magnitude \(a_T\) and direction identified through the two angles \(u_1\) and \(u_2\). The target position is assumed to be known, and therefore it is expressed by three specified functions of the dimensionless time \(\tau\),

While the state vector contains the position and velocity components \(\left \{x_{i}\right \}_{i=1,\ldots,6}\), the control vector is \(\boldsymbol{u} = [u_1\ u_2]^T\), and the parameter vector \(\boldsymbol{a}\) includes only \(t_f\) (as in the previous application). As the time is to be minimized, the objective function is

\[J = t_f.\]

5.2 Optimal Trajectory

The first-order conditions for optimality are obtained after introducing the Hamiltonian H and the function of the boundary conditions Φ, according to (7)

where the subscript \(f\) refers to the value of the respective variable at the final time. The adjoint equations (9), in conjunction with the respective boundary conditions (10) for \(\lambda_4\) through \(\lambda_6\), lead to

where \(\lambda_{i,0}\) denotes the (unknown) initial value of the adjoint variable \(\lambda_i\). Then, the Pontryagin minimum principle yields

These relations imply that the optimal thrust direction is time-independent, regardless of the (known) target position. It is relatively straightforward to prove that for the present application the remaining necessary conditions coming from (10) are useless for the purpose of identifying the optimal solution, in the sense that they do not lead to establishing any new relation among the unknowns of the problem, i.e., \(\left \{\lambda _{1,0},\lambda _{2,0},\lambda _{3,0},t_{f}\right \}\). The parameter condition (11) can also be proven to be ignorable, in a way similar to that used in Sect. 4.2. Moreover, as the two angles \(u_1\) and \(u_2\) are constant, they can be considered as the unknown quantities in place of \(\left \{\lambda _{1,0},\lambda _{2,0},\lambda _{3,0}\right \}\). Under the assumption that \(a_T\) is constant, integration of (67)–(72) leads to obtaining the following explicit solution for \(x_1\), \(x_2\), and \(x_3\):

These expressions are evaluated at τ = 1, then they are set equal to the respective position coordinates of the target at τ = 1. From (85)–(87) one obtains the following equations:

Depending on the analytical form of \(f_1\), \(f_2\), and \(f_3\), (88) can either represent a transcendental equation or simplify to a polynomial equation of fourth degree. Once (88) has been solved, calculation of the optimal thrust angles is straightforward, by means of (89) and (90).
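The reduction to a single scalar equation in the time of flight can be illustrated with a short numerical sketch. It does not reproduce (88)–(90) literally. As an assumption made here for illustration, the constant thrust direction is written as a unit vector \(\hat{n}\) instead of the angles \(u_1\) and \(u_2\), and physical time \(t\) is used in place of \(\tau\). With constant \(a_T\) and gravity directed along \(-x_1\), matching the positions at interception gives \((a_T t_f^2/2)\,\hat{n} = f(t_f) - r_0 - v_0 t_f + (g t_f^2/2)\,\hat{e}_1\), and taking the norm of both sides leaves one equation in \(t_f\). All function names and numerical values below are hypothetical, and the root is located by a forward scan followed by bisection rather than by a closed-form quartic solution.

```python
import math


def offset_vector(t, target, r0, v0, g):
    """Vector d(t) that the thrust term (a_T t^2 / 2) n must equal at time t."""
    f = target(t)
    d = [f[i] - r0[i] - v0[i] * t for i in range(3)]
    d[0] += 0.5 * g * t * t  # gravity acts along -x1 (x1 points upward)
    return d


def residual(t, target, r0, v0, a_T, g):
    """Zero when a constant-direction thrust arc reaches the target at time t."""
    d = offset_vector(t, target, r0, v0, g)
    return 0.5 * a_T * t * t - math.sqrt(sum(c * c for c in d))


def min_intercept_time(target, r0, v0, a_T, g, dt=0.05, t_max=300.0):
    """Smallest positive root of the residual: forward scan, then bisection.

    A coarse scan step could skip two closely spaced roots; dt is a
    hypothetical tuning value.
    """
    t_lo = 0.0
    f_lo = residual(t_lo, target, r0, v0, a_T, g)
    while t_lo < t_max:
        t_hi = t_lo + dt
        f_hi = residual(t_hi, target, r0, v0, a_T, g)
        if f_lo < 0.0 <= f_hi:  # first sign change brackets the root
            for _ in range(60):
                t_mid = 0.5 * (t_lo + t_hi)
                if residual(t_mid, target, r0, v0, a_T, g) < 0.0:
                    t_lo = t_mid
                else:
                    t_hi = t_mid
            return 0.5 * (t_lo + t_hi)
        t_lo, f_lo = t_hi, f_hi
    raise ValueError("no interception found before t_max")


def thrust_direction(t_f, target, r0, v0, g):
    """Constant unit thrust direction associated with the time of flight t_f."""
    d = offset_vector(t_f, target, r0, v0, g)
    norm = math.sqrt(sum(c * c for c in d))
    return [c / norm for c in d]


# Hypothetical example: rocket at rest at the origin, fixed target 1 km east.
g = 9.8            # m/s^2 (illustrative value)
a_T = 2.0 * g      # thrust acceleration magnitude
D = 1000.0         # m
r0 = (0.0, 0.0, 0.0)
v0 = (0.0, 0.0, 0.0)


def fixed_target(t):
    return (0.0, D, 0.0)


t_f = min_intercept_time(fixed_target, r0, v0, a_T, g)
n = thrust_direction(t_f, fixed_target, r0, v0, g)
print("time of flight [s]:", t_f)
print("thrust direction  :", n)
```

For this particular example the equation can be solved by hand, \(t_f^4 = 4D^2/(a_T^2 - g^2)\), which gives a simple check on the numerical root. A target in free fall or on a circular path is handled by passing a different `target` function.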

5.3 Application of VTD-NOG

The guidance algorithm described in this work is applied to the interception problem in the presence of nonnominal flight conditions. In particular, perturbations on the initial state and oscillations of the thrust acceleration magnitude over time are modeled. Several Monte Carlo campaigns are run, with the intent of obtaining some useful statistical information on the accuracy of the algorithm at hand, in the presence of the previously mentioned deviations, which are simulated stochastically. The nominal initial position is perturbed by a random vector \(\boldsymbol{\rho }\): its magnitude \(\rho\) is associated with a Gaussian distribution, with standard deviation equal to 5 m and maximal value never exceeding 10 m, whereas the corresponding unit vector \(\hat{\rho }\) has direction uniformly distributed over a unit sphere. Similarly, the nominal initial velocity is perturbed by a random vector \(\boldsymbol{w}\): its magnitude \(w\) is associated with a Gaussian distribution, with standard deviation equal to 5 m/s and maximal value never exceeding 10 m/s, whereas the corresponding unit vector \(\hat{w}\) has direction uniformly distributed over a unit sphere. A different approach is adopted for the perturbation of the thrust acceleration. As the thrust magnitude (and the related acceleration \(a_T\)) exhibits fluctuations, the perturbed thrust acceleration is modeled through a trigonometric series,

where \(a_T\) is the nominal thrust acceleration, whereas the corresponding (time-varying) perturbed value \(a_{T,pert}\) is actually used in the MC simulations. The coefficients \(\left \{c_{k}\right \}_{k=1,\ldots,10}\) have a random Gaussian distribution with zero mean and a standard deviation equal to 0.01. At the end of the algorithmic process described in Sect. 3.3, two statistical quantities are evaluated, i.e., the mean value and the standard deviation for all of the outputs of interest (with a notation similar to that adopted for the preceding application).
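The stochastic inputs described above can be generated as in the following sketch, which rests on several assumptions that are not taken from the text. The bounded Gaussian magnitude is obtained by taking the absolute value of a zero-mean Gaussian draw and redrawing whenever the cap is exceeded. The uniformly distributed direction is obtained by normalizing three independent Gaussian components. The trigonometric series is assumed to have five sine and five cosine harmonics of the nominal time of flight, multiplying the nominal value as \(a_T\,(1 + \ldots)\); the actual series used in the chapter may differ. All function names and numerical values are hypothetical.

```python
import math
import random


def random_unit_vector(rng):
    """Direction uniformly distributed over the unit sphere."""
    while True:
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in v))
        if norm > 1e-12:  # guard against a (very unlikely) zero vector
            return [c / norm for c in v]


def bounded_gaussian_magnitude(rng, sigma, cap):
    """Assumption: |N(0, sigma)| redrawn until it does not exceed cap."""
    while True:
        magnitude = abs(rng.gauss(0.0, sigma))
        if magnitude <= cap:
            return magnitude


def perturb_initial_state(rng, r0, v0):
    """Add random displacements (5 m, 10 m cap; 5 m/s, 10 m/s cap)."""
    rho = bounded_gaussian_magnitude(rng, 5.0, 10.0)
    w = bounded_gaussian_magnitude(rng, 5.0, 10.0)
    rho_hat = random_unit_vector(rng)
    w_hat = random_unit_vector(rng)
    r_pert = [r0[i] + rho * rho_hat[i] for i in range(3)]
    v_pert = [v0[i] + w * w_hat[i] for i in range(3)]
    return r_pert, v_pert


def perturbed_thrust_acceleration(t, t_f_nominal, a_T, c):
    """ASSUMED series: a_T * (1 + sum of sine and cosine harmonics)."""
    half = len(c) // 2
    series = 0.0
    for k in range(1, half + 1):
        phase = 2.0 * math.pi * k * t / t_f_nominal
        series += c[k - 1] * math.sin(phase) + c[half + k - 1] * math.cos(phase)
    return a_T * (1.0 + series)


# Hypothetical single draw for one Monte Carlo run.
rng = random.Random(2016)
r0_nominal = (0.0, 0.0, 0.0)
v0_nominal = (0.0, 100.0, 0.0)
r0_pert, v0_pert = perturb_initial_state(rng, r0_nominal, v0_nominal)
c = [rng.gauss(0.0, 0.01) for _ in range(10)]
print(r0_pert, v0_pert)
print(perturbed_thrust_acceleration(5.0, 30.0, 2.0 * 9.8, c))
```

A seeded `random.Random` instance keeps each campaign reproducible, which is convenient when comparing runs that differ only in the sampling time.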

In this section, three different targets are taken into account. For each of them, four Monte Carlo campaigns have been performed, each including 100 runs. The first campaign (MC1) assumes only perturbations of the initial state. The second campaign (MC2) considers only oscillations of the thrust acceleration magnitude, while the third and fourth campaigns (MC3 and MC4) include both types of perturbations, with different sampling time intervals (\(\Delta t_S = 1\) s and \(\Delta t_S = 0.5\) s, respectively).

Fixed Target

As a first special case, a fixed target is considered. This means that the three functions \(f_1\), \(f_2\), and \(f_3\) equal three prescribed values \(x_1^{(T)}\), \(x_2^{(T)}\), and \(x_3^{(T)}\). As a result, (88) assumes the form of a fourth degree equation; its smallest real root represents the minimum time to interception.

In the numerical example that follows, the reference altitude (needed for defining the value of \(g\)) is set to the initial altitude of the intercepting rocket, whereas \(a_T = 2g\). The initial state of the pursuing vehicle is

whereas the target position is

The minimum time of flight up to interception turns out to equal 30.58 s, whereas the two optimal thrust angles are \(u_1^{*} = 73.3\) deg and \(u_2^{*} = -41.8\) deg. Figure 12 portrays the optimal intercepting trajectory.

Application of VTD-NOG yields results associated with the statistics summarized in Table 2. They regard the miss distance \(d_f\) (at the end of nonnominal paths) and the time of flight. Inspection of Table 2 reveals that VTD-NOG generates accurate results, with modest values of the miss distance, which decreases by 40% from MC3 to MC4. Figure 13 portrays the time evolution of the corrected control angles, obtained in MC3.

Falling Target

The second special case assumes a target in free fall (e.g., a ballistic missile at high altitudes), with given initial conditions, denoted with \(\left \{x_{i,0}^{(T)}\right \}_{i=1,\ldots,6}\). This means that the three functions \(f_1\), \(f_2\), and \(f_3\) are

As a result, (88) assumes again the form of a fourth degree equation; its smallest real root represents the minimum time to interception.
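The reason why the free-fall case remains polynomial can be seen from a short derivation, given here as a sketch under stated assumptions rather than as a restatement of (88). Physical time \(t\) is used instead of \(\tau\), the constant thrust direction is written as a unit vector \(\hat{\mathbf{n}}\) (an illustrative substitute for the angles \(u_1\) and \(u_2\)), and both vehicles are assumed to be subject to the same constant gravitational acceleration \(g\) along \(-x_1\). The symbols \(\Delta\mathbf{r} = \mathbf{r}_0^{(T)} - \mathbf{r}_0\) and \(\Delta\mathbf{v} = \mathbf{v}_0^{(T)} - \mathbf{v}_0\) denote the initial relative position and velocity of the target with respect to the rocket.

```latex
\begin{align*}
\mathbf{r}(t) &= \mathbf{r}_0 + \mathbf{v}_0\, t
  + \tfrac{1}{2}\left(a_T\,\hat{\mathbf{n}} - g\,\hat{\mathbf{e}}_1\right) t^2
  && \text{(rocket, constant thrust direction)} \\
\mathbf{r}^{(T)}(t) &= \mathbf{r}_0^{(T)} + \mathbf{v}_0^{(T)}\, t
  - \tfrac{1}{2}\, g\,\hat{\mathbf{e}}_1\, t^2
  && \text{(target in free fall)} \\
\tfrac{1}{2}\, a_T\, t_f^2\,\hat{\mathbf{n}} &= \Delta\mathbf{r} + \Delta\mathbf{v}\, t_f
  && \text{(interception; gravity terms cancel)} \\
\tfrac{1}{4}\, a_T^2\, t_f^4 - \left|\Delta\mathbf{v}\right|^2 t_f^2
  - 2\left(\Delta\mathbf{r}\cdot\Delta\mathbf{v}\right) t_f
  - \left|\Delta\mathbf{r}\right|^2 &= 0
  && \text{(norm squared of both sides)}
\end{align*}
```

Because gravity acts identically on both vehicles, it drops out of the relative motion, and the condition \(|\hat{\mathbf{n}}| = 1\) leaves a fourth degree polynomial in \(t_f\) without a cubic term.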

In the numerical example that follows, the reference altitude is set to the initial altitude of the rocket, whereas \(a_T = 3g\). The initial state of the pursuing vehicle is

whereas the initial state of the target is given by

The minimum time of flight up to interception turns out to equal 12.68 s, whereas the two optimal thrust angles are \(u_1^{*} = 96.6\) deg and \(u_2^{*} = -6.5\) deg.

The guidance algorithm is applied again in the presence of nonnominal flight conditions. The same Monte Carlo campaigns performed for the previous case are repeated for the application at hand. Table 3 summarizes the results for the four Monte Carlo campaigns and reports the related statistics, with regard to the miss distance (at the end of nonnominal paths) and the time of flight. It is worth remarking that decreasing the sampling time (cf. Table 3) leads again to reducing the mean miss distance. As in the previous application, the actual times of flight are extremely close to the minimal value (12.68 s).

Moving Target

The third special case assumes a target that describes a circular path at constant altitude (e.g., an unmanned aerial vehicle). This means that

where \(x_1^{(T)}\) denotes the constant altitude of the target, \(\left (x_{2C}^{(T)},x_{3C}^{(T)}\right )\) identifies the center of the circular path, whereas \(\omega\) and \(\varphi\) define, respectively, the angular rate of rotation and the initial angular position. In this case, (88) assumes the form of a transcendental equation, to be solved numerically (for instance, through the MATLAB native function fsolve). However, numerical solvers need a suitable approximate guess solution to converge to a refined result. For the application at hand, this guess can be easily supplied. In fact, if the radius \(R_T\) of the circular path is sufficiently small, one can assume that the target is located at \(\left (x_{1}^{(T)},x_{2C}^{(T)},x_{3C}^{(T)}\right )\). In the presence of a fixed target, an analytical solution exists, and derives from solving a fourth degree equation, as already explained in Sect. 5.2. This solution is used as a guess for the moving target.
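The guess-then-refine strategy can be sketched as follows. This is an illustration under stated assumptions, not the implementation used in the chapter: a secant iteration stands in for fsolve, the fixed-target guess is found by a forward scan with bisection instead of the analytical quartic solution, physical time replaces \(\tau\), and the circular path is assumed to be parametrized as \(x_2 = x_{2C} + R_T\cos(\omega t + \varphi)\), \(x_3 = x_{3C} + R_T\sin(\omega t + \varphi)\). All names and numerical values are hypothetical.

```python
import math

G = 9.80665                 # m/s^2, illustrative constant gravity
A_T = 3.0 * G               # thrust acceleration magnitude
R0 = (0.0, 0.0, 0.0)        # rocket initial position (x1 up, x2 east, x3 north)
V0 = (0.0, 100.0, 0.0)      # rocket initial velocity
ALT, X2C, X3C = 3000.0, 4000.0, 3000.0   # target altitude and circle center
R_T, OMEGA, PHI = 200.0, 0.1, 0.0        # radius, angular rate, initial angle


def center_target(t):
    """Fixed target placed at the center of the circular path."""
    return (ALT, X2C, X3C)


def moving_target(t):
    """ASSUMED parametrization of the circular path at constant altitude."""
    angle = OMEGA * t + PHI
    return (ALT, X2C + R_T * math.cos(angle), X3C + R_T * math.sin(angle))


def residual(t, target):
    """(a_T t^2 / 2) - |f(t) - r0 - v0 t + (g t^2 / 2) e1|; zero at interception."""
    f = target(t)
    d = [f[i] - R0[i] - V0[i] * t for i in range(3)]
    d[0] += 0.5 * G * t * t
    return 0.5 * A_T * t * t - math.sqrt(sum(c * c for c in d))


def first_root(target, dt=0.05, t_max=300.0):
    """Smallest positive root by forward scan and bisection."""
    t_lo = 0.0
    while t_lo < t_max:
        t_hi = t_lo + dt
        if residual(t_lo, target) < 0.0 <= residual(t_hi, target):
            for _ in range(60):
                t_mid = 0.5 * (t_lo + t_hi)
                if residual(t_mid, target) < 0.0:
                    t_lo = t_mid
                else:
                    t_hi = t_mid
            return 0.5 * (t_lo + t_hi)
        t_lo = t_hi
    raise ValueError("no interception found before t_max")


def secant(func, t0, h=1e-3, tol=1e-8, max_iter=50):
    """Secant refinement of a root of func, started from the guess t0."""
    t_prev, t_cur = t0, t0 + h
    f_prev, f_cur = func(t_prev), func(t_cur)
    for _ in range(max_iter):
        if abs(f_cur) < tol or f_cur == f_prev:
            break
        t_next = t_cur - f_cur * (t_cur - t_prev) / (f_cur - f_prev)
        t_prev, f_prev = t_cur, f_cur
        t_cur, f_cur = t_next, func(t_next)
    if abs(f_cur) >= tol:
        raise RuntimeError("secant iteration did not converge")
    return t_cur


t_guess = first_root(center_target)                        # fixed-target guess
t_moving = secant(lambda t: residual(t, moving_target), t_guess)
print("guess [s]:", t_guess, " refined [s]:", t_moving)
```

A local method started from the guess converges to a nearby root, which is not guaranteed to be the smallest one when \(R_T\) is large. In that situation a bracketing scan over the moving-target residual itself is the safer choice.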

In the numerical example that follows, the reference altitude is set to the initial altitude of the rocket, whereas \(a_T = 3g\). The initial state of the pursuing vehicle is

whereas the fundamental parameters of the target are

The minimum time of flight up to interception turns out to equal 14.76 s, whereas the two optimal thrust angles are \(u_1^{*} = 74.4\) deg and \(u_2^{*} = 51.5\) deg.

The guidance algorithm is applied again in the presence of nonnominal flight conditions. The same Monte Carlo campaigns performed for the previous case are repeated for the application at hand. Table 4 summarizes the results for the four Monte Carlo campaigns and reports the related statistics, with regard to the miss distance (at the end of nonnominal paths) and the time of flight. It is worth remarking that decreasing the sampling time (cf. Table 4) leads again to reducing the mean miss distance.

6 Concluding Remarks

This work describes and applies the recently introduced, general-purpose variable-time-domain neighboring optimal guidance algorithm. Usually, all the neighboring optimal guidance schemes require the preliminary determination of an optimal trajectory. Moreover, complex analytical developments accompany the implementation of this kind of perturbative guidance. However, the main difficulties encountered in former formulations of neighboring optimal guidance are the occurrence of singularities for the gain matrices and the challenging implementation of the updating law for the time-to-go. A fundamental original feature of the variable-time-domain neighboring optimal guidance is the use of a normalized time scale as the domain in which the nominal trajectory and the related vectors and matrices are defined. As a favorable consequence, the gain matrices remain finite for the entire time of flight and no extension of their domain is needed. Moreover, the updating formula for the time-to-go derives analytically from the natural extension of the accessory optimization problem associated with the original optimal control problem. This extension also leads to new equations for the sweep method, which provides all the time-varying gain matrices, computed offline and stored in the onboard computer. In this mathematical framework, the guidance termination criterion finds a logical, consistent definition, and corresponds to the upper bound of the interval to which the normalized time is constrained.

Two applications are considered in the paper: (a) minimum-time-to-climb path of a Boeing 727 aircraft and (b) minimum-time exoatmospheric interception of fixed or moving targets. In both cases (especially for (a)), as well as in alternative applications already reported in the scientific literature [16, 17], the variable-time-domain neighboring optimal guidance yields very satisfactory results, with runtime (per simulation) never exceeding the time of flight. This means that VTD-NOG actually represents an effective, general algorithm for the real-time determination of the corrective actions aimed at maintaining an aerospace vehicle in the proximity of its optimal path.

References

1. Afshari, H.H., Novinzadeh, A.B., Roshanian, J.: Determination of nonlinear optimal feedback law for satellite injection problem using neighboring optimal control. Am. J. Appl. Sci. 6 (3), 430–438 (2009)

2. Bryson, A.E.: Dynamic Optimization. Addison Wesley Longman, Boston (1999)

3. Calise, A.J., Melamed, N., Lee, S.: Design and evaluation of a three-dimensional optimal ascent guidance algorithm. J. Guid. Control. Dyn. 21 (6), 867–875 (1998)

4. Charalambous, C.B., Naidu, S.N., Hibey, J.L.: Neighboring optimal trajectories for aeroassisted orbital transfer under uncertainties. J. Guid. Control. Dyn. 18 (3), 478–485 (1995)

5. Chuang, C.-H.: Theory and computation of optimal low- and medium-thrust orbit transfers. NASA-CR-202202, NASA Marshall Space Flight Center, Huntsville (1996)

6. Hull, D.G.: Robust neighboring optimal guidance for the advanced launch system. NASA-CR-192087, Austin (1993)

7. Hull, D.G.: Optimal Control Theory for Applications. Springer, New York (2003)

8. Jo, J.-W., Prussing, J.E.: Procedure for applying second-order conditions in optimal control problems. J. Guid. Control. Dyn. 23 (2), 241–250 (2000)

9. Kugelmann, B., Pesch, H.J.: New general guidance method in constrained optimal control, part 1: numerical method. J. Optim. Theory Appl. 67 (3), 421–435 (1990)

10. Pontani, M., Conway, B.A.: Particle swarm optimization applied to space trajectories. J. Guid. Control. Dyn. 33 (5), 1429–1441 (2010)

11. Pontani, M., Conway, B.A.: Particle swarm optimization applied to impulsive orbital transfers. Acta Astronaut. 74, 141–155 (2012)

12. Pontani, M., Conway, B.A.: Optimal finite-thrust rendezvous trajectories found via particle swarm algorithm. J. Spacecr. Rocket. 50 (6), 1222–1234 (2013)

13. Pontani, M., Conway, B.A.: Optimal low-thrust orbital maneuvers via indirect swarming method. J. Optim. Theory Appl. 162 (1), 272–292 (2014)

14. Pontani, M., Ghosh, P., Conway, B.A.: Particle swarm optimization of multiple-burn rendezvous trajectories. J. Guid. Control. Dyn. 35 (4), 1192–1207 (2012)

15. Pontani, M., Cecchetti, G., Teofilatto, P.: Variable-time-domain neighboring optimal guidance, part 1: algorithm structure. J. Optim. Theory Appl. 166 (1), 76–92 (2015)

16. Pontani, M., Cecchetti, G., Teofilatto, P.: Variable-time-domain neighboring optimal guidance, part 2: application to lunar descent and soft landing. J. Optim. Theory Appl. 166 (1), 93–114 (2015)

17. Pontani, M., Cecchetti, G., Teofilatto, P.: Variable-time-domain neighboring optimal guidance applied to space trajectories. Acta Astronaut. 115, 102–120 (2015)

18. Seywald, H., Cliff, E.M.: Neighboring optimal control based feedback law for the advanced launch system. J. Guid. Control. Dyn. 17 (3), 1154–1162 (1994)

19. Teofilatto, P., De Pasquale, E.: A non-linear adaptive guidance algorithm for last-stage launcher control. J. Aerosp. Eng. 213, 45–55 (1999)

20. Vinh, N.X.: Optimal Trajectories in Atmospheric Flight. Elsevier, New York (1981)

21. Yan, H., Fahroo, F., Ross, I.: Real-time computation of neighboring optimal control laws. AIAA Guidance, Navigation and Control Conference, Monterey. AIAA Paper 2002–465 (2002)

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Pontani, M. (2016). A New, General Neighboring Optimal Guidance for Aerospace Vehicles. In: Frediani, A., Mohammadi, B., Pironneau, O., Cipolla, V. (eds) Variational Analysis and Aerospace Engineering. Springer Optimization and Its Applications, vol 116. Springer, Cham. https://doi.org/10.1007/978-3-319-45680-5_16

DOI: https://doi.org/10.1007/978-3-319-45680-5_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-45679-9

Online ISBN: 978-3-319-45680-5

eBook Packages: Mathematics and Statistics (R0)