Abstract

All human clinical trials of neuroprotection after brain ischemia and reperfusion injury have failed. Brain ischemia is currently conceptualized as an “ischemic cascade” and therapy is directed to treating one or another element of this cascade. This approach conflates the science of cell injury with the development of neuroprotective technologies. Here we review a theory that describes the generic nonlinear dynamics of acute cell injury. This approach clearly demarcates the science of cell injury from any possible downstream technological applications. We begin with a discussion that contrasts the qualitative, descriptive approach of biology to the quantitative, mathematical approach used in physics. Next we discuss ideas from quantitative biology that underlie the theory. After briefly reviewing the autonomous theory, we present, for the first time, a non-autonomous theory that describes multiple injuries over time and can simulate pre- or post-conditioning or post-injury pharmacologics. The non-autonomous theory provides a foundation for three-dimensional spatial models that can simulate complex tissue injuries such as stroke. The cumulative theoretical formulations suggest new technologies. We outline possible prognosticative and neuroprotective technologies that would operate with engineering precision and function on a patient-by-patient basis, hence personalized medicine. Thus, we contend that a generic, mathematical approach to acute cell injury will accomplish what highly detailed descriptive biology has so far failed to accomplish: successful neuroprotective technology.


Keywords

- Brain ischemia

- Ischemic cascade

- Autonomous theory

- Lac operon

- Boolean network

- Acute cell injury

- Bistable bifurcation

1 Introduction

In the setting of brain ischemia, neuroprotection can be defined as taking a post-ischemic brain region we know will die and performing some intervention to prevent it from dying. While many neuroprotective interventions are described in the preclinical literature, none have successfully “translated” to clinical stroke neuroprotection in humans [1–4]. Analogous failures have plagued other biomedical fields, such as cardiac and renal ischemia [5, 6]. These are not isolated cases, but part of a larger pattern of deficiencies in biomedical research. The “lack of reproducibility” of biomedical results has garnered national media attention and serious reform efforts from journal editorial boards and the NIH [4, 7–11]. All of this, we suggest, is part of the same picture. Attempts to remedy these deficiencies have focused almost exclusively on technical details of experimental execution [12–14]. That empirical ambiguity needs to be minimized should go without saying. We have argued that an equal, or even greater, contributing factor is the lack of theoretical foundation in biomedical research [15–19].

The purpose of this chapter is to consider what neuroprotection might look like in a world that possessed a working theoretical biomedicine. We have offered such a theory and summarize it below. The theory has not yet been empirically validated. Nonetheless, the theoretical construct clarifies a number of critical issues. Perhaps most importantly, it makes clear the distinction between the science of cell injury and therapeutic technology. Developing and confirming the theory constitute the science. Any application that stems from the scientific results constitutes technology. Hence, neuroprotection becomes a technological goal that comes after the science is completed. Efforts that conflate science and technology in biomedical research have only served to confound both.

This chapter has two main parts. In the first part, we discuss the broader views that underlie our approach. We consider how physics and biology differ and why it matters to the idea of neuroprotection. We begin at the root of the problem and briefly compare the scientific cultures of physics and biology. We then briefly discuss the foundations of our approach which are grounded in network theory. In the second part, we illustrate an approach to acute cell injury that utilizes the principles and notions described in the first part. The solutions of this theory offer paradigm-transforming insight into the nature of acute cell injury. The theoretical findings provide clear directions for technological application, of which we consider three facets: (1) therapy as sublethal injury, (2) the technology of prognostication, and (3) the technology of neuroprotection.

2 Part 1: Physics and Biology

2.1 A Tale of Two Cultures

Since their respective inceptions in the modern era, biology and physics have developed along separate tracks. Biology was burdened with the task of describing the myriad biological organisms and the almost infinite variations of their structures, functions, niches, and so forth [20]. Physics, on the other hand, since the time of Galileo, sought to find mathematical patterns that described some general aspect of entire classes of phenomena. In Galileo’s own words [quoted in [21]]:

Philosophy [nature] is written in that great book whichever is before our eyes—I mean the universe—but we cannot understand it if we do not first learn the language and grasp the symbols in which it is written. The book is written in mathematical language…without whose help it is impossible to comprehend a single word of it; without which one wanders in vain through a dark labyrinth.

The essence of the method of physics was captured succinctly by the mathematician Morris Kline [21]:

The bold new plan, proposed by Galileo and pursued by his successors, is that of obtaining quantitative descriptions of scientific phenomena independently of any physical explanation.

The distinction between the qualitative classifications of biology and the quantitative descriptions of physics illuminates the crisis in biomedical research as explained by entrepreneur Bill Frezza [22]:

[We must]…shift the life sciences over to practices that have been advancing the physical sciences for years. In order to do this the culture must change. Mathematics is the language of engineering and life scientists can no longer take a pass on it. A system that cannot be modeled cannot be understood, and hence cannot be controlled. Statistical modeling is not enough, for the simple reason that correlation is not causation. Life science engineers need to catch up with their peers in the physical sciences when it comes to developing abstract mathematical representations of the systems they are studying. Progress comes from constantly refining these models through ever more detailed measurements.

2.2 What Is Measured?

Since the time of Newton, physicists have struggled with the link between the physical objects we perceive and their description by mathematical patterns [21]. The dominance of mathematical abstraction over physical intuition was definitively established in the 1920s. The founders of quantum mechanics were forced by the empirical behavior of light and atoms to abandon appeals to everyday physical intuition. Quantum mechanics made clear that physics provides mathematical descriptions of phenomena whether or not they make sense to everyday intuition. For example, a physical intuition of the superposition of quantum states is not possible [23]. This approach has been justified by the overwhelming scientific and technological success of quantum mechanics.

Physics of course did not come to this realization overnight. The transition occurred over centuries. However, from the time of Galileo, physical objects were idealized into mathematical quantities. Physical objects were imagined to be points (center of mass) moving through frictionless media, whose motion traced out idealized geometric curves. Idealizing perceptions of the real world in this fashion allowed the recognition that the same mathematical pattern could be applied, for example, to projectiles and planetary motion, as Newton famously showed [24].

Thus, from its inception, what was to be measured in physics was intimately bound to mathematical description. It did not matter if it was an apple, cannon ball, or the Moon. The qualitative differences were ignored and each was to be treated as a point-like object distinguished only by its quantitative mass. Mass is a nonsensory abstraction [25]. We perceive weight, which is a function of position in a gravity field. A cannon ball becomes weightless in outer space, but the mass remains constant. The perception of weight was the intuitive forerunner of mass. Mass is a mathematical quantity in the equations of physics. Thus, what is measured in physics are operational quantities that are defined exclusively in mathematical terms.

In contrast, in modern biomedicine, organisms are dissected/homogenized into relatively stable pieces that are measured by a variety of means (centrifugation, Western blot, etc.), and intuitively thought of as classical objects with classical functions. There is no underlying mathematical theory to guide the definition of such objects. The nature of the objects is wholly dependent on the methods of isolation. Different methods can result in different manifestations of supposedly the same object [26]. Some subcellular components are not yet amenable to biochemical isolation [27]. The employment of the arsenal of molecular biological tools without an underlying mathematical conception has led to empirical chaos where definitions rest not on sound theory, but on the methods used to generate the objects of study. This approach is not systematic, and it is no wonder that control of such systems has been elusive.

We present below an approach to acute cell injury based on the method of mathematical abstraction and idealization used in physics, where the main concepts and objects of measurement are defined by the theory. Before describing the theory, we discuss the foundations on which it rests.

2.3 The Mathematizing of Biology

It is a common assertion that biology is too complex to treat mathematically the way physics treats physical phenomena. The truth of this assertion has continuously eroded over time. This section briefly reviews one line of development that has successfully mathematized biology. Some of this material was discussed in greater detail elsewhere [19], so only salient aspects are presented here.

The first major step was the discovery of graph theory by the famous eighteenth-century mathematician Euler, which initiated the study of networks as mathematical entities [28]. However, the relevance of network mathematics to biology did not become explicit until the 1960s.

After the discovery of the structure of DNA, and the cracking of the genetic code in the 1960s, the physical and informational structure of chromosomes was at least partially revealed. Even in the context of the now discredited “one gene one protein” model, a central paradox became apparent in the construction of organisms. Using current numbers, there are on the order of 20,000–30,000 genes in multicellular organisms. Yet an organism is made of a much smaller number of cell types, on the order of several dozen. The question thus arose [29]: how can such a large number of genes lead to a much smaller number of cell phenotypes? This question has a paradoxical character because there is an astronomical number of combinations of gene expression patterns. Yet, the limited number of cell phenotypes indicates that most of these possibilities play no role in organismic biology. Somehow, only an extremely small percentage of possible gene expression patterns actually matters. Could there be a basic theoretical principle behind this observation?

The discovery leading to the resolution of this seeming paradox was the Nobel Prize winning work of Jacob and Monod. As is well-known, Jacob and Monod discovered the Lac operon [30], providing the first clear example of gene regulation. The key finding was that the product of the lacI gene, the lac repressor protein, could control the transcription of the lac operon. Binding of lac repressor protein inhibited transcription of the genes contained in the lac operon. But lactose binding to the lac repressor protein dissociated it from the DNA, thereby inducing lac operon transcription. This discovery revealed that gene products could regulate the expression of other genes and showed that genes were functionally interlinked to form a self-regulating network of mutual influences.

Shortly after discovery of the Lac operon, in 1969 Stuart Kauffman demonstrated that Boolean networks, in which each node is either “on” or “off”, could model gene networks [29]. Kauffman did not use Boolean networks to model any specific gene network. Instead, he studied the generic mathematical properties of random Boolean networks. A random Boolean network of N nodes has \( 2^N \) possible states. For example, a network of N = 25 nodes will have \( 2^{25} \) = 33,554,432 possible states.

Kauffman’s main finding was that, of all the possible states, only a small number of them were stable. A stable network state, also called an attractor state, is one that, once reached, no longer changes to another state [31]. He found that the number of attractor states was on the order of \( \sqrt{N} \) [29, 32]. Thus, for N = 25 nodes, there would be ~5 attractor states. This represents ~150 parts per billion of the possible network states, a vanishingly small fraction.
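The idea of a few attractors among many states can be explored with a minimal sketch of a random Boolean network. The choices here (K = 2 inputs per node, the seed, N = 10, and the function names) are illustrative assumptions, not Kauffman's original setup. The sketch counts cyclic attractors as well as single stable states, and the \( \sqrt{N} \) scaling is a statistical statement that one small network need not match.

```python
import random

def random_boolean_network(n_nodes, k_inputs, seed):
    """Wire each node to k random input nodes and give it a random truth table."""
    rng = random.Random(seed)
    inputs = [rng.sample(range(n_nodes), k_inputs) for _ in range(n_nodes)]
    tables = [[rng.randint(0, 1) for _ in range(2 ** k_inputs)]
              for _ in range(n_nodes)]
    return inputs, tables

def step(state, inputs, tables):
    """Synchronously update every node from the current on/off state."""
    new_state = []
    for node_inputs, table in zip(inputs, tables):
        index = 0
        for src in node_inputs:
            index = (index << 1) | state[src]
        new_state.append(table[index])
    return tuple(new_state)

def find_attractors(n_nodes, inputs, tables):
    """Follow all 2**N states; return {attractor (frozenset of states): basin size}."""
    attractor_of = {}
    basins = {}
    for code in range(2 ** n_nodes):
        state = tuple((code >> i) & 1 for i in range(n_nodes))
        path, position = [], {}
        # Walk forward until we hit a known state or close a new cycle.
        while state not in attractor_of and state not in position:
            position[state] = len(path)
            path.append(state)
            state = step(state, inputs, tables)
        if state in attractor_of:
            attractor = attractor_of[state]
        else:
            attractor = frozenset(path[position[state]:])
        for visited in path:
            attractor_of[visited] = attractor
        basins[attractor] = basins.get(attractor, 0) + len(path)
    return basins

n = 10
inputs, tables = random_boolean_network(n, k_inputs=2, seed=0)
basins = find_attractors(n, inputs, tables)
print(f"{2 ** n} states, {len(basins)} attractors")
```

Exhaustive enumeration is only practical for small N; it is used here because it makes the basin of every attractor explicit.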

Kauffman’s theoretical finding gave insight into how gene networks could operate. If each gene was taken as a node, and the network was approximated as Boolean (i.e. each gene was simply on or off), there would be \( 2^{20,000} \) possible states. However, there would only be \( \sim \sqrt{20,000} \) or 141 stable states, a not unrealistic number of cell types in an organism. Thus, Kauffman’s work led to a critical new insight: the stable states of a network, the attractor states, could be associated with the phenotypes of cells. If validated, this would stand as a basic principle in theoretical biology based on the mathematical properties of networks.
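The arithmetic behind these figures is easy to check. The short sketch below works in base-10 logarithms, because \( 2^{20,000} \) is far too large for floating-point numbers; the function name is a hypothetical helper, not part of the theory.

```python
import math

def attractor_estimate(n_nodes):
    """Return (sqrt(N) attractor estimate, log10 of 2**N states, log10 of their ratio)."""
    n_attractors = math.sqrt(n_nodes)
    log10_states = n_nodes * math.log10(2)
    log10_fraction = math.log10(n_attractors) - log10_states
    return n_attractors, log10_states, log10_fraction

for n in (25, 20000):
    attractors, log10_states, log10_fraction = attractor_estimate(n)
    print(f"N = {n}: ~{attractors:.0f} attractors, "
          f"about 10^{log10_states:.0f} states, fraction ~ 10^{log10_fraction:.1f}")
```

For N = 25 the fraction is 5 / 33,554,432, or about 149 parts per billion, matching the ~150 quoted above. For N = 20,000 the state count has more than six thousand digits, while the attractor estimate stays near 141.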

The empirical demonstration of Kauffman’s theory had to await the advent of omic technology where thousands of genes could be measured simultaneously. In 2007, Huang and colleagues provided compelling evidence that changes in gene expression could be modeled as changes in the gene network from one stable attractor state to another [33]. The initial and final gene expression patterns, each associated with a distinct cell type, were stable, but the intermediate gene changes between the two phenotypes were dynamic and followed a precise mathematical pattern of change. Below, we utilize the same mathematical pattern to theoretically model acute cell injury.

These ideas were a critical advance towards quantitatively abstracting biological systems. Cell phenotypes correspond to gene network attractor states. This thinking accounts for two levels of biological action simultaneously: (1) the level of the gene network and its potentially very complex molecular interactions, and (2) the level of the phenotype which represents the net action of the underlying molecular network. In physics, this is sometimes called a “dual” representation, where a problem that may be overwhelmingly complex in one representation is considerably simplified in the other representation [34]. Phenotypes and genotypes have been linked since Mendel. The view above cracks the barrier to precisely quantifying what has until now been treated in qualitative terms. Our theory of acute cell injury is grounded in this quantitative network view that links the gene network and cell phenotype.

3 Part 2: A Theory of Acute Cell Injury

3.1 Introduction to the Theory

We have presented our theory in detail elsewhere [35] and so here summarize salient points. We begin with a qualitative heuristic, and then present the autonomous form of the theory, where “autonomous” is a technical mathematical term that means time, t, is absent from the right hand side of a differential equation [31]. We briefly review the solutions of the autonomous theory. We then discuss three possible technological directions suggested by the theory:

1. A non-autonomous version of the theory allows for sequential injuries. This simulates preconditioning and other clinically relevant conditions. However, this result has much broader significance by indicating that therapy in general is synonymous with sublethal injury.

2. To develop a quantitative approach to prognostication of acute cell injury, such as stroke, we outline an externally perturbed spatial model on a 3D connected lattice.

3. Based on the previous two discussions, we describe a possible quantitative approach that would use precisely targeted radiation to effect neuroprotection.

The “take home message” of our presentation is that the main advantage of a theory-driven biomedicine is that the theory provides a clear, stepwise roadmap from the science of acute cell injury to therapeutic technology.

3.2 Qualitative Description of the Theory

The theory is an idealization of what happens when a single cell is acutely injured. Three features of cell injury are abstracted as continuous mathematical quantities: (1) the intensity of the injury, I, (2) the total amount of cell damage, D, and (3) the total amount of all stress responses induced by the injured cell, S. The theory addresses how D and S change over time, t, as a function of I. How to characterize the specific injury and specific cell type naturally emerges as we proceed.

Imagine a generic cell acutely injured by a generic injury mechanism with intensity, I. This will activate many simultaneous damage and stress response pathways. The sum of all damage at any instant is D. The sum of all activated stress responses at any instant is S. By definition, D and S are mutually antagonistic. The function of stress responses is to combat damage, but the damage products can inhibit or destroy the stress responses. Therefore, D and S “battle”, and the level of each changes over time. The “battle” concludes with only one of two possible outcomes: D > S or S > D (the special case of D = S is discussed below). If D > S, damage wins out over the stress responses and, unable to overcome the damage, the cell dies. If S > D, then stress responses win, the cell repairs itself and survives.

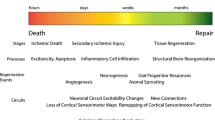

We can summarize the qualitative idea with a circuit diagram (Fig. 3.1). The core of the diagram is the mutual antagonism of D and S. D is positively driven, and S is negatively driven, by I. This is intuitive: the stronger an injury (the higher the value of I), the more damage it will produce, and the less the cell will be able to respond effectively to the injury.

Tying into the general ideas discussed above, our theory recognizes an uninjured cell as a stable phenotype generated by a stable pattern of gene expression. Application of an acute injury is an extrinsic perturbation (of intensity I) to the system. The genetic changes associated with cell injury are explained as a deviation from the stable gene network state into a series of unstable states. The instability of the gene network resolves itself either by returning to its original stable state, or by becoming so unstable that the system can no longer maintain integrity and so disintegrates, i.e. dies.

In addition to the gene network, we posit that the damage pathways generated by acute injury also act as a network [19]. The various damage pathways do not act independently of each other. Instead, each damage pathway has a point or points of contact with others that link them into a unified network. Hence, D, the damage network that seeks to destroy the cell, interacts with S, the gene network that seeks to maintain homeostasis. Thus, we need only consider the net action of the two competing networks and not concern ourselves with the details of the specific pathways that instantiate the networks.

3.3 The Autonomous Theory

The above qualitative picture becomes a mathematical theory via the following four postulates:

1. D and S exist.

2. The mutual antagonism of D and S follows S-shaped curves (Hill functions).

3. D and S are exponentially driven by I and −I, respectively.

4. Decay from the attractor state, (D*, S*), is a function of |D* − S*|.

Postulate 1 was justified in the previous section. Postulate 2 is expressed by Eq. (3.1), a system of autonomous ordinary differential equations that is well-known [36] to model two mutually antagonistic factors.

Equation (3.1) specifies that the net rate of change of D or S equals the rate of formation minus the rate of decay. The rate of formation is given by Hill functions with a Hill coefficient n, threshold Θ, and velocity v. \( \Theta_D \) is the value of D at a 50% decrease in S. \( \Theta_S \) is the value of S at a 50% decrease in D (Fig. 3.2). The mutual inhibition is captured by the inverse relationships \( dD/dt \propto 1/S^n \) and \( dS/dt \propto 1/D^n \). Eq. (3.1) treats the decay rate as first order, with decay constants \( k_D \) and \( k_S \).
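As a hedged sketch, a standard mutual-inhibition system consistent with this verbal description (Hill-type formation inhibited by the opposing variable, minus first-order decay) can be written as follows. The placement of each threshold in the formation term of its own variable is an assumption, made so that raising \( \Theta_D \) raises the formation of D, as Postulate 3 requires.

\[
\frac{dD}{dt} = v_D \, \frac{\Theta_D^{\,n}}{\Theta_D^{\,n} + S^{n}} - k_D D,
\qquad
\frac{dS}{dt} = v_S \, \frac{\Theta_S^{\,n}}{\Theta_S^{\,n} + D^{n}} - k_S S
\]

In this form the formation rate of D falls as \( 1/S^n \) once S is large compared with \( \Theta_D \), and likewise for S with respect to D.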

Postulate 3 is expressed by having the thresholds change as a function of injury intensity, I. The minimal assumption is an exponential relationship. The threshold of D, \( \Theta_D \), is proportional to \( I e^{I} \). The threshold of S goes as \( I e^{-I} \). To convert to equality, multiplier (c) and exponential (λ) proportionality constants are required:
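Reading the proportionalities just stated together with the two kinds of constants, the threshold relations of Eq. (3.2) would take the following form. This is a reconstruction from the surrounding text, with subscripts D and S marking the constants that belong to each threshold.

\[
\Theta_D = c_D \, I \, e^{\lambda_D I},
\qquad
\Theta_S = c_S \, I \, e^{-\lambda_S I}
\]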

The specific injury and the specific cell type naturally emerge from Eq. (3.2) via the proportionality constants [15]. \( (c_D, \lambda_D) \) quantify the lethality of the injury mechanism, analogous to how LD50 quantifies the lethality of a substance. Larger values of \( (c_D, \lambda_D) \) mean an injury mechanism is more lethal than one with lower values. \( (c_S, \lambda_S) \) quantify the strength of a cell’s intrinsic stress responses. Larger \( c_S \) and smaller \( \lambda_S \) correspond to stronger stress responses. Thus, every possible acute injury mechanism and every specific cell type can, in principle, be given numerical values for \( (c_D, \lambda_D) \) and \( (c_S, \lambda_S) \), respectively. This step is analogous to how an apple, cannon ball, or planet is abstracted to its numerical mass, which eliminates the qualitative distinctions. Similarly, specific qualitative injuries such as ischemia, head trauma, or poisoning will each have distinct values of \( (c_D, \lambda_D) \). Specific cell types, such as neurons, myocytes, or endothelial cells will each have distinct values of \( (c_S, \lambda_S) \).

Substituting Eq. (3.2) into Eq. (3.1) gives the autonomous version of the nonlinear dynamical theory of acute cell injury:
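As a sketch under stated assumptions, take the thresholds as \( \Theta_D = c_D I e^{\lambda_D I} \) and \( \Theta_S = c_S I e^{-\lambda_S I} \), and the mutual-inhibition system as Hill-type formation minus first-order decay. The substitution then gives a system of the following form. The exact arrangement of terms is a reconstruction from the verbal description, not a quotation of Eq. (3.3).

\[
\frac{dD}{dt} = v_D \, \frac{\left(c_D I e^{\lambda_D I}\right)^{n}}{\left(c_D I e^{\lambda_D I}\right)^{n} + S^{n}} - k_D D,
\qquad
\frac{dS}{dt} = v_S \, \frac{\left(c_S I e^{-\lambda_S I}\right)^{n}}{\left(c_S I e^{-\lambda_S I}\right)^{n} + D^{n}} - k_S S
\]

Time does not appear explicitly on the right-hand side, which is what makes the system autonomous.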

The autonomous theory is based on the first three postulates. Postulate 4 is introduced after studying the solutions to Eq. (3.3). By studying the solutions to Eq. (3.3) and giving them biological interpretations, the theory provides a universal understanding of acute cell injury dynamics.

3.4 Solutions of the Autonomous Theory

Equation (3.3) is solved by Runge-Kutta numerical methods [32] that apply the input parameters to Eq. (3.3) and output the solution as a phase plane containing all possible trajectories. By varying I as the control parameter, the theory can model an injury system over a range of injury intensities. A single trajectory starts at an initial condition \( (D_0, S_0) \). Each trajectory converts to a pair of covarying D and S time courses. The time course pairs converge to some steady state. This steady state is called a fixed point, which, by definition, is where the rates of all variables simultaneously equal zero. Two types of fixed points, notated (D*, S*), are relevant to our theory: attractors and repellers. Trajectories converge to attractors and diverge from repellers [31]. Plotting fixed points vs. the control parameter is called a bifurcation diagram and shows quantitative and qualitative changes in fixed points. A qualitative change in fixed points is called a bifurcation.
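The numerical procedure just described can be sketched with a hand-written classical Runge-Kutta (RK4) integrator. Everything specific here is an assumption for illustration: the functional form of the right-hand side (Hill-type formation minus first-order decay with v = k = 1), the Hill coefficient n = 4, the parameter values, the step size, and the function names.

```python
import math

def thresholds(I, c_D, lam_D, c_S, lam_S):
    """Assumed thresholds: Theta_D rises and Theta_S falls exponentially with I (I > 0)."""
    return c_D * I * math.exp(lam_D * I), c_S * I * math.exp(-lam_S * I)

def rates(D, S, theta_D, theta_S, n):
    """Assumed right-hand side: Hill-type formation minus first-order decay (v = k = 1)."""
    dD = theta_D**n / (theta_D**n + S**n) - D
    dS = theta_S**n / (theta_S**n + D**n) - S
    return dD, dS

def rk4_trajectory(D0, S0, theta_D, theta_S, n=4, dt=0.05, t_max=60.0):
    """Classical fourth-order Runge-Kutta; returns the list of (t, D, S) points."""
    D, S = D0, S0
    path = [(0.0, D, S)]
    n_steps = int(round(t_max / dt))
    for i in range(1, n_steps + 1):
        a1, b1 = rates(D, S, theta_D, theta_S, n)
        a2, b2 = rates(D + 0.5 * dt * a1, S + 0.5 * dt * b1, theta_D, theta_S, n)
        a3, b3 = rates(D + 0.5 * dt * a2, S + 0.5 * dt * b2, theta_D, theta_S, n)
        a4, b4 = rates(D + dt * a3, S + dt * b3, theta_D, theta_S, n)
        D += dt * (a1 + 2 * a2 + 2 * a3 + a4) / 6.0
        S += dt * (b1 + 2 * b2 + 2 * b3 + b4) / 6.0
        path.append((i * dt, D, S))
    return path

# Hypothetical parameters (c_D, lam_D, c_S, lam_S), chosen only for illustration.
params = (0.1, 0.1, 0.4, 0.1)
for I in (3.0, 10.0):
    theta_D, theta_S = thresholds(I, *params)
    _, D_star, S_star = rk4_trajectory(0.0, 0.0, theta_D, theta_S)[-1]
    outcome = "recovers" if S_star > D_star else "dies"
    print(f"I = {I}: D* = {D_star:.3f}, S* = {S_star:.3f}, cell {outcome}")
```

The last point of each trajectory approximates the attractor (D*, S*) reached from the uninjured state (0, 0). Repeating the run over a range of I and plotting D* and S* against I would give a bifurcation diagram for this sketch model.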

Above we said if D > S, the cell dies, but if S > D the cell recovers. In the solutions to Eq. (3.3), outcome is determined by the D and S values of the attractors. If S* > D*, the cell recovers. If D* > S*, the cell dies. We demonstrated for all numerical combinations of \( (c_D, \lambda_D, c_S, \lambda_S, I) \), that Eq. (3.3) outputs only four types of bifurcation diagrams [35]. Here we discuss two of the bifurcation diagram types to illustrate our main theoretical findings.

3.5 Monostable Outcome

We now explain how the theory mathematically defines cell death, illustrated by the bifurcation diagrams. Figure 3.3, panel 1, shows a bifurcation diagram plotting D* vs. I (red) and S* vs. I (green). As I increases continuously, at each I the phase plane is monostable, containing only one attractor. Four phase planes are shown (at values of I indicated by orange dashed lines on bifurcation diagrams). Trajectories from \( (D_0, S_0) \) = (0, 0) either recover (green) or die (red). Initial conditions (0, 0) correspond to the uninjured state, or “the control condition”.

Fig. 3.3 A monostable bifurcation diagram describes the case where there is only one outcome at each value of injury intensity, I. The phase planes in panels 2–5 are marked by orange dashed lines on the bifurcation diagram in panel 1. The attractor states are indicated by green (S* > D*) or red (D* > S*) circles on each phase plane.

The special case of D = S occurs when the bifurcation curves cross at D* = S* (Fig. 3.3, panel 1, arrow). This crossing occurs at a specific value of I termed \( I_X \), the tipping-point value of I. \( I_X \) is intuitively understood as the “cell death threshold”. Technically, \( I_X \) is not a threshold (thresholds are illustrated in Fig. 3.2). \( I_X \) is the tipping point value of injury intensity, I, defined as that value of I where D* = S*. For \( I < I_X \), S* > D* and the cell recovers. For \( I > I_X \), D* > S*, and the cell dies.

At D* = S*, \( \Theta_D = \Theta_S \), and Eq. (3.2) can be solved to calculate \( I_X \):
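The calculation can be sketched in a few steps, assuming the thresholds take the form \( \Theta_D = c_D I e^{\lambda_D I} \) and \( \Theta_S = c_S I e^{-\lambda_S I} \) implied by Postulate 3. Setting the two thresholds equal at \( I = I_X > 0 \) and cancelling the common factor \( I_X \) gives:

\[
c_D \, e^{\lambda_D I_X} = c_S \, e^{-\lambda_S I_X}
\;\;\Longrightarrow\;\;
e^{(\lambda_D + \lambda_S) I_X} = \frac{c_S}{c_D}
\;\;\Longrightarrow\;\;
I_X = \frac{\ln\left(c_S / c_D\right)}{\lambda_D + \lambda_S}
\]

Under these assumptions a positive tipping point requires \( c_S > c_D \).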

This is a rather amazing result in the context of descriptive biomedicine. Given the parameters for any injury mechanism \( (c_D, \lambda_D) \) and any cell type \( (c_S, \lambda_S) \), we can, in principle, calculate beforehand the “cell death threshold” or tipping point intensity, \( I_X \), for that combination. Additionally, Eq. (3.4) makes explicit that the “cell death threshold”, \( I_X \), is a function of both the injury mechanism and cell type. That means different cell types in a tissue (e.g. cortical vs. hippocampal neurons in brain) will die at different values of injury intensity. This is, at best, only intuitively understood in descriptive biomedicine. Our theory derives it as a mathematical fact.
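To illustrate how one injury mechanism can yield different tipping points in different cell types, here is a minimal sketch. It assumes the closed form \( I_X = \ln(c_S/c_D)/(\lambda_D + \lambda_S) \), which follows if the thresholds are \( c_D I e^{\lambda_D I} \) and \( c_S I e^{-\lambda_S I} \). The parameter values and the cell type labels are hypothetical and not measured quantities.

```python
import math

def tipping_point(c_D, lam_D, c_S, lam_S):
    """Injury intensity I_X at which the assumed thresholds Theta_D and Theta_S are equal."""
    if c_S <= c_D:
        raise ValueError("a positive tipping point requires c_S > c_D")
    return math.log(c_S / c_D) / (lam_D + lam_S)

# Hypothetical injury mechanism (c_D, lam_D) applied to two hypothetical cell types.
injury = (0.1, 0.1)
cell_types = {
    "cell type A (stronger stress response)": (0.4, 0.1),  # larger c_S, smaller lam_S
    "cell type B (weaker stress response)": (0.2, 0.3),
}
for name, (c_S, lam_S) in cell_types.items():
    I_X = tipping_point(*injury, c_S, lam_S)
    print(f"{name}: I_X = {I_X:.3f}")
```

With these illustrative numbers the cell type with the larger \( c_S \) and smaller \( \lambda_S \) tolerates a higher injury intensity before tipping toward death.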

The monostable case in Fig. 3.3 matches the general intuition that a cell will survive injury intensities less than the “cell death threshold” (\( I_X \)), but die if injury intensity is greater than \( I_X \).

3.6 Bistable Outcome

We now state the most important feature of the autonomous theory: For some parameter sets, both a survival and a death attractor are present on the same phase plane. The scenario of two attractors on a phase plane is called bistability [31, 32]. This result is counterintuitive. The monostable case corresponds to our intuition that a single injury intensity leads to either a survival or a death outcome. Our theoretical investigation demonstrates that some injury magnitudes are bistable and have both a survival and a death outcome.

Bistability is illustrated in Fig. 3.4, panel 1. As I increases, there is a value of I at which the phase planes transform from having one attractor to having two attractors and one repeller. The system is said to bifurcate at this value of I. The system is bistable for a range of I (yellow region) and then bifurcates again to monostable. The value of \( I_X \) falls exactly in the middle of the bistable range of I values.
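One simple way to probe for bistability numerically is to integrate from several initial conditions and count the distinct end points. The sketch below does this for an assumed form of the model (Hill-type mutual inhibition with v = k = 1, n = 4, and thresholds \( c_D I e^{\lambda_D I} \) and \( c_S I e^{-\lambda_S I} \)). The parameter values are hypothetical, four starting points are a probe rather than a proof, and the sketch is not claimed to reproduce Fig. 3.4.

```python
import math

def thresholds(I, c_D, lam_D, c_S, lam_S):
    """Assumed thresholds as exponential functions of injury intensity I (I > 0)."""
    return c_D * I * math.exp(lam_D * I), c_S * I * math.exp(-lam_S * I)

def integrate(D, S, theta_D, theta_S, n=4, dt=0.05, t_max=60.0):
    """RK4 integration of the assumed mutual-inhibition system; returns the end point."""
    def f(D, S):
        return (theta_D**n / (theta_D**n + S**n) - D,
                theta_S**n / (theta_S**n + D**n) - S)
    for _ in range(int(round(t_max / dt))):
        a1, b1 = f(D, S)
        a2, b2 = f(D + 0.5 * dt * a1, S + 0.5 * dt * b1)
        a3, b3 = f(D + 0.5 * dt * a2, S + 0.5 * dt * b2)
        a4, b4 = f(D + dt * a3, S + dt * b3)
        D += dt * (a1 + 2 * a2 + 2 * a3 + a4) / 6.0
        S += dt * (b1 + 2 * b2 + 2 * b3 + b4) / 6.0
    return D, S

def attractors_at(I, params, starts=((0, 0), (1, 0), (0, 1), (1, 1)), tol=1e-3):
    """Distinct end points reached from a few corner initial conditions."""
    theta_D, theta_S = thresholds(I, *params)
    found = []
    for D0, S0 in starts:
        D, S = integrate(float(D0), float(S0), theta_D, theta_S)
        if not any(abs(D - d) < tol and abs(S - s) < tol for d, s in found):
            found.append((D, S))
    return found

# Hypothetical parameters (c_D, lam_D, c_S, lam_S); I_X = ln(2)/0.2, about 3.47.
params = (0.1, 0.1, 0.2, 0.1)
for I in (4.0, 30.0):
    points = attractors_at(I, params)
    label = "bistable" if len(points) == 2 else "monostable"
    print(f"I = {I}: {len(points)} attractor(s) found, {label}")
```

At the lower intensity the probe finds both a recovery attractor (S* > D*) and a death attractor (D* > S*); at the higher intensity every start ends at the same death attractor.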

The monostable bifurcation diagram (Fig. 3.3) displays only two dynamical states: (1) recovery for \( I < I_X \), and (2) death for \( I > I_X \). The bistable bifurcation diagram shows four dynamical states: (1) monostable recovery, (2) bistable recovery, (3) bistable death, and (4) monostable death.

3.7 Pre-treatment Therapies

The mathematical results described in the previous section provide a new, paradigm-transforming definition of therapy. In the monostable case, there is only one possible outcome at each value of injury intensity, either recovery for \( I < I_X \), or death if \( I > I_X \). No therapy is possible for injured cells with monostable dynamics because there is no attractor with S* > D* when \( I > I_X \). For the bistable bifurcation diagram, a range of injury intensities contain both the capacity to recover and to die. In other words, our theory demonstrates that bistability is required if we wish to take a system on a pro-death trajectory and convert it to a pro-survival trajectory.

For the autonomous theory, whether a trajectory leads to recovery or death depends on initial conditions \( (D_0, S_0) \). Altering initial conditions corresponds to a pre-treatment therapy. The effect of altering initial conditions was extensively studied [15], so only salient points are outlined here. When \( D_0 < 0 \) (\( D_0 \) is a negative number), this represents pre-administering an agent (drug) that inhibits damage. Starting at \( S_0 > 0 \) (\( S_0 \) is a positive number) corresponds to a manipulation that activates stress responses prior to inducing injury, for example, by transfecting a protective gene (e.g. heat shock protein 70).

For the bistable death range, all trajectories from \( (D_0, S_0) \) = (0, 0) will die. But if initial conditions \( D_0 < 0 \) or \( S_0 > 0 \), then the system may follow a trajectory to the recovery attractor (Fig. 3.4, panel 4, green trajectory). Thus, the theory clearly indicates that the ability to convert a cell on a pro-death trajectory to a pro-survival trajectory is due to the bistable dynamics of the system.
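The effect of a pre-treatment can be sketched by changing only the initial conditions at a fixed injury intensity. The code below assumes a Hill-type mutual-inhibition form of the model (v = k = 1, n = 4) with thresholds \( c_D I e^{\lambda_D I} \) and \( c_S I e^{-\lambda_S I} \). The parameter values, the intensity I = 4, and the pre-activation level \( S_0 = 0.8 \) are hypothetical choices for illustration.

```python
import math

def thresholds(I, c_D, lam_D, c_S, lam_S):
    """Assumed thresholds as exponential functions of injury intensity I (I > 0)."""
    return c_D * I * math.exp(lam_D * I), c_S * I * math.exp(-lam_S * I)

def integrate(D, S, theta_D, theta_S, n=4, dt=0.05, t_max=60.0):
    """RK4 integration of the assumed mutual-inhibition system; returns the end point."""
    def f(D, S):
        return (theta_D**n / (theta_D**n + S**n) - D,
                theta_S**n / (theta_S**n + D**n) - S)
    for _ in range(int(round(t_max / dt))):
        a1, b1 = f(D, S)
        a2, b2 = f(D + 0.5 * dt * a1, S + 0.5 * dt * b1)
        a3, b3 = f(D + 0.5 * dt * a2, S + 0.5 * dt * b2)
        a4, b4 = f(D + dt * a3, S + dt * b3)
        D += dt * (a1 + 2 * a2 + 2 * a3 + a4) / 6.0
        S += dt * (b1 + 2 * b2 + 2 * b3 + b4) / 6.0
    return D, S

def outcome(D0, S0, I, params):
    """Return 'recovery' if the end point has S* > D*, otherwise 'death'."""
    theta_D, theta_S = thresholds(I, *params)
    D_star, S_star = integrate(D0, S0, theta_D, theta_S)
    return "recovery" if S_star > D_star else "death"

# Hypothetical parameters (c_D, lam_D, c_S, lam_S); I_X = ln(2)/0.2, about 3.47.
params = (0.1, 0.1, 0.2, 0.1)
I = 4.0  # above I_X, inside the bistable range of this sketch model

print("untreated, (D0, S0) = (0, 0):    ", outcome(0.0, 0.0, I, params))
print("pre-treated, (D0, S0) = (0, 0.8):", outcome(0.0, 0.8, I, params))
```

In this sketch the untreated cell ends at the death attractor, while the same injury applied to a cell with pre-activated stress responses ends at the recovery attractor.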

This is truly a paradigm-transforming insight. It mathematically expresses the intuition that some injury magnitudes that are lethal if untreated can be reversed by therapy. Such insight is, at best, only intuitively apprehended using the prevalent qualitative molecular pathways approach and certainly cannot be calculated. Our theory defines therapeutics mathematically and calculates the range of lethal injury intensities that can be recovered by any possible therapy.

However, a limitation of the autonomous version is that it cannot model a post-injury treatment. The need to model a post-injury treatment provided major motivation to develop the non-autonomous theory, which we describe for the first time below. Before giving the new theory, we turn attention to the main limitation of the autonomous version.

3.8 Closed Trajectories and the Autonomous Theory

The solutions to Eq. (3.3) capture only half of what happens to an acutely injured cell. Acute injury displaces the cell from its stable phenotype through a continuous series of unstable states. The attractor solutions to Eq. (3.3) represent the maximum deviation from the stable state and specify whether outcome will be recovery (S* > D*) or death (D* > S*). However, the cell must “decay” from maximum instability either to its initial phenotype (recover) or cease to exist (death). We developed [35] a stop-gap solution to overcome this limitation which is expressed by Postulate 4: decay from the attractor state, (D*, S*), is a function of |D* − S*|.

We noted that, from the perspective of the theory, both recovery and death are represented when D = S = 0. Recovery is the state where there is no longer any damage (D = 0) and stress responses are no longer expressed (S = 0). Death is the complete disappearance of the cell, and hence all of its variables, including D and S, will equal zero.

Thus, we asked: what determines how long it takes (the decay time, \( \tau_D \)) for the cell to go from the attractor (D*, S*) back to (D, S) = (0, 0)? If D* is much greater than S* (D* ≫ S*), damage is so great the cell will die quickly (\( \tau_D \) is short), which is a condition widely recognized as necrosis. If S* ≫ D*, stress responses overwhelm the small amount of damage, and the cell recovers quickly (\( \tau_D \) is short). If D* is only slightly larger than S*, it will take a longer time for damage to overcome the stress responses (\( \tau_D \) is long), and vice versa if S* is only slightly larger than D*. We thus noticed the importance of the magnitude |D* − S*|. The decay time, \( \tau_D \), from the attractor state (D*, S*) is an inverse function of |D* − S*|.

The simplest assumption was an exponential decay of the cell from the attractor to (D, S) = (0, 0):
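A minimal form consistent with Postulate 4 is sketched here. It assumes the decay rate constant is exactly |D* − S*| with no additional scaling factor, which is an assumption made for illustration; the label (3.5) is used only to match the numbering in the text:

```latex
\begin{equation}
D(t) = D^{*}\, e^{-\left|D^{*} - S^{*}\right| t}, \qquad
S(t) = S^{*}\, e^{-\left|D^{*} - S^{*}\right| t}
\tag{3.5}
\end{equation}
```

Under this form the characteristic decay time is 1/|D* − S*|, so a small difference between D* and S* gives a slow decay and a large difference gives a fast one, in line with the reasoning above.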

We then concatenated solutions from Eq. (3.5) to those of Eq. (3.3) to obtain closed trajectories (Fig. 3.5). In Fig. 3.5, the trajectory solution to Eq. (3.5) is overlaid on the phase plane solution to Eq. (3.3). Strictly speaking, Eqs. (3.3) and (3.5) have different phase planes, but overlaying them makes clear the need for a closed-loop trajectory on the phase plane to fully model the temporal progression of acute cell injury and recovery or death.

This line of questioning showed that the empirically observed facts of rapid (necrotic) and delayed death after injury emerged naturally from our theory. Delayed neuronal death (DND) occurs in hippocampal CA1 after global ischemia [37] and in penumbra after stroke [38]. Many molecular pathways have been advanced to explain DND such as apoptosis, aponecrosis, protein aggregation, and so on [39–42]. However, the main feature of DND is time, the quintessential dynamical quantity. The theory indicates that if |D* − S*| is small, the cell will take a long time to die. Thus, delayed death after injury is a consequence of the injury dynamics. Biological factors are not causative, but instead merely mediate what is intrinsically a dynamical effect.

However, even though the theory could address rapid and delayed death after injury, the need to concatenate equations was artificial. Thus, an important motivation behind the non-autonomous theory was to develop a mathematical framework that could automatically calculate closed loop trajectories that model the full sequence of injury and recovery or death. Solving this problem automatically solved the post-injury treatment issue, as we now discuss.

4 Technological Applications

We now describe possible technological applications of the dynamical theory of acute cell injury. These require modifications of the autonomous formulation. We discuss: (1) multiple sequential injuries, (2) a three-dimensional version of the theory to model tissue injury, and (3) a possible neuroprotective technology based on the first two results.

4.1 Approaches to Therapy

The autonomous theory, Eq. (3.3), can only deal with pre-treatment by altering the initial conditions. In clinical practice, injuries need to be treated after they occur, necessitating post-injury therapy. The key to achieving this result is the realization that therapy is necessarily a sublethal injury. However, therapy is generally not thought of as such in typical biomedical research. Instead, there are diverse approaches to defining therapy. Typical notions of therapy include: (1) inhibiting damage mechanisms induced by injury [43], (2) bolstering stress responses (including the immune system) to allow injured tissue to repair itself [44], (3) some combination of 1 and 2 (e.g. multiple drug treatments) [45, 46], and (4) treatments designed to decrease the intensity or duration of the injury [47]. Our theory clearly demarcates control of injury intensity, I, from control of D and S.

4.2 Ascertaining Injury Intensity

In clinical practice, controlling injury intensity, I, is often not an option. For a given injury mechanism (ischemia, trauma, poisoning), a large range of continuous values of injury intensity, I, can present clinically. The clinical problem is therefore to estimate the magnitude of I (e.g. duration of ischemia, force of trauma, concentration of poison) and to determine if an intervention is possible, e.g. tPA or surgery for stroke [48]. It is also important to know the duration from time of injury to clinical presentation (e.g. the 3 h time window for stroke).

Bifurcation diagrams (Figs. 3.3 and 3.4) provide a systematic framework for estimating injury intensity, I. A given injury will correspond to a specific value of I and its accompanying phase plane. The phase plane provides time courses from different initial conditions, one of which will describe the injury evolution over time. Thus, in principle, it is possible to achieve engineering levels of prognosticative precision by using our theory.

4.3 Protective Therapeutics

The most common view of post-injury therapy is that there exists a “silver bullet” treatment (pharmacologic or otherwise) to stop cell death [49]. We note this notion fails completely to account for the range of injury intensities that are possible for a given acute injury. In the context of stroke, such a treatment to stop neuron death is called neuroprotection. It is almost universally agreed that a drug will specifically target the molecular mechanism that causes cell death. This definition of therapy presumes a detailed understanding of the biological specifics of the injury and the drug action. We assert that this view is wholly incorrect.

Instead, our theory clearly indicates that a drug is a nonspecific form of injury. If given at high enough concentration (≫LD50), a drug is lethal. This is well-known. Ideas of “on-target” and “off-target” effects are arbitrary distinctions that reflect only our ignorance of the total biological action of a drug [17]. For example, how many neuroprotectants were thought to have “specific” actions inhibiting a damage pathway but later discovered to simply lower temperature? In the most general terms, any drug will interfere with normal cell function and act as an injury mechanism. At low concentrations (low values of I), it is sublethal. At high concentrations (high values of I), it is lethal.

We also note that the logic of “specific targets” has proven elusive with the two most neuroprotective stroke therapies: hypothermia and pre-conditioning. Pre-conditioning is, by definition, sublethal injury. Hypothermia too is an injury mechanism. If the intensity of hypothermia is too large (e.g. temperature is reduced too much), the system will die. Therapeutic hypothermia is the application of a sublethal dose (intensity) of hypothermia.

Therefore, through the lens of our theory, all possible therapies fall into only two categories: (1) efforts to reduce injury intensity, which have limited practicality when an injury has already occurred prior to clinical presentation, and (2) sublethal injuries whose main effect is to alter the trajectories of D and S. This understanding provides very strong motivation to reformulate our theory so that we can apply sequential injuries over time. The solution to this problem is the non-autonomous version of the theory.

4.4 The Non-autonomous Dynamical Theory of Acute Cell Injury

The non-autonomous theory builds stepwise on the autonomous theory. The autonomous theory treats the threshold parameters, Θ, in Eq. (3.2) as functions of injury intensity, I, but treats the v and k parameters as constants. The non-autonomous theory assigns functions to the v and k parameters. These are captured by modifying postulate 4 and adding a new postulate 5:

4. The decay parameter, k, is a function of the instantaneous value of |D − S|.

5. The velocity parameter, v, decays exponentially with time: v ∝ e^(−t).

With regard to new postulate 4, the importance of the term |D* − S*| for realistic injury dynamics (e.g. necrosis vs. DND) was discussed above. Instead of taking |D − S| only at the attractor, we set the decay parameter k for both D and S equal to the instantaneous value of |D − S| times a constant of proportionality c 2. The effect of |D − S| is augmented by multiplying it by time, t, causing the injury to decay faster than if k was taken only as a function of |D − S|:
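Written out from the description above, with no terms beyond those named in the text, the decay parameter would read as follows (the label (3.6) follows the numbering used later in the text):

```latex
\begin{equation}
k = c_{2}\, t\, \left| D - S \right|
\tag{3.6}
\end{equation}
```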

For postulate 5, recall that the velocity parameter, v, gives the rate of D and S formation in Eq. (3.1). Setting v = 1 (or any number) means D and S will continue to increase at a constant rate over time. This is physically unrealistic. We expect the rate of formation of both D and S to slow down with time after the injury. Of the possible functional forms, the simplest is an exponential decay:
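Using the symbols defined in the next paragraph, the exponential form described here can be written as follows (again labeled to match the text's numbering):

```latex
\begin{equation}
v = v_{0}\, e^{-c_{1} t}
\tag{3.7}
\end{equation}
```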

In Eq. (3.7), v 0 is the initial velocity of formation of both D and S, and c 1 is a time constant that specifies the rate of decay of velocity. The presence of time, t, in Eqs. (3.6) and (3.7) makes the theory non-autonomous.

Substituting Eqs. (3.6) and (3.7) into Eq. (3.3) gives the non-autonomous version of the dynamical theory of acute cell injury:
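A sketch of the resulting system is given here. It assumes that Eq. (3.3) has the mutual-inhibition Hill form of [35], with thresholds Θ_D and Θ_S and Hill coefficient n, and that the same v and k apply to both D and S. Eq. (3.3) is not reproduced in this section, so the exact form should be checked against the original:

```latex
\begin{equation}
\begin{aligned}
\frac{dD}{dt} &= v_{0}\, e^{-c_{1} t}\, \frac{\Theta_{D}^{\,n}}{\Theta_{D}^{\,n} + S^{n}} - c_{2}\, t\, \left| D - S \right| D \\
\frac{dS}{dt} &= v_{0}\, e^{-c_{1} t}\, \frac{\Theta_{S}^{\,n}}{\Theta_{S}^{\,n} + D^{n}} - c_{2}\, t\, \left| D - S \right| S
\end{aligned}
\tag{3.8}
\end{equation}
```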

4.5 Sequential Injuries

Equation (3.8) solves both limitations of the autonomous theory: (1) it generates closed trajectories that automatically return to (D, S) = (0, 0) and (2) it allows simulation of multiple injuries over time as, for example, with ischemic preconditioning. Injury 1 (at intensity, I 1) is applied at time zero, and injury 2 (at intensity, I 2) can be applied at any time thereafter. In general, the sequential injuries either interact or they do not interact. The non-interacting case is not realistic and is therefore discarded on the grounds that the second injury will necessarily interact with the first.
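The closed-trajectory behavior of a single injury can be explored numerically with a short sketch. It assumes the Hill-type mutual-inhibition form for the production terms and the threshold forms Θ_D = c_D·I·e^(I·λ_D) and Θ_S = c_S·I·e^(−I·λ_S); neither is reproduced in this section, so both are assumptions here. The function names, the fixed-step Runge-Kutta integrator, and the step size are illustrative choices, and the default parameter values are borrowed from the injury 1 column of the table in Sect. 4.6.2.

```python
import math

def thresholds(I, c_D, lam_D, c_S, lam_S):
    """Assumed threshold forms: Theta_D rises and Theta_S falls with I (I > 0)."""
    theta_D = c_D * I * math.exp(I * lam_D)
    theta_S = c_S * I * math.exp(-I * lam_S)
    return theta_D, theta_S

def rhs(t, D, S, th_D, th_S, n, v0, c1, c2):
    """Right-hand side of the assumed non-autonomous single-injury system."""
    v = v0 * math.exp(-c1 * t)      # velocity decays exponentially with time
    k = c2 * t * abs(D - S)         # decay scales with time and |D - S|
    dD = v * th_D**n / (th_D**n + S**n) - k * D
    dS = v * th_S**n / (th_S**n + D**n) - k * S
    return dD, dS

def simulate(I, c_D=0.1, lam_D=0.1, c_S=0.25, lam_S=0.9, n=4,
             v0=0.1, c1=0.1, c2=0.2, t_end=100.0, h=0.01):
    """Integrate from (D, S) = (0, 0) with classical fixed-step RK4."""
    th_D, th_S = thresholds(I, c_D, lam_D, c_S, lam_S)
    p = (th_D, th_S, n, v0, c1, c2)
    t, D, S = 0.0, 0.0, 0.0
    ts, Ds, Ss = [t], [D], [S]
    for step in range(int(round(t_end / h))):
        a1 = rhs(t, D, S, *p)
        a2 = rhs(t + h / 2, D + h / 2 * a1[0], S + h / 2 * a1[1], *p)
        a3 = rhs(t + h / 2, D + h / 2 * a2[0], S + h / 2 * a2[1], *p)
        a4 = rhs(t + h, D + h * a3[0], S + h * a3[1], *p)
        D += h / 6 * (a1[0] + 2 * a2[0] + 2 * a3[0] + a4[0])
        S += h / 6 * (a1[1] + 2 * a2[1] + 2 * a3[1] + a4[1])
        t = (step + 1) * h
        ts.append(t)
        Ds.append(D)
        Ss.append(S)
    return ts, Ds, Ss

if __name__ == "__main__":
    for I in (0.5, 1.0):            # one intensity below and one above 0.92
        ts, Ds, Ss = simulate(I)
        print(f"I={I}: peak D={max(Ds):.3f}, peak S={max(Ss):.3f}, "
              f"final D={Ds[-1]:.4f}, final S={Ss[-1]:.4f}")
```

Under these assumptions, D and S both rise from zero, one comes to dominate the other depending on which threshold is larger, and both then decline toward zero without any concatenation of separate equations.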

There are multiple ways to model how injuries 1 and 2 interact. We consider only one form of interaction here where D and S from the second injury (D 2, S 2) antagonize each other as well as D and S from the first injury (D 1, S 1). This formulation is based on the approach of Zhou and colleagues [50], is represented by the circuit diagram in Fig. 3.6, and gives rise to a system of four coupled nonlinear, non-autonomous differential equations:

In Eq. (3.9), v is as given in Eq. (3.7). The k parameter is modified to allow D and S to interact:

For Eqs. (3.9) and (3.10), the interaction of injury 1 and injury 2 is by addition of the values of D and S from each injury. Whether this is true or not must be empirically tested. For our purposes, it stands as an assumption. Our goal here is to study solutions of Eq. (3.9) and determine if they do or do not conform to what is already empirically established.
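One way to set up such a coupled system numerically is sketched here. It is an illustration under stated assumptions, not a transcription of Eqs. (3.9) and (3.10): production of each D_i is inhibited by the summed stress responses S_1 + S_2, production of each S_i is inhibited by the summed damage D_1 + D_2, each decay parameter uses |(D_1 + D_2) − (S_1 + S_2)|, and each injury keeps its own clock starting at its onset. The threshold forms, the `Injury` class, and the integrator are likewise hypothetical choices. The parameter values in the usage lines are those listed for the post-injury drug example in Sect. 4.6.2.

```python
import math
from dataclasses import dataclass

@dataclass
class Injury:
    I: float        # injury intensity (the dose, for a therapy)
    c_D: float
    lam_D: float
    c_S: float
    lam_S: float
    n: int          # Hill coefficient
    v0: float       # initial velocity
    c1: float       # velocity decay constant
    c2: float       # decay proportionality constant
    onset: float    # time at which this injury is applied

    def thetas(self):
        # Assumed threshold forms; they are not reproduced in this section.
        th_D = self.c_D * self.I * math.exp(self.I * self.lam_D)
        th_S = self.c_S * self.I * math.exp(-self.I * self.lam_S)
        return th_D, th_S

def derivs(t, y, injuries):
    """y = [D1, S1, D2, S2, ...]; injuries interact through summed totals."""
    D_tot, S_tot = sum(y[0::2]), sum(y[1::2])
    out = []
    for i, inj in enumerate(injuries):
        tau = t - inj.onset             # assumption: per-injury clock
        if tau < 0.0:                   # injury not yet applied
            out += [0.0, 0.0]
            continue
        th_D, th_S = inj.thetas()
        v = inj.v0 * math.exp(-inj.c1 * tau)
        k = inj.c2 * tau * abs(D_tot - S_tot)
        out.append(v * th_D**inj.n / (th_D**inj.n + S_tot**inj.n) - k * y[2 * i])
        out.append(v * th_S**inj.n / (th_S**inj.n + D_tot**inj.n) - k * y[2 * i + 1])
    return out

def run(injuries, t_end, h=0.01):
    """Fixed-step RK4; returns the time grid and the list of state vectors."""
    t, y = 0.0, [0.0] * (2 * len(injuries))
    ts, ys = [t], [y[:]]
    for step in range(int(round(t_end / h))):
        k1 = derivs(t, y, injuries)
        k2 = derivs(t + h / 2, [a + h / 2 * b for a, b in zip(y, k1)], injuries)
        k3 = derivs(t + h / 2, [a + h / 2 * b for a, b in zip(y, k2)], injuries)
        k4 = derivs(t + h, [a + h * b for a, b in zip(y, k3)], injuries)
        y = [a + h / 6 * (p + 2 * q + 2 * r + s)
             for a, p, q, r, s in zip(y, k1, k2, k3, k4)]
        t = (step + 1) * h
        ts.append(t)
        ys.append(y[:])
    return ts, ys

# Usage: a lethal main injury at t = 0 followed by a sublethal therapy at t = 0.9.
injury_1 = Injury(I=1.0, c_D=0.1, lam_D=0.1, c_S=0.25, lam_S=0.9,
                  n=4, v0=0.1, c1=0.1, c2=0.2, onset=0.0)
therapy = Injury(I=1.0, c_D=0.001, lam_D=0.01, c_S=0.25, lam_S=0.9,
                 n=4, v0=0.5, c1=1.0, c2=1.0, onset=0.9)
ts, ys = run([injury_1, therapy], t_end=100.0)
```

Because the coupling and clock conventions are assumptions, outcomes from this sketch need not match those reported for Eq. (3.9); it is meant only to show how four coupled, time-dependent equations with a delayed second injury can be organized.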

4.6 Solutions of the Multiple Injury Model

We present three examples of solutions to Eq. (3.9). Example 1 considers the case of preconditioning. Examples 2 and 3 simulate a post-injury drug treatment, where example 2 considers the effect of time of administration, and example 3 illustrates the effect of dose.

4.6.1 Preconditioning

Preconditioning is simulated by setting the parameters of injury 1 equal to those of injury 2, except for injury intensity, I. Equal values of (c D, λ D) and (c S, λ S) for both injuries mean applying the same injury mechanism to the same cell type. Injury 1 is sublethal (I 1 < I X) and injury 2 is lethal (I 2 > I X), thereby simulating the case where a sublethal injury precedes a lethal injury, which is the definition of ischemic preconditioning.

Injuries 1 and 2 increasingly interact as the time between them (Δt) decreases. When Δt = 250 h, injury 1 mostly runs its course and has little effect on lethal injury 2, and the system dies (Fig. 3.7a). When Δt = 72 h, the injuries interact, but not enough for injury 1 to salvage injury 2; D edges out S and the system dies (Fig. 3.7b). However, at Δt = 48 h, the excess total stress responses of injury 1 add, in a nonlinear fashion given by Eq. (3.9), to those of injury 2, allowing total stress responses to overcome total damage, and the system survives (Fig. 3.7c). Therefore, Eq. (3.9) effectively simulates preconditioning.

4.6.2 Post-injury Drug Treatment

By modifying the input parameters to Eq. (3.9), it can be used to model a post-injury therapy. In this case, injury 1 is the lethal injury, and injury 2 is the therapy, treated as a sublethal injury. The parameter sets used in the example are:

| INJURY 1 parameters | INJURY 2 parameters |
|---|---|
| c S1 = 0.25; λ S1 = 0.9; n 1 = 4 | c S2 = 0.25; λ S2 = 0.9; n 2 = 4 |
| c D1 = 0.1; λ D1 = 0.1 | c D2 = 0.001; λ D2 = 0.01 |
| I X = 0.92 | I X = 6.1 |
| v 0,1 = 0.1; c 1,1 = 0.1 | v 0,2 = 0.5; c 1,2 = 1 |
| c 2,1 = 0.2 | c 2,2 = 1 |

To model applying the injuries to the same cell type, the parameters (c S, λ S) are set equal for the two injuries. The Hill coefficients are, arbitrarily, also kept equal. We assume that a drug, as a form of injury, will be considerably weaker than the main injury (which might be ischemia, trauma, etc.). Therefore, for injury 2, c D is 1/100th and λ D is 1/10th that of injury 1. Eq. (3.4) gives the tipping point injury intensities: I X = 0.92 for injury 1 and I X = 6.1 for injury 2. Thus, the main injury is lethal if I 1 > 0.92, and the therapy is lethal if I 2 > 6.1. The velocity parameters, v 0 and c 1, and the decay parameter, c 2, control the overall rate of the injury. We assume that a drug acts more quickly, and so for injury 2 these parameters are 5×, 10×, and 5× those of injury 1, respectively. Two additional parameters are typically associated with a post-injury therapy. The time of administration is set by the time after injury 1 at which injury 2 is initiated. The dose of the therapy (e.g. drug dose) is set by the injury intensity parameter, I.
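The two tipping point values can be checked with a few lines of code. The sketch assumes the thresholds take the forms Θ_D = c_D·I·e^(I·λ_D) and Θ_S = c_S·I·e^(−I·λ_S) and that the tipping point is the intensity at which they are equal, which gives I_X = ln(c_S/c_D)/(λ_D + λ_S). Eq. (3.4) is not shown in this section, so this form is an assumption, although it does reproduce both values quoted above. The function name is hypothetical.

```python
import math

def tipping_point(c_D, lam_D, c_S, lam_S):
    """Intensity at which Theta_D equals Theta_S (assumed form of Eq. 3.4)."""
    return math.log(c_S / c_D) / (lam_D + lam_S)

# Parameter values taken from the table above.
I_X1 = tipping_point(c_D=0.1, lam_D=0.1, c_S=0.25, lam_S=0.9)
I_X2 = tipping_point(c_D=0.001, lam_D=0.01, c_S=0.25, lam_S=0.9)

print(round(I_X1, 2), round(I_X2, 1))  # prints: 0.92 6.1
```

Lowering c D and λ D for the drug pushes its tipping point far above that of the main injury, which is why a dose of I 2 = 1 remains well within the sublethal range.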

The results of running Eq. (3.9) with the above parameters are shown in Fig. 3.8. Figure 3.8a shows the main injury, injury 1, with lethal I = 1, and no treatment. The injury runs >90 % of its course over 100 time units. To precisely quantify whether the system survives or dies, we calculated the area under the D time course (A D), the cumulative total damage, and the S time course (A S), the cumulative total stress responses. We assert but do not attempt to justify here that A S > A D is the condition of survival, and A D > A S is the condition for death. When injury 1 with I = 1 is untreated, A D = 5.5 > A S = 3.5 and it dies.
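The area criterion can be applied to any sampled pair of time courses. The following sketch uses the trapezoid rule, which is an assumption about how the areas might be computed, and the function names are hypothetical. The text does not say how an exact tie is classified; here it is arbitrarily counted as death.

```python
def area(ts, ys):
    """Trapezoid-rule area under a time course sampled at times ts."""
    return sum(0.5 * (ys[i] + ys[i + 1]) * (ts[i + 1] - ts[i])
               for i in range(len(ts) - 1))

def outcome(ts, Ds, Ss):
    """Classify by cumulative totals: survival if A_S > A_D, otherwise death."""
    A_D, A_S = area(ts, Ds), area(ts, Ss)
    label = "survival" if A_S > A_D else "death"   # a tie counts as death here
    return label, A_D, A_S

# Toy time courses, chosen only to exercise the functions.
ts = [0.0, 1.0, 2.0]
Ds = [0.0, 1.0, 0.0]
Ss = [0.0, 0.5, 0.0]
print(outcome(ts, Ds, Ss))  # prints: ('death', 1.0, 0.5)
```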

Panels B–D of Fig. 3.8 show the effect of altering the time of administration. When the drug is given very early in the time course (t = 0.9), A S > A D, and the system survives. However, if the sublethal injury (i.e., drug) is given at t = 5 or t = 20, the system dies. This result is consistent with the common experience that a drug must be administered within a specific time window to be effective at halting cell death.

Panels E–G show the effect of concentration of the sublethal therapy. Panel F reproduces panel B showing that sublethal therapy of I 2 = 1 at t = 0.9 causes survival of lethal injury 1. However, if the dose of therapy is either halved (I 2 = 0.5, panel E) or doubled (I 2 = 2, panel G), damage dominates and the system dies. This result reproduces the notion of an optimal dose of therapy. If the dose is too small, it will be ineffective. If the dose is too large, it contributes to lethality.

These examples show proof of principle that the non-autonomous theory can simulate multiple sequential injuries. We are systematically studying the non-autonomous model and will present a full exposition at a later date. The above examples illustrate how the theory generalizes the notion of therapy as sublethal injury. As such, the theory provides a novel framework for pharmacodynamics.

The therapy (sublethal injury) may be applied before the lethal injury (pre-conditioning) or after (post-injury therapy), capturing mathematically several important empirical results. The theory provides a systematic framework to calculate beforehand all doses and times of administration, allowing optimization of outcome. Further, the theory is not confined only to two sequential injuries, but can model any number of injuries over time.

4.7 Spatial Applications

In this section, we briefly outline a spatial application of the theory that can be used to model three-dimensional (3D) tissue injury and thereby, for example, prognosticate stroke. We do not give a mathematical exposition, but instead merely outline the construction of this application.

Spatial applications involve two main components: (1) a 3D lattice with (2) a spatially distributed injury superimposed over the lattice. Each vertex of the lattice is taken as a biological cell, and multiple instances of the theory [e.g. Eq. (3.9)] are run in parallel at each lattice point. Figure 3.9 illustrates a simple 3D grid lattice. The injury mechanism is depicted as a colored solid in which the lattice is embedded. The legend maps the colors to a continuous distribution of injury intensity, I, which runs from sublethal to lethal and depicts a bistable system. The value of I at the location of a vertex serves as the I input parameter for that vertex.

The new feature of a spatial model is a coupling function between the cells/vertices, indicated by μ in Fig. 3.9. In general, the coupling function, μ, represents interaction between neighboring cells. Examples of what could be modeled by μ include an incremental addition of I to neighboring cells if the central cell dies, paracrine or autocrine influences between cells, or both.

Every aspect of a spatial application is constructible. The 3D lattice and superimposed injury mechanism can take on any geometry. The injury mechanism may be static or dynamic. The coupling functions can represent any possible interactions among cells. The common features of any spatial application are: (1) an (x, y, z) dependence of both the cell locations and the distribution of the injury mechanism, (2) the coupling among cells, and (3) the need to run multiple instances of the theory in parallel. In general, spatial applications will be massively parallel computing problems.
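The construction can be illustrated with a deliberately simplified sketch. Several stand-ins are assumed here and none come from the theory itself: the injury field is a hypothetical radial Gaussian centered on the lattice, each vertex's fate is decided by comparing its local I with a tipping point I_X instead of solving the D and S time courses at that vertex, and the coupling μ is implemented as one of the options mentioned above, an increment added to I at the six nearest neighbors of a cell that dies.

```python
import math
from itertools import product

NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

def intensity_field(n, I_core, sigma):
    """Hypothetical injury field: Gaussian fall-off from the lattice center."""
    c = (n - 1) / 2.0
    return {(x, y, z): I_core * math.exp(
                -((x - c) ** 2 + (y - c) ** 2 + (z - c) ** 2) / (2 * sigma ** 2))
            for x, y, z in product(range(n), repeat=3)}

def propagate(field, I_X, mu):
    """Return the set of dead vertices after coupling has run to completion."""
    I = dict(field)                     # work on a copy of the intensity field
    dead = set()
    frontier = [p for p, val in I.items() if val > I_X]
    while frontier:
        newly_lethal = []
        for p in frontier:
            if p in dead:
                continue
            dead.add(p)
            for d in NEIGHBORS:
                q = (p[0] + d[0], p[1] + d[1], p[2] + d[2])
                if q in I and q not in dead:
                    I[q] += mu          # coupling: a death raises neighbor intensity
                    if I[q] > I_X:
                        newly_lethal.append(q)
        frontier = newly_lethal
    return dead

field = intensity_field(n=5, I_core=2.0, sigma=1.0)
core_only = propagate(field, I_X=0.92, mu=0.0)    # no coupling
with_coupling = propagate(field, I_X=0.92, mu=0.4)
print(len(core_only), len(with_coupling))
```

In a fuller version, the threshold test at each vertex would be replaced by an independent integration of the injury equations using the local I, which is where the parallel computing requirement arises.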

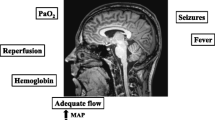

We can envision a spatial application to invent technology to prognosticate stroke outcome. The cerebral blood flow (CBF) distribution, ascertained by PET or fMRI, could be used to generate the spatially distributed injury. The blood flow distribution could be mapped across an entire bifurcation diagram (Fig. 3.10a). The 3D cell lattice can approximate the distribution of cells in the affected brain tissue. The lattice is then superimposed with the patient’s 3D CBF distribution (Fig. 3.10b, c). Blood or cerebrospinal fluid could also provide biomarkers to further parameterize the theory, e.g. by estimating time after injury. Such a simulation could, in principle, be generated and solved in near real time on a patient-by-patient basis directly at the patient bedside. Figure 3.10b–d shows an initial time (say, patient presentation) used to parameterize the spatial application. Solving the D and S time courses at each vertex, based on the patient’s CBF gradient, could, in principle, fully prognosticate outcome. The blow-up in Fig. 3.10c shows calculated initial and final lesions. Here green points survive, yellow points are bistable and live or die depending on I (penumbra), and red is necrotic core. Figure 3.10d shows the final 3D reconstructions that could be generated in the clinic for physician use at the bedside.

Fig. 3.10 The spatial model applied to stroke prognosis. (a) Mapping a bistable bifurcation diagram to cerebral blood flow (CBF) rates. (b) Superimposing a lattice of cells over real time CBF measurements, where CBF serves as the spatially distributed I parameter for the simulation. (c) Zoomed view from panels in (b). (d) Final 3D reconstruction calculating outcome would serve to prognosticate changes in lesion volume over time.

Many neuroimaging efforts are directed at prognosticating stroke outcome [51, 52]. To date there has been no unequivocal success in this endeavor [53]. Our theoretical approach provides a missing link between the raw biological data of neuroimaging and biomarkers, on one hand, and prognostication on the other hand. The raw data needs to serve as input into a theory capable of prognosticating outcome, which is precisely the purpose of the dynamical theory of acute cell injury.

4.8 A Possible Neuroprotective Technology

From the multiple injury models, we conclude that, theoretically, any form of protective therapy is necessarily a form of sublethal injury. Spatial applications hold out the promise to model, simulate, and prognosticate outcome in 3D tissue. We combine these with other insights offered by the theory and present a possible technology for stroke neuroprotection.

From studying bifurcation diagrams with I as the control parameter, it is necessarily the case that the behavior of an acutely injured system varies with injury intensity, I. This means a patient who experiences 40 % CBF is a completely different case than one with 20 % CBF or 0 % CBF. Simply stated, there will never be a “one size fits all” or “silver bullet” treatment for stroke. Each case will be different in the particulars of time after injury, degree and distribution of CBF reductions, comorbidities, etc. Furthermore, the theory unambiguously specifies that therapy is only possible when the injury dynamics are bistable. Prognostic efforts must be able to identify the bistable volumes of tissue. Then, the theory can calculate the conditions required to transform bistable lethal trajectories to survival trajectories.

Therefore, if clinical neuroprotection is possible, it must be tailor-made for each patient, on a patient-by-patient basis. That is, stroke neuroprotection is inherently an example of so-called “personalized medicine”, not by choice but by necessity. The prognosticative technology considered in the previous sections shows how a patient-specific prognosis is possible in principle. It is only a small conceptual step from the prognosticative technology to neuroprotective technology that can be applied on a patient-by-patient basis. Our example will describe a technology that does not yet exist. It speaks to the power of our theory that it allows us to imagine new technology.

The example of a post-injury treatment given above (Fig. 3.8) considered the post-injury therapy to be a drug. But once we recognize that therapy is, in the context of our theory, always a form of sublethal injury, we are free to consider other ways to induce sublethal injury. We require a form of sublethal injury that can be precisely targeted and whose intensity can be precisely controlled. We also require something that can penetrate the skin and skull and be controlled to penetrate to any required depth in the brain tissue. The obvious possibility is some form of radiation that can be administered on an intensity continuum from nonlethal to lethal. We thus envision a machine that targets a brain-penetrant radiation, of variable intensity, to specific voxels in the brain. This is not an unheard of possibility. Recent work suggests that infrared or other radiation may be used as a neuroprotectant [54–57]. Our theory suggests that the “mechanism” of the neuroprotective action of radiation may precisely be its ability to impact acute injury dynamics.

Location, intensity, and duration of radiation are determined by the calculated prognosis (Fig. 3.11). For the untreated case, the theory can calculate which bistable regions (e.g. penumbra) will survive and which will die, and therefore predict the maximum final lesion. Because the prognostic calculations can distinguish bistable from monostable lethal cases, the regions susceptible to neuroprotective therapy can be determined. Further, on a voxel-by-voxel basis, the intensity of the sublethal injury required to convert death trajectories to survival trajectories can be calculated (e.g. as illustrated in Fig. 3.8). Therapy would then be administered in the form of precision targeted sublethal injury (Fig. 3.11).

Ideally, all bistable regions susceptible to therapy would be shifted to pro-survival dynamics, resulting in the minimal lesion volume where only monostable lethal volumes die. It is well-recognized that core is not subject to salvage. The goal of neuroprotective therapy is to minimize the extent to which penumbra converts to additional core lesion. Our theory offers the possibility of engineering-level precision over these factors.

This section provides merely a rough sketch of a possible neuroprotective technology in the context of the dynamical theory of acute cell injury. Many scientific and technological hurdles exist before such technology can become reality. That the theory allows imagining such possibilities speaks of the strength of a mathematical and theoretical view of cell injury. Such technology cannot be envisioned from the qualitative, descriptive, pathway-centric view of cell injury that currently dominates biomedical research.

5 The Mathematical Road to Neuroprotection

This chapter has outlined the path from the science of acute cell injury to the technology of neuroprotection. A succinct summary of the steps is:

1. The correct mathematical form of the theory must be empirically validated for single injuries.

2. The correct mathematical form of the theory applied to multiple sequential injuries must be empirically validated.

3. The links between autonomous and non-autonomous versions must be systematically studied.

4. The theory provides the scientific basis to determine the parameters for combinations of injury mechanisms (c D, λ D) and cell types (c S, λ S) in the laboratory.

5. As injury dynamics become well-understood empirically, the doors to technological applications open. Possible technologies to prognosticate stroke outcome and to administer stroke neuroprotection were given as examples.

There is thus a stepwise progression from science to technology. The scientific step is to fully parameterize and validate the theory. This in turn provides the information to calculate outcomes at all injury intensities, I (e.g. Fig. 3.11a). Technology then uses this information to design applications. The theory thus provides a quantitative and systematic platform to study therapeutics for all possible combinations of acute injury mechanisms and cell types.

By applying to the study of cell injury the method that Galileo advocated some 400 years ago, we have constructed a mathematical theory that has the potential to radically alter the understanding and treatment of acute cell injury. In short, the theory can usher in a new paradigm of acute cell injury that is firmly grounded in a mathematical approach to biology. We feel this direction will go a long way toward overcoming the weaknesses that are evident in the qualitative, pathways-based approach that currently dominates biomedical research.

References

Cheng YD, Al-Khoury L, Zivin JA (2004) Neuroprotection for ischemic stroke: two decades of success and failure. NeuroRx 1(1):36–45

O’Collins VE, Macleod MR, Donnan GA, Horky LL, van der Worp BH, Howells DW (2006) 1,026 experimental treatments in acute stroke. Ann Neurol 59(3):467–477

Turner RC, Dodson SC, Rosen CL, Huber JD (2013) The science of cerebral ischemia and the quest for neuroprotection: navigating past failure to future success. J Neurosurg 118(5):1072–1085

Xu SY, Pan SY (2013) The failure of animal models of neuroprotection in acute ischemic stroke to translate to clinical efficacy. Med Sci Monit Basic Res 19:37–45

Jo SK, Rosner MH, Okusa MD (2007) Pharmacologic treatment of acute kidney injury: why drugs haven’t worked and what is on the horizon. Clin J Am Soc Nephrol 2(2):356–365

Kloner RA (2013) Current state of clinical translation of cardioprotective agents for acute myocardial infarction. Circ Res 113(4):451–463

Freedman LP, Gibson MC, Ethier SP, Soule HR, Neve RM, Reid YA (2015) Reproducibility: changing the policies and culture of cell line authentication. Nat Methods 12(6):493–497

Halsey LG, Curran-Everett D, Vowler SL, Drummond GB (2015) The fickle P value generates irreproducible results. Nat Methods 12(3):179–185

Landis SC, Amara SG, Asadullah K, Austin CP, Blumenstein R, Bradley EW et al (2012) A call for transparent reporting to optimize the predictive value of preclinical research. Nature 490(7419):187–191

Plant AL, Locascio LE, May WE, Gallagher PD (2014) Improved reproducibility by assuring confidence in measurements in biomedical research. Nat Methods 11(9):895–898

Vasilevsky NA, Brush MH, Paddock H, Ponting L, Tripathy SJ, Larocca GM et al (2013) On the reproducibility of science: unique identification of research resources in the biomedical literature. PeerJ 1:e148

Drummond GB, Paterson DJ, McGrath JC (2010) ARRIVE: new guidelines for reporting animal research. J Physiol 588(pt 14):2517

Kilkenny C, Browne W, Cuthill IC, Emerson M, Altman DG, National Centre for the Replacement, Refinement and Reduction of Animals in Research et al (2011) Animal research: reporting in vivo experiments—the ARRIVE guidelines. J Cereb Blood Flow Metab 31(4):991–993

Kilkenny C, Browne WJ, Cuthill IC, Emerson M, Altman DG (2010) Improving bioscience research reporting: the ARRIVE guidelines for reporting animal research. J Pharmacol Pharmacother 1(2):94–99

DeGracia DJ (2013) A program for solving the brain ischemia problem. Brain Sci 3(2):460–503

DeGracia DJ (2010) Towards a dynamical network view of brain ischemia and reperfusion. Part II: a post-ischemic neuronal state space. J Exp Stroke Transl Med 3(1):72–89

DeGracia DJ (2010) Towards a dynamical network view of brain ischemia and reperfusion. Part III: therapeutic implications. J Exp Stroke Transl Med 3(1):90–103

DeGracia DJ (2010) Towards a dynamical network view of brain ischemia and reperfusion. Part IV: additional considerations. J Exp Stroke Transl Med 3(1):104–114

DeGracia DJ (2010) Towards a dynamical network view of brain ischemia and reperfusion. Part I: background and preliminaries. J Exp Stroke Transl Med 3(1):59–71

Nordenskiöld E, Eyre LB (1935) The history of biology, a survey. Tudor, New York, pp 3–629

Kline M (1985) Mathematics and the search for knowledge. Oxford University Press, New York, p 257

Frezza W (2012) The skeptical outsider: how to rescue the life sciences from technological torpor. Updated 13 July 2012. Available from: http://www.bio-itworld.com/BioIT_Article.aspx?id=117147, http://www.bio-itworld.com2013

Bao L, Redish EF (2002) Understanding probabilistic interpretations of physical systems: a prerequisite to learning quantum physics. Am J Phys 70(3):210–217

Newton I, Cohen IB, Whitman AM (1999) The Principia: mathematical principles of natural philosophy. University of California Press, Berkeley, p 966

Weyl H (1934) Mind and nature. University of Pennsylvania Press, Philadelphia, p 100

Hillman H (1972) Certainty and uncertainty in biochemical techniques. Ann Arbor Science, Ann Arbor, p 126

Kedersha N, Anderson P (2007) Mammalian stress granules and processing bodies. Methods Enzymol 431:61–81

Biggs N, Lloyd EK, Wilson RJ (1986) Graph theory, 1736-1936. Oxfordshire/Clarendon Press, New York, p 239

Kauffman SA (1969) Metabolic stability and epigenesis in randomly constructed genetic nets. J Theor Biol 22(3):437–467

Jacob F, Perrin D, Sanchez C, Monod J (1960) Operon: a group of genes with the expression coordinated by an operator. C R Hebd Seances Acad Sci 250:1727–1729

Strogatz SH (1994) Nonlinear dynamics and Chaos: with applications to physics, biology, chemistry, and engineering. Addison-Wesley, Reading, p 498

Kaplan D, Glass L (1995) Understanding nonlinear dynamics. Springer, New York, p 420

Huang S, Guo YP, May G, Enver T (2007) Bifurcation dynamics in lineage-commitment in bipotent progenitor cells. Dev Biol 305(2):695–713

Vaughn MT (2007) Introduction to mathematical physics. Wiley-VCH, Weinheim, p 527

DeGracia DJ, Huang ZF, Huang S (2012) A nonlinear dynamical theory of cell injury. J Cereb Blood Flow Metab 32(6):1000–1013

Alon U (2007) An introduction to systems biology: design principles of biological circuits. Chapman & Hall/CRC, Boca Raton, p 301

Kirino T (2000) Delayed neuronal death. Neuropathology 20(suppl):S95–S97

Hossmann KA (1994) Viability thresholds and the penumbra of focal ischemia. Ann Neurol 36(4):557–565

Balduini W, Carloni S, Buonocore G (2012) Autophagy in hypoxia-ischemia induced brain injury. J Matern Fetal Neonatal Med 25(suppl 1):30–34

Chalmers-Redman RM, Fraser AD, Ju WY, Wadia J, Tatton NA, Tatton WG (1997) Mechanisms of nerve cell death: apoptosis or necrosis after cerebral ischaemia. Int Rev Neurobiol 40:1–25

Ge P, Zhang F, Zhao J, Liu C, Sun L, Hu B (2012) Protein degradation pathways after brain ischemia. Curr Drug Targets 13(2):159–165

Jouan-Lanhouet S, Riquet F, Duprez L, Vanden Berghe T, Takahashi N, Vandenabeele P (2014) Necroptosis, in vivo detection in experimental disease models. Semin Cell Dev Biol 35:2–13

Ginsberg MD (2008) Neuroprotection for ischemic stroke: past, present and future. Neuropharmacology 55(3):363–389

Alonso de Lecinana M, Diez-Tejedor E, Gutierrez M, Guerrero S, Carceller F, Roda JM (2005) New goals in ischemic stroke therapy: the experimental approach—harmonizing science with practice. Cerebrovasc Dis 20(suppl 2):159–168

Hermann DM, Bassetti CL (2007) Neuroprotection in the SAINT-II aftermath. Ann Neurol 62(6):677–678, author reply 678

Savitz SI, Fisher M (2007) Future of neuroprotection for acute stroke: in the aftermath of the SAINT trials. Ann Neurol 61(5):396–402

Molina CA, Saver JL (2005) Extending reperfusion therapy for acute ischemic stroke: emerging pharmacological, mechanical, and imaging strategies. Stroke 36(10):2311–2320

Molina CA, Alvarez-Sabin J (2009) Recanalization and reperfusion therapies for acute ischemic stroke. Cerebrovasc Dis 27(suppl 1):162–167

Rother J (2008) Neuroprotection does not work! Stroke 39(2):523–524

Zhou JX, Brusch L, Huang S (2011) Predicting pancreas cell fate decisions and reprogramming with a hierarchical multi-attractor model. PLoS One 6(3):e14752

Harston GW, Rane N, Shaya G, Thandeswaran S, Cellerini M, Sheerin F et al (2015) Imaging biomarkers in acute ischemic stroke trials: a systematic review. AJNR Am J Neuroradiol 36(5):839–843

Ward NS (2015) Does neuroimaging help to deliver better recovery of movement after stroke? Curr Opin Neurol 28(4):323–329

Hirano T (2014) Searching for salvageable brain: the detection of ischemic penumbra using various imaging modalities? J Stroke Cerebrovasc Dis 23(5):795–798

Brennan KM, Roos MS, Budinger TF, Higgins RJ, Wong ST, Bristol KS (1993) A study of radiation necrosis and edema in the canine brain using positron emission tomography and magnetic resonance imaging. Radiat Res 134(1):43–53

Lapchak PA (2010) Taking a light approach to treating acute ischemic stroke patients: transcranial near-infrared laser therapy translational science. Ann Med 42(8):576–586

Sanderson TH, Reynolds CA, Kumar R, Przyklenk K, Huttemann M (2013) Molecular mechanisms of ischemia-reperfusion injury in brain: pivotal role of the mitochondrial membrane potential in reactive oxygen species generation. Mol Neurobiol 47(1):9–23

Wong CS, Van der Kogel AJ (2004) Mechanisms of radiation injury to the central nervous system: implications for neuroprotection. Mol Interv 4(5):273–284

Copyright information

© 2017 Springer International Publishing Switzerland

About this chapter

Cite this chapter

DeGracia, D.J., Taha, D., Anggraini, F.T., Huang, Z. (2017). Neuroprotection Is Technology, Not Science. In: Lapchak, P., Zhang, J. (eds) Neuroprotective Therapy for Stroke and Ischemic Disease. Springer Series in Translational Stroke Research. Springer, Cham. https://doi.org/10.1007/978-3-319-45345-3_3

DOI: https://doi.org/10.1007/978-3-319-45345-3_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-45344-6

Online ISBN: 978-3-319-45345-3

eBook Packages: Medicine, Medicine (R0)