Abstract

We describe a simple method for making inference on a functional of a multivariate distribution. The method is based on a copula representation of the multivariate distribution, where a copula is a flexible probabilistic tool that allows the researcher to model the joint distribution of a random vector in two separate steps: the marginal distributions and a copula function that captures the dependence structure among the vector components. The method also relies on the properties of an approximate Bayesian Monte Carlo algorithm, in which the proposed values of the functional of interest are weighted by their empirical likelihood. This method is particularly useful when the likelihood function associated with the working model is too costly to evaluate or when the working model is only partially specified.



1 Introduction

Recent advances in computational methodology and the increased power of modern computers have made it possible to propose models for complex situations. In particular, new methods for multivariate analysis are now available; however, the goal of modelling and estimating complex multivariate structures has not yet been reached in a completely satisfactory way.

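Copula models have been introduced as probabilistic tools to describe a multivariate random vector via its marginal distributions and a copula function, which captures the dependence structure among the vector components. They rely on Sklar’s theorem [1], which states that any d-dimensional absolutely continuous density can be uniquely represented as

\[ f(x_1,\dots ,x_d) = c\big (F_1(x_1),\dots ,F_d(x_d)\big )\prod _{j=1}^d f_j(x_j), \]

where \(F_j\) and \(f_j\) are the marginal CDF and density of the j-th component and c is the density of the copula C.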
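As a quick numerical sanity check of the density form of Sklar’s theorem, \(f(x_1,x_2)=c(F_1(x_1),F_2(x_2))f_1(x_1)f_2(x_2)\), the Python sketch below uses a bivariate normal vector with standard normal margins, whose copula is the Gaussian copula with the same correlation r. The Gaussian family and the function names are illustrative assumptions, not part of the method described here.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal margin: F_j = Phi, f_j = phi


def gaussian_copula_density(u: float, v: float, r: float) -> float:
    """Density c(u, v) of the bivariate Gaussian copula with correlation r."""
    a, b = STD_NORMAL.inv_cdf(u), STD_NORMAL.inv_cdf(v)
    num = r * r * (a * a + b * b) - 2.0 * r * a * b
    return math.exp(-num / (2.0 * (1.0 - r * r))) / math.sqrt(1.0 - r * r)


def bivariate_normal_density(x: float, y: float, r: float) -> float:
    """Joint density of (X, Y) with N(0, 1) margins and correlation r."""
    q = (x * x - 2.0 * r * x * y + y * y) / (1.0 - r * r)
    return math.exp(-q / 2.0) / (2.0 * math.pi * math.sqrt(1.0 - r * r))


def sklar_density(x: float, y: float, r: float) -> float:
    """Right-hand side of Sklar's decomposition: c(F1(x), F2(y)) * f1(x) * f2(y)."""
    u, v = STD_NORMAL.cdf(x), STD_NORMAL.cdf(y)
    return gaussian_copula_density(u, v, r) * STD_NORMAL.pdf(x) * STD_NORMAL.pdf(y)


for x, y in [(0.0, 0.0), (1.2, -0.4), (-2.0, -1.5)]:
    print(bivariate_normal_density(x, y, 0.6), sklar_density(x, y, 0.6))
```

The two columns printed agree up to floating-point error; with r = 0 the copula density is identically 1, recovering the product of the margins.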

While it is often straightforward to produce reliable estimates of the marginals, making inference on the dependence structure is more complex. Major areas of application include econometrics, hydrological engineering, biomedical science, signal processing and finance.

In a parametric frequentist approach to copula models, there are no broadly satisfactory methods for the joint estimation of marginal and copula parameters. The most popular method is the so-called Inference Functions for Margins (IFM), in which the parameters of the marginal distributions are estimated first and pseudo-observations are then obtained by plugging the estimates of the marginal parameters into the marginal CDFs. Inference on the copula parameters is then performed on the pseudo-observations; this approach does not account for the uncertainty in the estimation of the marginal parameters. Bayesian alternatives are not yet fully developed, although there are remarkable exceptions ([2, 3], among others).
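A minimal sketch of the first IFM step, assuming normal margins fitted by maximum likelihood (an illustrative choice; any parametric family could be used). The function name is hypothetical. The resulting pseudo-observations would be treated as exact uniforms in the second step, which is precisely where the uncertainty about the marginal parameters is lost.

```python
from statistics import NormalDist, fmean, pstdev


def ifm_pseudo_observations(data):
    """First IFM step with normal margins (an assumption): fit each margin, then plug in.

    data: list of n rows, each a list of d numbers.
    Returns u_ij = F_j(x_ij; hat lambda_j), i.e. the fitted marginal CDFs at the data.
    """
    d = len(data[0])
    columns = [[row[j] for row in data] for j in range(d)]
    # Maximum-likelihood estimates of a normal margin: sample mean and population std. dev.
    fitted = [NormalDist(fmean(col), pstdev(col)) for col in columns]
    return [[fitted[j].cdf(row[j]) for j in range(d)] for row in data]


data = [[1.0, 2.0], [2.0, 1.0], [3.0, 5.0], [4.0, 3.0]]
pseudo = ifm_pseudo_observations(data)
# In the second step these values would be treated as exact uniforms when fitting the copula.
print(pseudo)
```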

In this work we consider the general problem of estimating specific quantities of interest of a generic copula (for example, a tail dependence index or Spearman’s \(\rho \)) by adopting an approximate Bayesian approach along the lines of [4]. In particular, we discuss the use of an approximate Bayesian computation algorithm based on empirical likelihood weights (hereafter \(BC_{EL}\)), which relies on the empirical likelihood approximation of the marginal likelihood of the quantity of interest (see [5] for a complete survey of empirical likelihood methods). This approach approximates the posterior distribution of the quantities of interest through an approximation of the likelihood function combined with a Monte Carlo approximation of the posterior distribution via simulation. Our approach is general, in the sense that it can be adapted to both parametric and nonparametric modelling of the marginal distributions. Moreover, the use of empirical likelihood avoids the need to choose a specific parametric copula model.

2 Approximate Bayesian Computation

The idea underlying likelihood-free methods (or approximate Bayesian computation, ABC) is to propose a candidate \(\theta '\) and to generate a data set from the working model with parameter set to \(\theta '\). If the observed and the simulated data are similar “in some way”, then the proposed value is considered a good candidate to have generated the data and becomes part of the sample which will form the approximation to the posterior distribution. Conversely, if the observed and the simulated data are too different, the proposed \(\theta '\) is discarded.

In order to implement a basic version of the ABC algorithm one needs to specify a set of summary statistics to make comparisons, a distance to quantify comparisons and a tolerance level \(\epsilon >0\).
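The three ingredients (summary statistic, distance, tolerance \(\epsilon \)) can be illustrated with a basic rejection sampler. The toy working model below (normal data with unknown mean, a normal prior, the sample mean as summary and the absolute difference as distance) is an assumption chosen only to make the sketch runnable; the function name is hypothetical.

```python
import random
from statistics import fmean


def rejection_abc(observed, prior_sampler, simulator, summary, eps, n_proposals, rng):
    """Basic rejection ABC: keep theta' when the simulated summary is within eps of the observed one."""
    s_obs = summary(observed)
    accepted = []
    for _ in range(n_proposals):
        theta = prior_sampler(rng)                     # propose theta' from the prior
        fake = simulator(theta, len(observed), rng)    # simulate a data set with parameter theta'
        if abs(summary(fake) - s_obs) <= eps:          # distance between summaries vs tolerance
            accepted.append(theta)
    return accepted


# Toy working model (assumed): X_i ~ N(theta, 1) with prior theta ~ N(0, 5^2).
rng = random.Random(1)
observed = [rng.gauss(2.0, 1.0) for _ in range(50)]
sample = rejection_abc(
    observed,
    prior_sampler=lambda r: r.gauss(0.0, 5.0),
    simulator=lambda th, n, r: [r.gauss(th, 1.0) for _ in range(n)],
    summary=fmean,
    eps=0.05,
    n_proposals=10_000,
    rng=rng,
)
print(len(sample), fmean(sample))
```

Only a small fraction of the proposals is accepted, because they are drawn from a diffuse prior and each one requires simulating a full data set.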

The basic ABC algorithm may be inefficient because it proposes values of \(\theta \) from the prior distribution; for this reason, ABC algorithms are often combined with other methods, for instance Markov chain Monte Carlo (MCMC) or Sequential Monte Carlo (SMC). In this work we focus on a different ABC approach, described in Algorithm 1, in which M values are simulated from the prior; this method avoids the most computationally expensive step, namely the generation of new data sets. A detailed description of the method is given in [4]; it is a resampling scheme in which the proposed values are resampled with weights proportional to their empirical likelihood values.
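The resampling idea can be sketched as follows, under simplifying assumptions: the quantity of interest is a scalar mean \(\mu \) defined by the moment condition \(E[X-\mu ]=0\), and its empirical likelihood is computed with Owen’s Lagrange-multiplier form, solving for the multiplier by bisection. The function names and the toy model are hypothetical, and this is not the implementation of [4]; note that no new data sets are simulated, only the M prior draws are weighted.

```python
import math
import random
from statistics import fmean


def log_el_mean(z, mu, tol=1e-10, max_iter=200):
    """Log empirical likelihood ratio, sum_i log(n * w_i), for the constraint E[Z] = mu.

    Returns -inf if mu is outside the convex hull of z, where the EL is zero.
    """
    d = [zi - mu for zi in z]
    if min(d) >= 0.0 or max(d) <= 0.0:
        return -math.inf
    # The Lagrange multiplier solves sum_i d_i / (1 + lam * d_i) = 0. The left-hand side is
    # decreasing in lam on the interval where every 1 + lam * d_i > 0, so bisect inside it.
    lo = -(1.0 - 1e-10) / max(d)
    hi = -(1.0 - 1e-10) / min(d)
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if sum(di / (1.0 + mid * di) for di in d) > 0.0:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    lam = 0.5 * (lo + hi)
    # EL weights are w_i = 1 / (n * (1 + lam * d_i)), hence log(n * w_i) = -log(1 + lam * d_i).
    return -sum(math.log1p(lam * di) for di in d)


def bc_el(z, prior_sampler, n_sims, rng):
    """Weight n_sims prior draws by their empirical likelihood and resample them."""
    proposals = [prior_sampler(rng) for _ in range(n_sims)]
    log_w = [log_el_mean(z, mu) for mu in proposals]
    top = max(log_w)                                 # subtract the maximum for stability
    weights = [math.exp(lw - top) for lw in log_w]   # exp(-inf) = 0 outside the convex hull
    return rng.choices(proposals, weights=weights, k=n_sims)


# Toy example (assumed): X_i ~ N(2, 1), prior mu ~ N(0, 5^2).
rng = random.Random(7)
data = [rng.gauss(2.0, 1.0) for _ in range(40)]
draws = bc_el(data, lambda r: r.gauss(0.0, 5.0), 2000, rng)
print(fmean(draws))
```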

3 The Proposed Approach

We propose to adapt the BC\(_{EL}\) algorithm to a situation where the statistical model is only partially specified and the main goal is the estimation of a finite dimensional quantity of interest, i.e. a situation where the complete structure of the mutual dependence is considered a nuisance parameter and it is kept as general as possible. While the main interest of [4] was the approximation of the full posterior distribution of the parameters of the model, here we use the empirical likelihood (EL) approach to avoid a parametric definition of the model for the observed data and focus only on a particular functional of the distribution, which summarizes the correlation among the variables.

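We assume that a data set is available in the form of an \(n\times d\) matrix \(\varvec{X}\), where n is the sample size and d is the number of variables, that is

\[ \varvec{X} = \begin{pmatrix} x_{11} & x_{12} & \cdots & x_{1d}\\ x_{21} & x_{22} & \cdots & x_{2d}\\ \vdots & \vdots & \ddots & \vdots \\ x_{n1} & x_{n2} & \cdots & x_{nd} \end{pmatrix}. \]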

In the following, \(X_{[\cdot ,j]}\) and \(X_{[i,\cdot ]}\) denote the j-th column (variable) and the i-th row of \(\varvec{X}\), respectively. For each \(j=1, \dots , d\), we use the data in \(X_{[\cdot ,j]}\) to estimate the marginal CDF of \(X_{[\cdot ,j]}\). Let \(\varvec{\lambda }_j=(\lambda _j^{(1)}, \lambda _j^{(2)}, \dots , \lambda _j^{(B)}),\, j=1, 2, \dots , d\), be the posterior sample of size B obtained for the distribution of \(X_{[\cdot ,j]}\). Notice that \(\varvec{\lambda }_j\) can be either a sample from the posterior distribution of the parameters of the model adopted for \(X_{[\cdot ,j]}\) or a posterior sample of CDFs in a nonparametric set-up.
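When a margin is treated nonparametrically, one simple way (among others, and an assumption here) to obtain a posterior sample of CDFs is Rubin’s Bayesian bootstrap, which places Dirichlet(1, ..., 1) weights on the observed values. The sketch below returns B such CDF draws for one column; the function name is hypothetical.

```python
import random
from bisect import bisect_right


def bayesian_bootstrap_cdfs(x, n_draws, rng):
    """Return n_draws posterior CDF draws for one margin via the Bayesian bootstrap.

    Each draw places Dirichlet(1, ..., 1) weights on the sorted observations; normalised
    i.i.d. Exp(1) variables have exactly this Dirichlet distribution.
    """
    xs = sorted(x)
    draws = []
    for _ in range(n_draws):
        g = [rng.expovariate(1.0) for _ in xs]
        total, acc, cum = sum(g), 0.0, []
        for gi in g:
            acc += gi / total
            cum.append(acc)

        def cdf(t, xs=xs, cum=cum):
            k = bisect_right(xs, t)          # number of observations <= t
            return 0.0 if k == 0 else min(cum[k - 1], 1.0)

        draws.append(cdf)
    return draws


rng = random.Random(3)
lam_j = bayesian_bootstrap_cdfs([0.3, -1.2, 2.5, 0.9, 1.1], n_draws=5, rng=rng)  # B = 5 draws
print([round(F(1.0), 3) for F in lam_j])
```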

Then we use a copula representation of the multivariate dependence structure of the random vector \(\varvec{X}\),

\[ F(x_1,\dots ,x_d) = C_\theta \big (F_1(x_1),\dots ,F_d(x_d)\big ), \]

where \(\theta \) is the parameter of the copula function.

The copula \(C_\theta (\cdot )\) can be estimated either with a parametric model (such as the Clayton, Gaussian, skew-t or Gumbel copulas) or with a nonparametric approach. In this paper we take a nonparametric route (in many situations there is no clear reason to prefer one parametric family over another) and concentrate on a specific functional of \(C_\theta (\cdot )\), say \(\varphi =T(\varvec{F})\). An example is Spearman’s measure of association \(\rho \) between two components of \(\varvec{X}\), say \(X_h\) and \(X_j\), defined as the correlation coefficient between the transformed values \(U_i= F_i(X_i),\, i=j,h\):

\[ \rho = \operatorname {corr}(U_h, U_j) = 12\int _0^1\!\!\int _0^1 C(u,v)\,du\,dv - 3 = 12\int _0^1\!\!\int _0^1 \big (C(u,v) - uv\big )\,du\,dv. \tag{4} \]
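Definition (4) can be checked numerically. The sketch below evaluates \(12\int _0^1\int _0^1 C(u,v)\,du\,dv-3\) by the midpoint rule for three copulas with known Spearman’s \(\rho \): the independence copula \(\varPi (u,v)=uv\) (\(\rho =0\)), the upper bound \(M(u,v)=\min (u,v)\) (\(\rho =1\)) and the lower bound \(W(u,v)=\max (u+v-1,0)\) (\(\rho =-1\)). The grid size and the function name are arbitrary choices.

```python
def spearman_rho_from_copula(C, n_grid=200):
    """Approximate rho = 12 * int_0^1 int_0^1 C(u, v) du dv - 3 with the midpoint rule."""
    h = 1.0 / n_grid
    pts = [(k + 0.5) * h for k in range(n_grid)]
    total = sum(C(u, v) for u in pts for v in pts)
    return 12.0 * total * h * h - 3.0


print(spearman_rho_from_copula(lambda u, v: u * v))                   # independence: close to 0
print(spearman_rho_from_copula(min))                                   # upper bound M: close to 1
print(spearman_rho_from_copula(lambda u, v: max(u + v - 1.0, 0.0)))   # lower bound W: close to -1
```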

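Its sample counterpart \(\rho _n\) is the correlation between the ranks \(\mathbf {R}\) and \(\mathbf {S}\) of the data observed for the two variables of interest and, in the absence of ties, it can be written as

\[ \rho _n = \frac{12}{n(n^2-1)}\sum _{i=1}^n \Big (R_i-\frac{n+1}{2}\Big )\Big (S_i-\frac{n+1}{2}\Big ) = 1-\frac{6\sum _{i=1}^n (R_i-S_i)^2}{n(n^2-1)}. \]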
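A short sketch computing \(\rho _n\) from the ranks with both expressions, assuming continuous data without ties (with ties the two forms differ and midranks would be needed). The function names are hypothetical.

```python
def ranks(x):
    """Ranks 1..n of the values in x (continuous data, no ties assumed)."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    r = [0] * len(x)
    for rank, i in enumerate(order, start=1):
        r[i] = rank
    return r


def spearman_rho_n(x, y):
    """Sample Spearman's rho from ranks, via the centred-product and rank-difference forms."""
    R, S = ranks(x), ranks(y)
    n = len(x)
    c = (n + 1) / 2
    denom = n * (n * n - 1)
    prod_form = 12.0 * sum((r - c) * (s - c) for r, s in zip(R, S)) / denom
    diff_form = 1.0 - 6.0 * sum((r - s) ** 2 for r, s in zip(R, S)) / denom
    return prod_form, diff_form


print(spearman_rho_n([1.3, 2.7, 0.4, 5.1, 3.3], [2.0, 1.5, 0.1, 4.0, 3.9]))
```

For the data in the last line, R = (2, 3, 1, 5, 4) and S = (3, 2, 1, 5, 4), and both forms give 0.9.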

If interest lies only in a functional of the copula, instead of in its entire structure, we use Algorithm 2 to produce an approximation of the posterior distribution of the functional itself \(\varphi =T(\varvec{F})\).

It is important to note that the approximation might hold only asymptotically: for example, if the sample version of Spearman’s \(\rho \) is used to approximate the posterior distribution of \(\rho \), one has to consider that the sample version is only asymptotically unbiased. One advantage of the proposed algorithm is that prior information is required only for the marginal distributions and for \(\varphi \), which makes prior elicitation easier: it is not necessary to define a prior distribution for the entire copula function.

Moreover, the method is robust with respect to different prior opinions about non-essential aspects of the dependence structure and with respect to the choice of copula. Its main disadvantage, as usual in nonparametric or semiparametric settings, is a loss of efficiency compared with a parametric copula model; however, this holds only under the assumption that the parametric copula is the true model.

From a computational perspective, Algorithm 2 is quite demanding, since one needs to run a \(BC_{EL}\) algorithm for each row of the posterior sample from the marginals. Even though estimating the marginal densities of the \(X_{[\cdot ,j]}\)’s might not require a large number of iterations B, running B different \(BC_{EL}\) algorithms might still be very expensive. To avoid this computational burden, we propose to modify the algorithm by performing a single run of \(BC_{EL}\) in which, at each iteration \(m=1, \dots , M\), a row \(\varvec{\lambda }^{b}\) randomly selected among the B rows is used to transform the actual data into pseudo-observations lying in \([0,1]^d\).
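A compact sketch of the modified, single-run version of Algorithm 2 for the bivariate Spearman’s \(\rho \), under several assumptions not stated in the text: the margins are handled with the Bayesian bootstrap, the prior on \(\rho \) is uniform on (-1, 1), and the empirical likelihood constraint is the moment condition \(E[12(U_h-1/2)(U_j-1/2)]=\rho \), which follows from (4) because \(U_h\) and \(U_j\) are uniform. All function names are hypothetical; this illustrates the idea rather than reproducing the authors’ code.

```python
import math
import random


def log_el_mean(z, mu, tol=1e-10, max_iter=200):
    """Log EL ratio for the moment condition E[Z] = mu (-inf outside the convex hull of z)."""
    d = [zi - mu for zi in z]
    if min(d) >= 0.0 or max(d) <= 0.0:
        return -math.inf
    lo, hi = -(1.0 - 1e-10) / max(d), -(1.0 - 1e-10) / min(d)
    for _ in range(max_iter):  # bisection on the decreasing Lagrange-multiplier equation
        mid = 0.5 * (lo + hi)
        if sum(di / (1.0 + mid * di) for di in d) > 0.0:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    lam = 0.5 * (lo + hi)
    return -sum(math.log1p(lam * di) for di in d)


def bb_pseudo_obs(x, rng):
    """Evaluate one Bayesian-bootstrap posterior CDF of a margin at the data points."""
    g = [rng.expovariate(1.0) for _ in x]
    total, acc = sum(g), 0.0
    u = [0.0] * len(x)
    for i in sorted(range(len(x)), key=lambda k: x[k]):
        acc += g[i] / total       # cumulative Dirichlet weight up to and including x_i
        u[i] = acc
    return u


def approx_posterior_rho(x, y, n_rows=200, n_sims=1000, rng=None):
    """Single-run BC_EL for Spearman's rho of (x, y), one random marginal row per iteration."""
    rng = rng or random.Random(0)
    rows = []
    for _ in range(n_rows):
        # Row lambda^b: one posterior draw per margin, turned into pseudo-observations and then
        # into the moment values 12 (u_i - 1/2)(v_i - 1/2), whose expectation is rho.
        u, v = bb_pseudo_obs(x, rng), bb_pseudo_obs(y, rng)
        rows.append([12.0 * (ui - 0.5) * (vi - 0.5) for ui, vi in zip(u, v)])
    proposals, log_w = [], []
    for _ in range(n_sims):
        rho = rng.uniform(-1.0, 1.0)           # prior on the functional only
        z = rows[rng.randrange(n_rows)]        # randomly selected row lambda^b
        proposals.append(rho)
        log_w.append(log_el_mean(z, rho))      # empirical likelihood weight
    top = max(log_w)
    weights = [math.exp(lw - top) for lw in log_w]
    return rng.choices(proposals, weights=weights, k=n_sims)


# Toy data (assumed): bivariate normal with correlation 0.7, n = 80.
rng = random.Random(42)
x, y = [], []
for _ in range(80):
    a, b = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    x.append(a)
    y.append(0.7 * a + math.sqrt(1.0 - 0.49) * b)
draws = approx_posterior_rho(x, y, rng=rng)
print(sum(draws) / len(draws))
```

For this data-generating model the true Spearman’s \(\rho \) is \((6/\pi )\arcsin (0.35)\approx 0.68\). Precomputing the moment values for the B rows keeps each of the M iterations down to a single empirical likelihood evaluation.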

4 An Example: Spearman’s \(\rho \)

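The definition of Spearman’s \(\rho \) given in (4) can be interpreted as an average distance between the copula C and the independence copula \(\varPi (u,v)=uv\). Thus, in a d-dimensional setting, the multivariate \(\rho \) becomes

\[ \rho = \frac{\int _{[0,1]^d} C(\mathbf {u})\,d\mathbf {u} - \int _{[0,1]^d} \varPi (\mathbf {u})\,d\mathbf {u}}{\int _{[0,1]^d} M(\mathbf {u})\,d\mathbf {u} - \int _{[0,1]^d} \varPi (\mathbf {u})\,d\mathbf {u}} = \frac{d+1}{2^d-d-1}\Big (2^d\int _{[0,1]^d} C(\mathbf {u})\,d\mathbf {u} - 1\Big ), \]

where \(\varPi (\mathbf {u})=\prod _{i=1}^d u_i\) is the independence copula and \(M(\mathbf {u})=\min (u_1,\dots ,u_d)\) is the upper Fréchet-Hoeffding bound.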

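The multivariate extension of the empirical copula is

\[ \hat{C}_n(\mathbf {u}) = \frac{1}{n}\sum _{j=1}^n \prod _{i=1}^d \mathbb {I}\big (\hat{U}_{ijn}\le u_i\big ), \qquad \mathbf {u}=(u_1,\dots ,u_d)\in [0,1]^d, \]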

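where \(\hat{U}_{ijn}=\hat{F}_{i,n}(X_{ij})\) for \(i=1,\ldots ,d\) and \(j=1,\ldots ,n\), and \(\hat{F}_{i,n}(\cdot )\) is the empirical distribution function of the i-th margin (here i indexes the variable and j the observation). Since \(\int _{[0,1]^d}\hat{C}_n(\mathbf {u})\,d\mathbf {u} = \frac{1}{n}\sum _{j=1}^n\prod _{i=1}^d(1-\hat{U}_{ijn})\), replacing C by \(\hat{C}_n\) gives the nonparametric estimator of \(\rho \)

\[ \hat{\rho }_1 = h(d)\Big (\frac{2^d}{n}\sum _{j=1}^n\prod _{i=1}^d\big (1-\hat{U}_{ijn}\big ) - 1\Big ), \]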

where \(h(d)=(d+1)/(2^d-d-1)\). An alternative estimator is

\[ \hat{\rho }_2 = h(d)\Big (\frac{2^d}{n}\sum _{j=1}^n\prod _{i=1}^d \hat{U}_{ijn} - 1\Big ). \]
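Both estimators can be computed directly from ranks. The sketch below assumes no ties and uses \(\hat{U}_{ijn}=\text{rank}/n\) as the empirical marginal CDF; the function name is hypothetical.

```python
import math


def schmid_schmidt_rho(data):
    """Estimators hat rho_1 and hat rho_2 of multivariate Spearman's rho.

    data: list of n rows (observations j) with d entries (variables i); no ties assumed.
    """
    n, d = len(data), len(data[0])
    U = [[0.0] * d for _ in range(n)]          # U[j][i] stores hat U_ijn
    for i in range(d):
        order = sorted(range(n), key=lambda j: data[j][i])
        for rank, j in enumerate(order, start=1):
            U[j][i] = rank / n                 # empirical marginal CDF at X_ij
    h = (d + 1) / (2 ** d - d - 1)
    s1 = sum(math.prod(1.0 - u for u in row) for row in U)
    s2 = sum(math.prod(row) for row in U)
    rho1 = h * (2 ** d / n * s1 - 1.0)
    rho2 = h * (2 ** d / n * s2 - 1.0)
    return rho1, rho2


data = [[float(k), float(k)] for k in range(1000)]   # perfectly comonotone pair
print(schmid_schmidt_rho(data))
```

For perfectly comonotone bivariate data the two estimators equal \(1-6/n+2/n^2\) and \(1+6/n+2/n^2\), respectively, so both approach 1 as n grows.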

Asymptotic properties of these estimators are assessed in [6].

Fig. 1. 100 simulations from a Clayton copula with sample size 100; the true value of \(\rho \) is 0.5 (red line). The upper panel shows the results of the frequentist procedure and the lower panel those of the Bayesian procedure. Black lines are the point estimates of \(\rho _1\); blue lines are the lower and upper bounds of the intervals of level 0.95.

Once an estimator of the multivariate version of \(\rho \) is available, the procedure presented in Sect. 3 can be applied. However, the variance of the proposed estimators can be computed explicitly only in a few cases, for example for the independence copula; [6] proposes to estimate it nonparametrically via a bootstrap method. In practice this method tends to underestimate the variance, as shown in Fig. 1: for a fixed n, the frequentist procedure leads to a coverage of about \(10\,\%\) (\(0\,\%\) for the interval based on \(\hat{\rho }_2\)), while the proposed Bayesian method attains the nominal coverage, although its intervals are necessarily longer (average length about 0.822, i.e. they cover almost half of the parameter space).
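Simulated data such as those of Fig. 1 can be drawn from a Clayton copula with the Marshall-Olkin (frailty) algorithm. The value of the Clayton parameter that corresponds to \(\rho =0.5\) is not given in the text, so \(\theta \) is left as an input here; the function name is hypothetical.

```python
import random


def sample_clayton(n, theta, d=2, rng=None):
    """Draw n points from a d-dimensional Clayton copula with parameter theta > 0.

    Marshall-Olkin algorithm: V ~ Gamma(1/theta, 1), E_i ~ Exp(1) i.i.d., and
    U_i = (1 + E_i / V) ** (-1/theta), i.e. the Laplace transform of V evaluated at E_i / V.
    """
    rng = rng or random.Random()
    sample = []
    for _ in range(n):
        v = rng.gammavariate(1.0 / theta, 1.0)
        sample.append([(1.0 + rng.expovariate(1.0) / v) ** (-1.0 / theta) for _ in range(d)])
    return sample


rng = random.Random(11)
sample = sample_clayton(100, 2.0, rng=rng)   # one data set of size 100, as in Fig. 1
print(sample[:3])
```

Repeating the draw 100 times and applying a frequentist or Bayesian interval procedure to each data set gives an empirical coverage estimate of the kind reported above.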

5 Further Research

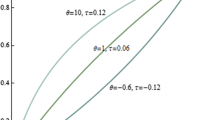

Algorithm 2 produces an approximation of the posterior distribution of any summary of the multivariate dependence, once a multivariate estimator is available, as in the case of Spearman’s \(\rho \). In some cases the analysis may focus on measures of dependence as functions of some available conditioning variables. In the case of two response variables \(X_1\) and \(X_2\), both depending on the same covariate Z, the observations \((x_{1i},x_{2i},z_i)\) follow a distribution \(F_{X_1,X_2|Z}(\cdot |z)\). Gijbels et al. [7] propose the following estimator of the conditional Spearman’s \(\rho \) at \(Z=z\):

\[ \hat{\rho }_n(z) = 12\sum _{i=1}^n w_i(z,h_n)\big (1-\hat{U}_{i1}\big )\big (1-\hat{U}_{i2}\big ) - 3, \tag{9} \]

where \(\hat{U}_{ij}=\sum _{i'=1}^n w_{i'}(z,h_n)\, \mathbb {I}(U_{i'j}\le U_{ij})\) for \(j=1,2\), \(U_{ij}=F_j(x_{ji})\), and \(w_i(z,h_n)\) are appropriately chosen weights depending on the covariate values \(z_i\), the conditioning point z and a bandwidth \(h_n\), for example kernel-based Nadaraya-Watson weights. Unfortunately, estimator (9) is based on an estimator of the conditional copula, given in [7], which is biased. A first simulation study with 10,000 simulations of the function \(\rho (z)\) (see Fig. 2) shows that, while estimator (9) is not able to capture the true function (it underestimates the dependence), the Bayesian estimate obtained via Algorithm 2 can recover it, although its variance increases as the value of the covariate increases. Further research will focus on understanding why this happens and on producing more stable estimates.
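A sketch of the kernel-weighted conditional estimator \(12\sum _i w_i(z,h_n)(1-\hat{U}_{i1})(1-\hat{U}_{i2})-3\), with Gaussian-kernel Nadaraya-Watson weights (the kernel and bandwidth are assumptions). It uses the fact that a continuous, strictly increasing \(F_j\) preserves the indicators \(\mathbb {I}(U_{i'j}\le U_{ij})\), so the raw responses can be compared directly; the function names are hypothetical.

```python
import math


def nw_weights(z_obs, z, h):
    """Nadaraya-Watson weights w_i(z, h) with a Gaussian kernel (kernel choice assumed)."""
    k = [math.exp(-0.5 * ((zi - z) / h) ** 2) for zi in z_obs]
    total = sum(k)
    return [ki / total for ki in k]


def conditional_spearman(x1, x2, z_obs, z, h):
    """Kernel-weighted conditional Spearman's rho at Z = z."""
    w = nw_weights(z_obs, z, h)
    n = len(w)

    def weighted_cdf(x):
        # hat U_ij = sum_i' w_i' I(x_i' <= x_i); comparing raw responses is equivalent to
        # comparing U_ij = F_j(x_ji) when F_j is continuous and strictly increasing.
        return [sum(w[k] for k in range(n) if x[k] <= x[i]) for i in range(n)]

    u1, u2 = weighted_cdf(x1), weighted_cdf(x2)
    return 12.0 * sum(wi * (1.0 - a) * (1.0 - b) for wi, a, b in zip(w, u1, u2)) - 3.0


n = 500
z_obs = [i / n for i in range(n)]
x = [float(i) for i in range(n)]
print(conditional_spearman(x, x, z_obs, 0.5, 0.2))   # comonotone responses: close to 1
```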

References

[1] Sklar M (1959) Fonctions de répartition à \(n\) dimensions et leurs marges. Publ Inst Statist Univ Paris 8:229–231

[2] Craiu VR, Sabeti A (2012) In mixed company: Bayesian inference for bivariate conditional copula models with discrete and continuous outcomes. J Multivar Anal 110:106–120

[3] Min A, Czado C (2010) Bayesian inference for multivariate copulas using pair-copula constructions. J Financ Econometrics 8(4):511–546

[4] Mengersen K, Pudlo P, Robert CP (2013) Bayesian computation via empirical likelihood. Proc Natl Acad Sci 110(4):1321–1326

[5] Owen AB (2010) Empirical likelihood. Chapman & Hall/CRC Press, New York

[6] Schmid F, Schmidt R (2007) Multivariate extensions of Spearman’s rho and related statistics. Stat Probab Lett 77(4):407–416

[7] Gijbels I, Veraverbeke N, Omelka M (2011) Conditional copulas, association measures and their applications. Comput Stat Data Anal 55(5):1919–1932


Copyright information

© 2017 Springer International Publishing Switzerland

About this paper

Cite this paper

Grazian, C., Liseo, B. (2017). Approximate Bayesian Methods for Multivariate and Conditional Copulae. In: Ferraro, M., et al. Soft Methods for Data Science. SMPS 2016. Advances in Intelligent Systems and Computing, vol 456. Springer, Cham. https://doi.org/10.1007/978-3-319-42972-4_33


DOI: https://doi.org/10.1007/978-3-319-42972-4_33


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-42971-7

Online ISBN: 978-3-319-42972-4

eBook Packages: Engineering, Engineering (R0)