Abstract

We consider a mathematical model describing Intermittent Androgen Suppression therapy (IAS therapy) of prostate cancer. The system has a hybrid structure, i.e., it consists of two different systems coupled through an unknown binary function denoting the treatment state. In this paper, we prove that the hybrid system has a unique solution for which the binary function switches its value infinitely many times. From the clinical point of view, the result asserts that one can plan the IAS therapy for each prostate cancer patient, provided that the tumor satisfies a certain condition.



1 Introduction

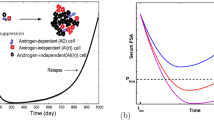

Prostate cancer is a disease of men. Since prostate cells proliferate under the influence of male hormones called androgens, prostate tumors are expected to be sensitive to androgen suppression. Huggins and Hodges [10] demonstrated the validity of androgen deprivation. Since then, hormonal therapy has been a major therapy for prostate cancer. The therapy aims to induce apoptosis of prostate cancer cells under the androgen-suppressed condition. For instance, the androgen-suppressed condition can be maintained by medicating a patient continuously [22]; this is called Continuous Androgen Suppression therapy (CAS therapy). However, during several years of CAS therapy, relapse of the prostate tumor often occurs. More precisely, relapse means that the prostate tumor mutates into an androgen-independent tumor, against which CAS therapy is no longer effective [5]. This fact was also verified mathematically in [13, 14]. It is known that prostate tumors contain Androgen-Dependent cells (AD cells) and Androgen-Independent cells (AI cells). AI cells are considered one of the causes of relapse. Indeed, AD cells cannot proliferate under the androgen-suppressed condition, whereas AI cells are not sensitive to androgen suppression and can still proliferate under the androgen-poor condition [2, 18]. Thus the relapse of prostate tumors is caused by progression to androgen-independent cancer due to the emergence of AI cells.

In order to prevent or delay the relapse of prostate tumors, Intermittent Androgen Suppression therapy (IAS therapy) was proposed and has been studied clinically by many researchers (e.g., see [1, 3, 19] and the references therein). In contrast to the CAS therapy, the IAS therapy does not aim to eradicate prostate cancer. We mention the typical feature of the clinical procedure. Since prostate cancer cells produce a large amount of Prostate-Specific Antigen (PSA), the serum PSA level is regarded as a good biomarker of prostate cancer [21], and the plan of IAS therapy is based on this level:

- (F) In the IAS therapy, the medication is stopped when the serum PSA level falls enough, and resumed when the serum PSA level rises enough.
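Rule (F) is a hysteresis rule with two thresholds. The following is a minimal sketch in Python, not taken from the cited works. The names `next_treatment_state`, `low`, and `high` are hypothetical, and the PSA values are made-up illustrative numbers.

```python
def next_treatment_state(on_treatment: bool, psa: float, low: float, high: float) -> bool:
    """Apply rule (F) once: stop medication when PSA has fallen to `low`,
    resume it when PSA has risen to `high`, otherwise keep the current state."""
    if on_treatment and psa <= low:
        return False  # PSA fell enough: stop the medication
    if not on_treatment and psa >= high:
        return True   # PSA rose enough: resume the medication
    return on_treatment


# Illustrative PSA readings (arbitrary units, made up for this sketch).
readings = [6.0, 11.0, 7.0, 3.5, 5.0, 12.0]
state = False  # start off treatment
history = []
for psa in readings:
    state = next_treatment_state(state, psa, low=4.0, high=10.0)
    history.append(state)
```

Between the two thresholds the state is left unchanged, which is why the treatment state depends on the history of the PSA level and not only on its current value.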

Indeed, if one can optimally plan the IAS therapy, then the size of the tumor remains in an appropriate range by switching the medication on and off. In order to understand the qualitative properties of prostate tumors under the IAS therapy, several mathematical models have been proposed and studied in the mathematical literature, for instance, ODE models ([9, 11, 12, 20] and references therein) and PDE models [8, 15, 23–25]. Due to (F), an unknown binary function denoting the treatment state appears in the models. The discontinuity of the binary function is the main difficulty in the mathematical analysis of the models. To the best of our knowledge, there is no result dealing with the switching phenomena of the binary function in the PDE models.

The purpose of this paper is to prove the existence of a solution with the switching property for the PDE model introduced by Tao et al. [23]:

where \(I=(\,0,1\,)\), \(\mathbb {R}_+=\{t \in \mathbb {R}\, | \, t>0\}\), \(I_\infty =I \times \mathbb {R}_+\), and

The unknowns a, u, w, v, R, and S denote respectively the androgen concentration, the volume fraction of AD cells, the volume fraction of AI cells, the advection velocity of the cancer cells, the radius of the tumor, and the treatment state. Here \(S=0\) and \(S=1\) correspond to the non-medication state and the medication state, respectively. The authors of [23] assumed that the prostate tumor is radially symmetric and densely packed with AD and AI cells. Moreover, they regarded the tumor as a three-dimensional sphere. Thus the unknowns u, w, and v are radially symmetric functions defined on the unit ball \(B_1 =\{ x \in \mathbb {R}^3 \mid |x|<1 \}\), i.e., functions of \(\rho =|x|\). The unknown S(t) is governed by R(t), because they modeled the serum PSA level by the radius of the tumor. Although the condition on S in (IAS) is written in a concise form, the precise form is as follows: \(S(t)\in \{0,1\}\) and

The parameters \(a_*\), \(\gamma \), \(c_1\), \(c_2\), \(r_0\), and \(r_1\) denote the normal androgen concentration, the reaction velocity, the effective competition coefficient from AD to AI cells, and from AI to AD cells, the lower and upper thresholds, respectively. The given functions \(f_1 : [\,0, a_*\,] \rightarrow \mathbb {R}\) and \(f_2 : [\,0, a_*\,] \rightarrow \mathbb {R}\) describe the net growth rate of AD and AI cells, respectively. Although typical forms of \(f_{i}\) were given in [23], we deal with general \(f_{i}\) satisfying several conditions, which are stated later.

In [23], it was shown that, for each initial data \(u_{0} \in W^{2}_{p}(I)\), there exists a short time solution \(u \in W^{2,1}_{p}(I \times (\,0,T\,))\) of (IAS). However, the result is not sufficient to construct a “switching solution”. Indeed, if (u, w, v, R, a, S) is a switching solution of (IAS), then (IAS) must be solvable at least locally in time for each “initial data” \((u,w,R,a,S)|_{\{t=t_{j} \}}\), where \(t_{j}\) is a switching time. The result in [23] does not ensure this solvability.

The existence of switching solutions of (IAS) is an open mathematical question. We are interested in the following mathematical problem:

Problem 1.1

Does there exist a switching solution of (IAS) with appropriate thresholds \(0< r_0< r_1 < \infty \)? Moreover, what is the dynamical aspect of the solution?

We consider the initial data \((u_0, w_0, R_0, a_0, S_0)\) satisfying the following:

where \(\alpha \in (\,0,1\,)\). Let \(f_1\) and \(f_2\) satisfy

We note that (A0) is a natural assumption from the clinical point of view, and typical \(f_1\) and \(f_2\), which were given in [23], also satisfy (A0). In order to understand the role of \(f_i\) and \(c_i\), we classify the asymptotic behavior of non-switching solutions of (IAS) in terms of \(f_i\) and \(c_i\) under (A0) (see Theorems 3.2–3.5). Following the results obtained in Theorems 3.2–3.5, we impose (A0) and the following assumptions on \(f_i\) and \(c_i\):

From now on, let \(Q_T := B_1\times (\,0, T\,)\). We denote by \(C^{2\kappa +\alpha , \kappa +\beta }(Q_T)\) the Hölder space on \(Q_T\), where \(\kappa \in \mathbb {N}\cup \{0\}\), \(0< \alpha <1\), and \(0<\beta <1\) (for the precise definition, see [16]).

Then we give an affirmative answer to Problem 1.1:

Theorem 1.1

Let \(f_i\) and \(c_i\) satisfy (A0)–(A2). Let \((u_0, w_0, R_0, a_0, S_0)\) satisfy (3), \(u_0>0\) in \(\overline{B_1}\), and \(S_0=0\). Then, there exists a pair \((r_0, r_1)\) with \(0< r_0< r_1 < \infty \) such that the system (IAS) has a unique solution (u, w, v, R, a, S) in the class

Moreover, the following hold\(:\)

- (i) There exists a strictly monotone increasing divergent sequence \(\,\{t_j\}_{j=0}^{\infty }\,\) with \(t_0=0\) such that \(a\in C^1((\,t_j,t_{j+1}\,))\) and

  $$\begin{aligned} S(t)= {\left\{ \begin{array}{ll} 0 \quad \text {in} \quad [\,t_{2j},t_{2j+1}\,),\\ 1 \quad \text {in} \quad [\,t_{2j+1},t_{2j+2}\,), \end{array}\right. } \quad \text {for any} \quad j \in \mathbb {N}\cup \{0\}; \end{aligned}$$

- (ii) There exist positive constants \(C_1<C_2\) such that

  $$\begin{aligned} C_1 \le R(t) \le C_2 \quad \text {for any} \quad t\ge 0. \end{aligned}$$

We mention the mathematical contributions of Theorem 1.1 and a feature of the system (IAS). The system is composed of two different systems (S0) and (S1) coupled through the binary function S(t), where (S0) and (S1) respectively denote (IAS) with \(S(t) \equiv 0\) and \(S(t) \equiv 1\). Generally, a system with such a structure is called a hybrid system. Regarding (S0), the assumption (A1) implies that R(t) diverges to infinity as \(t \rightarrow \infty \) (see Theorem 3.4). On the other hand, regarding (S1), we can show that the assumption (A2) implies the following: (i) R(t) diverges to infinity as \(t \rightarrow \infty \) if \(u_0\) is sufficiently small (Theorem 3.2); (ii) R(t) converges to 0 as \(t \rightarrow \infty \) if \(u_0\) is sufficiently close to 1 (Theorem 3.3). It is natural to ask whether a solution R(t) of (IAS) is bounded or not. One of the contributions of the present paper is to show how to determine thresholds \(0< r_0< r_1 < \infty \) such that (IAS) with these thresholds has a bounded solution that switches infinitely many times. Furthermore, due to the discontinuity of S(t), one expects the switching solution to have limited smoothness. However, Theorem 1.1 indicates that the switching solution gains regularity with the aid of the “indirectly controlled parameter” a(t). The other contribution of this paper is to clarify mathematically the intrinsic structure of the hybrid system (IAS).
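The interplay between two modes and two thresholds can be illustrated on a scalar toy problem. The sketch below is not the system (IAS). It replaces the PDE dynamics by the assumed laws \(R' = \texttt{grow}\cdot R\) off treatment and \(R' = -\texttt{shrink}\cdot R\) on treatment, and all names and rates are hypothetical. It only shows how a hysteresis rule keeps a quantity that would otherwise diverge or vanish inside a bounded range.

```python
def simulate_toy_hybrid(r0, r1, radius, grow=0.3, shrink=0.5, dt=1e-3, t_end=60.0):
    """Explicit Euler simulation of a toy two-mode system with hysteresis.

    Off treatment the toy radius grows exponentially; on treatment it decays.
    Treatment starts when radius >= r1 and stops when radius <= r0.
    Returns the switching times and the observed range of the radius.
    """
    on_treatment = False
    t = 0.0
    switch_times = []
    r_min = r_max = radius
    while t < t_end:
        rate = -shrink if on_treatment else grow
        radius += dt * rate * radius  # one explicit Euler step
        t += dt
        if not on_treatment and radius >= r1:
            on_treatment = True
            switch_times.append(t)
        elif on_treatment and radius <= r0:
            on_treatment = False
            switch_times.append(t)
        r_min = min(r_min, radius)
        r_max = max(r_max, radius)
    return switch_times, r_min, r_max


switch_times, r_min, r_max = simulate_toy_hybrid(r0=1.0, r1=2.0, radius=1.5)
```

In this toy setting every choice \(0<r_0<r_1\) works, because each mode is monotone. In (IAS) the on-treatment mode can itself diverge (Theorem 3.2), which is why the choice of thresholds is delicate there.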

We mention the clinical contribution of Theorem 1.1. Although one can expect that the system (IAS) tells us how to optimally plan the IAS therapy for each prostate cancer patient, this is not a trivial matter. To do so, we first have to prove the existence of admissible thresholds for each patient. Moreover, if the admissible thresholds are not unique, then we have to investigate their optimality. Here, we say that the thresholds are admissible for a prostate cancer patient if, for the patient's initial data, (IAS) with the thresholds has a switching solution. Although [23] indicated that the problem, even the existence, is difficult to analyze mathematically, they showed numerically that (i) the IAS therapy fails for unsuitable thresholds, more precisely, the radius of the tumor diverges to infinity after several switchings, whereas (ii) the IAS therapy succeeds for suitable thresholds, i.e., the radius of the tumor remains in a bounded range through infinitely many switchings. One of the clinical contributions of Theorem 1.1 is to prove the existence of admissible thresholds for each patient, provided that (A0)–(A2) are fulfilled. Moreover, Theorem 1.1 also implies that the IAS therapy has an advantage over the CAS therapy for some patients. Indeed, Theorem 3.2 gives an instance showing a failure of the CAS therapy, whereas Theorem 1.1 asserts that the same patient can be treated successfully by the IAS therapy. This is an example in which the switching strategy of the IAS therapy can be a successful strategy. On the other hand, the pair of admissible thresholds given by Theorem 1.1 is not uniquely determined. Thus, in order to optimally plan the IAS therapy, we have to investigate its optimality. However, the optimality of the admissible thresholds is an open problem.

The paper is organized as follows: In Sect. 2, we give a modified system of (IAS) and reduce the system to a simple hybrid system. Making use of the modified system, we prove the short time existence of the solution to (IAS). In Sect. 3, we show the existence of the non-switching solution of (IAS) for any finite time. Moreover, we classify the asymptotic behaviors of the non-switching solutions in terms of \(f_i\) and \(c_i\). In Sect. 4, we prove Theorem 1.1, i.e., we show the existence of a switching solution of (IAS) and give its property.

2 Short Time Existence

The main purpose of this section is to show the short time existence of the solution of (IAS). As mentioned in [23], it is difficult to prove that (IAS) has a short time solution in the Hölder space (see Remark 4.1 in [23]). The difficulty arises from the singularity of \(v/\rho \) at \(\rho =0\). Indeed, the singularity prevents us from applying the Schauder estimate. To overcome the difficulty, we first consider a modified hybrid system. More precisely, we replace the “boundary condition”

by

Then the modified hybrid system is expressed as follows:

To begin with, we show that \(u+w\) is invariant under (mIAS).

Lemma 2.1

Let \((u_0, w_0, R_0, a_0, S_0)\) be an initial data satisfying (3). Assume that (u, w, v, R, a, S) is a solution of (mIAS) with \(u,w \in C^{2+\alpha ,1+\alpha /2} (Q_T)\) and \(S(t)\equiv S_0\) in \([\,0,T\,)\). Then \(u+w \equiv 1\) in \(B_1\times [\,0,T\,)\).

Proof

Setting \(V:= u+w\), we reduce (mIAS) to the following system:

In the derivation of the second equation in (5), we used the fact \(F_u + F_w = F_v\) and the equation on v. We shall prove that \(V \equiv 1\) in \(B_1\times [\,0,T\,)\). The second equation in (5) is written as

in terms of the three-dimensional Cartesian coordinates, where \(\rho = |x|\). In what follows, we use \(\nabla \) and \(\varDelta \) instead of \(\nabla _x\) and \(\varDelta _x\), respectively, if there is no fear of confusion. First, we observe from (6) that

We start with an estimate of \(J_1\). Since it follows from the third and fourth equations in (5) that

we have

where \(\kappa \) is a positive constant given by

We turn to \(J_2\). By the relation

the integral \(J_2\) is reduced to

Observing that

and using Hölder’s inequality and Young’s inequality, we find

Regarding \(J_3\) and \(J_4\), the same argument as in the estimate of \(J_2\) asserts that

Thus, letting \(\varepsilon >0\) small enough, we obtain

Since \(V(\cdot ,0)=1\), applying Gronwall’s inequality to (7), we obtain the conclusion. \(\square \)

Here we reduce the system (mIAS) to the following hybrid system:

where

The reduction is justified as follows:

Lemma 2.2

The system (mIAS) is equivalent to (P).

Proof

If (u, w, v, R, a, S) satisfies (mIAS), then Lemma 2.1 implies that \(u+w\equiv 1\). Using \(w= 1-u\), we can reduce (mIAS) to (P). On the other hand, if (u, v, R, a, S) satisfies (P), then, setting \(w:=1-u\), we obtain (mIAS) from (P). \(\square \)

In order to prove the short time existence of a solution to (mIAS), we first consider the following system, which is formally derived from (P) provided \(S(t) \equiv S_0\).

Lemma 2.3

Let \((u_0, R_0, a_0, S_0)\) satisfy (3). Then there exists \(T>0\) such that the system (\(\mathrm{PS}_{0}\)) has a unique solution (u, v, R, a) in the class

Proof

We shall prove Lemma 2.3 by the contraction mapping principle. Let us define a metric space \((X_{M}, \Vert \cdot \Vert _X)\) as follows:

where \(\Vert u\Vert _X = \Vert u\Vert _{C^{\alpha ,\alpha /2}(Q_T)}\). We will choose the constants \(T>0\) and \(M>0\) appropriately later.

Step 1: We shall construct a mapping \(\varPsi : X_{M} \rightarrow X_{M}\). Let \(u\in X_{M}\). For \(u(\rho , t)\), let us define \((v(\rho ,t),R(t))\) as the solution of the following system:

For (v, R) defined by (9), let \(\tilde{u}(x,t)=\tilde{u}(|x|,t)=\tilde{u}(\rho ,t)\) denote the solution of

If we consider the problem as an initial-boundary value problem for a one-dimensional parabolic equation, the parabolic equation has a singularity at \(\rho =0\). In order to eliminate the singularity, we rewrite the problem in terms of the three-dimensional Cartesian coordinates as follows:

We prove that \(\tilde{u}\in X_{M}\) by applying the Schauder estimate to (11). Since \(u\in X_M\), it is clear that \(F(u,a)\in C^{\alpha ,\alpha /2}(Q_T)\), \(P(u,a)\in C^{\alpha ,\alpha /2}(Q_T)\), and

Moreover, since \(R(t)>0\) in \([\,0,T\,)\), the fact (12) implies \(1/R(t)^2 \in C^{\alpha /2}((\,0,T\,))\). In the following, we will show

- (i) Let us fix \(\rho \in (\,0,1\,)\) arbitrarily. Since now \(\mathscr {V}\) satisfies, for any \(0<t<s<T\),

  $$\begin{aligned} \mathscr {V}(\rho ,s)-\mathscr {V}(\rho ,t)=\frac{1}{\rho ^3}\int ^\rho _0 \{F(u(r,s),a(s))-F(u(r,t),a(t))\}r^2\,dr, \end{aligned} \tag{14}$$

  we estimate the integrand. It follows from \(u \in X_M\) that

  $$\begin{aligned}&|F(u(r,s),a(s))-F(u(r,t),a(t))| \\&\quad \le C(M)\left\{ |u(r,s)-u(r,t)| +\sum _{i=1}^2 |f_i(a(s))-f_i(a(t))|\right\} \\&\quad \le C(M)\left\{ M |s-t|^{\frac{\alpha }{2}} +\sum _{i=1}^2 |f_i(a(s))-f_i(a(t))|\right\} . \end{aligned} \tag{15}$$

  Furthermore, the mean value theorem implies

  $$\begin{aligned} |f_i(a(s))-f_i(a(t))|\le C|s-t| \quad \text {for} \quad i=1, 2, \end{aligned}$$

  where \(C=C(f_i, a_*, \gamma )\). Combining the estimate with (15), we find

  $$\begin{aligned} |F(u(r,s),a(s))-F(u(r,t),a(t))| \le C(M)|s-t|^{\frac{\alpha }{2}}. \end{aligned}$$

  Consequently, we deduce from (14) that

  $$\begin{aligned} |\mathscr {V}(\rho ,s)-\mathscr {V}(\rho ,t)| \le C(M)|s-t|^{\frac{\alpha }{2}}. \end{aligned}$$

- (ii) Let \(\rho =0\). Then by the same argument as in (i), we see that

  $$\begin{aligned} |\mathscr {V}(0,s)-\mathscr {V}(0,t)|=\frac{1}{3}|F(u(0,s),a(s))-F(u(0,t),a(t))| \le C(M)|s-t|^{\frac{\alpha }{2}} \end{aligned}$$

  for any \(0<t<s<T\).

- (iii) Fix \(0<t<T\) arbitrarily. Then, for any \(0<\rho<\sigma < 1\), it holds that

  $$\begin{aligned} \mathscr {V}(\sigma ,t)-\mathscr {V}(\rho ,t)&=\{ \mathscr {V}(\sigma ,t)-\mathscr {V}(0,t) \}-\{ \mathscr {V}(\rho ,t)-\mathscr {V}(0,t) \}\\&=\frac{1}{\sigma ^3}\int ^\sigma _\rho \{F(u(r,t),a(t))-F(u(0,t),a(t))\}r^2 \,dr\\&\quad +\left( \frac{1}{\sigma ^3}-\frac{1}{\rho ^3}\right) \int ^\rho _0 \{F(u(r,t),a(t))-F(u(0,t),a(t))\}r^2 \,dr. \end{aligned}$$

  Since \(u \in X_M\), we observe that

  $$\begin{aligned} |F(u(\rho ,t),a(t))-F(u(0,t),a(t))| \le C(M)|u(\rho ,t)-u(0,t)| \le C(M) \rho ^\alpha . \end{aligned}$$

  Therefore we obtain

  $$\begin{aligned} |\mathscr {V}(\sigma ,t)-\mathscr {V}(\rho ,t)|&\le C(M)\frac{1}{\sigma ^3}\int ^\sigma _\rho r^{2+\alpha } \,dr+C(M) \Bigm |\frac{\rho ^3-\sigma ^3}{\sigma ^3 \rho ^3}\Bigm |\int ^\rho _0 r^{2+\alpha } \,dr\\&\le C(M)\Bigm |\frac{\sigma ^3- \rho ^3}{\sigma ^{3-\alpha }}\Bigm | \le C(M)|\sigma - \rho |^\alpha . \end{aligned}$$

- (iv) Let us fix \(0<t<T\) arbitrarily. The same argument as in (iii) implies that

  $$\begin{aligned} |\mathscr {V}(\rho ,t)-\mathscr {V}(0,t)| \le C(M) \frac{1}{\rho ^3} \int ^\rho _0 r^{2+\alpha }\, dr \le C(M) \rho ^\alpha \quad \text {for any} \quad \rho \in (\,0,1\,). \end{aligned}$$

From (i)–(iv), we conclude (13). Hence, by virtue of (11) we can apply the Schauder estimate (Theorem 5.3, [16]) to (10):

On the other hand, it follows from the mean value theorem that

Therefore, for \(T<1\), we obtain

Consequently, for \(M:=1+\Vert u_0\Vert _{C^{2+\alpha }(B_1)}\), taking \(T<1\) small enough that

we deduce that \(\tilde{u}\in X_{M}\). We define a mapping \(\varPsi :X_{M} \rightarrow X_{M}\) as \(\varPsi (u)= \tilde{u}\).
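The spatial estimates (iii) and (iv) in Step 1 say that the weighted average \(\rho ^{-3}\int _0^\rho F\, r^2\,dr\) inherits the Hölder exponent \(\alpha \) of its integrand, including at \(\rho =0\), where its value is \(F(0)/3\). The following numerical sanity check is a sketch under stated assumptions: the helper `averaged` and the sample integrand \(F(r)=1+r^\alpha \) are hypothetical choices for illustration, for which the average equals \(1/3+\rho ^\alpha /(3+\alpha )\) in closed form.

```python
def averaged(F, rho, n=2000):
    """Midpoint-rule approximation of (1/rho^3) * int_0^rho F(r) r^2 dr.

    At rho = 0 the limit value F(0)/3 is returned.
    """
    if rho == 0.0:
        return F(0.0) / 3.0
    h = rho / n
    total = 0.0
    for k in range(n):
        r = (k + 0.5) * h  # midpoint of the k-th subinterval
        total += F(r) * r * r
    return total * h / rho**3


alpha = 0.5


def sample_F(r):
    # Hypothetical Hoelder-continuous integrand with exponent alpha.
    return 1.0 + r**alpha


grid = [k / 10 for k in range(11)]
values = [averaged(sample_F, rho) for rho in grid]

# Largest Hoelder quotient |V(sigma) - V(rho)| / |sigma - rho|^alpha on the grid.
hoelder_quotient = max(
    abs(values[j] - values[i]) / (grid[j] - grid[i]) ** alpha
    for i in range(len(grid))
    for j in range(i + 1, len(grid))
)
```

For this sample integrand the quotient stays below \(1/(3+\alpha )\) up to quadrature error, in line with the bound \(C(M)|\sigma -\rho |^\alpha \).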

Step 2: We show that \(\varPsi \) is a contraction mapping. Let \(u_i\in X_{M}\). We denote by \((v_i(\rho ,t),R_i(t))\) the solution of (9) with \(u=u_i\), where \(i=1,2\). For \(\tilde{u}_i:=\varPsi (u_i)\), set \(U:=\tilde{u}_1-\tilde{u}_2\). By a simple calculation, we see that U satisfies

where \(G(u_1,u_2)\) is given by

Adopting a similar argument as in Step 1, we find \(G(u_1,u_2)\in C^{\alpha ,\alpha /2}(Q_T)\) and

Then the Schauder estimate asserts that

By the fact that \(U(|x|,0)=0\) in \(B_1\) and a similar argument as in (16), it holds that

where \(C= C(T, u_0,R_0)\). Thus, taking T small enough that \(T^{1-\alpha /2} C<1\), we conclude that \(\varPsi \) is a contraction mapping. Then Banach’s fixed point theorem indicates that there exists a unique \(u\in X_{M}\) such that \(\varPsi (u)=u\). By the definition of \(\varPsi \), u is the unique solution of (\(\mathrm{PS}_{0}\)) on \([\,0,T\,)\). Moreover, we infer from the above argument that \(u \in C^{2+\alpha ,1+\alpha /2}(Q_T)\).
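The mechanism of Steps 1 and 2, a map that becomes a contraction once the time interval is short, can be seen on a much simpler problem. The sketch below swaps the parabolic system for the scalar logistic ODE \(u'=u(1-u)\) and iterates the Picard map \(\varPsi (u)(t)=u_0+\int _0^t f(u(s))\,ds\) on a grid. It is a toy analogue, not the map \(\varPsi \) of the proof, and the names `picard_iterates` and `logistic_rhs` are hypothetical.

```python
import math


def logistic_rhs(u):
    # Right-hand side of the toy ODE u' = u (1 - u); Lipschitz constant 1 on [0, 1].
    return u * (1.0 - u)


def picard_iterates(u0, T, n_steps=200, n_iter=8):
    """Iterate the discretized Picard map (trapezoidal quadrature) on [0, T].

    Returns the last iterate on the grid and the sup-norm gaps between
    consecutive iterates.
    """
    h = T / n_steps
    u = [u0] * (n_steps + 1)  # initial guess: constant function
    gaps = []
    for _ in range(n_iter):
        new = [u0]
        integral = 0.0
        for k in range(n_steps):
            integral += 0.5 * h * (logistic_rhs(u[k]) + logistic_rhs(u[k + 1]))
            new.append(u0 + integral)
        gaps.append(max(abs(a - b) for a, b in zip(new, u)))
        u = new
    return u, gaps


u0, T = 0.2, 0.5
u_final, gaps = picard_iterates(u0, T)
exact_end = u0 * math.exp(T) / (1.0 - u0 + u0 * math.exp(T))
```

Since the right-hand side has Lipschitz constant at most 1 on \([0,1]\), the map contracts in the sup norm by a factor of at most T, so the gaps shrink at least geometrically when \(T<1\). This plays the role of the condition \(T^{1-\alpha /2}C<1\) above.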

Finally we prove that \(v \in C^{1+\alpha , \alpha /2} ([\,0,1\,) \times (\,0,T\,))\cap C^1([\,0,1\,) \times (\,0,T\,))\). By a direct calculation, we have \(v \in C([\,0,T\,); H^1(I))\). Combining the fact with the Sobolev embedding theorem \(H^1(I) \hookrightarrow C^{0,1/2}(\bar{I})\), we obtain \(v \in C([\,0,T\,); C^{0,1/2}(\bar{I}))\), in particular \(v \in C(\bar{I} \times [\,0,T\,))\). Thus it follows from the continuity that

Then, along the same line as in [23], we see that \(v \in C^1([\,0,1\,)\times (\,0,T\,))\). Moreover, applying the same argument as in (13) to

we find \(v \in C^{1+\alpha , \alpha /2}([\,0,1\,) \times (\,0,T\,))\). This completes the proof. \(\square \)

Theorem 2.1

Let \((u_0, w_0, R_0, a_0,S_0)\) satisfy (3). Then there exists \(T>0\) such that the system (IAS) has a unique solution (u, w, v, R, a, S) with \(S(t) \equiv S_0\) in \([\,0,T\,)\) in the class

Proof

Let (u, v, R, a) be the solution of (\(\mathrm{PS}_{0}\)). According to Lemma 2.3, we see that the solution (u, v, R, a) belongs to the class

for some \(T>0\). To begin with, we prove the existence of a short time solution to (P). If there exists \(T_1 \in (\,0,T\,]\) such that \(R(t) \equiv R_0\) in \([\,0, T_1\,)\), then (u, v, R, a, S) with \(S(t) \equiv S_0\) is a solution of (P), for the fact that \(dR/dt = 0\) in \((\,0,T_1\,)\) implies that S(t) does not switch in \((\,0,T_1\,)\). On the other hand, if there exists no such \(T_1\), there exists \(T_2 \in (\,0,T\,]\) such that \(R(t) \not \in \{r_0, r_1\}\) in \((\,0,T_2\,)\), for R(t) is continuous. Then it is clear that (u, v, R, a, S) with \(S(t) \equiv S_0\) satisfies (P) in \((\,0,T_2\,)\). Thus we see that (u, v, R, a, S) with \(S(t) \equiv S_0\) is a solution of (P) in \((\,0,T^*\,)\) for some \(T^* \in (\,0,T\,]\).

We show the uniqueness. Let \((u_1, v_1, R_1, a_1, S_1) \not = (u_2, v_2, R_2, a_2, S_2)\) be solutions of (P) satisfying (19). Along the same line as above, we see that \(S_1(t) = S_2(t) =S_0\) in \([\,0, \widetilde{T}\,)\) for some \(\widetilde{T} \in (\,0, T^*\,]\). Then the uniqueness of the solution of (\(\mathrm{PS}_{0}\)) leads to a contradiction.

Thanks to Lemma 2.2, we observe that (mIAS) has a unique solution. Moreover, it follows from (18) that the solution satisfies (IAS). Finally we show the uniqueness of solutions of (IAS). Suppose that \((u_i, w_{i}, v_i, R_i,a_i,S_0)\) are solutions of (IAS) in the class (19), where \(i=1,2\). Then, by the proof of Lemma 2.2, we observe that (IAS) is reduced to (P) with the condition on \(v/\rho \) replaced by (4). It is clear that \(a_1(t)=a_2(t)\) in \([\,0,T\,)\). Set \(U:=u_1-u_2\). Then it follows from Step 2 in the proof of Lemma 2.3 that

Moreover, we find

Letting T be small enough such that \(CT^{1- \alpha /2} <1\), we observe from (21) that \(\Vert U\Vert _{C^{\alpha , \alpha /2}} =0\). Combining the fact with (20), we obtain the conclusion. \(\square \)

In order to prove u, \(w \in [\, 0, 1\,]\) in \(B_1\times [\, 0,T \,)\), we apply a parabolic comparison principle to (IAS). Using (u, v, R, a, S), which is the solution of (P) in \(Q_T\) constructed by Theorem 2.1, we define the operator

as follows:

Regarding the operator \(\mathscr {P}_i\), the following parabolic comparison principle holds:

Lemma 2.4

Assume that \(z,\zeta \in C^{2,1}(B_1\times (\,0,T\,))\cap C(\overline{B_1} \times [\,0,T\,)) \) satisfy

Then \(z \ge \zeta \) in \(\overline{B_1} \times [\,0,T\,)\).

Proof

Since the proof of Lemma 2.3 implies that the coefficients in the operator \(\mathscr {L}'(v,R)\) are bounded, we can prove Lemma 2.4 by the standard argument (e.g., see [4, 17]). \(\square \)

By virtue of Lemma 2.4, one can verify \(0\le u \le 1\) and \(0 \le w \le 1\):

Lemma 2.5

Let (u, w, v, R, a, S) be a solution of (IAS) obtained by Theorem 2.1. Then, \(0\le u \le 1\) and \(0\le w \le 1\) in \(\overline{B_1} \times [\,0,T\,)\).

We close this section with a property of certain quantities of u and w.

Lemma 2.6

Let us define

Then U, W, \(V_1\), and \(V_2\) satisfy

respectively, where g is a function defined by

Proof

Equations (22) and (23) were obtained in [23]. We shall show (24) and (25). It follows from Jensen’s inequality and Lemma 2.5 that

Combining (27) with the same argument as in [23], we obtain the conclusion. \(\square \)

Remark 2.1

The function g denotes the difference of net growth rate of AD cells and AI cells. We employ the notation frequently in the rest of the paper.

3 Asymptotic Behavior of Non-switching Solutions

We devote this section to investigating the asymptotic behavior of “non-switching” solutions of (IAS). To begin with, we shall show the long time existence of the non-switching solutions of (IAS).

Theorem 3.1

Let \((u_0, w_0, R_0, a_0,S_0)\) satisfy (3) and \(S_0=1\). Then the system (IAS) with \(r_0=0\) has a unique solution (u, w, v, R, a, S) with \(S(t)\equiv 1\) in \([\,0,\infty \,)\) in the class

Proof

It follows from Theorem 2.1 that (IAS) with \(r_0=0\) has a unique solution with \(S(t)\equiv 1\) in \(Q_T\) for some \(T>0\). Since

we observe from the continuity of the solution that R(t) is positive, i.e., \(S(t) \equiv 1\), as long as the solution exists. Thus, by a standard argument (e.g., see [6]), we prove that the solution can be extended beyond any \(T>0\). Indeed, if there exists \(\widetilde{T}>0\) such that the solution cannot be extended beyond \(\widetilde{T}\), then the proof of Theorem 2.1 implies that

On the other hand, since u is a solution of (\(\mathrm{PS}_{0}\)) on \([\,0,\widetilde{T}\,)\), it holds that

Since (29) contradicts (28), we obtain the conclusion. \(\square \)

Remark 3.1

The system (IAS) with \(r_0=0\) and \(S_0=1\) describes a tumor growth under the CAS therapy.

Corollary 3.1

Let \((u_0, w_0, R_0, a_0, S_0)\) satisfy (3) and \(S_0=0\). Then the system (IAS) with \(r_1=\infty \) has a unique solution (u, w, v, R, a, S) with \(S(t)\equiv 0\) in \([\,0,\infty \,)\) in the class

In the following, we classify the asymptotic behavior of non-switching solutions obtained by Theorem 3.1 and Corollary 3.1. Recalling Lemma 2.2 and Theorem 2.1, we may consider (P) instead of (IAS).

If \(u_0\) is trivial, i.e., \(u_0 \equiv 0\) or \(u_0 \equiv 1\), then Lemma 2.4 asserts that u is also trivial in \(Q_T\). Thus it is sufficient to consider the initial data \((u_0, R_0,a_0,S_0)\) satisfying

where \(0<\alpha <1\). Regarding \(f_i\) and \(c_i\), we assume (A0) throughout this section.

From now on, for a function \(h:[\,0,a_*\,]\rightarrow \mathbb {R}\), we define \(\Vert h \Vert _{\infty }\) by

First we consider the asymptotic behavior of solutions to (P) with \(S\equiv 1\).

Theorem 3.2

Let \(r_0=0\). Let \((u_0,R_0,a_0,S_0)\) satisfy (IC) and \(S_0=1\). Assume that either of two assumptions holds\(:\)

(i) \(g(0)+c_2<0\mathrm{;}\)

(ii) \(g(0)+c_2>0\) and

Then the solution (u, v, R, a, S) of (P) satisfies \(R(t) \rightarrow \infty \) as \(t\rightarrow \infty \).

Proof

To begin with, we note that \(S(t)\equiv 1\) under (P) with \(r_0=0\) and \(S_0=1\).

We prove the case (i). Since \(S \equiv 1\) yields the monotonicity of a(t), especially that of \(f_{i}(a(t))\), from the assumptions (A0) and (i), we find \(s_1>0\) such that

Recalling that \(u_0 \not \equiv 1\) yields \(V_2(t)>0\) for any \(t\ge 0\) and setting \(\widetilde{V_2}(t):=1/V_2(t)\), we observe from (25) that

Applying Gronwall’s inequality to (32), we have

Since

one can verify that \(\limsup _{t\rightarrow \infty } \widetilde{V_2}(t) \le 3\). On the other hand, since \(w \le 1\) yields \(\widetilde{V_2}(t) \ge 3\) in \([\, 0, \infty \,)\), we find \(\liminf _{t \rightarrow \infty } \widetilde{V_2}(t) \ge 3\). Thus we have \(\lim _{t\rightarrow \infty } \widetilde{V_2}(t)=3\) and then

By way of \(u+w\equiv 1\), it follows from (33) that for any \(\varepsilon \) with

there exists \(T_1>s_1\) such that

In what follows, let \(t>T_1\). Since R satisfies

it is sufficient to estimate the integrals in the right-hand side of (36). We observe from the continuity of \(v(1,\cdot )\) that

for some \(C>0\). Moreover, we obtain

Hence, it follows from (34) and (35) that \(\liminf _{t \rightarrow \infty }R(t) = \infty \).

Next we turn to the case (ii). By the assumption (A0) and the monotonicity of \(f_i(a(\cdot ))\), there exists \(s_2\ge 0\) such that

Recalling \(V_1(t)>0\) in \([\,0,\infty \,)\) and setting \(\widetilde{V_1}(t):=1/V_1(t)\), we reduce (24) to

Since it follows from the same argument as in (i) that

we estimate the integral in the right-hand side of (37). Noting that \(a(\cdot )\) is monotone decreasing, we use the change of variable \(a(s)=z\), and then

where \(\tilde{z}\in (\,0,a_0\,)\). Combining (37) with (38), we obtain

Under (A0) and (31), the inequality implies that \(\widetilde{V_1} \rightarrow \infty \) as \(t \rightarrow \infty \), i.e., \(V_1(t) \rightarrow 0 \) as \(t \rightarrow \infty \). Thus for any \(\varepsilon \) with

there exists \(T_2\ge s_2\) such that

By virtue of (39) and (40), we have

Thus we see that \(\liminf _{t \rightarrow \infty } R(t)=\infty \) along the same line as in (i). \(\square \)

Next we give the asymptotic behavior of solutions to (P) with \(r_0=0\) and \(S_0=1\).

Theorem 3.3

Let \(r_0=0\). Let \((u_0,R_0,a_0,S_0)\) satisfy (IC) and \(S_0=1\). Assume that

and

Then the solution (u, v, R, a, S) of (P) satisfies \(R(t) \rightarrow 0\) as \(t\rightarrow \infty \).

Proof

Recalling that \(S \equiv 1\) under (P) with \(r_0=0\) and \(S_0=1\), and using (A0), we find \(s_3 \ge 0\) such that

Let \(\overline{w}\) be the solution of the following initial value problem:

Then Lemma 2.4 asserts that

i.e., \(\overline{w}\) is a supersolution of w. Since \(w_0 \not \equiv 0\), the relation (44) implies \(\overline{w}(t)>0\) for any \(t\ge 0\). Setting \(\omega := 1/ \overline{w}\), we see that \(\omega \) is expressed by

Here we have

Since it follows from (41) that

we observe from (42) that \(\lim _{t \rightarrow \infty }\omega (t)= \infty \), i.e., \(\lim _{t \rightarrow \infty }\overline{w}(t) = 0\), where we used the positivity of \(\overline{w}\). With the aid of (44), for any \(\varepsilon \) with

there exists \(T_3>s_3\) such that

Recalling \(u=1-w\) and using the same argument as in the proof of Theorem 3.2 (i), we can verify that

Then (45) yields \( \limsup _{t \rightarrow \infty } R(t)= 0\). \(\square \)

We turn to the case of (P) with \(r_1=\infty \) and \(S_0=0\). We note that (P) with \(r_1=\infty \) and \(S_0=0\) describes the behavior of prostate tumor under non-medication.

Theorem 3.4

Let \(r_1=\infty \). Let \((u_0,R_0,a_0,S_0)\) satisfy (IC) and \(S_0=0\). We suppose that one of the following assumptions holds\(:\)

(i) \(f_1(a_*)-c_1>0\mathrm{;}\) (ii) \(f_2(a_*)-c_2>0\mathrm{;}\) (iii) \(g(a_*)-c_1>0\mathrm{;}\)

(iv) \(-g(a_*)+c_1>0\), \(f_2(a_*)>0\), and

(v) \(g(a_*)+c_2>0\) and

Then the solution (u, v, R, a, S) of (P) satisfies \(R(t) \rightarrow \infty \) as \(t\rightarrow \infty \).

Proof

We prove the case (i). Remark that \(S \equiv 0\) yields the monotonicity of a(t), especially that of \(f_{i}(a(t))\). Under the assumption (i), we find \(s_4 \ge 0\) such that \(f_1(a(t))-c_1>0\) for any \(t \ge s_4\). Since it follows from (22) that

making use of Gronwall’s inequality and the monotonicity of \(f_1(a(\cdot ))\), we find

Consequently we see that

Regarding the other cases, we obtain the conclusion along the same line as in the proof of Theorem 3.2. \(\square \)

By the same argument as in the proof of Theorem 3.3, we obtain the following:

Theorem 3.5

Let \(r_1=\infty \). Let \((u_0,R_0,a_0,S_0)\) satisfy (IC) and \(S_0=0\). Assume that

and (46). Then the solution (u, v, R, a, S) of (P) satisfies \(R(t) \rightarrow 0\) as \(t\rightarrow \infty \).

4 Proof of the Main Theorem

The purpose of this section is to prove the existence of a switching solution of (IAS) and investigate its properties under the assumptions (A0)–(A2). Here we note that (A1) and (A2) are written as \(g(a_*)-c_1>0\) and \(g(0)+c_2>0\), respectively, where g was defined by (26). For this purpose, we may deal with (P) instead of (IAS), since the solution of (P) constructed in Sect. 2 also satisfies (IAS). In the following, we fix \((u_0,R_0,a_0,S_0)\) satisfying (IC), \(u_0>0\), and \(S_0=0\), arbitrarily.
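The two inequalities \(g(a_*)-c_1>0\) and \(g(0)+c_2>0\) are easy to test for concrete data. The sketch below assumes \(g=f_1-f_2\), as suggested by Remark 2.1, and uses made-up linear growth rates. These are not the typical \(f_i\) of [23], and (A0) is not checked here because its statement is not reproduced in this text. The name `satisfies_A1_A2` is hypothetical.

```python
def satisfies_A1_A2(f1, f2, c1, c2, a_star):
    """Return the pair (A1 holds, A2 holds), assuming g = f1 - f2.

    A1: g(a_*) - c1 > 0,  A2: g(0) + c2 > 0.
    """
    def g(a):
        return f1(a) - f2(a)

    return g(a_star) - c1 > 0, g(0.0) + c2 > 0


# Hypothetical growth rates on [0, a_*] with a_* = 1 (illustrative only).
def f1(a):
    return -0.2 + 0.6 * a  # AD cells: decay without androgen, growth with it


def f2(a):
    return 0.1 - 0.05 * a  # AI cells: grow even without androgen


both_hold = satisfies_A1_A2(f1, f2, c1=0.1, c2=0.4, a_star=1.0)
a2_fails = satisfies_A1_A2(f1, f2, c1=0.1, c2=0.2, a_star=1.0)
```

With these made-up rates, \(g(0)=-0.3\), so (A2) holds only when \(c_2\) exceeds 0.3.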

To begin with, we shall study the behavior of solutions of (P) with \(S\equiv 0\). More precisely, for each “initial data” \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\), we consider the following system:

where the operator \(\mathscr {L}'\) was defined by (8). We characterize the time variable in terms of the solution \({\tilde{a}}(\cdot )\) to (P0). Recalling that \(f_1\) is monotone, we define a function \(\tau _0 : (\,0, f_1(a_*)-f_1({\tilde{a}}_0)\,] \rightarrow [\,0,\infty \,)\) as

where \({\tilde{a}}^{-1}\) and \(f_1^{-1}\) denote the inverse functions of \({\tilde{a}}\) and \(f_1\), respectively. Note that, since \({\tilde{a}}(t) \uparrow a_*\) as \(t\rightarrow \infty \), \(\varepsilon \downarrow 0\) is equivalent to \(\tau _0(\varepsilon )\rightarrow \infty \).
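The reparametrization of time by \(\varepsilon \) can be made concrete. By the identity \({\tilde{a}}(\tau _0(\varepsilon )) = f_1^{-1}(f_1(a_*)-\varepsilon )\) used in the proof of Lemma 4.3, \(\tau _0(\varepsilon )\) is the time at which \(f_1({\tilde{a}}(t))\) is exactly \(\varepsilon \) below its limit \(f_1(a_*)\). The sketch below evaluates this in closed form under two assumptions that are not taken from this section: the androgen level with \(S\equiv 0\) obeys \(d{\tilde{a}}/dt=-\gamma ({\tilde{a}}-a_*)\), and \(f_1\) is replaced by the illustrative increasing function \(f_1(a)=a-0.5\). The constants `GAMMA` and `A_STAR` are hypothetical.

```python
import math

GAMMA = 0.08    # hypothetical relaxation rate of the androgen level
A_STAR = 1.0    # hypothetical normal androgen level a_*

def f1(a):
    """Illustrative strictly increasing stand-in for f_1."""
    return a - 0.5

def f1_inv(y):
    """Inverse of the stand-in f1."""
    return y + 0.5

def a_tilde(t, a0):
    """Solution of the assumed equation da/dt = -GAMMA * (a - A_STAR), a(0) = a0."""
    return A_STAR - (A_STAR - a0) * math.exp(-GAMMA * t)

def a_tilde_inv(z, a0):
    """Time at which a_tilde reaches the level z, for a0 <= z < A_STAR."""
    return math.log((A_STAR - a0) / (A_STAR - z)) / GAMMA

def tau0(eps, a0):
    """tau_0(eps) = a_tilde^{-1}(f_1^{-1}(f_1(a_*) - eps))."""
    return a_tilde_inv(f1_inv(f1(A_STAR) - eps), a0)
```

With these choices, `tau0` vanishes at the right endpoint \(\varepsilon = f_1(a_*)-f_1({\tilde{a}}_0)\) and grows without bound as \(\varepsilon \downarrow 0\), in line with the equivalence noted above.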

From now on, we will follow the notation \(\Vert \cdot \Vert _{\infty }\) defined in (30).

Lemma 4.1

Assume that there exist constants \(A \in (\,0,1\,)\) and \(\kappa \in (\,0,a_*\,)\) such that \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\) satisfies (IC) and the following\(:\)

Then there exists a strictly monotone increasing continuous function

with \(\varGamma _0(\varepsilon ; A, \kappa ) \downarrow 0\) as \(\varepsilon \downarrow 0\) such that the solution of (P0) satisfies

Proof

Let us consider

By way of Lemma 2.4, one can easily verify that \(\overline{w}\) is a supersolution of \(1-{\tilde{u}}\). Solving (51) and setting \(t=\tau _0(\varepsilon )\), we find

where \(\omega =1/\overline{w}\). From the change of variable \({\tilde{a}}(s)=z\), we have

where we used (A1) in the last inequality. Therefore, using (49) and (50), we define the required function \(\varGamma _0(\varepsilon ; A, \kappa )\) as follows:

This completes the proof. \(\square \)

Lemma 4.2

Under the same assumption as in Lemma 4.1, there exists a constant \(\varepsilon _1 \in (\,0, f_1(a_*) \,)\), independent of \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\), such that the solution of (P0) satisfies

Proof

Since \(d {\widetilde{R}}/dt\) is written as

we observe that the sign of \(d{\widetilde{R}}/dt\) is determined by that of the integral in (53). In particular, we focus on the sign of F. From \(\partial ^{2}_{z} F(z,\alpha )= 2(c_1 + c_2) > 0\), we find

where we used the monotonicity of \(\partial _{z} F(1,\alpha )=g(\alpha )-c_1-c_2\) in the second inequality. Here, noting the positivity of \(\partial _z F(1, a_*)\), we denote by \(z_0(\alpha )\) the zero point of \(y(z;\alpha )\) given by

Since (48) yields that

we see that

Then, for each \(\varepsilon \in (\,0, f_1(a_*)\,)\), we observe from (56) that \(y(z, {\tilde{a}}(\tau _0(\varepsilon )))\ge 0\) for all \(z \in [\, z_0({\tilde{a}}(\tau _0(\varepsilon ))), 1\,]\). Combining the fact with (54)–(55), we infer that

In order to complete the proof of Lemma 4.2, it is sufficient to prove the claim: there exists a constant \(\varepsilon _1 \in (\, 0, f_{1}(a_{*})\,)\), independent of \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\), such that the solution \(({\tilde{u}},{\tilde{v}},{\widetilde{R}},{\tilde{a}})\) of (P0) satisfies

Indeed, combining the claim with (57), we clearly obtain the conclusion. We shall show the claim by way of Lemma 4.1. Since \(z_0({\tilde{a}}(\tau _0(f_1(a_*))))=1\) and

from the monotonicity of \(z_0({\tilde{a}}(\tau _0(\varepsilon )))\) and \(1 - \varGamma _0(\varepsilon ; A, \kappa )\), we find a constant \({\tilde{\varepsilon }_1} \in (\,0, f_1(a_*)\,)\) uniquely, independent of \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\), such that

Recalling (50) implies that \(f_1(\kappa ) \ge f_1({\tilde{a}}_0)\) and setting \(\varepsilon _1 := \min \{\tilde{\varepsilon }_1, f_1(a_*) - f_1(\kappa ) \}\), we observe from (58) and Lemma 4.1 that

Then the claim holds true and we have completed the proof. \(\square \)

Lemma 4.3

Let \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)=(u_0,R_0,a_0)\). Then there exist monotone decreasing functions \(M^-\) and \(M^+\) defined on \((\,0,f_1(a_*)-f_1(0)\,]\) such that the solution of (P0) satisfies

where the second inequality is strict for any \(\varepsilon \in (\,0,f_1(a_*)-f_1(a_0))\). Moreover, \(M^-\) and \(M^+\) satisfy the following\(:\)

Proof

Since \({\widetilde{R}}(\tau _0(\varepsilon ))\) is given by

we will estimate the integral in (62). To this aim, setting \({\tilde{w}}=1-{\tilde{u}}\), we decompose the integral as follows:

First we construct \(M^-\). Regarding \(I_1\), it follows from Jensen’s inequality that

Employing a differential inequality in (25), we see that \(\mathscr {W}\) satisfies

Furthermore, the same argument as in (52) yields

where

Hence, combining (64) with (65)–(66), we have

Changing the variable

and setting

we can define \(M^-_1:(\, 0, f_1(a_*)- f_1(a_0) \,]\rightarrow \mathbb {R}\) as follows:

where \(C_1= (c_1+c_2)/ (27(g(a_*)+c_2))\), and

Regarding \(I_2\), it follows from \({\tilde{w}}=1-{\tilde{u}}\) that

Using (24) and the same calculation as in (66), we have

Then, by the same argument as in the derivation of \(M_1^-\), we obtain

It follows from the same argument as in (52) that

where \(\tilde{z}\in (a_0,a_*)\). Setting \(M^-(\varepsilon )= \sum ^{3}_{i=1} M_i^-(\varepsilon )\) and recalling (48), we see that \(M^-\) is well-defined on \((\,0,f_1(a_*)-f_1(a_0)\,]\).

We shall derive \(M^+\). Since \({\tilde{w}}=1-{\tilde{u}}\le 1\), the same argument as in \(M_2^-\) yields

Regarding \(I_2\), we have

Eliminating the negative term from the first line in (69), we find

where the first inequality follows from the monotonicity of \(f_1\), and it is strict for any \(\varepsilon \in (\,0,f_1(a_*)-f_1(a_0))\). Setting \(M^+(\varepsilon ):= \sum ^{3}_{i=1} M_i^+(\varepsilon )\), we observe that \(M^+(\varepsilon )\) is well-defined on \((\,0,f_1(a_*)-f_1(a_0)\,]\).

From the definition of \(M^-\) and \(M^+\), we see that (59) and (61) hold true. Moreover, thanks to \({\tilde{a}}(\tau _0(\varepsilon )) = f_1^{-1}(f_1(a_*)-\varepsilon )\), we infer that \(M^-\) and \(M^+\) can be extended on \((\,0,f_1(a_*)-f_1(0)\,]\) and (60) holds. This completes the proof. \(\square \)

Lemma 4.4

Let \(M^\pm : (\,0,f_1(a_*)-f_1(0)\,] \rightarrow \mathbb {R}\) be the functions constructed by Lemma 4.3. Let \(({\tilde{u}}_0, {\widetilde{R}}_0, {\tilde{a}}_0)\) satisfy

and (IC), where \(\kappa _0\) is defined by (68). Then the solution of (P0) satisfies

where the second inequality is strict for any \(\varepsilon \in (\,0, f_1(a_*)-f_1({\tilde{a}}_0)\,)\).

Proof

In the same manner as in the proof of Lemma 4.3, we see that (59) holds true with \((M^-,M^+,a_0)\) replaced by \((\tilde{M}^-,\tilde{M}^+,{\tilde{a}}_0)\), where \(\tilde{M}^-\) and \(\tilde{M}^+\) are obtained from \(M^-\) and \(M^+\), respectively, by replacing \((u_0,a_0)\) with \(({\tilde{u}}_0,{\tilde{a}}_0)\). Since (70) and (71) imply that

and

we find

Thus we obtain (72). \(\square \)

In order to investigate the behavior of solutions of (P) with \(S\equiv 1\), for each “initial data” \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\), we consider the following system:

We characterize the time variable in terms of the solution \({\tilde{a}}(\cdot )\) to (P1). In the same manner as in (48), recalling the monotonicity of \(f_1\), we define a function \(\tau _1 : (\,0, f_1({\tilde{a}}_0)-f_1(0)\,] \rightarrow [\,0,\infty \,)\) as

Since \({\tilde{a}}(t) \downarrow 0\) as \(t\rightarrow \infty \), \(\delta \downarrow 0\) is equivalent to \(\tau _1(\delta )\rightarrow \infty \).

Lemma 4.5

Let \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\) satisfy (IC) and

Then the solution \(({\tilde{u}},{\tilde{v}},{\widetilde{R}},{\tilde{a}})\) of (P1) satisfies

Proof

Recalling that (A2) and (74) respectively correspond to (41) and (42), we can construct the supersolution \(\overline{w}\) of \({\tilde{w}}=1-{\tilde{u}}\) along the same argument as in the proof of Theorem 3.3. Using the change of variable \({\tilde{a}}(t)=z\), we have

for any \(\delta \in (\,0, f_1({\tilde{a}}_0)-f_1(0)\,]\). Then \(\underline{u} := 1-\varGamma _1\) is a subsolution of \({\tilde{u}}\). In particular, the monotonicity of \(\varGamma _1(\cdot )\) gives us the conclusion. \(\square \)

Next we construct an analogue of Lemma 4.2 for (P1). To this aim, we note that

where

Lemma 4.6

Let \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\) satisfy (IC), (74), and the following\(:\)

Then the solution \(({\tilde{u}},{\tilde{v}},{\widetilde{R}},{\tilde{a}})\) of (P1) satisfies

Proof

In order to verify the sign of \(d{\widetilde{R}}/dt\), we argue as in the proof of Lemma 4.2, i.e., we focus on the sign of \(F({\tilde{u}},{\tilde{a}})\). First we note that (77) is equivalent to \(f_1({\tilde{a}}_0)>0\). Recalling the relation \((\,0,-f_1(0)\,) \subset (\,0,f_1({\tilde{a}}_0)-f_1(0)\,]\), we find

Since (A2) implies \(K^*(0)<1\), the monotonicity of \(K^*(\cdot )\) and (78) assert that

By virtue of (74), we can apply Lemma 4.5 to the solution \({\tilde{u}}\) and then (76) implies that

Therefore we have completed the proof. \(\square \)

Lemma 4.7

Let \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\) satisfy

and (IC). Then there exist monotone increasing functions \(L^-\) and \(L^+\) defined on the interval \((\,0,f_1(a_*)-f_1(0)\,]\), independent of \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\), such that the solution of (P1) satisfies

in particular, the first inequality in (83) is strict in \((\,0, f_1({\tilde{a}}_0)-f_1(0)\,)\). Moreover, \(L^-\) and \(L^+\) satisfy the following\(:\)

Proof

Along the same line as in the proof of Lemma 4.3, we will estimate the following:

where \({\tilde{w}}=1-{\tilde{u}}\). First, since \(I_1 \ge 0\), we set \(L_1^-(\delta ) \equiv 0\). Using the supersolution \(\overline{w}\) of \({\tilde{w}}\) constructed in the proof of Theorem 3.3 and its estimate, we observe from (81) that

Since the change of variable \({\tilde{a}}(\tau ')=s'\) yields

the inequality (86) is reduced to

Moreover, we find

where \(\tilde{z} \in (0,{\tilde{a}}_0)\). The last inequality follows from the monotonicity of \(f_1\), and it is strict for any \(\delta \in (\,0, f_1({\tilde{a}}_0)-f_1(0)\,)\). Setting \(L^-(\delta ):= \sum ^{3}_{i=1} L^-_i(\delta )\) and recalling (73), we observe that \(L^-\) is well-defined on \((\,0,f_1({\tilde{a}}_0)-f_1(0)\,]\).

Next, we derive \(L^+\). By a similar argument as in the derivation of \(L_2^-\), we obtain

Since \(I_2 \le 0\), we set \(L_2^+(\delta ) \equiv 0\). From the first equality in (87), we have

Setting \(L^+(\delta ):= \sum ^{3}_{i=1} L^+_i(\delta )\), we see that \(L^+\) is well-defined on \((\,0,f_1({\tilde{a}}_0)-f_1(0)\,]\).

From the definitions of \(L^-\) and \(L^+\), it is clear that (83), (84), and (85) hold true. We have completed the proof. \(\square \)

We are now in a position to prove Theorem 1.1.

Proof of Theorem 1.1. To begin with, we prove the existence of a switching solution of (IAS). The key to the proof is how to determine the appropriate thresholds \(r_0\) and \(r_1\). We divide the proof of the existence into 4 steps. Finally we shall prove the boundedness of the switching solution and its regularity.

Step 1: Fix \(r_{0} \in (\,0, \infty \,)\) arbitrarily. Let \(r_{1}\) satisfy

where we remark that \(L^- (-f_1(0)) < 0\). We claim the following: if \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\) satisfies

and (IC), then there exists \(\beta _1 \in (\,0,-f_{1}(0)\,)\) such that the solution of (P1) satisfies

Let \(\delta _0:=f_1({\tilde{a}}_0)-f_1(0)\), i.e., \(\tilde{a}(\tau _1(\delta _0))={\tilde{a}}_0\). Remark that the third inequality in (89) yields \(\delta _0 > -f_1(0)\). Since (89) allows us to apply Lemma 4.7, there exists \(\beta '_1 \in (0, \delta _{0} \,)\) such that

Moreover, by (89), Lemma 4.6 implies that

Therefore it is sufficient to prove that \(\beta '_1 < -f_1(0)\). Then \(\beta '_1\) is nothing but the required constant \(\beta _1\). Combining the relation (83) with (88), we have

Then the monotonicity of \(L^-\) yields \(\beta '_1 < -f_1(0)\).

Step 2: We shall show that there exists \(\varepsilon _1^* \in (\,0,f_1(a_*)-f_1(0)\,)\) such that for any \(r_0 \in (\,0,\infty \,)\) and \(r_1 \ge r_0 \exp [M^+(\varepsilon _1^*)]\), the following holds: if \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)\) satisfies

and (IC), then there exists \(\beta _2 \in (\,0,\varepsilon _1^*\,)\) such that the solution of (P0) satisfies

Let \(\varepsilon _0:=f_1(a_*)-f_1({\tilde{a}}_0)\), i.e., \(\tilde{a}(\tau _0(\varepsilon _0))={\tilde{a}}_0\). Remark that \(\varepsilon _0 > f_1(a_*)\) by the third inequality in (91). By Lemma 4.4, there exists a constant \(\beta _2' \in (\,0, \varepsilon _{0} \,)\) such that

We define \(\varepsilon _1^*\) as \(\varepsilon _1\) in Lemma 4.2 with \(A = \mathscr {U}_{0}\) and \(\kappa =f_1^{-1}(0)\), i.e.,

Then Lemma 4.2 asserts that \(\varepsilon _1^*\in (\,0,f_1(a_*)\,)\) and

Thus it is sufficient to prove that \(\beta '_2 \in (\,0,\varepsilon _1^*\,)\). Then \(\beta _2'\) is nothing but the required constant \(\beta _2\). Letting \(r_1\) satisfy

we show that \(\beta '_2 \in (\,0,\varepsilon _1^*\,)\). Indeed, since the relation (72) in Lemma 4.4 holds true, we observe from (95) that

Then the monotonicity of \(M^+\) clearly yields \(\varepsilon _1^* > \beta '_2\).

Step 3: We shall prove that there exists \(\varepsilon _0^* \in (\,0,f_1(a_*)-f_1(0)\,)\) such that for any \(r_0 \in (\,0,\infty \,)\) and \(r_1 \ge R_0 \exp [M^+(\varepsilon _0^*)]\), the following holds: if \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0)= (u_0,R_0,a_0)\), then there exists \(\beta _0 \in (\,0,\varepsilon _0^*\,)\) such that the solution of (P0) satisfies the following:

Setting \(\tilde{\varepsilon }_1\) as \(\varepsilon _1\) in Lemma 4.2 with \(A=\min _{\rho \in [\,0,1\,]}u_0(\rho )\) and \(\kappa =a_0\), we have

By way of the function \(\varGamma _0^*\) defined by

we define \(\tilde{\varepsilon }_2\) as follows:

From now on, we set \(\varepsilon _0^* := \min \{\tilde{\varepsilon }_1, \tilde{\varepsilon }_2\}\) and let \(r_1\) satisfy \(r_1 \ge R_0 \exp {M^+(\varepsilon _0^*)}\). Let \(\varepsilon '_0:=f_1(a_*)-f_1(a_0)\), i.e., \(\tilde{a}(\tau _0(\varepsilon '_0))=a_0\). With the aid of Lemma 4.3, we find a constant \(\beta _0' \in (\,0, \varepsilon '_{0} \,)\) such that (93) holds for \(\beta _2' = \beta _0'\). Noting that the latter relation in (97) is equivalent to \(\beta '_0 < f_1(a_*)\) and recalling \(\varepsilon ^*_0 \le \tilde{\varepsilon }_1 < f_1(a_*)\), we have \(\beta '_0 < \varepsilon ^*_0\). The same argument as in Step 2 implies that

where the last inequality follows from Lemma 4.3. Then the monotonicity of \(M^+\) gives us the required relation. Finally we prove the former relation in (97). Thanks to the monotonicity of \(\varGamma _0\), we observe from Lemma 4.1 that, for any \(\varepsilon \in [\,\beta '_0, \tilde{\varepsilon }_2\,]\),

Therefore \(\beta '_0\) is nothing but the required constant \(\beta _0\).

Step 4: We shall prove that, for a suitable pair of thresholds \((r_0, r_1)\), the system (P) has a unique solution with the property (i) in Theorem 1.1. Fix \(r_{0} \in (\, 0, \infty \,)\) and let \(r_1\) satisfy

We note that (99) yields \(\max \{ r_0, R_0 \} < r_1\), for \(M^+\) is positive in \((\, 0, f_1(a_*) - f_1(0)\,]\).

With the aid of Step 3, there exist \(\beta _0 \in (\, 0, \varepsilon ^{*}_0 \,)\) and a unique solution \(({\tilde{u}},{\tilde{v}},{\widetilde{R}},{\tilde{a}})\) of (P0) with \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0) = (u_0, R_0, a_0)\) such that (96) and (97) hold. Since \(\beta _0\) is uniquely determined, setting \((u,v,R,a)=({\tilde{u}},{\tilde{v}},{\widetilde{R}},{\tilde{a}})\) in \(\bar{I} \times [\,0, t_1 \,]\), we observe from (96) and the proof of Theorem 3.1 that (u, v, R, a, S) is a unique solution of (P) in \(\bar{I} \times [\,0, \tau _0(\beta _0)\,)\) such that \(S(t) = 0\) in \([\,0, t_1\,)\) and S(t) switches from 0 to 1 at \(t_1\), where \(t_1 := \tau _0(\beta _0)\).

Since (96)–(97) assert that (89) holds for \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0) = (u, R, a) |_{t=t_1}\), it follows from Step 1 that there exist \(\beta _1 \in (\,0, -f_1(0)\,)\) and a unique solution \(({\tilde{u}}_1,{\tilde{v}}_1,{\widetilde{R}}_1,{\tilde{a}}_1)\) of (P1), with \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0) = (u, R, a) |_{t=t_1}\), satisfying (90). Since \(\beta _1\) is uniquely determined, setting \((u,v,R,a)=({\tilde{u}}_1,{\tilde{v}}_1,{\widetilde{R}}_1,{\tilde{a}}_1)\) in \(\bar{I} \times [\,t_1, t_2\,]\) and \(S(t) = 1\) in \([\,t_1, t_2\,)\), we deduce from (90) and the proof of Theorem 3.1 that (u, v, R, a, S) is a unique solution of (P) in \(\bar{I} \times [\,0, t_2\,)\) satisfying the following: \(S(t)=1\) in \([\,t_1, t_2\,)\); S(t) switches from 1 to 0 at \(t_2\), where \(t_2\) is the time determined by \(\tau _1(\beta _1)\).

Here we claim that (91) holds for \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0) = (u, R, a) |_{t=t_2}\). Since (96)–(97) imply that \(\min _{\rho \in [\,0, 1\,]} u(\rho , t_1) \ge \max \{ \underline{\omega }, \mathscr {U}_0\}\), we infer from Lemma 4.5 that

Thus the claim holds true. Then it follows from Step 2 that there exist \(\beta _2 \in (\,0, \varepsilon ^*_1\,)\) and a unique solution \(({\tilde{u}}_2,{\tilde{v}}_2,{\widetilde{R}}_2,{\tilde{a}}_2)\) of (P0), with \(({\tilde{u}}_0,{\widetilde{R}}_0,{\tilde{a}}_0) = (u, R, a) |_{t=t_2}\), satisfying (92). Thanks to the uniqueness of \(\beta _2\), setting

where \(t_3\) is the time determined by \(\tau _0(\beta _2)\), we deduce from the same argument as above that (u, v, R, a, S) is a unique solution of (P) in \(\bar{I} \times [\,0, t_3\,)\) satisfying the following: \(S(t)=0\) in \([\,t_2, t_3\,)\); S(t) switches from 0 to 1 at \(t_3\).

In order to apply Step 1 again, we verify that \(u(\cdot , t_3)\) satisfies the first property in (89). Combining Lemma 4.4 with (99), we see that

and then the monotonicity of \(M^+\) yields \(\varepsilon _0^* > \beta _2\). Recalling the monotonicity of \(\varGamma _0\) and using Lemma 4.1, we have for any \(\varepsilon \in [\,\beta _2, \varepsilon _0^*\,]\)

Thus Step 1 is applicable again. Therefore we can construct inductively a solution of (P) with the property (i) in Theorem 1.1.
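The alternation between (P0) and (P1) built in Steps 1 to 4 is a hysteresis mechanism: treatment starts when R rises to \(r_1\) and stops when R falls to \(r_0\). The following is a minimal sketch of that mechanism on a toy ODE system, not a discretization of (P). The growth law \(dR/dt=(a-a_*/2)R\), the androgen law \(da/dt=-\gamma (a-a_*(1-S))\), and every default value in `simulate_ias` are assumptions chosen only so that R grows when androgen is high and shrinks when it is low.

```python
def simulate_ias(r0=1.0, r1=2.0, R0=1.5, a0=1.0, gamma=0.5, a_star=1.0,
                 dt=0.01, t_end=100.0):
    """Forward-Euler simulation of a toy hybrid system with hysteresis switching.

    Toy dynamics (assumptions, not the PDE system (P)):
        dR/dt = (a - 0.5 * a_star) * R
        da/dt = -gamma * (a - a_star * (1 - S))
    S jumps 0 -> 1 when R rises to r1 and 1 -> 0 when R falls to r0.
    Returns the list of (time, new S) switching events and the range of R.
    """
    R, a, S, t = R0, a0, 0, 0.0
    switch_times, R_min, R_max = [], R0, R0
    n_steps = int(round(t_end / dt))
    for _ in range(n_steps):
        dR = (a - 0.5 * a_star) * R
        da = -gamma * (a - a_star * (1 - S))
        R, a, t = R + dt * dR, a + dt * da, t + dt
        if S == 0 and R >= r1:      # upper threshold reached: start treatment
            S = 1
            switch_times.append((t, S))
        elif S == 1 and R <= r0:    # lower threshold reached: stop treatment
            S = 0
            switch_times.append((t, S))
        R_min, R_max = min(R_min, R), max(R_max, R)
    return switch_times, R_min, R_max
```

In this toy model R overshoots \(r_1\) after each switch because the androgen level reacts with a delay, yet it remains in a bounded band while S keeps alternating. This is the qualitative behavior that properties (i) and (ii) of Theorem 1.1 describe for the full system.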

Step 5: We prove the property (ii) in Theorem 1.1. Using the sequence \(\{ t_j \}^{\infty }_{j=0}\) obtained by Step 4, we inductively define sequences \(\{\varepsilon _0^{2j}\}_{j=0}^\infty \), \(\{\delta _0^{2j+1}\}_{j=0}^\infty \), and \(\{\beta _j\}_{j=0}^\infty \). Let \(\varepsilon _0^0 := f_1(a_*) - f_1(a_0)\), i.e., \(\tau _0(\varepsilon _0^0)=t_0=0\). Set

By the definition of \(\tau _0\), the relation (101) is equivalent to \(a(\tau _0(\beta _0)) = a(t_1)\). We set

The definitions of \(\tau _0\) and \(\tau _1\) yield \(a(\tau _1(\delta _0^1))=a(\tau _0(\beta _0))\). Since \(a(\cdot )\) is monotone in \([\,0,t_1\,]\), it holds that \(\tau _0(\beta _0)=t_1=\tau _1(\delta _0^1)\). Next we set

Then, from (102) and (103), we find \(a(\tau _1(\beta _1))=a(t_2)\) and \(a(\tau _0(\varepsilon _0^2))=a(\tau _1(\beta _1))\). The monotonicity of \(a(\cdot )\) in \([\,t_1, t_2\,]\) gives us the relation \(\tau _1(\beta _1)= \tau _0(\varepsilon _0^2)\). In the same manner as above, we define inductively \(\varepsilon _0^{2j}\), \(\delta _0^{2j+1}\), and \(\beta _j\) for each \(j \ge 2\) as follows:

We note that the monotonicity of \(a(\cdot )\) in \([\,t_j, t_{j+1}\,]\) implies \(\tau _0(\beta _{2j})=\tau _1(\delta _0^{2j+1})\) and \(\tau _1(\beta _{2j+1})=\tau _0(\varepsilon _0^{2j+2})\) for each \(j \in \mathbb {N}\cup \{0\}\). Then, it follows from the definitions of the sequences that, for any \(j \in \mathbb {N}\cup \{0\}\),

We give the lower and upper bounds of R when \(S \equiv 0\), i.e., for the case of (104). We note that, for the case of \(j=0\), it clearly follows from Lemma 4.3 that

where the first inequality was obtained by the monotonicity of \(M^{-}\). For any \(j \in \mathbb {N}\), we observe from Lemma 4.3 that

Here, by (105) and Lemma 4.7, we find \(\log {(r_0/r_1)} \le L^+(\beta _{2j-1})\). Since \(L^+(\delta )\) is monotone and diverges to \(-\infty \) as \(\delta \downarrow 0\), there exists \(\hat{\delta } \in (\,0, \beta _{2j-1}\,]\), independent of j, such that \(L^+(\hat{\delta })= \log (r_0/r_1)\). Thus, setting \(\hat{\varepsilon }:=f_1(a_*)-f_1(0)-\hat{\delta }\), we obtain

Since \(j \in \mathbb {N}\) is arbitrary, we observe from (107) and (108) that

In particular, we see that

Next, we derive the lower and upper bounds of R when \(S \equiv 1\), i.e., for the case of (105). For any \(j \in \mathbb {N}\cup \{0\}\), we observe from (105) and Lemma 4.7 that

where the last inequality follows from the monotonicity of \(L^{+}\). Here, it follows from (104) and Lemma 4.3 that \(M^-(\beta _{2j}) \le \log (r_1/ \min \{R_0, r_0\})\). Since \(M^-(\varepsilon )\) is monotone and diverges to \(\infty \) as \(\varepsilon \downarrow 0\), there exists \(\bar{\varepsilon } \in (\,0, \beta _{2j}\,]\), independent of j, such that \(M^-(\bar{\varepsilon }) = \log (r_1/ \min \{ R_0, r_0\})\). Setting \(\bar{\delta }:=f_1(a_*)-f_1(0)-\bar{\varepsilon }\), we deduce from a similar argument as in (108) that the relation \(\bar{\delta } \ge \delta _0^{2j+1}\) holds. Combining the fact with (111), we have

In particular, we see that

Consequently, by virtue of (106), (109)–(110), and (112)–(113), we conclude that the property (ii) in Theorem 1.1 holds for

Step 6: Finally we prove the regularity of the switching solution constructed by the above arguments. The equation of a implies

Fix \(j \in \mathbb {N}\) arbitrarily. Then, for any t and s with \(t_{j-1} \le t<t_j< s < t_{j+1}\), we have

where \(\tau _1 \in (\,t,t_j\,)\) and \(\tau _2 \in (\,t_j, s\,)\). Since j is arbitrary, we see that \(a \in C^{0, 1}(\mathbb {R}_+)\).
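The Lipschitz continuity of a across a switching time can be checked numerically in a simple setting. The sketch below assumes, as an illustration only, that a solves \(da/dt=-\gamma (a-a_*(1-S))\) with a single switch of S from 0 to 1 at a hypothetical time `t_switch`; the constants `GAMMA` and `A_STAR` are likewise hypothetical. For \(0 \le a \le a_*\) this law gives \(|da/dt| \le \gamma a_*\) on each side, so difference quotients straddling the switching time obey the same bound even though da/dt itself jumps there.

```python
import math

GAMMA, A_STAR = 0.5, 1.0   # hypothetical parameters

def a_piecewise(t, t_switch, a0):
    """Assumed androgen dynamics da/dt = -GAMMA * (a - A_STAR * (1 - S)),
    with S = 0 on [0, t_switch) and S = 1 afterwards (exact solution)."""
    if t <= t_switch:
        return A_STAR - (A_STAR - a0) * math.exp(-GAMMA * t)
    a_sw = A_STAR - (A_STAR - a0) * math.exp(-GAMMA * t_switch)
    return a_sw * math.exp(-GAMMA * (t - t_switch))

def max_difference_quotient(t_switch, a0, h_values):
    """Largest |a(s) - a(t)| / (s - t) over pairs straddling the switching time."""
    return max(
        abs(a_piecewise(t_switch + h, t_switch, a0)
            - a_piecewise(t_switch - h, t_switch, a0)) / (2 * h)
        for h in h_values
    )
```

The one-sided slopes at `t_switch` have opposite signs in this example, so a is Lipschitz but not differentiable there, which is why the regularity is stated in \(C^{0,1}\).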

We consider the following initial boundary problem:

Since \(a \in C^{0, 1}(\mathbb {R}_+)\), the proofs of Lemma 2.3 and Theorem 3.1 indicate that (\(\mathscr {P}\)) has a unique solution \((\tilde{u}, \tilde{v}, \tilde{R})\) in the class

Recalling that (u, v, R), which is obtained by Step 4, also satisfies (\(\mathscr {P}\)), we observe from the uniqueness that \((\tilde{u}, \tilde{v}, \tilde{R})=(u,v,R)\) in \(Q_\infty \). We obtain the conclusion. \(\square \)

References

Akakura, K., Bruchovsky, N., Goldenberg, S.L., Rennie, P.S., Buckley, A.R., Sullivan, L.D.: Effects of intermittent androgen suppression on androgen-dependent tumors: apoptosis and serum prostate specific antigen. Cancer 71, 2782–2790 (1993)

Bladow, F., Vessella, R.L., Buhler, K.R., Ellis, W.J., True, L.D., Lange, P.H.: Cell proliferation and apoptosis during prostatic tumor xenograft involution and regrowth after castration. Int. J. Cancer 67, 785–790 (1996)

Bruchovsky, N., Rennie, P.S., Coldman, A.J., Goldenberg, S.L., Lawson, D.: Effects of androgen withdrawal on the stem cell composition of the Shionogi carcinoma. Cancer Res. 50, 2275–2282 (1990)

Daners, D., Medina, P.K.: Abstract Evolution Equations, Periodic Problems and Applications, Pitman Research Notes in Mathematics Series, vol. 279. Longman Scientific & Technical (1992)

Feldman, B.J., Feldman, D.: The development of androgen-independent prostate cancer. Nat. Rev. Cancer 1, 34–45 (2001)

Friedman, A., Lolas, G.: Analysis of a mathematical model of tumor lymphangiogenesis. Math. Models Methods Appl. Sci. 1, 95–107 (2005)

Gleave, M., Goldenberg, S., Bruchovsky, N., Rennie, P.: Intermittent androgen suppression for prostate cancer: rationale and clinical experience. Prostate Cancer Prostatic Dis. 1, 289–296 (1998)

Guo, Q., Tao, Y., Aihara, K.: Mathematical modeling of prostate tumor growth under intermittent androgen suppression with partial differential equations. Int. J. Bifurc. Chaos Appl. Sci. Eng. 18, 3789–3797 (2008)

Hirata, Y., Bruchovsky, N., Aihara, K.: Development of a mathematical model that predicts the outcome of hormone therapy for prostate cancer. J. Theor. Biol. 264, 517–527 (2010)

Huggins, G., Hodges, C.: Studies of prostate cancer: I. The effects of castration, oestrogen and androgen injections on serum phosphates in metastatic carcinoma of the prostate, Cancer Res. 1, 207–293 (1941)

Hatano, T., Hirata, Y., Suzuki, H., Aihara, K.: Comparison between mathematical models of intermittent androgen suppression for prostate cancer. J. Theor. Biol. 366, 33–45 (2015)

Ideta, A., Tanaka, G., Aihara, K.: A mathematical model of intermittent androgen suppression for prostate cancer. J. Nonlinear Sci. 18, 593–614 (2008)

Jackson, T.L.: A mathematical model of prostate tumor growth and androgen-independent relapse. Discret. Contin. Dyn. Syst. B 4, 187–201 (2004)

Jackson, T.L.: A mathematical investigation of multiple pathways to recurrent prostate cancer: comparison with experimental data. Neoplasia 6, 697–704 (2004)

Jain, H.V., Friedman, A.: Modeling prostate cancer response to continuous versus intermittent androgen ablation therapy. Discret. Contin. Dyn. Syst. B 18, 945–967 (2013)

Ladyženskaja, O.A., Solonnikov, V.A., Ural’ceva, N.N.: Linear and Quasi-linear Equations of Parabolic Type, Translations of Mathematical Monographs, vol. 23. American Mathematical Society (1967)

Quittner, P., Souplet, P.: Superlinear Parabolic Problems Blow-up, Global Existence and Steady States. Birkhäuser Advanced Texts Basler LehrBücher (2007)

Rennie, P.S., Bruchovsky, N., Coldman, A.J.: Loss of androgen dependence is associated with an increase in tumorigenic stem cells and resistance to cell-death genes. J. Steroid Biochem. Mol. Biol. 37, 843–847 (1990)

Sato, N., Gleave, M.E., Bruchovsky, N., Rennie, P.S., Goldenberg, S.L., Lange, P.H., Sullivan, L.D.: Intermittent androgen suppression delays progression to androgen-independent regulation of prostate-specific antigen gene in the LNCaP prostate tumor model. Biochem. Mol. Biol. 58, 139–146 (1996)

Shimada, T., Aihara, K.: A nonlinear model with competition between prostate tumor cells and its application to intermittent androgen suppression therapy of prostate cancer. Math. Biosci. 214, 134–139 (2008)

Stamey, T.A., Yang, N., Hay, A.R., McNeal, J.E., Freiha, F.S., Redwine, E.: Prostate-specific antigen as a serum marker for adenocarcinoma of the prostate. N. Engl. J. Med. 317, 909–916 (1987)

Tolis, G., Ackman, D., Stellos, A., Metha, A., Labrie, F., Fazekas, A.T.A., Comaru-Schally, A.M., Schally, A.V.: Tumor growth inhibition in patients with prostatic carcinoma treated with luteinizing hormone-releasing hormone agonists. Proc. Natl. Acad. Sci. USA 79, 1658–1662 (1982)

Tao, Y., Guo, Q., Aihara, K.: A model at the macroscopic scale of prostate tumor growth under intermittent androgen suppression. Math. Models Methods Appl. Sci. 12, 2177–2201 (2009)

Tao, Y., Guo, Q., Aihara, K.: A mathematical model of prostate tumor growth under hormone therapy with mutation inhibitor. J. Nonlinear Sci. 20, 219–240 (2010)

Tao, Y., Guo, Q., Aihara, K.: A partial differential equation model and its reduction to an ordinary differential model for prostate tumor growth under intermittent androgen suppression therapy. J. Math. Biol. 69, 817–838 (2014)

Acknowledgments

The second author was partially supported by Grant-in-Aid for Young Scientists (B), No. 24740097, and by Strategic Young Researcher Overseas Visits Program for Accelerating Brain Circulation (JSPS). The authors would like to express their gratitude to Professor Izumi Takagi of Tohoku University who gave them many useful comments.

© 2016 Springer International Publishing Switzerland

Hiruko, K., Okabe, S. (2016). Dynamical Aspects of a Hybrid System Describing Intermittent Androgen Suppression Therapy of Prostate Cancer. In: Gazzola, F., Ishige, K., Nitsch, C., Salani, P. (eds) Geometric Properties for Parabolic and Elliptic PDE's. GPPEPDEs 2015. Springer Proceedings in Mathematics & Statistics, vol 176. Springer, Cham. https://doi.org/10.1007/978-3-319-41538-3_12

DOI: https://doi.org/10.1007/978-3-319-41538-3_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-41536-9

Online ISBN: 978-3-319-41538-3

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)