Abstract

PET/CT is a routinely performed diagnostic imaging procedure. To ensure that the system is performing properly and produces images of the best possible quality and quantitative accuracy, a comprehensive quality control (QC) program should be implemented. This should include a rigorous initial acceptance test of the system, performed at the time of installation. This will ensure the system performs to the manufacturer’s specifications and also serves as a reference point to which subsequent tests can be compared as the system ages. Once in clinical use, the system should be tested on a routine basis to ensure that it is fully operational and provides consistent image quality. To implement a successful and effective QC program, it is important to have an understanding of the basic imaging components of the system. This chapter will describe the basic system components of a PET system and the dataflow, which will aid a user in identifying potential problems. Acceptance tests and QC procedures will also be described and explained, all designed to ensure that consistent image quality and quantitative accuracy are maintained. As will be discussed, an effective QC program can be implemented with a few relatively simple, routinely performed tests.

1 Introduction

PET/CT imaging has for several years been an accepted and routine clinical imaging procedure. To ensure that the imaging system is operating properly and is providing the best possible image quality, thorough acceptance testing of the system should be performed. The purpose is to ensure that the system is performing as specified by the manufacturer and that it is fully functional before being taken into clinical use. Following the initial testing, the system should be tested on a routine basis to ensure that it is operational for patient imaging and will provide consistent image quality. These tests are not as rigorous as the initial acceptance testing but should provide the user with enough information to decide whether the system is operational or in need of service.

This chapter will describe various tests that are used in an acceptance test of a PET scanner. The various aspects of quality control (QC) procedures will be discussed as well as the different elements of a QC program.

2 PET Instrumentation and Dataflow

To develop tests for a PET system, it is important to have a basic understanding of the detector and data acquisition system used in a PET scanner. Although the specific design differs between manufacturers, the overall designs are fairly similar [1, 2]. A schematic of the dataflow in a PET system is shown in Fig. 10.1. The detector system typically consists of a large number of scintillation detector modules with associated front-end electronics, such as high voltage, pulse-shaping amplifiers, and discriminators. The outputs from the detector modules are then fed into processing units or detector controllers that determine which detector element was hit by a 511 keV photon, how much energy was deposited, and when the interaction occurred. This information is then fed into a coincidence processor, which receives information from all detectors in the system. The coincidence processor determines whether two detectors registered an event within a predetermined time period (i.e., the coincidence time window). If this is the case, a coincidence has been recorded, and the information about the two detectors and the time at which the event occurred is saved. This information is later used for image reconstruction. Since the events are saved as they occur, as a stream or long list of events, this method of data storage is sometimes referred to as list mode. Events can also be sorted into projection data or sinograms on the fly, which is a convenient method of organizing the data prior to image reconstruction. Data are subsequently stored on disk and later reconstructed.

Fig. 10.1 Schematic of the dataflow in a PET system. The detector system consists of a large number of detector modules with associated front-end electronics. The outputs from the detector modules are then fed into processing units or detector controllers that determine in which detector element a 511 keV photon interaction occurred. This information is then fed into a coincidence processor, which receives information from all detectors in the system. The coincidence processor determines whether two detectors registered an event within a predetermined time period (i.e., the coincidence time window). If this is the case, a coincidence has been recorded, and the information about the two detectors and the time at which the event occurred is saved
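The coincidence decision described above can be illustrated with a minimal sketch. It is not how any particular scanner implements its coincidence processor: the function name `find_coincidences`, the 4.5 ns default window, and the rule of simply pairing neighboring singles (ignoring multiple coincidences and delayed windows) are all assumptions made for illustration.

```python
import numpy as np

def find_coincidences(times_ns, detector_ids, window_ns=4.5):
    """Pair single events whose time stamps differ by at most window_ns.

    Simplified sketch: singles are sorted in time and each one is compared
    only with its immediate successor. window_ns is a hypothetical value.
    Returns a list of (detector_a, detector_b, time_difference_ns).
    """
    order = np.argsort(times_ns)
    t = np.asarray(times_ns, dtype=float)[order]
    d = np.asarray(detector_ids)[order]

    pairs = []
    i = 0
    while i < len(t) - 1:
        dt = t[i + 1] - t[i]
        if dt <= window_ns and d[i] != d[i + 1]:
            # Two different detectors fired within the window: a coincidence.
            pairs.append((int(d[i]), int(d[i + 1]), float(dt)))
            i += 2  # both singles are consumed by this coincidence
        else:
            i += 1  # unpaired single, move on
    return pairs

# A short list-mode-like stream of five singles (times in ns).
events = find_coincidences([0.0, 1.0, 50.0, 120.0, 121.5], [3, 40, 7, 12, 55])
```

In this example the singles at 0.0 and 1.0 ns and those at 120.0 and 121.5 ns form coincidences, while the single at 50.0 ns has no partner within the window and is discarded.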

In the most recent generation of PET systems, time-of-flight (TOF) information is also recorded. The TOF information is the difference in the time of arrival, or detection, of the pair of photons that triggered a coincidence. In the case of a system with infinitely good time resolution (i.e., there is no uncertainty between the time when the detector triggered and the actual time the photon interacted in the detector), the event could be exactly localized to a point along the line connecting the two detectors [3]. This would allow the construction of the activity distribution without the need for an image reconstruction algorithm. However, all detector systems used in modern PET systems have a finite time resolution, which translates into an uncertainty in the positioning of each event [3]. Currently the fastest detectors available have a time resolution of a few hundred picoseconds, which translates into a positional uncertainty of several centimeters. This time resolution is clearly not good enough to eliminate the need for image reconstruction. However, the TOF information can be used in the image reconstruction to reduce the noise in the reconstructed image. Since systems having TOF capability need to detect events to an accuracy of a few hundred picoseconds, compared to a few nanoseconds in conventional PET systems, these systems require a very accurate timing calibration and stable electronics [see Chap. 8 for further details on TOF technology].
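The relation between time resolution and positional uncertainty follows from the fact that a time difference Δt between the two photons corresponds to a displacement Δx = c·Δt/2 from the midpoint of the line connecting the detectors, where c is the speed of light. The short sketch below evaluates this relation; the function name and the example time resolutions are chosen only for illustration.

```python
C_MM_PER_PS = 0.299792458  # speed of light in mm per picosecond

def tof_distance_mm(time_ps):
    """Distance along the LOR corresponding to a TOF time difference.

    The factor 1/2 arises because moving the annihilation point by dx
    shortens one photon path by dx and lengthens the other by dx.
    """
    return C_MM_PER_PS * time_ps / 2.0

# Positional uncertainty for a few example time resolutions (ps).
for resolution_ps in (300.0, 500.0):
    print(resolution_ps, "ps ->", round(tof_distance_mm(resolution_ps), 1), "mm")
```

A time resolution of 500 ps thus corresponds to roughly 7.5 cm along the line of response, which is why TOF information constrains but does not replace image reconstruction.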

2.1 Detector Flood Maps

The detector system used in all modern commercial PET systems is based on scintillation detectors coupled to some sort of photodetector readout. One of the most commonly used detector designs is the block detector [4]. In this design, an array of scintillation detector elements is coupled to a smaller number of PMTs, typically four, via a light guide. An example of a block detector is illustrated in Fig. 10.2. The light generated in the scintillator following an interaction by a 511 keV photon is distributed between the PMTs in such a way that each detector element produces a unique combination of signal intensities in the four PMTs. To assign the event to a particular detector element, the signals from the PMTs are used to generate two position indices, X pos and Y pos:

where PMTA, PMTB, PMTC, and PMTD are the signals from the four PMTs.
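A commonly used form of this position logic is the Anger-type ratio, in which each index is the difference between the summed signals of two PMT pairs divided by the total signal. The sketch below assumes a 2 × 2 arrangement with PMTA and PMTB in the upper row (left and right) and PMTC and PMTD in the lower row; this layout, the sign convention, and the function name `anger_position` are assumptions, and the actual definition depends on the detector design.

```python
def anger_position(pmt_a, pmt_b, pmt_c, pmt_d):
    """Compute position indices from four PMT signals (assumed layout).

    Assumed layout (hypothetical):  A | B
                                    -----
                                    C | D
    Returns (x_pos, y_pos, total); both indices fall in [-1, 1] for
    non-negative signals, and total is proportional to deposited energy.
    """
    total = pmt_a + pmt_b + pmt_c + pmt_d
    if total <= 0:
        raise ValueError("total PMT signal must be positive")
    x_pos = ((pmt_b + pmt_d) - (pmt_a + pmt_c)) / total  # right minus left
    y_pos = ((pmt_a + pmt_b) - (pmt_c + pmt_d)) / total  # upper minus lower
    return x_pos, y_pos, total

# Event near the corner above PMTB: most light reaches PMTB.
x_pos, y_pos, total = anger_position(0.05, 0.90, 0.02, 0.03)
```

For this corner event the indices are approximately (0.86, 0.90), whereas equal signals in all four PMTs give (0, 0).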

This is similar to the positioning of events in a conventional scintillation camera; however, the X pos and Y pos values do not directly translate into a spatial position or a location of the individual detector elements in the block detector. Each module therefore has to be calibrated to allow an accurate detector element assignment following an interaction of a photon in the detector. The distribution of X pos and Y pos can be visualized if a block detector is exposed to a flood source of 511 keV photons. For each detected event, X pos and Y pos are calculated from the four PMT signals and are then histogrammed into a 2-dimensional matrix, which can be displayed as a gray-scale image. An example of this is shown in Fig. 10.3. As can be seen in this figure, the distribution of X pos and Y pos is not uniform; instead, the events are clustered around specific positions. Each cluster contains the X pos and Y pos values that correspond to a specific detector element in the array. As can be seen from the flood image, the clusters do not align on an orthogonal or linear grid. Instead, there is a significant pincushion effect due to the nonlinear relation between light collection and the position of the detector elements. To identify each element in the detector block, a look-up table is generated based on a flood map like the one shown in Fig. 10.3. First, the centroid of each peak is localized (i.e., one peak for each detector element in the array). Then a region is generated around each peak, which assigns a range of X pos and Y pos values to a particular element in the array. During a subsequent acquisition, the values of X pos and Y pos are calculated for each event; then the look-up table is used to assign the event to a specific detector element. Also associated with the positioning look-up table is an element-specific energy calibration, which is used to accept or reject the event depending on whether it falls within or outside the predefined energy range.

Fig. 10.2 Schematic of a block detector module used in modern PET systems. The array of scintillator detector elements is coupled to the four PMTs via a light guide. The light guide can be integral (i.e., made of the scintillator material) or a non-scintillating material (e.g., glass). The purpose of the light guide is to distribute the light in the detector elements to the four PMTs in such a way that a signal amplitude pattern unique to each detector element is produced. Three examples are illustrated. Top: Almost all of the light is channeled to PMTB and almost no light is channeled to the three other PMTs. This uniquely identifies the corner detector. Middle: In the next element over, most of the light is channeled to PMTB and a small amount is channeled to PMTA. The increased light detected by PMTA allows this scintillator element to be distinguished from the corner element. Bottom: In the central detector element, light is almost equally shared between PMTB and PMTA, but since the element is closer to PMTB, this signal amplitude will be slightly larger than the signal in PMTA. How well the elements can be separated depends on how much light is produced in the scintillator per photon absorption (more light allows better separation). Increasing the number of detector elements in the array makes it more challenging to accurately identify each detector element

Fig. 10.3 The detector elements in a block detector are identified using a flood map. The flood map is generated by exposing the block detector to a relatively uniform flood of 511 keV photons. For each energy-validated detected event, the position indices X pos and Y pos are calculated from the PMT signals (PMTA, PMTB, PMTC, and PMTD). The X pos and Y pos values are histogrammed into a 2-D matrix, which can be displayed as a gray-scale image. The distribution of X pos and Y pos values is not uniform but is instead clustered around specific values, which correspond to specific detector elements. Each peak and the area of X pos and Y pos values around it are then assumed to originate from a specific detector element in the block detector, as illustrated in the figure. From the flood map and the peak identification, a look-up table is generated which rapidly identifies each detector element during an acquisition
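The flood map and look-up table procedure can be sketched as follows. This is a simplified illustration, not a vendor algorithm: peak finding is assumed to have been done already (the centroids are passed in as `peak_xy`), the region around each peak is defined by a nearest-centroid rule (one possible choice among several), and the function names and the 64 × 64 binning are hypothetical.

```python
import numpy as np

def flood_histogram(x_pos, y_pos, bins=64):
    """Histogram position indices in [-1, 1] into a 2-D flood map."""
    hist, _, _ = np.histogram2d(x_pos, y_pos, bins=bins,
                                range=[[-1.0, 1.0], [-1.0, 1.0]])
    return hist

def build_lookup_table(peak_xy, bins=64):
    """Assign every flood-map bin to the nearest peak centroid.

    peak_xy: array of shape (n_elements, 2) with the (x, y) centroid of
    each detector element's cluster. Returns a (bins, bins) index array.
    """
    centers = -1.0 + (np.arange(bins) + 0.5) * (2.0 / bins)
    gx, gy = np.meshgrid(centers, centers, indexing="ij")
    grid = np.stack([gx.ravel(), gy.ravel()], axis=1)
    dist2 = ((grid[:, None, :] - peak_xy[None, :, :]) ** 2).sum(axis=2)
    return dist2.argmin(axis=1).reshape(bins, bins)

def assign_element(x_pos, y_pos, lut):
    """Look up the detector element for each event during acquisition."""
    bins = lut.shape[0]
    ix = np.clip(((np.asarray(x_pos) + 1.0) / 2.0 * bins).astype(int), 0, bins - 1)
    iy = np.clip(((np.asarray(y_pos) + 1.0) / 2.0 * bins).astype(int), 0, bins - 1)
    return lut[ix, iy]

# Toy 2 x 2 block with four cluster centroids.
peaks = np.array([[-0.5, -0.5], [0.5, -0.5], [-0.5, 0.5], [0.5, 0.5]])
lut = build_lookup_table(peaks, bins=32)
elements = assign_element([0.45, -0.6], [0.55, -0.4], lut)
```

Because the table is computed once and indexed directly, the element assignment during acquisition reduces to two integer conversions and one array look-up per event.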

To ensure that the incoming events are assigned to the correct detector element, the system has to remain very stable. A small drift in the gain of one or more of the PMTs would cause an imbalance and would shift the locations of the clusters in the flood map. This would result in a misalignment between the calculated X pos and Y pos values and the predetermined look-up table [5], and hence in a mispositioning of the events. As will be discussed below, checking the gain balance between the tubes is one of the calibration procedures that needs to be performed regularly.

2.2 Sinogram

The line connecting a pair of detectors is referred to as a coincidence line or line of response (LOR). If the pair of detectors is in the same detector ring, this line can be described by a radial offset r from the origin and an angle θ. A set of LORs that all have the same angle (i.e., they are all parallel to each other) but different radial offsets forms a projection of the object to be imaged at that angle. For instance, all lines with θ = 0° would produce a lateral projection, whereas all lines with θ = 90° would produce an anterior-posterior view.

A complete set of projections necessary for image reconstruction would consist of a large number of projections collected between 0° and 180°. If all the projections between these angles are organized in a 2-dimensional array, where the 1st dimension is the radial offset r and the 2nd dimension is the projection angle θ, then this arrangement or matrix forms a sinogram, as illustrated in Fig. 10.4. A sinogram is a common method of organizing the projection data prior to image reconstruction. The name sinogram comes from the fact that a single point source located at an off-center position in the field of view (FOV) would trace a sine wave in the sinogram. The visual inspection of a sinogram also turns out to be a very useful and efficient way to identify detector or electronic problems in a system.
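The mapping from a detector pair to sinogram coordinates, and the sine trace of a point source, can be sketched with simple ring geometry. The sketch assumes an idealized single ring of equally spaced point-like detectors and defines θ as the angle of the normal from the origin to the LOR, folded into the range 0° to 180°; this convention may differ by a fixed rotation from the one used by a given scanner, and the function names are hypothetical.

```python
import math

def lor_to_sinogram(det_i, det_j, n_detectors, ring_radius_mm):
    """Return (r, theta) for the LOR joining detectors det_i and det_j.

    theta is the angle (radians, in [0, pi)) of the normal from the origin
    to the LOR; r is the signed radial offset in mm.
    """
    phi_i = 2.0 * math.pi * det_i / n_detectors
    phi_j = 2.0 * math.pi * det_j / n_detectors
    theta = 0.5 * (phi_i + phi_j)
    r = ring_radius_mm * math.cos(0.5 * (phi_i - phi_j))
    if theta >= math.pi:
        # Fold into [0, pi): the same line is described by (-r, theta - pi).
        theta -= math.pi
        r = -r
    return r, theta

def point_source_offset(x_mm, y_mm, theta):
    """Radial offset at angle theta of the LOR through the point (x, y)."""
    return x_mm * math.cos(theta) + y_mm * math.sin(theta)

# Eight-detector toy ring with a radius of 400 mm.
r_13, theta_13 = lor_to_sinogram(1, 3, 8, 400.0)
r_57, theta_57 = lor_to_sinogram(5, 7, 8, 400.0)
```

The function `point_source_offset` is a sinusoid in θ with an amplitude equal to the distance of the source from the center, which is the sine wave referred to above; a source at the center gives r = 0 at every angle.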

Fig. 10.4 A sinogram is a convenient way of histogramming and storing the events along the coincidence lines or LORs in a tomographic study. In its simplest form, a sinogram is a 2-D matrix where the horizontal axis is the radial offset r of an LOR and the vertical axis is the angle θ of the LOR. If one considers all LORs that are parallel to each other, these would fall along a horizontal line in the sinogram. In the left figure, this is illustrated for two different LOR angles. If one considers all the coincidences or LORs between one specific detector and the detectors on the opposite side in the detector ring, these will follow a diagonal line in the sinogram, as illustrated in the right figure. The sum of all the counts from the fan of coincidence lines or LORs is sometimes referred to as a fan sum. These fan sums are used in some systems to generate the normalization that corrects for efficiency variations and can also be used to detect drifts and other problems in the detector system. In multi-ring systems, there will be a sinogram for each detector ring combination. In a TOF system each time bin will also have its own sinogram. A sinogram can therefore have up to five dimensions (r, θ, z, ϕ, t) depending on the design of the system

Consider one particular detector in the system and the coincidence lines it forms with the detectors on the opposite side in the detector ring (Fig. 10.4, right). In a sinogram, this fan of LORs would follow a diagonal line across the width of the FOV. By placing a symmetrical positron emitting source, such as a cylinder uniformly filled with 68Ge or 18F, at the center of the FOV of the scanner, all detectors in the system would be exposed to approximately the same photon flux. If all detectors in the system had the same detection efficiency, then each detector would record the same number of counts per second in this source geometry. The summation of all the coincidences between a particular detector element and its opposing detector elements is sometimes referred to as a fan sum. The fan sums are used in some systems to derive the normalization correction that is used to correct for efficiency variations between the detector elements in the system. The fan sums can also be used to detect problems in the detector system.
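A fan sum calculation can be sketched for a single detector ring as follows. The sketch assumes the coincidences are available as a square matrix of counts indexed by detector pair; the function names, the definition of the fan by a half-width in detector units, and the use of the mean fan sum as the reference are assumptions for illustration.

```python
import numpy as np

def fan_sums(coincidences, fan_half_width):
    """Sum the counts between each detector and its opposing fan.

    coincidences: (n, n) array where element [i, j] holds the counts
    recorded between detectors i and j of one ring.
    fan_half_width: number of detectors on each side of the directly
    opposite detector that are included in the fan.
    """
    n = coincidences.shape[0]
    sums = np.zeros(n)
    for i in range(n):
        opposite = (i + n // 2) % n
        fan = [(opposite + k) % n
               for k in range(-fan_half_width, fan_half_width + 1)]
        sums[i] = coincidences[i, fan].sum()
    return sums

def relative_efficiency(sums):
    """Fan sums scaled to their mean; values near 1 indicate uniformity."""
    return sums / sums.mean()

# Toy ring of 16 detectors with one dead detector (index 5).
counts = np.ones((16, 16))
counts[5, :] = 0.0
counts[:, 5] = 0.0
sums = fan_sums(counts, fan_half_width=3)
```

In this toy example the dead detector has a fan sum of zero, and every detector whose fan includes it shows a slightly reduced fan sum, which is the same information that appears as a dark diagonal line in the sinogram.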

Figure 10.5 shows a normal sinogram of a uniform cylinder phantom in a PET system. In addition to the noise originating from the counting statistics, it contains a certain amount of more structured noise or texture. The observed variation seen in the sinogram is normal and originates primarily from the fact that the detectors in the system do not all have the same detection efficiency. There are several additional factors that contribute to this, such as variation in how energy thresholds are set, geometrical differences, differences in the physical size of each detector element, etc. These variations are removed using a procedure that is usually referred to as normalization, which is discussed later in this chapter.

Fig. 10.5 Illustration of how the sinogram can be used to identify detector problems in a PET system. (a) A normal sinogram of a uniform cylinder phantom placed near the center of the FOV of the system. The crosshatch pattern reflects the normal variation in efficiency between the detector elements in the system. (b) The dark diagonal line indicates a failing detector element in the system (no counts generated). (c) The broad dark diagonal line indicates that an entire detector module is failing. (d) Example of when a detector module is generating random noise. (e) Example of when the receiver or multiplexing board for the signals from a group of detector modules is failing. (f) Example of problems in the coincidence processing board. In this case, the signals from two groups of opposite detector modules are lost. (g) Example of problems in the histogramming memory, where random numbers are added to the valid coincidences in the sinogram

One thing that is clearly noticeable in the sinogram is the “crosshatch” pattern, which reflects the variation in detection efficiency discussed above. As mentioned, a certain amount of normal variation in detection efficiency is expected. This can under most circumstances be calibrated or normalized out as long as the system is stable, i.e., there is no drift in the gain of the detector signals. Since a certain amount of electronic drift is inevitable, a normalization calibration needs to be performed at regular intervals. The frequency depends on the manufacturer’s specifications; it can be as frequent as every day, as part of the daily QC, or it can be done monthly or quarterly.

If a detector drifts enough or fails, this is typically very apparent on visual inspection of the sinogram. A failing PET detector block either does not respond at all when exposed to a source or constantly produces events even when no source is present (i.e., noise). A detector failure can have many causes, ranging from simple problems such as a loose cable, which usually results in a nonresponding detector, to more complex problems such as a faulty PMT, drift in PMT gains, or drift in the set energy threshold. The latter issues may result in either a nonresponding detector or a detector that produces an excessive count rate.

Examples of failing detectors or associated electronics are also illustrated in Fig. 10.5. A failing single detector element in the detector module would appear as a single dark diagonal line in the sinogram (Fig. 10.5b). This problem was fairly common in older PET systems where each detector element was coupled to its own PMT. A common cause of this type of failure was either a failing PMT or the associated electronics (e.g., amplifiers). In the case of a system that uses block detectors, a single dark line may also occur if the detector tuning software had difficulties identifying all detector elements in the detector array. This could be caused by poor coupling of the detector element to the light guide and/or the PMTs or poorly balanced PMTs.

Since the PMTs and associated electronics are shared by many detector elements in a block detector, a failure in these components will affect a larger number of detector channels. This is then seen as a wider diagonal band across the sinogram, as illustrated in Fig. 10.5c. Another common detector problem is illustrated in Fig. 10.5d. In this case one of the detectors is generating a large number of random or noise pulses. This could be caused by an energy threshold that is set too low, which, for instance, could be caused by a failing or weak PMT.

As described above, the signals from a larger group of detector blocks are typically multiplexed into what is sometimes referred to as a detector controller. In the case of a failure of the detector controller, the signals from the entire detector group might be lost, which in the sinogram is visualized as an even broader band of missing data (Fig. 10.5e). A problem further downstream in the signal processing chain such as in the electronics determining coincidences may result in a problem illustrated in Fig. 10.5f. Here the signals from two entire detector groups are lost in the coincidence processor, which in this case is seen as a diamond-shaped area in the sinogram. A final example of a hardware failure is shown in Fig. 10.5g. In this case there is a problem with the histogramming system, where random counts are added to the sinogram in addition to the normal data.

There are naturally a large number of other artifacts or patterns in the sinograms that are specific to the design of a particular system, but the sinogram patterns related to the front-end electronics described in this section are common to most modern PET systems.

Thus, by a visual inspection of the sinograms from a phantom scan, it is possible to relatively quickly identify whether the system is operational or not. By looking at the patterns in the sinogram, it is also possible to figure out where in the signal chain a problem is occurring. A complete failure is very apparent in the sinogram and makes it easy to determine that the system is in need of repair. However, there are often more subtle problems, such as a slow drift in the system, that are not easily detected by visual inspection. Some systems therefore provide a numerical assessment that gives the user some guidance as to whether the system is operational or not. These tests usually require that the user acquires data of a phantom, such as a 68Ge-filled cylinder, every day at the same position in the FOV for a fixed number of counts. The daily QC scan is then compared to a reference scan (e.g., a scan acquired at the time of the most recent calibration or tuning). By comparing the number of counts acquired by each detector module in the QC scan to that in the reference scan, it is possible to determine whether a drift has occurred in the system and whether the system needs to be retuned and recalibrated.
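A numerical comparison of this kind can be sketched as follows. The sketch assumes that the daily QC scan and the reference scan were acquired under identical conditions (same phantom position and the same total number of counts), so that the per-module counts can be compared directly. The function names and the 5% tolerance are hypothetical; actual acceptance limits are set by the manufacturer.

```python
import numpy as np

def module_deviation_percent(qc_counts, reference_counts):
    """Percent change in counts per detector module relative to reference."""
    qc = np.asarray(qc_counts, dtype=float)
    ref = np.asarray(reference_counts, dtype=float)
    return 100.0 * (qc - ref) / ref

def flag_modules(deviation_percent, tolerance_percent=5.0):
    """Indices of modules whose deviation exceeds the tolerance.

    tolerance_percent is a hypothetical value chosen for illustration.
    """
    return np.flatnonzero(np.abs(deviation_percent) > tolerance_percent)

# Four detector modules; module 2 has lost 20% of its counts.
reference = [1000, 1000, 1000, 1000]
daily_qc = [1010, 990, 800, 1005]
deviation = module_deviation_percent(daily_qc, reference)
flagged = flag_modules(deviation)
```

Tracking the deviations over successive days, rather than only checking them against a limit, makes a slow drift visible before a module fails the test outright.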

3 Detector and System Calibration

The purpose of the detector and system calibration is to make sure that the recorded events are assigned to the correct detector element in the detector module. An energy validation is performed such that only events within a certain energy range are passed on to the subsequent event processing. In addition to positioning and energy calibration of each detector module, each module has to be time calibrated to make sure that coincidence time windows between all detector modules are aligned.

3.1 Tube Balancing and Gain Adjustments

As described earlier, the assignment of the events to individual detector elements in the block is based on the comparison of the signal output from the four PMTs. The first step in the calibration of the front-end detector electronics is to adjust the gain of the amplifiers such that the signal amplitude from the four PMTs is, on average, about the same. This usually entails exposing the detectors to a flood source of 511 keV photons and adjusting the gains until an acceptable signal balance is achieved.

Following the tube balancing, detector flood histograms are acquired and generated (as described earlier) to make sure that each detector element in each detector block can be identified. A look-up table is then generated from the flood histogram that is used to assign a particular combination of X pos and Y pos values to a particular detector element.

3.2 Energy Calibration

Once all detector elements in the block have been identified, the signal originating from each detector element has to be energy calibrated. Independent of the design of the detector block, it is very likely that the signal from each detector element will vary in amplitude, primarily due to differences in light collection by the photodetectors. A detector positioned right above a PMT is very likely to produce a stronger signal than a detector located at the edge of a PMT. This is similar to what is observed in conventional scintillation cameras. When comparing energy spectra from the individual elements in a block detector, the location of the photopeak will vary depending on the light collection efficiency.

For the energy calibration, energy spectra are acquired for each detector element, and the calibration software will search for the photopeak in each spectrum. A simple energy calibration is then typically performed where it is assumed that the photopeak corresponds to 511 keV (provided that the source is emitting 511 keV photons and zero amplitude corresponds to zero energy).
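The energy calibration of a single detector element can be sketched as below. The photopeak search is reduced to finding the maximum of the spectrum above a lower channel limit, which is a simplification of what calibration software would do; the function names, the lower channel limit, and the 425 to 650 keV acceptance window are assumptions for illustration.

```python
import numpy as np

def photopeak_channel(spectrum, min_channel=0):
    """Channel of the spectrum maximum at or above min_channel.

    min_channel is used to skip low-amplitude noise and scatter
    (a simplification; real software typically fits the peak).
    """
    spectrum = np.asarray(spectrum)
    return min_channel + int(np.argmax(spectrum[min_channel:]))

def kev_per_channel(peak_channel):
    """Linear scale factor, assuming the peak is 511 keV and zero
    amplitude corresponds to zero energy."""
    return 511.0 / peak_channel

def in_energy_window(amplitude, scale, lower_kev=425.0, upper_kev=650.0):
    """Accept or reject an event; the window limits are hypothetical."""
    energy = amplitude * scale
    return lower_kev <= energy <= upper_kev

# Synthetic spectrum: photopeak at channel 200 on a low-energy plateau.
channels = np.arange(512)
spectrum = 1000.0 * np.exp(-0.5 * ((channels - 200) / 15.0) ** 2)
spectrum += 200.0 * (channels < 150)
peak = photopeak_channel(spectrum, min_channel=50)
scale = kev_per_channel(peak)
```

With this scale factor, an event with an amplitude of 200 channels is accepted as a 511 keV photon, while an event at 100 channels (about 256 keV) is rejected.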

3.3 Timing Calibration

Coincidence measurements only accept pairs of events that occur within a narrow time window of each other. In order to ensure that most true coincidences are recorded, it is imperative that all detector signals in the system are adjusted to a common reference time. How this is done in practice depends on the manufacturer and the system design. The general principle is to place a positron emitting source at the center of the system and acquire timing spectra between all detector modules by recording differences in the time of detection of annihilation photon pairs. For a non-calibrated system, the timing spectrum has approximately a Gaussian distribution, centered around an arbitrary time. The distribution around the mean is caused by the timing characteristics of the scintillation detectors and the associated electronics, and the location of the centroid is caused by variations in time delays in PMTs, cables, etc. In the timing calibration, this time delay is measured for each detector, and time adjustments are introduced such that the centroids of all timing spectra are aligned. It is around this centroid that the coincidence time window is placed. For non-TOF PET systems, the timing calibration has to be accurate to within a few nanoseconds. For TOF systems, the calibration has to be accurate to below 100 picoseconds.
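One possible way of turning the measured timing spectra into per-detector time adjustments is a least-squares fit, sketched below. It assumes that the centroid of the timing spectrum between detectors i and j equals the difference of their individual delays, and it fixes the otherwise arbitrary common reference by requiring the delays to sum to zero. This is an illustration of the principle and not the procedure of any specific manufacturer; the function name is hypothetical.

```python
import numpy as np

def estimate_time_offsets(pairs, centroid_ps, n_detectors):
    """Least-squares estimate of per-detector delays (ps).

    pairs: sequence of (i, j) detector index pairs.
    centroid_ps: measured centroid of the timing spectrum (t_i - t_j)
    for each pair, in picoseconds.
    The last row of the system constrains the delays to sum to zero.
    """
    pairs = np.asarray(pairs)
    n_pairs = len(pairs)
    a = np.zeros((n_pairs + 1, n_detectors))
    b = np.zeros(n_pairs + 1)
    rows = np.arange(n_pairs)
    a[rows, pairs[:, 0]] = 1.0
    a[rows, pairs[:, 1]] = -1.0
    b[:n_pairs] = centroid_ps
    a[-1, :] = 1.0  # common reference: sum of delays equals zero
    offsets, *_ = np.linalg.lstsq(a, b, rcond=None)
    return offsets

# Toy system with four detectors and known delays that sum to zero.
true_delays = np.array([100.0, -50.0, 0.0, -50.0])
pairs = [(i, j) for i in range(4) for j in range(i + 1, 4)]
centroids = [true_delays[i] - true_delays[j] for i, j in pairs]
offsets = estimate_time_offsets(pairs, centroids, n_detectors=4)
```

Subtracting the estimated delay from each detector's time stamps aligns the centroids of all timing spectra at zero, which is where the coincidence time window is then placed.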

A modern PET system consists of several tens of thousands of detector elements and requires very accurate and precise calibration. The outcomes of the calibration steps often depend on one another, and some of the processes are iterative. Due to the complexity of the system, manufacturers have developed highly automated procedures for these calibration steps. It used to be that a trained on-site physicist or engineer had access to the manufacturer’s calibration utilities, which allowed recalibration or retuning of parts of the system or the entire system. However, in recent years the general trend among manufacturers is that the user does not have access to the calibration utilities, and these tasks are only performed by the manufacturer’s service engineers. Fortunately, PET scanners today are very stable, and the need for recalibration and retuning is far less than for systems that were manufactured 10–20 years ago.

3.4 System Normalization

After a full calibration of the detectors in a PET system, there will be a significant residual variation in both intrinsic and coincidence detection efficiency of the detector elements. There are several reasons for this, such as imperfections in the calibration procedures, geometrical efficiency variations, imperfections in manufacturing, etc. These residual efficiency variations need to be calibrated out to avoid the introduction of image artifacts. This process is analogous to the high-count flood calibration used in SPECT imaging to remove small residual variations in flood-field uniformity. In PET this process is usually referred to as normalization, and the end result is usually a multiplicative correction matrix that is applied to the acquired sinograms. The effects of the normalization on the sinogram and on the reconstruction of a uniform cylinder phantom are shown in Fig. 10.6. If the normalization is not applied, there are subtle but noticeable artifacts in the image, such as ring artifacts and a cold spot in the middle of the phantom. The ring artifacts originate from a repetitive pattern in the detection efficiency variation across the face of the block detector modules, where the detectors in the center have a higher detection efficiency than the edge detectors. Once the normalization is applied, these artifacts are greatly reduced. It should also be noted that the normalization is a volumetric correction, as can be seen from the removal of the “zebra” pattern in the axial direction when the normalization is applied (Fig. 10.6).

Fig. 10.6 1st row: The sinogram to the left is a normal uncorrected sinogram of a uniform cylinder phantom. The crosshatch pattern reflects the normal variation in efficiency between the detector elements in the system. The normalization matrix (middle), which is multiplicative, corrects for this and produces a corrected sinogram (right), where the efficiency variations have been greatly reduced. 2nd row: Illustration of the effect of the normalization in the axial direction of the system. The efficiency variation is typically greater in the z-direction than in-plane. 3rd and 4th rows: Illustration of the effect of the normalization on a reconstructed image. The transaxial image to the left in the 3rd row shows ring artifacts due to the lack of normalization. These are eliminated when the normalization is applied (right image). The axial cross section of the reconstructed cylinder in the 4th row reflects the axial efficiency variation as a “zebra pattern” when the normalization is not applied (left image). These artifacts are greatly reduced when the normalization is applied (right)

Normalization is usually performed after a detector calibration. There are several approaches to acquiring the normalization. The most straightforward method is to place a plane source filled with a long-lived positron emitting isotope, such as 68Ge, in the center of the FOV. This allows a direct measurement of the detection efficiencies of the LORs that are approximately perpendicular to the source [6]. The source typically has to be rotated to several angular positions in the FOV to measure the efficiency factors for all detector pairs in the system. However, this method has drawbacks. First of all, it is very time consuming to acquire enough counts at each angular position to ensure that the emission data are not contaminated with statistical noise from the normalization. This problem can to a certain degree be alleviated by the use of variance-reducing data processing methods [7].

The most common method for determining the normalization is the component-based method [8, 9]. This method combines efficiency factors that are unlikely to change over time, such as geometrical factors, with factors that are expected to change over time due to drifts in detector efficiency or changes in the energy threshold settings. It typically requires only a single measurement that estimates the individual detector efficiencies. This is usually done with a uniform cylinder phantom placed at the center of the FOV. The measured detector efficiencies are then combined with the factory-determined factors to generate the final normalization.

It is usually not necessary to generate a new normalization unless there is noticeable detector drift, which most of the time would require detector service. Some manufacturers have found that acquiring the normalization more frequently, or even including it in the daily QC, yields better stability in image quality and quantification.

3.5 Activity Calibration

The final step in the calibration of a PET system is to perform an activity calibration. When the acquired emission data are reconstructed with all corrections applied, including normalization, scatter, and attenuation correction, the values in the resulting images are proportional to the activity concentration but are typically not in absolute units (i.e., Bq/ml). It is therefore necessary to acquire a calibration scan in order to produce images that allow quantitative measurements. This is usually done by acquiring a scan of a uniform cylinder phantom filled with a known amount of activity. 18F and 68Ge are the most commonly used isotopes for this purpose. Images of the phantom are then reconstructed with all corrections applied (normalization, scatter, attenuation, isotope decay). A volume of interest is then placed well within the borders of the reconstructed phantom and is used to determine the average image value in arbitrary units (counts per second). The activity concentration is known either from the manufacture of the 68Ge cylinder or from a careful assay of the 18F dose in a dose calibrator together with the volume of the phantom. The calibration factor can then be calculated as the ratio of the known activity concentration to the measured average image value.

It is important that the branching fraction of the isotope used in the calibration is included in the calculation of the calibration factor as well as the branching fraction of the isotope used in subsequent measurements.
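The role of the branching fraction can be illustrated with a minimal sketch. The function names, the example numbers, and the convention used here (the calibration factor converts image values to positron-emission concentration, and the branching fraction of the imaged isotope is applied afterwards) are assumptions made for illustration; a given vendor's software may organize the same bookkeeping differently. The branching fractions are approximate values.

```python
# Approximate positron branching fractions (assumed illustrative values).
BRANCHING = {"F18": 0.967, "Ge68": 0.891}


def calibration_factor(activity_bq, volume_ml, mean_image_value, branching_fraction):
    """Factor converting image values to positron emissions per second per ml."""
    known_concentration = activity_bq / volume_ml  # Bq/ml in the phantom
    # Only the decays that emit a positron contribute to the image.
    return known_concentration * branching_fraction / mean_image_value


def activity_concentration(image_value, cal_factor, branching_fraction):
    """Activity concentration (Bq/ml) of the imaged isotope."""
    return image_value * cal_factor / branching_fraction


# Hypothetical calibration scan: 60 MBq of 18F in a 6000 ml cylinder,
# average VOI value of 2.0 (arbitrary units).
cf = calibration_factor(60e6, 6000.0, 2.0, BRANCHING["F18"])
print(cf)
print(activity_concentration(2.0, cf, BRANCHING["F18"]))
```

With this convention, a phantom calibrated with 68Ge and a patient imaged with 18F each use their own branching fraction, which is the point made in the paragraph above.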

It should be mentioned that a common source of error in the determination of the calibration factor comes from the activity in the phantom. If 18F is used, the activity is usually determined using a dose calibrator. Studies have shown that there can be a large variation in the determination of the calibration factors, which can be traced back to how well the dose calibrator itself is calibrated and how the dose is assayed [10, 11]. In the past, the activity concentration in 68Ge cylinders had a relatively large uncertainty due to the lack of available NIST traceable standards of 68Ge for dose calibrators. In recent years, NIST traceable standards have become available, which allow a more accurate calibration of the PET system [12]. The accuracy of the activity calibration is of great importance in multicenter trials, where data of subjects imaged on several different PET systems at different sites are used in quantitative studies for the evaluation of, for instance, response to new therapies. A large variation in the calibration between the different systems could potentially mask an actual physiological response when the data from the subjects are pooled together for analysis. As will be discussed later in this chapter, there are additional QC steps needed when using PET in multicenter trials.

4 Acceptance Testing

Acceptance testing is the rigorous testing a user performs on a system once it has been installed and handed over for clinical use. The purpose of the acceptance testing is to make sure that the system is fully operational and is performing to the manufacturer’s specifications. The results from the acceptance test should also be used as a reference for future testing (e.g., annual performance testing) to make sure the system performance has not degraded since the time of installation.

NEMA has, in collaboration with scientists and manufacturers of PET scanners, developed guidelines and a set of tests that are used to specify the performance of a PET system. These standardized tests were initially developed to allow a relatively unbiased comparison of the performance of PET systems of different designs. They have also become the core of the tests that are performed in the acceptance test of a newly installed system. As PET systems have evolved over the years, so have the NEMA specifications, and the latest document is the NEMA NU 2–2012 [13]. The individual tests are described in the sections below. For a more detailed description of each test, the reader is referred to the NEMA NU-2 document.

Although most PET systems manufactured today are hybrid systems such as PET/CT and PET/MRI systems, NEMA has chosen to only specify tests for the PET component and not for the system as a whole. Some suggested additional measurements that test the system as a whole are included below. Chapter 2 covers some details about the CT component.

4.1 Spatial Resolution

Unlike in a scintillation camera, the spatial resolution is not expected to degrade due to the aging of the scintillation crystal itself, since the crystal materials used in PET systems are not hygroscopic. However, other components of the block detectors do age over time, such as the PMTs, which may result in a loss in spatial resolution. It is therefore important to have a reference of the spatial resolution, which can be used as an indicator of detector degradation as the system ages.

The spatial resolution measurements as specified by NEMA represent the lower limit of the resolution that can be achieved on the system and do not represent the resolution of clinical images. Since the spatial resolution of a PET system varies across the FOV, measurements of the resolution are performed at different positions in the FOV (see Fig. 10.7). The measurements are performed with a set of point sources of 18F with a physical extent of less than 1 mm in diameter (radially and axially). The sources used for these measurements are made by filling the tip of a capillary tube (1 mm inner diameter and 2 mm outer diameter) such that the axial extent of the source is less than 1 mm. Measurements of the source should be performed at 1, 10, and 20 cm horizontal or vertical offsets, where the 1 cm offset represents the center of the FOV. It is worth mentioning that the locations of the point source measurements have changed since the previous NEMA NU 2–2007 document [14], where the resolution measurements were limited to within 10 cm of the central FOV. Extending the measurements out to a radial offset of 20 cm adds a measure of the spatial resolution toward the edge of the FOV, where there is a significant degradation in resolution, which is relevant for whole body imaging.

NEMA spatial resolution measurements. The NEMA protocol specifies that the spatial resolution of a PET system should be measured at six different points in the system. The measurements should be taken at three radial offsets: 1 cm, 10 cm, and 20 cm at the axial center of the FOV (left figure). These measurements are then repeated at a 3/8 offset of the axial FOV

Two sets of measurements should be acquired, with the source positioned at the center of the axial FOV and at a 3/8 offset from the center of the axial FOV (i.e., 1/8 of the axial FOV from the edge of the FOV). In order to minimize secondary effects on the spatial resolution (e.g., pulse pile-up), the activity in the source should induce less than 5 % dead-time, and the randoms rate should be less than 5 % of the total event rate. Enough counts should be acquired at each position to ensure that a smooth and well-defined point-spread function can be generated from the acquired data. Typically at least 100,000 counts should be acquired.

The data acquired from the point sources are then reconstructed using filtered back projection (FBP) without any smoothing filter, to ensure that the measurements reflect the intrinsic resolution properties of the scanner as closely as possible. Resolution measurements in all three spatial directions (radial, tangential, and axial) are derived from orthogonal profiles through the point source in the reconstructed images and are reported as the full width at half maximum (FWHM) and full width at tenth maximum (FWTM). The NEMA specifications state very specifically how these measurements should be performed. The axial, radial, and tangential spatial resolutions for the three radial positions, averaged over the two axial positions, are reported as the system spatial resolution.
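As a rough illustration of how FWHM and FWTM can be derived from a one-dimensional profile through a point source, the sketch below estimates the peak height from a parabola through the maximum and its two neighbors and then locates the half and tenth maximum crossings by linear interpolation. This follows the general approach used in NEMA-style analyses, but the function name and the synthetic Gaussian profile are assumptions for illustration, and the NEMA document remains the reference for the exact procedure.

```python
import numpy as np


def width_at_fraction(profile, pixel_mm, fraction):
    """Width of a 1-D profile at `fraction` of its peak (0.5: FWHM, 0.1: FWTM)."""
    p = np.asarray(profile, dtype=float)
    i = int(np.argmax(p))
    if i == 0 or i == len(p) - 1:
        raise ValueError("peak must not lie on the edge of the profile")
    # Peak height from a parabola through the maximum and its two neighbors.
    y0, y1, y2 = p[i - 1], p[i], p[i + 1]
    curvature = y0 - 2.0 * y1 + y2
    peak = y1 - (y2 - y0) ** 2 / (8.0 * curvature) if curvature < 0 else y1
    level = fraction * peak
    # Walk outwards from the peak to the first sample at or below the level.
    lo = i
    while lo > 0 and p[lo] > level:
        lo -= 1
    hi = i
    while hi < len(p) - 1 and p[hi] > level:
        hi += 1
    if p[lo] > level or p[hi] > level:
        raise ValueError("profile does not fall below the requested level")
    # Linear interpolation between the two samples that bracket the level.
    left = lo + (level - p[lo]) / (p[lo + 1] - p[lo])
    right = hi - (level - p[hi]) / (p[hi - 1] - p[hi])
    return (right - left) * pixel_mm


# Synthetic profile: Gaussian with sigma = 2 mm sampled every 0.5 mm.
x = np.arange(-20.0, 20.25, 0.5)
profile = np.exp(-x ** 2 / (2.0 * 2.0 ** 2))
print(width_at_fraction(profile, 0.5, 0.5), width_at_fraction(profile, 0.5, 0.1))
```

For a Gaussian the two widths should come out close to 2.355 and 4.292 times sigma, which gives a quick sanity check of the analysis code before it is applied to measured data.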

4.2 Scatter Fraction

The scatter fraction (SF) is a measure of the contamination of the coincidence data by photons that have scattered prior to being detected. If not corrected for, the scattered events will result in a loss in image contrast. Although scattered events can be corrected for, this usually results in an increase in image noise and/or bias. The SF is highly dependent on the source geometry and scattering environment as well as instrumentation parameters such as system geometry, shielding, and energy thresholds. To measure the SF according to the NEMA specifications, a catheter line source is placed at a 4.5 cm vertical offset inside a 20 cm diameter and 70 cm long polyethylene phantom, which is placed at the center of the FOV. The SF is defined as the ratio of scattered to total events measured and is estimated from the sinogram data of the line source. It is important that the data are acquired at a low count rate to minimize the influence of dead time and random coincidences on the estimate. In the analysis of the projection data, only data within the central 24 cm of the FOV are considered. A summed projection profile of the line source is generated from all the projection angles in the sinogram. Prior to summation, the projection at each angle has to be shifted in such a way that the pixel with the highest intensity is aligned with the central pixel in the sinogram (see Fig. 10.8). From the summed projection, the scatter is estimated by first determining the counts at 20 mm on either side of the peak (Fig. 10.8). These two values are then used to create a trapezoidal region under the peak. Scatter is defined as the total counts outside the 40 mm wide central region plus the area of the trapezoidal region under the peak. The SF is then simply the scatter counts divided by the total counts in the summed profile. This calculation is performed for each slice of the system and for the system as a whole. If data are acquired in 3-D, the sinograms have to be binned into 2-D data sets using single-slice rebinning (SSRB) [19] prior to the analysis.
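The analysis steps described above can be sketched for a single 2-D sinogram as follows. This is a simplified illustration, not the full NEMA procedure: the function name is hypothetical, the sinogram is assumed to be an array of projection angles by radial bins that is wide enough that the shifted data do not wrap around, and whole bins are used instead of the fractional-pixel handling specified by NEMA.

```python
import numpy as np


def scatter_fraction(sinogram, bin_mm):
    """Simplified NEMA-style scatter fraction for one 2-D sinogram (angles x bins)."""
    sino = np.asarray(sinogram, dtype=float).copy()
    n_bins = sino.shape[1]
    centre = n_bins // 2
    r_mm = (np.arange(n_bins) - centre) * bin_mm
    # Keep only data within the central 24 cm of the FOV.
    sino[:, np.abs(r_mm) > 120.0] = 0.0
    # Shift every projection so its maximum sits on the central bin, then sum.
    summed = np.zeros(n_bins)
    for row in sino:
        summed += np.roll(row, centre - int(np.argmax(row)))
    # Counts at 20 mm on either side of the peak.
    half_bins = 20.0 / bin_mm
    bins = np.arange(n_bins)
    left = np.interp(centre - half_bins, bins, summed)
    right = np.interp(centre + half_bins, bins, summed)
    # Scatter under the peak: trapezoid spanned by the two edge values.
    inside = np.abs(r_mm) <= 20.0
    under_peak = 0.5 * (left + right) * np.count_nonzero(inside)
    scatter = summed[~inside].sum() + under_peak
    return scatter / summed.sum()


# Synthetic test object: flat background of 1 count per bin plus a line source
# whose position follows a sine curve with a 45 mm amplitude.
angles = np.linspace(0.0, np.pi, 60, endpoint=False)
sino = np.ones((len(angles), 301))
for k, a in enumerate(angles):
    sino[k, 150 + int(np.rint(45.0 * np.sin(a)))] += 100.0
print(scatter_fraction(sino, 1.0))
```

In the synthetic example each projection holds 241 background counts and 100 unscattered counts within the central 24 cm, so the function should return a value close to 241/341.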

The NEMA protocol specifies that the scatter fraction is measured with a line source placed at a 4.5 cm vertical offset inside a 20 cm diameter and 70 cm long cylindrical polyethylene phantom, placed at the center of the FOV. A typical sinogram of the line source is shown in the top figure. Each row or projection line in the sinogram is shifted in such a way that the pixel with the highest intensity is aligned with the central pixel in the sinogram. All the rows are then summed to form a summed projection profile. The scatter counts are all the counts outside the 40 mm central region plus the trapezoidal section under the peak. The scatter fraction is the scatter counts divided by the total counts

4.3 Count Rate Performance and Correction Accuracy

The count rate performance of a PET system is a measurement that determines the count rate response of the system at different activity levels in the FOV. For this measurement, the same phantom used for the scatter fraction measurements is used (i.e., a 20 cm diameter and 70 cm long polyethylene phantom). The catheter is filled with a known amount of 18F, carefully assayed in a dose calibrator, and the total length of the source should be 70 cm. The amount of activity in the source at the start of the measurement should be high enough so that the peak count rate of the system is exceeded. Count rate data are then collected at regular time points as the source decays until the dead-time losses in the true event rate are less than 1 %. Data should be collected frequently enough so that the peak count rate can be accurately determined. If possible, the acquisition should be configured in such a way that random coincidences are acquired separately from the prompt coincidences, which allows for a more straightforward analysis of the count rates. If this is not possible, the NEMA documents provide an alternative approach.

From the collected data, count rate curves should be generated, which include the system true count rate (T), system random count rate (R), system scatter count rate (S), system total count rate (TOT), and system noise equivalent count rate (NEC). The NEC [15] is calculated using

\[ \mathrm{NEC} = \frac{T^{2}}{T + S + kR} \]

where k is 1 for a system that acquires randoms separately from prompt coincidences and 2 for a system that subtracts the randoms directly during the acquisition. The NEC is a valuable performance metric that has been shown to be proportional to the signal-to-noise ratio of the reconstructed images [16, 17]. From the count rate curves, the following metrics should be derived: the maximum true count rate and the activity at which it is reached, and the maximum NEC rate and the activity at which it is reached.
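A minimal sketch of the NEC calculation and the search for the peak of a count rate curve is shown below, assuming the commonly used form NEC = T²/(T + S + kR). The function names and the count rates are hypothetical and serve only to show the bookkeeping.

```python
import numpy as np


def nec_rate(trues, scatter, randoms, k=1):
    """Noise equivalent count rate; k=1 for separately acquired randoms, k=2 for online subtraction."""
    t, s, r = (np.asarray(v, dtype=float) for v in (trues, scatter, randoms))
    return t ** 2 / (t + s + k * r)


def peak_of_curve(activity, rate):
    """Return (activity at peak, peak rate) for a sampled count rate curve."""
    i = int(np.argmax(rate))
    return float(activity[i]), float(rate[i])


# Hypothetical count rates (kcps) at four activity concentrations (kBq/ml).
activity = [5.0, 10.0, 20.0, 40.0]
trues = [50.0, 90.0, 120.0, 100.0]
scatter = [20.0, 36.0, 48.0, 40.0]
randoms = [5.0, 20.0, 80.0, 320.0]

nec = nec_rate(trues, scatter, randoms, k=1)
print(peak_of_curve(activity, trues))
print(peak_of_curve(activity, nec))
```

Because the peak is taken from the sampled points, the accuracy of the reported peak activity depends on how densely the decay curve was sampled, which is why the data should be collected frequently enough around the peak.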

The count rate data are also used to determine how accurately the system can correct for dead time at high activity levels in the FOV. The dead-time correction is typically incorporated as one of the numerous corrections applied to the data before and during the image reconstruction. For each image slice and time frame, the average count rate (R image) is determined within a 180 mm diameter region of interest (ROI). This value is compared to a “true” average count rate (R true), which is determined from an average of image count rates at activity concentrations less than or equal to where the peak NEC is reached. The relative count rate error is then derived for each image slice and frame:

\[ \Delta R = \left( \frac{R_{\mathrm{image}}}{R_{\mathrm{true}}} - 1 \right) \times 100\,\% \]

The maximum error or bias below the activity at which the maximum NEC is reached should be reported.
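The reporting step can be sketched as follows, assuming the relative error is expressed as (R image / R true − 1) × 100 %. The function name and the input values are hypothetical, and the "true" rates are taken as given rather than derived from the low-activity frames.

```python
import numpy as np


def max_count_rate_error(activity, r_image, r_true, peak_nec_activity):
    """Largest relative count rate error (%) at or below the peak NEC activity."""
    a, ri, rt = (np.asarray(v, dtype=float) for v in (activity, r_image, r_true))
    error_pct = (ri / rt - 1.0) * 100.0
    # Only frames at or below the activity of the peak NEC are considered.
    selected = error_pct[a <= peak_nec_activity]
    return float(selected[np.argmax(np.abs(selected))])


# Hypothetical values for one image slice over four frames.
activity = [5.0, 10.0, 20.0, 40.0]
r_image = [50.0, 99.0, 190.0, 300.0]
r_true = [50.0, 100.0, 200.0, 400.0]
print(max_count_rate_error(activity, r_image, r_true, peak_nec_activity=20.0))
```

A negative value indicates that the dead-time correction undercorrects the image count rate at that activity level.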

4.4 Sensitivity

The sensitivity of the PET scanner is the count rate of the system for a given amount and distribution of activity in the FOV. According to the NEMA specifications, the sensitivity of the PET system should be measured with a 70 cm long thin catheter filled with activity. The activity in the source should be low enough to minimize any dead time in the system (preferably less than 1 % and a random rate less than 5 % of the true coincidence rate). The sensitivity is calculated as the total count rate of the system divided by the activity in the catheter. To ensure that all positrons annihilate near the location of the radioactive decay, the catheter source has to be placed inside a sleeve such as an aluminum sleeve that stops all positrons. This sleeve will unfortunately also attenuate some of the annihilation photons, which prevents a direct measurement of the sensitivity in air. To estimate the attenuation-free sensitivity in air, NEMA has adopted the method first described by Bailey et al. [18]. By successive measurement of the count rate, using sleeves of known thickness, the attenuation-free sensitivity in air can be estimated by exponentially extrapolating the count rate to zero sleeve thickness (see Fig. 10.9).
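The exponential extrapolation to zero sleeve thickness can be sketched as a straight-line fit to the logarithm of the measured count rates. The function name, the sleeve thicknesses, and the count rates below are assumptions for illustration, and the count rates are assumed to have already been corrected for decay.

```python
import numpy as np


def attenuation_free_sensitivity(thickness_mm, count_rates_cps, activity_bq):
    """Extrapolate count rates to zero sleeve thickness and return (cps/Bq, cps at zero thickness)."""
    x = np.asarray(thickness_mm, dtype=float)
    y = np.log(np.asarray(count_rates_cps, dtype=float))
    # ln(R) = ln(R0) + slope * thickness, fitted by linear least squares.
    slope, intercept = np.polyfit(x, y, 1)
    r0 = float(np.exp(intercept))
    return r0 / activity_bq, r0


# Hypothetical measurement: five accumulated wall thicknesses and a 5 MBq line source.
thickness = np.array([1.25, 2.5, 3.75, 5.0, 6.25])
rates = 5000.0 * np.exp(-0.02 * thickness)
sensitivity, r0 = attenuation_free_sensitivity(thickness, rates, 5.0e6)
print(sensitivity, r0)
```

Fitting in log space gives equal weight to each sleeve measurement; a weighted or nonlinear fit may be preferable when the count statistics differ appreciably between measurements.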

NEMA sensitivity (extrapolation back to zero thickness). The sensitivity according to the NEMA protocol is determined from successive measurements of a 70 cm line source of known activity placed inside aluminum sleeves of known thickness. The attenuation-free count rate in air is estimated by exponentially extrapolating the measured count rates back to zero sleeve thickness. The sensitivity is then the attenuation-free count rate divided by the activity in the line source.

The measurement of sensitivity is performed both at the center and at a 10 cm radial offset and reported for the system as a whole (i.e., total counts acquired by the system). The individual plane sensitivity should also be reported and is derived from the data acquired at the center position. For a system acquiring data in 2-D, the plane sensitivity is straightforward to determine since the data are directly sorted into individual planes. The plane sensitivity is simply the total count rate in each plane divided by the activity in the 70 cm long source. For a system acquiring data in 3-D, the plane sensitivity is determined by first sorting the 3-D sinograms into 2-D sinograms using SSRB. The plane sensitivity is then calculated from the rebinned sinograms.

4.5 Systems with Intrinsic Background

The detector materials used in the latest generation of PET systems are lutetium-based scintillators. LSO was the first lutetium-based scintillator and was discovered in 1992 [20]. LSO is an almost ideal detector material for PET in that it is a dense, bright, and fast scintillator. Since the initial discovery, lutetium-based scintillators with properties similar to those of LSO have been manufactured, such as LYSO and LGSO. The lutetium-based scintillators have allowed the manufacturers to produce PET systems that allow 3-D and TOF acquisition. One slight drawback of lutetium-based scintillators is that they all contain a natural background radioactivity originating from 176Lu. The decay of 176Lu results in a significant intrinsic background coincidence count rate in the system, which is not observed in systems using traditional PET scintillators such as BGO. The background coincidence count rate is primarily due to randoms, but there is also a small contribution of true coincidences. These are produced by coincidences between a beta particle absorbed in the local detector and the absorption in a distant detector of a gamma that is also emitted in the beta decay of 176Lu. The distribution of these events is uniform across the FOV, and the count rates from these are on the order of 1000 cps without any objects in the FOV. This count rate is dependent on the energy window used, and with attenuating objects in the FOV, the contribution from these events will be attenuated. The contribution of these events in clinical imaging can in general be considered negligible since the contribution from emission counts of the patient in the FOV is several orders of magnitude higher [21]. Nevertheless, the presence of the coincidences generated by the 176Lu background makes it necessary to modify the original NEMA procedure in order to minimize the influence of the background on the results.

The procedure for estimating the scatter fraction states that the scatter fraction is measured as part of the count rate performance test, using data from the test where the ratio of randoms to trues is less than 1 % (i.e., a near randoms-free condition). For a system with a lutetium-based scintillator, this condition is impossible to achieve due to the background radiation. Using the original NEMA protocol for estimating the scatter fraction would result in an overestimation of the scatter fraction due to the randoms rate from the 176Lu background [22]. It is therefore suggested that the protocol is modified in such a way that the prompts and randoms are both measured and stored. Randoms-free data (i.e., net trues) can then be produced by subtracting the measured random coincidences from the prompt coincidences. These data can then be used to estimate the scatter fraction. Since this is part of the count rate performance test, this method allows the estimation of the scatter fraction as a function of count rate.

For the sensitivity measurements, it is necessary to correct the prompt coincidences in the same manner as described above. It may also be necessary to correct the true coincidence data for the true coincidences produced by 176Lu background since the background may not be negligible compared to the count rate from the line source. To perform the correction, a blank scan is acquired without phantom or source in the FOV. This count rate is then subtracted from the count rate in the line source measurements.
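The two corrections described above amount to simple count rate arithmetic, sketched below. The function names and the numbers are hypothetical, and the blank scan is assumed to have been acquired with the same energy window and processed in the same way as the line source measurement.

```python
def net_trues(prompts_cps, randoms_cps):
    """Randoms-free count rate obtained by subtracting measured randoms from prompts."""
    return prompts_cps - randoms_cps


def background_corrected_rate(prompts_cps, randoms_cps, blank_prompts_cps, blank_randoms_cps):
    """Net trues from the source scan minus the net trues of a blank scan (176Lu trues)."""
    source = net_trues(prompts_cps, randoms_cps)
    blank = net_trues(blank_prompts_cps, blank_randoms_cps)
    return source - blank


# Hypothetical sensitivity measurement on a lutetium-based system.
rate = background_corrected_rate(
    prompts_cps=26500.0, randoms_cps=1200.0,
    blank_prompts_cps=1900.0, blank_randoms_cps=1100.0,
)
print(rate)  # 24500.0
```

In this example the 176Lu true coincidences make up about 3 % of the net source count rate, which illustrates why the blank scan correction can matter for a low-activity line source.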

A more complete discussion of the concerns and special considerations for NEMA performance testing of scanners using lutetium-based scintillators can be found in [21]. Detailed results and discussion from a modified NEMA performance test of a system using LSO scintillators can be found in [23].

4.6 Image Quality

The NEMA document also specifies an image quality test, which serves to provide an overall assessment of the imaging capabilities of the system under conditions similar to those of a clinical whole body scan. This test uses a chest-like phantom, sometimes referred to as the NEMA image quality phantom or IEC phantom, which contains six fillable spheres with diameters between 10 and 37 mm and a 50 mm diameter low-density cylindrical insert to simulate the lower attenuation of the lungs. This test also uses the 20 cm diameter and 70 cm long phantom used in the count rate and scatter fraction tests. This phantom is placed adjacent to the chest phantom with activity in the catheter, which serves as a source of out-of-field background activity as one would expect in clinical whole body imaging conditions. The chest phantom should be filled with a background activity concentration of 5.3 kBq/ml (0.14 μCi/ml) of 18F, which corresponds to a typical whole body injection of 370 MBq (10 mCi) into a 70 kg patient. The line source in the 70 cm long phantom should be filled with 116 MBq (3.08 mCi) of 18F, which yields approximately the same activity concentration as in the chest phantom.

The two largest spheres should be filled with cold water to allow an evaluation of cold lesion imaging. The remaining spheres should be filled with an activity concentration that is four times the background concentration for evaluation of hot lesion imaging. NEMA also recommends that this test be performed at an 8:1 lesion-to-background ratio. The acquisition time of the phantom should be selected to simulate a whole body scan covering 100 cm in 30 min. Because of the relatively high noise levels in the resulting image, it is recommended that three replicate scans of the phantom are acquired, where the scan time in each replicate is adjusted for the decay of the isotope.

The acquired data is then reconstructed using the standard protocol that a site uses for routine whole body imaging. The images are then evaluated in terms of:

- Hot sphere contrast (four values)
- Cold sphere contrast (two values)
- Background variability for each sphere (four values)
- Attenuation and scatter correction (one value for each image slice)

NEMA describes in great detail how the phantom is to be analyzed, including placement and dimensions of ROIs for the derivation of these values, and the reader is referred to the NEMA document for the details.
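For orientation, the contrast and background variability figures of merit can be sketched as below, using the definitions commonly quoted from the NEMA NU 2 document (percent contrast relative to the known activity ratio, and the standard deviation of the background ROI means relative to their mean). The function names and ROI values are hypothetical, and ROI placement is not covered; the NEMA document remains the reference.

```python
import numpy as np


def hot_sphere_contrast(c_hot, c_bkg, activity_ratio):
    """Percent contrast of a hot sphere; activity_ratio is the true sphere-to-background ratio."""
    return (c_hot / c_bkg - 1.0) / (activity_ratio - 1.0) * 100.0


def cold_sphere_contrast(c_cold, c_bkg):
    """Percent contrast of a cold (activity-free) sphere."""
    return (1.0 - c_cold / c_bkg) * 100.0


def background_variability(bkg_roi_means):
    """Percent background variability from the means of the background ROIs."""
    values = np.asarray(bkg_roi_means, dtype=float)
    return float(np.std(values, ddof=1) / np.mean(values) * 100.0)


# Hypothetical ROI means for a 4:1 sphere-to-background ratio.
print(hot_sphere_contrast(c_hot=2.5, c_bkg=1.0, activity_ratio=4.0))  # 50.0
print(cold_sphere_contrast(c_cold=0.3, c_bkg=1.0))                    # 70.0
print(background_variability([9.0, 10.0, 11.0]))                      # 10.0
```

A hot sphere contrast of 100 % would mean that the measured sphere-to-background ratio equals the true activity ratio; partial volume effects typically reduce the value for the smaller spheres.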

The manufacturers of PET systems do not specify the values or ranges of expected values of the parameters derived from the image quality test for a specific scanner. One of the reasons for this is the large number of factors that will influence the values, especially acquisition and reconstruction parameters, which are specific to each imaging site. However, one of the primary benefits of this test is that it provides baseline imaging performance values that can be used for comparison as the system ages. Due to the complexity of the test, it is of great importance that all imaging parameters, such as phantom activities, acquisition times, and reconstruction parameters, are well documented so the test can be reproduced at a later time.

The image quality test is also a good starting point for optimizing existing imaging protocols or developing new ones. Using the acquired data sets, the effect of reconstruction parameters on contrast and noise can be evaluated.

4.7 Other Tests

In addition to the NEMA tests described above, there are a number of other tests a user might perform on the system. The purpose of these tests is to determine that the system is operational and to check that corrections applied to the data are producing images that are free of artifacts and also are quantitatively accurate. These tests may also be used as a reference for the long-term evaluation of the system and may be used as part of an annual performance testing.

4.7.1 Image Uniformity

Uniformity was part of the first NEMA specifications for the performance testing of PET scanners [24]. This test was for various reasons dropped in later revisions of the NEMA specification, but it still has merit for testing an individual PET scanner, since it can reveal problems in the processing chain that generates an image. This test involves imaging of a uniform phantom (typically 20 cm in diameter and 20 cm or longer axially). At least 20 million counts/slice should be acquired and then reconstructed with FBP without smoothing, but with all corrections applied (i.e., scatter, attenuation, and normalization). The high number of counts is necessary in order to better visualize any subtle problems in the corrections that otherwise might be masked by statistical noise.

The images can either be evaluated by visual inspection or quantitatively as described in [24]. During a visual inspection, any signs of concentric ring artifacts are typically a sign of issues in the normalization. This type of artifact could also originate from detector pileup if the amount of activity in the phantom is too high. It is therefore important that this test is performed at an activity level that does not produce more than 10–20 % dead time in the system.

It is also suggested that horizontal and vertical profiles through the phantom are generated to ensure that the activity profile is flat and uniform. Any asymmetries may be the result of an incorrect attenuation correction (e.g., misalignment of the CT-generated attenuation correction and the emission data). Any increase or decrease in the activity profile toward the center may be the result of a problem in the scatter correction or the attenuation correction.

4.7.2 Overall Imaging Performance

An alternative to the NEMA method for evaluating the overall imaging performance of the system is to use a phantom such as the Jaszczak phantom. Using this phantom, the lesion contrast, uniformity, and spatial resolution can be evaluated fairly quickly and semiquantitatively. The phantom should be filled with an activity concentration that is close to what is used on the system clinically. The phantom should then be imaged and reconstructed using typical clinical imaging protocols (e.g., whole body and brain). This test shows the imaging performance that can typically be expected during routine imaging.

A high-statistics scan may also be useful to evaluate the “best possible” imaging performance. This may also reveal artifacts from corrections that otherwise might be masked by statistical noise. Both the typical and high-statistics images can then be used as reference images when evaluating the imaging performance as the system ages.

4.7.3 Quantification

Since most sites use the data from a PET scanner to generate quantitative values such as the standardized uptake value or SUV [25], it is important that the quantitative accuracy of the system is tested. This is done by imaging a uniform phantom of known volume, filled with a known amount of activity. Images of the phantom are then reconstructed with all corrections applied. The images are analyzed by drawing regions of interest (ROIs) on each image slice, typically a centrally placed circular region with a diameter that is well within the edge of the phantom. The measured activity concentration from the ROI analysis is then compared with the calibrated activity concentration, decay corrected to the same reference time as the phantom scan (typically the scan start time). Most workstations designed for the analysis of PET images provide values in SUV, and this test may also reveal issues in the calculation of the SUV or, more likely, issues in the data entry of relevant parameters (calibrated activity, time of calibration, and phantom weight or volume) when the scan was acquired. If all parameters were entered correctly, the SUV should not deviate from 1.0 by more than 5–10 % for a properly calibrated system.
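The expected SUV of 1.0 in a uniform phantom follows directly from the SUV arithmetic, as the sketch below illustrates. The function names, the half-life constant, and the example values are assumptions for illustration; the phantom "weight" is taken as its water-filled volume at 1 g/ml.

```python
import math

F18_HALF_LIFE_MIN = 109.77  # approximate half-life of 18F in minutes


def decay_corrected_activity(activity_bq, elapsed_min, half_life_min=F18_HALF_LIFE_MIN):
    """Activity remaining after elapsed_min minutes."""
    return activity_bq * math.exp(-math.log(2.0) * elapsed_min / half_life_min)


def phantom_suv(measured_bq_per_ml, calibrated_activity_bq, elapsed_min, phantom_weight_g):
    """SUV of a uniform phantom: measured concentration over activity per gram at scan start."""
    activity_at_scan = decay_corrected_activity(calibrated_activity_bq, elapsed_min)
    return measured_bq_per_ml / (activity_at_scan / phantom_weight_g)


# Hypothetical check: 120 MBq assayed one half-life before scan start in a 6000 g phantom.
suv = phantom_suv(
    measured_bq_per_ml=10000.0,
    calibrated_activity_bq=120.0e6,
    elapsed_min=F18_HALF_LIFE_MIN,
    phantom_weight_g=6000.0,
)
print(round(suv, 3))  # 1.0
```

Entering the assay time incorrectly by a few minutes, or the weight in the wrong units, shifts the result by an easily recognizable factor, which is why this test is effective at catching data entry problems.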

4.7.4 Bed Motion

Since most PET/CT systems are used for whole body imaging, the bed motion should be tested to make sure the movement and the assembly of the multiple data sets do not introduce artifacts. This test can be performed with a uniform cylinder phantom that is positioned in the FOV so that two or more axial bed positions are needed to image it. Once the images have been reconstructed and assembled into a whole body image set, the images are visually inspected for artifacts. In addition, an axial count profile from a centrally placed ROI in the images of the cylinder can be helpful in revealing problems in the assembly of the multiple bed positions. Any problem with the bed movement would be manifested as an increased nonuniformity in the region where the adjacent image sets are assembled.

A phantom with internal structures, such as the Jaszczak phantom, can also be useful in detecting problems in the bed motion and the assembly of the image sets. In this case the phantom has to be positioned in such a way that the structures to be evaluated are in the part of the FOV where the adjacent bed positions overlap. Any issues with the bed motion would be seen as distortions such as discontinuities in the coronal and sagittal views of the final assembled images.

One manufacturer recently introduced a continuous bed motion as an alternative to the conventional step-and-shoot bed motion [26]. To verify that this bed motion is working properly, one could image a phantom with a resolution pattern such as the Jaszczak or Derenzo phantom. In this case the axis of the phantom has to be rotated 90° to the axis of the scanner so the resolution pattern is visualized in the coronal view of the final images. In this case the images would be inspected for potential losses in resolution and image distortions and should be compared to corresponding images acquired as a single static bed position.

4.7.5 Image Registration

Most modern PET systems are multimodality systems such as PET/CT systems and, in recent years, PET/MRI systems, where the images from the two modalities are fused in the diagnostic readout. It is therefore important that the images from the two modalities are spatially registered. Another reason image registration is of importance is that the images from the CT (or MRI) are used to generate the attenuation correction, and any misregistration could introduce image artifacts and quantitative errors. There are several methods for performing the image registration calibration and for checking the registration. One manufacturer uses a set of angled line sources that are imaged on both the PET and CT systems. From the reconstructed PET and CT images, a transformation matrix (three translations and three rotations) is generated, which is used to register the two image sets. Another manufacturer uses a set of distributed glass spheres embedded in a foam phantom that is imaged on both the PET and the CT systems. In this case the PET scanner generates a transmission image by using a built-in rotating pin source. Similarly, from the two reconstructed image sets, a transformation matrix is generated that allows the registration of subsequent image acquisitions.

Under normal circumstances, the transformation matrix should not change over time since the imaging gantries are fixed relative to each other. However, during service of a system, the gantries are usually physically separated, and the calibrations need to be repeated once the system is reassembled. Although the registration calibration procedure is very robust, it is recommended that the registration is checked from time to time. One method is to image a series of point sources placed at different positions in the FOV. It is important that the sources can be visualized on both the PET and CT systems, which can be accomplished by using, for instance, a 68Ge or 22Na source mixed with iodine. The position of each source in the FOV of the PET and CT images can then be calculated using, for instance, a center of mass calculation, and the position of each source is then compared between the two image sets. For a well-calibrated system, the error should not be more than about a millimeter. Since point sources mixed with iodine may not be readily available, a registration check can also be performed using a phantom with some built-in structures such as spheres. This could, for instance, be a Jaszczak phantom with fillable spheres or the NEMA image quality phantom. The spheres in these phantoms can then be filled with a mixture of 18F and iodine contrast and imaged on the system. The registration of the two image sets can then be checked either visually, by fusing the PET images with the contrast-enhanced CT images, or quantitatively, by calculating the center of mass of each sphere in the two image sets and from these the registration error.
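The center of mass comparison can be sketched as below. The example assumes that the voxels belonging to one source have already been segmented and converted to millimeter coordinates in a common frame; the data layout and function names are hypothetical.

```python
import math

def center_of_mass(voxels):
    """Intensity-weighted centroid.

    voxels: list of ((x, y, z), intensity) with positions in mm.
    """
    total = sum(w for _, w in voxels)
    if total <= 0:
        raise ValueError("total intensity must be positive")
    return tuple(sum(p[i] * w for p, w in voxels) / total for i in range(3))

def registration_error(pet_voxels, ct_voxels):
    """Euclidean distance (mm) between the PET and CT centroids of one source."""
    pet_c = center_of_mass(pet_voxels)
    ct_c = center_of_mass(ct_voxels)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(pet_c, ct_c)))

if __name__ == "__main__":
    # Toy data for a single point source seen in both modalities.
    pet = [((10.0, 20.0, 30.0), 5.0), ((12.0, 20.0, 30.0), 5.0)]
    ct = [((11.0, 20.5, 30.0), 1000.0)]
    print(f"registration error: {registration_error(pet, ct):.2f} mm")
```

Repeating this for every source and reporting the largest error gives a single number that can be compared with the roughly one millimeter expected for a well-calibrated system.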

4.7.6 PET/MRI

Combined PET and MRI systems have recently been developed by all major manufacturers of PET systems [27, 28]. The tests for these systems are basically the same as the tests performed on a PET/CT (e.g., NEMA tests, image/gantry registration, quantification). One of the main challenges in combined PET/MRI systems is the derivation of an accurate attenuation map for the object to be imaged. Unlike CT images, the MRI images do not provide information regarding the photon attenuation properties of the object. In many situations, fairly accurate attenuation maps can nevertheless be generated by using appropriate pulse sequences together with sophisticated segmentation techniques that draw on a priori information about the imaged object. However, a major complication arises when an object does not produce a signal in the MRI scanner, such as bone in patients and the plastic used in phantoms. These objects will appear as voids in the MRI image, and as a consequence, most algorithms for producing an attenuation map would incorrectly consider these objects to have zero attenuation. This will result in an undercorrection of the emission data and thus in an underestimation of the activity concentration in the reconstructed PET images. In the worst scenario, this may also lead to reconstruction artifacts.
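The size of the undercorrection can be estimated for a single line of response with a simple exponential attenuation model. The sketch below is a deliberately simplified illustration, not a reconstruction algorithm. The attenuation coefficient used for water at 511 keV (about 0.096 per cm) is a standard value, while the acrylic coefficient and the wall thickness are assumed values chosen only for the example.

```python
import math

def attenuation_correction_factor(segments):
    """ACF for one line of response.

    segments: list of (mu_per_cm, length_cm) for each material crossed.
    """
    return math.exp(sum(mu * length for mu, length in segments))

def recovered_fraction(true_segments, assumed_segments):
    """Ratio of the applied ACF to the true ACF.

    Values below 1 mean the emission data are undercorrected.
    """
    return (attenuation_correction_factor(assumed_segments)
            / attenuation_correction_factor(true_segments))

if __name__ == "__main__":
    MU_WATER = 0.096    # per cm at 511 keV
    MU_ACRYLIC = 0.11   # per cm, assumed illustrative value
    # 20 cm of water plus two 0.3 cm phantom walls along the line of response.
    true_path = [(MU_WATER, 20.0), (MU_ACRYLIC, 0.6)]
    mri_path = [(MU_WATER, 20.0), (0.0, 0.6)]  # walls treated as zero attenuation
    print(f"recovered fraction: {recovered_fraction(true_path, mri_path):.3f}")
```

Under these assumptions the walls alone account for an underestimation of roughly 6% along that line of response, since the water contribution cancels in the ratio and only the ignored material matters.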

For phantoms, the amount of attenuation from the container or other solid structures can be added to the MRI-generated attenuation map by using data from separately acquired CT images. In order to avoid reconstruction artifacts, the attenuation map derived from CT images has to be accurately registered to the MRI images.

Another source of attenuation in PET/MRI scanners is the presence of transmission and receiver coils, which are essential in the acquisition of the MR images. These coils are typically placed in close vicinity of the object that is imaged and are therefore inside the FOV of the PET scanner. Since these coils are not visualized in the MRI images, they would not contribute to an MRI-derived attenuation map. The amount of attenuation these coils cause depends on the design of the coil and the materials used in its construction. Some of the coils used for whole body imaging are very thin and are made of low attenuating materials and therefore produce an almost negligible amount of attenuation. If the coil produces a significant amount of attenuation, a CT-derived attenuation map of the coil can be added to the MRI-derived attenuation map. This requires that the coil is rigid and is placed at a fixed and known position during the scan (e.g., a head coil).

5 Routine Quality Assurance

Routine quality assurance tests are performed to verify that the system is operational for routine imaging. They also ensure that a consistent image quality is maintained and that image quantification is reliable and accurate. These tests are designed to give the operator an overall assessment of the status of the system and whether it can be used for imaging or not. Although the particulars of these routine QC tests are manufacturer specific, the basic tests are in general very similar and common to all systems.

5.1 Daily Tests

Common to all PET systems, independent of manufacturer, is a test of the detector system that should be performed every day, prior to scanning the first patient. This is analogous to the daily flood image that is acquired on a conventional scintillation camera. Depending on the manufacturer, this test is performed with either a uniform cylinder source or a built-in rotating line source filled with a long-lived positron emitter such as 68Ge. The idea is to expose all detectors in the system to a uniform flux of annihilation photons. The collected data are then presented to the user either as a series of sinograms or as a display of the counts collected by each detector element (i.e., fan sum). An example of this is shown in Fig. 10.10, where four out of the total 109 sinograms of a uniform 68Ge cylinder phantom are shown in the upper half of the figure. The lower part of the same figure shows the fan-sum counts for all of the detectors in the system, shown as a gray-scale image. The diagonal lines in green and yellow in the sinogram are the fan sums for a pair of detectors in the system. The locations of these detectors are also indicated in the fan-sum count image. This figure illustrates a fully functional system. The dark diagonal lines seen in the sinograms are normal for this system and are not an indication of failing detector elements. The variation in the fan sums is also normal for this system. This particular system has four rings of block detectors with 48 blocks in each ring, and the detector elements at the edge of each block tend to be less efficient than the centrally located elements. This drop in efficiency is the explanation for the lower number of counts between each detector module and detector ring.
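The fan-sum idea can be sketched in a few lines. The example assumes the coincidence data are available as counts per detector pair, which is a hypothetical data layout, and it flags outliers against the median with an arbitrary tolerance. Since edge elements are normally less efficient, a real check would compare against a system-specific reference rather than a single global threshold.

```python
from statistics import median

def fan_sums(lor_counts, n_detectors):
    """Total counts seen by each detector over all lines of response.

    lor_counts: dict mapping (detector_a, detector_b) -> coincidence counts.
    """
    sums = [0] * n_detectors
    for (a, b), counts in lor_counts.items():
        sums[a] += counts
        sums[b] += counts
    return sums

def flag_outliers(sums, tolerance=0.5):
    """Indices whose fan sum differs from the median by more than tolerance * median.

    The 50% tolerance is an assumed value for illustration only.
    """
    ref = median(sums)
    return [i for i, s in enumerate(sums) if abs(s - ref) > tolerance * ref]

if __name__ == "__main__":
    # Toy system with four detectors; detector 3 records no coincidences.
    counts = {(0, 1): 100, (0, 2): 100, (0, 3): 0,
              (1, 2): 100, (1, 3): 0, (2, 3): 0}
    sums = fan_sums(counts, 4)
    print(sums, flag_outliers(sums))
```

A dead detector shows up as a near-zero fan sum (a dark spot in the fan-sum image), while a noisy one shows up as an unusually high value (a hot spot).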

Example of the presentation of the sinograms (4 out of 109) and the fan-sum counts displayed as a gray-scale image (bottom) from a normal QC scan of a cylinder phantom. The yellow and green diagonal lines indicate two specific detector elements in the system and are also indicated with their corresponding locations in the fan-sum display. Any detector problem would be seen in the sinogram as illustrated in Fig. 10.5, with corresponding dark or hot spots in the fan-sum image (Note: the repetitive pattern of dark diagonal lines is normal for this particular system)

As described earlier in this chapter, this will give the operator quick feedback on whether the system is operational and whether there are any apparent deficiencies in the system, such as a failing detector module. A trained operator may also detect more subtle changes in the system, such as a drift in the energy thresholds or detector gains, from changes in the patterns in the sinograms (e.g., the appearance of cold or hot diagonal streaks). The daily flood test performed on most PET systems is very sensitive in detecting the most common problem seen in a PET system (i.e., detector failures).

One manufacturer uses a calibrated 68Ge cylinder source as the source for the daily QC. This source is placed at the center of the FOV, and a fixed number of true coincidence counts are acquired every day. This data set is used not only to check that all the detectors are operational but also to generate a new normalization file and calibration factor every day. By generating a new normalization every day, small drifts in the detector system can be corrected for without the need for any detector adjustments or tuning. Measuring the calibration factor every day allows the long-term stability of the system to be tracked, and any large change may indicate that the system is in need of retuning or might be a sign of a hardware failure (e.g., a failing high-voltage supply).
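Tracking the daily calibration factor amounts to comparing each new value with a reference and flagging large deviations. The sketch below assumes the factors have been exported as a plain list of numbers; the 5% action limit and the function names are assumptions for illustration, not a manufacturer specification.

```python
def relative_change(baseline, value):
    """Fractional change of a calibration factor relative to the baseline."""
    return (value - baseline) / baseline

def flag_days(cal_factors, baseline, limit=0.05):
    """Indices of days whose calibration factor deviates by more than `limit`.

    `limit` is an assumed action level; the appropriate value is site
    and system specific.
    """
    return [
        day for day, cf in enumerate(cal_factors)
        if abs(relative_change(baseline, cf)) > limit
    ]

if __name__ == "__main__":
    # Hypothetical calibration factors from four consecutive daily QC scans.
    history = [1.00, 1.01, 0.99, 1.08]
    print(flag_days(history, baseline=1.00))
```

Plotting the same values over weeks or months makes slow drifts visible that would not trigger a fixed daily limit.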

Figure 10.11 shows the output from a successful QC scan. The top window tells the operator that the system has passed all the QC tests. A more detailed report of the test results can also be generated, which is shown in the bottom window. In case of a failure, this report will tell the user which part of the QC test failed. This will provide guidance on whether the problem can be easily resolved (e.g., by repeating the QC scan after repositioning the phantom) or whether the system is in need of service.

Example of the reports from the daily QC on a Siemens PET/CT system. The top figure tells the user that the system passed all the daily QC tests and that the system is operational. A more detailed report is also produced, which provides information on which test(s) did not pass and could also give insight into what might have caused the QC to fail

5.2 Monthly/Quarterly Tests

Since a PET system is used to provide quantitative images, the quantitative accuracy should be tested. Because most modern PET systems tend to be very stable in terms of detector drifts, this test typically only needs to be performed quarterly. It is also recommended that quantitative accuracy is tested after a major service or detector adjustments. As described earlier, this is tested with a uniform cylinder phantom filled with a known amount of activity. ROI analysis is then used to compare the activity concentration in the reconstructed images to the actual assayed activity concentration. It is important to point out that this test should not be performed with the same phantom used for the determination of the calibration factor (e.g., the 68Ge phantom used in the daily QC) since this would not reveal any problems in the quantitative accuracy.
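The comparison between the ROI value and the assayed activity concentration can be sketched as follows. The example assumes the phantom is filled with 18F (half-life 109.77 min) and that the reconstructed images are decay corrected to the scan start time; the function and parameter names are hypothetical.

```python
F18_HALF_LIFE_MIN = 109.77  # physical half-life of 18F in minutes

def decay_correct(activity, elapsed_min, half_life_min=F18_HALF_LIFE_MIN):
    """Activity remaining after `elapsed_min` minutes of physical decay."""
    return activity * 0.5 ** (elapsed_min / half_life_min)

def quantitative_error_percent(roi_mean_kbq_ml, assayed_kbq, volume_ml, elapsed_min):
    """Percent difference between the measured and expected concentration.

    elapsed_min: time from dose assay to scan start (assumed reference time).
    """
    expected = decay_correct(assayed_kbq, elapsed_min) / volume_ml
    return 100.0 * (roi_mean_kbq_ml - expected) / expected

if __name__ == "__main__":
    # Hypothetical numbers: 60 MBq assayed, 6 L phantom, scanned 60 min later.
    error = quantitative_error_percent(
        roi_mean_kbq_ml=6.7, assayed_kbq=60000.0, volume_ml=6000.0, elapsed_min=60.0
    )
    print(f"quantitative error: {error:+.1f} %")
```

The result is only as good as the inputs: the residual activity in the syringe, the phantom volume, and the clock times all enter the expected concentration directly.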

5.3 Annual Tests

It is recommended that a more thorough test is performed on the system on an annual basis. In the US, this is required by the ACR as part of PET scanner accreditation. The main purpose of the annual test is to ensure that the imaging properties of the system have not degraded. The annual test does not have to be as rigorous as the acceptance test, but it is a good idea to perform at least a subset of these tests. An overall imaging performance test using, for instance, a Jaszczak phantom or ACR accreditation phantom allows for a quick evaluation of the spatial resolution, contrast, and uniformity. This test should be performed using the same phantom and under similar conditions as the acceptance testing. This allows a comparison of the performance since the time of installation and could reveal any degradation in imaging performance.

It is also recommended to perform the spatial resolution, sensitivity, and scatter fraction tests. Any significant change in the measured values over time may be an indication that the system is in need of a readjustment of detector LUTs and energy thresholds.

A count rate performance test is a good way to ensure that the whole system is operational under somewhat extreme count rate conditions. In a way, it can be seen as a stress test of the system, since it requires that the whole system is fully functional from the front-end detectors, through the processing electronics to the final data storage.

5.4 Tests for Clinical Trials

Quantitative PET imaging is becoming increasingly important in various clinical trials in the evaluation of new cancer therapies. The most commonly used semiquantitative index in PET imaging is the SUV, which is a simplified measure of glucose metabolism. The SUV has been used extensively to characterize lesions, to differentiate between malignant and benign lesions, and for the assessment of therapy response [29–33]. The SUV is defined as the activity concentration in tissue divided by the injected activity per unit body weight:

$$\mathrm{SUV} = \frac{\mathrm{VOI}}{A_{\mathrm{injected}} / \text{body weight}}$$

where VOI is the activity concentration within a volume of interest and A injected is the total injected activity, decay corrected to the time of the VOI measurement. Using the SUV as a quantitative index is very attractive from a practical point of view since it eliminates the complexities of traditional quantitative study protocols used in PET. These studies involve applying a pharmacokinetic model to the dynamically acquired PET data. These types of studies are typically limited to single organ studies and are typically lengthy and labor intensive to perform. On the other hand, the SUV is very sensitive to a number of factors that may introduce unacceptably large errors. For instance, it is very important that all clocks used to record relevant time points (i.e., dose assay time, injection time, and scan start time) are synchronized. Furthermore, the SUV has been shown to be very sensitive to the uptake time, with the SUV in lesions tending to increase over time. The blood glucose level is another factor that will affect the SUV.
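The SUV definition and its sensitivity to timing errors can be illustrated with a short calculation. The sketch assumes an 18F-labeled tracer (half-life 109.77 min), activity concentration in kBq/ml, body weight in grams, and a tissue density of 1 g/ml so that the result is dimensionless; the function and parameter names are hypothetical.

```python
F18_HALF_LIFE_MIN = 109.77  # physical half-life of 18F in minutes

def suv(voi_kbq_per_ml, injected_kbq, body_weight_g, minutes_since_assay,
        half_life_min=F18_HALF_LIFE_MIN):
    """Body-weight SUV with the injected activity decay corrected to scan time."""
    decayed = injected_kbq * 0.5 ** (minutes_since_assay / half_life_min)
    return voi_kbq_per_ml / (decayed / body_weight_g)

if __name__ == "__main__":
    # Hypothetical patient: 370 MBq assayed, 70 kg, scanned 60 min after assay.
    reference = suv(5.0, 370000.0, 70000.0, 60.0)
    # Same measurement, but with one clock running 5 min ahead of the others.
    offset = suv(5.0, 370000.0, 70000.0, 65.0)
    print(f"SUV = {reference:.2f}, with 5 min clock offset = {offset:.2f}")
    print(f"relative change: {100.0 * (offset / reference - 1.0):.1f} %")
```

With these assumptions, a 5 min clock offset changes the decay-corrected activity, and hence the SUV, by roughly 3%, which illustrates why synchronized clocks matter.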

In a clinical trial, a subject is typically scanned prior to the start of treatment. The subject is then imaged in one or more PET imaging sessions, either during or after the treatment. The change in SUV in a lesion is then used as an indicator of the efficacy of the drug.

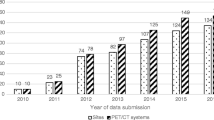

In addition to keeping the imaging conditions as close to identical as possible at each imaging session, it is also of great importance to maintain the quantitative accuracy and stability of the PET system over time. Large studies are typically performed as multicenter trials, where the subjects are imaged at different institutions and imaging centers. Although each individual patient is imaged at a specific imaging center and on the same scanner, the entire subject population is very likely to have been imaged on a range of different systems, each with its own specific imaging characteristics. A major challenge in these multicenter trials is therefore to ensure that data acquired on these different systems are quantitatively accurate and comparable.