Abstract

Combat between governmental forces and insurgents is modelled in an asymmetric Lanchester-type setting. Since the authorities often have little and unreliable information about the insurgents, ‘shots in the dark’ have undesirable side-effects, and the governmental forces have to identify the location and the strength of the insurgents. In a simplified version in which the effort to gather intelligence is the only control variable and its interaction with the insurgents based on information is modelled in a non-linear way, it can be shown that persistent oscillations (stable limit cycles) may be an optimal solution. We also present a more general model in which, additionally, the recruitment of governmental troops as well as the attrition rate of the insurgents caused by the regime’s forces, i.e. the ‘fist’, are considered as control variables.

1 Introduction

In a remarkably early paper, Lanchester (1916) describes the course of a battle between two adversaries by two ordinary differential equations. By modelling the attrition of the forces as a result of the attacks by their opponent, the author is able to predict the outcome of the combat. There are dozens, maybe even hundreds, of papers dealing with variants of Lanchester’s model and extending it. There is, however, a remarkable fact.

Together with A.K. Erlang’s idea to model telephone engineering problems by queueing methods, Lanchester’s approach may be seen as one of the forerunners of Operations Research. And OR is—at least to a certain extent—the science of optimization. But, strangely enough, virtually all existing Lanchester models neglect optimization aspects.

Lanchester’s model has been modified and extended in various directions. Deitchman (1962) and later Schaffer (1968) study asymmetric combat between a government and a group of insurgents. Since the regime’s forces have only limited situational awareness of their enemies, they ‘shoot in the dark’. But such tactics lead to collateral damage. Innocent civilians are killed and infrastructure is destroyed. Moreover, insurgents may escape unharmed and will continue their terroristic actions.

Collateral damage will trigger support for the insurgency, and new potential terrorists will join the insurgents, as modelled, e.g., in Caulkins et al. (2009) and Kress and Szechtmann (2009); see also Caulkins et al. (2008). For a more detailed discussion of such ‘double-edged sword’ effects see also Kaplan et al. (2005), Jacobson and Kaplan (2007), and Washburn and Kress (2009, Sect. 10.4). In a recent paper Kress and MacKay (2014) generalize Deitchman’s guerrilla warfare model to account for the trade-off between intelligence and firepower.

To avoid responses with these undesirable side-effects, improved intelligence is required. As stressed by Kress and Szechtmann (2009): ‘Efficient counter-insurgency operations require good intelligence’. These authors were the first to include intelligence in a dynamic combat setting. While their model was descriptive, Kaplan et al. (2010) presented a first attempt to apply optimization methods to intelligence improvement.

The present paper uses dynamic optimization to determine an optimal intelligence gathering rate. Acting as decision maker, the government tries to minimize the damage caused by the insurgents, the cost of gathering intelligence (the ‘eye’) and militarily attacking the insurgents (the ‘fist’) as well as the costs for maintaining its forces, and, possibly, recruitment costs.

The continuous-time version in which we will formulate the model below (see Sect. 2) and the use of optimal control theory allow one to derive interesting insights into the course of the various variables of the model, especially concerning the occurrence of persistent oscillations. Using Hopf bifurcation theory we will demonstrate (at least numerically) the existence of stable limit cycles for the variables of the model.

The paper is organized as follows. The model is presented in Sect. 2. In Sect. 3 a simplified model is presented and analysed, leading to two results. On the one hand, it can be shown analytically that interior steady states can exist only if the marginal effect of casualties on the increase of insurgents is smaller than the average effect; on the other hand, the existence of periodic solutions is shown numerically by applying Hopf bifurcation theory. Moreover, the qualitative structure of periodic solution paths is discussed. Finally, in Sect. 4 some conclusions are drawn and suggestions for possible extensions are given.

2 The Model

Following the model of Kress and Szechtmann (2009) we describe the interaction between governmental forces and insurgents by a variant of Lanchester’s model by

with given initial values G(0) and I(0). The variable \(t \in [0,\infty )\) denotes time,Footnote 1 G(t) and I(t) describe the size of governmental forces, and the fraction of insurgents in the population at time t, respectively. Population size is constant over time and normalized to 1 for simplicity. C(t) describes the collateral damages which may result in the increase of insurgents \(\theta (C)\). Footnote 2 It is reasonable to assume that \(\theta (C)\) is a positive, strictly monotonically increasing continuous function of collateral damages C. α and δ are non-negative constants; α is the attrition rate of the government force due to insurgents’ actions, whereas δ is the natural decay rate of soldiers due to natural attrition and defection. The reinforcement rate β(t) as well as the attrition rate γ(t) may be seen either as time dependent decision variables of the government, or as constant fixed parameters in a simplified version of the model.Footnote 3
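One set of dynamics consistent with the symbol definitions above and with condition (19) in Sect. 3.1 can be sketched as follows. It assumes that the reinforcement β enters additively, as in the Kress and Szechtmann (2009) framework, and it should be read as a reconstruction under that assumption rather than as a verbatim statement of the model equations:

$$\displaystyle{ \dot{G} = -\alpha I -\delta G +\beta,\qquad \dot{I} = -\gamma G\left [\mu +(1-\mu )I\right ] +\theta (C),\qquad C =\gamma G(1-\mu )(1 - I). }$$

In this form the total firepower γG splits into a share \(\mu + (1-\mu )I\) that hits insurgents and a share \((1-\mu )(1 - I)\) that hits the rest of the population.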

Key in the interaction between soldiers and insurgents is the level of intelligence μ(t), with 0 ≤ μ(t) ≤ 1. Without knowledge about the location of insurgents (i.e. μ(t) = 0) the government is ‘shooting in the dark’, where the probability of reducing insurgents is proportional to the size of the insurgency. On the other hand, insurgents can be combated precisely if intelligence is perfect, that is, μ(t) = 1. This effect can also be seen in the number of collateral casualties C(t), which is zero under perfect information μ(t) = 1.
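The two limiting cases described here can be checked numerically. The sketch below assumes the functional forms γG[μ + (1 − μ)I] for hits on insurgents and γG(1 − μ)(1 − I) for collateral casualties, which are consistent with condition (19) in Sect. 3.1 but are not quoted from the model equations; the function names and the parameter values in the usage note are hypothetical.

```python
def insurgent_attrition(G, I, mu, gamma):
    """Insurgents hit per unit time (assumed form): gamma*G*(mu + (1-mu)*I)."""
    return gamma * G * (mu + (1.0 - mu) * I)


def collateral_casualties(G, I, mu, gamma):
    """Fire that misses the insurgents (assumed form): gamma*G*(1-mu)*(1-I)."""
    return gamma * G * (1.0 - mu) * (1.0 - I)


def total_fire(G, I, mu, gamma):
    """Hits plus collateral casualties; equals gamma*G for every mu and I."""
    return insurgent_attrition(G, I, mu, gamma) + collateral_casualties(G, I, mu, gamma)
```

With, say, G = 10, I = 0.3 and γ = 0.5, perfect intelligence (μ = 1) gives zero collateral casualties, while μ = 0 gives an attrition of γGI, i.e. proportional to the size of the insurgency.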

Contrary to the model of Feichtinger et al. (2012) it is assumed that μ(t) cannot be chosen directly by the government; rather, it depends on the effort ε(t) ≥ 0 of intelligence gathering (e.g., number of informants) but also on the level of the insurgency, i.e. μ(t) = μ(ε(t), I(t)). We assume that μ(0, I) = 0, that the partial derivative with respect to ε is positive, \(\mu _{\epsilon } > 0\), and that \(\mu _{\epsilon \epsilon } < 0\), which means that μ is concave w.r.t. ε. Additionally, a saturation effect in the sense that \(\lim _{\epsilon \rightarrow \infty }\mu (\epsilon,I) \leq 1\) should hold. With respect to I the level of intelligence should be hump-shaped. This accounts for the fact that it may be harder to gather information for low fractions of insurgents (as the cooperating population may not be aware of the location of terrorists) as well as for high levels of insurgency (as nobody would dare to give corresponding information to the government). For the mixed second-order partial derivative \(\mu _{\epsilon I}\) we assume that it is positive for small and negative for large values of I. For a possible specification see Eq. (14) below.

In our model the government, as a decision maker, has to decide on the effort ε(t) invested in intelligence as well as on recruitment β(t) and attrition γ(t) to minimize the damage caused by insurgents together with the costs of counter-measures. To keep the analysis simple we assume an additive structure. Therefore we consider the following discounted stream of instantaneous costs over an infinite time horizon

as objective value of our intertemporal optimization problem which has to be minimized.

The first term D(I) describes the (monetary) value of damages created by the insurgents, which is an important but also problematic issue. Insurgencies always lead to human casualties, and it is almost cynical to measure these losses in monetary terms. Nevertheless, efforts have been made to determine the economic value of a human life (see, e.g., Viscusi and Aldy 2003). Additionally there are also financial damages, such as destroyed infrastructure, which can be evaluated straightforwardly. It is assumed that D(0) = 0 and that damages are an increasing and convex function of the size of the insurgency.

The second term \(K_{0}(G)\) captures the costs of keeping an army of size G. The remaining terms \(K_{i}\), i = 1, 2, 3, denote the costs of the control variables ε, γ, β, respectively. The cost functions \(K_{j}\), j = 0, …, 3, are assumed to be increasing and convex. The positive time preference rate (discount rate) of the government is denoted by ρ.
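Written out from this term-by-term description, the discounted cost stream has the additive form sketched below. The symbol J for the objective value is introduced here for illustration only, and the indices of the control-cost terms follow the order ε, γ, β given in the text:

$$\displaystyle{ J =\int _{0}^{\infty }e^{-\rho t}\left [D(I) + K_{0}(G) + K_{1}(\epsilon ) + K_{2}(\gamma ) + K_{3}(\beta )\right ]\,dt. }$$

Minimizing J is equivalent to maximizing −J, which is how the cost minimization can be restated as a maximization problem.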

Summarizing the control problem and modelling it as a maximization problem leads to

subject to

with given initial conditions G(0) and I(0) under the constraints

Note that our model does not address any operational requirements; it stipulates that the decision variables are optimized via a cost function. In a more realistic setting a government might require that the number of attacks by insurgents or the fraction of insurgents within the population never exceed a certain threshold. It might also require that the size of the governmental troops never fall below a certain threshold, as this might be seen as a sign of weakness by third parties trying to exploit the conflict. By reinforcing the troops, one can keep the size of the governmental troops above a threshold. Additionally, more intense attacks by the government’s forces mean a stronger decline of the insurgents, leading to fewer attacks. In order to keep the fraction of insurgents below a certain threshold, one can attack the insurgents more intensely and put more effort into intelligence to make counter-insurgency operations more effective. Moreover, higher recruitment by the government leads to higher capabilities for counter-insurgency actions. A future task is to investigate how the introduction of such political objectives affects the optimal application of the available control instruments. One could compare the costs of strictly enforcing such objectives with those of a policy where the decision maker does not care about the size of the two groups in the short run as long as the long-run outcome is favorable. The inclusion of such state constraints will most likely lead to the occurrence of additional steady states, history-dependence, and areas in the state space where no solution is feasible.

3 Simplified Model

To show that periodic solutions may be optimal we consider a simplified version of the above model. As the main goal of our analysis is to study the effect of intelligence, the effort ε in increasing this level of intelligence is the only control variable. Recruitment β as well as the strength of fighting insurgents γ are assumed to be constants and do not enter the objective functional. Moreover δ = 0 is chosen and the damage and cost functions are linear; i.e. we assume \(D(I) = fI,K_{0}(G) = gG,K_{1}(\epsilon ) =\epsilon\) with positive parameters f, g.

Therefore this simplified version can be written as

subject to the system dynamics

where initial values G(0) and I(0) are given.

For simplicity the dependence of the level of intelligence on the effort and on the size of the insurgency is modelled as

a function which shows all features required in the general model setup, such as the saturation effect w.r.t. ε and unimodality w.r.t. I. With this specification the intelligence measures act most efficiently when the level of insurgency is about 50%. Note that by introducing a further parameter the hump of I(1 − I) at I = 0.5 could be shifted to any value within the interval [0, 1].
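A minimal sketch of such a specification is given below. It assumes the form μ(ε, I) = A·I(1 − I)·(1 − 1/(1 + ε)), which is inferred from the hump I(1 − I) mentioned here and from the bound A ≤ 4 discussed in Footnote 4; the function name and the default A = 3.0 are hypothetical choices for illustration.

```python
def intelligence(eps, I, A=3.0):
    """Assumed specification: mu = A * I * (1 - I) * (1 - 1/(1 + eps)).

    eps >= 0 is the intelligence effort, 0 <= I <= 1 the insurgent fraction,
    and 0 < A <= 4 guarantees mu <= 1 because I * (1 - I) <= 1/4.
    """
    if eps < 0.0 or not 0.0 <= I <= 1.0 or not 0.0 < A <= 4.0:
        raise ValueError("need eps >= 0, 0 <= I <= 1 and 0 < A <= 4")
    return A * I * (1.0 - I) * (1.0 - 1.0 / (1.0 + eps))
```

For a fixed effort the value is largest at I = 0.5 and vanishes at I = 0 and I = 1; for a fixed I it rises with ε at a decreasing rate and saturates at A·I(1 − I).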

Additionally the constraints

have to hold.Footnote 4

3.1 Analysis

We analyse the above optimal control problem by applying Pontryagin’s maximum principle and derive the canonical system of differential equations in the usual way. Note that in our analysis we could not verify any sufficiency conditions and therefore the canonical system only leads to extremals which are only candidates for optimal solutions. First the current value Hamiltonian is built and given by

where \(\lambda _{1}\) and \(\lambda _{2}\) are the time dependent adjoint variables and \(\lambda _{0}\) a non-negative constant.Footnote 5

As the optimal control ε has to maximize the Hamiltonian, the first order condition leads to

Remarks

1. It is difficult to exclude the abnormal case \(\lambda _{0} = 0\) in a formal way. Nevertheless, it can easily be seen from the Hamiltonian (16) that in this case the optimal control ε would be either 0 or unbounded if 0 < I < 1 (depending on the sign of the adjoint variable \(\lambda _{2}\)), and indeterminate otherwise. As these cases are unrealistic, we restrict our further analysis to the normal case \(\lambda _{0} = 1.\)

2. To determine the sign of the adjoint variable \(\lambda _{2}\), notice that for positive values of \(\lambda _{2}\) the Hamiltonian is strictly monotonically decreasing in ε, so that the constraint ε ≥ 0 would become active, leading to the boundary solution ε = 0 as the optimal effort.

   The adjoint variable \(\lambda _{2}\) can be interpreted as the shadow price of the state variable I(t), and by economic reasoning we assume that it is negative in the cases considered in the following.

3. As \(\lim _{\epsilon \rightarrow \infty }H = -\infty\), there exists an interior maximizer ε of the Hamiltonian if

   $$\displaystyle{ \left.\frac{\partial H} {\partial \epsilon } \right \vert _{\epsilon =0}> 0. }$$ (18)

   This condition holds for sufficiently small values of \(\lambda _{2}\), i.e. iff

   $$\displaystyle{ \lambda _{2} <\frac{-1} {\gamma GAI(1 - I)^{2}[1 +\theta ^{{\prime}}(C)]}. }$$ (19)

4. Under the additional assumption that the effect of collateral damages on the increase of the insurgency is marginally increasing, i.e. that \(\theta (C)\) is convex, the Hamiltonian is concave w.r.t. ε and the first order condition (17) leads to a unique interior solution iff (19) holds. A sigmoid (convex/concave) relationship may be more plausible to describe the effect of collateral damages, but then the Hamiltonian is no longer concave, which causes problems when solving for the optimal control variable.
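The step from (18) to (19) can be traced in a short derivation. The sketch assumes the normal case \(\lambda _{0} = 1\), the state equation \(\dot{I} = -\gamma G[\mu +(1-\mu )I] +\theta (C)\) with \(C =\gamma G(1-\mu )(1 - I)\), and the specification \(\mu = AI(1 - I)\left (1 - \frac{1} {1+\epsilon }\right )\); these forms are reconstructed from (19) and Footnote 4 and are assumptions in that sense. Since ε enters the Hamiltonian through the cost term −ε and through μ only,

$$\displaystyle{ \frac{\partial H} {\partial \epsilon } = -1 -\lambda _{2}\,\gamma G(1 - I)\left [1 +\theta ^{{\prime}}(C)\right ]\mu _{\epsilon },\qquad \mu _{\epsilon } = \frac{AI(1 - I)} {(1+\epsilon )^{2}}. }$$

At ε = 0 we have \(\mu _{\epsilon } = AI(1 - I)\), so (18) reads \(-1 -\lambda _{2}\,\gamma GAI(1 - I)^{2}[1 +\theta ^{{\prime}}(C)]> 0\). Dividing by the positive factor \(\gamma GAI(1 - I)^{2}[1 +\theta ^{{\prime}}(C)]\) gives (19).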

The adjoint variables satisfy the adjoint differential equations

and the transversality conditions

In analysing dynamical systems the existence of steady states and their stability are of major concern. In the following proposition a necessary condition for the existence of interior steady states is derived.

Proposition

Under the assumption that keeping an army of size G leads to costs gG (i.e. g > 0) and that the shadow price \(\lambda _{1}\) of the army is positive, an interior steady state of the optimization model can exist only if the marginal effect of casualties on the increase of insurgents is smaller than the average effect, i.e. only if

at the steady state level. Footnote 6

Proof

The steady states of the canonical system are solutions of \(\dot{G} =\dot{ I} =\dot{\lambda } _{1} =\dot{\lambda } _{2} = 0,\) where the optimal control is implicitly given by (17).

At an interior steady state \(\dot{I} = 0\) implies

As

\(\dot{\lambda }_{1} = 0\) leads to

Note that the LHS of (26) is positive, therefore

has to be positive. (27) together with (24) leads to
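The chain of steps in this proof can be sketched as follows, under the assumptions δ = 0, \(\dot{G} = -\alpha I+\beta\), \(\dot{I} = -\gamma G[\mu +(1-\mu )I] +\theta (C)\), \(C =\gamma G(1-\mu )(1 - I)\) and \(\lambda _{2} <0\). The equation forms are reconstructed from the surrounding text and are not quoted from the numbered equations. First, \(\dot{I} = 0\) and the definition of C give

$$\displaystyle{ \gamma \left [\mu +(1-\mu )I\right ] = \frac{\theta (C)} {G},\qquad \frac{\partial C} {\partial G} =\gamma (1-\mu )(1 - I) = \frac{C} {G}. }$$

Second, \(\dot{\lambda }_{1} =\rho \lambda _{1} -\partial H/\partial G = 0\) yields

$$\displaystyle{ \rho \lambda _{1} + g = -\lambda _{2}\left (\gamma \left [\mu +(1-\mu )I\right ] -\theta ^{{\prime}}(C)\frac{C} {G}\right ) = -\frac{\lambda _{2}} {G}\left (\theta (C) -\theta ^{{\prime}}(C)\,C\right ). }$$

The left-hand side is positive for g > 0 and \(\lambda _{1}> 0\); with \(\lambda _{2} <0\) and G > 0 this requires \(\theta (C) -\theta ^{{\prime}}(C)\,C> 0\), i.e. \(\theta ^{{\prime}}(C) <\theta (C)/C\): the marginal effect is smaller than the average effect.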

Remarks

1. Assuming a power function \(\theta (C) = C^{\alpha }\), condition (23) holds iff α < 1, i.e. if the effect of collateral casualties on the inflow of insurgents is marginally decreasing.

   For a linear function \(\theta (C) =\theta _{0} +\theta _{1}C\), condition (23) holds iff the intercept \(\theta _{0}\) is strictly positive.

2. In Feichtinger et al. (2012) \(\theta (C) =\theta C^{2}\) is assumed. In their model interior steady states exist only if the utility of keeping an army of size G is larger than the corresponding costs, i.e. if G enters the objective functional positively. Obviously an army of size G may also yield some benefits, as it can be seen as a status symbol or provides some level of deterrence. That these utilities outweigh the keeping costs and result in a net benefit, however, seems rather unrealistic.

3. Note that condition (23) is also required for the existence of interior steady states in the more general model (5)–(9).
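The statements about the power and the linear function in the first remark can be checked numerically. The sketch below tests the verbal condition of the proposition, marginal effect θ′(C) smaller than average effect θ(C)/C, at a given C > 0; all function names and the sample values are hypothetical.

```python
def marginal_below_average(theta, dtheta, C):
    """True if theta'(C) < theta(C) / C for a collateral damage level C > 0."""
    if C <= 0.0:
        raise ValueError("C must be positive")
    return dtheta(C) < theta(C) / C


def power_theta(a):
    """theta(C) = C**a together with its derivative a * C**(a - 1)."""
    return (lambda C: C ** a), (lambda C: a * C ** (a - 1.0))


def linear_theta(theta0, theta1):
    """theta(C) = theta0 + theta1 * C together with its derivative theta1."""
    return (lambda C: theta0 + theta1 * C), (lambda C: theta1)
```

For example, `marginal_below_average(*power_theta(0.5), 2.0)` holds while the same call with exponent 2 does not, and a linear function passes the check only with a strictly positive intercept.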

In the following we specify the function \(\theta (C)\) as linear, i.e. as \(\theta (C) =\theta _{0} + (\theta _{1} - 1)C\), with \(\theta _{0}> 0,\theta _{1}> 1.\) The first order optimality condition (17) then reduces to

which leads to the optimal level of effort

As the partial derivative of casualties w.r.t. I is given by

we have to analyse the canonical system

Solutions of the differential equation system (32)–(35) with the level of intelligence μ given by (36) together with initial values G(0) and I(0) where also the transversality conditions hold are extremals and strictly speaking only candidates for an optimal solution as we could not verify any sufficiency conditions so far.

In the following we show that periodic paths may exist as solutions of the dynamical system (32)–(36) by applying the Hopf bifurcation theorem (see, e.g., Guckenheimer and Holmes 1983, for details). This theorem considers the stability properties of a family of smooth, nonlinear dynamic systems under variation of a bifurcation parameter. More precisely, it states that periodic solutions exist if (i) two purely imaginary eigenvalues of the Jacobian matrix exist for a critical value of the bifurcation parameter, such that (ii) the imaginary axis is crossed at nonzero velocity. Note that stable or unstable periodic solutions may occur, and further computations, either analytical or numerical, are necessary to determine their stability.
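Conditions (i) and (ii) can be illustrated on a textbook example. The sketch below does not use the canonical system (32)–(35); it takes the planar Hopf normal form x′ = kx − y − x(x² + y²), y′ = x + ky − y(x² + y²) with a hypothetical bifurcation parameter k, whose Jacobian at the origin is [[k, −1], [1, k]], and computes its eigenvalues in closed form.

```python
import cmath


def eigenvalues_2x2(a, b, c, d):
    """Eigenvalues of [[a, b], [c, d]] from trace and determinant."""
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4.0 * det)
    return (trace + root) / 2.0, (trace - root) / 2.0


def normal_form_eigenvalues(k):
    """Eigenvalues of the Jacobian at the origin of the Hopf normal form."""
    return eigenvalues_2x2(k, -1.0, 1.0, k)


def crossing_speed(k, h=1e-6):
    """Central-difference estimate of d(Re lambda)/dk at parameter value k."""
    upper = normal_form_eigenvalues(k + h)[0].real
    lower = normal_form_eigenvalues(k - h)[0].real
    return (upper - lower) / (2.0 * h)
```

At k = 0 the eigenvalues are ±i, which is condition (i), and the real part changes with k at rate 1, which is condition (ii). For the model in the text the same two checks have to be carried out on the 4 × 4 Jacobian of the canonical system with ρ as the parameter.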

Unfortunately, for the canonical system (32)–(35) it is not even possible to find steady state solutions explicitly and therefore we have to base our results on simulations given in the following numerical example.

3.2 Numerical Example

We choose the discount rate ρ as bifurcation parameter. Values for the other parameters are specified as follows:Footnote 7

The Jacobian possesses a pair of purely imaginary eigenvalues for the critical discount rate \(\rho _{\mathrm{crit}} = 0.14305948\) at the steady state

The optimal effort to be invested into intelligence at the steady state level amounts to \(\epsilon ^{\infty } = 4.8115\) leading to the level of intelligence \(\mu ^{\infty } = 0.4986.\)

According to the computer code BIFDD (see Hassard et al. 1981), stable limit cycles occur for discount rates less than \(\rho _{\mathrm{crit}}\).

To present a cycle and discuss its properties we used the boundary value problem solver COLSYS to find a periodic solution of the canonical system for ρ = 0.127 by applying a collocation method.

For ρ = 0.127 the steady state is shifted to \(G^{\infty } = 13.655,I^{\infty } = 0.7532,\lambda _{1} = 46.6175,\lambda _{2} = -31.8485\), with an optimal effort of \(\epsilon ^{\infty } = 4.1850\) leading to a level of intelligence \(\mu ^{\infty } = 0.4861.\) This steady state is marked as a cross in Figs. 2–5. Additionally, solutions spiraling towards the persistent oscillation are depicted in these figures.

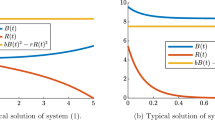

In Fig. 1 the time path of the control ε as well as the resulting level of intelligence μ together with the two state variables G and I along one period of the cycle can be seen. The length of the period is 19.7762 time units.

As can be seen from Figs. 2, 3, 4, and 5 we can distinguish four different regimes along one cyclical solution. In the first phase which could be called ‘increasing terror phase’ both the strength of governmental troops G(t) as well as the size of the insurgency I(t) increase.

In the following phase, called ‘dominant terror phase’ the insurgency increases, but the government reduces its counter-terror measures as this will also reduce the negative impact due to collateral damages.

As the negative effect of collateral damages is reduced also the number of insurgents decreases in the ‘recovery phase’ where G(t) and I(t) both shrink.

In the last phase the government can be seen as being dominant as G(t) starts to increase again due to the low size of the insurgency, which is still decreasing. Due to an increasing effect of collateral damages the insurgency will start to become larger at the end and the cycle is closed.

Obviously the size of governmental troops G(t) is the leading variable whereas I(t) lags behind. This is caused by the effect of collateral damages and the induced inflow of insurgents.

Having a closer look at the effect of the effort on gathering information on the level of intelligence, it turns out that along 57.5% of the cycle an increasing effort leads to a higher intelligence level, and along 30.0% both the effort and intelligence are decreasing. Nevertheless, there exist rather short phases along the cycle with opposing trends. The level of intelligence is increasing despite a falling effort along 10.5% of the periodic solution due to the decreasing size of the insurgency, since then the effort becomes more effective. On the contrary, along 2% even an increasing effort in gathering information does not lead to a higher level of intelligence since, due to an increasing insurgency, it becomes harder to obtain reliable information.
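The four shares can be converted into durations using the period length of 19.7762 time units reported for the cycle. The short sketch below does this arithmetic; the dictionary keys are shorthand labels chosen here, not terms from the text.

```python
PERIOD = 19.7762  # length of one cycle in time units, as reported in the text

# Share of the cycle (in percent) for each combination of trends.
SHARES = {
    "effort up, intelligence up": 57.5,
    "effort down, intelligence down": 30.0,
    "effort down, intelligence up": 10.5,
    "effort up, intelligence not up": 2.0,
}


def phase_durations(period, shares):
    """Convert percentage shares of the cycle into durations in time units."""
    return {label: period * share / 100.0 for label, share in shares.items()}


DURATIONS = phase_durations(PERIOD, SHARES)
```

The shares add up to 100%, so the durations add up to the full period; the two phases with opposing trends together last about 2.5 time units.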

4 Conclusions and Extensions

Surprisingly, Lanchester’s pivotal attrition paradigm has never enriched the optimization scenario.Footnote 8 In the present paper we use optimal control theory to study how a government should apply intelligence efficiently to fight an insurgency. Due to the formal structure of the model, i.e. the multiplicative interaction of the two states with the control variable, complex solutions might be expected. As a first result in that direction a Hopf bifurcation analysis is carried out establishing the possibility of persistent stable oscillations.Footnote 9

Since our analysis can be seen only as a first step, several substantial extensions are possible. The first is to include the ‘fist’, i.e. the attrition rate of the insurgents caused by the regime’s forces, in addition to the ‘eye’, i.e. the intelligence level of the government. In particular, we intend to study the optimal mix of these two policy variables. A main question which arises in that context is whether these instruments might behave complementarily or substitutively.Footnote 10

Among the further extensions we mention a differential game approach with the government and the insurgents as the two players. Moreover, it would be interesting to study the case where the regime has to fight against two (or more) opponents (insurgents). Additionally following the ideas by Udwadia et al. (2006) the combination of both direct military intervention to reduce the terrorist population and non-violent persuasive intervention to influence susceptibles to become pacifists could be analyzed under aspects of optimization.

Finally, we should stress the fact that the model we discussed is not validated by empirical data. Even if it were, the proposed model seems much too simple to deliver policy recommendations for concrete insurgencies.

Notes

- 1.

Note that the time argument t will be dropped to simplify notation whenever appropriate.

- 2.

This term models the double-edged sword effect as described in Sect. 1.

- 3.

The attrition rate γ is assumed to be non-negative, but there are no restrictions on β because it can be seen as hiring/firing rate and negative values correspond to dismissing soldiers.

- 4.

A ≤ 4 assures that μ ≤ 1 as the maximum value of I(1 − I) is 1∕4 and \(\left (1 - \frac{1} {1+\epsilon }\right )\) is bounded by 1.

- 5.

Actually one only has to distinguish between the normal case \(\lambda _{0} = 1\) which follows for strictly positive values of \(\lambda _{0}\) by rescaling, or the abnormal case \(\lambda _{0} = 0.\)

- 6.

In economic theory this means that the elasticity of \(\theta\) is smaller than 1.

- 7.

Note that these values do not represent any real situation and are purely fictitious to illustrate the occurrence of periodic solutions in principle.

- 8.

There are, however, two remarkable exceptions. Kaplan et al. (2010) formulate an optimal force allocation problem for the government based on Lanchester’s dynamics and develop a knapsack approximation and also model and analyse a sequential force allocation game. Feichtinger et al. (2012) study multiple long-run steady states and complex behaviour and additionally propose a differential game between terrorists and government.

- 9.

Persistent oscillations, more precisely stable limit cycles, occur in quite a few optimal control models with more than one state; see, e.g., Grass et al. (2008), particularly Sect. 6.2.

- 10.

E.g. the interaction between marketing price and advertising is a classical example of a synergism of two instruments influencing the stock of customers in the same direction. A further example is illicit drug control as analysed by Behrens et al. (2000), where prevention and treatment are applied but not at the same time.

References

Behrens, D. A., Caulkins, J. P., Tragler, G., & Feichtinger, G. (2000). Optimal control of drug epidemics: Prevent and treat - but not at the same time? Management Science, 46(3), 333–347.

Caulkins, J. P., Feichtinger, G., Grass, D., & Tragler, G. (2009). Optimal control of terrorism and global reputation. Operations Research Letters, 37(6), 387–391.

Caulkins, J. P., Grass, D., Feichtinger, G., & Tragler, G. (2008). Optimizing counter-terror operations: Should one fight fire with “fire” or “water”? Computers & Operations Research, 35(6), 1874–1885.

Deitchman, S. J. (1962). A Lanchester model of guerrilla warfare. Operations Research, 10(6), 818–827.

Feichtinger, G., Novak, A. J., & Wrzaczek, S. (2012). Optimizing counter-terroristic operations in an asymmetric Lanchester model. In Proceedings of the IFAC Conference Rimini, Italy. Control Applications of Optimization (Vol. 15(1), pp. 27–32). doi:10.3182/20120913-4-IT-4027.00056.

Grass, D., Caulkins, J. P., Feichtinger, G., Tragler, G., & Behrens, D. A. (2008). Optimal control of nonlinear processes: With applications in drugs, corruption and terror. Heidelberg: Springer.

Guckenheimer, J., & Holmes, P. (1983). Nonlinear oscillations, dynamical systems, and bifurcation of vector fields. New York: Springer.

Hassard, B. D., Kazarinoff, N. D., & Wan, Y. H. (1981). Theory and applications of Hopf bifurcation. Mathematical Lecture Note Series. Cambridge: Cambridge University Press.

Jacobson, D., & Kaplan, E. H. (2007). Suicide bombings and targeted killings in (counter-) terror games. Journal of Conflict Resolution, 51(5), 772–792.

Kaplan, E. H., Kress, M., & Szechtman, R. (2010). Confronting entrenched insurgents. Operations Research, 58(2), 329–341.

Kaplan, E. H., Mintz, A., Mishal, S., & Samban, C. (2005). What happened to suicide bombings in Israel? Insights from a terror stock model. Studies in Conflict & Terrorism, 28, 225–235.

Kress, M., & MacKay, N. J. (2014). Bits or shots in combat? The generalized Deitchman model of guerrilla warfare. Operations Research Letters, 42, 102–108.

Kress, M., & Szechtmann, R. (2009). Why defeating insurgencies is hard: the effect of intelligence in counter insurgency operations - a best case scenario. Operations Research, 57(3), 578–585.

Lanchester, F. W. (1916). Aircraft in warfare: The dawn of the fourth arm. London: Constable and Company.

Schaffer, M. B. (1968). Lanchester models of guerrilla engagements. Operations Research, 16(3), 457–488.

Udwadia, F., Leitmann, G., & Lambertini, L. (2006). A dynamic model of terrorism. Discrete Dynamics in Nature and Society, 2006, 132. doi:10.1155/DDNS/2006/85653.

Viscusi, W. K., & Aldy, J. E. (2003). The value of a statistical life: A critical review of market estimates throughout the world. Journal of Risk and Uncertainty, 27(1), 5–76.

Washburn, A., & Kress, M. (2009). Combat modeling. New York: Springer.

Acknowledgements

The authors thank Jon Caulkins, Dieter Grass, Moshe Kress, Andrea Seidl, Stefan Wrzaczek for helpful discussions and two anonymous referees for their comments.

This research was supported by the Austrian Science Fund (FWF) under Grant P25979-N25.

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Novák, A.J., Feichtinger, G., Leitmann, G. (2016). On the Optimal Trade-Off Between Fire Power and Intelligence in a Lanchester Model. In: Dawid, H., Doerner, K., Feichtinger, G., Kort, P., Seidl, A. (eds) Dynamic Perspectives on Managerial Decision Making. Dynamic Modeling and Econometrics in Economics and Finance, vol 22. Springer, Cham. https://doi.org/10.1007/978-3-319-39120-5_13

DOI: https://doi.org/10.1007/978-3-319-39120-5_13

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-39118-2

Online ISBN: 978-3-319-39120-5

eBook Packages: Business and Management (R0)