Abstract

Reconstructing ancestral gene orders in a given phylogeny is a classical problem in comparative genomics. Most existing methods compare conserved features in extant genomes in the phylogeny to define potential ancestral gene adjacencies, and either try to reconstruct all ancestral genomes under a global evolutionary parsimony criterion, or, focusing on a single ancestral genome, use a scaffolding approach to select a subset of ancestral gene adjacencies. In this paper, we describe an exact algorithm for the small parsimony problem that combines both approaches. We consider that gene adjacencies at internal nodes of the species phylogeny are weighted, and we introduce an objective function defined as a convex combination of these weights and the evolutionary cost under the Single-Cut-or-Join (SCJ) model. We propose a Fixed-Parameter Tractable algorithm based on the Sankoff-Rousseau dynamic programming algorithm, that also allows to sample co-optimal solutions. An implementation is available at http://github.com/nluhmann/PhySca.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Small Parsimony Problem

- Ancestral Gene Order

- Extant Genomes

- Contiguous Ancestral Regions (CARs)

- Extremity Markers

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

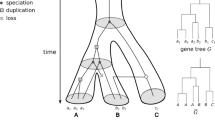

Reconstructing ancestral gene orders is a long-standing computational biology problem with important applications, as shown in several recent large-scale projects [8, 17, 18]. Informally, the problem can be defined as follows: Given a phylogenetic tree representing the speciation history leading to a set of extant genomes, we want to reconstruct the structure of the ancestral genomes corresponding to the internal nodes of the tree.

Existing ancestral genome reconstruction methods concentrate on two main strategies. Local approaches consider the reconstruction of one specific ancestor at a time independently from the other ancestors of the tree. Usually, they do not consider an evolutionary model and proceed in two stages: (1) comparing gene orders of ingroup and outgroup species to define potential ancestral gene adjacencies, and (2) selecting a conflict-free subset of ancestral gene adjacencies to obtain a set of Contiguous Ancestral Regions (CARs) [3, 7, 14, 15]. Global approaches on the other hand simultaneously reconstruct ancestral gene orders at all internal nodes of the considered phylogeny, generally based on a parsimony criterion within an evolutionary model. This small parsimony problem has been studied with several underlying genome rearrangement models, such as the breakpoint distance or the Double-Cut-and-Join (DCJ) distance [1, 11, 23]. While rearrangement scenarios based on complex rearrangement models can give insights into underlying evolutionary mechanisms, from a computational point of view, the small parsimony problem is NP-hard for most rearrangement distances [21]. One exception is the Single-Cut-or-Join (SCJ) distance, for which linear/circular ancestral gene orders can be found in polynomial time [9], however constraints required to ensure algorithmic tractability yield fragmented ancestral gene orders.

The work we present is an attempt to reconcile both approaches. We introduce a variant of the small parsimony problem based on an optimality criterion that accounts for both an evolutionary distance and the difference between the initial set of potential ancestral adjacencies and the final consistent subset of adjacencies. More precisely we consider that each potential ancestral gene adjacency can be provided with a (prior) non-negative weight at every internal node. These adjacency weights can e. g. be obtained as probabilities computed by sampling scenarios for each potential adjacency independently [6] or can be based on ancient DNA (aDNA) sequencing data providing direct prior information assigned to certain ancestral nodes. It follows that the phylogenetic framework we present can then also assist in scaffolding fragmented assemblies of aDNA sequencing data [12, 19]. We describe an exact exponential time algorithm for reconstructing consistent ancestral genomes under this optimality criterion, and show that the small parsimony problem variant we introduce is Fixed-Parameter Tractable (FPT), with a parameter linked to the amount of conflict in the data. Moreover, this also allows us to provide a FPT sampling algorithm for co-optimal solutions. We evaluate our method on two data sets: mammalian genomes spanning roughly one million years of evolution, and bacterial genomes (pathogen Yersinia) spanning 20, 000 years of evolution. See [13] for an extended preprint of this paper.

2 Background

Genomes and adjacencies. Genomes consist of chromosomes and plasmids. Each such component can be represented as a linear or circular sequence of oriented markers over a marker alphabet. Markers correspond to homologous sequences between genomes, e. g. genes or synteny blocks. We assume that each marker appears exactly once in each genome, so our model does not consider duplications or deletions. To account for its orientation, each marker x is encoded as a pair of marker extremities \((x_h,x_t)\) or \((x_t,x_h)\). An adjacency is an unordered pair of marker extremities, e. g. \(\{x_t,y_h\}\). The order of markers in a genome can be encoded by a set of adjacencies. Two distinct adjacencies are said to be conflicting if they share a common marker extremity. If a set of adjacencies contains conflicting adjacencies, it is not consistent with a mixed linear/circular genome model.

The small parsimony problem and rearrangement distances. In a global phylogenetic approach, we are given a phylogenetic tree with extant genomes at its leaves and internal nodes representing ancestral genomes. We denote by \(\mathcal {A}\) the set of all adjacencies present in at least one extant genome and assume that every ancestral adjacency belongs to \(\mathcal {A}\). Then the goal is to find a labeling of the internal nodes by consistent subsets of \(\mathcal {A}\) minimizing a chosen genomic distance over the tree. This is known as the parsimonious labeling problem. It is NP-hard for most rearrangement distances. The only known exception is the set-theoretic Single-Cut-or-Join (SCJ) distance [9]. It defines a rearrangement distance by two operations: the cut and join of adjacencies. Given two genomes defined by consistent sets of adjacencies A and B, the SCJ distance between these genomes is \(d_{SCJ}(A,B)\;=\; \mid A-B\mid +\mid B-A\mid \).

The small parsimony problem under the SCJ model can be solved by computing a parsimonious gain/loss history for each adjacency separately with the dynamic programming Fitch algorithm [10]. Consistent labelings can be ensured with the additional constraint that in case of ambiguity at the root of the tree, the absence of the adjacency is chosen [9]. As each adjacency is treated independently, this constraint might automatically exclude all adjacencies being part of a conflict to ensure consistency and thus results in an unnecessarily sparse reconstruction.

Generalization by weighting adjacencies. When considering an internal node v, we define node u as its parent node in T. We assume that a specific adjacency graph is associated to each ancestral node v, whose edges are annotated by a weight \(w_{v,a} \in [0,1]\) representing a confidence measure for the presence of adjacency a in species v. Then in a global reconstruction, cutting an adjacency of a higher weight has higher impact in terms of the optimization criterion, than cutting an adjacency of lower weight.

Formally, we define two additional variables for each adjacency \(a \in \mathcal {A}\) at each internal node \(v \in V\): The presence (or absence) of a at node v is represented by \(p_{v,a} \in \{0,1\}\), while \(c_{v,a} \in ~\{0,1\}\) indicates a change for the status of an adjacency along an edge (u, v), i.e., \(p_{u,a} \ne p_{v,a}\). We consider the problem of optimizing the following objective function, where \(\alpha \in [0, 1]\) is a convex combination factor.

Definition 1

(Weighted SCJ labeling problem). Let \(T=(V,E)\) be a tree with each leaf l labeled with a consistent set of adjacencies \(\mathcal {A}_l \subseteq \mathcal {A}\) and each adjacency \(a\in \mathcal {A}\) is assigned a given weight \(w_{v,a}\in [0,1]\) for each node \(v \in V\). A labeling \(\lambda \) of the internal nodes of T with \(\lambda (l) = \mathcal {A}_l\) for each leaf is an optimal weighted SCJ labeling if none of the internal nodes \(v \in V\) contains a conflict and it minimizes the criterion

Further, we can state the corresponding co-optimal sampling problem.

Definition 2

(Weighted SCJ sampling problem). Given the setting of the weighted SCJ labeling problem, sample uniformly from all labelings \(\lambda \) of the internal nodes of T that are solutions to the weighted SCJ optimal labeling problem.

Existing results. There exist a few positive results for the weighted SCJ labeling problem with specific values of \(\alpha \). If \(\alpha = 0\), the objective function corresponds to the small parsimony problem under the SCJ distance and hence a solution can be found in polynomial time [9]. A generalization towards multifurcating, edge-weighted trees including prior information on adjacencies at exactly one internal node of the tree is given in [12]. Recently, Miklós and Smith [16] proposed a Gibbs sampler for sampling optimal labelings under the SCJ model with equal branch lengths. This method addresses the issue of the high fragmentation of internal node labelings, but convergence is not proven, and so there is no bound on the computation time. If \(\alpha = 1\), i.e., we do not take evolution in terms of SCJ distance along the branches of the tree into account, we can solve the problem by applying independently a maximum-weight matching algorithm at each internal node [15]. So the extreme cases of the problem are tractable, and it remains open to see if the general problem is hard.

3 Methods

In order to find a solution to the weighted SCJ labeling problem, we first show that we can decompose the problem into smaller independent subproblems. Then, for each subproblem containing conflicting adjacencies, we show that, if it contains a moderate level of conflict, it can be solved using the Sankoff-Rousseau algorithm [20] with a complexity parameterized by the size of the subproblem. For a highly conflicting subproblem, we show that it can be solved by an Integer Linear Program (ILP).

Decomposition into independent subproblems. We first introduce a graph that encodes all adjacencies present in at least one internal node of the considered phylogeny (Definition 3). As introduced previously, we consider a tree \(T=(V,E)\) where each node is augmented by an adjacency graph.

Definition 3

(Global adjacency graph). The set of vertices \(V_{AG}\) of the global adjacency graph AG consists of all marker extremities present in at least one of the adjacency graphs. There is an edge between two vertices \(a, b\in V_{AG}\) that are not extremities of a same marker, if there is an internal node in the tree T whose adjacency graph contains the adjacency \(\{a,b\}\). The edge is labeled with the list of all internal nodes v that contain this adjacency.

Each connected component C of the global adjacency graph defines a subproblem composed of the species phylogeny, the set of marker extremities equal to the vertex set of C and the set of adjacencies equal to the edge set of C. According to the following lemma, whose proof is straightforward, it is sufficient to solve each such subproblem independently.

Lemma 1

The set of all optimal solutions of the weighted SCJ labeling problem is the set-theoretic Cartesian product of the sets of optimal solutions of the instances defined by the connected components of the global adjacency graph.

To solve the problem defined by a connected component C of the global adjacency graph containing conflicts, we rely on an adaptation of the Sankoff-Rousseau algorithm with exponential time complexity, parameterized by the size and nature of conflicts of C, and thus can solve subproblems with moderate amount of conflict.

Application to the weighted SCJ optimal labeling problem. In order to use the Sankoff-Rousseau algorithm to solve the problem defined by a connected component C of the global adjacency graph, we define a label of an internal node of the phylogeny as the assignment of at most one adjacency to each marker extremity. More precisely, let x be a marker extremity in C, v an internal node of T, and \(e_1,\dots ,e_{d_x}\) be all edges in the global adjacency graph that are incident to x and whose label contains v (i.e., represent adjacencies in the adjacency graph of node v). We define the set of possible labels of v as \(L_{x,v}=\{\emptyset ,e_1,\dots ,e_{d_x}\}\). The set of potential labels \(L_v\) of node v is then the Cartesian product of the label sets \(L_{x,v}\) for all \(x\in V(C)\) resulting in a set of discrete labels for v of size \(\prod _{x\in V(C)}(1+d_x)\). Note that not all of these joint labelings are valid as they can assign an adjacency \(a=(x,y)\) to x but not to y, or adjacency \(a=(x,y)\) to x and \(b=(x,z)\) to z thus creating a conflict (see [13] for an example).

For an edge (u, v) in the tree, we can then define a cost matrix that is indexed by pairs of labels of \(L_u\) and \(L_v\), respectively. The cost is infinite if one of the labels is not valid, and defined by the objective function otherwise. We can then apply the Sankoff-Rousseau approach to find an optimal labeling of all internal nodes of the tree for connected component C. Note that, if C is a connected component with no conflict, it is composed of two vertices and a single edge, and can be solved in space O(n) and time O(n).

Solving a general instance. Given a general instance, i.e., an instance not limited to a single connected component of the global adjacency graph, we can consider each connected component independently (Lemma 1). For a set of N markers and c connected components in the global adjacency graph defining a conflicting instance, we define D as the maximum degree of a vertex and M as the maximum number of vertices in all such components. Then, the complexity analysis in the appendix shows that the problem is Fixed-Parameter Tractable (FPT).

Theorem 1

The weighted SCJ labeling problem can be solved in worst-case time \(O(nN(1+D)^{2M})\) and space \(O(nN(1+D)^{M})\).

In practice, the exponential complexity of our algorithm depends on the structure of the conflicting connected components of the global adjacency graph. The dynamic programming algorithm will be effective on instances with either small conflicting connected components or small degrees within such components, and will break down with a single component with a large number of vertices of high degree. For such components, the time complexity is provably high and we propose an ILP (see [13]) to solve such components.

Sampling co-optimal labelings. The Sankoff-Rousseau DP algorithm can easily be modified to sample uniformly from the space of all optimal solutions to the weighted SCJ labeling problem in a forward-backward fashion. The principle is to proceed in two stages: first, for any pair (v, a) we compute the number of optimal solutions under this label for the subtree rooted at v. Then, when computing an optimal solution, if a DP equation has several optimal choices, one is randomly picked according to the distribution of optimal solutions induced by each choice (see [13] for more details). This classical dynamic programming approach leads to the following result.

Theorem 2

The weighted SCJ sampling problem can be solved in worst-case time \(O(nN(1+D)^{2M})\) and space \(O(nN(1+D)^{M})\).

For subproblems that are too large for being handled by the Sankoff-Rousseau algorithm, the SCJ small parsimony Gibbs sampler recently introduced [16] can easily be modified to incorporate prior weights, although there is currently no proven property regarding its convergence.

4 Results

We evaluated our reconstruction algorithm on two datasets: mammalian and Yersinia genomes. The mammalian dataset was used in the studies [7, 16]. Our second dataset contains eleven Yersinia genomes, an important human pathogen. This dataset contains contigs from the recently sequenced extinct agent of the Black Death pandemic [4] that occurred roughly 650 years ago. We refer to [13] for the species phylogenies of these two datasets and extended information on how adjacency weights have been obtained for both datasets.

4.1 Mammalian Dataset

Unique and universal markers were computed as synteny blocks with different resolution in terms of minumum marker length. Note that all rearrangement breakpoints are therefore located outside of marker coordinates. It results in five different datasets varying from 2, 185 markers for a resolution of 100 kb to 629 markers for a resolution of 500 kb.

We considered all adjacencies present in at least one extant genome as potentially ancestral. To weight an adjacency at all internal nodes of the tree, we relied on evolutionary scenarios for each single adjacency, in terms of gain/loss, independently of the other adjacencies (i. e. without considering consistency of ancestral marker orders). We obtain these weights using the software DeClone [6], and we refer to them as DeClone weights. We considered two values of the DeClone parameter kT, 0.1 and 1, the former ensuring that only adjacencies appearing in at least one optimal adjacency scenario have a significant DeClone weight, while the latter samples adjacencies outside of optimal scenarios. For the analysis of the ancestral marker orders obtained with our algorithm, we considered the data set at 500 kb resolution and sampled 500 ancestral marker orders for all ancestral species under different values of \(\alpha \).

The complexity of our algorithm is dependent on the size of the largest connected component of the global adjacency graph. In order to restrict the complexity, we kept only adjacencies whose weights are above a given threshold x. In most cases, all connected components are small enough to be handled by our exact algorithm in reasonable time except for very large components in the marker sets with higher resolution under a low threshold x. For the 500 kb dataset with \(x=0.2\) and \(kT=1\), the computation of one solution takes on average 200 s on a 2.6 GHz i5 with 8 GB of RAM. It can be reduced to 30 s when DeClone weights are based on \(kT=0.1\). This illustrates that our algorithm, despite an exponential worst-case time complexity, can process realistic datasets in practice. Next, we analyzed the 500 optimal SCJ labelings obtained for \(\alpha =0\), i. e. aiming only at minimizing the SCJ distance, and considered the fragmentation of the ancestral gene orders (number of CARs) and the total evolutionary distance. Note that, unlike the Fitch algorithm used in [9], our algorithm does not favor fragmented assemblies by design but rather considers all optimal labelings. Sampling of co-optimal solutions shows that the pure SCJ criterion leads to some significant variation in terms of number of CARs (Fig. 1). The optimal SCJ distance in the tree for \(\alpha =0\) is 1, 674, while the related DCJ distance in the sampled reconstructions varies between 873 and 904 (Fig. 2). In comparison, we obtained a DCJ distance of 829 with GASTS [22], a small parsimony solver directly aiming at minimizing the DCJ distance. This illustrates both a lack of robustness of the pure SCJ optimal labelings, and some significant difference between the SCJ and DCJ distances.

For \(\alpha > 0\), our method minimizes a combination of the SCJ distance with the DeClone weights of the adjacencies discarded to ensure valid ancestral gene orders. We distinguish between DeClone parameter \(kT=0.1\) and \(kT=1\). Figures 2 and 3 show the respective observed results in terms of evolutionary distance and fragmentation. For \(kT=0.1\), the optimal SCJ and DCJ distance over the whole tree hardly depends on \(\alpha \). Including the DeClone weights in the objective actually results in the same solution, independent of \(\alpha >0\). In fact, while applying a low weight threshold of \(x=0.2\), the set of potential adjacencies is already consistent at all internal nodes except for a few conflicts at the root that are solved unambiguously for all values of \(\alpha \). This indicates that building DeClone weights on the basis of mostly optimal adjacency scenarios (low kT) results in a weighting scheme that agrees with the evolution along the tree for this dataset. More importantly, Figs. 2 and 3 show that the combination of DeClone weights followed by our algorithm, leads to a robust set of ancestral gene orders.

In comparison, for \(kT=1\), we see an increase in SCJ and DCJ distance for higher \(\alpha \), while the number of CARs at internal nodes decreases, together with a loss of the robustness of the sampled optimal results when \(\alpha \) gets close to 1. It can be explained by the observation that the weight distribution of ancestral adjacencies obtained with DeClone and \(kT=1\) is more balanced than with \(kT=0.1\) as it considers suboptimal scenarios of adjacencies with a higher probability.

4.2 Yersinia Pestis Dataset

We started from fully assembled DNA sequences of seven Yersinia pestis and four Yersinia pseudotuberculosis genomes. In addition, we included aDNA single-end reads and \(2\,134\) contigs of length >500bp assembled from these reads for the Black Death agent, considered as ancestral to several extant strains [4]. We refer to this augmented ancestral node as the Black Death (BD) node. The marker sequences for all extant genomes were computed as described in [19], restricting the set of markers to be unique and universal. We obtained a total of 2, 207 markers in all extant genomes and 2, 232 different extant adjacencies. As for the mammalian dataset, we considered as potentially ancestral any adjacency that appears in at least one extant genome. However for this dataset, reducing the complexity by applying a weight threshold x was not necessary. For the BD node, adjacency weights can be based on the given aDNA reads for a given potential ancestral adjacency as follows. First, we used FPSAC [19] to compute DNA sequences filling the gaps between any two adjacent marker extremities. Then we computed the weights as a likelihood of this putative gap sequence given the aDNA reads, using the GAML probabilistic model described in [5].

Again we sampled 500 solutions for this dataset. We computed the weights at the BD node based on the aDNA data, while adjacencies at all other nodes were given weight 0. Hence we can investigate the influence of including the aDNA sequencing data in the reconstruction while for the rest of the tree, the weights do not impact the objective function. As shown in Fig. 4, for selected internal nodes of the phylogeny, the pure SCJ solutions at \(\alpha = 0\) result in the highest fragmentation, while the number of CARs decreases as we increase the importance of the adjacency weights in the objective of our method. For the BD node, when including the aDNA weights, the fragmentation is decreasing while the reconstructions for each \(\alpha >0\) are robust. At the other nodes, the applied sequencing weights also reduce the fragmentation except for node6 which is located in the pseudotuberculosis subtree and hence more distant to the BD node. This shows that the aDNA weights not only influence the reconstructed adjacencies at the BD node, but also other nodes of the tree.

5 Conclusion

Our main contributions are the introduction of the small parsimony problem under the SCJ model with adjacency weights, together with an exact parameterized algorithm for the optimization and sampling versions of the problem. The motivation for this problem is twofold: incorporating sequence signal from aDNA data when it is available, and recent works showing that the reconstruction of ancestral genomes through the independent analysis of adjacencies is an interesting approach [2, 6, 9, 16].

Regarding the latter motivation, we address a general issue of these approaches that either ancestral gene orders are not consistent or are quite fragmented if the methods are constrained to ensure consistency. The main idea we introduce is to take advantage of sampling approaches recently introduced in [6] to weight potential ancestral adjacencies and thus direct, through an appropriate objective function, the reconstruction of ancestral gene orders. Our results on the mammalian dataset suggest that this approach leads to a robust ancestral genome structure. However, we can observe a significant difference with a DCJ-based ancestral reconstruction, a phenomenon that deserves to be explored further. Our sampling algorithm improves on the Gibbs sampler introduced in [16] in terms of computational complexity and provides a useful tool to study ancestral genome reconstruction from a Bayesian perspective.

There are several research avenues opened by our work. From a theoretical point of view, we know the problem we introduced is tractable for \(\alpha =0\) and \(\alpha =1\), but it remains to see whether it is hard otherwise. Further, given that the considered objective is a combination of two objectives to be optimized simultaneously, Pareto optimization is an interesting aspect that should be considered. From a more applied point of view, one would like to incorporate duplicated and deleted markers into our small parsimony problem. There exist efficient algorithms for the case of a single adjacency [2, 6] that can provide adjacency weights, and natural extensions of the SCJ model to incorporate duplicated genes.

References

Alekseyev, M., Pevzner, P.A.: Breakpoint graphs and ancestral genome reconstructions. Genome Res. 19, 943–957 (2009)

Bérard, S., Gallien, C., et al.: Evolution of gene neighborhoods within reconciled phylogenies. Bioinformatics 28, 382–388 (2012)

Bertrand, D., Gagnon, Y., Blanchette, M., El-Mabrouk, N.: Reconstruction of ancestral genome subject to whole genome duplication, speciation, rearrangement and loss. In: Moulton, V., Singh, M. (eds.) WABI 2010. LNCS, vol. 6293, pp. 78–89. Springer, Heidelberg (2010)

Bos, K.I., Schuenemann, V., et al.: A draft genome of yersinia pestis from victims of the black death. Nature 478, 506–510 (2011)

Boža, V., Brejová, B., Vinař, T.: GAML: genome assembly by maximum likelihood. Algorithms Mol. Biol. 10, 18 (2015)

Chauve, C., Ponty, Y., Zanetti, J.: Evolution of genes neighborhood within reconciled phylogenies: an ensemble approach. BMC Bioinform. 16(Suppl. 19), S6 (2015)

Chauve, C., Tannier, E.: A methodological framework for the reconstruction of contiguous regions of ancestral genomes and its application to mammalian genomes. PLoS Comput. Biol. 4, e1000234 (2008)

Denoeud, F., Carretero-Paulet, L., et al.: The coffee genome provides insight into the convergent evolution of caffeine biosynthesis. Science 345, 125527 (2013)

Feijǎo, P., Meidanis, J.: SCJ: a breakpoint-like distance that simplifies several rearrangement problems. IEEE/ACM TCBB 8, 1318–1329 (2011)

Fitch, W.: Toward defining the course of evolution: minimum change for a specific tree topology. Syst. Biol. 20, 406–416 (1971)

Kováč, J., Brejová, B., Vinař, T.: A practical algorithm for ancestral rearrangement reconstruction. In: Przytycka, T.M., Sagot, M.-F. (eds.) WABI 2011. LNCS, vol. 6833, pp. 163–174. springer, Heidelberg (2011)

Luhmann, N., Chauve, C., Stoye, J., Wittler, R.: Scaffolding of ancient contigs and ancestral reconstruction in a phylogenetic framework. In: Campos, S. (ed.) BSB 2014. LNCS, vol. 8826, pp. 135–143. Springer, Heidelberg (2014)

Luhmann, N., Thevenin, A., et al.: The SCJ small parsimony problem for weighted gene adjacencies (extended version) (2016). Preprint http://arxiv.org/abs/1603.08819

Ma, J., Zhang, L., et al.: Reconstructing contiguous regions of an ancestral genome. Genome Res. 16, 1557–1565 (2006)

Maňuch, J., Patterson, M., et al.: Linearization of ancestral multichromosomal genomes. BMC Bioinform. 13(Suppl. 19), S11 (2012)

Miklós, I., Smith, H.: Sampling and counting genome rearrangement scenarios. BMC Bioinform. 16(Suppl 14), S6 (2015)

Ming, R., Van Buren, R., et al.: The pineapple genome and the evolution of CAM photosynthesis. Nature Genet. 47, 1435 (2015)

Neafsey, D.E., Waterhouse, R.M., et al.: Highly evolvable malaria vectors: the genome of 16 Anopheles mosquitoes. Science 347, 1258522 (2015)

Rajaraman, A., Tannier, E., Chauve, C.: FPSAC: fast phylogenetic scaffolding of ancient contigs. Bioinformatics 29, 2987–2994 (2013)

Sankoff, D., Rousseau, P.: Locating the vertices of a steiner tree in an arbitrary metric space. Math. Program. 9, 240–246 (1975)

Tannier, E., Zheng, C., Sankoff, D.: Multichromosomal median and halving problems under different genomic distances. BMC Bioinform. 10(1), 120 (2009)

Xu, A.W., Moret, B.M.E.: GASTS: parsimony scoring under rearrangements. In: Przytycka, T.M., Sagot, M.-F. (eds.) WABI 2011. LNCS, vol. 6833, pp. 351–363. Springer, Heidelberg (2011)

Zheng, C., Sankoff, D.: On the pathgroups approach to rapid small phylogeny. BMC Bioinform. 12(Suppl. 1), S4 (2011)

Acknowledgements

NL and RW are funded by the International DFG Research Training Group GRK 1906/1. CC is funded by NSERC grant RGPIN-249834.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Luhmann, N., Thévenin, A., Ouangraoua, A., Wittler, R., Chauve, C. (2016). The SCJ Small Parsimony Problem for Weighted Gene Adjacencies. In: Bourgeois, A., Skums, P., Wan, X., Zelikovsky, A. (eds) Bioinformatics Research and Applications. ISBRA 2016. Lecture Notes in Computer Science(), vol 9683. Springer, Cham. https://doi.org/10.1007/978-3-319-38782-6_17

Download citation

DOI: https://doi.org/10.1007/978-3-319-38782-6_17

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-38781-9

Online ISBN: 978-3-319-38782-6

eBook Packages: Computer ScienceComputer Science (R0)