Abstract

We study the min-cost chain-constrained spanning-tree (abbreviated MCCST) problem: find a min-cost spanning tree in a graph subject to degree constraints on a nested family of node sets. We devise the first polytime algorithm that finds a spanning tree that (i) violates the degree constraints by at most a constant factor and (ii) whose cost is within a constant factor of the optimum. Previously, only an algorithm for unweighted CCST was known [13], which satisfied (i) but did not yield any cost bounds. This also yields the first result that obtains an O(1)-factor for both the cost approximation and violation of degree constraints for any spanning-tree problem with general degree bounds on node sets, where an edge participates in multiple degree constraints.

A notable feature of our algorithm is that we reduce MCCST to unweighted CCST (and then utilize [13]) via a novel application of Lagrangian duality to simplify the cost structure of the underlying problem and obtain a decomposition into certain uniform-cost subproblems.

We show that this Lagrangian-relaxation based idea is in fact applicable more generally and, for any cost-minimization problem with packing side-constraints, yields a reduction from the weighted to the unweighted problem. We believe that this reduction is of independent interest. As another application of our technique, we consider the k-budgeted matroid basis problem, where we build upon a recent rounding algorithm of [4] to obtain an improved \(n^{O(k^{1.5}/\epsilon )}\)-time algorithm that returns a solution that satisfies (any) one of the budget constraints exactly and incurs a \((1+\epsilon )\)-violation of the other budget constraints.

A full version of the paper is available on the CS arXiv.

A. Linhares and C. Swamy—Research supported partially by NSERC grant 327620-09 and the second author’s Discovery Accelerator Supplement Award, and Ontario Early Researcher Award.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Constrained spanning-tree problems, where one seeks a minimum-cost spanning tree satisfying additional (\(\{0,1\}\)-coefficient) packing constraints, constitute an important and widely-studied class of problems. In particular, when the packing constraints correspond to node-degree bounds, we obtain the classical min-cost bounded-degree spanning tree (MBDST) problem, which has a rich history of study [5, 7, 10–12, 15] culminating in the work of [15] that yielded an optimal result for MBDST. Such degree-constrained network-design problems arise in diverse areas including VLSI design, vehicle routing and communication networks (see, e.g., the references in [14]), and their study has led to the development of powerful techniques in approximation algorithms.

Whereas the iterative rounding and relaxation technique introduced in [15] (which extends the iterative-rounding framework of [9]) yields a versatile technique for handling node-degree constraints (even for more-general network-design problems), we have a rather limited understanding of spanning-tree problems with more-general degree constraints, such as constraints \(|T\cap \delta (S)|\le b_S\) for sets S in some (structured) family \(\mathcal {S}\) of node sets.Footnote 1 A fundamental impediment here is our inability to leverage the techniques in [10, 15]. The few known results yield: (a) (sub-)optimal cost, but a super-constant additive- or multiplicative- factor violation of the degree bounds [1–3, 6]; or (b) a multiplicative O(1)-factor violation of the degree bounds (when \(\mathcal {S}\) is a nested family), but no cost guarantee [13]. In particular, in stark contrast to the results known for node-degree-bounded network-design problems, there is no known algorithm that yields an O(1)-factor cost approximation and an (additive or multiplicative) O(1)-factor violation of the degree bounds. (Such guarantees are only known when each edge participates in O(1) degree constraints [2]; see however [16] for an exception.)

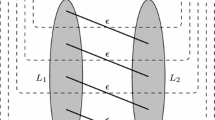

We consider the min-cost chain-constrained spanning-tree (MCCST) problem introduced by [13], which is perhaps the most-basic setting involving general degree bounds where there is a significant gap in our understanding vis-a-vis node-degree bounded problems. In MCCST, we are given an undirected connected graph \(G = (V,E)\), nonnegative edge costs \(\{c_e\}\), a nested family \(\mathcal {S}\) (or chain) of node sets \(S_1 \subsetneq S_2 \subsetneq \dots \subsetneq S_{\ell }\subsetneq V\), and integer degree bounds \(\{b_S\}_{S\in \mathcal {S}}\). The goal is to find a minimum-cost spanning tree T such that \(|\delta _T(S)|\le b_S\) for all \(S\in \mathcal {S}\), where \(\delta _T(S):=T\cap \delta (S)\). Olver and Zenklusen [13] give an algorithm for unweighted CCST that returns a tree T such that \(|\delta _T(S)|=O(b_S)\) (i.e., there is no bound on c(T)), and show that, for some \(\rho >0\), it is NP-complete to obtain an additive \(\rho \cdot \frac{\log |V|}{\log \log |V|}\) violation of the degree bounds. We therefore focus on bicriteria \((\alpha ,\beta )\)-guarantees for MCCST, where the tree T returned satisfies \(c(T)\le \alpha \cdot OPT \) and \(|\delta _T(S)|\le \beta \cdot b_S\) for all \(S\in \mathcal {S}\).

Our Contributions. Our main result is the first \(\bigl (O(1),O(1)\bigr )\)-approximation algorithm for MCCST. Given any \(\lambda >1\), our algorithm returns a tree T with \(c(T)\le \frac{\lambda }{\lambda -1}\cdot OPT \) and \(|\delta _T(S)|\le 9\lambda \cdot b_S\) for all \(S\in \mathcal {S}\), using the algorithm of [13] for unweighted CCST, denoted \(\mathcal {A}_{\text {OZ}}\), as a black box (Theorem 3). As noted above, this is also the first algorithm that achieves an \(\bigl (O(1),O(1)\bigr )\)-approximation for any spanning-tree problem with general degree constraints where an edge belongs to a super-constant number of degree constraints.

We show in Sect. 4 that our techniques are applicable more generally. We give a reduction showing that for any cost-minimization problem with packing side-constraints, if we have an algorithm for the unweighted problem that returns a solution with an O(1)-factor violation of the packing constraints and satisfies a certain property, then one can utilize it to obtain an \(\bigl (O(1),O(1)\bigr )\)-approximation for the cost-minimization problem. Furthermore, we show that if the algorithm for the unweighted counterpart satisfies a stronger property, then we can utilize it to obtain a \(\bigl (1,O(1)\bigr )\)-approximation (Theorem 9).

We believe that our reductions are of independent interest and will be useful in other settings as well. Demonstrating this, we show an application to the k-budgeted matroid basis problem, wherein we seek to find a basis satisfying k budget constraints. Grandoni et al. [8] devised an \(n^{O(k^2/\epsilon )}\)-time algorithm that returned a \((1,1+\epsilon ,\ldots ,1+\epsilon )\)-solution: i.e., the solution satisfies (any) one budget constraint exactly and violates the other budget constraints by a \((1+\epsilon )\)-factor (if the problem is feasible). Very recently, Bansal and Nagarajan [4] improved the running time to \(n^{O(k^{1.5}/\epsilon )}\) but return only a \((1+\epsilon ,\ldots ,1+\epsilon )\)-solution. Applying our reduction (to the algorithm in [4]), we obtain the best of both worlds: we return a \((1,1+\epsilon ,\ldots ,1+\epsilon )\)-solution in \(n^{O(k^{1.5}/\epsilon )}\)-time (Theorem 12).

The chief novelty in our algorithm and analysis, and the key underlying idea, is an unorthodox use of Lagrangian duality. Whereas typically Lagrangian relaxation is used to drop complicating constraints and thereby simplify the constraint structure of the underlying problem, in contrast, we use Lagrangian duality to simplify the cost structure of the underlying problem by equalizing edge costs in certain subproblems. To elaborate (see Sect. 3.1), the algorithm in [13] for unweighted CCST can be viewed as taking a solution x to the natural linear-programming (LP) relaxation for MCCST, converting it to another feasible solution \(x'\) satisfying a certain structural property, and exploiting this property to round \(x'\) to a spanning tree. The main bottleneck here in handling costs (as also noted in [13]) is that \(c^\intercal x'\) could be much larger than \(c^\intercal x\) since the conversion ignores the \(c_e\)s and works with an alternate “potential” function.

Our crucial insight is that we can exploit Lagrangian duality to obtain perturbed edge costs \(\{c^{y^*}_e\}\) such that the change in perturbed cost due to the conversion process is bounded. Loosely speaking, if the conversion process shifts weight from \(x_f\) to \(x_e\), then we ensure that \(c^{y^*}_e=c^{y^*}_f\) (see Lemma 5); thus, \((c^{y^*})^\intercal x=(c^{y^*})^\intercal x'\)!. The perturbation also ensures that applying \(\mathcal {A}_{\text {OZ}}\) to \(x'\) yields a tree whose perturbed cost is equal to \((c^{y^*})^\intercal x'=(c^{y^*})^\intercal x\). Finally, we show that for an optimal LP solution \(x^*\), the “error” \((c^{y^*}-c)^\intercal x^*\) incurred in working with the \(c^{y^*}\)-cost is \(O( OPT )\); this yields the \(\bigl (O(1),O(1)\bigr )\)-approximation.

We extend the above idea to an arbitrary cost-minimization problem with packing side-constraints as follows. Let \(x^*\) be an optimal solution to the LP-relaxation, and \(\mathcal {P}\) be the polytope obtained by dropping the packing constraints. We observe that the same Lagrangian-duality based perturbation ensures that all points on the minimal face of \(\mathcal {P}\) containing \(x^*\) have the same perturbed cost. Therefore, if we have an algorithm for the unweighted problem that rounds \(x^*\) to a point \(\hat{x}\) on this minimal face, then we again obtain that \((c^{y^*})^\intercal \hat{x}=(c^{y^*})^\intercal x^*\), which then leads to an \(\bigl (O(1),O(1)\bigr )\)-approximation (as in the case of MCCST).

Related Work. Whereas node-degree-bounded spanning-tree problems have been widely studied, relatively few results are known for spanning-tree problems with general degree constraints for a family \(\mathcal {S}\) of node-sets. With the exception of the result of [13] for unweighted CCST, these other results [1–3, 6] all yield a tree of cost at most the optimum with an \(\omega (1)\) additive- or multiplicative- factor violation of the degree bounds. Both [2, 3] obtain additive factors via iterative rounding and relaxation. The factor in [3] is \((r-1)\) for an arbitrary \(\mathcal {S}\), where r is the maximum number of degree constraints involving an edge (which could be |V| even when \(\mathcal {S}\) is a chain), while [2] yields an \(O(\log |V|)\) factor when \(\mathcal {S}\) is a laminar family (the factor does not improve when \(\mathcal {S}\) is a chain). The dependent-rounding techniques in [1, 6] yield a tree T satisfying \(|\delta _T(S)|\le \min \bigl \{O\bigl (\frac{\log |\mathcal {S}|}{\log \log |\mathcal {S}|}\bigr )b_S, (1+\epsilon )b_S+O\bigl (\frac{\log |\mathcal {S}|}{\epsilon }\bigr )\bigr \}\) for all \(S\in \mathcal {S}\), for any family \(\mathcal {S}\).

For MBDST, Goemans [10] obtained the first \(\bigl (O(1),O(1)\bigr )\)-approximation; his result yields a tree of cost at most the optimum and at most \(+2\) violation of the degree bounds. This was subsequently improved to an (optimal) additive \(+1\) violation by [15]. Zenklusen [16] considers an orthogonal generalization of MBDST, where there is a matroid-independence constraint on the edges incident to each node, and obtains a tree of cost at most the optimum and “additive” O(1) violation (defined appropriately) of the matroid constraints. To our knowledge, this is the only prior work that obtains an O(1)-approximation to both the cost and packing constraints for a constrained spanning-tree problem where an edge participates in \(\omega (1)\) packing constraints (albeit this problem is quite different from spanning tree with general degree constraints).

Finally, we note that our Lagrangian-relaxation based technique is somewhat similar to its use in [11]. However, whereas [11] uses this to reduce uniform-degree MBDST to the problem of finding an MST of minimum maximum degree, which is another weighted problem, we utilize Lagrangian relaxation in a more refined fashion to reduce the weighted problem to its unweighted counterpart.

2 An LP-Relaxation for MCCST and Preliminaries

We consider the following natural LP-relaxation for MCCST. Throughout, we use e to index the edges of the underlying graph \(G=(V,E)\). For a set \(S\subseteq V\), let E(S) denote \(\{uv\in E: u,v\in S\}\), and \(\delta (S)\) denote the edges on the boundary of S. For a vector \(z\in \mathbb {R}^E\) and an edge-set F, we use z(F) to denote \(\sum _{e\in F}z_e\).

For any \(x\in \mathbb {R}_+^E\), let \({{\mathrm{supp}}}(x):=\{e:x_e>0\}\) denote the support of x. It is well known that the polytope, \(\text {P}_{\text {ST}}(G)\), defined by (1), (2), and (4) is the convex hull of spanning trees of G. We call points in \(\text {P}_{\text {ST}}(G)\) fractional spanning trees. We refer to (1), (2) as the spanning-tree constraints. We will also utilize \((\text {P}_{{\lambda }})\), the modified version of (P) where we replace (3) with \(x\bigl (\delta (S)\bigr )\le \lambda b_S\) for all \(S\in \mathcal {S}\), where \(\lambda \ge 1\). Let \( OPT (\lambda )\) denote the optimal value of \((\text {P}_{{\lambda }})\), and let \( OPT := OPT (1)\).

Preliminaries. A family \(\mathcal {L}\subseteq 2^V\) of sets is a laminar family if for all \(A, B\in \mathcal {L}\), we have \(A\subseteq B\) or \(B\subseteq A\) or \(A\cap B=\emptyset \). As is standard, we say that \(A\in \mathcal {L}\) is a child of \(L\in \mathcal {L}\) if L is the minimal set of \(\mathcal {L}\) such that \(A\subsetneq L\). For each \(L\in \mathcal {L}\), let \(G^\mathcal {L}_L=(V^\mathcal {L}_L,E^\mathcal {L}_L)\) be the graph obtained from \(\bigl (L,E(L)\bigr )\) by contracting the children of L in \(\mathcal {L}\); we drop the superscript \(\mathcal {L}\) when \(\mathcal {L}\) is clear from the context.

Given \(x\in \text {P}_{\text {ST}}(G)\), define a laminar decomposition \(\mathcal {L}\) of x to be a (inclusion-wise) maximal laminar family of sets whose spanning-tree constraints are tight at x, so \(x\bigl (E(A)\bigr )=|A|-1\) for all \(A\in \mathcal {L}\). Note that \(V\in \mathcal {L}\) and \(\{v\}\in \mathcal {L}\) for all \(v\in V\). A laminar decomposition can be constructed in polytime (using network-flow techniques). For any \(L\in \mathcal {L}\), let \(x^\mathcal {L}_L\), or simply \(x_L\) if \(\mathcal {L}\) is clear from context, denote x restricted to \(E_L\). Observe that \(x_L\) is a fractional spanning tree of \(G_L\).

3 An LP-Rounding Approximation Algorithm

3.1 An Overview

We first give a high-level overview. Clearly, if (P) is infeasible, there is no spanning tree satisfying the degree constraints, so in the sequel, we assume that (P) is feasible. We seek to obtain a spanning tree T of cost \(c(T)=O( OPT )\) such that \(|\delta _T(S)|=O(b_S)\) for all \(S \in \mathcal {S}\), where \(\delta _T(S)\) is the set of edges of T crossing S.

In order to explain the key ideas leading to our algorithm, we first briefly discuss the approach of Olver and Zenklusen [13] for unweighted CCST. Their approach ignores the edge costs \(\{c_e\}\) and instead starts with a feasible solution x to (P) that minimizes a suitable (linear) potential function. They use this potential function to argue that if \(\mathcal {L}\) is a laminar decomposition of x, then \((x,\mathcal {L})\) satisfies a key structural property called rainbow freeness. Exploiting this, they give a rounding algorithm, hereby referred to as \(\mathcal {A}_{\text {OZ}}\), that for every \(L\in \mathcal {L}\), rounds \(x_L\) to a spanning tree \(T_L\) of \(G_L\) such that \(|\delta _{T_L}(S)|\approx O\bigl (x_L(\delta (S))\bigr )\) for all \(S\in \mathcal {S}\), so that concatenating the \(T_L\)s yields a spanning tree T of G satisfying \(|\delta _T(S)|=O\bigl (x(\delta (S))\bigr )=O(b_S)\) for all \(S\in \mathcal {S}\) (Theorem 2). However, as already noted in [13], a fundamental obstacle towards generalizing their approach to handle the weighted version (i.e., MCCST) is that in order to achieve rainbow freeness, which is crucial for their rounding algorithm, one needs to abandon the cost function c and work with an alternate potential function.

We circumvent this difficulty as follows. First, we note that the algorithm in [13] can be equivalently viewed as rounding an arbitrary solution x to (P) as follows. Let \(\mathcal {L}\) be a laminar decomposition of x. Using the same potential-function idea, we can convert x to another solution \(x'\) to (P) that admits a laminar decomposition \(\mathcal {L}'\) refining \(\mathcal {L}\) such that \((x',\mathcal {L}')\) satisfies rainbow freeness (see Lemma 1), and then round \(x'\) using \(\mathcal {A}_{\text {OZ}}\). Of course, the difficulty noted above remains, since the move to rainbow freeness (which again ignores c and uses a potential function) does not yield any bounds on the cost \(c^\intercal x'\) relative to \(c^\intercal x\). We observe however that there is one simple property (*) under which one can guarantee that \(c^\intercal x'=c^\intercal x\), namely, if for every \(L\in \mathcal {L}\), all edges in \({{\mathrm{supp}}}(x)\cap E_L\) have the same cost. However, it is unclear how to utilize this observation since there is no reason to expect our instance to have this rather special property: for instance, all edges of G could have very different costs!

Now let \(x^*\) be an optimal solution to (P) (we will later modify this somewhat) and \(\mathcal {L}\) be a laminar decomposition of \(x^*\). The crucial insight that allows us to leverage property (*), and a key notable aspect of our algorithm and analysis, is that one can leverage Lagrangian duality to suitably perturb the edge costs so that the perturbed costs satisfy property (*). More precisely, letting \(y^*\in \mathbb {R}_+^\mathcal {S}\) denote the optimal values of the dual variables corresponding to constraints (3), if we define the perturbed cost of edge e to be \(c^{y^*}_e:=c_e+\sum _{S\in \mathcal {S}:e\in \delta (S)}y^*_S\), then the \(c^{y^*}\)-cost of all edges in \({{\mathrm{supp}}}(x^*)\cap E_L\) are indeed equal, for every \(L\in \mathcal {L}\) (Lemma 5). In essence, this holds because for any \(e'\in {{\mathrm{supp}}}(x^*)\), by complementary slackness, we have \(c_{e'}=\text {(dual contribution to}\ \)e’\(\ \text {from}\) (1),(2)) \(-\sum _{S\in \mathcal {S}:e'\in \delta (S)}y^*_S\). Since any two edges \(e, f \in {{\mathrm{supp}}}(x^*)\cap E_L\) appear in the same sets of \(\mathcal {L}\), one can argue that the dual contributions to e and f from (1), (2) are equal, and thus, \(c^{y^*}_e=c^{y^*}_f\).

Now since \((x^*,\mathcal {L}^*)\) satisfies (*) with the perturbed costs \(c^{y^*}\), we can convert \((x^*,\mathcal {L}^*)\) to \((x',\mathcal {L}')\) satisfying rainbow freeness without altering the perturbed cost, and then round \(x'\) to a spanning tree T using \(\mathcal {A}_{\text {OZ}}\). This immediately yields \(|\delta _T(S)|=O(b_S)\) for all \(S\in \mathcal {S}\). To bound the cost, we argue that \(c(T)\le c^{y^*}(T)=\sum _e c^{y^*}_ex^*_e=c^\intercal x^*+\sum _{S\in \mathcal {S}} b_Sy^*_S\) (Lemma 6), where the last equality follows from complementary slackness. (Note that the perturbed costs are used only in the analysis.) However, \(\sum _{S\in \mathcal {S}} b_Sy^*_S\) need not be bounded in terms of \(c^\intercal x^*\). To fix this, we modify our starting solution \(x^*\): we solve \((\text {P}_{{\lambda }})\) (which recall is (P) with inflated degree bounds \(\{\lambda b_S\}\)), where \(\lambda >1\), to obtain \(x^*\), and use this \(x^*\) in our algorithm. Now, letting \(y^*\) be the optimal dual values corresponding to the inflated degree constraints, a simple duality argument shows that \(\sum _{S\in \mathcal {S}} b_Sy^*_S\le \frac{ OPT (1)- OPT (\lambda )}{\lambda -1}\) (Lemma 7), which yields \(c(T)=O( OPT )\) (see Theorem 3).

A noteworthy feature of our algorithm is the rather unconventional use of Lagrangian relaxation, where we use duality to simplify the cost structure (as opposed to the constraint-structure) of the underlying problem by equalizing edge costs in certain subproblems. This turns out to be the crucial ingredient that allows us to utilize the algorithm of [13] for unweighted CCST as a black box without worrying about the difficulties posed by (the move to) rainbow freeness. In fact, as we show in Sect. 4, this Lagrangian-relaxation idea is applicable more generally, and yields a novel reduction from weighted problems to their unweighted counterparts. We believe that this reduction is of independent interest and will find use in other settings as well.

3.2 Algorithm Details and Analysis

To specify our algorithm formally, we first define the rainbow-freeness property and state the main result of [13] (which we utilize as a black box) precisely.

For an edge e, define \(\mathcal {S}_e:=\{S\in \mathcal {S}: e\in \delta (S)\}\). Note that \(\mathcal {S}_e\) could be empty. We say that two edges \(e, f\in E\) form a rainbow if \(\mathcal {S}_{e}\subseteq \mathcal {S}_{f}\) or \(\mathcal {S}_{f}\subseteq \mathcal {S}_e\). (This definition is slightly different from the one in [13], in that we allow \(\mathcal {S}_e=\mathcal {S}_f\).) We say that \((x,\mathcal {L})\) is a rainbow-free decomposition if \(\mathcal {L}\) is a laminar decomposition of x and for every set \(L \in \mathcal {L}\), no two edges of \({{\mathrm{supp}}}(x)\cap E_L\) form a rainbow. (Recall that \(G_L=(V_L,E_L)\) denotes the graph obtained from \(\bigl (L,E(L)\bigr )\) by contracting the children of L.) The following lemma shows that one can convert an arbitrary decomposition \((x,\mathcal {L})\) to a rainbow-free one; the proof mimics the potential-function argument in [13] and is deferred to the full version.

Lemma 1

Let \(x\in \text {P}_{\text {ST}}(G)\) and \(\mathcal {L}\) be a laminar decomposition of x. We can compute in polytime a fractional spanning tree \(x'\in \text {P}_{\text {ST}}(G)\) and a rainbow-free decomposition \((x',\mathcal {L}')\) such that: (i) \({{\mathrm{supp}}}(x') \subseteq {{\mathrm{supp}}}(x)\); (ii) \(\mathcal {L} \subseteq \mathcal {L}'\); and (iii) \(x'(\delta (S)) \le x(\delta (S))\) for all \(S \in \mathcal {S}\).

Theorem 2

[13]. There is a polytime algorithm, \(\mathcal {A}_{\text {OZ}}\), that given a fractional spanning tree \(x' \in \text {P}_{\text {ST}}(G)\) and a rainbow-free decomposition \((x',\mathcal {L}')\), returns a spanning tree \(T_L\subseteq {{\mathrm{supp}}}(x')\) of \(G_L\) for every \(L\in \mathcal {L}'\) such that the concatenation T of the \(T_L\)s is a spanning tree of G satisfying \(|\delta _T(S)| \le 9 x'\bigl (\delta (S)\bigr )\) for all \(S \in \mathcal {S}\).

We can now describe our algorithm compactly. Let \(\lambda >1\) be a parameter.

-

1.

Compute an optimal solution \(x^*\) to \((\text {P}_{{\lambda }})\), a laminar decomposition \(\mathcal {L}\) of \(x^*\).

-

2.

Apply Lemma 1 to \((x^*,\mathcal {L})\) to obtain a rainbow-free decomposition \((x',\mathcal {L}')\).

-

3.

Apply \(\mathcal {A}_{\text {OZ}}\) to the input \((x', \mathcal {L}')\) to obtain spanning trees \(T^{\mathcal {L}'}_L\) of \(G^{\mathcal {L}'}_L\) for every \(L\in \mathcal {L}'\). Return the concatenation T of all the \(T^{\mathcal {L}'}_L\)s.

Analysis. We show that the above algorithm satisfies the following guarantee.

Theorem 3

The above algorithm run with parameter \(\lambda > 1\) returns a spanning tree T satisfying \(c(T)\le \frac{\lambda }{\lambda -1}\cdot OPT \) and \(|\delta _T(S)|\le 9\lambda b_S\) for all \(S\in \mathcal {S}\).

The bound on \(|\delta _T(S)|\) follows immediately from Lemma 1 and Theorem 2 since \(x^*\), and hence \(x'\) obtained in step 2, is a feasible solution to \((\text {P}_{{\lambda }})\). So we focus on bounding c(T). This will follow from three things. First, we define the perturbed \(c^{y^*}\)-cost precisely. Next, Lemma 5 proves the key result that for every \(L\in \mathcal {L}\), all edges in \({{\mathrm{supp}}}(x^*)\cap E_L\) have the same perturbed cost. Using this it is easy to show that \(c(T)\le c^{y^*}(T)=\sum _e c^{y^*}_ex^*_e= OPT (\lambda )+\lambda \sum _{S\in \mathcal {S}} b_Sy^*_S\) (Lemma 6). Finally, we show that \(\sum _{S\in \mathcal {S}} b_Sy^*_S\le \frac{ OPT - OPT (\lambda )}{\lambda -1}\) (Lemma 7), which yields the bound stated in Theorem 3.

To define the perturbed costs, we consider the Lagrangian dual of \((\text {P}_{{\lambda }})\) obtained by dualizing the (inflated) degree constraints \(x\bigl (\delta (S)\bigr )\le \lambda b_S\) for all \(S\in \mathcal {S}\):

For \(x \in \mathbb {R}^E\) and \(y \in \mathbb {R}^\mathcal {S}\), let \(\mathcal {G}_{\lambda }(x,y) := \sum _{e}c_e x_e+\sum _{S \in \mathcal {S}} \bigl (x(\delta (S)) - \lambda b_S\bigr ) y_S = \sum _{e} c^y_e x_e - \lambda \sum _{S \in \mathcal {S}} b_S y_S\) denote the objective function of \(g_\lambda (y)\), where \(c^y_e := c_e + \sum _{S \in \mathcal {S} : e \in \delta (S)} y_S\).

Let \(y^*\) be an optimal solution to (LD\(_\lambda \)). One can show via LP-duality that this holds iff there exist dual multipliers \(\mu ^*=(\mu ^*_S)_{\emptyset \ne S\subseteq V}\) corresponding to constraints (1), (2) of \((\text {P}_{{\lambda }})\) such that \((\mu ^*,y^*)\) is an optimal solution to the LP dual of \((\text {P}_{{\lambda }})\). This also implies that \(g_\lambda (y^*)=\mathcal {G}_\lambda (x^*,y^*)= OPT (\lambda )\). Our perturbed costs are \(\{c^{y^*}_e\}\). We defer the proof of Lemma 4 to the full version and use it to show that \(c^{y^*}_e=c^{y^*}_f\) for any \(L\in \mathcal {L}\) and any edges \(e,f\in {{\mathrm{supp}}}(x^*)\cap E_L\).

Lemma 4

We have \(g_{\lambda }(y^*) = \mathcal {G}_\lambda (x^*,y^*)= OPT (\lambda )\). Further, there exists \(\mu ^*=(\mu ^*_S)_{\emptyset \ne S\subseteq V}\) such that \((\mu ^*,y^*)\) is an optimal solution to the dual \((\text {D}_{{\lambda }})\) of \((\text {P}_{{\lambda }})\).

Lemma 5

For any \(L \in \mathcal {L}\), all edges of \({{\mathrm{supp}}}(x^*)\cap E_L\) have the same \(c^{y^*}\)-cost.

Proof Sketch

Consider any two edges \(e,f\in {{\mathrm{supp}}}(x^*)\cap E_L\). Suppose for a contradiction that \(c^{y*}_{e} < c^{y*}_{f}\). Obtain \(\hat{x}\) from \(x^*\) by increasing \(x^*_{e}\) by \(\epsilon \) and decreasing \(x^*_{f}\) by \(\epsilon \) (so \(\hat{x}_{e'}=x^*_{e'}\) for all \(e'\notin \{e,f\}\)). We argue that one can choose a sufficiently small \(\epsilon >0\) such that \(\hat{x}\in \text {P}_{\text {ST}}(G)\). This follows since any spanning-tree constraint that is tight at \(x^*\) can be expressed as a linear combination of the spanning-tree constraints for the sets in \(\mathcal {L}\). Since \(c^{y^*}_e<c^{y^*}_f\), we have \(g_\lambda (y^*)\le \mathcal {G}_\lambda (\hat{x},y^*)<\mathcal {G}_\lambda (x^*,y^*)=g_\lambda (y^*)\), which is a contradiction. \(\square \)

Lemma 6

We have \(c(T)\le \sum _e c^{y^*}_ex^*_e=\sum _e c_ex^*_e+\lambda \sum _{S\in \mathcal {S}}b_Sy^*_S\).

Proof

Observe that \(c(T)\le c^{y^*}(T)\) since \(c_e\le c^{y^*}_e\) for all \(e\in E\) as \(y^*\ge 0\). We now bound \(c^{y^*}(T)\). To keep notation simple, we use \(G_L=(V_L,E_L)\) and \(x^*_L\) to denote \(G^\mathcal {L}_L\) and \((x^*)^\mathcal {L}_L\) (which recall is \(x^*\) restricted to \(E^\mathcal {L}_L\)) respectively, and \(G'_L=(V'_L,E'_L)\) and \(x^{*'}_L\) to denote \(G^{\mathcal {L}'}_L\) and \((x^*)^{\mathcal {L}'}_L\) respectively.

We have \(c^{y^*}(T)=\sum _{L\in \mathcal {L}}c^{y^*}(T\cap E_L)\) since the sets \(\{E_L\}_{L\in \mathcal {L}}\) partition E. Fix \(L\in \mathcal {L}\). Note that \(x^*_L\) is a fractional spanning tree of \(G_L=(V_L,E_L)\) since for any \(\emptyset \ne Q\subseteq V_L\), if R is the subset of V corresponding to Q, and \(A_1,\ldots ,A_k\) are the children of L whose corresponding contracted nodes lie in Q, we have \(x^*_L\bigl (E_L(Q)\bigr )=x^*\bigl (E(R)\bigr )-\sum _{i=1}^k x^*\bigl (E(A_i)\bigr ) \le |R\setminus (A_1\cup \ldots \cup A_k)|+k-1=|Q|-1\) with equality holding when \(Q=V_L\). Note that \(T\cap E_L\) is a spanning tree of \(G_L\) since T is obtained by concatenating spanning trees for the graphs \(\{G'_{L'}\}_{L'\in \mathcal {L}'}\), and \(\mathcal {L}'\) refines \(\mathcal {L}\). Also, all edges of \({{\mathrm{supp}}}(x^*)\cap E_{L}\) have the same \(c^{y^*}\)-cost by Lemma 5. So we have \(c^{y^*}(T\cap E_L)=\sum _{e\in E_L}c^{y^*}_ex^*_e\). It follows that

\(\square \)

Lemma 7

We have \(\sum _{S\in \mathcal {S}} b_Sy^*_S\le \frac{ OPT (1)- OPT (\lambda )}{\lambda -1}\).

Proof

By Lemma 4, there exists \(\mu ^*\) such that \((\mu ^*,y^*)\) is an optimal solution to the dual \((\text {D}_{{\lambda }})\) of \((\text {P}_{{\lambda }})\). Also, \((\mu ^*,y^*)\) is a feasible solution to \((\text {D}_{{1}})\). Therefore,

Hence \( OPT (1)- OPT (\lambda )\ge (\lambda -1)\sum _{S\in \mathcal {S}} b_Sy^*_S\). \(\square \)

Proof of Theorem 3. As noted earlier, the bounds on \(\delta _T(S)\) follow immediately from Lemma 1 and Theorem 2: for any \(S\in \mathcal {S}\), we have \(|\delta _T(S)|\le 9x'\bigl (\delta (S)\bigr )\le 9x^*\bigl (\delta (S)\bigr )\le 9\lambda b_S\). The bound on c(T) follows from Lemmas 6 and 7 since \(\sum _ec_ex^*_e= OPT (\lambda )\). \(\square \)

4 A Reduction from Weighted to Unweighted Problems

We now show that our ideas are applicable more generally, and yield bicriteria approximation algorithms for any cost-minimization problem with packing side-constraints, provided we have a suitable approximation algorithm for the unweighted counterpart. Thus, our technique yields a reduction from weighted to unweighted problems, which we believe is of independent interest.

To demonstrate this, we first isolate the key properties of the rounding algorithm \(\mathcal {B}\) used above for unweighted CCST that enable us to use it as a black box to obtain our result for MCCST; this will yield an alternate, illuminating explanation of Theorem 3. Note that \(\mathcal {B}\) is obtained by combining the procedure in Lemma 1 and \(\mathcal {A}_{\text {OZ}}\) (Theorem 2). First, we of course utilize that \(\mathcal {B}\) is an approximation algorithm for unweighted CCST, so it returns a spanning tree T such that \(|\delta _T(S)|=O\bigl (x^*(\delta (S))\bigr )\) for all \(S\in \mathcal {S}\). Second, we exploit the fact that \(\mathcal {B}\) returns a tree T that preserves tightness of all spanning-tree constraints that are tight at \(x^*\). This is the crucial property that allows us to bound c(T), since this implies (as we explain below) that \(c^{y^*}(T)=\sum _e c^{y^*}_ex^*_e\), which then yields the bound on c(T) as before. The equality follows because the set of optimal solutions to the LP \(\min _{x\in \text {P}_{\text {ST}}(G)}\mathcal {G}_\lambda (x,y^*)\) is a face of \(\text {P}_{\text {ST}}(G)\); thus all points on the minimal face of \(\text {P}_{\text {ST}}(G)\) containing \(x^*\) are optimal solutions to this LP, and the stated property implies that the characteristic vector of T lies on this minimal face. In other words, while \(\mathcal {A}_{\text {OZ}}\) proceeds by exploiting the notions of rainbow freeness and laminar decomposition, these notions are not essential to obtaining our result; any rounding algorithm for unweighted CCST satisfying the above two properties can be utilized to obtain our guarantee for MCCST.

We now formalize the above two properties for an arbitrary cost-minimization problem with packing side-constraints, and prove that they suffice to yield a bicriteria guarantee. Consider the following abstract problem:

where \(\mathcal {P} \subseteq \mathbb {R}_+^n\) is a fixed polytope, \(c,b\ge 0\), and \(A\ge 0\). Observe that we can cast MCCST as a special case of (Q\(^\mathcal {P}\)), by taking \(\mathcal {P}=\text {P}_{\text {ST}}(G)\) (whose extreme points are spanning trees of G), c to be the edge costs, and \(Ax \le b\) to be the degree constraints. Moreover, by taking \(\mathcal {P}\) to be the convex hull of a bounded set \(\{x\in \mathbb {Z}_+^n: Cx\le d\}\) we can use (Q\(^\mathcal {P}\)) to encode a discrete-optimization problem.

We say that \(\mathcal {B}\) is a face-preserving rounding algorithm (FPRA) for unweighted (Q\(^\mathcal {P}\)) if given any point \(x\in \mathcal {P}\), \(\mathcal {B}\) finds in polytime an extreme point \(\hat{x}\) of \(\mathcal {P}\) such that:

-

(P1) \(\hat{x}\) belongs to the minimal face of \(\mathcal {P}\) that contains x.

We say that \(\mathcal {B}\) is a \(\beta \) -approximation FPRA (where \(\beta \ge 1\)) if we also have:

-

(P2) \(A\hat{x}\le \beta Ax\).

Let \((\text {R}^\mathcal {P}_{{\lambda }})\) denote the linear program: \(\min \bigl \{c^\intercal x: x\in \mathcal {P}, \ Ax\le \lambda b\bigr \}\); note that \((\text {R}^\mathcal {P}_{{1}})\) is the LP-relaxation of (Q\(^\mathcal {P}\)). Let \(\mathsf {opt}(\lambda )\) denote the optimal value of \((\text {R}^\mathcal {P}_{{\lambda }})\), and let \(\mathsf {opt}:=\mathsf {opt}(1)\). We say that an algorithm is a \((\rho _1,\rho _2)\)-approximation algorithm for (Q\(^\mathcal {P}\)) if it finds in polytime an extreme point \(\hat{x}\) of \(\mathcal {P}\) such that \(c^\intercal \hat{x}\le \rho _1\mathsf {opt}\) and \(A\hat{x}\le \rho _2b\).

Theorem 8

Let \(\mathcal {B}\) be a \(\beta \)-approximation FPRA for unweighted (Q\(^\mathcal {P}\)). Then, given any \(\lambda > 1\), one can obtain a \(\bigl (\frac{\lambda }{\lambda -1}, \beta \lambda \bigr )\)-approximation algorithm for (Q\(^\mathcal {P}\)) using a single call to \(\mathcal {B}\).

Proof Sketch

We dovetail the algorithm for MCCST and its analysis. We simply compute an optimal solution \(x^*\) to \((\text {R}^\mathcal {P}_{{\lambda }})\) and round it to an extreme point \(\hat{x}\) of \(\mathcal {P}\) using \(\mathcal {B}\). By property (P2), it is clear that \(A\hat{x}\le \beta (Ax^*)\le \beta \lambda b\).

Let m be the number of rows of A. For \(y\in \mathbb {R}_+^m\), define \(c^y:=c+A^\intercal y\). To bound the cost, as before, we consider the Lagrangian dual of \((\text {R}^\mathcal {P}_{{\lambda }})\) obtained by dualizing the side-constraints \(Ax\le \lambda b\).

Let \(y^*={{\mathrm{argmax}}}_{y\in \mathbb {R}_+^m}h_\lambda (y)\). We can mimic the proof of Lemma 4 to show that \(x^*\) is an optimal solution to \(\min _{x\in \mathcal {P}}\mathcal {H}_\lambda (x,y^*)\). The set of optimal solutions to this LP is a face of \(\mathcal {P}\). So all points on the minimal face of \(\mathcal {P}\) containing \(x^*\) are optimal solutions to this LP. By property (P1), \(\hat{x}\) belongs to this minimal face and so is an optimal solution to this LP. So \((c^{y^*})^\intercal \hat{x}=(c^{y^*})^\intercal x^*=c^\intercal x^*+(y^*)^\intercal Ax^*=\mathsf {opt}(\lambda )+\lambda (y^*)^\intercal b\), where the last equality follows by complementary slackness. Also, by the same arguments as in Lemma 7, we have \((y^*)^\intercal b\le \frac{\mathsf {opt}(1)-\mathsf {opt}(\lambda )}{\lambda -1}\). Since \(c\le c^{y^*}\), we have \(c^\intercal \hat{x}\le (c^{y^*})^\intercal \hat{x}\le \frac{\lambda }{\lambda -1}\cdot \mathsf {opt}\). \(\square \)

5 Towards a \(\varvec{\big (1,O(1)\big )}\)-Approximation Algorithm for (Q\(^{\mathcal {P}}\))

A natural question that emerges from Theorems 3 and 8 is whether one can obtain a \(\bigl (1,O(1)\bigr )\)-approximation, i.e., obtain a solution of cost at most \(\mathsf {opt}\) that violates the packing side-constraints by an (multiplicative) O(1)-factor. Such results are known for degree-bounded spanning tree problems with various kinds of degree constraints [3, 10, 15, 16], so, in particular, it is natural to ask whether such a result also holds for MCCST. (Note that for MCCST, the dependent-rounding techniques in [1, 6] yield a tree T with \(c(T)\le OPT \) and \(|\delta _T(S)|\le \min \bigl \{O\bigl (\frac{\log |\mathcal {S}|}{\log \log |\mathcal {S}|}\bigr )b_S, (1+\epsilon )b_S+O\bigl (\frac{\log |\mathcal {S}|}{\epsilon }\bigr )\bigr \}\) for all \(S\in \mathcal {S}\).) We show that our approach is versatile enough to yield such a guarantee provided we assume a stronger property from the rounding algorithm \(\mathcal {B}\) for unweighted (Q\(^\mathcal {P}\)).

Let \(A_i\) denote the i-th row of A, for \(i=1,\ldots ,m\). We say that \(\mathcal {B}\) is an \((\alpha ,\beta )\) -approximation FPRA for unweighted (Q\(^\mathcal {P}\)) if in addition to properties (P1), (P2), it satisfies:

-

(P3) it rounds a feasible solution x to \((\text {R}^\mathcal {P}_{{\alpha }})\) to an extreme point \(\hat{x}\) of \(\mathcal {P}\) satisfying \(A_i^\intercal \hat{x}\ge \frac{A_i^\intercal x}{\alpha }\) for every i such that \(A_i^\intercal x=\alpha b_i\).

(For MCCST, property (P3) requires that \(|\delta _T(S)|\ge b_S\) for every set \(S\in \mathcal {S}\) whose degree constraint (in \((\text {P}_{{\alpha }})\)) is tight at the fractional spanning tree x.)

Theorem 9

Let \(\mathcal {B}\) be an \((\alpha ,\beta )\)-approximation FPRA for unweighted (Q\(^\mathcal {P}\)). Then, one can obtain a \((1,\alpha \beta )\)-approximation algorithm for (Q\(^\mathcal {P}\)) using a single call to \(\mathcal {B}\).

Proof

We show that applying Theorem 8 with \(\lambda =\alpha \) yields the claimed result. It is clear that the extreme point \(\hat{x}\) returned satisfies \(A\hat{x}\le \alpha \beta b\). As in the proof of Theorem 8, let \(y^*\) be an optimal solution to \(\max _{y\in \mathbb {R}_+^m}h_\lambda (y)\) (where \(\lambda =\alpha \)). In Lemma 6 and the proof of Theorem 8, we use the weak bound \(c^\intercal \hat{x}\le (c^{y^*})^\intercal \hat{x}\). We tighten this to obtain the improved bound on \(c^\intercal \hat{x}\). We have \(c^\intercal \hat{x}=(c^{y^*})^\intercal \hat{x}-(y^*)^\intercal A\hat{x}\), and

The first and last equalities above follow because \(y^*_i>0\) implies that \(A_i^\intercal x^*=\lambda b_i\). The inequality follows from property (P3). Thus, following the rest of the arguments in the proof of Theorem 8, we obtain that

\(\square \)

We also obtain the following variant of Theorem 9 with two-sided additive guarantees (which can be proved by essentially the same arguments).

Theorem 10

Let \(\mathcal {B}\) be an FPRA for unweighted (Q\(^\mathcal {P}\)) that given \(x\in \mathcal {P}\) returns an extreme point \(\hat{x}\) of \(\mathcal {P}\) such that \(Ax - \varDelta \le A\hat{x}\le Ax + \varDelta \). Using a single call to \(\mathcal {B}\), we can obtain an extreme point \(\tilde{x}\) of \(\mathcal {P}\) such that \(c^\intercal \tilde{x}\le \mathsf {opt}\) and \(A\tilde{x}\le b + 2\varDelta \).

Application to k-budgeted Matroid Basis. Here, we seek to find a basis S of a matroid M satisfying k budget constraints \(\{d_i(S) \le B_i\}_{1 \le i \le k}\), where \(d_i(S) := \sum _{e \in S} d_i(e)\). Note that this can be cast a special case of (Q\(^\mathcal {P}\)), where \(\mathcal {P} = \mathcal {P}(M)\) is the basis polytope of M, the objective function encodes (say) the first budget constraint and \(Ax \le b\) encodes the remaining budget constraints. Applying Theorem 10 to a recent randomized algorithm of [4], we obtain a (randomized) algorithm that, for any \(\epsilon > 0\), returns in \(n^{O(k^{1.5}/\epsilon )}\) time a basis that (exactly) satisfies a chosen budget constraint, and violates the other budget constraints by (at most) a \((1 +\epsilon )\)-factor, where n is the size of the ground-set of M. This matches the current-best approximation guarantee of [8] (who give a deterministic algorithm) and the current-best running time of [4].

Theorem 11

[4]. There exists a randomized FPRA, \(\mathcal {B}_{\text {BN}}\), for unweighted \((Q^{\mathcal {P}(M)})\) that rounds any \(x\in \mathcal {P}(M)\) to an extreme point \(\hat{x}\) of \(\mathcal {P}(M)\) such that \(Ax-O(\sqrt{k})\varDelta \le A\hat{x}\le Ax + O(\sqrt{k}) \varDelta \), where \(\varDelta = (\max _{1\le j \le n} a_{ij})_{1 \le i \le k - 1} = (\max _{e} d_{i+1}(e))_{1 \le i \le k - 1}\).

Applying Theorem 10 with \(\mathcal {B}\)=\(\mathcal {B}_{\text {BN}}\), we obtain a basis S of M such that \(d_1(S)\le B_1\), and \(d_i(S)\le B_i+O(\sqrt{k})\max _{e} d_{i}(e)\) for all \(2\le i\le k\). We combine this with a partial-enumeration step, where we “guess” all elements e of an optimal solution having \(d_i(e)=\varOmega \bigl (\frac{\epsilon }{\sqrt{k}}\bigr )\cdot B_i\) for at least one index \(i \in \{2, \dots , k\}\), update M and the budget constraints, and then apply Theorem 10. This yields the following result.

Theorem 12

There exists a randomized algorithm that, given any \(\epsilon > 0\), finds in \(n^{O(k^{1.5}/\epsilon )}\) time a basis S of M such that \(d_1(S) \le B_1\) and \(d_i(S) \le (1 + \epsilon ) B_i\) for all \(2 \le i \le k\).

Notes

- 1.

Such general degree constraints arise in the context of finding thin trees [1], where \(\mathcal {S}\) consists of all node sets, which turn out to be a very useful tool in devising approximation algorithms for asymmetric TSP.

References

Asadpour, A., Goemans, M., Madry, A., Oveis Gharan, S., Saberi, A.: An \(O(\log n/\log \log n)\)-approximation algorithm for the asymmetric traveling salesman problem. In: Proceedings of the 20th SODA, pp. 379–389 (2010)

Bansal, N., Khandekar, R., Könemann, J., Nagarajan, V., Peis, B.: On generalizations of network design problems with degree bounds. Math. Program. 141(1–2), 479–506 (2013)

Bansal, N., Khandekar, R., Nagarajan, V.: Additive guarantees for degree-bounded directed network design. SICOMP 39(4), 1413–1431 (2009)

Bansal, N., Nagarajan, V.: Approximation-friendly discrepancy rounding. In: Louveaux. Q., Skutella, M. (eds.) IPCO 2016. LNCS, vol. 9682, pp. 375–386. Springer, Heidelberg (2016). Also appears arXiv:1512.02254 (2015)

Chaudhuri, K., Rao, S., Riesenfeld, S., Talwar, K.: What would Edmonds do? Augmenting paths and witnesses for degree-bounded MSTs. Algorithmica 55, 157–189 (2009)

Chekuri, C., Vondrak, J., Zenklusen, R.: Dependent randomized rounding via exchange properties of combinatorial structures. In: 51st FOCS (2010)

Fürer, M., Raghavachari, B.: Approximating the minimum-degree Steiner tree to within one of optimal. J. Algorithms 17(3), 409–423 (1994)

Grandoni, F., Ravi, R., Singh, M., Zenklusen, R.: New approaches to multi-objective optimization. Math. Program. 146(1–2), 525–554 (2014)

Jain, K.: A factor 2 approximation algorithm for the generalized Steiner network problem. Combinatorica 21, 39–60 (2001)

Goemans, M.: Minimum bounded degree spanning trees. In: 47th FOCS (2006)

Könemann, J., Ravi, R.: A matter of degree: improved approximation algorithms for degree-bounded minimum spanning trees. SICOMP 31, 1783–1793 (2002)

Könemann, J., Ravi, R.: Primal-dual meets local search: approximating MST’s with nonuniform degree bounds. In: Proceedings of the 35th STOC, pp. 389–395 (2003)

Olver, N., Zenklusen, R.: Chain-constrained spanning trees. In: Goemans, M., Correa, J. (eds.) IPCO 2013. LNCS, vol. 7801, pp. 324–335. Springer, Heidelberg (2013)

Ravi, R., Marathe, M., Ravi, S., Rosenkrantz, D., Hunt III, H.: Approximation algorithms for degree-constrained minimum-cost network-design problems. Algorithmica 31(1), 58–78 (2001)

Singh, M., Lau, L.: Approximating minimum bounded degree spanning trees to within one of optimal. In: Proceedings of the 39th STOC, pp. 661–670 (2007)

Zenklusen, R.: Matroidal degree-bounded minimum spanning trees. In: Proceedings of the 23rd SODA, pp. 1512–1521 (2012)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Linhares, A., Swamy, C. (2016). Approximating Min-Cost Chain-Constrained Spanning Trees: A Reduction from Weighted to Unweighted Problems. In: Louveaux, Q., Skutella, M. (eds) Integer Programming and Combinatorial Optimization. IPCO 2016. Lecture Notes in Computer Science(), vol 9682. Springer, Cham. https://doi.org/10.1007/978-3-319-33461-5_4

Download citation

DOI: https://doi.org/10.1007/978-3-319-33461-5_4

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-33460-8

Online ISBN: 978-3-319-33461-5

eBook Packages: Computer ScienceComputer Science (R0)