Abstract

Hearing aids will continue to be acoustic, customizable, wearable, battery-operated, and regulated medical devices. Future technology and research will improve how these requirements are met and add entirely new functions. Microphones, loudspeakers, digital signal processors, and batteries will continue to shrink in size to enhance existing functionality and allow new functionality with new forms of signal processing to optimize speech understanding, enhance spatial hearing, allow more accurate sound environment detection and classification to control hearing aid settings, implement self-calibration, and expand wireless connectivity to other devices and sensors. There also is potential to provide new signals for tinnitus treatment and delivery of pharmaceuticals to enhance cochlear hair cell and neural regeneration. Increased knowledge and understanding of the impaired auditory system and effective technology development will lead to greater benefit of hearing aids in the future.

Keywords

- Batteries

- Cognitive control

- Epidemiology

- Fitting methods

- Hearing aids

- Hearing impairment

- Microphones

- Music perception

- Real-ear measurements

- Receivers

- Regeneration

- Self-calibration

- Signal processing

- Spatial perception

- Speech perception

- Wireless connectivity

11.1 Introduction

It is likely that a large proportion of future hearing aids will continue to be regulated medical devices that will be acoustic, wearable, battery operated, and intended to be worn continuously all day. Future devices must meet the existing basic requirements that include being comfortable to wear, being cosmetically acceptable, having a battery life of at least one full day, and being customizable to produce frequency- and level-dependent gains that are appropriate for the individual hearing-impaired person. They must be easily reprogrammable to compensate for changes in hearing function with aging or other factors. Future hearing aid technology and hearing aid-related research have the potential to improve how these requirements are met and to add entirely new functions.

11.2 Microphone Size and Technology

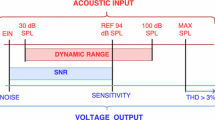

As described in Chap. 3 by Killion, Van Halteren, Stenfelt, and Warren, hearing aid microphones continue to shrink in size without sacrificing any of their already remarkable acoustic capabilities, including wide bandwidth (20–20,000 Hz), high maximum input level without overload (115 dB SPL), and low inherent noise floor (typically 25–30 dBA). Current microphones are robust and are available in extremely small packages, especially in the case of microelectromechanical systems (MEMS) microphones. Further size reductions will allow not only smaller, more comfortable, and less visible hearing aids but also multiple well-matched microphones on the same small ear-level device. This opens up the possibility of highly directional characteristics, which may be useful in noisy situations for selecting a “target” sound (e.g., a talker of interest) while rejecting or attenuating competing sounds. Biologically inspired highly directional microphones designed using silicon microfabrication are also on the horizon (Miles and Hoy 2006).
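
To illustrate how two closely spaced, well-matched microphones can yield a directional characteristic, the following is a minimal sketch of a delay-and-subtract (first-order differential) arrangement. It is not taken from the chapter: the function name, the 343 m/s speed of sound, and the 10-mm spacing in the usage lines are assumptions chosen only for illustration.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed room-temperature value


def differential_response(theta_deg, freq_hz, spacing_m, internal_delay_s):
    """Magnitude response of a two-microphone delay-and-subtract array.

    theta_deg is the arrival angle of a plane wave (0 = front, 180 = rear).
    The rear microphone signal is delayed by internal_delay_s and then
    subtracted from the front microphone signal.
    """
    omega = 2.0 * math.pi * freq_hz
    # Extra acoustic travel time from the front to the rear microphone.
    external_delay = spacing_m * math.cos(math.radians(theta_deg)) / SPEED_OF_SOUND
    return abs(1.0 - cmath.exp(-1j * omega * (internal_delay_s + external_delay)))


# Hypothetical example: 10-mm spacing, internal delay equal to the acoustic
# travel time between the microphones, which places the null at the rear.
spacing = 0.010
delay = spacing / SPEED_OF_SOUND
front = differential_response(0.0, 1000.0, spacing, delay)
side = differential_response(90.0, 1000.0, spacing, delay)
rear = differential_response(180.0, 1000.0, spacing, delay)
```

With these assumed values the response is largest for sound from the front, smaller from the side, and essentially zero from the rear. The sketch also shows why close matching matters: the null depends on the two microphone signals cancelling exactly.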

11.3 Receivers

Hearing aid loudspeakers, called receivers, also continue to shrink in size and their acoustic characteristics continue to be improved; see Chap. 3. The demands on receivers are complex. Their output requirements are related to the individual’s hearing status and to the receiver location with respect to the tympanic membrane. The sound reaching the tympanic membrane is influenced substantially by the physical dimensions of the external ear canal, by where in the canal the receiver is located, and by the size and configuration of the venting to the external environment (see Moore and Popelka, Chap. 1 and Munro and Mueller, Chap. 9). The outputs of the receivers of future hearing aids will continue to be greatly affected by these factors and it will continue to be necessary to specify the real-ear output, as discussed in Chap. 9.

Most individuals with age-related high-frequency sensorineural hearing loss require no amplification of low-frequency sound but do require amplification of sound at higher frequencies. A popular type of hearing aid for such people is a behind-the-ear (BTE) device with a receiver in the ear canal and an open fitting, that is, a nonoccluding dome or earmold. With this configuration, the low-frequency sounds are heard unamplified via leakage of sound through the open fitting, while the medium- and high-frequency sounds are amplified and are dominated by the output of the receiver. Such designs are popular partly because they are physically comfortable for all-day wear, they can be “instant fit” (a custom earmold is not required), and because low-frequency sounds are completely undistorted and natural sounding. There are, however, some problems with this approach. First, the amplified high-frequency sound is delayed relative to the low-frequency sound through the open fitting, leading to an asynchrony across frequency that may be perceptible as a “smearing” of transient sounds (Stone et al. 2008). Second, for medium frequencies, the interaction of amplified and unamplified sounds at comparable levels may lead to disturbing spectral ripples (comb-filtering effects); see Stone et al. (2008) and Zakis, Chap. 8. Third, for high-input sound levels, the gain of the hearing aid is reduced, and the sound reaching the tympanic membrane is strongly influenced by the sound passing through the open fitting. In this case, the benefits of any directional microphone system or beamformer (see Launer, Zakis, and Moore, Chap. 4) may be reduced or lost altogether.
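
The spectral ripple described above can be sketched numerically. The example below assumes a deliberately simplified model in which the sound at the tympanic membrane is the sum of the direct (vent-transmitted) sound and a single delayed, amplified copy from the receiver. The function names and the 5-ms delay are hypothetical and are chosen to make the arithmetic easy to follow, not to represent a typical hearing aid delay.

```python
import cmath
import math


def combined_level_db(freq_hz, aid_gain_db, aid_delay_s):
    """Level (dB re the direct sound alone) of direct plus delayed aided sound."""
    g = 10.0 ** (aid_gain_db / 20.0)  # aided path amplitude relative to direct path
    total = 1.0 + g * cmath.exp(-2j * math.pi * freq_hz * aid_delay_s)
    magnitude = abs(total)
    return 20.0 * math.log10(magnitude) if magnitude > 0.0 else float("-inf")


def ripple_depth_db(aid_gain_db):
    """Peak-to-trough ripple when the two paths differ in level by aid_gain_db."""
    g = 10.0 ** (aid_gain_db / 20.0)
    if g == 1.0:
        return float("inf")  # equal levels: complete cancellation at the notches
    return 20.0 * math.log10((1.0 + g) / abs(1.0 - g))


# Hypothetical 5-ms delay with equal path levels: peak at 200 Hz, notch at 100 Hz.
peak_db = combined_level_db(200.0, 0.0, 0.005)
notch_db = combined_level_db(100.0, 0.0, 0.005)
ripple_at_20_db = ripple_depth_db(20.0)
```

Under this model the ripple is deepest when the two paths have comparable levels and shrinks to under 2 dB when the aided sound exceeds the direct sound by 20 dB, which is consistent with the ripple being a problem mainly at medium frequencies.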

An alternative approach is to seal the ear canal with an earmold or soft dome; see Chap. 1. In this case, the sound reaching the tympanic membrane is dominated by the amplified sound over a wide frequency range, including low frequencies. This approach may be required when significant gain is required at low frequencies to compensate for low-frequency hearing loss because it is difficult to achieve low-frequency gain with an open fitting (Kates 2008). A closed fitting avoids problems associated with temporal asynchrony across frequency, spectral ripples, and loss of directionality at high levels. However, there are also drawbacks with this approach. First, low-frequency sounds may be heard as less natural than with an open fitting because of the limited low-frequency response of the receiver or because the gain at low frequencies is deliberately reduced to prevent the masking of speech by intense low-frequency environmental sounds. Second, the user’s own voice may sound unnaturally loud and boomy because bone-conducted sound is transmitted into the ear canal and is trapped by the sealed fitting; this is called the occlusion effect (Killion et al. 1988; Stone and Moore 2002). There are two ways of alleviating the occlusion effect. One is to use a dome or earmold that fits very deeply inside the ear canal (Killion et al. 1988). The other is to actively cancel the bone-conducted sound radiated into the ear canal using antiphase sound generated by the receiver (Mejia et al. 2008). To the knowledge of the authors, active occlusion cancellation has not yet been implemented in hearing aids, but it may become available in the near future.

A completely different approach to sound delivery is the “Earlens” system described in Chap. 8; see also Perkins et al. (2010) and Fay et al. (2013). This uses a transducer that drives the tympanic membrane directly. The transducer is placed directly on the tympanic membrane and receives both signal and power via a light source driven by a BTE device. The light is transmitted from a light source in the ear canal to a receiver mounted on a “chassis” that fits over the tympanic membrane. The device has a maximum effective output level of 90–110 dB SPL and gain before acoustic feedback of up to 40 dB in the frequency range from 0.125 to 10 kHz. The ear canal can be left completely open, so any occlusion effect is small or nonexistent. The gain before acoustic feedback is relatively large at high frequencies because the eardrum vibrates in a chaotic manner (Fay et al. 2006) and vibration of the tympanic membrane by the transducer leads to a much smaller amount of sound being radiated from the ear canal back to the hearing aid microphone than would be the case for a conventional hearing aid (Levy et al. 2013). This can avoid the problems associated with the use of digital signal processing to cancel acoustic feedback (Freed and Soli 2006; Manders et al. 2012). The device has been undergoing clinical trials in 2014–2015 and may appear on the market soon after, if the trials are successful.

11.4 Digital Signal Processors and Batteries

Since the introduction of the first full digital hearing aid (Engebretson et al. 1985), wearable digital signal processors (DSPs) have shrunk progressively in size and power requirements, characteristics that have substantial implications for the future. Reduced power requirements together with improvements in battery technology will probably contribute to increased intervals between battery replacement or recharging. This is a consideration not only for patient convenience but also to ensure that more demanding signal processing can be accommodated without increasing battery or processor sizes. It is likely that, in the future, new effective and beneficial communication enhancement signal-processing algorithms, new automated convenience features, and new fitting and adjustment capabilities all can be added to substantially improve overall hearing aid function without increasing the physical size of the digital signal processor.

Battery technology is being actively researched, driven by the rapid growth of mobile devices. The likely developments will be in both the battery chemistry and internal components such as anodes (Lin et al. 2015). Future improvements may include longer battery life, very rapid recharging for rechargeable batteries, innovative packaging to optimize space within the hearing aid casings, and possibly increased voltage that may help increase dynamic range and DSP speed.

Battery life and processor power consumption are also important for hearing aids that are inserted deeply into the ear canal and are intended to be left in the ear for extended periods (Palmer 2009). Currently, such devices use analog signal processing that requires less power than digital signal processing. The devices also have no wireless connectivity. At present, the devices can be left in place for 3–4 months, but improvements in battery and DSP technology could lead to longer durations of use.

Current DSPs already provide multiple adjustable channels that can compensate for sensitivity loss in a frequency-selective manner and provide amplification tailored to the individual’s hearing requirements. Almost every hearing aid incorporates some form of frequency-selective amplitude compression, also called automatic gain control (AGC). However, the way in which this is implemented differs markedly across manufacturers, and it remains unclear which method is best for a specific patient, if there is indeed a “best” method (Moore 2008). There is evidence that the preferred compression speed (see Chaps. 4 and 6) varies across hearing-impaired listeners (Gatehouse et al. 2006; Lunner and Sundewall-Thoren 2007), but there is at present no well-accepted method for deciding what speed will be best for an individual. Hopefully, in the future, methods for implementing multichannel AGC will be refined and improved, and better methods will be developed for tailoring the characteristics of the AGC to the needs and preferences of the individual.
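
As a rough illustration of what “compression” and “compression speed” mean for a single channel, the sketch below combines a static input-output rule with a level detector that has separate attack and release coefficients. It is a generic textbook-style formulation, not the method of any manufacturer. All names and parameter values are assumptions.

```python
import math


def time_constant_to_coeff(time_constant_s, update_rate_hz):
    """Smoothing coefficient of a one-pole filter for a given time constant."""
    return math.exp(-1.0 / (time_constant_s * update_rate_hz))


def smooth_level(levels_db, attack_coeff, release_coeff):
    """Track the level with fast (attack) or slow (release) smoothing."""
    smoothed = []
    state = levels_db[0] if levels_db else 0.0
    for level in levels_db:
        # Rising level uses the attack coefficient, falling level the release one.
        coeff = attack_coeff if level > state else release_coeff
        state = coeff * state + (1.0 - coeff) * level
        smoothed.append(state)
    return smoothed


def compressor_gain_db(level_db, threshold_db, ratio, linear_gain_db):
    """Gain for one channel: linear below threshold, compressive above it."""
    if level_db <= threshold_db:
        return linear_gain_db
    # Above threshold the output grows by 1/ratio dB per dB of input.
    return linear_gain_db - (level_db - threshold_db) * (1.0 - 1.0 / ratio)


# Hypothetical channel: 50-dB threshold, 2:1 ratio, 20 dB of linear gain.
gain_soft = compressor_gain_db(50.0, 50.0, 2.0, 20.0)
gain_loud = compressor_gain_db(70.0, 50.0, 2.0, 20.0)
tracked = smooth_level([0.0, 10.0, 10.0], attack_coeff=0.5, release_coeff=0.9)
```

In this formulation a “fast” system uses short attack and release time constants, so the gain follows the level closely, and a “slow” system uses long ones. A multichannel AGC would apply the same steps independently in each frequency channel.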

A form of signal processing that has attracted considerable interest in recent years involves frequency lowering, whereby high-frequency components in the input signal are shifted to lower frequencies for which hearing function is usually better; see Chaps. 4, 6, and 8. Most major manufacturers of hearing aids now offer some form of frequency lowering, but the way in which it is implemented varies markedly across manufacturers. Most published studies evaluating the effectiveness of frequency lowering suffer from methodological problems, and at present, there is no clear evidence that frequency lowering leads to benefits for speech perception in everyday life. Also, it remains unclear how frequency lowering should be adjusted to suit the individual, what information should be used when making the adjustments, or how long it takes to adapt to the new processing. It is hoped that, in the future, frequency-lowering methods will be improved and well-designed clinical studies will be conducted to compare the effectiveness of different methods of frequency lowering and to develop better methods of fitting frequency lowering.
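
One simple way to picture frequency lowering is as a mapping from input frequency to output frequency. The sketch below assumes a frequency-compression scheme in which components below a cutoff are left unchanged and components above it are compressed toward the cutoff. This is only one of several possible forms, the linear-in-Hz mapping is a simplification, and the cutoff and ratio values are hypothetical rather than recommended settings.

```python
def lowered_frequency(freq_hz, cutoff_hz, compression_ratio):
    """Map an input frequency to its output frequency under the assumed scheme."""
    if freq_hz <= cutoff_hz:
        return freq_hz  # components below the cutoff are not shifted
    # Components above the cutoff are moved closer to the cutoff frequency.
    return cutoff_hz + (freq_hz - cutoff_hz) / compression_ratio


# Hypothetical settings: 2-kHz cutoff and 2:1 compression.
unchanged = lowered_frequency(1000.0, 2000.0, 2.0)
shifted = lowered_frequency(6000.0, 2000.0, 2.0)
```

With these assumed settings a 6-kHz component is presented at 4 kHz. Even in this simple form there are two parameters to set for each individual, which illustrates the fitting question raised above.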

Many hearing aids perform a type of “scene analysis”; see Chaps. 4 and 8. For example, they may classify the current scene as speech in quiet, speech in noise, noise alone, or music. The parameters of the hearing aid may then be automatically adjusted depending on the scene. In current hearing aids, the number of identified scenes is usually limited to about four, and the classifier is pretrained by the manufacturer, using “neural networks” and a large set of prerecorded scenes. In the future, it may be possible to identify many more types of scenes—for example, speech in background music, speech in a combination of background noise and music, music in car noise (which has most of its energy at low frequencies), speech in a reverberant setting, music in a reverberant setting, classical music, pop music, jazz music—and to adjust the parameters of the hearing aid accordingly. Possibly, as mentioned in Chap. 8, the scene classifiers could automatically learn the scenes that the individual user encounters most often.

There are some problems with the use of classifiers to control hearing aid settings. First, it is often not obvious how to adjust the hearing aid for any specific scene. For example, should the compression speed be different for speech and for music and should the frequency-gain characteristic be different for speech and for music? More research is clearly needed in this area. A second problem is more fundamental. Sensory systems generally seem to have evolved to provide accurate information about the outside world. In the case of the auditory system, the goal is to determine the properties of sound sources. This requires a consistent and systematic relationship between the properties of sound sources and the signals reaching the ears. But if a hearing aid changes its characteristics each time a new scene is identified, there is no longer a consistent relationship between the properties of the sound source and the signals reaching the ears. This may make it more difficult for a hearing aid user to interpret auditory scenes, especially when they are complex. More research is needed to assess the severity of this problem and to determine whether there are overall benefits to be obtained from the use of scene classifiers.

As speech recognition systems improve, the opportunity may develop for real-time processing specifically intended to optimize perception of individual speech sounds by modifying both the temporal and spectral aspects of the sounds. Some forms of processing of this type, implemented “by hand” on “clean” speech, have been shown to be beneficial (Gordon-Salant 1986; Hazan and Simpson 2000). However, automatic processing of this type may be extremely difficult to implement, especially when background sounds are present. Also, automatic processing may involve significant time delays (Yoo et al. 2007) and this would disrupt the temporal synchrony between the auditory and visual signals from the person speaking. The audio component of speech can be delayed by up to 20 ms before there is a noticeable and interfering mismatch between what is seen on the face and the lips of the speaker and what is heard (McGrath and Summerfield 1985). Although modern DSPs are able to perform very large numbers of computations in 20 ms, there may be intrinsic limitations in automatic processing to enhance specific speech sounds or features that prevent the processing delay from being reduced below 20 ms.
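
The distinction between computational speed and intrinsic delay can be made concrete with a short calculation. The sketch below assumes block-based processing in which the algorithm must buffer a full frame, plus any look-ahead, before it can produce output. The 20-ms limit is the value quoted above; the sample rate, frame sizes, and function names are hypothetical.

```python
AV_SYNC_LIMIT_MS = 20.0  # tolerable audio delay quoted in the text


def block_latency_ms(frame_samples, lookahead_samples, sample_rate_hz, compute_ms=0.0):
    """Minimum delay of block processing: buffering time plus computation time."""
    buffering_ms = 1000.0 * (frame_samples + lookahead_samples) / sample_rate_hz
    return buffering_ms + compute_ms


def within_av_limit(latency_ms, limit_ms=AV_SYNC_LIMIT_MS):
    """True if the audio delay stays within the audiovisual synchrony limit."""
    return latency_ms <= limit_ms


# Hypothetical 16-kHz system: a short frame fits the budget, a long one does not,
# even if the computation itself took no time at all.
short_frame_ms = block_latency_ms(128, 0, 16000.0)
long_frame_ms = block_latency_ms(512, 0, 16000.0)
```

Here the 512-sample frame alone costs 32 ms, so no increase in processor speed would bring it under the limit. An algorithm that needs to observe a substantial portion of a speech sound before modifying it faces the same constraint.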

11.5 Self-Calibrating Hearing Aids

Variations in the geometry of the external ear canal, the position of the receiver, and the type of seal all greatly affect the sound reaching the tympanic membrane. This usually requires real-ear measures to check and adjust the actual output of the hearing aid, as described in Chap. 9. A possible way of reducing the need for such measures is via self-measuring and self-calibrating features in hearing aids. Such features were originally proposed and implemented in the first full digital hearing aid (Engebretson et al. 1985). The self-calibration required a microphone facing inward, toward the tympanic membrane. As microphones continue to shrink in size or as other approaches emerge that do not require onboard sound measurement technology (Wiggins and Bowie 2013), self-calibrating hearing aids are likely to become more common in the future. Such systems can help in achieving target frequency- and level-dependent gains at the initial fitting and can greatly speed up the initial fitting process. In addition, they could potentially compensate for day-to-day variations resulting from, for example, cerumen accumulation and removal and different ear canal positions of the receiver resulting from removing and reinserting the device. Insertion of a hearing aid could automatically trigger a self-adjustment procedure to ensure that the device provided the desired output at the tympanic membrane.
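
The self-adjustment step described above amounts to comparing the measured output with the target in each frequency band and correcting the gain accordingly. The following is a minimal sketch under that assumption. The band frequencies, levels, and the 10-dB cap on any single correction are hypothetical, and a real device would also have to deal with measurement noise and feedback stability.

```python
def calibration_corrections(target_db, measured_db, max_step_db=10.0):
    """Per-band gain change (dB) that moves the measured output toward target.

    target_db and measured_db map band center frequency (Hz) to level (dB SPL)
    at the inward-facing microphone. Corrections are limited to max_step_db.
    """
    corrections = {}
    for band_hz, target in target_db.items():
        error = target - measured_db[band_hz]
        # Limit the size of any single adjustment as a simple safeguard.
        corrections[band_hz] = max(-max_step_db, min(max_step_db, error))
    return corrections


# Hypothetical values: the 2-kHz band is 15 dB too high after reinsertion.
targets = {500: 60.0, 2000: 70.0}
measured = {500: 58.0, 2000: 85.0}
corrections = calibration_corrections(targets, measured)
```

With these values the 500-Hz band is raised by 2 dB and the 2-kHz band is lowered by the capped 10 dB. Repeating the measurement after each adjustment would bring the output to target over successive steps.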

11.6 Wireless Connectivity

As discussed in Chap. 5 by Mecklenburger and Groth, wireless connectivity for hearing aids allows significant improvements in many hearing aid functions. Wireless connectivity allows the use of remote microphones and of signal processing in devices outside the hearing aid itself. Currently, the “streamer” modules described in Chap. 5 can communicate with the hearing aids worn on each ear. The microphones on a single “streamer” must be close together and do not provide the advantages for beamforming or spatial hearing benefits of the more separated microphone locations of the hearing aids worn on each ear, as discussed in Chaps. 4, 5, and 7. Future technology may allow wireless transmission of the outputs of the microphones on the two ear-level hearing aids to the “streamer” for signal processing and then transmission back to the ear-level devices. This could allow more computationally demanding signal processing than is possible at present.

In addition to the “streamer” component, the list of external devices that current hearing aids connect to wirelessly includes mobile telephones, television sets, and remote microphones. Because mobile telephones also independently connect wirelessly to a variety of other devices, such as the audio systems in cars, the number and variety of devices connected to hearing aids will increase automatically as the list of connected devices to mobile phones increases. It is already possible for a mobile telephone global positioning system to identify a specific location (e.g., a restaurant) and to select a set of parameters in the hearing aid that have previously been found to be preferred in that situation.

Currently, there is an emphasis on new technology embedded within “wearables,” small electronic devices that contain substantial computing power and sensors. Examples are glasses, wrist watches or other wrist-worn devices, and even contact lenses. Wearables may function as health and fitness monitors or medical devices. They collect physiological data from tiny sensors such as pressure sensors, temperature sensors, and accelerometers, analyze the data, and provide information to the wearer, often in real time via a visual display. Future hearing aids may incorporate such sensors or be linked wirelessly to devices containing the sensors and may present the information via an auditory speech signal tailored to the wearer. This represents only a small extension to the current capability of some hearing aids to provide a synthesized voice signal to indicate what program has been selected or to warn the user of the need to change the battery.

11.7 Tinnitus Treatment

Some hearing aids have the ability to generate sounds that may be used to mask tinnitus, to draw attention away from tinnitus, or to help the tinnitus sufferer to relax, especially when used together with appropriate counseling (Aazh et al. 2008; Sweetow and Sabes 2010). Future efforts may involve the use of hearing aids to supply digitally synthesized signals intended to reduce the severity of tinnitus using principles drawn from studies of neuroplasticity (Tass and Popovych 2012; Tass et al. 2012). The clinical effectiveness of such tinnitus intervention strategies has not yet been clearly determined. Further research is needed to determine the benefits of these approaches and to evaluate the relative effectiveness of the different types of tinnitus intervention.

11.8 Cognitively Controlled Hearing Aids

Hearing aids already exist that act as “binaural beamformers,” selectively picking up sounds from a particular, selectable direction (e.g., a specific talker); see Chaps. 4 and 5. A practical problem is that the hearing aids do not “know” which source the user wants to attend to at a specific time. There is evidence that brain activity and the corresponding evoked electrical responses change depending on which sound source a person is attending (Mesgarani and Chang 2012; Kidmose et al. 2014). In principle, therefore, the beamforming in hearing aids could be controlled by evoked potentials measured from the user such that the desired source/direction was automatically selected. This has sometimes been referred to as “cognitively controlled hearing aids.” There are many serious problems that need to be solved before such hearing aids become practical. A major problem is that users may switch attention very rapidly between sources from different directions. The hearing aids would need to switch almost as rapidly to avoid the directional beam “pointing” at the wrong source. Currently, considerable averaging over time is needed to extract “clean” evoked potentials from sensors on the scalp or in the ear canal (Kidmose et al. 2013). It is not known whether it will be possible to derive an evoked potential indicating the desired source signal or its direction with sufficient speed to satisfy the needs of the user. Research is currently ongoing to explore the feasibility of cognitively controlled hearing aids.
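
The conflict between averaging and switching speed can be quantified under a standard assumption: if the background activity is uncorrelated from one recording epoch to the next, averaging N epochs improves the signal-to-noise ratio (SNR) by 10 log10(N) dB. The sketch below uses that relation. The required SNR improvement and the epoch duration are hypothetical values, not figures from the cited studies.

```python
import math


def epochs_needed(snr_gain_db):
    """Number of averaged epochs required for a given SNR improvement in dB."""
    # The small offset guards against floating-point results such as 100.0000001.
    return math.ceil(10.0 ** (snr_gain_db / 10.0) - 1e-9)


def averaging_time_s(snr_gain_db, epoch_s):
    """Total recording time needed when each epoch lasts epoch_s seconds."""
    return epochs_needed(snr_gain_db) * epoch_s


# Hypothetical case: 20 dB of SNR improvement with 0.5-s epochs.
n_epochs = epochs_needed(20.0)
total_time_s = averaging_time_s(20.0, 0.5)
```

Under these assumptions 100 epochs, or 50 s of recording, would be needed, which is far longer than the time over which a listener may switch attention. This illustrates why faster ways of extracting the relevant signal would be required.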

11.9 Using Hearing Aids to Enhance Regeneration

At present, many laboratories throughout the world are investigating a variety of approaches to regeneration or repair of sensory and related structures to restore auditory function. The approaches include use of stem cells and a variety of gene therapies (Izumikawa et al. 2005; Oshima et al. 2010; Rivolta 2013; Ronaghi et al. 2014). Although these approaches are beginning to show promise, none are expected to be successful in the near future.

Because of the wide variety of cochlear pathologies and genetic disorders, it is likely that a variety of approaches will emerge that are pathology specific. The biological interventions are usually designed to imitate the normal patterns of biological development. These are very complex and involve cell differentiation regulated by nerve growth factors and other chemicals that are released at very specific developmental periods. Furthermore, normal auditory development at the cellular level requires auditory signals to guide development. Abnormal auditory input can result in abnormalities and changes at many levels in the auditory system (Sharma et al. 2007). Future hearing devices may be developed to enhance biological treatments for regeneration or repair. A future hearing aid system may include an acoustic hearing aid and a linked implanted component capable of eluting chemicals and even producing electrical signals. The system would be able to provide controlled acoustic, electrical, and pharmaceutical signals at the appropriate time to control the developmental process and, when complete, the device could be removed.

11.10 Concluding Remarks

Life expectancy is increasing, but hearing function continues to decrease with increasing age. Hence the need for hearing aids, and improvements in hearing aids, is greater than ever. Current hearing aids are effective in improving the audibility of sounds, but they remain of limited benefit in the situations in which they are most needed, namely in noisy and reverberant environments.

Knowledge and understanding of the impaired auditory system continue to improve, and effective technology development continues. Hopefully, this will lead to greater benefit of hearing aids in the future and to a much greater extent of hearing aid use among those with hearing loss.

References

Aazh, H., Moore, B. C. J., & Glasberg, B. R. (2008). Simplified form of tinnitus retraining therapy in adults: A retrospective study. BMC Ear, Nose and Throat Disorders, 8, 7. doi:10.1186/1472-6815-8-7.

Engebretson, A. M., Morley, R. E., & Popelka, G. R. (1985). Hearing aids, signal supplying apparatus, systems for compensating hearing deficiencies, and methods. US Patent 4548082.

Fay, J. P., Puria, S., & Steele, C. R. (2006). The discordant eardrum. Proceedings of the National Academy of Sciences of the USA, 103, 19743–19748.

Fay, J. P., Perkins, R., Levy, S. C., Nilsson, M., & Puria, S. (2013). Preliminary evaluation of a light-based contact hearing device for the hearing impaired. Otology & Neurotology, 34, 912–921.

Freed, D. J., & Soli, S. D. (2006). An objective procedure for evaluation of adaptive antifeedback algorithms in hearing aids. Ear and Hearing, 27, 382–398.

Gatehouse, S., Naylor, G., & Elberling, C. (2006). Linear and nonlinear hearing aid fittings—1. Patterns of benefit. International Journal of Audiology, 45, 130–152.

Gordon-Salant, S. (1986). Recognition of natural and time/intensity altered CVs by young and elderly subjects with normal hearing. The Journal of the Acoustical Society of America, 80, 1599–1607.

Hazan, V., & Simpson, A. (2000). The effect of cue-enhancement on consonant intelligibility in noise: Speaker and listener effects. Language and Speech, 43, 273–294.

Izumikawa, M., Minoda, R., Kawamoto, K., Abrashkin, K. A., Swiderski, D. L., et al. (2005). Auditory hair cell replacement and hearing improvement by Atoh1 gene therapy in deaf mammals. Nature Medicine, 11, 271–276.

Kates, J. M. (2008). Digital hearing aids. San Diego: Plural.

Kidmose, P., Looney, D., Ungstrup, M., Rank, M. L., & Mandic, D. P. (2013). A study of evoked potentials from ear-EEG. IEEE Transactions on Biomedical Engineering, 60, 2824–2830.

Kidmose, P., Rank, M. L., Ungstrup, D., Park, C., & Mandic, D. P. (2014). A Yarbus-style experiment to determine auditory attention. In 32nd Annual International Conference of the IEEE EMBS (pp. 4650–4653). Buenos Aires, Argentina: IEEE.

Killion, M. C., Wilber, L. A., & Gudmundsen, G. I. (1988). Zwislocki was right: A potential solution to the “hollow voice” problem (the amplified occlusion effect) with deeply sealed earmolds. Hearing Instruments, 39, 14–18.

Levy, S. C., Freed, D. J., & Puria, S. (2013). Characterization of the available feedback gain margin at two device microphone locations, in the fossa triangularis and behind the ear, for the light-based contact hearing device. The Journal of the Acoustical Society of America, 134, 4062.

Lin, M.-C., Gong, M., Lu, B., Wu, Y., Wang, D.-Y., et al. (2015). An ultrafast rechargeable aluminium-ion battery. Nature, doi:10.1038/nature14340.

Lunner, T., & Sundewall-Thoren, E. (2007). Interactions between cognition, compression, and listening conditions: Effects on speech-in-noise performance in a two-channel hearing aid. Journal of the American Academy of Audiology, 18, 604–617.

Manders, A. J., Simpson, D. M., & Bell, S. L. (2012). Objective prediction of the sound quality of music processed by an adaptive feedback canceller. IEEE Transactions on Audio, Speech and Language Processing, 20, 1734–1745.

McGrath, M., & Summerfield, Q. (1985). Intermodal timing relations and audio-visual speech recognition by normal-hearing adults. The Journal of the Acoustical Society of America, 77, 678–685.

Mejia, J., Dillon, H., & Fisher, M. (2008). Active cancellation of occlusion: An electronic vent for hearing aids and hearing protectors. The Journal of the Acoustical Society of America, 124, 235–240.

Mesgarani, N., & Chang, E. F. (2012). Selective cortical representation of attended speaker in multi-talker speech perception. Nature, 485, 233–236.

Miles, R. N., & Hoy, R. R. (2006). The development of a biologically-inspired directional microphone for hearing aids. Audiology & Neurotology, 11, 86–94.

Moore, B. C. J. (2008). The choice of compression speed in hearing aids: Theoretical and practical considerations, and the role of individual differences. Trends in Amplification, 12, 103–112.

Oshima, K., Suchert, S., Blevins, N. H., & Heller, S. (2010). Curing hearing loss: Patient expectations, health care practitioners, and basic science. Journal of Communication Disorders, 43, 311–318.

Palmer, C. V. (2009). A contemporary review of hearing aids. Laryngoscope, 119, 2195–2204.

Perkins, R., Fay, J. P., Rucker, P., Rosen, M., Olson, L., & Puria, S. (2010). The EarLens system: New sound transduction methods. Hearing Research, 263, 104–113.

Rivolta, M. N. (2013). New strategies for the restoration of hearing loss: Challenges and opportunities. British Medical Bulletin, 105, 69–84.

Ronaghi, M., Nasr, M., Ealy, M., Durruthy-Durruthy, R., Waldhaus, J., et al. (2014). Inner ear hair cell-like cells from human embryonic stem cells. Stem Cells and Development, 23, 1275–1284.

Sharma, A., Gilley, P. M., Dorman, M. F., & Baldwin, R. (2007). Deprivation-induced cortical reorganization in children with cochlear implants. International Journal of Audiology, 46, 494–499.

Stone, M. A., & Moore, B. C. J. (2002). Tolerable hearing-aid delays. II. Estimation of limits imposed during speech production. Ear and Hearing, 23, 325–338.

Stone, M. A., Moore, B. C. J., Meisenbacher, K., & Derleth, R. P. (2008). Tolerable hearing-aid delays. V. Estimation of limits for open canal fittings. Ear and Hearing, 29, 601–617.

Sweetow, R. W., & Sabes, J. H. (2010). Effects of acoustical stimuli delivered through hearing aids on tinnitus. Journal of the American Academy of Audiology, 21, 461–473.

Tass, P. A., & Popovych, O. V. (2012). Unlearning tinnitus-related cerebral synchrony with acoustic coordinated reset stimulation: Theoretical concept and modelling. Biological Cybernetics, 106, 27–36.

Tass, P. A., Adamchic, I., Freund, H. J., von Stackelberg, T., & Hauptmann, C. (2012). Counteracting tinnitus by acoustic coordinated reset neuromodulation. Restorative Neurotology and Neuroscience, 30, 137–159.

Wiggins, D., & Bowie, D. L. (2013). Calibrated hearing aid tuning appliance. US Patent US8437486 B2.

Yoo, S. D., Boston, J. R., El-Jaroudi, A., Li, C. C., Durrant, J. D., et al. (2007). Speech signal modification to increase intelligibility in noisy environments. The Journal of the Acoustical Society of America, 122, 1138–1149.

Conflict of interest

Gerald Popelka declares that he has no conflict of interest. Brian C.J. Moore has conducted research projects in collaboration with (and partly funded by) Phonak, Starkey, Siemens, Oticon, GN ReSound, Bernafon, Hansaton, and Earlens. Brian C.J. Moore acts as a consultant for Earlens.

Copyright information

© 2016 Springer International Publishing Switzerland

Cite this chapter

Popelka, G.R., Moore, B.C.J. (2016). Future Directions for Hearing Aid Development. In: Popelka, G., Moore, B., Fay, R., Popper, A. (eds) Hearing Aids. Springer Handbook of Auditory Research, vol 56. Springer, Cham. https://doi.org/10.1007/978-3-319-33036-5_11

DOI: https://doi.org/10.1007/978-3-319-33036-5_11

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-33034-1

Online ISBN: 978-3-319-33036-5

eBook Packages: Biomedical and Life Sciences, Biomedical and Life Sciences (R0)