Abstract

Specific kinds of neuronal interactions, such as phase coupling of neuronal oscillations, are likely to be essential systems-level mechanisms for coordinating neuronal communication, integration, and segregation. The functional roles of these interactions during cognitive tasks in healthy humans can be investigated with magneto- and electroencephalography (MEG/EEG), the only means for noninvasive electrophysiological recordings of human cortical activity. While advances in source modeling have opened new avenues for assessing inter-areal interactions with MEG/EEG, several factors limit the accuracy and inferential value of such analyses. In this chapter, we provide an overview of common source analysis strategies for mapping inter-areal interactions with MEG/EEG. Linear mixing between sources, as caused by volume conduction and signal mixing, is the principal confounder in connectivity analysis and always leads to false positive observations. We discuss the sensitivity of different interaction metrics to directly and indirectly caused false positives and conclude with approaches to mitigate these problems. In conclusion, MEG and EEG are becoming increasingly useful for assessing inter-areal neuronal interaction in humans.


Keywords

- Independent Component Analysis

- Volume Conduction

- Neuronal Oscillation

- True Interaction

- Source Reconstruction

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

1.1 Measuring Neuronal Oscillations and Synchronization in Noninvasive Electrophysiological Recordings

During the past two decades, a number of seminal studies have shown that synchronous neuronal activity and neuronal oscillations can mechanistically support the coordination of neuronal processing across distributed neuronal assemblies (Fries 2015; Singer 1999, 2009).

Neuronal oscillations occur in a wide range of frequency bands (at least 0.01–200 Hz) associated with distinct cognitive functions (Buzsaki and Draguhn 2004) and can be characterized by their amplitude, phase, and frequency. Behaviorally relevant oscillations are found at all scales of the nervous system and can be measured at millisecond temporal resolution by electrophysiological recordings. Spatial resolutions range from macroscopic (1–3 cm) in magneto- and electroencephalography (MEG and EEG), through mesoscopic (1–10 mm) in human intracranial EEG (iEEG), to microscopic levels in extracellular field potentials and neuronal membrane potentials recorded with microelectrodes in animal models. The amplitude of neuronal oscillations in macro- and mesoscopic recordings is largely determined by the fraction of local neurons that receive coherent synaptic inputs or operate coherently as a synchronized assembly among less coherent neurons. Amplitude, hence, is primarily a measure of local synchronization. Phase, on the other hand, quantifies the temporal evolution of the oscillatory cycle (see Fig. 1b) and is essential for assessing the phase-locking of local oscillations to internal or external events. Importantly, phase is also needed to determine whether signals from two regions have a consistent phase relationship: a statistically nonrandom inter-areal phase relationship indicates phase synchronization.

Fig. 1 Oscillations facilitate intra- and inter-areal synchronization. (a) Oscillations reflect local synchronization of neuronal spiking within a population. (b) Synchronization of two different neuronal populations at a specific phase difference enables communication. In this example, synchrony occurs at zero phase difference, so neurons in both populations spike coincidentally and are inhibited at the opposite (180°) phase of the cycle. Note that, depending on the distance between the populations, synchronization can also occur at non-zero phase differences.

1.2 Overview of the Confounds in the Estimation of Synchrony in Electrophysiological Recordings

While sensor-to-sensor analyses of MEG and EEG data have indicated that task demands for cognitive integration are associated with long-range synchronization of neuronal oscillations (Palva and Palva 2012), these analyses not only suffer from confounds introduced by signal mixing and source amplitude modulations but also yield essentially no information about the anatomical structures generating the signals. In contrast, invasive recordings provide precise information on the anatomical location of the sources of neuronal oscillations and avoid confounds due to signal mixing. Their relevance to cortex-wide phase synchrony is, however, limited by the sparse sampling of cortical locations that they offer. Despite methodological challenges that will be detailed below, MEG and EEG recordings combined with source reconstruction methods can be used to investigate cortex-wide networks of phase synchrony (Palva et al. 2005, 2010, 2011; Palva and Palva 2012). Of the two modalities, MEG offers better spatial resolution because magnetic fields are much less distorted by volume conduction and the high resistivity of the skull, but combining MEG and EEG improves the detectability of some sources because the two have distinct sensitivities (Sharon et al. 2007).

1.3 Problem Statement and Outline

In this chapter, we will first outline the data analysis “pipeline” for MEG and EEG from preprocessing to filtering and inverse modeling (Sect. 2). We will assess the basic interaction metrics that can be used to estimate pairwise interactions and their inherent advantages and disadvantages (Sect. 3). Section 4 will present the causes and consequences of linear mixing arising from volume conduction and signal mixing, the most profound challenge arising in MEG/EEG data analysis, and how sensitive different interaction metrics are to this problem. In Sect. 5, the advantages and challenges of all-to-all connectivity mapping will be discussed, and in Sect. 6, we will introduce methods of edge bundling to minimize the effects of signal mixing and improve the ratio of true to false positives.

2 The MEG/EEG Data Analysis Pipeline

2.1 Preprocessing of MEG/EEG Data

Identifying behaviorally relevant patterns of inter-areal correlations or synchronization in MEG/EEG data can be confounded by numerous sources of artifacts. Below, we outline the analysis pipeline and the preprocessing steps that are essential for excluding extracranial signal sources and artifacts. MEG/EEG data are usually recorded while participants carry out a cognitive task of interest or, if applicable, are in a resting state. In a step often referred to as preprocessing, the sensor-space raw data are cleaned or de-noised using manual and/or automatic procedures. Next, the preprocessed sensor-space time series are filtered to extract their oscillatory components in relatively narrow frequency bands. The time series of cortical sources are then estimated with an appropriate source reconstruction technique and used to compute the strength of inter-areal correlations or synchronization between each pair of cortical areas in time windows of interest. Finally, statistical thresholding procedures are used to identify which pairs of cortical areas are significantly phase synchronized, yielding the spatial, spectral, and temporal characteristics of inter-areal phase-synchronized networks.

The preprocessing of raw MEG/EEG sensor-space data involves both visual data inspection and several noise reduction techniques. Raw data are visually inspected to remove bad channels (sensors with excessive noise or recurring artifacts) and to mark bad data segments, such as time periods with increased environmental noise, head movement artifacts, or large-amplitude facial or neck muscle artifacts. MEG and EEG data are also contaminated by both environmental and participant noise that cannot be removed by simple manual inspection. Although MEG scanners are set up in shielded rooms designed to minimize noise and interference from other sources of magnetic fields, some extracranial environmental noise will unavoidably be present in the raw signal. Signal separation techniques such as the temporal extension of signal space separation (tSSS) (Taulu et al. 2005) can minimize this type of noise. Participants themselves also contribute continuous non-cortical noise to MEG and EEG recordings, most often in the form of cardiac artifacts as well as eye blinks or movements. Using independent component analysis (ICA) (Bell and Sejnowski 1995) together with electrooculography (EOG) and electrocardiography (ECG) recorded concurrently with MEG/EEG, data components arising from these interferences can be identified and removed.
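The component-rejection step can be sketched in Python. This is a minimal sketch that assumes the ICA decomposition (component time series and mixing matrix) has already been computed with an ICA implementation of your choice; in the toy example, ground-truth sources stand in for it. Flagging components by their absolute Pearson correlation with the EOG/ECG channel, and the 0.5 cutoff, are illustrative assumptions rather than a recommended criterion.

```python
import numpy as np

def reject_artifact_components(sources, mixing, reference, threshold=0.5):
    """Zero out ICA components that correlate with a reference artifact channel.

    sources   : (n_components, n_times) ICA component time series
    mixing    : (n_channels, n_components) mixing matrix, data ~ mixing @ sources
    reference : (n_times,) concurrently recorded EOG or ECG signal
    threshold : hypothetical cutoff on |Pearson r| for flagging a component
    """
    r = np.array([np.corrcoef(comp, reference)[0, 1] for comp in sources])
    bad = np.abs(r) > threshold
    cleaned_sources = sources.copy()
    cleaned_sources[bad] = 0.0          # drop the flagged components
    return mixing @ cleaned_sources, np.flatnonzero(bad)

# Toy example: ground-truth sources stand in for an ICA decomposition.
rng = np.random.default_rng(0)
n_times = 5000
t = np.arange(n_times) / 500.0                              # 500 Hz sampling
brain = np.sin(2 * np.pi * 10 * t)                          # 10 Hz "cortical" source
onsets = (rng.random(n_times) < 0.002).astype(float)
blink = np.convolve(onsets, np.hanning(100), mode="same")   # blink-like transients
eog = blink + 0.05 * rng.standard_normal(n_times)           # noisy EOG channel
sources = np.vstack([brain, blink])
mixing = rng.standard_normal((4, 2))                        # 4 sensors
cleaned, bad = reject_artifact_components(sources, mixing, eog)
```

Here only the blink-like component correlates with the EOG channel, so the cleaned sensor data contain just the projection of the 10 Hz source.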

2.1.1 Filtering for the Extraction of Narrowband Oscillations from Broadband Signals

Filtering is necessary to extract the oscillatory components of a signal in different frequency bands. Classical methods for converting a time-domain signal into the frequency domain, such as the Fourier transform, are, however, suboptimal here: they assume stationary signals, cannot resolve how the spectral content changes over time (Li et al. 2007), and yield a fixed, uniform frequency-bin spacing. In contrast, analyses of task modulations of neuronal oscillations require techniques that yield estimates of the instantaneous amplitude and phase of the signals, most often from a time–frequency transform of the original signal (Bruns 2004). Using such time-resolved spectral analysis, a time-dependent spectrum s(t,f) can be computed from a time series of interest s(t), where t is time and f is frequency. This time–frequency representation is complex-valued and consists, for each time point, of an instantaneous amplitude A(t,f) and an instantaneous phase θ(t,f):

\[ s(t,f) = A(t,f)\, e^{i\theta(t,f)} \]

Such complex-valued time–frequency representations can be obtained using wavelet-based filtering. The time-domain signal s(t) is convolved with a family of filter kernels, wavelets \( w(t, f_0) \), that cover the frequency range of interest. For each center frequency \( f_0 \), the wavelet is the product of a complex-valued oscillation at the frequency of interest and a bell-shaped, real-valued Gaussian envelope:

\[ w(t, f_0) = A \exp\left(-\frac{t^2}{2\sigma_t^2}\right) \exp\left(2 i \pi f_0 t\right), \qquad \sigma_t = \frac{m}{2\pi f_0}, \]

where A is a normalization constant and \( \sigma_t \) is the time-domain standard deviation of the envelope (the corresponding frequency-domain standard deviation is \( \sigma_f = 1/(2\pi\sigma_t) = f_0/m \)). The convolution \( S(t, f_0) = (s * w)(t, f_0) \) yields a complex time series \( S(t, f_0) \) whose modulus is the amplitude and whose angle is the phase of the signal s in a frequency band centered on \( f_0 \). The parameter m determines the effective number of oscillation cycles used to estimate signal amplitude and phase and therefore sets the compromise between time and frequency resolution.
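The wavelet filtering can be sketched with NumPy as follows. Truncating the wavelet at +/- 5 standard deviations and scaling the normalization constant A so that a real sinusoid at \( f_0 \) keeps its amplitude are assumptions made for this illustration; other normalizations are common. The test signal is a pure 10 Hz cosine, so away from the edges the recovered amplitude and phase should match the generating values.

```python
import numpy as np

def morlet_wavelet(f0, fs, m=5.0):
    """Complex Morlet wavelet w(t, f0) sampled at fs, with m effective cycles."""
    sigma_t = m / (2 * np.pi * f0)                  # time-domain SD of the envelope
    half = int(np.ceil(5 * sigma_t * fs))           # truncate at +/- 5 SD
    t = np.arange(-half, half + 1) / fs             # symmetric support centred on 0
    gauss = np.exp(-t**2 / (2 * sigma_t**2))
    A = 2.0 / gauss.sum()   # assumption: keeps the amplitude of a real sinusoid at f0
    return A * gauss * np.exp(2j * np.pi * f0 * t)

def wavelet_filter(s, f0, fs, m=5.0):
    """Instantaneous amplitude and phase of s in the band centred on f0."""
    S = np.convolve(s, morlet_wavelet(f0, fs, m), mode="same")
    return np.abs(S), np.angle(S)

fs = 1000.0
t = np.arange(2000) / fs
s = 2.0 * np.cos(2 * np.pi * 10 * t + 0.3)   # 10 Hz test signal with amplitude 2
amp, phase = wavelet_filter(s, f0=10.0, fs=fs)
```

Larger m narrows the frequency band (\( \sigma_f = f_0/m \)) at the cost of a longer wavelet and therefore poorer time resolution.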

2.1.2 Source Reconstruction

While the magnetic fields and electric potentials generated by known current sources can be computed accurately through forward modeling, estimating the locations and strengths of the current sources underlying MEG/EEG data is an ill-posed inverse problem without a unique solution. Nevertheless, inverse modeling can produce good estimates of those sources through the use of constraints such as minimization of total current and modeling of source dipoles on individual cortical surfaces obtained from MRI scans (Palva et al. 2010; Palva and Palva 2012).

Before solving the inverse problem, a forward model must be constructed that gives the distribution of currents and magnetic fields created by known sources in the head. For this, volume conduction models of the head are created assuming a spherical model or spherical harmonics (Mosher et al. 1999), which should be integrated with individual anatomical information obtained with MRI. For accurate modeling, co-registration of MEG/EEG and MRI should be as accurate as possible; subjects’ head movements should be as small as possible (Whalen et al. 2008; Gross et al. 2013).

The two most common approaches to inverse modeling are the Beamformer method (Van Veen et al. 1997) and minimum norm estimates (MNE) (Hamalainen and Sarvas 1989; Lin et al. 2006).
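For the minimum-norm approach, the core computation is a single linear operator applied to the sensor data. The sketch below assumes an identity source covariance (no depth weighting or orientation constraints), uses an assumed default regularization of 1/9, and replaces the real forward model with a random stand-in lead field. It also illustrates the ill-posedness noted above: even a single active source is spread over many reconstructed sources.

```python
import numpy as np

def mne_inverse_operator(G, noise_cov, lambda2=1.0 / 9.0):
    """L2 minimum-norm inverse operator W = G^T (G G^T + lambda2 * C)^(-1).

    G         : (n_sensors, n_sources) forward (lead-field) matrix
    noise_cov : (n_sensors, n_sensors) noise covariance C
    lambda2   : regularization parameter (1/9, i.e. 1/SNR^2 with SNR = 3,
                is an assumed default here)
    Assumes an identity source covariance: no depth weighting, no orientation priors.
    """
    M = G @ G.T + lambda2 * noise_cov
    return np.linalg.solve(M, G).T      # M is symmetric, so (M^-1 G)^T = G^T M^-1

rng = np.random.default_rng(1)
G = rng.standard_normal((30, 500))      # toy lead field: 30 sensors, 500 sources
W = mne_inverse_operator(G, np.eye(30), lambda2=1e-6)
x_true = np.zeros(500)
x_true[42] = 1.0                        # a single active source
x_hat = W @ (G @ x_true)                # the estimate is spread over many sources
```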

MNE yields time series for 6000–8000 sources ("vertices") covering the cortical surface. Considering that MEG/EEG recordings have only two to three hundred sensors and even fewer degrees of freedom, these source time series are highly redundant. Thus, to decrease redundancy and improve the signal-to-noise ratio (SNR), as well as to analyze MEG/EEG data in a form directly comparable with MRI studies, the MNE vertex time series can be collapsed into time series of a few hundred cortical areas. These "cortical parcel" time series can be obtained either by computing a weighted average of the vertex time series (Palva et al. 2010, 2011) or by using the time series of the vertex with maximum power (Hillebrand et al. 2012).
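The two collapse strategies can be sketched as follows, assuming a per-vertex parcel label array such as one derived from an anatomical parcellation. The function name and the normalization of the weighted average by the sum of absolute weights are illustrative choices, not the exact procedure of the cited studies.

```python
import numpy as np

def collapse_to_parcels(vertex_ts, labels, weights=None, method="mean"):
    """Collapse vertex time series into parcel time series.

    vertex_ts : (n_vertices, n_times) real or complex array
    labels    : (n_vertices,) parcel index of each vertex (every parcel non-empty)
    weights   : optional (n_vertices,) weights for method="mean"; they may be
                signed, e.g. to flip vertices with opposite source orientation
    method    : "mean" (weighted average) or "max_power" (vertex with most power)
    """
    if weights is None:
        weights = np.ones(len(labels))
    n_parcels = labels.max() + 1
    out = np.zeros((n_parcels, vertex_ts.shape[1]), dtype=vertex_ts.dtype)
    for p in range(n_parcels):
        idx = np.flatnonzero(labels == p)
        if method == "mean":
            w = weights[idx]
            out[p] = w @ vertex_ts[idx] / np.sum(np.abs(w))
        elif method == "max_power":
            power = np.mean(np.abs(vertex_ts[idx]) ** 2, axis=1)
            out[p] = vertex_ts[idx[np.argmax(power)]]
        else:
            raise ValueError(f"unknown method: {method}")
    return out

vertex_ts = np.array([[1.0, 2.0], [3.0, 4.0], [10.0, 0.0], [0.0, 1.0]])
labels = np.array([0, 0, 1, 1])
mean_ts = collapse_to_parcels(vertex_ts, labels)                      # [[2, 3], [5, 0.5]]
peak_ts = collapse_to_parcels(vertex_ts, labels, method="max_power")  # [[3, 4], [10, 0]]
```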

2.1.3 Parcellations

Anatomical parcellations, such as Desikan-Killiany and Destrieux, are derived from brain atlases of cortical gyral and sulcal structure revealed in MRI structural imaging (Dale et al. 1999; Fischl et al. 1999, 2002, 2004). As finer resolutions and/or more balanced parcel sizes are commonly needed, parcels from these atlases can be subdivided further (Cammoun et al. 2012; Hagmann et al. 2008; Palva et al. 2010, 2011). To accurately localize parcels on individual brains, structural scans can be recorded from all subjects with MRI. Software such as FreeSurfer (http://surfer.nmr.mgh.harvard.edu) can then be used for volumetric segmentation, surface reconstruction, flattening, parcellation, and neuroanatomical labeling of individual brains.

2.1.4 Fidelity-Optimized Source Solution Collapse Operators

One approach to maximizing source reconstruction accuracy is to use sparse, fidelity-optimized collapse operators for collapsing the source vertex time series into parcel time series. Simulations are used to select, for each parcel, the MNE-reconstructed vertex time series that best represent the true parcel time series: simulated time series for each parcel are forward and inverse modeled to compute "fidelity," a measure of how accurately an MNE vertex time series represents the original time series. Only the vertices with the highest fidelity are used to compute a parcel's time series as a fidelity-weighted average. This enhanced time series reconstruction has been shown to improve the accuracy of subsequent analyses of both local dynamics and large-scale interaction mapping while decreasing computational load (Korhonen et al. 2014; Fig. 2).

Fig. 2 Optimization of the inverse collapse weighting operator increases the fidelity of all parcels, especially on the lateral surface. Adapted from Korhonen et al. (2014). (a) Vertex and parcel fidelities of inverse solutions collapsed with standard anatomical and with fidelity-optimized weighting operators for one representative subject. Low-fidelity (f < 0.11) parcels are colored black. (b) Grand-average parcel fidelity for anatomical and fidelity-optimized weighting operators. The color scale is as in a, and the group-level low-fidelity parcels are identified on the anatomical map (bottom panel). (c) Significant parcel fidelity differences between inverse solutions collapsed with the two operators (opt–anat; p < 0.001, uncorrected, paired t-test across 13 subjects; parcels without a significant change are uncolored).
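A simplified version of fidelity-weighted collapsing is sketched below. It assumes that fidelity is measured as the real part of the complex phase-locking value between a parcel's simulated time series and each vertex's reconstructed time series, and that a fixed fraction of the highest-fidelity vertices is kept per parcel; both choices, and the helper names, are hypothetical stand-ins for the procedure of Korhonen et al. (2014). Using signed fidelity as the weight lets anti-phase vertices be sign-flipped rather than cancel out.

```python
import numpy as np

def vertex_fidelity(sim_parcel_ts, rec_vertex_ts, labels):
    """Fidelity of each vertex, taken here (assumption) as Re(cPLV) between the
    simulated time series of its parcel and the vertex's reconstructed time series.
    Both inputs are complex narrowband (analytic) time series."""
    ref = sim_parcel_ts[labels]                      # (n_vertices, n_times)
    dphi = np.angle(ref) - np.angle(rec_vertex_ts)
    return np.real(np.mean(np.exp(1j * dphi), axis=1))

def fidelity_weights(fidelity, labels, keep_fraction=0.5):
    """Hypothetical sparse operator: keep the highest-|fidelity| vertices of each
    parcel and use their signed fidelity as weights (a negative weight flips sign)."""
    weights = np.zeros_like(fidelity)
    for p in np.unique(labels):
        idx = np.flatnonzero(labels == p)
        n_keep = max(1, int(np.ceil(keep_fraction * idx.size)))
        best = idx[np.argsort(-np.abs(fidelity[idx]))[:n_keep]]
        weights[best] = fidelity[best]
    return weights

# Toy parcel with four vertices: in phase, anti-phase, 90 deg and 60 deg shifted.
t = np.arange(1000)
sim = np.exp(2j * np.pi * 0.01 * t)[None, :]            # one simulated parcel
shifts = np.array([0.0, np.pi, np.pi / 2, np.pi / 3])
rec = sim[0] * np.exp(1j * shifts)[:, None]             # reconstructed vertices
labels = np.zeros(4, dtype=int)

fid = vertex_fidelity(sim, rec, labels)                 # approx. [1, -1, 0, 0.5]
w = fidelity_weights(fid, labels)
parcel_ts = w @ rec / np.sum(np.abs(w))                 # fidelity-weighted average
```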

3 Interaction Metrics, Solutions to the Linear Mixing Problem, and Residual Challenges

To quantify the connections between signal pairs, various interaction metrics exist that can be estimated from either the amplitude or the phase of signals’ time series.

3.1 Amplitude Correlations

The correlations between amplitude envelopes can be assessed with the Pearson correlation coefficient (CC). CC will, however, yield inflated values in the presence of linear mixing. To estimate amplitude correlations in a manner insensitive to linear mixing, the narrowband time series are orthogonalized for each pair of cortical parcels or electrode contacts using linear regression (Brookes et al. 2012), and the orthogonalized correlation coefficient (oCC) is computed from the amplitude envelopes of the orthogonalized time series:

\[ \mathrm{oCC} = \frac{1}{2}\left[\mathrm{CC}\left(A_X, A_{Y|X}\right) + \mathrm{CC}\left(A_Y, A_{X|Y}\right)\right] \]

where \( A_X \), \( A_Y \) and \( A_{X|Y} \), \( A_{Y|X} \) are the amplitude envelopes of the original and orthogonalized time series, respectively.

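The real-valued narrowband time series X and Y can be orthogonalized by regressing one onto the other and keeping the residual:

\[ Y_{|X}(t) = Y(t) - \frac{\sum_{t'} X(t')\,Y(t')}{\sum_{t'} X(t')^{2}}\, X(t), \]

and analogously for \( X_{|Y} \).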
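The orthogonalization and the oCC can be sketched with NumPy, assuming the real-valued regression form of orthogonalization written above and an FFT-based analytic signal for the amplitude envelopes. The toy example adds strong zero-lag leakage of x into an independent signal y: the envelope correlation with x is inflated before orthogonalization and close to zero after y is regressed on x. The signal parameters are arbitrary illustrative choices.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal of a real 1-D array via the FFT (Hilbert-transform construction)."""
    n = x.size
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(x) * h)

def envelope(x):
    return np.abs(analytic_signal(x))

def orthogonalize(y, x):
    """Y_|X: residual of the least-squares regression of real signal y on x."""
    return y - (np.dot(x, y) / np.dot(x, x)) * x

def cc(a, b):
    return np.corrcoef(a, b)[0, 1]

def occ(x, y):
    """oCC = 0.5 * [CC(A_X, A_Y|X) + CC(A_Y, A_X|Y)]."""
    return 0.5 * (cc(envelope(x), envelope(orthogonalize(y, x)))
                  + cc(envelope(y), envelope(orthogonalize(x, y))))

# Toy data: independent 40 Hz signals with slow random envelopes and phase drift.
rng = np.random.default_rng(0)
fs, n = 1000, 200_000
t = np.arange(n) / fs

def narrowband():
    z = np.convolve(rng.standard_normal(n), np.hanning(200), mode="same")
    amp = np.exp(0.5 * z / z.std())                    # positive, slowly varying
    phase = np.cumsum(0.05 * rng.standard_normal(n))   # slow phase drift
    return amp * np.cos(2 * np.pi * 40 * t + phase)

x = narrowband()
y = orthogonalize(narrowband(), x)   # exactly orthogonal to x, for a clean demo
y_leak = y + 2.0 * x                 # strong zero-lag leakage of x into y

cc_raw = cc(envelope(x), envelope(y_leak))                     # inflated by leakage
cc_orth = cc(envelope(x), envelope(orthogonalize(y_leak, x)))  # leakage regressed out
occ_value = occ(x, y_leak)
```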

3.2 Phase Locking and Phase Lag Index

Phase locking between two sources is statistically indicated by a nonrandom distribution of their phase differences. The most common index is the phase locking value (PLV), computed from the phases \( \theta_1 \) and \( \theta_2 \) of the two signals (Lachaux et al. 1999; Sinkkonen et al. 1995):

\[ \mathrm{PLV} = \frac{1}{N_t}\left|\sum_{n=1}^{N_t} e^{i\left[\theta_1(n) - \theta_2(n)\right]}\right| \]

where \( N_t \) is the number of samples pooled across trials and/or time and i is the imaginary unit.

PLV is 1 for perfect coupling (a delta-function phase-difference distribution) and approaches 0 for a uniform phase-difference distribution as \( N_t \to \infty \). If samples are independent and the marginal phase distributions are uniform, the no-interaction null hypothesis corresponds to a uniform phase-difference distribution, and the Rayleigh test can be applied. When samples pooled across time are not independent, and/or when the underlying process is not sinusoidal (see Nikulin et al. 2007), statistical testing needs to be carried out with surrogate data. Further, PLV is biased by sample size, and hence sample sizes always need to be equalized across conditions. It is also helpful to define the complex PLV of the two phase time series \( \theta_1 \) and \( \theta_2 \):

\[ \mathrm{cPLV} = \frac{1}{N_t}\sum_{n=1}^{N_t} e^{i\left[\theta_1(n) - \theta_2(n)\right]} \]

so that PLV = |cPLV|. PLV is equally sensitive to coupling at all phase differences, including zero, and is therefore also inflated by linear mixing. The imaginary part of cPLV is sensitive only to phase differences other than 0 and ±π, and thus the imaginary PLV, iPLV = |Im(cPLV)|, can be used to estimate phase coupling in a manner insensitive to linear mixing.
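PLV, cPLV, and iPLV can be computed directly from phase time series, for example phases obtained with wavelet filtering. The Rayleigh p-value below uses Zar's approximation and is only meaningful under the independence and uniform-marginal assumptions stated above; for pooled, dependent samples a surrogate-based test would be needed instead. Function names are illustrative.

```python
import numpy as np

def cplv(theta1, theta2):
    """Complex PLV: mean of exp(i * (theta1 - theta2)) over the pooled samples."""
    return np.mean(np.exp(1j * (theta1 - theta2)))

def plv(theta1, theta2):
    return np.abs(cplv(theta1, theta2))

def iplv(theta1, theta2):
    return np.abs(np.imag(cplv(theta1, theta2)))

def rayleigh_p(plv_value, n):
    """Approximate Rayleigh-test p-value (Zar's approximation) for a PLV computed
    from n independent samples with uniform marginal phases."""
    r_n = n * plv_value
    p = np.exp(np.sqrt(1 + 4 * n + 4 * (n**2 - r_n**2)) - (1 + 2 * n))
    return min(float(p), 1.0)

rng = np.random.default_rng(0)
n = 5000
theta = rng.uniform(-np.pi, np.pi, n)
lagged = theta - np.pi / 2 + 0.3 * rng.standard_normal(n)   # coupled, 90 deg lag
zero_lag = theta + 0.3 * rng.standard_normal(n)             # coupled, zero lag
```

Both coupled pairs have similar PLV, but only the lagged pair has a large iPLV; zero-lag coupling, whether genuine or caused by mixing, is invisible to iPLV.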

An alternative approach quantifies the asymmetry of the distribution of phase differences \( \varPhi \) around zero. For time series that are not phase coupled, this distribution is flat, and for zero-lag coupling such as that produced by linear mixing it is symmetric around 0 (or ±π); in both cases, positive and negative phase differences are equally likely. A consistent excess of phase differences of one sign can therefore be taken as evidence of lagged phase synchronization. From this, the phase lag index (PLI) is derived: \( \mathrm{PLI}=\left|\langle \operatorname{sign}(\varPhi)\rangle\right| \), where \( \varPhi \) is wrapped to (−π, π] and ⟨ ⟩ denotes the expectation value (Stam et al. 2007; Stam and van Straaten 2012).
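The PLI and, for comparison, the weighted PLI (wPLI) of Vinck et al. (2011) can be sketched as follows. Computing the sign of sin(Φ) avoids explicitly wrapping Φ; the wPLI weights each sample by the magnitude of the imaginary part of z1·conj(z2), a definition taken from Vinck et al. rather than from this chapter. The toy phases are deterministic, so the expected values follow directly: a consistent lag gives PLI = 1, and equal numbers of positive and negative lags give PLI = 0.

```python
import numpy as np

def pli(theta1, theta2):
    """PLI = |<sign(Phi)>|; sign(sin(Phi)) equals sign(Phi) for Phi wrapped to (-pi, pi]."""
    return np.abs(np.mean(np.sign(np.sin(theta1 - theta2))))

def wpli(z1, z2):
    """Weighted PLI (Vinck et al. 2011) of complex narrowband signals:
    |<Im(z1 * conj(z2))>| / <|Im(z1 * conj(z2))|>."""
    im = np.imag(z1 * np.conj(z2))
    return np.abs(np.mean(im)) / np.mean(np.abs(im))

theta = np.linspace(0, 20 * np.pi, 1000)
alternating = np.where(np.arange(1000) % 2 == 0, 0.5, -0.5)
pli_lagged = pli(theta, theta - 0.5)              # consistent positive lag
pli_symmetric = pli(theta, theta - alternating)   # +/-0.5 rad lags in equal numbers
amp = np.linspace(0.5, 2.0, 1000)
wpli_lagged = wpli(amp * np.exp(1j * theta), np.exp(1j * (theta - np.pi / 4)))
```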

4 Effects of Linear Mixing on Estimates of Phase and Amplitude Correlations

In MEG/EEG sensor space, interaction analyses can be confounded by signal mixing, source amplitude changes, and other issues (Gross et al. 2013; Palva and Palva 2012; Schoffelen and Gross 2009). Identification of the correct anatomical sources is crucial for appropriate interpretation of the results because even nearby cortical regions may play very different functional roles. In connectivity analysis, signal mixing gives rise to two kinds of confounders: artificial and spurious connections. Unfortunately, these terms have been defined in various ways in the literature. We use here the definitions of Palva and Palva (2012): artificial connections are a direct result of the source-space signal spread caused by linear signal mixing. The reconstructed signal in a given cortical parcel is contaminated by signals emanating from its neighboring parcels, which produces an excess of zero-lag phase differences, and thus zero-lag synchrony, in the absence of any true interaction. These are termed false positives of the first order. Spurious connections, or false positives of the second order, are "false" interactions created by the concurrent presence of a true interaction and linear mixing. Because the reconstructed time series of parcels close to the truly connected parcels are contaminated by truly phase-synchronized signals, these parcels will also appear to be phase synchronized. In contrast to artificial connections, spurious connections are present only around true interactions, but they are limited neither to pairs of nearby parcels nor to zero-lag phase differences.

4.1 Influence of Linear Mixing on Interaction Metrics

In contrast to true neuronal interactions, which often involve a phase lag related to conduction delays, artificial connections are characterized by zero-lag phase differences. Consequently, choosing a metric that is insensitive to zero-lag phase differences, such as iPLV or PLI for phase coupling or oCC for amplitude correlations, suppresses the effects of artificial linear mixing. This is, however, achieved at the cost of also missing any true phase interactions whose lag is 0 or \( \pm \pi \). Spurious interactions, on the other hand, can have any phase lag and are therefore not generally discarded by such metrics.
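The difference between artificial and spurious connections can be reproduced in a small simulation. This sketch assumes unit-amplitude random phasors as sources and instantaneous, real-valued mixing with strength m; it illustrates the mechanism and is not the simulation behind the chapter's figures. Zero-lag mixing of two independent sources inflates PLV but leaves iPLV near zero, whereas a parcel that merely picks up the signal of one member of a truly lagged pair shows a clearly non-zero iPLV with the other member.

```python
import numpy as np

def cplv(a, b):
    """Complex PLV between two complex (analytic) signals."""
    return np.mean(np.exp(1j * (np.angle(a) - np.angle(b))))

rng = np.random.default_rng(0)
n = 20_000

def random_phasor():
    return np.exp(1j * rng.uniform(-np.pi, np.pi, n))

m = 0.5                                          # linear mixing strength

# Artificial connection: independent sources x, y with zero-lag mixing.
x, y = random_phasor(), random_phasor()
x_mix, y_mix = x + m * y, y + m * x
plv_art = np.abs(cplv(x_mix, y_mix))             # inflated by mixing
iplv_art = np.abs(np.imag(cplv(x_mix, y_mix)))   # stays near zero

# Spurious connection: u1 and v1 truly coupled at a 90 deg lag; u2 is a
# neighbour of u1 that never interacts with v1 but picks up u1's signal.
u1 = random_phasor()
v1 = u1 * np.exp(-1j * np.pi / 2) * np.exp(0.3j * rng.standard_normal(n))
u2 = random_phasor() + m * u1
iplv_spur = np.abs(np.imag(cplv(u2, v1)))        # clearly non-zero despite no interaction
```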

In both amplitude- and phase-based analyses, measures have been developed that are insensitive to the direct effects of signal mixing (Brookes et al. 2012; Hipp et al. 2012). While the correlation coefficient (CC) reveals artificial connections caused by linear mixing, as can be seen for simulated data (Fig. 3a), orthogonalizing the real-valued signals x(t) and y(t) before estimating their amplitude envelopes and the envelope correlation removes linear dependencies. The resulting orthogonalized correlation coefficient (oCC) therefore does not yield artificial correlations between neighboring sensors arising directly from source spread (Fig. 3c), although it will still pick up long-range spurious connections. Further, it can be shown that, when phase correlations are present, the values of these measures also depend on the phase difference (Fig. 3b, d).

Fig. 3 Interaction metrics are affected by coupling strength, phase difference, and linear mixing. (a) Correlation coefficient (CC) as a function of amplitude coupling strength \( c_A \) in the absence of phase correlations (phase coupling \( c_\Theta = 0 \), normalized phase difference \( n\phi_{xy} = 0 \)). CC increases with linear mixing m, leading to false positives. (b) CC between the signals as a function of \( n\phi_{xy} \) for \( c_\Theta = 0.4 \). The horizontal lines show the mean CC obtained at \( c_\Theta = 0 \). In the presence of phase coupling, CC is biased by \( n\phi_{xy} \). (c) oCC as a function of \( c_A \) in the absence of phase correlations (\( c_\Theta = 0 \), \( n\phi_{xy} = 0 \)). oCC decreases with linear mixing and does not report false positives. (d) oCC as a function of \( n\phi_{xy} \) for \( c_\Theta = 0.4 \). Like CC, oCC is biased by \( n\phi_{xy} \), but in a different manner. (e) PLV as a function of phase coupling strength \( c_\Theta \) for different linear mixing strengths m. PLV increases with mixing, especially at low or zero coupling, leading to false positives. Here, \( n\phi_{xy} = -0.3 \). (f) PLV as a function of \( n\phi_{xy} \) for different mixing strengths m and for \( c_\Theta = 0.4 \). PLV is greatly affected by the phase difference when signal mixing is strong. (g) iPLV as a function of \( c_\Theta \) for \( n\phi_{xy} = -0.3 \). iPLV decreases with linear mixing and does not report false positives. (h) iPLV as a function of \( n\phi_{xy} \) for \( c_\Theta = 0.4 \). iPLV depends strongly on the phase difference and is biased toward large phase differences; iPLV is 0 for integer values of \( n\phi_{xy} \). (i) wPLI as a function of \( c_\Theta \) for \( n\phi_{xy} = -0.3 \). wPLI is unaffected by linear mixing and does not report false positives. (j) wPLI as a function of \( n\phi_{xy} \) for \( c_\Theta = 0.4 \). Like iPLV, wPLI depends strongly on the phase difference.

Similar to the CC, the PLV is sensitive to artificial connections (Fig. 3e) and biased by phase differences when signal mixing is strong (Fig. 3f).

Metrics that ignore the real part of the complex phase differences, such as iPLV (the imaginary part of the complex PLV) and the weighted phase lag index (wPLI; Vinck et al. 2011), are therefore insensitive to the direct effects of linear mixing while still revealing phase-lagged interactions (Fig. 3g, i). However, not only are these metrics insensitive to true interactions at 0 or ± π phase lag, but their values also depend on the phase difference per se in addition to the strength of the interaction (Fig. 3h, j).
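The dependence of iPLV on phase lag at a fixed coupling strength can be illustrated numerically. In this sketch the same Gaussian phase jitter (hence the same PLV) is combined with different mean lags; the parameter values are arbitrary. In this noise model iPLV is approximately PLV multiplied by the absolute sine of the mean lag, so it is near zero at 0 and π and matches PLV at π/2.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 20_000
theta = rng.uniform(-np.pi, np.pi, n)
jitter = 0.8 * rng.standard_normal(n)      # identical coupling strength for all lags

def plv_and_iplv(theta1, theta2):
    c = np.mean(np.exp(1j * (theta1 - theta2)))   # complex PLV
    return np.abs(c), np.abs(c.imag)

lags = [0.0, np.pi / 4, np.pi / 2, np.pi]
results = [plv_and_iplv(theta, theta - lag - jitter) for lag in lags]
for lag, (p, ip) in zip(lags, results):
    print(f"lag = {lag:.2f} rad   PLV = {p:.3f}   iPLV = {ip:.3f}")
```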

Ultimately, all interaction measures are sensitive to linear mixing effects, albeit in different manners. While iPLV, wPLI, and oCC do not report artificial connections, they are still sensitive to spurious connections and to the value of phase differences. Therefore, one should not expect optimal results solely from choosing a good interaction measure.

4.2 Interaction of Phase and Amplitude Dynamics

It has been shown that in the analysis of pairwise interactions between noisy signals, amplitude and phase dynamics influence each other (Daffertshofer and van Wijk 2011; Schoffelen and Gross 2009). In real signals, noise levels change over time, resulting in a fluctuating signal-to-noise ratio (SNR). This implies not only that lower noise levels result in higher measured phase synchrony but also that synchrony estimates increase when the SNRs of the sources are correlated. There can even be cases in which a decrease in true connectivity coinciding with an increase in SNR leads to an increase in measured connectivity (Schoffelen and Gross 2009). Further, as shown in Sect. 4.1 (Fig. 3b, d), simulations indicate that, as a result of signal mixing, phase coupling also influences amplitude correlations.

When phase differences are small, signal mixing increases amplitude correlations measured with the correlation coefficient CC, whereas when signals are close to anti-phase, signal mixing reduces CC. The orthogonalized correlation coefficient oCC may be further biased by phase effects because the orthogonalization process uses the real part of the signals, which contains both amplitude and phase information.
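The effect of phase difference on CC under mixing can be checked with a toy model in which two perfectly phase-coupled signals have independent, uniformly distributed amplitude envelopes and are mixed symmetrically with strength m. For in-phase signals the mixed envelopes are \( a_X + m a_Y \) and \( a_Y + m a_X \), which gives a population correlation of \( 2m/(1+m^2) \); for anti-phase signals they are \( a_X - m a_Y \) and \( a_Y - m a_X \), which gives \( -2m/(1+m^2) \). The parameter values are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 10_000
a_x = rng.uniform(0.5, 1.5, n)          # independent amplitude envelopes
a_y = rng.uniform(0.5, 1.5, n)
phi = rng.uniform(-np.pi, np.pi, n)     # common phase: perfectly phase-coupled signals
m = 0.2                                 # linear mixing strength

def envelope_cc(dphi):
    """CC of the amplitude envelopes after symmetric zero-lag mixing."""
    x = a_x * np.exp(1j * phi)
    y = a_y * np.exp(1j * (phi - dphi))
    x_mix, y_mix = x + m * y, y + m * x
    return np.corrcoef(np.abs(x_mix), np.abs(y_mix))[0, 1]

cc_unmixed = np.corrcoef(a_x, a_y)[0, 1]   # close to 0
cc_in_phase = envelope_cc(0.0)             # population value +2m/(1+m^2), about +0.38
cc_anti_phase = envelope_cc(np.pi)         # population value -2m/(1+m^2), about -0.38
```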

Spontaneous and stimulus-induced changes in the amplitudes of ongoing activity influence (1) the signal-to-noise ratio of signals from a given source in relation to environmental and sensor noise and (2) the balance in the mixing of signals from multiple concurrent sources. Both effects influence the accuracy of phase (and amplitude) computations and therefore bias interaction estimates.

5 All-to-All Connectivity Mapping

Connectivity studies have traditionally focused on analyzing a limited set of interactions between selected regions of interest (ROIs). While this approach has the advantage of limiting computational complexity, it is not without drawbacks. First, relevant interactions between ROIs not included in the analysis will be missed, giving the initial ROI choice a decisive role in the final results. Furthermore, using the same data for ROI selection and connectivity analyses leads to circular analyses and in turn to invalid statistical inference (Ioannidis 2005; Kriegeskorte et al. 2009). In contrast, all-to-all connectivity mapping explores interactions between all possible pairs of cortical parcels. By avoiding selection bias, it ensures that the strongest interactions will be identified regardless of their anatomical location, although it leads to increased computational costs and multiple comparisons, which should be corrected for.

5.1 Statistical Analysis and Thresholding

In all-to-all connectivity matrices, every interaction will have a non-zero value. For network analysis, it is however preferable to have a sparse matrix with only the most relevant connections. One simple way to obtain such a sparse matrix is to discard all connections with values under a chosen threshold. However, it is preferable to estimate the task-related significance of connections and keep only those passing a particular statistical test, for example indexing significant increases in synchrony compared to a chosen baseline. Afterwards, a threshold (or several, see next paragraph) can still be applied to the resulting connectivity matrix to further reduce the number of connections.
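One common way to implement such edge-wise testing with a correction for multiple comparisons is the Benjamini–Hochberg false discovery rate (FDR) procedure, applied to the p-values of all parcel pairs. The sketch below assumes that a p-value per edge is already available (for example, from a test against baseline or surrogate data); the choice of FDR and of q = 0.05 is illustrative and not necessarily the correction used in the cited work.

```python
import numpy as np

def fdr_bh(p_values, q=0.05):
    """Benjamini-Hochberg procedure: boolean mask of p-values significant at FDR q."""
    p = np.asarray(p_values, dtype=float).ravel()
    m = p.size
    order = np.argsort(p)
    passed = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if passed.any():
        k = np.flatnonzero(passed).max()    # largest rank meeting the criterion
        mask[order[:k + 1]] = True          # reject all hypotheses up to rank k
    return mask.reshape(np.shape(p_values))

def threshold_connectivity(p_matrix, q=0.05):
    """Sparse, symmetric adjacency matrix of all-to-all edges surviving BH-FDR.
    Only the upper triangle is tested, so each parcel pair is counted once."""
    n = p_matrix.shape[0]
    iu = np.triu_indices(n, k=1)
    keep = fdr_bh(p_matrix[iu], q)
    adj = np.zeros((n, n), dtype=bool)
    adj[iu[0][keep], iu[1][keep]] = True
    return adj | adj.T

p_matrix = np.array([[1.0, 0.001, 0.5],
                     [0.001, 1.0, 0.02],
                     [0.5, 0.02, 1.0]])
adjacency = threshold_connectivity(p_matrix)   # keeps edges 0-1 and 1-2
```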

5.2 Graph Theory and Networks

Graph theory can be used to characterize synchrony networks by quantifying properties of vertices (parcels), edges (interactions), and whole graphs. Brain connectivity graphs have high clustering, short average path lengths, dense intramodular connectivity, and sparse intermodular connections (Bullmore and Sporns 2009), which are the hallmarks of small-world networks (Watts 2004). Such small-world networks are associated with high local and global efficiency of information transmission as well as with facilitated parallel processing within hierarchically organized modules (Bullmore and Sporns 2009). Graph properties not only can be modulated by task demands and differ between frequency bands but can also differ significantly between patients and healthy participants, for example, in schizophrenia (Bassett et al. 2008, 2011; Micheloyannis et al. 2006; Siebenhühner et al. 2013).

Graph metrics are, however, biased by connection density, which is artificially increased by spurious connections (Antiqueira et al. 2010; van Wijk et al. 2010). One way to account for this is to analyze graph metrics at different density levels, obtained by thresholding edges at a range of values of the interaction metric (Bassett et al. 2011; Siebenhühner et al. 2013). Density is less problematic for vertex centrality measures, such as degree and betweenness centrality, which can be used to identify hubs that are highly connected and/or act as important "relay stations" for information transfer. Modularity analyses may be used to identify subsystems, or modules, of vertices that are densely interconnected and work together to fulfill particular cognitive functions.
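Density-matched thresholding and two simple graph measures can be sketched with NumPy. The sketch keeps the strongest edges to reach a target density, then computes vertex degree and global efficiency (the mean inverse shortest-path length) on the resulting binary graph; betweenness centrality and modularity are omitted for brevity. The weight matrix is a toy example.

```python
import numpy as np

def threshold_to_density(weights, density):
    """Binary undirected graph keeping the strongest edges at the given density."""
    n = weights.shape[0]
    iu = np.triu_indices(n, k=1)
    n_keep = int(round(density * iu[0].size))
    strongest = np.argsort(weights[iu])[::-1][:n_keep]
    adj = np.zeros((n, n), dtype=bool)
    adj[iu[0][strongest], iu[1][strongest]] = True
    return adj | adj.T

def degree(adj):
    return adj.sum(axis=1)

def global_efficiency(adj):
    """Mean inverse shortest-path length over all ordered node pairs."""
    n = adj.shape[0]
    dist = np.where(adj, 1.0, np.inf)
    np.fill_diagonal(dist, 0.0)
    for k in range(n):                               # Floyd-Warshall relaxation
        dist = np.minimum(dist, dist[:, [k]] + dist[[k], :])
    off_diagonal = ~np.eye(n, dtype=bool)
    return np.mean(1.0 / dist[off_diagonal])         # unreachable pairs add 0

w = np.array([[0.0, 0.9, 0.1, 0.2],
              [0.9, 0.0, 0.8, 0.3],
              [0.1, 0.8, 0.0, 0.7],
              [0.2, 0.3, 0.7, 0.0]])
adj = threshold_to_density(w, 0.5)      # strongest 3 of 6 edges: path 0-1-2-3
sweep = {d: global_efficiency(threshold_to_density(w, d)) for d in (0.5, 0.67, 1.0)}
```

Comparing metrics across such a density sweep, rather than at a single threshold, is one way to reduce the dependence of conclusions on the number of (possibly spurious) edges.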

5.3 Alternatives to All-to-All Connectivity Mapping

An alternative to inverse modeling the data and then computing pairwise interactions between all reconstructed sources is to use a multivariate autoregressive (MVAR) approach. Here, independent component analysis is performed on the sensor-space signals, and an MVAR model is fitted to the components. The residuals of the fitted MVAR model contain true interactions between brain regions and are largely unaffected by signal mixing (Brookes et al. 2012).
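The model-fitting step of such an approach can be written as an ordinary least-squares problem. This sketch assumes that the component time series (for example, from ICA) are already available and fits an MVAR model of a chosen order, returning the coefficient matrices and the residuals. Model-order selection and the subsequent interpretation of coefficients or residuals are outside its scope, and the simulated two-channel system is purely illustrative.

```python
import numpy as np

def fit_mvar(data, order):
    """Least-squares fit of an MVAR model x(t) = sum_k A_k x(t-k) + e(t).

    data : (n_channels, n_times) array, e.g. ICA component time series
    Returns coefficient matrices A of shape (order, n_channels, n_channels)
    and the residuals e(t) of shape (n_channels, n_times - order).
    """
    n_ch, n_t = data.shape
    # Design matrix: each row stacks the `order` previous samples of all channels.
    past = np.hstack([data[:, order - k:n_t - k].T for k in range(1, order + 1)])
    target = data[:, order:].T
    coef, *_ = np.linalg.lstsq(past, target, rcond=None)
    A = coef.T.reshape(n_ch, order, n_ch).transpose(1, 0, 2)   # A[k-1][i, j]
    residuals = (target - past @ coef).T
    return A, residuals

# Simulated VAR(1) system in which channel 0 drives channel 1.
rng = np.random.default_rng(3)
A1 = np.array([[0.5, 0.0],
               [0.4, 0.3]])
n_t = 20_000
x = np.zeros((2, n_t))
for t in range(1, n_t):
    x[:, t] = A1 @ x[:, t - 1] + rng.standard_normal(2)
A_hat, resid = fit_mvar(x, order=1)
```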

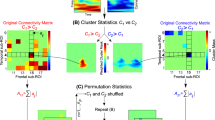

6 Using Edge Bundling to Identify True Interactions in the Presence of Signal Mixing

As detailed above, regardless of the source reconstruction approach or the chosen interaction metric, signal mixing in MEG/EEG data results in the detection of synchronization between cortical areas that are not truly connected. In this section, we illustrate how these false positives arise and how edge bundling methods can be used to detect the underlying true interactions. As described in Sect. 4, artificial interactions are first-order, zero-phase-lag false positives directly caused by volume conduction and linear mixing (Drakesmith et al. 2013; Palva and Palva 2012). When an interaction metric sensitive to zero-phase-lag interactions, such as PLV, is used to estimate pairwise interactions, the resulting all-to-all connectivity graphs contain artificial interactions as edges connecting neighboring sources in areas where mixing is significant (Fig. 4a). Interaction metrics insensitive to zero-phase-lag interactions, such as iPLV, do not report these (Fig. 4b), albeit at the cost of also discarding any true zero-phase-lag and anti-phase interactions.

Fig. 4 Using iPLV and edge bundling to detect true positives among artificial and spurious connections. On a grid toy model, one true interaction (coupling = 0.9) was simulated between two sources \( U_1 \) and \( V_1 \) (the centers of the concentric circles), while the remaining sources were uncorrelated. Linear mixing, i.e., magnetic field spread from the true sources, was simulated as a 2D Gaussian function, and its strength is indicated by the color gradient. (a) Phase interactions between all source pairs were estimated with the phase locking value (PLV). PLV reports both artificial and spurious connections. Signal mixing directly causes zero-phase-lag artificial connections between a true source and its neighbors. Additionally, it indirectly causes non-zero-phase-lag spurious connections between a true source and the other true source's neighbors, or between neighbors of the two true sources. (b) With iPLV, the imaginary part of the complex PLV, artificial connections are not reported because iPLV is insensitive to zero-phase-lag interactions. However, spurious connections, which can have non-zero phase lag, are still observed. (c) Hyperedge bundling: the edge representing a true interaction and the edges representing its neighboring spurious connections can be bundled into a hyperedge connecting regions U and V. (d) On a realistic cortical system, 200 edges connecting randomly chosen nodes were simulated and estimated with iPLV (the ground-truth graph has a uniform degree distribution, K = 1). Significant edges are overlaid on a flattened cortical map. The resulting graph contains 901 significant edges, of which 112 are true positives (red), and has average path length = 4.5 and efficiency = 0.22. The color code of the regions indicates cortical subsystems identified with fMRI-based functional connectivity (FC) network analysis (Yeo et al. 2011). (e) The graph in d transformed into a hypergraph in which all raw edges are bundled into hyperedges; only hyperedges that contain at least five raw edges are shown.

Spurious interactions are second-order false positives caused indirectly by linear mixing. They arise because the signals of truly connected sources spread to neighboring sources, which in turn leads to false positive observations of synchronization between such neighbors, or between these neighbors and one of the truly connected sources (Fig. 4a, b). Because truly interacting neuronal processes can have a non-zero phase lag, the spurious interactions derived from them can likewise have a non-zero phase lag, so metrics that are insensitive to zero-phase-lag interactions, such as iPLV, do not remove them.
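A minimal sketch of how a spurious interaction survives iPLV follows. It is an illustration under stated assumptions, not the chapter's simulation: complex Gaussian samples again stand in for analytic signals, and the coupling lag (90°), noise level, and leakage coefficient are hypothetical. U₁ and V₁ are truly coupled, U₂ is an uncoupled neighbor of U₁, and the reconstructed U₁ and U₂ leak into each other; a distant uncoupled source W serves as a control.

```python
import numpy as np

rng = np.random.default_rng(1)
n_samples = 20000


def complex_noise(n):
    """Complex Gaussian samples standing in for narrow-band analytic signals."""
    return rng.standard_normal(n) + 1j * rng.standard_normal(n)


def iplv(x, y):
    """Absolute imaginary part of the complex phase-locking value."""
    return abs(np.mean(np.exp(1j * (np.angle(x) - np.angle(y)))).imag)


# Ground truth (hypothetical parameters): U1 and V1 coupled with a 90-degree lag.
u1 = complex_noise(n_samples)
v1 = np.exp(-1j * np.pi / 2) * u1 + 0.5 * complex_noise(n_samples)
u2 = complex_noise(n_samples)  # neighbour of U1, not coupled to anything
w = complex_noise(n_samples)   # distant source, not coupled to anything

# Reconstruction with symmetric linear leakage between the neighbours U1 and U2.
leak = 0.6
u1_hat = u1 + leak * u2
u2_hat = u2 + leak * u1

print(f"iPLV(U1, V1), true     = {iplv(u1_hat, v1):.2f}")
print(f"iPLV(U2, V1), spurious = {iplv(u2_hat, v1):.2f}")
print(f"iPLV(W, V1), control   = {iplv(w, v1):.3f}")
```

The leaked copy of U₁ inside the U₂ estimate carries the same 90° lag relative to V₁, so the spurious U₂–V₁ edge has a clearly non-zero imaginary part, although it is weaker than the true edge.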

Simulations using zero-phase-lag-insensitive interaction metrics show that a single simulated true interaction can be mirrored into 10–100 spurious interactions in source-reconstructed cortical networks. Such a large number of false positives severely confounds the neurophysiological interpretability of the graphs and distorts any network metric based on the topology of the connectivity network. We have examined an approach that minimizes the impact of spurious interactions using graph and hypergraph theoretical tools. First, we denote a cortical connectivity network as a “raw” graph wherein brain regions, or parcels, are nodes and significant interactions are raw edges (Newman 2003; Rubinov and Sporns 2010). We argue that by transforming the raw graph into a hypergraph, in which the mixing effect is estimated and raw edges are bundled into hyperedges, true interactions can be identified with high reliability. In such a hypergraph, a true cortical interaction between parcels U₁ and V₁ and the spurious interactions involving parcels neighboring U₁ and V₁ would be represented as a single hyperedge between regions U and V (Fig. 4c), centered on U₁ and V₁, respectively.

This hyperedge bundling solution provides a much improved estimate of the true underlying neuronal connectivity graph, as well as an enhanced visualization that represents the extent of field spread from the sources. Implementing hyperedge bundling requires (1) a linear mixing function f_mix(u_i, v_j) that quantifies the amount of signal mixing between all pairs of brain regions and (2) a sparse pairwise interaction matrix, the raw graph G_raw with n edges, resulting from statistical analysis and/or thresholding. The linear mixing function can be obtained through a process similar to that described in Sect. 3 for fidelity estimation, in which simulated time series are forward and inverse modeled.
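The forward-and-inverse route to a mixing function can be sketched as follows. None of the specific choices are taken from this chapter; they are assumptions for illustration: a toy one-dimensional geometry (60 sources and 30 sensors on a line, each source standing in for a parcel), a lead field that decays as a Gaussian of source-sensor distance, a Tikhonov-regularized minimum-norm inverse operator, and the absolute correlation between reconstructed time series as the mixing measure.

```python
import numpy as np

rng = np.random.default_rng(2)
n_sensors, n_sources, n_samples = 30, 60, 5000

# Hypothetical 1D "head model": sources and sensors on a line, with a lead field
# that decays as a Gaussian of source-sensor distance (stand-in for a real forward model).
src_pos = np.linspace(0.0, 1.0, n_sources)
sen_pos = np.linspace(0.0, 1.0, n_sensors)
lead_field = np.exp(-((sen_pos[:, None] - src_pos[None, :]) ** 2) / (2 * 0.1 ** 2))

# Forward model: mutually uncorrelated simulated source time series plus sensor noise.
sources = rng.standard_normal((n_sources, n_samples))
sensors = lead_field @ sources + 0.1 * rng.standard_normal((n_sensors, n_samples))

# Inverse model: minimum-norm estimate W = L^T (L L^T + lam I)^-1 (regularization assumed).
gram = lead_field @ lead_field.T
lam = 0.05 * np.trace(gram) / n_sensors
inverse_op = np.linalg.solve(gram + lam * np.eye(n_sensors), lead_field).T
estimates = inverse_op @ sensors

# The true sources are uncorrelated, so any correlation between their estimates is
# produced by mixing; its magnitude is used here as f_mix(u_i, u_j).
f_mix = np.abs(np.corrcoef(estimates))

print(f"f_mix between neighbours: {f_mix[10, 11]:.2f}")
print(f"f_mix between distant sources: {f_mix[10, 50]:.2f}")
```

With real data, the toy lead field would be replaced by the subject's forward model and the chosen inverse operator, and source-level estimates would be collapsed to parcels before computing f_mix.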

In the bundling procedure, an edge-to-edge adjacency matrix EA_ij is first constructed from f_mix(u_i, v_j) and G_raw. EA_ij is an n × n matrix that describes how close each pair of edges is in signal mixing: the closer two edges are, the more likely they are to reflect the same underlying true interaction(s). Next, a hierarchical clustering algorithm is applied to EA_ij so that the resulting clusters of raw edges become hyperedges, within which all raw edges are close to each other in signal mixing but far from the raw edges assigned to other hyperedges.
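One possible way to build EA_ij and cluster it is sketched below with NumPy and SciPy (`scipy.cluster.hierarchy.linkage` and `fcluster`). The definition of edge adjacency is an assumption, not the chapter's formula: two edges are treated as being as close as the weaker of their two endpoint mixings, maximized over both endpoint pairings because edges are undirected. The toy mixing function, the edge list, average linkage, and the cut-off distance of 0.5 are likewise hypothetical.

```python
import numpy as np
from scipy.cluster.hierarchy import fcluster, linkage
from scipy.spatial.distance import squareform

# Toy mixing function (assumption): 40 parcels on a line, mixing decays with distance.
n_parcels = 40
idx = np.arange(n_parcels)
f_mix = np.exp(-((idx[:, None] - idx[None, :]) ** 2) / (2 * 2.0 ** 2))

# Hypothetical raw graph G_raw as a list of undirected edges (u, v).
raw_edges = [
    (5, 30), (4, 30), (6, 30), (5, 29), (5, 31),  # true edge (5, 30) plus spurious neighbours
    (15, 35), (16, 35), (15, 36),                 # a second bundle
    (20, 25),                                     # an isolated edge
]


def edge_adjacency(edges, f_mix):
    """EA[a, b]: closeness of edges a and b in signal mixing (one possible definition)."""
    n = len(edges)
    ea = np.zeros((n, n))
    for a, (ua, va) in enumerate(edges):
        for b, (ub, vb) in enumerate(edges):
            # Both endpoints must mix; try both pairings since edges are undirected.
            same = min(f_mix[ua, ub], f_mix[va, vb])
            swapped = min(f_mix[ua, vb], f_mix[va, ub])
            ea[a, b] = max(same, swapped)
    return ea


ea = edge_adjacency(raw_edges, f_mix)

# Hierarchical clustering on the distance 1 - EA; each flat cluster is one hyperedge.
dist = 1.0 - ea
np.fill_diagonal(dist, 0.0)
tree = linkage(squareform(dist), method="average")
labels = fcluster(tree, t=0.5, criterion="distance")

for edge, label in zip(raw_edges, labels):
    print(edge, "-> hyperedge", label)
```

In this toy case the five edges around (5, 30) form one hyperedge, the three edges around (15, 35) form a second, and (20, 25) remains a singleton.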

Further, one can remove small hyperedges whose number of constituent raw edges is below a threshold. These hyperedges are more likely to represent false positives, although a small fraction of true positives may also be lost in the process. In simulated data, this approach converts a raw graph of about 900 edges, the vast majority of which are false positives, into a hypergraph of a few dozen hyperedges (Fig. 4d, e). Hyperedge bundling hence appears to be a promising solution to the problem of spurious edges and can, in principle, be applied to graphs obtained with any interaction metric and source reconstruction method.
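Removing small hyperedges amounts to counting raw edges per cluster and discarding clusters below the size threshold. The sketch below is a minimal illustration that assumes hypothetical cluster labels, such as those a hierarchical clustering step might produce, and a minimum size of three raw edges; the threshold is a free parameter (Fig. 4e shows hyperedges with at least five raw edges).

```python
from collections import Counter


def prune_hyperedges(raw_edges, labels, min_size):
    """Group raw edges by hyperedge label; keep hyperedges with at least min_size raw edges."""
    sizes = Counter(labels)
    hyperedges = {}
    for edge, label in zip(raw_edges, labels):
        if sizes[label] >= min_size:
            hyperedges.setdefault(label, []).append(edge)
    return hyperedges


# Hypothetical clustering output: labels[k] is the hyperedge assigned to raw_edges[k].
raw_edges = [(5, 30), (4, 30), (6, 30), (5, 29), (5, 31), (15, 35), (16, 35), (15, 36), (20, 25)]
labels = [1, 1, 1, 1, 1, 2, 2, 2, 3]

kept = prune_hyperedges(raw_edges, labels, min_size=3)
for label, edges in kept.items():
    print(f"hyperedge {label}: {len(edges)} raw edges")
```

Here the singleton hyperedge 3 is discarded; whether such an edge was a true or a false positive cannot be decided from its size alone, which is the trade-off noted above.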

References

Antiqueira L, Rodrigues FA, van Wijk BCM, Costa LF, Daffertshofer A (2010) Estimating complex cortical networks via surface recordings—a critical note. Neuroimage 53:439–449

Bassett DS, Bullmore E, Verchinski BA, Mattay VS, Weinberger DR, Meyer-Lindenberg A (2008) Hierarchical organization of human cortical networks in health and schizophrenia. J Neurosci 28:9239–9248

Bassett DS, Nelson BG, Mueller BA, Camchong J, Lim KO (2011) Altered resting state complexity in schizophrenia. Neuroimage 59:2196–2207

Bell AJ, Sejnowski TJ (1995) An information-maximization approach to blind separation and blind deconvolution. Neural Comput 7:1129–1159

Brookes MJ, Woolrich MW, Barnes GR (2012) Measuring functional connectivity in MEG: a multivariate approach insensitive to linear source leakage. Neuroimage 63:910–920

Bruns A (2004) Fourier-, Hilbert- and wavelet-based signal analysis: are they really different approaches? J Neurosci Methods 137:321–332

Bullmore E, Sporns O (2009) Complex brain networks: graph theoretical analysis of structural and functional systems. Nat Rev Neurosci 10:186–198

Buzsaki G, Draguhn A (2004) Neuronal oscillations in cortical networks. Science 304:1926–1929

Cammoun L, Gigandet X, Meskaldji D, Thiran JP, Sporns O, Do KQ, Maeder P, Meuli R, Hagmann P (2012) Mapping the human connectome at multiple scales with diffusion spectrum MRI. J Neurosci Methods 203:386–397

Daffertshofer A, van Wijk BCM (2011) On the influence of amplitude on the connectivity between phases. Front Neuroinform 5:6

Dale AM, Fischl B, Sereno MI (1999) Cortical surface-based analysis. I. Segmentation and surface reconstruction. Neuroimage 9:179–194

Drakesmith M, El-Deredy W, Welbourne S (2013) Reconstructing coherent networks from electroencephalography and magnetoencephalography with reduced contamination from volume conduction or magnetic field spread. PLoS One 8, e81553

Fischl B, Sereno MI, Dale AM (1999) Cortical surface-based analysis. II: inflation, flattening, and a surface-based coordinate system. Neuroimage 9:195–207

Fischl B, Salat DH, Busa E, Albert M, Dieterich M, Haselgrove C, van der Kouwe A, Killiany R, Kennedy D, Klaveness S, Montillo A, Makris N, Rosen B, Dale AM (2002) Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron 33:341–355

Fischl B, van der Kouwe A, Destrieux C, Halgren E, Segonne F, Salat DH, Busa E, Seidman LJ, Goldstein J, Kennedy D, Caviness V, Makris N, Rosen B, Dale AM (2004) Automatically parcellating the human cerebral cortex. Cereb Cortex 14:11–22

Fries P (2015) Rhythms for cognition: communication through coherence. Neuron 88:220–235

Gross J, Baillet S, Barnes GR, Henson RN, Hillebrand A, Jensen O, Jerbi K, Litvak V, Maess B, Oostenveld R, Parkkonen L, Taylor JR, van Wassenhove V, Wibral M, Schoffelen JM (2013) Good practice for conducting and reporting MEG research. Neuroimage 65:349–363

Hagmann P, Cammoun L, Gigandet X, Meuli R, Honey CJ, Wedeen VJ, Sporns O (2008) Mapping the structural core of human cerebral cortex. PLoS Biol 6, e159

Hamalainen MS, Sarvas J (1989) Realistic conductivity geometry model of the human head for interpretation of neuromagnetic data. IEEE Trans Biomed Eng 36:165–171

Hillebrand A, Barnes GR, Bosboom JL, Berendse HW, Stam CJ (2012) Frequency-dependent functional connectivity within resting-state networks: an atlas-based MEG beamformer solution. Neuroimage 59:3909–3921

Hipp JF, Hawellek DJ, Corbetta M, Siegel M, Engel AK (2012) Large-scale cortical correlation structure of spontaneous oscillatory activity. Nat Neurosci 15:884–890

Ioannidis JP (2005) Why most published research findings are false. PLoS Med 2, e124

Korhonen O, Palva S, Palva JM (2014) Sparse weightings for collapsing inverse solutions to cortical parcellations optimize M/EEG source reconstruction accuracy. J Neurosci Methods 226:147–160

Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI (2009) Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci 12:535–540

Lachaux JP, Rodriguez E, Martinerie J, Varela FJ (1999) Measuring phase synchrony in brain signals. Hum Brain Mapp 8:194–208

Li X, Yao X, Fox J, Jefferys JG (2007) Interaction dynamics of neuronal oscillations analysed using wavelet transforms. J Neurosci Methods 160:178–185

Lin FH, Witzel T, Ahlfors SP, Stufflebeam SM, Belliveau JW, Hamalainen MS (2006) Assessing and improving the spatial accuracy in MEG source localization by depth-weighted minimum-norm estimates. Neuroimage 31:160–171

Micheloyannis S, Pachou E, Stam CJ, Breakspear M, Bitsios P, Vourkas M, Erimaki S, Zervakis M (2006) Small-world networks and disturbed functional connectivity in schizophrenia. Schizophr Res 87:60–66

Mosher JC, Leahy RM, Lewis PS (1999) EEG and MEG: forward solutions for inverse methods. IEEE Trans Biomed Eng 46:245–259

Newman M (2003) The structure and function of complex networks. SIAM Rev 45:167–256

Nikulin VV, Linkenkaer-Hansen K, Nolte G, Lemm S, Muller KR, Ilmoniemi RJ, Curio G (2007) A novel mechanism for evoked responses in the human brain. Eur J Neurosci 25:3146–3154

Palva JM, Palva S, Kaila K (2005) Phase synchrony among neuronal oscillations in the human cortex. J Neurosci 25:3962–3972

Palva S, Palva JM (2012) Discovering oscillatory interaction networks with M/EEG: challenges and breakthroughs. Trends Cogn Sci 16:219–230

Palva JM, Monto S, Kulashekhar S, Palva S (2010) Neuronal synchrony reveals working memory networks and predicts individual memory capacity. Proc Natl Acad Sci USA 107:7580–7585

Palva S, Kulashekhar S, Hamalainen M, Palva JM (2011) Localization of cortical phase and amplitude dynamics during visual working memory encoding and retention. J Neurosci 31:5013–5025

Rubinov M, Sporns O (2010) Complex network measures of brain connectivity: uses and interpretations. Neuroimage 52:1059–1069

Schoffelen JM, Gross J (2009) Source connectivity analysis with MEG and EEG. Hum Brain Mapp 30:1857–1865

Sharon D, Hamalainen MS, Tootell RB, Halgren E, Belliveau JW (2007) The advantage of combining MEG and EEG: comparison to fMRI in focally stimulated visual cortex. Neuroimage 36:1225–1235

Siebenhühner F, Weiss SA, Coppola R, Weinberger DR, Bassett DS (2013) Intra- and inter-frequency brain network structure in health and schizophrenia. PLoS One 8, e72351

Singer W (1999) Neuronal synchrony: a versatile code for the definition of relations? Neuron 24:49–65, 111–125

Singer W (2009) Distributed processing and temporal codes in neuronal networks. Cogn Neurodyn 3:189–196

Sinkkonen J, Tiitinen H, Naatanen R (1995) Gabor filters: an informative way for analysing event-related brain activity. J Neurosci Methods 56:99–104

Stam CJ, van Straaten ECW (2012) Go with the flow: use of a directed phase lag index (dPLI) to characterize patterns of phase relations in a large-scale model of brain dynamics. Neuroimage 62:1415–1428

Stam CJ, Nolte G, Daffertshofer A (2007) Phase lag index: assessment of functional connectivity from multi channel EEG and MEG with diminished bias from common sources. Hum Brain Mapp 28:1178–1193

Taulu S, Simola J, Kajola M (2005) Applications of the signal space separation method. IEEE Trans Signal Process 53:3359–3372

Van Veen BD, van Drongelen W, Yuchtman M, Suzuki A (1997) Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Trans Biomed Eng 44:867–880

van Wijk BCM, Stam CJ, Daffertshofer A (2010) Comparing brain networks of different size and connectivity density using graph theory. PLoS One 5, e13701

Vinck M, Oostenveld R, van Wingerden M, Battaglia F, Pennartz CM (2011) An improved index of phase-synchronization for electrophysiological data in the presence of volume-conduction, noise and sample-size bias. Neuroimage 55:1548–1565

Watts DJ (2004) Small worlds. Princeton University Press, Princeton, NJ

Whalen C, Maclin EL, Fabiani M, Gratton G (2008) Validation of a method for coregistering scalp recording locations with 3D structural MR images. Hum Brain Mapp 29:1288–1301

Yeo BT, Krienen FM, Sepulcre J, Sabuncu MR, Lashkari D, Hollinshead M, Roffman JL, Smoller JW, Zollei L, Polimeni JR, Fischl B, Liu H, Buckner RL (2011) The organization of the human cerebral cortex estimated by intrinsic functional connectivity. J Neurophysiol 106:1125–1165

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Siebenhühner, F., Lobier, M., Wang, S.H., Palva, S., Palva, J.M. (2016). Measuring Large-Scale Synchronization with Human MEG and EEG: Challenges and Solutions. In: Palva, S. (eds) Multimodal Oscillation-based Connectivity Theory. Springer, Cham. https://doi.org/10.1007/978-3-319-32265-0_1

DOI: https://doi.org/10.1007/978-3-319-32265-0_1

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-32263-6

Online ISBN: 978-3-319-32265-0

eBook Packages: Biomedical and Life Sciences (R0)