Abstract

We present a new approach to prove the macroscopic hydrodynamic behaviour for interacting particle systems, and as an example we treat the well-known case of the symmetric simple exclusion process (SSEP). More precisely, we characterize any possible limit of its empirical density measures as solutions to the heat equation by passing to the limit in the gradient flow structure of the particle system.


1 Introduction

The aim of this work is to show how one can use gradient flow structures to prove convergence to the hydrodynamic limit for interacting particle systems. The exposition is focused on the case of the symmetric simple exclusion process on the discrete d-dimensional torus, but the strategy can be adapted to other reversible particle systems, such as zero-range processes (see [11] for the definitions of these models). Gradient flows are ordinary differential equations of the form

$$\begin{aligned} \dot{x}_t = -\nabla V(x_t), \end{aligned}$$

where \(\nabla V\) denotes the gradient of the function V. De Giorgi and his collaborators showed in [5] how to give a meaning to solutions to such equations in the setting of metric spaces: these solutions are called minimizing-movement solutions, or curves of maximal slope. When considering the case of spaces of probability measures, one can use this notion to rewrite the partial differential equations governing the time evolution of the laws of diffusion processes, such as the heat equation, as gradient flows of the entropy V with respect to the optimal transport (or Wasserstein) distance. We refer to [3] for more details. This framework can be adapted to the case of reversible Markov chains on finite spaces, as was shown independently by Maas [13] and Mielke [16], who both developed a discrete counterpart to the Lott-Sturm-Villani theory of lower bounds on Ricci curvature for metric spaces.

These gradient flow structures are a powerful tool to study convergence of sequences of dynamics to some limit. Two main strategies have already been developed. One of them consists in using the discrete (in time) approximation schemes suggested by the gradient flow structure (see for example [4]). The second one, which we shall use here, consists in characterizing gradient flows in terms of a relation between the energy function and its variations, and passing to the limit in this characterization. It was first developed by Sandier and Serfaty in [18], and then generalized in [19]. This strategy can be combined with the gradient flow structure of [13, 16] to prove convergence to some scaling limit for interacting particle systems. This was recently done for chemical reaction equations in [14] and mean-field interacting particle systems on graphs in [6].

Gradient flow structures are also related to large deviations, at least when considering diffusion processes, see [1, 8]. While we only present here the case of the SSEP, the technique is fairly general, and can be adapted to other reversible interacting particle systems. For example, the adaptation of the proof to the case of a zero-range process on the lattice (with nice rates) is quite straightforward. It would be very interesting to apply this method to obtain other PDEs, more degenerate than the heat equation, as hydrodynamic limits of some interacting particle system: for instance, porous medium and fast diffusion equations (see [17, 20]) also have a gradient flow structure, and are not directly solvable by standard techniques.

The plan of the sequel is as follows: in Sect. 2, we present the gradient flow framework for Markov chains on discrete spaces developed in [13, 16]. In Sect. 3, we expose the setup for proving convergence of gradient flows. Finally, in Sect. 4, we investigate the symmetric simple exclusion process, and reprove the convergence to its hydrodynamic limit.

2 Gradient Flow Structure for Reversible Markov Chains

2.1 Framework

We start by describing the gradient flow framework for Markov chains on discrete spaces. The presentation we use here is the one of [13]. We consider an irreducible continuous time reversible Markov chain on a finite space \(\mathscr {X}\) with kernel \(K : \mathscr {X}\times \mathscr {X} \rightarrow \mathbb {R}_+\) and invariant probability measure \(\nu \). Let \(\mathscr {P}(\mathscr {X})\) (resp. \(\mathscr {P}_+(\mathscr {X})\)) be the set of probability densities (resp. strictly positive probability densities) with respect to \(\nu \). The probability law \(\rho _t\nu \) of the Markov chain at time t satisfies the evolution equation

$$\begin{aligned} \dot{\rho }_t(x) = \sum _{y \in \mathscr {X}} K(x,y)\big (\rho _t(y) - \rho _t(x)\big ), \quad x \in \mathscr {X}. \end{aligned}$$(1)

Hereafter we denote by \(\dot{\rho }_t(x)\) the derivative with respect to time of the function \((t,x)\mapsto \rho _t(x)\). Given a function \(\psi : \mathscr {X} \rightarrow \mathbb {R}\), we define \(\nabla \psi (x,y): = \psi (y) - \psi (x)\). The discrete divergence of a function \(\varPhi : \mathscr {X}\times \mathscr {X}\longrightarrow \mathbb {R}\) is defined as

$$\begin{aligned} (\nabla \cdot \varPhi )(x) := \frac{1}{2}\sum _{y \in \mathscr {X}} \big (\varPhi (x,y) - \varPhi (y,x)\big )K(x,y). \end{aligned}$$

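With these definitions, we have the integration by parts formula

$$\begin{aligned} \frac{1}{2}\sum _{x,y \in \mathscr {X}} \nabla \psi (x,y)\varPhi (x,y)K(x,y)\nu (x) = -\sum _{x \in \mathscr {X}} \psi (x)(\nabla \cdot \varPhi )(x)\nu (x), \end{aligned}$$which relies on the reversibility condition \(\nu (x)K(x,y) = \nu (y)K(y,x)\).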
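The following minimal sketch (Python with NumPy, an assumption of this illustration rather than code from the paper) builds a random reversible kernel by setting \(\nu (x)K(x,y) = c(x,y)\) for a hypothetical symmetric matrix c, and checks the integration by parts formula numerically, together with the identity \(\nabla \cdot (\nabla \psi )(x) = \sum _y K(x,y)(\psi (y)-\psi (x))\), which links the divergence to the evolution equation (1).

```python
import numpy as np

rng = np.random.default_rng(0)
N = 5  # number of states; an arbitrary illustrative choice

# Reversible kernel: nu(x) K(x, y) = c(x, y) with c symmetric (hypothetical random data).
nu = rng.random(N) + 0.1
nu /= nu.sum()
c = rng.random((N, N))
c = (c + c.T) / 2
np.fill_diagonal(c, 0.0)
K = c / nu[:, None]


def grad(psi):
    """Discrete gradient: grad(psi)[x, y] = psi(y) - psi(x)."""
    return psi[None, :] - psi[:, None]


def div(Phi):
    """Discrete divergence: div(Phi)[x] = 1/2 * sum_y (Phi(x, y) - Phi(y, x)) K(x, y)."""
    return 0.5 * ((Phi - Phi.T) * K).sum(axis=1)


psi = rng.standard_normal(N)
Phi = rng.standard_normal((N, N))

# Integration by parts: 1/2 sum grad(psi) Phi K nu = - sum psi div(Phi) nu.
lhs = 0.5 * (grad(psi) * Phi * K * nu[:, None]).sum()
rhs = -(psi * div(Phi) * nu).sum()
print(np.isclose(lhs, rhs))  # True

# div(grad psi)(x) = sum_y K(x, y) (psi(y) - psi(x)), the right-hand side of (1).
generator_psi = K @ psi - K.sum(axis=1) * psi
print(np.allclose(div(grad(psi)), generator_psi))  # True
```

The identity fails as soon as the symmetry of c is dropped, which is a quick way to see where reversibility enters.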

Let us introduce three notions we shall use to define the gradient flow structure:

Definition 2.1

1. The relative entropy with respect to \(\nu \) is defined as

$$\begin{aligned} {{{\mathrm{Ent}}}}_\nu (\rho ) := \sum _{x \in \mathscr {X}} \nu (x)\rho (x)\log \rho (x), \quad \text { for } \rho \in \mathscr {P}(\mathscr {X}), \end{aligned}$$with the convention that \(\rho (x)\log \rho (x)=0\) if \(\rho (x)=0\). We sometimes denote \(\mathscr {H}(\rho ):={{{\mathrm{Ent}}}}_\nu (\rho )\), whenever \(\nu \) is fixed and no confusion arises.

2. The symmetric Dirichlet form is given for two real-valued functions \(\phi ,\psi \) by

$$\begin{aligned} \mathscr {E}(\phi , \psi ) := \frac{1}{2}\sum _{x,y \in \mathscr {X}} (\phi (y) - \phi (x))(\psi (y) - \psi (x))K(x,y)\nu (x). \end{aligned}$$

3. The Fisher information (or entropy production) of \(\rho \in \mathscr {P}_+(\mathscr {X})\) is defined as \(\mathscr {I}(\rho ) := \mathscr {E}(\rho , \log \rho )\).

Notice that \({{\mathrm{Ent}}}_{\nu }\) is the mathematical entropy, and not the physical entropy. It decreases along solutions of (1), so in physical terms it plays the role of a free energy. We call \(\mathscr {I}\) the entropy production since along solutions of (1) we have

$$\begin{aligned} \frac{d}{dt}{{\mathrm{Ent}}}_\nu (\rho _t) = -\mathscr {I}(\rho _t). \end{aligned}$$
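As a sanity check of the identity \(\frac{d}{dt}{{\mathrm{Ent}}}_\nu (\rho _t) = -\mathscr {I}(\rho _t)\) along (1), the sketch below (NumPy assumed; the random reversible chain is hypothetical data built as \(\nu (x)K(x,y)=c(x,y)\) with c symmetric) evaluates the relative entropy, the Dirichlet form and the Fisher information for a random positive density, and compares the time derivative of the entropy at time 0 with \(-\mathscr {I}(\rho )\).

```python
import numpy as np

rng = np.random.default_rng(1)
N = 6
nu = rng.random(N) + 0.1
nu /= nu.sum()
c = rng.random((N, N))
c = (c + c.T) / 2
np.fill_diagonal(c, 0.0)
K = c / nu[:, None]                    # reversible: nu(x) K(x, y) = c(x, y)
L = K - np.diag(K.sum(axis=1))         # (L rho)(x) = sum_y K(x, y) (rho(y) - rho(x))


def entropy(rho):
    """Ent_nu(rho) = sum_x nu(x) rho(x) log rho(x), for a positive density rho."""
    return float((nu * rho * np.log(rho)).sum())


def dirichlet(phi, psi):
    """E(phi, psi) = 1/2 sum_{x,y} (phi(y) - phi(x)) (psi(y) - psi(x)) K(x, y) nu(x)."""
    d_phi = phi[None, :] - phi[:, None]
    d_psi = psi[None, :] - psi[:, None]
    return 0.5 * float((d_phi * d_psi * K * nu[:, None]).sum())


def fisher(rho):
    """Entropy production I(rho) = E(rho, log rho)."""
    return dirichlet(rho, np.log(rho))


rho = rng.random(N) + 0.1
rho /= (nu * rho).sum()                # probability density: sum_x rho(x) nu(x) = 1
rho_dot = L @ rho                      # right-hand side of the evolution equation (1)

# Chain rule: d/dt Ent_nu(rho_t) = sum_x nu(x) rho_dot(x) (log rho(x) + 1).
dent_dt = float((nu * rho_dot * (np.log(rho) + 1.0)).sum())
print(dent_dt, -fisher(rho))           # the two numbers agree

# A small explicit Euler step along (1) decreases the entropy.
print(entropy(rho + 1e-6 * rho_dot) < entropy(rho))
```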

2.2 Continuity Equation

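We introduce the logarithmic mean \(\varLambda (a,b)\) of two non-negative numbers a, b as

$$\begin{aligned} \varLambda (a,b) := \frac{a-b}{\log a - \log b} \quad \text { if } a,b>0,\ a \ne b, \end{aligned}$$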

and \(\varLambda (a,a)=a\), and also \(\varLambda (a,b)=0\) if \(a=0\) or \(b=0\). The mean \(\varLambda \) satisfies:

$$\begin{aligned} \sqrt{ab} \le \varLambda (a,b) \le \frac{a+b}{2}. \end{aligned}$$(3)

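Let us now define, for \(\rho \in \mathscr {P}_+(\mathscr {X})\), the function \(\hat{\rho }\) on \(\mathscr {X}\times \mathscr {X}\) given by the logarithmic mean of its values:

$$\begin{aligned} \hat{\rho }(x,y) := \varLambda \big (\rho (x),\rho (y)\big ). \end{aligned}$$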
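A direct transcription of the logarithmic mean, given here as a plain Python sketch (the function name `log_mean` is ours), makes the inequality \(\sqrt{ab} \le \varLambda (a,b) \le (a+b)/2\) and the key identity \(\varLambda (a,b)(\log b - \log a) = b - a\) easy to check on a grid of values. That identity, applied with \(a=\rho (x)\), \(b=\rho (y)\), reads \(\hat{\rho }\,\nabla \log \rho = \nabla \rho \) and is what turns the entropy production into a quadratic form in \(\nabla \log \rho \).

```python
import math


def log_mean(a: float, b: float) -> float:
    """Logarithmic mean Lambda(a, b) of two non-negative numbers."""
    if a < 0 or b < 0:
        raise ValueError("Lambda is only defined for non-negative arguments")
    if a == 0 or b == 0:
        return 0.0
    if a == b:
        return a
    return (a - b) / (math.log(a) - math.log(b))


values = [0.0, 0.1, 0.5, 1.0, 2.0, 7.0]
for a in values:
    for b in values:
        lam = log_mean(a, b)
        # Geometric mean <= logarithmic mean <= arithmetic mean.
        assert math.sqrt(a * b) <= lam + 1e-12 and lam <= (a + b) / 2 + 1e-12
        # Symmetry and positive homogeneity of degree one.
        assert math.isclose(lam, log_mean(b, a))
        assert math.isclose(log_mean(3 * a, 3 * b), 3 * lam, abs_tol=1e-12)
        # Key identity: Lambda(a, b) * (log b - log a) = b - a, for a, b > 0.
        if a > 0 and b > 0:
            assert math.isclose(lam * (math.log(b) - math.log(a)), b - a, abs_tol=1e-12)
print("all checks passed")
```

Near \(a=b\) the quotient suffers from cancellation; for production use one would switch to a series expansion there, which this sketch does not attempt.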

In order to define a suitable metric on \(\mathscr {P}(\mathscr {X})\), we need a representation of curves as solving a continuity equation:

Lemma 2.1

Given a smooth flow of positive probability densities \(\{\rho _t\}_{t \ge 0}\) on \(\mathscr {X}\), there exists a function \((t,x)\mapsto \psi _t(x)\) such that the following continuity equation holds for any \(t \ge 0\) and \(x \in \mathscr {X}\):

$$\begin{aligned} \dot{\rho }_t(x) + \nabla \cdot \big (\hat{\rho }_t\nabla \psi _t\big )(x) = 0. \end{aligned}$$(5)

Moreover, for any \(t\ge 0\), \(\psi _t(\cdot )\) is unique up to an additive constant.

We refer to [13, Sect. 3] for the proof.
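For a positive density, the continuity equation (5) is a linear system for \(\psi _t\): the operator \(\psi \mapsto \nabla \cdot (\hat{\rho }\nabla \psi )(x) = \sum _y \hat{\rho }(x,y)K(x,y)(\psi (y)-\psi (x))\) is a weighted graph Laplacian whose kernel consists of the constants when the chain is irreducible, which is why \(\psi _t\) is unique up to an additive constant. The sketch below (NumPy; random reversible chain as before; the helper names `hat`, `weighted_laplacian` and `solve_potential` are ours) solves it by least squares and checks that along the flow (1) one recovers \(\psi _t = -\log \rho _t\) up to a constant.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 5
nu = rng.random(N) + 0.1
nu /= nu.sum()
c = rng.random((N, N))
c = (c + c.T) / 2
np.fill_diagonal(c, 0.0)
K = c / nu[:, None]                      # reversible and irreducible (all c(x, y) > 0)
L = K - np.diag(K.sum(axis=1))


def hat(rho):
    """hat rho(x, y) = Lambda(rho(x), rho(y)) for a positive vector rho."""
    a, b = rho[:, None], rho[None, :]
    with np.errstate(divide="ignore", invalid="ignore"):
        lam = (a - b) / (np.log(a) - np.log(b))
    return np.where(a == b, a, lam)      # Lambda(a, a) = a


def weighted_laplacian(rho):
    """Matrix of psi -> sum_y hat rho(x, y) K(x, y) (psi(y) - psi(x)) = div(hat rho grad psi)."""
    W = hat(rho) * K
    return W - np.diag(W.sum(axis=1))


def solve_potential(rho, rho_dot):
    """Solve rho_dot + div(hat rho grad psi) = 0, normalised by sum_x psi(x) nu(x) = 0."""
    psi, *_ = np.linalg.lstsq(weighted_laplacian(rho), -rho_dot, rcond=None)
    return psi - (nu * psi).sum()


rho = rng.random(N) + 0.1
rho /= (nu * rho).sum()
rho_dot = L @ rho                        # velocity of the Markov flow (1)
psi = solve_potential(rho, rho_dot)

residual = rho_dot + weighted_laplacian(rho) @ psi
print(np.allclose(residual, 0.0))        # continuity equation (5) holds
print(np.ptp(psi + np.log(rho)) < 1e-8)  # psi = -log rho + constant
```

The system is consistent because \(\sum _x \dot{\rho }(x)\nu (x) = 0\), so the least-squares solution is an exact solution.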

Definition 2.2

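Given \(\rho \in \mathscr {P}(\mathscr {X})\) and \(\psi :\mathscr {X}\rightarrow \mathbb {R}\), we define the action

$$\begin{aligned} \mathscr {A}(\rho ,\psi ) := \frac{1}{2}\sum _{x,y \in \mathscr {X}} \big (\psi (y)-\psi (x)\big )^2\hat{\rho }(x,y)K(x,y)\nu (x). \end{aligned}$$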

A distance between two probability densities \((\rho _0,\rho _1)\) could then be defined as the infimum of the action of all curves \(\{\rho _t,\psi _t\}_{t\in [0,1]}\) linking these densities, as was done in [13]. However, we do not need to introduce that metric here, since we shall only use the formulation of gradient flows as minimizing-movement curves, as follows:

Proposition 2.2

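Let \(\{\rho _t\}_{t \ge 0}\) be a smooth flow of probability densities on \(\mathscr {X}\), and let \(\{\psi _t\}_{t\ge 0}\) be such that the continuity equation (5) holds. Then, for any \(T>0\),

$$\begin{aligned} \mathscr {H}(\rho _T) - \mathscr {H}(\rho _0) + \frac{1}{2}\int _0^T \mathscr {I}(\rho _t)\,dt + \frac{1}{2}\int _0^T \mathscr {A}(\rho _t,\psi _t)\,dt \ge 0, \end{aligned}$$(6)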

with equality if and only if \(\{\rho _t\}_{t \ge 0}\) is the flow of the Markov process on \(\mathscr {X}\) with kernel K and invariant measure \(\nu \), solution to (1).

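This is the analogue of the characterization of solutions to \(\dot{x_t} = -\nabla V(x_t)\) on \(\mathbb {R}^d\) as the only curves for which the non-negative functional

$$\begin{aligned} V(x_T) - V(x_0) + \frac{1}{2}\int _0^T |\nabla V(x_t)|^2\,dt + \frac{1}{2}\int _0^T |\dot{x}_t|^2\,dt \end{aligned}$$

vanishes. Hence in the framework of Markov chains, \({{\mathrm{Ent}}}_{\nu }\) plays the role of V, the entropy production \(\mathscr {I}\) plays the role of \(|\nabla V|^2\), and the action \(\mathscr {A}\) plays the role of \(|\dot{x}_t|^2\).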
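In \(\mathbb {R}^d\) this characterization can be checked by hand. The sketch below (NumPy; the quadratic potential \(V(x) = \frac{1}{2}\sum _i a_i x_i^2\) with \(a=(1,3)\) is an arbitrary choice of ours) evaluates \(V(x_T) - V(x_0) + \frac{1}{2}\int _0^T |\nabla V(x_t)|^2dt + \frac{1}{2}\int _0^T |\dot{x}_t|^2dt\) with the trapezoidal rule, once along the exact gradient flow \(x_t = x_0e^{-at}\) and once along the straight segment joining the same endpoints. The first value vanishes up to discretization error, the second is strictly positive.

```python
import numpy as np

a = np.array([1.0, 3.0])                 # V(x) = 0.5 * sum(a * x**2), grad V(x) = a * x


def V(x):
    return 0.5 * np.sum(a * x**2, axis=-1)


def trapezoid(f, t):
    return float(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(t)))


def edi_functional(x, t):
    """V(x_T) - V(x_0) + 1/2 int |grad V(x_t)|^2 dt + 1/2 int |x'_t|^2 dt."""
    x_dot = np.gradient(x, t, axis=0)    # finite-difference velocity
    integrand = 0.5 * np.sum((a * x) ** 2, axis=1) + 0.5 * np.sum(x_dot**2, axis=1)
    return float(V(x[-1]) - V(x[0])) + trapezoid(integrand, t)


T, x0 = 1.0, np.array([1.0, -2.0])
t = np.linspace(0.0, T, 20001)
flow = x0 * np.exp(-np.outer(t, a))      # solves x' = -grad V(x)
segment = x0 + np.outer(t / T, flow[-1] - x0)

print(edi_functional(flow, t))           # approximately 0
print(edi_functional(segment, t))        # strictly positive
```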

Proof

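(of Proposition 2.2) Denote \(\mathscr {H}(\rho )={{\mathrm{Ent}}}_\nu (\rho )\). Since \(\sum _x \dot{\rho }_t(x)\nu (x) = 0\), the continuity equation (5) gives

$$\begin{aligned} \frac{d}{dt}\mathscr {H}(\rho _t) = \sum _{x} \dot{\rho }_t(x)\log \rho _t(x)\nu (x) = -\sum _{x} \nabla \cdot \big (\hat{\rho }_t\nabla \psi _t\big )(x)\log \rho _t(x)\nu (x). \end{aligned}$$

Using the reversibility of the invariant measure \(\nu \) (through the integration by parts formula), the inequality \(ab \ge -\frac{1}{2}a^2 - \frac{1}{2}b^2\), and the identity \(\mathscr {I}(\rho ) = \frac{1}{2}\sum _{x,y}(\nabla \log \rho (x,y))^2\hat{\rho }(x,y)K(x,y)\nu (x)\), which follows from \(\hat{\rho }\,\nabla \log \rho = \nabla \rho \), we write

$$\begin{aligned} \frac{d}{dt}\mathscr {H}(\rho _t) = \frac{1}{2}\sum _{x,y} \nabla \log \rho _t(x,y)\nabla \psi _t(x,y)\hat{\rho }_t(x,y)K(x,y)\nu (x) \ge -\frac{1}{2}\mathscr {I}(\rho _t) - \frac{1}{2}\mathscr {A}(\rho _t,\psi _t). \end{aligned}$$

Integrating over [0, T] gives (6), with equality if and only if, for all \(x,y \in \mathscr {X}\) such that \(K(x,y)>0\) and almost every \(t \in [0, T]\), we have

$$\begin{aligned} \psi _t(y) - \psi _t(x) = \log \rho _t(x) - \log \rho _t(y), \end{aligned}$$

which, by (5), is equivalent to saying that for almost every t and for every x we have

$$\begin{aligned} \dot{\rho }_t(x) = \sum _{y} K(x,y)\big (\rho _t(y)-\rho _t(x)\big ), \end{aligned}$$

that is, \(\{\rho _t\}\) solves (1).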
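Differentiating (6) in time, the computation in this proof shows that \(\frac{d}{dt}\mathscr {H}(\rho _t) + \frac{1}{2}\mathscr {I}(\rho _t) + \frac{1}{2}\mathscr {A}(\rho _t,\psi _t)\) equals \(\frac{1}{4}\sum _{x,y}(\nabla \log \rho _t + \nabla \psi _t)^2\hat{\rho }_tK\nu \ge 0\). The sketch below (NumPy; random reversible chain; the helper `edi_rate` is ours) evaluates this rate for the true velocity \(\dot{\rho } = \sum _y K(\cdot ,y)(\rho (y)-\rho (\cdot ))\) of (1), where it vanishes, and for a perturbed velocity with the same total mass, where it is strictly positive.

```python
import numpy as np

rng = np.random.default_rng(4)
N = 5
nu = rng.random(N) + 0.1
nu /= nu.sum()
c = rng.random((N, N))
c = (c + c.T) / 2
np.fill_diagonal(c, 0.0)
K = c / nu[:, None]
L = K - np.diag(K.sum(axis=1))


def hat(rho):
    a, b = rho[:, None], rho[None, :]
    with np.errstate(divide="ignore", invalid="ignore"):
        lam = (a - b) / (np.log(a) - np.log(b))
    return np.where(a == b, a, lam)


def grad(f):
    return f[None, :] - f[:, None]


def edi_rate(rho, rho_dot):
    """d/dt H + I/2 + A/2 at one time, where psi solves the continuity equation (5)."""
    W = hat(rho) * K
    M = W - np.diag(W.sum(axis=1))
    psi, *_ = np.linalg.lstsq(M, -rho_dot, rcond=None)
    weight = W * nu[:, None]                               # hat rho(x,y) K(x,y) nu(x)
    dH = float((nu * rho_dot * np.log(rho)).sum())         # uses sum_x rho_dot nu = 0
    fisher = 0.5 * float((grad(np.log(rho)) ** 2 * weight).sum())
    action = 0.5 * float((grad(psi) ** 2 * weight).sum())
    return dH + 0.5 * fisher + 0.5 * action


rho = rng.random(N) + 0.1
rho /= (nu * rho).sum()
delta = rng.standard_normal(N)
delta -= (nu * delta).sum()                                # keep sum_x rho_dot(x) nu(x) = 0

print(edi_rate(rho, L @ rho))            # ~ 0: the Markov flow (1) gives equality in (6)
print(edi_rate(rho, L @ rho + delta))    # > 0 for any other velocity
```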

3 Scaling Limits and Gradient Flows

With the formulation of Proposition 2.2, we can use the approach of Sandier and Serfaty [19] to study convergence of sequences of Markov chains to a scaling limit. Let \((K_n)\) be a sequence of reversible Markov kernels on finite spaces \(\mathscr {X}_n\), and let \((\nu _n)\) be the sequence of invariant measures on \(\mathscr {X}_n\). Since we wish to investigate the asymptotic behaviour of the sequence of random processes, it is much more convenient to work in a single space \(\mathscr {X}\) that contains all the \(\mathscr {X}_n\). Hence we shall assume that we are given a space \(\mathscr {X}\) and a collection of embeddings \(\mathbf {p}_n : \mathscr {X}_n \longrightarrow \mathscr {X}\). In practice, the choice of \(\mathscr {X}\) and \(\mathbf {p}_n\) is suggested by the model under investigation. In the next section, which is focused on the simple exclusion process on the torus, the embeddings will map a configuration \(\eta \) onto the associated empirical measure \(\pi ^n(\eta )\) (see (12), Sect. 4.1). Such embeddings immediately define embeddings of \(\mathscr {P}(\mathscr {X}_n)\) into \(\mathscr {P}(\mathscr {X})\).

In order to simplify the exposition below, we adopt the following convention: whenever we say that a sequence \((x_n)\) of elements of \(\mathscr {X}_n\) converges to \(x \in \mathscr {X}\), we shall mean that \(\mathbf {p}_n(x_n) \longrightarrow x\) as n goes to infinity. In particular, the topology used for convergence is implicitly the topology of \(\mathscr {X}\), which is assumed to be a separable complete metric space. The strategy is to characterize possible candidates for the limit as gradient flows. For that purpose we give a definition of minimizing-movement curves in the metric setting:

Definition 3.1

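Let \((\mathscr {X},d)\) be a complete metric space. The gradient flows of an energy functional \(\mathscr {H} : \mathscr {X}\rightarrow \mathbb {R}\) with respect to the metric d are the curves \(\{m_t\}\) such that, for all \(T>0\),

$$\begin{aligned} \mathscr {H}(m_T) - \mathscr {H}(m_0) + \frac{1}{2}\int _0^T g(m_t)\,dt + \frac{1}{2}\int _0^T |\dot{m}_t|^2\,dt = 0, \end{aligned}$$

where \(|\dot{m}_t|\) denotes the metric derivative of the curve and g is the square of the local slope of \(\mathscr {H}\), defined as

$$\begin{aligned} g(m) := \Big (\limsup _{m' \rightarrow m} \frac{\big (\mathscr {H}(m) - \mathscr {H}(m')\big )^+}{d(m,m')}\Big )^2. \end{aligned}$$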
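For a smooth function on \(\mathbb {R}^d\) with the Euclidean distance, the local slope \(\limsup _{y \rightarrow x}(V(x)-V(y))^+/|x-y|\) reduces to \(|\nabla V(x)|\), so that its square is \(|\nabla V(x)|^2\), consistently with the role of the entropy production discussed after Proposition 2.2. The sketch below (NumPy; the potential, the finite radius r and the finite set of directions used to approximate the limsup are our choices) estimates the slope directly from its definition.

```python
import numpy as np

a = np.array([1.0, 3.0])


def V(x):
    """Quadratic energy V(x) = 0.5 * sum(a * x**2) on R^2 (illustrative choice)."""
    return 0.5 * np.sum(a * x**2, axis=-1)


def local_slope(x, r=1e-6, n_dirs=3600):
    """Approximate limsup_{y -> x} (V(x) - V(y))^+ / |x - y| on a circle of radius r."""
    theta = np.linspace(0.0, 2 * np.pi, n_dirs, endpoint=False)
    u = np.stack([np.cos(theta), np.sin(theta)], axis=1)
    decrease = V(x) - V(x + r * u)
    return float(np.max(np.maximum(decrease, 0.0)) / r)


x = np.array([1.0, -2.0])
slope = local_slope(x)
print(slope, np.linalg.norm(a * x))      # both close to sqrt(37)
g = slope**2                             # squared slope, close to |grad V(x)|^2 = 37
```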

Remark 3.1

This is not a complete definition. To make it correct, we should introduce the notion of absolutely continuous curves, whose slopes are well defined. This is not a real issue here, since we shall only use it for reversible Markov chains (for which the notions have already been well defined previously for curves of strictly positive probability measures) and the heat equation, for which smooth curves of strictly positive functions do not cause any issue (see the next example). We refer to [3, 19] for a more rigorous discussion of the issues in the metric setting.

Example 3.1

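(Heat equation) Let us consider the parabolic PDE

$$\begin{aligned} \partial _t m(t,\theta ) = \partial ^2_{\theta } m(t,\theta ), \quad (t,\theta ) \in \mathbb {R}_+\times \mathbb {T}. \end{aligned}$$(7)

We know from [2] that (7) is associated to a gradient flow, since along its solutions we have, for any \(T>0\),

$$\begin{aligned}&\int _\mathbb {T}h(m(T,\theta ))d\theta - \int _\mathbb {T}h(m(0,\theta ))d\theta + \\&\qquad \qquad \frac{1}{2}\int _0^T{\int _\mathbb {T}{m(1-m)\Big (\frac{\partial (h'(m))}{\partial \theta }\Big )^2d\theta }dt} + \frac{1}{2}\int _0^T{\Vert \dot{m}_t\Vert ^2_{-1,m}\; dt} = 0, \end{aligned}$$

with \(h(x) = x\log x + (1-x)\log (1-x)\) and, given \(u:[0,1] \rightarrow \mathbb {R}\),

$$\begin{aligned} \Vert u\Vert ^2_{-1,m} := \sup _J \Big \{ 2\int _\mathbb {T}u(\theta )J(\theta )\,d\theta - \int _\mathbb {T}m(\theta )(1-m(\theta ))\big (\partial _\theta J(\theta )\big )^2 d\theta \Big \}, \end{aligned}$$

where the supremum is over all smooth test functions J.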
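Along solutions of (7), \(\frac{d}{dt}\int _\mathbb {T}h(m)\,d\theta = -\int _\mathbb {T}m(1-m)(\partial _\theta h'(m))^2d\theta \), and the supremum defining \(\Vert \dot{m}_t\Vert ^2_{-1,m}\) is attained at \(J = -h'(m_t)\), where it equals the same dissipation; the gradient flow identity of Example 3.1 therefore reduces to an energy balance. The sketch below (NumPy; explicit finite differences on a periodic grid, with grid size, time step and initial profile chosen by us) checks this balance numerically, using that \(m(1-m)(\partial _\theta h'(m))^2 = \partial _\theta m\,\partial _\theta h'(m)\).

```python
import numpy as np


def h(x):
    return x * np.log(x) + (1 - x) * np.log(1 - x)


def h_prime(x):
    return np.log(x / (1 - x))


M = 100                                    # grid points on the torus [0, 1)
dx = 1.0 / M
theta = np.arange(M) * dx
m = 0.5 + 0.3 * np.sin(2 * np.pi * theta)  # initial profile m_0 with values in (0, 1)
dt = 0.2 * dx**2                           # explicit Euler is stable for dt <= dx**2 / 2
n_steps = 1000


def free_energy(m):
    """int h(m) dtheta (the constant h(int m) is omitted: mass is conserved)."""
    return np.sum(h(m)) * dx


def dissipation(m):
    """Discrete int m (1 - m) (d/dtheta h'(m))^2 dtheta = int dm/dtheta * d h'(m)/dtheta."""
    dm = np.roll(m, -1) - m
    dh = h_prime(np.roll(m, -1)) - h_prime(m)
    return np.sum(dm * dh) / dx


H0 = free_energy(m)
total_dissipation = 0.0
for _ in range(n_steps):
    total_dissipation += dt * dissipation(m)
    laplacian = (np.roll(m, -1) - 2 * m + np.roll(m, 1)) / dx**2
    m = m + dt * laplacian

balance = free_energy(m) - H0 + total_dissipation
print(balance, total_dissipation)          # balance is small compared to the dissipation
```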

We now state the main result of this section. Hereafter, when we assert that a sequence of curves of probability measures (in \(\mathscr {P}(\mathscr {X}_n) \hookrightarrow \mathscr {P}(\mathscr {X})\)) converges to a deterministic curve \(\{m_t\}\) (in \(\mathscr {X}\)), we mean that it converges to a curve of Dirac measures \(\{\delta _{m_t}\}\). Definition 4.1 below gives a more precise meaning in the case of particle systems. It is important to stress that we study convergence of probability measures, which are deterministic objects.

Theorem 3.1

Let \((a_n)\) be an increasing diverging sequence of positive numbers. We first assume that the topology on \(\mathscr {P}(\mathscr {X})\) has the following property:

\((\mathbf {P})\) For any sequence \((\rho _t^n\nu _n)\) of smooth curves of positive probability measures that converges to some deterministic curve \(\{m_t\}\), the following inequalities hold:

$$\begin{aligned} \liminf _{n \rightarrow \infty }&\frac{1}{a_n}{{\mathrm{Ent}}}_{\nu _n}(\rho _T^n) \ge \mathscr {H}(m_T)\end{aligned}$$(8)$$\begin{aligned} \liminf _{n \rightarrow \infty }&\frac{1}{a_n}\int _0^T{\mathscr {I}_n(\rho _t^n)dt} \ge \int _0^T{g(m_t)dt} \end{aligned}$$(9)$$\begin{aligned} \liminf _{n \rightarrow \infty }&\frac{1}{a_n}\int _0^T{\mathscr {A}_n(\rho _t^n, \psi _{t}^n)dt} \ge \int _0^T{|\dot{m}_t|^2dt}, \end{aligned}$$(10)where \(\psi _t^n\) is such that \((\rho _t^n,\psi _t^n)\) solves (5).

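Now consider a sequence \((\rho _t^n\nu _n)\) of gradient flows (so that there is equality in (6)), assume that the initial sequence \((\rho _0^n\nu _n)\) converges in distribution to some \(m_0\), and that moreover

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{a_n}{{\mathrm{Ent}}}_{\nu _n}(\rho _0^n) = \mathscr {H}(m_0). \end{aligned}$$(11)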

Then, any possible weak limit \(\{m_t\}\) of \((\rho _t^n\nu _n)\) is almost surely a gradient flow of the energy \(\mathscr {H}\), starting from \(m_0\). In particular, if gradient flows starting from a given initial data are unique, \((\rho ^n_t\nu _n)\) weakly converges to a Dirac measure concentrated on the unique gradient flow of \(\mathscr {H}\) starting from \(m_0\).

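Moreover, for any \(t \in [0,T]\), we have

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{a_n}{{\mathrm{Ent}}}_{\nu _n}(\rho _t^n) = \mathscr {H}(m_t). \end{aligned}$$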

Above \((a_n)\) is a sequence of weights that corresponds to the correct scaling of the system. For particle systems on the discrete torus of length n in dimension d under diffusive scaling, we would take \(a_n = n^d\). This result is a slight variation of the abstract method developed in [19], to which we refer for more details. The main difference (apart from the setting which is restricted to gradient flows in spaces of probability measures arising from reversible Markov chains) is that we consider curves of probability measures that converge to a deterministic curve, rather than any possible limit.

One of the interesting features of this technique is that it does not require an assumption of uniform semi-convexity on the sequence of relative entropies, which can be hard to establish for interacting particle systems (see [7] for the general theory of geodesic convexity of the entropy for Markov chains, and [9] for the study of this property for interacting particle systems on the complete graph). Such an assumption of semi-convexity is known as a lower bound on Ricci curvature for the Markov chain, by analogy with the situation for Brownian motion on a Riemannian manifold. For the simple exclusion on the discrete torus, it seems reasonable to conjecture that curvature is non-negative, but this is still an unsolved problem.

Proof

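First of all, for any weak limit \(\mathscr {Q}\) of the laws of the trajectories, we also have

$$\begin{aligned} \liminf _{n \rightarrow \infty }\frac{1}{a_n}{{\mathrm{Ent}}}_{\nu _n}(\rho _T^n) \ge \mathscr {Q}\big [\mathscr {H}(m_T)\big ], \qquad \liminf _{n \rightarrow \infty }\frac{1}{a_n}\int _0^T{\mathscr {I}_n(\rho _t^n)dt} \ge \mathscr {Q}\Big [\int _0^T{g(m_t)dt}\Big ] \end{aligned}$$

and

$$\begin{aligned} \liminf _{n \rightarrow \infty }\frac{1}{a_n}\int _0^T{\mathscr {A}_n(\rho _t^n, \psi _{t}^n)dt} \ge \mathscr {Q}\Big [\int _0^T{|\dot{m}_t|^2dt}\Big ], \end{aligned}$$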

where we denote by \(\{m_t\}\) a random trajectory with law \(\mathscr {Q}\). This is a direct consequence of the following lemma (whose proof is given below):

Lemma 3.2

Let \((f_n)\) be a sequence of real-valued, non-negative functions on a space \((\varOmega ,\mathbb {P})\), and assume that there exists a function f such that for any sequence of random variables \((X_n)\) that converges in law to a deterministic limit x, we have

$$\begin{aligned} \liminf _{n \rightarrow \infty } \mathbb {E}\big [f_n(X_n)\big ] \ge f(x). \end{aligned}$$

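Then, for any sequence \((X_n)\) of random variables that converges in law to a random variable \(X_{\infty }\), we have

$$\begin{aligned} \liminf _{n \rightarrow \infty } \mathbb {E}\big [f_n(X_n)\big ] \ge \mathbb {E}\big [f(X_{\infty })\big ]. \end{aligned}$$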

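We now use Proposition 2.2 with the gradient flows \(\{\rho _t^n\nu _n\}\), and pass to the limit in

$$\begin{aligned} 0 = \frac{1}{a_n}\Big ({{\mathrm{Ent}}}_{\nu _n}(\rho _T^n) - {{\mathrm{Ent}}}_{\nu _n}(\rho _0^n) + \frac{1}{2}\int _0^T \mathscr {I}_n(\rho _t^n)\,dt + \frac{1}{2}\int _0^T \mathscr {A}_n(\rho _t^n,\psi _t^n)\,dt\Big ), \end{aligned}$$

and therefore, using (11),

$$\begin{aligned} \mathscr {Q}\Big [\mathscr {H}(m_T) - \mathscr {H}(m_0) + \frac{1}{2}\int _0^T g(m_t)\,dt + \frac{1}{2}\int _0^T |\dot{m}_t|^2\,dt\Big ] \le 0. \end{aligned}$$

Since the above quantity is an expectation of a non-negative functional, we see that

$$\begin{aligned} \mathscr {H}(m_T) - \mathscr {H}(m_0) + \frac{1}{2}\int _0^T g(m_t)\,dt + \frac{1}{2}\int _0^T |\dot{m}_t|^2\,dt = 0 \quad \mathscr {Q}\text {-almost surely}. \end{aligned}$$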

This means that \(\mathscr {Q}\)-almost surely, \(\{m_t\}\) is a gradient flow of \(\mathscr {H}\). If uniqueness of gradient flows with initial condition \(m_0\) holds, convergence immediately follows.

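Convergence of the relative entropy at time T necessarily holds. Indeed, by the equality in (6), by (11) and by the lower bounds on the entropy production and the action obtained above,

$$\begin{aligned} \limsup _{n \rightarrow \infty }\frac{1}{a_n}{{\mathrm{Ent}}}_{\nu _n}(\rho _T^n) \le \mathscr {H}(m_0) - \mathscr {Q}\Big [\frac{1}{2}\int _0^T g(m_t)\,dt + \frac{1}{2}\int _0^T|\dot{m}_t|^2\,dt\Big ] = \mathscr {Q}\big [\mathscr {H}(m_T)\big ], \end{aligned}$$

which, combined with the lower bound on the entropy at time T, gives the convergence. Finally, it also holds at any other time \(t \in [0,T]\), since one can rewrite the same result on the time-interval [0, t].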

We still have to prove Lemma 3.2. This proof is taken from [6].

Proof

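(of Lemma 3.2) Consider a sequence \((X_n)\) that converges in law to a random variable \(X_{\infty }\). Using the almost-sure (Skorokhod) representation theorem, there exist random variables \((Y_n)\) and \(Y_{\infty }\) such that for any n, \(Y_n\) has the same law as \(X_n\), \(Y_{\infty }\) has the same law as \(X_{\infty }\), and \((Y_n)\) almost surely converges to \(Y_{\infty }\). If we condition the whole sequence on the event \(\{Y_{\infty } = y\}\), then \((Y_n)\) almost surely converges to y. Then we have, using Fatou’s lemma,

$$\begin{aligned} \liminf _{n \rightarrow \infty } \mathbb {E}\big [f_n(X_n)\big ] = \liminf _{n \rightarrow \infty } \mathbb {E}\big [f_n(Y_n)\big ] \ge \mathbb {E}\Big [\liminf _{n \rightarrow \infty } \mathbb {E}\big [f_n(Y_n) \,\big |\, Y_{\infty }\big ]\Big ] \ge \mathbb {E}\big [f(Y_{\infty })\big ] = \mathbb {E}\big [f(X_{\infty })\big ]. \end{aligned}$$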

4 Symmetric Simple Exclusion Process (SSEP)

4.1 Model: Definitions and Notations

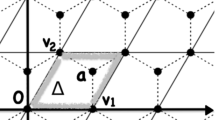

To simplify notation we consider the particle system on the one-dimensional torus \(\mathbb {T}_n=\{0,\ldots ,n-1\}\), but the result is valid in any dimension \(d\ge 1\). Let us define \(\mathscr {X}_n:=\{0,1\}^{\mathbb {T}_n}\), \(\mathscr {X}:=\{0,1\}^{\mathbb {Z}}\), and \(\mathbb {T}= [0,1)\) the continuous torus. We construct a Markov process \(\{\eta _t^n \; ; \; t \ge 0 \}\) on the state space \(\mathscr {X}_n\), whose configurations \(\eta \in \mathscr {X}_n\) are interpreted as follows:

- \(\eta (i)=1\) if there is a particle at site \(i \in \mathbb {T}_n\),

- \(\eta (i)=0\) if the site i is empty,

- each particle waits, independently of the others, an exponential time and then jumps to one of its neighbouring sites with probability 1/2, provided that the chosen site is empty.

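We are looking at the evolution of the Markov process in the diffusive time scale, meaning that time is accelerated by \(n^2\). The generator is given for \(f:\mathscr {X}_n\rightarrow \mathbb {R}\) by

$$\begin{aligned} \mathscr {L}_n f(\eta ) = \sum _{i \in \mathbb {T}_n} \big (f(\eta ^{i,i+1}) - f(\eta )\big ), \end{aligned}$$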

where \(\eta ^{i,j}\) is the configuration obtained from \(\eta \) by exchanging the occupation variables \(\eta (i)\) and \(\eta (j)\). The hydrodynamic behaviour of the SSEP is well known, and we refer the reader to [11] for a survey. Let \(\nu _\alpha ^n\) be the Bernoulli product measure of parameter \(\alpha \in (0,1)\); these measures are invariant for the dynamics. Under \(\nu _\alpha ^n\), the variables \(\{\eta (i)\}_{i \in \mathbb {T}_n}\) are independent with marginals given by

$$\begin{aligned} \nu _\alpha ^n\{\eta \; ; \; \eta (i)=1\} = \alpha = 1 - \nu _\alpha ^n\{\eta \; ; \; \eta (i)=0\}. \end{aligned}$$

Let us fix once and for all \(\alpha \in (0,1)\) and denote by \(\rho _t^n\) the probability density of the law of \(\eta _t^n\) (whose time evolution is generated by \(n^2\mathscr {L}_n\)) with respect to \(\nu _\alpha ^n.\)
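The invariance of \(\nu _\alpha ^n\) can be checked by brute force on a small torus. The sketch below (NumPy and itertools; n = 4 and \(\alpha = 0.3\) are arbitrary, and it assumes the convention that each bond \(\{i,i+1\}\) exchanges its occupation variables at rate \(n^2\), which is one way to realize the accelerated dynamics \(n^2\mathscr {L}_n\)) builds the full rate matrix and verifies detailed balance, hence stationarity, of the Bernoulli product measure.

```python
import itertools

import numpy as np

n, alpha = 4, 0.3
configs = [tuple(c) for c in itertools.product((0, 1), repeat=n)]
index = {eta: k for k, eta in enumerate(configs)}


def bernoulli(eta):
    """nu_alpha^n(eta) = prod_i alpha^eta(i) (1 - alpha)^(1 - eta(i))."""
    return float(np.prod([alpha if e == 1 else 1 - alpha for e in eta]))


def exchange(eta, i):
    """eta^{i,i+1}: swap the occupation variables at sites i and i+1 (periodic)."""
    j = (i + 1) % n
    new = list(eta)
    new[i], new[j] = eta[j], eta[i]
    return tuple(new)


# Rate matrix of the accelerated dynamics (assumed: rate n**2 per bond exchange).
Q = np.zeros((len(configs), len(configs)))
for k, eta in enumerate(configs):
    for i in range(n):
        target = index[exchange(eta, i)]
        if target != k:
            Q[k, target] += n**2
    Q[k, k] = -Q[k].sum()

nu = np.array([bernoulli(eta) for eta in configs])
flux = nu[:, None] * Q                   # flux[x, y] = nu(x) Q(x, y)
print(np.isclose(nu.sum(), 1.0))         # nu is a probability measure
print(np.allclose(flux, flux.T))         # detailed balance (reversibility)
print(np.allclose(nu @ Q, 0.0))          # stationarity
```

Detailed balance holds here because an exchange preserves the number of particles, and \(\nu _\alpha ^n(\eta )\) only depends on that number.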

To prove convergence, we need to embed our particle configurations in a single metric space. For each configuration \(\eta \in \mathscr {X}_n\), we construct a measure on \(\mathbb {T}\) associated to \(\eta \), denoted by \(\pi ^n(\eta )\). We do it here through the empirical measures:

$$\begin{aligned} \pi ^n(\eta )(d\theta ) := \frac{1}{n}\sum _{i \in \mathbb {T}_n} \eta (i)\,\delta _{i/n}(d\theta ), \end{aligned}$$(12)

where \(\delta _\theta \) stands for the Dirac measure concentrated on \(\theta \in \mathbb {T}\). Let us denote by \(\mathscr {M}_+=\mathscr {M}_+(\mathbb {T})\) the space of finite positive measures on \(\mathbb {T}\) endowed with the weak topology. For each n, the map \(\pi ^n:\mathscr {X}_n\rightarrow \mathscr {M}_+\) is injective (and trivially continuous, \(\mathscr {X}_n\) being finite). Our goal is to prove the convergence of the flow of measures \(\pi _t^n := \pi ^n(\eta _t^n)\); since \(\pi ^n\) is injective, \(\{\pi _t^n\}\) inherits the Markov property from \(\{\eta _t^n\}\).
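To see the empirical measure (12) at work, the following sketch (NumPy; n = 500, the profile \(m_0(\theta ) = 0.5 + 0.4\cos (2\pi \theta )\), the test function \(G(\theta ) = \cos (2\pi \theta )\), the time t = 0.005 and the seed are all our choices) simulates the accelerated SSEP by uniformization, again assuming that each bond exchanges at rate \(n^2\), and compares \(\langle \pi _t^n, G\rangle \) with \(\frac{0.4}{2}e^{-\lambda _n t}\), its exact expectation, where \(\lambda _n = 2n^2(1-\cos (2\pi /n)) \approx 4\pi ^2\). Agreement is only up to random fluctuations of order \(n^{-1/2}\).

```python
import numpy as np

rng = np.random.default_rng(3)
n, t, beta = 500, 0.005, 0.4
sites = np.arange(n) / n
m0 = 0.5 + beta * np.cos(2 * np.pi * sites)        # initial profile with values in [0.1, 0.9]
eta = (rng.random(n) < m0).astype(int)             # product measure with slowly varying density


def pairing(eta, G):
    """<pi^n(eta), G> = (1/n) sum_i G(i/n) eta(i), cf. the empirical measure (12)."""
    return float(np.sum(G(sites) * eta) / n)


def G(theta):
    return np.cos(2 * np.pi * theta)


n_particles = int(eta.sum())
# Uniformisation (assumed rate n**2 per bond): the number of exchange attempts up to
# time t is Poisson(n**3 * t), and each attempt picks a bond uniformly at random.
state = eta.tolist()
for i in rng.integers(0, n, size=rng.poisson(n**3 * t)).tolist():
    j = (i + 1) % n
    state[i], state[j] = state[j], state[i]
eta = np.array(state)

lam = 2 * n**2 * (1 - np.cos(2 * np.pi / n))       # eigenvalue of the lattice Laplacian
prediction = 0.5 * beta * np.exp(-lam * t)          # expected value of <pi_t^n, G>
print(pairing(eta, G), prediction)
```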

We start by properly defining two notions of convergence. For any function \(G:\mathbb {T}\rightarrow \mathbb {R}\) and any measure \(\pi \) on \(\mathbb {T}\), we denote by \(\langle \pi ,G\rangle \) the integral of G with respect to the measure \(\pi \). In the following \(T>0\) is fixed.

Definition 4.1

Let \((\pi _t^n)\) be a sequence of flows of measures, each element belonging to the Skorokhod space \(\mathscr {D}([0,T],\mathscr {M}_+)\). For each n, let \(\mathscr {Q}_n\) be the probability measure on \(\mathscr {D}([0,T],\mathscr {M}_+)\) corresponding to \(\{\pi _t^n\; ; \; t\in [0,T]\}\).

1. We say that the sequence \((\pi _t^n)\) converges to the deterministic flow \(\{\pi _t\}\) if the probability measure \(\mathscr {Q}_n\) converges weakly to the Dirac probability measure concentrated on the deterministic flow \(\{\pi _t\}\).

2. Fix \(t \in [0,T]\). We say that \((\pi _t^n)\) converges in probability to the deterministic measure \(\pi _t \in \mathscr {M}_+\) if, for all smooth test functions \(G: \mathbb {T}\rightarrow \mathbb {R}\), and all \(\delta >0\),

$$\begin{aligned} \mathscr {Q}_n\Big [ \big | \big \langle \pi _t^n, G \big \rangle - \big \langle \pi _t, G \big \rangle \big | > \delta \Big ] \xrightarrow [n\rightarrow \infty ]{} 0. \end{aligned}$$(13)

The next proposition relates the two notions above: when the limiting flow is continuous, the first one implies the second one.

Proposition 4.1

Let \((\pi _t^n)\) be a sequence of flows of measures which converges to a deterministic flow \(\{\pi _t\}\). Assume that \(t \in [0,T] \mapsto \pi _t \in \mathscr {M}_+\) is continuous (with respect to the weak topology). Then, for any \(t \in [0,T]\) fixed, \((\pi _t^n)\) converges in probability to \(\pi _t \in \mathscr {M}_+\).

Proof

By assumption, the limiting probability measure on \(\mathscr {D}([0,T],\mathscr {M}_+)\) is concentrated on the weakly continuous trajectory \(\{\pi _t\}\). Since the projection \(\{\pi _s\; ; \; s\in [0,T]\} \mapsto \pi _t\) from \(\mathscr {D}([0,T],\mathscr {M}_+)\) to \(\mathscr {M}_+\) is continuous at every continuous trajectory, the continuous mapping theorem shows that, for \(t \in [0,T]\), \((\pi _t^n)\) converges in distribution to \(\pi _t\). Since the latter is deterministic, this implies convergence in probability.

We now recall the main result, which we are going to prove in a different way. Recall that \(\pi _t^n\) is the empirical measure defined in (12) and \(\mathscr {Q}_n\) is the probability measure on \(\mathscr {D}([0,T],\mathscr {M}_+)\) corresponding to the flow \(\{\pi _t^n\}\).

Theorem 4.2

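(Hydrodynamic limits for the SSEP) Fix a density profile \(m_0: \mathbb {T}\rightarrow [0,1]\) and let \((\mu ^n)\) be a sequence of probability measures such that, under \(\mu ^n\), the sequence \((\pi _0^n(d\theta ))\) converges in probability to \(m_0(\theta )d\theta \). In other words,

$$\begin{aligned} \lim _{n \rightarrow \infty } \mu ^n\bigg [\eta \; ; \; \Big | \frac{1}{n}\sum _{i \in \mathbb {T}_n} G\big (\tfrac{i}{n}\big )\eta (i) - \int _\mathbb {T}G(\theta )m_0(\theta )\,d\theta \Big | > \delta \bigg ] = 0 \end{aligned}$$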

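for any \(\delta >0\) and any smooth function \(G: \mathbb {T}\rightarrow \mathbb {R}\). Assume moreover that this initial data is well-prepared, in the sense that the density \(\rho _0^n\) of \(\mu ^n\) with respect to \(\nu _\alpha ^n\) satisfies

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n}{{\mathrm{Ent}}}_{\nu _\alpha ^n}(\rho _0^n) = \int _\mathbb {T}h(m_0(\theta ))\,d\theta - h\Big (\int _\mathbb {T}m_0(\theta )\,d\theta \Big ), \end{aligned}$$(14)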

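where h has been defined in Example 3.1. Then, for any \(t>0\), the sequence \(\{\pi _t^n\}_{n \in \mathbb {N}}\) converges in probability to the deterministic measure \(\pi _t(d\theta )=m(t,\theta )d\theta \), where m is the solution to the heat equation (7) on \(\mathbb {R}_+\times \mathbb {T}\) with initial condition \(m(0,\cdot ) = m_0\). The entropy also converges:

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n}{{\mathrm{Ent}}}_{\nu _\alpha ^n}(\rho _t^n) = \int _\mathbb {T}h(m(t,\theta ))\,d\theta - h\Big (\int _\mathbb {T}m(t,\theta )\,d\theta \Big ). \end{aligned}$$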

Note that \(\int m(t,\theta )d\theta \) is actually a constant, given by the fixed density of particles. The convergence of the entropy is equivalent to the local Gibbs behavior (see [12]). Hence, the assumptions and conclusions are those obtained with the relative entropy method of [21]. However, the techniques and restrictions are the same as for the entropy method of [10]: we do not use smoothness of solutions to the hydrodynamic PDE, but we use the replacement lemma (see Sect. 4.3), which relies on the two-block estimate, rather than the one-block estimate alone as in [21].

4.2 The Gradient Flow Approach to Theorem 4.2

We are going to apply Theorem 3.1 to obtain Theorem 4.2. The main steps are as follows:

1. We first need to prove that the sequence \((\mathscr {Q}_n)\) is relatively compact, so that there exists a converging subsequence. Such an argument was already part of the entropy method of [10]. We refer to [11, Chap. 4, Sect. 2] for the proof in the context of the simple exclusion process.

2. In order to prove (8), we have to investigate the convergence of the relative entropy with respect to the invariant measure \(\nu _\alpha ^n\) towards the free energy associated to the limiting PDE (7), which in our case reads as

$$ \mathscr {H}(m)=\int _\mathbb {T}{h(m(\theta ))d\theta } - h\Big (\int _\mathbb {T}{m(\theta ) d\theta }\Big ). $$This result is actually equivalent to the large deviation principle for \(\nu ^n_{\alpha }\) (see for example [15]), and is standard (see [11]). Moreover, if our initial data is close (in relative entropy) to a slowly varying Bernoulli product measure \(\nu ^n_{m(\cdot )}\) associated to m (see Note 1), which satisfies \( \nu ^n_{m(\cdot )}\{\eta (i)=1\}=m(i/n), \) then its relative entropy with respect to \(\nu _\alpha ^n\) converges to the limiting free energy, so that (14) holds.

3. We prove the lower bound for the entropy production along curves (9) and the lower bound for the slopes (10) in Sect. 4.3.

4. When passing to the limit, we obtain that for any weak limit \(\mathscr {Q}\) of \((\mathscr {Q}_n)\),

$$\begin{aligned}&\mathscr {Q}\bigg [\int _\mathbb {T}h(m(T,\theta ))d\theta - \int _\mathbb {T}h(m(0,\theta ))d\theta + \\&\qquad \qquad \frac{1}{2}\int _0^T{\int _\mathbb {T}{m(1-m)\Big (\frac{\partial (h'(m))}{\partial \theta }\Big )^2d\theta }dt} + \frac{1}{2}\int _0^T{\Vert \dot{m}_t\Vert ^2_{-1,m}\; dt}\bigg ] \le 0. \end{aligned}$$Since the expression inside the expectation is non-negative for any curve, and vanishes exactly for solutions of the heat equation (this is their characterization as minimizing-movement curves), it vanishes \(\mathscr {Q}\)-almost surely, so that m is almost surely a solution to the heat equation. Uniqueness of solutions starting from \(m_0\) allows us to conclude.
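Step 2 above relies on the fact that relative entropy is additive over independent sites. The sketch below (NumPy and itertools; the profile \(m(\theta ) = 0.3 + 0.2\sin (2\pi \theta )\) and the sizes are our choices, and \(\alpha \) is taken equal to \(\int _\mathbb {T}m\) so that the limit matches \(\mathscr {H}\) as written) checks by brute-force enumeration that the relative entropy of \(\nu ^n_{m(\cdot )}\) with respect to \(\nu ^n_\alpha \) is the sum of one-site relative entropies, and then that \(\frac{1}{n}\) times this sum is close to \(\int _\mathbb {T}h(m) - h(\int _\mathbb {T}m)\) for large n.

```python
import itertools

import numpy as np


def h(x):
    return x * np.log(x) + (1 - x) * np.log(1 - x)


def site_entropy(p, q):
    """Relative entropy of Bernoulli(p) with respect to Bernoulli(q)."""
    return p * np.log(p / q) + (1 - p) * np.log((1 - p) / (1 - q))


def profile(theta):
    return 0.3 + 0.2 * np.sin(2 * np.pi * theta)


alpha = 0.3                                          # equals int_T m(theta) d theta

# 1) Brute force on a small torus: entropy of nu_{m(.)} with respect to nu_alpha.
n_small = 10
m = profile(np.arange(n_small) / n_small)
brute = 0.0
for eta in itertools.product((0, 1), repeat=n_small):
    e = np.array(eta)
    mu = np.prod(np.where(e == 1, m, 1 - m))
    ref = np.prod(np.where(e == 1, alpha, 1 - alpha))
    brute += mu * np.log(mu / ref)
print(np.isclose(brute, site_entropy(m, alpha).sum()))

# 2) Large n: (1/n) Ent is close to H(m) = int h(m) - h(int m).
n_large = 2000
per_site = site_entropy(profile(np.arange(n_large) / n_large), alpha).mean()
fine = profile(np.arange(200000) / 200000)
free_energy = h(fine).mean() - h(fine.mean())
print(per_site, free_energy)
```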

4.3 Bounds and Convergence

Here we prove that (9) and (10) are satisfied for the density \(\rho _{t}^n\) of the SSEP accelerated in time, assuming that the empirical measure \((\pi _t^n(d\theta ))\) converges to a deterministic curve \(m_t(\theta )d\theta \). Let us start with (10). The proof relies on a duality formula (Proposition 4.3) and on the replacement lemma (Lemma 4.4), which is commonly used in the literature (see for example [11]).

Proposition 4.3

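Consider a couple \((\rho _t, \psi _t)\) satisfying the continuity equation (5) for almost every \(t\ge 0\). For any function \(J: [0,T] \times \mathscr {X} \rightarrow \mathbb {R}\) that is smooth in time,

$$\begin{aligned} \frac{1}{2}\int _0^T \mathscr {A}(\rho _t,\psi _t)\,dt \ge&\sum _{x} J_T(x)\rho _T(x)\nu (x) - \sum _{x} J_0(x)\rho _0(x)\nu (x) - \int _0^T \sum _{x} \partial _t J_t(x)\rho _t(x)\nu (x)\,dt \\&- \frac{1}{4}\int _0^T \sum _{x,y} \big (J_t(y)-J_t(x)\big )^2\hat{\rho }_t(x,y)K(x,y)\nu (x)\,dt. \end{aligned}$$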

Proof

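From the continuity equation (5), we have

$$\begin{aligned} \frac{d}{dt}\sum _{x} J_t(x)\rho _t(x)\nu (x) = \sum _{x} \partial _t J_t(x)\rho _t(x)\nu (x) - \sum _{x} J_t(x)\nabla \cdot \big (\hat{\rho }_t\nabla \psi _t\big )(x)\nu (x). \end{aligned}$$

We symmetrize in x and y the last term (this is the integration by parts formula), and get

$$\begin{aligned} \frac{d}{dt}\sum _{x} J_t(x)\rho _t(x)\nu (x) =&\sum _{x} \partial _t J_t(x)\rho _t(x)\nu (x) + \frac{1}{2}\sum _{x,y} \nabla J_t(x,y)\nabla \psi _t(x,y)\hat{\rho }_t(x,y)K(x,y)\nu (x) \\ \le&\sum _{x} \partial _t J_t(x)\rho _t(x)\nu (x) + \frac{1}{4}\sum _{x,y} \big (\nabla J_t(x,y)\big )^2\hat{\rho }_t(x,y)K(x,y)\nu (x) + \frac{1}{2}\mathscr {A}(\rho _t,\psi _t), \end{aligned}$$

using \(ab \le \frac{1}{2}a^2 + \frac{1}{2}b^2\), and therefore, integrating over [0, T], we obtain the inequality of Proposition 4.3.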
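The two steps of this proof can be checked at a single time on a small chain. In the sketch below (NumPy; a random reversible chain, a random positive density \(\rho \), a random mass-preserving velocity \(\dot{\rho }\) and a random time-independent observable J, all hypothetical data), \(\psi \) is obtained from the continuity equation (5) by least squares. We verify that \(\sum _x J\dot{\rho }\nu \) equals the symmetrized expression and is bounded by \(\frac{1}{4}\sum (\nabla J)^2\hat{\rho }K\nu + \frac{1}{2}\mathscr {A}(\rho ,\psi )\), as well as the further bound obtained from \(\hat{\rho }(x,y) \le (\rho (x)+\rho (y))/2\), which is how (3) enters in the SSEP computation.

```python
import numpy as np

rng = np.random.default_rng(5)
N = 6
nu = rng.random(N) + 0.1
nu /= nu.sum()
c = rng.random((N, N))
c = (c + c.T) / 2
np.fill_diagonal(c, 0.0)
K = c / nu[:, None]                                   # reversible: nu(x) K(x, y) = c(x, y)


def hat(rho):
    a, b = rho[:, None], rho[None, :]
    with np.errstate(divide="ignore", invalid="ignore"):
        lam = (a - b) / (np.log(a) - np.log(b))
    return np.where(a == b, a, lam)


def grad(f):
    return f[None, :] - f[:, None]


rho = rng.random(N) + 0.1
rho /= (nu * rho).sum()
rho_dot = rng.standard_normal(N)
rho_dot -= (nu * rho_dot).sum()                       # mass-preserving velocity
J = rng.standard_normal(N)                            # time-independent observable

W = hat(rho) * K
psi, *_ = np.linalg.lstsq(W - np.diag(W.sum(axis=1)), -rho_dot, rcond=None)
weight = W * nu[:, None]                              # hat rho(x, y) K(x, y) nu(x)

d_dt = float((J * rho_dot * nu).sum())                # d/dt sum_x J rho_t nu
symmetrized = 0.5 * float((grad(J) * grad(psi) * weight).sum())
action = 0.5 * float((grad(psi) ** 2 * weight).sum())
young = 0.25 * float((grad(J) ** 2 * weight).sum()) + 0.5 * action
arith = (rho[:, None] + rho[None, :]) / 2 * K * nu[:, None]
young_arith = 0.25 * float((grad(J) ** 2 * arith).sum()) + 0.5 * action

print(np.isclose(d_dt, symmetrized), d_dt <= young, young <= young_arith)
```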

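To apply Proposition 4.3 to the SSEP, we consider observables of the form

$$\begin{aligned} J_t(\eta ) = \sum _{i \in \mathbb {T}_n} G\big (t,\tfrac{i}{n}\big )\eta (i), \end{aligned}$$(15)

for smooth functions \(G:[0,T] \times \mathbb {T}\rightarrow \mathbb {R}\). For any \(\ell \in \mathbb {N}\) and \(i\in \mathbb {T}_n\), we denote by \(\eta ^\ell (i)\) the empirical density of particles in a box of size \(2\ell +1\) centered at i:

$$\begin{aligned} \eta ^\ell (i) := \frac{1}{2\ell +1}\sum _{|j-i| \le \ell } \eta (j). \end{aligned}$$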

Hereafter we also denote by \(\tau _x\) the translation operator that acts on local functions \(g:\{0,1\}^{\mathbb {Z}}\rightarrow \mathbb {R}\) as \((\tau _x g)(\eta ):=g(\tau _x \eta )\), where \(\tau _x \eta \) is the configuration obtained from \(\eta \) by shifting: \((\tau _x \eta )(y)=\eta (x+y)\). The main tool that we are going to use is the well-known replacement lemma, which is a consequence of the averaging properties of the SSEP. We recall the main statement and refer the reader to [10, 11] for a proof:

Lemma 4.4

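(Replacement Lemma) Denote by \(\mathbb {P}_{\mu ^n}\) the probability measure on the Skorokhod space \(\mathscr {D}([0,T],\mathscr {X}_n)\) induced by the Markov process \(\{\eta _{t}^n\}_{t\ge 0}\) starting from \(\mu ^n\). Then, for every \(\delta >0\) and every local function g,

$$\begin{aligned} \limsup _{\varepsilon \rightarrow 0}\,\limsup _{n \rightarrow \infty }\,\mathbb {P}_{\mu ^n}\bigg [\int _0^T \frac{1}{n}\sum _{i \in \mathbb {T}_n} \tau _i V_{\varepsilon n}(\eta _t^n)\,dt > \delta \bigg ] = 0, \end{aligned}$$

where

$$\begin{aligned} V_\ell (\eta ) := \bigg | \frac{1}{2\ell +1}\sum _{|j| \le \ell } \tau _j g(\eta ) - \tilde{g}\big (\eta ^\ell (0)\big ) \bigg |, \end{aligned}$$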

and \(\tilde{g} : (0,1) \rightarrow \mathbb {R}\) corresponds to the expected value: \(\tilde{g}(\alpha ):= \int g(\eta ) d\nu _\alpha (\eta ).\)
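To make the quantities in Lemma 4.4 concrete, the sketch below (NumPy and itertools; \(\alpha = 0.3\), \(\ell = 20000\) and the seed are our choices) takes \(g(\eta ) = \eta (0)(1-\eta (1))\), the local function that appears in the SSEP computation below, computes \(\tilde{g}(\alpha ) = \alpha (1-\alpha )\) by exact enumeration, and evaluates \(V_\ell \) at the origin for a configuration sampled from \(\nu _\alpha \). This only illustrates the law of large numbers under the invariant measure, which is the static ingredient of the lemma; the lemma itself is a statement about the dynamics and is not reproduced here.

```python
import itertools

import numpy as np

alpha, ell = 0.3, 20000
rng = np.random.default_rng(6)


def g(eta0, eta1):
    """Local function g(eta) = eta(0) (1 - eta(1)), written in terms of eta(0), eta(1)."""
    return eta0 * (1 - eta1)


# tilde g(alpha) = E_{nu_alpha}[g], by enumeration of the two sites g depends on.
tilde_g = sum(
    g(e0, e1) * (alpha if e0 else 1 - alpha) * (alpha if e1 else 1 - alpha)
    for e0, e1 in itertools.product((0, 1), repeat=2)
)
print(np.isclose(tilde_g, alpha * (1 - alpha)))


def V(eta, ell):
    """V_ell(eta) = |(2l+1)^{-1} sum_{|j|<=l} tau_j g(eta) - tilde g(eta^l(0))|.

    eta stores the sites -l, ..., l+1 at positions 0, ..., 2l+1.
    """
    window = eta[: 2 * ell + 1]                        # sites -l, ..., l
    shifted = eta[1 : 2 * ell + 2]                     # sites -l+1, ..., l+1
    local_average = np.mean(g(window, shifted))        # average of tau_j g, |j| <= l
    density = window.mean()                            # eta^l(0)
    return abs(local_average - density * (1 - density))


eta = (rng.random(2 * ell + 2) < alpha).astype(int)    # sample of nu_alpha on 2l+2 sites
print(V(eta, ell))                                     # small for large ell
```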

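We are now able to conclude the proof. We treat separately the terms in the right-hand side of Proposition 4.3, taking J as in (15). Since, for any fixed t, \((\pi _t^n(d\theta ))\) converges in probability to \(\pi _t(d\theta )=m_t(\theta )d\theta \), and since \(\frac{1}{n}J_t = \langle \pi _t^n, G(t,\cdot )\rangle \) is bounded by \(\Vert G\Vert _\infty \), we have

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n}\sum _{\eta \in \mathscr {X}_n} J_t(\eta )\rho _t^n(\eta )\nu _\alpha ^n(\eta ) = \int _\mathbb {T}G(t,\theta )m_t(\theta )\,d\theta . \end{aligned}$$

And the same happens at initial time for \(\rho _0^n\). Similarly,

$$\begin{aligned} \lim _{n \rightarrow \infty } \frac{1}{n}\int _0^T \sum _{\eta \in \mathscr {X}_n} \partial _t J_t(\eta )\rho _t^n(\eta )\nu _\alpha ^n(\eta )\,dt = \int _0^T\int _\mathbb {T}\partial _t G(t,\theta )m_t(\theta )\,d\theta \,dt. \end{aligned}$$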

Then, we write

We now use the logarithmic inequality (3) and write that the latter is smaller than

From the invariance property of \(\nu _\alpha ^n\) with respect to the change of variables \(\eta \rightarrow \eta ^{i,i+1}\), and from the smoothness of G we get that the above quantity is equal to

where \(G'\) denotes the space derivative of G.

Above we want to replace \(\eta (i)(1-\eta (i+1))\) by \(m(i/n)(1-m(i/n))\). For \(\varepsilon >0\) we define the approximation of the identity \(i_\varepsilon (u)=(2\varepsilon )^{-1} \mathbf {1}\{|u|\le \varepsilon \}.\) With that notation, \(\eta _{t}^{\varepsilon n}(0)\) is very close to \(\langle \pi _t^n, i_\varepsilon \rangle \). Let us denote \(h(\eta ):=\eta (0)(1-\eta (1))\). Since G is a smooth function, (16) equals

A summation by parts shows that the previous term can be written as

By Lemma 4.4, this expression is then equal to

where \(R_{n,\varepsilon ,T}\) vanishes in probability as n goes to infinity and then \(\varepsilon \) goes to 0. From the convergence in probability of \((\pi _t^n)\), the last expression converges to

As a result, since the convergences above are valid for any smooth function G,

In the same way, we need to prove

Since the arguments are essentially the same as for the slopes, we shall be briefer in the exposition. We denote

By duality, we have

Using the replacement lemma, passing to the supremum in G, and to the limit,

and this is exactly what we were seeking to prove.

Notes

1. This is also the assumption used to make Yau’s relative entropy method work, see [21].

References

[1] Adams, S., Dirr, N., Peletier, M.A., Zimmer, J.: From a large-deviations principle to the Wasserstein gradient flow: a new micro-macro passage. Commun. Math. Phys. 307, 791–815 (2011)

[2] Adams, S., Dirr, N., Peletier, M.A., Zimmer, J.: Large deviations and gradient flows. Phil. Trans. R. Soc. A 371(2005), 0341 (2013)

[3] Ambrosio, L., Gigli, N., Savaré, G.: Gradient Flows in Metric Spaces and in the Space of Probability Measures. Lectures in Mathematics ETH Zurich, 2nd edn. Birkhäuser Verlag, Basel (2008)

[4] Ambrosio, L., Savaré, G., Zambotti, L.: Existence and stability for Fokker-Planck equations with log-concave reference measure. Probab. Theory Relat. Fields 145, 517–564 (2009)

[5] De Giorgi, E., Marino, A., Tosques, M.: Problems of evolution in metric spaces and maximal decreasing curve. Att. Acc. Naz. Linc. R. Cl. Sci. Fis. Mat. Nat. 8(68)(3), 180–187 (1980)

[6] Erbar, M., Fathi, M., Laschos, V., Schlichting, A.: Gradient flow structure for McKean-Vlasov equations on discrete spaces. arXiv:1601.08098

[7] Erbar, M., Maas, J.: Ricci curvature of finite Markov chains via convexity of the entropy. Arch. Ration. Mech. Anal. 206(3), 997–1038 (2012)

[8] Fathi, M.: A gradient flow approach to large deviations for diffusion processes. Journal de Mathématiques Pures et Appliquées (2014). arXiv:1405.3910

[9] Fathi, M., Maas, J.: Entropic Ricci curvature bounds for discrete interacting systems. Ann. Appl. Probab. (2015)

[10] Guo, M.Z., Papanicolaou, G.C., Varadhan, S.R.S.: Nonlinear diffusion limit for a system with nearest neighbor interactions. Commun. Math. Phys. 118, 31–59 (1988)

[11] Kipnis, C., Landim, C.: Scaling Limits of Interacting Particle Systems. Grundlehren der Mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], vol. 320. Springer, Berlin (1999)

[12] Kosygina, E.: The behavior of the specific entropy in the hydrodynamic scaling limit. Ann. Probab. 29(3), 1086–1110 (2001)

[13] Maas, J.: Gradient flows of the entropy for finite Markov chains. J. Funct. Anal. 261(8), 2250–2292 (2011)

[14] Maas, J., Mielke, A.: Gradient structures for chemical reactions with detailed balance: I. Modeling and large-volume limit (2015)

[15] Mariani, M.: A Gamma-convergence approach to large deviations. Preprint arXiv:1204.0640v1 (2012)

[16] Mielke, A.: Geodesic convexity of the relative entropy in reversible Markov chains. Calc. Var. Partial Differ. Equ. 48(1–2), 1–31 (2013)

[17] Otto, F.: The geometry of dissipative evolution equations: the porous medium equation. Commun. Partial Differ. Equ. 26(1–2), 101–174 (2001)

[18] Sandier, E., Serfaty, S.: Gamma-convergence of gradient flows with applications to Ginzburg-Landau. Commun. Pure Appl. Math. 57(12), 1627–1672 (2004)

[19] Serfaty, S.: Gamma-convergence of gradient flows on Hilbert and metric spaces and applications. Discrete Contin. Dyn. Syst. A 31(4), 1427–1451 (2011)

[20] Vazquez, J.L.: The Porous Medium Equation: Mathematical Theory. Oxford Mathematical Monographs. A Clarendon Press Publication, Oxford (2006)

[21] Yau, H.T.: Relative entropy and hydrodynamics of Ginzburg-Landau models. Lett. Math. Phys. 22, 63–80 (1991)

Acknowledgments

M.F. : I would like to thank Hong Duong, Matthias Erbar, Vaios Laschos and André Schlichting for discussions on convergence of gradient flows. Part of this work was done while I was staying at the Hausdorff Institute for Mathematics in Bonn, whose support is gratefully acknowledged. I also benefited from funding from GDR MOMAS and from NSF FRG grant DMS-1361185. M.S.: This work has been supported by the French Ministry of Education through the grant ANR (EDNHS), and also by CAPES (Brazil) and IMPA (Instituto de Matematica Pura e Aplicada, Rio de Janeiro) through a post-doctoral fellowship.


Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Fathi, M., Simon, M. (2016). The Gradient Flow Approach to Hydrodynamic Limits for the Simple Exclusion Process. In: Gonçalves, P., Soares, A. (eds) From Particle Systems to Partial Differential Equations III. Springer Proceedings in Mathematics & Statistics, vol 162. Springer, Cham. https://doi.org/10.1007/978-3-319-32144-8_8


DOI: https://doi.org/10.1007/978-3-319-32144-8_8


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-32142-4

Online ISBN: 978-3-319-32144-8

eBook Packages: Mathematics and Statistics (R0)