Abstract

Lie group integrators preserve by construction the Lie group structure of a nonlinear configuration space. In multibody dynamics, they support a representation of (large) rotations in a Lie group setting that is free of singularities. The resulting equations of motion are differential equations on a manifold with tangent spaces being parametrized by the corresponding Lie algebra. In the present paper, we discuss the time discretization of these equations of motion by a generalized-\(\alpha \) Lie group integrator for constrained systems and show how to exploit in this context the linear structure of the Lie algebra. This linear structure allows a very natural definition of the generalized-\(\alpha \) Lie group integrator, an efficient practical implementation and a very detailed error analysis. Furthermore, the Lie algebra approach may be combined with analytical transformations that help to avoid an undesired order reduction phenomenon in generalized-\(\alpha \) time integration. After a tutorial-like step-by-step introduction to the generalized-\(\alpha \) Lie group integrator, we investigate its convergence behaviour and develop a novel initialization scheme to achieve second-order accuracy in the application to constrained systems. The theoretical results are illustrated by a comprehensive set of numerical tests for two Lie group formulations of a rotating heavy top.




1 Introduction

Structure-preserving integrators overcome limitations of classical time integration methods from the fields of ordinary differential equations (ODEs) and differential-algebraic equations (DAEs). They are known for their favourable nonlinear stability properties for the long-term integration of conservative systems, see, e.g., (Hairer et al. 2006).

The focus of the present paper is slightly different since we consider a class of time integration methods that is tailored to flexible multibody system models with dissipative terms resulting, e.g., from friction forces or control structures. The methods are applied to constrained systems with a nonlinear configuration space with Lie group structure. They preserve this structural property of the equations of motion in the sense that the numerical solution remains by construction in this nonlinear configuration space.

The Lie group setting allows a representation of (large) rotations that is globally free of singularities. Alternatively, local parametrizations could be used to transform the system in each time step to a linear configuration space in which classical time integration methods apply. As an alternative to such local parametrizations, Simo and Vu-Quoc (1988) proposed a Newmark-type method that is directly based on the equations of motion in a nonlinear configuration space with Lie group structure.

Starting with the work of Crouch and Grossman (1993) and Munthe-Kaas (1995, 1998), the time discretization of ordinary differential equations on Lie groups has attracted much interest in the numerical analysis community. This work was summarized in the comprehensive survey paper by Iserles et al. (2000). During the same period, the application of Lie group time integration methods to multibody system models was studied, e.g., by Bottasso and Borri (1998) and Celledoni and Owren (2003).

In 2010, the combination of Lie group time integration with the time discretization by generalized-\(\alpha \) methods was proposed, see (Brüls and Cardona 2010). Generalized-\(\alpha \) methods are Newmark-type methods that go back to the work of Chung and Hulbert (1993). They may be considered as a generalization of Hilber–Hughes–Taylor (HHT) methods, see (Hilber et al. 1977), and have found new interest in industrial multibody system simulation since they avoid the very strong damping of high-frequency solution components that is characteristic of other integrators in this field, see, e.g., (Negrut et al. 2005; Lunk and Simeon 2006; Jay and Negrut 2007, 2008; Arnold and Brüls 2007).

Cardona and Géradin (1994) systematically investigated the stability and convergence of HHT methods for constrained systems. This analysis may be extended to generalized-\(\alpha \) methods, see Géradin and Cardona (2001, Sect. 10.5), and shows a risk of order reduction and large transient errors in the Lagrange multipliers and constraint forces. Numerical test results for the generalized-\(\alpha \) Lie group integrator illustrate that this undesired numerical effect is strongly related to the specific Lie group formulation of the equations of motion, see (Brüls et al. 2011).

Therefore, the error analysis for the Lie group integrator has to consider the global errors in long-term integration as well as the transient behaviour of the numerical solution. In a series of papers, we developed a strategy for defining, implementing and analysing the Lie group integrator that is based on the observation that the increments of the configuration variables in each time step are parametrized by elements of the Lie algebra, i.e., by elements of a linear space, see (Arnold et al. 2011b, 2014, 2015) and (Brüls et al. 2011, 2012). In the present paper, we follow this Lie algebra approach and consider local and global discretization errors of the Lie group integrator as elements of the corresponding Lie algebra.

We introduce the Lie group setting in a tutorial-like style and show how to discretize the equations of motion by a generalized-\(\alpha \) Lie group integrator. There is a specific focus on practical aspects such as corrector iteration and initialization of the integrator. In a comprehensive numerical test series, we consider different Lie group formulations of a heavy top benchmark problem. For the convergence analysis, we largely follow the presentation in the recently published paper (Arnold et al. 2015).

The remaining part of the paper is organized as follows: Basic aspects of Lie group theory in the context of multibody dynamics and the equations of motion of constrained systems are introduced in Sect. 2. Furthermore, we discuss two different Lie group formulations of a rotating heavy top that will be used as a benchmark problem throughout the paper.

In Sect. 3, we consider the generalized-\(\alpha \) Lie group DAE integrator and study its asymptotic behaviour for time step sizes \(h\rightarrow 0\). Classical results of Hilber and Hughes (1978) on “overshooting” of Newmark-type methods in the application to linear problems with high-frequency solutions are shown to result in an order reduction phenomenon for the constrained case, see (Cardona and Géradin 1994). In Sect. 3.3, the large first-order error terms are illustrated by numerical tests for the heavy top benchmark problem. They may be reduced drastically by index reduction and a modification of the generalized-\(\alpha \) Lie group integrator that is based on the so-called stabilized index-2 formulation of the equations of motion, see Sect. 3.4. Implementation aspects and some technical details are discussed in Sects. 3.5 and 3.6.

For the convergence analysis, we discuss in Sect. 4.1 a one-step error recursion of generalized-\(\alpha \) methods for constrained systems. The coupled error propagation in differential and algebraic solution components may be studied by extending the convergence analysis of ODE one-step methods to the Lie group DAE case, see Sect. 4.2. The convergence theorem for the generalized-\(\alpha \) Lie group DAE integrators is given in Sect. 4.3. It provides the basis for an optimal initialization using perturbed starting values that guarantee second-order convergence in all solution components such that order reduction may be avoided.

2 Constrained Systems in a Configuration Space with Lie Group Structure

The main interest of this paper is in time integration methods for constrained mechanical systems that have a configuration space with Lie group structure. In the present section, we introduce this Lie group setting by studying the configuration space of a rigid body (Sect. 2.1). Lie groups are differentiable manifolds that are in a very natural way parametrized locally by elements of the corresponding Lie algebra (Sect. 2.2).

Lie groups may be used to represent large rotations in \(\mathbb {R}^3\) without singularities. They are part of the mathematical framework for a generic finite element approach to flexible multibody dynamics that has been applied successfully for more than two decades (Géradin and Cardona 1989, 2001). In Sect. 2.3, we consider constrained systems and discuss the general structure of the equations of motion. As a typical example, two different Lie group formulations of a heavy top benchmark problem are introduced in Sect. 2.4. Finally, some technical details of the Lie group setting are discussed in Sect. 2.5.

2.1 The Configuration Space of a Rigid Body in \(\mathbb {R}^3\)

The position of a rigid body in an inertial frame is represented by a vector \(\mathbf {x}\in \mathbb {R}^3\), i.e., by an element of a linear space. There are three additional degrees of freedom that describe the orientation of this rigid body, but these degrees of freedom cannot be represented globally by elements of a three-dimensional linear space. In engineering, small deviations from a nominal state are often characterized by three angles of rotation such as Euler angles or Bryant angles (Géradin and Cardona 2001, Sect. 4.8) that suffer, however, from singularities in the case of large rotations.

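Alternative representations that are free of singularities are provided, e.g., by Euler parameters that are also known as quaternions (Betsch and Siebert 2009 and Géradin and Cardona 2001, Sect. 4.5) or by the rotation matrix

\[ \mathbf {R}\in \mathrm {SO}(3):=\{\,\mathbf {R}\in \mathbb {R}^{3\times 3}\,:\,\mathbf {R}^\top \mathbf {R}=\mathbf {I}_3,\;\det \mathbf {R}=+1\,\}. \]

The set \(\mathrm {SO}(3)\) is a three-dimensional differentiable manifold in \(\mathbb {R}^{3\times 3}\) and may be combined in two alternative ways with the linear space \(\mathbb {R}^3\) to describe the configuration of the rigid body by an element \(q:=(\mathbf {R},\mathbf {x})\) of a six-dimensional group G (Brüls et al. 2011; Müller and Terze 2014a): In the direct product \(G=\mathrm {SO}(3)\times \mathbb {R}^3\), the group operation \(\circ \) is defined by

\[ (\mathbf {R}_a,\mathbf {x}_a)\circ (\mathbf {R}_b,\mathbf {x}_b)=(\mathbf {R}_a\mathbf {R}_b,\,\mathbf {x}_a+\mathbf {x}_b) \]

and results in kinematic relations

\[ {\dot{\mathbf{R}}}=\mathbf {R}\widetilde{\varvec{\Omega }},\qquad {\dot{\mathbf{x}}}=\mathbf {u} \tag{1} \]

with \(\mathbf {u}\in \mathbb {R}^3\) denoting the translation velocity in the inertial frame and a skew-symmetric matrix

\[ \widetilde{\varvec{\Omega }}=\begin{pmatrix}0&-\Omega _3&\Omega _2\\ \Omega _3&0&-\Omega _1\\ -\Omega _2&\Omega _1&0\end{pmatrix} \tag{2} \]

that represents the angular velocity \(\varvec{\Omega }=(\,\Omega _1,\,\Omega _2,\,\Omega _3\,)^\top \in \mathbb {R}^3\). The semi-direct product \(G=\mathrm {SO}(3)\ltimes \mathbb {R}^3\) is known as the special Euclidean group SE(3) with the group operation

\[ (\mathbf {R}_a,\mathbf {x}_a)\circ (\mathbf {R}_b,\mathbf {x}_b)=(\mathbf {R}_a\mathbf {R}_b,\,\mathbf {x}_a+\mathbf {R}_a\mathbf {x}_b), \]

kinematic relations

\[ {\dot{\mathbf{R}}}=\mathbf {R}\widetilde{\varvec{\Omega }},\qquad {\dot{\mathbf{x}}}=\mathbf {R}\mathbf {U} \tag{3} \]

and \(\mathbf {U}\in \mathbb {R}^3\) denoting the translation velocity in the body-attached frame.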

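For group elements \(q=(\mathbf {R},\mathbf {x})\), the group operations in \(\mathrm {SO}(3)\times \mathbb {R}^3\) and in \(\mathrm {SE}(3)\) are equivalent to the matrix multiplication of non-singular block-structured matrices in \(\mathbb {R}^{7\times 7}\) and in \(\mathbb {R}^{4\times 4}\), respectively, that are defined by

\[ (\mathbf {R},\mathbf {x})_{\mathrm {SO}(3)\times \mathbb {R}^3}:=\begin{pmatrix}\mathbf {R}&\mathbf {0}_{3\times 3}&\mathbf {0}\\ \mathbf {0}_{3\times 3}&\mathbf {I}_3&\mathbf {x}\\ \mathbf {0}^\top &\mathbf {0}^\top &1\end{pmatrix},\qquad (\mathbf {R},\mathbf {x})_{\mathrm {SE}(3)}:=\begin{pmatrix}\mathbf {R}&\mathbf {x}\\ \mathbf {0}^\top &1\end{pmatrix}. \tag{4} \]
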
Therefore, the groups \(\mathrm {SO}(3)\times \mathbb {R}^3\) and \(\mathrm {SE}(3)\) as well as the group \(\mathrm {SO}(3)\) of all rotation matrices \(\mathbf {R}\) are isomorphic to a subset of a general linear group \(\mathrm {GL}(r)=\{\,\mathbf {A}\in \mathbb {R}^{r\times r}\,:\, \mathrm{det}\,\mathbf {A}\ne 0\,\}\) of suitable degree \(r>0\). The structure of the block matrices in (4) and the orthogonality condition \(\mathbf {R}^\top \mathbf {R}=\mathbf {I}_3\) imply that the groups \(\mathrm {SO}(3)\times \mathbb {R}^3\), \(\mathrm {SE}(3)\) and \(\mathrm {SO}(3)\) are isomorphic to differentiable manifolds in \(\mathrm {GL}(7)\), \(\mathrm {GL}(4)\) and \(\mathrm {GL}(3)\), respectively.
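The equivalence between the two group operations and the block matrix multiplication in (4) is easy to check numerically. The following NumPy sketch is only an illustration: the helper names `hat`, `rot_z`, `compose_direct`, `compose_se3`, `block_direct` and `block_se3` are hypothetical, and the test data are arbitrary rotations about the z-axis.

```python
import numpy as np


def hat(w):
    """Tilde operator (2): hat(w) @ b equals the cross product w x b."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def rot_z(theta):
    """Rotation about the z-axis, an element of SO(3)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def compose_direct(qa, qb):
    """Direct product SO(3) x R^3: (Ra, xa) o (Rb, xb) = (Ra Rb, xa + xb)."""
    (Ra, xa), (Rb, xb) = qa, qb
    return Ra @ Rb, xa + xb


def compose_se3(qa, qb):
    """Semi-direct product SE(3): (Ra, xa) o (Rb, xb) = (Ra Rb, xa + Ra xb)."""
    (Ra, xa), (Rb, xb) = qa, qb
    return Ra @ Rb, xa + Ra @ xb


def block_direct(q):
    """7x7 matrix diag(R, [[I3, x], [0, 1]]) representing (R, x) in SO(3) x R^3."""
    R, x = q
    A = np.eye(7)
    A[:3, :3] = R
    A[3:6, 6] = x
    return A


def block_se3(q):
    """4x4 matrix [[R, x], [0, 1]] representing (R, x) in SE(3)."""
    R, x = q
    A = np.eye(4)
    A[:3, :3] = R
    A[:3, 3] = x
    return A


qa = (rot_z(0.3), np.array([1.0, 2.0, 3.0]))
qb = (rot_z(-1.1), np.array([0.5, -1.0, 2.0]))

ok_direct = np.allclose(block_direct(qa) @ block_direct(qb),
                        block_direct(compose_direct(qa, qb)))
ok_se3 = np.allclose(block_se3(qa) @ block_se3(qb),
                     block_se3(compose_se3(qa, qb)))
ok_hat = np.allclose(hat(qa[1]) @ qb[1], np.cross(qa[1], qb[1]))
print(ok_direct, ok_se3, ok_hat)  # True True True
```

The rotational parts of both products coincide; only the translational parts differ, which is why the two groups lead to different kinematic relations (1) and (3).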

2.2 Differential Equations on Manifolds: Matrix Lie Groups

A group G with group operation \(\circ \) and neutral element \(e\in G\) is called a Lie group if G is a differentiable manifold and the group operation \(\circ \,:\,G\times G \rightarrow G\) as well as the map \(q\mapsto q^{-1}\) are differentiable (\(\,q\circ q^{-1}=e\,\)). Lie groups that are subgroups of \(\mathrm {GL}(r)\) for some \(r>0\) are called matrix Lie groups if the group operation \(\circ \) is given by the matrix multiplication. For a compact introduction to analytical and numerical aspects of such matrix Lie groups, the interested reader is referred to (Hairer et al. 2006, Sect. IV.6).

It is a trivial observation that a continuously differentiable function q(t) with \(q(t_0)\in G\) will remain in a Lie group G if and only if its time derivative \(\dot{q}(t)\) is in the tangent space \(T_qG\) at the point \(q=q(t)\): \(\dot{q}(t)\in T_{q(t)}G\), (\(t\ge t_0\)). The tangent space at the neutral element e defines the Lie algebra \(\mathfrak {g}:=T_eG\). As a linear space, it is isomorphic to a finite dimensional linear space \(\mathbb {R}^k\) with an invertible linear mapping \(\widetilde{(\bullet )}\,:\,\mathbb {R}^k\rightarrow \mathfrak {g},\;\mathbf {v}\mapsto \widetilde{\mathbf {v}}\).

The group structure of G makes it possible to represent the elements of \(T_qG\) at any element \(q\in G\) by the elements \(\widetilde{\mathbf {v}}\) of the Lie algebra: The left translation

\[ L_q\,:\,G\rightarrow G,\quad y\mapsto L_q(y):=q\circ y \]

defines a bijection in G. Its derivative \(DL_q(y)\) at \(y=e\) represents the corresponding bijection between the tangent spaces \(\mathfrak {g}:=T_eG\) and \(T_qG\), i.e.,

\[ T_qG=\{\,DL_q(e)\cdot \widetilde{\mathbf {v}}\,:\,\widetilde{\mathbf {v}}\in \mathfrak {g}\,\}. \tag{5} \]

With these notations, kinematic relations like (1) and (3) may be summarized in compact form:

\[ {\dot{q}}(t)=DL_{q(t)}(e)\cdot \widetilde{\mathbf {v}}(t) \tag{6} \]

with a velocity vector \(\mathbf {v}(t)\in \mathbb {R}^k\). In (6), the left translation \(L_q\) as well as the tilde operator \(\widetilde{(\bullet )}\) depend on the specific Lie group setting.

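For constant velocity \(\mathbf {v}\), the kinematic relation (6) yields locally

\[ q(t)=q(t_0)\circ \exp \bigl ((t-t_0)\widetilde{\mathbf {v}}\bigr ) \tag{7} \]

with the exponential map \(\exp \,:\,\mathfrak {g}\rightarrow G\). For matrix Lie groups, this exponential map is given by the matrix exponential

\[ \exp (\widetilde{\mathbf {v}})=\sum _{i=0}^{\infty }\frac{1}{i!}\,\widetilde{\mathbf {v}}^{\,i}. \tag{8} \]

It is a local diffeomorphism, i.e., for any \(q_a\in G\) there are neighbourhoods \(U_{q_a}\subset G\) and \(V_{\widetilde{\mathbf {0}}}\subset \mathfrak {g}\) such that any \(q\in U_{q_a}\) may be expressed by

\[ q=q_a\circ \exp (\widetilde{\varvec{\Delta }}_q) \tag{9} \]

with a uniquely defined element \(\widetilde{\varvec{\Delta }}_q\in V_{\widetilde{\mathbf {0}}}\).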
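For matrix Lie groups, the left translation is matrix multiplication, so \(DL_q(e)\cdot \widetilde{\mathbf {v}}=q\,\widetilde{\mathbf {v}}\) and (6) reads \({\dot{q}}=q\,\widetilde{\mathbf {v}}\). The hedged NumPy sketch below evaluates the series (8) by plain truncation (adequate only for moderate norms; practical codes use closed forms such as Rodrigues' formula) and checks by central differences that (7) solves (6) for a constant velocity in SE(3). The names `tilde_se3`, `expm_series` and `flow` as well as all numerical values are hypothetical.

```python
import numpy as np


def hat(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def tilde_se3(omega, U):
    """4x4 Lie algebra element [[hat(Omega), U], [0, 0]] of SE(3)."""
    A = np.zeros((4, 4))
    A[:3, :3] = hat(omega)
    A[:3, 3] = U
    return A


def expm_series(A, terms=40):
    """Truncated series exp(A) = sum_i A^i / i! (only for moderate ||A||)."""
    result = np.eye(A.shape[0])
    term = np.eye(A.shape[0])
    for i in range(1, terms):
        term = term @ A / i  # term now holds A^i / i!
        result = result + term
    return result


v_tilde = tilde_se3(np.array([0.3, -0.2, 0.5]), np.array([1.0, 0.0, 2.0]))
q0 = expm_series(tilde_se3(np.array([0.1, 0.4, -0.7]), np.array([0.0, 1.0, 0.0])))


def flow(t, t0=0.0):
    """Candidate solution (7) of dq/dt = q v_tilde for constant velocity."""
    return q0 @ expm_series((t - t0) * v_tilde)


t, delta = 0.7, 1e-5
dq_fd = (flow(t + delta) - flow(t - delta)) / (2.0 * delta)  # central difference
dq_exact = flow(t) @ v_tilde                                 # right-hand side of (6)
print(np.allclose(dq_fd, dq_exact, atol=1e-7))  # True
```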

Example 2.1

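(a) Using the block matrix representation (4), the groups \(\mathrm {SO}(3)\times \mathbb {R}^3\), \(\mathrm {SE}(3)\) and \(\mathrm {SO}(3)\) are seen to be matrix Lie groups. The Lie algebra corresponding to Lie group \(G=\mathrm {SO}(3)\) is given by the set

\[ \mathfrak {so}(3)=\{\,\widetilde{\varvec{\Omega }}\in \mathbb {R}^{3\times 3}\,:\,\widetilde{\varvec{\Omega }}^\top =-\widetilde{\varvec{\Omega }}\,\} \]

of all skew-symmetric matrices in \(\mathbb {R}^{3\times 3}\). As a linear space, this Lie algebra is isomorphic to \(\mathbb {R}^3\) with the tilde operator being defined in (2). In \(\mathrm {SO}(3)\), the exponential map (8) may be evaluated very efficiently by Rodrigues’ formula

\[ \exp _{\mathrm {SO}(3)}(\widetilde{\varvec{\Omega }})=\mathbf {I}_3+\frac{\sin \Phi }{\Phi }\,\widetilde{\varvec{\Omega }}+\frac{1-\cos \Phi }{\Phi ^2}\,\widetilde{\varvec{\Omega }}^2 \tag{10} \]
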
with \(\Phi :=\Vert \varvec{\Omega }\Vert _2\) since powers \(\widetilde{\varvec{\Omega }}^i\) with \(i\ge 3\) may be expressed in terms of \(\mathbf {I}_3\), \(\widetilde{\varvec{\Omega }}\) and \(\widetilde{\varvec{\Omega }}^2\) because each matrix \(\widetilde{\varvec{\Omega }}\in \mathbb {R}^{3\times 3}\) is a zero of its characteristic polynomial \(\chi _\mu (\widetilde{\varvec{\Omega }})= \mathrm{det}(\mu \mathbf {I}_3-\widetilde{\varvec{\Omega }})= \mu ^3+\Vert \varvec{\Omega }\Vert _2^2\,\mu =\mu ^3+\Phi ^2\mu \), i.e., \(\widetilde{\varvec{\Omega }}^3=-\Phi ^2\,\widetilde{\varvec{\Omega }}\) (Cayley-Hamilton theorem).
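Rodrigues' formula can be checked against the truncated series (8), together with the orthogonality of the result and the Cayley–Hamilton identity \(\widetilde{\varvec{\Omega }}^3=-\Phi ^2\widetilde{\varvec{\Omega }}\). This is a minimal NumPy sketch with hypothetical helper names (`exp_so3`, `expm_series`); the small-angle branch is our own safeguard against the removable singularity at \(\Phi =0\).

```python
import numpy as np


def hat(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(omega):
    """Rodrigues' formula (10) for the exponential map in SO(3)."""
    W = hat(omega)
    phi = np.linalg.norm(omega)
    if phi < 1e-8:
        # Truncated Taylor expansion avoids 0/0; its error is O(phi^3).
        return np.eye(3) + W + 0.5 * (W @ W)
    return (np.eye(3) + (np.sin(phi) / phi) * W
            + ((1.0 - np.cos(phi)) / phi**2) * (W @ W))


def expm_series(A, terms=40):
    """Truncated series (8), used here only as a reference."""
    result, term = np.eye(A.shape[0]), np.eye(A.shape[0])
    for i in range(1, terms):
        term = term @ A / i
        result = result + term
    return result


omega = np.array([0.4, -1.2, 2.0])
W, phi = hat(omega), np.linalg.norm(omega)
R = exp_so3(omega)

print(np.allclose(R, expm_series(W)))                                       # True
print(np.allclose(R.T @ R, np.eye(3)), np.isclose(np.linalg.det(R), 1.0))  # True True
print(np.allclose(W @ W @ W, -phi**2 * W))                                  # True
```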

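According to (1), (3) and (6), the Lie algebras of \(\mathrm {SO}(3)\times \mathbb {R}^3\) and \(\mathrm {SE}(3)\) are parametrized by vectors \(\mathbf {v}=(\varvec{\Omega }^\top ,\mathbf {u}^\top )^\top \) and \(\mathbf {v}=(\varvec{\Omega }^\top ,\mathbf {U}^\top )^\top \), respectively. In block matrix form, they are represented by (Brüls et al. 2011)

\[ \widetilde{\mathbf {v}}_{\mathrm {SO}(3)\times \mathbb {R}^3}=\begin{pmatrix}\widetilde{\varvec{\Omega }}&\mathbf {0}_{3\times 3}&\mathbf {0}\\ \mathbf {0}_{3\times 3}&\mathbf {0}_{3\times 3}&\mathbf {u}\\ \mathbf {0}^\top &\mathbf {0}^\top &0\end{pmatrix},\qquad \widetilde{\mathbf {v}}_{\mathrm {SE}(3)}=\begin{pmatrix}\widetilde{\varvec{\Omega }}&\mathbf {U}\\ \mathbf {0}^\top &0\end{pmatrix} \tag{11a} \]

with exponential maps

\[ \exp _{\mathrm {SO}(3)\times \mathbb {R}^3}(\widetilde{\mathbf {v}})=\bigl (\exp _{\mathrm {SO}(3)}(\widetilde{\varvec{\Omega }}),\,\mathbf {u}\bigr )_{\mathrm {SO}(3)\times \mathbb {R}^3},\qquad \exp _{\mathrm {SE}(3)}(\widetilde{\mathbf {v}})=\bigl (\exp _{\mathrm {SO}(3)}(\widetilde{\varvec{\Omega }}),\,\mathbf {T}_{\mathrm {SO}(3)}^\top (\varvec{\Omega })\,\mathbf {U}\bigr )_{\mathrm {SE}(3)} \tag{11b} \]

and the so-called tangent operator \(\mathbf {T}_{\mathrm {SO}(3)}\,:\,\mathbb {R}^3\rightarrow \mathbb {R}^{3\times 3}\), see (33), that will be discussed in more detail in Remark 2.8(b) below.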
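A hedged sketch of the closed-form SE(3) exponential: the rotational part is Rodrigues' formula and the translational part is \(\mathbf {V}\mathbf {U}\) with \(\mathbf {V}=\mathbf {I}_3+\frac{1-\cos \Phi }{\Phi ^2}\widetilde{\varvec{\Omega }}+\frac{\Phi -\sin \Phi }{\Phi ^3}\widetilde{\varvec{\Omega }}^2\). We assume that \(\mathbf {V}\) coincides with \(\mathbf {T}_{\mathrm {SO}(3)}^\top (\varvec{\Omega })\) in the sign convention used here; since the tangent operator is only defined later, treat that identification as an assumption. The numerical check against the series (8) of the \(4\times 4\) matrix does not depend on it. Function names are hypothetical.

```python
import numpy as np


def hat(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(omega):
    W, phi = hat(omega), np.linalg.norm(omega)
    if phi < 1e-8:
        return np.eye(3) + W + 0.5 * (W @ W)
    return np.eye(3) + (np.sin(phi) / phi) * W + ((1.0 - np.cos(phi)) / phi**2) * (W @ W)


def exp_se3(omega, U):
    """Closed-form exp in SE(3) for v = (Omega, U); returns the pair (R, x)."""
    W, phi = hat(omega), np.linalg.norm(omega)
    if phi < 1e-8:
        # Limits of the two coefficients for phi -> 0 are 1/2 and 1/6.
        V = np.eye(3) + 0.5 * W + (W @ W) / 6.0
    else:
        V = (np.eye(3) + ((1.0 - np.cos(phi)) / phi**2) * W
             + ((phi - np.sin(phi)) / phi**3) * (W @ W))
    return exp_so3(omega), V @ U


def expm_series(A, terms=40):
    result, term = np.eye(A.shape[0]), np.eye(A.shape[0])
    for i in range(1, terms):
        term = term @ A / i
        result = result + term
    return result


def check(omega, U):
    """Compare the closed form with the series (8) of [[hat(Omega), U], [0, 0]]."""
    A = np.zeros((4, 4))
    A[:3, :3], A[:3, 3] = hat(omega), U
    E = expm_series(A)
    R, x = exp_se3(omega, U)
    return np.allclose(E[:3, :3], R) and np.allclose(E[:3, 3], x)


print(check(np.array([0.4, -1.2, 2.0]), np.array([1.0, -2.0, 0.5])))  # True
print(check(1e-10 * np.ones(3), np.array([1.0, -2.0, 0.5])))           # True
```

In \(\mathrm {SO}(3)\times \mathbb {R}^3\) no such factor appears: the translational part of the exponential is simply \(\mathbf {u}\).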

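(b) The linear space \(\mathbb {R}^k\) with vector addition \(+\) as group operation \(\circ \) is a trivial example of a matrix Lie group since \(\mathbf {x}\in \mathbb {R}^k\) may be identified with the non-singular \(2\times 2\) block matrix

\[ \begin{pmatrix}\mathbf {I}_k&\mathbf {x}\\ \mathbf {0}^\top &1\end{pmatrix}\in \mathbb {R}^{(k+1)\times (k+1)}. \tag{12} \]

Substituting vector \(\mathbf {x}\) by \(\mathbf {u}\in \mathbb {R}^k\) and the main diagonal blocks by \(\mathbf {0}_{k\times k}\) and by 0, respectively, we get the block matrix representation of the corresponding Lie algebra that is parametrized by \(\mathbf {u}\):

\[ \widetilde{\mathbf {u}}=\begin{pmatrix}\mathbf {0}_{k\times k}&\mathbf {u}\\ \mathbf {0}^\top &0\end{pmatrix},\qquad \exp (\widetilde{\mathbf {u}})=\mathbf {I}_{k+1}+\widetilde{\mathbf {u}}=\begin{pmatrix}\mathbf {I}_k&\mathbf {u}\\ \mathbf {0}^\top &1\end{pmatrix}\quad \text{since }\widetilde{\mathbf {u}}^2=\mathbf {0}. \tag{13} \]
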
Alternatively, the exponential map may be expressed directly in terms of \(\mathbf {u}\in \mathbb {R}^k\) using \(\exp _{\mathbb {R}^k}=\mathrm {id}_{\mathbb {R}^k}\), i.e., \(\mathbf {x}\circ \exp _{\mathbb {R}^k}(\widetilde{\mathbf {u}})=\mathbf {x}+\mathbf {u}\).

(c) The block matrix representation of \((\mathbf {R},\mathbf {x})_{\mathrm {SO}(3)\times \mathbb {R}^3}\) in (4) is block-diagonal with diagonal blocks for \(\mathbf {R}\in \mathrm {SO}(3)\) and \(\mathbf {x}\in \mathbb {R}^3\), see (12). The same block-diagonal structure is observed for the elements of the corresponding Lie algebra \(\mathfrak {so}(3)\times \mathbb {R}^3\), for the tilde operator and for \(\exp _{\mathrm {SO}(3)\times \mathbb {R}^3}\), see (11a) and (13). This structure is typical for direct products of Lie groups and may be used as well for Lie groups \(G^N=G\times G\times \,\cdots \,\times G\) that are direct products of \(N\ge 2\) factors G with \(G=\mathrm {SO}(3)\times \mathbb {R}^3\) or \(G=\mathrm {SE}(3)\). In particular, for \(\mathbf {v}=(\mathbf {v}_1^\top ,\ldots ,\mathbf {v}_N^\top )^\top \) with \(\mathbf {v}_j\in \mathbb {R}^6\), we have

\[ \widetilde{\mathbf {v}}=\begin{pmatrix}\widetilde{\mathbf {v}}_1&&\\ &\ddots &\\ &&\widetilde{\mathbf {v}}_N\end{pmatrix},\qquad \exp _{G^N}(\widetilde{\mathbf {v}})=\bigl (\exp _G(\widetilde{\mathbf {v}}_1),\,\ldots ,\,\exp _G(\widetilde{\mathbf {v}}_N)\bigr ). \]

Hence, the exponential map \(\exp _{G^N}\,:\,\mathfrak {g}^N\rightarrow G^N\) in the direct product \(G^N\) may be evaluated as efficiently as the one in its factors G, see (10) and (11). In flexible multibody dynamics, the configuration spaces \((\mathrm {SO}(3)\times \mathbb {R}^3)^N\) and \((\mathrm {SE}(3))^N\) are of special interest since they make it possible to represent the configuration of an articulated system of rigid and flexible bodies in the nonlinear finite element method by \(N\ge 1\) pairs of absolute nodal translation and rotation variables, see (Brüls et al. 2012; Géradin and Cardona 2001).
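The block-diagonal structure means that the exponential map in \((\mathrm {SO}(3)\times \mathbb {R}^3)^N\) can be evaluated node by node. The NumPy sketch below (an illustration only; `tilde_direct` and `exp_direct_product` are hypothetical names, and \(N=2\) with arbitrary data) compares the node-by-node evaluation with the series (8) of the full \(7N\times 7N\) block-diagonal Lie algebra element.

```python
import numpy as np


def hat(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(omega):
    W, phi = hat(omega), np.linalg.norm(omega)
    if phi < 1e-8:
        return np.eye(3) + W + 0.5 * (W @ W)
    return np.eye(3) + (np.sin(phi) / phi) * W + ((1.0 - np.cos(phi)) / phi**2) * (W @ W)


def expm_series(A, terms=40):
    result, term = np.eye(A.shape[0]), np.eye(A.shape[0])
    for i in range(1, terms):
        term = term @ A / i
        result = result + term
    return result


def tilde_direct(omega, u):
    """7x7 Lie algebra element diag(hat(Omega), [[0, u], [0, 0]]) of SO(3) x R^3."""
    A = np.zeros((7, 7))
    A[:3, :3] = hat(omega)
    A[3:6, 6] = u
    return A


def exp_direct_product(v):
    """Node-by-node exp on (SO(3) x R^3)^N; v stacks (Omega_j, u_j), j = 1..N."""
    nodes = []
    for j in range(len(v) // 6):
        omega, u = v[6 * j:6 * j + 3], v[6 * j + 3:6 * j + 6]
        nodes.append((exp_so3(omega), u.copy()))  # exp in R^3 just returns u, cf. (13)
    return nodes


v = np.array([0.3, -0.5, 0.8, 1.0, 2.0, -1.0,    # node 1: (Omega_1, u_1)
              -1.1, 0.2, 0.4, 0.0, -0.5, 3.0])   # node 2: (Omega_2, u_2)
N = len(v) // 6

# Reference: series (8) of the full 7N x 7N block-diagonal matrix.
A = np.zeros((7 * N, 7 * N))
for j in range(N):
    A[7 * j:7 * j + 7, 7 * j:7 * j + 7] = tilde_direct(v[6 * j:6 * j + 3], v[6 * j + 3:6 * j + 6])
E = expm_series(A)

nodes = exp_direct_product(v)
ok = all(np.allclose(E[7 * j:7 * j + 3, 7 * j:7 * j + 3], R)
         and np.allclose(E[7 * j + 3:7 * j + 6, 7 * j + 6], x)
         for j, (R, x) in enumerate(nodes))
print(ok)  # True
```

The node-by-node version costs \(O(N)\) small \(3\times 3\) operations, whereas the reference series works on a dense \(7N\times 7N\) matrix and is shown only for comparison.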

Remark 2.2

The parametrization (9) offers a generic way to interpolate between \(q_a\) and any point \(q_b\) in a sufficiently small neighbourhood \(U_{q_a}\subset G\), see Fig. 1: If \(q_b=q_a\circ \exp (\widetilde{\varvec{\Delta }}_q)\) with a vector \(\varvec{\Delta }_q\in \mathbb {R}^k\) of sufficiently small norm \(\Vert \varvec{\Delta }_q\Vert \), then \(\exp (\vartheta \widetilde{\varvec{\Delta }}_q)\) is well defined for any \(\vartheta \in [0,1]\) and \(q_a,q_b\in G\) are connected by the path

\[ q(\vartheta ;q_a,\varvec{\Delta }_q):=q_a\circ \exp (\vartheta \widetilde{\varvec{\Delta }}_q),\qquad \vartheta \in [0,1]. \]

Because of \(q_a=q_b\circ \exp (-\widetilde{\varvec{\Delta }}_q)\), the parametrization of this path by \(\vartheta \in [0,1]\) is symmetric in the sense that \(q(\vartheta ;q_a,\varvec{\Delta }_q)=q(1-\vartheta ;q_b,-\varvec{\Delta }_q)\). This expression is the Lie group equivalent to the identity \(\mathbf {q}_a+\vartheta \varvec{\Delta }_q=\mathbf {q}_b-(1-\vartheta )\varvec{\Delta }_q\) that is trivially satisfied for a path that interpolates two points \(\mathbf {q}_a,\mathbf {q}_b\in \mathbb {R}^k\).
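The symmetry of the interpolation path can be checked numerically in \(G=\mathrm {SO}(3)\). The NumPy sketch below is illustrative only (`path` is a hypothetical helper and the data are arbitrary); it also shows that, in contrast, the naive linear blend of two rotation matrices leaves the group.

```python
import numpy as np


def hat(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(omega):
    W, phi = hat(omega), np.linalg.norm(omega)
    if phi < 1e-8:
        return np.eye(3) + W + 0.5 * (W @ W)
    return np.eye(3) + (np.sin(phi) / phi) * W + ((1.0 - np.cos(phi)) / phi**2) * (W @ W)


def path(theta, Ra, delta):
    """q(theta; q_a, Delta) = q_a o exp(theta * Delta~) in SO(3)."""
    return Ra @ exp_so3(theta * delta)


Ra = exp_so3(np.array([0.1, 0.7, -0.3]))
delta = np.array([0.5, -0.2, 0.4])
Rb = path(1.0, Ra, delta)

thetas = np.linspace(0.0, 1.0, 5)
symmetric = all(np.allclose(path(th, Ra, delta), path(1.0 - th, Rb, -delta)) for th in thetas)
on_group = all(np.allclose(path(th, Ra, delta).T @ path(th, Ra, delta), np.eye(3))
               for th in thetas)
print(symmetric, on_group)  # True True

midpoint = 0.5 * (Ra + Rb)  # linear blend, not a rotation matrix
print(np.allclose(midpoint.T @ midpoint, np.eye(3)))  # False
```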

In the Lie group setting, the nonlinear structure of the configuration space G makes it possible to represent large rotations globally without singularities. Under reasonable smoothness assumptions, there are smooth functions \(q\,:\,[t_0,t_{\mathrm {end}}]\rightarrow G\) solving the equations of motion on a time interval \([t_0,t_{\mathrm {end}}]\) of finite length, see Sect. 2.3 below. Locally, for a fixed time \(t=t^*\in [t_0,t_{\mathrm {end}}]\), the configuration space in a sufficiently small neighbourhood of \(q(t^*)\) may nevertheless be parametrized by elements of the linear space \(\mathfrak {g}\) that is independent of \(t^*\) and \(q(t^*)\), see (9).

The local parametrization of G by elements \(\widetilde{\mathbf {v}}\in \mathfrak {g}\) provides the basis for an efficient implementation of Lie group time integration methods and for the analysis of discretization errors, see Sects. 3 and 4 below. Using the notation \(\exp (\cdot )\), we tacitly assume throughout the paper that the argument of the exponential map is in a small neighbourhood of \(\widetilde{\mathbf {0}}\in \mathfrak {g}\) on which \(\mathrm {exp}\) is a diffeomorphism.

The basic concepts of time discretization and error analysis in Lie group time integration are not limited to the specific parametrization by the exponential map, see, e.g., (Kobilarov et al. 2009) for an analysis of variational Lie group integrators that may be combined with the exponential map \(\exp \), with the Cayley transform \(\mathrm{cay}(\widetilde{\mathbf {v}}/2)= (\mathbf {I}-\widetilde{\mathbf {v}}/2)^{-1}(\mathbf {I}+\widetilde{\mathbf {v}}/2)\) or with other local parametrizations. In the present paper, we restrict ourselves, however, to the exponential map that reproduces the flow exactly if the velocity \(\widetilde{\mathbf {v}}\in \mathfrak {g}\) is constant, see (7).
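To illustrate the difference between the two local parametrizations mentioned above, the hedged NumPy sketch below checks that the Cayley transform \(\mathrm{cay}(\widetilde{\mathbf {v}}/2)\) also yields rotation matrices, but agrees with \(\exp (\widetilde{\mathbf {v}})\) only up to third-order terms: halving \(\Vert \mathbf {v}\Vert \) reduces the difference by a factor of about \(2^3=8\). The helper `cay` and the test direction are our own choices.

```python
import numpy as np


def hat(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(omega):
    W, phi = hat(omega), np.linalg.norm(omega)
    if phi < 1e-8:
        return np.eye(3) + W + 0.5 * (W @ W)
    return np.eye(3) + (np.sin(phi) / phi) * W + ((1.0 - np.cos(phi)) / phi**2) * (W @ W)


def cay(A):
    """Cayley transform cay(A) = (I - A)^{-1} (I + A)."""
    I = np.eye(A.shape[0])
    return np.linalg.solve(I - A, I + A)


direction = np.array([0.6, -0.3, 0.74])
W = hat(direction)
C = cay(W / 2.0)
print(np.allclose(C.T @ C, np.eye(3)), np.isclose(np.linalg.det(C), 1.0))  # True True

# exp(v~) - cay(v~/2) = O(||v||^3): the error ratio for s and s/2 approaches 8.
err = [np.linalg.norm(exp_so3(s * direction) - cay(s * W / 2.0)) for s in (1e-2, 5e-3)]
print(err[0] / err[1])
```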

2.3 Configuration Space with Lie Group Structure: Equations of Motion

In a k-dimensional configuration space G with Lie group structure, the kinematic relations are given by (6) with position coordinates \(q(t)\in G\) and the velocity vector \(\mathbf {v}(t)\in \mathbb {R}^k\).

We consider constrained systems with \(m\le k\) linearly independent holonomic constraints \(\varvec{\Phi }(q)=\mathbf {0}\) that are coupled by constraint forces \(-\mathbf {B}^\top (q)\varvec{\lambda }\) to the equilibrium equations for forces and moments. Here, \(\varvec{\lambda }(t)\in \mathbb {R}^m\) denotes a vector of Lagrange multipliers which is multiplied by the transpose of the constraint matrix \(\mathbf {B}(q)\in \mathbb {R}^{m\times k}\) with \({\text {rank}}\mathbf {B}(q)=m\) that represents the constraint gradients in the sense that

\[ D\varvec{\Phi }(q)\cdot \bigl (DL_q(e)\cdot \widetilde{\mathbf {w}}\bigr )=\mathbf {B}(q)\,\mathbf {w},\qquad \forall \,\mathbf {w}\in \mathbb {R}^k. \]

The notation \(D\varvec{\Phi }(q)\cdot \bigl (DL_q(e)\cdot \widetilde{\mathbf {w}}\bigr )\) is used for the directional derivative of \(\varvec{\Phi }\,:\,G\rightarrow \mathbb {R}^m\) at \(q\in G\) in the direction of \(DL_q(e)\cdot \widetilde{\mathbf {w}}\in T_qG\).

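Kinematic equations, equilibrium conditions and holonomic constraints are summarized in the equations of motion

\[ {\dot{q}}=DL_q(e)\cdot \widetilde{\mathbf {v}}, \tag{15a} \]

\[ \mathbf {M}(q){\dot{\mathbf{v}}}+\mathbf {g}(q,\mathbf {v},t)+\mathbf {B}^\top (q)\varvec{\lambda }=\mathbf {0}, \tag{15b} \]

\[ \varvec{\Phi }(q)=\mathbf {0} \tag{15c} \]
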
that form a differential-algebraic equation (DAE) on Lie group G, see (Brüls and Cardona 2010). Matrix \(\mathbf {M}(q)\) denotes the mass matrix that is supposed to be symmetric, positive definite. The force vector \(-\mathbf {g}(q,\mathbf {v},t)\) summarizes external, internal and complementary inertia forces. Throughout the present paper, we consider equations of motion (15) with functions \(\mathbf {M}(q)\), \(\mathbf {g}(q,\mathbf {v},t)\) and \(\varvec{\Phi }(q)\) being smooth in the sense that they are as often continuously differentiable as required by the convergence analysis.

Remark 2.3

(a) For linear configuration spaces, the equations of motion (15) are well known from textbooks on DAE time integration, see, e.g., (Brenan et al. 1996, Sect. 6.2 and Hairer and Wanner 1996, Sect. VII.1). Model equations of constrained mechanical and mechatronic systems in industrial applications often have a more complex structure with additional first-order differential equations \({\dot{\mathbf{c}}}=\mathbf {h}_{\mathbf {c}}(q,\mathbf {v},\mathbf {c},t)\) or additional algebraic equations \(\mathbf {0}=\mathbf {h}_{\mathbf {s}}(q,\mathbf {s})\) that are locally uniquely solvable w.r.t. \(\mathbf {s}=\mathbf {s}(q)\) if the Jacobian \((\partial \mathbf {h}_{\mathbf {s}}/\partial \mathbf {s})(q,\mathbf {s})\) is non-singular. Other useful generalizations of (15) are rheonomic, i.e., explicitly time-dependent constraints \(\varvec{\Phi }(q,t)=\mathbf {0}\) and force vectors \(\mathbf {g}=\mathbf {g}(q,\mathbf {v},\varvec{\lambda },t)\) that contain friction forces depending nonlinearly on \(\varvec{\lambda }\), see (Arnold et al. 2011c and Brüls and Golinval 2006) for a more detailed discussion. All these additional model components may be considered straightforwardly in the convergence analysis of generalized-\(\alpha \) Lie group integrators, see (Arnold et al. 2015).

(b) The full rank assumption on \(\mathbf {B}(q)\) is essential for the analysis and numerical solution of (15) since otherwise the Lagrange multipliers \(\varvec{\lambda }(t)\) would not be uniquely defined, see (García de Jalón and Bayo 1994, Sect. 3.4) and the more recent material in (García de Jalón and Gutiérrez-López 2013). On the other hand, the assumptions on \(\mathbf {M}(q)\) may be slightly relaxed considering symmetric, positive semi-definite mass matrices that are positive definite on \({\text {ker}}\mathbf {B}(q)\), see (Géradin and Cardona 2001). The extension of the convergence analysis to this more complex class of model equations has recently been discussed in (Arnold et al. 2014).

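The holonomic constraints (15c) imply hidden constraints at the level of velocity coordinates and at the level of acceleration coordinates. The first ones are obtained by differentiation of (15c) w.r.t. t:

\[ \mathbf {0}=\frac{\mathrm {d}}{\mathrm {d}t}\varvec{\Phi }\bigl (q(t)\bigr )=\mathbf {B}\bigl (q(t)\bigr )\mathbf {v}(t). \tag{16} \]

For the second time derivative of (15c), we have to consider partial derivatives of \(\,\varvec{\Theta }(q,\mathbf {z}):=\mathbf {B}(q)\mathbf {z}\,\) w.r.t. \(q\in G\). Since \(\varvec{\Theta }\,:\,G\times \mathbb {R}^k\rightarrow \mathbb {R}^m\) is by construction linear in \(\mathbf {z}\), we have

\[ D_q\varvec{\Theta }(q,\mathbf {z})\cdot \bigl (DL_q(e)\cdot \widetilde{\mathbf {w}}\bigr )=\mathbf {Z}(q)(\mathbf {z},\mathbf {w}),\qquad \forall \,\mathbf {z},\mathbf {w}\in \mathbb {R}^k \tag{17} \]

with a bilinear form \(\mathbf {Z}(q)\,:\,\mathbb {R}^k\times \mathbb {R}^k\rightarrow \mathbb {R}^m\). Using these notations, the time derivative of (16) takes the form

\[ \mathbf {0}=\mathbf {B}(q){\dot{\mathbf{v}}}+\mathbf {Z}(q)(\mathbf {v},\mathbf {v}). \tag{18} \]
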
It defines the hidden constraints at the level of acceleration coordinates.

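The dynamical equations (15b) and the hidden constraints (18) are linear in \({\dot{\mathbf{v}}}(t)\) and \(\varvec{\lambda }(t)\) and may formally be used to eliminate \(\varvec{\lambda }(t)\) and to express \({\dot{\mathbf{v}}}(t)\) in terms of t, q(t) and \(\mathbf {v}(t)\), see (Hairer and Wanner 1996, Sect. VII.1):

\[ \begin{pmatrix}\mathbf {M}(q)&\mathbf {B}^\top (q)\\ \mathbf {B}(q)&\mathbf {0}\end{pmatrix}\begin{pmatrix}{\dot{\mathbf{v}}}\\ \varvec{\lambda }\end{pmatrix}=-\begin{pmatrix}\mathbf {g}(q,\mathbf {v},t)\\ \mathbf {Z}(q)(\mathbf {v},\mathbf {v})\end{pmatrix}. \tag{19} \]
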
Initial value problems for the resulting analytically equivalent unconstrained system for functions \(q\,:\,[t_0,t_{\mathrm {end}}]\rightarrow G\) and \(\mathbf {v}\,:\,[t_0,t_{\mathrm {end}}]\rightarrow \mathbb {R}^k\) are uniquely solvable whenever its right-hand side satisfies a Lipschitz condition, see, e.g., (Walter 1998). This proves unique solvability of initial value problems for the constrained system (15) if \(q(t_0)\) and \(\mathbf {v}(t_0)\) are consistent with the (hidden) constraints (15c) and (16), i.e., \(\varvec{\Phi }\bigl (q(t_0)\bigr )=\mathbf {B}\bigl (q(t_0)\bigr )\mathbf {v}(t_0)=\mathbf {0}\). The initial values \({\dot{\mathbf{v}}}(t_0)\) and \(\varvec{\lambda }(t_0)\) are given by (19) with \(t=t_0\), \(q=q(t_0)\) and \(\mathbf {v}=\mathbf {v}(t_0)\).

The index analysis of Lie group DAE (15) follows step by step the classical index analysis for the equations of motion for constrained mechanical systems in linear configuration spaces, see (Hairer and Wanner 1996, Sect. VII.1). The algebraic variables \(\varvec{\lambda }=\varvec{\lambda }(q,\mathbf {v},t)\) are defined by the system of linear equations (19) that contains the second time derivative of (15c). A formal third differentiation step yields \({\dot{\varvec{\lambda }}}={\dot{\varvec{\lambda }}}(q,\mathbf {v},t)\) and illustrates that (15) is an index-3 Lie group DAE in \(G\times \mathbb {R}^k\times \mathbb {R}^m\). Therefore, Eq. (15) is called the index-3 formulation of the equations of motion.

Remark 2.4

Block-structured systems of linear equations

\[ \begin{pmatrix}\mathbf {M}&\mathbf {B}^\top \\ \mathbf {B}&\mathbf {0}\end{pmatrix}\begin{pmatrix}\mathbf {x}_{{\dot{\mathbf{v}}}}\\ \mathbf {x}_{\varvec{\lambda }}\end{pmatrix}=\begin{pmatrix}\mathbf {r}_{{\dot{\mathbf{v}}}}\\ \mathbf {r}_{\varvec{\lambda }}\end{pmatrix} \tag{20} \]

with a symmetric, positive definite matrix \(\mathbf {M}\in \mathbb {R}^{k\times k}\) and a rectangular matrix \(\mathbf {B}\in \mathbb {R}^{m\times k}\) of full rank \(m\le k\) are uniquely solvable since left multiplication of the upper block row by \(\mathbf {B}\mathbf {M}^{-1}\) and subtraction of the lower block row yields equations

\[ \mathbf {B}\mathbf {M}^{-1}\mathbf {B}^\top \mathbf {x}_{\varvec{\lambda }}=\mathbf {B}\mathbf {M}^{-1}\mathbf {r}_{{\dot{\mathbf{v}}}}-\mathbf {r}_{\varvec{\lambda }} \]

that may be solved w.r.t. \(\mathbf {x}_{\varvec{\lambda }}\in \mathbb {R}^m\) since \(\mathbf {B}\mathbf {M}^{-1}\mathbf {B}^\top \) is symmetric, positive definite. Inserting this vector \(\mathbf {x}_{\varvec{\lambda }}\) in the upper block row, we get \(\mathbf {x}_{{\dot{\mathbf{v}}}}\in \mathbb {R}^k\) from \(\mathbf {M}\mathbf {x}_{{\dot{\mathbf{v}}}}=\mathbf {r}_{{\dot{\mathbf{v}}}}-\mathbf {B}^\top \mathbf {x}_{\varvec{\lambda }}\). The most time-consuming parts of this block Gaussian elimination are the Cholesky factorization of \(\mathbf {M}\in \mathbb {R}^{k\times k}\) (to get \(\mathbf {M}^{-1}\mathbf {B}^\top \in \mathbb {R}^{k\times m}\) and \(\mathbf {M}^{-1}\mathbf {r}_{{\dot{\mathbf{v}}}}\in \mathbb {R}^k\)), the evaluation of the matrix-matrix product \(\mathbf {B}(\mathbf {M}^{-1}\mathbf {B}^\top )\in \mathbb {R}^{m\times m}\) and the Cholesky factorization of this matrix.
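The block Gaussian elimination described above can be sketched in a few lines of NumPy. This is an illustration under our own assumptions (random test data, hypothetical names `cholesky_solve` and `solve_block_elimination`). For brevity, the triangular solves use `np.linalg.solve`, which does not exploit the triangular structure; `scipy.linalg.cho_factor`/`cho_solve` would do so.

```python
import numpy as np


def cholesky_solve(L, rhs):
    """Solve (L L^T) x = rhs, given the lower Cholesky factor L."""
    return np.linalg.solve(L.T, np.linalg.solve(L, rhs))


def solve_block_elimination(M, B, r_v, r_lam):
    """Solve [[M, B^T], [B, 0]] [x_v; x_lam] = [r_v; r_lam] by block elimination."""
    L = np.linalg.cholesky(M)             # Cholesky factorization of M
    Minv_BT = cholesky_solve(L, B.T)      # M^{-1} B^T   (k x m)
    Minv_r = cholesky_solve(L, r_v)       # M^{-1} r_v   (k)
    S = B @ Minv_BT                       # B M^{-1} B^T, symmetric positive definite
    Ls = np.linalg.cholesky(S)
    x_lam = cholesky_solve(Ls, B @ Minv_r - r_lam)
    x_v = Minv_r - Minv_BT @ x_lam        # from M x_v = r_v - B^T x_lam
    return x_v, x_lam


rng = np.random.default_rng(0)
k, m = 6, 2
A = rng.standard_normal((k, k))
M = A @ A.T + k * np.eye(k)               # symmetric positive definite
B = rng.standard_normal((m, k))           # full rank m (almost surely)
r_v, r_lam = rng.standard_normal(k), rng.standard_normal(m)

x_v, x_lam = solve_block_elimination(M, B, r_v, r_lam)

# Reference: dense solve of the full saddle-point matrix.
K = np.block([[M, B.T], [B, np.zeros((m, m))]])
x_ref = np.linalg.solve(K, np.concatenate([r_v, r_lam]))
print(np.allclose(np.concatenate([x_v, x_lam]), x_ref))  # True
```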

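Alternatively, we could follow a nullspace approach that separates the nullspace of \(\mathbf {B}\in \mathbb {R}^{m\times k}\) from a non-singular matrix \(\bar{\mathbf {R}}\in \mathbb {R}^{m\times m}\): For any non-singular matrix \(\mathbf {Q}\in \mathbb {R}^{k\times k}\) with \(\mathbf {B}\mathbf {Q}=\bigl (\,\bar{\mathbf {R}}^\top ,\,\mathbf {0}_{m\times (k-m)}\,\bigr )\), system (20) is equivalent to

\[ \begin{pmatrix}\bar{\mathbf {M}}_{11}&\bar{\mathbf {M}}_{12}&\bar{\mathbf {R}}\\ \bar{\mathbf {M}}_{21}&\bar{\mathbf {M}}_{22}&\mathbf {0}\\ \bar{\mathbf {R}}^\top &\mathbf {0}&\mathbf {0}\end{pmatrix}\begin{pmatrix}\bar{\mathbf {x}}_{{\dot{\mathbf{v}}},1}\\ \bar{\mathbf {x}}_{{\dot{\mathbf{v}}},2}\\ \bar{\mathbf {x}}_{\varvec{\lambda }}\end{pmatrix}=\begin{pmatrix}\bar{\mathbf {r}}_{{\dot{\mathbf{v}}},1}\\ \bar{\mathbf {r}}_{{\dot{\mathbf{v}}},2}\\ \mathbf {r}_{\varvec{\lambda }}\end{pmatrix} \]

with \(\bar{\mathbf {M}}:=\mathbf {Q}^\top \mathbf {M}\mathbf {Q}\), \(\bar{\mathbf {x}}_{{\dot{\mathbf{v}}}}=\mathbf {Q}^{-1}\mathbf {x}_{{\dot{\mathbf{v}}}}\), \(\bar{\mathbf {r}}_{{\dot{\mathbf{v}}}}=\mathbf {Q}^\top \mathbf {r}_{{\dot{\mathbf{v}}}}\) and \(\bar{\mathbf {x}}_{\varvec{\lambda }}:=\mathbf {x}_{\varvec{\lambda }}\), all partitioned conformally with \(\bar{\mathbf {x}}_{{\dot{\mathbf{v}}},1}\in \mathbb {R}^m\). This block-structured system may be solved in three steps by block backward substitution to get \(\bar{\mathbf {x}}_{{\dot{\mathbf{v}}},1}\), \(\bar{\mathbf {x}}_{{\dot{\mathbf{v}}},2}\) and \(\bar{\mathbf {x}}_{\varvec{\lambda }}\) since matrices \(\bar{\mathbf {R}}^\top \), \(\bar{\mathbf {M}}_{22}\) and \(\bar{\mathbf {R}}\) are non-singular. Betsch and Leyendecker (2006) discussed analytical nullspace representations of the constraint matrix \(\mathbf {B}\) for typical types of constraints in engineering systems. If such analytical expressions are not available, then matrices \(\mathbf {Q}\) and \(\bar{\mathbf {R}}\) could be computed, e.g., by a QR-factorization of \(\mathbf {B}^\top \in \mathbb {R}^{k\times m}\), see (Golub and Van Loan 1996).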
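A minimal NumPy sketch of the nullspace approach, assuming that \(\mathbf {Q}\) and \(\bar{\mathbf {R}}\) are taken from a complete QR-factorization \(\mathbf {B}^\top =\mathbf {Q}\begin{pmatrix}\bar{\mathbf {R}}\\ \mathbf {0}\end{pmatrix}\), so that \(\mathbf {B}\mathbf {Q}=(\bar{\mathbf {R}}^\top ,\mathbf {0})\) and \(\mathbf {Q}^{-1}=\mathbf {Q}^\top \). The function name and test data are hypothetical; the three solves follow the substitution order described above.

```python
import numpy as np


def solve_nullspace(M, B, r_v, r_lam):
    """Nullspace solution of [[M, B^T], [B, 0]] [x_v; x_lam] = [r_v; r_lam]."""
    m = B.shape[0]
    # Complete QR of B^T: B^T = Q [Rbar; 0], hence B Q = (Rbar^T, 0).
    Q, R_full = np.linalg.qr(B.T, mode="complete")
    Rbar = R_full[:m, :]
    Mbar = Q.T @ M @ Q
    rbar = Q.T @ r_v
    # Step 1: Rbar^T xbar_1 = r_lam
    x1 = np.linalg.solve(Rbar.T, r_lam)
    # Step 2: Mbar_22 xbar_2 = rbar_2 - Mbar_21 xbar_1
    x2 = np.linalg.solve(Mbar[m:, m:], rbar[m:] - Mbar[m:, :m] @ x1)
    # Step 3: Rbar x_lam = rbar_1 - Mbar_11 xbar_1 - Mbar_12 xbar_2
    x_lam = np.linalg.solve(Rbar, rbar[:m] - Mbar[:m, :m] @ x1 - Mbar[:m, m:] @ x2)
    x_v = Q @ np.concatenate([x1, x2])    # back-transformation x_v = Q xbar_v
    return x_v, x_lam


rng = np.random.default_rng(1)
k, m = 6, 2
A = rng.standard_normal((k, k))
M = A @ A.T + k * np.eye(k)
B = rng.standard_normal((m, k))
r_v, r_lam = rng.standard_normal(k), rng.standard_normal(m)

x_v, x_lam = solve_nullspace(M, B, r_v, r_lam)
K = np.block([[M, B.T], [B, np.zeros((m, m))]])
x_ref = np.linalg.solve(K, np.concatenate([r_v, r_lam]))
print(np.allclose(np.concatenate([x_v, x_lam]), x_ref))  # True

Q, _ = np.linalg.qr(B.T, mode="complete")
print(np.allclose(B @ Q[:, m:], 0.0))  # True: last k - m columns span ker B
```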

2.4 Benchmark Problem: Heavy Top

The Lie group formulation of the equations of motion is the backbone of a rather general finite element framework for flexible multibody dynamics (Géradin and Cardona 2001). In the present paper, we focus on basic aspects of Lie group time integration in multibody dynamics and restrict the numerical tests to the simulation of a single rigid body in a gravitation field. This heavy top has found much interest in mechanics and serves as a benchmark problem for Lie group methods (Géradin and Cardona 2001, Sect. 5.8). The simulation of more complex flexible structures by Lie group time integration methods is discussed, e.g., in (Brüls et al. 2012).

Figure 2 shows the configuration of the heavy top in \(\mathbb {R}^3\) with \(\mathbf {R}(t)\in \mathrm {SO}(3)\) characterizing its orientation and the position vector \(\mathbf {x}(t)\in \mathbb {R}^3\) of the centre of mass in the inertial frame. In the body-attached frame, the centre of mass is given by \(\mathbf {X}=(\,0,\,1,\,0\,)^\top \). Here and in the following, we omit all physical units. We consider a gravitation field with fixed acceleration vector \(\varvec{\gamma }=(0,0,-9.81)^\top \). Mass and inertia tensor are given by \(m=15.0\) and \(\mathbf {J}=\mathrm{{diag}}\,(0.234375,0.46875,0.234375)\) with \(\mathbf {J}\) denoting the inertia tensor w.r.t. the centre of mass.

In the benchmark problem, the top rotates about a fixed point. Therefore, the configuration variables \((\mathbf {R},\mathbf {x})\) are subject to holonomic constraints \(\mathbf {x}=\mathbf {R}\mathbf {X}\). We consider an initial configuration being defined by \(\mathbf {R}(0)=\mathbf {I}_3\) with an angular velocity \(\varvec{\Omega }(0)=(0,150,-4.61538)^\top \). All other initial values are supposed to be consistent with \(\mathbf {0}=\varvec{\Phi }\bigl ((\mathbf {R},\mathbf {x})\bigr ):=\mathbf {X}-\mathbf {R}^\top \mathbf {x}\) and with the corresponding hidden constraints (16) and (18) at the level of velocity and acceleration coordinates.
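The consistent initial values follow directly from the position constraint and the hidden velocity constraint. This NumPy sketch uses hypothetical variable names and assumes the hidden constraints \(\mathbf {0}=-\widetilde{\mathbf {X}}\varvec{\Omega }-\mathbf {R}^\top \mathbf {u}\) in \(\mathrm {SO}(3)\times \mathbb {R}^3\) and \(\mathbf {0}=-\widetilde{\mathbf {X}}\varvec{\Omega }-\mathbf {U}\) in \(\mathrm {SE}(3)\). Since \(-\widetilde{\mathbf {X}}\varvec{\Omega }=\varvec{\Omega }\times \mathbf {X}=\widetilde{\varvec{\Omega }}\mathbf {X}\), both translational velocities are explicit.

```python
import numpy as np


def hat(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


X = np.array([0.0, 1.0, 0.0])               # centre of mass in the body-attached frame
R0 = np.eye(3)                              # R(0) = I_3
Omega0 = np.array([0.0, 150.0, -4.61538])   # initial angular velocity

x0 = R0 @ X                                 # position level: X - R^T x = 0
u0 = R0 @ (hat(Omega0) @ X)                 # SO(3) x R^3: -X~ Omega - R^T u = 0
U0 = hat(Omega0) @ X                        # SE(3):       -X~ Omega - U = 0

B_direct = np.hstack([-hat(X), -R0.T])      # constraint matrix in SO(3) x R^3
B_se3 = np.hstack([-hat(X), -np.eye(3)])    # constant constraint matrix in SE(3)

print(np.allclose(B_direct @ np.concatenate([Omega0, u0]), 0.0))  # True
print(np.allclose(B_se3 @ np.concatenate([Omega0, U0]), 0.0))     # True
```

Since \(\mathbf {R}(0)=\mathbf {I}_3\), both initial translational velocities equal \(\varvec{\Omega }(0)\times \mathbf {X}=(4.61538,0,0)^\top \).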

The equations of motion (15) of the rotating heavy top result from the principles of classical mechanics. In (Brüls et al. 2011), they were derived for configuration spaces \(G=\mathrm {SO}(3)\times \mathbb {R}^3\) and \(G=\mathrm {SE}(3)\) following an augmented Lagrangian method. In \(\mathrm {SO}(3)\times \mathbb {R}^3\), we get hidden constraints \(\mathbf {0}=-\widetilde{\mathbf {X}}\varvec{\Omega }-\mathbf {R}^\top \mathbf {u}\) and a constraint matrix \(\mathbf {B}=(-\widetilde{\mathbf {X}}\;\;-\!\mathbf {R}^\top )\). The equations of motion are given by

with kinematic relations (1). Figure 3 shows a reference solution that has been computed with the very small time step size \(h=2.5\times 10^{-5}\). The position \(\mathbf {x}(t)\in \mathbb {R}^3\) of the centre of mass varies slowly in the inertial frame. For the Lagrange multipliers \(\varvec{\lambda }(t)\in \mathbb {R}^3\), we observe much higher frequencies that reflect the fast rotation of the top caused by the rather large initial velocity \(\varvec{\Omega }(0)\). Note that the time scale in the right plot of Fig. 3 has been zoomed by a factor of 10.

In the configuration space \(\mathrm {SE}(3)\), we have \({\dot{\mathbf{x}}}=\mathbf {R}\mathbf {U}\) resulting in hidden constraints \(\mathbf {0}=-\widetilde{\mathbf {X}}\varvec{\Omega }-\mathbf {U}\) with a constraint matrix \(\mathbf {B}=(-\widetilde{\mathbf {X}}\;\;-\!\mathbf {I}_3)\) that is constant and does not depend on \(q\in G\). The equations of motion are given by

with kinematic relations (3). The position coordinates \(q=(\mathbf {R},\mathbf {x})\) coincide for both formulations (21) and (22), but there may be substantial differences between the velocity coordinates \(\mathbf {u}(t)\) in the inertial frame and their counterparts \(\mathbf {U}(t)\) in the body-attached frame. This is illustrated by the simulation results in Fig. 4, which were again obtained with time step size \(h=2.5\times 10^{-5}\). In \(\mathrm {SO}(3)\times \mathbb {R}^3\), we observe low-frequency changes of \(\mathbf {u}(t)\) that correspond to the solution behaviour of \(\mathbf {x}(t)\) in the left plot of Fig. 3. For the configuration space \(G=\mathrm {SE}(3)\), the right plot of Fig. 4 shows the dominating influence of the large initial velocity \(\varvec{\Omega }(0)\) on the qualitative solution behaviour of \(\mathbf {U}(t)\).
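The consistency conditions of the benchmark can be checked with a few lines of code. The following Python sketch (assuming NumPy) is our own illustration, not code from the paper: the helper `tilde`, with `tilde(w) @ y` equal to the cross product \(\mathbf{w}\times\mathbf{y}\), and the relation \(\mathbf{u}=\dot{\mathbf{x}}=\mathbf{R}\mathbf{U}\) between the two velocity parametrizations are our assumptions. It computes consistent velocities at \(t=0\) from the hidden constraints and checks \(\mathbf{B}\mathbf{v}=\mathbf{0}\) for both constraint matrices.

```python
import numpy as np


def tilde(w):
    """Skew-symmetric matrix such that tilde(w) @ y == np.cross(w, y)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


# Heavy top data of Sect. 2.4 (physical units omitted).
X = np.array([0.0, 1.0, 0.0])              # centre of mass in the body-attached frame
R0 = np.eye(3)                              # R(0) = I_3
Omega0 = np.array([0.0, 150.0, -4.61538])   # initial angular velocity

# Position level: x(0) = R(0) X makes Phi = X - R^T x vanish.
x0 = R0 @ X
Phi0 = X - R0.T @ x0

# SE(3): the hidden constraint 0 = -tilde(X) Omega - U determines U(0).
U0 = -tilde(X) @ Omega0
B_se3 = np.hstack([-tilde(X), -np.eye(3)])
v_se3 = np.concatenate([Omega0, U0])

# SO(3) x R^3: inertial-frame velocity u = xdot = R U (assumed relation).
u0 = R0 @ U0
B_so3r3 = np.hstack([-tilde(X), -R0.T])
v_so3r3 = np.concatenate([Omega0, u0])

print("x(0) =", x0, " U(0) =", U0, " u(0) =", u0)
print("B v (SE(3)):", B_se3 @ v_se3, " B v (SO(3) x R^3):", B_so3r3 @ v_so3r3)
```

For \(\mathbf{R}(0)=\mathbf{I}_3\) the two velocity vectors coincide at \(t=0\); they differ as soon as the top has rotated.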

Throughout the paper, we will use the two different formulations (21) and (22) of the heavy top benchmark problem for numerical tests to discuss various aspects of the convergence analysis for the generalized-\(\alpha \) Lie group integrator.

2.5 More on the Exponential Map

Equation (7) illustrates the crucial role of the exponential map for multibody system models that have a configuration space with Lie group structure. Since the numerical solution proceeds in time steps, we have to study the composition of exponential maps with different arguments in more detail. Furthermore, the proposed Lie group time integration methods are implicit and rely on a Newton–Raphson iteration that requires the efficient evaluation of Jacobians \((\partial \mathbf {h}/\partial \mathbf {v})\bigl (q\circ \exp (\widetilde{\mathbf {v}})\bigr )\) for vector-valued functions \(\mathbf {h}\,:\,G\rightarrow \mathbb {R}^l\). In the present section, we follow the presentation in (Hairer et al. 2006, Sect. III.4) to discuss these rather technical aspects of Lie group time integration.

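For matrix Lie groups, the exponential map \(\exp \) is given by the matrix exponential. For \(s\in \mathbb {R}\) and any matrices \(\mathbf {A},\mathbf {C}\in \mathbb {R}^{r\times r}\), the series expansion (8) shows
$$\exp (s\mathbf {A})\exp (s\mathbf {C})=\exp \bigl (s(\mathbf {A}+\mathbf {C})\bigr )+\frac{s^2}{2}\,[\mathbf {A},\mathbf {C}]+\mathcal {O}(s^3)$$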

with the matrix commutator \([\mathbf {A},\mathbf {C}]:=\mathbf {A}\mathbf {C}-\mathbf {C}\mathbf {A}\) that vanishes iff matrices \(\mathbf {A}\) and \(\mathbf {C}\) commute. For a slightly more detailed analysis of the product of matrix exponentials, we use the Baker–Campbell–Hausdorff formula, see (Hairer et al. 2006, Lemma III.4.3), to get the following estimate:

Lemma 2.5

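For \(s\rightarrow 0\), the product of matrix exponentials \(\exp (s\mathbf {A})\) and \(\exp (s\mathbf {C})\) satisfies
$$\exp (s\mathbf {A})\exp (s\mathbf {C})=\exp \Bigl (s\mathbf {A}+s\mathbf {C}+\frac{1}{2}[s\mathbf {A},s\mathbf {C}]+\mathcal {O}(s)\,\Vert [s\mathbf {A},s\mathbf {C}]\Vert \Bigr )\,. \tag{23}$$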

Proof

The Baker–Campbell–Hausdorff formula defines the argument of the matrix exponential at the right-hand side of (23) by the solution of an initial value problem with zero initial values at \(s=0\). Solving this initial value problem by Picard iteration with starting guess \(s\mathbf {A}+s\mathbf {C}+[s\mathbf {A},s\mathbf {C}]/2\), we may show that all higher order terms result in a remainder term of size \(\mathcal {O}(s)\Vert [s\mathbf {A},s\mathbf {C}]\Vert \), see (23).\(\quad \square \)
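Lemma 2.5 can be checked numerically. The sketch below is a hypothetical test setup of our own: random \(3\times 3\) matrices with unit Frobenius norm, a truncated Taylor series `expm_taylor` for the matrix exponential (adequate only for small arguments) and NumPy. Halving \(s\) should reduce the error of \(\exp(s\mathbf{A}+s\mathbf{C})\) by a factor of about 4, but the error of the commutator-corrected argument by a factor of about 8, in line with a remainder of size \(\mathcal{O}(s)\Vert[s\mathbf{A},s\mathbf{C}]\Vert=\mathcal{O}(s^3)\).

```python
import numpy as np


def expm_taylor(A, terms=30):
    """Matrix exponential by a truncated Taylor series (only adequate for small ||A||)."""
    result = np.eye(A.shape[0])
    term = np.eye(A.shape[0])
    for i in range(1, terms):
        term = term @ A / i          # A^i / i!
        result = result + term
    return result


def commutator(A, C):
    return A @ C - C @ A


rng = np.random.default_rng(0)
A = rng.standard_normal((3, 3))
C = rng.standard_normal((3, 3))
A /= np.linalg.norm(A)
C /= np.linalg.norm(C)


def bch_errors(s):
    """Errors of exp(sA + sC) and of exp(sA + sC + [sA, sC]/2) w.r.t. exp(sA) exp(sC)."""
    product = expm_taylor(s * A) @ expm_taylor(s * C)
    plain = expm_taylor(s * A + s * C)
    corrected = expm_taylor(s * A + s * C + commutator(s * A, s * C) / 2)
    return np.linalg.norm(product - plain), np.linalg.norm(product - corrected)


(e_plain_1, e_bch_1), (e_plain_2, e_bch_2) = bch_errors(1e-2), bch_errors(5e-3)
ratio_plain = e_plain_1 / e_plain_2   # expected near 4 (error O(s^2))
ratio_bch = e_bch_1 / e_bch_2         # expected near 8 (error O(s^3))
print(ratio_plain, ratio_bch)
```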

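For fixed argument \(\mathbf {A}\), the matrix commutator defines a linear operator
$${\text {ad}}_{\mathbf {A}}:\mathbb {R}^{r\times r}\rightarrow \mathbb {R}^{r\times r}\,,\quad \mathbf {C}\mapsto {\text {ad}}_{\mathbf {A}}(\mathbf {C}):=[\mathbf {A},\mathbf {C}]$$
that is called the adjoint operator. By recursive application of \({\text {ad}}_{\mathbf {A}}\) we may represent directional derivatives of the exponential map \(\exp (\mathbf {A})=\sum _i\mathbf {A}^i/i!\) in compact form: We denote \({\text {ad}}_{\mathbf {A}}^0(\mathbf {C}):=\mathbf {C}\) and
$${\text {ad}}_{\mathbf {A}}^{j+1}(\mathbf {C}):={\text {ad}}_{\mathbf {A}}\bigl ({\text {ad}}_{\mathbf {A}}^j(\mathbf {C})\bigr )\,,\quad (\,j\ge 0\,)\,,$$
and consider powers \((\mathbf {A}+s\mathbf {C})^i\), (\(\,i\ge 0\,\)), in the limit case \(s\rightarrow 0\). For \(i=2\), we get
$$(\mathbf {A}+s\mathbf {C})^2=\mathbf {A}^2+s\bigl (\mathbf {A}\mathbf {C}+\mathbf {C}\mathbf {A}\bigr )+\mathcal {O}(s^2)=\mathbf {A}^2+s\bigl (2\mathbf {A}\mathbf {C}+{\text {ad}}_{-\mathbf {A}}(\mathbf {C})\bigr )+\mathcal {O}(s^2)\,.$$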

Here, the term \({\text {ad}}_{-\mathbf {A}}(\mathbf {C})\) results from the non-commutativity of matrix multiplication and could equally well be represented by the adjoint operator \({\text {ad}}_{\mathbf {A}}\) itself since \(2\mathbf {A}\mathbf {C}+{\text {ad}}_{-\mathbf {A}}(\mathbf {C})= 2\mathbf {C}\mathbf {A}+{\text {ad}}_{\mathbf {A}}(\mathbf {C})\), see (Hairer et al. 2006). The use of \({\text {ad}}_{-\mathbf {A}}\) corresponds, however, to the characterization of the tangent space \(T_qG\) by left translations \(L_q\), see (5) and the discussion in (Iserles et al. 2000). In multibody dynamics, this characterization implies that the vector \(\mathbf {v}\) in the kinematic relations (6) is a left-invariant velocity vector. These left-invariant vectors are favourable since the associated rotational inertia tensors are defined in the body-attached frame and the body mass matrices remain constant during motion (Brüls et al. 2011).

Lemma 2.6

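For \(s\rightarrow 0\) and matrices \(\mathbf {A},\mathbf {C}\in \mathbb {R}^{r\times r}\), the asymptotic behaviour of \((\mathbf {A}+s\mathbf {C})^i\) and \(\exp (\mathbf {A}+s\mathbf {C})\) is characterized by
$$(\mathbf {A}+s\mathbf {C})^i=\mathbf {A}^i+s\sum _{j=0}^{i-1}\binom{i}{j+1}\mathbf {A}^{i-j-1}\,{\text {ad}}_{-\mathbf {A}}^j(\mathbf {C})+\mathcal {O}(s^2) \tag{26}$$
and
$$\exp (\mathbf {A}+s\mathbf {C})=\exp (\mathbf {A})+s\exp (\mathbf {A})\,{\text {dexp}}_{-\mathbf {A}}(\mathbf {C})+\mathcal {O}(s^2) \tag{27}$$
with the matrix-valued function
$${\text {dexp}}_{-\mathbf {A}}(\mathbf {C}):=\sum _{j=0}^{\infty }\frac{1}{(j+1)!}\,{\text {ad}}_{-\mathbf {A}}^j(\mathbf {C}) \tag{28}$$
that satisfies \({\text {dexp}}_{-\mathbf {A}}(\mathbf {C})=\mathbf {C}\) whenever \(\mathbf {A}\) and \(\mathbf {C}\) commute.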

Proof

To prove (26) by induction, we multiply this expression from the right by \((\mathbf {A}+s\mathbf {C})\) and observe that \(\mathbf {A}^i\,s\mathbf {C}= s\mathbf {A}\;\!\!^{(i+1)-j-1}{\text {ad}}_{-\mathbf {A}}^j(\mathbf {C})\) with \(j=0\). Taking into account the identity

see (25), we get (26) with \(i\) replaced by \(i+1\) since

For the proof of (27), we scale (26) by 1/i! and use the series expansion (8) to get

For commuting matrices \(\mathbf {A}\) and \(\mathbf {C}\), the iterated adjoint operators \({\text {ad}}_{-\mathbf {A}}^j(\mathbf {C})\) vanish for all \(j>0\) resulting in \({\text {dexp}}_{-\mathbf {A}}(\mathbf {C})=\mathbf {C}\), see (28).\(\quad \square \)
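The series representation of \({\text{dexp}}_{-\mathbf{A}}\) can be compared with a finite-difference approximation of the directional derivative of \(\exp\). This is a minimal sketch under our own assumptions: NumPy, a truncated Taylor series for the matrix exponential, the series \({\text{dexp}}_{-\mathbf{A}}(\mathbf{C})=\sum_{j\ge0}{\text{ad}}_{-\mathbf{A}}^j(\mathbf{C})/(j+1)!\) truncated after 30 terms, and random test matrices. It also checks the special case of commuting matrices.

```python
import numpy as np


def expm_taylor(A, terms=40):
    """Matrix exponential by a truncated Taylor series (adequate for ||A|| of order one)."""
    result = np.eye(A.shape[0])
    term = np.eye(A.shape[0])
    for i in range(1, terms):
        term = term @ A / i
        result = result + term
    return result


def ad(A, C):
    """Adjoint operator ad_A(C) = [A, C] = AC - CA."""
    return A @ C - C @ A


def dexp_minus(A, C, terms=30):
    """dexp_{-A}(C) = sum_{j>=0} ad_{-A}^j(C) / (j+1)!, truncated after `terms` terms."""
    result = np.zeros_like(C)
    term = C.copy()                   # ad_{-A}^0(C) = C
    factorial = 1.0
    for j in range(terms):
        factorial *= j + 1            # (j+1)!
        result = result + term / factorial
        term = ad(-A, term)           # ad_{-A}^{j+1}(C)
    return result


rng = np.random.default_rng(1)
A = rng.standard_normal((4, 4))
A /= np.linalg.norm(A)
C = rng.standard_normal((4, 4))

# Directional derivative of exp at A in direction C, by central differences.
s = 1e-6
fd = (expm_taylor(A + s * C) - expm_taylor(A - s * C)) / (2 * s)
lemma = expm_taylor(A) @ dexp_minus(A, C)
print("max deviation:", np.max(np.abs(fd - lemma)))

# Commuting case: C2 = A @ A commutes with A, so dexp_{-A}(C2) = C2.
C2 = A @ A
```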

Lemma 2.6 shows that the directional derivative of the matrix exponential is given by \((\partial /\partial \mathbf {A})\exp (\mathbf {A})\mathbf {C}= \exp (\mathbf {A}){\text {dexp}}_{-\mathbf {A}}(\mathbf {C})\). In the Lie group setting, we use this expression to study the Jacobian of vector-valued functions \(\mathbf {h}\bigl (q\circ \exp (\widetilde{\mathbf {v}})\bigr )\) w.r.t. \(\mathbf {v}\in \mathbb {R}^k\). For elements \(\widetilde{\mathbf {v}},\widetilde{\mathbf {w}}\in \mathfrak {g}\), the terms \({\text {ad}}_{-\widetilde{\mathbf {v}}}(\widetilde{\mathbf {w}})\) and \({\text {dexp}}_{-\widetilde{\mathbf {v}}}(\widetilde{\mathbf {w}})\) are linear in \(\mathbf {w}\in \mathbb {R}^k\) and may be represented by matrix-vector products in \(\mathbb {R}^k\) using the notation
$${\text {ad}}_{\widetilde{\mathbf {v}}}(\widetilde{\mathbf {w}})=\widetilde{(\widehat{\mathbf {v}}\mathbf {w})}\,,\quad (\,\mathbf {v},\mathbf {w}\in \mathbb {R}^k,\;\widehat{\mathbf {v}}\in \mathbb {R}^{k\times k}\,)\,. \tag{29}$$
With (29), the operators \({\text {ad}}_{\widetilde{\mathbf {v}}}\), \({\text {ad}}_{-\widetilde{\mathbf {v}}}\) and \({\text {ad}}_{-\widetilde{\mathbf {v}}}^j\) correspond to \(k\times k\)-matrices \(\widehat{\mathbf {v}}\), \(-\widehat{\mathbf {v}}\) and \((-\widehat{\mathbf {v}})^j\), respectively, and the counterpart to \(\widetilde{\mathbf {z}}= {\text {dexp}}_{-\widetilde{\mathbf {v}}}(\widetilde{\mathbf {w}}) \in \mathfrak {g}\), see (28), is given by \(\mathbf {z}=\mathbf {T}(\mathbf {v})\mathbf {w}\in \mathbb {R}^k\) with the tangent operator
$$\mathbf {T}(\mathbf {v}):=\sum _{j=0}^{\infty }\frac{(-\widehat{\mathbf {v}})^j}{(j+1)!}\,,$$
see (Iserles et al. 2000). Using the chain rule, we obtain

Corollary 2.7

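Consider a continuously differentiable function \(\mathbf {h}:G\rightarrow \mathbb {R}^l\) and a matrix-valued function \(\mathbf {H}:G\rightarrow \mathbb {R}^{l\times k}\) that represents the derivative of \(\mathbf {h}\) in the sense that
$$\frac{\mathrm {d}}{\mathrm {d}\vartheta }\,\mathbf {h}\bigl (q\circ \exp (\vartheta \widetilde{\mathbf {w}})\bigr )\Big |_{\vartheta =0}=\mathbf {H}(q)\,\mathbf {w}\,,\quad (\,q\in G,\;\mathbf {w}\in \mathbb {R}^k\,)\,,$$
see (14). The Jacobian of \(\mathbf {h}\bigl (q\circ \exp (\widetilde{\mathbf {v}})\bigr )\) w.r.t. \(\mathbf {v}\in \mathbb {R}^k\) is given by
$$\frac{\partial }{\partial \mathbf {v}}\,\mathbf {h}\bigl (q\circ \exp (\widetilde{\mathbf {v}})\bigr )=\mathbf {H}\bigl (q\circ \exp (\widetilde{\mathbf {v}})\bigr )\,\mathbf {T}(\mathbf {v})\,.$$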

Remark 2.8

(a) For commuting elements of the Lie algebra (\(\widetilde{\mathbf {v}},\widetilde{\mathbf {w}}\in \mathfrak {g}\) with \([\widetilde{\mathbf {v}},\widetilde{\mathbf {w}}]=\widetilde{\mathbf {0}}\)), the adjoint operator vanishes resulting in \(\widehat{\mathbf {v}}\mathbf {w}=\mathbf {0}_k\) and \(\mathbf {T}(\mathbf {v})\mathbf {w}=\mathbf {w}\). Since every \(\widetilde{\mathbf {v}}\) commutes with itself, the tangent operator satisfies \(\mathbf {T}(\mathbf {v})\mathbf {v}=\mathbf {v}\), (\(\mathbf {v}\in \mathbb {R}^k\)), and Corollary 2.7 implies
$$\frac{\mathrm {d}}{\mathrm {d}\vartheta }\,\mathbf {h}\bigl (q\circ \exp (\vartheta \widetilde{\mathbf {v}})\bigr )=\mathbf {H}\bigl (q\circ \exp (\vartheta \widetilde{\mathbf {v}})\bigr )\,\mathbf {v}$$
with \(\vartheta \in \mathbb {R}\) and any vector \(\mathbf {v}\in \mathbb {R}^k\).
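For \(G=\mathrm{SO}(3)\), where the hat operator coincides with the tilde operator, both the identity \(\mathbf{T}(\mathbf{v})\mathbf{v}=\mathbf{v}\) and Corollary 2.7 are easy to test. The sketch below is our own illustration (NumPy assumed): it evaluates \(\mathbf{T}(\mathbf{v})=\sum_{j\ge0}(-\widehat{\mathbf{v}})^j/(j+1)!\) by a truncated series and uses the hypothetical test function \(\mathbf{h}(q)=q\mathbf{X}\), for which we take \(\mathbf{H}(q)=-q\widetilde{\mathbf{X}}\) because \(\frac{\mathrm d}{\mathrm d\vartheta}q\exp(\vartheta\widetilde{\mathbf{w}})\mathbf{X}|_{\vartheta=0}=q\widetilde{\mathbf{w}}\mathbf{X}=-q\widetilde{\mathbf{X}}\mathbf{w}\). The Jacobian \(\mathbf{H}(q\circ\exp(\widetilde{\mathbf{v}}))\mathbf{T}(\mathbf{v})\) is compared with central differences.

```python
import numpy as np


def tilde(w):
    """Skew-symmetric matrix with tilde(w) @ y == np.cross(w, y)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(w):
    """Rodrigues' formula for exp(tilde(w)) in SO(3)."""
    phi = np.linalg.norm(w)
    W = tilde(w)
    if phi < 1e-8:
        return np.eye(3) + W + 0.5 * W @ W
    return np.eye(3) + np.sin(phi) / phi * W + (1.0 - np.cos(phi)) / phi**2 * W @ W


def tangent_series(vhat, terms=30):
    """Tangent operator T(v) = sum_{j>=0} (-vhat)^j / (j+1)!, truncated after `terms` terms."""
    k = vhat.shape[0]
    result, term, factorial = np.zeros((k, k)), np.eye(k), 1.0
    for j in range(terms):
        factorial *= j + 1
        result = result + term / factorial
        term = term @ (-vhat)
    return result


X = np.array([0.0, 1.0, 0.0])
R = exp_so3(np.array([0.4, -0.2, 0.9]))   # arbitrary group element q = R
v = np.array([0.3, -0.7, 0.5])
T = tangent_series(tilde(v))               # in SO(3), hat(v) = tilde(v)

# Remark 2.8(a): v commutes with itself, so T(v) v = v.
print(T @ v - v)


# Corollary 2.7 for h(q) = q X with H(q) = -q tilde(X).
def h_of(vec):
    return R @ exp_so3(vec) @ X


eps = 1e-6
jac_fd = np.column_stack([(h_of(v + eps * e) - h_of(v - eps * e)) / (2 * eps) for e in np.eye(3)])
jac_corollary = -R @ exp_so3(v) @ tilde(X) @ T
print(np.max(np.abs(jac_fd - jac_corollary)))
```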

(b) The efficient evaluation of the tangent operator is essential for an efficient implementation of implicit Lie group integrators. In the Lie group \(G=\mathrm {SO}(3)\), the hat operator maps \(\varvec{\Omega }\in \mathbb {R}^3\) to \(\widehat{\varvec{\Omega }}:=\widetilde{\varvec{\Omega }}\) with the skew symmetric matrix \(\widetilde{\varvec{\Omega }}\) being defined in (2). Similar to Rodrigues’ formula (10), the tangent operator \(\mathbf {T}_{\mathrm {SO}(3)}\) may be evaluated in closed form (Brüls et al. 2011):

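For \(G=\mathrm {SO}(3)\times \mathbb {R}^3\), the Lie algebra \(\mathfrak {g}=\mathfrak {so}(3)\times \mathbb {R}^3\) is parametrized by vectors \(\mathbf {v}=(\varvec{\Omega }^\top ,\mathbf {u}^\top )^\top \in \mathbb {R}^6\) and we get
$$\widehat{\mathbf {v}}=\begin{pmatrix}\widetilde{\varvec{\Omega }}&\mathbf {0}\\ \mathbf {0}&\mathbf {0}\end{pmatrix},\quad \mathbf {T}(\mathbf {v})=\begin{pmatrix}\mathbf {T}_{\mathrm {SO}(3)}(\varvec{\Omega })&\mathbf {0}\\ \mathbf {0}&\mathbf {I}_3\end{pmatrix}.$$

More complex expressions are obtained for the Lie group \(G=\mathrm {SE}(3)\) and its Lie algebra \(\mathfrak {se}(3)\) that is parametrized by vectors \(\mathbf {v}=(\varvec{\Omega }^\top ,\mathbf {U}^\top )^\top \in \mathbb {R}^6\) with
$$\widetilde{\mathbf {v}}=\begin{pmatrix}\widetilde{\varvec{\Omega }}&\mathbf {U}\\ \mathbf {0}^\top &0\end{pmatrix}\in \mathfrak {se}(3)\,,\quad \widehat{\mathbf {v}}=\begin{pmatrix}\widetilde{\varvec{\Omega }}&\mathbf {0}\\ \widetilde{\mathbf {U}}&\widetilde{\varvec{\Omega }}\end{pmatrix}.$$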

Using the identities \(\widetilde{\varvec{\Omega }}^3=-\Phi ^2\widetilde{\varvec{\Omega }}\), \(\widetilde{\mathbf {U}}\widetilde{\varvec{\Omega }}\,{=}\, -(\varvec{\Omega }^\top \mathbf {U})\mathbf {I}_3+\varvec{\Omega }\mathbf {U}^\top \), \(\widetilde{\mathbf {U}}\widetilde{\varvec{\Omega }}^2+ \widetilde{\varvec{\Omega }}^2\widetilde{\mathbf {U}}\) \(\,{=}\,-\Phi ^2\widetilde{\mathbf {U}}-(\varvec{\Omega }^\top \mathbf {U})\widetilde{\varvec{\Omega }}\) and \(\widetilde{\varvec{\Omega }}\widetilde{\mathbf {U}}\widetilde{\varvec{\Omega }}= -(\varvec{\Omega }^\top \mathbf {U})\widetilde{\varvec{\Omega }}\) with \(\Phi :=\Vert \varvec{\Omega }\Vert _2\), we prove by induction

and

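for all \(l\ge 0\) and get the tangent operator
$$\mathbf {T}_{\mathrm {SE}(3)}(\mathbf {v})=\begin{pmatrix}\mathbf {T}_{\mathrm {SO}(3)}(\varvec{\Omega })&\mathbf {0}\\ \mathbf {S}_{\mathrm {SE}(3)}(\varvec{\Omega },\mathbf {U})&\mathbf {T}_{\mathrm {SO}(3)}(\varvec{\Omega })\end{pmatrix}$$
with \(\mathbf {S}_{\mathrm {SE}(3)}(\mathbf {0},\mathbf {U})=-\widetilde{\mathbf {U}}/2\) and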

if \(\varvec{\Omega }\ne \mathbf {0}\), see (Brüls et al. 2011 and Sonneville et al. 2014, Appendix A).
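The closed form of \(\mathbf{T}_{\mathrm{SO}(3)}\) and the block structure of \(\mathbf{T}_{\mathrm{SE}(3)}\) can be cross-checked against the truncated series. In the sketch below (NumPy assumed), `tangent_so3` is our own summation of the series with \(\widetilde{\varvec{\Omega}}^3=-\Phi^2\widetilde{\varvec{\Omega}}\), which gives \(\mathbf{I}_3-\frac{1-\cos\Phi}{\Phi^2}\widetilde{\varvec{\Omega}}+\frac{1-\sin\Phi/\Phi}{\Phi^2}\widetilde{\varvec{\Omega}}^2\); `hat_se3` uses the lower block-triangular matrix \(\widehat{\mathbf{v}}\) with blocks \(\widetilde{\varvec{\Omega}}\) and \(\widetilde{\mathbf{U}}\), which the code verifies against the commutator of \(4\times 4\) elements \(\bigl(\begin{smallmatrix}\widetilde{\varvec{\Omega}}&\mathbf{U}\\ \mathbf{0}^\top&0\end{smallmatrix}\bigr)\) of \(\mathfrak{se}(3)\). The closed form of \(\mathbf{S}_{\mathrm{SE}(3)}\) for \(\varvec{\Omega}\ne\mathbf{0}\) is not reproduced; only \(\mathbf{S}_{\mathrm{SE}(3)}(\mathbf{0},\mathbf{U})=-\widetilde{\mathbf{U}}/2\) and the identities used in the induction proof are tested.

```python
import numpy as np


def tilde(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def tangent_series(vhat, terms=40):
    """T(v) = sum_{j>=0} (-vhat)^j / (j+1)! (truncated reference implementation)."""
    k = vhat.shape[0]
    result, term, factorial = np.zeros((k, k)), np.eye(k), 1.0
    for j in range(terms):
        factorial *= j + 1
        result = result + term / factorial
        term = term @ (-vhat)
    return result


def tangent_so3(Omega):
    """Closed form of the series for SO(3), obtained with tilde(Omega)^3 = -Phi^2 tilde(Omega)."""
    phi = np.linalg.norm(Omega)
    W = tilde(Omega)
    if phi < 1e-6:                       # Taylor expansion near Omega = 0
        return np.eye(3) - 0.5 * W + W @ W / 6.0
    return (np.eye(3) - (1.0 - np.cos(phi)) / phi**2 * W
            + (1.0 - np.sin(phi) / phi) / phi**2 * W @ W)


def hat_se3(Omega, U):
    """6x6 matrix representing ad in se(3) for v = (Omega, U)."""
    return np.block([[tilde(Omega), np.zeros((3, 3))], [tilde(U), tilde(Omega)]])


def se3_element(Omega, U):
    """4x4 Lie algebra element [[tilde(Omega), U], [0, 0]]."""
    M = np.zeros((4, 4))
    M[:3, :3], M[:3, 3] = tilde(Omega), U
    return M


rng = np.random.default_rng(2)
Om1, U1, Om2, U2 = rng.standard_normal((4, 3))

# hat_se3 reproduces the commutator [v1~, v2~] of 4x4 se(3) elements.
E1, E2 = se3_element(Om1, U1), se3_element(Om2, U2)
Cm = E1 @ E2 - E2 @ E1
ad_from_matrices = np.concatenate([[Cm[2, 1], Cm[0, 2], Cm[1, 0]], Cm[:3, 3]])
ad_from_hat = hat_se3(Om1, U1) @ np.concatenate([Om2, U2])

T_se3 = tangent_series(hat_se3(Om1, U1))
T_se3_zero_Omega = tangent_series(hat_se3(np.zeros(3), U1))

# Identities used in the induction proof (all residuals should vanish).
W, Uw, Phi2, d = tilde(Om1), tilde(U1), Om1 @ Om1, Om1 @ U1
residuals = [W @ W @ W + Phi2 * W,
             Uw @ W + d * np.eye(3) - np.outer(Om1, U1),
             Uw @ W @ W + W @ W @ Uw + Phi2 * Uw + d * W,
             W @ Uw @ W + d * W]
print(max(np.max(np.abs(r)) for r in residuals))
```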

(c) If \(\mathbb {R}^k\) with the addition is considered as a Lie group, then we get \(\widehat{\mathbf {v}}=\mathbf {0}_{k\times k}\) and \(\mathbf {T}_{\mathbb {R}^k}(\mathbf {v})=\mathbf {I}_k\) for any vector \(\mathbf {v}\in \mathbb {R}^k\) since the group operation is commutative.

(d) Similar to the discussion in Example 2.1(c), we observe for direct products like \(\mathrm {SO}(3)\times \mathbb {R}^3\) that the matrix \(\widehat{\mathbf {v}}\) and the tangent operator \(\mathbf {T}(\mathbf {v})\) are block-diagonal. In \((\mathrm {SO}(3)\times \mathbb {R}^3)^N\) and \((\mathrm {SE}(3))^N\), the tangent operators are given by
$$\mathbf {T}(\mathbf {v})=\mathrm {blockdiag}\bigl (\mathbf {T}_G(\mathbf {v}_1),\ldots ,\mathbf {T}_G(\mathbf {v}_N)\bigr )\,,\quad \mathbf {v}=(\mathbf {v}_1^\top ,\ldots ,\mathbf {v}_N^\top )^\top \,,$$
with \(G=\mathrm {SO}(3)\times \mathbb {R}^3\) and \(G=\mathrm {SE}(3)\), respectively.
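The block-diagonal structure in Remarks 2.8(c) and (d) follows from the series definition of the tangent operator, since all powers of a block-diagonal \(\widehat{\mathbf{v}}\) stay block-diagonal. The short NumPy sketch below, with an illustrative number of bodies and random velocity vectors (our assumptions), checks this for \((\mathrm{SO}(3)\times\mathbb{R}^3)^N\).

```python
import numpy as np


def tilde(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def tangent_series(vhat, terms=40):
    """T(v) = sum_{j>=0} (-vhat)^j / (j+1)!, truncated after `terms` terms."""
    k = vhat.shape[0]
    result, term, factorial = np.zeros((k, k)), np.eye(k), 1.0
    for j in range(terms):
        factorial *= j + 1
        result = result + term / factorial
        term = term @ (-vhat)
    return result


def block_diag(*blocks):
    """Square blocks on the diagonal of an otherwise zero matrix."""
    n = sum(b.shape[0] for b in blocks)
    out = np.zeros((n, n))
    i = 0
    for b in blocks:
        k = b.shape[0]
        out[i:i + k, i:i + k] = b
        i += k
    return out


def hat_so3xr3(v):
    """hat(v) for v = (Omega, u) in so(3) x R^3; the R^3 block vanishes (commutative group)."""
    return block_diag(tilde(v[:3]), np.zeros((3, 3)))


rng = np.random.default_rng(3)
N = 3                                    # number of bodies (illustrative)
vs = rng.standard_normal((N, 6))         # one (Omega_i, u_i) per body

T_single = [tangent_series(hat_so3xr3(v)) for v in vs]
T_global = tangent_series(block_diag(*[hat_so3xr3(v) for v in vs]))
print(np.max(np.abs(T_global - block_diag(*T_single))))
```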

3 Generalized-\(\alpha \) Lie Group Time Integration

The time integration of the equations of motion (15) by Lie group methods is based on the observation that (15a) implies

In Sect. 3.1, a generalized-\(\alpha \) Lie group method for the index-3 formulation (15) is introduced. In Sect. 3.2, we recall some well-known facts about order, stability and “overshooting” of generalized-\(\alpha \) methods in linear spaces. For the heavy top benchmark problem, second-order convergence of the Lie group integrator and an order reduction phenomenon in the transient phase may be observed numerically (Sect. 3.3). In Sect. 3.4, we show that the error constant of the first-order error term may be reduced drastically by an analytical index reduction before time discretization. Implementation aspects and the discretization errors in hidden constraints are studied in Sects. 3.5 and 3.6.

3.1 The Lie Group Time Integration Method

As proposed by Brüls and Cardona (2010), we consider a generalized-\(\alpha \) method for the index-3 formulation (15) of the equations of motion that updates the numerical solution \((q_n,\mathbf {v}_n,\mathbf {a}_n,\varvec{\lambda }_n)\) in a time step \(t_n\rightarrow t_n+h\) of step size h according to

with vectors \({\dot{\mathbf{v}}}_{n+1}\), \(\varvec{\lambda }_{n+1}\) satisfying the equilibrium conditions

The term generalized-\(\alpha \) method refers to the coefficients \(\alpha _m\), \(\alpha _f\) in the update formula (37d) for the acceleration-like variables \(\mathbf {a}_n\). These auxiliary variables \(\mathbf {a}_n\) were introduced by Chung and Hulbert (1993), who studied the time integration of unconstrained linear systems in linear spaces and proposed a one-parameter family of algorithmic parameters \(\alpha _m\), \(\alpha _f\), \(\beta \) and \(\gamma \) that may be considered as a quasi-standard for this type of methods, see Sect. 3.2 below.

Method (37) is initialized with starting values \(q_0\in G\) and \(\mathbf {v}_0\in \mathbb {R}^k\) that approximate the (consistent) initial values \(q(t_0)\), \(\mathbf {v}(t_0)\) in (15). The starting values \({\dot{\mathbf{v}}}_0\), \(\mathbf {a}_0\) at acceleration level are approximations of \({\dot{\mathbf{v}}}(t_0)\in \mathbb {R}^k\), see (19). The convergence analysis in Sect. 4 below will show that the starting values need to be selected carefully to guarantee second-order convergence in all solution components and to avoid spurious oscillations in the numerical solution \(\varvec{\lambda }_n\).
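To make the structure of a time step concrete, the following sketch applies a generalized-\(\alpha\) Lie group step to the simplest unconstrained case, a torque-free rigid body in \(G=\mathrm{SO}(3)\) with body-fixed angular velocity \(\mathbf{v}=\varvec{\Omega}\). It is our own restatement and not the authors' implementation: we assume the update formulas \(q_{n+1}=q_n\circ\exp(h\widetilde{\varvec{\Delta}\mathbf{q}}_n)\), \(\varvec{\Delta}\mathbf{q}_n=\mathbf{v}_n+(\tfrac12-\beta)h\mathbf{a}_n+\beta h\mathbf{a}_{n+1}\), \(\mathbf{v}_{n+1}=\mathbf{v}_n+(1-\gamma)h\mathbf{a}_n+\gamma h\mathbf{a}_{n+1}\) and \((1-\alpha_m)\mathbf{a}_{n+1}+\alpha_m\mathbf{a}_n=(1-\alpha_f){\dot{\mathbf{v}}}_{n+1}+\alpha_f{\dot{\mathbf{v}}}_n\), together with the parameter formulas of Chung and Hulbert (1993), and we replace the Newton–Raphson iteration by a simple fixed-point iteration that converges for small \(h\). The inertia tensor and initial values are illustrative; the starting value \(\mathbf{a}_0:={\dot{\mathbf{v}}}_0\) mirrors the choice in Sect. 3.3.

```python
import numpy as np


def tilde(w):
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(w):
    """Rodrigues' formula for exp(tilde(w))."""
    phi = np.linalg.norm(w)
    W = tilde(w)
    if phi < 1e-8:
        return np.eye(3) + W + 0.5 * W @ W
    return np.eye(3) + np.sin(phi) / phi * W + (1.0 - np.cos(phi)) / phi**2 * W @ W


def chung_hulbert(rho_inf):
    """Generalized-alpha parameters of Chung and Hulbert (1993) for damping rho_inf."""
    alpha_m = (2.0 * rho_inf - 1.0) / (rho_inf + 1.0)
    alpha_f = rho_inf / (rho_inf + 1.0)
    gamma = 0.5 + alpha_f - alpha_m
    beta = 0.25 * (gamma + 0.5) ** 2
    return alpha_m, alpha_f, beta, gamma


def vdot_free(Omega, J):
    """Euler's equations of the torque-free body: J Omegadot = -Omega x (J Omega)."""
    return -np.linalg.solve(J, np.cross(Omega, J @ Omega))


def step(R, v, vdot, a, h, J, params, tol=1e-14, max_iter=50):
    """One generalized-alpha Lie group step; vdot_{n+1} by fixed-point iteration."""
    alpha_m, alpha_f, beta, gamma = params

    def a_and_v(vdot_new):
        a_new = ((1 - alpha_f) * vdot_new + alpha_f * vdot - alpha_m * a) / (1 - alpha_m)
        return a_new, v + h * (1 - gamma) * a + h * gamma * a_new

    vdot_new = vdot.copy()
    for _ in range(max_iter):
        update = vdot_free(a_and_v(vdot_new)[1], J)
        done = np.linalg.norm(update - vdot_new) < tol
        vdot_new = update
        if done:
            break
    a_new, v_new = a_and_v(vdot_new)
    dq = v + h * (0.5 - beta) * a + h * beta * a_new      # increment Delta q_n
    return R @ exp_so3(h * dq), v_new, vdot_new, a_new


J = np.diag([1.0, 2.0, 3.0])            # illustrative inertia tensor
params = chung_hulbert(0.9)
h, n_steps = 1e-3, 1000
R, v = np.eye(3), np.array([2.0, 0.1, 0.3])
vdot = vdot_free(v, J)
a = vdot.copy()                         # a_0 := vdot_0

energy_0 = 0.5 * v @ J @ v
momentum_0 = R @ J @ v                  # spatial angular momentum (constant for the exact flow)
for _ in range(n_steps):
    R, v, vdot, a = step(R, v, vdot, a, h, J, params)

energy_drift = abs(0.5 * v @ J @ v - energy_0) / energy_0
momentum_drift = np.linalg.norm(R @ J @ v - momentum_0) / np.linalg.norm(momentum_0)
orthogonality_error = np.linalg.norm(R.T @ R - np.eye(3))
print(energy_drift, momentum_drift, orthogonality_error)
```

Because every update multiplies \(\mathbf{R}_n\) by an exact rotation matrix, \(\mathbf{R}_n\) stays orthogonal up to round-off, whereas energy and spatial angular momentum are preserved only approximately.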

In practical applications, variable step size implementations with error control are expected to be superior to methods with fixed time step size h. For constrained systems in linear configuration spaces, a step size control algorithm for generalized-\(\alpha \) methods with \(\alpha _m=0\) (HHT-methods, see Hilber et al. 1977) was developed in (Géradin and Cardona 2001, Chap. 11). For this problem class, Jay and Negrut (2007) proposed a linear update formula for the auxiliary variables \(\mathbf {a}_n\) to compensate for a first-order error term resulting from a step size change at \(t=t_n\).

An alternative approach is based on the elimination of these variables \(\mathbf {a}_n\) in the multi-step representation of generalized-\(\alpha \) methods according to Erlicher et al. (2002). Here, the algorithmic parameters \(\alpha _m\), \(\alpha _f\), \(\beta \) and \(\gamma \) have to be updated in each time step considering the step size ratio \(h_{n+1}/h_n\), see (Brüls and Arnold 2008).

There is no straightforward extension of the results of Erlicher et al. (2002) from linear configuration spaces to the Lie group setting of the present paper. Furthermore, the analysis of the error propagation in time integration is simplified substantially if the time step size h is fixed for all time steps. For both reasons, the convergence analysis for generalized-\(\alpha \) Lie group integrators (37) with variable time step size \(h_n\) will be a topic of future research that is beyond the scope of the present paper.

3.2 The Generalized-\(\alpha \) Method in Linear Spaces

For linear configuration spaces \(G=\mathbb {R}^k\) and unconstrained systems (15) with constant mass matrix \(\mathbf {M}\), the generalized-\(\alpha \) Lie group method (37) coincides with the “classical” generalized-\(\alpha \) method that goes back to the work of Chung and Hulbert (1993). Multiplying (37d) by the (constant) mass matrix \(\mathbf {M}\) and eliminating vectors \(\varvec{\Delta }\mathbf {q}_n\) and \({\dot{\mathbf{v}}}_{n+1}\), we get

with \(\mathbf {g}_n:=\mathbf {g}(\mathbf {q}_n,\mathbf {v}_n,t_n)\) and vectors \(\mathbf {q}_n,\mathbf {q}_{n+1}\in \mathbb {R}^k\) that are typeset in boldface font to indicate the linear structure of the configuration space.
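The linear-space method can be tried on the scalar test equation \(\ddot q=-\omega^2q\). In the plain-Python sketch below, the update formulas keep \(a_n\) and \(\dot v_n\) separate rather than using the eliminated form (38), and we assume the offset \(\Delta_\alpha=\alpha_m-\alpha_f\) and the parameter formulas of Chung and Hulbert (1993); for this linear problem the implicit equation for \(a_{n+1}\) can be solved in closed form. With the exact starting value \(a_0=\dot v(t_0+\Delta_\alpha h)\), halving \(h\) should reduce the global errors in \(q\) and \(v\) by a factor of about 4.

```python
import math


def chung_hulbert(rho_inf):
    alpha_m = (2.0 * rho_inf - 1.0) / (rho_inf + 1.0)
    alpha_f = rho_inf / (rho_inf + 1.0)
    gamma = 0.5 + alpha_f - alpha_m
    beta = 0.25 * (gamma + 0.5) ** 2
    return alpha_m, alpha_f, beta, gamma


def integrate(h, omega=2.0, rho_inf=0.9, t_end=1.0):
    """Generalized-alpha for q'' = -omega^2 q with q(0) = 1, v(0) = 0 (exact q = cos(omega t))."""
    am, af, beta, gamma = chung_hulbert(rho_inf)
    delta = am - af                                   # assumed offset Delta_alpha
    q, v = 1.0, 0.0
    vdot = -omega**2 * q
    a = -omega**2 * math.cos(omega * delta * h)       # a_0 = vdot(t_0 + Delta_alpha h)
    for _ in range(round(t_end / h)):
        q_pred = q + h * v + h**2 * (0.5 - beta) * a
        # Insert vdot_{n+1} = -omega^2 (q_pred + h^2 beta a_{n+1}) into the a-update, solve for a_{n+1}.
        a_new = ((-(1 - af) * omega**2 * q_pred + af * vdot - am * a)
                 / ((1 - am) + (1 - af) * beta * (h * omega) ** 2))
        q = q_pred + h**2 * beta * a_new
        v = v + h * (1 - gamma) * a + h * gamma * a_new
        vdot = -omega**2 * q
        a = a_new
    return q, v


def global_errors(h, omega=2.0, t_end=1.0):
    q, v = integrate(h, omega=omega, t_end=t_end)
    return abs(q - math.cos(omega * t_end)), abs(v + omega * math.sin(omega * t_end))


(eq_1, ev_1), (eq_2, ev_2) = global_errors(1e-2), global_errors(5e-3)
print("observed orders:", math.log2(eq_1 / eq_2), math.log2(ev_1 / ev_2))
```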

For a local error analysis, we suppose that \(\mathbf {a}_n\) approximates \({\dot{\mathbf{v}}}(t_n+\Delta _\alpha h)\) with a fixed offset \(\Delta _\alpha \in \mathbb {R}\), see (Jay and Negrut 2008, Sect. 2), and substitute in (38) the numerical solution vectors \(\mathbf {q}_n\), \(\mathbf {v}_n\), \(\mathbf {a}_n\), \(\mathbf {g}_n\) by \(\mathbf {q}(t_n)\), \(\mathbf {v}(t_n)\), \({\dot{\mathbf{v}}}(t_n+\Delta _\alpha h)\) and \(-\mathbf {M}{\dot{\mathbf{v}}}(t_n)\), respectively. The resulting residuals define local truncation errors \(\mathbf {l}_n^{\mathbf {q}}\), \(\mathbf {l}_n^{\mathbf {v}}\) and \(\mathbf {l}_n^{\mathbf {a}}\):

For sufficiently smooth solutions \(\mathbf {q}(t)\), the local truncation errors in (39) may be analysed by Taylor expansion of functions \(\mathbf {q}(t)\), \(\mathbf {v}(t)\) and \({\dot{\mathbf{v}}}(t)\) at \(t=t_n\):

We get local truncation errors \(\mathbf {l}_n^{\mathbf {q}}=\mathcal {O}(h^3)\), \(\mathbf {l}_n^{\mathbf {v}}=\mathcal {O}(h^3)\) and \(\mathbf {l}_n^{\mathbf {a}}=\mathcal {O}(h^2)\) if the algorithmic parameters satisfy the order condition

Chung and Hulbert (1993) studied the scalar test equation \(\ddot{q}+\omega ^2q=0\) with periodic analytical solutions \(q(t)=c_1\sin \omega t+c_2\cos \omega t\) and observed that (38) results in a frequency-dependent linear mapping \((q_n,v_n,a_n)\mapsto (q_{n+1},v_{n+1},a_{n+1})\). Scaling the update formulae (38a, 38b) by factors \(1/h^2\) and \(1/h\), respectively, we get

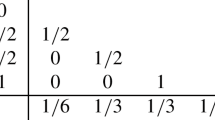

Recursive application yields \(\mathbf {z}_n=\mathbf {T}_{h\omega }^n\mathbf {z}_0\) with \(\mathbf {T}_{h\omega }:=(\mathbf {T}_{h\omega }^+)^{-1}\mathbf {T}_{h\omega }^0\). Therefore, the stability and (numerical) damping properties of the generalized-\(\alpha \) method (38) applied to \(\ddot{q}+\omega ^2q=0\) may be characterized by an eigenvalue analysis of \(\mathbf {T}_{h\omega }\in \mathbb {R}^{3\times 3}\). Chung and Hulbert (1993) propose to choose a user-defined parameter \(\rho _\infty \in [0,1]\) to characterize the numerical damping properties in the limit case \(h\omega \rightarrow \infty \). They show that the algorithmic parameters \(\alpha _m\), \(\alpha _f\), \(\beta \) and \(\gamma \) may be defined such that the order condition (41) is satisfied and the spectral radius \(\varrho (\mathbf {T}_{h\omega })\) is monotonically decreasing for \(h\omega \in (0,+\infty )\) with \(\lim _{h\omega \rightarrow 0}\varrho (\mathbf {T}_{h\omega })=1\) and \(\varrho (\mathbf {T}_\infty )=\rho _\infty \,\):

For these parameters, all three eigenvalues of \(\mathbf {T}_{h\omega }=\mathbf {T}_{h\omega }(\rho _\infty )\) coincide in the limit case \(h\omega \rightarrow \infty \) and the Jordan canonical form of \(\mathbf {T}_\infty (\rho _\infty )\in \mathbb {R}^{3\times 3}\) consists of a single \(3\times 3\) Jordan block for the eigenvalue \(\mu :=-\rho _\infty \), i.e., \(\mathbf {T}_\infty (\rho _\infty )=\mathbf {X}(\mu )\mathbf {J}(\mu )\mathbf {X}^{-1}(\mu )\) with

With algorithmic parameters \(\alpha _m\), \(\alpha _f\), \(\beta \) and \(\gamma \) according to (42) and a damping parameter \(\rho _\infty <1\), the linear stability of the generalized-\(\alpha \) method (38) is always guaranteed. For the test equation \(\ddot{q}+\omega ^2q=0\), the numerical solution \((q_n,v_n,a_n)^\top \) will finally be damped out for any starting values \(q_0\), \(v_0\), \(a_0\) since \(\mathbf {z}_n=\mathbf {T}_{h\omega }^n(\rho _\infty )\mathbf {z}_0\) and \(\lim _{n\rightarrow \infty }\mathbf {T}_{h\omega }^n(\rho _\infty )=\mathbf {0}\) because \(\varrho (\mathbf {T}_{h\omega }(\rho _\infty ))<1\), (\(\,h\omega \in (0,\infty )\,\)).
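The eigenvalue analysis can be reproduced numerically. The sketch below builds \(\mathbf{T}_{h\omega}=(\mathbf{T}_{h\omega}^+)^{-1}\mathbf{T}_{h\omega}^0\) from the update formulas for \(\ddot q+\omega^2q=0\), with the parameter formulas of Chung and Hulbert (which we assume to be the ones in (42)) and our own choice of state vector \((q_n,hv_n,h^2a_n)\); this scaling of the state does not affect the eigenvalues. For \(\rho_\infty=0.9\), the spectral radius should stay below 1 and decrease from values close to 1 for small \(h\omega\).

```python
import numpy as np


def chung_hulbert(rho_inf):
    alpha_m = (2.0 * rho_inf - 1.0) / (rho_inf + 1.0)
    alpha_f = rho_inf / (rho_inf + 1.0)
    gamma = 0.5 + alpha_f - alpha_m
    beta = 0.25 * (gamma + 0.5) ** 2
    return alpha_m, alpha_f, beta, gamma


def amplification_matrix(h_omega, rho_inf):
    """T = (T^+)^{-1} T^0 for q'' + omega^2 q = 0 in the (assumed) variables (q_n, h v_n, h^2 a_n)."""
    am, af, beta, gamma = chung_hulbert(rho_inf)
    w2 = h_omega**2
    T_plus = np.array([[1.0, 0.0, -beta],
                       [0.0, 1.0, -gamma],
                       [(1 - af) * w2, 0.0, 1 - am]])
    T_zero = np.array([[1.0, 1.0, 0.5 - beta],
                       [0.0, 1.0, 1 - gamma],
                       [-af * w2, 0.0, -am]])
    return np.linalg.solve(T_plus, T_zero)


def spectral_radius(M):
    return float(np.max(np.abs(np.linalg.eigvals(M))))


rho_inf = 0.9
h_omegas = [0.1, 1.0, 10.0, 100.0]
radii = [spectral_radius(amplification_matrix(x, rho_inf)) for x in h_omegas]
print(dict(zip(h_omegas, radii)))
```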

In a transient phase, however, \(\Vert \mathbf {z}_n\Vert \) may be much larger than \(\Vert \mathbf {z}_0\Vert \) since \(\Vert \mathbf {T}^n\Vert \) may be much larger than \((\varrho (\mathbf {T}))^n\) for non-normal matrices, in particular for matrices that are not diagonalisable such as \(\mathbf {T}_\infty (\rho _\infty )\). Typical values are \(\max _n\Vert \mathbf {T}^n\Vert _2=\Vert \mathbf {T}^3\Vert _2=7.4\) for \(\mathbf {T}=\mathbf {T}_\infty (\rho _\infty )\) with \(\rho _\infty =0.6\) and \(\max _n\Vert \mathbf {T}^n\Vert _2=\Vert \mathbf {T}^{14}\Vert _2=34.3\) for \(\mathbf {T}=\mathbf {T}_\infty (\rho _\infty )\) with \(\rho _\infty =0.9\). In structural dynamics, this phenomenon is called overshooting since \(|q_n|\) may grow rapidly in a transient phase before the numerical dissipation finally results in \(\lim _{n\rightarrow \infty }q_n=0\). Overshooting is a well-known problem of unconditionally stable Newmark-type methods with second-order accuracy (Hilber and Hughes 1978) and may be a motivation to prefer first-order accurate Newmark integrators in industrial multibody system simulation (Sanborn et al. 2014).
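The transient growth of \(\Vert\mathbf{T}_\infty^n\Vert_2\) can be observed directly. In the sketch below (NumPy assumed), \(\mathbf{T}_\infty\) is obtained from the generalized-\(\alpha\) update formulas for the constrained limit problem \(\dot q=v\), \(\dot v=-\lambda\), \(0=q\) in our own variables \((\lambda_n,v_n/h,a_n)\), so the computed norms are not expected to match the values quoted above, which refer to the paper's scaling. The code checks that \(-\rho_\infty\) is a triple eigenvalue with a single Jordan block (\((\mathbf{T}_\infty+\rho_\infty\mathbf{I})^3=\mathbf{0}\) but \((\mathbf{T}_\infty+\rho_\infty\mathbf{I})^2\ne\mathbf{0}\)) and that the powers first grow before they decay.

```python
import numpy as np


def chung_hulbert(rho_inf):
    """Generalized-alpha parameters of Chung and Hulbert (1993)."""
    alpha_m = (2.0 * rho_inf - 1.0) / (rho_inf + 1.0)
    alpha_f = rho_inf / (rho_inf + 1.0)
    gamma = 0.5 + alpha_f - alpha_m
    beta = 0.25 * (gamma + 0.5) ** 2
    return alpha_m, alpha_f, beta, gamma


def T_infinity(rho_inf):
    """Linear map (lambda_n, v_n/h, a_n) -> (lambda_{n+1}, v_{n+1}/h, a_{n+1}) for
    q' = v, v' = -lambda, 0 = q (so q_n = 0 for all n); independent of h."""
    am, af, beta, gamma = chung_hulbert(rho_inf)

    def one_step(y):
        lam, s, a = y
        a_new = -(s + (0.5 - beta) * a) / beta                          # q-update with q_{n+1} = q_n = 0
        s_new = s + (1 - gamma) * a + gamma * a_new                     # v-update divided by h
        lam_new = -((1 - am) * a_new + am * a + af * lam) / (1 - af)    # a-update with vdot = -lambda
        return [lam_new, s_new, a_new]

    return np.column_stack([one_step(e) for e in np.eye(3)])


rho_inf = 0.9
T = T_infinity(rho_inf)
N = T + rho_inf * np.eye(3)                                             # nilpotent part
norms = [np.linalg.norm(np.linalg.matrix_power(T, n), 2) for n in range(401)]
n_peak = int(np.argmax(norms))
print("max_n ||T^n||_2 =", norms[n_peak], "at n =", n_peak)
```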

In the quantitative error analysis, we denote the global errors of the generalized-\(\alpha \) method in linear spaces by \(\mathbf {e}_n^{(\bullet )}\) with \((\bullet )(t_n)=(\bullet )_n+\mathbf {e}_n^{(\bullet )}\). For the auxiliary vectors \(\mathbf {a}_n\) that do not have a corresponding component of the analytical solution, we take into account the offset parameter \(\Delta _\alpha \) from (41) and define the global error \(\mathbf {e}_n^{\mathbf {a}}\) by \({\dot{\mathbf{v}}}(t_n+\Delta _\alpha h)=\mathbf {a}_n+\mathbf {e}_n^{\mathbf {a}}\). For the scalar test equation \(\ddot{q}+\omega ^2q=0\), these global errors as well as the local errors \(l_n^q\), \(l_n^v\), \(l_n^a\) are scalar quantities and \(\mathbf {T}_{h\omega }^+\mathbf {z}_{n+1}=\mathbf {T}_{h\omega }^0\mathbf {z}_n\) implies

see (39). As before, the first and second row are scaled by \(1/h^2\) and 1/h, respectively. The resulting first-order error term \(l_n^q/h^2=C_qh\ddot{v}(t_n)+\mathcal {O}(h^2)\) may strongly affect the result accuracy.

This order reduction phenomenon is known from the convergence analysis for the application of Newmark-type methods to constrained mechanical systems in linear configuration spaces, see (Cardona and Géradin 1994). In the limit case \(\omega \rightarrow \infty \), the transient solution behaviour is dominated by an oscillating first-order error term that is finally damped out by numerical dissipation. To study this qualitative solution behaviour in full detail, we introduce a new variable \(\lambda :=\omega ^2q\) and rewrite the test equation as a singular singularly perturbed problem with perturbation parameter \(\varepsilon :=1/\omega \), see (Lubich 1993):

The corresponding reduced system (\(\varepsilon =0\), i.e., \(\omega \rightarrow \infty \)) is a constrained system (15) with \(G=\mathbb {R}\) and \(k=m=1\):

With the notation \(\lambda _n:=\omega ^2q_n\), the generalized-\(\alpha \) method (38) for the singularly perturbed system (44) converges for \(\omega \rightarrow \infty \) to the generalized-\(\alpha \) method (37) for the constrained system (45) and we get in (43) both for finite frequencies \(\omega \) and in the limit case \(\omega \rightarrow \infty \):

with

and

The error recursion in terms of \(e_n^\lambda \), \(r_n\) and \(e_n^a\) provides the basis for a detailed convergence analysis:

Theorem 3.1

Consider the time discretization of the linear test equations (44) and (45) by a generalized-\(\alpha \) method with parameters \(\alpha _m\), \(\alpha _f\), \(\beta \) and \(\gamma \) according to (42) for some numerical damping parameter \(\rho _\infty \in [0,1)\).

(a) The discretization errors are bounded by
$$\begin{aligned} \Vert \mathbf {l}_n^{\mathbf {r}}\Vert =\mathcal {O}(h^2)\,,\;\; \Vert \mathbf {e}_{n+1}^{\mathbf {r}}-\mathbf {T}_{h\omega }\mathbf {e}_n^{\mathbf {r}}\Vert =\mathcal {O}(h^2)\,, \end{aligned} \tag{49}$$
$$\begin{aligned} \Vert \mathbf {e}_n^{\mathbf {r}}-\mathbf {T}_{h\omega }^n\mathbf {e}_0^{\mathbf {r}}\Vert =\mathcal {O}(h^2) \end{aligned} \tag{50}$$
and
$$\begin{aligned} \Vert \mathbf {e}_n^{\mathbf {r}}\Vert \le \Vert \mathbf {T}_{h\omega }^n\Vert \,\Vert \mathbf {e}_0^{\mathbf {r}}\Vert + \mathcal {O}(h^2)\,. \end{aligned} \tag{51}$$

(b) For starting values \(\lambda _0=\lambda (t_0)+\mathcal {O}(h^2)\), \(a_0=\dot{v}(t_0+\Delta _\alpha h)+\mathcal {O}(h^2)\), we have \(\Vert \mathbf {e}_0^{\mathbf {r}}\Vert =\mathcal {O}(h)\) if \(v_0=v(t_0)+\mathcal {O}(h^2)\). This error estimate may be improved by one power of h by perturbing the starting value \(v_0\) such that
$$\begin{aligned} v_0=v(t_0)+C_qh^2\ddot{v}(t_0)+\mathcal {O}(h^3)\,. \end{aligned} \tag{52}$$

In that case, we get \(\Vert \mathbf {e}_n^{\mathbf {r}}\Vert =\mathcal {O}(h^2)\), (\(\,n\ge 0\,\)).

Proof

(a) Because of

the local error term \(\mathbf {l}_n^{\mathbf {r}}\) is of size \(\mathcal {O}(h^2)\), see (40), and (49) is a direct consequence of the error recursion (46). The assumptions on parameters \(\alpha _m\), \(\alpha _f\), \(\beta \) and \(\gamma \) imply \(\varrho (\mathbf {T}_{h\omega })<1\) and the existence of a norm \(\Vert \mathbf {T}\Vert _\rho \) with \(\kappa :=\Vert \mathbf {T}_{h\omega }\Vert _\rho <1\), see, e.g., (Quarteroni et al. 2000, Sect. 1.11.1). Therefore,

with an appropriate constant \(C>0\), see (49). Recursive application of this error estimate results in

and (50) follows from the equivalence of all norms in the finite dimensional space \(\mathbb {R}^3\). Error bound (51) is a straightforward consequence of the triangle inequality.

(b) We get \(\Vert \mathbf {e}_0^{\mathbf {r}}\Vert =|r_0|+\mathcal {O}(h^2)\) and the estimates for \(\Vert \mathbf {e}_0^{\mathbf {r}}\Vert \) and for \(\Vert \mathbf {e}_n^{\mathbf {r}}\Vert \), (\(\,n>0\,\)), follow from the definition of \(r_n\), see (48), and from part (a) of the theorem.\(\quad \square \)

The most natural choice of starting values \(\lambda _0:=\lambda (t_0)\), \(v_0:=v(t_0)\), \(a_0:=\dot{v}(t_0+\Delta _\alpha h)\) yields \(\mathbf {e}_0^{\mathbf {r}}=(\,0,\,r_0,\,0\,)^\top \) with \(r_0=C_qh\ddot{v}(t_0)+\mathcal {O}(h^2)\), see (48). In error estimate (50), we obtain for \(\ddot{v}(t_0)\ne 0\) a first-order error term that is amplified by matrix-valued factors \(\mathbf {T}_{h\omega }^n\), which are well known from the analysis of the “overshoot” phenomenon by Hilber and Hughes (1978). In the limit case \(h\omega \rightarrow \infty \), this term may be studied in more detail using the Jordan canonical form of \(\mathbf {T}_\infty \), see (Cardona and Géradin 1989, 1994). We get

with the Jordan block \(\mathbf {J}(-\rho _\infty )\in \mathbb {R}^{3\times 3}\). It may be verified by induction that the non-zero elements of \(\mathbf {J}^n(-\rho _\infty )\) are given by \((-\rho _\infty )^n\), \(n(-\rho _\infty )^{n-1}\) and \(n(n-1)(-\rho _\infty )^{n-2}/2\). Straightforward computations show that the global error \(e_n^\lambda \) (that coincides up to a term of size \(\mathcal {O}(h^2)\) with the first component of \(\mathbf {T}_\infty ^n\mathbf {e}_0^{\mathbf {r}}\,\)) satisfies \(e_n^\lambda =c_nh\ddot{v}(t_0)+\mathcal {O}(h^2)\) with

After a transient phase, the first-order error term \(c_nh\ddot{v}(t_0)\) is damped out since \(\lim _{n\rightarrow \infty }c_n=0\) for any \(\rho _\infty \in [0,1)\). In the transient phase, however, the error constants \(c_n\) may become very large with maximum absolute values of size \(|c_3|=6.8\) for \(\rho _\infty =0.6\), \(|c_{15}|=31.9\) for \(\rho _\infty =0.9\) and \(|c_{161}|=334.3\) for \(\rho _\infty =0.99\).
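The closed form of \(\mathbf{J}^n(\mu)\) stated above is easily confirmed, and the growth-then-decay of the corner entry \(n(n-1)\mu^{n-2}/2\) illustrates why the error constants \(c_n\) can become large in the transient phase. This small NumPy sketch is our own and does not evaluate \(c_n\) itself.

```python
import numpy as np


def jordan_block(mu, size=3):
    """size x size Jordan block with eigenvalue mu."""
    return mu * np.eye(size) + np.eye(size, k=1)


def jordan_power_closed_form(mu, n):
    """Non-zero entries of J(mu)^n: mu^n, n mu^(n-1) and n(n-1) mu^(n-2)/2 (n >= 2)."""
    P = np.zeros((3, 3))
    P[[0, 1, 2], [0, 1, 2]] = mu**n
    P[[0, 1], [1, 2]] = n * mu ** (n - 1)
    P[0, 2] = n * (n - 1) * mu ** (n - 2) / 2
    return P


mu = -0.9                                  # mu = -rho_inf for rho_inf = 0.9
mismatch = max(np.max(np.abs(np.linalg.matrix_power(jordan_block(mu), n) - jordan_power_closed_form(mu, n)))
               for n in range(2, 60))
corner = [n * (n - 1) * abs(mu) ** (n - 2) / 2 for n in range(2, 200)]
print("mismatch:", mismatch, " largest corner entry:", max(corner))
```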

For the test equation (45) itself, this error analysis is of little practical relevance since \(q(t)\equiv 0\) implies \(\ddot{v}(t)\equiv 0\) and \(\mathbf {e}_0^{\mathbf {r}}=\mathbf {0}\) for exact starting values \(\lambda _0=\lambda (t_0)=0\), \(v_0=v(t_0)=0\), \(a_0=\dot{v}(t_0+\Delta _\alpha h)=0\). Replacing the trivial constraint \(q=0\) by a rheonomic constraint \(q(t)=t^3/6\), however, we may construct a slightly more complex test problem with non-vanishing first-order error term \(r_0=C_qh\) since \(l_n^q=C_qh^3\ddot{v}(t_n)=C_qh^3\) and the local truncation errors \(l_n^v\), \(l_n^a\) vanish identically. For this test problem, the global error in \(\lambda \) really suffers from order reduction since \(e_n^\lambda =c_nh\).
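The order reduction for the rheonomic test problem, and its removal by a perturbed starting value \(v_0\) as in Theorem 3.1(b), can be reproduced in plain Python. This sketch is based on our own derivation, not on code from the paper: with \(\mathbf{M}=1\), \(\mathbf{g}=0\) and \(\mathbf{B}=1\) the system reads \(\dot q=v\), \(\dot v=-\lambda\), \(0=q-t^3/6\) with exact solution \(\lambda(t)=-t\); we assume \(\Delta_\alpha=\alpha_m-\alpha_f\) and the parameter formulas of Chung and Hulbert, and in place of \(C_q\) we use the constant \(\tfrac16-\beta-\tfrac12\Delta_\alpha\) from our own Taylor expansion of the \(q\)-update. With exact starting values, the maximum error in \(\lambda\) should halve together with \(h\); with the perturbed \(v_0\), it should drop to round-off level, because all higher-order truncation terms vanish for this polynomial solution.

```python
def chung_hulbert(rho_inf):
    alpha_m = (2.0 * rho_inf - 1.0) / (rho_inf + 1.0)
    alpha_f = rho_inf / (rho_inf + 1.0)
    gamma = 0.5 + alpha_f - alpha_m
    beta = 0.25 * (gamma + 0.5) ** 2
    return alpha_m, alpha_f, beta, gamma


def max_lambda_error(h, rho_inf=0.9, t_end=1.0, perturb_v0=False):
    """Generalized-alpha for q' = v, v' = -lambda, 0 = q - t^3/6 (exact lambda(t) = -t)."""
    am, af, beta, gamma = chung_hulbert(rho_inf)
    delta = am - af                              # assumed offset Delta_alpha
    q, v, vdot = 0.0, 0.0, 0.0                   # exact values at t_0 = 0 (lambda_0 = 0)
    a = delta * h                                # a_0 = vdot(t_0 + Delta_alpha h), since vdot(t) = t
    if perturb_v0:
        c_q = 1.0 / 6.0 - beta - delta / 2.0     # our constant in l^q = c_q h^3 vddot
        v += c_q * h**2                          # vddot(t_0) = 1
    max_err = 0.0
    for n in range(round(t_end / h)):
        t_new = (n + 1) * h
        q_new = t_new**3 / 6.0                   # index-3 constraint fixes q_{n+1}
        a_new = (q_new - q - h * v - h**2 * (0.5 - beta) * a) / (h**2 * beta)
        v = v + h * (1 - gamma) * a + h * gamma * a_new
        vdot = ((1 - am) * a_new + am * a - af * vdot) / (1 - af)
        lam = -vdot                              # from vdot = -lambda
        max_err = max(max_err, abs(lam + t_new))
        q, a = q_new, a_new
    return max_err


err_h, err_h2 = max_lambda_error(1e-2), max_lambda_error(5e-3)
print("exact v_0:    ", err_h, err_h2, err_h / err_h2)
print("perturbed v_0:", max_lambda_error(1e-2, perturb_v0=True))
```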

The convergence analysis for generalized-\(\alpha \) methods shows that this order reduction phenomenon is typical for the initialization of method (37) with exact starting values \(\varvec{\lambda }_0=\varvec{\lambda }(t_0)\), \(\mathbf {v}_0=\mathbf {v}(t_0)\) and \(\mathbf {a}_0={\dot{\mathbf{v}}}(t_0+\Delta _\alpha h)\), see (Arnold et al. 2015) and Sect. 4 below. For linear configuration spaces \((G=\mathbb {R}^k)\), the global error in \(\varvec{\lambda }\) is bounded by

with the error constants \(c_n\) being defined in (53). The undesired first-order error term is nicely illustrated by numerical test results for the mathematical pendulum, see (Arnold et al. 2015, Sect. 2.3):

Example 3.2

Consider a mathematical pendulum of mass m and length l in Cartesian coordinates \(\mathbf {q}=(x,y)^\top \) with constraint \((x^2+y^2-l^2)/2=0\), see (15c). In (15), we have \(\mathbf {M}=m\mathbf {I}_2\), \(\mathbf {g}=(\,0\,,\,g\,)^\top \) with \(m=l=1\), \(g=9.81\) (here and in the following, all physical units are omitted). We fix the total energy \(E=m(\dot{x}_0^2+\dot{y}_0^2)/2+mgy_0\) to \(E=m/2-mgl\) and determine the consistent initial values \(x_0\), \(y_0\), \(\dot{x}_0\), \(\dot{y}_0\) and \(\lambda _0\) by the initial deviation \(x_0\) from the equilibrium position.

Method (37) is applied with algorithmic parameters according to (42) and damping parameter \(\rho _\infty =0.9\). The starting values are set to \(\mathbf {q}_0:=(x_0,y_0)^\top \), \(\mathbf {v}_0:=(\dot{x}_0,\dot{y}_0)^\top \) and \({\dot{\mathbf{v}}}_0:=(\ddot{x}_0,\ddot{y}_0)^\top \) with accelerations \(\ddot{x}_0\), \(\ddot{y}_0\) that are obtained from evaluating the equations of motion for the consistent initial values \(x_0\), \(y_0\), \(\dot{x}_0\), \(\dot{y}_0\), \(\lambda _0\). The acceleration-like variables \(\mathbf {a}_n\) are initialized with \(\mathbf {a}_0={\dot{\mathbf{v}}}(t_0)+\Delta _\alpha h{\ddot{\mathbf{v}}}(t_0)+\mathcal {O}(h^2)= {\dot{\mathbf{v}}}(t_0+\Delta _\alpha h)+\mathcal {O}(h^2)\) using the starting value \({\dot{\mathbf{v}}}_0={\dot{\mathbf{v}}}(t_0)\) and a difference approximation of \({\ddot{\mathbf{v}}}(t_0)\).

Figure 5 shows on a short time interval the global error in \(\lambda \) for initial values \(x_0=0\) (marked by dots) and \(x_0=0.2\) (marked by “\(+\)”) for two different step sizes h. If we start in the equilibrium position, the error is very small but for \(x_0=0.2\), the oscillating error in \(\lambda \) reaches a maximum amplitude of \(2.48\times 10^{-1}\) for \(h=2.0\times 10^{-2}\) and \(1.23\times 10^{-1}\) for \(h=1.0\times 10^{-2}\). After about 100 time steps these transient errors are damped out.

The numerical results in Fig. 5 show that in the transient phase the generalized-\(\alpha \) method (37) may suffer from spurious oscillations of amplitude \(\mathcal {O}(h)\). According to (54), this first-order error term is given by \(c_nh\,\mathbf {B}\bigl (\mathbf {q}(t_0)\bigr ){\ddot{\mathbf{v}}}(t_0)\) with \(\mathbf {B}\bigl (\mathbf {q}(t_0)\bigr ){\ddot{\mathbf{v}}}(t_0)=-3gx_0\dot{x}_0/y_0\). Therefore, the spurious oscillations and the order reduction disappear if we start at the equilibrium position \(x_0=0\). If the damping parameter \(\rho _\infty \) in (42) is reduced, the oscillations are damped out more rapidly, but they may still be observed.
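The consistent initial values of Example 3.2 and the factor \(\mathbf{B}\bigl(\mathbf{q}(t_0)\bigr){\ddot{\mathbf{v}}}(t_0)\) can be computed in closed form. In this plain-Python sketch we assume that the pendulum starts below the pivot (\(y_0<0\)) and moves with \(\dot x_0\ge 0\), and we use \(\mathbf{B}=(x,\,y)\) together with \(\mathbf{B}{\ddot{\mathbf{v}}}=-3(\dot x\ddot x+\dot y\ddot y)\), which follows from differentiating the acceleration-level constraint \(x\ddot x+y\ddot y+\dot x^2+\dot y^2=0\) once more. The result agrees with \(-3gx_0\dot x_0/y_0\) and vanishes for \(x_0=0\).

```python
import math


def pendulum_initial_values(x0, g=9.81):
    """Consistent initial values for m = l = 1 and total energy E = m/2 - m g l (Example 3.2)."""
    y0 = -math.sqrt(1.0 - x0**2)                 # assumption: start below the pivot
    energy = 0.5 - g
    speed = math.sqrt(2.0 * (energy - g * y0))   # kinetic energy = E - g y0
    xd0, yd0 = -speed * y0, speed * x0           # tangent to the circle, xd0 >= 0 (assumption)
    lam0 = xd0**2 + yd0**2 - g * y0              # from x xdd + y ydd + xd^2 + yd^2 = 0
    xdd0, ydd0 = -lam0 * x0, -g - lam0 * y0      # M vdot = -g - B^T lambda with B = (x, y)
    return (x0, y0), (xd0, yd0), (xdd0, ydd0), lam0


def B_vddot(x0, g=9.81):
    """B(q(t_0)) vddot(t_0) = -3 (xd xdd + yd ydd), from differentiating the hidden constraint."""
    _, (xd0, yd0), (xdd0, ydd0), _ = pendulum_initial_values(x0, g)
    return -3.0 * (xd0 * xdd0 + yd0 * ydd0)


for x_start in (0.0, 0.2):
    (x, y), (xd, yd), _, _ = pendulum_initial_values(x_start)
    print(x_start, B_vddot(x_start), -3.0 * 9.81 * x * xd / y)
```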

3.3 Numerical Tests for the Heavy Top Benchmark Problem

In the present section, we study the convergence behaviour of the generalized-\(\alpha \) Lie group integrator (37) numerically. We use algorithmic parameters according to (42) with the numerical damping parameter \(\rho _\infty =0.9\). We apply (37) to the equations of motion (21), (22) of the heavy top benchmark problem in the configuration spaces \(G=\mathrm {SO}(3)\times \mathbb {R}^3\) and \(G=\mathrm {SE}(3)\), respectively. Initial values \(q(t_0)\), \(\mathbf {v}(t_0)\) are given in Sect. 2.4. In the numerical tests, the integrator was initialized with the starting values \(q_0:=q(t_0)\), \(\mathbf {v}_0:=\mathbf {v}(t_0)\), \({\dot{\mathbf{v}}}_0:={\dot{\mathbf{v}}}(t_0)\) and \(\mathbf {a}_0:={\dot{\mathbf{v}}}(t_0)\). Here, \({\dot{\mathbf{v}}}(t_0)\) denotes the consistent acceleration vector defined in (19).
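Computing consistent accelerations \({\dot{\mathbf{v}}}(t_0)\) typically means solving a linear saddle-point system. Since (19) is not reproduced in this section, the sketch below assumes it has the generic form

\[\begin{pmatrix}\mathbf M & \mathbf B^\top \\ \mathbf B & \mathbf 0\end{pmatrix}\begin{pmatrix}{\dot{\mathbf v}}\\ \boldsymbol\lambda \end{pmatrix}=\begin{pmatrix}\mathbf f\\ \mathbf c\end{pmatrix},\]

where \(\mathbf f\) collects the applied and inertial forces and \(\mathbf c\) is the velocity-dependent right-hand side of the constraints at the level of accelerations. The function name and the toy matrices are hypothetical and are not heavy top data.

```python
import numpy as np


def consistent_acceleration(M, B, f, c):
    """Solve the ASSUMED saddle-point system
        [ M  B^T ] [vdot  ]   [ f ]
        [ B  0   ] [lambda] = [ c ]
    for consistent accelerations vdot and Lagrange multipliers lambda."""
    k, m = M.shape[0], B.shape[0]
    K = np.block([[M, B.T], [B, np.zeros((m, m))]])
    sol = np.linalg.solve(K, np.concatenate([f, c]))
    return sol[:k], sol[k:]


# Toy data (hypothetical): k = 2 velocity coordinates, m = 1 constraint.
M = np.diag([2.0, 1.0])
B = np.array([[1.0, 1.0]])
vdot0, lam0 = consistent_acceleration(M, B, np.array([1.0, 0.0]), np.array([0.0]))
print(vdot0, lam0)  # expected: vdot0 = [1/3, -1/3], lam0 = [1/3]
```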

Fig. 6. Heavy top benchmark (index-3 formulation): Global error of integrator (37) versus h for \(t\in [0,1]\). Left plot: \(\mathrm {SO}(3)\times \mathbb {R}^3\); right plot: \(\mathrm {SE}(3)\).

In Fig. 6, the asymptotic behaviour of the global errors in \(q_n\), \(\mathbf {v}_n\) and \(\varvec{\lambda }_n\) for \(h\rightarrow 0\) is visualized in terms of the maximum relative error \(\max _n\Vert \mathbf {e}_n^{(\bullet )}\Vert /\Vert (\bullet )_n\Vert \) on the time interval \([t_0,t_{\mathrm {end}}]=[0,1]\). Here, the numerical solutions for \(h=1.25\times 10^{-4}\), \(h=2.5\times 10^{-4}\), \(h=5.0\times 10^{-4},\ldots , h=4.0\times 10^{-3}\) are compared to a reference solution that was computed with the much smaller step size \(h=2.5\times 10^{-5}\). In double logarithmic scale, the global errors in \(q_n\) and \(\mathbf {v}_n\) lie on straight lines of slope \(+2\) for both configuration spaces. These numerical test results indicate second-order convergence for the components q and \(\mathbf {v}\).
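The error measure and the slope estimate can be sketched as follows. The slope comes from a least-squares fit of log error versus \(\log h\). The helper names are hypothetical. The data in the usage lines are synthetic (\(3.0\,h^2\) on an illustrative sequence of halved step sizes), not the measured errors of Fig. 6.

```python
import numpy as np


def max_relative_error(x_num, x_ref):
    """max_n ||x_num[n] - x_ref[n]|| / ||x_num[n]||, where row n holds the
    solution at t_n. x_ref must be sampled at the same t_n, e.g. every
    (h / h_ref)-th step of a fine-step reference run."""
    err = np.linalg.norm(x_num - x_ref, axis=1)
    return float(np.max(err / np.linalg.norm(x_num, axis=1)))


def loglog_slope(hs, errs):
    """Least-squares slope of log(err) versus log(h), i.e. the observed order."""
    slope, _intercept = np.polyfit(np.log(hs), np.log(errs), 1)
    return float(slope)


# Synthetic (not measured) errors err = 3.0 * h**2.
hs = 1.0e-2 / 2.0 ** np.arange(5)
errs = 3.0 * hs**2
print(f"observed order: {loglog_slope(hs, errs):.3f}")  # prints "observed order: 2.000"

x_ref = np.ones((3, 2))
print(max_relative_error(1.001 * x_ref, x_ref))
```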

The error constants depend on model parameters, initial values and configuration space. With the test setup of Sect. 2.4, the velocity components \(\mathbf {v}(t)\) vary much more rapidly for \(G=\mathrm {SE}(3)\) than for \(G=\mathrm {SO}(3)\times \mathbb {R}^3\), see Fig. 4. This might explain the substantially larger error constants for \(q_n\) and \(\mathbf {v}_n\) in the right plot of Fig. 6. For other setups, much smaller error constants have been observed for the configuration space \(\mathrm {SE}(3)\), see, e.g., the numerical test results of Brüls et al. (2011) for a slowly rotating top with an initial angular velocity \(\varvec{\Omega }(0)\) that has been reduced by a factor of 100.

Note that Fig. 6 shows the norm of relative errors. The rather large nominal values of \(\mathbf {v}(t)\), with \(\Vert \varvec{\Omega }(0)\Vert \approx 150.0\), systematically result in relative errors whose norm is substantially smaller than the norm of the relative errors in the position coordinates q(t).

For the Lagrange multipliers \(\varvec{\lambda }(t)\), we observe order reduction. The slope \(+1\) of the curve for the global errors in \(\varvec{\lambda }_n\) in the left plot of Fig. 6 indicates first-order convergence. The test results for \(G=\mathrm {SE}(3)\) in the right plot of Fig. 6 are qualitatively different, since they indicate second-order convergence for all solution components. A formal proof of this numerically observed convergence behaviour will be given in Theorem 4.18 and Example 4.19 below.

Guided by the test results for the mathematical pendulum in Example 3.2, we expect two things. First, the order reduction phenomenon affects the numerical solution only in a transient phase. Second, the first-order error terms in \(\varvec{\lambda }_n\) are eventually damped out by numerical dissipation. This is nicely illustrated by Fig. 7, which shows the numerical solution \(\lambda _{n,1}\) for \(t\in [0,0.1]\) and two different time step sizes. In the configuration space \(G=\mathrm {SO}(3)\times \mathbb {R}^3\) (solid lines), we observe spurious oscillations. They are damped out after about 50 time steps, and their maximum amplitude depends linearly on h. Beyond this transient phase, the results coincide up to plot accuracy with the dashed lines. These show simulation results for the configuration space \(G=\mathrm {SE}(3)\), which do not suffer from order reduction.

Fig. 8. Heavy top benchmark (index-3 formulation): Global error of integrator (37) versus h for \(t\in [0.5,1]\). Left plot: \(\mathrm {SO}(3)\times \mathbb {R}^3\); right plot: \(\mathrm {SE}(3)\).

Neglecting the transient behaviour, we observe second-order convergence in all solution components for both Lie group formulations. Fig. 8 shows this: it plots the maximum of the norm of global errors on the time interval [0.5, 1], i.e., beyond the transient phase.

Fig. 9. Heavy top benchmark (\(h=1.0\times 10^{-3}\), index-3 formulation): Residuals in constraints (15c). Left plot: \(\mathrm {SO}(3)\times \mathbb {R}^3\); right plot: \(\mathrm {SE}(3)\).

By construction, the Lie group integrator (37) defines a numerical solution \(q_n\) that satisfies the holonomic constraints \(\varvec{\Phi }(q)=\mathbf {0}\). In a practical implementation, the residuals remain of the order of the stopping bounds of the Newton method. This method solves the system of nonlinear equations (37) in each time step. For the numerical tests, we applied a combined absolute and relative error criterion with tolerances \(\mathrm {ATOL}=10^{-10}\) for the absolute errors and \(\mathrm {RTOL}=10^{-8}\) for the relative errors. We observed constraint residuals of size \(\Vert \varvec{\Phi }(q_n)\Vert \ll 10^{-10}\), see Fig. 9.
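A combined absolute and relative stopping test of this kind can be sketched as follows. The section does not state the exact form of the criterion. The sketch assumes the common componentwise test \(|\Delta x_i|\le \mathrm {ATOL}+\mathrm {RTOL}\,|x_i|\) on the Newton increment, with the tolerances quoted above as defaults. The two-dimensional test system and all names are hypothetical stand-ins for the nonlinear equations (37).

```python
import numpy as np


def newton(F, J, x0, atol=1e-10, rtol=1e-8, max_iter=20):
    """Newton's method; stops once every component of the increment dx
    satisfies |dx_i| <= atol + rtol * |x_i| (ASSUMED form of the test)."""
    x = np.array(x0, dtype=float)
    for _ in range(max_iter):
        dx = np.linalg.solve(J(x), -F(x))
        x = x + dx
        if np.all(np.abs(dx) <= atol + rtol * np.abs(x)):
            return x
    raise RuntimeError("Newton iteration did not converge")


def F(x):
    """Hypothetical test system: unit circle intersected with x_1 = x_2."""
    return np.array([x[0] ** 2 + x[1] ** 2 - 1.0, x[0] - x[1]])


def J(x):
    """Jacobian of F."""
    return np.array([[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]])


x = newton(F, J, [1.0, 0.5])
print(x, np.max(np.abs(F(x))))  # residual well below ATOL
```

Because Newton's method converges quadratically, the residual after the final step is typically far below the stopping bounds. This is consistent with the observation \(\Vert \varvec{\Phi }(q_n)\Vert \ll 10^{-10}\).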

Fig. 10. Heavy top benchmark (\(h=1.0\times 10^{-3}\), index-3 formulation): Residuals in hidden constraints (16). Left plot: \(\mathrm {SO}(3)\times \mathbb {R}^3\); right plot: \(\mathrm {SE}(3)\).

The situation is different for the residuals in the hidden constraints (16). Since the analytical solution satisfies \(\mathbf {B}\bigl (q(t)\bigr )\mathbf {v}(t)=\mathbf {0}\), these residuals are in general of the size of the global discretization errors. The left plot of Fig. 10 shows the non-vanishing residuals \(\mathbf {B}(q_n)\mathbf {v}_n\) for \(h=1.0\times 10^{-3}\) and \(G=\mathrm {SO}(3)\times \mathbb {R}^3\). They are of size \(\Vert \mathbf {B}(q_n)\mathbf {v}_n\Vert \le 0.025\) and exhibit the transient spurious oscillations already seen in Fig. 7. For the configuration space \(G=\mathrm {SE}(3)\), the constraint residuals are smaller by about eight orders of magnitude, with \(\max _n\Vert \mathbf {B}(q_n)\mathbf {v}_n\Vert \approx 1.0\times 10^{-10}\). This unexpected solution behaviour is visualized in the right plot of Fig. 10. It is closely related to the fact that the constraint Jacobian \(\mathbf {B}(q)\) in (22) is constant along the analytical solution q(t); see Sect. 3.6 below for a more detailed analysis.

In all numerical tests of the present section, the numerical damping parameter was set to \(\rho _\infty :=0.9\). The qualitative behaviour of the numerical solution in the configuration spaces \(\mathrm {SO}(3)\times \mathbb {R}^3\) and \(\mathrm {SE}(3)\) is, however, not sensitive to this algorithmic parameter. See, e.g., the results for \(\rho _\infty =0.8\) with the test setup of Fig. 7 in (Brüls et al. 2011). See also the results for \(\rho _\infty =0.6\) with the test setup of Fig. 20 below in (Arnold et al. 2015).

3.4 Lie Group Time Integration and Index Reduction

The large amplitude of spurious oscillations in the numerical solution \(\varvec{\lambda }_n\), see Fig. 7, results from order reduction. This occurs in Newmark-type methods that are applied directly to the index-3 formulation of the equations of motion for constrained mechanical systems, see (Cardona and Géradin 1994) and (Arnold et al. 2015). As an alternative to this direct time discretization of the index-3 Lie group DAE (15), we consider in the present section an analytical index reduction before time integration. We follow the approach of Gear et al. (1985). It is well known for equations of motion in linear spaces and was extended to the Lie group setting of the present paper in (Arnold et al. 2011a).

Gear et al. (1985) introduced an auxiliary vector \(\varvec{\eta }(t)\in \mathbb {R}^m\) in the kinematic equations to couple the hidden constraints at the level of velocity coordinates to the equations of motion. In the Lie algebra approach to Lie group time integration, these modified kinematic equations get the form \(\dot{q}(t)=DL_{q(t)}(e)\cdot \widetilde{\varvec{\Delta }\mathbf {q}}(t)\) with \(\widetilde{\varvec{\Delta }\mathbf {q}}\in \mathfrak {g}\) being defined by \(\varvec{\Delta }\mathbf {q}=\mathbf {v}-\mathbf {B}^\top (q)\varvec{\eta }\), see (6). The resulting stabilized index-2 formulation of the equations of motion is given by

For the modified kinematic equations (55a), the time derivative of the holonomic constraints (55d) is given by \(\mathbf {0}=\mathbf {B}(q)\varvec{\Delta }\mathbf {q}\), see (16). Therefore, Eqs. (55b) and (55e) yield \(\mathbf {0}=[\mathbf {B}\mathbf {B}^\top ](q)\varvec{\eta }\) and \(\varvec{\eta }(t)\equiv \mathbf {0}\) since the full rank assumption on the constraint matrix \(\mathbf {B}\in \mathbb {R}^{m\times k}\) implies that \(\mathbf {B}\mathbf {B}^\top \in \mathbb {R}^{m\times m}\) is non-singular. Hence, \(\varvec{\Delta }\mathbf {q}(t)=\mathbf {v}(t)\) and the stabilized index-2 formulation (55) is analytically equivalent to the original equations of motion (15).
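Written out as a chain of equalities, the argument above uses only (55a), (55b), (55d), (55e) and the nonsingularity of \(\mathbf {B}\mathbf {B}^\top \):

```latex
\[
\begin{aligned}
\mathbf{0} &= \frac{\mathrm{d}}{\mathrm{d}t}\,\boldsymbol{\Phi}\bigl(q(t)\bigr)
  = \mathbf{B}(q)\,\boldsymbol{\Delta}\mathbf{q}
  = \mathbf{B}(q)\bigl(\mathbf{v}-\mathbf{B}^\top(q)\,\boldsymbol{\eta}\bigr)
  = \underbrace{\mathbf{B}(q)\,\mathbf{v}}_{=\,\mathbf{0}\ \text{by (55e)}}
    -\,[\mathbf{B}\mathbf{B}^\top](q)\,\boldsymbol{\eta},\\
&\Longrightarrow\quad [\mathbf{B}\mathbf{B}^\top](q)\,\boldsymbol{\eta}=\mathbf{0}
 \quad\Longrightarrow\quad \boldsymbol{\eta}(t)\equiv\mathbf{0}
 \quad\Longrightarrow\quad \boldsymbol{\Delta}\mathbf{q}(t)=\mathbf{v}(t).
\end{aligned}
\]
```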

The index analysis of Gear et al. (1985) is extended straightforwardly from linear spaces to the Lie group setting of the present paper and shows that the analytical transformation from (15) to (55) reduces the DAE index of the equations of motion from three to two.

The generalized-\(\alpha \) method for the index-2 system (55) satisfies both the holonomic constraints (55d) and the hidden constraints (55e) at \(t=t_{n+1}\). An auxiliary vector \(\varvec{\eta }_n\in \mathbb {R}^m\) is added to the definition of the increment vector \(\varvec{\Delta }\mathbf {q}_n\), see (55b):

Fig. 11. Heavy top benchmark (stabilized index-2 formulation): Global error of integrator (56) versus h for \(t\in [0,1]\). Left plot: \(\mathrm {SO}(3)\times \mathbb {R}^3\); right plot: \(\mathrm {SE}(3)\).

Following the test scenario of Sect. 3.3, we study the asymptotic behaviour of integrator (56) for \(h\rightarrow 0\). We run numerical tests for the heavy top benchmark in the configuration spaces \(G=\mathrm {SO}(3)\times \mathbb {R}^3\) and \(G=\mathrm {SE}(3)\), respectively. As before, we scale the norm of the (absolute) global errors by the norm of the nominal values and consider the maximum of these relative errors on the time interval \([t_0,t_{\mathrm {end}}]=[0,1]\). Figure 11 shows these maximum values of the norm of global errors in \(q_n\), \(\mathbf {v}_n\) and \(\varvec{\lambda }_n\) versus time step size h. In double logarithmic scale and in the step size range \(h\ge 2.5\times 10^{-4}\), the curves have slope \(+2\). This indicates second-order error terms in all solution components.

For the configuration space \(\mathrm {SO}(3)\times \mathbb {R}^3\) (left plot) and very small time step sizes \(h<2.5\times 10^{-4}\), the errors in \(\varvec{\lambda }_n\) are dominated by a first-order term. On the other hand, the error constants of the second-order error terms are slightly smaller than the ones in the corresponding plots for the index-3 integrator (37), see Figs. 6 and 8. The results for configuration space \(\mathrm {SE}(3)\) in the right plot of Fig. 11 coincide up to plot accuracy with the ones in Figs. 6 and 8.

The comparison of the time histories of \(\varvec{\lambda }_n\) in Figs. 7 and 12 shows that the spurious oscillations seem to disappear if the hidden constraints are taken into account in the time integration, see (56g). For a more detailed analysis, Fig. 13 shows the relative global error in \(\lambda _{n,1}\) for \(G=\mathrm {SO}(3)\times \mathbb {R}^3\) and two different time step sizes. There is an oscillating first-order error term of maximum amplitude \(0.64\,h\) that is rapidly damped out. For time step sizes \(h\ge 5.0\times 10^{-4}\), this term does not contribute significantly to the overall global error in \(\varvec{\lambda }_n\) on the time interval [0, 1], which is approximately of size \(3.0\times 10^3\,h^2\), see Fig. 11.
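The statement that the first-order term matters only for small step sizes can be checked with a rough estimate. Equating \(0.64\,h\) and \(3.0\times 10^3\,h^2\) gives a crossover step size \(h^\ast =0.64/(3.0\times 10^3)\approx 2.1\times 10^{-4}\). This agrees with the threshold \(h<2.5\times 10^{-4}\) below which the first-order term dominates in the left plot of Fig. 11. The short script below evaluates this back-of-the-envelope comparison. It takes both constants from the text and ignores the fact that the first-order term is transient.

```python
# Rough error model taken from the text: first-order transient term with
# maximum amplitude c1 * h versus second-order global error c2 * h**2.
c1, c2 = 0.64, 3.0e3

# Both terms are of equal size at the crossover step size h* = c1 / c2.
h_star = c1 / c2
print(f"h* = {h_star:.2e}")  # prints "h* = 2.13e-04"

# Ratio of first-order to second-order term for a few step sizes.
for h in (1.0e-3, 5.0e-4, 2.5e-4, 1.25e-4):
    ratio = (c1 * h) / (c2 * h**2)
    print(f"h = {h:.2e}: ratio = {ratio:.2f}")
```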

The test results in the right plot of Fig. 10 indicate that, for the heavy top benchmark in \(G=\mathrm {SE}(3)\), the index-3 integrator (37) yields a numerical solution \(q_n\), \(\mathbf {v}_n\) that satisfies the hidden constraints (56g) up to (very) small residuals. The auxiliary variables \(\varvec{\eta }_n\in \mathbb {R}^m\) represent the differences between integrators (37) and (56). Therefore, they vanish identically in that case, see also Sect. 3.6 below.

For the configuration space \(G=\mathrm {SO}(3)\times \mathbb {R}^3\), the left plot of Fig. 10 showed non-vanishing constraint residuals \(\mathbf {B}(q_n)\mathbf {v}_n\) for the index-3 integrator (37). In integrator (56), these residuals are compensated by the auxiliary variables \(\varvec{\eta }_n=\mathcal {O}(h^2)\) of the stabilized index-2 formulation of the equations of motion. Figure 14 shows \(\varvec{\eta }_n\) versus \(t_n\) for two different time step sizes. The maximum amplitudes of \(\varvec{\eta }_n\) for the step sizes \(h=1.0\times 10^{-3}\) and h/2 differ by a factor of 4. Therefore, we expect second-order convergence for the solution components \(\varvec{\eta }_n\).

Finally, we study the constraint residuals for a practical implementation of integrator (56). As before, the residuals in the holonomic constraints (15c) at the level of position coordinates are very small. For the hidden constraints (16) at the level of velocity coordinates, the residuals for integrator (56) are shown in Fig. 15. For the heavy top benchmark, they are of size \(2.0\times 10^{-9}\) for \(G=\mathrm {SO}(3)\times \mathbb {R}^3\) and of size \(2.0\times 10^{-15}\) for \(G=\mathrm {SE}(3)\).