Abstract

Web-based social relations mirror several known phenomena identified by Social Sciences, such as Homophily. Social circles are inferable from those relations and there are already solutions to find the underlying sentiment of social interactions. We present an empirical study that combines existing Graph Clustering and Sentiment Analysis techniques for reasoning about Sentiment dynamics at cluster level and analyzing the role of social influence on sentiment contagion, based on a large dataset extracted from Twitter during the 2014 FIFA World Cup. Exploiting WebGraph and LAW frameworks to extract clusters, and SentiStrength to analyze sentiment, we propose a strategy for finding moments of Sentiment Homophily in clusters. We found that clusters tend to be neutral for long ranges of time, but denote volatile bursts of sentiment polarity locally over time. In those moments of polarized sentiment homogeneity there is evidence of an increased, but not strong, chance of one sharing the same overall sentiment that prevails in the cluster to which he belongs.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Twitter is a highly dynamic social environment where 316 million monthly active users generate a stream of 500 million tweets per day. It not only allows millions of users to interact among each other, but it is also a window for those interactions. Since it is an accessible and prolific source of social data, Twitter and other web-based social networks are widely used in the literature for different Social-related Analysis [8], such as Network Dynamics [15], Community Detection [16], Event Detection and Prediction [7, 18], Information Flow [2], Influence and Homophily Analysis [1, 21], Sentiment Analysis [12]. Some of these study the interdependencies and possible correlations among the different topics, however we found that there is not an extensive study about sentiment prevalence on clusters and whether this sentiment can be spread by influence into a state of sentiment homophily inside those clusters. Understanding how sentiment behaves at a cluster level can be useful for mining the overall mood of communities, and it may also be useful for improving sentiment classification techniques using enriched information about surrounding emotions.

Easley and Kleinberg [8] define homophily as the principle that people tend to be similar to their friends, which may be caused by selection or social influence. We found that sometimes homophily is defined in the literature as selection itself [19], i.e., people select friends with similar characteristics. Following the first definition, we search for moments of sentiment homophily in social circles and we try to understand if they are caused by social influence. The hypotheses that motivate our work are:

-

H1: The sentiment expressiveness inside clusters is highly dynamic over time.

-

H2: Clusters show moments of sentiment prevalence.

-

H3: During moments of sentiment homogeneity in a cluster, there is an increased chance that a user is influenced by the surrounding emotion and shows a similar sentiment to the one prevailing at that moment.

Regarding some specific terms related with Twitter, a tweet is a message with a maximum size of 140 characters that can include photos and videos. By retweeting a tweet, a user is forwarding that tweet to his own followers. A mention is an explicit reference to a user using the tag “@” followed by his unique username. For instance, typing “@maria” is a mention to the user “maria”. A reply is a particular case of a mention in which the mention is located at the bottom of the tweet. Replies are used to comment or answer something that the mentioned user has tweeted.

Using existing clustering and sentiment classification techniques, we propose to measure the overall sentiment of clusters based on the frequency of tweets for each possible sentiment value, regarding their sentiment classification. We found that the neutral value is the most frequent classification during the clusters’ lifetime, however different sentiment values appear, usually in spikes and with different polarities over time, confirming the highly dynamic nature of clusters’ sentiment (H1). We also observed moments of sentiment homophily (H2), for instance in chains of retweets or topic-related discussions and we describe a systematic strategy for finding those moments. Finally, we used dubious sentiment classifications for testing the role of influence in the origin of those moments of sentiment homophily by comparing the extrapolation of the clusters’ overall sentiment with human-coders’ evaluations. With this strategy we found a tendency for ambiguous classifications being correctly relabeled with the prevalent sentiment of respective clusters (H3).

2 Related Work

Fowler and Christakis [10] conducted a study about the spread of happiness within social networks, using data from the Framingham Heart Study,Footnote 1 collected between 1983 and 2003. From a network of 4,739 individuals, each person was weekly asked how often they experienced certain feelings during the previous week: “I felt hopeful about the future”, “I was happy”, “I enjoyed life”, “I felt that I was just as good as other people”. They used this information to measure the state of happiness of individuals throughout a period of time. According to their results there is happiness homophily up to three degrees of separation between nodes. This study not only found evidence of sentiment contagion through influence, it also suggests that it may cause sentiment homophily at a cluster level.

Thelwall [22] searched for homophily in social network sites using data extracted from MySpace, concluding that there was a highly significant evidence of homophily for several characteristics such as ethnicity, age, religion, marital status. Then, he conducted another study on emotion homophily [23], based on the same type of data. Using an initial version of SentiStrength [24] for sentiment classification, two different methods were tested to seek emotion homphily between pairs of friends: a direct method and an indirect method. The direct method compares only the sentiment of the conversational comments between each pair of friends. The indirect method compares the average emotion classification of comments directed to each node, independently, in each pair of friends. Weak but statistically significant levels of homophily were found with both methods. However, the direct method can only give insight of the average homophily at a maximum distance of 1, while the indirect method covers a maximum distance of 3.

Gruzd et al. [11] followed the study of Fowler and Christakis with web-based social network data, focusing on the potential propagation factors for sentiment contagious instead of searching for evidence of sentiment homophily. They performed a topic-oriented data extraction from Twitter in order to minimize possible bias caused by the occurrence of multiple events that generate multiple unrelated discussions, and they found on the 2010 Winter Olympics a well covered and very popular event on Twitter, from which they got strong emotional content. Using SentiStrength for tweets’ sentiment classification, they found that a tweet is more likely to be retweeted through a network of follow relations if its tone and content are both positive. Fan et al. [9] decomposed sentiment into four emotions: angry, joyful, sad and disgusting. They used a bayesian classifier to infer these emotions based on emoticon occurrence in interactions extracted from Weibo. Considering pairs of direct friends in a follow-relation network, they only found evidence of emotion homophily regarding anger and joy, observing that anger was the most influential emotion and the chance of contagion was higher in stronger ties. Using a follow-relation network extracted from Twitter, Bollen et al. [5] also found sentiment homophily but regarding sentiment polarity, which they called subjective well-being assortativity. They observed that pairs of friends connected by strong ties are more assortative, however they did not identify whether this phenomenon was caused by selection or social influence. None of these studies analyzed sentiment dynamics over time nor looked into an overall sentiment at community level.

Following these findings, we propose to look for signs of sentiment homophily at a cluster level and understand whether prevalent sentiment in social circles can be used for estimating individuals’ sentiment.

3 Dataset Overview

To find social circles and analyze their behavior over time, a large amount of data needs to be extracted during a period of several weeks. We extracted the dataset using Twitter Public Streaming API,Footnote 2 filtering the data according to a list of keywords related to 2014 FIFA World Cup. Extraction started on March 13th of 2014 and it ended on July 15th of 2014, covering the entire event that took place from June 12th to July 13th of 2014. It resulted in 166 GB of compressed data containing a collection of 339,702,345 tweets, having missed an estimated amount of at most 30 million tweets about the topic according to the limit messages received from the API. Due to the large amount of countries participating in the World Cup, we only considered a subset of the entire dataset for our analysis. This subset covers the knock-out stage of the event, from June 27th until July 15th, with 97,403,564 tweets that represent \(28.7\,\%\) of the entire data. We did this to minimize the sparsity of the information, since only 16, from the initial 32 participating countries, were still in competition. English is the most spoken language in the subset, representing \(45.8\,\%\) of the tweets, followed by Spanish with \(24.2\,\%\), and Portuguese with \(10.2\,\%\). Regarding the distribution of each type of tweets in the subset we found that \(38.2\,\%\) are simple tweets, \(55.3\,\%\) are retweets, and \(6.5\,\%\) are replies. We also noticed that \(64.7\,\%\) of all tweets have at least one mention, which makes it the most frequent type of strong relations in the dataset, followed by retweets and then replies. However, the set of mentions contains the set of replies and also intersects the set of retweets.

4 Approach

Our approach is divided into four stages: User Clustering; Tweet Clustering; Sentiment Analysis; and Influence and Homophily Analysis in time series, as it is outlined in Fig. 1. The first three stages integrate existing solutions for clustering and sentiment analysis with several scripts for data transformation. They were used to process the extracted dataset into time-series of sentiment information about social circles. With the preprocessed data obtained from these three stages, we propose a set of metrics to evaluate the extent of sentiment homophily. Then, we propose a strategy to ascertain a possible relation between influence and sentiment, which can eventually improve the sentiment classification of tweets in clusters that denote sentiment homophily.

4.1 User Clustering

Before finding the social circles, we needed to find the social network that comprises them. We decided to build the network’s graph considering only strong ties, which the literature states to be found in retweets and mentions [6, 13, 20]. However, we chose to use only replies because retweets and replies are mutually exclusive and replies represent direct conversations, which may not be necessarily true with mentions. We started by filtering all retweets and replies from the dataset, converting them from JSON to the condensed format: “type tweetID userID receiverID timestamp”. To analyze the clusters in different periods of time, the set of retweets and replies were individually filtered and sorted by their timestamp values, for independent analysis.

Once we were dealing with networks with millions of nodes and edges, we chose to use WebgraphFootnote 3 [4] to build and analyze underlying graphs, and used Layered Label Propagation (LLP) algorithm in LAW software libraryFootnote 4 for clustering them. Besides compressing the ASCIIGraph to the WebGraph’s format BVGraph, we had to symmetrize it to an undirected and loop-less graph to be used by LLP algorithm, to do user clustering. The symmetric graph was also used to calculate the connected components of the network. LLP [3] is an iterative strategy that reorders the graph such that nodes with the same label are close to one another. This node reordering is useful for graph compression, however, for our purposes we only require the node labeling assignment produced by the label propagation algorithm that returns a clustering configuration of the graph. The clustering result is mappable with a sorted list of user IDs, and all these steps are outlined in Fig. 2.

4.2 Tweet Clustering

Our strategy to classify the sentiment of a cluster was getting the tweets that the users in that cluster tweeted during the lifetime of the cluster, and then classifying each one independently to sum up an overall result. For that we clustered the tweets according to the previously obtained clusters of users, i.e., we extracted from the dataset all the tweets of each user in the cluster, created in the same period of time used to cluster the users. Then we converted these tweets to the shorter format: “userID tweetID language epochTimestamp hashtagCounter URLCounter mentionCounter tweetText”. All the clusters with only one or two tweets were removed. Each cluster of tweets was filtered and divided by its prevalent language, in order to perform the sentiment classification without mixed languages (Fig. 3).

4.3 Sentiment Analysis

We chose the lexicon-based SentiStrength tool [24] to perform automatic sentiment classification of the tweets, because (1) it does not require training data when working in unsupervised mode; (2) it has good performance and it is able to process more than 16,000 tweets/s in standard machines; (3) and has good results on Twitter datasets [11, 24]. Giving a text file as input, SentiStrength outputs another file with each line of text of the input file annotated with two sentiment values: a positive integer \(s_{+} \in \{1,\ldots , 5\}\) and a negative integer \(s_{-} \in \{-5, \ldots , -1\}\). The higher the absolute value, the higher the polarity strength of that value.

To classify the tweets in each cluster of tweets we filtered only the tweet text. To avoid words out of context that could be matched by SentiStrength, we removed all the mentions, retweet indicatives and URLs occurrences in the text. After running SentiStrength over the clusters of tweets we got, for each cluster, a matching file with the classified sentiment annotated for each tweet.

4.4 Influence and Sentiment Homophily Analysis over Time

The user clustering, tweet clustering and sentiment analysis stages were scripted to extract the information about the clusters in the network and their sentiment, during desired time intervals. For our analysis we performed a round-based clustering for each round of the knock-out stage subset, which includes the round of 16, quarter-finals, semi-finals and final stage of the World Cup.

Since we were seeking an overall sentiment, we chose to condensate the two sentiment values in one unique value, calculating the Absolute Sentiment value,

This way, a tweet is positive with a strength between 1 and 4, neutral when 0, or negative with a strength between \(-1\) and \(-4\). This approach promotes clearly polarized sentiment results and penalizes balanced strength results. Thus, the results \((5, -5)\), \((4, -4)\), \((3, -3)\), \((2, -2)\), which we consider ambiguous results, have the same absolute sentiment of 0 as the SentiStrength neutral result \((1, -1)\).

We focused on polarity changes over time and we calculated the distribution of the absolute sentiment values per hour, in each cluster, by counting the number of tweets for each absolute sentiment result. By analyzing these distributions over time we were able to observe sentiment dynamics and detect sentiment homophily, when existing.

To systematically find periods of polarity homophily, assuming that sentiment homophily is found locally in time, we defined a time window t, a minimum number of tweets m needed to consider a sentiment prevalence in t, and minimum rate of polarity prevalence p in t, as metric for sentiment homogeneity. Let \(\varDelta t(x_1, x_2)\) be the time interval between two tweets, and \(pol(x_1,\ldots , x_n)\) be the rate of the prevalent polarity in a sequence of tweets, there is sentiment homophily for a sequence of tweets \(x_1, x_2, \ldots , x_n\) when,

However, finding time intervals that satisfy this metric does not show if there is an increased chance of any user in that cluster of sharing the same befitting sentiment with the overall sentiment that surrounds him, i.e., being influenced by his peers’ mood. Our approach to evaluate whether moments of sentiment homophily are caused by influence is to look for ambiguous tweets in moments of prevalent polarized sentiment in the cluster, to which we assign that same prevalent polarization, and then we compare this updated sentiment classification with human coders classifications.

Lets assume the pairs \((1, -1), (2, -2), (3, -3), (4, -4), (5, -5)\) as ambiguous results in polarized clusters. The reason for this assumption regarding \((2, -2)\), \((3, -3)\), \((4, -4)\), \((5, -5)\) is that they reveal sentiment strength but not a decided polarization, even in a polarized environment. We also include \((1, -1)\) because SentiStrength outputs this value both for neutral sentences and for sentences that do not match any word in the lexicon, which gives a dubious meaning to this value. This way, we trust more in polarized classifications.

After identifying ambiguous results, we search for an ambiguity a that has a number of surrounding tweets equal or greater than m, with a prevalence of a certain polarity equal or greater than p during a period of time t that includes a. For each ambiguity a found in a context with these characteristics, we set its polarity to be the same as the prevalent polarity of the tweets surrounding it. We propose two algorithms to do this sentiment extrapolation, that only differ in the position that the ambiguity occupies in the context configuration. The first algorithm searches for ambiguities that have a central position in the polarized context, being fixed at the center of the time window. For a set of ambiguities A found in a sequence of tweets \(T = \{x_1,\ldots , x_n\}\), when \(x_a \in A \wedge x_a \in T\), and

then the sentiment polarity of \(x_a\) is relabeled with the prevalent sentiment polarity in \(x_b,\ldots , x_e\).

The second algorithm considers any ambiguity that belongs to a sliding time window t that fulfills those restrictions, independently of its position towards the context. For a set of ambiguities A found in a sequence of tweets \(T = \{x_1,\ldots , x_n\}\), when \(x_a \in A \wedge x_a \in T\), and

then the sentiment polarity of \(x_a\) is relabeled with the prevalent sentiment polarity in \(x_b,\ldots , x_e\).

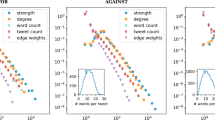

Time-line of tweets’ frequency of absolute sentiment for each accumulation of 3 h. Cluster “413547” from the Spanish-speaking set of reply-based clusters over the quarter-finals stage, cluster “1000883” from the English-speaking set of reply-based clusters over the semi-finals, and cluster “2049176” from the Spanish-speaking set of retweet-based clusters over the final stage

5 Results and Discussion

We used the modularity coefficient Q, that measures the division of the nodes in a graph into different clusters and the strength of their connections [17], to evaluate the quality of the clusters obtained with LLP algorithm. For clusters obtained from retweet-relation graphs we got an average of \(Q = 0.620\), while for reply-relation graphs this value increased for \(Q = 0.800\). This denotes that reply-relations are more restrict than retweets and generate smaller but denser clusters. The size distribution of all sets of clusters followed a power-law, regardless the round, language, or type of relation of the graphs. Considering hypothesis H1 and H2 we can observe in Fig. 4 that sentiment is highly dynamic, especially for reply-based clusters. With periods of sentiment neutrality interleaved with periods of sentiment polarity, there are moments in which a certain polarity prevails, where we can find periods of local sentiment homophily. Even though these moments appear to be quite ephemeral. The majority of clusters have sentiment peaks during their lifetime, which represents \(85.5\,\%\) of the clusters obtained from retweet-relation graphs and \(95.2\,\%\) of the clusters obtained from reply-relation graphs, confirming H1. Clusters that showed total neutral sentiment were mainly small size clusters. We detected that chains of polarized retweets generate moments of sentiment homogeneity (H2), as well as some topic-related conversations, which are respectively more frequent (but not exclusively) on retweet-based clusters, and reply-based clusters. If we assume that, when some user retweets a certain status, there is a chance of that user being also sharing the inherent sentiment of that status’ message, then we may say that there is sentiment influence on cascades of retweets.

Regarding H3, we gathered 24 human-coders, in which 23 of them are Portuguese native-speakers and the remaining one is a Spanish native-speaker. All of them are able to read and interpret English, and 18 are also able to read and interpret Spanish. We shuffled them into 8 groups of 3, and each group evaluated two sets of 100 ambiguous tweets. This way, each ambiguity was classified by three different human-coders. The testing samples were randomly collected from the set of ambiguous tweets found with the sliding window algorithm, using the fixed parameters \(t = 6\) h, \(m = 10\), and \(p = 0.7\). These samples sum a total of 1,600 ambiguous tweets, divided into 800 for English, 600 for Spanish, and 200 for Portuguese. Half of the sets of each language was extracted from retweet-based clusters, and the other half from reply-based clusters. Each person was asked to classify the sentiment expressed in the tweet message, as positive, neutral, or negative. We chose to only ask for the polarity and not the sentiment strength to simplify the classification process. We included the neutral option assuming that there are indeed some tweets that do not express any kind of polarization. The results in Table 1 suggest a tendency for the real sentiment of ambiguous tweets to match the overall sentiment of their clusters, over having a neutral or mismatching sentiment polarity, and this value is clearly higher than it would be assigned by chance. However, this matching rate is not sufficient to claim that when there is a period of sentiment homophily there is a strong chance of a user in that cluster sharing a tweet with an equivalent polarity. We evaluated the reliability of the human coder classifications in terms of agreement using the Krippendorff’s alpha-coefficient [14], which varied between 0.24703 and 0.53167, i.e., they are statistically reliable but with a certain level of disagreement, unveiling the subjective nature of this task.

6 Conclusion and Future Work

With this work we observed that sentiment reveals a highly dynamic behavior at cluster level, having ephemeral spikes of polarity usually lasting for a few hours. We were able to locally find moments of sentiment homogeneity during those spikes by setting a time window t, a minimum number of tweets m needed to consider a sentiment prevalence in t, and minimum rate of polarity prevalence p in t. Using a sample with 97,403,564 tweets where 14,075,547 different users participate in retweet/reply relations, we found similar results for clusters of different languages (English, Spanish, Portuguese) during different periods of time. For understanding if an existing overall sentiment in a cluster may influence the sentiment of its individuals, we relabeled the sentiment of ambiguous classifications surrounded by a context of sentiment homophily with the prevalent sentiment of that cluster during t and we evaluated this extrapolation with human coders. The matching rate between the human-coders classification and the clusters’ sentiment polarity extrapolation always shows higher and more stable expressiveness over mismatching and neutral rates. However, with the best matching result around \(60\,\%\), we can only say we found a weak but significant tendency of a user sharing a befitting sentiment in a cluster during a period of sentiment homogeneity.

Given the level of disagreement between human coders it would be desirable to use a higher odd number of coders for each evaluation set. In the future it would be interesting to separate neutral sentiment classifications from undecidable sentiment classifications, which have the same value \((1, -1)\) when classified by SentiStrength, and see what would happen to the rate of neutral classifications among the human coder classifications. It could also be interesting testing the repeatability of the results when exploring different techniques for building and clustering the network’s graph, such as using ego-networks and local clustering methods, respectively.

Notes

- 1.

Medical study about cardiovascular disease—https://www.framinghamheartstudy.org/.

- 2.

- 3.

- 4.

References

Bakshy, E., Hofman, J.M., Mason, W.A., Watts, D.J.: Everyone’s an influencer: quantifying influence on twitter. In: Proceedings of the Fourth ACM International Conference on Web Search and Data Mining. WSDM ’11, pp. 65–74. ACM, NY (2011)

Bakshy, E., Rosenn, I., Marlow, C., Adamic, L.: The role of social networks in information diffusion. In: Proceedings of the 21st International Conference on World Wide Web. WWW ’12, pp. 519–528. ACM, NY (2012)

Boldi, P., Rosa, M., Santini, M., Vigna, S.: Layered label propagation: a multiresolution coordinate-free ordering for compressing social networks. In: Proceedings of the 20th International Conference on World Wide Web. WWW ’11, pp. 587–596. ACM, NY (2011)

Boldi, P., Vigna, S.: The webgraph framework I: compression techniques. In: Proceedings of the 13th International Conference on World Wide Web. WWW ’04, pp. 595–602. ACM, NY (2004)

Bollen, J., Gonçalves, B., Ruan, G., Mao, H.: Happiness is assortative in online social networks. Artif. Life 17(3), 237–251 (2011)

Cha, M., Haddadi, H., Benevenuto, F., Gummadi, K.P.: Measuring user influence in twitter: the million follower fallacy. In: ICWSM ’10: Proceedings of international AAAI Conference on Weblogs and Social (2010)

Conover, M., Gonçalves, B., Ratkiewicz, J., Flammini, A., Menczer, F.: Predicting the political alignment of twitter users. In: SocialCom/PASSAT, pp. 192–199. IEEE (2011)

Easley, D., Kleinberg, J.: Networks, Crowds, and Markets: Reasoning About a Highly Connected World. Cambridge University Press, NY (2010)

Fan, R., Zhao, J., Chen, Y., Xu, K.: Anger is more influential than joy: sentiment correlation in weibo. PLoS ONE 9(10), e110184 (2014)

Fowler, J., Christakis, N.: Dynamic spread of happiness in a large social network: longitudinal analysis over 20 years in the framingham heart study. Br. Med. J. 337, a2338 (2008)

Gruzd, A., Doiron, S., Mai, P.: Is happiness contagious online? A case of twitter and the 2010 winter olympics. In: Proceedings of the 2011 44th Hawaii International Conference on System Sciences. HICSS ’11, pp. 1–9. IEEE Computer Society, Washington (2011)

Hodeghatta, U.R.: Sentiment analysis of hollywood movies on twitter. In: Proceedings of the 2013 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining. ASONAM ’13, pp. 1401–1404. ACM, NY (2013)

Huberman, B., Romero, D., Wu, F.: Social networks that matter: twitter under the microscope. First Monday 14(1) (2008)

Krippendorff, K.: Computing krippendorff’s alpha reliability. Technical report, University of Pennsylvania, Annenberg School for Communication (2011)

Leskovec, J., Huttenlocher, D., Kleinberg, J.: Signed networks in social media. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. CHI ’10, pp. 1361–1370. ACM, NY (2010)

Mcauley, J., Leskovec, J.: Discovering social circles in ego networks. ACM Trans. Knowl. Discov. Data 8(1), 4:1–4:28 (2014)

Newman, M.: Networks: An Introduction. Oxford University Press Inc, NY (2010)

Sakaki, T., Okazaki, M., Matsuo, Y.: Earthquake shakes twitter users: real-time event detection by social sensors. In: Proceedings of the 19th International Conference on World Wide Web. WWW ’10, pp. 851–860. ACM, NY (2010)

Shalizi, C.R., Thomas, A.C.: Homophily and contagion are generically confounded in observational social network studies (2010)

Tang, J., Chang, Y., Liu, H.: Mining social media with social theories: a survey. SIGKDD Explor. Newsl. 15(2), 20–29 (2014)

Tang, J., Gao, H., Hu, X., Liu, H.: Exploiting homophily effect for trust prediction. In: Proceedings of the Sixth ACM International Conference on Web Search and Data Mining. WSDM ’13, pp. 53–62. ACM, NY (2013)

Thelwall, M.: Homophily in myspace. J. Am. Soc. Inf. Sci. Technol. 60(2), 219–231 (2009)

Thelwall, M.: Emotion homophily in social network site messages. First Monday 15(4) (2010)

Thelwall, M., Buckley, K., Paltoglou, G.: Sentiment strength detection for the social web. J. Am. Soc. Inf. Sci. Technol. 63(1), 163–173 (2012)

Acknowledgments

This work was partly supported by national funds through Fundação para a Ciência e Tecnologia (FCT), under projects EXCL/EEI-ESS/0257/2012 and UID/CEC/50021/2013.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Lopes, H., Pinto, H.S., Francisco, A.P. (2016). Influence and Sentiment Homophily on Twitter Social Circles. In: Cherifi, H., Gonçalves, B., Menezes, R., Sinatra, R. (eds) Complex Networks VII. Studies in Computational Intelligence, vol 644. Springer, Cham. https://doi.org/10.1007/978-3-319-30569-1_27

Download citation

DOI: https://doi.org/10.1007/978-3-319-30569-1_27

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-30568-4

Online ISBN: 978-3-319-30569-1

eBook Packages: EngineeringEngineering (R0)