Abstract

Generally speaking, for a network of interconnected systems, synchronisation consists in the mutual coordination of the systems’ motions to reach a common behaviour. For homogeneous systems that have identical dynamics this typically consists in asymptotically stabilising a common equilibrium set. In the case of heterogeneous networks, in which systems may have different parameters and even different dynamics, there may exist no common equilibrium but an emergent behaviour arises. Inherent to the network, this is determined by the connection graph but it is independent of the interconnection strength. Thus, the dynamic behaviour of the networked systems is fully characterised in terms of two properties whose study may be recast in the domain of stability theory through the analysis of two interconnected dynamical systems evolving in orthogonal spaces: the emergent dynamics and the synchronisation errors relative to the common behaviour. Based on this premise, we present some results on robust stability by which one may assess the conditions for practical asymptotic synchronisation of networked systems. As an illustration, we broach a brief case-study on mutual synchronisation of heterogeneous chaotic oscillators.

Chapter gladly devoted to our dear friend H. Nijmeijer who introduced the authors to the topic of synchronisation 19 years ago.


1 Introduction

As its etymology suggests, synchronisation may be defined as the adjustment of rhythms of repetitive events (phenomena, processes, ...) through sufficiently strong interaction. In dynamical systems theory, we also speak of synchronised systems if their motions are coordinated in time and/or space. Synchronisation can be of several types: if one system “dominates” the rest, we speak of master–slave synchronisation; in this case, the motion of the so-called master system becomes a reference for the motion of the so-called slave system(s). Alternatively, synchronisation may be mutual, in which case a set of systems synchronise their motions without a specified hierarchy. Controlled synchronisation of dynamical systems consists, generally speaking, in ensuring that two or more systems coordinate their motions in a desired manner.

Synchronisation was a subject of intense research in several disciplines before it reached control theory: it was introduced in the 1970s in the USSR, in the field of mechanical vibration, by Professor Blekhman. Ever since, research on synchronisation has been popular among physicists, e.g. in the context of synchronisation of chaotic systems since the early 1990s, but also among engineers, especially in automatic control. In this community, the paradigm of synchronisation was largely popularised by H. Nijmeijer. His seminal paper [12] is a landmark tutorial on master–slave synchronisation, and his pioneering work [13] on mutual synchronisation (of mechanical systems) precedes the bulk of the literature on a paradigm that is nowadays better known in our community under the name of consensus; see [19].

Consensus pertains to the case in which a (large) group of interconnected systems mutually synchronise their behaviours. In this case, we speak of networks of systems. These are not just large-scale and complex systems: they are characterised by decentralised, distributed, networked compositions of (semi)autonomous elements. These new systems are, in fact, systems of systems. The complexity of network-interconnected systems cannot be overestimated. For instance, in neuronal networks, experimental evidence shows that an imbalance between inhibition and excitation may result in excessive neuronal synchronisation, which, in turn, may be linked to neurological disorders such as Parkinson’s disease and epilepsy. In electric power networks, the improper management of faults or overloads, or simply adding a generator to, or removing one from, the transmission network, may result in power outages or even in large-scale (continent-wide) blackouts.

In this chapter, we briefly describe a framework, recently introduced in [15], for the analysis and control of synchronisation of heterogeneous networked systems, that is, systems with different parameters or even completely distinct dynamic models.

We limit our study to the analysis paradigm, as opposed to that of controlled synchronisation. Leaving aside technological and dynamical aspects related directly to the network communication (delays, noise, etc.), we focus on structural properties of the network that affect the synchronisation of the agents’ motions. More precisely, the following issues play a key role in the analysis and control of synchronisation of networked systems:

- the coupling strength;
- the network topology;
- the type of coupling between the nodes, i.e. how the units are interconnected;
- the dynamics of the individual units.

Among these factors, our technical results establish how the coupling strength affects synchronisation. Our analysis is carried out from a dynamical systems and stability theory viewpoint.

2 The Networked Systems Synchronisation Paradigm

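Let us consider a network of dynamical systems modelled via ordinary differential equations,

$$\dot{x}_i=f_i(x_i)+B\,u_i, \tag{4.1a}$$

$$y_i=C\,x_i,\qquad i\in \mathscr {I}:=\{1,\ldots ,N\}, \tag{4.1b}$$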

where \(\varvec{x}_i \in {\mathbb {R}}^n \), \({\varvec{u}}_i\in {\mathbb {R}}^m\) and \({\varvec{y}}_i \in {\mathbb {R}}^m\) denote the state, the input and the output of the ith unit, respectively. The network’s topology is usually described via graph theory: a network of N nodes is defined by its \(N\times N\) adjacency matrix \(D=[d_{ij}]\), whose (i, j) element \(d_{ij}\) specifies the interconnection between the ith and jth nodes; see [19].

The interaction among nodes depends, in general, on the strength of the coupling and on the nodes’ state variables or on functions of the latter, i.e. the outputs, which define the coupling terms. The interaction is also determined by the form of coupling, i.e. the way in which the output of one node affects another; this can be linear, as is commonly assumed, but it may also be nonlinear, as in the well-known Kuramoto oscillator model, in which the interconnection is made via sinusoids; see [3].

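Here, we consider a network composed of N heterogeneous, diffusively coupled nonlinear dynamical systems in normal form:

$$\begin{aligned} \dot{y}_i&=f^1_i(y_i,z_i)+u_i,\\ \dot{z}_i&=f^2_i(y_i,z_i). \end{aligned}\tag{4.2}$$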

As the notation suggests, each unit possesses one input \(u_i\) and one output \(y_i\) of the same dimension, i.e. \(u_i\), \(y_i \in \mathbb {R}^m\). The state \( z_i\) corresponds to that of the ith agent’s zero dynamics; see [7]. The functions \(f^1_i : \mathbb {R}^m \times \mathbb {R}^{n-m}\rightarrow \mathbb {R}^m\) and \(f^2_i : \mathbb {R}^m \times \mathbb {R}^{n-m}\rightarrow \mathbb {R}^{n-m}\) are assumed to be locally Lipschitz.

It is worth remarking that there is little loss of generality in considering systems in normal form: these are equivalent to systems of the form (4.1) under the assumption that the matrices \(B\in \mathbb {R}^{n\times m}\) and \(C\in \mathbb {R}^{m\times n}\) satisfy a similarity condition for CB, that is, if there exists a nonsingular matrix U such that \(U^{-1}CB U=\varLambda \), where \(\varLambda \) is diagonal with positive diagonal entries; see e.g. [17, 18].
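The similarity condition on CB can be checked numerically. The following is a minimal sketch, assuming NumPy; the function name `cb_similar_to_positive_diagonal` and the example matrices are hypothetical, chosen only for illustration. It tests that CB has real, strictly positive eigenvalues and a full set of linearly independent eigenvectors, which is what \(U^{-1}CBU=\varLambda \) with \(\varLambda \) diagonal positive requires.

```python
import numpy as np


def cb_similar_to_positive_diagonal(C: np.ndarray, B: np.ndarray, tol: float = 1e-9) -> bool:
    """Numerically test whether U^{-1} (C B) U = Lambda for some nonsingular U
    and a diagonal Lambda with strictly positive entries."""
    CB = C @ B                                   # m x m matrix
    eigvals, eigvecs = np.linalg.eig(CB)
    # Lambda must be real and positive: reject complex or non-positive eigenvalues.
    if np.any(np.abs(eigvals.imag) > tol) or np.any(eigvals.real <= tol):
        return False
    # CB is diagonalisable iff its eigenvectors are linearly independent.
    return bool(np.linalg.matrix_rank(eigvecs, tol=1e-6) == CB.shape[0])


# Hypothetical unit with n = 3 states and m = 2 inputs/outputs.
C = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])
B = np.array([[2.0, 1.0],
              [0.0, 3.0],
              [1.0, 1.0]])
print(cb_similar_to_positive_diagonal(C, B))   # CB = [[2, 1], [0, 3]]; prints True
```

The rank test on the eigenvector matrix uses a fixed tolerance, so nearly defective matrices remain a judgement call; a symbolic check would be more reliable in borderline cases.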

We also assume that the units possess certain physical properties reminiscent of energy dissipation and propagation. Notably, one of our main hypotheses is that the solutions are ultimately bounded; we recall the definition below.

Definition 4.1

(Ultimate boundedness) The solutions of the system \(\dot{x}=f(x)\), defined by absolutely continuous functions \((t,x_\circ )\mapsto x\), are said to be ultimately bounded if there exist positive constants \(\varDelta _\circ \) and \(B_x\) such that, for every \(\varDelta \in (0,\varDelta _\circ )\), there exists a positive constant \(T(\varDelta )\) such that, for all \(x_\circ \in B_\varDelta =\{ x\in \mathbb {R}^n: |x| \le \varDelta \} \), they satisfy

$$|x(t,x_\circ )|\le B_x\qquad \forall \, t\ge T(\varDelta ).$$

If this bound holds for arbitrarily large \(\varDelta \), then the solutions are globally ultimately bounded.

Ultimate boundedness is a reasonable assumption for the class of systems of interest here, such as oscillators. In a more general context, boundedness holds, for instance, if the units are strictly semi-passive—cf. [14].
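To make Definition 4.1 concrete, the sketch below integrates a Van der Pol oscillator (an example of our own, not one of the chapter's units) from several initial conditions on the sphere of radius \(\varDelta =5\) and records the largest norm reached after a time T = 30. The values \(\varDelta =5\), T = 30, the bound \(B_x=4\) and the fixed-step Runge-Kutta integrator are assumptions made for illustration; a finite simulation can only suggest, not prove, ultimate boundedness.

```python
import numpy as np


def van_der_pol(x: np.ndarray, mu: float = 1.0) -> np.ndarray:
    """Van der Pol oscillator, state x = [position, velocity]."""
    return np.array([x[1], mu * (1.0 - x[0] ** 2) * x[1] - x[0]])


def rk4_trajectory(f, x0, dt: float, steps: int) -> np.ndarray:
    """Fixed-step fourth-order Runge-Kutta integration; returns all samples."""
    x = np.asarray(x0, dtype=float)
    samples = [x.copy()]
    for _ in range(steps):
        k1 = f(x)
        k2 = f(x + 0.5 * dt * k1)
        k3 = f(x + 0.5 * dt * k2)
        k4 = f(x + dt * k3)
        x = x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        samples.append(x.copy())
    return np.array(samples)


def sup_norm_after(x0, T: float = 30.0, horizon: float = 45.0, dt: float = 0.01) -> float:
    """max |x(t, x0)| over t in [T, horizon]: an empirical proxy for the bound B_x."""
    traj = rk4_trajectory(van_der_pol, x0, dt, int(round(horizon / dt)))
    return float(np.max(np.linalg.norm(traj[int(round(T / dt)):], axis=1)))


# Initial conditions on the sphere of radius Delta = 5.
angles = np.linspace(0.0, 2.0 * np.pi, 6, endpoint=False)
bounds = [sup_norm_after(5.0 * np.array([np.cos(a), np.sin(a)])) for a in angles]
print(max(bounds))   # stays below the (hypothetical) bound B_x = 4
```

All trajectories settle on the limit cycle, so the observed bound is essentially the size of the cycle, independent of the starting radius.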

Our second main assumption concerns the zero dynamics.

Assumption 4.1

For any compact sets \({B}_z\subset {\mathbb {R}}^{n-m}\), \({B}_y\subset {\mathbb {R}}^{m}\) there exist N continuously differentiable positive definite functions \(V_{\circ k}: {B}_z \rightarrow {\mathbb {R}}_+\) with \(k\le N\), class \(\mathscr {K}_\infty \) functions \(\gamma _{1k}\), \(\gamma _{2k}\) and constants \( \bar{\alpha }_k\), \(\beta _k >0\) such that

where \(\nabla V_{\circ k} := \frac{\partial V_{\circ k}}{\partial \varvec{z}}\), for all \(\varvec{z}\), \(\varvec{z}' \in {B}_z\) and \({\varvec{y}} \in {B}_y\).

Assumption 4.1 may be interpreted as a condition of incremental stability of the zero dynamics in a practical sense. Note that when \(\beta _k=0\), we recover the characterisation provided in [1].

2.1 Network Model

We assume that the network units are connected via diffusive coupling, i.e. for the ith unit the coupling is given by

$$u_i=-\sigma \sum _{j=1}^{N} d_{ij}\,(y_i-y_j),\qquad i\in \mathscr {I}, \tag{4.3}$$

where the scalar \(\sigma \) corresponds to the coupling gain between the units and the individual interconnection weights, \(d_{ij}\), satisfy \(d_{ij}=d_{ji}\). Assuming that the network graph is connected and undirected, the interconnections amongst the nodes are completely defined by the adjacency matrix \(D=[d_{ij}]_{i,j\in {\mathscr {I}}}\), which is used to construct the corresponding Laplacian matrix,

$$L=[\ell _{ij}]_{i,j\in \mathscr {I}},\qquad \ell _{ij}=\begin{cases} \sum _{k\ne i} d_{ik}, & i=j,\\ -d_{ij}, & i\ne j. \end{cases}$$

By construction, all row sums of L are equal to zero. Moreover, since L is symmetric, all its eigenvalues are real and, since the network is connected, L has exactly one eigenvalue (say, \(\lambda _1\)) equal to zero, while the others are positive, i.e. \(0=\lambda _1<\lambda _2\le \cdots \le \lambda _N\).
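The construction of L and the eigenvalue properties just stated can be checked numerically. This is a minimal NumPy sketch, assuming non-negative symmetric weights; the helper name `laplacian` and the path graph are illustrative choices, not part of the chapter.

```python
import numpy as np


def laplacian(D: np.ndarray) -> np.ndarray:
    """Laplacian L = diag(D 1) - D of an undirected graph with symmetric, non-negative weights."""
    D = np.asarray(D, dtype=float)
    if not np.allclose(D, D.T):
        raise ValueError("undirected graph expected: D must be symmetric")
    return np.diag(D.sum(axis=1)) - D


# Hypothetical example: a path graph 1 - 2 - 3 - 4 with unit weights.
D_path = np.array([[0, 1, 0, 0],
                   [1, 0, 1, 0],
                   [0, 1, 0, 1],
                   [0, 0, 1, 0]])
L = laplacian(D_path)
lam = np.linalg.eigvalsh(L)    # real eigenvalues in ascending order: 0 = lam[0] < lam[1] <= ...
print(L.sum(axis=1), lam)
```

For a disconnected graph, the second eigenvalue is also zero, which is why connectedness is needed for \(\lambda _2>0\).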

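Next, we introduce a compact notation that is convenient for the purposes of analysis, namely the stacked vectors of outputs, inputs and states, respectively:

$$y:=[y_1^\top ,\ldots ,y_N^\top ]^\top ,\qquad u:=[u_1^\top ,\ldots ,u_N^\top ]^\top ,\qquad x:=[x_1^\top ,\ldots ,x_N^\top ]^\top ,\quad x_i=[y_i^\top ,z_i^\top ]^\top ,$$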

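as well as the function \(F: \mathbb {R}^{nN}\rightarrow \mathbb {R}^{nN}\), defined as

$$F(x):=\big [f^1_1(y_1,z_1)^\top ,\ f^2_1(y_1,z_1)^\top ,\ \ldots ,\ f^1_N(y_N,z_N)^\top ,\ f^2_N(y_N,z_N)^\top \big ]^\top . \tag{4.4}$$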

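With this notation, the diffusive coupling inputs \(u_i\), defined in (4.3), can be rewritten in the compact form

$$u=-\sigma \,(L\otimes I_m)\,y,$$

where the symbol \(\otimes \) stands for the right Kronecker product. Then, the network dynamics becomes

$$\dot{x}=F(x)-\sigma \,(L\otimes E_m)\,y, \tag{4.5a}$$

$$y=(I_N\otimes E_m^\top )\,x, \tag{4.5b}$$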

where \(E_m^\top = [I_m,0_{m\times (n-m)}]\). The qualitative analysis of the solutions to the latter equations is our main subject of study.
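The equivalence between the sum form (4.3) of the coupling and the compact Kronecker form can be verified numerically, together with the identity \((I_N\otimes E_m)(L\otimes I_m)=L\otimes E_m\), which applies assuming, as the normal form (4.2) suggests, that the inputs enter the stacked state equation through \(I_N\otimes E_m\). The sketch below assumes NumPy, random symmetric weights and stacked vectors ordered as \([y_1^\top ,\ldots ,y_N^\top ]^\top \); the helper names are hypothetical.

```python
import numpy as np


def coupling_sum(D: np.ndarray, Y: np.ndarray, sigma: float) -> np.ndarray:
    """u_i = -sigma * sum_j d_ij (y_i - y_j), as in (4.3); row i of Y is y_i."""
    N = D.shape[0]
    U = np.zeros(Y.shape)
    for i in range(N):
        for j in range(N):
            U[i] -= sigma * D[i, j] * (Y[i] - Y[j])
    return U


def coupling_kron(D: np.ndarray, y: np.ndarray, sigma: float, m: int) -> np.ndarray:
    """Compact form u = -sigma (L kron I_m) y with stacked y = [y_1; ...; y_N]."""
    L = np.diag(D.sum(axis=1)) - D
    return -sigma * np.kron(L, np.eye(m)) @ y


def E_mat(n: int, m: int) -> np.ndarray:
    """E_m in R^{n x m}, i.e. E_m^T = [I_m, 0]: selects the output part of x_i = [y_i; z_i]."""
    return np.vstack([np.eye(m), np.zeros((n - m, m))])


rng = np.random.default_rng(0)
N, n, m, sigma = 5, 3, 2, 2.5
D = rng.random((N, N))
D = 0.5 * (D + D.T)                      # symmetric weights (undirected graph)
np.fill_diagonal(D, 0.0)
Y = rng.normal(size=(N, m))
u_loop = coupling_sum(D, Y, sigma).reshape(-1)
u_kron = coupling_kron(D, Y.reshape(-1), sigma, m)

L = np.diag(D.sum(axis=1)) - D
Em = E_mat(n, m)
lhs = np.kron(np.eye(N), Em) @ np.kron(L, np.eye(m))   # (I_N kron E_m)(L kron I_m)
print(np.allclose(u_loop, u_kron), np.allclose(lhs, np.kron(L, Em)))
```

The second check is an instance of the mixed-product rule of the Kronecker product, which is also what removes the coupling from the average dynamics later on.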

2.2 Dynamic Consensus and Practical Synchronisation

In a general setting, as for instance that of [13], synchronisation may be measured qualitatively, for the purpose of analysis, by defining a synchronisation manifold as the set on which a functional of the trajectories equals zero and measuring the distance of the trajectories to this manifold, e.g.

For networks of homogeneous systems, i.e. if \(f_i=f_j\) for all i, \(j\in \mathscr {I}\), synchronisation is often described in terms of the asymptotically identical evolution of the units, i.e. \(x_i \rightarrow x_j\). This is especially clear in the classical consensus paradigm for simple integrators, in which \(x_i \rightarrow x_j\rightarrow \) const. In more complex cases, as for instance in problems of formation tracking control, each unit may be required to follow a (possibly unique) reference trajectory, that is, \(x_i \rightarrow x_j\rightarrow x^*(t)\). Moreover, controlled synchronisation is sometimes cast as a problem of “collective” tracking control; see e.g. [5, 13].

Hence, whether the target is a set-point equilibrium or a reference trajectory, it seems natural to formulate the consensus problem as one of asymptotic stability (or stabilisation, for that matter) of the synchronisation manifold \({\mathscr {S}}\). Such a stability problem may be approached, for instance, using tools developed for semi-passive, incrementally passive or incrementally input-output stable systems; see [6, 8, 9, 16, 17, 21]. If the manifold \(\mathscr {S}\) is stabilised, one says that the networked units are synchronised. For networks of non-identical units, the paradigm is much more complex because the synchronisation manifold \(\mathscr {S}\) does not necessarily exist. Yet, it may also be recast in terms of stability analysis.

To that end, we generalise the consensus paradigm by introducing what we call dynamic consensus. We shall say that this property is achieved by the systems interconnected in a network if their motions converge to one generated by what we shall call the emergent dynamics. In the case of undirected graphs, for which the corresponding Laplacian is symmetric, the emergent dynamics is naturally defined as the average of the units’ drifts, that is, via the functions \(f^1_s : \mathbb {R}^m \times \mathbb {R}^{n-m}\rightarrow \mathbb {R}^m\), \(f^2_s : \mathbb {R}^m \times \mathbb {R}^{n-m}\rightarrow \mathbb {R}^{n-m}\) defined as

$$f^1_s(y,z):=\frac{1}{N}\sum _{i=1}^{N} f^1_i(y,z),\qquad f^2_s(y,z):=\frac{1}{N}\sum _{i=1}^{N} f^2_i(y,z);$$

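hence, the emergent dynamics may be written in the compact form

$$\dot{x}_e=f_s(x_e),\qquad f_s(x_e):=\begin{bmatrix} f^1_s(y_e,z_e)\\ f^2_s(y_e,z_e)\end{bmatrix},\qquad x_e=[y_e^\top ,z_e^\top ]^\top . \tag{4.7}$$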

For the sake of comparison, in the classical (set-point) consensus paradigm, all systems achieving consensus converge to a common equilibrium point, that is, \(f_s \equiv 0\) and \(x_e\) is constant. In the case of formation tracking control, Eq. (4.7) can be seen as the reference dynamics for the formation. In the general case of dynamic consensus, the motions of all the units converge to a motion generated by the emergent dynamics (4.7).
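In the classical set-point case, with \(f_i\equiv 0\) and scalar integrators \(\dot{x}_i=u_i\), the network reduces to \(\dot{x}=-\sigma Lx\), and every state converges to the average of the initial conditions, a constant \(x_e\). The sketch below, assuming NumPy and a hypothetical path graph, evaluates \(x(t)=\exp (-\sigma Lt)x_\circ \) through the eigendecomposition of L; the function name is illustrative.

```python
import numpy as np


def integrator_consensus(D: np.ndarray, x0: np.ndarray, sigma: float, t: float) -> np.ndarray:
    """Solution x(t) = exp(-sigma L t) x0 of x' = -sigma L x (scalar integrators, f_i = 0)."""
    L = np.diag(D.sum(axis=1)) - D
    lam, U = np.linalg.eigh(L)                  # L = U diag(lam) U^T with U orthogonal
    return U @ (np.exp(-sigma * lam * t) * (U.T @ x0))


D_path = np.array([[0.0, 1.0, 0.0, 0.0],
                   [1.0, 0.0, 1.0, 0.0],
                   [0.0, 1.0, 0.0, 1.0],
                   [0.0, 0.0, 1.0, 0.0]])
x0 = np.array([1.0, 2.0, 3.0, 6.0])            # average value 3
for t in (0.0, 1.0, 50.0):
    print(t, integrator_consensus(D_path, x0, sigma=1.0, t=t))
```

The average is preserved at every instant because \(\mathbf{1}^\top L=0\), while the disagreement decays at least as fast as \(e^{-\sigma \lambda _2 t}\).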

Then, to study the behaviour of the individual network-interconnected systems relative to that of the emergent dynamics, we introduce the average state (also called mean field) and its corresponding dynamics. Let

$$x_s:=\frac{1}{N}\sum _{i=1}^{N} x_i, \tag{4.8}$$

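which comprises an average output, \(y_s\in \mathbb {R}^m\), defined as \(y_s = E_m^\top x_s\), and the state of the average zero dynamics, \(z_s\in \mathbb {R}^{n-m}\), that is, \(x_s=[y_s^\top ,z_s^\top ]^\top \). Now, differentiating both sides of (4.8) and performing a direct computation in which we use (4.2), (4.3) and the fact that the elements of each row of the Laplacian (hence, by symmetry, of each column) sum to zero, so that the coupling inputs cancel when summed over all units, we obtain

$$\dot{y}_s=\frac{1}{N}\sum _{i=1}^{N} f^1_i(y_i,z_i),\qquad \dot{z}_s=\frac{1}{N}\sum _{i=1}^{N} f^2_i(y_i,z_i). \tag{4.9}$$

Then, in order to write the latter in terms of the average state \(x_s\), we use the functions \(f_s^1\) and \(f_s^2\) defined above; from (4.9), we derive the average dynamics

$$\begin{aligned} \dot{y}_s&=f^1_s(y_s,z_s)+\frac{1}{N}\sum _{i=1}^{N}\big [f^1_i(y_i,z_i)-f^1_i(y_s,z_s)\big ],\\ \dot{z}_s&=f^2_s(y_s,z_s)+\frac{1}{N}\sum _{i=1}^{N}\big [f^2_i(y_i,z_i)-f^2_i(y_s,z_s)\big ]. \end{aligned}\tag{4.10}$$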

It is worth remarking that this model is intrinsic to the diffusively interconnected network. Indeed, since the row sums of the Laplacian equal zero, the interconnection strength \(\sigma \) does not appear in (4.10). Another interesting feature of Eq. (4.10) is that it may be regarded as composed of the nominal part

$$\dot{y}_s=f^1_s(y_s,z_s),\qquad \dot{z}_s=f^2_s(y_s,z_s),$$

and the perturbation terms \(\big [f^1_i(y_i,z_i) -f^1_i(y_s,z_s)\big ]\) and \(\big [f^2_i(y_i,z_i) -f^2_i(y_s,z_s)\big ]\). The former corresponds exactly to (4.7), only rewritten with another state variable. In the case that dynamic consensus is achieved (that is, in the case of complete synchronisation) and the graph is balanced and connected, we have \((y_i,z_i)\rightarrow (y_s,z_s)\). Nonetheless, in the case of a heterogeneous network, asymptotic synchronisation is in general hard to achieve; hence, in general, \((y_i,z_i)\not \rightarrow (y_s,z_s)\) and, consequently, the terms \(\big [f^1_i(y_i,z_i) -f^1_i(y_s,z_s)\big ]\) and \(\big [f^2_i(y_i,z_i) -f^2_i(y_s,z_s)\big ]\) do not vanish.
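The cancellation of the coupling in the average dynamics can be observed numerically: for any state of the network, the mean of the units' right-hand sides does not change with \(\sigma \), whereas each individual right-hand side does. The units below (n = 2, m = 1, with hypothetical heterogeneous parameters a_i, b_i, c_i) and the weighted path graph are assumptions made for illustration only; NumPy is assumed.

```python
import numpy as np

# Hypothetical heterogeneous units in normal form (n = 2, m = 1), i = 1, 2, 3:
#   y_i' = -a_i y_i + z_i + u_i,   z_i' = -b_i z_i + c_i sin(y_i)
a = np.array([1.0, 2.0, 0.5])
b = np.array([0.3, 1.0, 2.0])
c = np.array([1.0, -0.5, 2.0])

# Weighted path graph 1 - 2 - 3 and its Laplacian.
D = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 2.0],
              [0.0, 2.0, 0.0]])
L = np.diag(D.sum(axis=1)) - D


def network_rhs(y: np.ndarray, z: np.ndarray, sigma: float):
    """Right-hand sides of all units under diffusive coupling u = -sigma L y."""
    u = -sigma * (L @ y)
    return -a * y + z + u, -b * z + c * np.sin(y)


def average_rhs(y: np.ndarray, z: np.ndarray, sigma: float) -> np.ndarray:
    """Time derivative of the average state (y_s, z_s) at the network state (y, z)."""
    dy, dz = network_rhs(y, z, sigma)
    return np.array([dy.mean(), dz.mean()])


rng = np.random.default_rng(3)
y, z = rng.normal(size=3), rng.normal(size=3)
for sigma in (0.1, 10.0, 1000.0):
    print(sigma, average_rhs(y, z, sigma))   # identical up to rounding
```

The coupling gain still matters for the solutions, because it shapes the states \((y_i,z_i)\) at which the drifts are evaluated; it simply does not enter the averaged vector field.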

Thus, from a dynamical systems viewpoint, the average dynamics may be considered as a perturbed variant of the emergent dynamics. Consequently, it appears natural to recast the problem of dynamic consensus as one of robust stability analysis, in a broad sense. In contrast to the more commonly studied case of state synchronisation, we shall admit that synchronisation may be established with respect to part of the variables only, i.e. with respect to the outputs \(y_i\). More precisely, for the former case, similarly to (4.6), we introduce the state synchronisation manifold

$$\mathscr {S}_x:=\big \{x\in \mathbb {R}^{nN}: x_1=x_2=\cdots =x_N\big \}, \tag{4.11}$$

and, for the study of output synchronisation, we analyse the stability of the manifold

$$\mathscr {S}_y:=\big \{x\in \mathbb {R}^{nN}: y_1=y_2=\cdots =y_N\big \}. \tag{4.12}$$

Since, in the general case of heterogeneous networks, the perturbation terms may persist, it becomes natural to study synchronisation in a practical sense, that is, to seek to establish stability of the output or state synchronisation manifolds \({\mathscr {S}}_y \) or \({\mathscr {S}}_x\) in a practical sense only. This notion is defined precisely next.

Consider a parameterised system of differential equations

$$\dot{x}=f(x,\varepsilon ), \tag{4.13}$$

where \(x \in \mathbb {R}^n\), the function \(f: \mathbb {R}^n\times (0,\varepsilon _\circ ] \rightarrow \mathbb {R}^n\) is locally Lipschitz in x, and \(\varepsilon \) is a scalar parameter such that \(\varepsilon \in (0,\varepsilon _\circ ]\) with \(\varepsilon _\circ <\infty \). Given a closed set \(\mathscr {A}\), we define the distance of x to \(\mathscr {A}\) as \(|x|_{\mathscr {A}} := \displaystyle \inf _{y\in \mathscr {A}} |x-y|\).
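For the synchronisation manifold, the distance \(|x|_{\mathscr {A}}\) has a simple closed form. Assuming that \(\mathscr {A}\) is the consensus subspace \(\{x: x_1=\cdots =x_N\}\), the closest point to x is obtained by replacing every \(x_i\) with the average \(x_s\), so \(|x|_{\mathscr {A}}=|e|\) with \(e_i=x_i-x_s\). The NumPy sketch below (helper names hypothetical) compares this formula with a generic least-squares projection.

```python
import numpy as np


def distance_to_sync_manifold(X: np.ndarray) -> float:
    """|x|_A for A = {x_1 = ... = x_N}; row i of X is x_i."""
    x_s = X.mean(axis=0)                 # average state
    E = X - x_s                          # synchronisation errors e_i = x_i - x_s
    return float(np.linalg.norm(E))      # Frobenius norm = Euclidean norm of the stacked e


def distance_by_least_squares(X: np.ndarray) -> float:
    """Same distance, computed as min over w of |x - (1 kron I_n) w|."""
    N, n = X.shape
    A = np.kron(np.ones((N, 1)), np.eye(n))   # basis of the consensus subspace
    x = X.reshape(-1)                         # stacked x = [x_1; ...; x_N]
    w, *_ = np.linalg.lstsq(A, x, rcond=None)
    return float(np.linalg.norm(x - A @ w))


X = np.random.default_rng(7).normal(size=(4, 3))
print(distance_to_sync_manifold(X), distance_by_least_squares(X))
```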

Definition 4.2

For the system (4.13), we say that the closed set \(\mathscr {A}\subset \mathbb {R}^n\) is practically uniformly asymptotically stable if there exists a closed set \(\mathscr {D}\) such that \(\mathscr {A}\subset \mathscr {D}\subset \mathbb {R}^n\) and

(1) the system is forward complete for all \(x_\circ \in \mathscr {D}\);

(2) for any given \(\delta >0\) and \(R>0\), there exist \(\varepsilon ^*\in (0,\varepsilon _\circ ]\) and a class \(\mathscr {K}\mathscr {L}\) function \(\beta _{\delta R}\) such that, for all \(\varepsilon \in (0,\varepsilon ^*]\) and all \(x_\circ \in \mathscr {D}\) such that \(|x_\circ |_{\mathscr {A}}\le R\), we have

$$|x(t,x_\circ ,\varepsilon )|_{\mathscr {A}} \le \delta + \beta _{\delta R} \big (|x_\circ |_{\mathscr {A}},t\big ).$$

Remark 4.1

Similarly to the definition of uniform global asymptotic stability of a set, the previous definition encompasses three properties: uniform boundedness of the solutions with respect to the set, uniform stability of the set and uniform practical convergence to the set.

The following statement, which establishes practical asymptotic stability of sets, may be deduced along the lines of the proof of the main result in [4].

Proposition 4.1

Consider the system \(\dot{x}=f(x)\), where \(x\in \mathbb {R}^n\) and f is locally Lipschitz. Assume that the system is forward complete and that there exist a closed set \({\mathscr {A}}\subset \mathbb {R}^n\), a \(C^1\) function \(V:\mathbb {R}^n\rightarrow \mathbb {R}_+\), functions \(\alpha _1,\alpha _2\in {\mathscr {K}}_\infty \), \(\alpha _3\in {\mathscr {K}}\) and a constant \(c>0\) such that, for all \(x\in \mathbb {R}^n\),

$$\alpha _1(|x|_{\mathscr {A}})\le V(x)\le \alpha _2(|x|_{\mathscr {A}}),\qquad \frac{\partial V}{\partial x}(x)\,f(x)\le -\alpha _3(|x|_{\mathscr {A}})+c.$$

Then, for any \(R,\varepsilon >0\) there exists a constant \(T=T(R,\varepsilon )\) such that, for all \(t\ge T\) and all \(x_\circ \) such that \(|x_\circ |_{\mathscr {A}} \le R\),

$$|x(t,x_\circ )|_{\mathscr {A}}\le r+\varepsilon ,$$

where \(r=\alpha _1^{-1}\circ \alpha _2\circ \alpha _3^{-1}(c)\).
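A scalar toy example (our own, not from the chapter) shows how the bound r in Proposition 4.1 is computed. Take \(\dot{x}_1=-x_1+\bar{w}\sin \theta \), \(\dot{\theta }=1\), the closed set \(\mathscr {A}=\{x_1=0\}\times \mathbb {R}\), so that \(|x|_{\mathscr {A}}=|x_1|\), and \(V=x_1^2/2\). Young's inequality gives \(\dot{V}\le -x_1^2/2+\bar{w}^2/2\), so one may take \(\alpha _1=\alpha _2=\alpha _3=s^2/2\) and \(c=\bar{w}^2/2\), which yields \(r=\bar{w}\). The sketch assumes NumPy, \(\bar{w}=0.8\), \(R=5\), \(\varepsilon =0.05\), T = 10 and a fixed-step RK4 integrator.

```python
import numpy as np

W_BAR = 0.8                                   # hypothetical disturbance amplitude


def alpha(s):
    """alpha_1 = alpha_2 = alpha_3: s -> s**2 / 2."""
    return 0.5 * s ** 2


def alpha_inv(v):
    """Inverse of alpha on [0, inf)."""
    return np.sqrt(2.0 * v)


c = 0.5 * W_BAR ** 2
r = alpha_inv(alpha(alpha_inv(c)))            # r = alpha_1^{-1} o alpha_2 o alpha_3^{-1}(c)


def rhs(state: np.ndarray) -> np.ndarray:
    """x1' = -x1 + W_BAR sin(theta), theta' = 1: autonomous and forward complete."""
    x1, theta = state
    return np.array([-x1 + W_BAR * np.sin(theta), 1.0])


def simulate(x0, dt: float = 0.01, t_end: float = 20.0) -> np.ndarray:
    """Fixed-step RK4; returns the samples of the state."""
    x = np.asarray(x0, dtype=float)
    out = [x.copy()]
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(x)
        k2 = rhs(x + 0.5 * dt * k1)
        k3 = rhs(x + 0.5 * dt * k2)
        k4 = rhs(x + dt * k3)
        x = x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
        out.append(x.copy())
    return np.array(out)


traj = simulate([5.0, 0.0])                   # initial distance |x0|_A = 5 (= R)
T, eps, dt = 10.0, 0.05, 0.01
tail = np.abs(traj[int(round(T / dt)):, 0])   # |x(t)|_A for t >= T
print(r, tail.max(), tail.max() <= r + eps)
```

The observed ultimate amplitude (about \(\bar{w}/\sqrt{2}\)) is smaller than r, as expected: the Lyapunov-based bound is conservative.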

3 Network Dynamics

In the previous section, we motivated, albeit intuitively, the study of dynamic consensus and practical synchronisation as a stability problem of the attractor of the emergent dynamics as well as of the synchronisation manifold. In this section, we render this argument formal by showing that the networked dynamical systems model (4.5) is equivalent, up to a coordinate transformation, to a set of equations composed of the average system dynamics (4.10) with average state \(x_s\) and a synchronisation errors equation with state \(e= [e_1^\top \, \ldots \, e_N^\top ]^\top \), where \(e_i = x_i - x_s\) for all \(i \in \mathscr {I}\). It is clear that \(x\in \mathscr {S}_x\) if and only if \(e= 0\); hence, the general synchronisation problem is recast in the study of stability of the dynamics of e and \(x_s\).

3.1 New Coordinates

Let us formally justify that the choice of coordinates \(x_s\) and e completely and appropriately describes the networked systems’ behaviours.

Considering a network with an undirected and connected graph, the Laplacian matrix \(L=L^\top \) has a single zero eigenvalue \(\lambda _1=0\), and its corresponding normalised right and left eigenvectors \(v_{r1}\), \(v_{l1}\) coincide with \(v=\frac{1}{\sqrt{N}}\mathbf{1}\), where \(\mathbf{1} \in \mathbb {R}^N\) denotes the vector \([1\ 1\, \ldots \, 1]^\top \). Moreover, since L is symmetric and positive semidefinite, there exists (see [2, Chap. 4, Theorems 2 and 3]) an orthogonal matrix U (i.e. \(U^{-1}=U^\top \)) such that \(L=U\varLambda U^\top \) with \(\varLambda = \text{ diag }\{[0\ \lambda _2\ \ldots \ \lambda _N ]\}\), where \(\lambda _i>0\), for all \(i\in \{2,\ldots ,N\}\), are the eigenvalues of L. Furthermore, the ith column of U is an eigenvector of L associated with the ith eigenvalue, \(\lambda _i\). Therefore, recognising v as the first eigenvector, we decompose the matrix U as

$$U=\begin{bmatrix} v & U_1\end{bmatrix}, \tag{4.14}$$

where \(U_1\in \mathbb {R}^{N\times (N-1)}\) is a matrix composed of \(N-1\) eigenvectors of L associated with \(\lambda _2,\, \ldots ,\, \lambda _N\) and, since U is orthogonal (the eigenvectors of a real symmetric matrix can always be chosen orthonormal), we have

$$v^\top U_1=0,\qquad U_1^\top U_1=I_{N-1}. \tag{4.15}$$

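Based on the latter observations, we introduce the coordinate transformation

$$\bar{x}=\mathscr {U}^\top x,$$

where the matrix \({\mathscr {U}} \in \mathbb {R}^{nN\times nN}\) is defined as

$$\mathscr {U}:=U\otimes I_n,$$

which, in view of (4.15), is also orthogonal. Then, we use (4.14) to partition the new coordinates \(\bar{x}\), i.e.

$$\bar{x}=\begin{bmatrix}\bar{x}_1\\ \bar{x}_2\end{bmatrix}=\begin{bmatrix}(v^\top \otimes I_n)\,x\\ (U_1^\top \otimes I_n)\,x\end{bmatrix},\qquad \bar{x}_1\in \mathbb {R}^n,\ \bar{x}_2\in \mathbb {R}^{(N-1)n}.$$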

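The coordinates \(\bar{x}_1\) and \(\bar{x}_2\) thus obtained are equivalent to the average \(x_s\) and the synchronisation errors e, respectively. Indeed, observing that the state of the average unit, defined in (4.8), may be rewritten in the compact form

$$x_s=\frac{1}{N}(\mathbf{1}^\top \otimes I_n)\,x, \tag{4.16}$$

we obtain \(\bar{x}_1 = \sqrt{N} x_s\). On the other hand, \(\bar{x}_2=0\) if and only if \(e= 0\). To see the latter, let \({\mathscr {U}}_1= U_1\otimes I_n\); then, using the mixed-product property of the Kronecker product,

$$(A_1\otimes A_2)(A_3\otimes A_4)=(A_1A_3)\otimes (A_2A_4), \tag{4.17}$$

we obtain

$$\mathscr {U}_1\mathscr {U}_1^\top =(U_1U_1^\top )\otimes I_n$$

and, observing that \(UU^\top =vv^\top +U_1U_1^\top =I_N\), that is,

$$U_1U_1^\top =I_N-\frac{1}{N}\mathbf{1}\mathbf{1}^\top ,$$

we get

$$\mathscr {U}_1\mathscr {U}_1^\top =I_{nN}-\frac{1}{N}(\mathbf{1}\mathbf{1}^\top \otimes I_n). \tag{4.18}$$

Therefore, multiplying \(\bar{x}_2=\mathscr {U}_1^\top x\) by \({\mathscr {U}}_1\) and using (4.18), we obtain

$$\mathscr {U}_1\bar{x}_2=x-\frac{1}{N}(\mathbf{1}\mathbf{1}^\top \otimes I_n)\,x,$$

which, in view of (4.17), is equivalent to

$$\mathscr {U}_1\bar{x}_2=x-(\mathbf{1}\otimes I_n)\,x_s=e.$$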

Since \(\mathscr {U}_1\) has column rank equal to \((N-1)n\), which corresponds to the dimension of \(\bar{x}_2\), we see that \(\bar{x}_2\) is equal to zero if and only if so is e.
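The change of coordinates can be reproduced numerically. The sketch below, assuming NumPy, computes U from an eigendecomposition of a hypothetical four-node Laplacian, forms \(\bar{x}=(U\otimes I_n)^\top x\), and checks that \(\bar{x}_1=\sqrt{N}x_s\) and that \((U_1\otimes I_n)\bar{x}_2=e\), so that \(\bar{x}_2\) and e vanish together. Because an eigensolver returns eigenvectors only up to sign, the first column is flipped if needed so that it equals \(+\mathbf{1}/\sqrt{N}\); the function name is illustrative.

```python
import numpy as np


def sync_coordinates(L: np.ndarray, X: np.ndarray):
    """Return x_bar_1, x_bar_2, x_s, e and the matrix U1 kron I_n for states X (row i = x_i)."""
    N, n = X.shape
    lam, U = np.linalg.eigh(L)              # L = U diag(lam) U^T, lam ascending, lam[0] = 0
    U = U.copy()
    U[:, 0] *= np.sign(U[0, 0])             # make the first column +1/sqrt(N) * 1
    calU = np.kron(U, np.eye(n))            # U kron I_n, orthogonal
    x = X.reshape(-1)                       # stacked x = [x_1; ...; x_N]
    x_bar = calU.T @ x                      # new coordinates
    x_s = X.mean(axis=0)                    # average state
    e = (X - x_s).reshape(-1)               # stacked synchronisation errors
    calU1 = calU[:, n:]                     # equals U1 kron I_n
    return x_bar[:n], x_bar[n:], x_s, e, calU1


# Hypothetical connected graph with edges 1-2, 1-3, 2-3, 3-4.
D = np.array([[0.0, 1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0, 0.0],
              [1.0, 1.0, 0.0, 1.0],
              [0.0, 0.0, 1.0, 0.0]])
L = np.diag(D.sum(axis=1)) - D
X = np.random.default_rng(4).normal(size=(4, 3))
xb1, xb2, x_s, e, calU1 = sync_coordinates(L, X)
print(np.linalg.norm(xb2), np.linalg.norm(e))   # equal, since calU1 has orthonormal columns
```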

Even though the state space of \((x_s,e)\) is of higher dimension than that of the original networked system (4.1), only together do the synchronisation error dynamics and the average dynamics give a complete characterisation of the network behaviour. Thus, the states \(x_s\) and e are intrinsic to the network and not the product of an artifice with purely theoretical motivations.

We proceed to derive the differential equations in terms of the average state \(x_s\) and the synchronisation errors e.

3.2 Dynamics of the Average Unit

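Using the network dynamics Eq. (4.5a), as well as (4.16), we obtain

$$\dot{x}_s=\frac{1}{N}(\mathbf{1}^\top \otimes I_n)\big [F(x)-\sigma (L\otimes E_m)\,y\big ].$$

Now, using the property of the Kronecker product, (4.17), and in view of the identity \(\mathbf{1 }^\top L=0\), we obtain

$$\dot{x}_s=\frac{1}{N}(\mathbf{1}^\top \otimes I_n)\,F(x). \tag{4.19}$$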

This reveals the important fact that the average dynamics, i.e. the right-hand side of (4.19), is independent of the interconnection gain \(\sigma \), even though the solutions \(x_s(t)\) are certainly affected by the synchronisation errors and, hence, by the coupling strength.

Now, using (4.4) and defining

we obtain

Therefore, defining

we see that we may write the average dynamics in the compact form,

Furthermore, since the functions \(F_i\), with \(i\in {\mathscr {I}}\), are locally Lipschitz so is the function \(G_s\) and, moreover, there exists a continuous, positive, non-decreasing function \(k: \mathbb {R}_+\times \mathbb {R}_+\rightarrow \mathbb {R}_+\), such that

In summary, the average dynamics is described by Eq. (4.21), that is, by the nominal system (4.7), which corresponds to the emergent dynamics, perturbed by the synchronisation errors of the network via the term \(G_s\).

3.3 Dynamics of the Synchronisation Errors

To study the effect of the synchronisation errors, e(t), on the emergent dynamics, we start by introducing the vectors

i.e. \(\tilde{F}(e,x_s) = F(x) - F_s(x_s)\). Then, differentiating on both sides of

and using (4.5a) and (4.21), we obtain

Next, let us introduce the output synchronisation errors \(e_{yi}=y_i-y_s\), that is, \(e_y = [e_{y1}^\top ,\ \ldots , e_{yN}^\top ]^\top \), which may also be written as

and let us consider the first term and the two groups of bracketed terms on the right-hand side of (4.23), separately. For the term \(\big (L\otimes E_m\big )y\) we observe, from (4.24), that

and we use (4.17) and the fact that \(L\mathbf{1 }=0\) to obtain

Second, concerning the first bracket on the right-hand side of (4.23), we observe that, in view of (4.20) and (4.22),

Therefore,

Then, using (4.17) we see that

So, introducing

we obtain

Finally, concerning the term \( \tilde{F}(e,x_s)-({\mathbf{1}\otimes I_n})G_s(e, x_s)\) on the right-hand side of (4.23), we see that, by definition, \(G_s(e,x_s)=\frac{1}{N} \big ({\mathbf{1}^\top \otimes I_n}\big ) \tilde{F}(e,x_s)\); hence, from (4.25), we obtain

and

Using (4.26) and (4.27) in (4.23), we see that the latter may be expressed as

The utility of this equation is that it clearly exhibits three terms: a term linear in the output \(e_y\) which reflects the synchronisation effect of diffusive coupling between the nodes, the term \(P\tilde{F}(e,x_s)\) which vanishes with the synchronisation errors, i.e. if \(e=0\), and the term

which represents the variation between the dynamics of the individual units and that of the average unit. This term equals zero, e.g. when the nominal dynamics \(f_i\) in (4.1a) of all the units are identical, that is, in the case of a homogeneous network (Fig. 4.1).
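In the simplest homogeneous case, the mismatch term vanishes and the role of the term linear in the errors becomes transparent. Assuming, only for this sketch, identical linear units \(\dot{x}_i=Ax_i+u_i\) with full-state coupling \(u=-\sigma (L\otimes I_n)x\), one finds \(\dot{e}=(I_N\otimes A-\sigma L\otimes I_n)e\); restricted to the error subspace spanned by \(U_1\otimes I_n\), its eigenvalues are those of \(A-\sigma \lambda _k I_n\), \(k=2,\ldots ,N\). The NumPy code below (matrix A and path graph hypothetical) checks this and shows that the errors decay once \(\sigma \lambda _2\) exceeds the largest real part of the eigenvalues of A.

```python
import numpy as np


def error_modes(A: np.ndarray, L: np.ndarray, sigma: float) -> np.ndarray:
    """Eigenvalues of e' = (I_N kron A - sigma L kron I_n) e on the error subspace."""
    N, n = L.shape[0], A.shape[0]
    _, U = np.linalg.eigh(L)                      # columns 1..N-1 span the complement of 1
    U1 = np.kron(U[:, 1:], np.eye(n))             # orthonormal basis of {e : e_1 + ... + e_N = 0}
    M = np.kron(np.eye(N), A) - sigma * np.kron(L, np.eye(n))
    return np.linalg.eigvals(U1.T @ M @ U1)


A = np.array([[0.1, 1.0],
              [-1.0, 0.1]])                       # identical, mildly unstable oscillators
D_path = np.array([[0.0, 1.0, 0.0, 0.0],
                   [1.0, 0.0, 1.0, 0.0],
                   [0.0, 1.0, 0.0, 1.0],
                   [0.0, 0.0, 1.0, 0.0]])
L = np.diag(D_path.sum(axis=1)) - D_path

for sigma in (0.1, 1.0):
    # Largest real part of the error modes: positive -> errors grow, negative -> they decay.
    print(sigma, error_modes(A, L, sigma).real.max())
```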

4 Stability analysis

All is in place to present our main statements on stability of the networked systems model (4.5). For the purpose of analysis, we use the equations previously developed, in the coordinates e and \(x_s\), which we recall here for convenience:

These equations correspond to two feedback-interconnected systems, as illustrated in Fig. 4.1. For the system (4.28a), we study stability with respect to a compact attractor that is intrinsic to the emergent dynamics, and we establish conditions under which the average of the trajectories of the interconnected units remains close to this attractor. For the system (4.28b), we study robust stability of the synchronisation manifolds \(\mathscr {S}_y\) and \(\mathscr {S}_x\).

4.1 Practical Synchronisation Under Diffusive Coupling

We formulate conditions that ensure practical global asymptotic stability of the sets \({\mathscr {S}}_x\) and \({\mathscr {S}}_y\)—see (4.11), (4.12). This implies practical state and output synchronisation of the network, respectively. Furthermore, we show that the upper bound on the state synchronisation error depends on the mismatches between the dynamics of the individual units of the network.

Theorem 4.1

(Output synchronisation) Let the solutions of the system (4.5) be globally ultimately bounded. Then, the set \({\mathscr {S}}_y\) is practically uniformly globally asymptotically stable with \(\varepsilon =1/\sigma \). If, moreover, Assumption 4.1 holds, then there exists a function \(\beta \in {\mathscr {K}}_\infty ^3\) such that, for any \(\varepsilon > 0\) and \(R>0\), there exist \(T^*>0\) and \(\sigma ^* >0\) such that the solutions of (4.28b) with \(\sigma \ge \sigma ^*\) satisfy

where

The bound on the synchronisation errors, \(\beta \), is a function of the constants \(\bar{\alpha }_k\), \(\beta _k\) defined in Assumption 4.1, as well as of the degree of heterogeneity of the network, characterised by \(\varDelta _f\). In the definition of the latter, \(B_x\) is the radius of the ball to which, by assumption, the solutions ultimately converge. In particular, Theorem 4.1 guarantees that the perturbing effect of heterogeneity in the network may be diminished at will by increasing the interconnection strength.
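The effect described by Theorem 4.1 can be seen in a toy linear network, not taken from the chapter: scalar units \(\dot{x}_i=-x_i+a_i+u_i\) with distinct constants \(a_i\) (a crude stand-in for heterogeneity) and coupling \(u=-\sigma Lx\). At the unique, globally attractive equilibrium, \(e=(I_N+\sigma L)^{-1}(a-\bar{a}\mathbf{1})\), so \(|e|\le |a-\bar{a}\mathbf{1}|/(1+\sigma \lambda _2)\): the synchronisation error shrinks as \(\sigma \) grows, although it does not vanish for finite \(\sigma \). The parameters, graph and function name below are hypothetical; NumPy is assumed.

```python
import numpy as np


def equilibrium_sync_error(a: np.ndarray, L: np.ndarray, sigma: float) -> float:
    """|e| at the equilibrium of x_i' = -x_i + a_i + u_i, u = -sigma L x (scalar units)."""
    N = len(a)
    x_eq = np.linalg.solve(np.eye(N) + sigma * L, a)    # (I + sigma L) x = a
    return float(np.linalg.norm(x_eq - x_eq.mean()))    # e_i = x_i - x_s


a = np.array([1.0, -2.0, 0.5, 3.0])                      # hypothetical heterogeneous drifts
D_path = np.array([[0.0, 1.0, 0.0, 0.0],
                   [1.0, 0.0, 1.0, 0.0],
                   [0.0, 1.0, 0.0, 1.0],
                   [0.0, 0.0, 1.0, 0.0]])
L = np.diag(D_path.sum(axis=1)) - D_path
lam2 = np.linalg.eigvalsh(L)[1]
mismatch = np.linalg.norm(a - a.mean())                  # size of the heterogeneity

sigmas = (0.1, 1.0, 10.0, 100.0)
errors = [equilibrium_sync_error(a, L, s) for s in sigmas]
for s, err in zip(sigmas, errors):
    print(f"sigma={s:6.1f}  |e|={err:.4f}  bound={mismatch / (1.0 + s * lam2):.4f}")
```

Here the average of the equilibrium equals \(\bar{a}\) for every \(\sigma \), which mirrors the fact that the emergent dynamics \(\dot{x}_e=-x_e+\bar{a}\) does not depend on the coupling gain.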

The proof of this theorem is provided in [15]. Roughly speaking, the first statement (output synchronisation) follows from two properties of the networked system, namely, positivity of the second smallest eigenvalue \(\lambda _2\) of the Laplacian matrix L, which holds because the graph is connected, and global ultimate boundedness. In turn, global ultimate boundedness holds, e.g., under the following assumption.

Assumption 4.2

For each \(i\in \mathscr {I}\), the system (4.2) defines a strictly semi-passive map \(u_i\mapsto y_i\) with continuously differentiable and radially unbounded storage functions \(V_i: \mathbb {R}^n\rightarrow \mathbb {R}_+\). That is, there exist positive definite and radially unbounded storage functions \(V_i\), positive constants \(\rho _i\), continuous functions \(H_i\) and positive continuous functions \({ \varrho }_i\) such that

$$\dot{V}_i(x_i)\le y_i^\top u_i-H_i(x_i)$$

and \( H_i(x_i) \ge \varrho _i(|x_i|)\) for all \(|x_i|\ge \rho _i\).

Indeed, the following statement is reminiscent of [16, Corollary 1].

Proposition 4.2

Consider a network of N diffusively coupled units (4.5). Let the graph of interconnections be undirected and connected and assume that all the units of the network are strictly semi-passive (i.e. Assumption 4.2 holds). Then, the solutions of the system (4.5) are ultimately bounded.

Proof

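We proceed as in the proof of [16, Lemma 1] and [22, Proposition 2.1]. Let Assumption 4.2 generate positive definite storage functions \(V_{i}\), as well as functions \(\varrho _i\), \(H_i\) and constants \(\rho _i\), defined as above, and let

$$V_\varSigma (x):=\sum _{i=1}^{N} V_i(x_i).$$

Then, taking the derivative of \( V_\varSigma (x)\) along trajectories of the system (4.5), we obtain

$$\dot{V}_\varSigma (x)\le \sum _{i=1}^{N}\big [y_i^\top u_i-H_i(x_i)\big ]=-\sigma \,y^\top (L\otimes I_m)\,y-\sum _{i=1}^{N}H_i(x_i)\le -\sum _{i=1}^{N}H_i(x_i), \tag{4.30}$$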

where, for the last inequality, we used the fact that the Laplacian matrix is positive semidefinite. Next, let \(\bar{\rho }=\max _{1\le i \le N}\{\rho _i\}\) and consider the function \(\bar{\varrho }:[\bar{\rho },+\infty )\rightarrow \mathbb {R}_{\ge 0}\) defined as \(\bar{\varrho }(s)=\min _{1\le i \le N}\{\varrho _i(s)\}\). Note that \(\bar{\varrho }\) is continuous and \(\bar{\varrho }(s)\) is positive for all \(s\ge \bar{\rho }\). Furthermore, for any \(|x|\ge N\bar{\rho }\) there exists \(k\in {\mathscr {I}}\) such that \(|x_k|\ge \frac{1}{N}|x|\ge \bar{\rho }\). Therefore, for all \(|x|\ge N\bar{\rho }\),

Using the last bound in (4.30) we obtain, for all \(|x|\ge N\bar{\rho }\),

Hence, invoking [23, Theorem 10.4] we conclude that the solutions of the system (4.5) are ultimately bounded. \(\square \)
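As a worked illustration of Assumption 4.2 (an example of our own choosing, not taken from the chapter), consider scalar units with n = m = 1, so that there are no zero dynamics, given by \(\dot{x}_i = x_i - x_i^3 + u_i\), \(y_i = x_i\). With the storage function \(V_i(x_i) = x_i^2/2\),

$$\dot{V}_i = x_i\big (x_i - x_i^3 + u_i\big ) = y_i u_i - H_i(x_i),\qquad H_i(x_i) := x_i^4 - x_i^2 .$$

Since \(H_i(x_i) = \varrho _i(|x_i|)\) with \(\varrho _i(s) := s^2(s^2 - 1)\), which is positive for all \(s\ge \rho _i\) whenever \(\rho _i > 1\) (e.g. \(\rho _i = 2\)), each unit is strictly semi-passive and, by Proposition 4.2, a diffusively coupled network of such units over a connected undirected graph has ultimately bounded solutions.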

Some interesting corollaries on state synchronisation follow from Theorem 4.1, for instance, when the interconnections among the network units depend on the whole state, that is, when \(y=x\).

Corollary 4.1

Consider the system (4.5). Let Assumptions 4.1 and 4.2 be satisfied and let \(y=x\). Then, the system is forward complete and the set \({\mathscr {S}}_x\) is practically uniformly globally asymptotically stable with \(\varepsilon =1/\sigma \).

The constant \(\varDelta _f\) defined in (4.29) represents the maximal possible mismatch between the dynamics of any individual unit and that of the averaged unit on a ball of radius \(B_x\). The more heterogeneous the network, the larger the constant \(\varDelta _f\). Conversely, in the case that the zero dynamics of all the units are identical, we have \(\varDelta _f=0\). In this case, we obtain the following statement.

Corollary 4.2

Consider the system (4.5) under Assumptions 4.1 and 4.2. Assume that the functions \(f^2_i\), which define the zero dynamics of the network units, are all identical, i.e. \(f^2_i(x)=f^2_j(x)\) for all \(i,j\in \mathscr {I}\) and all \(x\in \mathbb {R}^{n}\). Then, the set \({\mathscr {S}}_x\) is practically uniformly globally asymptotically stable with \(\varepsilon =1/\sigma \).

4.2 On Practical Stability of the Collective Network Behaviour

Now we analyse the behaviour of the average unit, whose dynamics is given by Eq. (4.28a). We assume that the nominal dynamics of the average unit (i.e. with \(e=0\)) has a stable compact attractor \({\mathscr {A}}\), and we establish that the stability properties of this attractor are preserved under the network interconnection, albeit slightly weakened.

Assumption 4.3

For the system (4.7), there exists a compact invariant set \({\mathscr {A}} \subset \mathbb {R}^{n}\) which is asymptotically stable with a domain of attraction \({\mathscr {D}} \subset \mathbb {R}^n\). Moreover, we assume that there exists a continuously differentiable Lyapunov function \(V_{\mathscr {A}}: \mathbb {R}^n\rightarrow \mathbb {R}_{\ge 0}\) and functions \(\alpha _i\in {\mathscr {K}}_\infty \), \(i\in \{1,\ldots , 4\}\) such that for all \(x_e \in {\mathscr {D}}\) we have

The second part of the assumption (the existence of \(V_{\mathscr {A}}\)) is purely technical, whereas the first part is essential to analyse the emergent synchronised behaviour, as well as the synchronisation properties of the network, recast as a (robust) stability problem. The following statement applies to the general case of diffusively coupled networks.

Theorem 4.2

For the system (4.5), assume that the solutions are globally ultimately bounded and that Assumptions 4.1 and 4.3 hold. Then there exist a non-decreasing function \(\gamma :\mathbb {R}_+\times \mathbb {R}_+\rightarrow \mathbb {R}_+\) and, for any \(R,\varepsilon >0\), a time \(T^* = T^*(R,\varepsilon )\) such that, for all \(t\ge T^*\) and all \(x_\circ \) such that \(|x_\circ |_{\mathscr {A}} \le R\),

If the network is practically state synchronised, it follows that the set \({\mathscr {A}}\) is practically stable for the network (4.5).

Corollary 4.3

Consider the system (4.5) under Assumption 4.3. If the set \({\mathscr {S}}_x\) is practically uniformly globally asymptotically stable for this system, then the attractor \({\mathscr {A}}\) defined in Assumption 4.3 is practically asymptotically stable for the average unit (4.21).
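Theorem 4.2 and Corollary 4.3 are phrased in terms of the distance \(|x|_{\mathscr {A}}=\min _{a\in {\mathscr {A}}}|x-a|\) from a point to the compact attractor. When \({\mathscr {A}}\) is only known through sampled points (for instance, points collected along a long simulated trajectory of the average unit), a simple approximation is the minimum over the samples. The sketch below assumes such a finite sample; the result is an upper bound on the true distance, exact only if the nearest point of \({\mathscr {A}}\) is among the samples. The name `distance_to_set` is hypothetical.

```python
import numpy as np

def distance_to_set(x, set_samples):
    """Approximate |x|_A = min_{a in A} |x - a| from a finite sample of A.

    `set_samples` has shape (M, n). Because the samples lie in A, the returned
    value is an upper bound on the true point-to-set distance.
    """
    samples = np.asarray(set_samples, dtype=float)
    diffs = samples - np.asarray(x, dtype=float)
    return float(np.linalg.norm(diffs, axis=1).min())

# Illustrative set: the unit circle in R^2, sampled at 3600 points.
theta = np.linspace(0.0, 2.0 * np.pi, 3600, endpoint=False)
circle = np.column_stack([np.cos(theta), np.sin(theta)])
```

For the point \((3,4)\), whose exact distance to the unit circle is 4, the sampled value exceeds 4 only by a quantity of the order of the squared angular spacing.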

5 Example

To illustrate our theoretical findings, we present a brief case study on the analysis of heterogeneous systems interconnected via diffusive coupling. We consider three of the best-known chaotic oscillators: the Rössler [20], the Lorenz [10] and the Lü [11] systems. The equations of these forced oscillators are the following:

The parameter values of the three systems are chosen so that they exhibit chaotic behaviour when unforced; they are collected in Table 4.1.
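For readers who wish to reproduce qualitatively similar attractors, the sketch below writes the unforced vector fields in their standard textbook form. Table 4.1 is not reproduced in this text, so the parameter values used here are the classic chaotic ones (Rössler \(a=b=0.2\), \(c=5.7\); Lorenz \(s=10\), \(r=28\), \(\beta =8/3\); Lü \(a=36\), \(b=3\), \(c=20\)); they are assumptions and may differ from the authors' choices. The Lorenz parameter usually called \(\sigma \) is named `s` here to avoid a clash with the interconnection gain.

```python
import numpy as np

# Classic chaotic parameter values; assumed, since Table 4.1 is not reproduced here.
ROSSLER = {"a": 0.2, "b": 0.2, "c": 5.7}
LORENZ = {"s": 10.0, "r": 28.0, "beta": 8.0 / 3.0}
LU = {"a": 36.0, "b": 3.0, "c": 20.0}

def rossler(v, a=ROSSLER["a"], b=ROSSLER["b"], c=ROSSLER["c"]):
    """Unforced Rössler vector field."""
    x, y, z = v
    return np.array([-y - z, x + a * y, b + z * (x - c)])

def lorenz(v, s=LORENZ["s"], r=LORENZ["r"], beta=LORENZ["beta"]):
    """Unforced Lorenz vector field."""
    x, y, z = v
    return np.array([s * (y - x), x * (r - z) - y, x * y - beta * z])

def lu(v, a=LU["a"], b=LU["b"], c=LU["c"]):
    """Unforced Lü vector field."""
    x, y, z = v
    return np.array([a * (y - x), -x * z + c * y, x * y - b * z])
```

A quick sanity check is that each field vanishes at its known equilibria, e.g. \((\pm \sqrt{\beta (r-1)},\pm \sqrt{\beta (r-1)},r-1)\) for Lorenz and \((\pm \sqrt{bc},\pm \sqrt{bc},c)\) for Lü.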

Since the three systems are chaotic oscillators, their trajectories are globally ultimately bounded; they converge to the strange attractors depicted in Fig. 4.2. This figure also shows the phase portrait of the average solution \(x_s(t)\) of the three unforced oscillators (with \(u_\ell =u_m=u_r=0\)). Then, we apply the respective inputs

where \(\text {y}_{(\cdot )}\) are the measured outputs. We have simulated two scenarios. In the first, we assume that only the \(x_{(\cdot )}\) coordinate of each oscillator is measured; hence,

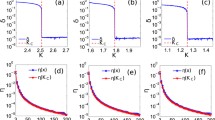

Fig. 4.3 depicts the phase portrait of the Rössler system superimposed on that of the average solution. On the one hand, the synchronisation errors diminish as the interconnection gain increases. On the other hand, the behaviour of the oscillators' solutions also changes: for relatively large values of \(\sigma \) (50 and 80), the chaotic behaviour is lost and the systems stabilise.

In the second scenario, we assume that both the \(x_{(\cdot )}\) and the \(y_{(\cdot )}\) coordinates are measured; hence,

The simulation results in this case, for different values of the interconnection gain \(\sigma \), are shown in Figs. 4.4 and 4.5. With two inputs, the systems “lose” their chaotic response and stabilise to an equilibrium. In Fig. 4.4 we plot the norm of the output synchronisation errors \(|e_y(t)| = |\text{y}(t) - \mathbf{1}\otimes y_s(t)|\); the errors clearly diminish as the interconnection gain increases. Finally, Fig. 4.5 shows the phase portraits for four different values of \(\sigma \), which clearly show that output synchronisation occurs.
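A rough numerical counterpart of both scenarios can be sketched as follows. Because the input equations are not reproduced here, we assume an undirected all-to-all graph on the three oscillators, diffusive inputs \(u=-\sigma (L\otimes I_p)\text{y}\) added to the equations of the measured coordinates, the classic parameter values and hypothetical initial conditions. The code records \(|e_y(t)|=|\text{y}(t)-\mathbf{1}\otimes y_s(t)|\), with \(y_s\) the average output. It is a sketch under these assumptions, not the authors' simulation setup, so its numbers need not match Figs. 4.3, 4.4 and 4.5.

```python
import numpy as np

# Unforced vector fields with classic chaotic parameters (assumed, not Table 4.1).
def rossler(v):
    x, y, z = v
    return np.array([-y - z, x + 0.2 * y, 0.2 + z * (x - 5.7)])

def lorenz(v):
    x, y, z = v
    return np.array([10.0 * (y - x), x * (28.0 - z) - y, x * y - (8.0 / 3.0) * z])

def lu(v):
    x, y, z = v
    return np.array([36.0 * (y - x), -x * z + 20.0 * y, x * y - 3.0 * z])

FIELDS = (rossler, lorenz, lu)
# Laplacian of the undirected complete graph on three nodes (assumed topology).
LAPLACIAN = np.array([[2.0, -1.0, -1.0],
                      [-1.0, 2.0, -1.0],
                      [-1.0, -1.0, 2.0]])

def network_rhs(X, sigma, measured):
    """Right-hand side for X of shape (3, 3), one row per oscillator.

    The diffusive input -sigma * L @ Y, with Y the measured coordinates, is added
    to the equations of those coordinates (an assumed input placement)."""
    dX = np.stack([f(row) for f, row in zip(FIELDS, X)])
    dX[:, measured] += -sigma * LAPLACIAN @ X[:, measured]
    return dX

def simulate(sigma, measured=(0,), T=20.0, dt=1e-3, X0=None):
    """Fixed-step RK4; returns the time grid and |e_y(t)| = |y(t) - 1 (x) y_s(t)|."""
    measured = list(measured)
    if X0 is None:  # hypothetical initial conditions
        X0 = [[1.0, 1.0, 1.0], [-1.0, 2.0, 3.0], [2.0, -1.0, 5.0]]
    X = np.array(X0, dtype=float)
    steps = int(round(T / dt))
    times = dt * np.arange(steps + 1)
    errors = np.empty(steps + 1)
    for k in range(steps + 1):
        Y = X[:, measured]                               # outputs, one row per unit
        errors[k] = np.linalg.norm(Y - Y.mean(axis=0))   # y_s is the average output
        if k == steps:
            break
        k1 = network_rhs(X, sigma, measured)
        k2 = network_rhs(X + 0.5 * dt * k1, sigma, measured)
        k3 = network_rhs(X + 0.5 * dt * k2, sigma, measured)
        k4 = network_rhs(X + dt * k3, sigma, measured)
        X = X + (dt / 6.0) * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return times, errors
```

For example, `simulate(50.0, measured=(0, 1))` integrates the second scenario with \(\sigma =50\); comparing the tail of the returned errors for several gains gives a rough analogue of Fig. 4.4. Fixed-step RK4 is used only to keep the sketch dependency-free; an adaptive integrator would be preferable for long horizons.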

Notes

1. For two matrices \(A\) and \(B\) of any dimensions, the Kronecker product \(A\otimes B\) is the block matrix whose \(ij\)th block is \(a_{ij}B\).
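The footnote's definition can be checked directly: the sketch below assembles \(A\otimes B\) block by block and compares it with NumPy's `numpy.kron`. The same product yields the stacked vector \(\mathbf{1}\otimes y_s\) used for the output synchronisation errors in Sect. 5. The helper name `kron_blocks` is hypothetical.

```python
import numpy as np

def kron_blocks(A, B):
    """Assemble A (x) B block by block: the (i, j)th block is a_ij * B."""
    A = np.atleast_2d(np.asarray(A))
    B = np.atleast_2d(np.asarray(B))
    m, n = A.shape
    p, q = B.shape
    out = np.zeros((m * p, n * q), dtype=np.result_type(A, B))
    for i in range(m):
        for j in range(n):
            out[i * p:(i + 1) * p, j * q:(j + 1) * q] = A[i, j] * B
    return out

# 1 (x) y_s stacks N copies of the average output y_s.
ones = np.ones((3, 1))
y_s = np.array([[4.0], [5.0]])
stacked = kron_blocks(ones, y_s)
```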

References

[1] Angeli, D.: A Lyapunov approach to incremental stability properties. IEEE Trans. Autom. Cont. 47(3), 410–421 (2002)

[2] Bellman, R.E.: Introduction to Matrix Analysis, vol. 10. McGraw-Hill, New York (1970)

[3] Belykh, I., de Lange, E., Hasler, M.: Synchronization of bursting neurons: what matters in the network topology. Phys. Rev. Lett. 94, 188101 (2005)

[4] Corless, M., Leitmann, G.: Continuous state feedback guaranteeing uniform ultimate boundedness for uncertain dynamic systems. IEEE Trans. Autom. Cont. 26(5), 1139–1144 (1981)

[5] Efimov, D., Panteley, E., Loría, A.: Switched mutual–master–slave synchronisation: Application to mechanical systems. In: Proceedings of the 17th IFAC World Congress, pp. 11508–11513, Seoul, Korea (2008)

[6] Franci, A., Scardovi, L., Chaillet, A.: An input–output approach to the robust synchronization of dynamical systems with an application to the Hindmarsh–Rose neuronal model. In: Proceedings of the 50th IEEE Conference on Decision and Control and European Control Conference (CDC-ECC), pp. 6504–6509 (2011)

[7] Isidori, A.: Nonlinear Control Systems II. Springer, London (1999)

[8] Jouffroy, J., Slotine, J.J.: Methodological remarks on contraction theory. In: Proceedings of the 43rd IEEE Conference on Decision and Control, vol. 3, pp. 2537–2543 (2004)

[9] Lohmiller, W., Slotine, J.-J.: Contraction analysis of non-linear distributed systems. Int. J. Control 78, 678–688 (2005)

[10] Lorenz, E.N.: Deterministic nonperiodic flow. J. Atmos. Sci. 20, 130–141 (1963)

[11] Lü, J.H., Chen, G.: A new chaotic attractor coined. Int. J. Bifurc. Chaos 12(3), 659–661 (2002)

[12] Nijmeijer, H., Mareels, I.: An observer looks at synchronization. IEEE Trans. Circuits Sys. I: Fundam. Theory Appl. 44(10), 882–890 (1997)

[13] Nijmeijer, H., Rodríguez-Angeles, A.: Synchronization of Mechanical Systems. Nonlinear Science, Series A, vol. 46. World Scientific, Singapore (2003)

[14] Oguchi, T., Yamamoto, T., Nijmeijer, H.: Synchronization of bidirectionally coupled nonlinear systems with time-varying delay. Topics in Time Delay Systems, pp. 391–401. Springer, Berlin (2009)

[15] Panteley, E.: A stability-theory perspective to synchronisation of heterogeneous networks. Habilitation à diriger des recherches (DrSc Dissertation). Université Paris Sud, Orsay, France (2015)

[16] Pogromski, A.Y., Glad, T., Nijmeijer, H.: On diffusion driven oscillations in coupled dynamical systems. Int. J. Bifurc. Chaos Appl. Sci. Eng. 9(4), 629–644 (1999)

[17] Pogromski, A.Y., Nijmeijer, H.: Cooperative oscillatory behavior of mutually coupled dynamical systems. IEEE Trans. Circuits Sys. I: Fundam. Theory Appl. 48(2), 152–162 (2001)

[18] Pogromski, A.Y., Santoboni, G., Nijmeijer, H.: Partial synchronization: from symmetry towards stability. Phys. D: Nonlinear Phenom. 172(1–4), 65–87 (2002)

[19] Ren, W., Beard, R.W.: Distributed consensus in multivehicle cooperative control. Springer, Heidelberg (2005)

[20] Rössler, O.E.: An equation for hyperchaos. Phys. Lett. A 71(2–3), 155–157 (1979)

[21] Scardovi, L., Arcak, M., Sontag, E.D.: Synchronization of interconnected systems with an input–output approach. Part I: main results. In: Proceedings of the 48th IEEE Conference on Decision and Control, pp. 609–614 (2009)

[22] Steur, E., Tyukin, I., Nijmeijer, H.: Semi-passivity and synchronization of diffusively coupled neuronal oscillators. Phys. D: Nonlinear Phenom. 238(21), 2119–2128 (2009)

[23] Yoshizawa, T.: Stability Theory by Lyapunov’s Second Method. The Mathematical Society of Japan, Tokyo (1966)

Copyright information

© 2017 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Panteley, E., Loría, A. (2017). Synchronisation and Emergent Behaviour in Networks of Heterogeneous Systems: A Control Theory Perspective. In: van de Wouw, N., Lefeber, E., Lopez Arteaga, I. (eds) Nonlinear Systems. Lecture Notes in Control and Information Sciences, vol 470. Springer, Cham. https://doi.org/10.1007/978-3-319-30357-4_4

DOI: https://doi.org/10.1007/978-3-319-30357-4_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-30356-7

Online ISBN: 978-3-319-30357-4