Abstract

We address the problem of making syntactic sense of text for which the grammar has only partial information. Our proposed methodology is to adapt a recent formalism, Womb Grammars, into parsing creative text that departs from the grammar at hand, or which cannot rely on a complete grammar being available. We argue that unspecified information can be detected with appropriate ontologies together with our adaptation of a recently introduced constraint-based methodology for acquiring linguistic information on a given language from that of another. Our implementation tool is CHRG (Constraint Handling Rule Grammars). We examine as well possible extensions to multilingual text parsing. Our proposed methodology exploits the descriptive power of constraints both for defining sentence acceptability and for inferring lexical knowledge from a word’s sentential context, even when foreign.

This research was supported by NSERC Discovery grant 31611024 and was started during a visit by Veronica Dahl and Sergio Tessaris to Universidade Nova de Lisboa.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Partial grammars

- Womb grammars

- Ontologies

- Imperfect querying

- Mixed language text

- Constraint acquisition

- Universal grammar

- Parsing

- CHRG (Constraint Handling Rule Grammars)

- Constraint based grammars

- Property grammars

1 Introduction

Social media promotes communication across countries, multiplying the opportunities for users to spontaneously mix syntax, lexicons and jargons. Also, there are domains where syntactic arrangements different from the standard arrangement are acceptable. These factors, together with the increasing infiltration of English words and specific group jargons into technical and even every day communications in many other languages, results in the need for ever more flexible parsers if we are to succeed in extracting information from text in timely fashion. Yet we are quite far from being able to address the challenges inherent in multilingual and creative text. In fact, one of the worst nightmares for linguistics is that of trying to parse textual sources that do not respect the grammar.

Traditional parsers focus on constructing syntactic trees for complete and correct sentences in a given language. More flexible parsing models can be arrived at in economic fashion by giving up syntactic trees as a focus and focusing instead on grammar constraints, also called properties. For instance, if we were to work with tree-oriented rules such as:

their adaptation into a language where nouns must precede adjectives would require changing every rule where these two constituents are involved. In contrast, by expressing the same rule in terms of separate constraints, we only need to change the precedence constraint into saying that adjectives must precede nouns, and the modification carries over to the entire grammar without further ado.

In this paper we propose to combine Womb Grammar parsing—a property-based methodology for multilingual parsing developed by Dahl and Miralles [10]- with ontologies, in view of further specifying partial information which can be lexical or structural, in an automatic manner.

The remainder of this paper is organized as follows: Sect. 2 discusses our motivation; Sect. 3 overviews the relevant background; Sect. 4 presents our methodology; and Sect. 5 present our concluding remarks.

2 Motivation

Taking into account the way humans speak and the way we interact via social media, it is very important to propose parsing techniques that are able to parse non-canonical input. Among the potential benefits are the consequent improvement of information retrieval tools, and the possibility of treating hybrid, cross-cultural jargons, which are becoming ubiquitous with the proliferation of texting and of social media communications.

Program transformation is one of the research areas that has received fair attention in the past few years in CHR literature. It has been successfully used in particular for simplifying program development (e.g. [19] studies how to transform transaction-augmented CHR programs into CHR ones); for program optimization; and for mechanizing the generation of programs with certain desired features. Grammar transformation on the other hand is just as promising at least all of these subfields, but has been fairly neglected so far.

The encouraging results in using grammar transformation to induce a target language’s grammar from that of a known grammar plus appropriate corpuses [10] have motivated us to adapt this same methodology of grammar transformation to the needs of partially known grammar parsing.

3 Background

3.1 Womb Grammars

Womb Grammars [10] were designed for inducing a target language’s syntax from the known syntax of a source language plus a representative corpus of correct sentences in the target language. As such they can be considered a kind of self-modifying grammar, whose approach is quite different from that of predecessors (e.g. [15] resorts heavily to push-down automata; [7], while being more declarative, are an extension of attribute grammars.) Womb grammars, in contrast, are constraint-based: they derive a target language’s syntax by observing the list of violated properties that are output when correct sentences in the target language are fed to the source grammar, and correcting that grammar so that these properties are no longer violated. WGs have been useful in various applications such as second language tutoring [2], language acquisition [12] and bio-inspired computation [1].

Using linguistic information from one language for the task of describing another language has historically yielded good results, albeit for specific tasks–such as disambiguating the other language [5], or fixing morphological or syntactic differences by modifying tree-based rules [16]–rather than for syntax induction.

This usually requires parallel corpora, an interesting exception being [9], where information from the models of two languages is shared to train parsers for two languages at a time, jointly. This is accomplished by tying grammar weights in the two hidden grammars, and is useful for learning dependency structure in an unsupervised empirical Bayesian framework.

Most of these approaches have in common the target of inferring syntactic trees. As exemplified above and discussed for instance in [4], constraint-based formalisms that make it possible to evaluate each constraint separately are advantageous in comparison with classical, tree-based derivation methods. For instance the Property Grammar framework [3] defines phrase acceptability in terms of the properties or constraints that must be satisfied by groups of categories. For instance, English noun phrases can be described through a few constraints such as precedence (a determiner must precede a noun, an adjective must precede a noun), uniqueness (there must be at most one determiner), exclusion (an adjective phrase must not coexist with a superlative), obligation (a noun phrase must contain the head noun), and so on. Rather than resulting in either a parse tree or failure, such frameworks characterize a sentence through the list of the constraints a phrase satisfies and the list of constraints it violates, so that even incorrect or incomplete phrases will be parsed.

For partially known grammars, this flexibility comes in very handy, but must be complemented, as we shall argue, with ontological information. Ontologies are nowadays part of the essential tools for natural language processing. It is well understood that semantic models can be exploited in order to improve and share lexical resources [14]. The ability of representing and maintaing the relations between words and semantic concepts is crucial for charting and using models of language. In our work we build upon the advances in this research area in order to use ontological knowledge-sharing to fill the gaps in our target lexicon.

In the original Womb Grammar formalism, we had two languages: the source language, of which both the syntax and the lexicon were known, and the target language, of which only the lexicon and a correct input corpus were known. Here we still assume a main language such as English, but it might be creatively cross fertilized with multilingual contributions, both in structure and lexicon, from other languages.

Since Womb Grammars are implemented in CHRG, we now briefly summarize the subset of CHRG relevant to understanding the code.

3.2 CHRG

CHRGs, or Constraint handling Rule Grammars [6], are a grammatical interface to CHR, providing it what DCGs provide to Prolog—namely, they invisibly handle input and output strings for the user. In addition, they include constructs to access those strings dynamically, and the possibility of reasoning in non-classical ways, with abduction or with resource-based assumptions.

For the purposes of this paper, we only use two types of CHRG rules, which parallel the CHR rules of propagation and simplification, and are respectively defined as follows:

A propagation rule is of the form

The part of the rule preceding the arrow

is called the head, G the guard, and \(\delta \) the body; \(\alpha , \beta , \gamma , \delta \) are sequences of grammar symbols and constraints so that \(\beta \) contains at least one grammar symbol, and \(\delta \) contains exactly one grammar symbol which is a nonterminal (and perhaps constraints); \(\alpha \) (\(\gamma \)) is called left (right) context and \(\beta \) the core of the head; G is a conjunction of built-in constraints as in CHR and no variable in G can occur in \(\delta \). If left or right context is empty, the corresponding marker is left out and if G is empty (interpreted as

), the vertical bar is left out. The convention from DCG is adopted that constraints (i.e., non-grammatical stuff) in head and body of a rule are enclosed by curly brackets. Gaps and parallel match are not allowed in rule bodies. A gap in the rule head is noted “...”. Gaps are used to establish references between two long distant elements.

A simplification (grammar) rule is similar to a propagation rule except that the arrow is replaced by

.

4 Our Proposed Methodology

The main difficulty in adapting our methodology is that the target language’s input can no longer be considered correct. We shall first consider lexical and structural intrusions separately, and then discuss how to deal with them jointly.

4.1 Failure-Driven Parsing

Notation. As said, our implementation of Womb Grammars [10] is done in terms of CHRG. During our explanation below we show some actual code for completeness, but our description should be intuitively clear that the main ideas can be followed independently from the code.

Parsing Strategy. Each word is stored in a CHRG symbol word/3, along with its category and traits (i.e. word(n,[sing,masc],livre)).

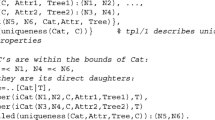

Grammar constraints are entered in terms of a CHRG constraint g/1, whose argument stores each possible grammar property. For instance, an English noun phrase parser would include the constraints:

-

g(obligatority(n)), g(constituency(det)),

-

g(precedence(det,adj)), g(unicity(det)),

-

g(requirement(n,det)), g(dependence(det,n))

Our proposal adopts the Direct PG parsing strategy introduced in [11], in which constraints are tested only for failure. In contrast, all previous methods exhaustively test each constraint for all constituents that can participate in it.

Concretely, a notion not unlike obligation can be used to identify new phrases, and those phrases can be tentatively expanded from nearby constituents.

For each tentatively expanded phrase, all other constraints are tested for failure only. The phrase is allowed to expand only if either no constraint fails, or all constraints that fail have been declared as relaxable. Exhaustive satisfaction check is thus replaced by a smart guided search for a falsifying assignment. This is appropriate provided that the set of satisfied constraints is the exact complement of the set of failed constraints - an assumption that seems reasonable, and that we make.

Should we need to explicitly output those constraints that hold, they can be inferred from the list of constraints that must be satisfied plus those output as unsatisfied, at less computational cost than the usual practice of evaluating all constraints between every pair of constituents, or of adding heuristics to reduce the search space.

This is significant because deep parsing with Property Grammars is theoretically exponential in the number of categories of the grammar and the size of the sentence to parse [18]. Since all previous approaches to PG parsing (except for Womb Parsing) have to calculate all constraints between every pair of constituents, and since the number of failed constraints will in general be much smaller than the number of satisfied constraints, any parsing methodology that manages to mostly check the failed ones will have a substantial efficiency advantage.

Violation Detection. Properties are weeded out upon detection of a violation by CHRG rules that look for them, e.g. an input noun phrase where an adjective precedes a noun will provoke deletion of the constraint g(precedence(n,adj)) plus perhaps (if the rest of the input corpus warrants it) inclusion of the converse constraint: g(precedence(adj,n)). The following CHRG rule accomplishes that:

Note that the rule works bottom-up, and that the three dots are a facility of CHRG which allows us to skip over an unspecified substring of words. The curly brackets indicate a call to a procedure (as opposed to a grammar symbol).

The CHRG parse predicate stores and abstracts the position of each word in the sentence. In plain English, the above rule states that if a word of category C2 precedes a word of category C1, and there is a precedence rule stipulating that words of category C1 must precede words of category C2, the precedence-updating rule needs to be invoked (in CHRG syntax the symbols prefixed with exclamation points are kept, while the ones without are replaced by the body of the rule, in this case an update constraint that invokes some housekeeping procedures).

Each of the properties dealt with has similar rules associated with it.

4.2 Inferring Lexical Knowledge from Sentential Context

Let us first consider the problem of making sense of extraneous words. We assume in a first stage that we have only one language with known syntax and lexicon, and an input corpus which is correct save for the occasional intrusion of neologisms or words belonging to another language or jargon. We can adapt our Womb Grammar methodology to this situation, by running the input corpus as is and observing the list of violated properties that will be output.

Since we know everything to be correct except that some lexical items do not “belong”, we know that the violated properties stem from those lexical items that failed to parse. By examining the violated properties, we can draw useful inferences about the lexical items in question.

For instance, if the head noun appears as an unknown word, among the violated properties we will read that the obligatory character of a noun phrase’s noun has been violated, which can lead us to postulate that the word in question is a noun. A violated exigency property would likewise suggest that the unrecognized word has the category that is required and has not been found.

But do we Really Need Womb Grammars? It is clear that with sufficient programming effort, any computational linguistic methodology can be adapted to guess lexical categories of extraneous words from context. However in most of them, this would require a major modification of the parser. Take for instance DCGs (Definite Clause Grammars, [17]), where lexical rules would appear as exemplified by:

If the lexicon does not explicitly include the word “borogrove” among the nouns, the parser would simply fail when encountering it. One could admit unknown nouns through the following rule:

But since this rule would indiscriminately accept any word as a noun (and similar rules would have to be included in order to treat possible extraneous words in any other category), this approach would mislead the parser into trying countless paths that are doomed to fail, and might even generate wrong results.

In contrast, we can parse extraneous words through Womb Grammar by anonymizing the category and its features rather than the word itself, e.g. word(Category,[Number, Gender],borogrove)), which more accurately represents what we know and what we don’t. The category and features will become efficiently instantiated through constraint satisfaction, taking into account all the properties that must be satisfied by this word in interaction with its context.

Of course, what would be most interesting would be to derive the meaning of the word that “does not belong”. While Womb Grammars do not yet have a complete way of treating semantics, the clues they can provide regarding syntactic category can serve to guide a subsequent semantic analysis, or to bypass the need for a complete semantic analysis by the concomitant use of ontologies relevant to domain-specific uses of our parser. In general, we are not necessarily interested in capturing the exact meaning of each unrecognized word; but rather to infer its relation with known words. The problem can be casted into the (automatic) extraction of a portion of the hypernym relation involving the extraneous word using the actual document or additional sources as corpora (see [8]).

Some Examples. In the poem “Jabberwocky”, by Lewis Carroll,Footnote 1 nonsense words are interspersed within English text with correct syntax. Our target lexicon, which we might call Wonderland Lexicon or WL, can be to some extent reconstructed from the surrounding English words and structure by modularly applying the constraints for English. Thus, “borogoves” must be labelled as a noun in order not to violate a noun phrase’s exigency for a head noun.

In other noun phrases, the extraneous words can be recognized only as adjectives. This is the case for “the manxome foe” and “his vorpal sword”, once the following constraints are applied: adjectives must precede nouns, a noun phrase can have only one head noun, determiners are also unique within a noun phrase.

In the case of “the slithy toves”, where there are two WL words, the constraint that the head noun is obligatory implies that one of these two words is a noun, and the noun must be “toves” rather than “slithy” (which is identified as an adjective as in the two previous examples) in order not to violate the precedence constraint between nouns and adjectives.

In other cases we may not be able to unambiguously determine the category, for instance the WL word “frabjous” preceding the English word “day” may remain ambiguous no matter how we parse it, if it satisfies all the constraints either as a determiner or as an adjective.Footnote 2

Two of the poem’s noun phrases (“the Jubjub bird” and “the Tumtum tree”) provide ontological as well as lexical information (under the reasonable assumption that capitalised words must be proper nouns, coupled with the fact that as proper nouns, these words do not violate any constraints). Our adaptation of Womb Grammars includes a starting-point, domain dependent ontology (which could, of course, initially be empty), which can be augmented with such ontological information as the facts that Tumtums are trees and Jubjubs are birds. Similarly, input such as “Vrilligs are vampires” would result in additions to the ontology besides in lexical recognition.

It could be that some input allows us even to equate some extraneous words with their English equivalents. For instance, if instead of having in the same poem the noun phrases “his vorpal sword” and “the vorpal blade”, we’d encountered “his vorpal sword” and “the cutting blade”, we could bet on approximate synonymy between “vorpal” and “cutting”, on the basis of our English ontology having established semantic similarity between “sword” and “blade”.

Similarly, extraneous words that repeat might allow a domain-dependent ontology to help determine their meaning. Taking once more the example of “his vorpal sword” and “the vorpal blade”, by consulting the ontology besides the constraints, we can not only determine that “vorpal” is an adjective, but also that it probably refers to some quality of cutting objects. It would be most interesting to carefully study under which conditions such ontological inferences would be warranted.

4.3 Inferring Extraneous Structures

We have said that Womb Grammars figure out the syntax of a target language from that of a source language by “correcting” the latter’s syntax to include properties that were violated by the input corpus. Another variant of Womb Grammars, which we call Universal Womb Grammars, does not rely on a specific source language, but uses instead the set of all properties that are possible between any two constituents – a kind of universal syntax. This universal grammar contains contradictory properties, for instance it will state both that a constituent A must precede another constituent B, and that B must precede A. One or both of these properties will be weeded out by processing the input corpus, which is assumed to be correct and representative.

When dealing only with lexical intrusions, our solution discussed in the previous section does not affect the assumption, made by Womb Grammars, that the input corpus is correct: we merely postulate an anonymous category and features, and let constraint solving automatically find out from context which are the “correct” ones (correct in the sense of our multilingual or neologism-creating environment) to associate to an extraneous word.

Extraneous structures, particularly if coexisting with extraneous lexicon, might be more difficult to deal with, because we rely upon the structural constraints being correct in order to infer an unknown category (e.g. the constraint that adjectives must precede nouns helps to determine that the word “vorpal” functions as an adjective in Lewis Carrol’s poem). Therefore, in this section we assume there are no extraneous words and we only deal with extraneous structures. We shall then try to combine both approaches.

We assume, with no loss of generality, that the main language is English and that it is being infiltrated with structures of other languages—the same considerations apply if the main language is another one.

One possibility is to use the Hybrid Womb Grammar approach with the user’s mother tongue as target language and English as the source language, thus obtaining a parser for the mixed language, through training a hybrid Womb Grammar with a user-produced representative corpus of sentences. We can then run an input corpus that is representative of the user’s talk (e.g. Spanglish) and this will result in a Spanglish grammar adapted to the user in question. Thereafter, this user will be able to create all the neologisms he wants, given that the structures used, although they may be incorrect for either Spanish or English, will be adequately represented in the Spanglish grammar obtained, which is tailored to this user.

Mixed Language Text Parsing

The Training Phase. Before being able to parse a user’s mixed use of two languages, we propose to obtain a parser for the mixed language, through training a hybrid Womb Grammar with a user-produced representative corpus of sentences. Let \(L^S\) (the source language) be the main language used in the text we want to parse, e.g. English. Its syntactic component will be noted \(L^S_{syntax}\), and its lexical component, \(L^S_{lex}\).

Let \(L^T\) be the user’s mother tongue. We want to obtain the syntax for the user’s blending of \(L^S\) and \(L^T\). Let us call this mixed language \(L^M\).

Since we have made the assumption that during this training phase we have no extraneous words (that is, no words that do not appear in the lexicon), we have two options: we can either require that the user do not include them in the training phase, so that the target lexicon will be that of English (\(L^M_{lex}\)=\(L^S_{lex}\)) or we can simply extend the target lexicon to include both the source language’s and that of the user’s mother tongue (\(L^M_{lex}=L^S_{lex} \cup L^T_{lex}\)). Whichever of these two options we take, let us call the mixed language’s lexicon (\(L^M_{lex}\)). We can feed a sufficiently representative corpus \(L^\text {M}_\text {corpus}\) of sentences in \(L^M\) that the user has produced, to a hybrid parser consisting of \(L^S_{syntax}\) and \(L^M_{lex}\). This will result in some of the sentences being marked as incorrect by the parser. An analysis of the constraints these “incorrect” sentences violate can subsequently reveal how to transform \(L^S_{syntax}\) so it accepts as correct the sentences in the corpus of \(L_M\)—i.e., how to transform it into \(L^M_{syntax}\). Figures 1 and 2 respectively show our problem and our proposed solution through Hybrid Parsing in schematic form.

For example, let \(L^S = English\) and \(L^T = French\), and let us assume that English adjectives always precede the noun they modify, while in French they always post-cede it (an oversimplification, just for illustration purposes). Thus “the blue book” is correct English, whereas in French we would more readily say “le livre bleu”.

If we plug the French lexicon and the English syntax constraints into our Womb Grammar parser, and run a representative corpus of (correct) French noun phrases by the resulting hybrid parser, the said precedence property will be declared unsatisfied when hitting phrases such as “le livre bleu”. The grammar repairing module can then look at the entire list of unsatisfied constraints, and produce the missing syntactic component of \(L^T\)’s parser by modifying the constraints in \(L^S_{syntax}\) so that none are violated by the corpus sentences.

Some of the necessary modifications are easy to identify and to perform, e.g. for accepting “le livre bleu” we only need to delete the (English) precedence requirement of adjective over noun (noted \(adj < n\)). However, subtler modifications may be in order, perhaps requiring some statistical analysis in a second round of parsing: if in our \(L^M\) corpus, which we have assumed representative, all adjectives appear after the noun they modify, French is sure to include the reverse precedence property as in English: \(n < adj\). So in this case, not only do we need to delete \(adj < n\), but we also need to add \(n < adj\).

4.4 Extracting Domain Knowledge from Text Corpora

Extracting domain knowledge from text corpora is an active research area which involves several communities (see e.g. [8] for an overview). For our purposes we’ll focus on the problem of building a (partial) hypernym relation graph from textual corpora.

In our context, we are not interested in building a precise structured conceptualization of a domain but to recognize hypernyms and hyponyms of the extraneous words. Once we are able to recognise the meaning of related words (e.g. using a background source of information like EuroWordNet [22]) we can classify the missing words and grasp their meaning. For example, searching the web for the exact phrase “a borogove is” returns a snippet containing the sentence “a borogove is a thin shabby-looking bird” which allows us to infer that a “borogove” is a bird.

Different techniques have been developed to optimize the task of acquiring semantic structuring of a domain; however, our problem is much more limited because we are not interested in constructing a complete taxonomy. In particular, the problems of precision and recall will not affect us to the same extent as in the general case.

The fact that we start our search for hypernyms from specific seed words and we cannot make strong assumptions on the corpora we are analysing, makes approaches based on hyponym patterns a natural choice (see [13, 20]). The basic idea is to search the corpora for specific textual patterns which explicitly identify a hyponym relation between terms (e.g., “such authors as \(\langle X\rangle \)”). Hyponym patterns can be pre-defined or extracted from corpora using known taxonomies (e.g., [20]). For our purposes we can reuse known patterns and apply them to the text source being parsed or external sources like Wikipedia or a web search engine [21].

5 Conclusion

We have shown how to use the combined power of Womb grammars plus ontologies in order to make syntactic sense of text for which the grammar we dispose of has only partial information. As well, we have delineated how we could extend these abilities into semantics.

While in this paper we have focused on a specific language’s grammar, it might be useful to be able to consult in a second stage the relevant fragment (e.g. that of noun phrases if the extraneous word belongs to one) of a universal grammar. This will be the case for instance if the word that seems not to belong in the text exhibits some property that does not exist in the text’s main language. When this is the case, there will be no way to assign for some word a category that is in line with the surrounding ones and results in no more properties being violated.

Our work may have interesting connections with Chomskys innate theory of language, which states that all children share the same internal constraints which characterize narrowly the grammar they are going to construct, and exposure to a specific language determines their specialization into the specific rules for that language.

These internal constraints, if the theory is correct, characterize what may be seen as a latent universal language. Womb grammars may help to uncover its constraints phrase by phrase, perhaps relative to families of language, or help shed light upon specific problems, such as phylogenetic classification.

Notes

- 1.

- 2.

Which precise constraints are defined for a given language subset is left to the grammar designer; those in this paper are meant to exemplify more than to prescribe.

References

Becerra-Bonache, L., Dahl, V., Jiménez-López, M.D.: Womb grammars as a bio-inspired model for grammar induction. In: Bajo Perez, J., Corchado Rodríguez, J.M., Mathieu, P., Campbell, A., Ortega, A., Adam, E., Navarro, E.M., Arndt, S., Moreno, M.N., Soto, S.V., Julián, V. (eds.) Trends in Practical Applications of Heterogeneous Multi-agent Systems. The PAAMS Collection. AISC, vol. 293, pp. 79–86. Springer International Publishing, Heidelberg (2014)

Becerra Bonache, L., Dahl, V., Miralles, J.E.: On second language tutoring through womb grammars. In: Rojas, I., Joya, G., Gabestany, J. (eds.) IWANN 2013, Part I. LNCS, vol. 7902, pp. 189–197. Springer, Heidelberg (2013). http://dx.doi.org/10.1007/978-3-642-38679-4_18

Blache, P.: Property grammars: a fully constraint-based theory. In: Christiansen, H., Skadhauge, P.R., Villadsen, J. (eds.) CSLP 2005. LNCS (LNAI), vol. 3438, pp. 1–16. Springer, Heidelberg (2005)

Blache, P., Guénot, M.L., Vanrullen, T.: A corpus-based technique for grammar development. In: ICCL, pp. 123–131 (2003). https://halv3-preprod.archives-ouvertes.fr/hal-00135437

Burkett, D., Klein, D.: Two languages are better than one (for syntactic parsing). In: Proceedings of the Conference on Empirical Methods in Natural Language Processing, pp. 877–886. Association for Computational Linguistics (2008)

Christiansen, H.: CHR grammars. TPLP 5(4–5), 467–501 (2005)

Christiansen, H.: Adaptable grammars for non-context-free languages. In: Bio-Inspired Models for Natural and Formal Languages, pp. 33–51. Cambridge Scholars Publishing (2011)

Clark, M., Kim, Y., Kruschwitz, U., Song, D., Albakour, D., Dignum, S., Beresi, U.C., Fasli, M., De Roeck, A.: Automatically structuring domain knowledge from text: an overview of current research. Inf. Process. Manag. 48(3), 552–568 (2012)

Cohen, S.B., Smith, N.A.: Covariance in unsupervised learning of probabilistic grammars. J. Mach. Learn. Res. 11, 3017–3051 (2010)

Dahl, V., Miralles, J.: Womb grammars: constraint solving for grammar induction. In: Sneyers, J., Frühwirth, T. (eds.) Proceedings of the 9th Workshop on Constraint Handling Rules. vol. Technical report CW 624, Department of Computer Science, K.U. Leuven, pp. 32–40 (2012)

Dahl, V., Blache, P.: Directly executable constraint based grammars. In: Proceedings of the Journees Francophones de Programmation en Logique avec Contraintes, JFPLC 2004 (2004)

Dahl, V., Miralles, E., Becerra, L.: On language acquisition through womb grammars. In: 7th International Workshop on Constraint Solving and Language Processing, pp. 99–105 (2012)

Hovy, E., Kozareva, Z., Riloff, E.: Toward completeness in concept extraction and classification. In: Proceedings of the 2009 Conference on Empirical Methods in Natural Language Processing, vol. 2, pp. 948–957. Association for Computational Linguistics (2009)

Huang, C., Calzolari, N., Gangemi, A.: Ontology and the Lexicon: A Natural Language Processing Perspective. Studies in Natural Language Processing. Cambridge University Press, Cambridge (2010)

Jackson, Q.T.: Adapting to Babel: Adaptivity and Context-Sensitivity in Parsing. Verlag: Ibis Publishing, Plymouth (2006)

Nicolas, L., Molinero, M.A., Sagot, B., Sánchez Trigo, E., De La Clergerie, É., Alonso Pardo, M., Farré, J., Miquel-Vergès, J.: Towards efficient production of linguistic resources: the Victoria Project. In: Proceedings of the International Conference RANLP-2009, pp. 318–323. Association for Computational Linguistics, Borovets (2009). https://hal.inria.fr/inria-00553259

Pereira, F.C., Warren, D.H.: Definite clause grammars for language analysis—a survey of the formalism and a comparison with augmented transition networks. Artif. Intell. 13(3), 231–278 (1980)

van Rullen, T.: Vers une analyse syntaxique a granularite variable. Ph.D. thesis, Université de Provence (2005) (2005)

Schrijvers, T., Sulzmann, M.: Transactions in constraint handling rules. In: Garcia de la Banda, M., Pontelli, E. (eds.) ICLP 2008. LNCS, vol. 5366, pp. 516–530. Springer, Heidelberg (2008)

Snow, R., Jurafsky, D., Ng, A.Y.: Learning syntactic patterns for automatic hypernym discovery. In: Advances in Neural Information Processing Systems, vol. 17 (2004)

Snow, R., Jurafsky, D., Ng, A.Y.: Semantic taxonomy induction from heterogenous evidence. In: Proceedings of the 21st International Conference on Computational Linguistics and the 44th Annual Meeting of the Association for Computational Linguistics, pp. 801–808. ACL-44, Association for Computational Linguistics, Stroudsburg (2006). http://dx.doi.org/10.3115/1220175.1220276

Vossen, P.: EuroWordNet: a multilingual database of autonomous and language-specific wordnets connected via an inter-lingualindex. Int. J. Lexicography 17(2), 161–173 (2004). http://dx.doi.org/10.1093/ijl/17.2.161

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Adebara, I., Dahl, V., Tessaris, S. (2015). Parsing with Partially Known Grammar. In: Duval, B., van den Herik, J., Loiseau, S., Filipe, J. (eds) Agents and Artificial Intelligence. ICAART 2015. Lecture Notes in Computer Science(), vol 9494. Springer, Cham. https://doi.org/10.1007/978-3-319-27947-3_18

Download citation

DOI: https://doi.org/10.1007/978-3-319-27947-3_18

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-27946-6

Online ISBN: 978-3-319-27947-3

eBook Packages: Computer ScienceComputer Science (R0)