Abstract

The classical unbiasedness condition utilized e.g. by the best linear unbiased estimator (BLUE) is very stringent. By softening the “global” unbiasedness condition and introducing component-wise conditional unbiasedness conditions instead, the number of constraints limiting the estimator’s performance can in many cases significantly be reduced. In this paper we extend the findings on the component-wise conditionally unbiased linear minimum mean square error (CWCU LMMSE) estimator under linear model assumptions. We discuss the CWCU LMMSE estimator for complex proper Gaussian parameter vectors, and for mutually independent (and otherwise arbitrarily distributed) parameters. Finally, the beneficial properties of the CWCU LMMSE estimator are demonstrated in two applications.

This work was supported by the Austrian Science Fund (FWF): I683-N13.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Probability Density Function

- Channel Estimation

- Discrete Fourier Transform

- Linear Minimum Mean Square Error

- Good Linear Unbiased Estimator

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Usually, when we talk about unbiased estimation of a parameter vector \(\mathbf {x}\in \mathbb {C}^{n\times 1}\) out of a measurement vector \(\mathbf {y}\in \mathbb {C}^{m\times 1}\), then the estimation problem is treated in the classical framework [1]. Letting \(\hat{\mathbf {x}} = \mathbf {g}(\mathbf {y})\) be an estimator of \(\mathbf {x}\), then the classical unbiased constraint asserts that

where \(p(\mathbf {y};\mathbf {x})\) is the probability density function (PDF) of vector \(\mathbf {y}\) parametrized by the unknown parameter vector \(\mathbf {x}\). The index of the expectation operator shall indicate the PDF over which the averaging is performed. Equation (1) can also be formulated in the Bayesian framework, where the parameter vector \(\mathbf {x}\) is treated as random, and whose realization is to be estimated. Here, the corresponding problem arises by demanding global conditional unbiasedness, i.e.

The attribute global indicates that the condition is made on the whole parameter vector \(\mathbf {x}\). However, the constricting requirement in (2) prevents the exploitation of prior knowledge about the parameters, and hence leads to a significant reduction in the benefits brought about by the Bayesian framework.

In component-wise conditionally unbiased (CWCU) Bayesian parameter estimation [2–5], instead of constraining the estimator to be globally unbiased, we aim for achieving conditional unbiasedness on one parameter component at a time. Let \(x_i\) be the \(i^{th}\) element of \(\mathbf {x}\), and \(\hat{x}_i = g_i(\mathbf {y})\) be an estimator of \(x_i\). Then the CWCU constraints are

for all possible \(x_i\) (and all \(i=1,2,...,n\)). The CWCU constraints are less stringent than the global conditional unbiasedness condition in (2), and it turns out that a CWCU estimator in many cases allows the incorporation of prior knowledge about the statistical properties of the parameter vector.

The paper is organized as follows: In Sect. 2 we discuss the CWCU linear minimum mean square error (LMMSE) estimator under different linear model assumptions, and we extend the findings of [2]. We particularly distinguish between complex proper jointly Gaussian (cf. [6]), and mutually independent (and otherwise arbitrarily distributed) parameters. Then, in Sect. 3 the CWCU LMMSE estimator is compared against the best linear unbiased estimator (BLUE) and the LMMSE estimator in two different applications.

2 CWCU LMMSE Estimation

We assume that a complex vector parameter \(\mathbf {x}\in \mathbb {C}^{n\times 1}\) is to be estimated based on a measurement vector \(\mathbf {y}\in \mathbb {C}^{m\times 1}\). Additionally, we assume that \(\mathbf {x}\) and \(\mathbf {y}\) are connected via a linear model

where \(\mathbf {H}\in \mathbb {C}^{m\times n}\) is a known observation matrix, \(\mathbf {x}\) has mean \(E_{\mathbf {x}}[\mathbf {x}]\) and covariance matrix \(\mathbf {C}_{\mathbf {x}\mathbf {x}}\), and \(\mathbf {n}\in \mathbb {C}^{m\times 1}\) is a zero mean noise vector with covariance matrix \(\mathbf {C}_{\mathbf {n}\mathbf {n}}\) and independent of \(\mathbf {x}\). Additional assumptions on \(\mathbf {x}\) will vary in the following. We note that the CWCU LMMSE estimator for the linear model under the assumption of complex proper Gaussian \(\mathbf {x}\) and complex and proper white Gaussian noise with covariance matrix \(\mathbf {C}_{\mathbf {n}\mathbf {n}}=\sigma _n^2\mathbf {I}\) has already been derived in [2].

As in LMMSE estimation we constrain the estimator to be linear (or actually affine), such that

with \(\mathbf {E}\in \mathbb {C}^{n\times m}\) and \(\mathbf {c}\in \mathbb {C}^{n\times 1}\). Note that in LMMSE estimation no assumptions on the specific form of the PDF \(p(\mathbf {x})\) have to be made. However, the situation is different in CWCU LMMSE estimation as will be shown shortly. Let us consider the \(i^{th}\) component of the estimator

where \(\mathbf {e}_i^H\) denotes the \(i^{th}\) row of the estimator matrix \(\mathbf {E}\). Furthermore, let \(\mathbf {h}_i\in \mathbb {C}^{m\times 1}\) be the \(i^{th}\) column of \(\mathbf {H}\), \(\bar{\mathbf {H}}_i\in \mathbb {C}^{m\times (n-1)}\) the matrix resulting from \(\mathbf {H}\) by deleting \(\mathbf {h}_i\), \(x_i\) be the \(i^{th}\) element of \(\mathbf {x}\), and \(\bar{\mathbf {x}}_i\in \mathbb {C}^{(n-1)\times 1}\) the vector resulting from \(\mathbf {x}\) after deleting \(x_i\). Then we can write \(\mathbf {y}=\mathbf {h}_i x_i + \bar{\mathbf {H}}_i \bar{\mathbf {x}}_i + \mathbf {n}\), and (6) becomes

The conditional mean of \(\hat{x}_i\) therefore is

From (8) we can derive conditions that guarantee that the CWCU constraints (3) are fulfilled. There are at least the following possibilities:

-

1.

(3) can be fulfilled for all possible \(x_i\) if the conditional mean \(E_{\bar{\mathbf {x}}_i|x_i}[\bar{\mathbf {x}}_i|x_i]\) is a linear function of \(x_i\). For complex proper Gaussian \(\mathbf {x}\) this condition holds (for all \(i=1,2,...,n\)).

-

2.

(3) can be fulfilled for all possible \(x_i\) (and all \(i=1,2,...,n\)) if \(E_{\bar{\mathbf {x}}_i|x_i}[\bar{\mathbf {x}}_i|x_i] = E_{\bar{\mathbf {x}}_i}[\bar{\mathbf {x}}_i]\) for all possible \(x_i\) (and all \(i=1,2,...,n\)), which is true if the elements \(x_i\) of \(\mathbf {x}\) are mutually independent.

-

3.

(3) is fulfilled for all possible \(x_i\) (and all \(i=1,2,...,n\)) if \(\mathbf {e}_i^H \mathbf {h}_i = 1\), \(\mathbf {e}_i^H\bar{\mathbf {H}}_i = \mathbf {0}^T\), and \(c_i=0\) for \(i = 1,2,\cdots ,n\). These constraints and settings correspond to the ones of the BLUE.

2.1 Complex Proper Gaussian Parameter Vectors

We start with the first case from above, assume a complex proper Gaussian parameter vector, i.e. \(\mathbf {x} \sim \mathcal {CN}(E_{\mathbf {x}}[\mathbf {x}], \mathbf {C}_{\mathbf {x}\mathbf {x}})\), and start with the derivation of the \(i^{th}\) component \(\hat{x}_i\) of the estimator. Because of the Gaussian assumption we have \(E_{\bar{\mathbf {x}}_i|x_i}[\bar{\mathbf {x}}_i|x_i]=E_{\bar{\mathbf {x}}_i}[\bar{\mathbf {x}}_i]+ (\sigma _{x_i}^2)^{-1}\mathbf {C}_{\bar{\mathbf {x}}_i x_i}(x_i-E_{x_i}[x_i])\), where \(\mathbf {C}_{\bar{\mathbf {x}}_i x_i} = E_{\mathbf {x}}[(\bar{\mathbf {x}}_i - E_{\bar{\mathbf {x}}_i}[\bar{\mathbf {x}}_i])(x_i - E_{x_i}[x_i])^H]\), and \(\sigma _{x_i}^2\) is the variance of \(x_i\). Consequently (8) becomes

Note that the only requirement on the noise vector so far was its independence on \(\mathbf {x}\). From (9) we see that \(E_{\mathbf {y}|x_i}[\hat{x}_i|x_i] = x_i\) is fulfilled if

and

With (10) and (11) can be reformulated according to

Furthermore, (10) can be simplified to obtain the constraint

Inserting (6), (12) and (13) into the Bayesian MSE cost function \(E_{\mathbf {y},\mathbf {x}}[|\hat{x}_i - x_i|^2]\) immediately leads to the constrained optimization problem

where “CL” shall stand for CWCU LMMSE. The solution can be found with the Lagrange multiplier method and is given by

Introducing the estimator matrix \(\mathbf {E}_{\text {CL}} = [\mathbf {e}_{\text {CL},1}, \mathbf {e}_{\text {CL},2}, \ldots , \mathbf {e}_{\text {CL},n}]^H\) together with (12) and (15) immediately leads us to the first part of the

Proposition 1

If the observed data \(\mathbf {y}\) follow the linear model in (4), where \(\mathbf {y}\in \mathbb {C}^{m\times 1}\) is the data vector, \(\mathbf {H}\in \mathbb {C}^{m\times n}\) is a known observation matrix, \(\mathbf {x}\in \mathbb {C}^{n\times 1}\) is a parameter vector with prior complex proper Gaussian PDF \(\mathcal {CN}(E_{\mathbf {x}}[\mathbf {x}], \mathbf {C}_{\mathbf {x}\mathbf {x}})\), and \(\mathbf {n}\in \mathbb {C}^{m\times 1}\) is a zero mean noise vector with covariance matrix \(\mathbf {C}_{\mathbf {n}\mathbf {n}}\) and independent of \(\mathbf {x}\) (the PDF of \(\mathbf {n}\) is otherwise arbitrary), then the CWCU LMMSE estimator minimizing the Bayesian MSEs \(E_{\mathbf {y},\mathbf {x}}[|\hat{x}_i - x_i|^2]\) under the constraints \(E_{\mathbf {y}|x_i}[\hat{x}_i|x_i]=x_i\) for \(i = 1,2,\cdots ,n\) is given by

with

where the elements of the real diagonal matrix \(\mathbf {D}\) are

The mean of the error \(\mathbf {e} = \mathbf {x}-\hat{\mathbf {x}}_{\mathrm {CL}}\) (in the Bayesian sense) is zero, and the error covariance matrix \(\mathbf {C}_{\mathbf {e}\mathbf {e},\mathrm {CL}}\) which is also the minimum Bayesian MSE matrix \(\mathbf {M}_{\hat{\mathbf {x}}_{\mathrm {CL}}}\) is

with \(\mathbf {A} = \mathbf {C}_{\mathbf {x}\mathbf {x}}\mathbf {H}^H(\mathbf {H}\mathbf {C}_{\mathbf {x}\mathbf {x}}\mathbf {H}^H+\mathbf {C}_{\mathbf {n}\mathbf {n}})^{-1} \mathbf {H}\mathbf {C}_{\mathbf {x}\mathbf {x}}\). The minimum Bayesian MSEs are \(\mathrm {Bmse}(\hat{x}_{\mathrm {CL},i}) = [\mathbf {M}_{\hat{\mathbf {x}}_{\mathrm {CL}}}]_{i,i}\).

The part on the error performance can simply be proved by inserting in the definition of \(\mathbf {e}\) and \(\mathbf {C}_{\mathbf {e}\mathbf {e}}\), respectively. From (17) it can be seen that the CWCU LMMSE estimator matrix can be derived as the product of the diagonal matrix \(\mathbf {D}\) with the LMMSE estimator matrix \(\mathbf {E}_{\text {L}}=\mathbf {C}_{\mathbf {x}\mathbf {y}}\mathbf {C}_{\mathbf {y}\mathbf {y}}^{-1} = \mathbf {C}_{\mathbf {x}\mathbf {x}}\mathbf {H}^H(\mathbf {H}\mathbf {C}_{\mathbf {x}\mathbf {x}}\mathbf {H}^H+\mathbf {C}_{\mathbf {n}\mathbf {n}})^{-1}\). Furthermore, we have \(E_{\mathbf {y}|x_i}[\hat{x}_{\mathrm {L},i}|x_i] = [\mathbf {D}]_{i,i}^{-1} x_i + (1-[\mathbf {D}]_{i,i}^{-1})E_{x_i}[x_i]\) for the LMMSE estimator. From (18) it also follows that

The CWCU LMMSE estimator will in general not commute over linear transformations, an exception is the transformation over a diagonal matrix as partly discussed in [5].

2.2 Complex Parameter Vectors with Mutually Independent Elements

In case the elements of the parameter vector are mutually independent (8) becomes

\(E_{\mathbf {y}|x_i}[\hat{x}_i|x_i]=x_i\) is fulfilled if \(\mathbf {e}_i^H\mathbf {h}_i=1\) and \(c_i = -\mathbf {e}_i^H\bar{\mathbf {H}}_i E_{\bar{\mathbf {x}}_i}[\bar{\mathbf {x}}_i]\). No further assumptions on the PDF of \(\mathbf {x}\) are required. Following similar arguments as above again leads to a constrained optimization problem [5]. Solving it leads to

Proposition 2

If the observed data \(\mathbf {y}\) follow the linear model in (4), where \(\mathbf {y}\in \mathbb {C}^{m\times 1}\) is the data vector, \(\mathbf {H}\in \mathbb {C}^{m\times n}\) is a known observation matrix, \(\mathbf {x}\in \mathbb {C}^{n\times 1}\) is a parameter vector with mean \(E_{\mathbf {x}}[\mathbf {x}]\), mutually independent elements and covariance matrix \(\mathbf {C}_{\mathbf {x}\mathbf {x}} = \mathrm {diag}\{\sigma _{x_1}^2,\sigma _{x_2}^2,\cdots ,\sigma _{x_n}^2\}\), \(\mathbf {n}\in \mathbb {C}^{m\times 1}\) is a zero mean noise vector with covariance matrix \(\mathbf {C}_{\mathbf {n}\mathbf {n}}\) and independent of \(\mathbf {x}\) (the PDF of \(\mathbf {n}\) is otherwise arbitrary), then the CWCU LMMSE estimator minimizing the Bayesian MSEs \(E_{\mathbf {y},\mathbf {x}}[|\hat{x}_i - x_i|^2]\) under the constraints \(E_{\mathbf {y}|x_i}[\hat{x}_i|x_i]=x_i\) for \(i = 1,2,\cdots ,n\) is given by (16) and (17), where the elements of the real diagonal matrix \(\mathbf {D}\) are

Since for mutually independent parameters the \(i^{th}\) row of the LMMSE estimator is \(\mathbf {e}_{\text {L},i}^H=\sigma _{x_i}^2\mathbf {h}_i^H(\mathbf {H}\mathbf {C}_{\mathbf {x}\mathbf {x}}\mathbf {H}^H+\mathbf {C}_{\mathbf {n}\mathbf {n}})^{-1}\) it follows from (22) that

It therefore holds that \(\mathrm {diag}\{\mathbf {E}_{\text {CL}}\mathbf {H}\} = \mathbf {1}\). Furthermore, in [5] we showed that for mutually independent parameters \(\mathbf {e}_{\text {CL},i}\) does not depend on \(\sigma _{x_i}^2\) and is also given by \(\mathbf {e}_{\text {CL},i} = (\mathbf {h}_i^H \mathbf {C}_i^{-1} \mathbf {h}_i)^{-1} \mathbf {C}_i^{-1} \mathbf {h}_i\), where \(\mathbf {C}_i= \bar{\mathbf {H}}_i \mathbf {C}_{\bar{\mathbf {x}}_i\bar{\mathbf {x}}_i} \bar{\mathbf {H}}_i^H + \mathbf {C}_{\mathbf {n}\mathbf {n}}\).

2.3 Other Cases

If \(\mathbf {x}\) is whether complex proper Gaussian nor a vector with mutually independent parameters, then we have the following possibilities: If \(E_{\mathbf {y}|x_i}[\hat{x}_i|x_i]\) is a linear function of \(x_i\) for all \(i = 1,2,\cdots ,n\) then we can derive the CWCU LMMSE estimator similar as in Sect. 2.1. In the remaining cases still an estimator can be found that fulfills the CWCU constraints. As discussed above the choice \(\mathbf {e}_i^H\mathbf {h}_i = 1\), \(\mathbf {e}_i^H\bar{\mathbf {H}}_i=\mathbf {0}^T\) together with \(c_i=0\) for all \(i = 1,2,\cdots ,n\) ensures that (3) holds. Inserting these constraints into the Bayesian MSE cost functions and solving the constrained optimization problems leads to

with \(\mathbf {C}_{\mathbf {e}\mathbf {e},\text {B}} = (\mathbf {H}^H\mathbf {C}_{\mathbf {n}\mathbf {n}}^{-1}\mathbf {H})^{-1}\) as the Bayesian error covariance matrix. \( \mathbf {e}_i^H\mathbf {h}_i = 1 \& \mathbf {e}_i^H\bar{\mathbf {H}}_i=\mathbf {0}\) for all \(i = 1,2,\cdots ,n\) is equivalent to \(\mathbf {E}\mathbf {H}=\mathbf {I}\). This implies \(\hat{\mathbf {x}}_{\text {B}} = \mathbf {E}_{\text {B}}\mathbf {y}= \mathbf {x} + \mathbf {E}_{\text {B}}\mathbf {n}\). It follows that the estimator in (24) also fulfills the global unbiasedness condition \(E_{\mathbf {y}|\mathbf {x}}[\hat{\mathbf {x}}_{\text {B}}|\mathbf {x}]=\mathbf {x}\) for every \(\mathbf {x}\in \mathbb {C}^{n\times 1}\). This estimator which is the BLUE is not able to exploit any prior knowledge about \(\mathbf {x}\). Usually the BLUE is treated in the classical instead of the Bayesian framework.

3 Applications

3.1 QPSK Data Estimation

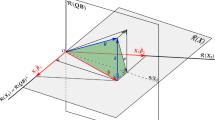

An example that exhibits the properties of the CWCU LMMSE concept most demonstrative is the estimation of channel distorted and noisy received quadrature amplitude modulated (QAM) data symbols. We assume an underlying linear model as in (4), with a parameter vector \(\mathbf {x}\) consisting of 4 mutually independent 4-QAM symbols, each out of \(\{\pm 1 \pm j\}\), complex proper additive white Gaussian noise (AWGN) with variance \(\sigma _n^2\), and a 4\(\,\times \,\)4 channel matrix \(\mathbf {H}\). Due to the mutually independence of the 4-QAM data symbols we use the CWCU LMMSE estimator from Proposition 2. The experiment is repeated a large number of times for a fixed \(\sigma _n^2\) and for a particularly chosen channel matrix \(\mathbf {H}\). Figure 1 visualizes the relative frequencies of the estimates \(\hat{x}_{\text {B},1} = [\hat{\mathbf {x}}_{\text {B}}]_1\) (BLUE), \(\hat{x}_{\text {CL},1} = [\hat{\mathbf {x}}_{\text {CL}}]_1\) (CWCU LMMSE), \(\hat{x}_{\text {L},1} = [\hat{\mathbf {x}}_{\text {L}}]_1\) (LMMSE) in the complex plane. The estimates of both \(\hat{x}_{\text {B},1}\) and \(\hat{x}_{\text {CL},1}\) are centered around the true constellation points since these estimators fulfill the CWCU constraints. The Bayesian MSE of \(\hat{x}_{\text {CL},1}\) is clearly below the one of \(\hat{x}_{\text {B},1}\) since the former is able to incorporate the prior knowledge inherent in \(\mathbf {C}_{\mathbf {x}\mathbf {x}}=\sigma _{\mathbf {x}}^2 \mathbf {I}\). \(\hat{x}_{\text {L},1}\) is conditionally biased towards the prior mean which is 0. While the LMMSE estimator exhibits the lowest Bayesian MSE it can be shown that the LMMSE and CWCU LMMSE estimator lead to the same bit error ratio (BER) when the decision boundaries are adapted properly. However, the BLUE shows a worse performance in the MSE and in the BER.

3.2 Channel Estimation

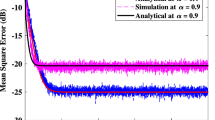

As a second example to demonstrate the properties of the CWCU LMMSE estimator we choose the well-known channel estimation problem for IEEE 802.11a/n WLAN standards [7], which extends our investigations in [5]. The standards define two identical length \(N=64\) preamble symbols designed such that their discrete Fourier transformed (DFT) versions show \(\pm 1\) at 52 subcarrier positions (indexes \(\{1,...,26,38,...63\}\)) and zeros at the remaining ones (indexes \(\{0,27,...,37\}\)). The channel impulse response (CIR) is modeled as a zero mean complex proper Gaussian vector, i.e. \(\mathbf {h}\sim \mathcal {CN}(\mathbf {0}, \mathbf {C}_{\mathbf {h}\mathbf {h}})\), with \(\mathbf {C}_{\mathbf {h}\mathbf {h}} = \mathrm {diag}\{\sigma _0^2,\sigma _1^2,...,\sigma _{l_h-1}^2\}\) and exponentially decaying power delay profile with \(\sigma _i^2 = \left( 1- e^{-T_s/\tau _{rms}})\right) e^{-i T_s/\tau _{rms}}\) for \(i=0,1,...,l_h-1\). \(T_s\) and \(\tau _{rms}\) are the sampling time and the channel delay spread, respectively, and \(l_h\) is the channel length which can be assumed to be considerably smaller than N. In our setup we chose \(T_s=50\)ns, \(\tau _{rms}=100\)ns, and \(l_h=16\). The transmission of the training symbols over the channel can again be written as a linear model with complex proper AWGN noise, and with \(\mathbf {h}\) as the vector parameter whose realization is to be estimated, cf. [5]. Figure 2 shows the Bayesian MSEs of the BLUE (\(\hat{\mathbf {h}}_{\text {B}})\), the LMMSE estimator (\(\hat{\mathbf {h}}_{\text {L}})\), and the CWCU LMMSE estimator (\(\hat{\mathbf {h}}_{\text {CL}})\) for a time domain noise variance of \(\sigma _n^2=0.01\). Proposition 2 has been used to derive \(\hat{\mathbf {h}}_{\text {CL}}\) since the elements of \(\mathbf {h}\) are mutually independent. \(\hat{\mathbf {h}}_{\text {CL}}\) almost reaches the performance of \(\hat{\mathbf {h}}_{\text {L}}\), and in contrast to the latter it additionally shows the property of conditional unbiasedness. Both estimators incorporate the prior knowledge inherent in \(\mathbf {C}_{\mathbf {h}\mathbf {h}}\) which results in a huge performance gain over \(\hat{\mathbf {h}}_{\text {B}}\). We now turn to frequency response estimators and note that the vector of frequency response coefficients \(\mathbf {\tilde{\mathbf {h}}}\in \mathbb {C}^{64\times 1}\) (which corresponds to the DFT of the zero-padded impulse response \(\begin{bmatrix} \mathbf {h}^T&\mathbf {0}^T \end{bmatrix}^T\)) consists of proper Gaussian elements, but the PDF of \(\mathbf {\tilde{\mathbf {h}}}\) cannot be written in the form of a multivariate proper Gaussian PDF. The LMMSE estimator \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {L}}\) is simply obtained by the DFT of \(\begin{bmatrix} \hat{\mathbf {h}}_{\text {L}}^T&\mathbf {0}^T \end{bmatrix}^T\) (since it commutes over linear transformations). As discussed in [5], the BLUE \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {B}}\) can be derived correspondingly. The CWCU LMMSE estimator \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {CL}}\) cannot be derived in this way since it does not commute over general linear transformations. However, although \(\mathbf {\tilde{\mathbf {h}}}\) is not a proper Gaussian vector, \(E_{\bar{\mathbf {\tilde{\mathbf {h}}}}_i|\tilde{h}_i}[\bar{\mathbf {\tilde{\mathbf {h}}}}_i|\tilde{h}_i]\) is linear in \(\tilde{h}_i\) (for all \(i=0,1, \cdots ,N-1\)), and one can easily show that (16)–(18) can be applied to determine the CWCU LMMSE estimator. The frequency domain version of the prior covariance matrix \(\mathbf {C}_{\mathbf {h}\mathbf {h}}\) is required for its derivation. Figure 3 shows the Bayesian MSEs of \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {B}}\), \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {L}}\), and \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {CL}}\), respectively. \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {B}}\) is outperformed by \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {L}}\) and \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {CL}}\) at all frequencies, but the performance loss is significant at the large gap from subcarrier 27 to 37, where no training information is available. In contrast, \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {L}}\) and \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {CL}}\) show excellent interpolation properties along this gap. Large estimation errors of \(\hat{\mathbf {\tilde{\mathbf {h}}}}_{\text {B}}\) in this spectral region are spread over all time domain samples which explains the poor performance of \(\hat{\mathbf {h}}_{\text {B}}\). Note that in practice this is only critical if \(\hat{\mathbf {h}}_{\text {B}}\) is incorporated in the receiver processing, however, pure frequency domain receivers only require estimates at the occupied 52 subcarrier positions.

4 Conclusion

In this work we investigated the CWCU LMMSE estimator for the linear model. First, we derived the estimator for complex proper Gaussian parameter vectors, and for the case of mutually independent (and otherwise arbitrarily distributed) parameters. For the remaining cases the CWCU LMMSE estimator may correspond to a globally unbiased estimator. The implications of the CWCU constraints have been demonstrated in a data estimation example using a discrete alphabet, and in a channel estimation application. In both applications the CWCU LMMSE estimator considerably outperforms the globally unbiased BLUE.

References

Kay, S.M.: Fundamentals of Statistical Signal Processing: Estimation Theory, 1st edn. Prentice-Hall PTR, Upper Saddle River (2010)

Triki, M., Slock, D.T.M.: Component-wise conditionally unbiased bayesian parameter estimation: general concept and applications to kalman filtering and LMMSE channel estimation. In: Proceedings of the Asilomar Conference on Signals, Systems and Computers, Pacific Grove, USA, pp. 670–674, November 2005

Triki, M., Salah, A., Slock, D.T.M.: Interference cancellation with Bayesian channel models and application to TDOA/IPDL mobile positioning. In: Proceedings of the International Symposium on Signal Processing and its Applications, pp. 299–302, August 2005

Triki, M., Slock, D.T.M.: Investigation of some bias and MSE issues in block-component-wise conditionally unbiased LMMSE. In: Proceedings of the Asilomar Conference on Signals, Systems and Computers, Pacific Grove, USA, pp. 1420–1424, November 2006

Huemer, M., Lang, O.: On component-wise conditionally unbiased linear bayesian estimation. In: Proceedings of the Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, USA, pp. 879–885, November 2014

Adali, T., Schreier, P.J., Scharf, L.L.: Complex-valued signal processing: the proper way to deal with impropriety. IEEE Trans. Sig. Process. 59(11), 5101–5125 (2011)

IEEE Std 802.11a-1999, Part 11: Wireless LAN Medium Access Control (MAC) and Physical Layer (PHY) specifications: High-Speed Physical Layer in the 5 GHz Band (1999)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Lang, O., Huemer, M. (2015). CWCU LMMSE Estimation Under Linear Model Assumptions. In: Moreno-Díaz, R., Pichler, F., Quesada-Arencibia, A. (eds) Computer Aided Systems Theory – EUROCAST 2015. EUROCAST 2015. Lecture Notes in Computer Science(), vol 9520. Springer, Cham. https://doi.org/10.1007/978-3-319-27340-2_67

Download citation

DOI: https://doi.org/10.1007/978-3-319-27340-2_67

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-27339-6

Online ISBN: 978-3-319-27340-2

eBook Packages: Computer ScienceComputer Science (R0)