Abstract

We introduce a new gesture-based user interface for drawing graphs that recognizes specific body gestures using the Microsoft Kinect sensor. Our preliminary user study demonstrates the potential for using gesture-based interfaces in graph drawing.

This research was supported in part by the Natural Sciences and Engineering Research Council of Canada (NSERC).

Traditional input devices for manual data entry of graphs include mice, keyboards, and touch screens. Humans naturally communicate using body gestures. Recent research explores body or mid-air gestures as a form of interaction, particularly when using traditional input devices may be unintuitive or undesirable. Inspired by advances in gesture-based input technologies, we investigate the application of mid-air gestures to graph drawing. We created a prototype system called KiDGraD (using Kinect to Detect skeletons for Graph Drawing), which uses a Microsoft Kinect to recognize a limited set of body gestures designed to allow the user to manipulate a graph’s nodes and edges. We conducted a preliminary user evaluation examining the perceived naturalness of our proposed gesture set and users’ attitudes towards our general approach. Feedback from this initial user study suggests that gesture-based graph drawing has a number of potential applications, motivating future research into improved recognition capabilities as well as effective and expressive gesture sets.

Prior research on gesture-based interactions has focused on both gestures on digital surfaces (e.g., multi-touch gestures on digital tables [3]) and on mid-air gestures, where sensors and cameras are used to detect body movements (e.g., [2]). While a number of systems exist for inputting and editing graphs (e.g., [1]), there is limited prior research examining the use of non-traditional user interfaces for drawing or editing graphs from human input. To the authors’ knowledge, this work is the first to examine a mid-air gesture-based user interface for graph drawing.

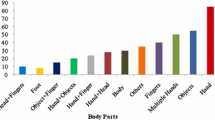

The KiDGraD user interface includes a drawing area (a grid that displays the graph, overlaid with a sketch of the user’s detected skeleton), a sidebar to access commands, and a header that displays the active command. The system implements five operations: adding nodes, deleting nodes, adding edges, deleting edges, and reset. A user can activate a command in one of two ways: by performing the corresponding gesture (see Fig. 1) or by using the sidebar.
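To make the five operations concrete, the following is a minimal sketch of a graph-editing model that a gesture recognizer or a sidebar could both drive. It is not KiDGraD's implementation: the names `Command`, `GraphEditor`, and `run` are hypothetical, nodes are assumed to be grid positions, edges are assumed to be undirected, and gesture recognition itself is omitted.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Optional


class Command(Enum):
    """The five operations named in the text (identifiers are hypothetical)."""
    ADD_NODE = auto()
    DELETE_NODE = auto()
    ADD_EDGE = auto()
    DELETE_EDGE = auto()
    RESET = auto()


@dataclass
class GraphEditor:
    nodes: set = field(default_factory=set)   # assumed: (column, row) grid positions
    edges: set = field(default_factory=set)   # assumed: undirected, stored as frozensets
    active: Optional[Command] = None          # the command a header would display

    def run(self, command, *points):
        """Apply a command, whether it came from a gesture or from the sidebar."""
        self.active = command
        if command is Command.ADD_NODE:
            self.nodes.add(points[0])
        elif command is Command.DELETE_NODE:
            self.nodes.discard(points[0])
            # Deleting a node also removes every edge incident to it.
            self.edges = {e for e in self.edges if points[0] not in e}
        elif command is Command.ADD_EDGE:
            u, v = points
            # Only connect two distinct nodes that already exist.
            if u != v and u in self.nodes and v in self.nodes:
                self.edges.add(frozenset((u, v)))
        elif command is Command.DELETE_EDGE:
            self.edges.discard(frozenset(points))
        elif command is Command.RESET:
            self.nodes.clear()
            self.edges.clear()


# Usage: build a two-node graph, connect the nodes, then delete one node.
editor = GraphEditor()
editor.run(Command.ADD_NODE, (0, 0))
editor.run(Command.ADD_NODE, (1, 2))
editor.run(Command.ADD_EDGE, (0, 0), (1, 2))
editor.run(Command.DELETE_NODE, (0, 0))
```

Routing both input paths through a single `run` method keeps the graph state independent of how a command was triggered, so a recognizer could be swapped or refined without touching the editing logic.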

As a first proof-of-concept exploration of our gesture-based approach to graph drawing, we conducted an informal usability study with ten participants. The goals of the study were to gain initial insight into the intuitiveness and ease of the gestures, as well as to elicit feedback from users on the potential strengths and limitations of this approach. We asked participants to interact with KiDGraD by drawing a number of sample graphs, after which we solicited feedback on both the system concept and the gesture set.

Participants responded enthusiastically to the system and to the idea of using gestures to draw graphs. With mean responses of 4.0 or greater on a 5-point Likert scale, participants appeared to find the system fun, simple to use, and relatively efficient. Responses regarding comfort and whether the system worked as participants expected were slightly less positive, and participants suggested a number of potential improvements to both the gesture set and the drawing interface. The post-session interviews revealed that almost all participants found the idea of using gestures to draw graphs interesting and were excited to move away from mice and keyboards.

Once the gesture set and recognition technologies are refined, there are a number of interesting directions to explore in terms of applications. In particular, our participants thought that mid-air graph drawing might be beneficial in educational settings, where the system could be used as an engaging way to teach children about graphs.

References

1. Fröhlich, M., Werner, M.: Demonstration of the interactive graph visualization system da Vinci. In: Tamassia, R., Tollis, I.G. (eds.) GD 1994. LNCS, vol. 894, pp. 266–269. Springer, Heidelberg (1995)

2. Jang, S., Elmqvist, N., Ramani, K.: GestureAnalyzer: visual analytics for pattern analysis of mid-air hand gestures. In: Proceedings of SUI, pp. 30–39 (2014)

3. Pfeuffer, K., Alexander, J., Chong, M.K., Gellersen, H.: Gaze-touch: combining gaze with multi-touch for interaction on the same surface. In: Proceedings of UIST, pp. 509–518 (2014)

© 2015 Springer International Publishing Switzerland

Cite this paper

Bahoo, Y., Bunt, A., Durocher, S., Mehrpour, S. (2015). Drawing Graphs Using Body Gestures. In: Di Giacomo, E., Lubiw, A. (eds.) Graph Drawing and Network Visualization. GD 2015. Lecture Notes in Computer Science, vol. 9411. Springer, Cham. https://doi.org/10.1007/978-3-319-27261-0_51

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-27260-3

Online ISBN: 978-3-319-27261-0