Abstract

Extreme learning machine (ELM) is a fast algorithm to train single-hidden layer feedforward neural networks (SLFNs). Like the traditional classification algorithms, such as decision tree, Naïve Bayes classifier and support vector machine, ELM also tends to provide biased classification results when the classification tasks are imbalanced. In this article, we first analyze the relationship between ELM and Naïve Bayes classifier, and then take the decision outputs of all training instances in ELM as probability density representation by kernel probability density estimation method. Finally, the optimal classification hyperplane can be determined by finding the intersection point of two probability density distribution curves. Experimental results on thirty-two imbalanced data sets indicate that the proposed algorithm can address class imbalance problem effectively, as well outperform some existing class imbalance learning algorithms in the context of ELM.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Extreme learning machine

- Class imbalance learning

- Probability density estimation

- Naïve Bayes classifier

1 Introduction

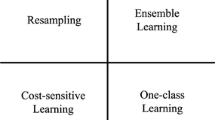

Extreme learning machine proposed by Huang et al., [1] has become a popular research topic in machine learning in recent years [2]. It is proved that single-hidden layer feedforward neural networks (SLFNs) with arbitrary hidden parameters and continuous activation function can universally approximate to any continuous functions [1]. Some recent research [3–6], however, indicated that the performance of ELM could be destroyed by class imbalance distribution, which is similar with some traditional classifiers, such as support vector machine, Naïve Bayes classifier and decision tree. In class imbalance scenario, the accuracy of the minority class always tends to be underestimated, causing meaningless classification results [7]. Therefore, it is necessary to adopt some strategies to make the classification model provide impartial classification results.

In the context of ELM, some researchers have presented several class imbalance learning algorithms. Weighted extreme learning machine (WELM) appoints different penalty parameters for the training errors belonging to the instances in different categories, decreasing the possibility of misclassifying the minority class samples [3]. The penalty parameters, however, can be only allocated empirically. A similar algorithm called Fuzzy ELM (FELM) was proposed in [4], which changes the distributions of penalty parameters by inserting a fuzzy matrix. As two well-known data-layer class imbalance learning algorithms, random oversampling (ROS) and synthetic minority oversampling technology (SMOTE) have also be integrated into ELM to deal with practical class imbalance applications [5, 6].

In this article, we try to present a novel algorithm to deal with class imbalance problem in the context of ELM. First, we analyze the relationship between ELM and Naïve Bayes classifier, and indicate that the decision output in ELM approximately equals to the posterior probability in Naïve Bayes classifier. Then, on the decision output space, we estimate the probability density distributions for two different classes, respectively. Finally, the optimal position of the classification hyperplane can be determined by finding the intersection point of two probability density distribution curves. We compare the proposed algorithm with several popular class imbalance learning algorithms, and the experimental results indicate its superiority.

2 Theories and Methods

2.1 Extreme Learning Machine

Considering a supervised learning problem where we have a training set with N training instances and m classes, \( ({\text{x}}_{i} , {\text{t}}_{i} ) \in {\text{R}}^{n} \times {\text{R}}^{m} \). Here, \( {\text{x}}_{i} \) is an n × 1 input vector and \( {\text{t}}_{i } \) is the corresponding m × 1 target vector. ELM aims to learn a decision rule or an approximation function based on the training data. In other words, ELM is used to create an approximately accurate mapping relationship between \( {\text{x}}_{i} \) and \( {\text{t}}_{i} \).

Unlike the traditional back-propagation (BP) algorithm [8], ELM provides the hidden parameters randomly to training SLFNs. Suppose there are L hidden layer nodes, then for an instance x, the corresponding hidden layer output can be presented by a row vector \( {\text{h}}\left( {\text{x}} \right) = [{\text{h}}_{1} \left( {\text{x}} \right), \ldots ,{\text{h}}_{L} \left( {\text{x}} \right)] \), thus the mathematical model of ELM is:

where \( {\text{H}} = \left[ {{\text{h}}\left( {{\text{x}}_{1} } \right), \ldots ,{\text{h}}\left( {{\text{x}}_{N} } \right)} \right]^{T} \) is the hidden layer output matrix for the whole training set, β is the output weight matrix and T is the target vector. Here, only the output weight matrix β is unknown. Then we can adopt least square method to acquire the solution of β that can be described as follows:

Here, \( {\hat{H}}^{\dag } \) is the Moore-Penrose “generalized” inverse of the hidden layer output matrix \( {\text{H}} \), which can guarantee the solution is least norm least square solution of Eq. (1). \( C \) is the penalty parameter to mediate the balance relationship between the training errors and the generalization ability.

2.2 Relationship Between ELM and Naïve Bayes Classifier

According to some previous work, the decision outputs of SLFNs trained by BP algorithm [8] can be regarded as an approximation of posteriori probability functions in Naïve Bayes classifier [9, 10]. Suppose there are lots of training instances, and each of them belongs to one of m classes. We can train an SLFNs to obtain the output weight matrix \( {\text{w}} \). Let \( f_{k} ({\text{x}},{\text{w}}) \) be the output of the kth output node of the SLFNs, i.e., the discriminant function corresponding to the kth class \( w_{k} \), then we can recall Bayes formula,

and the Bayes decision for any instance x: choosing the class \( w_{k} \) which has the largest discriminant function \( f_{k} \left( {\text{x}} \right) = P\left( {w_{k} | {\text{x}}} \right) \). Without loss of generality, suppose the training outputs are restricted as {0, 1}, where 1 denotes the output of the corresponding class and 0 denotes the outputs of the other classes. The contribution to the criterion function based on a single output unit k for finite number of training samples x is:

where n denotes the number of training instances, while \( n_{k} \) stands for the number of instances belonging to the class \( w_{k} \). In the limit of infinite data, we can use Bayes formula to express Eq. (3) as:

Obviously, the right-hand side in Eq. (4) is irrelevant with the weight w, thus SLFNs is to minimize:

Because this is true for each class, SLFNs minimizes the sum:

Therefore, in the limit of infinite data, the outputs of the trained SLFNs will approximate the true posterior probabilities in a least-squares sense, i.e., \( f_{k} \left( {{\text{x}},{\text{w}}} \right) = p(w_{k} |{\text{x}}) \).

As we know, like BP algorithm, ELM also provides approximate least squares solution of Eq. (1) though it simultaneously minimizes the norm of the weight matrix. Therefore, the decision outputs in ELM reflect the posteriori probabilities of different classes in Naïve Bayes classifier to some extent.

2.3 Probability Density Estimation

As described above, the decision outputs in ELM can reflect posteriori probabilities of different classes, thus for binary-class problem, we can map all instances from original feature space to an one-dimensional space. Here, ELM is used as a feature extraction tool. Then, we regard to estimate the probability density distributions of two different classes on the compressed one-dimensional feature space. Specifically, kernel probability density estimation [11], which is a nonparametric way to estimate the probability function of a random variable, is adopted.

Figure 1 shows a schematic diagram about probability density distributions of a binary-class problem on the one-dimensional decision output space acquired from ELM. From Fig. 1, we observe that after estimating probability density distributions, the prior probabilities for the two classes \( p(w_{ + }) \) and \( p(w_{ - }) \), and the corresponding conditional distribution probabilities \( p({\text{x}}|w_{ + }) \) and \( p({\text{x}}|w_{ - }) \) can be both obtained, then recall Bayes function, the posterior probabilities of two classes can be calculated as:

It is clear that when \( p\left( {w_{ + }| {\text{x}}} \right) = p\left( {w_{ - } | {\text{x}}} \right) \), i.e., when \( p\left( {{\text{x|}}w_{ + } } \right)p\left( {w_{ + } } \right) = p({\text{x}}|w_{ - })p(w_{ - }) \), the corresponding x value is selected as break point in Bayes decision. For class imbalance data, however, because \( p(w_{ + }) \ll p(w_{ - }) \), to guarantee \( p\left( {{\text{x|}}w_{ + } } \right)p\left( {w_{ + } } \right) = p({\text{x}}|w_{ - })p(w_{ - } ) \), the actual break point will be pushed towards the minority class. Therefore, Fig. 1 shows that using the original break point acquired from ELM will seriously destroy the performance of the minority class, but when we find the horizon axis corresponding to the intersection point of two density distribution curves, it can be seen as the optimal break point for the classification task.

2.4 Description of the Proposed Algorithm

The detailed computational procedure of the proposed algorithm is described as follows:

3 Experiments

3.1 Datasets and Parameters Settings

The experiments are carried out on thirty-two binary-class imbalanced data sets acquired from Keel data repository [12]. The detailed information of these data sets are summarized in Table 1, where IR denotes the class imbalance ratio.

To present the superiority of the proposed algorithm, we compared it with some other class imbalance learning algorithms in the context of ELM, including ELM [1], WELM1 [3], WELM2 [3], ELM-RUS, ELM-ROS [5] and ELM-SMOTE [6]. In addition, to guarantee the impartiality of the comparative results, grid search was adopted to search the optimal parameters, where sigmoid function was used as activation function at the hidden level, and two other parameters L and C were selected from {10, 20, …, 200} and {2−20, 2−18, …, 220}, respectively. As for the performance evaluation, G-mean metric was used.

3.2 Results and Discussions

Considering the randomness of ELM, five fold cross-validation was adopted, and each experiment was randomly executed 10 times, finally the average classification results were given. Table 2 provides the G-mean values of various algorithms, where in each row, the bold denotes the best result, the underline labels the second best and the italic stands for the worst one.

From Table 2, we observe that nearly all other algorithms outperform the original ELM algorithm, demonstrating each bias correction strategy can effectively alleviate the class imbalance problem. We also note that sampling and weighting technologies have quitely similar classification performance. As we know, WELM1 and WELM2 adopt different weights to punish the training errors, but there seems no a clear winner between them, as they have acquired the highest G-mean values on five data sets, respectively. We consider that the optimal weight settings should be closely related with the practical instance distributions. In sampling series algorithms, oversampling performs better than undersampling on majority data sets, especially on those highly skewed ones. On these data sets, the instances in the minority class are quitely sparse, causing much useful information loss by using RUS algorithm. Moreover, ELM-SMOTE obviously outperforms ELM-ROS as it have acquired two more best results and seven more second best results.

In contrast with six other algorithms, the proposed algorithm performs best, because it has acquired the highest G-mean value on nine data sets and the second highest G-mean value on fifteen ones. The results demonstrate that exploring the prior information about data distribution is helpful for improving classification performance in class imbalance tasks more or less.

4 Conclusions

In this article, a probability density estimation-based ELM classification algorithm is proposed to classify imbalanced data. Unlike other class imbalance learning algorithms, the proposed algorithm does not need to change the original data or weight distributions, but only to estimate the probability density distribution of the decision outputs acquired from ELM and then to find the optimal position to place the classification hyperplane. Experimental results on thirty-two benchmark data sets verified the effectiveness and superiority of the proposed algorithm.

References

Huang, G.B., Zhou, H., Ding, X., Zhang, R.: Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. Part B Cybern. 42, 513–529 (2012)

Huang, G., Huang, G.B., Song, S., You, K.: Trends in extreme learning machine: a review. Neural Netw. 61, 32–48 (2015)

Zong, W., Huang, G.B., Chen, Y.: Weighted extreme learning machine for imbalance learning. Neurocomputing 101, 229–242 (2013)

Zhang, W.B., Ji, H.B.: Fuzzy extreme learning machine for classification. IET Electron. Lett. 49, 448–450 (2013)

Vong, C.M., Ip, W.F., Wong, P.K., Chiu, C.C.: Predicting minority class for suspended particulate matters level by extreme learning machine. Neurocomputing 128, 136–144 (2014)

Sun, S.J., Chang, C., Hsu, M.F.: Multiple extreme learning machines for a two-class imbalance corporate life cycle prediction. Knowl. Based Syst. 39, 214–223 (2013)

He, H., Garcia, E.A.: Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 21, 1263–1284 (2009)

Rumelhart, D.E., Hinton, G.E., Williams, R.J.: Learning representations by back-propagation errors. Nature 323, 533–536 (1986)

Ruck, D.W., Rogers, S.K., Kabrisky, M., Oxley, M.E., Suter, B.W.: The multilayer perceptron as an approximation to a Bayes optimal discriminant function. IEEE Trans. Neural Netw. 1, 296–298 (1990)

Wan, E.A.: Neural network classification: a Bayesian interpretation. IEEE Trans. Neural Netw. 1, 303–305 (1990)

Parzen, E.: On estimation of a probability density function and mode. Ann. Math. Stat. 33, 1065–1076 (1962)

Alcalá-Fdez, J., Fernandez, A., Luengo, J., Derrac, J., García, S., Sánchez, L., Herrera, F.: KEEL data-mining software tool: data set repository, integration of algorithms and experimental analysis framework. J. Multiple-Valued Logic Soft Comput. 17, 255–287 (2011)

Acknowledgements

This work was supported in part by National Natural Science Foundation of China under grant No. 61305058, Natural Science Foundation of Jiangsu Province of China under grant No. BK20130471, and China Postdoctoral Science Foundation under grant No. 2013M540404 and No. 2015T80481.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Yang, J., Yu, H., Yang, X., Zuo, X. (2015). Imbalanced Extreme Learning Machine Based on Probability Density Estimation. In: Bikakis, A., Zheng, X. (eds) Multi-disciplinary Trends in Artificial Intelligence. MIWAI 2015. Lecture Notes in Computer Science(), vol 9426. Springer, Cham. https://doi.org/10.1007/978-3-319-26181-2_15

Download citation

DOI: https://doi.org/10.1007/978-3-319-26181-2_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-26180-5

Online ISBN: 978-3-319-26181-2

eBook Packages: Computer ScienceComputer Science (R0)