Abstract

Continuous glucose monitoring (CGM) systems are more informative than the traditional self-monitoring of capillary blood glucose (BG). Although advances in CGM technology have significantly improved the clinical utility of CGM devices compared with earlier versions, it is often difficult to assess the accuracy and precision of current devices due to differences in assessment protocols and reporting of results. Because CGM sensor accuracy can impact both the clinical utility and patient acceptance of CGM use, it is important to consider the performance characteristics seen in the current systems when assessing the clinical value of this technology. Moreover, standardization of the metrics used to assess CGM accuracy and precision is needed to help developers, clinicians, and patients make informed decisions regarding the CGM systems they are considering. In this chapter, we discuss the most commonly used methods for the assessment of CGM system performance, the accuracy and reliability of current CGM systems, and the remaining unsolved technological and physiological hurdles.

Keywords

- Continuous Glucose Monitoring

- Artificial Pancreas

- Continuous Glucose Monitoring System

- Interstitial Glucose

- Blood Glucose Reading

1 Background

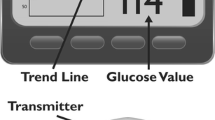

Continuous glucose monitoring (CGM) technology provides significant advantages to individuals treated with intensive insulin therapy compared with traditional self-monitoring of blood glucose (SMBG). Unlike SMBG, which measures the current glucose level at a single point in time, CGM presents a constant stream of data (measured every 1–15 min), indicating not only the current interstitial glucose level but also trends in glucose direction and velocity of glucose change. Moreover, CGM systems feature alarms that alert users when glucose is, or is predicted to be, above or below programmed glucose thresholds, which makes the technology clinically useful in identifying postprandial hyperglycemia and nocturnal hypoglycemia and in assisting individuals with hypoglycemia unawareness [43].

Numerous clinical trials have shown that use of CGM improves glycemic control and reduces hypoglycemia in children and adults with type 1 diabetes [6, 15, 20, 27, 38, 41, 48]. However, the clinical benefit of CGM use is directly related to the frequency of use of the technology. In many studies, significant clinical improvements were seen only in those patients who regularly wore their CGM devices 60–70 % of the time [2, 9, 20, 27, 41, 46–48].

As the technology continues to evolve, use of CGM is emerging as a standard of care for diabetes patients managed with intensive insulin therapy [8, 30, 39, 44]. Additionally, CGM is an integral component of the “artificial pancreas” (AP) systems under development, which utilize continuous subcutaneous insulin infusion (CSII) linked with CGM and an automated control algorithm to communicate between the two components to mimic physiologic insulin delivery.

Despite their demonstrated benefits, adoption and routine utilization of CGM have been relatively slow. A large US registry of individuals with type 1 diabetes showed that only 6 % of individuals used CGM as a regular part of their diabetes management [7]. It is also known that many patients who are started on CGM discontinue it shortly thereafter [42].

Although cost is often identified as a hindrance to CGM use [4, 26], inadequate sensor accuracy may be a more influential obstacle to widespread adoption of CGM for current use in clinical diabetes management and future use in AP systems [13, 28]. In clinical trials, the number of patients who discontinued the studies due to accuracy or sensor-related issues was significant [20, 26, 41]. Therefore, it is important that both clinicians and patients are able to easily assess the performance of the CGM systems they are considering.

2 CGM Performance Assessment

2.1 Sensor Signal

The effectiveness of CGM devices in measuring current glucose and predicting future glucose levels is dependent upon the accuracy and reliability of the CGM signal. The raw (unfiltered) signal of a CGM sensor is the basis for meaningful data output. A raw signal that exhibits strong noise and artifacts requires robust filter algorithms in order to equalize the signal. The characteristics of noise and artifacts will dictate the type of filter algorithm needed. Regardless of the filter algorithm selected, all filters introduce a time lag in that the filtered signal lags behind the raw signal; the stronger the filter algorithm, the longer the time lag. A raw signal of high quality requires minimal filtering and data processing, resulting in a much cleaner sensor response with little delay between the shown data and the raw signal.
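The relationship between filter strength and time lag can be illustrated with a minimal sketch. It assumes a causal moving-average filter and a hypothetical, noise-free raw signal that rises linearly; neither is taken from a particular CGM device, and real devices may use quite different filter algorithms. The function names and the 160 mg/dL crossing level are illustrative choices only.

```python
def moving_average(signal, window):
    """Causal moving average: each output uses only current and past samples."""
    out = []
    for i in range(len(signal)):
        start = max(0, i - window + 1)
        chunk = signal[start:i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def lag_in_samples(raw, filtered, level):
    """Number of samples by which the filtered signal reaches `level` later than the raw signal."""
    first_raw = next(i for i, v in enumerate(raw) if v >= level)
    first_filtered = next(i for i, v in enumerate(filtered) if v >= level)
    return first_filtered - first_raw


# Hypothetical raw signal: glucose rising 2 mg/dL per sample, starting at 100 mg/dL.
raw = [100 + 2 * i for i in range(60)]

light = moving_average(raw, window=3)   # weak filter (assumed window length)
strong = moving_average(raw, window=9)  # strong filter (assumed window length)

lag_light = lag_in_samples(raw, light, level=160)
lag_strong = lag_in_samples(raw, strong, level=160)
print(lag_light, lag_strong)
```

On a linear ramp, a causal moving average over N samples trails the raw signal by (N - 1)/2 samples, so the longer window shows the longer delay.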

2.2 Reference Methodology

Blood glucose measurement is most commonly used as reference data for the analysis of CGM accuracy. However, the limitations of using this reference method must be considered. It is important to consider that this method compares glucose concentrations in two body compartments: blood and interstitial fluid. Basu and colleagues [5], who were the first to directly measure the transport of glucose from the vascular compartment to subcutaneous tissue, demonstrated that the mean time to appearance of tracer glucose in the abdominal subcutaneous tissue after intravenous bolus is between 5 and 6 min in the resting, overnight fasted state. Moreover, the glucose transport lag time may vary when the patient is not in the fasting state (e.g., glucose levels are rising or declining). An example of this lag is presented in Fig. 1. As a consequence, CGM and blood glucose points that are paired according to their measurement time stamp may be erroneously paired from the physiological point of view. Additionally, because the blood glucose readings are paired with only a very small fraction of CGM readings, most CGM data are neglected, and deviations of CGM readings from the blood glucose occurring between the blood glucose measurements are not detected at all.
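The effect of pairing by time stamp can be sketched as follows. The example assumes hypothetical data in which interstitial glucose follows blood glucose with a fixed 5 min delay while glucose rises at 2 mg/dL per minute; the function `pair_with_reference`, its `lag_min` and `tolerance_min` parameters, and all values are illustrative assumptions, not a method described in this chapter.

```python
def pair_with_reference(cgm, reference, lag_min=0.0, tolerance_min=2.5):
    """Pair each reference value with the CGM reading nearest to (reference time + lag_min).

    `cgm` and `reference` are lists of (minute, mg/dL) tuples.
    Returns a list of (reference value, CGM value) pairs.
    """
    pairs = []
    for ref_time, ref_value in reference:
        target = ref_time + lag_min
        cgm_time, cgm_value = min(cgm, key=lambda reading: abs(reading[0] - target))
        # Skip the reference value if no CGM reading is close enough in time.
        if abs(cgm_time - target) <= tolerance_min:
            pairs.append((ref_value, cgm_value))
    return pairs


# Hypothetical data: blood glucose is 100 + 2*t mg/dL; the CGM reports the
# same curve delayed by an assumed 5 min, sampled every 5 min.
cgm = [(t, 100 + 2 * (t - 5)) for t in range(0, 61, 5)]
reference = [(15, 130), (30, 160)]

same_time = pair_with_reference(cgm, reference)
shifted = pair_with_reference(cgm, reference, lag_min=5)
print(same_time)  # time-stamp pairing
print(shifted)    # pairing shifted by the assumed lag
```

In this constructed case, time-stamp pairing attributes a 10 mg/dL deviation to the sensor even though the sensor tracks interstitial glucose perfectly. Note also that only two of the thirteen CGM readings enter the comparison.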

2.3 Accuracy and Precision

A common metric for assessment of CGM accuracy is the aggregate mean absolute relative difference (MARD) between all temporally matched sensor data and reference measurements across all subjects of a study. Reported as a percentage, MARD is the average of the absolute error, relative to the reference value, between all CGM values and matched reference values. A small percentage indicates that the CGM readings are close to the reference glucose value, whereas a larger MARD percentage indicates greater discrepancies between the CGM and reference glucose values.
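Written out, this definition corresponds to the following expression. The symbols are assumptions introduced here for illustration: N is the number of matched pairs, and CGM_k and REF_k are the k-th sensor reading and its matched reference value.

$$
\mathrm{MARD} = \frac{100\,\%}{N} \sum_{k=1}^{N} \frac{\lvert \mathrm{CGM}_k - \mathrm{REF}_k \rvert}{\mathrm{REF}_k}
$$

For example, a CGM reading of 90 mg/dL matched to a reference value of 100 mg/dL contributes an absolute relative difference of 10 %.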

Another metric is the median absolute relative difference, which is also expressed as a percentage. Both the MARD and median absolute relative difference are often reported in CGM accuracy studies [29, 34, 50]; however, some studies [10, 22, 49] report only the median difference, which is misleading because it diminishes the impact of outliers. When there are significant numbers of outliers, the median makes accuracy appear greater than it is.
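A small numerical sketch shows how the median hides outliers that the mean exposes. The paired readings below are hypothetical and chosen only to make the arithmetic easy to follow.

```python
from statistics import mean, median


def absolute_relative_differences(cgm, reference):
    """Absolute relative difference (%) for each matched CGM/reference pair."""
    return [100.0 * abs(c - r) / r for c, r in zip(cgm, reference)]


# Hypothetical matched pairs (mg/dL); the last CGM value is a large outlier.
reference = [100, 100, 100, 100, 100]
cgm = [105, 95, 104, 96, 160]

ard = absolute_relative_differences(cgm, reference)
mard = mean(ard)          # mean absolute relative difference
median_ard = median(ard)  # median absolute relative difference
print(round(mard, 1), round(median_ard, 1))
```

Here the single 60 % deviation raises the mean to 15.6 %, whereas the median stays at 5 %.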

Although the MARD is a more stringent metric and is easy to compute and interpret for succinct summarization of CGM properties, certain limitations should be considered when comparing reported findings from different accuracy studies [37]. Because the MARD uses blood glucose readings as a reference, the influence of time lags is introduced and the majority of CGM values are neglected. Additionally, the MARD makes no distinction between positive and negative errors or between systematic and random errors.

It is also important to consider the composition of the study cohort and study setting. Are the study subjects prone to severe and/or frequent fluctuations in glucose? How often and how long are they in the hyperglycemic and hypoglycemic range? All of these factors can significantly influence the calculated MARD. For example, in study subjects with relatively stable glucose, the MARD may show close agreement with blood glucose readings. However, in subjects whose glucose is predominantly in the hypoglycemic range or fluctuating between the hypoglycemic and hyperglycemic states, the MARD would likely be less than desirable. Therefore, it is advisable to conduct separate accuracy evaluations for different glucose concentration ranges (e.g., 40–70 mg/dL) and different rates of glucose change (e.g., stable glucose vs. rapidly changing glucose). Additionally, when comparing the accuracy of two or more different CGM systems, it is advisable to perform head-to-head assessments in which the two systems are running simultaneously in each study patient. This will neutralize the potential impact of study cohort or study setting differences.
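A range-specific evaluation of this kind can be sketched as follows. The 40–70 mg/dL range is taken from the text; the 70–180 and 180–400 mg/dL boundaries, the function names, and the paired values are assumptions made for illustration.

```python
def mard(pairs):
    """MARD (%) over a list of (CGM value, reference value) pairs."""
    return 100.0 * sum(abs(c - r) / r for c, r in pairs) / len(pairs)


def mard_by_range(pairs, ranges):
    """MARD per reference glucose range; None where a range contains no pairs."""
    result = {}
    for label, (low, high) in ranges.items():
        in_range = [(c, r) for c, r in pairs if low <= r < high]
        result[label] = mard(in_range) if in_range else None
    return result


# Assumed range boundaries in mg/dL (only 40-70 is named in the text).
ranges = {
    "hypoglycemic": (40, 70),
    "euglycemic": (70, 180),
    "hyperglycemic": (180, 400),
}

# Hypothetical (CGM, reference) pairs in mg/dL.
pairs = [(66, 60), (45, 50), (105, 100), (147, 150), (190, 200), (315, 300)]

overall = mard(pairs)
per_range = mard_by_range(pairs, ranges)
print(round(overall, 2), per_range)
```

In this constructed data set the overall MARD is about 6.2 %, while the hypoglycemic range alone shows roughly 10 %, a difference that an aggregate figure would conceal.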

Although use of the MARD provides easily interpreted information about the accuracy of CGM devices compared with reference blood glucose, its utility is impacted by the limitations previously discussed. However, when used in conjunction with calculation of sensor precision, the limitations are diminished (Table 1).

Precision of the absolute relative difference (PARD) is a metric used to compare glucose readings from two identical CGM sensors working simultaneously in the same patient; a lower percentage indicates greater precision (less variance) [3, 37, 50, 51]. It is calculated according to Eq. (1):
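Stated here as an assumption based on the usual definition of this metric in the precision literature [37], rather than as a reproduction of the authors' exact notation, the calculation relates the absolute difference between the two simultaneous sensor readings to their mean. The symbols CGM1_k and CGM2_k for the k-th simultaneous readings of the two sensors are introduced for illustration.

$$
\mathrm{PARD}_k = 100\,\% \cdot \frac{\lvert \mathrm{CGM1}_k - \mathrm{CGM2}_k \rvert}{\tfrac{1}{2}\left(\mathrm{CGM1}_k + \mathrm{CGM2}_k\right)}
$$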

Using this metric, all CGM data are considered for performance evaluation. However, there is no correlation to blood glucose concentration. Nevertheless, the comparison of two concurrent CGM sensors provides additional and complementary insights into CGM sensor properties and performance.
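The following sketch shows how such a sensor-to-sensor comparison uses every simultaneous reading and needs no blood glucose values. It assumes that each pair of readings is compared relative to the mean of the two sensors and that the per-sample values are then averaged; the function name and the traces are hypothetical.

```python
def pard(sensor_a, sensor_b):
    """Mean precision absolute relative difference (%) of two simultaneous sensor traces.

    Assumption: each sample pair is compared relative to the mean of the two readings.
    """
    values = [
        100.0 * abs(a - b) / ((a + b) / 2.0)
        for a, b in zip(sensor_a, sensor_b)
    ]
    return sum(values) / len(values)


# Hypothetical simultaneous readings (mg/dL) from two sensors in one patient.
sensor_a = [100, 110, 120, 130]
sensor_b = [100, 90, 120, 130]

overall_pard = pard(sensor_a, sensor_b)
print(overall_pard)
```

Because both traces are CGM signals, two sensors that share the same bias against blood glucose would still yield a low value, which is why the metric complements rather than replaces the MARD.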

CGM performance studies that present both MARD and PARD at various glucose ranges facilitate a more reliable assessment of a given CGM sensor's true accuracy and precision characteristics. Data from a study that assessed the accuracy of three different CGM systems that were worn simultaneously (in duplicate) are provided as an example of how use of MARD and PARD allows for more straightforward comparisons of various CGM systems [21].

Although significant differences in accuracy and precision between the devices are readily apparent, the data also highlight the differences when comparing overall accuracy/precision across the full glucose range studied (40–400 mg/dL) and within the hypoglycemic range (40–70 mg/dL). This is important because accuracy and precision in the hypoglycemic range are required for the technology to provide timely warnings of immediate or impending hypoglycemia. Failure to provide reliable warnings can impact user acceptance; fear of hypoglycemia is widely recognized as a major barrier to achieving and sustaining optimal glycemic control [16].

3 State of the Art

Despite advances in technology, CGM devices remain less accurate than capillary blood glucose measurements. Some of these deviations are physiological, such as the time lags that occur when glucose levels are rapidly changing. At other times, CGM sensor data may deviate from measured blood glucose values due to inaccurate calibration of the CGM device, sensor dislodgment, and other artifacts such as local decrease of blood flow [25]. If these unpredictable deviations are of sufficient length or magnitude, they could result in erroneous and potentially harmful patient responses (e.g., insulin under-dosing or over-dosing) [32].

It should be noted that the “accuracy gap” between current CGM devices and blood glucose monitoring systems is shrinking. Whereas current blood glucose monitoring systems that fulfill the ISO 15197:2003 criteria exhibit overall MARD values ranging from 4.9 to 6.8 % [45], a recent study of CGM devices showed MARD values as low as 9.2 % [51].

When assessing CGM performance, it is also important to consider differences between the currently available CGM systems. Figure 2 presents comparisons in accuracy (MARD) from four recent studies [17, 18, 21, 33] between the first generation CGM system (FreeStyle Navigator™, Abbott Diabetes, Alameda, USA) and more recent CGM systems from Medtronic (Northridge, USA) and Dexcom (San Diego, USA).

Advances in CGM development have also facilitated significant improvements in accuracy at various rates of glucose change as assessed by MARD. Figure 3 presents data from a head-to-head comparison between a commercially available device (Dexcom G4® PLATINUM, Dexcom) and a prototype system (Roche Diagnostics GmbH) [40].

4 Unresolved Issues

In addition to their continuing efforts to improve the accuracy and precision of CGM devices, developers are challenged to address factors that can impact sensor reliability and performance. These factors include motion (including micromotion within the subcutaneous tissue) and pressure, which can affect tissue physiology (interstitial stresses) and local blood flow around the sensor, resulting in signal disruption and/or erroneous glucose readings [25].

4.1 Transient Sensor Signal Disruption

Numerous experiments and clinical studies have revealed occasional, spurious sensor signal dropouts. When this occurs, the glucose values reported by the CGM device are usually lower than the actual values. An example of this is presented in Fig. 4.

Signal disruption most commonly occurs during sleep, due to individuals lying on top of their sensor. These signal dropouts tend to last approximately 15–30 min. Although nocturnal signal drops are not likely to prompt an erroneous response in patients using standalone CGM devices, the resulting “false alarms” may provoke patients to turn off their CGM and/or discontinue use [19]. However, signal disruptions are of great concern within the context of sensor-augmented insulin pump use, in which insulin administration can be automatically suspended when CGM-measured values fall below the programmed low-glucose threshold, and (eventually) in closed-loop insulin delivery system (artificial pancreas) use. Signal disruption also poses a significant challenge in artificial pancreas development efforts.

4.2 Transient Significant CGM Inaccuracies

Whereas signal disruptions are often the result of acute forces such as pressure, which can temporarily impact the sensor signal, chronic forces that trigger the foreign body response and inflammation around the sensor site can also impact sensor performance [25]. These forces can include the impact of motion (shear forces) that occurs due to normal physiologic activity (e.g., walking, running) [23] and foreign body response [1, 36], which is influenced by the shape [35], size [31], and surface topography [11] of the implanted sensor. The resulting erroneous interstitial glucose values are unpredictable and may continue over several hours.

5 Next Steps in CGM Development

Increasing standardization of performance metrics will enable CGM technology developers and patients to better assess the accuracy and precision of future CGM devices. A better understanding of the sensor-to-tissue interface will help developers to improve accuracy. As a result, adoption and sustained use of CGM as standalone devices will likely increase. However, the challenges posed by intermittent signal disruptions and transient inaccuracies must be addressed in order for closed-loop insulin delivery development to move forward.

Development of CGM sensors that incorporate intelligent failure detection algorithms, which may include sensors for activity, pressure, temperature, and heart rate, is a long-range goal in CGM development. The addition of glucose pattern recognition capability linked with global position data would enhance the utility of CGM in the future. However, use of multiple sensors presents a potential short-term solution [12]. This approach requires that the two sensors are redundant in that they are not housed in the same probe. Otherwise, tissue compression or other local effects could impact both sensors, and signal disruptions would remain undetected. Use of duplicate sensors can identify occurrence of signal disruptions and erroneous glucose data.
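How duplicate sensors might reveal a signal disruption can be sketched in a few lines. The approach shown, flagging samples at which two sensors disagree by more than a fixed relative threshold, is an illustrative assumption and not a detection algorithm described in the cited work; the 20 % threshold, the function name, and the overnight traces are hypothetical.

```python
def flag_disagreement(sensor_a, sensor_b, threshold_pct=20.0):
    """Indices at which two redundant sensors differ by more than threshold_pct
    of their mean reading (assumed criterion for a suspect sample)."""
    flagged = []
    for i, (a, b) in enumerate(zip(sensor_a, sensor_b)):
        relative_gap = 100.0 * abs(a - b) / ((a + b) / 2.0)
        if relative_gap > threshold_pct:
            flagged.append(i)
    return flagged


# Hypothetical overnight traces (mg/dL, one sample every 5 min).
# sensor_a shows a pressure-induced dropout at samples 3 to 6.
sensor_a = [110, 108, 109, 70, 62, 60, 75, 107, 108]
sensor_b = [111, 109, 108, 108, 107, 108, 107, 108, 109]

suspect = flag_disagreement(sensor_a, sensor_b)
print(suspect)
```

A disagreement flag alone does not show which sensor is wrong; it only marks the interval in which the readings should not be trusted without further information.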

Another proposed solution is to use sensors that are soft and small with microtextured surfaces in order to match tissue modulus [24]. This would both enhance biomechanical biocompatibility and help reduce interfacial stress concentrations [24].

6 Conclusion

Despite several years of expert discussions, neither industry developers nor independent investigators have been able to standardize methodologies for evaluating CGM performance [37]. The one guideline that does exist covers performance metrics, study design, and data analysis, but provides no acceptance criteria [14]. Consequently, studies continue to use differing designs and metrics for assessing CGM accuracy, which makes comparisons of currently available CGM devices difficult.

Although use of CGM has been shown to provide significant clinical benefits compared to SMBG [6, 15, 20, 27, 38, 41, 48], sensor performance has slowed widespread adoption of this technology and still is a limiting factor in the development of closed-loop insulin delivery systems.

Because CGM system performance is currently evaluated against blood glucose measurements, these assessments do not reflect the true nature of CGM and may lead to misleading results. Nevertheless, because BG values are still regarded as the “gold standard,” accuracy must be assessed in comparison to BG values.

As discussed, the MARD is the most relevant accuracy metric for CGM, not only when computed for the overall glucose range but also separately for different glucose concentration ranges and different rates of change. However, because the MARD does not fully detect sensor performance due to the limited number of paired data points and/or the inherent physiological differences between the two body compartments sampled (i.e., blood glucose vs. interstitial glucose), calculation of the PARD becomes important as a measure for CGM precision and in supporting the accuracy assessment by the MARD.

The question “how good is good enough?” can only be answered as it relates to the intended use. When used as a standalone, adjunctive device for monitoring current and trending glucose levels, with no control over insulin pump infusion, current CGM devices are adequate for their intended use. However, when the CGM data are used for therapy decisions or the CGM device directly controls insulin delivery, accuracy and precision become increasingly important depending upon the degree of control. For example, when CGM is linked to a low-glucose suspend (LGS) insulin pump, which automatically stops administering basal insulin when current or impending low glucose is detected, the risk of patient harm due to CGM device malfunction is relatively low. Conversely, when CGM is functioning as a component of a closed-loop insulin delivery system, patient risk is significantly increased because detection of an erroneous high-glucose reading would automatically prompt the insulin pump to administer unneeded insulin, resulting in hypoglycemia. This highlights the importance of effectively addressing the critical biomechanical factors (micromotion and pressure) and sensor-to-tissue interface to ensure accuracy, precision, and reliability in clinical use.

In summary, simple, straightforward and clinically significant standards and guidelines are needed to establish common rules for the development of safe and effective products using CGM technology. These rules should include standardized metrics for accuracy and precision, guidance for study designs and data analyses schemata, and acceptance criteria based on intended CGM use.

References

Anderson, J.M., Rodriguez, A., Chang, D.T.: Foreign body reaction to biomaterials. Semin. Immunol. 20(2), 86–100 (2008)

Bailey, T.S., Zisser, H.C., Garg, S.K.: Reduction in hemoglobin A1C with real-time continuous glucose monitoring: results from a 12-week observational study. Diabetes Technol. Ther. 9(3), 203–210 (2007)

Bailey, T., Zisser, H., Chang, A.: New features and performance of a next-generation SEVEN-day continuous glucose monitoring system with short lag time. Diabetes Technol. Ther. 11(12), 749–755 (2009)

Bartelme, A., Bridger, P.: The role of reimbursement in the adoption of continuous glucose monitors. J. Diabetes Sci. Technol. 3(4), 992–995 (2009)

Basu, A., Dube, S., Slama, M., Errazuriz, I., Amezcua, J.C., Kudva, Y.C., Peyser, T., Carter, R.E., Cobelli, C., Basu, R.: Time lag of glucose from intravascular to interstitial compartment in humans. Diabetes 62(12), 4083–4087 (2013)

Battelino, T., Phillip, M., Bratina, N., Nimri, R., Oskarsson, P., Bolinder, J.: Effect of continuous glucose monitoring on hypoglycemia in type 1 diabetes. Diabetes Care 34(4), 795–800 (2011)

Beck, R.: T1D exchange overview - 25.000 opportunities, few limitations. American Diabetes Association 72nd Scientific Sessions, Philadelphia (2012)

Blevins, T.C., Bode, B.W., Garg, S.K., Grunberger, G., Hirsch, I.B., Jovanovič, L., Nardacci, E., Orzeck, E.A., Roberts, V.L., Tamborlane, W.V., Rothermel, C.: Statement by the American Association of Clinical Endocrinologists consensus panel on continuous glucose monitoring. Endocr. Pract. 16(5), 730–745 (2010)

Buckingham, B., Beck, R.W., Tamborlane, W.V., Xing, D., Kollman, C., Fiallo-Scharer, R., Mauras, N., Ruedy, K.J., Tansey, M., Weinzimer, S.A., Wysocki, T.: Continuous glucose monitoring in children with type 1 diabetes. J. Pediatr. 151(4), 388–393 (2007)

Calhoun, P., Lum, J., Beck, R.W., Kollman, C.: Performance comparison of the medtronic sof-sensor and enlite glucose sensors in inpatient studies of individuals with type 1 diabetes. Diabetes Technol. Ther. 15(9), 758–761 (2013)

Campbell, C.E., von Recum, A.F.: Microtopography and soft tissue response. J. Investig. Surg. 2(1), 51–74 (1989)

Castle, J.R., Ward, W.K.: Amperometric glucose sensors: sources of error and potential benefit of redundancy. J. Diabetes Sci. Technol. 4(1), 221–225 (2010)

Chia, C.W., Saudek, C.D.: Glucose sensors: toward closed loop insulin delivery. Endocrinol. Metab. Clin. North Am. 33(1), 175–195 (2004)

Clinical and Laboratory Standards Institute: POCT05A: performance metrics for continuous glucose monitoring – approved guideline (2008)

Continuous glucose monitoring and intensive treatment of type 1 diabetes (2008). www.nejm.org

Cryer, P.E.: Hypoglycemia: still the limiting factor in the glycemic management of diabetes. Endocr. Pract. 14(6), 750–756 (2008)

Damiano, E.R., El-Khatib, F.H., Zheng, H., Nathan, D.M., Russell, S.J.: A comparative effectiveness analysis of three continuous glucose monitors. Diabetes Care 36(2), 251–259 (2013)

Damiano, E.R., McKeon, K., El-Khatib, F.H., Zheng, H., Nathan, D.M., Russell, S.J.: A comparative effectiveness analysis of three continuous glucose monitors: the Navigator, G4 Platinum, and Enlite. J. Diabetes Sci. Technol. 8(4), 699–708 (2014)

Davey, R.J., Jones, T.W., Fournier, P.A.: Effect of short-term use of a continuous glucose monitoring system with a real-time glucose display and a low glucose alarm on incidence and duration of hypoglycemia in a home setting in type 1 diabetes mellitus. J. Diabetes Sci. Technol. 4(6), 1457–1464 (2010)

Deiss, D., Bolinder, J., Riveline, J.P., Battelino, T., Bosi, E., Tubiana-Rufi, N., Kerr, D., Phillip, M.: Improved glycemic control in poorly controlled patients with type 1 diabetes using real-time continuous glucose monitoring. Diabetes Care 29(12), 2730–2732 (2006)

Freckmann, G., Pleus, S., Link, M., Zschornack, E., Klotzer, H.M., Haug, C.: Performance evaluation of three continuous glucose monitoring systems: comparison of six sensors per subject in parallel. J. Diabetes Sci. Technol. 7(4), 842–853 (2013)

Garg, S.K., Voelmle, M.K., Gottlieb, P.: Feasibility of 10-day use of a continuous glucose-monitoring system in adults with type 1 diabetes. Diabetes Care 32(3), 436–438 (2009)

Geerligs, M., Peters, G.W., Ackermans, P.A., Oomens, C.W., Baaijens, F.P.: Does subcutaneous adipose tissue behave as an (anti-)thixotropic material? J. Biomech. 43(6), 1153–1159 (2010)

Helton, K.L., Ratner, B.D., Wisniewski, N.A.: Biomechanics of the sensor-tissue interface-effects of motion, pressure, and design on sensor performance and foreign body response-part II: examples and application. J. Diabetes Sci. Technol. 5(3), 647–656 (2011)

Helton, K.L., Ratner, B.D., Wisniewski, N.A.: Biomechanics of the sensor-tissue interface-effects of motion, pressure, and design on sensor performance and the foreign body response-part I: theoretical framework. J. Diabetes Sci. Technol. 5(3), 632–646 (2011)

Hermanides, J., Phillip, M., DeVries, J.H.: Current application of continuous glucose monitoring in the treatment of diabetes: pros and cons. Diabetes Care 34(Suppl 2), 197–201 (2011)

Hirsch, I.B., Abelseth, J., Bode, B.W., Fischer, J.S., Kaufman, F.R., Mastrototaro, J., Parkin, C.G., Wolpert, H.A., Buckingham, B.A.: Sensor-augmented insulin pump therapy: results of the first randomized treat-to-target study. Diabetes Technol. Ther. 10(5), 377–383 (2008)

Hovorka, R.: Continuous glucose monitoring and closed-loop systems. Diabet. Med. 23(1), 1–12 (2006)

Keenan, D.B., Mastrototaro, J.J., Zisser, H., Cooper, K.A., Raghavendhar, G., Lee, S.W., Yusi, J., Bailey, T.S., Brazg, R.L., Shah, R.V.: Accuracy of the Enlite 6-day glucose sensor with guardian and Veo calibration algorithms. Diabetes Technol. Ther. 14(3), 225–231 (2012)

Klonoff, D.C., Buckingham, B., Christiansen, J.S., Montori, V.M., Tamborlane, W.V., Vigersky, R.A., Wolpert, H.: Continuous glucose monitoring: an endocrine society clinical practice guideline. J. Clin. Endocrinol. Metab. 96(10), 2968–2979 (2011)

Kvist, P.H., Iburg, T., Aalbaek, B., Gerstenberg, M., Schoier, C., Kaastrup, P., Buch-Rasmussen, T., Hasselager, E., Jensen, H.E.: Biocompatibility of an enzyme-based, electrochemical glucose sensor for short-term implantation in the subcutis. Diabetes Technol. Ther. 8(5), 546–559 (2006)

Leelarathna, L., Nodale, M., Allen, J.M., Elleri, D., Kumareswaran, K., Haidar, A., Caldwell, K., Wilinska, M.E., Acerini, C.L., Evans, M.L., Murphy, H.R., Dunger, D.B., Hovorka, R.: Evaluating the accuracy and large inaccuracy of two continuous glucose monitoring systems. Diabetes Technol. Ther. 15(2), 143–149 (2013)

Luijf, Y., Mader, J., Doll, W., Pieber, T., Farret, A., Place, J., Renard, E., Bruttomesso, D., Filippi, A., Avogaro, A., Arnolds, S., Benesch, C., Heinemann, L., DeVries, J.: Accuracy and reliability of continuous glucose monitoring systems: a head-to-head comparison. Diabetes Technol. Ther. 15(8), 721–726 (2013)

Mastrototaro, J., Shin, J., Marcus, A., Sulur, G., Abelseth, J., Bode, B.W., Buckingham, B.A., Hirsch, I.B., Kaufman, F.R., Schwartz, S.L., Wolpert, H.A.: The accuracy and efficacy of real-time continuous glucose monitoring sensor in patients with type 1 diabetes. Diabetes Technol. Ther. 10(5), 385–390 (2008)

Matlaga, B.F., Yasenchak, L.P., Salthouse, T.N.: Tissue response to implanted polymers: the significance of sample shape. J. Biomed. Mater. Res. 10(3), 391–397 (1976)

Morhenn, V.B., Lemperle, G., Gallo, R.L.: Phagocytosis of different particulate dermal filler substances by human macrophages and skin cells. Dermatol. Surg. 28(6), 484–490 (2002)

Obermaier, K., Schmelzeisen-Redeker, G., Schoemaker, M., Klotzer, H.M., Kirchsteiger, H., Eikmeier, H., del Re, L.: Performance evaluations of continuous glucose monitoring systems: precision absolute relative deviation is part of the assessment. J. Diabetes Sci. Technol. 7(4), 824–832 (2013)

O’Connell, M.A., Donath, S., O’Neal, D.N., Colman, P.G., Ambler, G.R., Jones, T.W., Davis, E.A., Cameron, F.J.: Glycaemic impact of patient-led use of sensor-guided pump therapy in type 1 diabetes: a randomised controlled trial. Diabetologia 52(7), 1250–1257 (2009)

Phillip, M., Battelino, T., Rodriguez, H., Danne, T., Kaufman, F.: Use of insulin pump therapy in the pediatric age-group: consensus statement from the European Society for Paediatric Endocrinology, the Lawson Wilkins Pediatric Endocrine Society, and the International Society for Pediatric and Adolescent Diabetes, endorsed by the American Diabetes Association and the European Association for the Study of Diabetes. Diabetes Care 30(6), 1653–1662 (2007)

Pleus, S., Haug, C., Link, M., Zschornack, E., Freckmann, G., Obermaier, K., Schoemaker, M.: Rate-of-change dependence of the performance of two CGM systems during induced glucose excursions. Diabetes 63(Suppl 1), A216 (2014)

Raccah, D., Sulmont, V., Reznik, Y., Guerci, B., Renard, E., Hanaire, H., Jeandidier, N., Nicolino, M.: Incremental value of continuous glucose monitoring when starting pump therapy in patients with poorly controlled type 1 diabetes: the RealTrend study. Diabetes Care 32(12), 2245–2250 (2009)

Ramchandani, N., Arya, S., Ten, S., Bhandari, S.: Real-life utilization of real-time continuous glucose monitoring: the complete picture. J. Diabetes Sci. Technol. 5(4), 860–870 (2011)

Ramchandani, N., Heptulla, R.A.: New technologies for diabetes: a review of the present and the future. Int. J. Pediatr. Endocrinol. 2012(1), 28 (2012)

Standards of medical care in diabetes–2012. Diabetes Care 35(1), 11–63 (2012)

Tack, C., Pohlmeier, H., Behnke, T., Schmid, V., Grenningloh, M., Forst, T., Pfutzner, A.: Accuracy evaluation of five blood glucose monitoring systems obtained from the pharmacy: a European multicenter study with 453 subjects. Diabetes Technol. Ther. 14(4), 330–337 (2012)

Tamborlane, W.V., Beck, R.W., Bode, B.W., Buckingham, B., Chase, H.P., Clemons, R., Fiallo-Scharer, R., Fox, L.A., Gilliam, L.K., Hirsch, I.B., Huang, E.S., Kollman, C., Kowalski, A.J., Laffel, L., Lawrence, J.M., Lee, J., Mauras, N., O’Grady, M., Ruedy, K.J., Tansey, M., Tsalikian, E., Weinzimer, S., Wilson, D.M., Wolpert, H., Wysocki, T., Xing, D., Chase, H.P., Fiallo-Scharer, R., Messer, L., Gage, V., Burdick, P., Laffel, L., Milaszewski, K., Pratt, K., Bismuth, E., Keady, J., Lawlor, M., Buckingham, B., Wilson, D.M., Block, J., Benassi, K., Tsalikian, E., Tansey, M., Kucera, D., Coffey, J., Cabbage, J., Wolpert, H., Shetty, G., Atakov-Castillo, A., Giusti, J., O’Donnell, S., Ghiloni, S., Hirsch, I.B., Gilliam, L.K., Fitzpatrick, K., Khakpour, D., Wysocki, T., Fox, L.A., Mauras, N., Englert, K., Permuy, J., Bode, B.W., O’Neil, K., Tolbert, L., Lawrence, J.M., Clemons, R., Maeva, M., Sattler, B., Weinzimer, S., Tamborlane, W.V., Ives, B., Bosson-Heenan, J., Beck, R.W., Ruedy, K.J., Kollman, C., Xing, D., Jackson, J., Steffes, M., Bucksa, J.M., Nowicki, M.L., Van Hale, C., Makky, V., O’Grady, M., Huang, E., Basu, A., Meltzer, D.O., Zhao, L., Lee, J., Kowalski, A.J., Laffel, L., Tamborlane, W.V., Beck, R.W., Kowalski, A.J., Ruedy, K.J., Weinstock, R.S., Anderson, B.J., Kruger, D., LaVange, L., Rodriguez, H.: Continuous glucose monitoring and intensive treatment of type 1 diabetes. N. Engl. J. Med. 359(14), 1464–1476 (2008)

Weinzimer, S., Xing, D., Tansey, M., Fiallo-Scharer, R., Mauras, N., Wysocki, T., Beck, R., Tamborlane, W., Ruedy, K., Chase, H.P., Fiallo-Scharer, R., Messer, L., Tallant, B., Gage, V., Tsalikian, E., Tansey, M.J., Larson, L.F., Coffey, J., Cabbage, J., Wysocki, T., Mauras, N., Fox, L.A., Bird, K., Englert, K., Buckingham, B.A., Wilson, D.M., Clinton, P., Caswell, K., Weinzimer, S.A., Tamborlane, W.V., Doyle, E.A., Mokotoff, H., Steffen, A., Ives, B., Beck, R.W., Ruedy, K.J., Kollman, C., Xing, D., Stockdale, C.R., Jackson, J., Steffes, M.W., Bucksa, J.M., Nowicki, M.L., Van Hale, C.A., Makky, V., Grave, G.D., Horlick, M., Teff, K., Winer, K.K., Becker, D.M., Cleary, P., Ryan, C.M., White, N.H., White, P.C.: Prolonged use of continuous glucose monitors in children with type 1 diabetes on continuous subcutaneous insulin infusion or intensive multiple-daily injection therapy. Pediatr. Diabetes 10(2), 91–96 (2009)

Weinzimer, S., Miller, K., Beck, R., Xing, D., Fiallo-Scharer, R., Gilliam, L.K., Kollman, C., Laffel, L., Mauras, N., Ruedy, K., Tamborlane, W., Tsalikian, E.: Effectiveness of continuous glucose monitoring in a clinical care environment: evidence from the Juvenile Diabetes Research Foundation continuous glucose monitoring (JDRF-CGM) trial. Diabetes Care 33(1), 17–22 (2010)

Wilson, D.M., Beck, R.W., Tamborlane, W.V., Dontchev, M.J., Kollman, C., Chase, P., Fox, L.A., Ruedy, K.J., Tsalikian, E., Weinzimer, S.A.: The accuracy of the FreeStyle Navigator continuous glucose monitoring system in children with type 1 diabetes. Diabetes Care 30(1), 59–64 (2007)

Zisser, H.C., Bailey, T.S., Schwartz, S., Ratner, R.E., Wise, J.: Accuracy of the SEVEN continuous glucose monitoring system: comparison with frequently sampled venous glucose measurements. J. Diabetes Sci. Technol. 3(5), 1146–1154 (2009)

Zschornack, E., Schmid, C., Pleus, S., Link, M., Klotzer, H.M., Obermaier, K., Schoemaker, M., Strasser, M., Frisch, G., Schmelzeisen-Redeker, G., Haug, C., Freckmann, G.: Evaluation of the performance of a novel system for continuous glucose monitoring. J. Diabetes Sci. Technol. 7(4), 815–823 (2013)

Copyright information

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Schoemaker, M., Parkin, C.G. (2016). CGM—How Good Is Good Enough?. In: Kirchsteiger, H., Jørgensen, J., Renard, E., del Re, L. (eds) Prediction Methods for Blood Glucose Concentration. Lecture Notes in Bioengineering. Springer, Cham. https://doi.org/10.1007/978-3-319-25913-0_3

DOI: https://doi.org/10.1007/978-3-319-25913-0_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-25911-6

Online ISBN: 978-3-319-25913-0

eBook Packages: Engineering, Engineering (R0)