Abstract

This chapter discusses the history and clinical utility of several composite risk models. Composite risk models combine the various known risk factors and translate them into a more easily interpretable risk value. The Framingham Risk Algorithm is among the oldest and most widely used risk scores for cardiovascular disease, and over the years, new cardiovascular disease risk algorithms, such as the Reynolds Risk Score and the Pooled Cohort Equations, have been developed. However, the applicability of these scores to ethnically and socioeconomically diverse populations has been questioned. Several lifetime cardiovascular disease models have also been developed, but the clinical utility of assessing lifetime cardiovascular risk is still debated. Furthermore, different health organizations have developed several criteria for the metabolic syndrome, yet its clinical utility is likewise still debated. In recent years, staging systems for obesity and cardiometabolic health have been developed to guide medical treatment, though due to their novelty, there is limited research on their effectiveness. For any given risk score, however, an individual’s actual risk can differ from the predicted risk; this difference is termed residual risk. Thus, some patients may still experience a cardiac event even if they achieve target levels of metabolic risk factors or their predicted risk is low. Residual cardiovascular risk exists for all algorithms but can be reduced by adopting a healthy lifestyle or improving other important factors not accounted for by the algorithm. Finally, risk assessment is only valuable if the patient understands what that risk means, and therefore optimal risk communication between health professional and patient is vital for improving patient care.
This review will describe the development and clinical utility of the Framingham Risk Score, the Reynolds Risk Score, the Pooled Cohort Equations, lifetime risk scores, the metabolic syndrome, the Edmonton Obesity Staging System, and the Cardiometabolic Disease Staging System. Residual cardiovascular risk and patient communication will also be discussed.

Keywords

- Framingham Risk Score

- Reynolds Risk Score

- Metabolic syndrome

- Edmonton Obesity Staging System

- Cardiometabolic Disease Staging System

- Cardiometabolic risk

- Cardiovascular disease

- Residual cardiovascular risk

- Coronary heart disease

- Obesity

Introduction

Cardiovascular disease (CVD) remains the leading cause of death worldwide [1]. The most prominent risk factors for CVD include age, gender, obesity, smoking, diabetes, hypertension, and dyslipidemia [2]. During the past several decades, there have been major advances in treating CVD and its associated risk factors; it is now internationally accepted that the initiation and intensity of pharmacological therapy for CVD prevention should be based on a patient’s baseline absolute CVD risk [3]. Absolute CVD risk can be determined through composite risk scores, which assess multiple risk factors simultaneously to predict disease onset or outcomes and to help guide treatment. For a risk score to be clinically useful, it is important first to understand its origin, in terms of the population and outcome for which it was developed, and second to ensure that the risk model is updated when new evidence arises. Within the past several decades, there have been numerous updates to older risk scores as well as the development of new risk scores that assess several chronic conditions. Thus, choosing the most appropriate risk assessment model for patients can be difficult. Furthermore, no single risk algorithm can account for all relevant risk factors for CVD, and therefore patients may still exhibit residual cardiovascular risk, which is the risk of experiencing a cardiovascular event even when patients achieve target levels of metabolic risk factors [4]. Conversely, they may not experience CVD even though their predicted risk level is high. Lifestyle management, including diet, physical activity, smoking cessation, and weight management, remains the cornerstone of both CVD prevention and residual cardiovascular risk reduction [3, 4]. Further, several pharmacological agents have been identified that may help alleviate the burden of absolute and residual cardiovascular risk [5].
Importantly, risk assessment is only useful if patients understand what their risk means and what they need to do to lower it. Therefore, optimal risk communication between health professional and patient is necessary for optimal patient care. This review will describe the development and clinical utility of the Framingham Risk Score, the Reynolds Risk Score, the Pooled Cohort Equations, lifetime risk scores, the metabolic syndrome (MetS), the Edmonton Obesity Staging System, and the Cardiometabolic Disease Staging System. Residual cardiovascular risk and patient communication will also be discussed.

Framingham Risk Score

Some of our greatest understanding of the underlying causes of CVD derives from the Framingham Heart Study. The Framingham Heart Study was developed in Framingham, MA, in 1948, and the original cohort included 5209 adults, ages 30–62 years, who initially did not have CVD. This cohort has been followed since 1948 and has provided rich epidemiological data on the development of CVD [6]. From these data, the Framingham Risk Score was developed to estimate absolute CVD risk and is the oldest and most widely used and studied CVD risk score available [7]. To date, multiple risk scores for coronary heart disease (CHD) and CVD have been developed and modified over time using data from the Framingham original cohort as well as the offspring cohort.

Due to the ongoing nature of the Framingham Heart Study, the algorithm has been revised over time to reflect the latest evidence (Table 5.1). The very first risk equation for CHD from the Framingham Heart Study was developed in 1967 [8]; however, this equation was not validated and for the most part was not used clinically [9]. Subsequently, an 8-year risk function for general CVD and specific subtypes of CVD was developed in 1976 [10]. This work demonstrated that CVD is actually a heterogeneous condition, wherein some CVD risk factors are more relevant for certain subcomponents of CVD. For example, systolic blood pressure (SBP) is particularly important for stroke risk, whereas smoking and glucose intolerance may be more important for risk of intermittent claudication. This study also highlighted that certain risk factors have a risk continuum that should not be simply dichotomized into high and low. For example, CVD risk is proportional to the level of SBP and cholesterol, and there is no threshold at which risk begins to increase. Finally, this study demonstrated that an individual with a clustering of multiple subclinical risk factors might be more at risk than an individual with a single high-risk factor. In 1991, a new algorithm for 10-year risk of CHD was developed, which for the first time provided a points system that allowed clinicians to make a simple assessment of absolute CHD risk [11]. In 1998, a simplified sex-specific 10-year CHD prediction model that included age, diabetes status, smoking status, blood pressure, total cholesterol (TC), and high-density lipoprotein cholesterol (HDL-C) was developed (Table 5.1) [12]. This Framingham CHD risk score was adapted and incorporated into the National Cholesterol Education Program Expert Panel on Detection, Evaluation, and Treatment of High Blood Cholesterol in Adults (NCEP ATP III) as part of their updated recommendations for screening and treatment of dyslipidemia in 2001 [13].
According to the ATP III, the intensity of risk reduction therapy should be adjusted to reflect an individual’s level of absolute risk. One of the changes in this version of the Framingham CHD risk score by the ATP III was that it did not include diabetes but considered the presence of diabetes as the equivalent of having CHD.

The most recent adaptation of the Framingham Risk Score is a general cardiovascular risk profile that predicts risk of developing general CVD and the individual CVD components (CHD, stroke, peripheral artery disease, and heart failure; comparable to disease-specific algorithms) for use in primary care (Table 5.1) [2]. The risk factors included in the algorithm are age, TC, HDL-C, SBP, blood pressure treatment, smoking status, and diabetes status. Importantly, for the first time, a simple CVD risk score was developed for when blood measures are not available, allowing the physician to immediately assess the 10-year CVD risk of the patient by using age, body mass index (BMI), SBP, antihypertensive medication use, current smoking, and diabetes status.

A limitation of the Framingham Risk Score is that it was derived from a single community in the USA that was predominantly middle-aged and white. Others have also criticized the Framingham Risk Score because the Framingham population tended to be ‘high risk’ to begin with, having high levels of hypercholesterolemia, dietary intake of saturated fat, smoking, and other CVD risk factors [9]. A systematic review of studies that compared predicted Framingham 10-year CHD or CVD risk scores with observed risk reported that the accuracy of the risk score varied widely between populations and that the higher the risk of the population, the greater the degree of underestimation [14]. Furthermore, a recent review observed that the majority of cross-sectional and cohort studies that have used the Framingham Risk Score applied it to populations (e.g., human immunodeficiency virus (HIV) or rheumatoid arthritis) and outcomes (non-CHD events) for which the scores were not originally developed [15]. Others have reported that the Framingham Risk Score overestimates CHD risk in populations from the UK, Belfast, and France [16] as well as in African Caribbean adults [17], while underestimating risk in white European and South Asian women [17]. There is also evidence that the Framingham Risk Score may grossly underestimate CVD mortality rate in low socioeconomic populations [18]. Among different ethnic cohorts in the USA, the Framingham Risk Score performed reasonably well in black and white men and women but overestimated risk in Japanese American and Hispanic men and Native American women. However, recalibrating the scores to take into consideration the prevalence of risk factors and underlying rates of developing CHD within different populations improves the predictive accuracy [19]. Therefore, when utilizing the Framingham Risk Score in populations that are not similar to the Framingham cohort, recalibration should be considered.
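To make the idea of recalibration concrete, the following is a minimal sketch that assumes the risk score is a Cox-type function, in which absolute risk depends on how far a patient's risk factors lie from the cohort means and on the cohort's baseline survival. Under that assumption, recalibration amounts to substituting the target population's means and baseline survival while keeping the coefficients. Every number and variable name below is a made-up placeholder for illustration, not a published Framingham value.

```python
import math


def ten_year_risk(values, coefficients, means, baseline_survival):
    """Cox-type absolute risk: 1 - S0 ** exp(sum(beta_i * (x_i - mean_i)))."""
    linear_predictor = sum(
        beta * (values[name] - means[name]) for name, beta in coefficients.items()
    )
    return 1.0 - baseline_survival ** math.exp(linear_predictor)


# Placeholder coefficients (NOT published values); kept fixed during recalibration.
coefficients = {"ln_age": 3.0, "ln_sbp": 1.9, "smoker": 0.6}

# Hypothetical derivation cohort: its own risk factor means and 10-year survival.
derivation = {
    "means": {"ln_age": math.log(49), "ln_sbp": math.log(125), "smoker": 0.35},
    "baseline_survival": 0.90,
}

# Hypothetical lower-risk target population used for recalibration.
target = {
    "means": {"ln_age": math.log(49), "ln_sbp": math.log(120), "smoker": 0.20},
    "baseline_survival": 0.95,
}

patient = {"ln_age": math.log(60), "ln_sbp": math.log(150), "smoker": 1}

original = ten_year_risk(patient, coefficients, **derivation)
recalibrated = ten_year_risk(patient, coefficients, **target)
print(f"original: {original:.3f}, recalibrated: {recalibrated:.3f}")
```

In this sketch, a person whose risk factors equal the cohort means receives exactly one minus the baseline survival, which is why applying a high-risk cohort's baseline to a lower-risk population tends to overestimate risk.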

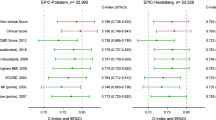

Despite the demonstrated ability of Framingham to predict CVD risk, some individuals who have low CVD risk will still experience a cardiac event, just as some persons with high risk will never develop CVD. This is termed residual risk and is the individual risk that is not accounted for by the risk algorithms. Residual risk exists for all algorithms and may be due to factors that are associated with CVD but not included in the models, or may result from suboptimal assessment of predictive factors. Accordingly, some investigators have attempted to determine whether the clinical utility of the Framingham Risk Score could be improved, and its residual risk reduced, by adding variables to the model. Wang et al. (2006) reported that the addition of nontraditional biomarkers, such as high-sensitivity C-reactive protein (hs-CRP), to conventional risk factors in the Framingham CHD risk scores yielded only small improvements in risk classification [20]. However, another study reported that less than 50 % of patients who presented with myocardial infarction (MI) would have been classified as high risk and considered for intensive lipid-lowering therapy using common CVD risk scores (Framingham, Reynolds, PROCAM, ASSIGN, QRISK, and SCORE), although Framingham tended to classify patients as higher risk than the other algorithms. The study suggests that additional measures, such as high coronary artery calcium score or carotid artery plaques, may improve discrimination and that adding these risk factors to current algorithms may improve the classification of CVD risk [21]. Others have suggested that adding carotid intima-media thickness, ankle-brachial index, or hs-CRP to the Framingham models could potentially improve the classification of CVD risk in individuals [22–24]. However, the cost-effectiveness and clinical feasibility of testing these biomarkers in practice are not known.

Another commonly cited CVD risk factor that is not currently used in the Framingham Risk Score is history of premature parental CVD, even though this variable was reported to be an independent predictor of future CVD in the Framingham Offspring Cohort [25]. Accordingly, the Update of the Canadian Cardiovascular Society for the Diagnosis and Treatment of Dyslipidemia proposed that the Framingham CVD risk score be doubled when an individual has a family history of premature CVD [26]. However, Stern et al. [27] stated that clinicians should not use this modified score as there is no hard evidence to justify including family history in the algorithm, and besides, Framingham researchers reported that inclusion of parental data in the model increases predictive accuracy only to a small extent.

Finally, lifestyle behaviors, particularly diet and physical activity, are also associated with CVD risk and play a large role in CVD prevention [3]. However, none of the Framingham models, or any other CVD risk equation, include diet or physical activity in their risk algorithms. This may be because diet and physical activity are assessed using many different methods, and the resulting information is often considered unreliable because it is self-reported. Nonetheless, information about a patient’s current diet and physical activity should be obtained during initial risk assessment and may be particularly important for estimating residual CVD risk.

In summary, the Framingham Heart Study has provided a wealth of knowledge about the major risk factors for CVD. Although some have challenged the clinical utility of the Framingham Risk Score in certain populations, these scores have been used in many different countries and populations to assess CVD risk and help guide treatment. Whether or not additional variables beyond the traditional risk factors could be used to better discriminate individuals at high risk of CVD is currently not known and requires further investigation.

Reynolds Risk Score

Like the Framingham Risk Score, the Reynolds Risk Score is an algorithm that predicts 10-year risk of CVD but includes hs-CRP and family history of MI in its algorithm. Dr. Paul Ridker and colleagues developed and validated the model for women in 2007 [28] and for men in 2008 [29]. Prediction algorithms were derived using data from 24,558 female health professionals in the Women’s Health Study aged 45 years and older and 10,724 men from the Physicians’ Health Study II aged 50–80 years. The variables used in the Reynolds Risk Score are hs-CRP, parental history of premature MI, SBP, TC, HDL-C, current smoking status, and hemoglobin A1c (HbA1C; for females with diabetes only; Table 5.1).

The Reynolds Risk Score was derived using data from predominantly white adults from a high socioeconomic background. Therefore, as with the Framingham Risk Score, extrapolation to more ethnically and socioeconomically diverse populations, to women under 45 years, and to men under 50 years should be undertaken with caution. Another limitation is that the algorithm is based on self-reported blood pressure in females and self-reported BMI in males. Both the Framingham ATP-III CHD risk score and the Reynolds Risk Score are recommended for use by the American College of Cardiology (ACC) and the American Heart Association (AHA) and are also used as part of the national guidelines for CVD prevention in Canada. However, the few studies that have compared the Framingham and Reynolds scores often show that predicted CVD risk varies widely between the models. For example, the Reynolds Risk Score reclassified a number of adults into more appropriate risk categories and was reported to be a better predictor of CHD compared to the Framingham ATP-III model [28, 29]. However, this analysis may have been biased as it used the same population that was used to originally develop the Reynolds Risk Score. Another study reported that among a large multiethnic cohort of women, the ATP-III and Framingham CVD scores overestimated risk for CHD and CVD, while the Reynolds Risk Score was better at classifying risk in black and white women [30]. Furthermore, another US study suggests that 4.7 % of US women would require more intense lipid management if the Reynolds Risk Score were used instead of the Framingham Risk Score. Conversely, 10.5 % of US men would require less intense lipid management if the Reynolds Risk Score were used in place of the Framingham Risk Score [31]. Thus, the relative clinical utility of Reynolds versus Framingham is unclear.

In summary, the best approach for CVD evaluation and prevention is to routinely test the patient for CVD risk factors and to use a risk score assessment. Although neither the Framingham Risk Score nor the Reynolds Risk Score is without limitations, assessing CVD risk is important to optimize preventive treatments and to guide therapy. Clearly, more research is needed to compare the clinical utility of the two risk scores.

ACC/AHA Guidelines for Assessing Cardiovascular Risk (Pooled Cohort Equations)

In 2013, the ACC and AHA provided updated guidelines for the assessment of CVD risk [3]. These guidelines include new Pooled Cohort Equations that predict 10-year atherosclerotic CVD risk in non-Hispanic black and white adults aged 40–79 years with no clinical signs or symptoms of atherosclerotic CVD (Table 5.1). The new Pooled Cohort Equations were derived using cohort data from Atherosclerosis Risk in Communities (ARIC), Cardiovascular Health Study, Coronary Artery Risk Development in Young Adults (CARDIA), and Framingham original and offspring study cohorts. The sex- and race-specific risk algorithms predict 10-year risk of initial hard atherosclerotic CVD events, including nonfatal MI or CHD death and fatal and nonfatal stroke [3]. The variables used in the algorithm are the same as those used in the Framingham CVD risk models and include age, TC, HDL-C, SBP, blood pressure treatment status, diabetes, and current smoking status. The guidelines recommend initiation of statin treatment in patients with high 10-year atherosclerotic CVD risk (≥ 7.5 %) and consideration of statin treatment in patients with intermediate risk (5–7.5 %). This translates into about one in three American adults being considered for statin therapy based on these guidelines [3]. These thresholds are considerably lower than the 20 % high-risk and 10–20 % intermediate-risk thresholds suggested in the ATP-III guidelines [13], and thus, more adults would be considered for statin therapy if these guidelines are implemented in clinical practice.
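The effect of the lower thresholds can be illustrated with a small sketch that sorts a predicted 10-year risk into categories under each set of cutoffs quoted above. The function names are hypothetical, and treating each boundary as inclusive is an assumption. The two guidelines also predict different outcomes (atherosclerotic CVD versus CHD), so passing the same percentage to both is purely illustrative.

```python
def classify(risk_percent, high_cutoff, intermediate_cutoff):
    """Sort a predicted 10-year risk (in percent) into a risk category."""
    if risk_percent >= high_cutoff:
        return "high"
    if risk_percent >= intermediate_cutoff:
        return "intermediate"
    return "low"


def acc_aha_2013_category(risk_percent):
    # Cutoffs quoted in the text: >= 7.5 % high, 5-7.5 % intermediate.
    return classify(risk_percent, high_cutoff=7.5, intermediate_cutoff=5.0)


def atp_iii_category(risk_percent):
    # Cutoffs quoted in the text: 20 % high, 10-20 % intermediate
    # (inclusive lower boundaries are an assumption of this sketch).
    return classify(risk_percent, high_cutoff=20.0, intermediate_cutoff=10.0)


for risk in (4.0, 6.0, 8.0, 25.0):
    print(risk, acc_aha_2013_category(risk), atp_iii_category(risk))
```

A predicted risk of 8 %, for example, falls in the high category under the 2013 cutoffs but in the low category under the ATP-III cutoffs, which is the mechanism by which more adults become candidates for statin therapy.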

The guidelines also assessed the clinical utility of including novel risk factors for atherosclerotic CVD risk prediction, including family history of premature CVD, hs-CRP, coronary artery calcium, ankle-brachial index, carotid intima-media thickness, apolipoprotein B, albuminuria, glomerular filtration rate, and cardiorespiratory fitness. It was recommended that if a risk-based treatment decision is still uncertain after initial risk assessment, a family history of premature CVD or measurement of hs-CRP, coronary artery calcium, or ankle-brachial index may be considered and that coronary artery calcium is likely the most clinically useful novel risk factor in adults with intermediate atherosclerotic CVD risk. However, the guidelines advise against measuring carotid intima-media thickness in routine clinical practice due to concerns about measurement quality. Furthermore, there was insufficient evidence to recommend for or against measuring apolipoprotein B, albuminuria, glomerular filtration rate, or cardiorespiratory fitness for atherosclerotic CVD risk assessment [3].

The Pooled Cohort Equations have a demonstrated ability to estimate risk for both fatal and nonfatal MI and stroke and the ability to provide specific risk estimates for non-Hispanic blacks. Although this approach has not yet been validated, the guidelines suggest that the equation for non-Hispanic whites may be used in other ethnic groups until ethnic-specific algorithms are developed. However, the Pooled Cohort Equations may overestimate atherosclerotic CVD risk in East Asian Americans and may underestimate risk in First Nation Americans and South Asian Americans [3]. Others have also criticized the new guidelines for excluding family history of premature CVD from the models, as well as for including stroke as an endpoint, because it renders the algorithms much more sensitive to age and because only ~40 % of strokes are the result of large-vessel atherosclerotic CVD [32].

Attempts to examine the validity of the Pooled Cohort Equations in other cohorts have resulted in mixed findings. One study reported that the Pooled Cohort Equations accurately predicted 5-year incident atherosclerotic CVD in non-Hispanic white and black adults from the REGARDS study [33]. Conversely, the Pooled Cohort Equations overestimated 10-year CVD risk in non-Hispanic white and black adults from the MESA study, the REGARDS study, newer follow-up data from ARIC, and the Framingham study [34]. The Pooled Cohort risk equations were also found to overestimate 10-year atherosclerotic CVD risk by 75−150 % in three large-scale US cohorts that were predominately made up of low-risk white individuals [34]. Moreover, among healthy European adults in the Rotterdam Study, the Pooled Cohort Equations greatly overestimated atherosclerotic CVD risk, and substantially more men and women would have been eligible for statin initiation based on these guidelines compared to the ATP-III guidelines or the European Society of Cardiology guidelines [35]. These results question the validity of the new Pooled Cohort risk equations, particularly in populations other than non-Hispanic white and black US adults. Clearly, more studies are needed to investigate if these risk equations are valid among individuals from other ethnic groups and countries and if they truly do provide better risk discrimination compared to more established CVD risk algorithms.

Lifetime Cardiovascular Risk

Lifetime cardiovascular risk is defined as the cumulative risk of developing CVD throughout the remainder of a person’s life [36]. Recently, there has been much discussion on the clinical utility of assessing lifetime CVD risk. Some argue that because short-term risk equations are strongly age-dependent, many younger individuals with adverse risk profiles who have a high long-term risk are often ‘overlooked’ when it comes to risk discussion and therapy initiation because their short-term risk is low [37]. It has also been reported that assessing risk over only 5–10 years restricts our appreciation of the true importance of the modifiable factors that cause CVD and, further, that lowering cholesterol has greater benefit if done earlier in life than later [38]. Indeed, lifetime risk may be a clinically useful measure in those with low short-term but high long-term risk [39].

Several studies have attempted to assess the lifetime risk of CVD. In 2006, the first lifetime risk estimates for developing CVD at age 50 were estimated using Framingham data, and it was shown that lifetime risk is highly dependent on the number of elevated risk factors [40]. For example, men and women with an optimal risk factor profile at age 50 (only 3.2 % of men and 4.5 % of women) had a relatively low lifetime CVD risk, whereas those with at least two major risk factors had lifetime risks that exceeded 50 % [40]. Similar findings were confirmed in another study that also showed that the effect of risk factor burden on lifetime CVD risk was similar in black and white adults and across different birth cohorts [41]. In 2009, a 30-year model for risk of developing hard CVD was developed using Framingham data. This analysis demonstrated that 10-year CVD risk was very low for adults in their 20s and 30s, regardless of risk factors, but that those with multiple elevated risk factors had 30-year risk profiles that were up to ten times higher [42]. Finally, Wilkins et al. (2012) reported that although middle-aged adults with an optimal risk factor profile have a lower absolute risk than middle-aged adults with one elevated risk factor, they still have a high absolute lifetime CVD risk of 30–40 % [43]. This reiterates the importance of maintaining a healthy lifestyle throughout life for CVD prevention.

Thus, although there is not yet a general consensus that lifetime CVD risk should be used in clinical practice, there is evidence that many adults have a high lifetime risk of CVD, particularly young adults with adverse risk factors, males, nonwhite adults, and those with a family history of premature CVD [37]. Assessing lifetime CVD risk may be beneficial in these populations as this could initiate discussion about lifestyle modification or consideration of early pharmacologic intervention that may not be apparent if using only short-term algorithms. However, a validated method of measuring lifetime risk that is applicable for different populations has yet to be established.

Residual Cardiovascular Risk

An important yet often underacknowledged concept to consider when assessing risk for any patient is that of residual risk. Residual risk is any difference between actual and predicted risk that is not accounted for by the risk algorithm, which is why some individuals still experience a cardiac event even if metabolic targets are met and why some individuals will not have cardiac events even though their predicted risk is high [44]. All risk algorithms have an error component as no algorithm has a perfect model fit. For most CVD risk algorithms, the c-statistic (a measure of discrimination) is usually between 0.70 and 0.80. This means that the probability that the predicted absolute risk is higher in an individual who develops CVD than in one who does not is 70–80 %, so that risk may be ranked incorrectly in up to about a third of such comparisons. This error could be related to inappropriate cutoffs used for risk factors in the algorithm, error in assessment of the risk factors, or not including all relevant risk factors in the model for that individual. For example, factors such as diet and physical activity are associated with CVD and can modify the effects of other CVD risk factors, yet these variables are not included in CVD risk algorithms. Further, there is a host of measures for glucose control and metabolism or inflammation that could be included in algorithms, but due to cost and ease of assessment as opposed to biological relevance, the simpler measures are most often included.
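The pairwise interpretation of the c-statistic can be made concrete with a minimal sketch. It assumes a simple binary outcome with no censoring, whereas survival analyses typically use a censoring-aware variant, and the function name and example data are hypothetical.

```python
from itertools import product


def c_statistic(predicted_risks, had_event):
    """Share of (case, non-case) pairs in which the case has the higher predicted risk.

    Tied predictions count as half a concordant pair.
    """
    cases = [p for p, event in zip(predicted_risks, had_event) if event]
    non_cases = [p for p, event in zip(predicted_risks, had_event) if not event]
    if not cases or not non_cases:
        raise ValueError("need at least one case and one non-case")

    concordant = 0.0
    for case_risk, non_case_risk in product(cases, non_cases):
        if case_risk > non_case_risk:
            concordant += 1.0
        elif case_risk == non_case_risk:
            concordant += 0.5
    return concordant / (len(cases) * len(non_cases))


# Hypothetical predicted risks and observed outcomes (1 = developed CVD).
risks = [0.05, 0.10, 0.20, 0.30, 0.08]
events = [0, 0, 1, 1, 1]
print(round(c_statistic(risks, events), 3))
```

In this toy data set, five of the six case/non-case pairs are ranked correctly. The one discordant pair is a person who developed CVD despite a predicted risk of 8 %, which is the kind of misranking that a c-statistic below 1.0 reflects.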

Another possible reason for residual risk is that not all relevant risk factors are targeted by statin therapy, which specifically lowers low-density lipoprotein cholesterol (LDL-C). Thus, atherogenic dyslipidemia, which is an imbalance of high triglycerides and low HDL-C, would not be improved by statin therapy and has been identified as a likely contributor to lipid-related residual cardiovascular risk even when LDL-C levels are normal [4]. Some clinical trials have shown that combining a statin with a fibrate, niacin, omega-3 fatty acid, or ezetimibe to lower triglyceride levels and increase HDL-C may better help to alleviate the burden of atherogenic dyslipidemia. However, there is little evidence for the effects of these agents on cardiovascular outcomes, and therefore large cardiovascular outcome trials are needed [5]. On the other hand, lifestyle modifications, including body weight reduction, healthy diet, and increased physical activity, are all associated with decreased triglycerides and increased HDL-C and are important factors for decreasing residual cardiovascular risk [45]. However, results from the Look AHEAD trial recently showed that intensive lifestyle modification was not enough to decrease cardiovascular deaths or events in adults with type 2 diabetes (T2D) compared to a control group despite significant improvements in metabolic risk factors [46]. Therefore, lifestyle modification is important for improving the metabolic profiles of individuals at risk for CVD, but pharmacotherapy will likely also be necessary for preventing CVD deaths and events. Large cardiovascular outcome trials with novel lipid therapies for reducing atherogenic dyslipidemia and improving residual cardiovascular risk are eagerly awaited.

Metabolic Syndrome

MetS, sometimes referred to as syndrome X, insulin resistance syndrome, cardiometabolic syndrome, or dysmetabolic syndrome, is generally defined as a clustering of cardiometabolic factors [47]. The term first appeared in PubMed in 1952, with only sporadic reports until the Banting Lecture in 1988, when Dr. Gerald Reaven described ‘Syndrome X’ [48]. In 2001, the National Cholesterol Education Program introduced the first diagnostic criteria for MetS, and research on this topic increased exponentially, with over 31,000 publications to date.

Despite the general consensus that MetS is a clustering of elevated fasting blood glucose and cardiovascular risk factors, there is debate as to which risk factors should be included in the diagnostic criteria, the thresholds for each criterion, and whether or not certain factors are central to the underlying pathology. Most commonly, increased waist circumference (WC), insulin resistance, dyslipidemia, and hypertension are included in the diagnostic criteria, with factors such as inflammation, kidney dysfunction [49], liver dysfunction, and ectopic fat deposition being suggested less frequently as features [50]. For example, both the World Health Organization (WHO) and the European Group for the Study of Insulin Resistance require the presence of insulin resistance, but not necessarily obesity, for the diagnosis of MetS [51, 52], while the International Diabetes Federation (IDF) requires the presence of abdominal obesity but not necessarily insulin resistance (Table 5.2) [53]. Of note, most definitions include WC rather than BMI as a component of the syndrome, because excess abdominal fat is more strongly associated with the other components of MetS and is of greater importance in the etiology of the syndrome [54]. The numerous diagnostic criteria, which include different factors and use various thresholds, created confusion in the clinical community as to what MetS is and made research using the different criteria more difficult to compare. Differences in the factors and thresholds used can cause the estimated prevalence of MetS to range from 19 to 39 % [55] and can alter how strongly it is associated with morbidity and mortality [56, 57].
Thus, in 2009, a new set of harmonized diagnostic criteria was jointly published by the International Diabetes Federation Task Force on Epidemiology and Prevention; National Heart, Lung, and Blood Institute; American Heart Association; World Heart Federation; International Atherosclerosis Society; and International Association for the Study of Obesity. These criteria do not require the presence of any one component but, similar to past criteria, require three of the five factors for the diagnosis of MetS [47] (Table 5.2).
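The difference between a counting rule and a rule with a mandatory component can be sketched as follows. The component names are illustrative labels, each flag is assumed to have already been judged against the relevant thresholds (Table 5.2), and the IDF-style rule of abdominal obesity plus at least two of the remaining four components is an assumption about how that definition is usually summarized rather than something stated in this text.

```python
COMPONENTS = (
    "elevated_waist_circumference",
    "elevated_triglycerides",
    "reduced_hdl_c",
    "elevated_blood_pressure",
    "elevated_fasting_glucose",
)


def count_components(flags):
    """Count how many of the five components are present (flags: name -> bool)."""
    missing = set(COMPONENTS) - set(flags)
    if missing:
        raise KeyError(f"missing component flags: {sorted(missing)}")
    return sum(bool(flags[name]) for name in COMPONENTS)


def mets_harmonized(flags):
    # Harmonized rule from the text: any three of the five, none mandatory.
    return count_components(flags) >= 3


def mets_idf_style(flags):
    # Assumed IDF-style rule: abdominal obesity is mandatory, plus two others.
    has_abdominal_obesity = bool(flags["elevated_waist_circumference"])
    return has_abdominal_obesity and count_components(flags) >= 3


# Hypothetical patient with three components but a normal waist circumference.
patient = {
    "elevated_waist_circumference": False,
    "elevated_triglycerides": True,
    "reduced_hdl_c": True,
    "elevated_blood_pressure": True,
    "elevated_fasting_glucose": False,
}
print(mets_harmonized(patient), mets_idf_style(patient))
```

The same patient is classified differently by the two rules, which illustrates how the choice of criteria alone can shift prevalence estimates.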

In youth and adolescents, the optimal diagnostic criteria for MetS are even less clear. Over the course of pubertal development, there are fluctuations in the metabolic profile that are not well understood. Clear thresholds for delineating healthy versus not healthy are not widely accepted. Consequently, it is not surprising that there are a variety of MetS criteria that have been developed using a mix of mainly age and sex percentiles or adult thresholds. These variations have resulted in a MetS prevalence that ranges between 6 and 39 % [58]. Thus, as with adults, this has led some to question the clinical usefulness of MetS [59].

In addition to the problematic diagnosis of MetS, there is also debate as to the central importance of insulin resistance versus obesity, as reflected by the disparate diagnostic criteria of the WHO and the IDF, which require the presence of one versus the other. In order for MetS to be a ‘syndrome,’ there must be an underlying pathology or cause [60]. In his Banting Lecture in 1988 [48], Reaven proposed that insulin resistance was central to the development of these cardiometabolic factors but did not include obesity as one of the factors in the syndrome. Interestingly, he did suggest that treatment for MetS should be weight maintenance (or weight loss) and physical activity [48]. To date, there have been several examinations into the relative importance of insulin resistance and obesity, with varying conclusions. Research has demonstrated that most, but not all, individuals diagnosed with MetS by the ATP III [61] or IDF [62] criteria are insulin resistant. Similarly, most, but not all, individuals with MetS are obese [63, 64]. This may be due to suboptimal diagnostic criteria for MetS or suboptimal assessment of insulin resistance or obesity, or it may reflect that MetS is not truly a syndrome but more simply an array of risk factors or conditions without clear relationships.

In addition, several investigations on the association between MetS and mortality risk cast doubt on whether MetS is in fact a ‘syndrome’ with a singular pathology and question whether MetS can uniquely identify risk beyond its individual factors [65]. Under most criteria, MetS can be diagnosed from any of 16 different combinations of metabolic factors [63]. These combinations differ in their prevalence by age and sex and also in how they relate to mortality risk [63, 66]. Furthermore, some reports suggest that WC alone may be a better indicator of insulin resistance in young black South African women than ATP III MetS criteria [67]. Additionally, several reports indicate that MetS is much more strongly related to T2D risk than to CVD risk [68], largely owing to three of the five factors (glucose, obesity, and triglycerides) being more predictive of T2D risk [69]. In fact, some studies demonstrate that certain MetS criteria are not predictive of all-cause or CVD mortality risk [70]. Moreover, some studies indicate that MetS does not perform as well as traditionally used CVD risk algorithms, such as Framingham [71, 72]. This difference can be attributed to several mathematical as well as biological factors. Mathematically, the reduction of the five MetS criteria to a dichotomy reduces the information available and can only be used to provide relative risk estimates, as opposed to the Framingham algorithm, which provides an absolute risk for CVD. From a biological standpoint, MetS does not consider clearly established non-metabolic CVD risk factors, such as age and smoking status, which explains why its relative risk estimates are inferior to those from Framingham. For these reasons, the clinical utility of MetS has been questioned [65], and this may be why MetS is rarely diagnosed by clinicians [73].
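
The figure of 16 combinations follows from counting the ways in which at least three of five components can be present (10 ways to have exactly three, 5 ways to have exactly four, and 1 way to have all five). A minimal Python sketch of this count is given below; the component labels and the function name `qualifying_combinations` are hypothetical and used only for illustration.

```python
from itertools import combinations

# Illustrative labels for the five components (assumed names, not a criteria table).
COMPONENTS = ("waist", "triglycerides", "hdl_c", "blood_pressure", "glucose")


def qualifying_combinations(components=COMPONENTS, minimum=3):
    """List every set of components that satisfies an 'at least `minimum`' rule."""
    return [
        combo
        for size in range(minimum, len(components) + 1)
        for combo in combinations(components, size)
    ]


combos = qualifying_combinations()
print(len(combos))  # 16, i.e. 10 + 5 + 1
```

All 16 profiles collapse to the same dichotomous diagnosis, which illustrates how information is lost when the five criteria are reduced to a single yes/no outcome.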

Dysmetabolic syndrome is officially recognized as a medical diagnosis and is coded as ICD-9-CM 277.7. This code was replaced with ICD-10-CM E88.81 (metabolic syndrome) in October 2015. This is a billable medical code that can be used to specify a diagnosis on a reimbursement claim, formalizing the clinical diagnosis of this syndrome. However, Ford (2005) reported that 2 years after the release of the ICD-9-CM 277.7 code, very few patients had MetS listed as a diagnosis on medical records, suggesting that MetS is significantly underdiagnosed in patients [73]. The reasons for this are uncertain but may be related to the lack of pharmacological agents specifically for MetS.

Currently, the main lifestyle treatment goals for MetS, weight management and increased physical activity, have not changed since Dr. Reaven’s Banting Lecture in 1988 [54]. Dietary approaches are also suggested for many MetS factors. However, although some approaches are shared, the recommended therapeutic diets differ between factors. For example, the Dietary Approaches to Stop Hypertension (DASH) diet recommends restricting sodium intake as an important dietary intervention for hypertension [74], but sodium restriction is less important for weight, glucose, or lipid management. Dietary management for T2D also includes recommendations for sodium intake but focuses more on caloric reduction and glycemic index [75]. Similarly, pharmacological interventions are generally not tailored directly for MetS due to the heterogeneous presentation of risk factors. Thus, pharmacological interventions are generally targeted at each specific risk factor as opposed to ‘metabolic syndrome’ as a whole. However, by understanding how MetS factors are interrelated in the same metabolic pathways, Ye et al. [76] have been able to design a unique pharmacotherapy that simultaneously improves hypertension, hyperglycemia, obesity, and dyslipidemia in mice. Briefly, the antihypertensive alpha-2 adrenergic receptor agonist guanabenz activates a synthetic signal cascade that influences secretion of glucagon-like peptide 1 (GLP-1) and leptin; these coordinated events attenuate blood pressure, blood glucose, and blood lipids and reduce appetite and body weight [76].

Despite the limitations in comprehensively assessing, diagnosing, and treating MetS, the research on MetS has highlighted how T2D and CVD risk factors tend to cluster together, prompting assessment of other cardiometabolic risk factors when one is detected [77]. Nevertheless, although MetS is frequently the topic of research investigations, there is still debate on whether MetS can truly be called a ‘syndrome.’ In conjunction with the rare clinical diagnosis and the lack of pharmacological options, the clinical relevance of MetS has yet to be established.

Edmonton Obesity Staging System

The Edmonton Obesity Staging System (EOSS) is a model developed by Dr. Arya Sharma and Dr. Robert Kushner in 2009 that evaluates obesity-related health risk and recommends treatment according to the severity of risk [78]. The stages range from 0 to 4, indicating no obesity-related risk factors to severe end-stage disease. Unlike many of the other composite score models, EOSS considers not only metabolic risk factors (e.g., blood pressure) but also physical symptoms (e.g., aches and pains), psychopathology (e.g., depression), and functional ability and well-being in order to assess the overall health of the individual (Table 5.3).

EOSS was developed mainly to individualize assessments, since two people with similar levels of body fatness can have vastly different states of health. It was also proposed that a staging system would allow treatment to be prioritized for patients who would most benefit from aggressive and resource-intensive weight management. According to the current guidelines for weight management, all patients with obesity, regardless of their health risk profile, should be counseled to lose weight [79]. However, there exists a subgroup of obese individuals, commonly referred to as the ‘metabolically healthy obese,’ who are free from metabolic complications and may represent 6–32 % of the obese population [80, 81]. There is also evidence that weight loss in the metabolically healthy obese may not improve cardiometabolic risk factors [82] and may even be detrimental to insulin sensitivity [83]. Therefore, a model such as the EOSS would allow proper counseling of these patients and direct more resource-intensive treatment to those who would benefit most from weight loss.

As EOSS is a relatively new model, few studies have investigated its clinical utility. The predictive ability of EOSS for mortality risk was investigated using data from the Aerobics Center Longitudinal Study. Compared to normal-weight adults, obese individuals in EOSS stage 0/1 had a similar all-cause mortality risk and a lower CVD and CHD mortality risk, whereas individuals in stage 2 or 3 had higher all-cause, CVD, and CHD mortality risks [84]. Similarly, using data from the National Health and Nutrition Examination Survey, adults in EOSS stage 2 or 3 were at higher mortality risk than EOSS stage 0 or 1, independent of BMI, presence of MetS, hypertriglyceridemia, and WC [85]. These results provide further support that the EOSS may be a more relevant measure of assessing obesity-related health risk, compared to traditional anthropometric measures alone, and may be more useful in determining a proper prognosis and guiding treatment [85].

An advantage of using EOSS to guide weight management is that it takes into consideration multiple aspects of health and not just body weight. A limitation to using EOSS is that not all of the risk factors may be directly caused by obesity (e.g., depression), which may make it difficult to determine which stage a patient should be in. As well, some of the risk factors may be subjective and diagnosis may differ depending on the clinician (e.g., physical functioning) or patient demographic (e.g., age, sex, or ethnicity). Furthermore, this staging system has yet to be validated in clinical practice or be investigated in the context of specificity, sensitivity, and reliability [78]. However, given that the widely used BMI tends to be a poor indicator of health status at the individual level, and that not all obese people present with comorbidities, the use of EOSS may prove to be a valuable clinical tool for the obese population.

Cardiometabolic Disease Staging System

Cardiometabolic risk is a ubiquitous term generally used to describe T2D and CVD risk factors. The term ‘cardiometabolic risk’ was first used as a keyword in a single publication in PubMed in 1999 [86] and did not appear again until 2005. Since then, the use of the term ‘cardiometabolic risk’ has increased exponentially, and it is now a keyword for over 1800 publications. In many of these publications, cardiometabolic risk is defined using MetS diagnostic criteria. However, unlike MetS, which is a dichotomous outcome, cardiometabolic risk is a spectrum of states that spans from optimal health to prediabetes to MetS to overt T2D and CVD. The Cardiometabolic Disease Staging System (CMDS) was published by Dr. Timothy Garvey and colleagues in 2014 and is the first defined cardiometabolic risk algorithm [87]. CMDS grades risk on a scale from 0 to 4 (Table 5.3), ranging from metabolically healthy to T2D and/or CVD. As with EOSS, CMDS is meant to help physicians objectively and systematically evaluate the severity of risk and balance the benefits versus the risks in deciding treatment interventions.

CMDS has been shown to predict incident 10-year T2D risk using data from CARDIA, and CVD and all-cause mortality risk using data from NHANES III [88]. Further, the authors demonstrated that CMDS is able to predict risk independent of BMI. However, this is the only report to date using this algorithm, and it is unclear how this staging system performs relative to other algorithms.

CMDS is similar to EOSS [78] in its aim of developing a systematic treatment algorithm for many of the same chronic conditions, but it can be applied in all populations as opposed to only overweight and obese individuals. Because the treatment for many of the chronic conditions listed under CMDS and EOSS is the same, the patient will still likely receive a similar message in terms of increasing physical activity, improving dietary practices, and receiving consideration for pharmacological intervention. However, the stage at which intervention occurs, and whether or not weight loss is prescribed, may differ between the two models.

As with the various MetS criteria, there are differences in the centrality of the role of obesity versus insulin resistance in the etiology of cardiometabolic risk. The CMDS places insulin resistance at the center of the etiology of risk and places a secondary emphasis on obesity, whereas EOSS places obesity more centrally in the etiology of cardiometabolic, psychological, and physical impairments. These differences in the etiology can translate into differences in the suggested treatment decision-making strategy and specifically whether or not weight loss would be prescribed. Interestingly, CMDS stages 1, 2, and 3 would all fall under EOSS stage 1. Under EOSS stage 1, the physician is prompted to investigate other non-weight-related factors contributing to the patient’s subclinically elevated risk profile, including the prescription of more intense lifestyle interventions to prevent further weight gain. What is currently unclear is whether the same individual under CMDS stage 1, 2, or 3 would be advised to lose weight as opposed to preventing further weight gain and at what stage that distinction would occur, if at all.

Both EOSS and the CMDS are relatively new models that have yet to be validated. However, given the ever-increasing prevalence of obesity and cardiometabolic disease, the clinical utility of using either of these staging systems to assess and guide treatment seems promising and offers a more comprehensive treatment guide than body weight alone.

Patient Communication

Risk communication is defined as an open two-way exchange of information and opinion about risk, which leads to better understanding and decisions about clinical management [89]. Despite guidelines advocating absolute CVD risk assessment, some have expressed concerns that it is an unfamiliar concept to most people, that the risk estimates are abstract constructs derived from mathematical equations, and that informing someone of their absolute risk is not very useful unless they are informed of how their risk would change if they improved their risk factors [90]. Evidence suggests that presenting risk in multiple ways is beneficial for patient understanding. Thus, presenting natural frequencies instead of relative risks [91] and using visual aids, such as graphs and pictures, may help to improve patient understanding of risk [92]. Recently, the ‘Your Heart Forecast’ was developed as a way to convey to a patient through a series of graphs their 5-year CVD risk based on Framingham models, their 5-year CVD risk relative to a person of the same age with an optimal risk factor profile, their ‘cardiovascular age,’ their short-term risk over time, predicted age of drug initiation, and what their risk would be if they improved on their current risk factors [90]. A method such as this has the potential to convey meaningful information to a patient that would likely be more useful than simply informing them of their absolute short-term risk [93].

It is also important to acknowledge that terms that are often associated with risk discussions, such as ‘low-risk,’ ‘high-risk,’ ‘likely,’ or ‘rare,’ are very subjective and can be interpreted in different ways depending on the patient’s knowledge and past experiences [94]. Furthermore, there is evidence that both physicians and patients may struggle with interpreting statistical information, which, when poorly presented, can lead to inaccurate communication of risks [95]. Therefore, simplifying statistical information may also be helpful. For example, telling a patient that they have a 10 % risk of developing CVD within the next 10 years may be less intuitive than telling them that if there were 100 patients just like them, 10 would develop CVD over the next 10 years. Furthermore, changing the population to which they are being compared will also influence their relative risk. For instance, the same individual with a 10 % 10-year risk may have a three-fold higher risk than an individual with an optimal risk factor profile. Thus, the reference population and the messaging used can have a large impact on patient understanding and interpretation of risk.
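
The arithmetic behind these two framings can be sketched in a few lines of Python. The function names and the wording of the generated statement are hypothetical, and the reference risk used below is an assumed value chosen only so that the ratio matches the three-fold example; it is not taken from any risk algorithm.

```python
def frequency_statement(risk, years, denominator=100):
    """Rephrase an absolute risk (0 to 1) as a natural frequency."""
    if not 0.0 <= risk <= 1.0:
        raise ValueError("risk must be between 0 and 1")
    affected = round(risk * denominator)
    return (
        f"Of {denominator} people like you, about {affected} "
        f"would develop CVD over the next {years} years."
    )


def relative_risk(patient_risk, reference_risk):
    """Ratio of the patient's absolute risk to that of a reference group."""
    if reference_risk <= 0:
        raise ValueError("reference_risk must be positive")
    return patient_risk / reference_risk


# A 10 % 10-year risk expressed as a natural frequency.
print(frequency_statement(0.10, 10))

# The same 10 % risk compared with an assumed optimal-profile risk of about 3.3 %.
print(round(relative_risk(0.10, 0.10 / 3), 1))  # 3.0
```

The absolute risk is unchanged between the two outputs; only the framing and the choice of reference group differ.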

There is also evidence that general practitioners tailor the approach of risk communication depending on their perception of patient risk, motivation, and anxiety. For example, positive strategies that focus on achievable changes have been used when patients are at low risk and motivated to change lifestyle habits, whereas scare tactics have been used for high-risk patients or those who are dismissive about their health or unwilling to change their lifestyle habits [96]. As well, some practitioners may choose to mention CVD risk but not make it the main focus of a patient visit, particularly when patients are very resistant to discussing their CVD risk or when they have more important acute health issues to discuss [96].

With respect to obesity, it is important to acknowledge that body weight is a sensitive topic for most patients, with any insinuations of weight bias or weight stigma often affecting attempts at weight loss. Simply telling a patient to ‘eat less and move more’ is far too simplistic an approach and can lead to feelings of frustration for the patient. If the patient has obesity-related morbidities, the patient’s readiness to change should be assessed and any barriers to weight loss should be addressed. The patient should be made aware of the increased risk of disease associated with obesity, such as CVD, T2D, and certain cancers, and the potential health benefits of losing 5–10 % of body weight.

Thus, risk communication is not a simple ‘one size fits all’ approach. A patient’s risk should be presented in multiple ways that are simple and easy to understand. Health professionals may consider tailoring their risk communication approach based on the patient’s attitudes towards their current health and motivations about changing lifestyle factors or beginning pharmacotherapy. It is important to consider that risk scores were designed for populations, not individuals, and therefore when considering a person’s absolute risk, physician’s discretion is critically needed [34]. Further, although risk estimates are intended to guide treatment, no risk score is perfect, and the patient should be informed of the residual cardiovascular risk that may persist even if metabolic goals are met and also that lifestyle modification is crucial for reducing residual risk and preventing CVD.

Conclusion

In conclusion, composite risk models advocate assessing health risk in order to prevent disease and to guide treatment. When selecting a composite risk model, it is important to be aware of the characteristics of the population and the specific outcomes the model was developed for, and to potentially recalibrate the model to improve applicability. Some models, such as the Framingham Risk Score and MetS, are continuously revised over time to reflect current evidence of relevant risk factors. Conversely, because many of the currently available risk scores are relatively new, there has been a paucity of research that has directly compared similar risk models or validated them in different populations, making the clinical utility of such models often difficult to determine. Although health agencies recommend that the intensity of treatment be based on initial risk assessment, more research that evaluates how risk assessment actually affects primary prevention or health outcomes is needed. Finally, risk assessment is only valuable if the patient understands their risk and what they need to do to improve their health. Patients should be made aware of the residual CVD risk that may persist even if they achieve treatment targets. More patient education on the benefits of lifestyle modification for reducing this risk is also needed. Thus, optimal risk communication between health care professionals and patients is vital for improving patient care.

Abbreviations

- ACC: American College of Cardiology
- AHA: American Heart Association
- ARIC: Atherosclerosis Risk in Communities
- AS: Atherosclerotic
- BMI: Body mass index
- CARDIA: Coronary Artery Risk Development in Young Adults
- CHD: Coronary heart disease
- CMDS: Cardiometabolic Disease Staging System
- CVD: Cardiovascular disease
- DASH: Dietary Approaches to Stop Hypertension
- EGIR: European Group for the Study of Insulin Resistance
- EOSS: Edmonton Obesity Staging System
- GLP-1: Glucagon-like peptide 1
- HDL-C: High-density lipoprotein cholesterol
- HIV: Human immunodeficiency virus
- hs-CRP: High-sensitivity C-reactive protein
- IDF: International Diabetes Federation
- IGT: Impaired glucose tolerance
- LDL-C: Low-density lipoprotein cholesterol
- MetS: Metabolic syndrome
- MI: Myocardial infarction
- NCEP ATP III: National Cholesterol Education Program Adult Treatment Panel III
- SBP: Systolic blood pressure
- T2D: Type 2 diabetes
- TC: Total cholesterol
- WC: Waist circumference
- WHO: World Health Organization

References

Blaum CS, Xue QL, Michelon E, Semba RD, Fried LP. The association between obesity and the frailty syndrome in older women: the Women’s Health and Aging Studies. J Am Geriatr Soc. 2005;53(6):927–34.

D’Agostino RB, Vasan RS, Pencina MJ, et al. General cardiovascular risk profile for use in primary care: the Framingham Heart Study. Circulation. 2008;117(6):743–53.

Goff DC, Lloyd-Jones DM, Bennett G, et al. 2013 ACC/AHA guideline on the assessment of cardiovascular risk: a Report of the American College of Cardiology/American Heart Association Task Force on Practice Guidelines. J Am Coll Cardiol. 2014;63(25 Pt B):2935–59.

Fruchart J-C, Sacks FM, Hermans MP, et al. The residual risk reduction initiative: a call to action to reduce residual vascular risk in dyslipidaemic patient. Diab Vasc Dis Res. 2008;5(4):319–35.

Fruchart J-C, Davignon J, Hermans MP, et al. Residual macrovascular risk in 2013: what have we learned? Cardiovasc Diabetol. 2014;13:26.

Dawber TR, Meadors GF, Moore FE. Epidemiological approaches to heart disease: the Framingham Heart Study. Am J Public Health Nations Health. 1951;41:279–86.

Bitton A, Gaziano T. The Framingham Heart Study’s impact on global risk assessment. Prog Cardiovasc Dis. 2010;53(1):68–78.

Truett J, Cornfield J, Kannel W. A multivariate analysis of the risk of coronary heart disease in Framingham. J Chron Dis. 1967;20:511–24.

Bitton A, Gaziano T. The Framingham Heart Study’s impact on global risk assessment. Prog Cardiovasc Dis. 2010;53(1):68–78.

Kannel B, McGee D, Gordon T. A general cardiovascular risk profile: the Framingham Study. Am J Cardiol. 1976;38:46–51.

Anderson KM, Wilson PW, Odell PM, Kannel WB. An updated coronary risk profile. A statement for health professionals. Circulation. 1991;83:356–62.

Wilson PWF, D’Agostino RB, Levy D, Belanger AM, Silbershatz H, Kannel WB. Prediction of coronary heart disease using risk factor categories. Circulation. 1998;97:1837–47.

Expert Panel on Detection, Evaluation, and Treatment of High Blood Cholesterol in Adults. Executive summary of the third report of the National Cholesterol Education Program (NCEP) expert panel on detection, evaluation, and treatment of high blood cholesterol in adults (Adult Treatment Panel III). JAMA. 2001;285(19):2486–97.

Brindle P, Beswick A, Fahey T, Ebrahim S. Accuracy and impact of risk assessment in the primary prevention of cardiovascular disease: a systematic review. Heart. 2006;92(12):1752–9.

Tzoulaki I, Seretis A, Ntzani EE, Ioannidis JPA. Mapping the expanded often inappropriate use of the Framingham Risk Score in the medical literature. J Clin Epidemiol. 2014;67:571–7.

Empana J. Are the Framingham and PROCAM coronary heart disease risk functions applicable to different European populations? The PRIME Study. Eur Heart J. 2003;24:1903–11.

Tillin T, Hughes AD, Whincup P, et al. Ethnicity and prediction of cardiovascular disease: performance of QRISK2 and Framingham scores in a U.K. tri-ethnic prospective cohort study (SABRE–Southall And Brent REvisited). Heart. 2014;100:60–7.

Brindle PM, McConnachie A, Upton MN, Hart CL, Davey Smith G, Watt GCM. The accuracy of the Framingham risk-score in different socioeconomic groups: a prospective study. Br J Gen Pract. 2005;55:838–45.

D’Agostino RB, Grundy SM, Sullivan LM, Wilson P. Validation of the Framingham coronary heart disease prediction scores. JAMA. 2001;286(2):180–7.

Wang TJ, Gona P, Larson MG, et al. Multiple biomarkers for the prediction of first major cardiovascular events and death. N Engl J Med. 2006;355:2631–9.

Sposito AC, Alvarenga BF, Alexandre AS, et al. Most of the patients presenting myocardial infarction would not be eligible for intensive lipid-lowering based on clinical algorithms or plasma C-reactive protein. Atherosclerosis. 2011;214(1):148–50.

Pen A, Yam Y, Chen L, Dennie C, McPherson R, Chow BJW. Discordance between Framingham Risk Score and atherosclerotic plaque burden. Eur Heart J. 2013;34:1075–82.

Ferket BS, van Kempen BJH, Hunink MGM, et al. Predictive value of updating Framingham risk scores with novel risk markers in the U.S. general population. PLoS ONE. 2014;9(2):e88312.

Ridker PM, Cook N. Clinical usefulness of very high and very low levels of C-reactive protein across the full range of Framingham Risk Scores. Circulation. 2004;109(16):1955–9.

Lloyd-Jones D, Nam B-J, D’Agostino R, et al. Parental cardiovascular disease as a risk factor for cardiovascular disease in middle-aged adults: a prospective study of parents and offspring. JAMA. 2004;291(18):2204–11.

Anderson TJ, Grégoire J, Hegele R, et al. 2012 update of the Canadian Cardiovascular Society guidelines for the diagnosis and treatment of dyslipidemia for the prevention of cardiovascular disease in the adult. Can J Cardiol. 2013;29:151–67.

Stern RH. Problems with modified Framingham Risk Score. Can J Cardiol. 2014;30:248.e3.

Ridker P, Buring JE, Rifai N, Cook NR. Development and validation of improved algorithms for the assessment of global cardiovascular risk in women: the Reynolds risk score. JAMA. 2007;297(6):611–20.

Ridker PM, Paynter NP, Rifai N, Gaziano JM, Cook NR. C-reactive protein and parental history improve global cardiovascular risk prediction: the Reynolds Risk Score for men. Circulation. 2008;118:2243–51.

Cook NR, Paynter NP, Eaton CB, et al. Comparison of the Framingham and Reynolds risk scores for global cardiovascular risk prediction in the Multiethnic Women’s Health Initiative. Circulation. 2012;125(14):1748–56.

Tattersall MC, Gangnon RE, Karmali KN, Keevil JG. Women up, men down: the clinical impact of replacing the Framingham Risk Score with the Reynolds Risk Score in the United States population. PLoS ONE. 2012;7(9):e44347.

Amin NP, Martin SS, Blaha MJ, Nasir K, Blumenthal RS, Michos ED. Headed in the right direction but at risk for miscalculation: a critical appraisal of the 2013 ACC/AHA risk assessment guidelines. J Am Coll Cardiol. 2014;63(25 Pt A):2789–94.

Muntner P, Colantonio LD, Cushman M, et al. Validation of the atherosclerotic cardiovascular disease Pooled Cohort risk equations. JAMA. 2014;311(14):1406–15.

Ridker PM, Cook NR. Statins: new American guidelines for prevention of cardiovascular disease. The Lancet. 2013;382(9907):1762–5.

Kavousi M, Leening MJG, Nanchen D, et al. Comparison of application of the ACC/AHA guidelines, Adult Treatment Panel III guidelines, and European Society of Cardiology guidelines for cardiovascular disease prevention in a European cohort. JAMA. 2014;311(14):1416–23.

Lloyd-Jones D, Larson M, Beiser A, Levy D. Lifetime risk of developing coronary heart disease. The Lancet. 1999;353:89–92.

Hippisley-Cox J, Coupland C, Robson J, Brindle P. Derivation, validation, and evaluation of a new QRISK model to estimate lifetime risk of cardiovascular disease: cohort study using QResearch database. BMJ. 2010;341:c6624.

Sniderman AD, Furberg CD. Age as a modifiable risk factor for cardiovascular disease. The Lancet. 2008;371:1547–9.

Berry JD, Liu K, Folsom AR, et al. Prevalence and progression of subclinical atherosclerosis in younger adults with low short-term but high lifetime estimated risk for cardiovascular disease: the CARDIA and MESA studies. Circulation. 2009;119(3):382–9.

Lloyd-Jones DM, Leip EP, Larson MG, et al. Prediction of lifetime risk for cardiovascular disease by risk factor burden at 50 years of age. Circulation. 2006;113(6):791–8.

Berry J, Dyer A, Cai X, et al. Lifetime risks of cardiovascular disease. N Engl J Med. 2012;366(4):321–9.

Pencina MJ, D’Agostino RB, Larson MG, Massaro JM, Vasan RS. Predicting the 30-year risk of cardiovascular disease: the Framingham Heart Study. Circulation. 2009;119:3078–84.

Wilkins JT, Ning H, Berry J, Zhao L, Dyer AR, Lloyd-Jones DM. Lifetime risk and years lived free of total cardiovascular disease. JAMA. 2014;308(17):1795–801.

Libby P. The forgotten majority: unfinished business in cardiovascular risk reduction. J Am Coll Cardiol. 2005;46(7):1225–8.

Sampson U, Fazio S, Linton M. Residual cardiovascular risk despite optimal LDL cholesterol reduction with statins: the evidence, etiology, and therapeutic challenges. Curr Atheroscler Rep. 2012;14(1):1–10.

Wing RR, Bolin P, Brancati FL, et al. Cardiovascular effects of intensive lifestyle intervention in type 2 diabetes. N Engl J Med. 2013;369(2):145–54.

Alberti KGMM, Eckel RH, Grundy SM, et al. Harmonizing the metabolic syndrome: a joint interim statement of the International Diabetes Federation Task Force on Epidemiology and Prevention; National Heart, Lung, and Blood Institute; American Heart Association; World Heart Federation; International. Circulation. 2009;120(16):1640–5.

Reaven G. Banting lecture 1988. Role of insulin resistance in human disease. Diabetes. 1988;37:1595–607.

Alberti KG, Zimmet PZ. Definition, diagnosis and classification of diabetes mellitus and its complications. Part 1: diagnosis and classification of diabetes mellitus provisional report of a WHO consultation. Diabet Med. 1998;15:539–53.

Després J-P. Is visceral obesity the cause of the metabolic syndrome? Ann Med. 2006;38(1):52–63.

World Health Organization. Definition, diagnosis, and classification of diabetes mellitus and its complications: report of a WHO consultation. Part 1: diagnosis and classification of diabetes mellitus. 1999.

Balkau B, Charles M. Comment on the provisional report from the WHO. Diabet Med. 1999;16:442–3.

Zimmet P, Alberti G, Shaw J. A new IDF worldwide definition of the metabolic syndrome: the rationale and the results. Diabetes Voice. 2005;50(3):31–3.

Grundy SM, Cleeman JI, Daniels SR, et al. Diagnosis and management of the metabolic syndrome: an American Heart Association/National Heart, Lung, and Blood Institute Scientific Statement. Circulation. 2005;112(17):2735–52.

Cheung BMY, Ong KL, Man YB, Wong LYF, Lau C-P, Lam KSL. Prevalence of the metabolic syndrome in the United States National Health and Nutrition Examination Survey 1999–2002 according to different defining criteria. J Clin Hypertens. 2006;8:562–70.

Lakka H-M, Laaksonen DE, Lakka T, et al. The metabolic syndrome and total and cardiovascular disease mortality in middle-aged men. JAMA. 2002;288(21):2709–16.

McNeill AM, Rosamond WD, Girman CJ, et al. The metabolic syndrome and 11-year risk of incident cardiovascular disease in the atherosclerosis risk in communities study. Diabetes Care. 2005;28(2):385–90.

Marcovecchio ML, Chiarelli F. Metabolic syndrome in youth: chimera or useful concept? Curr Diab Rep. 2013;13:56–62.

Sabin M, Magnussen CG, Juonala M, Cowley M, Shield JPH. The role of pharmacotherapy in the prevention and treatment of paediatric metabolic syndrome–Implications for long-term health: part of a series on Pediatric Pharmacology, guest edited by Gianvincenzo Zuccotti, Emilio Clementi, and Massimo Molteni. Pharm Res. 2012;65:397–401.

Shahar E. Metabolic syndrome? A critical look from the viewpoints of causal diagrams and statistics. J Cardiovasc Med. 2010;11(10):772–9.

Carr DB, Utzschneider KM, Hull RL, et al. Intra-abdominal fat is a major determinant of the National Cholesterol Education Program Adult Treatment Panel III criteria for the metabolic syndrome. Diabetes. 2004;53:2087–94.

Jennings CL, Lambert EV, Collins M, Levitt NS, Goedecke JH. The atypical presentation of the metabolic syndrome components in black African women: the relationship with insulin resistance and the influence of regional adipose tissue distribution. Metabolism. 2009;58:149–57.

Kuk J, Ardern C. Age and sex differences in the clustering of metabolic syndrome factors: association with mortality risk. Diabetes Care. 2010;33(11):2457–61.

Wildman RP, Muntner P, Reynolds K, McGinn AP. The obese without cardiometabolic risk factor clustering and the normal weight with cardiometabolic risk factor clustering. Arch Intern Med. 2008;168(15):1617–24.

Kahn R, Buse J, Ferrannini E, Stern M. The metabolic syndrome: time for a critical appraisal. Joint statement from the American Diabetes Association and the European Association for the Study of Diabetes. Diabetes Care. 2005;28:2289–304.

Guize L, Thomas F, Pannier B, Bean K, Jego B, Benetos A. All-cause mortality associated with specific combinations of the metabolic syndrome according to recent definitions. Diabetes Care. 2007;30:2381–7.

Liao Y, Kwon S, Shaughnessy S, et al. Critical evaluation of adult treatment panel III criteria in identifying insulin resistance with dyslipidemia. Diabetes Care. 2004;27:978–83.

Ford E. Risks for all-cause mortality, cardiovascular disease, and diabetes associated with the metabolic syndrome. Diabetes Care. 2005;28(7):1769–78.

Sattar N. Why metabolic syndrome criteria have not made prime time: a view from the clinic. Int J Obes. 2008;32:S30–S4.

Benetos A, Thomas F, Pannier B, Bean K, Jégo B, Guize L. All-cause and cardiovascular mortality using the different definitions of metabolic syndrome. Am J Cardiol. 2008;102:188–91.

Wannamethee SG, Shaper AG, Lennon L, Morris RW. Metabolic syndrome vs Framingham Risk Score for prediction of coronary heart disease, stroke, and type 2 diabetes mellitus. Arch Intern Med. 2005;165:2644–50.

Stern M, Williams K, Gonzalez-Villalpando C, Hunt K, Haffner S. Does the metabolic syndrome improve identification of individuals at risk of type 2 diabetes and/or cardiovascular disease? Diabetes Care. 2004;27(11):2676–81.

Ford ES. Rarer than a blue moon: the use of a diagnostic code for the metabolic syndrome in the U.S. Diabetes Care. 2005;28(7):1808–9.

Zemel M. Dietary pattern and hypertension: the DASH study. Nutr Rev. 1997;55(8):303–5.

Bhattacharyya OK, Estey E, Cheng AYY. Update on the Canadian Diabetes Association 2008 clinical practice guidelines. Can Fam Physician. 2009;55:39–43.

Ye H, Charpin-El Hamri G, Zwicky K, Christen M, Folcher M, Fussenegger M. Pharmaceutically controlled designer circuit for the treatment of the metabolic syndrome. Proc Natl Acad Sci U S A. 2013;110(1):141–6.

Simmons RK, Alberti KGMM, Gale EAM, et al. The metabolic syndrome: useful concept or clinical tool? Report of a WHO Expert Consultation. Diabetologia. 2010;53(4):600–5.

Sharma AM, Kushner RF. A proposed clinical staging system for obesity. Int J Obes (Lond). 2009;33(3):289–95.

National Heart, Lung, and Blood Institute Obesity Education Initiative Expert Panel on the Identification, Evaluation, and Treatment of Overweight and Obesity in Adults. Clinical guidelines on the identification, evaluation, and treatment of overweight and obesity in adults. 1998.

Kuk J, Ardern C. Are metabolically normal but obese individuals at lower risk for all-cause mortality? Diabetes Care. 2009;32(12):2297–9.

Kantartzis K, Machann J, Schick F, et al. Effects of a lifestyle intervention in metabolically benign and malign obesity. Diabetologia. 2011;54(4):864–8.

Karelis AD, Messier V, Brochu M, Rabasa-Lhoret R. Metabolically healthy but obese women: effect of an energy-restricted diet. Diabetologia. 2008;51(9):1752–4.

Kuk J, Ardern C, Church T, et al. Edmonton Obesity Staging System: association with weight history and mortality risk. Appl Physiol Nutr Metab. 2011;36:570–6.

Padwal R, Pajewski N, Allison D, Sharma A. Using the Edmonton obesity staging system to predict mortality in a population-representative cohort of people with overweight and obesity. CMAJ. 2011;183(14):1059–66.

O’Connor KG, Harman SM, Stevens TE, et al. Interrelationships of spontaneous growth hormone axis activity, body fat, and serum lipids in healthy elderly women and men. Metabolism. 1999;48(11):1424–31.

Guo F, Moellering DR, Garvey WT. The progression of cardiometabolic disease: validation of a new cardiometabolic disease staging system applicable to obesity. Obesity (Silver Spring). 2014;22:110–8.

Edwards A, Elwyn G, Mulley A. Explaining risks: turning numerical data into meaningful pictures. BMJ. 2002;324:827–30.

Wells S, Kerr A, Eadie S, Wiltshire C, Jackson R. ‘Your Heart Forecast’: a new approach for describing and communicating cardiovascular risk? Heart. 2010;96(9):708–13.

Gigerenzer G, Edwards A. Simple tools for understanding risks: from innumeracy to insight. BMJ. 2003;327:741–4.

Goodyear-Smith F, Arroll B, Chan L, Jackson R, Wells S, Kenealy T. Patients prefer pictures to numbers to express cardiovascular benefit. Ann Fam Med. 2008;6:213–7.

Jackson R. Lifetime risk: does it help to decide who gets statins and when? Curr Opin Lipidol. 2014;25(4):247–53.

Bonner C, Jansen J, McKinn S, et al. Communicating cardiovascular disease risk: an interview study of General Practitioners’ use of absolute risk within tailored communication strategies. BMC Fam Pract. 2014;15:106.

© 2016 Springer International Publishing Switzerland

Cite this chapter

Brown, R., Kuk, J. (2016). Composite Risk Scores. In: Mechanick, J., Kushner, R. (eds) Lifestyle Medicine. Springer, Cham. https://doi.org/10.1007/978-3-319-24687-1_5

DOI: https://doi.org/10.1007/978-3-319-24687-1_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-24685-7

Online ISBN: 978-3-319-24687-1

eBook Packages: Medicine, Medicine (R0)