Abstract

For the numerical solution of linear variational problems involving elliptic partial differential operators in n ≥ 2 space dimensions, iterative solution schemes are indispensable on account of their problem size. Our guiding principle is to devise iterative solvers which are optimal in the number of arithmetic operations, i.e., which are of linear complexity in the total number of unknowns. For these algorithms, asymptotically optimal preconditioners are required. The class of preconditioners for which this can be shown are of multilevel type, requiring nested approximation spaces to approximate the solution of the system on a fine user-specified grid. For smooth solutions of standard second and fourth order elliptic PDEs (partial differential equations) in variational form, approximations based on tensor products of higher-order B-splines yield high accuracy.For such problem classes, this survey collects the main ingredients for multilevel preconditioners in terms of higher order B-splines. There are three types of multilevel preconditioners for which asymptotic optimality can be shown. Two of them, the so-called additive preconditioners, are specified for isogeometric analysis involving linear elliptic partial differential operators in terms of variants of the BPX (Bramble-Pasciak-Xu) preconditioner and wavelet preconditioners. The third class are the so-called multiplicative preconditioners, specifically, multigrid methods.An essential ingredient for all these multilevel preconditioners are intergrid operators which transform vectors or matrices between grids of different grid spacing. For higher order B-splines, these intergrid operators can be derived from their refinement relations. In addition to a presentation of the theoretical ingredients, the performance of the different preconditioners will be demonstrated by some numerical examples.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

For variational systems involving linear elliptic partial differential equations (PDEs) in n ≥ 2 space dimensions, a standard finite element or finite difference discretization on a uniform grid with grid spacing 0 < h < 1 leads to the problem to numerically solve a large ill-conditioned system of linear equations. This is due to the fact that PDE operators have positive order 2r, i.e., r = 1 for second order or r = 2 for fourth order operators. Ill-conditioned means that the system matrix A h exhibits a spectral condition number \(\kappa _{2}(\mathbf{A}_{h})\) which is proportional to h −2r, i.e., \(\kappa _{2}(\mathbf{A}_{h}) \sim h^{-2r}\). Here and in the following, the relation \(a \sim b\) stands for \(a \lesssim b\) and \(b \lesssim a\) where the latter inequality means that b can be bounded by some constant times a uniformly in all parameters on which a and b may depend. Since the convergence speed of any iterative solution scheme depends on the spectral condition number, the scheme will therefore become prohibitively slow. This effect becomes even worse when h is chosen smaller, in order to obtain more accurate approximations; the number of unknowns N increases like \(N \sim h^{-n}\) and, thus, the system size also increases accordingly.

On the other hand, solutions of elliptic PDEs typically exhibit a multiscale behaviour. Enhancing iterative methods by multilevel ingredients, therefore, enables one to achieve much more efficient solution schemes. Ultimately, one strives for an ‘optimally efficient scheme’. This means that one can solve the problem with fine grid accuracy with an amount of arithmetic operations that is proportional to the number of unknowns on this grid. The first such methods which were proven to provide an asymptotically optimal iterative scheme were geometric multigrid algorithms [9]. The basic idea of these schemes is to successively solve smaller approximations of the linear system on the finest grid. These can often be interpreted as discretizations with respect to coarser grids. Their iterative solutions can be seen as approximating the inverses of the system matrices applied to the right hand side on the different grids, thereby reducing the spectral condition number of the original system matrix. This idea has, therefore, suggested the term ‘preconditioner’. We call a linear operator C h an (asymptotically) optimal preconditioner if its set-up, storage and application is of optimal linear complexity in the number of unknowns N and if \(\kappa _{2}(\mathbf{C}_{h}\mathbf{A}_{h}) \sim 1\) independent of h.

The search for such optimal preconditioners was a major topic for numerical elliptic PDE solvers in the 1980s. The goal was to better understand the ingredients which made a preconditioner optimal. Specifically, one aimed at finding directly applicable versions which could be interpreted as a change of basis. With the arrival of the hierarchical basis preconditioner [54], extending an idea of Babuška from the univariate case, a simple preconditioner became available. Although it is not optimal—one still has logarithmic growth in the grid spacing, \(\kappa _{2}(\mathbf{C}_{h}\mathbf{A}_{h}) \sim \log h^{-2r}\), in the bivariate case, and exponential growth for n = 3—its simplicity still makes it popular up to now [42]. Another multilevel preconditioner was presented in [3, 4]. Within the last years, (tensor product) hierarchical B-spline discretizations were increasingly employed in the area of isogeometric analysis, mainly in the context of deriving discretizations which can be locally adapted to singularities, see, e.g., [36, 51], the survey [5] and the references therein.

At the end of the 1980s, another methodology to derive preconditioners via space decompositions and subspace corrections was developed, see the review [52]. The BPX (Bramble-Pasciak-Xu) preconditioner proposed first in [10] was numerically observed to be optimal; it is based on a hierarchical generator system with grid-dependent weights. With techniques from approximation theory, its optimality was theoretically established in [21, 45]. Since then, its range of application has been widened extensively. For second and fourth order elliptic problems on the sphere a BPX-type preconditioner was developed and its optimality proved for triangular finite elements in [43]. The survey article [53] records extensions of the BPX and of multigrid preconditioners to H(grad), H(curl), and H(div) systems on adaptive and unstructured grids; to name just two extensions.

Multigrid preconditioners for isogeometric analysis were presented in [27], whereas domain decomposition type preconditioners were proposed in [6]. Within the class of domain decomposition methods, also tearing and interconnecting methods (FETI-BETI) are important [34]. Note that not all of these preconditioners are or have been proven to be asymptotically optimal.

In this survey, we present the main ideas of the BPX preconditioner from [11] in the context of isogeometric analysis, employing tensor products of higher order B-splines in Sect. 2. We will see that the main theoretical tool to prove optimality of the BPX preconditioner are multilevel characterizations of the underlying energy space, so-called norm equivalences between Sobolev space norms and weighted sequence norms, describing their subspace contributions. We will also see that the main computational ingredients are linear intergrid operators which map vectors and matrices between grids of different grid spacings.

At about the same time, wavelets as a special example of a multiscale orthogonal basis of \(L_{2}(\mathbb{R})\) with compact support were constructed [25]. While initially mainly developed and used for signal analysis and image compression, wavelets were soon discovered to provide optimal preconditioners in the above sense for second order elliptic boundary value problems [21, 33]. However, the fact that one cannot exploit L 2-orthogonality for elliptic boundary value problems together with the difficulty that the L 2-orthogonal Daubechies wavelets are only given implicitly led to the search for variants which are more practical for the numerical solution of PDEs. Soon, it was realized that biorthogonal spline-wavelets as developed in [17] are better suited since they allow one to work with piecewise polynomials for the discretization.

The principal and crucial property to prove optimality of a wavelet preconditioner are norm equivalences between Sobolev norms and sequence norms of weighted wavelet expansion coefficients. I mentioned before that multilevel characterizations for energy spaces are the crucial tool for proving optimality of the BPX preconditioner. Once a wavelet basis is available, one can represent the subspace contributions appearing there explicitly in terms of weighted wavelet coefficients. On this basis, optimal conditioning of the resulting linear system of equations can be achieved by applying the FWT (Fast Wavelet Transform) to a single-scale discretization on a uniform grid. While again multilevel characterizations of the underlying energy space play the crucial theoretical role for proving optimality, the intergrid operators which perform bases changes between different levels of resolution are the main practical ingredient for the efficiency of the FWT.

Nowadays, the terminology ‘wavelets’ is used in a more general sense that originally in [25]; we consider classes of multiscale bases with three main features: (R) they provide Riesz bases for the underlying function spaces, (L) the basis functions are local, and (CP) they exhibit cancellation properties. These will be detailed in Sect. 3.

After the initial results concerning optimal preconditioning with wavelets of local support in [21], research on employing wavelets for numerically solving elliptic PDEs went into different directions. One problem was that the original constructions in [17, 25] and many others were based on using the Fourier transform so that these constructions provide bases only for function spaces on all of \(\mathbb{R}\), on the torus or, by tensorization, on \(\mathbb{R}^{n}\). In contrast, PDEs naturally live on a bounded domain \(\varOmega \subset \mathbb{R}^{n}\). In order for wavelets to be employed for numerical PDEs, there arose the need for constructions of wavelets on bounded intervals and domains without, of course, loosing the crucial properties (R), (L) and (CP). The first such systematic construction of biorthogonal spline-wavelets on [0, 1] of arbitrary order and, by tensor products, on [0, 1]n, was provided in [22]. Different domain decomposition approaches yield constructions of biorthogonal wavelets on domains which can be represented as unions of parametric mappings of [0, 1]n [13, 23, 24, 40], see also [50] for details. Once such bases are available, the absolute value of the condition numbers of (systems of) elliptic PDEs can be ameliorated significantly by further inexpensive linear transformations taking into account a setup of the system matrices on the coarsest grid, a so-called operator-based preconditioning [12, 46]. A more recent survey on the results of wavelet-based preconditioning with extensions to PDE-constrained control problems can be found in [39].

Aside from optimal preconditioning, the built-in potential of local adaptivity for wavelets plays a prominent role when solving elliptic or parabolic PDEs with non-smooth solutions, on account of the fact that wavelets provide a locally supported Riesz basis for a whole function space. This issue is extensively addressed in the more recent survey paper [48]. In addition to the material in [26], there are at least four extensive surveys on wavelet and multiscale methods for more general PDEs addressing, among other things, the connection between adaptivity and nonlinear approximation and the evaluation of nonlinearities [15, 18–20].

In this article, I want to remain focused on multilevel preconditioning with higher-order discretizations for smooth solutions for which uniform grids provide a user-specified accuracy.

Isogeometric analysis is an the increasingly popular field in which higher order B-Splines are employed to reach this accuracy. Another such area is mathematical finance; specifically, option prizing problems. The fair pricing of an American option can in a standard model be formulated as a parabolic boundary value problem involving Black–Scholes’ equation with a free boundary. The aim is to compute the free boundary, the optimal exercise price of the option, together with the solution of the PDE, the value of the option. For American put options, one generally does not have closed form solutions so that one has to resort to numerical schemes. In the simplest Black–Scholes’ model, the volatility is assumed to be constant.

Numerical schemes for American option pricing are typically based on finite difference approaches, see, e.g., [1] and the references therein. In [14] and [44], corresponding multigrid methods are developed. However, here one is not only interested in the solution of the PDE, the option prize. For developing hedging strategies with options, it is of even more importance to accurately compute first and second derivatives of the solution, the so-called Greek letters. The availability of a smooth approximation enables to compute pointwise derivatives and, therefore, avoids additional numerical approximations of derivatives. Therefore, the idea in [30, 31] was to employ higher order B-splines for the solution of the variational inequality derived from Black-Scholes equation for American options. This approach enabled us to pointwise differentiate the option prize and, therefore, achieve a high accuracy approximation for the Greek letters. For the numerical solution, we extended the ideas about monotone multigrid methods with linear finite elements to solve variational inequalities from [35] to higher order B-splines. Here the intergrid operators mentioned before which stem from the refinement relations for B-splines had to be adapted in order not to violate the variational inequality; a task which is for higher order B-splines much more difficult than for linear finite elements since they are not interpolatory any more. Specific care has to be taken when deriving these intergrid operators in order not to violate the inequality constraints. The key feature was to replace function values by B-spline expansion coefficients which remain of the same sign because of the positivity of B-splines. Naturally, these ideas can be extended to obstacle problems which lead to variational inequalities and for which higher order approximations are sought.

While these results were very satisfying from a numerical point of view, the underlying Black-Scholes model has a severe deficiency in assuming that the volatility is constant. Particularly, an effect called volatility smile was observed in [32]. There are several approaches to estimate the volatility from observed stock data. Therefore, we adopted Heston’s approach [28] to model the volatility to satisfy a stochastic differential equation. For Heston’s model and one asset, the standard Itō approach yields a variational inequality in two space variables with a ‘mildly’ nonsymmetric parabolic differential operator. The ideas presented in [41] was as follows. A variational inequality for the American option pricing problem with Heston’s model was discretized in terms of linear finite elements with respect to space. The resulting linear inequality system was solved in each time step with optimal linear computational complexity using a projective Gauss-Seidel scheme together with a monotone multigrid method. For this, again appropriate intergrid operators were constructed. Unfortunately, due to page limitations, I am not able to present these approaches here and have to refer to [30, 31, 41].

Once optimal preconditioners are available, for any of the problems described above, one can construct for a standard elliptic PDE optimal iterative schemes to achieve discretization error accuracy on the finest level with optimal linear complexity as follows. The idea, the so-called nested iteration, has been employed together with multigrid methods for a long time: starting on a coarse grid, one iteratively solves the preconditioned linear system of equations up to discretization error accuracy on this level. The resulting solution is transformed to the next finer grid, employing again the linear intergrid operator from the coarse to the finer grid. In this way, only a fixed number of iterations are necessary on each grid, independent of that grid spacing, see, e.g., [39]. One should note that, in contrast, iterating only on the finest grid introduces an additional log N factor, where N denotes the amount of unknowns on the finest grid.

The structure of this survey paper is as follows. Section 2 is devoted to the description of the BPX preconditioner for isogeometric analysis together with the proof of its optimality and corresponding numerical results. Section 3 is concerned with wavelet approximations of solutions of PDEs, and the derivation of Fast Wavelet Transforms for optimal preconditioning. We conclude in Sect. 4 with a short summary and some outlook.

2 BPX Preconditioning for Isogeometric Analysis

In this section, we consider linear elliptic PDEs in the framework of isogeometric analysis, combining modern techniques from computer aided design with higher order approximations of the solution. We treat the physical domain by means of a regular B-spline mapping from the parametric domain \(\hat{\varOmega }= (0,1)^{n}\), n ≥ 2, to the physical domain Ω. The numerical solution of the PDE is computed by means of tensor product B-splines mapped onto the physical domain. We will construct additive BPX-type multilevel preconditioners and show that they are asymptotically optimal. This means that the spectral condition number of the resulting preconditioned stiffness matrix is independent of the grid spacing h. Together with a nested iteration scheme, this enables an iterative solution scheme of optimal linear complexity. The theoretical results are substantiated by numerical examples in two and three space dimensions. The results of this section are essentially contained in [11].

We consider linear elliptic partial differential operators of order 2r = 2, 4 on the domain Ω in variational form: for given f ∈ H −r(Ω), find u ∈ H 0 r(Ω) such that

holds. Here the energy space is H 0 r(Ω), a subset of the Sobolev space H r(Ω), the space of square integrable functions with square integrable derivatives up to order r, containing homogeneous Dirichlet boundary conditions for r = 1 and homogeneous Dirichlet and Neumann derivatives for r = 2. The bilinear form a(⋅ , ⋅ ) is derived from the linear elliptic PDE operator in a standard fashion, see, e.g., [8]. For example, the Laplacian is represented as \(a(v,w) =\int _{\varOmega }\nabla v \cdot \nabla w\,dx\). In order for the problem to be well-posed, we require the bilinear form \(a(\cdot,\cdot ): H_{0}^{r}(\varOmega ) \times H_{0}^{r}(\varOmega ) \rightarrow \mathbb{R}\) to be symmetric, continuous and coercive on H 0 r(Ω). With \(\langle \cdot,\cdot \rangle\), we denote on the right hand side of (1) the dual form between H −r(Ω) and H 0 r(Ω). Our model problem (1) covers the second order Laplacian with homogeneous boundary conditions

as well as fourth order problems with corresponding homogeneous Dirichlet boundary conditions,

where ∂ Ω denotes the boundary of Ω and n the outward normal derivative at ∂ Ω. These PDEs serve as prototypes for more involved PDEs like Maxwell’s equation or PDEs for linear and nonlinear elasticity. The reason we formulate these model problems of order 2r involving the parameter r is that this exhibits more clearly the order of the operator and the scaling in the subsequently used characterization of Sobolev spaces H r(Ω). Thus, for the remainder of this section, the parameter 2r denoting the order of the PDE operator is fixed.

The assumptions on the bilinear form a(⋅ , ⋅ ) entail that there exist constants \(0 < c_{A} \leq C_{A} < \infty \) such that the induced self-adjoint operator \(\langle Av,w\rangle:= a(v,w)\) satisfies the isomorphism relation

If the precise format of the constants in (4) does not matter, we abbreviate this relation as \(\|v\|_{H^{r}(\varOmega )} \lesssim \| Av\|_{H^{-r}(\varOmega )} \lesssim \| v\|_{H^{r}(\varOmega )}\), or shortly as

Under these conditions, Lax-Milgram’s theorem guarantees that, for any given f ∈ H −r(Ω), the operator equation derived from (1)

has a unique solution u ∈ H 0 r(Ω), see, e.g., [8].

In order to approximate the solution of (1) or (6), we choose a finite-dimensional subspace \(V _{h} \subset H_{0}^{r}(\varOmega )\). We will construct these approximation spaces by using tensor products of B-splines as specified next.

2.1 B-Spline Discretizations

Our construction of optimal multilevel preconditioners will rely on tensor products so that principally any space dimension \(n \in \mathbb{N}\) is permissible as long as storage permits; the examples cover the cases n = 2, 3. As discretization space, we choose in each spatial direction B-splines of the same degree p on uniform grids and with maximal smoothness. We begin with the univariate case and define B-splines on the interval [0, 1] recursively with respect to their degree p. Given this positive integer p and some \(m \in \mathbb{N}\), we call \(\varXi:=\{\xi _{1},\ldots,\xi _{m+p+1}\}\) a p-open knot vector if the knots are chosen such that

i.e., the boundary knots 0 and 1 have multiplicity p + 1 and the interior knots are single. For \(\varXi\), B-spline functions of degree p are defined following the well-known Cox-de Boor recursive formula, see [7]. Starting point are the piecewise constants for p = 0 (or characteristic functions)

with the modification that the last B-spline N m, 0 is defined also for ζ = 1. For p ≥ 1 the B-splines are defined as

with the same modification for N m, p . Alternatively, one can define the B-splines explicitly by applying divided differences to truncated powers [7]. This gives a set of m B-splines that form a basis for the space of splines, that is, piecewise polynomials of degree p with p − 1 continuous derivatives at the internal knots \(\xi _{\ell}\) for ℓ = p + 2, …, m. (Of course, one can also define B-splines on a knot sequence with multiple internal knots which entails that the spline space is not of maximal smoothness.) For p = 1, the B-splines are at least C 0([0, 1]) which suffices for the discretization of elliptic PDEs of order 2, and for p = 2 they are C 1([0, 1]) which suffices for r = 2. By construction, the B-spline N i, p is supported in the interval \([\xi _{i},\xi _{i+p+1}]\).

These definitions are valid for an arbitrary spacing of knots in \(\varXi\) (7). Recall that smooth solutions of elliptic PDEs can be approximated best with discretizations on a uniform grid. Therefore, we assume from now on that the grid is uniform, i.e., \(\xi _{\ell+1} -\xi _{\ell} = h\) for all ℓ = p + 1, …, m.

For n space dimensions, we employ tensor products of the one-dimensional B-splines. We take in each space dimension a p-open knot vector \(\varXi\) and define on the closure of the parametric domain \(\hat{\varOmega }= (0,1)^{n}\) (which we also denote by \(\hat{\varOmega }\) for simplicity of presentation) the spline space

In the spirit of isogeometric analysis, we suppose that the computational domain Ω can also described in terms of B-splines. We assume that the computational domain Ω is the image of a mapping \(\mathbf{F}:\hat{\varOmega }\rightarrow \varOmega\) with \(\mathbf{F}:= (F_{1},\ldots,F_{n})^{T}\) where each component F i of F belongs to \(S_{\bar{h} } (\hat{\varOmega })\) for some given \(\bar{h}\). In many applications, the geometry can be described in terms of a very coarse mesh, namely, \(\bar{h}\gg h\). Moreover, we suppose that F is invertible and satisfies

This assumption on the geometry can be weakened in that the mapping F can be a piecewise \(C^{\infty }\) function on the mesh with respect to \(\bar{h}\), independent of h, or the domain Ω may have a multi-patch representation. This means that one can allow Ω also to be the union of domains Ω k where each one parametrized by a spline mapping of the parametric domain \(\hat{\varOmega }\).

We now define the approximation space for (6) as

We will formulate three important properties of this approximation space which will play a crucial role later for the construction of the BPX-type preconditioners. The first one is that we suppose from now on that the B-spline basis is normalized with respect to L 2, i.e.,

Then one can derive the following facts [11].

Theorem 1

Let \(\{B_{i}\}_{i\in \mathbb{I}}\) be the B-spline basis defined in (10) and normalized as in (13), \(N = \#\mathbb{I}\) and V h r as in (12) . Then we have

-

(S)

Uniform stability with respect to L 2 (Ω)

For any \(\mathbf{c} \in \ell_{2}(\mathbb{I})\),

$$\displaystyle{ \left \|\sum _{i=1}^{N}c_{ i}\,B_{i} \circ \mathbf{F}^{-1}\right \|_{ L_{2}(\varOmega )}^{2} \sim \ \sum _{ i=1}^{N}\vert c_{ i}\vert ^{2} =:\| \mathbf{c}\|_{\ell_{ 2}}^{2},\qquad \mathbf{c}:= (c_{ i})_{i=1,\ldots,N}; }$$(14) -

(J)

Direct or Jackson estimates

$$\displaystyle{ \inf _{v_{ h}\in V _{h}^{r}}\|v - v_{h}\|_{L_{2}(\varOmega )} \lesssim h^{s}\,\vert v\vert _{ H^{s}(\varOmega )}\ \mbox{ for any }v \in H^{s}(\varOmega ),\ 0 \leq s \leq r, }$$(15)where \(\vert \cdot \vert _{H^{s}(\varOmega )}\) denotes the Sobolev seminorm of highest weak derivatives s;

-

(B)

Inverse or Bernstein estimates

$$\displaystyle{ \|v_{h}\|_{H^{s}(\varOmega )} \lesssim h^{-s}\|v_{ h}\|_{L_{2}(\varOmega )}\ \mbox{ for any }v_{h} \in V _{h}^{r}\mbox{ and }0 \leq s \leq r. }$$(16)

In all these estimates, the constants are independent of h but may depend on F , i.e., Ω, the polynomial degree p and the spatial dimension n.

In the next section, we construct BPX-type preconditioners for (6) in terms of approximations with (12) and show their optimality.

2.2 Additive Multilevel Preconditioners

The construction of optimal preconditioners are based on a multiresolution analysis of the underlying energy function space H 0 r(Ω). As before, 2r ∈ { 2, 4} stands for the order of the PDEs we are solving, and is always kept fixed.

We first describe the necessary ingredients within an abstract basis-free framework, see, e.g., [18]. Afterwards, we specify the realization for the parametrized tensor product spaces in (12).

Let \(\mathcal{V}\) be a sequence of strictly nested spaces V j , starting with some fixed coarsest index j 0 > 0, determined by the polynomial degree p which determines the support of the basis functions (which also depends on Ω), and terminating with a highest resolution level J,

The index j denotes the level of resolution defining approximations on a grid with dyadic grid spacing h = 2−j, i.e., we use from now on the notation V j instead of V h to indicate different grid spacings. Then, V J will be the space relative to the finest grid 2−J. We associate with \(\mathcal{V}\) a sequence of linear projectors \(\mathcal{P}:=\{ P_{j}\}_{j\geq j_{0}}\) with the following properties.

Properties 2

We assume that

- (P1):

-

P j maps H 0 r (Ω) onto V j,

- (P2):

-

\(P_{j}P_{\ell} = P_{j}\) for j ≤ ℓ,

- (P3):

-

\(\mathcal{P}\) is uniformly bounded on L 2 (Ω), i.e., \(\|P_{j}\|_{L_{2}(\varOmega )} \lesssim 1\) for any j ≥ j 0 with a constant independent of j.

These conditions are satisfied, for example, for L 2(Ω)-orthogonal projectors, or, in the case of splines, for the quasi-interpolant proposed and analyzed in [47, Chapter 4]. The second condition (P2) ensures that the differences \(P_{j} - P_{j-1}\) are also projectors for any j > j 0. We define next a sequence \(\mathcal{W}:=\{ W_{j}\}_{j\geq j_{0}}\) of complement spaces

which then yields the direct (but not necessarily orthogonal) decomposition

Thus, for the finest level J, we can express V J in its multilevel decomposition

upon setting \(W_{j_{0}-1}:= V _{j_{0}}\). Setting also \(P_{j_{0}-1}:= 0\), the corresponding multilevel representation of any v ∈ V J is then

We now have the following result which will be used later for the proof of the optimality of the multilevel preconditioners.

Theorem 3

Let \(\mathcal{P},\mathcal{V}\) be as above where, in addition, we require that for each V j , j 0 ≤ j ≤ J, a Jackson and Bernstein estimate as in Theorem 1 (J) and (B) hold with h = 2 −j . Then one has the function space characterization

Such a result holds for much larger classes of function spaces, Sobolev or even Besov spaces which are subsets of L q (Ω) for general q, possibly different from 2 and for any function v ∈ H r(Ω), then with an infinite sum on the right hand side, see, e.g., [18]. The proof of Theorem 3 for such cases heavily relies on tools from approximation theory and can be found in [21, 45].

Next we demonstrate how to exploit the norm equivalence (22) in the construction of an optimal multilevel preconditioner. Define for any v, w ∈ V J the linear self-adjoint positive-definite operator \(C_{J}: V _{J} \rightarrow V _{J}\) given by

which we call a multilevel BPX-type preconditioner. Let \(A_{J}: V _{J} \rightarrow V _{J}\) be the finite-dimensional operator defined by \((A_{J}v,w)_{L_{2}(\varOmega )}:= a(v,w)\) for all v, w ∈ V J , the approximation of A in (6) with respect to V J .

Theorem 4

With the same prerequisites as in Theorem 3 , C J is an asymptotically optimal symmetric preconditioner for A J , i.e., \(\kappa _{2}(C_{J}^{1/2}A_{J}C_{J}^{1/2}) \sim 1\) with constants independent of J.

Proof

For the parametric domain \(\hat{\varOmega }\), the result was proved independently in [21, 45] and is based on the combination of (22) together with the well-posedness of the continuous problem (6). The result on the physical domain follows then together with (11).\(\square \)

Concrete realizations of the preconditioner defined in (23) based on B-splines lead to representations of the complement spaces W j whose bases are called wavelets. For these, efficient implementations of optimal linear complexity involving the Fast Wavelet Transform can be derived explicitly, see Sect. 3.

However, since the order of the PDE operator r is positive, one can use here the argumentation from [10] which will allow to work with the same basis functions as for the spaces V j . The first part of the argument relies on the assumption that the P j are L 2-orthogonal projectors. For a clear distinction, we shall use the notation O j for L 2-orthogonal projectors and reserve the notation P j for the linear projectors with Properties 2. Then, the BPX-type preconditioner (23) (using the same symbol C J for simplicity) reads as

which is by Theorem 4 a BPX-type preconditioner for the self-adjoint positive definite operator A J . By the orthogonality of the projectors O j , we can immediately derive from (24) that

Since r > 0, by rearranging the sum, the exponentially decaying scaling factors allow one to replace C J by the spectrally equivalent operator

Recall that two linear operators \(\mathcal{A}: V _{J} \rightarrow V _{J}\) and \(\mathcal{B}: V _{J} \rightarrow V _{J}\) are spectrally equivalent if they satisfy

with constants independent of J. Thus, the realization of the preconditioner is reduced to a computation in terms of the bases of the spaces V j instead of W j . The orthogonal projector O j can, in turn, be replaced by a simpler local operator which is spectrally equivalent to O j , see [37] and the derivation below.

Up to this point, the introduction to multilevel preconditioners has been basis-free. We now show how this framework can be used to construct a BPX-preconditioner for the linear system (6). Based on the definition (12), we construct a sequence of spaces satisfying (17) such that \(V _{J} = V _{h}^{r}\). In fact, we suppose that for each space dimension we have a sequence of p-open knot vectors \(\varXi _{j_{0},\ell},\ldots,\varXi _{J,\ell}\), ℓ = 1, …, n, which provide a uniform partition of the interval [0, 1] such that \(\varXi _{j,\ell} \subset \varXi _{j+1,\ell}\) for \(j = j_{0},j_{0} + 1,\ldots,J\). In particular, we assume that \(\varXi _{j+1,\ell}\) is obtained from \(\varXi _{j,\ell}\) by dyadic refinement, i.e., the grid spacing for \(\varXi _{j,\ell}\) is proportional to 2−j for each ℓ = 1, …, n. In view of the assumptions on the parametric mapping F, we assume that \(\bar{h}= 2^{-j_{0}}\), i.e., F can be represented in terms of B-splines on the coarsest level j 0. By construction, we have now achieved that

Setting \(V _{j}^{r}:=\{ v \in H_{0}^{r}(\varOmega ):\ v \circ \mathbf{F} \in S_{j}(\hat{\varOmega })\}\), we arrive at a sequence of nested spaces

Setting \(\mathbb{I}_{j}:=\{ 1,\ldots,\dim S_{j}(\hat{\varOmega })\}\), we denote by B i j, \(i \in \mathbb{I}_{j}\), the set of L 2-normalized B-spline basis functions for the space \(S_{j}(\hat{\varOmega })\). Define now the positive definite operator \(P_{j}: L_{2}(\varOmega ) \rightarrow V _{j}^{r}\) as

Corollary 5

For the basis \(\{B_{i}^{j} \circ \mathbf{F}^{-1},\ i \in \mathbb{I}_{j}\}\) , the operators P j and the L 2 -projectors O j are spectrally equivalent for any j.

Proof

The assertion follows by combining (11), (14), with Remark 3.7.1 from [37], see [10] for the main ingredients.\(\square \)

Finally, we obtain an explicit representation of the preconditioner C J in terms of the mapped spline bases for V j r, j = j 0, …, J,

Note that this preconditioner involves all B-splines from all levels j with an appropriate scaling, i.e., in fact a properly scaled generating system for V J r.

Remark 6

The hierarchical basis (HB) preconditioner introduced for n = 2 in [54] for piecewise linear B-splines fits into this framework by choosing Lagrangian interpolants in place of the projectors P j in (23). However, since these operators do not satisfy (P3) in Properties 2, they do not yield an asymptotically optimal preconditioner for n ≥ 2. Specifically, for n = 3, this preconditioner does not have an effect at all.

So far we have not explicitly addressed the dependence of the preconditioned system on p. Since all estimates in Theorem 1 which enter the proof of optimality depend on p, it is to be expected that the absolute values of the condition numbers, i.e., the values of the constants, depend on and increase with p. Indeed, in the next section, we show some numerical results which also aim at studying this dependence.

2.3 Realization of the BPX Preconditioner and Numerical Results

Now we are in the position to describe the concrete implementation of the BPX preconditioner. Its main ingredient are linear intergrid operators which map vectors and matrices between different grids. Specifically, we need to define prolongation and restriction operators.

Since \(V _{j}^{r} \subset V _{j+1}^{r}\), each B-spline B i j on level j can be represented by a linear combination of B-splines B k j+1 on level j + 1. Arranging the B-splines in the set \(\{B_{i}^{j},i \in \mathbb{I}_{j}\}\) into a vector B j in a fixed order, this relation denoted as refinement relation can be written as

with prolongation operator I j j+1 from the trial space V j r to the trial space V j+1 r. The restriction I j+1 j is then simply defined as the transposed operator, i.e., \(\mathbf{I}_{j+1}^{j} = (\mathbf{I}_{j}^{j+1})^{T}\). In case of piecewise linear B-splines, this definition coincides with the well known prolongation and restriction operators from finite element textbooks obtained by interpolation, see, e.g., [8].

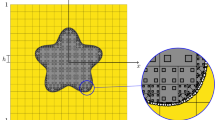

We will exemplify the construction in case of quadratic and cubic B-splines on the interval, see, e.g., [7], as follows. We equidistantly subdivide the interval [0, 1] into 2j subintervals and obtain 2j and 2j + 1, respectively, B-splines for p = 2, 3 and the corresponding quadratic and cubic spline space V j r which is given on this partition, respectively, see Fig. 1 for an illustration. Note that the two boundary functions which do not vanish at the boundary were removed in order to guarantee that \(V _{j}^{r} \subset H_{0}^{r}(\varOmega )\). Moreover, recall that the B-splines are L 2 normalized according to (13) which means that B i j is of the form \(B_{i}^{j}(\zeta ) = 2^{j/2}B(2^{j}\zeta - i)\) if B i j is an interior function, and correspondingly for the boundary functions.

Quadratic (p = 2) (left) and cubic (p = 3) (right) L 2-normalized B-splines (see (13)) on level j = 3 on the interval [0, 1], yielding basis functions for \(V _{j}^{r} \subset H_{0}^{r}(\varOmega )\)

In case of quadratic B-splines (p = 2), the restriction operator \(\mathbf{I}_{j+1}^{j}\) reads

For cubic B-splines (p = 3), it has the form

The normalization factor 2−1∕2 stems from the L 2-normalization (13). The matrix entries are scaled in the usual fashion such that their rows sum to two. From these restriction operators for one dimensions, one obtains the related restriction operators on arbitrary unit cubes [0, 1]n via tensor products. Finally, we set \(\mathbf{I}_{j}^{J}:= \mathbf{I}_{J-1}^{J}\mathbf{I}_{J-2}^{J-1}\cdots \mathbf{I}_{j}^{j+1}\) and \(\mathbf{I}_{J}^{j}:= \mathbf{I}_{j}^{j+1}\mathbf{I}_{j+1}^{j+2}\cdots \mathbf{I}_{J-1}^{J}\) to define prolongations and restrictions between arbitrary levels j and J.

In order to derive the explicit form of the discretized BPX-preconditioner, for given functions \(u_{J},v_{J} \in V _{J}\) with expansion coefficients u J, k and v J, ℓ , respectively, we conclude from (29) that

Next, one can introduce the mass matrix \(\mathbf{M}_{J} = [(B_{k}^{J} \circ \mathbf{F}^{-1},B_{\ell}^{J} \circ \mathbf{F}^{-1})_{L_{2}(\varOmega )}]_{k,\ell}\) and obtains by the use of restrictions and prolongations

The mass matrices which appear in this expression can be further suppressed since M J is spectrally equivalent to the identity matrix. Finally, the discretized BPX-preconditioner to be implemented is of the simple form

involving only restrictions and prolongations. A further simple improvement can be obtained by replacing the scaling factor 2−2jr by \(\mathop{\mathrm{diag}}\nolimits (\mathbf{A}_{j})^{-1}\), where \(\mathop{\mathrm{diag}}\nolimits (\mathbf{A}_{j})\) denotes the diagonal matrix built from the diagonal entries of the stiffness matrix A j . This diagonal scaling has the same effect as the levelwise scaling by 2−2jr but improves the condition numbers considerably, particularly if parametric mappings are involved. Thus, the discretized BPX-preconditioner takes on the form

which we will use in the subsequent computations presented in Tables 1 and 2. If the condition number \(\kappa (\mathbf{A}_{j_{0}})\) is already high in absolute numbers on the coarsest level j 0, it is worth to use its exact inverse on the coarse grid, i.e., to apply instead of (32) the operator

see [12, 46]. Another substantial improvement of the BPX-preconditioner can be achieved by replacing the diagonal scaling on each level by, e.g., a SSOR preconditioning as follows. We decompose the system matrix as \(\mathbf{A}_{j} = \mathbf{L}_{j} + \mathbf{D}_{j} + \mathbf{L}_{j}^{T}\) with the diagonal matrix D j , the lower triangular part L j , and the upper triangular part L j T. Then we replace the diagonal scaling on each level of the BPX-preconditioner (32) by the SSOR preconditioner, i.e., instead of (32) we apply the preconditioner

In doing so, the condition numbers can be improved impressively. In Table 3, we list the ℓ 2-condition numbers for the BPX-preconditioned Laplacian in case of cubic B-splines in two spatial dimensions. By comparing the numbers with those found in Tables 1 and 2 one can infer that the related condition numbers are all reduced by a factor about five. Note that the setup, storage and application of the operator defined in (33) is still of optimal linear complexity.

Finally, we provide numerical results in order to demonstrate the preconditioning and to specify the dependence on the spatial dimension n and the spline degree p. We consider an approximation of the homogeneous Dirichlet problem for the Poisson equation on the n-dimensional unit cube \(\hat{\varOmega }= (0,1)^{n}\) for n = 1, 2, 3. The mesh on level j is obtained by subdividing the cube j-times dyadically into 2n subcubes of mesh size h j = 2−j. On this subdivision, we consider the B-splines of degree p = 1, 2, 3, 4 as defined in Sect. 2.1. The ℓ 2-condition numbers of the related stiffness matrices, preconditioned by the BPX-preconditioner (32), are shown in Table 1. The condition numbers seem to be independent of the level j, but they depend on the spline degree p and the space dimension n for n > 1. For fourth order problems on the sphere, corresponding results for the bi-Laplacian with and without BPX preconditioning were presented in [43].

We study next the dependence of the condition numbers on the parametric mapping F. We consider the case n = 2 in case of a smooth mapping (see the plot on the right hand side of Table 2 for an illustration of the mapping). As one can see from Table 2, the condition numbers are at most about a factor of five higher than the related values in Table 1. Nearly the same observation holds if we replace the parametric mapping by a \(\mathcal{C}^{0}\)-parametrization which maps the unit square onto an L-shaped domain, see [11].

If we consider a singular map F, that is, a mapping that does not satisfy (11), the condition numbers grow considerably as expected, see [11]. But even in this case, the BPX-preconditioner with SSOR acceleration (33) is able to drastically reduce the condition numbers of the system matrix in all examples, see Table 3.

3 Wavelets and the FWT (Fast Wavelet Transform)

Returning to the abstract setting at the beginning of Sect. 2.2, it will now be specified how to construct and realize the FWT (fast wavelet transform) for preconditioning. I would like to emphasize at this point that wavelets are, more importantly than for preconditioning, an appropriate theoretical tool to derive adaptive discretizations in case of singularities in the domain or data. In fact, they enable one to not only prove convergence of a corresponding adaptive scheme but also optimal complexity of these schemes, see [16] and several subsequent papers or [48] for a more recent survey.

3.1 Some Basic Notions

In view of the problem formulation (6), we need to have a wavelet basis for the space H 0 r(Ω) and its dual. We formulate these basic properties for a general Hilbert space H, following [18] or [38]. A wavelet basis for H, shortly wavelets, is understood here as a collection of functions

which are indexed by elements \(\lambda\) from an infinite index set \(\mathbb{I}_{H}\). Each of the indices \(\lambda\) comprises different information \(\lambda = (j,\mathbf{k},\mathbf{e})\) such as the refinement scale or level of resolution j and a spatial location \(\mathbf{k} = \mathbf{k}(\lambda ) \in \mathbb{Z}^{n}\). In more than one space dimension, the basis functions are built from taking tensor products of certain univariate functions, and in this case the third index e contains information on the type of wavelet. We will frequently use the symbol \(\vert \lambda \vert:= j\) to access the resolution level j. In the univariate case on all of \(\mathbb{R}\), \(\psi _{H,\lambda }\) is typically generated by means of shifts and dilates of a single function ψ, i.e., \(\psi _{\lambda } =\psi _{j,k} = 2^{j/2}\psi (2^{j} \cdot -k)\), \(j,k \in \mathbb{Z}\), normalized with respect to \(\|\cdot \|_{L_{2}(\mathbb{R})}\). On bounded domains which can be represented as unions of mappings of tensor product domains, the structure of the functions is essentially the same up to modifications near the boundary.

We assume that the wavelets satisfy the following crucial properties.

Riesz basis property (R): Every v ∈ H has a unique expansion in terms of Ψ H ,

and its expansion coefficients satisfy the norm equivalence

where \(\|\cdot \|:=\| \cdot \|_{\ell_{2}(\mathbb{I}_{H})}\).

Locality (L): The functions \(\psi _{H,\lambda }\) have compact support which decreases with increasing level \(j = \vert \lambda \vert \), i.e.,

Cancellation property (CP): There exists an integer \(\tilde{d} = \tilde{d} _{H}\) such that

This means that integrating against a wavelet has the effect of taking an \(\tilde{m}\) th order difference which annihilates the smooth part of v. This property is for wavelets defined on Euclidean domains typically realized by constructing Ψ H in such a way that it possesses a dual or biorthogonal basis \(\tilde{\varPsi }_{H} \subset H'\) such that the multiresolution spaces \(\tilde{S} _{j}:= \text{span}\{\tilde{\psi }_{H,\lambda }: \vert \lambda \vert < j\}\) contain all polynomials of order \(\tilde{m}\). Here dual basis means that \(\langle \psi _{H,\lambda },\tilde{\psi }_{H,\nu }\rangle =\delta _{\lambda,\nu }\), \(\lambda,\nu \in \mathbb{I}_{H}\).

A few remarks on these properties should be made. In (R), the norm equivalence (36) is crucial since it means control over a function measured in \(\|\cdot \|_{H}\) from above and below by its expansion coefficients: small changes in the coefficients only cause small changes in the function. Together with the locality (L), this also means that local changes stay local. This stability is an important feature which is used for deriving optimal preconditioners. Finally, the cancellation property (CP) entails that smooth functions have small wavelet coefficients which, on account of (36) may be neglected in a controllable way. Moreover, (CP) can be used to derive quasi-sparse representations of a wide class of operators which is important for compression purposes, see, e.g., [48].

By duality arguments one can show that (36) is equivalent to the existence of a biorthogonal collection which is dual or biorthogonal to Ψ H ,

which is a Riesz basis for H′, that is, for any \(\tilde{v} = \tilde{\mathbf{v}} ^{T}\,\tilde{\varPsi }_{H} \in H'\) one has

see [19]. Here the tilde expresses that the collection \(\tilde{\varPsi }_{H}\) is a dual basis to a primal one for the space identified by the subscript, so that \(\tilde{\varPsi }_{H} =\varPsi _{H'}\). Here and in the following, we will view Ψ H both as in (34) as a collection of functions as well as a (possibly infinite) column vector containing all functions always assembled in some fixed unspecified order. For a countable collection of functions \(\varTheta\) and some single function \(\sigma\), the term \(\langle \varTheta,\sigma \rangle\) is then the column vector with entries \(\langle \theta,\sigma \rangle\), \(\theta \in \varTheta\), and correspondingly \(\langle \sigma,\varTheta \rangle\) the row vector. For two collections \(\varTheta,\varSigma\), the quantity \(\langle \varTheta,\varSigma \rangle\) is then a (possibly infinite) matrix with entries \((\langle \theta,\sigma \rangle )_{\theta \in \varTheta,\ \sigma \in \varSigma }\) for which \(\langle \varTheta,\varSigma \rangle =\langle \varSigma,\varTheta \rangle ^{T}\). This also implies for a (possibly infinite) matrix C that \(\langle \mathbf{C}\varTheta,\varSigma \rangle = \mathbf{C}\langle \varTheta,\varSigma \rangle\) and \(\langle \varTheta,\mathbf{C}\varSigma \rangle =\langle \varTheta,\varSigma \rangle \mathbf{C}^{T}\).

In this notation, the biorthogonality or duality conditions (39) can be expressed shortly as

with the infinite identity matrix I.

Wavelets with the above properties can be obtained in the following way. We start from an anchor basis \(\varPsi =\{\psi _{\lambda }:\lambda \in \mathbb{I} = \mathbb{I}_{H}\}\) which is a Riesz basis for L 2(Ω), meaning that Ψ is scaled such that \(\|\psi _{\lambda }\|_{L_{2}(\varOmega )} \sim 1\). Moreover, its dual basis \(\tilde{\varPsi }\) is also a Riesz basis for L 2(Ω). Ψ and \(\tilde{\varPsi }\) are constructed in such a way that rescaled versions of both bases \(\varPsi,\tilde{\varPsi }\) form Riesz bases for a whole range of (closed subspaces of) Sobolev spaces \(H^{s}:= H^{s}(\varOmega )\), for \(0 < s <\gamma, \tilde{\gamma }\), respectively, or subspaces of these. Consequently, one can derive that for each \(s \in (-\tilde{\gamma },\gamma )\) the collection

is a Riesz basis for H s [18], i.e., it holds

for each \(s \in (-\tilde{\gamma },\gamma )\). Such a scaling represented by a diagonal matrix D s introduced in (42) will play an important role later on. Concrete constructions of wavelet bases with the above properties for parameters \(\gamma, \tilde{\gamma }\leq 5/2\) can be found in [22] which cover the situation in (6) for r = 1, 2.

3.2 Multiscale Decomposition of Function Spaces

In this section, the basic construction principles of the biorthogonal wavelets with properties (R), (L) and (CP) are summarized, see, e.g., [18]. Their cornerstones are multiresolution analyses of the function spaces under consideration as in Sect. 2.2 and the concept of stable completions. These concepts are free of Fourier techniques and can therefore be applied to derive constructions of wavelets on domains or manifolds which are subsets of \(\mathbb{R}^{n}\).

Multiresolution of \(\boldsymbol{L}_{\boldsymbol{2}}\) (univariate case). Practical constructions of wavelets typically start out with multiresolution analyses of function spaces. Consider a multiresolution \(\mathcal{S}\) of L 2 which consists of closed subspaces S j of L 2, called trial spaces, such that they are nested and their union is dense in L 2,

The index j is the refinement level which appeared already in the elements of the index set \(\mathbb{I}\) in (34), starting with some coarsest level \(j_{0} \in \mathbb{N}_{0}\). We abbreviate for a finite subset \(\varTheta \subset L_{2}\) the linear span of \(\varTheta\) as

Typically the multiresolution spaces S j have the form

for some finite index set Δ j , where the set \(\{\varPhi _{j}\}_{j=j_{0}}^{\infty }\) is uniformly stable in the sense that

holds uniformly in j. Here we have used again the shorthand notation

and Φ j denotes both the (column) vector containing the functions ϕ j, k as well as the set of functions (45).

The collection Φ j is called single scale basis since all its elements live only on one scale j. In the present context of multiresolution analysis, Φ j is also called generator basis or shortly generators of the multiresolution. We assume that the ϕ j, k are compactly supported with

It follows from (46) that they are scaled such that

holds. It is known that nestedness (44) together with stability (46) implies the existence of matrices M j, 0 such that the two-scale relation

holds. Any set of functions satisfying an equation of this form, the refinement or two-scale relation, will be called refinable. The matrices M j, 0 are up to boundary modifications exactly the intergrid operators occurring in (30).

Denoting by [X, Y ] the space of bounded linear operators from a normed linear space X into the normed linear space Y, one has that

is uniformly sparse which means that the number of entries in each row or column is uniformly bounded. Furthermore, one infers from (46) that

where the corresponding operator norm is defined as

Since the union of \(\mathcal{S}\) is dense in L 2, a basis for L 2 can be assembled from functions which span any complement between two successive spaces S j and S j+1, i.e.,

where

The functions Ψ j are called wavelet functions or shortly wavelets if, among other conditions detailed below, the union \(\{\varPhi _{j} \cup \varPsi _{j}\}\) is still uniformly stable in the sense of (46). Since (51) implies \(S(\varPsi _{j}) \subset S(\varPhi _{j+1})\), the functions in Ψ j must also satisfy a matrix-vector relation of the form

with a matrix M j, 1 of size \((\#\varDelta _{j+1}) \times (\#\nabla _{j})\). Furthermore, (51) is equivalent to the fact that the linear operator composed of M j, 0 and M j, 1,

is invertible as a mapping from \(\ell_{2}(\varDelta _{j} \cup \nabla _{j})\) onto \(\ell_{2}(\varDelta _{j+1})\). One can also show that the set \(\{\varPhi _{j} \cup \varPsi _{j}\}\) is uniformly stable if and only if

The particular cases that will be important for practical purposes are when not only M j, 0 and M j, 1 are uniformly sparse but also the inverse of M j . We denote this inverse by G j and assume that it is split into

A special situation occurs when M j is an orthogonal matrix,

which corresponds to the case of L 2 orthogonal wavelets [25]. A systematic construction of more general M j , G j for spline-wavelets can be found in [22], see also [18] for more examples, including the hierarchical basis.

Thus, the identification of the functions Ψ j which span the complement of S(Φ j ) in S(Φ j+1) is equivalent to completing a given refinement matrix M j, 0 to an invertible matrix M j in such a way that (55) is satisfied. Any such completion M j, 1 is called stable completion of M j, 0. In other words, the problem of the construction of compactly supported wavelets can equivalently be formulated as an algebraic problem of finding the (uniformly) sparse completion of a (uniformly) sparse matrix M j, 0 in such a way that its inverse is also (uniformly) sparse. The fact that inverses of sparse matrices are usually dense elucidates the difficulties in the constructions. Constructions that yield compactly supported wavelets are particularly suited for computations in numerical analysis.

Combining the two-scale relations (49) and (53), one can see that M j performs a change of bases in the space S j+1,

Conversely, applying the inverse of M j to both sides of (57) results in the reconstruction identity

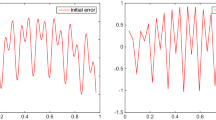

An example of the structure of the matrices M j and G j is given in Fig. 2.

Fixing a finest resolution level J, one can repeat the decomposition (51) so that \(S_{J} = S(\varPhi _{J})\) can be written in terms of the functions from the coarsest space supplied with the complement functions from all intermediate levels,

Thus, every function v ∈ S(Φ J ) can be written in its single-scale representation

as well as in its multi-scale form

with respect to the multiscale or wavelet basis

Often the single-scale representation of a function may be easier to compute and evaluate while the multi-scale representation allows one to separate features of the underlying function characterized by different length scales. Since therefore both representations are advantageous, it is useful to determine the transformation between the two representations, commonly referred to as the Wavelet Transform,

where

The previous relations (57) and (58) indicate that this will involve the matrices M j and G j . In fact, T J has the representation

where each factor has the form

Schematically T J can be visualized as a pyramid scheme

Accordingly, the inverse transform T J −1 can be written also in product structure (64) in reverse order involving the matrices G j as follows:

where each factor has the form

The corresponding pyramid scheme is then

Property (55) and the fact that M j and G j can be applied in (# Δ j+1) operations uniformly in j entails that the complexity of applying T J or T J −1 using the pyramid scheme is of order \(\mathcal{O}(\#\varDelta _{J}) = \mathcal{O}(\dim \,S_{J})\) uniformly in J. For this reason, T J is called the Fast Wavelet Transform (FWT). Note that one should not explicitly assemble T J or T J −1. In fact, due to the particular band structure of M j and G j , this would result in matrices with \(\mathcal{O}(J\,\#\varDelta _{J})\) entries. In Table 4 at the end of this section, spectral condition numbers for the FWT for different constructions of biorthogonal wavelets on the interval computed in [46] are displayed.

Since \(\cup _{j\geq j_{0}}S_{j}\) is dense in L 2, a basis for the whole space L 2 is obtained when letting \(J \rightarrow \infty \) in (62),

Theorem 7 ([18])

The multiscale transformations T J are well-conditioned in the sense

if and only if the collection Ψ defined by (70) is a Riesz basis for L 2.

A detailed construction of the dual wavelets \(\tilde{\varPsi }\), can be found in [18, 39].

Multiresolution of Sobolev spaces. Let now \(\mathcal{S}\) be a multiresolution sequence consisting of closed subspaces of H s with the property (44) whose union is dense in H s. The following result from [18] ensures under which conditions norm equivalences hold for the H s-norm.

Theorem 8

Let \(\{\varPhi _{j}\}_{j=j_{0}}^{\infty }\) and \(\{\tilde{\varPhi }_{j}\}_{j=j_{0}}^{\infty }\) be uniformly stable, refinable, biorthogonal collections. If the Jackson-type estimate

and the Bernstein inequality

hold for

then for

we have the norm equivalence

For many applications it suffices to have established (76) only for certain s > 0 for which one only needs to require (72) and (73) for \(\{\varPhi _{j}\}_{j=j_{0}}^{\infty }\). The Jackson estimates (72) of order \(\tilde{d}\) for \(S(\tilde{\varPhi }_{j})\) imply the cancellation properties (CP) (38), see, e.g., [20].

Remark 9

When the wavelets live on \(\varOmega \subset \mathbb{R}^{n}\), (72) means that all polynomials up to order \(\tilde{d}\) are contained in \(S(\tilde{\varPhi }_{j})\). One also says that \(S(\tilde{\varPhi }_{j})\) is exact of order \(\tilde{d}\). Because of the biorthogonality conditions, this implies that the wavelets ψ j, k are orthogonal to polynomials up to order \(\tilde{d}\) or have \(\tilde{d}\) th order vanishing moments. By Taylor expansion, this in turn yields (38).

For a summary of different constructions of biorthogonal wavelets on \(\mathbb{R}\) and bounded domains in \(\mathbb{R}^{n}\), see, e.g., [39]. We display spectral condition numbers for the FWT for two different constructions of biorthogonal wavelets on the interval in Table 4. The first column denotes the finest level on which the spectral condition numbers of the FWT are computed. The next column contains the numbers for the construction of biorthogonal spline-wavelets on the interval from [22] for the case \(d = 2, \tilde{d} = 4\) while the last column displays the condition numbers for a scaled version derived in [12]. We observe that the absolute numbers stay constant and low even for high levels j. We will see later in Sect. 3.3 how the transformation T J is used for preconditioning.

We see in Fig. 3 some primal biorthogonal wavelets of order d = 2 which consist of piecewise linear B-splines. These can be employed in the construction in wavelets on manifolds [24] which were optimized and implemented to construct biorthogonal wavelet bases on the sphere in [40], see the right graphic in Fig. 3.

Primal wavelets for d = 2 on [0, 1] (left) and on a sphere as constructed in [40] (right)

3.3 Elliptic Boundary Value Problems in Wavelet Coordinates

We now derive a representation of the elliptic boundary value problem (6) in terms of (initially infinite) wavelet coordinates.

Let for H = H 0 r(Ω) the collection Ψ H be a wavelet basis with corresponding dual \(\tilde{\varPsi }_{H}\) which satisfies the properties (R), (L) and (CP) from Sect. 3.1. Expanding the solution u = u T Ψ H , the right hand side \(f = \mathbf{f}^{T}\tilde{\varPsi }_{H}\) and recalling the definition of A in (6), the wavelet representation of the elliptic boundary value problem (6) is given by

where

Then the mapping property (5) and the Riesz basis property (R) yield the following fact.

Proposition 10

The infinite matrix A is a boundedly invertible mapping from \(\ell_{2} =\ell _{2}(\mathbb{I}_{H})\) into itself, and one has

The proof follows by combining the isomorphism relation (5) for the elliptic operator with the Riesz basis property (43). This entails the following consequence with respect to preconditioning. Let for \(\mathbb{I} = \mathbb{I}_{H}\) the symbol \(\varLambda\) denote any finite subset of the index set \(\mathbb{I}\). For the corresponding set of wavelets \(\varPsi _{\varLambda }:=\{\psi _{\lambda }:\ \lambda \in \varLambda \}\), denote by \(S_{\varLambda }:= \text{span}\,\varPsi _{\varLambda }\) the respective finite-dimensional subspace of H. For the wavelet representation of A in terms of \(\varPsi _{\varLambda }\), \(\mathbf{A}_{\varLambda }:= a(\varPsi _{\varLambda },\varPsi _{\varLambda })\), we then have that \(\kappa _{2}(\mathbf{A}_{\varLambda }) \sim 1\) independent of \(\varLambda\), on account of the ellipticity of the operator. In other words, representations of A with respect to properly scaled wavelet bases for H entail well-conditioned system matrices \(\mathbf{A}_{\varLambda }\) independent of \(\varLambda\).

Fast wavelet transform. We briefly explain now in the situation of uniform refinements, i.e., when S(Φ J ) = S(Ψ J), how the FWT T J from (64) can be used for preconditioning linear elliptic operators, together with a diagonal scaling induced by the norm equivalence (76) [21]. We recall the notation from Sect. 3.2 where the wavelet basis is in fact the (unscaled) anchor basis from Sect. 3.1. Thus, the norm equivalence (36) using the scaled wavelet basis Ψ H is the same as (76) in the anchor basis. Recall that the norm equivalence (76) implies that every v ∈ H s can be expanded uniquely in terms of the Ψ and its expansion coefficients v satisfy

where D s is a diagonal matrix with entries \(\mathbf{D}_{(j,k),(j',k')}^{s} = 2^{sj}\delta _{j,j'}\delta _{k,k'}\). Depending on the order of the elliptic operator, we have \(H \subset H^{r}(\varOmega )\) for r = 1 or r = 2. We have, therefore, already identified the diagonal (scaling) matrix D J consisting of the finite portion of the matrix D = D 1 for which \(j_{0} - 1 \leq j \leq J - 1\). The representation of A with respect to the (unscaled) wavelet basis Ψ J can be expressed in terms of the FWT T J , that is,

where Φ J is the single-scale basis for S(Ψ J). Thus, we first set up the operator equation as in finite element settings in terms of the single-scale basis Φ J . Applying the FWT T J together with D J yields that the operator

has uniformly bounded condition numbers independent of J. This can be seen by combining the properties of A according to (5) with the norm equivalences (36) and (40).

It is known that the boundary adaptations of the generators and wavelets aggravate the absolute values of the condition numbers. Nevertheless, these constants can be substantially reduced by an operator-adapted transformation which takes into account only the coarsest discretization level and, thus, is inexpensive [12]. Numerical tests confirm that the absolute constants can further be improved by taking instead of D J −1 the inverse of the diagonal of \(\langle \varPsi ^{J},A\varPsi ^{J}\rangle\) for the scaling in (81) [12, 46].

In Table 5 we display the condition numbers for discretizations using the weak form of the elliptic operator −Δ + I on (0, 1)n in up to three dimensions using boundary adapted biorthogonal spline-wavelets in the case \(d = 2,\ \tilde{d} = 4\) with such a scaling and additional shifts of small eigenvalues which is an inexpensive operation [12].

4 Conclusion and Outlook

The central theme of this paper was to present optimal multilevel preconditioners which enable us to reduce the spectral condition number of the system matrix to be independent of the grid spacing h. Specifically in the context of isogeometric analysis in Sect. 2, the issue arises how the spectral condition numbers depend on the polynomial degree p; we have seen a corresponding behaviour already in Table 1. From a theoretical point of view, it is not clear yet how to estimate the dependence on p and how to remedy its influence.

Some recent results in this directions may be promising. In [29], a full geometric multigrid method was proposed for problems in isogeometric analysis with an increased number of smoothing steps to account for the dependence of the spectral condition number on the polynomial degree p. A different approach is presented with different multigrid approaches involving high-and low-order variants in [49].

I expect that the intergrid operators used in Sect. 2 will also be efficient for isogeometric collocation methods as presented in [2] although it is not clear how to prove optimality of the corresponding preconditioner.

This paper dealt with preconditioners for discretizations on uniform grids which provide best approximations for PDEs with smooth solutions. In case when the solution is not smooth, adaptivity may capture the optimal degrees of freedom when compared to a best N-term approximation. Also in an adaptive method one has to iteratively solve linear systems of equations which again requires a preconditioner. For a BPX-type or FWT preconditioner, the same principles can immediately be applied as long as the spaces generated in the adaptive process are nested as in (17). Of course, then the intergrid operators in Sect. 2.3 have to be adapted accordingly.

References

Y. Achdou, O. Pironneau, Computational Methods for Option Pricing (SIAM, Philadelphia, 2005)

F. Auriccio, L. Beirão da Veiga, T.J.R. Hughes, A. Reali, C. Sangalli, Isogeometric collocation methods. Math. Models Methods Appl. Sci. 20(11), 2075–2107 (2010)

O. Axelsson, P.S. Vassilevski, Algebraic multilevel preconditioning methods I. Numer. Math. 56, 157–177 (1989)

O. Axelsson, P.S. Vassilevski, Algebraic multilevel preconditioning methods II. SIAM J. Numer. Anal. 27, 1569–1590 (1990)

L. Beirão da Veiga, A. Buffa, C. Sangalli, R. Vázquez, Mathematical analysis of variational isogeometric methods. Acta Numer. 23, 157–287 (2014)

L. Beirão da Veiga, D. Cho, L.F. Pavarino, S. Scacchi, Overlapping Schwarz methods for isogeometric analysis. SIAM J. Numer. Anal. 50(3), 1394–1416 (2012)

C. de Boor, A Practical Guide to Splines, rev. edn. (Springer, New York, 2001)

D. Braess, Finite Elements: Theory, Fast Solvers and Applications in Solid Mechanics, 2nd edn. (Cambridge University Press, Cambridge, 2001)

D. Braess, W. Hackbusch, A new convergence proof for the multigrid method including the V-cycle. SIAM J. Numer. Anal. 20, 967–975 (1983)

J.H. Bramble, J.E. Pasciak, J. Xu, Parallel multilevel preconditioners. Math. Comput. 55, 1–22 (1990)

A. Buffa, H. Harbrecht, A. Kunoth, G. Sangalli, BPX preconditioning for isogeometric analysis. Comput. Methods Appl. Mech. Eng. 265, 63–70 (2013)

C. Burstedde, Fast optimised wavelet methods for control problems constrained by Elliptic PDEs, PhD Dissertation, Mathematisch-Naturwissenschaftliche Fakultät, Universität Bonn, Bonn, 2005, http://hss.ulb.uni-bonn.de/2005/0682/0682.htm

C. Canuto, A. Tabacco, K. Urban, The wavelet element method, part I: construction and analysis. Appl. Comput. Harm. Anal. 6, 1–52 (1999)

N. Clarke, K. Parrot, Multigrid for American option pricing with stochastic volatility. Appl. Math. Financ. 6, 177–195 (1999)

A. Cohen, Numerical Analysis of Wavelet Methods. Studies in Mathematics and its Applications, vol. 32 (Elsevier, Amsterdam/Boston, 2003)

A. Cohen, W. Dahmen, R. DeVore, Adaptive wavelet methods for elliptic operator equations—convergence rates. Math. Comput. 70, 27–75 (2001)

A. Cohen, I. Daubechies, J.-C. Feauveau, Biorthogonal bases of compactly supported wavelets. Commun. Pure Appl. Math. 45, 485–560 (1992)

W. Dahmen, Wavelet and multiscale methods for operator equations. Acta Numer. 6, 55–228 (1997)

W. Dahmen, Wavelet methods for PDEs—some recent developments. J. Comput. Appl. Math. 128, 133–185 (2001)

W. Dahmen, Multiscale and wavelet methods for operator equations, in Multiscale Problems and Methods in Numerical Simulation, ed. by C. Canuto. C.I.M.E. Lecture Notes in Mathematics, vol. 1825 (Springer, Heidelberg, 2003), pp. 31–96

W. Dahmen, A. Kunoth, Multilevel preconditioning. Numer. Math. 63, 315–344 (1992)

W. Dahmen, A. Kunoth, K. Urban, Biorthogonal spline wavelets on the interval—stability and moment conditions. Appl. Comput. Harm. Anal. 6, 132–196 (1999)

W. Dahmen, R. Schneider, Composite wavelet bases for operator equations. Math. Comput. 68, 1533–1567 (1999)

W. Dahmen, R. Schneider, Wavelets on manifolds I: construction and domain decomposition. SIAM J. Math. Anal. 31, 184–230 (1999)

I. Daubechies, Orthonormal bases of compactly supported wavelets. Commun. Pure Appl. Math. 41, 909–996 (1988)

R.A. DeVore, A. Kunoth, Multiscale, Nonlinear and Adaptive Approximations (Springer, Heidelberg/New York, 2009)

K.P.S. Gahalaut, J.K. Kraus, S.K. Tomar, Multigrid methods for isogeometric discretization. Comput. Methods Appl. Mech. Eng. 253(1), 413–425 (2013)

S.L. Heston, A closed-form solution for options with stochastic volatility with applications to bond and currency options. Rev. Financ. Stud. 6(2), 327–343 (1993)

C. Hofreither, W. Zulehner, On full multigrid schemes for isogeometric analysis, NuMa-Report No. 2014–03, Johannes-Kepler-Universität Linz, May 2014, http://www.numa.uni-linz.ac.at/Publications/

M. Holtz, A. Kunoth, B-spline based monotone multigrid methods with an application to the pricing of American options, in Multigrid, Multilevel and Multiscale Methods, ed. by P. Wesseling, C.W. Oosterlee, P. Hemker. (Electr.) Proc. EMG, 2005

M. Holtz, A. Kunoth, B-spline based monotone multigrid methods. SIAM J. Numer. Anal. 45(1), 1175–1199 (2007)

J.C. Hull, Options, Futures and Other Derivatives (Prentice Hall International Editions, Upper Saddle River, 2000)

S. Jaffard, Wavelet methods for fast resolution of elliptic problems. SIAM J. Numer. Anal. 29, 965–986 (1992)

S.K. Kleiss, C. Pechstein, B. Jüttler, S. Tomar, IETI—isogeometric tearing and interconnecting. Comput. Methods Appl. Mech. Eng. 247–248, 201–215 (2012)

R. Kornhuber, Adaptive Monotone Multigrid Methods for Nonlinear Variational Problems (Teubner, Stuttgart, 1997)

R. Kraft, Adaptive and linearly independent multilevel B-splines, in Surface Fitting and Multiresolution Methods, ed. by A. Le Méhauté, C. Rabut, L.L. Schumaker (Vanderbilt University Press, Nashville, 1997), pp. 209–218

A. Kunoth, Multilevel Preconditioning (Shaker-Verlag, Aachen, 1994)

A. Kunoth, Wavelet Methods—Elliptic Boundary Value Problems and Control Problems. Advances in Numerical Mathematics (Teubner, Stuttgart, 2001)

A. Kunoth, Optimized wavelet preconditioning, in Multiscale, Nonlinear and Adaptive Approximations, ed. by R.A. DeVore, A. Kunoth (Springer, Heidelberg/New York, 2009), pp. 325–378

A. Kunoth, J. Sahner, Wavelets on manifolds: an optimized construction. Math. Comput. 75, 1319–1349 (2006)

A. Kunoth, Chr. Schneider, K. Wiechers, Multiscale methods for the valuation of American options with stochastic volatility. Int. J. Comput. Math. 89(9), 1145–1163 (2012)

J. Maes, A. Bultheel, A hierarchical basis preconditioner for the biharmonic equation on the sphere. IMA J. Numer. Anal. 26(3), 563–583 (2006)

J. Maes, A. Kunoth, A. Bultheel, BPX-type preconditioners for 2nd and 4th order elliptic problems on the sphere. SIAM J. Numer. Anal. 45(1), 206–222 (2007)

C.W. Oosterlee, On multigrid for linear complementary problems with application to American-style options. Electron. Trans. Numer. Anal. 15, 165–185 (2003)

P. Oswald, On discrete norm estimates related to multilevel preconditioners in the finite element method, in Constructive Theory of Functions, ed. by K.G. Ivanov, P. Petrushev, B. Sendov. Proceedings of the International Conference Varna 1991, Bulgarian Academy of Sciences, Sofia, 1992, pp. 203–214

R. Pabel, Wavelet methods for PDE constrained elliptic control problems with dirichlet boundary control, Diploma Thesis, Institut für Angewandte Mathematik, Universität Bonn (2006), Shaker-Verlag Aachen (2007). doi:10.2370/236_232

L.L. Schumaker, Spline Functions: Basic Theory. Cambridge Mathematical Library, 3rd edn. (Cambridge University Press, Cambridge, 2007)

R. Stevenson, Adaptive wavelet methods for solving operator equations: an overview, in Multiscale, Nonlinear and Adaptive Approximations, ed. by R.A. DeVore, A. Kunoth (Springer, Heidelberg/New York, 2009), pp. 543–598

H. Sundar, G. Stadler, G. Biros, Comparison of multigrid algorithms for high-order continuous finite element discretizations, submitted, 2014. [arXiv:1402.5938]

K. Urban, Wavelet Methods for Elliptic Partial Differential Equations (Oxford University Press, Oxford, 2009)

A.-V. Vuong, C. Giannelli, B. Jüttler, B. Simeon, A hierarchical approach to adaptive local refinement in isogeometric analysis. Comput. Methods Appl. Mech. Eng. 200(49–52), 3554–3567 (2011)

J. Xu, Iterative methods by space decomposition and subspace correction. SIAM Rev. 34(4), 581–613 (1992)

J. Xu, L. Chen, R.H. Nochetto, Optimal multilevel methods for H(grad), H(curl), and H(div) systems on graded and unstructured grids, in Multiscale, Nonlinear and Adaptive Approximations, ed. by R.A. DeVore, A. Kunoth (Springer, Heidelberg/New York, 2009), pp. 599–659.

H. Yserentant, On the multilevel splitting of finite element spaces. Numer. Math. 49, 379–412 (1986)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Kunoth, A. (2015). Multilevel Preconditioning for Variational Problems. In: Jüttler, B., Simeon, B. (eds) Isogeometric Analysis and Applications 2014. Lecture Notes in Computational Science and Engineering, vol 107. Springer, Cham. https://doi.org/10.1007/978-3-319-23315-4_11

Download citation

DOI: https://doi.org/10.1007/978-3-319-23315-4_11

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-23314-7

Online ISBN: 978-3-319-23315-4

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)