Abstract

This paper studies the problem of Non-symmetric Joint Diagonalization (NsJD) of matrices, namely, jointly diagonalizing a set of complex matrices by one matrix multiplication from the left and one multiplication with possibly another matrix from the right. In order to avoid ambiguities in the solutions, these two matrices are restricted to lie in the complex oblique manifold. We derive a necessary and sufficient condition for the uniqueness of solutions, and characterize the Hessian of global minimizers of the off-norm cost function, which is typically used to measure joint diagonality. By exploiting existing results on Jacobi algorithms, we develop a block-Jacobi method that guarantees local convergence to a pair of joint diagonalizers at a quadratic rate. The performance of the proposed algorithm is investigated by numerical experiments.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Non-symmetric joint diagonalization of matrices

- Complex oblique manifold

- Uniqueness conditions

- Block Jacobi algorithm

- Local quadratic convergence

1 Introduction

Joint Diagonalization (JD) of a set of matrices has attracted considerable attentions in the areas of statistical signal processing and multivariate statistics. Its applications include linear blind source separation (BSS), beamforming, and direction of arrival (DoA) estimation, cf. [1]. Classic literature focuses on the problem of symmetric joint diagonalization (SJD) of matrices. Namely, a set of matrices are to be diagonalized via matrix congruence transforms, i.e. multiplication from the left and the right with a matrix and its (Hermitian) transposed, respectively.

In this work, we consider the problem of Non-symmetric Joint Diagonalization (NsJD) of matrices, where the two matrices multiplied from the left and the right are different. Such a general form has been studied in the scheme of multiple-access multiple-input multiple-output (MIMO) wireless transmission, cf. [2]. In the work of [3], NsJD approaches have demonstrated its application in solving the problem of independent vector analysis. Moreover, the problem of NsJD is closely related to the problem of the canonical polyadic decomposition of tensors. We refer to [4, 5] for further discussions on the latter subject.

One contribution of this work is the development of a block Jacobi method for solving the problem of NsJD at a super-linear rate of convergence. Jacobi-type methods have a long history in solving problems of matrix joint diagonalization. Early work in [6] employs a Jacobi algorithm for solving the unitary joint diagonalization problem based on the common off-norm cost function. In the non-unitary setting, Jacobi-type methods have been developed for both the log-likelihood formulation, cf. [7], and the common off-norm case, cf. [8, 9]. The current work provides an extension of [10], which only considers the problem in the real symmetric setting.

2 Uniqueness of Non-symmetric JD

In this work, we denote by \((\cdot )^{\top }\) the matrix transpose, \((\cdot )^{\mathsf {H}}\) the Hermitian transpose, \(\overline{(\cdot )}\) the complex conjugate of entries of a matrix, and by Gl(m) the set of all \(m \times m\) invertible complex matrices. Let \(\{C_{i}\}_{i=1}^{n}\) be a set of \(m{\times }m\) complex matrices, constructed by

where \(A_{L},A_{R} \in Gl(m)\) and \({\varOmega }_{i} = {\text {diag}} \big (\omega _{i1}, \ldots , \omega _{im} \big ) \in \mathbb {C}^{m \times m}\) with \({\varOmega }_{i} \ne 0\). It is worth noticing that there is no relation between the matrices \(A_{L}\) and \(A_{R}\). The task of NsJD is to find a pair of matrices \(X_{L},X_{R} \in Gl(m)\) such that the set of matrices

are jointly diagonalized. In order to investigate properties of any algorithm that aims at finding such a joint diagonalizer, it is fundamental to understand under what conditions there exists a unique solution. In the remainder of this section, we therefore elaborate the uniqueness properties of the non-symmetric joint diagonalization problem (2).

As it is known from the SJD case, there is also an inherent permutation and scaling ambiguity here. Let \(D_{L}, D_{R} \in Gl(m)\) be diagonal and \(P \in Gl(m)\) be a permutation matrix. If \(X_{L}^{*} \in Gl(m)\) and \(X_{R}^{*} \in Gl(m)\) are the joint diagonalizers of problem (2), then so is the pair of \((X_{L}^{*}D_{L}P, X_{R}^{*}D_{R}P)\). In other words, the joint diagonalizers can only be identified up to individual scaling and a joint permutation. We define the set of two jointly column-wise permuted diagonal \((m \times m)\)-matrices by

As the set \(\mathcal {G}(m)\) admits a group structure, we can define an equivalence class on \(Gl(m) \times Gl(m)\) as follows.

Definition 1

(Essential Equivalence). Let \((X_{L},X_{R}) \in Gl(m) \times Gl(m)\), then \((X_{L},X_{R})\) is said to be essentially equivalent to \((Y_{L},Y_{R}) \in Gl(m) \times Gl(m)\), and vice versa, if there exists \((E_{L},E_{R}) \in \mathcal {G}(m)\) such that

Moreover, we say that the solution of problem (2) is essentially unique, if it admits a unique solution on the set of equivalence classes.

Due to the fact that

we assume without loss of generality that the \(C_{i} = {\varOmega }_{i}\), \(i=1,\dots ,n\), are already diagonal. In other words, we investigate the question of under what conditions the set \(\mathcal {G}(m)\) admits the only solutions to the joint diagonalization problem (2), when the \(C_{i}\)’s are already diagonal.

In order to characterize the uniqueness conditions, we need to define a measure of collinearity for diagonal matrices. Recall \({\varOmega }_{i} = {\text {diag}}(\omega _{i1}, \ldots , \omega _{im}) \in \mathbb {C}^{m \times m}\) for \(i = 1, \ldots , n\). For a fixed diagonal position k, we denote by \({z}_{k} := [\omega _{1k}, \ldots , \omega _{nk}]^{\top } \in \mathbb {C}^{n}\) the vector consisting of the k-th diagonal element of each matrix, respectively. Then, the collinearity measure for the set of \({\varOmega }_{i}\)’s is defined by

where \(c({z}_k,{z}_l)\) is the cosine of the complex angle between two vectors \({v},{w} \in \mathbb {C}^n\), computed as

Here, \(\Vert {v}\Vert \) denotes the Euclidean norm of a vector v. Note, that \(0 \le \rho \le 1\) and that \(\rho = 1\) if and only if there exists a complex scalar \(\omega \in \mathbb {C}\) and a pair \({z}_k, {z}_l\), \(k \ne l\) so that \({z}_k = \omega {z}_l\). In other words, \(\rho =1\) if and only if there exist two positions (k, k) and (l, l) such that the corresponding entries in the matrices \({\varOmega }_i\) only differ by multiplication with a complex scalar \(\omega \). We adopt the methods for uniqueness analysis of symmetric joint diagonalization cases, developed in [11], to the NsJD setting.

Lemma 1

Let \({\varOmega }_{i} \in \mathbb {C}^{m \times m}\), for \(i = 1, \ldots , n\), be diagonal, and let \(X_{L}, X_{R} \in Gl(m)\) so that \(X_{L}^{\mathsf {H}} {\varOmega }_{i} X_{R}\) is diagonal as well. Then the pair \((X_{L},X_{R})\) is essentially unique if and only if \(\rho ({\varOmega }_1, \ldots , {\varOmega }_{n}) < 1\).

Proof

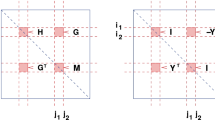

First, consider the case \(m=2\) and let

Then \(X_{L}^{\mathsf {H}} {\varOmega }_{i} X_{R}\) is diagonal for \(i=1, \ldots , n\), if and only if

for \(i=1, \ldots , n\). The corresponding system of linear equations reads as

which only has a unique trivial solution if and only if the coefficient matrix in \(\omega \)’s has rank 2, which in turn is equivalent to \(\rho ({\varOmega }_{1}, \ldots , {\varOmega }_{n})<1\). Specifically, the trivial solution, i.e.

together with the invertibility of \(X_{L}\) and \(X_{R}\) yields that either

Therefore, one can conclude that \((X_{L},X_{R}) \in \mathcal {G}(2)\). For the case \(m >2\), if \(\rho = 1\) then there exists a pair (k, l) such that \(|c(z_k,z_l)|=1\) and the same argument as above shows that \(\rho = 1\) implies the non-uniqueness of the joint diagonalizer.

For the reverse direction of the statement, we assume that the joint diagonalizer is not in \(\mathcal {G}(m)\). We further assume that one of the \({\varOmega }_{i}\)’s, say \({\varOmega }_{1}\), is invertible. Then

for \(i=1, \ldots , n\), gives the simultaneous eigendecomposition of the diagonal matrices \({\varOmega }_i {\varOmega }_{1}^{-1}\). Since we assume \((X_{L},X_{R}) \notin \mathcal {G}(m)\), this eigendecomposition is not unique and thus each matrix \({\varOmega }_i {\varOmega }_{1}^{-1}\) must have at least two identical eigenvalues and these eigenvalues must be located at the same positions (k, k) and (l, l) for all the matrices \({\varOmega }_i {\varOmega }_{1}^{-1}\). In other words, there exists a pair (k, l) with \(k \ne l\) such that

which is equivalent to \(|c(z_k,z_l)|=1\) and hence \(\rho ({\varOmega }_{1}, \ldots ,{\varOmega }_{n})=1\). If all the \({\varOmega }_{i}\)’s are singular, we distinguish between two cases. Firstly, assume that there is a position on the diagonals, say k, where all \(\omega _{ik}=0\). Then \(|c(z_k,z_l)|=1\) holds true for any \(k \ne l\) and thus \(\rho =1\). Secondly, if there is no common position where all the \({\varOmega }_{i}\)’s have a zero entry, there exists an invertible linear combination, say \({\varOmega }_{0}\), which can also be diagonalized via the same transformations. Then by considering a new set \(\{{\varOmega }_{i}\}_{i=0}^{n}\), the same argument as from (13) to (14) for the invertible case applies by replacing \({\varOmega }_1\) with \({\varOmega }_0\). \(\blacksquare \)

3 Analysis of the Joint Diagonality Measure

To deal with the scaling ambiguity, we restrict the search space for the diagonalizing matrices to the quotient space

cf. [12] for further details. As representatives for one equivalent class, we choose elements from the set of complex oblique matrices, i.e.

where \({\text {ddiag}}(Z)\) forms a diagonal matrix, whose diagonal entries are just those of Z, and \(I_{m}\) is the \(m{\times }m\) identity matrix. As a measure for the joint diagonality, we choose

where \({\text {off}} (Z) = Z - {\text {ddiag}}(Z)\) and \(\Vert \cdot \Vert _{\mathrm {F}}\) is the Frobenius norm of matrices.

The convergence rate of the Jacobi method depends on a non-degenerated Hessian form of the cost function at the optimal solution. In what follows, we therefore characterize the critical points of (17) and specify their Hessian form. Let us denote the set of all \(m{\times }m\) matrices with zero diagonal entries by

Then, for any \(X \in {Op}(m)\), the following map

where \(Z = [z_{1},\ldots ,z_{m}] \in \mathfrak {off}(m)\) and \(e_{i}\) is the i-th standard basis vector, is a local and smooth parameterization around X. Let \(\mathcal {X} := (X_{L},X_{R}) \in Op^{2}(m) := Op(m) \times Op(m)\) and denote by \(\mathcal {H} := (H_{L},H_{R}) \in T_{\mathcal {X}}Op^{2}(m)\) the tangent vector at \(\mathcal {X}\). The first derivative of f evaluated at \(\mathcal {X}\) in tangent direction \(\mathcal {H}\) yields

Since any joint diagonalizer \(\mathcal {X}^{*}\) is a global minimum, it satisfies \({\text {D}} f(\mathcal {X}^{*}) \mathcal {H} = 0\). We use \(\mu _{\mathcal {X}} := (\mu _{X_{L}},\mu _{X_{R}})\) as the local parameterization of \(Op^{2}(m)\) and compute the Hessian form \(\mathsf {H}_{f}(\mathcal {X}^{*}) :T_{\mathcal {X}^{*}} Op^{2}(m) \times T_{\mathcal {X}^{*}} Op^{2}(m) \rightarrow \mathbb {R}\). Let \(\mathcal {X}^{*}=(X_{L}^{*},X_{R}^{*})\) be a pair of joint diagonalizers. Without loss of generality assume that \(X_{L}^{*\mathsf {H}}A_{L}\) is diagonal and denoted by \(\varLambda _{L} := {\text {diag}}(\lambda _{L1},\ldots , \lambda _{Lm})\). Similarly, we denote \(\varLambda _{R} := {\text {diag}}(\lambda _{R1},\ldots , \lambda _{Rm}) = A_{R}^{\mathsf {H}} X_{R}^{*}\). A tedious but direct calculation leads to

where \(\delta _{ij}\) is the j-th diagonal entry of the diagonal matrix \({\varDelta }_{i} := X_{L}^{*} C_{i} X_{R}^{*} = \varLambda _{L} {\varOmega }_{i} \varLambda _{R}\).

Clearly, the Hessian of f at \(\mathcal {X}^{*}\) is at least positive semi-definite, and diagonal in terms of \(2 \times 2\) blocks, with respect to the standard basis of the parameter space \(\mathfrak {off}(m) \times \mathfrak {off}(m)\). Then, the definiteness of the Hessian simply depends on the determinant of \(B_{jk}\)’s, which is computed by

By the Cauchy-Schwarz inequality, \({\text {det}}(B_{jk})\) is non-negative, and is equal to zero if and only if there is a pair of positions (j, k), so that \(z_{j} \in \mathbb {R}^{n}\) and \(z_{k}\) are linearly dependent, i.e. \(\rho ({\varOmega }_{1},\ldots ,{\varOmega }_{n}) = 1\). Thus, we conclude.

Lemma 2

Let the NsJD problem (2) have a unique joint diagonalizer. Then the Hessian of the off-norm function (17) at the joint diagonalizer is positive definite.

4 Block-Jacobi for Non-symmetric Joint Diagonalization

In this section, we develop a block Jacobi algorithm to minimize the cost function (17). Firstly, let us define the complex one dimensional subspace

It is clear that \(\oplus _{i \ne j} (V_{ij} \times V_{ji}) = \mathfrak {off}(m) \times \mathfrak {off}(m)\). We then define

yielding a vector space decomposition of the tangent space \(T_{\mathcal {X}} Op^{2}(m)\).

The block Jacobi-type method iteratively employs the search along the subspaces \(\mathcal {V}_{ij}(X)\) in a cyclic manner. More precisely, let \(({\varTheta }_{jk},{\varTheta }_{kj}) \in V_{ij} \times V_{ji}\) and denote \({\theta } =[\theta _{jk}~\theta _{kj}]^{\top } \in \mathbb {C}^{2}\). For any point \(\mathcal {X} \in Op^{2}(m)\), we construct a family of maps \(\big \{ \nu _{jk}^{(\mathcal {X})} \big \}_{j \ne k}^{m}\) by

The algorithm is presented in Algorithm 1. It is readily seen with (21) that the \(\mathcal {V}_{ij}\) are orthogonal with respect to \(\mathsf {H}_{f}{(\mathcal {X}^{*})}\). Therefore, the following result guarantees the super linear convergence rate of the block Jacobi method to an exact joint diagonalizer in case that this diagonalizer is essentially unique.

Theorem 1

([13]). Let M be an n-dimensional manifold and let \(x^{*}\) be a local minimum of the smooth cost function \(f :M \rightarrow \mathbb {R}\) with nondegenerate Hessian \(\mathsf {H}_{f}{(x^{*})}\). Let \(\mu _x\) be a family of local parameterizations of M and let \(\oplus _i V_i\) be a decomposition of \(\mathbb {R}^n\). If the subspaces \(\mathcal {V}_i:=T_{0}\mu _{x^{*}} (V_i) \subset T_{x^{*}}M\) are orthogonal with respect to \(\mathsf {H}_{f}{(x^{*})}\), then the Block-Jacobi method is locally quadratic convergent to \(x^{*}\).

For an experimantal evaluation of this result, we consider the task of jointly diagonalizing a set of non-symmetric matrices \(\{\widetilde{C}_{i}\}_{i=1}^{n}\), constructed by

where \(A \in \mathbb {C}^{m \times m}\) is a randomly picked matrix in \(\mathcal {OB}(m)\), the modulus of diagonal entries of \(\varLambda _{i}\) are drawn from a uniform distribution on the interval (9, 11), \(E_{i} \in \mathbb {C}^{m \times m}\) represents the additive noise, whose entries are generated from a uniform distribution on the unit interval \((-0.5,0.5)\), and \(\varepsilon \in \mathbb {R}\) is the noise level. We set \(m=5\), \(n=20\), and run six tests in accordance with increasing noise, by using \(\varepsilon = d \times 10^{-2}\) where \(d = 0, \ldots , 5\). Each experiment was initialized with the same point, which is randomly drawn within an appropriate neighbourhood of the true joint diagonalizer.

The convergence of algorithms is measured by the distance of the accumulation point \(\mathcal {X}^{*} := (X_{L}^{*},X_{R}^{*})\) to the current iterate \(\mathcal {X}^{(k)} := (X_{L}^{(k)},X_{R}^{(k)})\), i.e., by \(\Vert X_{L}^{(k)}-X_{L}^{*}\Vert _{F} + \Vert X_{R}^{(k)}-X_{R}^{*}\Vert _{F}\). According to Fig. 1, our proposed algorithm converges locally quadratically fast to a pair of joint diagonalizers under the NsJD setting, i.e., when \(\varepsilon = 0\), whereas with an increasing level of noise, the convergence rate slows down accordingly with a tendency of more gradual slopes.

References

Chabriel, G., Kleinsteuber, M., Moreau, E., Shen, H., Tichavsky, P., Yeredor, A.: Joint matrices decompositions and blind source separation: a survey of methods, identification, and applications. IEEE Sig. Process. Mag. 31(3), 34–43 (2014)

Chabriel, G., Barrere, J.: Non-symmetrical joint zero-diagonalization and mimo zero-division multiple access. IEEE Trans. Sig. Process. 59(5), 2296–2307 (2011)

Shen, H., Kleinsteuber, M.: A matrix joint diagonalization approach for complex independent vector analysis. In: Theis, F., Cichocki, A., Yeredor, A., Zibulevsky, M. (eds.) LVA/ICA 2012. LNCS, vol. 7191, pp. 66–73. Springer, Heidelberg (2012)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

Cichocki, A., Mandic, D.P., Phan, A.H., Caiafa, C.F., Zhou, G., Zhao, Q., de Lathauwer, L.: Tensor decompositions for signal processing applications: From two-way to multiway component analysis. IEEE Sig. Process. Mag. 32(2), 145–163 (2015)

Cardoso, J.F., Souloumiac, A.: Jacobi angles for simultaneous diagonalisation. SIAM J. Matrix Anal. Appl. 17(1), 161–164 (1996)

Pham, D.T.: Joint approximate diagonalization of positive definite Hermitian matrices. SIAM J. Matrix Anal. Appl. 22(4), 1136–1152 (2001)

Wang, F., Liu, Z., Zhang, J.: Nonorthogonal joint diagonalization algorithm based on trigonometric parameterization. IEEE Trans. Sig. Process. 55(11), 5299–5308 (2007)

Souloumiac, A.: Nonorthogonal joint diagonalization by combining givens and hyperbolic rotations. IEEE Trans. Sig. Process. 57(6), 2222–2231 (2009)

Shen, H., Hüper, K.: Block Jacobi-type methods for non-orthogonal joint diagonalisation. In: Proceedings of the 34th IEEE ICASSP, pp. 3285–3288 (2009)

Kleinsteuber, M., Shen, H.: Uniqueness analysis of non-unitary matrix joint diagonalization. IEEE Trans. Sig. Process. 61(7), 1786–1796 (2013)

Kleinsteuber, M., Shen, H.: A geometric framework for non-unitary joint diagonalization of complex symmetric matrices. In: Nielsen, F., Barbaresco, F. (eds.) GSI 2013. LNCS, vol. 8085, pp. 353–360. Springer, Heidelberg (2013)

Kleinsteuber, M., Shen, H.: Block-jacobi methods with newton-steps andnon-unitary joint matrix diagonalization. In: Accepted at the 2nd Conference on Geometric Science of Information (GSI) (2015)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Shen, H., Kleinsteuber, M. (2015). A Block-Jacobi Algorithm for Non-Symmetric Joint Diagonalization of Matrices. In: Vincent, E., Yeredor, A., Koldovský, Z., Tichavský, P. (eds) Latent Variable Analysis and Signal Separation. LVA/ICA 2015. Lecture Notes in Computer Science(), vol 9237. Springer, Cham. https://doi.org/10.1007/978-3-319-22482-4_37

Download citation

DOI: https://doi.org/10.1007/978-3-319-22482-4_37

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-22481-7

Online ISBN: 978-3-319-22482-4

eBook Packages: Computer ScienceComputer Science (R0)