

1 Multitype models

In the previous sections, the models we considered described a homogeneous population and could be regarded as toy models. A first generalization is to consider multitype population dynamics. The demographic rates of a subpopulation depend on its own type, and the ecological parameters are functions of the types of the individuals competing with each other. Indeed, we assume that the type influences not only the reproduction or survival abilities but also the access to resources, so that some subpopulations may be better adapted to the environment than others.

For simplicity, the examples that we now consider involve only two types of individuals. Let us consider two subpopulations characterized by two different types, 1 and 2, with respective growth rates \(r_{1}\) and \(r_{2}\). Individuals compete for resources either with individuals of the same species (intra-specific competition) or with individuals of the other species (inter-specific competition). As before, let K be the scaling parameter describing the capacity of the environment. The competition pressure exerted by an individual of type 1 on an individual of type 1 (resp. type 2) is given by \(\frac{c_{11}} {K}\) (resp. \(\frac{c_{21}} {K}\)). The competition pressure exerted by an individual of type 2 on an individual of type 1 (resp. type 2) is given by \(\frac{c_{12}} {K}\) (resp. \(\frac{c_{22}} {K}\)). The parameters \(c_{ij}\) are assumed to be positive.

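By arguments similar to those of Subsection 3.1, the large K-approximation of the population dynamics is described by the well-known competitive Lotka-Volterra dynamical system. Let \(x_{1}(t)\) (resp. \(x_{2}(t)\)) be the limiting renormalized type 1 (resp. type 2) population size. We get

$$\displaystyle{ \frac{dx_{1}}{dt}(t) = x_{1}(t)\big(r_{1} - c_{11}x_{1}(t) - c_{12}x_{2}(t)\big),\qquad \frac{dx_{2}}{dt}(t) = x_{2}(t)\big(r_{2} - c_{21}x_{1}(t) - c_{22}x_{2}(t)\big). }$$(6.1)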

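This system has been extensively studied and its long-time behavior is well known. There are four possible equilibria, whose stability depends on the parameters: the unstable equilibrium (0, 0), the two monomorphic equilibria \(( \frac{r_{1}} {c_{11}},0)\) and \((0, \frac{r_{2}} {c_{22}} )\), and a non-trivial equilibrium \((x_{1}^{{\ast}},x_{2}^{{\ast}})\) given by

$$\displaystyle{ x_{1}^{{\ast}} = \frac{r_{1}c_{22} - r_{2}c_{12}}{c_{11}c_{22} - c_{12}c_{21}},\qquad x_{2}^{{\ast}} = \frac{r_{2}c_{11} - r_{1}c_{21}}{c_{11}c_{22} - c_{12}c_{21}}. }$$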

Of course, the latter exists only if both of its coordinates are positive. Starting from positive initial conditions, the (unique) solution of (6.1) converges to one of the stable equilibria, describing either the fixation of one species or the coexistence of both species. Which limit is reached depends on the signs of the quantities \(r_{2}c_{11} - r_{1}c_{21}\) and \(r_{1}c_{22} - r_{2}c_{12}\) (and, when both are negative, on the initial condition). These quantities measure, respectively, the invasion ability of subpopulation 2 in a monomorphic resident population of type 1 and that of subpopulation 1 in a monomorphic resident population of type 2.
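The dichotomy between coexistence and fixation can be checked numerically. The following Python sketch (our own illustration, not taken from the text) assumes (6.1) has the standard form \(\dot x_i = x_i(r_i - c_{i1}x_1 - c_{i2}x_2)\), integrates it with a hand-written fourth-order Runge-Kutta scheme, and compares the long-time limit with the invasion quantities and the non-trivial equilibrium. The function names and the parameter values in the usage lines are hypothetical.

```python
def lv_rhs(x, r, c):
    """Competitive Lotka-Volterra field: dx_i/dt = x_i (r_i - c_i1 x_1 - c_i2 x_2).

    c[i][j] stores c_{(i+1)(j+1)}, the pressure exerted by type j+1 on type i+1.
    """
    x1, x2 = x
    return (x1 * (r[0] - c[0][0] * x1 - c[0][1] * x2),
            x2 * (r[1] - c[1][0] * x1 - c[1][1] * x2))


def rk4(rhs, x, dt, n_steps):
    """Classical fourth-order Runge-Kutta integration of dx/dt = rhs(x)."""
    for _ in range(n_steps):
        k1 = rhs(x)
        k2 = rhs(tuple(xi + 0.5 * dt * ki for xi, ki in zip(x, k1)))
        k3 = rhs(tuple(xi + 0.5 * dt * ki for xi, ki in zip(x, k2)))
        k4 = rhs(tuple(xi + dt * ki for xi, ki in zip(x, k3)))
        x = tuple(xi + dt / 6.0 * (a + 2.0 * b + 2.0 * e + f)
                  for xi, a, b, e, f in zip(x, k1, k2, k3, k4))
    return x


def invasion_quantities(r, c):
    """(r2 c11 - r1 c21, r1 c22 - r2 c12): invasion ability of type 2, then of type 1."""
    return (r[1] * c[0][0] - r[0] * c[1][0], r[0] * c[1][1] - r[1] * c[0][1])


def coexistence_equilibrium(r, c):
    """Non-trivial equilibrium, solving c11 x1 + c12 x2 = r1, c21 x1 + c22 x2 = r2."""
    det = c[0][0] * c[1][1] - c[0][1] * c[1][0]
    return ((r[0] * c[1][1] - r[1] * c[0][1]) / det,
            (r[1] * c[0][0] - r[0] * c[1][0]) / det)


if __name__ == "__main__":
    r = (1.0, 1.0)
    weak = ((1.0, 0.5), (0.5, 1.0))     # both invasion quantities > 0: coexistence
    print(invasion_quantities(r, weak), coexistence_equilibrium(r, weak))
    print(rk4(lambda x: lv_rhs(x, r, weak), (0.1, 0.5), 0.01, 5000))
```

With weak inter-specific competition both invasion quantities are positive and the trajectory approaches \((2/3, 2/3)\); replacing \(c_{21}\) by 1.5 makes type 2 unable to invade and the solution tends to \((r_1/c_{11}, 0)\).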

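One could extend (6.1) to negative coefficients \(c_{ij}\), describing a cooperative effect of species j on the growth of species i. The long-time behavior can then be totally different. For example, prey–predator models have been extensively studied in ecology (see [39], Part 1). The simplest prey–predator system

$$\displaystyle{ \frac{dx_{1}}{dt}(t) = x_{1}(t)\big(r_{1} - c_{12}x_{2}(t)\big),\qquad \frac{dx_{2}}{dt}(t) = x_{2}(t)\big(c_{21}x_{1}(t) - r_{2}\big), }$$(6.2)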

with \(r_{1},r_{2},c_{12},c_{21} > 0\), has periodic solutions.
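One way to see the periodicity is through a first integral. Assuming the prey–predator system has the classical form \(\dot x_1 = x_1(r_1 - c_{12}x_2)\), \(\dot x_2 = x_2(c_{21}x_1 - r_2)\), the function \(V(x_1,x_2) = c_{21}x_1 - r_2\log x_1 + c_{12}x_2 - r_1\log x_2\) has zero time derivative along solutions, so orbits stay on its closed level curves around \((r_2/c_{21}, r_1/c_{12})\). The Python sketch below checks this numerically; the function names and the parameter values are hypothetical.

```python
import math


def prey_predator_rhs(x, r1, r2, c12, c21):
    """Classical prey-predator field; x[0] is the prey, x[1] the predator."""
    prey, pred = x
    return (prey * (r1 - c12 * pred), pred * (c21 * prey - r2))


def first_integral(x, r1, r2, c12, c21):
    """V = c21 x1 - r2 log x1 + c12 x2 - r1 log x2, constant along solutions."""
    prey, pred = x
    return c21 * prey - r2 * math.log(prey) + c12 * pred - r1 * math.log(pred)


def rk4_step(rhs, x, dt):
    """One fourth-order Runge-Kutta step for dx/dt = rhs(x)."""
    k1 = rhs(x)
    k2 = rhs([xi + 0.5 * dt * ki for xi, ki in zip(x, k1)])
    k3 = rhs([xi + 0.5 * dt * ki for xi, ki in zip(x, k2)])
    k4 = rhs([xi + dt * ki for xi, ki in zip(x, k3)])
    return [xi + dt / 6.0 * (a + 2 * b + 2 * e + f)
            for xi, a, b, e, f in zip(x, k1, k2, k3, k4)]


def max_drift_of_first_integral(x0, params, dt=1e-3, t_max=20.0):
    """Integrate the system and return max |V(x(t)) - V(x(0))| along the path."""
    rhs = lambda x: prey_predator_rhs(x, *params)
    v0 = first_integral(x0, *params)
    x, drift = list(x0), 0.0
    for _ in range(int(round(t_max / dt))):
        x = rk4_step(rhs, x, dt)
        drift = max(drift, abs(first_integral(x, *params) - v0))
    return drift


if __name__ == "__main__":
    params = (1.0, 1.0, 1.0, 1.0)   # r1, r2, c12, c21 (all positive)
    print(max_drift_of_first_integral([1.0, 0.5], params))
```

The printed drift is only numerical error, which is consistent with the trajectories being closed orbits rather than converging to the interior equilibrium.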

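Stochastic models based on this two-type population model have also been developed. Following the previous sections, a first point of view consists in generalizing the logistic Feller stochastic differential equation to this two-dimensional framework. The stochastic logistic Lotka-Volterra process is then defined, for some positive constants \(\gamma_{1}\) and \(\gamma_{2}\), by

$$\displaystyle{ dX_{t}^{1} = \sqrt{\gamma _{1}X_{t}^{1}}\,dB_{t}^{1} + X_{t}^{1}\big(r_{1} - c_{11}X_{t}^{1} - c_{12}X_{t}^{2}\big)dt,\qquad dX_{t}^{2} = \sqrt{\gamma _{2}X_{t}^{2}}\,dB_{t}^{2} + X_{t}^{2}\big(r_{2} - c_{21}X_{t}^{1} - c_{22}X_{t}^{2}\big)dt, }$$(6.3)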

where the Brownian motions \(B^{1}\) and \(B^{2}\) are independent and give rise to demographic stochasticity (see Cattiaux-Méléard [20]). Another point of view consists in taking into account environmental stochasticity (see Evans, Hening, Schreiber [34]).
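A standard way to simulate such a two-dimensional diffusion is the Euler-Maruyama scheme. The sketch below assumes that (6.3) has Lotka-Volterra drift and logistic Feller noise \(\sqrt{\gamma_i X^i}\,dB^i\). The names `gamma`, `dt` and `seed`, and the truncation at 0 (which keeps the square root defined and makes 0 absorbing for each coordinate), are our own illustrative choices, not taken from [20].

```python
import math
import random


def euler_maruyama_lv(x0, r, c, gamma, dt, t_max, seed=0):
    """Euler-Maruyama approximation of the stochastic logistic Lotka-Volterra process.

    dX^i = X^i (r_i - c_i1 X^1 - c_i2 X^2) dt + sqrt(gamma_i X^i) dB^i,
    with independent Brownian increments and negative values truncated to 0.
    """
    rng = random.Random(seed)
    x1, x2 = x0
    sqrt_dt = math.sqrt(dt)
    for _ in range(int(round(t_max / dt))):
        drift1 = x1 * (r[0] - c[0][0] * x1 - c[0][1] * x2)
        drift2 = x2 * (r[1] - c[1][0] * x1 - c[1][1] * x2)
        db1 = rng.gauss(0.0, 1.0) * sqrt_dt   # independent increments of B^1 ...
        db2 = rng.gauss(0.0, 1.0) * sqrt_dt   # ... and of B^2
        new1 = x1 + drift1 * dt + math.sqrt(gamma[0] * x1) * db1
        new2 = x2 + drift2 * dt + math.sqrt(gamma[1] * x2) * db2
        x1, x2 = max(new1, 0.0), max(new2, 0.0)
    return x1, x2


if __name__ == "__main__":
    r, c = (1.0, 1.0), ((1.0, 0.5), (0.5, 1.0))
    print(euler_maruyama_lv((0.1, 0.5), r, c, (0.05, 0.05), 1e-3, 5.0, seed=1))
```

Setting both `gamma` entries to 0 recovers an explicit Euler scheme for (6.1), which is a convenient sanity check.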

Of course, we could also study multidimensional systems corresponding to multitype population models. In what follows, we focus instead on the case where the types of the individuals belong to a continuum. This will allow us to add mutation events, in which the offspring of an individual may randomly mutate and create a new type.

2 Continuum of types and measure-valued processes

Although evolution appears as a global change in the state of a population, its basic mechanisms, mutation and selection, operate at the level of individuals. Consequently, we model the evolving population as a stochastic system of interacting individuals, where each individual is characterized by a vector of phenotypic trait values. The trait space \(\mathcal{X}\) is assumed to be a closed subset of \(\mathbb{R}^{d}\), for some d ≥ 1.

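We denote by \(M_{F}(\mathcal{X})\) the set of all finite non-negative measures on \(\mathcal{X}\). Let \(\mathcal{M}\) be the subset of \(M_{F}(\mathcal{X})\) consisting of all finite point measures:

$$\displaystyle{ \mathcal{M} = \left \{\sum _{i=1}^{n}\delta _{x_{i}},\ n \geq 0,\ x_{1},\ldots,x_{n} \in \mathcal{X}\right \}. }$$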

Here and below, \(\delta_{x}\) denotes the Dirac mass at x. For any \(\mu \in M_{F}(\mathcal{X})\) and any measurable function f on \(\mathcal{X}\), we set \(\left < \mu,f\right > =\int _{\mathcal{X}}fd\mu\).
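Concretely, a finite point measure can be stored as a multiset of traits. The sketch below (our own illustration; representing traits in \(\mathbb{R}^d\) as tuples of floats and the helper names are assumptions) uses `collections.Counter` and computes \(\langle \mu, f\rangle\) and \(\langle \mu, 1\rangle\).

```python
from collections import Counter


def dirac_sum(traits):
    """Build sum_i delta_{x_i} from a list of traits; repeated traits get multiplicity > 1."""
    return Counter(traits)


def pairing(mu, f):
    """<mu, f> = integral of f against the point measure mu (a Counter trait -> multiplicity)."""
    return sum(mult * f(x) for x, mult in mu.items())


def total_mass(mu):
    """<mu, 1>: number of atoms counted with multiplicity."""
    return pairing(mu, lambda x: 1)


if __name__ == "__main__":
    mu = dirac_sum([(0.5,), (0.5,), (1.0,)])            # 2 delta_{0.5} + delta_{1.0} in R^1
    print(total_mass(mu), pairing(mu, lambda x: x[0]))  # prints: 3 2.0
```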

We wish to study the stochastic process \((Y_{t}, t \geq 0)\), taking its values in \(\mathcal{M}\) and describing the distribution of individuals and traits at time t. We write

$$\displaystyle{ Y _{t} =\sum _{i=1}^{N_{t}}\delta _{X_{t}^{i}}, }$$

where \(N_{t} =\langle Y _{t},1\rangle \in \mathbb{N}\) stands for the number of individuals alive at time t, and \(X_{t}^{1},\ldots,X_{t}^{N_{t}}\) describe the individuals’ traits (in \(\mathcal{X}\)).

We assume that the birth rate of an individual with trait x is b(x) and that, in a population \(\nu =\sum _{ i=1}^{N}\delta _{x^{i}}\), its death rate is given by \(d(x,C {\ast}\nu (x)) = d(x,\sum _{i=1}^{N}C(x - x^{i}))\). This death rate takes into account the intrinsic death rate of the individual; it depends not only on its phenotypic trait x but also on the competition pressure exerted by the other individuals alive, modeled by the competition kernel C. Let p(x) and m(x, z)dz be, respectively, the probability that an offspring produced by an individual with trait x carries a mutated trait and the law of this mutant trait.
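The competition pressure \(C*\nu(x)\) is simply a sum over the individuals alive. The sketch below uses one-dimensional traits, a Gaussian kernel and a logistic death rate \(d(x,\zeta) = d_0 + \zeta\); both choices (and all function names) are hypothetical and only serve to make the formula concrete. As in the formula above, the sum also contains the term \(C(0)\) coming from the focal individual itself.

```python
import math


def competition_pressure(x, traits, kernel):
    """C * nu(x) = sum_i C(x - x^i) for nu = sum_i delta_{x^i} (traits listed with repetition)."""
    return sum(kernel(x - xi) for xi in traits)


def gaussian_kernel(h, width=1.0):
    """Hypothetical competition kernel C(h) = exp(-h^2 / (2 width^2))."""
    return math.exp(-h * h / (2.0 * width * width))


def logistic_death_rate(x, zeta, d0=0.1):
    """Hypothetical death rate d(x, zeta) = d0 + zeta (here independent of the trait x)."""
    return d0 + zeta


def death_rates(traits, kernel=gaussian_kernel, d=logistic_death_rate):
    """Death rate d(x^i, C * nu(x^i)) of every individual in the population."""
    return [d(x, competition_pressure(x, traits, kernel)) for x in traits]


if __name__ == "__main__":
    print(death_rates([0.0, 0.0, 1.0]))
```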

Thus, the population dynamics can be roughly summarized as follows. The initial population is characterized by a (possibly random) counting measure \(\nu _{0} \in \mathcal{M}\) at time 0, and any individual with trait x at time t has two independent exponentially distributed random “clocks”: a birth clock with parameter b(x), and a death clock with parameter \(d(x, C {\ast} Y_{t}(x))\). If the death clock of an individual rings, this individual dies and disappears. If the birth clock of an individual with trait x rings, this individual produces an offspring. With probability 1 − p(x), the offspring carries the same trait x; with probability p(x), the trait is mutated. If a mutation occurs, the mutant offspring instantly acquires a new trait z, picked randomly according to the mutation step measure m(x, z)dz. When one of these events occurs, all individuals’ clocks are reset to 0.

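We are looking for an \(\mathcal{M}\)-valued Markov process \((Y _{t})_{t\geq 0}\) with infinitesimal generator L, defined for all bounded real-valued functions ϕ and \(\nu \in \mathcal{M}\) by

$$\displaystyle\begin{array}{rcl} L\phi (\nu ) & =& \int _{\mathcal{X}}(1 - p(x))b(x)\big(\phi (\nu +\delta _{x}) -\phi (\nu )\big)\nu (dx) \\ & & +\int _{\mathcal{X}}p(x)b(x)\int _{\mathcal{X}}\big(\phi (\nu +\delta _{z}) -\phi (\nu )\big)m(x,z)dz\,\nu (dx) \\ & & +\int _{\mathcal{X}}d(x,C {\ast}\nu (x))\big(\phi (\nu -\delta _{x}) -\phi (\nu )\big)\nu (dx). {}\end{array}$$(6.4)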

The first term in (6.4) captures the effect of births without mutation, the second term the effect of births with mutation, and the last term the effect of deaths. The density-dependence makes the third term nonlinear.
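For a concrete population, the generator (6.4) can be evaluated term by term. In the sketch below, traits are real numbers and the point measure is a plain list. As a simplifying assumption not made in the text, the mutation law m(x, z)dz is replaced by a finite list of atoms `(z, weight)` with weights summing to 1 (for instance a quadrature rule). All function and argument names are hypothetical.

```python
def generator(phi, nu, b, p, d, kernel, mutation_atoms):
    """Evaluate L phi(nu) from (6.4) for nu given as a list of real traits.

    mutation_atoms(x) returns (z, weight) pairs approximating m(x, z) dz,
    with weights summing to 1 (a discretization assumed for this sketch).
    """
    phi_nu = phi(nu)
    total = 0.0
    for i, x in enumerate(nu):
        pressure = sum(kernel(x - y) for y in nu)              # C * nu(x)
        # clonal births: nu -> nu + delta_x at rate (1 - p(x)) b(x)
        total += (1.0 - p(x)) * b(x) * (phi(nu + [x]) - phi_nu)
        # mutant births: nu -> nu + delta_z at rate p(x) b(x) m(x, z) dz
        total += p(x) * b(x) * sum(w * (phi(nu + [z]) - phi_nu)
                                   for z, w in mutation_atoms(x))
        # deaths: nu -> nu - delta_x at rate d(x, C * nu(x))
        total += d(x, pressure) * (phi(nu[:i] + nu[i + 1:]) - phi_nu)
    return total
```

For instance, with \(\phi(\nu) = \langle \nu, 1\rangle\) (Python's `len`), the three terms reduce to \(\sum_i \big(b(x^i) - d(x^i, C*\nu(x^i))\big)\), since clonal and mutant births together occur at rate b.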

3 Path construction of the process

Let us justify the existence of a Markov process admitting L as infinitesimal generator. The explicit construction of \((Y_{t})_{t\geq 0}\) also yields two side benefits: it provides a rigorous and efficient algorithm for numerical simulations (given hereafter), and it establishes a general method that will be used to derive some large population limits (Chapter 7).

We make the biologically natural assumption that the birth parameters are bounded and that the death rates grow at most linearly with the competition pressure. Specifically, we assume the following.

Assumption 6.1.

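There exist constants \(\bar{b}\) , \(\bar{d}\) , \(\bar{C}\) , and α and a probability density function \(\bar{m}\) on \(\mathbb{R}^{d}\) such that for each \(\nu =\sum _{ i=1}^{N}\delta _{x^{i}}\) and for \(x,z \in \mathcal{X}\) , \(\zeta \in \mathbb{R}\) ,

$$\displaystyle{ b(x) \leq \bar{b},\qquad d(x,\zeta ) \leq \bar{d}(1 + \vert \zeta \vert ),\qquad C(x) \leq \bar{C},\qquad m(x,z) \leq \alpha \,\bar{m}(z - x). }$$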

These assumptions ensure that there exists a constant \(\hat{C}\) such that, for a population measure \(\nu =\sum _{ i=1}^{N}\delta _{x^{i}}\), the total event rate, obtained as the sum of all event rates, is bounded by \(\hat{C}N(1 + N)\).
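One admissible value of \(\hat{C}\) can be read off directly. The short derivation below uses the bounds \(b \leq \bar{b}\), \(d(x,\zeta) \leq \bar{d}(1+|\zeta|)\) and \(C \leq \bar{C}\) (our reading of Assumption 6.1), assumes in addition that \(C \geq 0\) so that \(|C*\nu(x)| \leq \bar{C}N\), and uses \(\int m(x,z)\,dz = 1\), so that clonal and mutant births of individual i together occur at rate \(b(x^i)\). It is a sketch of one possible constant, not necessarily the one the authors have in mind.

```latex
\sum_{i=1}^{N}\Big( b(x^{i}) + d\big(x^{i}, C*\nu(x^{i})\big) \Big)
  \le N\bar{b} + \sum_{i=1}^{N} \bar{d}\,\big(1 + \bar{C}N\big)
  = (\bar{b}+\bar{d})\,N + \bar{d}\,\bar{C}\,N^{2}
  \le \hat{C}\,N(1+N),
\qquad \hat{C} := \max\big(\bar{b}+\bar{d},\ \bar{d}\,\bar{C}\big).
```

For the simulation algorithm of Section 4, \(\hat{C}\) must also make the acceptance thresholds stay below 1; since \(m(x,x+z)/\bar{m}(z) \leq \alpha\), a larger constant involving α may then be needed.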

Let us now give a pathwise description of the population process \((Y_{t})_{t\geq 0}\). We introduce the following notation.

Notation 1.

Let \(\mathbb{N}^{{\ast}} = \mathbb{N}\setminus \{0\}\). Let \(H = (H^{1},\ldots,H^{k},\ldots ): \mathcal{M}\mapsto (\mathbb{R}^{d})^{\mathbb{N}^{{\ast}} }\) be defined by \(H\left (\sum _{i=1}^{n}\delta _{x_{i}}\right ) = (x_{\sigma (1)},\ldots,x_{\sigma (n)},0,\ldots,0,\ldots )\), where \(\sigma\) is a permutation such that \(x_{\sigma (1)} \curlyeqprec \ldots \curlyeqprec x_{\sigma (n)}\), for some arbitrary order \(\curlyeqprec \) on \(\mathbb{R}^{d}\) (for example, the lexicographic order).

This function H allows us to overcome the following (purely notational) problem. Choosing a trait uniformly among all traits in a population \(\nu \in \mathcal{M}\) consists in choosing i uniformly in \(\{1,\ldots,\left < \nu,1\right >\}\) and then choosing the individual with number i (with respect to the arbitrary order). The trait value of such an individual is thus \(H^{i}(\nu)\).
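In code, H amounts to sorting. The sketch below (an illustration; the helper names are hypothetical) represents traits in \(\mathbb{R}^d\) as tuples, which Python compares lexicographically, omits the trailing zeros of H, and draws a uniformly chosen individual through \(H^{i}(\nu)\).

```python
import random


def H(traits):
    """Traits of nu = sum_i delta_{x_i} in lexicographic order (trailing zeros omitted)."""
    return sorted(traits)


def uniform_trait(traits, rng):
    """Pick i uniformly in {1, ..., <nu, 1>} and return H^i(nu)."""
    i = rng.randint(1, len(traits))   # randint includes both endpoints
    return H(traits)[i - 1]           # H^i uses 1-based indexing


if __name__ == "__main__":
    nu = [(1.0, 0.0), (0.0, 2.0), (0.0, 1.0)]
    print(H(nu))   # [(0.0, 1.0), (0.0, 2.0), (1.0, 0.0)]
```

The result of `H` does not depend on the order in which the atoms were listed, which is exactly why the law of the process does not depend on the chosen order.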

We now introduce the probabilistic objects we will need.

Definition 6.1.

Let \((\Omega,\mathcal{F},P)\) be a (sufficiently large) probability space. On this space, we consider the following four independent random elements:

(i) an \(\mathcal{M}\)-valued random variable \(Y_{0}\) (the initial distribution),

(ii) two Poisson point measures \(N_{1}(ds,di,d\theta )\) and \(N_{3}(ds,di,d\theta )\) on \(\mathbb{R}_{+} \times \mathbb{N}^{{\ast}}\times \mathbb{R}_{+}\), with the same intensity measure \(ds\left (\sum _{k\geq 1}\delta _{k}(di)\right )d\theta\) (the “clonal” birth and the death Poisson point measures),

(iii) a Poisson point measure \(N_{2}(ds,di,dz,d\theta )\) on \(\mathbb{R}_{+} \times \mathbb{N}^{{\ast}}\times \mathcal{X}\times \mathbb{R}_{+}\), with intensity measure \(ds\left (\sum _{k\geq 1}\delta _{k}(di)\right )dz\,d\theta\) (the mutation Poisson point measure).

Let us denote by \((\mathcal{F}_{t})_{t\geq 0}\) the canonical filtration generated by these processes.
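Although \(N_1\), \(N_2\), \(N_3\) have infinite total mass, their restrictions to bounded windows are easy to simulate. For \(N_1\) (or \(N_3\)) restricted to \([0,T]\times\{1,\ldots,n\}\times[0,\theta_{\max}]\), the number of atoms is Poisson with mean \(T\,n\,\theta_{\max}\), and given that number the atoms are i.i.d. uniform on the window. The NumPy sketch below illustrates this; the window parameters `t_max`, `n`, `theta_max` are hypothetical and would be chosen by the user to dominate the population size and the event rates.

```python
import numpy as np


def sample_poisson_window(t_max, n, theta_max, rng):
    """Atoms (s, i, theta) of a Poisson point measure with intensity ds (sum_k delta_k(di)) dtheta,
    restricted to [0, t_max] x {1, ..., n} x [0, theta_max], sorted by time s."""
    mean = t_max * n * theta_max                 # intensity mass of the window
    count = rng.poisson(mean)
    s = rng.uniform(0.0, t_max, size=count)
    i = rng.integers(1, n + 1, size=count)       # upper bound is exclusive
    theta = rng.uniform(0.0, theta_max, size=count)
    order = np.argsort(s)
    return s[order], i[order], theta[order]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    s, i, theta = sample_poisson_window(2.0, 3, 1.5, rng)
    print(len(s), s[:3], i[:3], theta[:3])
```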

We finally define the population process in terms of these stochastic objects.

Definition 6.2.

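Suppose Assumption 6.1 holds. An \((\mathcal{F}_{t})_{t\geq 0}\)-adapted stochastic process \(Y = (Y _{t})_{t\geq 0}\) is called a population process if a.s., for all t ≥ 0,

$$\displaystyle\begin{array}{rcl} Y _{t} & =& Y _{0} +\int _{0}^{t}\int _{\mathbb{N}^{{\ast}}}\int _{\mathbb{R}_{+}}\delta _{H^{i}(Y _{s-})}\mathbf{1}_{\{i\leq \langle Y _{s-},1\rangle \}}\mathbf{1}_{\{\theta \leq b(H^{i}(Y _{s-}))(1-p(H^{i}(Y _{s-})))\}}N_{1}(ds,di,d\theta ) \\ & & +\int _{0}^{t}\int _{\mathbb{N}^{{\ast}}}\int _{\mathcal{X}}\int _{\mathbb{R}_{+}}\delta _{z}\mathbf{1}_{\{i\leq \langle Y _{s-},1\rangle \}}\mathbf{1}_{\{\theta \leq b(H^{i}(Y _{s-}))p(H^{i}(Y _{s-}))m(H^{i}(Y _{s-}),z)\}}N_{2}(ds,di,dz,d\theta ) \\ & & -\int _{0}^{t}\int _{\mathbb{N}^{{\ast}}}\int _{\mathbb{R}_{+}}\delta _{H^{i}(Y _{s-})}\mathbf{1}_{\{i\leq \langle Y _{s-},1\rangle \}}\mathbf{1}_{\{\theta \leq d(H^{i}(Y _{s-}),C{\ast}Y _{s-}(H^{i}(Y _{s-})))\}}N_{3}(ds,di,d\theta ). {}\end{array}$$(6.5)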

Let us now show that if Y solves (6.5), then Y follows the Markovian dynamics we are interested in.

Proposition 6.3.

Suppose Assumption 6.1 holds and consider a solution \((Y_{t})_{t\geq 0}\) of (6.5) such that \(\,\mathbb{E}(\sup _{t\leq T}\langle Y _{t},\mathbf{1}\rangle ^{2}) < +\infty,\ \forall T > 0\). Then \((Y_{t})_{t\geq 0}\) is a Markov process. Its infinitesimal generator L is defined by (6.4). In particular, the law of \((Y_{t})_{t\geq 0}\) does not depend on the chosen order \(\curlyeqprec \).

Proof.

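The fact that \((Y_{t})_{t\geq 0}\) is a Markov process is classical. Let us now consider a bounded measurable function ϕ. With our notation, \(Y _{0} =\sum _{ i=1}^{\left <Y _{0},1\right >}\delta _{H^{i }(Y _{0})}\). A simple computation, using the fact that a.s., \(\phi (Y _{t}) =\phi (Y _{0}) +\sum _{s\leq t}(\phi (Y _{s-} + (Y _{s} - Y _{s-})) -\phi (Y _{s-}))\), shows that

$$\displaystyle\begin{array}{rcl} \phi (Y _{t}) & =& \phi (Y _{0}) +\int _{0}^{t}\int _{\mathbb{N}^{{\ast}}}\int _{\mathbb{R}_{+}}\big(\phi (Y _{s-} +\delta _{H^{i}(Y _{s-})}) -\phi (Y _{s-})\big)\mathbf{1}_{\{i\leq \langle Y _{s-},1\rangle \}}\mathbf{1}_{\{\theta \leq b(H^{i}(Y _{s-}))(1-p(H^{i}(Y _{s-})))\}}N_{1}(ds,di,d\theta ) \\ & & +\int _{0}^{t}\int _{\mathbb{N}^{{\ast}}}\int _{\mathcal{X}}\int _{\mathbb{R}_{+}}\big(\phi (Y _{s-} +\delta _{z}) -\phi (Y _{s-})\big)\mathbf{1}_{\{i\leq \langle Y _{s-},1\rangle \}}\mathbf{1}_{\{\theta \leq b(H^{i}(Y _{s-}))p(H^{i}(Y _{s-}))m(H^{i}(Y _{s-}),z)\}}N_{2}(ds,di,dz,d\theta ) \\ & & +\int _{0}^{t}\int _{\mathbb{N}^{{\ast}}}\int _{\mathbb{R}_{+}}\big(\phi (Y _{s-} -\delta _{H^{i}(Y _{s-})}) -\phi (Y _{s-})\big)\mathbf{1}_{\{i\leq \langle Y _{s-},1\rangle \}}\mathbf{1}_{\{\theta \leq d(H^{i}(Y _{s-}),C{\ast}Y _{s-}(H^{i}(Y _{s-})))\}}N_{3}(ds,di,d\theta ). {}\end{array}$$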

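Since ϕ is bounded, the total event rate is bounded by \(\hat{C}\langle Y_{s},1\rangle(1+\langle Y_{s},1\rangle)\), and \(\mathbb{E}(\sup_{t\leq T}\langle Y_{t},1\rangle^{2})<\infty\), all the terms above are integrable. Taking expectations, we obtain

$$\displaystyle\begin{array}{rcl} \mathbb{E}(\phi (Y _{t})) & =& \mathbb{E}(\phi (Y _{0})) + \mathbb{E}\bigg(\int _{0}^{t}\int _{\mathcal{X}}\Big\{(1 - p(x))b(x)\big(\phi (Y _{s} +\delta _{x}) -\phi (Y _{s})\big) \\ & & + p(x)b(x)\int _{\mathcal{X}}\big(\phi (Y _{s} +\delta _{z}) -\phi (Y _{s})\big)m(x,z)dz + d(x,C {\ast} Y _{s}(x))\big(\phi (Y _{s} -\delta _{x}) -\phi (Y _{s})\big)\Big\}Y _{s}(dx)ds\bigg). {}\end{array}$$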

Differentiating this expression at t = 0 leads to (6.4). □

We now prove the existence of the population process and some of its moment properties.

Theorem 6.4.

(i) Suppose Assumption 6.1 holds and that \(\mathbb{E}\left (\left < Y _{0},1\right >\right ) < \infty \). Then the process \((Y_{t})_{t\geq 0}\) defined in Definition 6.2 is well defined on \(\mathbb{R}_{+}\).

(ii) If furthermore \(\mathbb{E}\left (\left < Y _{0},1\right >^{p}\right ) < \infty \) for some p ≥ 1, then for any \(T < \infty \),

$$\displaystyle{ \mathbb{E}(\sup _{t\in [0,T]}\left < Y _{t},1\right >^{p}) < +\infty. }$$(6.6)

Proof.

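We first prove (ii). Consider the process \((Y_{t})_{t\geq 0}\). For each n, we introduce the stopping time \(\tau _{n} =\inf \left \{t \geq 0,\;\left < Y _{t},1\right > \geq n\right \}\). Then a simple computation using Assumption 6.1 shows that, dropping the non-positive death terms,

$$\displaystyle\begin{array}{rcl} \sup _{s\in [0,t\wedge \tau _{n}]}\langle Y _{s},1\rangle ^{p} & \leq & \langle Y _{0},1\rangle ^{p} +\int _{0}^{t\wedge \tau _{n}}\int _{\mathbb{N}^{{\ast}}}\int _{\mathbb{R}_{+}}\big((\langle Y _{s-},1\rangle + 1)^{p} -\langle Y _{s-},1\rangle ^{p}\big)\mathbf{1}_{\{i\leq \langle Y _{s-},1\rangle \}}\mathbf{1}_{\{\theta \leq b(H^{i}(Y _{s-}))(1-p(H^{i}(Y _{s-})))\}}N_{1}(ds,di,d\theta ) \\ & & +\int _{0}^{t\wedge \tau _{n}}\int _{\mathbb{N}^{{\ast}}}\int _{\mathcal{X}}\int _{\mathbb{R}_{+}}\big((\langle Y _{s-},1\rangle + 1)^{p} -\langle Y _{s-},1\rangle ^{p}\big)\mathbf{1}_{\{i\leq \langle Y _{s-},1\rangle \}}\mathbf{1}_{\{\theta \leq b(H^{i}(Y _{s-}))p(H^{i}(Y _{s-}))m(H^{i}(Y _{s-}),z)\}}N_{2}(ds,di,dz,d\theta ). {}\end{array}$$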

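Using the inequality \((1 + x)^{p} - x^{p} \leq C_{p}(1 + x^{p-1})\), the bound \(b \leq \bar{b}\) and the fact that \(\int _{\mathcal{X}}m(x,z)dz = 1\), and taking expectations, we thus obtain, the value of \(C_{p}\) changing from one line to the other,

$$\displaystyle\begin{array}{rcl} \mathbb{E}\Big(\sup _{s\in [0,t\wedge \tau _{n}]}\langle Y _{s},1\rangle ^{p}\Big) & \leq & \mathbb{E}\big(\langle Y _{0},1\rangle ^{p}\big) + C_{p}\,\mathbb{E}\bigg(\int _{0}^{t\wedge \tau _{n}}\bar{b}\,\langle Y _{s},1\rangle \big(1 + \langle Y _{s},1\rangle ^{p-1}\big)ds\bigg) \\ & \leq & C_{p}\bigg(1 + \int _{0}^{t}\mathbb{E}\Big(\sup _{u\in [0,s\wedge \tau _{n}]}\langle Y _{u},1\rangle ^{p}\Big)ds\bigg). {}\end{array}$$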

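Gronwall’s lemma allows us to conclude that for any \(T < \infty \), there exists a constant \(C_{p,T}\), not depending on n, such that

$$\displaystyle{ \mathbb{E}\Big(\sup _{t\in [0,T\wedge \tau _{n}]}\langle Y _{t},1\rangle ^{p}\Big) \leq C_{p,T}. }$$(6.7)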

First, we deduce that \(\tau_{n}\) tends a.s. to infinity. Indeed, if not, one may find \(T_{0} < \infty \) such that \(\epsilon _{T_{0}} = P\left (\sup _{n}\tau _{n} < T_{0}\right ) > 0\). This would imply that \(\,\mathbb{E}\left (\sup _{t\in [0,T_{0}\wedge \tau _{n}]}\left < Y _{t},1\right >^{p}\right ) \geq \epsilon _{T_{0}}n^{p}\ \) for all n, which contradicts (6.7). We may then let n go to infinity in (6.7) thanks to Fatou’s lemma, which leads to (6.6).

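Point (i) is a consequence of point (ii). Indeed, one builds the solution \((Y_{t})_{t\geq 0}\) step by step. One only has to check that the sequence of jump instants \(T_{n}\) goes a.s. to infinity as n tends to infinity. This follows from (6.6) with p = 1: the population size stays a.s. bounded on every finite time interval, and since the total event rate is bounded by \(\hat{C}N(1+N)\), only finitely many jumps can occur on such an interval. □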

4 Examples and simulations

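Let us remark that Assumption 6.1 is satisfied in the case where

$$\displaystyle{ d(x,C {\ast}\nu (x)) = d(x) +\alpha (x)\int _{\mathcal{X}}C(x - y)\nu (dy), }$$(6.8)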

and b, d, and α are bounded functions.

In the case where, moreover, p ≡ 1, this individual-based model can also be interpreted as a model of a “spatially structured population,” where the trait is viewed as a spatial location and the mutation at each birth event is viewed as dispersal. Models of this kind were introduced by Bolker and Pacala [13, 14] and Law et al. [54], and studied mathematically by Fournier and Méléard [35]. The case C ≡ 1 corresponds to density-dependence through the total population size.

Later, we will consider the particular set of parameters, taken from Kisdi [49] and corresponding to a model of asymmetric competition:

and m(x, z)dz is a Gaussian law with mean x and variance \(\sigma ^{2}\) conditioned to stay in [0, 4]. As we will see in Chapter 7, the constant K scaling the strength of competition also scales the population size (when the initial population size is proportional to K). In this model, the trait x can be interpreted as body size. Equation (6.9) means that body size influences the birth rate negatively and creates asymmetric competition, reflected in the sigmoid shape of C (being larger is competitively advantageous).
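The mutation law of this example, a Gaussian \(\mathcal{N}(x,\sigma^2)\) conditioned to stay in [0, 4], can be sampled by simple rejection: draw from the unconditioned Gaussian and retry until the draw falls in the interval. Since x itself lies in [0, 4], roughly half of the draws or more are accepted as long as σ is small compared with the length of the interval. A sketch, with a hypothetical function name:

```python
import random


def sample_mutant_trait(x, sigma, rng, lower=0.0, upper=4.0):
    """Draw z from N(x, sigma^2) conditioned on lower <= z <= upper, by rejection."""
    while True:
        z = rng.gauss(x, sigma)
        if lower <= z <= upper:
            return z


if __name__ == "__main__":
    rng = random.Random(42)
    print([round(sample_mutant_trait(1.2, 0.1, rng), 3) for _ in range(5)])
```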

Let us now give an algorithmic construction of the population process (in the general case), yielding the size \(N_{t}\) of the population and the trait vector \(\mathbf{X}_{t}\) of all individuals alive at time t.

At time t = 0, the initial population \(Y_{0}\) contains \(N_{0}\) individuals and the corresponding trait vector is \(\mathbf{X}_{0} = (X_{0}^{i})_{1\leq i\leq N_{0}}\). We introduce the following sequences of independent random variables, which will drive the algorithm.

- The type of birth or death event will be selected according to the values of a sequence of random variables \((W_{k})_{k\in \mathbb{N}^{{\ast}}}\) with uniform law on [0, 1].

- The times at which events may be realized will be described using a sequence of random variables \((\tau _{k})_{k\in \mathbb{N}}\) with exponential law with parameter \(\hat{C}\).

- The mutation steps will be driven by a sequence of random variables \((Z_{k})_{k\in \mathbb{N}}\) with law \(\bar{m}(z)dz\).

We set \(T_{0} = 0\) and construct the process inductively for k ≥ 1 as follows.

At step k − 1, the number of individuals is \(N_{k-1}\), and the trait vector of these individuals is \(\mathbf{X}_{T_{k-1}}\).

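Let \(T_{k} = T_{k-1} + \frac{\tau _{k}} {N_{k-1}(N_{k-1} + 1)}\). Since \(\tau_{k}\) is exponentially distributed with parameter \(\hat{C}\), the time step \(\frac{\tau _{k}} {N_{k-1}(N_{k-1} + 1)}\) is exponentially distributed with parameter \(\hat{C}N_{k-1}(N_{k-1}+1)\), the upper bound on the total event rate of a population of \(N_{k-1}\) individuals: it is the waiting time until the next candidate event, and \(\hat{C}(N_{k-1} + 1)\) is an upper bound on the total rate of events affecting each individual.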

At time \(T_{k}\), one chooses an individual \(i_{k} = i\) uniformly at random among the \(N_{k-1}\) individuals alive in the time interval \([T_{k-1},T_{k})\); its trait is \(X_{T_{k-1}}^{i}\). (If \(N_{k-1} = 0\), then \(Y_{t} = 0\) for all \(t \geq T_{k-1}\).)

- If \(0 \leq W_{k} \leq W_{1}^{i}(\mathbf{X}_{ T_{k-1}})\), where

$$\displaystyle{ W_{1}^{i}(\mathbf{X}_{ T_{k-1}}) = \frac{d(X_{T_{k-1}}^{i},\sum _{j=1}^{N_{k-1}}C(X_{ T_{k-1}}^{i} - X_{ T_{k-1}}^{j}))} {\hat{C}(N_{k-1} + 1)}, }$$

then the chosen individual dies, and \(N_{k} = N_{k-1} - 1\).

- If \(W_{1}^{i}(\mathbf{X}_{ T_{k-1}}) < W_{k} \leq W_{2}^{i}(\mathbf{X}_{ T_{k-1}})\), where

$$\displaystyle{ W_{2}^{i}(\mathbf{X}_{ T_{k-1}}) = W_{1}^{i}(\mathbf{X}_{ T_{k-1}}) + \frac{[1 - p(X_{T_{k-1}}^{i})]b(X_{T_{k-1}}^{i})} {\hat{C}(N_{k-1} + 1)}, }$$

then the chosen individual gives birth to an offspring with trait \(X_{T_{k-1}}^{i}\), and \(N_{k} = N_{k-1} + 1\).

- If \(W_{2}^{i}(\mathbf{X}_{ T_{k-1}}) < W_{k} \leq W_{3}^{i}(\mathbf{X}_{ T_{k-1}},Z_{k})\), where

$$\displaystyle{ W_{3}^{i}(\mathbf{X}_{ T_{k-1}},Z_{k}) = W_{2}^{i}(\mathbf{X}_{ T_{k-1}})+\frac{p(X_{T_{k-1}}^{i})b(X_{T_{k-1}}^{i})\,m(X_{T_{k-1}}^{i},X_{T_{k-1}}^{i} + Z_{k})} {\hat{C}\bar{m}(Z_{k})(N_{k-1} + 1)}, }$$

then the chosen individual gives birth to a mutant offspring with trait \(X_{T_{k-1}}^{i} + Z_{k}\), and \(N_{k} = N_{k-1} + 1\).

- If \(W_{k} > W_{3}^{i}(\mathbf{X}_{T_{k-1}},Z_{k})\), nothing happens, and \(N_{k} = N_{k-1}\).

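Then, at any time t ≥ 0, the number of individuals and the population process are defined by

$$\displaystyle{ N_{t} =\sum _{k\geq 0}\mathbf{1}_{\{T_{k}\leq t<T_{k+1}\}}N_{k},\qquad Y _{t} =\sum _{k\geq 0}\mathbf{1}_{\{T_{k}\leq t<T_{k+1}\}}\sum _{i=1}^{N_{k}}\delta _{X_{T_{k}}^{i}}. }$$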

Kisdi’s example (6.9) can be simulated by following this algorithm. Depending on the values of the parameters σ, p and K, the simulations exhibit a very wide variety of qualitative behaviors.
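The algorithm can be sketched in Python for real-valued traits (d = 1). The demographic functions b, d, p, the kernel C, the densities m and \(\bar{m}\), a sampler for the steps \(Z_k\) and the constant \(\hat{C}\) are passed as arguments. The function name `simulate` and the toy parameters in the usage lines (a logistic model with C ≡ 1 and Gaussian mutation steps, not Kisdi’s parameters (6.9)) are hypothetical. As a safeguard, the sketch raises an error if the threshold \(W_3^i\) exceeds 1, which would mean that \(\hat{C}\) is too small for the supplied rates.

```python
import math
import random


def simulate(traits0, t_max, b, d, p, kernel, m, m_bar, sample_z, c_hat, seed=0):
    """Acceptance-rejection construction of (N_t, Y_t) on [0, t_max].

    Returns the jump times, the population sizes after each realized event,
    and the list of traits alive at time t_max.
    """
    rng = random.Random(seed)
    traits = list(traits0)
    t, times, sizes = 0.0, [0.0], [len(traits)]
    while traits:                                      # N = 0: Y_t = 0 from then on
        n = len(traits)
        t += rng.expovariate(c_hat) / (n * (n + 1))    # T_k = T_{k-1} + tau_k / (N (N + 1))
        if t > t_max:
            break
        i = rng.randrange(n)                           # individual chosen uniformly
        x = traits[i]
        w, z = rng.random(), sample_z(rng)             # W_k and Z_k
        scale = c_hat * (n + 1)
        w1 = d(x, sum(kernel(x - y) for y in traits)) / scale
        w2 = w1 + (1.0 - p(x)) * b(x) / scale
        w3 = w2 + p(x) * b(x) * m(x, x + z) / (m_bar(z) * scale)
        if w3 > 1.0:
            raise ValueError("c_hat is too small for these rates")
        if w <= w1:
            traits.pop(i)                              # death
        elif w <= w2:
            traits.append(x)                           # clonal birth
        elif w <= w3:
            traits.append(x + z)                       # mutant birth
        else:
            continue                                   # nothing happens
        times.append(t)
        sizes.append(len(traits))
    return times, sizes, traits


if __name__ == "__main__":
    sigma, K = 0.1, 50
    gauss = lambda h: math.exp(-h * h / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))
    times, sizes, final = simulate(
        [0.0] * 10, 5.0,
        b=lambda x: 1.0, d=lambda x, zeta: 0.2 + zeta / K, p=lambda x: 0.05,
        kernel=lambda h: 1.0, m=lambda x, z: gauss(z - x), m_bar=gauss,
        sample_z=lambda rng: rng.gauss(0.0, sigma), c_hat=1.2)
    print(len(final), min(final, default=None), max(final, default=None))
```

Rejected candidate events only advance time, so the recorded sizes change by exactly one at each stored event; with these toy rates the population fluctuates around 0.8K.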

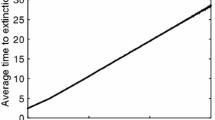

In the following figures (cf. Champagnat-Ferrière-Méléard [21]), the upper part gives the distribution of the traits in the population over time, using a grey scale code for the number of individuals holding a given trait, and the lower part represents the dynamics of the total population size \(N_{t}\).

These simulations will serve to illustrate the different mathematical scalings described in Chapter 7. In Fig. 6.1 (a)–(c), we see the qualitative and quantitative effects of increasing the scaling parameter K, from a process with finite trait support for small K to a wide population density for large K. The simulations of Fig. 6.2 involve birth and death processes with large rates (see Section 7.2) given by

and a small mutation step \(\sigma_{K}\). There is a noticeable qualitative difference between Fig. 6.2 (a), where η = 1∕2, and Fig. 6.2 (b), where η = 1. In the latter, we observe strong fluctuations of the population size and a finely branched structure of the evolutionary pattern, revealing a new form of stochasticity in the large population approximation.

Fig. 6.2 Numerical simulations of trait distributions (upper panels, darker means higher frequency) and population size (lower panels) for accelerated birth and death and concurrently increased parameter K. The parameter η (between 0 and 1) relates the acceleration of demographic turnover to the increase of system size K. (a) Case η = 0.5. (b) Case η = 1. The initial population is monomorphic with trait value 1.2 and contains K individuals.

5 Martingale properties

We give some martingale properties of the process \((Y _{t})_{t\geq 0}\), which are the key point of our approach.

Theorem 6.5.

Suppose Assumption 6.1 holds and that, for some p ≥ 2, \(\mathbb{E}\left (\left < Y _{0},1\right >^{p}\right ) < \infty \).

(i) For all measurable functions ϕ from \(\mathcal{M}\) into \(\mathbb{R}\) such that, for some constant C and for all \(\nu \in \mathcal{M}\), \(\vert \phi (\nu )\vert +\vert L\phi (\nu )\vert \leq C(1 + \left < \nu,1\right >^{p})\), the process

$$\displaystyle{ \phi (Y _{t}) -\phi (Y _{0}) -\int _{0}^{t}L\phi (Y _{ s})ds }$$(6.10)

is a càdlàg \((\mathcal{F}_{t})_{t\geq 0}\)-martingale starting from 0.

(ii) Point (i) applies to any function \(\phi (\nu ) = \left < \nu,f\right >^{q}\), with 0 ≤ q ≤ p − 1 and with f bounded and measurable on \(\mathcal{X}\).

(iii) For such a function f, the process

$$\displaystyle\begin{array}{rcl} & & M_{t}^{f} =\langle Y _{ t},f\rangle -\langle Y _{0},f\rangle -\int _{0}^{t}\int _{ \mathcal{X}}\bigg\{\bigg((1 - p(x))b(x) - d(x,C {\ast} Y _{s}(x))\bigg)f(x) \\ & & \qquad \quad + p(x)b(x)\int _{\mathcal{X}}f(z)\,m(x,z)dz\bigg\}Y _{s}(dx)ds {}\end{array}$$(6.11)

is a càdlàg square-integrable martingale starting from 0 with quadratic variation

$$\displaystyle\begin{array}{rcl} & & \langle M^{f}\rangle _{ t} =\int _{ 0}^{t}\int _{ \mathcal{X}}\bigg\{\bigg((1 - p(x))b(x) + d(x,C {\ast} Y _{s}(x))\bigg)f^{2}(x) \\ & & \qquad \qquad + p(x)b(x)\int _{\mathcal{X}}f^{2}(z)\,m(x,z)dz\bigg\}Y _{ s}(dx)ds. {}\end{array}$$(6.12)
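For a given population and test function f, the ds-integrands of the drift in (6.11) and of the bracket \(\langle M^f\rangle\) are easy to evaluate. Each clonal birth changes \(\langle Y, f\rangle\) by \(+f(x)\), each mutant birth by \(+f(z)\) and each death by \(-f(x)\). The bracket integrand sums rate times squared jump, so deaths contribute \(d(x, C*Y(x))f^2(x)\) with a positive sign, while they enter the drift with a negative sign. In the sketch below the names are hypothetical, and the mutation law is again replaced by finitely many atoms `(z, weight)` with weights summing to 1, a discretization assumed for illustration.

```python
def drift_and_bracket(nu, f, b, p, d, kernel, mutation_atoms):
    """Return the ds-integrands of the drift of <Y, f> and of <M^f> at Y_s = nu.

    nu is a list of real traits; mutation_atoms(x) returns (z, weight) pairs
    with weights summing to 1.
    """
    drift = bracket = 0.0
    for x in nu:
        death = d(x, sum(kernel(x - y) for y in nu))   # d(x, C * nu(x))
        clonal = (1.0 - p(x)) * b(x)
        mutant = p(x) * b(x)
        atoms = mutation_atoms(x)
        drift += (clonal - death) * f(x) + mutant * sum(w * f(z) for z, w in atoms)
        bracket += (clonal + death) * f(x) ** 2 + mutant * sum(w * f(z) ** 2 for z, w in atoms)
    return drift, bracket
```

With f ≡ 1, the drift integrand is \(\sum_i (b - d)(x^i)\) and the bracket integrand \(\sum_i (b + d)(x^i)\), the familiar birth-and-death formulas.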

Proof.

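The proof follows that of Theorem 2.8. First of all, note that point (i) is immediate thanks to Proposition 6.3 and (6.6). Point (ii) follows from a straightforward computation using (6.4). To prove (iii), we first assume that \(\mathbb{E}\left (\left < Y _{0},1\right >^{3}\right ) < \infty \). Applying (i) with \(\phi (\nu ) =\langle \nu,f\rangle\) shows that \(M^{f}\) is a martingale. To compute its bracket, we first apply (i) with \(\phi (\nu ) = \left < \nu,f\right >^{2}\) and obtain that

$$\displaystyle\begin{array}{rcl} & & \langle Y _{t},f\rangle ^{2} -\langle Y _{0},f\rangle ^{2} -\int _{0}^{t}\int _{\mathcal{X}}\bigg\{(1 - p(x))b(x)\big(f^{2}(x) + 2f(x)\langle Y _{s},f\rangle \big) + d(x,C {\ast} Y _{s}(x))\big(f^{2}(x) - 2f(x)\langle Y _{s},f\rangle \big) \\ & & \qquad \quad + p(x)b(x)\int _{\mathcal{X}}\big(f^{2}(z) + 2f(z)\langle Y _{s},f\rangle \big)m(x,z)dz\bigg\}Y _{s}(dx)ds {}\end{array}$$(6.13)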

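is a martingale. On the other hand, applying Itô’s formula to compute \(\left < Y _{t},f\right >^{2}\) from (6.11), we deduce that

$$\displaystyle\begin{array}{rcl} & & \langle Y _{t},f\rangle ^{2} -\langle Y _{0},f\rangle ^{2} -\int _{0}^{t}2\langle Y _{s},f\rangle \int _{\mathcal{X}}\bigg\{\bigg((1 - p(x))b(x) - d(x,C {\ast} Y _{s}(x))\bigg)f(x) \\ & & \qquad \quad + p(x)b(x)\int _{\mathcal{X}}f(z)\,m(x,z)dz\bigg\}Y _{s}(dx)ds -\langle M^{f}\rangle _{t} {}\end{array}$$(6.14)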

is a martingale. The difference between (6.13) and (6.14) is therefore a continuous martingale with finite variation starting from 0, hence it vanishes, which leads to (6.12). The extension to the case where only \(\mathbb{E}\left (\left < Y _{0},1\right >^{2}\right ) < \infty \) follows by a localization argument, since in this case too, \(\mathbb{E}(\langle M^{f}\rangle _{t}) < \infty \) thanks to (6.6) with p = 2. □

References

[13] B. Bolker and S.W. Pacala. Using moment equations to understand stochastically driven spatial pattern formation in ecological systems. Theor. Pop. Biol. 52, 179–197 (1997).

[14] B. Bolker and S.W. Pacala. Spatial moment equations for plant competition: Understanding spatial strategies and the advantages of short dispersal. Am. Nat. 153, 575–602 (1999).

[20] P. Cattiaux and S. Méléard. Competitive or weak cooperative stochastic Lotka-Volterra systems conditioned on non-extinction. J. Math. Biology 6, 797–829 (2010).

[21] N. Champagnat, R. Ferrière and S. Méléard. Unifying evolutionary dynamics: From individual stochastic processes to macroscopic models. Theor. Pop. Biol. 69, 297–321 (2006).

[34] S. N. Evans, A. Hening and S. Schreiber. Protected polymorphisms and evolutionary stability of patch-selection strategies in stochastic environments. Journal of Mathematical Biology, in press.

[35] N. Fournier and S. Méléard. A microscopic probabilistic description of a locally regulated population and macroscopic approximations. Ann. Appl. Probab. 14, 1880–1919 (2004).

[39] J. Hofbauer and K. Sigmund. Evolutionary Games and Population Dynamics. Cambridge Univ. Press (2002).

[49] E. Kisdi. Evolutionary branching under asymmetric competition. J. Theor. Biol. 197, 149–162 (1999).

[54] R. Law, D. J. Murrell and U. Dieckmann. Population growth in space and time: Spatial logistic equations. Ecology 84, 252–262 (2003).


Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Bansaye, V., Méléard, S. (2015). Population Point Measure Processes. In: Stochastic Models for Structured Populations. Mathematical Biosciences Institute Lecture Series, vol 1.4. Springer, Cham. https://doi.org/10.1007/978-3-319-21711-6_6


DOI: https://doi.org/10.1007/978-3-319-21711-6_6

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21710-9

Online ISBN: 978-3-319-21711-6

eBook Packages: Mathematics and Statistics, Mathematics and Statistics (R0)