Abstract

Given that a program has been shown to terminate using a particular proof, it is natural to ask what we can infer about its complexity. In this paper we outline a new approach to tackling this question in the context of term rewrite systems and recursive path orders. From an inductive proof that recursive path orders are well-founded, we extract an explicit realiser which bounds the derivational complexity of rewrite systems compatible with these orders. We demonstrate that by analysing our realiser we are able to derive, in a completely uniform manner, a number of results on the relationship between the strength of path orders and the bounds they induce on complexity.

This work is supported by FWF (Austrian Science Fund) project P-25781.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Termination Proofs

- Recursive Path Ordering

- Term Rewriting Systems (TRS)

- Derivational Complexity

- Primitive Recursion

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Proof theory emphasises proofs over theorems, as put most succinctly by Kreisel’s famous question “What more do we know if we have proved a theorem by restricted means than if we merely know that it is true?”. One application of this quest in the context of program analysis is the link between termination and complexity. Is it possible to derive computational content from a given termination argument, so that we can automatically deduce bounds on the complexity of our programs?

We study this question in the abstract framework of term rewrite systems and recursive path orders, which we take to encompass multiset path orders, lexicographic path orders, and recursive path orders with status. Our main contribution is to analyse the proof that recursive path orders are well-founded and extract an explicit term in Gödel’s system \(\intercal \) which bounds the derivational complexity of rewrite systems reducing under these orders. Our framework is uniform in the sense that our term applies to any variant of recursive path order studied, by just adapting its parameters. We then demonstrate that a simple analysis of our term allows us to uniformly derive the well-known primitive recursive bounds on the derivational complexity of multiset path orders [1] (see Theorem 1) and the multiple recursive bounds on lexicographic path orders [2] (see Theorem 2).

The emphasis of this work is less on the technical results achieved, but in the method used to achieve them. Our re-derivation of the standard bounds for multiset and lexicographic path orders contrasts greatly to the somewhat ad-hoc originally carried out by Hofbauer and Weiermann, and are much more closely related to the study of Buchholz [3], which forms the starting point of our work. However, whereas in [3] complexity bounds are obtained via a suitable formalisation of termination proofs in fragments of arithmetic and rely on Parson’s fundamental work [4], our focus is on extracting an explicit subrecursive bound. Therefore, not only is our proof completely elementary and self-contained, but our concrete realising term is amenable to a much finer analysis of complexity for restricted path orders.

In addition to the aforementioned results, we obtain a novel derivational complexity analysis of recursive path orders with status [5], where we confirm that the induced complexity is multiple recursive, which follows as a corollary to our general boundedness result Theorem 2. This general bound is not surprising in the context of the well-known multiple-recursive bound on the lexicographic path orders and follows with relative ease from earlier work [6]. However, our smooth framework allows us to get rid of the technicalities involved in earlier work.

Throughout the history of term rewriting, a general link has been sought between the strength of a termination argument and the complexity of rewrite systems it admits. An early attempt at such a correspondence is the so-called Cichon’s principle, which states that the derivational complexity function of a TRS \(\mathcal {R}\) for which termination is provable using a termination order of order type \(\alpha \) is eventually dominated by a function from the slow-growing hierarchy \(\mathsf {G}_{\alpha }\) along \(\alpha \), cf. [7]. Unfortunately, while this principle holds for the standard recursive path orders, it is not true in general, even for its relaxed version as proposed by Touzet [8] - see [9] for a proof. It is now accepted that the link between termination orders and complexity is dependent in a much more subtle way on the structure of the termination proof. Therefore, we believe that applying proof-theoretic techniques to analyse the computational meaning of path orders could provide some important insight into the relationship between termination and complexity. This approach has already been successfully pioneered in e.g. [10, 11], and we hope that the work outlined here constitutes a first step towards a similarly successful program in the context of rewriting.

2 Recursive Path Orders

We assume familiarity with term rewriting [5, 12], and recall only some basic notation. Let \(\mathcal {V}\) denote a countable infinite set of variables, \(\mathcal {F}\) a finite set of function symbols, and \({{\mathrm{\mathcal {T}}}}(\mathcal {F},\mathcal {V})\) (\(\mathcal {T}\) for short) the set of terms constructed from these. A term rewrite system (TRS) \(\mathcal {R}\) over \({{\mathrm{\mathcal {T}}}}(\mathcal {F},\mathcal {V})\) is a finite set of rewrite rules \(l \rightarrow r\). For a given term t, |t| denotes its size (the total number of variables and function symbols in t), \(\mathsf {dp}(t)\) its depth (the maximal number of nesting function symbols) and \(\mathsf{Var}(t)\) the set of variables in t. The rewrite relation is denoted as \(\mathrel {\mathrel {\rightarrow }_{\mathcal {R}}}\) and we use the standard notations for its transitive and reflexive closure. The derivation height of a term s with respect to a well-founded, finitely branching relation \(\rightarrow \) is defined as: \({{\mathrm{\mathsf {dh}}}}(s,\rightarrow ) = \max \{ n \mid \exists t \; s \rightarrow ^n t \}\). The derivational complexity function \(\mathsf {dc}_{\mathcal {R}}\) is defined as follows: \(\mathsf {dc}_{\mathcal {R}}(n) \mathrel {:=}\max \{ {{\mathrm{\mathsf {dh}}}}(t,\mathrel {\mathrel {\rightarrow }_{\mathcal {R}}}) \mid |{t}| \leqslant n \}\).

Well-founded path orders are a powerful method for proving the termination of rewrite systems, and recursive path orders are one of the best known of these. For an arbitrary relation \(>\) defined on some set X, we let \(>_\mathsf {mul}\) and \(>_\mathsf {lex}\) denote respectively the multiset and lexicographic extensions of \(>\) to finite tuples \(X^n\). We write \(<_\mathsf {mul}\) for the reverse of \(>_\mathsf {mul}\), and analogously for all other annotated inequality symbols used below.

Definition 1

(Recursive path order). Let \(\succ \) be a well-founded precedence (i.e. a proper order) on a finite signature \(\mathcal {F}\). The recursive path order (RPO) \(\mathrel {{\succ }_{\mathsf {rpo}}}\) with respect to some status function \(\tau :\mathcal {F}\rightarrow \{\mathsf {mul},\mathsf {lex}\}\) is defined recursively as follows: we say that \(t=f({t_1},\dots ,{t_{n}})\mathrel {{\succ }_{\mathsf {rpo}}}s\) if one of the following holds:

-

(a)

\(t_i\mathrel {{\succeq }_{\mathsf {rpo}}} s\) for some \(i=1,\ldots ,m\);

-

(b)

\(s=g({s_1},\dots ,{s_{m}})\) with \(f\succ g\) and \(t\mathrel {{\succ }_{\mathsf {rpo}}}s_i\) for all \(i=1,\ldots ,n\);

-

(c)

\(s=f({s_1},\dots ,{s_{n}})\), \(t\mathrel {{\succ }_{\mathsf {rpo}}}s_i\) for all \(i=1,\ldots ,n\) and \(({t_1},\dots ,{t_{n}})\succ _{\mathsf{rpo},\tau (f)}({s_1},\dots ,{s_{n}})\).

Here \(\mathrel {{\succeq }_{\mathsf {rpo}}}\) denotes the reflexive closure of \(\mathrel {{\succ }_{\mathsf {rpo}}}\).

Recall that the multiset path order \(\mathrel {{\succ }_{\mathsf {mpo}}}\) is the instance of RPO for which \(\tau (f)=\mathsf {mul}\) for all f, and analogously for the lexicographic path-order. We say that a TRS \(\mathcal {R}\) is compatible with \(\mathrel {{\succ }_{\mathsf {rpo}}}\) for some suitable choice of \(\succ \) and \(\tau \) if \({\mathcal {R}} \subseteq {\mathrel {{\succ }_{\mathsf {rpo}}}}\). It is easy (but tedious) to show that \(\mathrel {{\succ }_{\mathsf {rpo}}}\) is closed under both substitutions and contexts, and therefore from compatibility we obtain that \({\mathrel {\mathrel {\rightarrow }_{\mathcal {R}}}} \subseteq {\mathrel {{\succ }_{\mathsf {rpo}}}}\). In the latter case, we say that \(\mathcal {R}\) is reducing with respect to \(\mathrel {{\succ }_{\mathsf {rpo}}}\). It is well-known that \(\mathrel {{\succ }_{\mathsf {rpo}}}\) is well-founded, so any TRS compatible with \(\mathrel {{\succ }_{\mathsf {rpo}}}\) is terminating.

There are two well-known basic strategies to show that a RPO is well-founded. One can either appeal to some variant of the minimal-bad-sequence argument to show that there cannot exist an infinite descending chain of terms \(t_0\mathrel {{\succ }_{\mathsf {rpo}}}t_1\mathrel {{\succ }_{\mathsf {rpo}}}\ldots \), either in the form of Kruskal’s theorem or directly applied to path orders as in [13], or alternatively one can take what is essentially the contrapositive of this statement and proceed via a series of nested inductions on terms, as in e.g. [3, 14]. This second approach is most amenable for the purposes of program extraction, so we sketch the inductive proof below.

Theorem 1

\(\mathrel {{\succ }_{\mathsf {rpo}}}\) is well-founded.

Proof

Let \(t\in \mathrm{WF}\) denote that t is a well-founded term, i.e. there are no infinite descending sequences starting from t. We prove that

Then, since we trivially have \(x\in \mathrm{WF}\) for all variables x, we obtain \(\forall t(t\in \mathrm{WF})\) from (1) by well-founded induction over the structure of terms. Therefore it remains to prove \(\forall f A(f)\). Let us fix, for now, \(f\in \mathcal {F}\) and \({t_1},\dots ,{t_{n}}\in \mathrm{WF}\) and make the following assumptions:

-

(A)

\(\forall g\prec f A(g)\)

-

(B)

\(\forall ({s_1},\dots ,{s_{n}}) \prec _{\mathsf{rpo},\tau (f)}({t_1},\dots ,{t_{n}})({s_1},\dots ,{s_{n}}\in \mathrm{WF}\rightarrow f({s_1},\dots ,{s_{n}})\in \mathrm{WF})\),

where we note that \(\prec _{\mathsf{rpo},\tau (f)}\) is only ever applied to tuples of well-founded terms. We prove that \(f({t_1},\dots ,{t_{n}})\in \mathrm{WF}\) is well-founded by showing that

using induction over \(\mathrel {{\blacktriangleleft }}\), where \(\mathrel {{\blacktriangleleft }}\) denotes the immediate subterm relation. Let us fix s and assume that \(\forall s' \mathrel {{\blacktriangleleft }}s\; B(s')\). Then if \(t=f({t_1},\dots ,{t_{n}})\mathrel {{\succ }_{\mathsf {rpo}}}s\) there are three possibilities:

-

(a)

\(t_i\mathrel {{\succeq }_{\mathsf {rpo}}} s\) for some \(i=1,\ldots ,n\), in which case \(s\in \mathrm{WF}\) by assumption that \(t_i\in \mathrm{WF}\);

-

(b)

\(s=g({s_1},\dots ,{s_{m}})\) with \(f\succ g\) and \(t\mathrel {{\succ }_{\mathsf {rpo}}}s_i\) for all i. Then by our induction hypothesis we must have \({s_1},\dots ,{s_{m}}\in \mathrm{WF}\), and therefore by assumption (A) we have \(g({s_1},\dots ,{s_{m}})\in \mathrm{WF}\) too;

-

(c)

\(s=f({s_1},\dots ,{s_{n}})\) with \(t\mathrel {{\succ }_{\mathsf {rpo}}}s_i\) for all i and \(({t_1},\dots ,{t_{n}})\succ _{\mathsf{rpo},\tau (f)}({s_1},\dots ,{s_{n}})\). Then again \({s_1},\dots ,{s_{n}}\in \mathrm{WF}\), and this time by assumption (B) we have \(f({s_1},\dots ,{s_{n}})\in \mathrm{WF}\).

This establishes B(s), and thus by \(\mathrel {{\blacktriangleleft }}\)-induction we obtain \(\forall s B(s)\) and hence well-foundedness of \(f({t_1},\dots ,{t_{n}})\). We now carry out two further inductions to eliminate the assumptions (A) and (B) in turn. First, from (A) \(\rightarrow \) (B)\(\rightarrow ({t_1},\dots ,{t_{n}}\in \mathrm{WF}\rightarrow f({t_1},\dots ,{t_{n}})\in \mathrm{WF})\) and well-founded induction over \((\mathrm{WF},\succ _{\mathsf{rpo},\tau (f)})\) we obtain (A)\(\rightarrow A(f)\), and this yields \(\forall f A(f)\) by induction on \((\mathcal {F},\succ )\), and we’re done. \(\Box \)

2.1 A Finitary Formulation of Theorem 1

In general, we know that an arbitrary rewrite system \(\mathcal {R}\) compatible with some \(\mathrel {{\succ }_{\mathsf {rpo}}}\) must terminate by well-foundedness of \(\mathrel {{\succ }_{\mathsf {rpo}}}\). However, for a fixed rewrite system, the full strength of Theorem 1 is never used, since unlike \(\mathrel {{\succ }_{\mathsf {rpo}}}\) the rewrite relation \(\rightarrow _\mathcal {R}\) is only finitely branching. Rather, \(\mathcal {R}\) will always lie in some finitary approximation of \(\mathrel {{\succ }_{\mathsf {rpo}}}\), where the size of this approximation will depend in some suitable sense on the ‘size’ of \(\mathcal {R}\). Thus, in order to successfully analyse the complexity of the termination proof, we are not interested in analysing the well-foundedness of \(\mathrel {{\succ }_{\mathsf {rpo}}}\) itself, but only these finitary approximations to it.

A precise characterisation of the approximation of \(\mathrel {{\succ }_{\mathsf {rpo}}}\) needed to prove well-foundedness of a given TRS is established by Buchholz in [3], and we reformulate his idea below in a slightly simplified way (the simplification being possible because here we do not consider varyadic function symbols).

In what follows, we use the abbreviation \(\mathsf{RPO}(>,t,s)\) for the statement that one of the conditions (a)–(c) in Definition 1 holds for s, t and \(>\). Thus one defines \(\mathrel {{\succ }_{\mathsf {rpo}}}\) by \(f({t_1},\dots ,{t_{n}})\mathrel {{\succ }_{\mathsf {rpo}}}s \ \text {iff} \ \mathsf{RPO}(\mathrel {{\succ }_{\mathsf {rpo}}},f({t_1},\dots ,{t_{n}}),s)\).

Definition 2

The approximation \(\succ _k\) of \(\mathrel {{\succ }_{\mathsf {rpo}}}\) is recursively defined as follows: we have \(t=f({t_1},\dots ,{t_{n}})\succ _k s\) iff

where \(\mathsf {dp}(t)\) denotes the depth of t.

We call \(\succ _k\) finitary because by definition for each t there are only finitely many s for which \(t\succ _k s\). The proof of the next theorem can essentially be read-off from Buchholz’s proof in [3]. However, our later proof extraction is based on the simplified proof given here.

Theorem 2

(Buchholz [3]). Any \(\mathcal {R}\) compatible with an \(\mathrel {{\succ }_{\mathsf {rpo}}}\) is contained in \(\succ _k\) for some k depending on \(\mathcal {R}\).

Proof

It is first shown that

-

(i)

\(t \mathrel {{\succ }_{\mathsf {rpo}}}s\) implies \(t\sigma \succ _{\mathsf {dp}(s)} s\sigma \) for any substitution \(\sigma \),

-

(ii)

\(t_j \succ _k s\) implies \(f({t_1},\dots ,{t_{n}})\succ _k f(t_1,\ldots ,t_{j-1},s,t_{j+1},\ldots ,t_n)\) for any f.

Property (i) is most easily established as in [3]; as usual \(t \mathrel {{\succ }_{\mathsf {rpo}}}s\) implies that \(\mathsf{Var}(s\sigma ) \subseteq \mathsf{Var}(t\sigma )\). Furthermore, induction on \(\mathrel {{\succ }_{\mathsf {rpo}}}\) yields that \(t \mathrel {{\succ }_{\mathsf {rpo}}}s\) implies \(\mathsf {dp}(s\sigma )\leqslant \mathsf {dp}(s)+\mathsf {dp}(t\sigma )\). Yet another induction over \(\mathrel {{\succ }_{\mathsf {rpo}}}\) derives (i), and property (ii) is similarly straightforward. Now, for a given \(\mathcal {R}\) let \(k \mathrel {:=}\max \{\mathsf {dp}(r)\; :\; l\rightarrow r\in \mathcal {R}\}\). It is then clear that if \(\mathcal {R}\) is compatible with \(\mathrel {{\succ }_{\mathsf {rpo}}}\) then \(t \mathrel {\mathrel {\rightarrow }_{\mathcal {R}}}s\) implies \(t \succ _k s\), since by (i) we have \(l\sigma \succ _k r\sigma \) for all rules \(l\rightarrow r\), and therefore \(C[l\sigma ] \succ _k C[r\sigma ]\) by induction on (ii). \(\Box \)

3 Bounding the Derivational Complexity of \(\mathcal {R}\)

We now construct a term which forms a recursive analogue to Theorem 1, but takes into account the fact that we only need to consider finitary approximations. Let \(\mathcal {R}\), a RPO \(\mathrel {{\succ }_{\mathsf {rpo}}}\) compatible with \(\mathcal {R}\) and a suitable approximation \(\succ _k\) of \(\mathrel {{\succ }_{\mathsf {rpo}}}\) be fixed for the remainder of the paper.

3.1 Term and Tree Encodings, Gödel’s System \(\intercal \)

We assume that the terms \(\mathcal {T}\) of our rewrite system can be primitive recursively encoded into \({\mathbb {N}}\), and write \(s<_\mathcal {T}t\) to denote that the code of s is less than the code of t. Let \({\mathcal {T}}^{\star }\) denote the set of all finite trees of terms. For \(T\in {\mathcal {T}}^{\star }\) we write \(\mathsf {rt}({T})\) to denote the root of T, and write \(S\subset T\) if S is an immediate subtree of T. Again, we assume that \({\mathcal {T}}^{\star }\) has been primitive recursively encoded into \({\mathbb {N}}\).

In what follows we work in the standard language of system \(\intercal \) in all finite types \(\rho \): terms are built from the usual arithmetic constants, \(\lambda \)-abstraction and application, and Gödel’s primitive recursor \(\mathsf {R}_\rho ^h(n)=hn(\lambda m<n\; . \; \mathsf {R}_\rho ^h(m))\) whose output can have arbitrary type \(\rho \). It is clear that recursion over the decidable relations \(\blacktriangleleft \) and \(\subset \) can be defined in terms of the recursor of base type \({\mathbb {N}}\), since without loss of generality we can assume that if \(s\blacktriangleleft t\) then \(s<_\mathcal {T}t\), and similarly for \(\subset \). Therefore terms build up from these forms of recursion are primitive recursive in the usual sense. On the other hand, given a well-founded lifting \(\subset _*\) of \(\subset \) to tuples \(({\mathcal {T}}^{\star })^n\) (where in the sequel \(\subset _*\) will be either the multiset or lexicographic lifting) we let \(\mathsf {TR}_{\subset _*}\) denote the transfinite recursor over \(\subset _*\) of base output type. We leave open for now how this can be formally defined within system \(\intercal \) as this will depend on the lifting.

3.2 Computing Derivation Trees

Let t be a term and let \(\varPhi _k(t)\) denote the finite tree T with root t, whose branches \((t,t_1,\ldots ,t_n)\) are precisely \(\succ _k\)-derivations \(t\succ _k t_1\succ _k\ldots \succ _k t_n\) from t; terms will be denoted by lower-case letters and derivation trees by upper-case letters. Finiteness of \(\varPhi _k(t)\) follows since \(\succ _k\) is well-founded and finitely branching. We now show how \(\varPhi _k(t)\) can be computed. Let \(t=f({t_1},\dots ,{t_{n}})\) for some \(f\in \mathcal {F}\) and terms \({t_1},\dots ,{t_{n}}\), and suppose that for each \(g\prec f\) we have a function \(F_g:({\mathcal {T}}^{\star })^{m}\rightarrow {\mathcal {T}}^{\star }\) where \(m=\mathsf {ar}(g)\) that satisfies

Suppose, in addition, that we have a function \(G_{{t_1},\dots ,{t_{n}}}:({\mathcal {T}}^{\star })^{n}\rightarrow {\mathcal {T}}^{\star }\) satisfying

Here \(\prec _{k,\tau (f)}\) abbreviates the \(\tau (f)\)-extension of the approximation \(\prec _k\) and \(\mathbf {t}={t_1},\dots ,{t_{n}}\).

Lemma 1

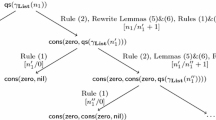

Given \({T_1},\dots ,{T_{n}}\in {\mathcal {T}}^{\star }\) define the function \(H^{F_{g\prec f},G_{\mathbf {t}},{T_1},\dots ,{T_{n}}}:\mathcal {T}\rightarrow {\mathcal {T}}^{\star }\) (where \(F_{g\prec f},G_{\mathbf {t}},{T_1},\dots ,{T_{n}}\) are treated as parameters) by subterm recursion as follows (suppressing parameters):

where [] denotes the empty tree and T[s] the subtree of T with root s. Then

for all \(s\prec _k t\), assuming (A) and (B).

Proof

By induction on \(\blacktriangleleft \). If \(s\prec _k t\) there are three possibilities. First, if \(s\preceq _k t_i\) for some i then either \(s=t_i\) or s is a child of \(t_i\) in \(\varPhi _k(t_i)\), and so \(H(s)=\varPhi _k(t_i)[s]=\varPhi _k(s)\). Otherwise, suppose \(s=g({s_1},\dots ,{s_{m}})\) for \(g\prec f\) and \(s_i\prec _k t\) for all i, by the induction hypothesis we obtain \(H(s_i)=\varPhi _k(s_i)\) and therefore

by assumption (A). Similarly, if \(s=f({s_1},\dots ,{s_{n}})\), where for all i, \(s_i\prec _k t\) and \((s_1,\dots ,s_n) \prec _{k,\tau (f)} (t_1,\dots ,t_n)\), then the induction hypothesis together with (B) yields

From this the lemma follows. \(\Box \)

Let t be a term and let \((S_i)_{i\in I}\) be a finite collection of trees. Then the tree \(t*\prod _{i\in I} S_i\) is the finite tree with root t and immediate subtrees \(S_i\).

Lemma 2

Define the function \(K^{F_{g\prec f},G_{\mathbf {t}}}:({\mathcal {T}}^{\star })^n\rightarrow {\mathcal {T}}^{\star }\) as follows:

where \(t' = f(\mathsf {rt}({T_1}),\ldots ,\mathsf {rt}({T_n}))\). Then assuming (A) and (B) we have

Proof

First, we remark that \(K^{F_{g\prec f},G_{\mathbf {t}}}\) is primitive recursive in \(F_{g\prec f}\) and \(G_{\mathbf {t}}\), since \(s\prec _k t\) is a primitive recursive predicate, and \(\prod _{s\prec _k t}\) a finite search bounded by some primitive recursive term, by definition of \(\prec _k\). Now, due to Lemma 1 we have

and this is just the tree whose branches are \(\succ _k\)-derivations \(t\succ _k s\succ _k \ldots \succ _k s_n\), which is exactly \(\varPhi _k(t)\). \(\Box \)

Lemma 3

Define function \(F_f^{F_{g\prec f}}:({\mathcal {T}}^{\star })^n\rightarrow {\mathcal {T}}^{\star }\) using the transfinite recursor over \(\subset _{\tau (f)}\) as follows

Then assuming (A), for all \({t_1},\dots ,{t_{n}}\) we have

Proof

By induction on \(\prec _{k,\tau (f)}\); suppose for all \((s_1,\dots ,s_n) \prec _{k,\tau (f)} (t_1,\dots ,t_n)\) the lemma is true. Then (B) holds for  and by Lemma 2 we have

and by Lemma 2 we have

This completes the induction step, so the result holds for all arguments \({t_1},\dots ,{t_{n}}\). \(\Box \)

Lemma 4

For each \(f\in \mathcal {F}\) there exists a function \(F_f:({\mathcal {T}}^{\star })^n\rightarrow {\mathcal {T}}^{\star }\) for \(n=\mathsf {ar}(f)\) satisfying

Proof

By Lemma 3 we construct \(F_f\) in terms of \(F_g\) for \(g\prec f\) assuming (A), and since \(\prec \) is well-founded this construction is well-defined and correct for all f. \(\Box \)

Theorem 3

There exists a function \(F:\mathcal {T}\rightarrow {\mathcal {T}}^{\star }\) primitive recursive in \(\mathsf {TR}_{\subset _{\tau (f)}}\) for \(f\in \mathcal {F}\) such that

for all terms t.

Proof

Define F using subterm recursion as

Then theorem follows by Lemma 4 and induction over the structure of t. \(\Box \)

3.3 Derivational Complexity

Let \(|{\cdot } |:{\mathcal {T}}^{\star }\rightarrow {\mathbb {N}}\) denote the recursive function which returns the length of the longest branch of trees in \({\mathcal {T}}^{\star }\).

Theorem 4

Suppose that the TRS \(\mathcal {R}\) is compatible with RPO for some suitable status function \(\tau \). Then its derivational complexity is bounded by a function primitive recursive in \(\mathsf {TR}_{\subset _{\tau (f)}}\) for \(f\in \mathcal {F}\).

Proof

By Theorem 2, if \(\mathcal {R}\) is compatible with RPO then it is compatible with \(\succ _k\) for some k, and so by Theorem 3 \(\rightarrow _\mathcal {R}\) derivations from t are contained in the tree F(t), where F is primitive recursive in the \(\mathsf {TR}_{\subset _{\tau (f)}}\). In particular, \({{\mathrm{\mathsf {dh}}}}(t,\mathrel {\mathrel {\rightarrow }_{\mathcal {R}}})\leqslant |{F(t)} |\), and therefore

which is primitive recursive in F since we can bound the search \(|{t}|\le n\) because we only need to search over a finite number of variables. \(\Box \)

We can now re-derive, in a completely uniform way, some of the well-known complexity results concerning recursive path orders. To do this, first let \(\mathsf {TR}_{\mathsf {mul}(n)}\) denote multiset recursion of lowest type over tuples \((x_1,\ldots ,x_n):{\mathbb {N}}^n\) of size n, and \(\mathsf {TR}_{\mathsf {lex}(n)}\) lexicographic recursion. Then we have the following.

Lemma 5

-

(a)

\(\mathsf {TR}_{\mathsf {mul}(n)}\) is definable from the Gödel recursor of lowest type;

-

(b)

\(\mathsf {TR}_{\mathsf {lex}(n)}\) is definable from the Gödel type 1 recursor.

Proof

Part (a) is straightforward, as one can easily find a (primitive recursive) encoding of \({\mathbb {N}}^n\) into \({\mathbb {N}}\) that preserves the multiset order.

For (b) we use induction on n. It’s clear that \(\mathsf {TR}_{\mathsf {lex}(1)}\) is just primitive recursion in the usual sense. Now, assuming that \(\mathsf {TR}_{\mathsf {lex}(n-1)}\) has been defined, use this to construct the functional \(h^H:{\mathbb {N}}\rightarrow ({\mathbb {N}}\rightarrow {\mathbb {N}}^{n-1}\rightarrow {\mathbb {N}})\rightarrow {\mathbb {N}}^{n-1}\rightarrow {\mathbb {N}}\), parametrised by \(H:{\mathbb {N}}\rightarrow {\mathbb {N}}^{n-1}\rightarrow ({\mathbb {N}}\rightarrow {\mathbb {N}}^{n-1}\rightarrow {\mathbb {N}})\rightarrow {\mathbb {N}}\), and defined by

Now by unwinding definitions we see that the term \(\mathsf {R}_{1}^{h}:{\mathbb {N}}\rightarrow {\mathbb {N}}^{n-1}\rightarrow {\mathbb {N}}\) satisfies

where now \(\mathbf {x}=(x_1,\ldots ,x_n)\). But this is just recursion over \(\mathsf {lex}{(n)}\). \(\Box \)

Corollary 1

(Hofbauer [1]). If \(\mathcal {R}\) is compatible with MPO then \(\mathcal {R}\) has primitive recursive derivational complexity.

Proof

This follows from Lemma 5(a) and the observation that \(\mathsf {TR}_{\subset _\mathsf {mul}}\) is definable from \(\mathsf {TR}_{\mathsf {mul}}\) since \(({\mathcal {T}}^{\star },\subset )\) can be recursively encoded in \(({\mathbb {N}},<)\). \(\Box \)

Corollary 2

(Weiermann [2]). If \(\mathcal {R}\) is compatible with RPO then \(\mathcal {R}\) has multiply recursive derivational complexity.

Proof

This follows analogously to Corollary 1, this time using Lemma 5(b). The fact that type one functions definable from the Gödel level 1 recursor are multiply recursive is folklore, see e.g. [15]. \(\Box \)

4 Conclusion

The most important feature of our work is not the rederivation of known complexity bounds, but in the manner in which we were able to do this. By constructing a concrete realising term F as a computational analogue to Theorem 1 which computes finitary \(\succ _k\)-derivation trees, we provided a bridge which relates the proof-theoretic complexity of well-founded recursive path orders to the derivational complexity of rewrite systems compatible with these orders.

A crucial point that we want to explore in future work is that our realising term is uniformly dependent on the parameters of the recursive path order used to prove termination, along with the size of the rewrite system, and any restriction in these parameters will cause a corresponding restriction in the complexity of F. Therefore a further, more detailed analysis of the structure of the realiser should enable us to obtain more refined complexity bounds.

For example, it follows from Weiermann’s original derivational complexity analysis of the lexicographic path order that the induced multiple recursive bound allows parametrisation in the maximal arity of the function symbols, cf. [2], see also [16, Chap. 8]. Similar results follow from Hofbauer’s analysis of the multiset path order, cf. [1]. We expect that these and similar finer characterisations of the derivational complexity induced by specific parameters of the recursive path orders can be obtained with relative ease in our context. More generally, we hope to extend these results and in particular derive new criteria on path orders which guarantee feasible complexity of rewrite systems.

As another example, one could study restricted variants of the lexicographic lifting on tuples which do not require type 1 recursion to define the corresponding recursor, giving us strengthenings of the multiset path order which allow us to prove interesting closure properties for the primitive recursive functions, an idea initiated by Cichon and Weiermann in [17].

References

Hofbauer, D.: Termination proofs by multiset path orderings imply primitive recursive derivation lengths. TCS 105, 129–140 (1992)

Weiermann, A.: Termination proofs for term rewriting systems with lexicographic path ordering imply multiply recursive derivation lengths. TCS 139, 355–362 (1995)

Buchholz, W.: Proof-theoretic analysis of termination proofs. APAL 75, 57–65 (1995)

Parsons, C.: On a number theoretic choice schema and its relation to induction. In: Proceedings of the Intuitionism and Proof Theory, pp. 459–473 (1970)

Baader, F., Nipkow, T.: Term Rewriting and All That. Cambridge University Press, Cambridge (1998)

Moser, G., Weiermann, A.: Relating derivation lengths with the slow-growing hierarchy directly. In: Nieuwenhuis, R. (ed.) RTA 2003. LNCS, vol. 2706, pp. 296–310. Springer, Heidelberg (2003)

Cichon, E.A.: Termination orderings and complexity characterisations. In: Aczel, P., Simmons, H., Wainer, S.S. (eds.) Proof Theory, pp. 171–193. Cambridge University Press, Cambridge (1992)

Touzet, H.: Encoding the hydra battle as a rewrite system. In: Brim, L., Gruska, J., Zlatuška, J. (eds.) MFCS 1998. LNCS, vol. 1450, p. 267. Springer, Heidelberg (1998)

Moser, G.: KBOs, ordinals, subrecursive hierarchies and all that. JLC (2015, to appear)

Figueira, D., Figueira, S., Schmitz, S., Schnoebelen, P.: Ackermannian and primitive-recursive bounds with dickson’s lemma. In: Proceedings of the 26th LICS, pp. 269–278. IEEE (2011)

Berardi, S., Oliva, P., Steila, S.: Proving termination with transition invariants of height \(\omega \). In: Proceedings of the 15th ICTCS, pp. 237–240 (2014)

Terese, : Term Rewriting Systems. Cambridge Tracks in Theoretical Computer Science. Cambridge University Press, Cambridge (2003)

Ferreira, M.C.F., Zantema, H.: Well-foundedness of term orderings. In: Lindenstrauss, N., Dershowitz, N. (eds.) CTRS 1994. LNCS, vol. 968, pp. 106–123. Springer, Heidelberg (1995)

Goubault-Larrecq, J.: Well-founded recursive relations. In: Fribourg, L. (ed.) CSL 2001 and EACSL 2001. LNCS, vol. 2142, p. 484. Springer, Heidelberg (2001)

Weiermann, A.: How is it that infinitary methods can be applied to finitary mathematics? Gödel’s T: a case study. JSL 63, 1348–1370 (1998)

Arai, T.: Some results on cut-elimination, provable well-orderings, induction, and reflection. APAL 95, 93–184 (1998)

Cichon, E.A., Weiermann, A.: Term rewriting theory for the primitive recursive functions. APAL 83, 199–223 (1997)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Moser, G., Powell, T. (2015). On the Computational Content of Termination Proofs. In: Beckmann, A., Mitrana, V., Soskova, M. (eds) Evolving Computability. CiE 2015. Lecture Notes in Computer Science(), vol 9136. Springer, Cham. https://doi.org/10.1007/978-3-319-20028-6_28

Download citation

DOI: https://doi.org/10.1007/978-3-319-20028-6_28

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20027-9

Online ISBN: 978-3-319-20028-6

eBook Packages: Computer ScienceComputer Science (R0)