Abstract

This paper describes extensions of the generalized summation-by-parts (GSBP) framework to the approximation of the second derivative with a variable coefficient and to time integration. GSBP operators for the second derivative lead to more efficient discretizations, relative to the classical finite-difference SBP approach, as they can require fewer nodes for a given order of accuracy. Similarly, for time integration, time-marching methods based on GSBP operators can be more efficient than those based on classical SBP operators, as they minimize the number of solution points which must be solved simultaneously. Furthermore, we demonstrate the link between GSBP operators and Runge-Kutta time-marching methods.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Time-marching Method

- First-derivative Operator

- Simultaneous Approximation Term (SAT)

- Pointwise Solution

- Dual Consistency

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

In this paper, we present an overview of generalized summation-by-parts (GSBP) operators [7] for the approximation of the second derivative with a variable coefficient and as time integration methods. Further details can be found in [2, 6]. The benefit of the GSBP approach is that it broadens the applicability of the SBP approach to a wider class of operators and provides a straightforward methodology to construct novel operators with the summation-by-parts (SBP) property. This enables the use of simultaneous approximation terms (SATs) for the weak imposition of initial and boundary conditions and inter-element/block coupling, leading to schemes that are provably consistent, conservative, and stable.

The GSBP framework extends the definition of SBP operators given by Kreiss and Scherer [13] to those that have a combination of (1) no repeating interior point operator, (2) nonuniform nodal distributions, and (3) operators that do not include one or both boundary nodes. The GSBP framework leads to operators that approximate the first derivative and that mimic the integration-by-parts (IBP) property of the first derivative in a similar way as [13] for such operators.

The vast majority of work on SBP-SAT schemes has been in the context of classical finite-difference SBP operators, typified by uniform nodal spacing, in computational space, and a repeating interior point operator (see the two review papers [8, 19]). There have been a number of extensions to more general operators; for example, Carpenter and Gottlieb [4] realized that the SBP property defined by Kreiss and Scherer [13] applies to a broad class of operators. They proved that using the Lagrangian interpolant, operators with the SBP property can be constructed on nearly arbitrary nodal distributions. The GSBP framework [7] unifies many of these extensions. In contrast, the extensions to the classical definition found in Carpenter et al. [5] and Abarbanel and Chertock [1] are not unified in the GSBP framework.

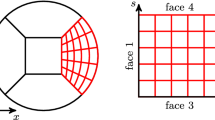

Using GSBP operators, derivatives can be approximated using a traditional finite-difference approach where h-refinement is performed by increasing the number of nodes in the mesh. Alternatively, the discretization can be implemented using an element approach where the domain is subdivided into a number of elements, each of which contains a fixed number of nodes, and h-refinement is carried out by increasing the number of elements. GSBP operators that have a repeating interior point operator can be applied in the traditional approach, while those that have a fixed nodal distribution can only be applied using an element approach.

Nordström and Lundquist [15, 18] have applied classical SBP operators as time integrators. They constructed fully discrete approximations that are provably consistent, conservative, and stable. The ideas of [15, 18] equally apply to GSBP operators, enabling the use of smaller operators for the same order of accuracy and hence more efficient time-marching methods.

The objectives of this paper are to present the extensions of the GSBP approach to the second derivative with a variable coefficient and to time integration.

2 Generalized Summation-by-Parts Operators for the Second Derivative

In this section, we review the construction of GSBP operators for the second derivative with a variable coefficient [6] that lead to stable and conservative schemes for partial differential equations (PDEs) that contain first, second, and mixed-derivative terms. GSBP operators for the first derivative are defined as follows [7]:

Definition 1 (First-Derivative GSBP Operator)

A matrix operator, \(\mathsf{D}_{1} \in \mathbb{R}^{N\times N}\), on a nodal distribution x, approximating the first derivative \(\frac{\partial \mathcal{U}} {\partial x}\), is a GSBP operator of order p if it is exact for the restriction of monomials up to degree p and

-

\(\mathsf{D}_{1} = \mathsf{H}^{-1}\mathsf{Q}\), where H is a symmetric positive-definite matrix;

-

\(\mathsf{Q} + \mathsf{Q}^{\mathrm{T}} = \mathsf{E}\); and

-

\(\mathsf{E} = \mathbf{s}_{\beta }\mathbf{s}_{\beta }^{\mathrm{T}} -\mathbf{s}_{\alpha }\mathbf{s}_{\alpha }^{\mathrm{T}}\);

where the projection vectors s β and s α are constructed such that \(\mathbf{s}_{\beta }^{\mathrm{T}}\mathbf{u}\) and \(\mathbf{s}_{\alpha }^{\mathrm{T}}\mathbf{u}\) are at least p + 1 order approximations to \(\mathcal{U}\left (\beta \right )\) and \(\mathcal{U}\left (\alpha \right )\), respectively.

We note that for diagonal-norm GSBP operators, \(\mathbf{v}^{\mathrm{T}}\mathsf{H}\mathbf{u}\) is an order 2p approximation to the L 2 inner product \(\int _{\alpha }^{\beta }\mathcal{V}\mathcal{U}\mathrm{d}x\) [7].

The application of first-derivative GSBP operators twice leads to stable and conservative schemes. However, for operators with a repeating interior point operator, they lead to an unnecessarily wide interior point operator, and in general, lead to approximations of the second-derivative that are one order less accurate than the first-derivative operator. Alternatively, we can construct distinct GSBP operators approximating the second derivative that are one order more accurate than the application of the first-derivative operator twice while retaining the ability to prove stability using the energy method—we denote such operators as order-matched. To maintain stability of the semi-discrete or fully-discrete forms of the class of PDEs of interest, certain relations need to exist between the operators used to discretize the first-derivative, second-derivative, and mixed-derivative terms. One approach is to use operators that are compatible with the first-derivative operator used to discretize mixed-derivative terms [17]. In this paper, we concentrate on diagonal-norm compatible and order-matched operators, since for the variable-coefficient case, it is unclear how to construct stable schemes using dense-norm compatible and order-matched operators (see Mattsson and Almquist [16] for a discussion and potential solution). To motivate the form of compatible and order-matched GSBP operators for the second derivative with a variable coefficient, consider the following decomposition of the application of first-derivative GSBP operators twice:

We construct compatible and order-matched GSBP operators as the application of the first-derivative operator twice plus corrective terms in order to increase the order of the resultant operator. These ideas lead to the following definition [6]:

Definition 2 (Compatible and Order-Matched Second-Derivative GSBP Operator)

A diagonal-norm order-matched GSBP operator, \(\mathsf{D}_{2}(\mathsf{B}) \in \mathbb{R}^{N\times N}\), approximating the second derivative \(\frac{\partial } {\partial x}\left (\mathcal{B}\frac{\partial \mathcal{U}} {\partial x} \right )\), is compatible with the first-derivative GSBP operator, D 1 of order p, on a nodal distribution x, if it is exact for the restriction of monomials up to degree p + 1 and is of the form

The matrices R i are symmetric negative semidefinite. Furthermore, the matrix \(\tilde{\mathsf{D}}_{1}\) is an approximation to the first derivative of at least order p + 1 and the matrix B is constructed from the restriction of the variable coefficient \(\mathcal{B}\) onto its diagonal. The remainder of the matrices are given in Definition 1.

Stability can be proven if all derivative operators share the same norm H, and the compatibility that is enforced on the second-derivative operator is with respect to the first-derivative operator used to approximate mixed-derivative terms.

Definition 2 leads to stable schemes if the operator satisfies an SBP property. In fact, GSBP operators are constructed such that they mimic the IBP property of the continuous PDE. For example, consider the linear convection-diffusion equation with a variable coefficient on the domain x ∈ [α, β]:

Applying the energy method to (3), i.e., multiplying by the solution, integrating in space, and using integration by parts, leads to

With appropriate boundary conditions, (4) can be used to show that the solution is bounded in terms of the data of the problem (for more information see [9, 10, 12]). The semi-discrete version of (3), using a diagonal-norm first-derivative GSBP operator and a compatible and order-matched GSBP operator, is given as

Applying the discrete energy method to (5), i.e., multiplying by \(\mathbf{u}^{\mathrm{T}}\mathsf{H}\) and adding the transpose of the product to itself, leads to

where \(\|\mathbf{u}\|_{\mathsf{H}}^{2} = \mathbf{u}^{\mathrm{T}}\mathsf{H}\mathbf{u}\). We see that (6) is mimetic of the continuous case (4) with the addition of a negative semidefinite term of the order of the discretization. Using appropriate SATs for the imposition of boundary conditions and inter-element coupling, (6) can be shown to be stable.

The main difficulty in deriving compatible and order-matched operators is ensuring that the R i are symmetric negative semidefinite, since it is necessary to ensure that the eigenvalues of N matrices are non-positive. This means that it is necessary to solve the eigenvalue problem of N matrices of size N × N to determine additional constraints. For compatible and order-matched operators, an alternative method is to construct the variable-coefficient operator from the constant-coefficient operator. This means that it is only necessary to solve the eigenvalue problem for one matrix. The resultant operator has the following form [6]:

where R c and \(\tilde{\mathsf{D}}_{1}\) are from the constant-coefficient operator. As an example, consider the approximation of the first and second derivative of order 3 on x ∈ [−1, 1] using 5 Chebyshev-Gauss quadrature nodes. This nodal distribution does not have nodes on the boundary of the domains and is given as, to 5 digits of precision, \(\mathbf{x} = \left [\begin{array}{ccccc} - 0.95106,& - 0.58779,&0.0,&0.58779,&0.95106 \end{array} \right ]\). The first-derivative GSBP operator has a norm matrix that is an order 6 approximation to the L 2 inner product; to 5 digits of precision, these operators are given by

The projection vectors used in the decomposition of E and to construct SATs (see Sect. 3 where this is shown for time integration) are given as

\(\mathbf{s}_{\beta }^{\mathrm{T}} = \left [\begin{array}{ccccc} 0.031677,& - 0.10191,&0.20000,& - 0.39252,&1.2628 \end{array} \right ],\)

\(\mathbf{s}_{\alpha }^{\mathrm{T}} = \left [\begin{array}{ccccc} 1.2628,& - 0.39252,&0.20000,& - 0.10191,&0.031677 \end{array} \right ].\) For the second derivative, the remaining matrices are given as

3 Time-Marching Methods Based on Generalized Summation-by-Parts Operators

This section describes the application of GSBP operators to the solution of initial value problems

This is an extension of the work presented in [15, 18] for time-marching methods based on classical SBP operators. It also draws on the concepts of dual-consistency and superconvergence presented in [11] for classical SBP operators.

The primary advantage of the GSBP approach in time is the significantly smaller number of solution points required per block for a given order of accuracy. With careful selection of SAT coefficients in a multiblock implementation, the pointwise solution within each block is decoupled from the solution in subsequent blocks. As a result, each block can be solved sequentially in time, though the pointwise solution within each block remains in general fully coupled. Thus, the reduced size of the operators possible with the GSBP approach can significantly improve the efficiency of the time integration.

Consider the application of a single-block classical SBP or GSBP time-marching method to the initial value problem (8):

where the second term on the right-hand side, the SAT penalty term, weakly enforces the initial condition. The most practical choice of SAT coefficient \(\sigma\) is − 1, which renders the temporal discretization dual consistent and L-stable [2]. In addition, if the norm associated with the GSBP operator is diagonal, then the discretization becomes algebraically stable [2]. This choice of SAT coefficient implies the superconvergence of the pointwise solution projected to the boundary at β, \(\mathbf{s}_{\beta }^{\mathrm{T}}\mathbf{y}_{\mathrm{d}}\), as well as linear functionals of the solution, \(\int _{\alpha }^{\beta }g(t)y(t)dt\), integrated with the norm of the discretization [2]. These properties all extend to the multiblock case with appropriate choice of interface SAT coefficients.

Time-marching methods based on classical SBP and GSBP operators are a subclass of Runge-Kutta (RK) methods, which are written as

with internal stage approximations:

where A and b are the coefficient matrices of the method, c is the abscissa, and h is the step size. With a dual consistent choice of SAT coefficients, classical SBP or GSBP temporal discretizations can be rearranged and written in this form. The pointwise solution mimics the RK stage approximations, and the projection of the pointwise solution to the boundary at β becomes the solution update [2]. The coefficient matrices of the equivalent RK scheme, written in terms of the SBP-SAT discretization (9), are [2]:

where \(\mathbf{c} = \frac{\mathbf{t}-\mathbb{1}\alpha } {h}\), \(h =\beta -\alpha\), and \(\mathbb{1} = [1,\ldots,1]^{\mathrm{T}}\).

This characterization of classical SBP and GSBP time-marching methods enables a direct comparison with traditional time-marching methods. It also enables common time-marching ideas to be transferred back into the GSBP realm, for example diagonally-implicit methods, where the pointwise solution within each block can be solved sequentially in time (See [2] for examples). It also highlights the fact that dual-consistent SBP and GSBP time-marching methods do not define a new class of methods. Nevertheless, the GSBP framework provides a relatively simple way of constructing high-order implicit RK schemes with high-stage order and L-stability. Furthermore, if the norm is diagonal then the resulting scheme will be algebraically stable [2].

As an example, consider dual-consistent time-marching methods based on classical SBP and Legendre-Gauss GSBP operators which are exact for third-order polynomials. The minimum number of solution points per block required for the diagonal and dense-norm classical SBP operators is 12 and 8, respectively. The rate of superconvergence obtained for these classical SBP time-marching methods is 6 and 4, respectively. In contrast, only 4 solution points per block are required for a Legendre-Gauss based GSBP operator to be exact for third-order polynomials. Furthermore, the rate of superconvergence obtained is 7 [2], higher than both of the classical SBP time-marching methods. This translates to significantly more efficient time integration.

The first derivative GSBP operator and diagonal norm of the 4-point Legendre-Gauss GSBP time-marching method discussed above are:

This is derived for the quadrature points \(\mathbf{t} = \left [\begin{array}{cccc} - 0.86114, & - 0.33998, & 0.33998, & 0.86114 \end{array} \right ]\), defined for the interval [−1, 1]. The equivalent RK scheme has the coefficient matrices:

with abscissa: \(\mathbf{c} = \left [\begin{array}{cccc} 0.069432, & 0.33001, & 0.66999, & 0.93057 \end{array} \right ]\). This RK scheme differs from the well-known Kuntzmann-Butcher Gauss RK methods [3, 14] which are one order higher, but forfeit L-stability.

4 Conclusions

The developments reviewed in this paper extend the GSBP approach to the second derivative with a variable coefficient as well as to time marching. The benefit of the GSBP approach, relative to the classical SBP approach, is that for a given order of accuracy, operators that require fewer nodes can be constructed. This leads to more efficient methods.

References

S.S. Abarbanel, A.E. Chertock, A. Yefet, Strict stability of high-order compact implicit finite-difference schemes: the role of boundary conditions for hyperbolic PDES, I. J. Comput. Phys. 160, 42–66 (2000)

P.D. Boom, D.W. Zingg, High-order implicit time-marching methods based on generalized summation-by-parts operators. SIAM J. Sci. Comput. (accepted)

J.C. Butcher, Implicit Runge-Kutta processes. Math. Comput. 18(85), 50–64 (1964)

M.H. Carpenter, D. Gottlieb, Spectral methods on arbitrary grids. J. Comput. Phys. 129(1), 74–86 (1996)

M.H. Carpenter, D. Gottlieb, S. Abarbanel, Time-stable boundary conditions for finite-difference schemes solving hyperbolic systems: methodology and application to high-order compact schemes. J. Comput. Phys. 111(2), 220–236 (1994)

D.C. Del Rey Fernández, D.W. Zingg, Generalized summation-by-parts operators for the second derivative with a variable coefficient. SIAM J. Sci. Comput. (accepted)

D.C. Del Rey Fernández, P.D. Boom, D.W. Zingg, A generalized framework for nodal first derivative summation-by-parts operators. J. Comput. Phys. 266(1), 214–239 (2014)

D.C. Del Rey Fernández, J.E. Hicken, D.W. Zingg, Review of summation-by-parts operators with simultaneous approximation terms for the numerical solution of partial differential equations. Comput. Fluids 95(22), 171–196 (2014)

B. Gustafsson, High Order Difference Methods for Time Dependent PDE (Springer, Berlin, 2008)

B. Gustafsson, H.O. Kreiss, J. Oliger, Time-Dependent Problems and Difference Methods, 2nd edn. Pure and Applied Mathematics (Wiley, New York, 2013)

J.E. Hicken, D.W. Zingg, Superconvergent functional estimates from summation-by-parts finite-difference discretizations. SIAM J. Sci. Comput. 33(2), 893–922 (2011)

H.O. Kreiss, J. Lorenz, Initial-Boundary Value Problems and the Navier-Stokes Equations. Classics in Applied Mathematics, vol. 47 (SIAM, Philadelphia, 2004)

H.O. Kreiss, G. Scherer, Finite element and finite difference methods for hyperbolic partial differential equations, in Mathematical Aspects of Finite Elements in Partial Differential Equations (Academic, New York/London, 1974), pp. 195–212

J. Kuntzmann, Neuere Entwicklungen der Methode von Runge und Kutta. ZAMM J. Appl. Math. Mech. / Z. Angew. Math. Mech. 41(S1), T28–T31 (1961)

T. Lundquist, J. Nordström, The SBP-SAT technique for initial value problems. J. Comput. Phys. 270(1), 86–104 (2014)

K. Mattsson, M. Almquist, A solution to the stability issues with block norm summation by parts operators. J. Comput. Phys. 15, 418–442 (2013)

K. Mattsson, M. Svärd, M. Shoeybi, Stable and accurate schemes for the compressible Navier-Stokes equations. J. Comput. Phys. 227(4), 2293–2316 (2008)

J. Nordström, T. Lundquist, Summation-by-parts in time. J. Comput. Phys. 251, 487–499 (2013)

M. Svärd, J. Nordström, Review of summation-by-parts schemes for initial-boundary-value-problems. J. Comput. Phys. 268(1), 17–38 (2014)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Del Rey Fernández, D.C., Boom, P.D., Zingg, D.W. (2015). Generalized Summation by Parts Operators: Second Derivative and Time-Marching Methods. In: Kirby, R., Berzins, M., Hesthaven, J. (eds) Spectral and High Order Methods for Partial Differential Equations ICOSAHOM 2014. Lecture Notes in Computational Science and Engineering, vol 106. Springer, Cham. https://doi.org/10.1007/978-3-319-19800-2_17

Download citation

DOI: https://doi.org/10.1007/978-3-319-19800-2_17

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-19799-9

Online ISBN: 978-3-319-19800-2

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)