Abstract

This chapter is organized as follows. Section 5.1 introduces Kriging, which is also called Gaussian process (GP) or spatial correlation modeling. Section 5.2 details so-called ordinary Kriging (OK), including the basic Kriging assumptions and formulas assuming deterministic simulation. Section 5.3 discusses parametric bootstrapping and conditional simulation for estimating the variance of the OK predictor. Section 5.4 discusses universal Kriging (UK) in deterministic simulation. Section 5.5 surveys designs for selecting the input combinations that give input/output data to which Kriging metamodels can be fitted; this section focuses on Latin hypercube sampling (LHS) and customized sequential designs. Section 5.6 presents stochastic Kriging (SK) for random simulations. Section 5.7 discusses bootstrapping with acceptance/rejection for obtaining Kriging predictors that are monotonic functions of their inputs. Section 5.8 discusses sensitivity analysis of Kriging models through functional analysis of variance (FANOVA) using Sobol’s indexes. Section 5.9 discusses risk analysis (RA) or uncertainty analysis (UA). Section 5.10 discusses several remaining issues. Section 5.11 summarizes the major conclusions of this chapter, and suggests topics for future research. The chapter ends with solutions of exercises, and a long list of references.


5.1 Introduction

In the preceding three chapters we focussed on linear regression metamodels (surrogates, emulators); namely, low-order polynomials. We fitted those models to the input/output (I/O) data of the—either local or global—experiment with the underlying simulation model; this simulation model may be either deterministic or random. We used these metamodels for the explanation of the simulation model’s behavior, and for the prediction of the simulation output for input combinations that were not yet simulated.

In the present chapter, we focus on Kriging metamodels. The name Kriging refers to Danie Krige (1919–2013), who was a South African mining engineer. In the 1960s Krige’s empirical work in geostatistics (see Krige 1951) was formalized by the French mathematician Georges Matheron (1930–2000), using GPs; see Matheron (1963).

Note: A standard textbook on Kriging in geostatistics involving “spatial data” is Cressie (1993); more recent books are Chilès and Delfiner (2012) and Stein (1999).

Kriging was introduced as a metamodel for deterministic simulation models or “computer models” in Sacks et al. (1989). Simulation models have k-dimensional input combinations where k is a given positive integer, whereas geostatistics considers only two or three dimensions.

Note: Popular textbooks on Kriging in computer models are Forrester et al. (2008) and Santner et al. (2003). A popular survey article is Simpson et al. (2001).

Kriging for stochastic (random) simulation models was briefly discussed in Mitchell and Morris (1992). Next, Van Beers and Kleijnen (2003) details Kriging in such simulation models, simply replacing the deterministic simulation output by the average computed from the replications that are usual in stochastic simulation. Although Kriging has not yet been frequently applied in stochastic simulation, we believe that the track record Kriging achieved in deterministic simulation holds promise for Kriging in stochastic simulation; also see Kleijnen (2014).

Note: Kleijnen (1990) introduced Kriging into the discrete-event simulation community. A popular review article is Kleijnen (2009). The classic discussion of Kriging in stochastic simulation is Ankenman et al. (2010). More references will follow in the next sections of this chapter.

Kriging is also studied in machine learning. A popular textbook is Rasmussen and Williams (2006). Web sites on GPs in machine learning are

http://www.gaussianprocess.org/

http://ml.dcs.shef.ac.uk/gpss/

Besides the Anglo-Saxon literature, there is a vast French literature on Kriging, inspired by Matheron’s work; see

http://www.gdr-mascotnum.fr/documents.html.

Typically, Kriging models are fitted to data that are obtained for larger experimental areas than the areas used in low-order polynomial regression metamodels; i.e., Kriging models are global instead of local. Kriging models are used for prediction. The final goals are sensitivity analysis and risk analysis (as we shall see in this chapter) and optimization (as we shall see in the next chapter); these goals were also discussed in Sect. 1.2.

5.2 Ordinary Kriging (OK) in Deterministic Simulation

In this section we focus on OK, which is the simplest form of universal Kriging (UK), as we shall see in Sect. 5.4. OK is popular and successful in practical deterministic simulation, as many publications report.

Note: These publications include Chen et al. (2006), Martin and Simpson (2005), and Sacks et al. (1989). Recently, Mehdad and Kleijnen (2015a) also reports that in practice OK is likely to give better predictors than UK.

In Sect. 5.2.1 we present the basics of OK; in Sect. 5.2.2 we discuss the problems caused by the estimation of the (hyper)parameters of OK.

5.2.1 OK Basics

OK assumes the following metamodel:

$$ \displaystyle{ y(\mathbf{x}) =\mu +M(\mathbf{x}) } $$(5.1)

where μ is the constant mean E[y(x)] in the given k-dimensional experimental area, and M(x) is the additive noise that forms a Gaussian (multivariate normal) stationary process with zero mean. By definition, a stationary process has a constant mean, a constant variance, and covariances that depend only on the distance between the input combinations (or “points” in \( \mathbb{R}^{k} \)) x and \( \mathbf{x}^{{\prime}} \) (stationary processes were also defined in Definition 3.2).

Because different Kriging publications use different symbols for the same variable, we now discuss our symbols. We use x instead of d, because the Kriging literature uses x for the combination of inputs, even though the design of experiments (DOE) literature and the preceding chapters use d for the combination of design variables (or factors); d determines products such as \( x_{j}x_{j^{{\prime}}} \) with \( j,j^{{\prime}} = 1,\ldots,k \). The constant mean μ in Eq. (5.1) is also denoted by \( \beta _{0} \); also see the section on UK (Sect. 5.4). Ankenman et al. (2010) calls M(x) the extrinsic noise to distinguish it from the intrinsic noise in stochastic simulation. OK assumes that the simulation output (say) w is deterministic. We distinguish between y (metamodel output) and w (simulation model output), whereas most Kriging publications do not distinguish between y and w (we also distinguished between y and w in the preceding chapters on linear regression; an example of our use of y and w is the predictor formula in Eq. (5.2) below). We try to stick to the symbols used in the preceding chapters; e.g., to denote the number of dimensions we use k (not d, which is used in some Kriging publications), \( \mathbf{\varSigma } \) (not Γ) to denote a covariance matrix, and \( \boldsymbol{\sigma } \) (not γ or \( \mathbf{\varSigma }(\mathbf{x}_{0},\cdot ) \)) to denote a vector with covariances.

OK with its constant mean μ does not imply a flat response surface. Actually, OK assumes that M(x) has positive covariances so \( \mathrm{cov}[y(\mathbf{x}),y(\mathbf{x}^{{\prime}})] \) > 0. Consequently, if it happens that y(x) > μ, then \( E[y(\mathbf{x}^{{\prime}})] \) > μ is “very likely” (i.e., the probability is greater than 0.50)—especially if x and \( \mathbf{x}^{{\prime}} \) lie close in \( \mathbb{R}^{k} \). However, a linear regression metamodel with white noise implies cov\( [y(\mathbf{x}),y(\mathbf{x}^{{\prime}})] = 0 \); see the definition of white noise that we gave in Definition 2.3.

OK uses a linear predictor. So let \( \mathbf{w} = (w(\mathbf{x}_{1}),\ldots,w(\mathbf{x}_{n}))^{{\prime}} \) denote the n observed values of the simulation model at the n so-called old points (in machine learning these old points are called the “training set”). OK computes the predictor \( \widehat{y}(\mathbf{x}_{0}) \) for a new point \( \mathbf{x}_{0} \) as a linear function of the n observed outputs at the old points:

$$ \displaystyle{ \widehat{y}(\mathbf{x}_{0}) =\sum _{i=1}^{n}\lambda _{i}w_{i} = \boldsymbol{\lambda }^{{\prime}}\mathbf{w} } $$(5.2)

where \( w_{i} = f_{\mathrm{sim}}(\mathbf{x}_{i}) \) and \( f_{\mathrm{sim}} \) denotes the mathematical function that is defined by the simulation model itself (also see Eq. (2.6)); the weight \( \lambda _{i} \) decreases with the distance between the new input combination \( \mathbf{x}_{0} \) and the old combination \( \mathbf{x}_{i} \), as we shall see in Eq. (5.6); i.e., the weights \( \boldsymbol{\lambda }^{{\prime}} = (\lambda _{1},\ldots,\lambda _{n}) \) are not constants (whereas \( \boldsymbol{\beta } \) in linear regression remains constant). Notice that \( \mathbf{x}_{i} = (x_{i;j}) \) (i = 1, …, n; j = 1, …, k) so \( \mathbf{X}^{{\prime}} = (\mathbf{x}_{1},\ldots,\mathbf{x}_{n}) \) is a k × n matrix.

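To determine the optimal values for the weights \( \boldsymbol{\lambda } \) in Eq. (5.2), we need to specify a criterion for OK. In fact, OK (like other types of Kriging) uses the best linear unbiased predictor (BLUP), which (by definition) minimizes the mean squared error (MSE) of the predictor:

$$ \displaystyle{ \min _{\boldsymbol{\lambda }}\mbox{ MSE}[\widehat{y}(\mathbf{x}_{0})] =\min _{\boldsymbol{\lambda }}E[\{\widehat{y}(\mathbf{x}_{0}) - y(\mathbf{x}_{0})\}^{2}]; } $$(5.3)

moreover, the predictor must be unbiased so

$$ \displaystyle{ E[\widehat{y}(\mathbf{x}_{0})] = E[y(\mathbf{x}_{0})]. } $$(5.4)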

This bias constraint implies that if the new point coincides with one of the old points, then the predictor must be an exact interpolator; i.e., \( \widehat{y}(\mathbf{x}_{i}) = w(\mathbf{x}_{i}) \) with i = 1, …, n (also see Exercise 5.2 below).

Note: Linear regression uses as criterion the sum of squared residuals (SSR), which gives the least squares (LS) estimator. This estimator is not an exact interpolator, unless n = q where q denotes the number of regression parameters; see Sect. 2.2.1.

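It can be proven that the solution of the constrained minimization problem defined by Eqs. (5.3) and (5.4) implies that \( \boldsymbol{\lambda } \) must satisfy the following condition where \( \mathbf{1} = (1,\ldots,1)^{{\prime}} \) is an n-dimensional vector with all elements equal to 1 (a more explicit notation would be \( \mathbf{1}_{n} \)):

$$ \displaystyle{ \sum _{i=1}^{n}\lambda _{i} = \mathbf{1}^{{\prime}}\boldsymbol{\lambda } = 1. } $$(5.5)

Furthermore, it can be proven that the optimal weights are

$$ \displaystyle{ \boldsymbol{\lambda }_{o} = \mathbf{\varSigma }^{-1}\left [\boldsymbol{\sigma }(\mathbf{x}_{0}) + \mathbf{1}\,\frac{1 -\mathbf{1}^{{\prime}}\mathbf{\varSigma }^{-1}\boldsymbol{\sigma }(\mathbf{x}_{0})} {\mathbf{1}^{{\prime}}\mathbf{\varSigma }^{-1}\mathbf{1}} \right ] } $$(5.6)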

where \( \mathbf{\varSigma } = (\mathrm{cov}(y_{i},y_{i^{{\prime}}})) \) (with \( i,i^{{\prime}} = 1,\ldots,n \)) denotes the n × n symmetric and positive definite matrix with the covariances between the metamodel’s “old” outputs (i.e., outputs of input combinations that have already been simulated), and \( \boldsymbol{\sigma }(\mathbf{x}_{0}) = (\mathrm{cov}(y_{i},y_{0})) \) denotes the n-dimensional vector with the covariances between the metamodel’s n “old” outputs \( y_{i} \) and \( y_{0} \), where \( y_{0} \) denotes the metamodel’s new output. Equation (5.1) implies \( \mathbf{\varSigma } = \mathbf{\varSigma }_{M} \), but we suppress the subscript M until we really need it; see the section on stochastic simulation (Sect. 5.6). Throughout this book, we use Greek letters to denote unknown parameters (such as covariances), and bold upper case letters for matrices and bold lower case letters for vectors.

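Finally, it can be proven (see, e.g., Lin et al. 2004) that Eqs. (5.1), (5.2), and (5.6) together imply

$$ \displaystyle{ \widehat{y}(\mathbf{x}_{0}) =\mu +\boldsymbol{\sigma }(\mathbf{x}_{0})^{{\prime}}\mathbf{\varSigma }^{-1}(\mathbf{w} -\mu \mathbf{1}). } $$(5.7)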

We point out that this predictor varies with \( \boldsymbol{\sigma }(\mathbf{x}_{0}) \); given are the Kriging parameters μ and \( \mathbf{\varSigma } \) (where \( \mathbf{\varSigma } \) depends on the given old input data X) and the old simulation output w(X). So we might replace \( \widehat{y}(\mathbf{x}_{0}) \) by \( \widehat{y}(\mathbf{x}_{0}\vert \mu,\mathbf{\varSigma },\mathbf{X},\mathbf{w}) \) or \( \widehat{y}(\mathbf{x}_{0}\vert \mu,\mathbf{\varSigma },\mathbf{X}) \), because the output w of a deterministic simulation model is completely determined by X, but we do not use this unwieldy notation.

Exercise 5.1

Is the conditional expected value of the predictor in Eq. (5.7) smaller than, equal to, or larger than the unconditional mean μ if that condition is as follows: \( w_{1} >\mu \), \( w_{2} =\mu \), …, \( w_{n} =\mu \)?

Exercise 5.2

Use Eq. (5.7) to derive the predictor if the new point is an old point, so \( \mathbf{x}_{0} = \mathbf{x}_{i} \).

The Kriging predictor’s gradient \( \nabla (\widehat{y}) = (\partial \widehat{y}/\partial x_{1},\ldots,\partial \widehat{y}/\partial x_{k}) \) results from Eq. (5.7); details are given in Lophaven et al. (2002, Eq. 2.18). Gradients will be used in Sect. 5.7 and in the next chapter (on simulation optimization). We should not confuse \( \nabla (\widehat{y}) \) (the gradient of the Kriging metamodel) and ∇(w), the gradient of the underlying simulation model. Sometimes we can indeed compute ∇(w) in deterministic simulation (or estimate ∇(w) in stochastic simulation); we may then use ∇(w) (or \( \widehat{\nabla }(w) \)) to estimate better Kriging metamodels; see Qu and Fu (2014), Razavi et al. (2012), Ulaganathan et al. (2014), and Viana et al. (2014)’s references numbered 52, 53, and 54 (among the 221 references in that article).

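If we let \( \tau ^{2} \) denote the variance of y (where y was defined in Eq. (5.1)), then the MSE of the optimal predictor \( \widehat{y}(\mathbf{x}_{0}) \) (where \( \widehat{y}(\mathbf{x}_{0}) \) was defined in Eq. (5.7)) can be proven to be

$$ \displaystyle{ \mbox{ MSE}[\widehat{y}(\mathbf{x}_{0})] =\tau ^{2} -\boldsymbol{\sigma }(\mathbf{x}_{0})^{{\prime}}\mathbf{\varSigma }^{-1}\boldsymbol{\sigma }(\mathbf{x}_{0}) + \frac{[1 -\mathbf{1}^{{\prime}}\mathbf{\varSigma }^{-1}\boldsymbol{\sigma }(\mathbf{x}_{0})]^{2}} {\mathbf{1}^{{\prime}}\mathbf{\varSigma }^{-1}\mathbf{1}}. } $$(5.8)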

Because the predictor \( \widehat{y}(\mathbf{x}_{0}) \) is unbiased, this MSE equals the predictor variance—which is often called the Kriging variance. We denote this variance by \( \sigma _{OK}^{2} \), the variance of the OK predictor. Analogously to the comment we made on Eq. (5.7), we now point out that this MSE depends on \( \boldsymbol{\sigma }(x_{0}) \) only because the other factors in Eq. (5.8) are fixed by the old I/O data (we shall use this property when selecting a new point in sequential designs; see Sect. 5.5.2).
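To make Eqs. (5.6) through (5.8) concrete, the following Python sketch computes the OK weights, the predictor, and its MSE for known Kriging parameters. It is only an illustration under stated assumptions: it relies on NumPy, assumes a Gaussian correlation function, and uses made-up values for μ, \( \tau ^{2} \), and θ; the function names `gauss_corr` and `ok_predict` are hypothetical and do not come from any Kriging package.

```python
import numpy as np

def gauss_corr(A, B, theta):
    """Correlations exp(-sum_j theta_j * h_j**2) between the rows of A and B."""
    diff = A[:, None, :] - B[None, :, :]
    return np.exp(-np.sum(theta * diff**2, axis=2))

def ok_predict(x0, X, w, mu, tau2, theta):
    """Return (predictor, MSE, weights) at the new point x0 for known mu, tau2, theta."""
    ones = np.ones(len(w))
    Sigma = tau2 * gauss_corr(X, X, theta)                    # n x n covariance matrix
    sigma0 = tau2 * gauss_corr(X, x0[None, :], theta)[:, 0]   # covariances with the new output
    Si_sigma0 = np.linalg.solve(Sigma, sigma0)                # Sigma^{-1} sigma(x0)
    Si_ones = np.linalg.solve(Sigma, ones)                    # Sigma^{-1} 1
    slack = 1.0 - ones @ Si_sigma0
    weights = Si_sigma0 + Si_ones * slack / (ones @ Si_ones)         # Eq. (5.6)
    y_hat = mu + Si_sigma0 @ (w - mu * ones)                         # Eq. (5.7)
    mse = tau2 - sigma0 @ Si_sigma0 + slack**2 / (ones @ Si_ones)    # Eq. (5.8)
    return y_hat, mse, weights

# Illustrative data: three old points in one dimension (assumed values).
X = np.array([[0.0], [0.5], [1.0]])
w = np.array([1.0, 2.0, 1.5])
theta = np.array([5.0])

y_new, mse_new, lam = ok_predict(np.array([0.25]), X, w, mu=1.5, tau2=1.0, theta=theta)
y_old, mse_old, _ = ok_predict(X[1], X, w, mu=1.5, tau2=1.0, theta=theta)
print(y_new, mse_new, lam.sum())   # the weights sum to one, as Eq. (5.5) requires
print(y_old, mse_old)              # at an old point: the old output, with numerically zero MSE
```

The sketch solves linear systems instead of inverting Σ explicitly, which is the usual numerically safer choice; with real data, μ, \( \tau ^{2} \), and θ would have to be estimated first.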

Exercise 5.3

Use Eq. (5.8) to derive that \( \sigma _{OK}^{2} = 0 \) if \( \mathbf{x}_{0} \) equals one of the points already simulated; e.g., \( \mathbf{x}_{0} = \mathbf{x}_{1} \).

Because \( \sigma _{OK}^{2} \) is zero if \( \mathbf{x}_{0} \) is an old point, the function \( \sigma _{OK}^{2}(\mathbf{x}_{0}) \) has many local minima if n > 1, and has many local maxima too; i.e., \( \sigma _{OK}^{2}(\mathbf{x}_{0}) \) is nonconcave. Results of many experiments suggest that \( \sigma _{OK}^{2}(\mathbf{x}_{0}) \) has local maxima at \( \mathbf{x}_{0} \) approximately halfway between old input combinations \( \mathbf{x}_{i} \); see part c of Fig. 5.2 below. We shall return to this characteristic in Sect. 6.3.1 on “efficient global optimization” (EGO).

Obviously, the optimal weight vector \( \boldsymbol{\lambda }_{o} \) in Eq. (5.6) depends on the covariances (or equivalently the correlations) between the outputs of the Kriging metamodel in Eq. (5.1). Kriging assumes that these correlations are determined by the “distance” between the input combinations. In geostatistics, Kriging often uses the Euclidean distance (say) h between the inputs \( \mathbf{x}_{g} \) and \( \mathbf{x}_{g^{{\prime}}} \) with \( g,g^{{\prime}} = 0,1,\ldots,n \) (so g and \( g^{{\prime}} \) range between 0 and n and consequently \( \mathbf{x}_{g} \) and \( \mathbf{x}_{g^{{\prime}}} \) cover both the new point and the n old points):

$$ \displaystyle{ h_{g;g^{{\prime}}} =\Vert \mathbf{x}_{g} -\mathbf{x}_{g^{{\prime}}}\Vert _{2} } $$(5.9)

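where \( \Vert \mathbf{\bullet }\Vert _{2} \) denotes the \( L_{2} \) norm. This assumption means that

$$ \displaystyle{ \rho [y(\mathbf{x}_{g}),y(\mathbf{x}_{g^{{\prime}}})] = \frac{\sigma (h_{g;g^{{\prime}}})} {\tau ^{2}} } $$(5.10)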

which is called an isotropic correlation function; see Cressie (1993, pp. 61–62).

In simulation, however, we often assume that the Kriging metamodel has a correlation function (which implies a covariance function) that is not isotropic, but is anisotropic; e.g., in a separable anisotropic correlation function we replace Eq. (5.10) by the product of k one-dimensional correlation functions:

$$ \displaystyle{ \rho [y(\mathbf{x}_{g}),y(\mathbf{x}_{g^{{\prime}}})] =\prod _{j=1}^{k}\rho (x_{g;j},x_{g^{{\prime}};j}). } $$(5.11)

Because Kriging assumes a stationary process, the correlations in Eq. (5.11) depend only on the distances in the k dimensions:

$$ \displaystyle{ h_{g;g^{{\prime}};j} =\vert x_{g;j} - x_{g^{{\prime}};j}\vert \quad (j = 1,\ldots,k)\ (g,g^{{\prime}} = 0,1,\ldots,n); } $$(5.12)

also see Eq. (5.9). So, \( \rho (x_{g;j},x_{g^{{\prime}};j}) \) in Eq. (5.11) reduces to \( \rho (h_{g;g^{{\prime}};j}) \). Obviously, if the simulation model has a single input so k = 1, then the isotropic and the anisotropic correlation functions are identical. Furthermore, Kriging software standardizes (scales, codes, normalizes) the original simulation inputs and outputs, which affects the distances h; also see Kleijnen and Mehdad (2013).

Note: Instead of correlation functions, geostatisticians use variograms, covariograms, and correlograms; see the literature on Kriging in geostatistics in Sect. 5.1.

There are several types of correlation functions that give valid (positive definite) covariance matrices for stationary processes; see the general literature on GPs in Sect. 5.1, especially Rasmussen and Williams (2006, pp. 80–104). Geostatisticians often use so-called Matérn correlation functions, which are more complicated than the following three popular functions—displayed in Fig. 5.1 for a single input with parameter \( \theta = 0.5 \):

- Linear: \( \rho (h) =\max (1 -\theta h,0) \)

- Exponential: \( \rho (h) =\exp (-\theta h) \)

- Gaussian: \( \rho (h) =\exp (-\theta h^{2}) \)

Note: It is straightforward to prove that the Gaussian correlation function has its point of inflection at \( h = 1/\sqrt{2\theta } \), so in Fig. 5.1 this point lies at h = 1. Furthermore, the linear correlation function gives correlations ρ(h) that are smaller than the exponential function gives, for \( \theta \) > 0 and h > 0; Fig. 5.1 demonstrates this behavior for \( \theta = 0.5 \). Finally, the linear correlation function gives ρ(h) smaller than the Gaussian function does, for (roughly) \( \theta \) > 0.45 and h > 0. There are also correlation functions ρ(h) that do not monotonically decrease as the lag h increases; this is called a “hole effect” (see http://www.statistik.tuwien.ac.at/public/dutt/vorles/geost_03/node80.html).
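The three correlation functions and two of the properties stated in the preceding note can be checked numerically with a few lines of Python. This is a minimal sketch using only the standard library; the function names and the finite-difference helper `second_derivative` are hypothetical, and \( \theta = 0.5 \) is the value used for Fig. 5.1.

```python
import math

def corr_linear(h, theta):
    return max(1.0 - theta * h, 0.0)

def corr_exponential(h, theta):
    return math.exp(-theta * h)

def corr_gaussian(h, theta):
    return math.exp(-theta * h**2)

def second_derivative(f, h, eps=1e-4):
    """Central finite-difference approximation of f''(h)."""
    return (f(h + eps) - 2.0 * f(h) + f(h - eps)) / eps**2

theta = 0.5
for h in (0.0, 0.5, 1.0, 2.0, 3.0):
    print(h, corr_linear(h, theta), corr_exponential(h, theta), corr_gaussian(h, theta))

# The Gaussian function changes curvature where its second derivative vanishes.
h_inflection = 1.0 / math.sqrt(2.0 * theta)
curvature = second_derivative(lambda h: corr_gaussian(h, theta), h_inflection)
print(h_inflection, curvature)   # h = 1 for theta = 0.5, curvature close to zero
```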

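In simulation, a popular correlation function is

$$ \displaystyle{ \rho (\mathbf{h},\boldsymbol{\theta },\mathbf{p}) =\prod _{j=1}^{k}\exp (-\theta _{j}h_{j}^{p_{j}}) =\exp \left (-\sum _{j=1}^{k}\theta _{j}h_{j}^{p_{j}}\right ) } $$(5.13)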

where \( \theta _{j} \) quantifies the importance of input j (the higher \( \theta _{j} \) is, the faster the correlation decreases with the distance in dimension j, so the more effect input j has) and \( p_{j} \) quantifies the smoothness of the correlation function; e.g., \( p_{j} = 2 \) implies an infinitely differentiable function. Figure 5.1 has already illustrated an exponential function and a Gaussian function, which correspond with p = 1 and p = 2 in Eq. (5.13). (We shall discuss better measures of importance than \( \theta _{j} \), in Sect. 5.8.)
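As an illustration of the separable anisotropic correlation function in Eq. (5.13), the following NumPy sketch builds the n × n correlation matrix of the old points from the per-dimension distances of Eq. (5.12). The design X and the values of θ and p are assumed for illustration only, and `corr_matrix` is a hypothetical helper name.

```python
import numpy as np

def corr_matrix(X, theta, p):
    """R[g, g'] = exp(-sum_j theta[j] * |x[g, j] - x[g', j]|**p[j])."""
    h = np.abs(X[:, None, :] - X[None, :, :])      # n x n x k distances per dimension
    return np.exp(-np.sum(theta * h**p, axis=2))

# Assumed design with n = 4 points and k = 2 inputs.
X = np.array([[0.0, 0.0], [0.5, 0.2], [1.0, 0.9], [0.3, 0.7]])
theta = np.array([2.0, 0.5])

R_gauss = corr_matrix(X, theta, p=np.array([2.0, 2.0]))   # Gaussian in both dimensions
R_exp = corr_matrix(X, theta, p=np.array([1.0, 1.0]))     # exponential in both dimensions
print(np.round(R_gauss, 3))
print(np.round(R_exp, 3))
```

Each input gets its own \( \theta _{j} \) and \( p_{j} \), so the same distance in different dimensions generally gives different correlations.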

Exercise 5.4

What is the value of ρ(h) in Eq. (5.13) with p > 0 when h = 0 and \( h = \infty \), respectively?

Exercise 5.5

What is the value of \( \theta _{j} \) in Eq. (5.13) with \( p_{j} > 0 \) when input j has no effect on the output?

Note: The choice of a specific type of correlation function may also affect the numerical properties of the Kriging model; see Harari and Steinberg (2014b).

Because ρ(h) in Eq. (5.13) decreases as the distance h increases, the optimal weights \( \boldsymbol{\lambda }_{o} \) in Eq. (5.6) are relatively high for old inputs close to the new input to be predicted.

Note: Some of the weights may be negative; see Wackernagel (2003, pp. 94–95). If negative weights give negative predictions and all the observed outputs w i are nonnegative, then Deutsch (1996) sets negative weights and small positive weights to zero while restandardizing the sum of the remaining positive weights to one to make the predictor unbiased.

It is well known that Kriging results in bad extrapolation compared with interpolation; see Antognini and Zagoraiou (2010). Our intuitive explanation is that in interpolation the new point is surrounded by relatively many old points that are close to the new point; let us call them “close neighbors”. Consequently, the predictor combines many old outputs that are strongly positively correlated with the new output. In extrapolation, however, there are fewer close neighbors. Note that linear regression also gives minimal predictor variance at the center of the experimental area; see Eq. (6.7).

5.2.2 Estimating the OK Parameters

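A major problem in OK is that the optimal Kriging weights \( \lambda _{i} \) (i = 1, …, n) depend on the correlation function of the assumed metamodel, but it is unknown which correlation function gives a valid metamodel. In Kriging we usually select either an isotropic or an anisotropic type of correlation function and a specific type of decay such as linear, exponential, or Gaussian; see Fig. 5.1. Next we must estimate the parameter values; e.g., \( \theta _{j} \) (j = 1, …, k) in Eq. (5.13). For this estimation we usually select the maximum likelihood (ML) criterion, which gives the ML estimators (MLEs) \( \widehat{\theta }_{j} \). ML requires the selection of a distribution for the metamodel output y(x) in Eq. (5.1). The standard distribution in Kriging is a multivariate normal, which explains the term GP. This gives the log-likelihood function

$$ \displaystyle{ l(\mu,\tau ^{2},\boldsymbol{\theta }) = -\frac{n} {2}\ln (2\pi ) -\frac{1} {2}\ln \left \vert \tau ^{2}\mathbf{R}(\boldsymbol{\theta })\right \vert -\frac{1} {2}(\mathbf{w} -\mu \mathbf{1})^{{\prime}}[\tau ^{2}\mathbf{R}(\boldsymbol{\theta })]^{-1}(\mathbf{w} -\mu \mathbf{1}) } $$(5.14)

where \( \left \vert \cdot \right \vert \) denotes the determinant and \( \mathbf{R}(\boldsymbol{\theta }) \) denotes the correlation matrix of y. Obviously, MLE requires that we minimize

$$ \displaystyle{ \ln \left \vert \tau ^{2}\mathbf{R}(\boldsymbol{\theta })\right \vert + (\mathbf{w} -\mu \mathbf{1})^{{\prime}}[\tau ^{2}\mathbf{R}(\boldsymbol{\theta })]^{-1}(\mathbf{w} -\mu \mathbf{1}). } $$(5.15)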

We denote the resulting MLEs by a “hat”, so the MLEs are \( \widehat{\mu } \), \( \widehat{\tau }^{2} \), and \( \widehat{\mathbf{\boldsymbol{\theta }}} \). This minimization is a difficult mathematical problem. The classic solution in Kriging is to “divide and conquer”—called the “profile likelihood” or the “concentrated likelihood” in mathematical statistics—as we summarize in the following algorithm (in practice we use standard Kriging software that we shall list near the end of this section).

Algorithm 5.1

1. Initialize \( \widehat{\boldsymbol{\theta }} \), which defines \( \widehat{\mathbf{R}} \).

2. Compute the generalized least squares (GLS) estimator of the mean:

$$ \displaystyle{ \widehat{\mu }= (\mathbf{1}^{{\prime}}\widehat{\mathbf{R}}^{-1}\mathbf{1})^{-1}\mathbf{1}^{{\prime}}\widehat{\mathbf{R}}^{-1}\mathbf{w}. } $$(5.16)

3. Substitute \( \widehat{\mu } \) resulting from Step 2 and \( \widehat{\mathbf{R}} \) resulting from Step 1 into the MLE variance estimator

$$ \displaystyle{ \widehat{\tau }^{2} = \frac{(\mathbf{w} -\widehat{\mu }\mathbf{1})^{{\prime}}\widehat{\mathbf{R}}^{-1}(\mathbf{w} -\widehat{\mu }\mathbf{1})} {n}. } $$(5.17)

Comment: \( \widehat{\tau }^{2} \) has the denominator n, whereas the denominator n − 1 is used by the classic unbiased estimator assuming R = I.

4. Solve the remaining problem in Eq. (5.15):

$$ \displaystyle{ \mbox{ Min }\widehat{\tau }^{2}\vert \widehat{\mathbf{R}}\vert ^{1/n}. } $$(5.18)

Comment: This equation can be found in Lophaven et al. (2002, equation 2.25). To solve this nonlinear minimization problem, Lophaven et al. (2002) applies the classic Hooke-Jeeves heuristic. Gano et al. (2006) points out that this minimization problem is difficult because of “the multimodal and long near-optimal ridge properties of the likelihood function”; i.e., this problem is not convex.

5. Use the \( \widehat{\boldsymbol{\theta }} \) that solves Eq. (5.18) in Step 4 to update \( \widehat{\mathbf{R}} \), and substitute this updated \( \widehat{\mathbf{R}} \) into Eqs. (5.16) and (5.17).

6. If the MLEs have not yet converged, then return to Step 2; else stop.
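The steps of Algorithm 5.1 can be sketched in Python as follows. Substituting Eq. (5.17) into Eq. (5.15) leaves \( n\ln \widehat{\tau }^{2} +\ln \vert \widehat{\mathbf{R}}\vert + n \), so the code minimizes the equivalent quantity \( \ln \widehat{\tau }^{2} + (1/n)\ln \vert \widehat{\mathbf{R}}\vert \). Several choices are assumptions made only for this illustration: a Gaussian correlation function, a single input (k = 1), a small nugget added to the diagonal of R for numerical stability, and a plain grid search over θ instead of the Hooke-Jeeves heuristic; all function names are hypothetical.

```python
import numpy as np

def gauss_corr_matrix(X, theta, nugget=1e-8):
    diff = X[:, None, :] - X[None, :, :]
    R = np.exp(-np.sum(theta * diff**2, axis=2))
    return R + nugget * np.eye(len(X))     # nugget: an assumption for numerical stability

def concentrated_fit(theta, X, w):
    """Steps 2 and 3 for a given theta, plus the log of the Step 4 objective."""
    n = len(w)
    ones = np.ones(n)
    R = gauss_corr_matrix(X, theta)
    mu = (ones @ np.linalg.solve(R, w)) / (ones @ np.linalg.solve(R, ones))  # Eq. (5.16)
    resid = w - mu
    tau2 = resid @ np.linalg.solve(R, resid) / n                             # Eq. (5.17)
    _, logdet = np.linalg.slogdet(R)
    return mu, tau2, np.log(tau2) + logdet / n     # log of tau2 * |R|**(1/n)

def fit_ok_grid(X, w, grid):
    """Pick the theta on the grid that minimizes the concentrated objective (k = 1)."""
    fits = [(concentrated_fit(np.array([t]), X, w), t) for t in grid]
    (mu, tau2, obj), theta = min(fits, key=lambda item: item[0][2])
    return mu, tau2, theta, obj

X = np.linspace(0.0, 1.0, 6)[:, None]      # six old points (assumed design)
w = np.sin(2.0 * np.pi * X[:, 0])          # assumed deterministic test function
grid = np.logspace(0.0, 2.0, 21)           # candidate values for theta
mu_hat, tau2_hat, theta_hat, obj = fit_ok_grid(X, w, grid)
print(mu_hat, tau2_hat, theta_hat)
```

Because \( \widehat{\mu } \) and \( \widehat{\tau }^{2} \) are available in closed form for every candidate θ, the grid search evaluates Steps 2 and 3 at each grid point and keeps the best one; a multimodal likelihood is one reason to try several grids or starting values.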

Note: Computational aspects are further discussed in Bachoc (2013), Butler et al. (2014), Gano et al. (2006), Jones et al. (1998), Li and Sudjianto (2005), Lophaven et al. (2002), Marrel et al. (2008), and Martin and Simpson (2005).

This difficult optimization problem implies that different MLEs may result from different software packages or from initializing the same package with different starting values; the software may even break down. The DACE software uses lower and upper limits for \( \theta _{j} \), which are usually hard to specify. Different limits may give completely different \( \widehat{\theta }_{j} \), as the examples in Lin et al. (2004) demonstrate.

Note: Besides MLEs there are other estimators of \( \mathbf{\boldsymbol{\theta }} \); e.g., restricted MLEs (RMLEs) and cross-validation estimators; see Bachoc (2013), Rasmussen and Williams (2006, pp. 116–124), Roustant et al. (2012), Santner et al. (2003, pp. 66–68), and Sundararajan and Keerthi (2001). Furthermore, we may use the LS criterion. We have already shown estimators for covariances in Eq. (3.31), but in Kriging the number of observations for a covariance of a given distance h decreases as that distance increases. Given these estimates for various values of h, Kleijnen and Van Beers (2004) and Van Beers and Kleijnen (2003) use the LS criterion to fit a linear correlation function.

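Let us denote the MLEs of the OK parameters by \( \widehat{\boldsymbol{\psi }} = (\widehat{\mu },\widehat{\tau }^{2},\widehat{\boldsymbol{\theta }}^{{\prime}})^{{\prime}} \) with \( \widehat{\boldsymbol{\theta }}^{{\prime}} = (\widehat{\theta }_{1},\ldots,\widehat{\theta }_{k}) \) in case of an anisotropic correlation function such as Eq. (5.13); obviously, \( \widehat{\mathbf{\varSigma }} =\widehat{\tau }^{2}\widehat{\mathbf{R}}(\widehat{\boldsymbol{\theta }}) \). Plugging these MLEs into Eq. (5.7), we obtain the predictor

$$ \displaystyle{ \widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}) =\widehat{\mu } +\widehat{\boldsymbol{\sigma }}(\mathbf{x}_{0})^{{\prime}}\widehat{\mathbf{\varSigma }}^{-1}(\mathbf{w} -\widehat{\mu }\mathbf{1}). } $$(5.19)

This predictor depends on the new point \( \mathbf{x}_{0} \) only through \( \widehat{\boldsymbol{\sigma }}(\mathbf{x}_{0}) \), because \( \widehat{\mu } \) and \( \widehat{\mathbf{\varSigma }}^{-1}(\mathbf{w} -\widehat{\mu }\mathbf{1}) \) depend on the old I/O. The second term in this equation shows that this predictor is nonlinear (likewise, weighted least squares with estimated weights gives a nonlinear estimator in linear regression metamodels; see Sect. 3.4.4). However, most publications on Kriging compute the MSE of this predictor by simply plugging the MLEs of the Kriging parameters \( \tau ^{2} \), \( \boldsymbol{\sigma }(\mathbf{x}_{0}) \), and \( \mathbf{\varSigma } \) into Eq. (5.8):

$$ \displaystyle{ \mbox{ MSE}[\widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }})] =\widehat{\tau }^{2} -\widehat{\boldsymbol{\sigma }}(\mathbf{x}_{0})^{{\prime}}\widehat{\mathbf{\varSigma }}^{-1}\widehat{\boldsymbol{\sigma }}(\mathbf{x}_{0}) + \frac{[1 -\mathbf{1}^{{\prime}}\widehat{\mathbf{\varSigma }}^{-1}\widehat{\boldsymbol{\sigma }}(\mathbf{x}_{0})]^{2}} {\mathbf{1}^{{\prime}}\widehat{\mathbf{\varSigma }}^{-1}\mathbf{1}}. } $$(5.20)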

We shall discuss a bootstrapped estimator of the true MSE of this nonlinear predictor, in the next section (Sect. 5.3).

Note: Martin and Simpson (2005) discusses alternative approaches—namely, validation and Akaike’s information criterion (AIC)—and finds that ignoring the randomness of the estimated Kriging parameters underestimates the true variance of the Kriging predictor. Validation for estimating the variance of the Kriging predictor is also discussed in Goel et al. (2006) and Viana and Haftka (2009). Furthermore, Thiart et al. (2014) confirms that the plug-in MSE defined in Eq. (5.20) underestimates the true MSE, and discusses alternative estimators of the true MSE. Jones et al. (1998) and Spöck and Pilz (2015) also imply that the plug-in estimator underestimates the true variance. Stein (1999) gives asymptotic results for Kriging with \( \widehat{\mathbf{\psi }} \).

We point out that Kriging gives a predictor plus a measure for the accuracy of this predictor; see Eq. (5.20). Some other metamodels—e.g., splines—do not quantify the accuracy of their predictor; see Cressie (1993, p. 182). Like Kriging, linear regression metamodels do quantify the accuracy; see Eq. (3.41).

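The MSE in Eq. (5.20) is also used to compute a two-sided symmetric (1 −α) confidence interval (CI) for the OK predictor at \( \mathbf{x}_{0} \), where \( \widehat{\sigma }_{ \mbox{ OK}}^{2}\{\widehat{y}(\mathbf{x}_{ 0},\widehat{\boldsymbol{\psi }})\} \) equals MSE\( [\widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }})] \), \( z_{\alpha /2} \) denotes the 1 −α∕2 quantile of the standard normal distribution, and (say) a ± b denotes the interval [a − b, a + b]:

$$ \displaystyle{ P\left [w(\mathbf{x}_{0}) \in \widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}) \pm z_{\alpha /2}\sqrt{\widehat{\sigma }_{\mbox{ OK} }^{2 }\{\widehat{y}(\mathbf{x}_{0 },\widehat{\boldsymbol{\psi }})\}}\right ] = 1 -\alpha. } $$(5.21)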
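The symmetric CI around the OK predictor is simple to compute once the predictor and its plug-in MSE are available. The following sketch uses only the Python standard library and assumes that the interval is based on a standard normal quantile; the function name `ok_confidence_interval` and the numerical inputs are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def ok_confidence_interval(y_hat, mse, alpha=0.05):
    """Two-sided symmetric (1 - alpha) interval y_hat -/+ z * sqrt(mse)."""
    z = NormalDist().inv_cdf(1.0 - alpha / 2.0)
    half_width = z * sqrt(max(mse, 0.0))   # guard against tiny negative round-off
    return y_hat - half_width, y_hat + half_width

lower, upper = ok_confidence_interval(y_hat=10.0, mse=4.0, alpha=0.05)
print(lower, upper)   # about 10 -/+ 3.92
```

At an old point the plug-in MSE is zero, so the interval collapses to the observed output; because the plug-in MSE ignores the randomness of the estimated Kriging parameters, the interval may be too short.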

There is much software for Kriging. In our own experiments we have used DACE, which is a free-of-charge MATLAB toolbox well documented in Lophaven et al. (2002). Alternative free software is the R package DiceKriging—which is well documented in Roustant et al. (2012)—and the object-oriented software called the “ooDACE toolbox”—documented in Couckuyt et al. (2014). PeRK programmed in C is documented in Santner et al. (2003, pp. 215–249). More free software is mentioned in Frazier (2011) and in the textbooks and websites mentioned in Sect. 5.1; also see the Gaussian processes for machine learning (GPML) toolbox, detailed in Rasmussen and Nickisch (2010). We also refer to the following four toolboxes (in alphabetical order):

MPERK on

http://www.stat.osu.edu/~comp_exp/jour.club/MperkManual.pdf

STK on

http://sourceforge.net/projects/kriging/

http://octave.sourceforge.net/stk/,

SUMO on

http://www.sumo.intec.ugent.be/,

and Surrogates on https://sites.google.com/site/felipeacviana/surrogatestoolbox.

Finally, we refer to the commercial JMP/SAS site:

https://www.jmp.com/en_us/software/feature-index.html#K.

Note: For large data sets, the Kriging computations may become problematic; solutions are discussed in Gramacy and Haaland (2015) and Meng and Ng (2015).

As we have already stated in Sect. 1.2, we adhere to a frequentist view in this book. Nevertheless, we mention that there are many publications that interpret Kriging models in a Bayesian way. A recent article is Yuan and Ng (2015); older publications are referenced in Kleijnen (2008). Our major problem with the Bayesian approach to Kriging is that we find it hard to come up with prior distributions for the Kriging parameters \( \mathbf{\boldsymbol{\psi }} \), because we have little intuition about the correlation parameters \( \mathbf{\boldsymbol{\theta }} \); e.g., what is the prior distribution of \( \boldsymbol{\theta } \), in the Kriging metamodel of the M∕M∕1 simulation model?

Note: Kriging seems related to so-called moving least squares (MLS), which originated in curve and surface fitting and fits a continuous function using a weighted least squares (WLS) criterion that gives more weight to old points close to the new point; see Lancaster and Salkauskas (1986) and also Forrester and Keane (2009) and Toropov et al. (2005).

The Kriging metamodel may also include qualitative inputs besides quantitative inputs. The challenge is to specify a valid covariance matrix; see Zhou et al. (2011).

5.3 Bootstrapping and Conditional Simulation for OK in Deterministic Simulation

In the preceding section we announced a bootstrap approach to estimating the MSE of the nonlinear predictor with plugged-in estimated Kriging parameters \( \widehat{\boldsymbol{\psi }} \) in Eq. (5.19); we discuss that approach in the present section. We have already discussed the general principles of bootstrapping in Sect. 3.3.5. Now we discuss parametric bootstrapping of the GP assumed in OK that was specified in Eq. (5.1). We also discuss a bootstrap variant called “conditional simulation”. Hasty readers may skip this section, because parametric bootstrapping and its variant are rather complicated and turn out to give CIs with coverages and lengths that are not superior compared with the CI specified in Eq. (5.21).

5.3.1 Bootstrapped OK (BOK)

For bootstrapping we use the notation that we introduced in Sect. 3.3.5. So we denote bootstrapped data by the superscript ∗; e.g., \( (\mathbf{X},\mathbf{w}^{{\ast}}) \) denotes the original input and the bootstrapped output of the simulation model. We define bootstrapped estimators analogously to the original estimators, but we compute the bootstrapped estimators from the bootstrapped data instead of the original data; e.g., we compute \( \widehat{\boldsymbol{\psi }} \) from \( (\mathbf{X},\mathbf{w}) \), but \( \widehat{\boldsymbol{\psi }}^{{\ast}} \) from \( (\mathbf{X},\mathbf{w}^{{\ast}}) \). We denote the bootstrap sample size by B and the bth bootstrap observation in this sample by the subscript b with b = 1, …, B.

Following Kleijnen and Mehdad (2013), we define the following (1 + n)-dimensional Gaussian or “normal” (\( \mbox{N}_{1+n} \)) distribution:

where all symbols were defined in the preceding section. Obviously, Eq. (5.22) implies \( y\left (\mathbf{x}\right ) \sim \mbox{N}_{n}\left (\mu \mathbf{1}_{n},\boldsymbol{\varSigma }\right ) \).

Li and Zhou (2015) extends Den Hertog et al. (2006)’s bootstrap method for estimating the variance from univariate GP models to so-called “pairwise meta-modeling” of multivariate GP models assuming nonseparable covariance functions. We saw that if \( \mathbf{x}_{0} \) gets closer to an old point \( \mathbf{x} \), then the predictor variance decreases and, because OK is an exact interpolator in deterministic simulation, this variance becomes exactly zero when \( \mathbf{x}_{0} = \mathbf{x} \). Furthermore, \( \mbox{N}_{1+n} \) in Eq. (5.22) implies that the distribution of the new output, given the n old outputs, is the conditional normal distribution
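As a sketch of what Eqs. (5.22) and (5.23) express, standard multivariate normal theory gives the following. We assume here that \( \tau ^{2} \) denotes the variance of \( y(\mathbf{x}) \) and that \( \boldsymbol{\sigma }(\mathbf{x}_{0}) \) denotes the vector of covariances between the new output and the n old outputs; the symbol \( \tau ^{2} \) is our assumption, because it does not appear elsewhere in this section.

$$ \begin{aligned} \begin{pmatrix} y(\mathbf{x}_{0}) \\ \mathbf{y}(\mathbf{x}) \end{pmatrix} &\sim \mbox{N}_{1+n}\left( \mu \mathbf{1}_{1+n}, \begin{bmatrix} \tau ^{2} & \boldsymbol{\sigma }(\mathbf{x}_{0})^{\prime} \\ \boldsymbol{\sigma }(\mathbf{x}_{0}) & \boldsymbol{\varSigma } \end{bmatrix} \right), \\ y(\mathbf{x}_{0}) \mid \mathbf{y}(\mathbf{x}) &\sim \mbox{N}\left( \mu + \boldsymbol{\sigma }(\mathbf{x}_{0})^{\prime}\boldsymbol{\varSigma }^{-1}[\mathbf{y}(\mathbf{x}) - \mu \mathbf{1}_{n}],\; \tau ^{2} - \boldsymbol{\sigma }(\mathbf{x}_{0})^{\prime}\boldsymbol{\varSigma }^{-1}\boldsymbol{\sigma }(\mathbf{x}_{0}) \right). \end{aligned} $$

The conditional mean has the same form as the predictors in Eqs. (5.24) and (5.29), and the conditional variance vanishes when \( \mathbf{x}_{0} \) coincides with an old point, in line with the exact-interpolation property mentioned above. In the algorithms below, the unknown parameters are replaced by their estimates.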

We propose the following BOK pseudo-algorithm.

Algorithm 5.2

1. Use \( \mbox{N}_{n}(\widehat{\mu }\mathbf{1}_{n},\widehat{\boldsymbol{\varSigma }}) \) B times to sample the n old outputs \( \mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) = (y_{1;b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}), \ldots, y_{n;b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}))^{{\prime}} \) where \( \widehat{\boldsymbol{\psi }} \) is estimated from the old simulation I/O data \( (\mathbf{X},\mathbf{w}) \). For each new point \( \mathbf{x}_{0} \) repeat steps 2 through 4 B times.

2. Given the n old bootstrapped outputs \( \mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) \) of step 1, sample the new output \( y_{b}^{{\ast}}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}) \) from the conditional normal distribution defined in Eq. (5.23).

3. Using the n old bootstrapped outputs \( \mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) \) of step 1, compute the bootstrapped MLE \( \widehat{\boldsymbol{\psi }}_{b}^{{\ast}} \). Next calculate the bootstrapped predictor

$$ \widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}_{b}^{{\ast}}) = \widehat{\mu }_{b}^{{\ast}} + \widehat{\boldsymbol{\sigma }}(\mathbf{x}_{0},\widehat{\boldsymbol{\theta }}_{b}^{{\ast}})^{{\prime}}\,\widehat{\boldsymbol{\varSigma }}^{-1}(\widehat{\boldsymbol{\theta }}_{b}^{{\ast}})[\mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) - \widehat{\mu }_{b}^{{\ast}}\mathbf{1}_{n}]. \tag{5.24} $$

4. Given \( \widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}_{b}^{{\ast}}) \) of step 3 and \( y_{b}^{{\ast}}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}) \) of step 2, compute the bootstrap estimator of the squared prediction error (SPE):

$$ \mbox{SPE}_{b}^{{\ast}} = \mbox{SPE}[\widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}_{b}^{{\ast}})] = [\widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}_{b}^{{\ast}}) - y_{b}^{{\ast}}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }})]^{2}. $$

5. Given the B bootstrap samples \( \mbox{SPE}_{b}^{{\ast}} \) (b = 1, …, B) resulting from steps 1 through 4, compute the bootstrap estimator of \( \mbox{MSPE}[\widehat{y}(\mathbf{x}_{0})] \) (this MSPE was defined in Eq. (5.8)):

$$ \mbox{MSPE}^{{\ast}} = \frac{\sum _{b=1}^{B}\mbox{SPE}_{b}^{{\ast}}}{B}. \tag{5.25} $$
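The five steps of Algorithm 5.2 can be sketched in code. The following is a minimal illustration, not the implementation of Kleijnen and Mehdad (2013). It assumes a single input, a Gaussian correlation function, a crude grid search instead of a numerical MLE routine, and a small nugget added purely for numerical stability. The function names, the grid for θ, and the test function in the usage lines (the commonly quoted form of the Forrester et al. (2008) function) are our assumptions.

```python
import numpy as np

THETA_GRID = np.logspace(0.0, 2.5, 40)  # assumed search grid for theta

def gauss_corr(a, b, theta):
    """Gaussian correlations exp(-theta * (a_i - b_j)^2) for one input."""
    return np.exp(-theta * (a[:, None] - b[None, :]) ** 2)

def fit_ok(X, y, nugget=1e-8):
    """Grid-search MLE of psi = (mu, tau2, theta) for one-input OK."""
    n, one, best = len(X), np.ones(len(X)), None
    for theta in THETA_GRID:
        R = gauss_corr(X, X, theta) + nugget * np.eye(n)
        mu = one @ np.linalg.solve(R, y) / (one @ np.linalg.solve(R, one))
        tau2 = max((y - mu) @ np.linalg.solve(R, y - mu) / n, 1e-300)
        # concentrated -2 log-likelihood, up to an additive constant
        nll = n * np.log(tau2) + np.linalg.slogdet(R)[1]
        if best is None or nll < best[0]:
            best = (nll, mu, tau2, theta)
    return best[1:]

def predict_ok(x0, X, y, psi, nugget=1e-8):
    """OK point predictor at the scalar x0 for given parameters psi."""
    mu, _, theta = psi
    R = gauss_corr(X, X, theta) + nugget * np.eye(len(X))
    r0 = gauss_corr(np.atleast_1d(x0), X, theta)[0]
    return mu + r0 @ np.linalg.solve(R, y - mu)

def bok_mspe(x0, X, w, B=100, seed=0, nugget=1e-8):
    """Bootstrap estimate of the MSPE at x0, following steps 1 to 5."""
    rng = np.random.default_rng(seed)
    n = len(X)
    mu, tau2, theta = fit_ok(X, w, nugget)           # psi-hat from (X, w)
    R = gauss_corr(X, X, theta) + nugget * np.eye(n)
    r0 = gauss_corr(np.atleast_1d(x0), X, theta)[0]
    cond_var = max(tau2 * (1.0 - r0 @ np.linalg.solve(R, r0)), 0.0)
    spe = np.empty(B)
    for b in range(B):
        y_old = rng.multivariate_normal(mu * np.ones(n), tau2 * R)  # step 1
        cond_mean = mu + r0 @ np.linalg.solve(R, y_old - mu)
        y_new = rng.normal(cond_mean, np.sqrt(cond_var))            # step 2
        psi_b = fit_ok(X, y_old, nugget)                            # step 3
        y_hat = predict_ok(x0, X, y_old, psi_b, nugget)
        spe[b] = (y_hat - y_new) ** 2                               # step 4
    return spe.mean(), spe                                          # step 5

X = np.linspace(0.0, 1.0, 5)                  # five equi-spaced old points
w = (6 * X - 2) ** 2 * np.sin(12 * X - 4)     # assumed test function
mspe, spe = bok_mspe(0.6, X, w, B=50)
print(f"bootstrap MSPE estimate at x0 = 0.6: {mspe:.3f}")
```

A real application would replace the grid search by a proper likelihood optimizer and would handle several inputs; the sketch only shows how the sampling, re-estimation, and averaging steps fit together.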

If we ignore the bias of the BOK predictor \( \widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}^{{\ast}}) \), then Eq. (5.25) gives \( \widehat{\sigma }^{2}[\widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}^{{\ast}})] \) which is the bootstrap estimator of \( \sigma ^{2}[\widehat{y}(\mathbf{x}_{0}\vert \widehat{\boldsymbol{\psi }})] \). We abbreviate \( \widehat{\sigma }^{2}[\widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}^{{\ast}})] \) to \( \widehat{\sigma }_{ \mbox{ BOK}}^{2} \). The standard error (SE) of \( \widehat{\sigma }_{\mbox{ BOK}}^{2} \) follows from Eq. (5.25):

We apply \( t_{B-1} \) (t-statistic with B − 1 degrees of freedom) to obtain a two-sided symmetric (1 − α) CI for \( \sigma _{\mbox{ BOK}}^{2} \):

Obviously, if \( B \uparrow \infty \), then \( t_{B-1;\alpha /2} \downarrow z_{\alpha /2} \) where \( z_{\alpha /2} \) denotes the α/2 quantile of the standard normal variable \( z \sim \mbox{ N}\left (0,1\right ) \); typically B is so high (e.g., 100) that we can indeed replace \( t_{B-1;\alpha /2} \) by \( z_{\alpha /2} \).
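A small sketch may make the standard error and the CI concrete. Because the displays for the SE and the CI are not reproduced in this text, the code assumes the usual forms: the SE is the sample standard deviation of the B values \( \mbox{SPE}_{b}^{{\ast}} \) divided by \( \sqrt{B} \), and the CI is the estimate plus or minus the critical value times the SE. It uses the normal critical value in place of the t value, as the text allows for large B. The array `spe` is synthetic illustration data, not the output of a Kriging model, and the function name `variance_ci` is ours.

```python
import numpy as np
from statistics import NormalDist

def variance_ci(spe, alpha=0.05):
    """Estimate, SE, and symmetric (1 - alpha) CI from B bootstrapped SPEs."""
    spe = np.asarray(spe, dtype=float)
    B = spe.size
    est = spe.mean()                         # Eq. (5.25)
    se = spe.std(ddof=1) / np.sqrt(B)        # assumed form of the SE
    z = NormalDist().inv_cdf(1 - alpha / 2)  # normal critical value
    return est, se, (est - z * se, est + z * se)

rng = np.random.default_rng(1)
spe = 0.3 * rng.chisquare(df=1, size=100)    # synthetic squared errors
est, se, (lo, hi) = variance_ci(spe)
print(f"estimate {est:.3f}, SE {se:.3f}, CI ({lo:.3f}, {hi:.3f})")
```

Because the interval is symmetric, its lower limit may fall below zero when the SPE values are very skewed, even though a variance cannot be negative.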

Figure 5.2 illustrates BOK for the following test function, taken from Forrester et al. (2008, p. 83):

This function has one local minimum at x = 0.01, and one global minimum at x = 0.7572 with output w = −6.02074; we shall return to this function in the next chapter, in which we discuss simulation optimization. The plot shows that each of the B bootstrap samples has its own old output values \( \mathbf{y}_{b}^{{\ast}} \). Part (a) displays only B = 5 samples to avoid cluttering up the plot. Part (b) shows less “wiggling” than part (a); \( \widehat{y}(\mathbf{x},\widehat{\boldsymbol{\psi }}_{b}^{{\ast}}) \), which are the predictions at old points, coincide with \( \mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) \), which are the values sampled in part (a). Part (c) uses B = 100.

Fig. 5.2 BOK for the test function in Forrester et al. (2008): (a) jointly sampled outputs at 5 equi-spaced old and 98 equi-spaced new points, for B = 5; (b) Kriging predictions for 98 new points based on 5 old points sampled in (a); (c) estimated predictor variances and their 95% CIs for B = 100

To compute a two-sided symmetric (1 − α) CI for the predictor at \( \mathbf{x}_{0} \), we may use the OK point predictor \( \widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}) \) and \( \widehat{\sigma }_{\mbox{ BOK}}^{2} \) (equal to the MSE in Eq. (5.25)):

If \( \widehat{\sigma }_{\mbox{ OK}}^{2} < \widehat{\sigma }_{\mbox{ BOK}}^{2} \), then this CI is longer and gives a higher coverage than the CI in Eq. (5.21). Furthermore, we point out that Yin et al. (2010) also finds empirically that a Bayesian approach accounting for the randomness of the estimated Kriging parameters gives a wider CI, and hence higher coverage, than an approach that ignores this estimation.

5.3.2 Conditional Simulation of OK (CSOK)

We denote conditional simulation (CS) of OK by CSOK. This method ensures \( \hat{y}(\mathbf{x},\hat{\boldsymbol{\psi }}_{b}^{{\ast}}) = w(\mathbf{x}) \); i.e., in all the bootstrap samples the prediction at an old point equals the observed value. Part (a) of Fig. 5.3 may help understand Algorithm 5.3 for CSOK, which copies steps 1 through 3 of Algorithm 5.2 for BOK in the preceding subsection.

CSOK for the test function in Forrester et al. (2008): (a) predictions at 98 new points, for B = 5; (b) estimated predictor variances and their 95 % CIs for B = 100, and OK’s predictor variances

Note: Algorithm 5.3 is based on Kleijnen and Mehdad (2013), which follows Chilès and Delfiner (2012, pp. 478–650). CS may also be implemented through the R software package called “DiceKriging”; see Roustant et al. (2012).

Algorithm 5.3

1. Use \( \mbox{N}_{n}(\widehat{\mu }\mathbf{1}_{n},\widehat{\boldsymbol{\varSigma }}) \) B times to sample the n old outputs \( \mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) = (y_{1;b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}), \ldots, y_{n;b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}))^{{\prime}} \) where \( \widehat{\boldsymbol{\psi }} \) is estimated from the old simulation I/O data \( (\mathbf{X},\mathbf{w}) \). For each new point \( \mathbf{x}_{0} \), repeat steps 2 through 4 B times.

2. Given the n old bootstrapped outputs \( \mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) \) of step 1, sample the new output \( y_{b}^{{\ast}}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}) \) from the conditional normal distribution in Eq. (5.23).

3. Using the n old bootstrapped outputs \( \mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) \) of step 1, compute the bootstrapped MLE \( \widehat{\boldsymbol{\psi }}_{b}^{{\ast}} \). Next calculate the bootstrapped predictor

$$ \widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}_{b}^{{\ast}}) = \widehat{\mu }_{b}^{{\ast}} + \widehat{\boldsymbol{\sigma }}(\mathbf{x}_{0},\widehat{\boldsymbol{\theta }}_{b}^{{\ast}})^{{\prime}}\,\widehat{\boldsymbol{\varSigma }}^{-1}(\widehat{\boldsymbol{\theta }}_{b}^{{\ast}})[\mathbf{y}_{b}^{{\ast}}(\mathbf{X},\widehat{\boldsymbol{\psi }}) - \widehat{\mu }_{b}^{{\ast}}\mathbf{1}_{n}]. \tag{5.29} $$

4. Combining the OK estimator defined in Eq. (5.19) and the BOK estimator defined in Eq. (5.29), compute the CSOK predictor

$$ \widehat{y}_{\mbox{ CSOK}}(\mathbf{x}_{0},b) = \widehat{\mu } + \widehat{\boldsymbol{\sigma }}(\mathbf{x}_{0})^{{\prime}}\,\widehat{\boldsymbol{\varSigma }}^{-1}(\mathbf{w} - \widehat{\mu }\mathbf{1}_{n}) + [y_{b}^{{\ast}}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}) - \widehat{y}(\mathbf{x}_{0},\widehat{\boldsymbol{\psi }}_{b}^{{\ast}})]. \tag{5.30} $$

Given these B estimators \( \widehat{y}_{\mbox{ CSOK}}(\mathbf{x}_{0},b) \) (b = 1, …, B), compute the CSOK estimator of \( \mbox{ MSPE}[\widehat{y}(\mathbf{x}_{0})] \):

We abbreviate \( \widehat{\sigma }^{2}[\widehat{Y }_{\mbox{ CSOK}}(\mathbf{x}_{0})] \) to \( \widehat{\sigma }_{\mbox{ CSOK}}^{2} \). Mehdad and Kleijnen (2014) proves that \( \widehat{\sigma }_{\mbox{ CSOK}}^{2} \leq \widehat{\sigma }_{\mbox{ BOK}}^{2} \); in practice, it is not known how much smaller \( \widehat{\sigma }_{\mbox{ CSOK}}^{2} \) is than \( \widehat{\sigma }_{\mbox{ BOK}}^{2} \). We therefore apply a two-sided asymmetric (1 − α) CI for \( \sigma _{\mbox{ OK}}^{2} \) using \( \widehat{\sigma }_{\mbox{ CSOK}}^{2} \) and the chi-square statistic \( \chi _{B-1}^{2} \) (this CI replaces the CI for BOK in Eq. (5.28), which assumes B IID variables):

Part (b) of Fig. 5.3 displays \( \widehat{\sigma }_{\mbox{ CSOK}}^{2} \) defined in Eq. (5.31) and its 95 % CIs defined in Eq. (5.32) based on B = 100 bootstrap samples; it also displays \( \widehat{\sigma }_{\mbox{ OK}}^{2} \) following from Eq. (5.20). Visual examination of this part suggests that \( \widehat{\sigma }_{ \mbox{ CSOK}}^{2} \) tends to exceed \( \widehat{\sigma }_{\mbox{ OK}}^{2} \).
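The claim that CSOK reproduces the observed outputs at the old points can be checked directly from Eq. (5.30). The following short derivation is a sketch; it relies on the exact-interpolation property of OK in deterministic simulation and on step 2 sampling the new output conditionally on the old bootstrapped outputs. Here \( \mathbf{x}_{i} \) denotes an old point and \( w_{i} \) its observed output.

$$ \begin{aligned} y_{b}^{{\ast}}(\mathbf{x}_{i},\widehat{\boldsymbol{\psi }}) - \widehat{y}(\mathbf{x}_{i},\widehat{\boldsymbol{\psi }}_{b}^{{\ast}}) &= y_{i;b}^{{\ast}} - y_{i;b}^{{\ast}} = 0, \\ \widehat{y}_{\mbox{ CSOK}}(\mathbf{x}_{i},b) &= \widehat{\mu } + \widehat{\boldsymbol{\sigma }}(\mathbf{x}_{i})^{\prime}\,\widehat{\boldsymbol{\varSigma }}^{-1}(\mathbf{w} - \widehat{\mu }\mathbf{1}_{n}) + 0 = w_{i}. \end{aligned} $$

In the first line, the conditional variance at an old point is zero, so the sampled new output equals the ith old bootstrapped output, and the bootstrapped predictor interpolates that same value. Away from the old points the bracketed term in Eq. (5.30) is generally nonzero, so the B CSOK predictors scatter around the OK predictor.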

Next, we display both \( \widehat{\sigma }_{\mbox{ CSOK}}^{2} \) and \( \widehat{\sigma }_{\mbox{ BOK}}^{2} \) and their CIs, for various values of B, in Fig. 5.4. This plot suggests that \( \widehat{\sigma }_{\mbox{ CSOK}}^{2} \) is not significantly smaller than \( \widehat{\sigma }_{\mbox{ BOK}}^{2} \). These results seem reasonable, because both CSOK and BOK use \( \widehat{\boldsymbol{\psi }} \), which is the sufficient statistic of the GP computed from the same \( (\mathbf{X},\mathbf{w}) \). CSOK seems simpler than BOK, both computationally and conceptually. CSOK gives better predictions for new points close to old points; but then again, BOK is meant to improve the predictor variance, not the predictor itself.

CIs for BOK versus CSOK for various B values, using the test function in Forrester et al. (2008)

We may use \( \widehat{\sigma }_{\mbox{ CSOK}}^{2} \) to compute a CI for the OK predictor, using the analogue of Eq. (5.28):

Moreover, we can derive an alternative CI; namely, a distribution-free two-sided asymmetric CI based on the so-called percentile method (which we defined in Eq. (3.14)). We apply this method to \( \widehat{y}_{\mbox{ CSOK}}(\mathbf{x}_{0},b) \) (b = 1, …, B), which are the B CSOK predictors defined in Eq. (5.30). Because the percentile method uses order statistics, we now denote \( \widehat{y}_{\mbox{ CSOK}}(\mathbf{x}_{0},b) \) by \( \widehat{y}_{\mbox{ CSOK};\,b}(\mathbf{x}_{0}) \), apply the usual subscript \( (\cdot ) \) (e.g., \( (B\alpha /2) \)) to denote order statistics (resulting from sorting the B values from low to high), and select B such that \( B\alpha /2 \) and \( B(1 -\alpha /2) \) are integers:

An advantage of the percentile method is that this CI does not include negative values if the simulation output is not negative; also see Sect. 5.7 on bootstrapping OK to preserve known characteristics of the I/O functions (nonnegative outputs, monotonic I/O functions, etc.). We do not apply the percentile method to BOK, because BOK gives predictions at the n old points that do not equal the observed old simulation outputs \( w_{i} \).
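The percentile method amounts to sorting the B values and reading off two order statistics. A minimal sketch follows. It assumes that the interval runs from the \( (B\alpha /2) \)th to the \( (B(1 -\alpha /2)) \)th order statistic, because the display for this CI is not reproduced here, and it uses synthetic nonnegative values in place of actual CSOK predictors. The function name `percentile_ci` is ours.

```python
import numpy as np

def percentile_ci(values, alpha=0.05):
    """Two-sided (1 - alpha) percentile CI from B bootstrapped values."""
    v = np.sort(np.asarray(values, dtype=float))   # order statistics, low to high
    B = v.size
    lo_rank, hi_rank = B * alpha / 2, B * (1 - alpha / 2)
    if abs(lo_rank - round(lo_rank)) > 1e-9 or abs(hi_rank - round(hi_rank)) > 1e-9:
        raise ValueError("choose B such that B*alpha/2 and B*(1-alpha/2) are integers")
    # ranks are 1-based, array indexes are 0-based
    return v[round(lo_rank) - 1], v[round(hi_rank) - 1]

rng = np.random.default_rng(3)
y_csok = rng.gamma(shape=4.0, scale=0.5, size=200)   # synthetic, nonnegative
lower, upper = percentile_ci(y_csok)
print(f"95% percentile CI: ({lower:.3f}, {upper:.3f})")
```

Both limits are themselves observed values, so the interval cannot extend below zero when all B values are nonnegative. With α = 0.05, the value B = 100 is rejected by the check because Bα/2 = 2.5, whereas B = 200 gives the ranks 5 and 195.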

For OK, BOK, and CSOK Mehdad and Kleijnen (2015a) studies CIs with a nominal coverage of 1 −α and reports the estimated expected coverage \( 1 - E(\widehat{\alpha }) \) and the estimated expected length E(l) of the CIs, for a GP with two inputs so k = 2 and an anisotropic Gaussian correlation function such as Eq. (5.13) with p = 2. In general, we prefer the CI with the shortest length, unless this CI gives too low coverage. The reported results show that OK with \( \widehat{\sigma }_{ \mbox{ OK}} \) gives shorter lengths than CSOK with \( \widehat{\sigma }_{\mbox{ CSOK}} \), and yet OK gives estimated coverages that are not significantly lower. The percentile method for CSOK gives longer lengths than OK, but its coverage is not significantly better than OK’s coverage. Altogether the results do not suggest that BOK or CSOK is superior, so we recommend OK when predicting a new output; i.e., OK seems a robust method.

Exercise 5.6

Consider the three alternative CIs that use OK, BOK, and CSOK, respectively. Do you think that the length of such a CI for a new point tends to decrease or increase as n (number of old points) increases?

5.4 Universal Kriging (UK) in Deterministic Simulation

UK replaces the constant μ in Eq. (5.1) for OK by \( \mathbf{f}(\mathbf{x})^{{\prime}}\boldsymbol{\beta } \) where \( \mathbf{f}(\mathbf{x}) \) is a q × 1 vector of known functions of \( \mathbf{x} \) and \( \boldsymbol{\beta } \) is a q × 1 vector of unknown parameters (e.g., if k = 1, then UK may replace μ by \( \beta _{0} +\beta _{1}x \), which is called a “linear trend”):

The disadvantage of UK compared with OK is that UK requires the estimation of additional parameters. More precisely, besides \( \beta _{0} \) UK involves q − 1 parameters, whereas OK involves only \( \beta _{0} =\mu \). We conjecture that the estimation of the extra q − 1 parameters explains why UK has a higher MSE. In practice, most Kriging models do not use UK but OK.
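To make the parameter count concrete, the two models can be written side by side. This is a sketch under the assumption that Eq. (5.1) has the form \( y(\mathbf{x}) =\mu +M(\mathbf{x}) \), where \( M(\mathbf{x}) \) denotes a zero-mean GP with covariance matrix \( \boldsymbol{\varSigma }_{M} \); the displays for the OK and UK models are not shown in this section.

$$ \begin{aligned} \mbox{OK:}\quad & y(\mathbf{x}) = \mu + M(\mathbf{x}), && q = 1,\ \mathbf{f}(\mathbf{x}) = 1,\ \boldsymbol{\beta } = \mu ; \\ \mbox{UK with linear trend } (k = 1):\quad & y(x) = \beta _{0} + \beta _{1}x + M(x), && q = 2,\ \mathbf{f}(x) = (1, x)^{\prime}. \end{aligned} $$

For a first-order polynomial trend in k inputs, q = 1 + k, so the number of extra trend parameters grows with the number of inputs.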

Note: This higher MSE for UK is also discussed in Ginsbourger et al. (2009) and Tajbakhsh et al. (2014). However, Chen et al. (2012) finds that UK in stochastic simulation with CRN may give better estimates of the gradient; also see Sect. 5.6. Furthermore, to eliminate the effects of estimating \( \mathbf{\boldsymbol{\beta }} \) in UK, Mehdad and Kleijnen (2015b) applies intrinsic random functions (IRFs) and derives the corresponding intrinsic Kriging (IK) and stochastic intrinsic Kriging (SIK). An IRF applies a linear transformation such that \( \mathbf{f}(\mathbf{x})^{^{{\prime}} }\mathbf{\boldsymbol{\beta }} \) in Eq. (5.35) vanishes. Of course, this transformation also changes the covariance matrix \( \mathbf{\varSigma }_{M} \), so the challenge becomes to determine a covariance matrix of IK that is valid (symmetric and “conditionally” positive definite). Experiments suggest that IK outperforms UK, and SIK outperforms SK. Furthermore, a refinement of UK is so-called blind Kriging, which does not assume that the functions f(x) are known. Instead, blind Kriging chooses these functions from a set of candidate functions, assuming heredity (which we discussed below Eq. (4.11)) and using Bayesian techniques (which we avoid in this book; see Sect. 5.2). Blind Kriging is detailed in Joseph et al. (2008) and also in Couckuyt et al. (2012). Finally, Deng et al. (2012) compares UK with a new Bayesian method that also tries to eliminate unimportant inputs in the Kriging metamodel; the elimination of unimportant inputs we discussed in Chap. 4 on screening.

5.5 Designs for Deterministic Simulation

An n × k design matrix X specifies the n combinations of the k simulation inputs. The literature on designs for Kriging in deterministic simulation abounds, and proposes various design types. Most popular are Latin hypercube designs (LHDs). Alternative types are orthogonal array, uniform, maximum entropy, minimax, maximin, integrated mean squared prediction error (IMSPE), and “optimal” designs.

Note: Many references are given in Chen and Zhou (2014), Damblin et al. (2013), Janssen (2013), and Wang et al. (2014). Space-filling designs that account for statistical dependencies among the k inputs—which may be quantitative or qualitative—are given in Bowman and Woods (2013). A textbook is Lemieux (2009). More references are given in Harari and Steinberg (2014a), and Kleijnen (2008, p. 130). Relevant websites are

and

http://www.spacefillingdesigns.nl/.

LHDs are specified through Latin hypercube sampling (LHS). Historically speaking, McKay et al. (1979) invented LHS not for Kriging but for risk analysis using deterministic simulation models (“computer codes”); LHS was proposed as an alternative for crude Monte Carlo sampling (for Monte Carlo methods we refer to Chap. 1). LHS assumes that an adequate metamodel is more complicated than a low-order polynomial (these polynomial metamodels and their designs were discussed in the preceding three chapters). LHS does not assume a specific metamodel that approximates the I/O function defined by the underlying simulation model; actually, LHS focuses on the input space formed by the k-dimensional unit cube defined by the standardized simulation inputs. LHDs are one of the space-filling types of design (LHDs will be detailed in the next subsection, Sect. 5.5.1).

Note: It may be advantageous to use space-filling designs that allow sequential addition of points; examples of such designs are the Sobol sequences detailed on

http://en.wikipedia.org/wiki/Sobol_sequence#References.

We also refer to the nested LHDs in Qian et al. (2014) and the “sliced” LHDs in Ba et al. (2014), Li et al. (2015), and Yang et al. (2014); these sliced designs are useful for experiments with both qualitative and quantitative inputs. Furthermore, taking a subsample of a LHD—as we do in validation—destroys the LHD properties. Obviously, the most flexible method allowing addition and elimination of points is a simple random sample of n points in the k-dimensional input space.

In Sect. 5.5.1 we discuss LHS for designs with a given number of input combinations, n; in Sect. 5.5.2 we discuss designs that determine n sequentially and are customized.

5.5.1 Latin Hypercube Sampling (LHS)

Technically, LHS is a type of stratified sampling based on the classic Latin square designs, which are square matrixes filled with different symbols such that each symbol occurs exactly once in each row and exactly once in each column. Table 5.1 is an example with k = 3 inputs and five levels per input; input 1 is the input of real interest, whereas inputs 2 and 3 are nuisance inputs or block factors (also see our discussion on blocking in Sect. 2.10). This example requires only n = 5 × 5 = 25 combinations instead of \( 5^{3} = 125 \) combinations. For further discussion of Latin (and Graeco-Latin) squares we refer to Chen et al. (2006).

Note: Another Latin square—this time, constructed in a systematic way—is shown in Table 5.2. This design, however, may give a biased estimator of the effect of interest. For example, suppose that the input of interest (input 1) is wheat, and wheat comes in five varieties. Suppose further that this table determines the way wheat is planted on a piece of land; input 2 is the type of harvesting machine, and input 3 is the type of fertilizer. If the land shows a very fertile strip that runs from north-west to south-east (see the main diagonal of the matrix in this table), then the effect of wheat type 1 is overestimated. Therefore randomization should be applied to protect against unexpected effects. Randomization makes such bias unlikely—but not impossible. Therefore random selection may be corrected if its realization happens to be too systematic. For example, a LHD may be corrected to give a “nearly” orthogonal design; see Hernandez et al. (2012), Jeon et al. (2015), and Vieira et al. (2011).

The following algorithm details LHS for an experiment with n combinations of k inputs (also see Helton et al. (2006b)).

Algorithm 5.4

1. Divide the range of each input into n > 1 mutually exclusive and exhaustive intervals of equal probability. Comment: If the distribution of input values is uniform on [a, b], then each interval has length (b − a)/n. If the distribution is Gaussian, then intervals near the mode are shorter than in the tails.

2. Randomly select one value for \( x_{1} \) from each interval, without replacement, which gives n values \( x_{1;1} \) through \( x_{1;n} \).

3. Pair these n values with the n values of \( x_{2} \), randomly without replacement.

4. Combine these n pairs with the n values of \( x_{3} \), randomly without replacement to form n triplets.

5. And so on, until a set of n k-tuples is formed.
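The steps of Algorithm 5.4 can be sketched in a few lines. The sketch below assumes k independent inputs that are uniform on (0, 1), so each of the n intervals has length 1/n; the function name `latin_hypercube` and its `midpoint` option are ours. Pairing "randomly without replacement" is implemented as an independent random permutation of the n intervals for each input.

```python
import numpy as np

def latin_hypercube(n, k, rng, midpoint=False):
    """Return an n x k Latin hypercube design on the unit cube."""
    # One independent random permutation of the n intervals per input,
    # so each interval of each input is used exactly once.
    strata = np.column_stack([rng.permutation(n) for _ in range(k)])
    # Position inside each interval: its middle, or a uniform random draw.
    offset = 0.5 if midpoint else rng.random((n, k))
    return (strata + offset) / n

rng = np.random.default_rng(42)
design = latin_hypercube(n=5, k=2, rng=rng)
print(design)
```

Projecting the design onto either input gives exactly one point in each of the n intervals, which is the defining property of a LHD.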

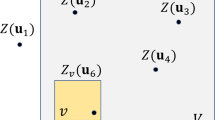

Table 5.3 and Fig. 5.5 give a LHD example with n = 5 combinations of the two inputs \( x_{1} \) and \( x_{2} \); these combinations are marked in Fig. 5.5. The table shows that each input has five discrete levels, which are labelled 1 through 5. If the inputs are continuous, then the label (say) 1 may denote a value within interval 1; see Fig. 5.5.

LHS does not imply a strict mathematical relationship between n (number of combinations actually simulated) and k (number of simulation inputs), whereas DOE uses (for example) \( n = 2^{k} \) so n drastically increases with k. Nevertheless, if LHS keeps n “small” and k is “large”, then the resulting LHD covers the experimental domain \( \mathbb{R}^{k} \) so sparsely that the fitted Kriging model may be an inadequate metamodel of the underlying simulation model. Therefore a well-known rule-of-thumb for LHS in Kriging is n = 10k; see Loeppky et al. (2009).

Note: Wang et al. (2014) recommends n = 20k. Furthermore, Hernandez et al. (2012) provides a table for LHDs with acceptable nonorthogonality for various (n, k) combinations with n ≤ 1,025 and k ≤ 172.

Usually, LHS assumes that the k inputs are independently distributed (so their joint distribution becomes the product of their k individual marginal distributions) and that the marginal distributions are uniform (symbol U) in the interval (0, 1) so \( x_{j} \sim \mathrm{ U}(0,1) \). An alternative assumption is a multivariate Gaussian distribution, which is completely characterized by its covariances and means. For nonnormal joint distributions, LHS may use Spearman’s correlation coefficient (discussed in Sect. 3.6.1); see Helton et al. (2006b). If LHS assumes a nonuniform marginal distribution for \( x_{j} \) (as we may assume in risk analysis, discussed in Sect. 5.9), then LHS defines n mutually exclusive and exhaustive subintervals \( [l_{j;g}, u_{j;g}] \) (g = 1, …, n) for the standardized \( x_{j} \) such that each subinterval has the same probability; i.e., \( P(l_{j;g} \leq x_{j} \leq u_{j;g}) = 1/n \). This implies that near the mode of the \( x_{j} \) distribution, the subintervals are relatively short, compared with the subintervals in the tails of this distribution.

In LHS we may either fix the value of \( x_{j} \) to the middle of the subinterval g so \( x_{j} = (l_{j;g} + u_{j;g})/2 \) or we may sample the value of \( x_{j} \) within that subinterval accounting for the distribution of its values. Fixing \( x_{j} \) is attractive when we wish to estimate the sensitivity of the output to the inputs (see Sect. 5.8, in which we shall discuss global sensitivity analysis through Sobol’s indexes). A random \( x_{j} \) is attractive when we wish to estimate the probability of the output exceeding a given threshold as a function of an uncertain input \( x_{j} \), as we do in risk analysis (see Sect. 5.9).
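For a nonuniform marginal distribution, the equal-probability subintervals and the sampling within a subinterval can be obtained through the inverse distribution function. The sketch below assumes a standard Gaussian marginal and n = 5; the function names are ours. Note that the two outer subintervals of a Gaussian are unbounded, so the arithmetic middle \( (l_{j;g} + u_{j;g})/2 \) exists only for the inner subintervals.

```python
import random
from statistics import NormalDist

def subinterval_bounds(n, dist):
    """Interior cut points such that each of the n subintervals has probability 1/n."""
    return [dist.inv_cdf(g / n) for g in range(1, n)]

def sample_in_subinterval(g, n, dist, rng):
    """Sample x within subinterval g (1-based) according to dist, via the inverse CDF."""
    u = ((g - 1) + rng.random()) / n
    u = min(max(u, 1e-12), 1 - 1e-12)   # keep inv_cdf away from 0 and 1
    return dist.inv_cdf(u)

dist = NormalDist(0.0, 1.0)             # assumed marginal distribution
n = 5
cuts = subinterval_bounds(n, dist)
lengths = [b - a for a, b in zip(cuts[:-1], cuts[1:])]   # inner subintervals only
rng = random.Random(0)
x = [sample_in_subinterval(g, n, dist, rng) for g in range(1, n + 1)]
print("cut points:", [round(c, 4) for c in cuts])
print("inner lengths:", [round(v, 4) for v in lengths])
```

The inner subinterval around the mode is the shortest of the three bounded ones, in line with the statement that subintervals near the mode are relatively short.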

LHDs are noncollapsing; i.e., if an input turns out to be unimportant, then each remaining individual input is still sampled with one observation per subinterval. DOE, however, then gives multiple observations for the same value of a remaining input—which is a waste in deterministic simulation (in stochastic simulation it improves the accuracy of the estimated intrinsic noise). Kriging with an anisotropic correlation function may benefit from the noncollapsing property of LHS, when estimating the correlation parameters \( \theta _{j} \). Unfortunately, projections of a LHD point in n dimensions onto more than one dimension may give “bad” designs. Therefore standard LHS is further refined, leading to so-called maximin LHDs and nearly-orthogonal LHDs.

Note: For these LHDs we refer to Damblin et al. (2013), Dette and Pepelyshev (2010), Deutsch and Deutsch (2012), Georgiou and Stylianou (2011), Grosso et al. (2009), Janssen (2013), Jourdan and Franco (2010), Jones et al. (2015), Ranjan and Spencer (2014) and the older references in Kleijnen (2008, p. 130).

In a case study, Helton et al. (2005) finds that crude Monte Carlo and LHS give similar results if these two methods use the same “big” sample size. In general, however, LHS is meant to improve results in simulation applications; see Janssen (2013).

There is much software for LHS. For example, Crystal Ball, @Risk, and Risk Solver provide LHS, and are add-ins to Microsoft’s Excel spreadsheet software. LHS is also available in the MATLAB Statistics toolbox subroutine lhs and in the R package DiceDesign. We also mention Sandia’s DAKOTA software:

5.5.2 Sequential Customized Designs

The preceding designs for Kriging have a given number of input combinations n and consider only the input domain \( \mathbf{x} \in \mathbb{R}^{k} \); i.e., these designs do not consider the output. Now we present designs that select n input combinations sequentially and consider the specific I/O function \( f_{\mathrm{sim}} \) of the underlying simulation model so these designs are application-driven or customized. We notice that the importance of sequential sampling is also emphasized in Simpson et al. (2004), reporting on a panel discussion.

Note: Sequential designs for Kriging metamodels of deterministic simulation models are also studied in Busby et al. (2007), Crombecq et al. (2011), Koch et al. (2015), and Jin et al. (2002). Sequential LHDs ignoring the output (e.g., so-called “replicated LHDs”) are discussed in Janssen (2013). Our sequential customized designs are no longer LHDs (even though the first stage may be a LHD), as we shall see next.

The designs discussed so far in this section, are fixed sample or one shot designs. Such designs suit the needs of experiments with real systems; e.g., agricultural experiments may have to be finished within a single growing season. Simulation experiments, however, proceed sequentially—unless parallel computers are used, and even then not the whole experiment is finished in one shot. In general, sequential statistical procedures are known to be more “efficient” in the sense that they require fewer observations than fixed-sample procedures; see, e.g., Ghosh and Sen (1991). In sequential designs we learn about the behavior of the underlying system as we experiment with this system and collect data. (The preceding chapter on screening also showed that sequential designs may be attractive in simulation.) Unfortunately, extra computer time is needed in sequential designs for Kriging if we re-estimate the Kriging parameters when new I/O data become available. Fortunately, computations may not start from scratch; e.g., we may initialize the search for the MLEs in the sequentially augmented design from the MLEs in the preceding stage.

Note: Gano et al. (2006) updates the Kriging parameters only when the parameter estimates produce a poor prediction. Toal et al. (2008) examines five update strategies, and concludes that it is bad not to update the estimates after the initial design. Chevalier and Ginsbourger (2012) presents formulas for updating the Kriging parameters and predictors for designs that add I/O data either purely sequential (a single new point with its output) or batch-sequential (batches of new points with their outputs). We shall also discuss this issue in Sect. 5.6 on SK.

Kleijnen and Van Beers (2004) proposes the following algorithm for specifying a customized sequential design for Kriging in deterministic simulation.

Algorithm 5.5

1. Start with a pilot experiment using some space-filling design (e.g., a LHD) with only a few input combinations; use these combinations as the input for the simulation model, and obtain the corresponding simulation outputs.

2. Fit a Kriging model to the I/O simulation data resulting from Step 1.

3. Consider (but do not yet simulate) a set of candidate combinations that have not yet been simulated and that are selected through some space-filling design; find the “winner”, which is the candidate combination with the highest predictor variance.

4. Use the winner found in Step 3 as the input to the simulation model that is actually run, which gives the corresponding simulation output.

5. Re-fit (update) the Kriging model to the I/O data that is augmented with the I/O data resulting from Step 4. Comment: Step 5 refits the Kriging model, re-estimating the Kriging parameters \( \boldsymbol{\psi } \); to save computer time, this step might not re-estimate \( \boldsymbol{\psi } \).

6. Return to Step 3 until either the Kriging metamodel satisfies a given goal or the computer budget is exhausted.

Furthermore, Kleijnen and Van Beers (2004) compares this sequential design with a sequential design that uses the predictor variance with plugged-in parameters specified in Eq. (5.20). The latter design selects as the next point the input combination that maximizes this variance. It turns out that the latter design selects as the next point the input farthest away from the old input combinations, so the final design spreads all its points (approximately) evenly across the experimental area, as space-filling designs do. However, the predictor variance may also be estimated through cross-validation (we have already discussed cross-validation of Kriging models below Eq. (5.20)); see Fig. 5.6, which we discuss next.

Figure 5.6 displays an example with a fourth-order polynomial I/O function \( f_{\mathrm{sim}} \) with two local maxima and three local minima; two minima occur at the border of the experimental area. Leave-one-out cross-validation means successive deletion of one of the n old I/O observations (which are already simulated), which gives the data set \( (\mathbf{X}_{-i},\mathbf{w}_{-i}) \) (i = 1, …, n). Next, we compute the Kriging predictor, after re-estimating the Kriging parameters. For each of three candidate points, the plot shows the three Kriging predictions computed from the original data set (no data deleted), and computed after deleting observation 2 and observation 3, respectively; the two extreme inputs (x = 0 and x = 10) are not deleted because Kriging does not extrapolate well. The point that is most difficult to predict turns out to be the candidate point x = 8.33 (the highest candidate point in the plot). To quantify this prediction uncertainty, we may jackknife the predictor variances, as follows.

In Sect. 3.3.3, we have already discussed jackknifing in general (jackknifing is also applied to stochastic Kriging, in Chen and Kim (2013)). Now, we calculate the jackknife’s pseudovalue J for candidate point j as the weighted average of the original and the cross-validation predictors, letting c denote the number of candidate points and n the number of points already simulated and being deleted successively:

From these pseudovalues we compute the classic variance estimator (also see Eq. (3.12)):

Figure 5.7 shows the candidate points that are selected for actual simulation. The pilot sample consists of four equally spaced points; also see Fig. 5.6. The sequential design selects relatively few points in subareas that generate an approximately linear I/O function; the design selects many points near the edges, where the function changes much. So the design favors points in subareas that have “more interesting” I/O behavior.
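Algorithm 5.5 with the jackknifed cross-validation criterion can be sketched as follows. This is an illustration, not the code of Kleijnen and Van Beers (2004). It assumes one input on [0, 1], an OK predictor with a fixed Gaussian correlation parameter (so step 5 does not re-estimate \( \boldsymbol{\psi } \)), uniformly sampled candidates instead of a space-filling candidate design, and deletion of every old point including the two extremes. Because the displays for the pseudovalue and its variance are not reproduced above, the code assumes the classic jackknife forms \( J_{j;i} = n\widehat{y}_{j} - (n - 1)\widehat{y}_{j(-i)} \) and \( \sum _{i}(J_{j;i} -\overline{J}_{j})^{2}/[n(n - 1)] \). All function names and the test function `f_sim` are hypothetical.

```python
import numpy as np

def ok_predict(x_new, X, w, theta=25.0, nugget=1e-10):
    """OK predictions at x_new from data (X, w), with theta held fixed."""
    R = np.exp(-theta * (X[:, None] - X[None, :]) ** 2) + nugget * np.eye(len(X))
    r = np.exp(-theta * (x_new[:, None] - X[None, :]) ** 2)
    one = np.ones(len(X))
    mu = one @ np.linalg.solve(R, w) / (one @ np.linalg.solve(R, one))
    return mu + r @ np.linalg.solve(R, w - mu)

def jackknife_variance(cand, X, w):
    """Jackknife variance of the predictor at each candidate point."""
    n = len(X)
    full = ok_predict(cand, X, w)                  # no observation deleted
    J = np.empty((n, len(cand)))
    for i in range(n):                             # leave-one-out cross-validation
        keep = np.arange(n) != i
        J[i] = n * full - (n - 1) * ok_predict(cand, X[keep], w[keep])
    return J.var(axis=0, ddof=1) / n               # sum (J - Jbar)^2 / (n (n - 1))

def sequential_design(f_sim, n_pilot=4, budget=10, n_cand=20, seed=0):
    rng = np.random.default_rng(seed)
    X = np.linspace(0.0, 1.0, n_pilot)             # step 1: pilot design
    w = f_sim(X)
    while len(X) < budget:                         # step 6: stop at the budget
        cand = rng.uniform(0.0, 1.0, n_cand)       # step 3: candidate points
        winner = cand[np.argmax(jackknife_variance(cand, X, w))]
        X = np.append(X, winner)                   # step 4: simulate the winner
        w = np.append(w, f_sim(winner))            # step 5: augmented I/O data
    return X, w

f_sim = lambda x: np.sin(6 * x) + x ** 2           # hypothetical I/O function
X_seq, w_seq = sequential_design(f_sim)
print(np.round(np.sort(X_seq), 3))
```

The Kriging fit of step 2 is implicit in `ok_predict`, which recomputes the predictor from all data collected so far each time it is called.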

Note: Lin et al. (2002) criticizes cross-validation for the validation of Kriging metamodels, but in this section we apply cross-validation for the estimation of the prediction error when selecting the next design point in a customized design. Kleijnen and Van Beers (2004)’s method is also applied by Golzari et al. (2015).

5.6 Stochastic Kriging (SK) in Random Simulation

The interpolation property of Kriging is attractive in deterministic simulation, because the observed simulation output is unambiguous. In random simulation, however, the observed output is only one of the many possible values. Van Beers and Kleijnen (2003) replaces \( w_{i} \) (the simulation output at point i with i = 1, …, n) by \( \overline{w}_{i} =\sum _{ r=1}^{m_{i}}w_{i;r}/m_{i} \) (the average simulated output computed from \( m_{i} \) replications). These averages, however, are still random, so the interpolation property loses its intuitive appeal. Nevertheless, Kriging may be attractive in random simulation because Kriging may decrease the predictor MSE at input combinations close together.

Note: Geostatisticians often use a model for (random) measurement errors that assumes a so-called nugget effect which is white noise; see Cressie (1993, pp. 59, 113, 128) and also Clark (2010). The Kriging predictor is then no longer an exact interpolator. Geostatisticians also study noise with heterogeneous variances; see Opsomer et al. (1999). In machine learning this problem is studied under the name heteroscedastic GP regression; see Kleijnen (1983) and our references in Sect. 5.1. Roustant et al. (2012) distinguishes between the nugget effect and homogeneous noise, such that the former gives a Kriging metamodel that remains an exact interpolator, whereas the latter does not. Historically speaking, Danie Krige worked in mining engineering and was confronted with the “nugget effect”; i.e., gold diggers may either miss the gold nugget “by a hair” or hit it “right on the head”. Measurement error is a fundamentally different issue; i.e., when we measure (e.g.) the temperature on a fixed location, then we always get different values when we repeat the measurement at points of time “only microseconds apart”, the “same” locations separated by nanomillimeters only, using different measurement tools or different people, etc.

In deterministic simulation, we may study numerical problems arising in Kriging. To mitigate such numerical problems, Lophaven et al. (2002, Eq. 3.16) and Toal et al. (2008) add a term to the covariance matrix \( \varSigma _{M} \) (also see Eq. (5.36) below); this term resembles the nugget effect, but with a “variance” that depends on the computer’s accuracy.

Note: Gramacy and Lee (2012) also discusses the use of the nugget effect to solve numerical problems, but emphasizes that the nugget effect may also give better statistical performance such as better CIs. Numerical problems are also discussed in Goldberg et al. (1998), Harari and Steinberg (2014b), and Sun et al. (2014).

In Sect. 5.6.1 we discuss a metamodel for stochastic Kriging (SK) and its analysis; in Sect. 5.6.2 we discuss designs for SK.

5.6.1 A Metamodel for SK

In the analysis of random (stochastic) simulation models, which use pseudorandom numbers (PRNs), we may apply SK, adding the intrinsic noise term \( \varepsilon _{r}(\mathbf{x}) \) for replication r at input combination x to the GP metamodel in Eq. (5.1) for OK with the extrinsic noise M(x):

\[ y_{r}(\mathbf{x}) =\mu +M(\mathbf{x}) +\varepsilon _{r}(\mathbf{x}) \tag{5.36} \]

where \( \varepsilon _{r}(\mathbf{x}) \) has a Gaussian distribution with zero mean and variance Var\( [\varepsilon _{r}(\mathbf{x})] \) and is independent of the extrinsic noise M(x). If the simulation does not use CRN, then \( \mathbf{\varSigma }_{\varepsilon } \)—the covariance matrix for the intrinsic noise—is diagonal with the elements Var\( [\varepsilon (\mathbf{x})] \) on the main diagonal. If the simulation does use CRN, then \( \mathbf{\varSigma }_{\varepsilon } \) is not diagonal; obviously, \( \mathbf{\varSigma }_{\varepsilon } \) should still be symmetric and positive definite. (Some authors—e.g. Challenor (2013)—use the term “aleatory” noise for the intrinsic noise, and the term “epistemic noise” for the extrinsic noise in Kriging; we use these alternative terms in Chaps. 1 and 6.)

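Averaging the \( m_{i} \) replications gives the average metamodel output \( \overline{y}(\mathbf{x}_{i}) \) and average intrinsic noise \( \overline{\varepsilon }(\mathbf{x}_{i}) \), so Eq. (5.36) is replaced by

\[ \overline{y}(\mathbf{x}_{i}) =\mu +M(\mathbf{x}_{i}) +\overline{\varepsilon }(\mathbf{x}_{i})\quad (i = 1,\ldots ,n). \tag{5.37} \]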

Obviously, if we obtain \( m_{i} \) replicated simulation outputs for input combination i and we do not use CRN, then \( \mathbf{\varSigma }_{\overline{\varepsilon }} \) is a diagonal matrix with main-diagonal elements Var\( [\varepsilon (\mathbf{x}_{i})]/m_{i} \). If we do use CRN and \( m_{i} \) is a constant m, then \( \mathbf{\varSigma }_{\overline{\varepsilon }} = \mathbf{\varSigma }_{\varepsilon }/m \) where \( \mathbf{\varSigma }_{\varepsilon } \) is a symmetric positive definite matrix.

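SK may use the classic estimators of Var\( [\varepsilon (\mathbf{x}_{i})] \) using \( m_{i} > 1 \) replications, which we have already discussed in Eq. (2.27):

\[ s^{2}(w_{i}) = \frac{\sum _{r=1}^{m_{i}}(w_{i;r} -\overline{w}_{i})^{2}}{m_{i} - 1}\quad (i = 1,\ldots ,n). \]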
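
As a small illustration, the following sketch computes, for each input combination, the average output and the estimated variance of that average, i.e. the classic variance estimate divided by the number of replications. Without CRN these values would form the main diagonal of the estimated covariance matrix of the averaged intrinsic noise. The function name and the data are made up for illustration.

```python
from statistics import mean, variance


def replication_summary(replications):
    """Return (average output, estimated variance of that average) per point."""
    summary = []
    for w in replications:
        m = len(w)
        if m < 2:
            raise ValueError("each input combination needs more than one replication")
        s2 = variance(w)  # sample variance with m - 1 in the denominator
        summary.append((mean(w), s2 / m))
    return summary


# Made-up outputs for n = 3 input combinations with unequal numbers of replications.
w = [[1.0, 3.0], [2.0, 4.0, 6.0], [5.0, 5.0, 5.0, 9.0]]
summary = replication_summary(w)
# Main diagonal of the estimated covariance matrix of the averages (no CRN).
diagonal = [var_of_mean for _, var_of_mean in summary]
```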

Instead of these point estimates of the intrinsic variances, SK may use another Kriging metamodel for the variances Var\( [\varepsilon (\mathbf{x}_{i})] \) (besides the Kriging metamodel for the mean \( E[y_{r}(\mathbf{x}_{i})] \)) to predict the intrinsic variances. We expect this alternative to be less volatile than \( s^{2}(w_{i}) \); after all, for normally distributed outputs \( s^{2}(w_{i}) \) is proportional to a chi-square variable (with \( m_{i} - 1 \) degrees of freedom) and has a large variance. Consequently, \( s^{2}(w_{i}) \) is not normally distributed so the GP assumed for \( s^{2}(w_{i}) \) is only a rough approximation. Because \( s^{2}(w_{i}) \geq 0 \), Goldberg et al. (1998) uses \( \log [s^{2}(w_{i})] \) in the Kriging metamodel. Moreover, we saw in Sect. 3.3.3 that a logarithmic transformation may make the variable normally distributed. We also refer to Kamiński (2015) and Ng and Yin (2012).

Note: Goldberg et al. (1998) assumes a known mean E[y(x)] and uses a Bayesian approach with Markov chain Monte Carlo (MCMC) methods. Kleijnen (1983) also uses a Bayesian approach but no MCMC. Neither Goldberg et al. (1998) nor Kleijnen (1983) considers replications. Replications are standard in stochastic simulation; nevertheless, stochastic simulation without replication is studied in Marrel et al. (2012). Risk and Ludkovski (2015) applies SK with estimated constant mean \( \widehat{\mu } \) (like OK does) and mean function \( f(\mathbf{x};\widehat{\beta }) \) (like UK does), and reports several case studies that give smaller MSEs for \( f(\mathbf{x};\widehat{\beta }) \) than for \( \widehat{\mu } \).

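SK uses the OK predictor and its MSE replacing \( \mathbf{\varSigma }_{M} \) by \( \mathbf{\varSigma }_{M} + \mathbf{\varSigma }_{\overline{\varepsilon }} \) and w by \( \overline{\mathbf{w}} \), so the SK predictor is

\[ \widehat{y}(\mathbf{x}_{0}) =\widehat{\mu } +\mathbf{\boldsymbol{\sigma }}_{M}(\mathbf{x}_{0})^{{\prime}}(\mathbf{\varSigma }_{M} +\mathbf{\varSigma }_{\overline{\varepsilon }})^{-1}(\overline{\mathbf{w}} -\widehat{\mu }\mathbf{1}) \]

and its MSE is

\[ \text{MSE}[\widehat{y}(\mathbf{x}_{0})] =\tau ^{2} -\mathbf{\boldsymbol{\sigma }}_{M}(\mathbf{x}_{0})^{{\prime}}(\mathbf{\varSigma }_{M} +\mathbf{\varSigma }_{\overline{\varepsilon }})^{-1}\mathbf{\boldsymbol{\sigma }}_{M}(\mathbf{x}_{0}) + \frac{[1 -\mathbf{1}^{{\prime}}(\mathbf{\varSigma }_{M} +\mathbf{\varSigma }_{\overline{\varepsilon }})^{-1}\mathbf{\boldsymbol{\sigma }}_{M}(\mathbf{x}_{0})]^{2}}{\mathbf{1}^{{\prime}}(\mathbf{\varSigma }_{M} +\mathbf{\varSigma }_{\overline{\varepsilon }})^{-1}\mathbf{1}}. \]

Here \( \mathbf{x}_{0} \) denotes the new input combination, \( \mathbf{\boldsymbol{\sigma }}_{M}(\mathbf{x}_{0}) \) the vector of covariances between M at \( \mathbf{x}_{0} \) and M at the n old input combinations, and \( \tau ^{2} \) the variance of M(x); also see Ankenman et al. (2010, Eq. 25).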
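
The substitution described above can be sketched numerically for a single input. The sketch assumes the usual OK forms with a generalized-least-squares estimate of the constant mean. The Gaussian covariance function, the parameter values `tau2` and `theta`, the function name, and the data are all assumptions for illustration, and the Kriging parameters are treated as known rather than estimated.

```python
import numpy as np


def sk_predict(x_old, w_bar, var_w_bar, x_new, tau2, theta):
    """SK prediction and MSE at x_new (one input, known Kriging parameters)."""
    x_old = np.asarray(x_old, dtype=float)
    w_bar = np.asarray(w_bar, dtype=float)
    ones = np.ones_like(w_bar)

    def cov(a, b):
        # Assumed Gaussian covariance function: tau2 * exp(-theta * (a - b)^2).
        return tau2 * np.exp(-theta * (a - b) ** 2)

    sigma_m = cov(x_old[:, None], x_old[None, :])  # extrinsic covariance matrix
    sigma_eps_bar = np.diag(var_w_bar)             # no CRN, so diagonal
    sigma = sigma_m + sigma_eps_bar
    sigma_0 = cov(x_old, x_new)                    # covariances with the new point

    # Solve linear systems instead of forming the inverse explicitly.
    a_ones = np.linalg.solve(sigma, ones)
    a_sig0 = np.linalg.solve(sigma, sigma_0)

    mu_hat = (a_ones @ w_bar) / (a_ones @ ones)    # GLS estimate of the mean
    y_hat = mu_hat + a_sig0 @ (w_bar - mu_hat * ones)
    delta = 1.0 - ones @ a_sig0
    mse = tau2 - sigma_0 @ a_sig0 + delta ** 2 / (ones @ a_ones)
    return float(y_hat), float(mse)


# Made-up averages and variances of averages at three old points.
x_old = [0.1, 0.5, 0.9]
w_bar = [1.1, 2.0, 9.5]
y_noisy, mse_noisy = sk_predict(x_old, w_bar, [0.01, 0.02, 0.5], 0.5, tau2=4.0, theta=10.0)
y_exact, mse_exact = sk_predict(x_old, w_bar, [0.0, 0.0, 0.0], 0.5, tau2=4.0, theta=10.0)
```

With zero intrinsic variances the sketch reduces to exact interpolation at an old point (zero MSE there), whereas positive intrinsic variances make the predictor smooth the averages instead of reproducing them.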

The output of a stochastic simulation may be a quantile instead of an average (Eq. (5.37) does use averages). For example, a quantile may be relevant in chance-constrained optimization; also see Eq. (6.35) and Sect. 6.4 on robust optimization. Chen and Kim (2013) adapts SK for the latter type of simulation output; also see Bekki et al. (2014), Quadrianto et al. (2009), and Tan (2015).

Note: Salemi et al. (2014) assumes that the simulation inputs are integer variables, and uses a Gaussian Markov random field. Chen et al. (2013) allows some inputs to be qualitative, extending the approach for deterministic simulation in Zhou et al. (2011). Estimation of the whole density function of the output is discussed in Moutoussamy et al. (2014).

There is not much software for SK. The Matlab software available on the following web site is distributed “without warranties of any kind”:

http://www.stochastickriging.net/.

The R package “DiceKriging” accounts for heterogeneous intrinsic noise; see Roustant et al. (2012). The R package “mlegp” is available on

http://cran.r-project.org/web/packages/mlegp/mlegp.pdf.

Software in C called PErK may also account for a nugget effect; see Santner et al. (2003, pp. 215–249).

In Sect. 5.3 we have already seen that ignoring the randomness of the estimated Kriging parameters \( \widehat{\mathbf{\boldsymbol{\psi }}} \) tends to underestimate the true variance of the Kriging predictor. To solve this problem in case of deterministic simulation, we may use parametric bootstrapping or its refinement called conditional simulation. Moreover, the three variants (plugging in \( \widehat{\mathbf{\boldsymbol{\psi }}} \), bootstrapping, or conditional simulation) may give predictor variances that reach their maxima for different new input combinations; these maxima are crucial in simulation optimization through “efficient global optimization”, as we shall see in Sect. 6.3.1. In stochastic simulation, we obtain several replications for each old input combination (see Eq. (5.37)), so a simple method for estimating the true predictor variance uses distribution-free bootstrapping. We have already discussed the general principles of bootstrapping in Sect. 3.3.5. Van Beers and Kleijnen (2008) applies distribution-free bootstrapping assuming no CRN, as we shall see in the next subsection (Sect. 5.6.2). Furthermore, Yin et al. (2009) also studies the effects that the estimation of the Kriging parameters has on the predictor variance.

Note: Mehdad and Kleijnen (2015b) applies stochastic intrinsic Kriging (SIK), which is more complicated than SK. Experiments with stochastic simulations suggest that SIK outperforms SK.

To estimate the true variance of the SK predictor, Kleijnen and Mehdad (2015a) applies the Monte Carlo method, distribution-free bootstrapping, and parametric bootstrapping, respectively—using an M/M/1 simulation model for illustration.

5.6.2 Designs for SK

Usually SK employs the same designs as OK and UK do for deterministic simulation. So, SK often uses a one-shot design such as an LHD; also see Jones et al. (2015) and MacCalman et al. (2013).

However, besides the n × k matrix with the n design points \( \mathbf{x}_{i} \in \mathbb{R}^{k} \) (i = 1, …, n) we need to select the number of replications \( m_{i} \). In Sect. 3.4.5 we have already discussed the analogous problem for linear regression metamodels; a simple rule-of-thumb is to select \( m_{i} \) such that with 1 −α probability the average output is within γ % of the true mean; see Eq. (3.30).
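
One common sequential reading of such a relative-precision rule is sketched below; it is not necessarily the exact form of Eq. (3.30). Replications are added until the half-width of the confidence interval for the mean is at most γ times the absolute average. The sketch uses a normal quantile where a Student t quantile would be more exact, and the function names, the pilot size `m0`, the cap `m_max`, and the toy simulation are assumptions for illustration.

```python
import random
from statistics import NormalDist, mean, stdev


def select_replications(simulate, x, gamma=0.15, alpha=0.05, m0=5, m_max=10_000, seed=1):
    """Add replications at input x until the relative precision gamma is met."""
    rng = random.Random(seed)
    # Normal quantile (assumption; a t quantile with m - 1 df is more exact).
    z = NormalDist().inv_cdf(1 - alpha / 2)
    w = [simulate(x, rng) for _ in range(m0)]  # pilot replications
    while True:
        m = len(w)
        w_bar = mean(w)
        half_width = z * stdev(w) / m ** 0.5
        # Stop when the CI half-width is within gamma of the average, or at the cap.
        if half_width <= gamma * abs(w_bar) or m >= m_max:
            return m, w_bar, half_width
        w.append(simulate(x, rng))


def toy_simulation(x, rng):
    # Hypothetical stand-in for a simulation: exponential output with mean x / (1 - x).
    return rng.expovariate((1 - x) / x)


m, w_bar, half_width = select_replications(toy_simulation, x=0.5)
```

Because the stopping rule is checked on the same data that determine the sample size, the realized coverage may differ somewhat from the nominal 1 − α.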

Note: For SK with heterogeneous intrinsic variances but without CRN (so \( \varSigma _{\varepsilon } \) is diagonal), Boukouvalas et al. (2014) examines optimal designs (which we also discussed for linear regression metamodels in Sect. 2.10.1). That article shows that designs that optimize the determinant of the so-called Fisher information matrix (FIM) outperform space-filling designs (such as LHDs), with or without replications. This FIM criterion minimizes the estimation errors of the GP covariance parameters (not the parameters \( \mathbf{\boldsymbol{\beta }} \) of the regression function \( \mathbf{f}(\mathbf{x})^{{\prime}}\mathbf{\beta } \)). That article recommends designs with at least two replications at each point; the optimal number of replications is determined through an optimization search algorithm. Furthermore, that article proposes the logarithmic transformation of the intrinsic variance when estimating a metamodel for this variance (we also discussed such a transformation in Sect. 3.4.3). Optimal designs for SK with homogeneous intrinsic variances (or a nugget effect) are also examined in Harari and Steinberg (2014a), and Spöck and Pilz (2015).

There are more complicated approaches. In sequential designs, we may use Algorithm 5.5 for deterministic simulation, but we change Step 3 (which finds the candidate point with the highest predictor variance) such that we find this point through distribution-free bootstrapping based on replication, as we shall explain below. Figure 5.8 is reproduced from Van Beers and Kleijnen (2008); it displays a fixed LHS design with n = 10 values for the traffic rate x in an M/M/1 simulation with experimental area 0.1 ≤ x ≤ 0.9, and a sequentialized design that is stopped after simulating the same number of observations (namely, n = 10). The plot shows that the sequentialized design selects more input values in the part of the input range that gives a drastically increasing (highly nonlinear) I/O function; namely 0.8 < x ≤ 0.9. It turns out that this design gives better Kriging predictions than the fixed LHS design does, especially for small designs, which are used in expensive simulations.

The M/M/1 simulation in Fig. 5.8 selects a run-length that gives a 95 % CI for the mean simulation output with a relative error of no more than 15 %. The sample size for the distribution-free bootstrap method is selected to be B = 50.

To estimate the predictor variance, Van Beers and Kleijnen (2008) uses distribution-free bootstrapping and treats the observed average bootstrapped outputs \( \overline{w}_{i}^{{\ast}} \) (i = 1, …, n) as if they were the true mean outputs; i.e., the Kriging metamodel is an exact interpolator of \( \overline{w}_{i}^{{\ast}} \) (obviously, this approach ignores the split into intrinsic and extrinsic noise that SK assumes).
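
The resampling step can be sketched as follows, assuming no CRN so that the replications of each old input combination are resampled independently. This is an illustration, not the implementation of Van Beers and Kleijnen (2008): the exact interpolator is passed in as a function, and the example plugs in piecewise-linear interpolation as a simple stand-in for the Kriging interpolator. All names and data are hypothetical.

```python
import random
from statistics import mean, variance


def bootstrap_predictor_variance(x_old, replications, candidates, predict, B=50, seed=1):
    """Distribution-free bootstrap estimate of the predictor variance per candidate."""
    rng = random.Random(seed)
    preds = {x0: [] for x0 in candidates}
    for _ in range(B):
        # Resample the replications of each old point with replacement,
        # then average them to obtain the bootstrapped averages.
        w_bar_star = [mean(rng.choices(w, k=len(w))) for w in replications]
        for x0 in candidates:
            preds[x0].append(predict(x_old, w_bar_star, x0))
    # Variance of the B bootstrapped predictions at each candidate point.
    return {x0: variance(p) for x0, p in preds.items()}


def linear_interpolator(x_old, w_bar, x0):
    """Stand-in exact interpolator (NOT Kriging): piecewise-linear in one input."""
    pts = sorted(zip(x_old, w_bar))
    for (xa, wa), (xb, wb) in zip(pts, pts[1:]):
        if xa <= x0 <= xb:
            return wa + (wb - wa) * (x0 - xa) / (xb - xa)
    raise ValueError("x0 lies outside the experimental area")


# Made-up replicated outputs at three old traffic rates; noise grows with x.
x_old = [0.1, 0.5, 0.9]
replications = [[0.10, 0.12, 0.11, 0.13], [0.9, 1.1, 1.0, 1.2], [7.0, 11.0, 8.0, 10.0]]
variances = bootstrap_predictor_variance(x_old, replications, [0.3, 0.7], linear_interpolator)
# The candidate with the largest bootstrapped predictor variance is simulated next.
next_point = max(variances, key=variances.get)
```

Replacing `linear_interpolator` by a fitted Kriging interpolator would bring the sketch closer to the procedure described above.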

Note: Besides the M/M/1 simulation, Van Beers and Kleijnen (2008) also investigates an (s, S) inventory simulation. Again, the sequentialized design for this (s, S) inventory simulation gives better predictions than a fixed-size (one-shot) LHS design; the sequentialized design concentrates its points in the steeper part of the response surface. Chen and Li (2014) also determines the number of replications through a relative precision requirement, but assumes linear interpolation instead of Kriging; that article also provides a comparison with the approach in Van Beers and Kleijnen (2008).