Abstract

In computer modeling, errors and uncertainties inevitably arise from the mathematical idealization of physical processes, which stems from insufficient knowledge of both the accurate model form and the precise values of input parameters. While these errors and uncertainties are quantifiable, compensations between them can lead to multiple model forms and input parameter sets exhibiting a similar level of agreement with available experimental observations. Such non-uniqueness makes the selection of a single, best computer model (i.e. model form and values for its associated parameters) unjustifiable. Therefore, it becomes necessary to evaluate model performance based not only on the fidelity of the predictions to available experiments but also on the model’s ability to sustain such fidelity given the incompleteness of knowledge regarding the model itself; this ability is herein referred to as robustness. In this paper, the authors present a multi-objective approach to model calibration that accounts for not only the model’s fidelity to experiments but also its robustness to incomplete knowledge. With two conflicting objectives, the multi-objective model calibration results in a family of non-dominated solutions exhibiting varying levels of fidelity and robustness, effectively forming a Pareto front. The Pareto front solutions can be grouped according to the nature of their compromise between the two objectives, which can in turn help determine clusters in the parameter domain. Knowledge of these clusters can shed light on the nature of compensations as well as aid in the inference of uncertain input parameters. To demonstrate the feasibility and application of this new approach, the authors consider the computer model of a structural steel frame with uncertain connection stiffness parameters under static loading conditions.

Keywords

- Model calibration

- Info-gap decision theory

- Non-dominated sorting genetic algorithm (NSGA-II)

- K-means clustering

- Multi-objective optimization

23.1 Introduction

Regardless of sophistication and extent of detail included, computer models at best approximate the segment of reality they are built to represent. Thus, the use of computer models for decision making mandates that the validity (i.e. worthiness) of model predictions be assessed. Traditionally, this process has solely focused on the fidelity of model predictions to experiments. However, when evaluated according to a fidelity metric alone, compensations between uncertainties and errors that originate from various sources during the model development process can inflate the apparent capabilities of inferior models. Such compensations can lead to multiple models yielding predictions of similar fidelity to the available experiments, widely referred to as non-uniqueness [1].

Non-uniqueness occurs when an incorrectly assigned parameter value compensates for another incorrect parameter value or acts to offset model bias. Compensations between parameters may occur when correlated parameters are calibrated simultaneously (which can be prevented by calibrating each parameter using an independent observable) or when available experimental observations are insufficient (which can be alleviated by further data collection). Compensations may also result from the definitions of the test-analysis correlation metrics, for instance when errors in predictions of multiple outputs are lumped together in one metric. In such situations, considering errors in each of the outputs separately might be useful in determining what role compensations play during model calibration. Compensations might also be due to the inherent imperfection of the model form, in that the model parameters might be calibrated to values that counter the model bias. In such situations, explicitly considering the model bias through the training of an independent error model can alleviate the problem. Oftentimes, all of these factors are present to varying degrees, making it difficult to come up with one overarching solution.

These compensations during parameter calibration make it difficult (and in fact, unjustifiable) to select one set of calibrated parameter values. This difficulty can be alleviated by ensuring the robustness of a model’s fidelity against uncertainty in the calibrated input parameters. Robustness in model predictions, as defined by info-gap decision theory, means that the model predictions reproduce observables within a predefined fidelity threshold even when the inherent uncertainties are considered. Hence, a viable approach to address the difficulties that compensations cause in model calibration is to seek parameter value sets that not only yield a satisfactory level of agreement with observations but also maintain that agreement when the parameter values themselves are allowed to vary.

The above statement effectively converts model calibration into a multi-objective problem with two conflicting objectives (see Ben-Haim and Hemez [2] for a discussion on the conflicting nature of fidelity and robustness), in which one objective cannot be improved without compromising the other. Accordingly, in this study, model calibration is treated as a multi-objective problem by considering both the model’s fidelity to measurements and the robustness of this fidelity to parameter uncertainty. Multi-objective optimization supplies a family of plausible solutions, each with a different compromise between the conflicting objectives. This family, known as the Pareto front, visually displays the trade-off between the two objectives.

The shape of the Pareto front depends on the nature of the optimization problem. Herein, the desired outcome for the robustness objective (horizon of uncertainty) is the larger-the-better and the desired outcome for the fidelity objective (error threshold) is the smaller-the-better, which leads to a monotonically increasing Pareto front. Such Pareto fronts tend to have distinct regions depending on the nature of the compromise between objectives: regions where gains and losses between objectives are not balanced (gaining in one objective implies a significant loss in the other) and a region where gains and losses between objectives are balanced. These distinct regions on the Pareto front tend to be associated with distinct clusters in the input parameter space. Knowing the distribution of input parameters in the input space can (i) allow the modeler to evaluate the extent of the compensation between model input parameters and (ii) aid in decisions regarding the inferred parameter values.

This paper is organized as follows. Section 23.2 provides a background review of earlier reported studies in which clustering of Pareto optimal solutions is evaluated. Section 23.3 presents a discussion on the multi-objective model calibration integrated with clustering algorithms as well as an overview of the algorithms implemented for both multi-objective optimization and clustering. Section 23.4 illustrates its application by means of a case study of a two bay, two story steel moment resisting frame with imprecisely known connection parameters. Section 23.5 concludes the study by overviewing both the contributions and limitations of the proposed methodology.

23.2 Background Review

Cluster analysis divides a dataset into distinct groups, referred to as “clusters,” where data points within a cluster are similar to one another and different from the data points of other clusters. Clustering is used to discover hidden “structures” within a given set of data. Cluster analysis identifies patterns among data points to group similar data together, revealing relationships in the data that were previously unknown. Analyzing the data in clusters helps to outline hidden correlations within the system at hand. Furthermore, revealing relationships in the system informs decision makers who need extensive knowledge of the system to implement it for its intended use. Cluster analysis is widely used in many fields, such as data mining [3], exploratory data analysis [4] and vector quantization [5].

Cluster analysis has previously been used to cluster Pareto optimal solutions to aid in the selection of a single optimum solution (especially when the Pareto front contains a large number of solutions). For instance, Zio and Bazzo [6] proposed a two-step procedure to reduce the number of optimal solutions in the Pareto front. In their study, a subtractive clustering algorithm is used to cluster the solutions into “families.” For each family, a “head of the family” is selected to effectively reduce the number of possible solutions on the Pareto front, aiding the decision making process. Finally, they represented and analyzed the reduced Pareto front by Level diagrams. Veerappa and Letier [10] implemented a hierarchical clustering method and clustered the Pareto optimal solutions based on the cost-weighted Jaccard distance function for a cost-value based requirements selection problem. The Jaccard distance measures the dissimilarity between two sets as one minus the ratio of the size of their intersection to the size of their union. The cost-weighted Jaccard distance function is a version of the Jaccard distance that applies weights based on the importance of each objective.
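To make the distance measure concrete, the following is a minimal sketch of the plain Jaccard distance between two solutions represented as sets of selected items, together with one possible weighted variant. The function names, the item labels and the weighting scheme are assumptions made for illustration; they are not taken from Veerappa and Letier [10], whose exact cost weighting may differ.

```python
def jaccard_distance(a: set, b: set) -> float:
    """Return 1 - |a & b| / |a | b|; two empty sets are treated as identical."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


def weighted_jaccard_distance(a: set, b: set, weight: dict) -> float:
    """Illustrative weighted variant: each item counts by its weight instead of 1."""
    total = sum(weight[item] for item in a | b)
    if total == 0:
        return 0.0
    shared = sum(weight[item] for item in a & b)
    return 1.0 - shared / total


# Hypothetical solutions, each a set of selected requirements.
solution_a = {"r1", "r2", "r3"}
solution_b = {"r2", "r3", "r4"}
cost = {"r1": 1.0, "r2": 1.0, "r3": 1.0, "r4": 5.0}  # made-up weights

print(jaccard_distance(solution_a, solution_b))                 # 0.5
print(weighted_jaccard_distance(solution_a, solution_b, cost))  # 0.75
```

In this toy case the two solutions share two of four items, so the unweighted distance is 0.5; giving the unshared item r4 a large weight pushes the weighted distance up to 0.75.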

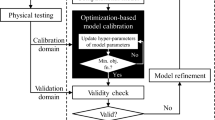

23.3 Methodology

23.3.1 Fidelity in Model Calibration

Combined effects of incompleteness of model form and the imprecision in the input parameters yield deviations between model predictions and observable reality. Such deviations can be quantified as shown in Eq. 23.1:

where k represents the imprecise parameters to be calibrated (selected based on their sensitivity and uncertainty), and R(k) represents the fidelity metric (error) between the model prediction, M(k), and the experimental observation, E.

Given the inevitable uncertainties in experimental observations and numerical calculations, aiming to reach a perfect agreement between model predictions and experimental observations would have little meaning. A more rational approach instead is to aim to reach sufficient agreement between the two. Herein, sufficiency can be defined by a preset fidelity threshold as shown in Eq. 23.2:

where Rc denotes the error tolerance between model prediction and experiment, which reflects the desired level of fidelity.
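Read together with the definitions above, the fidelity metric and the sufficiency condition can be sketched as follows. The absolute-difference form is an assumption made here for a scalar output; for multiple outputs a vector norm would take its place, and the choice of norm is not specified by the surrounding text.

\[ R(k) = \left| M(k) - E \right| \qquad \text{(cf. Eq. 23.1)} \]

\[ R(k) \le R_c \qquad \text{(cf. Eq. 23.2)} \]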

23.3.2 Overview of Info-Gap Decision Theory

Info-gap decision theory supplies a non-probabilistic approach to decision making under uncertainty. The Info-gap approach uses convex, nested sets of uncertainty models to quantify the allowable range of uncertainty the system can tolerate before it fails to meet the preset performance criteria [7].

Equation 23.3 shows the info-gap model with uncertain calibrated parameters. In this equation, k once again represents the imprecise parameters and \( \overset{\sim }{k} \) represents the nominal values for these parameters, which herein will be obtained through calibration. The nominal values form the vector of best-estimate model parameters that result in the smallest error between experiments and simulations [8]. The extent of uncertainty in the parameters, commonly referred to as the horizon of uncertainty, is denoted by α.

The function R(k) represents the fidelity of the model considering uncertain calibrated parameters. By increasing the model’s horizon of uncertainty, α, the uncertain parameters are allowed to vary within a wider range around their nominal values. For each given value of α, the uncertain parameters yield a new worst-case fidelity, \( \widehat{R}\left(\alpha \right) \), as shown in Fig. 23.1 (left). Because the uncertainty sets are nested, the worst case at a larger α can never be better than that at a smaller α; therefore, \( \widehat{R}\left(\alpha \right) \) is a non-decreasing function of α, as shown in Fig. 23.1 (right).

As shown in Fig. 23.1, as the horizon of uncertainty increases, \( \widehat{R}\left(\alpha \right) \) eventually exceeds the predefined value of fidelity threshold, Rc. The maximum horizon of uncertainty in which uncertain parameters are allowed to vary without failing to satisfy the fidelity requirement is defined as \( \widehat{\alpha} \), as shown in Eq. 23.5. Thus, a larger \( \widehat{\alpha} \) is desired and representative of greater robustness of the model.
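The robustness evaluation described above can be sketched numerically. The example below assumes a fractional-error uncertainty set, \( U(\alpha, \overset{\sim }{k}) = \{ k : |k_i - \overset{\sim }{k}_i| \le \alpha \overset{\sim }{k}_i \} \), a worst-case fidelity \( \widehat{R}(\alpha) = \max_{k \in U} R(k) \), and a robustness \( \widehat{\alpha} = \max \{ \alpha : \widehat{R}(\alpha) \le R_c \} \). The toy `model` function, the grid search and all numerical values are hypothetical stand-ins chosen for illustration; they are not the finite element model or the settings used in this study.

```python
from itertools import product

import numpy as np


def model(k):
    """Hypothetical stand-in for M(k): response grows as stiffness drops."""
    k = np.asarray(k, dtype=float)
    return float(np.sum(1.0 / k))


def fidelity(k, observed):
    """Assumed fidelity metric R(k) = |M(k) - E| for a scalar output."""
    return abs(model(k) - observed)


def worst_case_fidelity(k_nominal, alpha, observed, n_grid=11):
    """Largest error over a grid covering the assumed set U(alpha, k_nominal)."""
    k_nominal = np.asarray(k_nominal, dtype=float)
    axes = [np.linspace(kn * (1 - alpha), kn * (1 + alpha), n_grid)
            for kn in k_nominal]
    return max(fidelity(k, observed) for k in product(*axes))


def robustness(k_nominal, r_c, observed, alphas):
    """Largest alpha (from an ascending list) whose worst case stays within r_c."""
    alpha_hat = 0.0
    for alpha in alphas:
        if worst_case_fidelity(k_nominal, alpha, observed) <= r_c:
            alpha_hat = float(alpha)
        else:
            break
    return alpha_hat


# Synthetic "experiment" generated from the toy model itself.
k_tilde = [2.0, 4.0]
observed = model(k_tilde)
alphas = np.linspace(0.0, 0.5, 51)
alpha_hat = robustness(k_tilde, r_c=0.2, observed=observed, alphas=alphas)
print(round(alpha_hat, 2))
```

A grid search is used only because it is easy to read; it locates the worst case reliably here because the toy response is monotonic in each parameter, so the extreme error sits at a corner of the set. For a general model an optimizer would be needed to find the worst case within each uncertainty set.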

23.3.3 Overview of Multi-objective Optimization

Multi-objective problems arise when multiple objectives influence the problem in a conflicting manner. Multi-objective optimization supplies a means to obtain solutions for such problems in that conflicting objectives are optimized simultaneously under given constraints. Multi-objective optimization can be expressed as shown in the following equation:

where f represents each objective function and h represents the constraint function.
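For reference, a generic statement of such a problem in standard notation is sketched below. The decision vector x, the number of objectives p and the number of constraints q are symbols introduced here for illustration, and the inequality form of the constraints is an assumption.

\[ \min_{x} \; F(x) = \left[ f_1(x), f_2(x), \ldots, f_p(x) \right] \quad \text{subject to} \quad h_j(x) \le 0, \quad j = 1, \ldots, q \]

In the calibration setting of this paper, the decision variables would be the nominal parameter values \( \overset{\sim }{k} \), with one objective expressing fidelity (to be minimized) and the other expressing robustness (to be maximized, or equivalently its negative minimized).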

Multi-objective optimization results in a set of compromise solutions, which represent the trade-off between conflicting objectives. Referred to as the Pareto front, these solutions are optimal in the sense that improving one objective is not possible without degrading the other(s). Conversely, when the objectives do not conflict, a single optimal solution can be obtained and multi-objective optimization is not needed.

Once the Pareto front is determined, decision makers can select a solution from this optimal set, taking the trade-off between multiple objectives into account. The shape of the Pareto front is important for this study because it indicates the nature of the trade-off between the objective functions. For instance, in Fig. 23.2, regions where the slope of the Pareto front is steep (or shallow) indicate solutions where a slight improvement in one objective requires a significant sacrifice in the other. In Fig. 23.2, three distinct regions can be identified with distinctly different trade-off characteristics (indicated with gray highlights).
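The notion of non-dominance behind the Pareto front can be illustrated with a short filter. This is a minimal sketch, not the NSGA-II sorting procedure itself; it assumes all objectives are expressed as quantities to minimize, so a robustness value that should be maximized is negated first. The candidate values are made up for illustration.

```python
import numpy as np


def non_dominated_mask(costs):
    """Return a boolean mask of non-dominated rows; every column is minimized."""
    costs = np.asarray(costs, dtype=float)
    mask = np.ones(costs.shape[0], dtype=bool)
    for i in range(costs.shape[0]):
        # Row j dominates row i if it is no worse in all objectives
        # and strictly better in at least one.
        no_worse = np.all(costs <= costs[i], axis=1)
        better = np.any(costs < costs[i], axis=1)
        if np.any(no_worse & better):
            mask[i] = False
    return mask


# Hypothetical candidates: (fidelity error, robustness).
candidates = np.array([
    [0.10, 0.05],
    [0.20, 0.15],
    [0.30, 0.12],  # dominated: higher error and lower robustness than row 1
    [0.40, 0.30],
    [0.25, 0.15],  # dominated: higher error, same robustness as row 1
])
# Minimize error, maximize robustness (negated to become a minimization).
costs = np.column_stack([candidates[:, 0], -candidates[:, 1]])
mask = non_dominated_mask(costs)
print(candidates[mask])
```

The three surviving rows form a front along which the error and the robustness increase together, which is the monotonic trade-off described in the text.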

23.3.4 Application of K-Means Clustering to Pareto Front

K-means clustering is a partitioning method and one of the most commonly used clustering algorithms owing to its simple implementation and computational efficiency. It is a centroid-based clustering method [9] that partitions data into a user-defined number of clusters, each of which is associated with a centroid.

The main procedure of the K-means clustering algorithm can be broken down into four steps: (i) selecting a user-defined number of data points as the initial set of cluster centroids, (ii) assigning each point to the cluster with the nearest centroid, (iii) recalculating the centroid of each cluster, and (iv) repeating the assignment and centroid update until the clusters stabilize.

The algorithm starts by randomly choosing the predefined number of solutions in the Pareto front as the initial set of cluster centroids. Then, each solution in the Pareto front is assigned to the cluster with the nearest centroid, where nearness is measured by the Euclidean distance between the solution and each centroid. To express the formulation of the Euclidean distance, let us consider an N-dimensional Pareto front set that contains n solutions, \( N_n = (x_1, x_2, \ldots, x_n) \), where these n solutions are partitioned into m sets, \( H = (h_1, h_2, \ldots, h_m) \), with m ≤ n.

where m represents the number of centroids (i.e. number of clusters) and \( C_i \) represents the centroid of each cluster.
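With these symbols, the Euclidean distance used for the assignment step and the quantity that K-means seeks to reduce can be written in their standard textbook forms, given here as a sketch. The component index l is notation introduced for illustration, and the exact arrangement of Eq. 23.7 may differ.

\[ d\left(x_j, C_i\right) = \left\lVert x_j - C_i \right\rVert_2 = \sqrt{\sum_{l=1}^{N} \left( x_{j,l} - C_{i,l} \right)^2} \]

\[ \min_{H} \; \sum_{i=1}^{m} \sum_{x_j \in h_i} \left\lVert x_j - C_i \right\rVert_2^2 \]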

According to the distances calculated in Eq. 23.7, each of the n data points is assigned to the nearest of the m centroids, and the centroid of each cluster is then recalculated. This iterative procedure is repeated until the convergence condition is satisfied or until the predefined number of iterations is reached. The convergence condition is satisfied when recalculating the centroids no longer changes the clusters by more than a predefined amount.

The K-means clustering algorithm assigns the solutions in the Pareto front to non-overlapping clusters, where solutions within a cluster have similar characteristics. For instance, within one cluster, we may observe that the value of objective 2 exhibits small changes for varying values of objective 1. It is interesting to note that if the problem at hand consists of continuous functions, then each cluster in the Pareto front would be associated with parameters that also have similar values. Observing the clusters formed in the parameter space that correspond to the clusters in the solution space can help decision makers evaluate the effect of compensations in model calibration and aid in model parameter selection (note that this is an inverse analysis from the solution space to the parameter space).
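The four steps above, followed by the mapping from clusters in the solution space back to the parameter space, can be sketched as below. This is a minimal NumPy illustration rather than the implementation used in this study; the Pareto front points and the (K1, K2) values are made-up numbers, and the function name, seed and tolerance are assumptions.

```python
import numpy as np


def kmeans(points, m, max_iter=100, tol=1e-8, seed=0):
    """Lloyd-style K-means; returns (labels, centroids)."""
    points = np.asarray(points, dtype=float)
    rng = np.random.default_rng(seed)
    # (i) pick m distinct solutions as the initial centroids
    centroids = points[rng.choice(len(points), size=m, replace=False)]
    for _ in range(max_iter):
        # (ii) assign each solution to its nearest centroid (Euclidean distance)
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # (iii) recompute each centroid; an empty cluster keeps its old centroid
        updated = np.array([
            points[labels == i].mean(axis=0) if np.any(labels == i) else centroids[i]
            for i in range(m)
        ])
        shift = np.linalg.norm(updated - centroids)
        centroids = updated
        # (iv) repeat until the centroids no longer move appreciably
        if shift <= tol:
            break
    # final assignment against the returned centroids
    dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1), centroids


# Hypothetical Pareto front: columns are (fidelity error, robustness).
front = np.array([
    [0.05, 0.02], [0.08, 0.03], [0.10, 0.05],
    [0.20, 0.15], [0.24, 0.18], [0.28, 0.21],
    [0.40, 0.30], [0.45, 0.31], [0.50, 0.32],
])
# Hypothetical calibrated parameters (K1, K2) behind each front solution.
params = np.array([
    [1.0, 1.1], [1.1, 1.2], [1.2, 1.3],
    [2.0, 2.1], [2.2, 2.3], [2.4, 2.5],
    [3.5, 3.4], [3.8, 3.9], [4.0, 4.2],
])

labels, centroids = kmeans(front, m=3)
for c in range(3):
    members = params[labels == c]
    if len(members):
        # Inverse view: the parameter ranges spanned by each solution cluster.
        print(c, members.min(axis=0), members.max(axis=0))
```

Because the initial centroids are chosen at random, K-means can settle in a local optimum, so in practice several restarts are commonly compared. When the objectives have very different scales, normalizing them before clustering may also be worth considering.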

23.4 Case Study

The proposed methodology is demonstrated on a case study of a two bay, two story steel moment resisting frame, shown in Fig. 23.3. The frame is built with beams and columns that have uniform cross-sections. Two external horizontal, static loads are applied to the top and the first story of this steel moment resisting frame (Fig. 23.3). It is assumed that the loads applied do not cause deformations beyond the elastic range. Material properties and physical dimensions of the portal frame members are provided in Table 23.1.

Connection stiffness between the substructures in steel moment resisting frames is typically highly uncertain. In the frame system shown in Fig. 23.3, four linear rotational springs are assigned to represent beam to column connections located at the top and bottom of the first story left and center columns. The stiffnesses of these rotational springs are represented by parameters K1 and K2. All other beam to column connections are assumed rigid and columns are pin connected to the foundation. The connection stiffness values, K1 and K2, are treated as the calibration parameters. The exact stiffness values of these linear rotational springs are provided in Table 23.2 along with their plausible ranges (i.e. parameter space).

23.4.1 Multi-objective Optimization: Robustness Versus Nominal Fidelity

In this case study, the error between model predictions and measured observables as well as the robustness of the model predictions given uncertain parameters are implemented as objectives. A finite element model is developed and used to predict the maximum lateral displacement at the first story of the portal frame under static lateral loading. Synthetic experimental data are obtained by using sets of input parameters with exact values, while model predictions are obtained in a separate process using sets of parameters with imprecise values. Inaccurately calibrated parameters result in disagreements between predictions and experiments for the maximum lateral displacement at the first story, quantified by the fidelity metric (refer back to Eq. 23.1). According to the proposed procedure explained in Sects. 23.3.1 and 23.3.2, the fidelity threshold, Rc, is predefined to equal 0.5 and the horizon of uncertainty, α, is varied from 0.01 to 0.5 by a step size of 0.1. NSGA-II is implemented to solve the multi-objective optimization problem using 100 generations with 200 individuals in each to obtain the Pareto front.

The conflicting relationship between the objectives arises because robustness to parameter uncertainty decreases as the fidelity requirement is tightened, i.e. as the allowable error is reduced [2]. The shape of the Pareto front reflects this monotonic trade-off between the two objectives. The K-means clustering algorithm is implemented to cluster the solutions in the Pareto front using three clusters. The number of clusters is determined by considering the changes in slope of the Pareto front (recall Fig. 23.2).

The result of the clustered multi-objective optimization is shown in Fig. 23.4, which provides a clear visualization of the spread of solution groups along the Pareto front. The distribution of calibrated parameters corresponding to each cluster is shown in Fig. 23.5. It should be noted that this case study resulted in a partial Pareto front, which is possible with the NSGA-II algorithm. Decision makers may use information gained from the clusters to select a parameter set with which model outcomes balance both of the objectives. Decision makers may first select the cluster containing the most solutions satisfying their requirements. Next, the parameter sets corresponding to this cluster may be determined.

In Fig. 23.5, the correlation between the two calibration parameters is evident from the linear distribution of the parameter sets: as K1 increases, K2 also increases.

23.4.2 Multi-objective Optimization: Robustness Versus Fidelity Threshold

According to the methodology presented in Section 23.3.2, a larger robustness is desired, meaning that the model can tolerate greater uncertainty in its parameters while still meeting the fidelity requirement. The fidelity threshold bounds the error allowed between predictions and measurements. Hence, minimizing the fidelity threshold corresponds to allowing only a low level of error between predictions and experiments. Maximizing the robustness causes the corresponding fidelity threshold to increase. This trade-off relationship between the two objectives is revealed by the shape of the Pareto front. NSGA-II is implemented to solve the multi-objective optimization problem with 100 generations and 200 individuals in each generation. The K-means clustering algorithm is applied to the solutions. Figure 23.6 presents the robustness function (recall Fig. 23.1 right) for a select number of Pareto optimal solutions. Plotting the robustness functions for the Pareto optimal solutions effectively results in the Pareto front itself (notice the similarity between Figs. 23.4 and 23.6).

23.4.3 New Predictions for Portal Frame by Using Parameter Sets in Each of Clusters

In this section, a series of new predictions for the steel moment resisting frame is presented based on the parameter sets shown in Fig. 23.5. Each of these parameter sets is used in the finite element model to predict the maximum lateral displacement (drift), maximum moment and maximum shear force at the first story as well as the maximum lateral displacement at the roof of the portal frame. The results show that the parameter value sets in each cluster yield different predictions and that the predictions themselves are clustered (Figs. 23.7, 23.8, and 23.9).

23.5 Conclusion

Model calibration considering both the model’s fidelity to measurements and robustness to parameter uncertainty results in a multi-objective problem. Multi-objective optimization provides insight into the trade-off between objectives through a Pareto front. However, understanding the compromise between objectives alone is not sufficient for decision makers, who are often required to select a single best-performing solution that satisfies each of their requirements. The selection task becomes more burdensome when the set of non-dominated solutions is large. For this reason, the authors proposed a methodology that implements the K-means clustering algorithm to cluster the solutions in the Pareto front, making the selection task more feasible. The main idea of this methodology is to categorize possible solutions into different groups based on their spread on the Pareto front. Calibrated parameter values may then be selected from among these groups of solutions.

Furthermore, this study can also help decision makers select among the clusters obtained. This can be achieved by evaluating the distribution of calibration parameters within each cluster. A key benefit of clustering Pareto front solutions is the ability to evaluate the extent of the compensation between model input parameters. Clusters with wide parameter distributions indicate severe compensations between parameters. Therefore, the model developer may select a parameter set with the least amount of compensation, or determine steps to reduce compensations if they are too large.

The proposed methodology has been applied to a case study of a steel moment resisting frame. The results show that this methodology makes it more feasible to select the optimal group of solutions, which narrows down the range from which calibration parameters are selected. A separate robustness analysis presents the trade-off between robustness to parameter uncertainty and the fidelity threshold. Hence, for a given fidelity threshold, the maximum level of parameter uncertainty that the model can tolerate can be determined.

References

1. Berman A, Flannelly WG (1971) Theory of incomplete models of dynamic structures. AIAA J 9(8):1481–1487

2. Ben-Haim Y, Hemez FM (2004) Robustness-to-uncertainty, fidelity-to-data and prediction looseness of models. In: Proceedings of the 22nd international modal analysis conference (IMAC-XXII), pp 26–29

3. Fayyad UM, Piatetsky-Shapiro G, Smyth P, Uthurusamy R (1996) Advances in knowledge discovery and data mining. AAAI Press/MIT Press, Menlo Park

4. Xu R, Wunsch D (2005) Survey of clustering algorithms. IEEE Trans Neural Netw 16(3):645–678

5. Yair E, Zeger K, Gersho A (1992) Competitive learning and soft competition for vector quantizer design. IEEE Trans Signal Process 40(2):294–309

6. Zio E, Bazzo R (2011) A clustering procedure for reducing the number of representative solutions in the Pareto Front of multiobjective optimization problems. Eur J Oper Res 210(3):624–634

7. Ben-Haim Y (2006) Info-gap decision theory: decisions under severe uncertainty. Academic Press, Oxford

8. Atamturktur S, Liu Z, Cogan S, Juang H (2014) Calibration of imprecise and inaccurate numerical models considering fidelity and robustness: a multi-objective optimization-based approach. Struct Multidiscip Optim 50(6):1–13

9. Awasthi P, Balcan MF (2013) Center based clustering: a foundational perspective. Survey chapter in handbook of cluster analysis (manuscript)

10. Veerappa V, Letier E (2011) Understanding clusters of optimal solutions in multi-objective decision problems. In: 19th IEEE international conference on requirements engineering (RE), IEEE, pp 89–98

Copyright information

© 2015 The Society for Experimental Mechanics, Inc.

About this paper

Cite this paper

Atamturktur, S., Stevens, G., Cheng, Y. (2015). Clustered Parameters of Calibrated Models When Considering Both Fidelity and Robustness. In: Atamturktur, H., Moaveni, B., Papadimitriou, C., Schoenherr, T. (eds) Model Validation and Uncertainty Quantification, Volume 3. Conference Proceedings of the Society for Experimental Mechanics Series. Springer, Cham. https://doi.org/10.1007/978-3-319-15224-0_23

DOI: https://doi.org/10.1007/978-3-319-15224-0_23

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-15223-3

Online ISBN: 978-3-319-15224-0

eBook Packages: Engineering, Engineering (R0)