Abstract

This chapter is concerned with the perception and simulation of self-motion in virtual environments, and how spatial presence and other higher cognitive and top-down factors can contribute to improving the illusion of self-motion (“vection”) in virtual reality (VR). In the real world, we are used to being able to move around freely and interact with our environment in a natural and effortless manner. Current VR technology, however, hardly allows for natural, life-like interaction between the user and the virtual environment. One crucial shortcoming is the insufficient and often unconvincing simulation of self-motion, which frequently causes disorientation, unease, and motion sickness. The specific focus of this chapter is the investigation of potential relations between higher-level factors like presence on the one hand and self-motion perception in VR on the other hand. Even though both presence and self-motion illusions have been extensively studied in the past, the question of whether and how they might be linked to one another has received relatively little attention from researchers so far. After reviewing relevant literature on vection and presence, we present data from two experiments, which explicitly investigated potential relations between vection and presence and indicate that there might indeed be a direct link between these two phenomena. We discuss theoretical and practical implications of these findings and conclude by sketching a tentative theoretical framework that discusses how a broadened view that incorporates both presence and vection research might lead to a better understanding of both phenomena, and might ultimately be employed to improve not only the perceptual effectiveness of a given VR simulation, but also its behavioural and goal/application-specific effectiveness.

Keywords

- Behavioural effectiveness

- Cognitive factors

- Experimentation

- Framework

- Higher-level factors

- Human factors

- Human-computer interfaces

- Immersion

- Perception-action loop

- Perceptual effectiveness

- Perceptually-Oriented Ego-Motion Simulation

- Presence

- Self-motion illusion

- Self-Motion Simulation

- Spatial Presence

- Vection

- Virtual environments

- Virtual reality

This chapter is concerned with the perception and simulation of self-motion in virtual environments, and how spatial presence and other higher cognitive and top-down factors can contribute to improving the illusion of self-motion (“vection”) in virtual reality (VR). In the real world, we are used to being able to move around freely and interact with our environment in a natural and effortless manner. Current VR technology, however, hardly allows for natural, life-like interaction between the user and the virtual environment. One crucial shortcoming in current VR is the insufficient and often unconvincing simulation of self-motion, which frequently causes disorientation, unease, and motion sickness (Lawson et al. 2002). We posit that a realistic perception of self-motion in VR is a fundamental constituent for spatial presence and vice versa. Thus, by improving both spatial presence and self-motion perception in VR, we aim to eventually enable perceptual realism and performance levels in VR similar to the real world. Prototypical examples that currently pose considerable challenges include basic tasks like spatial orientation and distance perception, as well as applied scenarios like training and entertainment applications. Users frequently get lost in VR while navigating, and simulated distances appear to be compressed and underestimated compared to the real world (Chance et al. 1998; Creem-Regehr et al. 2005; Ruddle 2013; Hale and Stanney 2014; Witmer and Sadowski 1998).

The specific focus of this chapter is the investigation of potential relations between presence and other higher-level factors on the one hand and self-motion perception in VR on the other hand. Even though both presence and self-motion illusions have been extensively studied in the past, the question of whether and how they might be linked to one another has received relatively little attention from researchers so far. After a brief review of the relevant literature on vection and presence, we will present data from two experiments which explicitly investigated potential relations between vection and presence and indicate that there might indeed be a direct link between these two phenomena (Riecke et al. 2004, 2006a). In the last part of this chapter, we will discuss the theoretical and practical implications of these findings for our understanding of presence and self-motion perception. We will conclude by sketching a tentative theoretical framework that discusses how a broadened view that incorporates both presence and vection research might lead to a better understanding of both phenomena, and might ultimately be employed to improve not only the perceptual effectiveness of a given VR simulation, but also its behavioural and goal/application-specific effectiveness.

The origins of the work presented here were inspired by an EU-funded project on “Perceptually Oriented Ego-motion Simulation” (POEMS-IST-2001-39223). The goal there was to take first steps towards establishing a lean and elegant self-motion simulation paradigm that is powerful enough to enable convincing self-motion perception and effective self-motion simulation in VR, without (or while hardly) moving the user physically. This research was guided by the long-term vision of achieving cost-efficient, lean and elegant self-motion simulation that enables compelling perception of self-motion and quick, intuitive, and robust spatial orientation while traveling in VR, with performance levels similar to the real world. Our approach to tackle this goal was to concentrate on perceptual aspects and task-specific effectiveness rather than aiming for perfect physical realism (Riecke et al. 2005c). This approach focuses on multi-modal stimulation of our senses using VR technology, where vision, auditory information, and vibrations let users perceive that they are moving in space. Importantly, we broadened the research perspective by connecting the concepts of top-down or high-level phenomena like spatial presence and reference frames to vection research (Riecke 2011). It is well-known that quite compelling self-motion illusions can occur both in the real world and in VR. Hence, the investigation of such self-motion illusions in VR was used as a starting point in order to study how self-motion simulation can eventually be improved in VR.

Spatial presence occupies an important role in this context, as we expected this to be an essential factor in enabling robust and effortless spatial orientation and task performance. Furthermore, according to our spatial orientation framework (von der Heyde and Riecke 2002; Riecke 2003), we propose that spatial presence is a necessary prerequisite for quick, robust, and effortless spatial orientation behaviour in general and for automatic spatial updating in particular. Increasing spatial presence would therefore be expected to increase the overall convincingness and perceived realism of the simulation, bringing us one step closer to our ultimate goal of real world-like interaction with and navigation through the virtual environment. A first step towards this goal would be to show that increasing spatial presence in a VR simulation increases the perception of illusory self-motion. This issue will be elaborated upon in more detail in Sect. 9.4.

1 Motivation and Background

Although virtual reality technology has been developing at an amazing pace during the last decades, existing virtual environments and simulations are still not able to evoke a compelling illusion of self-motion that occurs without any delay to the visual motion onset (Hettinger et al. 2014; Riecke 2011; Schulte-Pelkum 2007). Similarly, presence – i.e., the feeling of being and acting in the simulated virtual environment – is often limited or disrupted for users exposed to a VR simulation: Slater and Steed have introduced the concept of breaks in presence (BIP), which describes the frequent phenomenon that users suddenly become aware of the real environment and do not feel present in the VR simulation anymore (Slater and Steed 2000).

While the use of VR applications has widely spread in various fields, such as entertainment, training, research, and education, there are a number of problems that users are confronted with. In this section, we will highlight some of these problems that we see as crucial for the further use and promotion of VR technology.

1.1 Spatial Orientation Problems in VR

One important limitation of most VR setups is that users easily become disoriented or lost while navigating through virtual environments (e.g., Chance et al. 1998; Ruddle 2013). Moreover, it is not yet fully understood where exactly these problems originate. Several studies have shown that allowing for physical motions can increase spatial orientation ability, compared to situations where only visual information about the travelled path is available (Bakker et al. 1999; Chance et al. 1998; Klatzky et al. 1998; Riecke et al. 2010; Ruddle and Lessels 2006; Waller et al. 2004). Ruddle and Lessels demonstrated, for example, that allowing participants to physically walk around while wearing a head-mounted display (HMD) dramatically improved performance for a navigational search task, whereas adding only physical rotation did not show any improvement (Ruddle and Lessels 2006). Other studies, however, showed that physical rotations are critical for basic spatial orientation tasks (Bakker et al. 1999; Chance et al. 1998; Riecke et al. 2010; see, however, Avraamides et al. 2004) but not sufficient for more complex tasks (Ruddle and Peruch 2004; Ruddle 2013). In apparent conflict with the above-mentioned studies, there are also several experiments that demonstrate that physical motions do not necessarily improve spatial orientation at all (Kearns et al. 2002; Riecke et al. 2002, 2005a; Waller et al. 2003). Highly naturalistic visual stimuli alone can even be sufficient for enabling good spatial orientation (Riecke et al. 2002) and/or automatic spatial updating (Riecke et al. 2005a, 2007) if they include useful landmarks, whereas simple optic flow typically seems insufficient (Bakker et al. 1999; Klatzky et al. 1998; Riecke et al. 2007; Riecke 2012). Especially when the visually displayed stimulus is sparse, display parameters such as the absolute size and field of view (FOV) of the displayed stimulus, but also the type of display itself (e.g., HMD vs. monitor vs. curved or flat projection screen) become critical factors (Bakker et al. 1999; Bakker et al. 2001; Klatzky et al. 1998; Riecke et al. 2005b; Tan et al. 2006).

We propose that spatial presence in the simulated scene might play an important – although often neglected – role in understanding the origins of the spatial orientation deficits typically observed in VR. In particular, the potential interference between the reference frames provided by the physical surroundings and the simulated virtual environment should be considered, as will be elaborated upon in Sect. 9.3.1 (see also Avraamides and Kelly 2008; May 1996, 2004; Riecke and McNamara submitted; Wang 2005).

1.2 Spatial Misperception in VR

Apart from the spatial orientation problems often observed in VR, there are also serious, albeit well-known, systematic misperceptions associated with many VR displays. Several studies showed, for example, that head-mounted displays (HMDs) in particular often lead to systematic distortions of both perceived distances and turning angles (Bakker et al. 1999, 2001; Creem-Regehr et al. 2005; Grechkin et al. 2010; Riecke et al. 2005b; Tan et al. 2006). The amount of systematic misperception in VR is particularly striking in terms of perceived distance: While distance estimations using blindfolded walking to previously seen targets are typically rather accurate and without systematic errors for distances up to 20 m for targets in the real world (Loomis et al. 1992, 1996; Rieser et al. 1990; Thomson 1983), comparable experiments where the visual stimuli were presented in VR typically report compression of distances as well as a general underestimation of egocentric distances, especially if HMDs are used (Creem-Regehr et al. 2005; Grechkin et al. 2010; Thompson et al. 2004; Willemsen et al. 2008; Witmer and Sadowski 1998). Even a wide-FOV (140° × 90°) HMD-like Boom display resulted in a systematic underestimation of about 50% for simulated distances between 10 and 110 ft (Witmer and Kline 1998). A similar overestimation and compression in response range for HMDs has also been observed for visually simulated rotations (Riecke et al. 2005b). So far, only projection setups with horizontal fields of view of 180° or more could apparently enable close-to-veridical perception (Plumert et al. 2004; Riecke et al. 2002, 2005b; see, however, Grechkin et al. 2010), even though the FOV alone is not sufficient to explain the systematic misperception of distances in VR (Knapp and Loomis 2004). Hence, further research is required to compare and evaluate different display setups and simulation paradigms in terms of their effectiveness for both spatial presence and self-motion simulation.

1.3 The Challenge of Self-Motion Simulation

When we move through our environment, either by locomotion or transportation in a vehicle, virtually all of our senses are activated. The human senses that are considered most essential for self-motion perception are the visual and vestibular modalities (Dichgans and Brandt 1978; Howard 1982). Most motion simulators are designed to provide stimulation for these two senses. The most common design for motion platforms is the Stewart Platform, which has six degrees of freedom and uses six hydraulic or electric actuators that are arranged in a space-efficient way to support the moving platform (Kemeny and Panerai 2003). Typically, a visualization setup is mounted on top of the motion platform, and users are presented with visual motion in a simulated environment while the platform mimics the corresponding physical accelerations. Due to technical limitations of the motion envelope, however, the motion platform cannot display exactly the same forces that would occur during the corresponding motion in the real world, but can only mimic them using sophisticated motion cueing and washout algorithms that ideally move the simulator back to an equilibrium position at a rate below the human motion detection threshold (e.g., Berger et al. 2010; Conrad et al. 1973). To simulate a forward acceleration, for example, an initial forward motion of the platform is typically combined with tilting the motion platform backwards to mimic the feeling of being pressed into the seat and to simulate the change of the gravito-inertial force vector.
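The backward tilt described above can be illustrated with a small sketch. It assumes the simplest geometric reading of tilt coordination: the platform is pitched back until gravity, as felt from the seat, points in the same direction as the gravito-inertial vector of a sustained forward acceleration. The function names and the rate limit value are hypothetical and are not taken from the cited motion cueing literature.

```python
import math

G = 9.81  # standard gravity in m/s^2


def tilt_for_acceleration(forward_accel_ms2: float) -> float:
    """Backward pitch angle (degrees) at which gravity, seen from the seat,
    has the same direction as the gravito-inertial vector of a sustained
    forward acceleration. Assumption: direction matching via atan(a / g)."""
    return math.degrees(math.atan2(forward_accel_ms2, G))


def step_tilt(current_deg: float, target_deg: float,
              max_rate_deg_s: float, dt: float) -> float:
    """Move the tilt toward the target without exceeding max_rate_deg_s.
    The rate limit stands in for a rotation detection threshold; its value
    is a placeholder, not a figure from the chapter."""
    max_step = max_rate_deg_s * dt
    delta = target_deg - current_deg
    return current_deg + max(-max_step, min(max_step, delta))


if __name__ == "__main__":
    target = tilt_for_acceleration(2.0)  # sustained 2 m/s^2 forward acceleration
    tilt = 0.0
    for _ in range(100):                 # simulate 1 s at 100 Hz
        tilt = step_tilt(tilt, target, max_rate_deg_s=3.0, dt=0.01)
    print(f"target tilt {target:.1f} deg, tilt after 1 s {tilt:.1f} deg")
```

The rate limit makes the trade-off visible: the slower the platform is allowed to tilt, the longer the sustained cue takes to build up, which is one reason the initial forward motion of the platform is needed at all.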

Apart from being rather large and costly, the most common problem associated with current motion simulators is the frequent occurrence of severe motion sickness (Bles et al. 1998; Guedry et al. 1998; Kennedy et al. 2010; Lawson et al. 2002). As already mentioned, the technical limitation in self-motion simulation is imposed by the fact that most existing motion platforms have a rather limited motion range. Consequently, they can only reproduce some aspects of the to-be-simulated motion veridically, and additional filtering is required to reduce the discrepancy between the intended motion and what the actual platform is able to simulate (e.g., Berger et al. 2010; Conrad et al. 1973). The tuning of these “washout filters” is a tedious business, and is typically done manually in a trial-and-error approach where experienced evaluators collaborate with washout filter experts who iteratively adjust the filter parameters until the evaluators are satisfied. While this manual approach might be feasible for some specific applications, a more general theory and understanding of the multi-modal simulation parameters and their relation to human self-motion perception is needed to overcome the limitations and problems associated with the manual approach. Such problems are evident for example in many flight and driving applications, where training in the simulator has been shown to cause misadapted behaviour that can be problematic in the corresponding real-world task (Boer et al. 2000; Burki-Cohen et al. 2003; Mulder et al. 2004). Attempts to formalize a comprehensive theory of motion perception and simulation in VR are, however, limited by our insufficient understanding of what exactly is needed to convey a convincing sensation of self-motion to users of virtual environments, and how this is related to the multi-modal sensory stimulation and washout filters in particular (Grant and Reid 1997; Stroosma et al. 2003; Telban and Cardullo 2001). 
Over the last decade, we investigated the possibility that not only the motion cueing algorithms and filter settings, but also high-level factors such as spatial presence might have an influence on the magnitude and believability of the perceived self-motion in a motion simulator. In order to increase spatial presence in the simulator, we provided realistic, consistent multi-modal stimulation to visual, auditory and tactile senses, and evaluated how vection and presence develop under different combinations of conditions (Riecke et al. 2005c, e; Riecke 2011; Schulte-Pelkum 2007).
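The washout filtering mentioned earlier in this section can be sketched with a first-order high-pass filter. This is a deliberately minimal stand-in: it assumes a single time constant and a single axis, whereas the washout algorithms cited above are more elaborate and are tuned by hand. The function name and parameter values are hypothetical.

```python
def high_pass_washout(accel_cmd, dt, time_constant):
    """First-order discrete high-pass filter over a list of acceleration
    samples. Transients pass through, while a sustained input decays toward
    zero, so the platform command drifts back to neutral."""
    alpha = time_constant / (time_constant + dt)
    out = []
    prev_in = 0.0
    prev_out = 0.0
    for x in accel_cmd:
        y = alpha * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out


if __name__ == "__main__":
    dt = 0.01
    demand = [1.0] * 1000  # sustained 1 m/s^2 demand for 10 s
    filtered = high_pass_washout(demand, dt, time_constant=1.0)
    print(f"first sample {filtered[0]:.3f}, last sample {filtered[-1]:.6f}")
```

The time constant plays the role of one of the filter parameters that evaluators and washout experts adjust iteratively: a shorter value returns the platform to neutral sooner but removes more of the intended motion.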

The above-mentioned shortcomings of most current VR setups limit the potential use of virtual environments for many applications. If virtual environments are to enable natural, real life-like behaviour that is indistinguishable from the real world or at least equally effective, then there is still a lot of work to be done, both in the fields of presence and self-motion simulation. VR technology is increasingly becoming a standard tool for researchers who study self-motion perception, and many motion simulators use immersive setups such as head-mounted displays (HMDs), wide-screen projection setups or 3D display arrays. It is thus important to systematically investigate potential influences of presence on self-motion perception and vice versa. It is possible that inconsistent findings in the recent self-motion perception literature might partly be attributable to uncontrolled influences of presence or other higher-level factors. Similarly, in presence research, the possibility that perceived self-motion in VR might have an effect on the extent to which one feels present in the simulated environment has received only little attention so far.

The following sections will provide brief literature overviews on self-motion illusions (“vection”) (Sect. 9.2) and some relevant aspects of the concept of presence (Sect. 9.3), followed by some theoretical considerations regarding how these two phenomena might be inter-related. In this context, we present and discuss in Sects. 9.4 and 9.5 results from two of our own experiments that demonstrate that not only low-level, bottom-up factors (as was often believed), but also higher cognitive contributions, top-down effects, and spatial presence in particular, can enhance self-motion perception and might thus be important factors that should receive more research attention. We finish the chapter by proposing an integrative theoretical framework that sketches how spatial presence and vection might be inter-related, and what consequences this implies in terms of applications and research questions (Sects. 9.6 and 9.7).

2 Literature Overview on the Perception of Illusory Self-Motion (Vection)

In this section, we will provide a brief review of the literature on self-motion illusions that is relevant for the current context. More comprehensive reviews on visually induced vection are provided by, e.g., Andersen (1986), Dichgans and Brandt (1978), Howard (1982, 1986), Mergner and Becker (1990), Warren and Wertheim (1990). Vection with a specific focus on VR, motion simulation, and undesirable side-effects has more recently been reviewed in Hettinger et al. (2014), Lawson and Riecke (2014), Palmisano et al. (2011), Riecke and Schulte-Pelkum (2013), Riecke (2011), Schulte-Pelkum (2007).

When stationary observers view a moving visual stimulus that covers a large part of the FOV, they can experience a very compelling and embodied illusion of self-motion in the direction opposite to the visual motion. Many of us have experienced this illusion in real life: For example, when we are sitting in a stationary train and watch a train pulling out from the neighbouring track, we will often (erroneously) perceive that the train we are sitting in is starting to move instead of the train on the adjacent track (von Helmholtz 1866). This phenomenon of illusory self-motion has been termed “vection” and has been investigated for well over a century (von Helmholtz 1866; Mach 1875; Urbantschitsch 1897; Warren 1895; Wood 1895). Vection has been shown to occur for all motion directions and along all motion axes: Linear vection can occur for forward-backward, up-down, or sideways motion (Howard 1982). Circular vection can be induced for upright rotations around the vertical (yaw) axis, and similarly for the roll axis (frontal axis along the line of sight, like in a “tumbling room”), and also around the pitch axis (an imagined line passing through the body from left to right). The latter two forms of circular vection are especially nauseating, since they include a strong conflict between visual and gravitational cues and in particular affect the perceived vertical (Bles et al. 1998).

One of the most frequently investigated types of vection is circular vection around the earth-vertical axis. In this special situation where the observer perceives self-rotation around the earth-vertical axis, there is no interfering effect of gravity, since the body orientation always remains aligned with gravity during illusory self-rotation. In a typical classic circular vection experiment, participants are seated inside a rotating drum that is painted with black and white vertical stripes, a device called optokinetic drum. After the drum starts to rotate, the onset latency until the participant reports perceiving vection is measured. The strength of the illusion is measured either by the duration of the illusion, or by some indication of perceived speed or intensity of rotation, e.g., by magnitude estimation or by letting the participant press a button every time they think they have turned 90° (e.g., Becker et al. 2002).
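The two measures described above, onset latency and perceived rotation speed from button presses, reduce to simple arithmetic on timestamps. The sketch below assumes that all times are in seconds on a common clock and that each button press marks a further 90° of perceived self-rotation; the function names are hypothetical.

```python
def onset_latency(stimulus_onset_s: float, first_report_s: float) -> float:
    """Time from stimulus motion onset until the participant first reports vection."""
    return first_report_s - stimulus_onset_s


def perceived_speeds(press_times_s, step_deg: float = 90.0):
    """Perceived angular speed (deg/s) for each interval between successive
    button presses, where every press marks another step_deg of perceived turn."""
    speeds = []
    for t0, t1 in zip(press_times_s, press_times_s[1:]):
        if t1 <= t0:
            raise ValueError("press times must be strictly increasing")
        speeds.append(step_deg / (t1 - t0))
    return speeds


if __name__ == "__main__":
    print(onset_latency(0.0, 4.5))            # seconds until vection was reported
    print(perceived_speeds([6.0, 9.0, 12.0]))  # deg/s per 90-degree interval
```

Comparing these perceived speeds with the actual drum velocity gives one possible index of how complete the illusion is, although the chapter does not prescribe a specific formula for this.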

In a similar manner, linear vection can be induced by presenting optic flow patterns that simulate translational motion. The traditional method used to induce linear vection in the laboratory is to use two monitors or screens facing each other, with the participant's head centred between the two monitors and aligned parallel to the screens, such that they cover a large part of the peripheral visual field (Berthoz et al. 1975; Johansson 1977; Lepecq et al. 1993). Optic flow presented in this peripheral field induces strong linear vection. For example, Johansson (1977) showed that observers perceive an “elevator illusion”, i.e., upward linear vection, when downward optic flow is shown. Other studies used monitors or projection screens in front of the participant to show expanding or contracting optic flow fields (Andersen and Braunstein 1985; Palmisano 1996). Comparing different motion directions shows greater vection facilitation for up-down (elevator) vection, presumably because visual motion does not suggest a change in the gravito-inertial vector as compared to front-back or left-right motion (Giannopulu and Lepecq 1998; Trutoiu et al. 2009).

In recent times, VR technology has been successfully introduced to perceptual research as a highly flexible research tool (Hettinger et al. 2014; Mohler et al. 2005; Nakamura and Shimojo 1999; Palmisano 1996, 2002; Riecke et al. 2005c). It has been shown that both linear and circular vection can be reliably induced using modern VR technology, and the fact that this technology allows for precise experimental stimulus control under natural or close-to-natural stimulus conditions is much appreciated by researchers (see reviews in Hettinger et al. 2014; Lawson and Riecke 2014; Palmisano et al. 2011; Riecke and Schulte-Pelkum 2013; Riecke 2011; Schulte-Pelkum 2007).

Before discussing possible inter-relations between presence and vection, let us first consider the most relevant findings from the literature on both vection (subsections below) and presence (Sect. 9.3). Traditionally, the occurrence of the self-motion illusion has been thought to depend mainly on bottom-up or low-level features of the visual stimulus. In the following, we will review some of the most important low-level parameters that have been found to influence vection (Sects. 9.2.1, 9.2.2, 9.2.3, 9.2.4, 9.2.5 and 9.2.6) and conclude this section with a discussion of possible higher-level or top-down influences on vection (Sect. 9.2.7).

2.1 Size of the Visual FOV

Using an optokinetic drum, Brandt and colleagues found that visual stimuli covering a large FOV induce stronger circular vection and result in shorter onset latencies than when smaller FOVs are used (Brandt et al. 1973). The strongest vection was observed when the entire FOV was stimulated. Limiting the FOV systematically increased onset latencies and reduced vection intensities. It was also found that a black and white striped pattern of 30° diameter that was viewed in the periphery of the visual field induces strong vection, at levels comparable to full field stimulation, whereas the identical 30° stimulus did not induce vection when it was viewed in the central FOV. This observation led to the conclusion of a “peripheral dominance” for illusory self-motion perception. Conversely, the central FOV was thought to be more important for the perception of object motion (as opposed to self-motion). However, this view was later challenged by Andersen and Braunstein (1985) and Howard and Heckmann (1989). Andersen and Braunstein showed that a centrally presented visual stimulus showing an expanding radial optic flow pattern that covered only 7.5° was sufficient to induce forward linear vection when viewed through an aperture. Howard and Heckmann (1989) proposed that the reason Brandt et al. (1973) found a peripheral dominance was likely due to a confound of misperceived foreground-background relations: When the moving stimulus is perceived to be in the foreground relative to a static background (e.g., the mask being used to cover parts of the FOV), it will not induce vection. They suspected that this might have happened to the participants in the Brandt et al. study, and they could confirm their hypothesis in their experiment by placing the moving visual stimulus either in front or in the back of the depth plane of the rotating drum. Their data showed that a central display would induce vection if it is perceived to be in the background. 
Thus, the original idea of peripheral dominance for self-motion perception should be reassessed. The general notion that larger FOVs are more effective for inducing vection, however, does hold true. In fact, when the perceived depth of the stimulus is controlled for, the perceived intensity of vection increases linearly with increasing stimulus size, independent of stimulus eccentricity (how far in the periphery the stimulus is presented) (Nakamura 2008). For virtual reality applications, this means that large-FOV displays are better suited for inducing a compelling illusion of self-motion.

2.2 Foreground-Background Separation Between a Stationary Foreground and a Moving Background

As already briefly mentioned in the subsection above, a moving stimulus has to be perceived to be in the background in order to induce vection. A number of studies have investigated this effect (Howard and Heckmann 1989; Howard and Howard 1994; Nakamura 2006; Ohmi et al. 1987). All those studies found a consistent effect of the depth structure of the moving stimulus on vection: Only moving stimuli that are perceived to be in the background will reliably induce vection. If a stationary object is seen behind a moving stimulus, no vection will occur (Howard and Howard 1994). That is, the perceived foreground-background or figure-ground relationship can essentially determine the occurrence and strength of vection (Kitazaki and Sato 2003; Ohmi et al. 1987; Seno et al. 2009). Following the reasoning of Dichgans and Brandt, one could argue that the very occurrence of vection might be due to our inherent assumption of a stable environment (Dichgans and Brandt 1978) or a “rest frame” (Prothero and Parker 2003; Prothero 1998): When we see a large part of the visual scene move in a uniform manner, especially if it is at some distance away from us, it seems reasonable to assume that this is caused by ourselves moving in the environment, rather than the environment moving relative to us. The latter case occurs only very rarely under natural conditions, such as in the train illusion, where our brain is fooled into perceiving self-motion. It has been shown that stationary objects in the foreground will increase vection if they partly occlude a moving background (Howard and Howard 1994), and that a foreground that moves slowly in the direction opposite to that of the background will also facilitate vection (Nakamura and Shimojo 1999). In Sect. 9.4, we will present some recent data that extend these findings to more natural stimuli and discuss implications for self-motion simulation from an applied perspective.

2.3 Spatial Frequency of the Moving Visual Pattern

Diener et al. (1976) observed that moving visual patterns that contain high spatial frequencies are perceived to move faster than similar visual patterns of lower spatial frequencies, even though both move at identical angular velocities. This means that a vertical grating pattern with, e.g., 20 contrasts (such as black and white stripes) per given visual angle will be perceived to move faster than a different pattern with only 10 contrasts within the same visual angle. Palmisano and Gillam (1998) revealed that there is an interaction between the spatial frequency of the presented optic flow and the retinal eccentricity: While high spatial frequencies produce the most compelling vection in the central FOV, peripheral stimulation results in stronger vection if lower spatial frequencies are presented. This finding contradicts earlier notions of peripheral dominance (see Sect. 9.2.1) and shows that both high and low spatial frequency information is involved in the perception of vection, and that mechanisms of self-motion perception differ depending on the retinal eccentricity of the stimulus. In the context of VR, this implies that fine detail included in the graphical scene may be more beneficial in the central FOV, while stimuli in the periphery might be rendered at lower resolution and fidelity, thus reducing overall simulation cost (see also discussion in Wolpert 1990).
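One stimulus-side quantity that differs between two gratings moving at the same angular velocity is their temporal frequency, i.e., how many pattern cycles pass a fixed point per second. The sketch below only computes this quantity; it is an illustration of the stimulus arithmetic and does not imply that the cited studies explain perceived speed in this way. The function name and the example values are hypothetical.

```python
def temporal_frequency_hz(spatial_freq_cpd: float,
                          angular_velocity_dps: float) -> float:
    """Cycles per second passing a fixed point for a drifting grating:
    spatial frequency (cycles/deg) times angular velocity (deg/s)."""
    return spatial_freq_cpd * angular_velocity_dps


if __name__ == "__main__":
    velocity = 30.0  # deg/s, identical for both patterns
    fine = temporal_frequency_hz(0.5, velocity)     # denser grating
    coarse = temporal_frequency_hz(0.25, velocity)  # half as many cycles per degree
    print(fine, coarse, fine / coarse)
```

Doubling the number of stripes per visual angle doubles the temporal frequency at a fixed drum speed, which is the same 2:1 ratio as in the 20 versus 10 contrasts example above.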

2.4 Velocity and Direction of the Visual Stimulus

Howard and Brandt et al. reported that the intensity and perceived speed of self-rotation in circular vection around the yaw axis is linearly proportional to the velocity of the optokinetic stimulus up to values of approximately 90°/s (Brandt et al. 1973; Howard 1986). Note that the perceived velocity interacts with the spatial frequency of the stimulus, as detailed in Sect. 9.2.3. While Brandt et al. (1973) report that the vection onset latency for circular vection is more or less constant for optical velocities up to 90°/s, others report that very slow movement below the vestibular threshold results in earlier vection onset (Wertheim 1994). This apparent contradiction might, however, be due to methodological differences: While Brandt et al. accelerated the optokinetic drum in darkness up to a constant velocity and measured vection onset latency from the moment the light was switched on, the studies where faster vection onset was found for slow optical velocities typically used sinusoidal motion with the drum always visible.

Similar relations between stimulus velocity and vection have been observed for linear motion: Berthoz et al. (1975) found a more or less linear relationship between perceived self-motion velocity and stimulus velocity up to a certain level where an upper limit of the sensation of vection was reached. Interestingly, thresholds for backward and downward vection have been found to be higher than for forward and upward vection, respectively (Berthoz and Droulez 1982). The authors assumed that this result reflects normal human behaviour: While we perceive forward motion quite often and are thus well used to it, we are hardly exposed to linear backward motions, such that our sensitivity for them might be lower. In general, so-called elevator (up-down) vection is perceived earlier and as more compelling than other motion directions (Giannopulu and Lepecq 1998; Trutoiu et al. 2009). This might be related to up-down movements being aligned with the direction of gravity for upright observers, such that gravitational and acceleration directions are parallel. Interestingly, Kano found that onset latencies for vertical linear vection are significantly shorter than for forward and backward vection when observers are seated upright, but this difference disappeared when participants observed the identical stimuli in a supine position (Kano 1991). It is possible that this effect might be related to different utricular and macular sensitivities of the vestibular system, but it is as yet unclear how retinal and gravitational reference frames interact during vection.

Although vection is generally enhanced when the visuo-vestibular conflict is reduced, e.g., in patients whose vestibular sensitivity is largely reduced, such as bilaterally labyrinth defective participants (Cheung et al. 1989; Johnson et al. 1999), Palmisano and colleagues showed convincingly that adding viewpoint jitter to a vection-in-depth visual stimulus consistently enhances vection, even though it should enhance the sensory conflict between visual and vestibular cues (Palmisano et al. 2000, 2011).

2.5 Eye Movements

It has long been recognized that eye movements influence the vection illusion. Mach (1875) was the first to report that vection will develop faster if observers fixate a stationary target instead of letting their eyes follow the stimulus motion. This finding has been replicated many times (e.g., Becker et al. 2002; Brandt et al. 1973). Becker et al. investigated this effect in an optokinetic drum by systematically varying the instructions how to “watch” the stimulus: In one condition, participants had to follow the stimulus with their eyes, thus not suppressing the optokinetic nystagmus (OKN, which is the reflexive eye movement that also occurs in natural situations, e.g., when one looks out of the window while riding a bus). In other conditions, participants either had to voluntarily suppress the OKN by fixating a stationary target that was presented on top of the moving stimulus, or they were asked to stare through the moving stimulus. Results showed that vection developed faster with the eyes fixating a stationary fixation point as compared to participants staring through the stimulus. Vection took longest to develop when the eyes moved naturally, following the stimulus motion. Besides fixating and staring, looking peripherally or shifting one’s gaze between central and peripheral regions can also improve forward linear vection (Palmisano and Kim 2009).

2.6 Non-visual Cues and Multimodal Consistency

Most of the earlier vection literature has been concerned with visually induced vection. Vection induced by other sensory modalities, such as moving acoustic stimuli, has therefore received little attention, even though auditorily induced circular vection and nystagmus have been reported as early as 1923 (Dodge 1923) and since been replicated by several researchers (Hennebert 1960; Lackner 1977; Marme-Karelse and Bles 1977), see also reviews in Riecke et al. (2009b) and Väljamäe (2009). Lackner (1977) demonstrated, for example, that a rotating sound field generated by an array of loudspeakers could induce vection in blindfolded participants. More recent studies demonstrated that auditory vection can also be induced by headphone-based auralization using generic head-related transfer functions (HRTFs), both for rotations and translations (Larsson et al. 2004; Riecke et al. 2005e, 2009b; Väljamäe et al. 2004; Väljamäe 2009). Several factors were found to enhance auditory vection (see also reviews in Riecke et al. 2009b; Väljamäe 2009): For example, both the realism of the acoustic simulation and the number of sound sources were found to enhance vection. It is important to keep in mind, however, that auditory vection occurs only in about 25–70 % of participants and is far less compelling than visually induced vection, which can be indistinguishable from actual motion (Brandt et al. 1973). Hence, auditory cues alone are not sufficient to reliably induce a compelling self-motion sensation. However, adding consistent spatialized auditory cues to a naturalistic visual stimulus can enhance both vection and overall presence in the simulated environment, compared to non-spatialized sound or no sound (Keshavarz et al. 2013; Riecke et al. 2005d, 2009b). Similarly, moving sound fields can enhance “biomechanical” vection induced by stationary participants stepping along a rotating floor platter (Riecke et al. 2011). 
This suggests that multi-modal consistency might be beneficial for the effectiveness of self-motion simulations.

This notion is supported by Wong and Frost, who showed that circular vection is facilitated when participants are provided with an initial physical rotation (“jerk”) that accompanies the visual motion onset (Wong and Frost 1981). Even though the physical motion did not match the visual motion quantitatively, the qualitatively correct physical motion signal accompanying the visual motion supposedly reduced the visuo-vestibular cue conflict, thus facilitating vection.

Similar vection-facilitating effects have more recently been reported for linear vection when small linear forward jerks of only a few centimetres accompanied the onset of a visually displayed linear forward motion in VR. This has been shown for both passive movements of the observer (Berger et al. 2010; Riecke et al. 2006b; Riecke 2011; Schulte-Pelkum 2007) and for active, self-initiated motion cueing using a modified manual wheelchair (Riecke 2006) or a modified Gyroxus gaming chair where participants controlled the virtual locomotion by leaning into the intended motion direction (Feuereissen 2013; Riecke and Feuereissen 2012). For passive motions, combining vibrations and small physical movements (jerks) together was more effective in enhancing vection than either vibrations or jerks alone (Schulte-Pelkum 2007, exp. 6).

Helmholtz suggested already in 1866 that vibrations and jerks that naturally accompany self-motions play an important role for self-motion illusions, in that we expect to experience at least some vibrations or jitter (von Helmholtz 1866). Vibrations can nowadays easily be included in VR simulations and are frequently used in many applications. Adding subtle vibrations to the floor or seat in VR simulations has indeed been shown to enhance not only visually-induced vection (Riecke et al. 2005c; Schulte-Pelkum 2007), but also biomechanically-induced vection (Riecke et al. 2009a) and auditory vection (Riecke et al. 2009a; Väljamäe et al. 2006; Väljamäe 2007), especially if accompanied by a matching simulated engine sound (Väljamäe et al. 2006, 2009). These studies provide scientific support for the usefulness of including vibrations to enhance the effectiveness of motion simulations – which is already common practice in many motion simulation applications. It remains, however, an open question whether the vection-facilitating effect of adding vibrations originates from low-level, bottom-up factors (e.g., by decreasing the reliability of the vestibular and tactile signals indicating “no motion”) or whether the effect is mediated by higher-level and top-down factors (e.g., the vibrations increasing the overall believability and naturalism of the simulated motion), or both.

As both vibrations and minimal motion cueing can be added to existing VR simulations with relatively little effort and cost, their vection-facilitating effect is promising for many VR applications. Moreover, these relatively simple means of providing vibrations or jerks were shown to be effective despite being physically incorrect – while jerks normally need to be in the right direction to be effective and be synchronized with the visual motion onset, their magnitude seems to be of lesser importance. Indeed, for many applications there seems to be a surprisingly large coherence zone in which visuo-vestibular cue conflicts are either not noticed or at the least seem to have little detrimental effect (van der Steen 1998). Surprisingly, physical motion cues can enhance visually-induced vection even when they do not match the direction or phase of the visually-displayed motion (Wright 2009): When participants watched sinusoidal linear horizontal (left-right) oscillations on a head-mounted display, they reported more compelling vection and larger motion amplitudes when they were synchronously moved (oscillated) in the vertical (up-down) and thus orthogonal direction. Similar enhancement of perceived vection and motion amplitude was observed when both the visual and physical motions were in the vertical direction, even though visual and physical motions were always in opposite directions and thus out of phase by 180° (e.g., the highest visually depicted view coincided with the lowest point of their physical vertical oscillatory motion). In fact, the compellingness and amplitude of the perceived self-motion was not significantly smaller than in a previous study where visual and inertial motion was synchronized and not phase-shifted (Wright et al. 2005). Moreover, for both horizontal and vertical visual motions, perceived motion directions were almost completely dominated by the visual, not the inertial motion. 
That is, while there was some sort of “visual capture” of the perceived motion direction, the extent and convincingness of the perceived self-motion was modulated by the amount of inertial acceleration.

In two recent studies, Ash et al. showed that vection is enhanced if participants’ active head movements are updated in the visual self-motion display, compared to a condition where the identical previously recorded visual stimulus was replayed while observers did not make any active head-movements (Ash et al. 2011a, b). This means that vection was improved by consistent multisensory stimulation where sensory information from own head-movements (vestibular and proprioceptive) matched visual self-motion information on the VR display (Ash et al. 2011b). In a second study with similar setup, Ash et al. (2011a) found that adding a deliberate display lag between the head and display motion modestly impaired vection. This finding is highly important since in most VR applications, end-to-end system lag is present, especially in cases of interactive, multisensory, real-time VR simulations. Despite technical advancement, it is to be expected that this limitation cannot be easily overcome in the near future.
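To give a sense of the magnitudes involved, the angular mismatch caused by end-to-end lag can be approximated as head velocity multiplied by latency, assuming the head rotates at a constant velocity during the lag interval. The following sketch uses hypothetical values (100°/s head rotation, 50 ms lag) that are not taken from the studies cited above.

```python
def lag_induced_error_deg(head_velocity_deg_per_s, lag_ms):
    """Angular offset between actual head orientation and displayed view.

    Assumes constant head velocity over the lag interval, so the display
    trails the head by velocity * latency.
    """
    return head_velocity_deg_per_s * (lag_ms / 1000.0)


# Hypothetical example: a brisk head turn with a moderate system lag.
print(lag_induced_error_deg(100.0, 50.0))
```

Under this approximation, halving the lag halves the mismatch, so the error grows with both faster head movements and longer delays.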

Seno and colleagues demonstrated that air flow provided by a fan positioned in front of observers’ faces significantly enhanced visually induced forward linear vection (Seno et al. 2011b). Backward linear vection was not facilitated, however, suggesting that the air flow needs to at least qualitatively match the direction of simulated self-motion, similar to head wind.

Although multi-modal consistency in general seems to enhance vection, there seems to be at least one exception: while biomechanical cues from walking on a circular treadmill can elicit vection by themselves in blindfolded participants (Bles 1981; Bles and Kapteyn 1977) and also enhance visually induced vection (Riecke et al. 2009b; Väljamäe 2009) as well as biomechanically induced circular vection (Riecke et al. 2011), linear treadmill walking can neither by itself reliably induce vection, nor does it reliably enhance visually-induced vection, as discussed in detail in Ash et al. (2013) and Riecke and Schulte-Pelkum (2013).

It remains puzzling how adding velocity-matched treadmill walking to a visual motion simulation can impair vection (Ash et al. 2012; Kitazaki et al. 2010; Onimaru et al. 2010) while active head motions and simulated viewpoint jitter clearly enhance vection (Palmisano et al. 2011). More research is needed to better understand under what conditions locomotion cues facilitate or impair linear vection, and what role the artificiality of treadmill walking might play. Nevertheless, the observation that self-motion perception can, at least under some circumstances, be impaired if visual and biomechanical motion cues are matched seems paradoxical (as it corresponds to natural eyes-open walking) and awaits further investigation. These results do, however, suggest that adding a walking interface to a VR simulator might potentially (at least in some cases) decrease instead of increase the sensation of self-motion and thus potentially decrease the overall effectiveness of the motion simulation. Thus, caution should be taken when adding walking interfaces, and each situation should be carefully tested and evaluated as one apparently cannot assume that walking will always improve the user experience and simulation effectiveness.

Note that there are also considerable differences between different people’s susceptibility to vection and different vection-inducing stimuli, so it can be difficult to predict a specific person’s response to a given situation. Palmisano and colleagues made recent progress towards that challenge, though, and showed that the strength of linear forward vection could be predicted by analysing participants’ postural sway patterns without visual cues (Palmisano et al. 2014), which is promising.

In conclusion, there can often be substantial benefits in providing coherent self-motion cues in multiple modalities, even if they can only be matched qualitatively. Budget permitting, allowing for actual physical walking or full-scale motion or motion cueing on 6 degrees of freedom (DoF) motion platforms is clearly desirable and might be necessary for specific commercial applications like flight or driving simulation. When budget, space, or personnel is more limited, however, substantial improvements can already be gained by relatively moderate and affordable efforts, especially if consistent multi-modal stimulation and higher-level influences are thoughtfully integrated. Although they do not provide physically accurate simulation, simple means such as including vibrations, jerks, spatialized audio, or providing a perceptual-cognitive framework of movability can go a long way (Lawson and Riecke 2014; Riecke and Schulte-Pelkum 2013; Riecke 2009, 2011). Even affordable, commercially available motion seats or gaming seats can provide considerable benefits to self-motion perception and overall simulation effectiveness (Riecke and Feuereissen 2012).

As we will discuss in more detail in our conceptual framework in Sect. 9.6, it is essential to align and tailor the simulation effort with the overarching goal: e.g., is the ultimate goal physical correctness, perceptual effectiveness, or behavioural realism? Or is a stronger value put on users’ overall enjoyment, engagement, and immersion, as in the case of many entertainment applications, which represent a considerable and increasing market share?

2.7 Cognitive, Attentional, and Higher-Level Influences on Vection

The previous subsections summarized research demonstrating a clear effect of perceptual (low-level) factors and bottom-up processes on illusory self-motion perception. In the remainder of this section, we would like to point out several studies which provide converging evidence that not only low-level factors, but also cognitive, higher-level processes as well as attention might play an important role in the perception of illusory self-motion, especially in a VR context (see also reviews in Riecke and Schulte-Pelkum (2013) and Riecke (2009, 2011)). That is, we will argue that vection can also be affected by what is outside of the moving stimulus itself, for example by the way we move and look at a moving stimulus, our pre-conceptions, intentions, and how we perceive and interpret the stimuli, which is of particular importance in the context of VR.

As mentioned in Sect. 9.2.2, it has already been proposed in 1978 that the occurrence of vection might be linked to our inherent assumption of a stable environment (Dichgans and Brandt 1978). Perhaps this is why the perceived background of a vection-inducing stimulus is typically the dominant determinant of the presence of vection and modulator of the strength of vection, even if the background is not physically further away than the perceived foreground (Howard and Heckmann 1989; Ito and Shibata 2005; Kitazaki and Sato 2003; Nakamura 2008; Ohmi et al. 1987; Seno et al. 2009). This “object and background hypothesis for vection” has been elaborated upon and confirmed in an elegant set of experiments using perceptually bistable displays like the Rubin’s vase that can be perceived either as a vase or two faces (Seno et al. 2009). In daily life, the more distant elements comprising the background of visual scenes are generally stationary and therefore any retinal movement of those distant elements is more likely to be interpreted as a result of self-motion (Nakamura and Shimojo 1999). In VR simulations, these findings could be used to systematically reduce or enhance illusory self-motions depending on the overall simulation goal, e.g., by modifying the availability of real or simulated foreground objects (e.g., dashboards), changing peripheral visibility of the surrounding room (e.g., by controlling lighting conditions), or changing tasks/instructions (e.g., instructions to pay attention to instruments which are typically stationary and in the foreground).

In the study by Andersen and Braunstein described in Sect. 9.2.2, the authors remark that pilot experiments had shown that in order to perceive any self-motion, participants had to believe that they could actually be moved in the direction of perceived vection (Andersen and Braunstein 1985). Accordingly, participants were asked to stand in a movable booth and looked out of a window to view the optic flow pattern. Similarly, in a study by Lackner who showed that circular vection can be induced in blindfolded participants by a rotating sound field, participants were seated on a chair that could be rotated (Lackner 1977). Note that by making participants believe that they could, in fact, be moved physically, Andersen and Braunstein were able to elicit vection with a visual FOV as small as 7.5°, and Lackner (1977) and Larsson et al. (2004) were able to induce vection simply by presenting a moving sound field to blindfolded listeners. Under these conditions of limited or weak sensory stimulation, cognitive factors seem to become a relevant factor. It is possible that cognitive factors generally have an effect on vection, but that this has not been recognized so far due to a variety of reasons. For example, the cognitive manipulations might not have been powerful enough, or sensory stimulation might have been so strong that ceiling level was already reached, which is likely to be the case in an optokinetic drum that covers the full visible FOV.

In this context, a study by Lepecq and colleagues is of particular importance, as it explicitly addressed cognitive influences on linear vection (Lepecq et al. 1995): They found that 7 year old children perceive vection earlier when they are previously shown that the chair they are seated on can physically move in the direction of simulated motion – even though this never happened during the actual experiment. Interestingly, this vection-facilitating influence of pre-knowledge was not present in 11 year old children.

Prior knowledge of whether or not physical motions are possible does show some effect on adults as well: In a circular vection study in VR, 2/3 of the participants were fooled into believing that they physically moved when they were previously shown that the whole experimental setup can indeed be moved physically (Riecke et al. 2005e; Riecke 2011; Schulte-Pelkum 2007). Note, however, that neither vection onset times, nor vection intensity or convincingness were significantly affected by the cognitive manipulation. In another study, Palmisano and Chan (2004) demonstrated that cognitive priming can also affect the time course of vection: Adult participants experienced vection earlier when they were seated on a potentially movable chair and were primed towards paying attention to self-motion sensation, compared to a condition where they were seated on a stationary chair and instructed to attend to object motion, not self-motion.

Providing such a cognitive-perceptual framework of movability has recently been shown to also enhance auditory vection (Riecke et al. 2009a). When blindfolded participants were seated on a hammock chair while listening to binaural recordings of rotating sound fields, auditory circular vection was facilitated when participants’ feet were suspended by a chair-attached footrest as compared to being positioned on solid ground. This supports the common practice of seating participants on potentially moveable platforms or chairs in order to elicit auditory vection (Lackner 1977; Väljamäe 2007, 2009).

There seems to be mixed evidence about the potential effects of attention and cognitive load on vection. Whereas Trutoiu et al. (2008) observed vection facilitation when participants had to perform a cognitively demanding secondary task, vection inhibition was reported by Seno et al. (2011a). When observers in Kitazaki and Sato (2003) were asked to specifically pay attention to one of two simultaneously presented upward and downward optic flow fields of different colours, the non-attended flow field was found to determine vection direction. This might, however, also be explained by attention modulating the perceived depth-ordering and foreground-background relationship, as discussed in detail in Seno et al. (2009). Thus, while attention and cognitive load can clearly affect self-motion illusions, further research is needed to elucidate underlying factors and explain seemingly conflicting findings. A recent study suggests that vection can even be induced when participants are not consciously aware of any global display motion, which was cleverly masked by strong local moving contrasts (Seno et al. 2012).

Studies on auditorily induced circular vection also showed cognitive or top-down influences: sound sources that are normally associated with stationary objects (so-called “acoustic landmarks” like church bells) proved more potent in inducing circular vection in blindfolded participants than artificial sounds (e.g., pink noise) or sounds typically originating from moving objects (e.g., driving vehicles or footsteps) (Larsson et al. 2004; Riecke et al. 2005e).

A similar mediation of vection via higher-level mechanisms was observed when a globally consistent visual stimulus of a natural scene was compared to an upside-down version of the same stimulus (Riecke et al. 2005e, 2006a). Even though the inversion of the stimulus left the physical stimulus characteristics (i.e., the image statistics and thus bottom-up factors) essentially unaltered, both participants’ rated presence in the simulated environment and the rated convincingness of the illusory self-motion were significantly reduced. This strongly suggests a higher-level or top-down contribution to presence and the convincingness of self-motion illusions. We posit that the natural, ecologically more plausible upright stimulus might have more easily been accepted as a stable “scene”, which in turn facilitated both presence and the convincingness of vection. The importance of a naturalistic visual stimulus is corroborated by a study from Wright et al. (2005) that demonstrated that visual motion of a photo-realistic visual scene can dominate even conflicting inertial motion cues in the perception of self-motion.

Already 20 years ago, Wann and Rushton (1994) stressed the importance of an ecological context and a naturalistic optic array for studying self-motion perception. Traditional vection research has, however, used abstract stimuli like black and white striped patterns or random dot displays, and only recently have more naturalistic stimuli become more common in self-motion research (Mohler et al. 2005; Riecke et al. 2005c, 2006a; van der Steen and Brockhoff 2000). One might expect that more natural looking stimuli have the potential of not only inducing stronger vection, but also higher presence. Consequently, it seems appropriate to consider possible interactions between presence and vection.

Even though presence is typically not assessed or discussed in vection studies, it is conceivable that presence might nevertheless have influenced some of those results: For example, Palmisano (1996) found that forward linear vection induced by a simple random dot optic flow pattern was increased if stereoscopic information was provided, compared to non-stereoscopic displays. Even though presence was not measured in this experiment, it is generally known that stereoscopic displays increase presence (Freeman et al. 2000; IJsselsteijn et al. 2001). In another study, van der Steen and Brockhoff (2000) found unusually short vection onset latencies, both for forward linear and circular yaw vection. They used an immersive VR setup consisting of a realistic cockpit replica of an aircraft on a motion simulator with a wide panoramic projection screen. Visual displays showed highly realistic scenes of landscapes as would be seen from an airplane. Even though presence was not assessed here, it is possible that the presumably high level of presence might have contributed to the strong vection responses of the observers.

In conclusion, cognitive factors seem to become more relevant when stimuli are ambiguous or have only weak vection-inducing power, as in the case of auditory vection (Riecke et al. 2009a) or sparse or small-FOV visual stimuli (Andersen and Braunstein 1985). It is conceivable that cognitive factors generally have an effect on vection, but that this has not been widely recognized for methodological reasons. For example, the cognitive manipulations might not have been powerful enough or free of confounds, or sensory stimulation might have been so strong that ceiling level was already reached, which is likely the case in an optokinetic drum that completely covers the participant’s field of vision.

3 A Selective Review on Presence

“Presence” denotes the phenomenon that users who are experiencing a simulated world in VR can get a very compelling illusion of being and acting in the simulated environment instead of the real environment, a state also described as “being there” or “spatial presence” (Hartmann et al. 2014). Several different definitions for presence have been suggested in the literature, and comprehensive reviews of different conceptualizations, definitions, and measurement methods are provided in the current book and, e.g., Biocca (1997), IJsselsteijn (2004), Lee (2004), Loomis (1992), Nash et al. (2000), Sadowski and Stanney (2002), Schultze (2010), Steuer (1992).

The fact that presence does occur, even though current VR technology can afford only relatively sparse and insufficient sensory stimulation, is remarkable by itself. Even with the most sophisticated current immersive VR technology, a simulated environment will never be seriously mistaken as reality by any user, even if one’s attention might be primarily drawn to the virtual environment. So, what is presence, and what is its relevance for the use of current VR systems?

One central problem associated with the concept of presence is its rather diffuse definition, which evokes theoretical and methodological problems. In order to theoretically distinguish presence from other related concepts, the term “immersion” is often used to clarify that presence (and in particular “spatial presence”) is about the sensation of being at another place than where one’s own body is physically located, while immersion usually refers to a psychological process of being completely absorbed in a certain physical or mental activity (e.g., reading a book or playing a game), such that one loses track of time and of the outside world (Jennett et al. 2008; Wallis and Tichon 2013). Note that we distinguish here between “immersion” as the psychological process and “immersiveness” as the medium’s ability to afford the psychological process of immersion (Vidyarthi 2012), which is an extension of what Slater (1999) referred to as “system immersion”. “Immersive VR”, then, describes VR systems that have the technical prerequisites and propensities (e.g., high perceptual realism and fidelity) to create an immersive experience in the user. It has been pointed out that presence and immersion or involvement are logically distinct phenomena, even though they seem to be empirically related (Haans and IJsselsteijn 2012). A captivating narrative or content in VR might draw attention away from sensorimotor mismatches due to poor simulation fidelity, such as a noticeable delay of a visual scene that is experienced using a head-tracked HMD. On the other hand, a low-tech device such as a book can be highly immersive, depending on its form and content. It is commonly assumed that highly immersive VR systems can also create a high sense of presence, but the relation between the concepts still remains unclear, and attempts to capture these phenomena in one comprehensive theoretical framework are rare (Haans and IJsselsteijn 2012; Vidyarthi 2012).

The most frequently used measurement methods of presence rely on post-exposure self-report questionnaires like the Presence Questionnaire (PQ) by Witmer et al. (2005), or the IGroup Presence Questionnaire (IPQ) by Schubert et al. (2001). Here, VR users are asked to report from memory the intensity of presence they perceived in the preceding VR-scene. Factor analytic surveys suggest that such questionnaires seem to be able to reliably identify different aspects of presence, and a number of questionnaires have gained a significant level of acceptance in the community, with reliability measures of Cronbach’s α at .85 for the IPQ, for example. However, some authors have questioned the validity of self-report measures of presence, and suggested physiological measures, such as heart-rate, skin conductance or event-evoked cortical responses etc. as more objective alternatives that allow for real-time measurement of presence (Slater and Garau 2007; Slater 2004). The idea is that a high level of perceived presence of a user in a simulated environment should be associated with similar physiological reactions as in the real world. Following this logic, Meehan et al. observed systematic changes in a number of physiological responses when users approached a simulated virtual pit that induced fear, which correlated with reported levels of presence (Meehan et al. 2002). Freeman et al. (2000) used postural responses to visual scenes of a driving simulator as a measure of presence. Postural responses to visual scenes depicting accelerations, braking, taking a curve etc. from the perspective of a rally car driver were stronger in conditions with stereoscopic visual stimulation in which reported presence was higher.
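The reliability figure quoted above for the IPQ is a Cronbach’s α, which compares the summed variance of the individual questionnaire items with the variance of participants’ total scores. The following minimal sketch shows the standard formula; the ratings are invented for illustration and do not come from any presence questionnaire or study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-participant item ratings.

    responses: one list per participant, one rating per questionnaire item.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # regroup ratings by item
    item_variance_sum = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical ratings: five participants, three items.
ratings = [
    [5, 4, 5],
    [3, 3, 4],
    [2, 2, 1],
    [4, 5, 4],
    [1, 2, 2],
]
print(round(cronbach_alpha(ratings), 2))
```

Values close to 1 indicate that the items vary together across participants, which is what a high internal consistency means in this context.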

While such approaches might potentially help circumvent some of the problems associated with subjective report measures of presence, their utility remains unclear so far. Recently, the fMRI paradigm has been adopted in presence research, and some neural correlates of presence have been observed (Bouchard et al. 2012; Hoffman et al. 2003). However, this endeavor is still in its early stages, and this method will be practicable only for a limited number of research labs, at least for the near future.

Finally, another approach in this field is the use of behavioral measures (Bailenson et al. 2004; Wallis and Tichon 2013). If users could intuitively behave in a virtual environment in a natural manner and perform tasks such as wayfinding or controlling a vehicle in a simulation as well as in reality, one central goal of VR research might be considered fulfilled. Behavioural measures have the advantage that they can be recorded unobtrusively, in an ongoing perception-action loop. Differential analyses of behavioural outcomes and their relation to presence have the potential to reveal new insights into this field. Along this line, a recent study of simulator-based training efficacy showed that reported presence levels of trainees in a train simulator correlated moderately with overall training efficacy after 1 year, but were not sensitive to performance differences between the three simulator types used in the study. In contrast, a perceptual judgment task about speed perception was able to predict the different training efficacy of the three types of simulators (Wallis and Tichon 2013).

What becomes apparent from the considerations so far is that depending on the purpose and context of the VR simulation, be it training, entertainment, research, education etc., the relevance of presence and other concepts might vary, and there might be interactions. We will argue that a pragmatic, behaviorally oriented approach appears promising for the near future.

For the purpose of our study, the definition by Witmer and Singer which states that “…presence is defined as the subjective experience of being in one place or environment, even when one is physically situated in another” (Witmer and Singer 1998) describes well the relevant aspects of spatial presence in the context of self-motion simulation in VR, as we will outline in the following.

3.1 Presence and Reference Frames

One important aspect of VR simulations that we would like to point out here is that in any VR application, the user is always confronted with two, possibly competing, egocentric representations or reference frames: On the one hand, there is the real environment (i.e., the physical room where the VR setup is situated). On the other hand, there is the computer-generated VE, which provides an intended reference frame or representation that might interfere with the real world reference frame unless they present the same environment in perfect spatio-temporal alignment. Riecke and von der Heyde proposed that the degree to which users accept the VE as their primary reference frame might be directly related to the degree of spatial presence experienced in the VE (von der Heyde and Riecke 2002; Riecke 2003). In their framework, the consistency or lack of interference between the VR and real world reference frame is hypothesized to be a necessary prerequisite for enabling compelling spatial presence. Conversely, any interference between conflicting egocentric reference frames is expected to decrease spatial presence and thereby also automatic spatial updating and natural, robust spatial orientation in the VE (Riecke et al. 2007; Riecke 2003). This notion of conflicting reference frames is closely related to the sensorimotor interference hypothesis proposed by May and Wang, which attributes the difficulty of imagined perspective switches (at least in part) to processing costs resulting from an interference between the sensorimotor and the to-be-imagined perspective (May 1996, 2004; Wang 2005; see also discussion in Avraamides and Kelly 2008; Riecke and McNamara submitted).

This emphasizes the importance of reducing users’ awareness of the physical surroundings, which has already been recognized by many researchers and VR designers. If this is not achieved, a perceived conflict between competing egocentric reference frames arises, which can critically disrupt presence, i.e., the feeling of being and acting in the virtual environment (IJsselsteijn 2004; Slater and Steed 2000; see also Hartmann et al.’s chapter in this volume, Hartmann et al. 2014).

3.2 Presence and Self-Motion Perception

In the following, we will review a selection of papers that investigated presence in the context of self-motion perception. Slater and colleagues found a significant positive association between extent and amount of body movement and subjective presence in virtual environments (Slater et al. 1998). Participants experienced a VE through a head-tracked HMD, and depending on task condition, one group was required to move their head and body a lot, while the other group could do the task without much body movement. The group that had to move more showed much higher presence ratings in the post-experimental presence questionnaires. It is plausible that the more an observer wearing an HMD experiences perceptual consequences of his or her own body movements in the simulated environment, the more he or she will experience presence in the simulated VE and not in the real world.

Several studies have investigated the influence of stereoscopic presentation on presence and vection: Freeman, IJsselsteijn and colleagues observed that presence and postural responses were increased when observers watched a stereoscopic movie that was shot from the windshield of a rally car, as compared to a monoscopic version of the film (Freeman et al. 2000; IJsselsteijn et al. 2001). Vection, however, was not improved by the stereoscopic presentation. Note that in the studies by Freeman et al. and IJsselsteijn et al., presence was assessed with only one post-test question: Participants were simply asked to rate how much they felt present in the displayed scene as if they were “really there”. They responded by placing a mark on a line representing a continuum between the extremes “not at all there” and “completely there”.

Since presence is conceptualized as a multi-dimensional construct, it is possible that assessing presence with only one item was too coarse to reveal a correlation with vection. This motivated us to perform a more fine-grained analysis of possible relations between presence and vection using the IPQ presence questionnaire (see Sect. 9.4.5).

3.3 Conclusions

In the preceding two subsections, we reviewed the relevant literature on vection and presence, and extracted a number of observations that indicate that attentional, cognitive, and higher-level factors might affect the occurrence and strength of vection. Since VR is increasingly being used as a standard tool in vection research, it seems worthwhile to investigate possible connections between presence and vection, be they correlational or causal. Previous studies that failed to show such a connection have the limitation that presence was assessed only coarsely (Freeman et al. 2000; IJsselsteijn et al. 2001). Furthermore, a number of studies measured vection but not presence, even though factors that are known to influence presence (such as stereoscopic viewing) were manipulated (Palmisano 1996). Given these circumstances, we aimed to perform a more detailed investigation of the potential relations between presence and vection. We were guided by the hypothesis that the different dimensions, which in sum constitute presence, might have differential influences on different aspects of the self-motion illusion. We decided to measure presence using the IPQ presence questionnaire by Schubert et al. (2001), and to assess vection by measuring vection onset latency, vection intensity, and the convincingness of illusory self-motion. Correlation analyses between the IPQ presence scales and the three vection measures are the core of the analysis.
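To make the planned analysis concrete, the following is a minimal sketch of how each presence scale could be correlated with each vection measure. It assumes a Pearson product-moment correlation, which is an assumption on our part since the type of coefficient is not specified at this point. The function names and all numbers are hypothetical placeholders chosen for illustration; they are not data from the experiments.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant data")
    return sxy / math.sqrt(sxx * syy)


def correlate_all(presence_scales, vection_measures):
    """Correlate every presence scale with every vection measure."""
    return {
        (scale, measure): pearson_r(scores, values)
        for scale, scores in presence_scales.items()
        for measure, values in vection_measures.items()
    }


# Placeholder values, one entry per (hypothetical) participant. NOT real data.
presence_scales = {
    "spatial presence": [3.0, 4.5, 2.0, 5.0],
    "involvement": [2.5, 3.0, 3.5, 4.0],
    "realness": [1.0, 2.0, 2.0, 3.0],
}
vection_measures = {
    "onset latency": [12.0, 6.0, 15.0, 4.0],
    "intensity": [40.0, 70.0, 30.0, 80.0],
    "convincingness": [35.0, 60.0, 25.0, 90.0],
}

table = correlate_all(presence_scales, vection_measures)
```

With this layout, a negative coefficient for onset latency would indicate that vection tends to start earlier when the respective presence score is higher, whereas positive coefficients for intensity and convincingness would indicate stronger or more compelling vection.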

4 Experiments Investigating the Relations Between Spatial Presence, Scene Consistency and Self-Motion Perception

In the following, we will briefly present the results of two of our own studies that directly addressed the potential relations between presence, naturalism of the stimulus, reference frames, and self-motion perception. A detailed description of the experiments can be found in Riecke et al. (2006a) (Experiment 1) and Riecke et al. (2004) (Experiment 2). Based on the above-mentioned idea that vection depends on the assumption of a stable environment, we expected that the sensation of vection should be enhanced if the presented visual stimulus (e.g., a virtual environment) is more easily “accepted” as a real world-like stable reference frame. That is, we predicted that vection in a simulated environment should be enhanced if participants feel spatially present in that environment and might thus more readily expect the virtual environment to be stable, just like the real world is expected to be stable.

Presence has been conceptualized as a multi-dimensional construct, and is usually measured with questionnaires where users are asked to provide subjective ratings about the degree to which they felt present in the VR environment after exposure, as discussed above (IJsselsteijn 2004; Nash et al. 2000; Sadowski and Stanney 2002; Schultze 2010). Despite being aware of problems associated with this introspective measurement method, we decided to use the Igroup Presence Questionnaire (IPQ) by Schubert et al. (2001) for our current study, which allowed us to test specific hypotheses about relations between different constituents of presence and vection. Using factor analyses, Schubert et al. extracted three factors that constitute presence based on a sample of 246 participants. These three factors were interpreted as spatial presence – the relation between one’s body and the VE as a space; involvement – the amount of attention devoted to the VE; and realness – the extent to which the VE is accepted as reality. The results of our own correlation analyses between vection in VR and the IPQ presence scores will be presented later in Sect. 9.4.5.

The goal of the first study presented here in more detail (henceforth named Experiment 1) was to determine whether vection can be modulated by the nature of the vection-inducing visual stimulus, in particular whether or not it depicts a natural scene that allows for the occurrence of presence. On the one hand, the existence of such higher-level contributions would be of considerable theoretical interest, as it challenges the prevailing opinion that the self-motion illusion is mediated solely by the physical stimulus parameters, irrespective of any higher cognitive contributions. On the other hand, it would be important for increasing the effectiveness and convincingness of self-motion simulations: Physically moving the observer on a motion platform is rather costly, labour-intensive, and requires a large laboratory setup and safety measures. Thus, if higher-level and top-down mechanisms could help to improve the simulation on a perceptual level and in terms of effectiveness for the given task, this would be quite beneficial, particularly because these factors can often be manipulated with relatively simple and cost-effective means compared to using full-fledged motion simulators. The second study to be presented (subsequently referred to as Experiment 2) is an extension of the first study and investigated effects of minor modifications of the projection screen (Riecke et al. 2004; Riecke and Schulte-Pelkum 2006).

4.1 Methods

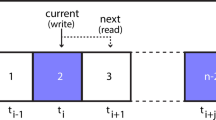

In the following, we will present the main results of Experiments 1 and 2 together with a novel reanalysis and discussion of possible causal relations between presence and self-motion perception. In both experiments, participants were seated in front of a curved projection screen (45° × 54° FOV) and were asked to rate circular vection induced by rotating visual stimuli that depicted either a photorealistic roundshot of a natural scene (the Tübingen market place, see Fig. 9.1, top) or scrambled (globally inconsistent) versions thereof that were created by either slicing the original roundshot horizontally and randomly reassembling it (Fig. 9.1, condition b) or by scrambling image parts in a mosaic-like manner (Fig. 9.1, condition B).

Fig. 9.1 Setup and subset of the stimuli used in Experiments 1 and 2 (Riecke et al. 2004, 2006a). Top left: Participant seated in front of curved projection screen displaying a view of the Tübingen market place. Top right: 360° roundshot of the Tübingen Market Place. Bottom: 54° × 45° view of three of the stimuli discussed here. Left: Original, globally consistent image (a, A, a’, A’), Middle: 2 slices per 45° FOV (b, b’), and Right: 2 × 2 mosaics per 45° × 45° FOV (B, B’). Note that the original stimuli were presented in colour.
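The two scrambling manipulations can be illustrated with a minimal sketch. It assumes that "slices" are horizontal bands whose vertical order is randomly permuted and that "mosaics" are rectangular tiles whose positions are randomly permuted; the exact procedure used to generate the stimuli is described in Riecke et al. (2006a) and may differ in detail. The image is represented as a plain list of pixel rows, and all function names are hypothetical.

```python
import random


def scramble_slices(image, n_slices, rng):
    """Cut the image into horizontal bands and shuffle their vertical order."""
    height = len(image)
    if height % n_slices != 0:
        raise ValueError("image height must be divisible by n_slices")
    band = height // n_slices
    bands = [image[i * band:(i + 1) * band] for i in range(n_slices)]
    rng.shuffle(bands)
    return [row[:] for b in bands for row in b]


def scramble_mosaic(image, tiles_y, tiles_x, rng):
    """Cut the image into tiles_y x tiles_x tiles and shuffle their positions."""
    height, width = len(image), len(image[0])
    if height % tiles_y != 0 or width % tiles_x != 0:
        raise ValueError("image size must be divisible by the tile grid")
    th, tw = height // tiles_y, width // tiles_x
    # Collect the tiles in row-major order; each tile is a list of short rows.
    tiles = [
        [row[tx * tw:(tx + 1) * tw] for row in image[ty * th:(ty + 1) * th]]
        for ty in range(tiles_y)
        for tx in range(tiles_x)
    ]
    rng.shuffle(tiles)
    # Reassemble the shuffled tiles into a full image, one tile row at a time.
    out = []
    for ty in range(tiles_y):
        row_tiles = tiles[ty * tiles_x:(ty + 1) * tiles_x]
        for r in range(th):
            out_row = []
            for tile in row_tiles:
                out_row.extend(tile[r])
            out.append(out_row)
    return out


# Toy 4 x 8 "image" in which every pixel has a unique value.
rng = random.Random(0)
image = [[y * 8 + x for x in range(8)] for y in range(4)]
sliced = scramble_slices(image, 2, rng)
mosaic = scramble_mosaic(image, 2, 2, rng)
```

Both functions only rearrange image parts, so the set of pixel values (and hence first-order statistics such as the luminance histogram) is unchanged, while the global layout of the scene is disrupted.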

4.2 Hypotheses

Scene scrambling was expected to disrupt the global consistency of the scene and pictorial depth cues contained therein. We expected that this should impair the believability of the stimuli and in particular spatial presence in the simulated scene. All of these factors can be categorized as cognitive or higher-level contributions (Riecke et al. 2005e; Riecke 2009, 2011). Note, however, that scene scrambling had only minor effects on bottom-up factors (physical stimulus properties) like the image statistics. Thus, any effect of global scene consistency on vection should accordingly be attributed to cognitive, top-down effects, and might be mediated by spatial presence in the simulated scene.

The original experiment followed a 2 (session: mosaic, slices) × 4 (scrambling severity: intact, 2, 8, 32 mosaics/slices per 45° FOV) × 2 (rotation velocity: 20°/s, 40°/s) × 2 (turning direction) within-subject factorial design with two repetitions per condition. For our current purpose of discussing the relation between presence and vection, the comparison between the globally consistent stimulus and the mildest scrambling level (2 slices/mosaics per 45° FOV) is the most critical, and we will constrain our discussion to those conditions (i.e., we omit the 8 and 32 slices/mosaics conditions and the 40°/s conditions, which are discussed in detail in Riecke et al. 2006a). Presence was measured for each visual stimulus using the 14-item Igroup Presence Questionnaire (IPQ, Schubert et al. 2001) after the vection experiments.
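The size of this design can be checked by enumerating its cells. The short sketch below does so under the assumption that every cell is presented once per repetition; the direction labels and variable names are hypothetical, since only the number of levels is given above.

```python
from itertools import product

sessions = ["mosaic", "slices"]
scrambling = ["intact", 2, 8, 32]         # mosaics/slices per 45° FOV
velocities = [20, 40]                     # rotation velocity in °/s
directions = ["leftward", "rightward"]    # labels assumed for illustration
REPETITIONS = 2

# Every combination of factor levels is one condition (cell) of the design.
conditions = list(product(sessions, scrambling, velocities, directions))
n_trials = len(conditions) * REPETITIONS

# Subset discussed in this chapter: intact vs. 2 slices/mosaics, 20°/s only.
discussed = [
    c for c in conditions
    if c[1] in ("intact", 2) and c[2] == 20
]

print(len(conditions), n_trials, len(discussed))  # prints: 32 64 8
```

The full design thus comprises 32 conditions and 64 trials per participant, of which 8 conditions (16 trials) enter the comparison considered here.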

4.3 Results and Discussion